Abstract

Theoretical advances in language research and the availability of increasingly high-resolution experimental techniques in the cognitive neurosciences are profoundly changing how we investigate and conceive of the neural basis of speech and language processing. Recent work closely aligns language research with issues at the core of systems neuroscience, ranging from neurophysiological and neuroanatomic characterizations to questions about neural coding. Here we highlight, across different aspects of language processing (perception, production, sign language, meaning construction), new insights and approaches to the neurobiology of language, aiming to describe promising new areas of investigation in which the neurosciences intersect with linguistic research more closely than before. This paper summarizes in brief some of the issues that constitute the background for talks presented in a symposium at the Annual Meeting of the Society for Neuroscience. It is not a comprehensive review of any of the issues that are discussed in the symposium.

Introduction

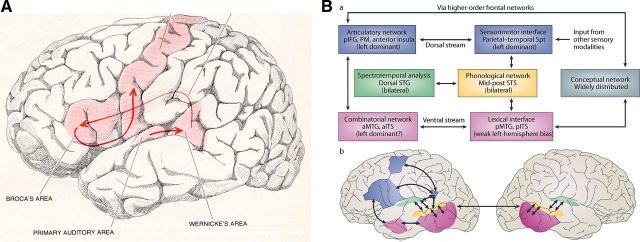

Until ∼25 years ago, our knowledge of the brain basis of language processing, the mental faculty considered to be at the very core of human nature, derived largely from rather coarse measures (neurobiologically speaking): deficit-lesion correlations in stroke patients, electrophysiological data from electroencephalographic as well as occasional intracranial recordings (associated with surgical interventions), and relatively crude measures such as the Wada test for language lateralization. The “classical model” of brain and language, developed in the 19th century by the insightful neurologists Broca (1861), Wernicke (1874), Lichtheim (1885), and others, dominated discourse in the field for more than one hundred years. To this day the Wernicke-Lichtheim model of a left inferior frontal region and a posterior temporal brain area, connected by the arcuate fasciculus fiber bundle, is familiar to every student of the neurosciences, linguistics, and psychology. This iconic model, published in virtually every relevant textbook, had (and continues to have) enormous influence in discussions of the biological foundations of language (for a famous modern instantiation, see Geschwind, 1970, 1979, reproduced in Fig. 1A).

Figure 1.

A, The classical brain language model, ubiquitous but no longer viable. From Geschwind (1979). With permission of Scientific American. B, The dorsal and ventral stream model of speech sound processing. From Hickok and Poeppel (2007). With permission from Nature Publishing Group.

The big questions, with regard to the neurosciences, concerned “lateralization of function” and “hemispheric dominance”; with regard to the cognitive sciences and language research, they concerned apportioning different aspects of language function to the anterior versus posterior language regions (e.g., production versus comprehension, respectively), although the oldest versions (i.e., Wernicke's) were actually more nuanced than much subsequent work. Crucially (and problematically), the assessment of speech, language, and reading disorders in clinical contexts continues to be informed largely by the classical view and by ideas and data that range from the 1860s to the 1970s.

Not surprisingly, but worth pointing out explicitly, the era of the classical model is over. The underlying conceptualization of the relation between brain and language is hopelessly underspecified, from the biological point of view as well as from the linguistic and psychological perspectives (Poeppel and Hickok, 2004). As useful as it has been as a heuristic guide to stimulate basic research and clinical diagnosis, it is now being radically adapted, and in parts discarded. That being said, its longevity is a testament to how deeply the classical model has penetrated both the scientific and popular imaginations; few scientific models in any discipline have such staying power.

Two major changes in the conceptualization of brain-language relations have occurred, both related to better “resolution.” Obviously, in the last 20 years, research in this area has been dominated by noninvasive functional brain imaging. The new techniques have increased the spatial and temporal resolutions with which we can investigate the neural foundations of language processing. Improved resolution of the experimental techniques has led to better quality neural data, generating a range of new insights and large-scale models (Hickok and Poeppel, 2004, 2007, see Fig. 1B; Hagoort, 2005; Ben Shalom and Poeppel, 2008; Kotz and Schwartze, 2010; Friederici, 2012; Price, 2012). However, it is fair to say that a different kind of resolution has played at least as critical a role, namely improved conceptual resolution. The increasingly tight connection of neuroscience research to linguistics, cognitive psychology, and computational modeling, forged over the last two decades, has placed the work on significantly richer theoretical footing, made many of the questions computationally explicit, and is beginning to yield hints of neurobiological mechanisms that form the basis of speech and language processing.

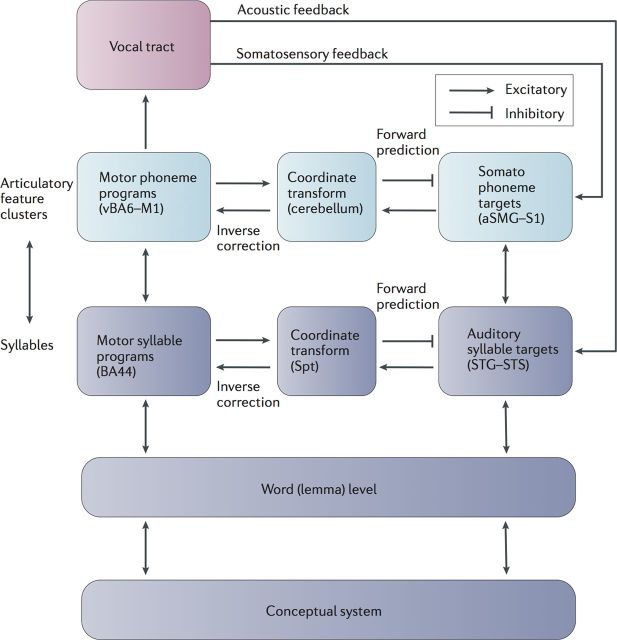

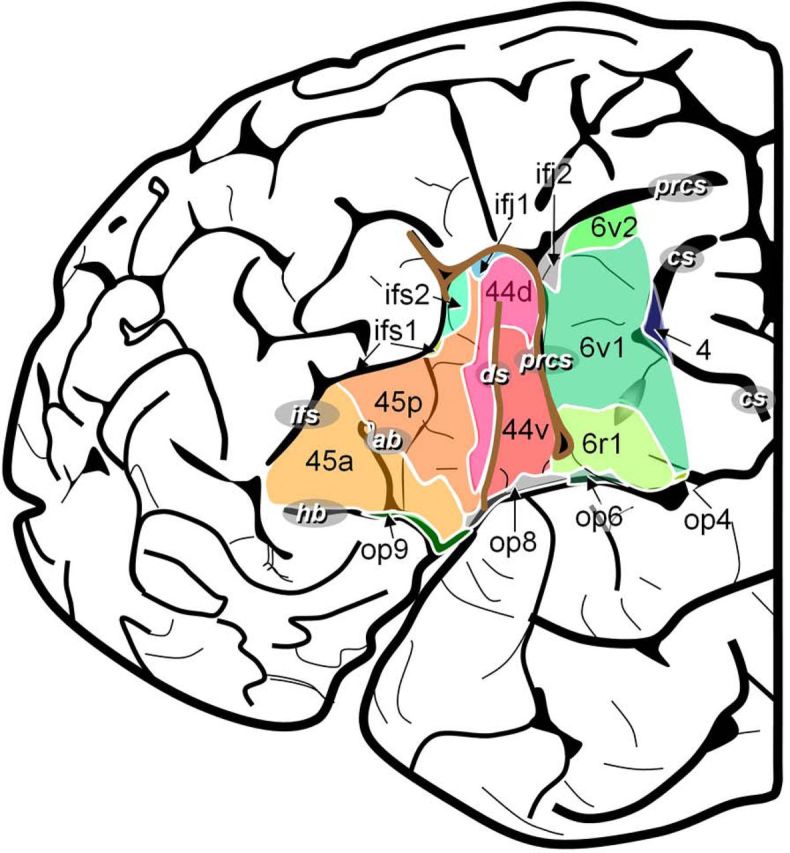

Regarding advances in methods, the remarkable improvements in recording techniques and data analyses have yielded new maps of the functional anatomy of language. Speaking spatially, local regions, processing streams, the two hemispheres, and distributed global networks are now implicated in language function in unanticipated ways. First, the canonical language region, Broca's area, is now known, based on innovative cytoarchitectural and immunocytochemical data, to be composed of a number of subregions (on the order of 10, ignoring possible laminar specializations), plausibly implicating a much greater number of different functions than previously assumed (Amunts et al., 2010; Fig. 2), supporting both language and non-language processing. Second, there is emerging consensus that regions are organized into at least two (and likely more) processing streams, ventral and dorsal (Hickok and Poeppel, 2004; Saur et al., 2008), that underpin different processing subroutines, for example, mediating aspects of lexical recognition and lexical combination versus aspects of sensorimotor transformations for production, respectively (Hickok and Poeppel, 2007; Fig. 1B). Third, the classical issue of lateralization of function is now being investigated with respect to very specific physiological specializations, ranging from computational optimization for the encoding of sounds (Zatorre et al., 2002; Giraud et al., 2007) to the encoding of word meanings (Federmeier et al., 2008). Finally, network approaches to brain organization (Sporns, 2010) are beginning to inform how language processing is executed.

Figure 2.

The anatomic organization of Broca's region. From Amunts et al. (2010). With permission of the Public Library of Science.

Conceptual advances in linguistic and psychological research have defined what constitutes the “parts list” that comprises knowledge of language, its acquisition, and use. This description extends from important broader distinctions (e.g., phonology vs syntax) to subtle analyses of the types of representations and operations that underlie, say, meaning construction or lexical access. Early work on the neurobiology of language was largely disconnected from research on language and psychology per se and took language to be rather monolithic, with distinctions being made at the grain size of “perception” or “comprehension” versus “production.” (Imagine, for comparison, making generalizations about “vision” as an un-deconstructed area of inquiry.) In the subsequent phase (arguably the current wave of research) the experimental approaches focus on the decomposition of language in terms of subdomains. Typical studies attempt to relate brain structure and function to, say, phonology, syntax, or semantics. This represents significant progress, but the results are still of a correlational nature, with typical outcomes being of the type “area x supports syntax, area y supports semantics,” and so on. From a neurobiological perspective, the goal is to develop mechanistic (and ultimately explanatory) linking hypotheses that connect well defined linguistic primitives with equally well defined neurobiological mechanisms. It is this next phase of neurolinguistic research that is now beginning, developing a computational neurobiology of language, and there are grounds for optimism that genuine linking hypotheses between the neurosciences and the cognitive sciences are being crafted (Poeppel, 2012).

Is the classical model salvageable? Are the new approaches and insights merely expansions and adaptations to the traditional view? We submit that the model is incorrect along too many dimensions for new research to be considered mere updates. While these issues have been discussed in more detail elsewhere, we point to just two serious shortcomings. First, due to its underspecification both biologically and linguistically, the classical model makes incorrect predictions about the constellation of deficit-lesion patterns (for discussion, see Dronkers et al., 2004; Hickok and Poeppel, 2004; Poeppel and Hickok, 2004). To exemplify, a lesion in Broca's area does not necessarily produce Broca's aphasia, and, conversely, a patient presenting with Broca's aphasia does not necessarily have a lesion in Broca's area. Current multiple stream models (that incorporate additional regions) fare better in characterizing the neuropsychological data. Second, the restriction to the few brain regions that make up the classical model dramatically underestimates the number and distribution of brain regions now known to play a critical role in language comprehension (Price, 2012) and production (Indefrey, 2011). Not only has the role of the right (non-dominant) hemisphere been underappreciated, but other left-lateralized extra-Sylvian regions such as the middle temporal gyrus or the anterior superior temporal lobe are now known to be essential (Narain et al., 2003; Turken and Dronkers, 2011), as is the involvement of subcortical areas (Kotz and Schwartze, 2010).

Many of the concepts (e.g., efference copy, oscillations) and methods (neuroanatomy, neurophysiology, neural coding) central to current research on the cognitive neuroscience of language build on work in systems neuroscience. In light of the granularity of the linking hypotheses (Poeppel and Embick, 2005), connections to other areas of related research are more principled, allowing the investigation of mechanistic similarities and differences (e.g., relating to work on birdsong and other animal vocalizations, on genetics, on neural circuits, and on evolution). The level of analysis obviates the need for parochial discussions of what should or should not count as language and allows a sharper focus on underlying mechanisms. This review covers four areas of investigation in speech and language processing in which there has been considerable progress because of the closer connection between linguistics, psychology, and neuroscience: perception, production, sign language, and meaning construction. It provides a summary of research presented in a symposium at the Annual Meeting of the Society for Neuroscience.

Speech perception and cortical oscillations: emerging computational principles

The recognition of spoken language requires parsing more or less continuous input into discrete units that make contact with the stored information that underpins processing (informally, words). In addition to a parsing (or chunking) stage, there must be a decoding stage in which acoustic input is transformed into representations that underpin linguistic computation. Both functional anatomic and physiological studies have aimed to delineate the infrastructure of the language-ready brain. The functional anatomy of speech sound processing comprises a distributed cortical system that encompasses regions along at least two processing streams (Hickok and Poeppel, 2004, 2007). A ventral, temporal lobe pathway primarily mediates the mapping from sound input to meaning/words. A dorsal path incorporating parietal and frontal lobes enables the sensorimotor transformations that underlie mapping to output representations. New experiments aim to understand how the parsing and decoding processes are supported.

Speech (and other dynamically changing auditory signals, as well as naturalistic dynamic visual scenes) typically contains critical information required for successful decoding that is carried at multiple time scales (e.g., intonation-level information at the scale of 500–1000 ms, syllabic information closely correlated to the acoustic envelope of speech, ∼150–300 ms, and rapidly changing featural information, ∼20–80 ms). The different aspects of signals (slow and fast temporal modulation, frequency composition) must be processed for successful recognition. What kind of neuronal infrastructure forms the basis for the required multi-time resolution analyses? A series of neurophysiological experiments suggests that intrinsic neuronal oscillations at different, “privileged” frequencies (delta 1–3 Hz, theta 4–8 Hz, low gamma 30–50 Hz) may provide some of the underlying mechanisms (Ghitza, 2011; Giraud and Poeppel, 2012). In particular, to achieve parsing of a naturalistic input signal into manageable chunks, one macroscopic-level mechanism consists of the sliding and resetting of temporal windows, implemented as phase locking of low-frequency activity to the envelope of speech and resetting of intrinsic oscillations on privileged time scales (Luo and Poeppel, 2007; Luo et al., 2010). The successful resetting of neuronal activity provides time constants (or temporal integration windows) for parsing and decoding speech signals (Luo and Poeppel, 2012). These studies link very directly the infrastructure provided by well described neural oscillations to principled perceptual challenges in speech recognition. One emerging generalization is that acoustic signals must contain some type of “edge,” i.e., an acoustic discontinuity that the listener can use to chunk the signal at the appropriate temporal granularity. Although the “age of the edge” is over and done for vision, acoustic edges likely play an important (and slightly different) causal role in the successful perceptual analysis of complex auditory signals.
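The correspondence between the oscillation bands and the speech time scales quoted above can be checked with simple arithmetic, since one cycle at f Hz lasts 1000/f ms. The following is a minimal sketch, not an analysis from the cited studies: the band limits and time scales are the values given in the text, whereas the function names and the interval-overlap criterion are assumptions introduced here purely for illustration.

```python
# Band limits (Hz) and speech time scales (ms) as quoted in the text.
BANDS_HZ = {"delta": (1, 3), "theta": (4, 8), "low_gamma": (30, 50)}
SPEECH_SCALES_MS = {
    "intonation": (500, 1000),
    "syllabic": (150, 300),
    "featural": (20, 80),
}


def cycle_range_ms(low_hz, high_hz):
    """Return (shortest, longest) cycle duration in ms for a frequency band."""
    return (1000.0 / high_hz, 1000.0 / low_hz)


def overlaps(a, b):
    """True if the closed intervals a and b share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]


def band_windows():
    """Cycle-duration range of each band (hypothetical helper)."""
    return {name: cycle_range_ms(lo, hi) for name, (lo, hi) in BANDS_HZ.items()}


def matches():
    """Map each band to the speech time scales its cycle range overlaps.

    Overlap is an illustrative criterion assumed here, not a claim
    about how the cited work relates bands to linguistic units.
    """
    windows = band_windows()
    return {
        band: [scale for scale, rng in SPEECH_SCALES_MS.items() if overlaps(win, rng)]
        for band, win in windows.items()
    }


if __name__ == "__main__":
    for band, scales in matches().items():
        print(band, band_windows()[band], scales)
```

Under this overlap criterion, delta cycles (about 333–1000 ms) line up with the intonation scale, theta cycles (125–250 ms) with the syllabic scale, and low gamma cycles (20–33 ms) with the featural scale.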

Computational neuroanatomy of speech production

Most research on speech production has been conducted from within two different traditions: a psycholinguistic tradition that seeks generalizations at the level of phonemes, morphemes, and phrasal-level units (Dell, 1986; Levelt et al., 1999), and a motor control/neural systems tradition that is more concerned with kinematic forces, movement trajectories, and feedback control (Guenther et al., 1998; Houde and Jordan, 1998). Despite their common goal, to understand how speech is produced, little interaction occurred between these areas of research. There is a standard position regarding the disconnect: the two approaches are focused on different levels of the speech production problem, with the psycholinguists working at a more abstract, perhaps even amodal, level of analysis, and the motor control/neuroscientists largely examining lower-level articulatory control processes. However, closer examination reveals a substantial convergence of ideas, suggesting that the chasm between traditions is more apparent than real and, more importantly, that both approaches have much to gain by paying attention to each other and working toward integration.

For example, psycholinguistic research has documented the existence of a hierarchically organized speech production system in which planning units ranging from articulatory features to words, intonational contours, and even phrases are used. Motor control approaches, on the other hand, have emphasized the role of efference signals from motor commands and internal forward models in motor learning and control (Wolpert et al., 1995; Kawato, 1999; Shadmehr and Krakauer, 2008). An integration of these (and other) notions from the two traditions has spawned a hierarchical feedback control model of speech production (Hickok, 2012).

The architecture of the model is derived from state feedback models of motor control but incorporates processing levels that have been identified in psycholinguistic research. This basic architecture includes a motor controller that generates forward sensory predictions. Communication between the sensory and motor systems is achieved by an auditory–motor translation system. The model includes two hierarchical levels of feedback control, each with its own internal and external sensory feedback loops. As in psycholinguistic models, the input to the model starts with the activation of a conceptual representation that in turn excites a corresponding word representation. The word level projects in parallel to sensory and motor sides of the highest, fully cortical level of feedback control, the auditory–Spt–BA44 loop (Spt stands for Sylvian fissure at the parieto-temporal boundary; BA44 is Brodmann area 44). This higher-level loop in turn projects, also in parallel, to the lower-level somatosensory–cerebellum–motor cortex loop. The model differs from both standard state feedback control models and psycholinguistic models in two main respects. First, “phonological” processing is distributed over two hierarchically organized levels, implicating a higher-level cortical auditory–motor circuit and a lower-level somatosensory–motor circuit, which roughly map onto syllabic and phonemic levels of analysis, respectively. Second, a true efference copy signal is not a component of the model. Instead, the function served by an efference copy is integrated into the motor planning process. The anatomical correlates of these circuits (Fig. 3) have been derived from a range of functional imaging and neuropsychological studies, and some of the computational assumptions of the model have been demonstrated in computer simulations.
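The general idea of a controller with an internal (predicted) and an external (sensed) feedback loop can be illustrated with a toy scalar example. This is only a sketch under strong simplifying assumptions and is not the hierarchical model of Hickok (2012): it has a single level, a one-dimensional "auditory target," and linear maps, and every name and parameter (`forward_model`, `plant`, `produce`, `rate`, `perturbation`) is hypothetical.

```python
def forward_model(command, gain=1.0):
    """Predicted sensory consequence of a motor command.

    The linear map is an assumption made for illustration only.
    """
    return gain * command


def plant(command, perturbation=0.0):
    """Sensory outcome actually received.

    The perturbation stands in for externally altered feedback.
    """
    return command + perturbation


def produce(target, steps=20, rate=0.5, perturbation=0.0):
    """Iteratively adjust a scalar motor command toward a sensory target."""
    command = 0.0
    for _ in range(steps):
        # Internal loop: correct the command against the *predicted* outcome.
        predicted_error = target - forward_model(command)
        command += rate * predicted_error
        # External loop: correct the command against the *sensed* outcome.
        actual_error = target - plant(command, perturbation)
        command += rate * actual_error
    return command, plant(command, perturbation)


if __name__ == "__main__":
    print(produce(1.0))                    # unperturbed feedback
    print(produce(1.0, perturbation=0.3))  # altered feedback
```

In this toy setting the outcome converges on the target when feedback is unperturbed. With a constant perturbation, the internal loop (which does not see the perturbation) and the external loop (which does) pull in opposite directions, so the perturbation is only partly offset: with `rate=0.5` the outcome settles at the target plus one third of the perturbation.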

Figure 3.

Computational and functional anatomic analysis of speech production. From Hickok (2012). With permission from Nature Publishing Group.

Modality dependence and independence: the perspective of sign languages

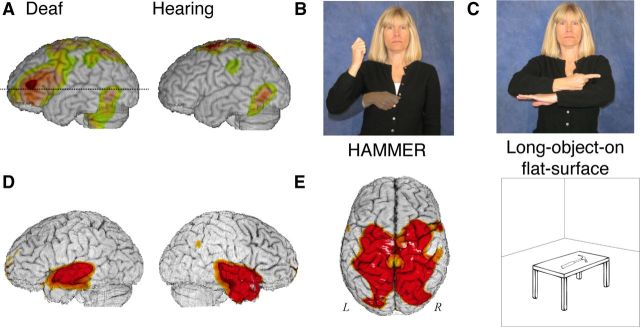

The study of sign languages has provided a powerful tool for investigating the neurobiology of human language (for review, see Emmorey, 2002; MacSweeney et al., 2008; Emmorey and McCullough, 2009). Signed languages differ dramatically from spoken languages with respect to the linguistic articulators (the hands vs the vocal tract) and the perceptual system required for comprehension (vision vs audition). Despite these biological differences, research over the past 35 years has identified striking parallels between signed and spoken languages, including a level of form-based (phonological) structure, left-hemisphere lateralization for many language-processing subroutines, and similar neural substrates, e.g., a frontotemporal neural circuit for language production and comprehension (Hickok et al., 2001; Sandler and Lillo-Martin, 2006). These similarities provide a strong basis for comparison and serve to highlight universal properties of human language. In addition, differences between signed and spoken languages can be exploited to discover how specific vocal-aural or visual-manual properties impact the neurocognitive underpinnings of language. For example, the visual-manual modality allows for iconic expression of a wide range of basic conceptual structures, such as human actions, movements, locations, and shapes (Taub, 2001). In American Sign Language (ASL) and many other sign languages, the verbs HAMMER, DRINK, and STIR resemble the actions they denote. In addition, the location and movement of objects are expressed by the location and movement of the hands in signing space, rather than by a preposition or locative affix. These differences between spoken and signed languages arise from differences in the biology of linguistic expression. For spoken languages, neither sound nor movements of the tongue can easily be used to create motivated representations of human actions or of object locations/movements, whereas for signed languages, the movement and configuration of the hands are observable and can be readily used to create non-arbitrary, iconic linguistic representations of human actions and spatial information.

To exemplify some of the empirical work, H₂¹⁵O-PET studies have explored whether these biological differences have an impact on the neural substrates that support action verb production or spatial language. In Study 1, deaf native ASL signers and hearing non-signers generated action pantomimes or ASL verbs in response to pictures of tools and manipulable objects. The generation of ASL verbs engaged left inferior frontal cortex, but when non-signers produced pantomimes in response to the same objects, no frontal activation was observed (Fig. 4A). Both groups recruited left parietal cortex during pantomime production. Overall, these results indicate that the production of pantomimes versus ASL verbs (even those that resemble pantomime) engages partially segregated neural systems that support praxic versus linguistic functions (Emmorey et al., 2011). In Study 2, deaf ASL signers performed a picture description task in which they overtly named objects or produced spatial classifier constructions in which the hand shape indicates object type [e.g., long-thin object (Fig. 4C), vehicle] and the location/motion of the hand iconically and gradiently depicts the location/motion of a referent object (Emmorey and Herzig, 2003). In contrast to the expression of location and motion, the production of both lexical signs and object type morphemes engaged left inferior frontal cortex and left inferior temporal cortex, supporting the hypothesis that classifier hand shapes are categorical morphemes that are retrieved via left hemisphere language regions. In addition, lexical signs engaged the anterior temporal lobes to a greater extent than classifier constructions (Fig. 4D), reflecting the increased semantic processing required to name individual objects, compared to simply indicating the type of object. Both location and motion components of classifier constructions engaged bilateral superior parietal cortex (Fig. 4E), but not left frontotemporal cortices, suggesting that these components are not lexical morphemes. Parietal activation reflects the different biological basis of sign language. That is, to express spatial information, signers must transform visual-spatial representations into a body-centered reference frame and reach toward target locations within signing space, functions associated with superior parietal cortex. In sum, the biologically based iconicity found in signed language alters the neural substrate for spatial language but not for lexical verbs expressing handling actions.

Figure 4.

A, Generation of “pantomimic” signs by deaf signers engaged left IFG (inferior frontal gyrus), but production of similar pantomimes by hearing non-signers did not. D, Lexical “pantomimic” signs shown in B activate the anterior temporal lobes, in contrast to less specific classifier constructions shown in C. E, Location and motion classifier constructions activate bilateral parietal cortex, in contrast to lexical signs.

The observation that spoken and signed languages are subserved by (many of) the same neural structures has been one of the foundational findings of the cognitive neuroscience of language and has cemented the insight that knowledge of language reflects a cognitive system of a certain type, despite differences in modalities and input/output systems. The data suggest that there are areas that are selective for language tasks regardless of modality, and as such “universal.” That being said, there exists considerable evidence for experience-dependent plasticity in these (and related) circuits, as well. For example, both the demands imposed by the properties of particular languages (Paulesu et al., 2000) and the ability to read or not (Castro-Caldas et al., 1998) can shape the organization of these brain regions. Both stability (e.g., across modalities) and plasticity (across speaker-dependent experience) are hallmarks of the neurocognitive system.

Building meanings: the computations of the composing brain

Whether as speech, sign, text, or Braille, the essence of human language is its unbounded combinatory potential: Generative systems of syntax and semantics allow for the composition of an infinite range of expressions from a limited set of elementary building blocks. The construction of complex meaning is not simple string building; rather, the combinatory operations of language are complex and full of mysteries that merit systematic investigation. Why, for example, does every English speaker have the robust intuition that “piling the cushions high” results in a high pile and not high cushions, whereas “hammering the ring flat” gives you a flat ring instead of a flat hammer? Questions such as these are answered by research in theoretical syntax and semantics, a branch of linguistics that offers a cognitive model of the representations and computations that derive complex linguistic meanings. So far, brain research on language (at least semantics) has remained relatively disconnected from this rich body of work. As a result, our understanding of the neurobiology of combination of words and composition of meanings is still grossly generic: neuroscience research on syntax and semantics implicates a general network of “sentence processing regions” but the computational details of this system have not been uncovered.

Recent research using magnetoencephalographic recordings aims to bridge this gap: guided by results in theoretical syntax and semantics, these studies systematically varied the properties of composition to investigate the detailed computational roles and spatiotemporal dynamics of the various brain regions participating in the construction of complex meaning (Pylkkänen et al., 2011). The combinatory network implicated by this research comprises at least the left anterior temporal lobe (LATL), the ventromedial prefrontal cortex (vmPFC), and the angular gyrus (AG). Of these regions, the LATL operates early (∼200–300 ms) and appears specialized to the combination of predicates with other predicates to derive more complex predicates (as in red boat) (Bemis and Pylkkänen, 2011, 2012), but not predicates with their arguments (as in eats meat). The roles of the AG and especially the vmPFC are more general and later in time (∼400 ms). Effects in the vmPFC, in particular, generalize across different combinatory rule types and even into the processing of pictures, suggesting a multipurpose combinatory mechanism. However, this mechanism appears specifically semantic, as opposed to syntactic, since vmPFC effects are systematically elicited for expressions that involve covert, syntactically unexpressed components of meaning (Pylkkänen and McElree, 2007; Brennan and Pylkkänen, 2008, 2010). In sum, contrary to hypotheses that treat natural language composition as monolithic and localized to a single region (Hagoort, 2005), the picture emerging from these experiments suggests that composition is achieved by a network of regions which vary in their computational specificity and domain generality. By connecting the brain science of language to formal models of linguistic representation, the work decomposes the various computations that underlie the brain's multifaceted combinatory capacity.
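The contrast between combining a predicate with another predicate (red boat) and combining a predicate with its argument (eats meat) can be made concrete in a standard set-theoretic treatment of meaning, which is assumed here for illustration only. The sketch below is not the analysis used in the cited MEG studies; the toy "world," the entity labels, and the function names `modify` and `saturate` are all hypothetical.

```python
from typing import Callable

Entity = str
Predicate = Callable[[Entity], bool]

# Toy world (invented for illustration).
RED = {"boat1", "apple"}
BOATS = {"boat1", "boat2"}
EATS = {("dog", "meat")}  # (eater, thing eaten)


def is_red(x: Entity) -> bool:
    return x in RED


def is_boat(x: Entity) -> bool:
    return x in BOATS


def eats(subj: Entity, obj: Entity) -> bool:
    return (subj, obj) in EATS


def modify(p: Predicate, q: Predicate) -> Predicate:
    """Predicate + predicate -> a more complex predicate (as in 'red boat')."""
    return lambda x: p(x) and q(x)


def saturate(verb: Callable[[Entity, Entity], bool], obj: Entity) -> Predicate:
    """Two-place predicate + argument -> one-place predicate (as in 'eats meat')."""
    return lambda subj: verb(subj, obj)


red_boat = modify(is_red, is_boat)
eats_meat = saturate(eats, "meat")

if __name__ == "__main__":
    print(red_boat("boat1"), red_boat("boat2"))
    print(eats_meat("dog"), eats_meat("cat"))
```

Both operations return a one-place predicate, but they arrive at it differently: `modify` conjoins two properties of the same entity, whereas `saturate` fills one argument slot of a relation. That difference in combinatory rule type is the kind of contrast the experiments described above manipulate.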

Summary

Research on the biological foundations of language is at an exciting moment of paradigmatic change. The linking hypotheses are informed by computational analyses, both of the cognitive functions and of the neuronal circuitry. The four presentations in this symposium highlight the new directions that are recasting how we study the brain basis of language processing. Spanning four domains (speech perception, speech production, sign language processing, and the combination of linguistic units to generate meaning), the presentations both outline major empirical developments and show new methodologies. Concepts familiar from systems neuroscience now play a central role in the neurobiology of language, pointing to important synergistic opportunities across the neurosciences. In the new neurobiology of language, the field is moving from coarse characterizations of language and largely correlational insights to fine-grained cognitive analyses aiming to identify explanatory mechanisms.

Footnotes

This work is supported by NIH Grants R01 DC010997 and R01 DC006708 to K.E., DC009659 to G.H., R01 DC05660 to D.P., and NSF Grant BCS-0545186 to L.P.

References

- Amunts K, Lenzen M, Friederici AD, Schleicher A, Morosan P, Palomero-Gallagher N, Zilles K. Broca's region: novel organizational principles and multiple receptor mapping. PLoS Biol. 2010;8:e1000489. doi: 10.1371/journal.pbio.1000489.

- Bemis DK, Pylkkänen L. Simple composition: an MEG investigation into the comprehension of minimal linguistic phrases. J Neurosci. 2011;31:2801–2814. doi: 10.1523/JNEUROSCI.5003-10.2011.

- Bemis DK, Pylkkänen L. Basic linguistic composition recruits the left anterior temporal lobe and left angular gyrus during both listening and reading. Cereb Cortex. 2012. doi: 10.1093/cercor/bhs170.

- Ben Shalom D, Poeppel D. Functional anatomic models of language: assembling the pieces. Neuroscientist. 2008;14:119–127. doi: 10.1177/1073858407305726. [DOI] [PubMed] [Google Scholar]

- Brennan J, Pylkkänen L. Processing events: behavioral and neuromagnetic correlates of aspectual coercion. Brain Lang. 2008;106:132–143. doi: 10.1016/j.bandl.2008.04.003. [DOI] [PubMed] [Google Scholar]

- Brennan J, Pylkkänen L. Processing psych verbs: behavioral and MEG measures of two different types of semantic complexity. Lang Cogn Processes. 2010;25:777–807. [Google Scholar]

- Broca P. Perte de la parole, ramollissement chronique et destruction partielle du lobe antérieur gauche du cerveau. Bull Soc Anthropol. 1861;2:235–238. [Google Scholar]

- Castro-Caldas A, Petersson KM, Reis A, Stone-Elander S, Ingvar M. The illiterate brain. Learning to read and write during childhood influences the functional organization of the adult brain. Brain. 1998;121:1053–1063. doi: 10.1093/brain/121.6.1053. [DOI] [PubMed] [Google Scholar]

- Dell GS. A spreading activation theory of retrieval in language production. Psychol Rev. 1986;93:283–321. [PubMed] [Google Scholar]

- Dronkers NF, Wilkins DP, Van Valin RD, Jr, Redfern BB, Jaeger JJ. Lesion analysis of the brain areas involved in language comprehension. Cognition. 2004;92:145–177. doi: 10.1016/j.cognition.2003.11.002. [DOI] [PubMed] [Google Scholar]

- Emmorey K. Language, cognition, and the brain: insights from sign language research. Mahwah, NJ: Lawrence Erlbaum; 2002. [Google Scholar]

- Emmorey K, Herzig M. Categorical versus gradient properties of classifier constructions in ASL. In: Emmorey K, editor. Perspectives on classifier constructions in signed languages. Mahwah, NJ: Lawrence Erlbaum; 2003. pp. 222–246. [Google Scholar]

- Emmorey K, McCullough S. The bimodal brain: effects of sign language experience. Brain Lang. 2009;110:208–221. doi: 10.1016/j.bandl.2008.03.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Emmorey K, McCullough S, Mehta S, Ponto L, Grabowski T. Sign language and pantomime production differentially engage frontal and parietal cortices. Lang Cogn Processes. 2011;26:878–901. doi: 10.1080/01690965.2010.492643. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Federmeier KD, Wlotko EW, Meyer AM. What's “right” in language comprehension: ERPs reveal right hemisphere language capabilities. Lang Linguist Compass. 2008;2:1–17. doi: 10.1111/j.1749-818X.2007.00042.x.

- Friederici AD. The cortical language circuit: from auditory perception to sentence comprehension. Trends Cogn Sci. 2012;16:262–268. doi: 10.1016/j.tics.2012.04.001.

- Geschwind N. The organization of language and the brain. Science. 1970;170:940–944. doi: 10.1126/science.170.3961.940.

- Geschwind N. Specialization of the human brain. Sci Am. 1979;241:180–199. doi: 10.1038/scientificamerican0979-180.

- Ghitza O. Linking speech perception and neurophysiology: speech decoding guided by cascaded oscillators locked to the input rhythm. Front Psychol. 2011;2:130. doi: 10.3389/fpsyg.2011.00130.

- Giraud AL, Poeppel D. Cortical oscillations and speech processing: emerging computational principles and operations. Nat Neurosci. 2012;15:511–517. doi: 10.1038/nn.3063.

- Giraud AL, Kleinschmidt A, Poeppel D, Lund TE, Frackowiak RS, Laufs H. Endogenous cortical rhythms determine cerebral specialization for speech perception and production. Neuron. 2007;56:1127–1134. doi: 10.1016/j.neuron.2007.09.038.

- Guenther FH, Hampson M, Johnson D. A theoretical investigation of reference frames for the planning of speech movements. Psychol Rev. 1998;105:611–633. doi: 10.1037/0033-295x.105.4.611-633.

- Hagoort P. On Broca, brain, and binding: a new framework. Trends Cogn Sci. 2005;9:416–423. doi: 10.1016/j.tics.2005.07.004.

- Hickok G. Computational neuroanatomy of speech production. Nat Rev Neurosci. 2012;13:135–145. doi: 10.1038/nrn3158.

- Hickok G, Poeppel D. Dorsal and ventral streams: a framework for understanding aspects of the functional anatomy of language. Cognition. 2004;92:67–99. doi: 10.1016/j.cognition.2003.10.011.

- Hickok G, Poeppel D. The cortical organization of speech processing. Nat Rev Neurosci. 2007;8:393–402. doi: 10.1038/nrn2113.

- Hickok G, Bellugi U, Klima E. Sign language in the brain. Sci Am. 2001;284:58–65. doi: 10.1038/scientificamerican0601-58.

- Houde JF, Jordan MI. Sensorimotor adaptation in speech production. Science. 1998;279:1213–1216. doi: 10.1126/science.279.5354.1213.

- Indefrey P. The spatial and temporal signatures of word production components: a critical update. Front Psychol. 2011;2:255. doi: 10.3389/fpsyg.2011.00255.

- Kawato M. Internal models for motor control and trajectory planning. Curr Opin Neurobiol. 1999;9:718–727. doi: 10.1016/s0959-4388(99)00028-8.

- Kotz SA, Schwartze M. Cortical speech processing unplugged: a timely subcortico-cortical framework. Trends Cogn Sci. 2010;14:392–399. doi: 10.1016/j.tics.2010.06.005.

- Levelt WJ, Roelofs A, Meyer AS. A theory of lexical access in speech production. Behav Brain Sci. 1999;22:1–75. doi: 10.1017/s0140525x99001776.

- Lichtheim L. On aphasia. Brain. 1885;7:433–484.

- Luo H, Poeppel D. Phase patterns of neuronal responses reliably discriminate speech in human auditory cortex. Neuron. 2007;54:1001–1010. doi: 10.1016/j.neuron.2007.06.004.

- Luo H, Poeppel D. Cortical oscillations in auditory perception and speech: evidence for two temporal windows in human auditory cortex. Front Psychol. 2012;3:170. doi: 10.3389/fpsyg.2012.00170.

- Luo H, Liu Z, Poeppel D. Auditory cortex tracks both auditory and visual stimulus dynamics using low-frequency neuronal phase modulation. PLoS Biol. 2010;8:e1000445. doi: 10.1371/journal.pbio.1000445.

- MacSweeney M, Capek CM, Campbell R, Woll B. The signing brain: the neurobiology of sign language. Trends Cogn Sci. 2008;12:432–440. doi: 10.1016/j.tics.2008.07.010.

- Narain C, Scott SK, Wise RJ, Rosen S, Leff A, Iversen SD, Matthews PM. Defining a left-lateralized response specific to intelligible speech using fMRI. Cereb Cortex. 2003;13:1362–1368. doi: 10.1093/cercor/bhg083.

- Paulesu E, McCrory E, Fazio F, Menoncello L, Brunswick N, Cappa SF, Cotelli M, Cossu G, Corte F, Lorusso M, Pesenti S, Gallagher A, Perani D, Price C, Frith CD, Frith U. A cultural effect on brain function. Nat Neurosci. 2000;3:91–96. doi: 10.1038/71163.

- Poeppel D. The maps problem and the mapping problem: two challenges for a cognitive neuroscience of speech and language. Cogn Neuropsychol. 2012 (in press). doi: 10.1080/02643294.2012.710600.

- Poeppel D, Embick D. The relation between linguistics and neuroscience. In: Cutler A, editor. Twenty-first century psycholinguistics: four cornerstones. Mahwah, NJ: Lawrence Erlbaum; 2005. pp. 103–120.

- Poeppel D, Hickok G. Towards a new functional anatomy of language. Cognition. 2004;92:1–12. doi: 10.1016/j.cognition.2003.11.001.

- Price CJ. A review and synthesis of the first 20 years of PET and fMRI studies of heard speech, spoken language and reading. Neuroimage. 2012;62:816–847. doi: 10.1016/j.neuroimage.2012.04.062.

- Pylkkänen L, McElree B. An MEG study of silent meaning. J Cogn Neurosci. 2007;19:1905–1921. doi: 10.1162/jocn.2007.19.11.1905.

- Pylkkänen L, Brennan J, Bemis D. Grounding the cognitive neuroscience of semantics in linguistic theory. Lang Cogn Processes. 2011;26:1317–1337.

- Sandler W, Lillo-Martin D. Sign language and linguistic universals. Cambridge, UK: Cambridge UP; 2006.

- Saur D, Kreher BW, Schnell S, Kümmerer D, Kellmeyer P, Vry MS, Umarova R, Musso M, Glauche V, Abel S, Huber W, Rijntjes M, Hennig J, Weiller C. Ventral and dorsal pathways for language. Proc Natl Acad Sci U S A. 2008;105:18035–18040. doi: 10.1073/pnas.0805234105.

- Shadmehr R, Krakauer JW. A computational neuroanatomy for motor control. Exp Brain Res. 2008;185:359–381. doi: 10.1007/s00221-008-1280-5.

- Sporns O. Networks of the brain. Cambridge, MA: MIT; 2010.

- Taub S. Language from the body: iconicity and metaphor in American Sign Language. Cambridge, UK: Cambridge UP; 2001.

- Turken AU, Dronkers NF. The neural architecture of the language comprehension network: converging evidence from lesion and connectivity analyses. Front Syst Neurosci. 2011;5:1. doi: 10.3389/fnsys.2011.00001.

- Wernicke C. Der aphasische Symptomencomplex: Eine psychologische Studie auf anatomischer Basis. Breslau: Cohn and Weigert; 1874.

- Wolpert DM, Ghahramani Z, Jordan MI. An internal model for sensorimotor integration. Science. 1995;269:1880–1882. doi: 10.1126/science.7569931.

- Zatorre RJ, Belin P, Penhune VB. Structure and function of auditory cortex: music and speech. Trends Cogn Sci. 2002;6:37–46. doi: 10.1016/s1364-6613(00)01816-7.