Abstract

We consider a conductance-based neural network inspired by the generalized Integrate and Fire model introduced by Rudolph and Destexhe in 1996. We show the existence and uniqueness of a Gibbs distribution characterizing spike train statistics. The corresponding Gibbs potential is explicitly computed. These results hold in the presence of a time-dependent stimulus and therefore apply to non-stationary dynamics.

1 Introduction

Neural networks are overwhelmingly complex. While an isolated neuron can exhibit a wide variety of responses to stimuli [1], from regular spiking to chaos [2,3], neurons coupled in a network via synapses (electrical or chemical) may show an even wider variety of collective dynamics [4], resulting from the conjunction of non-linear effects, time propagation delays, synaptic noise, synaptic plasticity, and external stimuli [5]. Focusing on action potentials, this complexity manifests itself in drastic changes in spiking activity, for instance when switching from spontaneous to evoked activity (see, for example, the experiments of A. Riehle’s team on the monkey motor cortex [6-9]). However, beyond this complexity, there may exist hidden laws ruling a (hypothetical) “neural code” [10].

One way of unraveling these hidden laws is to seek regularities or reproducibility in spike statistics. While early investigations of spiking activity focused on firing rates, with neurons considered as independent sources, researchers have more recently concentrated on collective statistical indicators such as pairwise correlations. Thorough experiments in the retina [11,12] as well as in the cat parietal cortex [13] suggested that such correlations are crucial for understanding spiking activity. Those conclusions were obtained using the maximal entropy principle [14]. Assume that the average values of some observable quantities (e.g., firing rates or spike correlations) have been measured. Those average values constitute constraints for the statistical model. In the maximal entropy principle, assuming stationarity, one looks for the probability distribution that maximizes the statistical entropy given those constraints. This leads to a (time-translation invariant) Gibbs distribution. In particular, fixing firing rates and the probabilities of pairwise spike coincidences leads to a Gibbs distribution having the same form as the Ising model. This idea was introduced by Schneidman et al. in [11] for the analysis of retina spike trains. They accurately reproduce the probability of spatial spiking patterns. Since then, their approach has met with great success (see, e.g., [15-17]), although some authors have raised solid objections to this model [12,18-20], while several papers have pointed out the importance of temporal patterns of activity at the network level [21-23]. As a consequence, a few authors [13,24,25] have attempted to define time-dependent models of Gibbs distributions where constraints include time-dependent correlations between pairs, triplets, and so on [26]. Indeed, analyzing the data of [11] with such models yields a more accurate description of the statistics of spatio-temporal spike patterns [27].

Taking into account all the constraints inherent to experiments, it seems extremely difficult to find an optimal model describing spike train statistics. It is in fact likely that there is not one model but many, depending on the experiment, the stimulus, the investigated part of the nervous system, and so on. Additionally, the assumptions made in the works quoted above are difficult to control. In particular, the maximal entropy principle assumes stationary dynamics, while many experiments use a time-dependent stimulus generating a time-dependent response, for which the stationary approximation may not be valid. At this stage, an example in which the explicit form of the spike train probability distribution is known would help to control those assumptions and to design related experiments.

This can be done by considering neural network models. Although, to be tractable, such models may be quite far from biological plausibility, they can give hints about which statistics can be expected in real neural networks. However, even in the simplest examples, characterizing spike statistics arising from the conjunction of non-linear effects, time propagation delays, synaptic noise, synaptic plasticity, and external stimuli is far from trivial on mathematical grounds.

In [28], we nevertheless proposed an exact and explicit characterization of spike train statistics in a discrete-time version of a Leaky Integrate-and-Fire neural network. The results were quite surprising. It was shown that, whatever the parameter values (in particular, the synaptic weights), spike trains are distributed according to a Gibbs distribution whose potential can be explicitly computed. The first surprise lies in the fact that this potential has infinite range; namely, spike statistics have an infinite memory. This is because the membrane potential evolution integrates its past values and the past influence of the network via the leak term. Although leaky integrate and fire models have a reset mechanism that erases the memory of the neuron whenever it spikes, it is not possible to upper bound the next time of firing. As a consequence, the statistics are non-Markovian (for recent examples of non-Markovian behavior in neural models, see also [29]). In the maximal entropy principle interpretation, the infinite range of the potential corresponds to having infinitely many constraints.

Nevertheless, the influence of the leak term decays exponentially fast with time (this property guarantees the existence and uniqueness of a Gibbs distribution). As a consequence, one can approximate the exact Gibbs distribution by the invariant probability of a Markov chain with a memory depth proportional to the log of the (discrete-time) leak term. In this way, the truncated potential corresponds to a finite number of constraints in the maximal entropy principle interpretation. However, the second surprise is that this approximated potential is nevertheless far from the Ising model or any of the models discussed above, which appear to be quite poor approximations. In particular, one needs to consider n-tuples of spikes with time delays. This fact alone raises hard problems about detecting such potentials in experiments. In particular, new types of algorithms for spike train analysis have to be developed [30].

The model considered in [28] is rather academic: time evolution is discrete, synaptic interactions are instantaneous, dynamics is stationary (the stimulus is constant in time) and, as in a leaky integrate and fire model, conductances are constant. It is therefore necessary to investigate whether our conclusions remain valid for more realistic neural network models. In the present paper, we consider a conductance-based model introduced by Rudolph and Destexhe in [31], called the “generalized Integrate and Fire” (gIF) model. This model allows one to consider realistic synaptic responses and conductances that depend on spikes arising in the past of the network, leading to rather complex dynamics, which have been characterized in [32] in the deterministic case (no noise in the dynamics). Moreover, the biological plausibility of this model is well accepted [33,34].

Here, we analyze spike statistics in the gIF model with noise and with a time-dependent stimulus. Moreover, the post-synaptic potential profiles are quite general and encompass all the examples that we know of in the literature. Our main result is a proof of the existence and uniqueness of a Gibbs measure characterizing spike train statistics, for all parameters compatible with physical constraints (finite synaptic weights, bounded stimulus, and positive conductances). Here, as in [28], the corresponding Gibbs potential has infinite range, corresponding to non-Markovian dynamics, although Markovian approximations can be proposed in the gIF model too. The Gibbs potential depends on all the parameters of the model (especially connectivity and stimulus) and has a considerably more complex form than Ising-like models. As a by-product of the proof of our main result, we obtain additional notions and results of interest, such as continuity with respect to the raster, or exponential decay of memory due to the shape of synaptic responses.

The paper is organized as follows. In Section 2, we briefly introduce integrate and fire models and propose two important extensions of the classical models: the spike has a duration and the membrane potential is reset to a non-constant value. These extensions, which are necessary for the validity of our mathematical results, also make the model more biologically plausible (see Section 9). One of the keys of the present work is to consider spike trains (raster plots) as infinite sequences. Since, in gIF models, conductances are updated upon the occurrence of spikes, one has to consider two types of variables with distinct types of dynamics. On the one hand, the membrane potential, which is the physical variable associated with neuron dynamics, evolves continuously. On the other hand, spikes are discrete events. Conductances are updated according to these discrete-time events. The formalism introduced in Sections 2 and 3 allows us to properly handle this mixed dynamics. As a consequence, these sections define the gIF model with more mathematical structure than the original paper [31] and mostly contain original results. Moreover, we add several original features to the model, such as a general form of synaptic profile with exponential decay or the introduction of noise. Section 4 proposes a preliminary analysis of gIF model dynamics. In Sections 5 and 6, we provide several useful mathematical propositions as a necessary step toward the analysis of spike statistics developed in Section 7, where we prove the main result of the paper: existence and uniqueness of a Gibbs distribution describing spike statistics. Sections 8 and 9 are devoted to a discussion of the practical consequences of our results for neuroscience.

2 Integrate and fire model

We consider the evolution of a set of N neurons. Here, neurons are considered as “points” instead of spatially extended and structured objects. As a consequence, we define, for each neuron $k \in \{1, \dots, N\}$, a variable $V_k(t)$ called the “membrane potential of neuron k at time t”, without specifying which part of a real neuron (axon, soma, dendritic spine, …) it corresponds to. Denote by $V = (V_k)_{k=1}^{N}$ the vector of membrane potentials.

We focus here on “integrate and fire models”, where dynamics always consists of two regimes.

2.1 The “integrate regime”

Fix a real number θ called the “firing threshold of the neuron”.1 Below the threshold, $V_k(t) < \theta$, the dynamics of neuron k is driven by an equation of the form:

$$C_k \frac{dV_k}{dt} + g_k\,V_k = i_k \tag{1}$$

where $C_k$ is the membrane capacity of neuron k. In its most general form, the membrane conductance $g_k$ of neuron k depends on $V$, on additional variables such as the probability of ionic channels being open (see, e.g., the Hodgkin-Huxley equations [35]), and on time t. The explicit form of $g_k$ in the present model is developed in Section 3.4. The current $i_k$ typically depends on time t and on the past activity of the network. It also contains a stochastic component modeling noise in the system (e.g., synaptic transmission, see Section 3.5).

2.2 LIF model

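A classical example of an integrate and fire model is the Leaky Integrate and Fire (LIF) model introduced in [36], where Equation (1) reads:

$$\frac{dV_k}{dt} = -\frac{V_k}{\tau_{L,k}} + \frac{i_k(t)}{C_k} \tag{2}$$

where the conductance $g_k$ in (1) is a constant and $\tau_{L,k} = \frac{C_k}{g_k}$ is the characteristic time of membrane potential decay when no current is present (“leak term”).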

2.3 Spikes

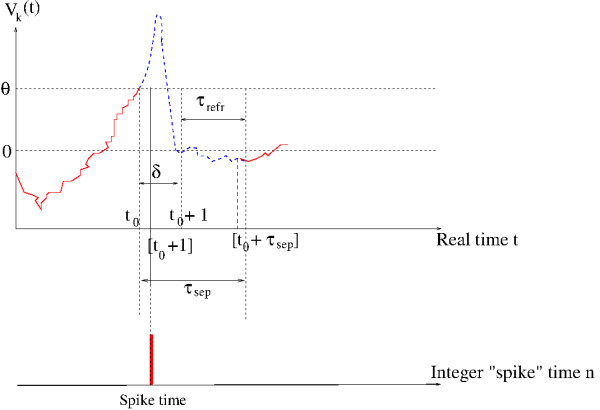

The dynamical evolution (1) may eventually lead to exceed θ. If, at some time t, , then neuron k emits a spike or “fires”. In our model, like in biophysics, a spike has a finite duration ; this is a generalization of the classical formulation of integrate and fire models where the spike is considered instantaneous. On biophysical grounds, δ is of order of a millisecond. Changing the time units, we may set without loss of generality. Additionally, neurons have a refractory period where they are not able to emit a new spike although their membrane potential can fluctuate below the threshold (see Figure 1). Hence, spikes emitted by a given neuron are separated by a minimal time scale

Fig. 1.

Time course of the membrane potential in our model. The blue dashed curve illustrates the shape of a real spike, but what we model is the red curve.

| (3) |

2.4 Raster plots

In experiments, the activity of spiking neurons is represented by “raster plots”, namely a graph with time on the abscissa and neuron labels on the ordinate, such that a vertical bar is drawn each “time” a neuron emits a spike. Since spikes have a finite duration δ, such a representation limits the time resolution: events on a time scale smaller than δ are not distinguished. As a consequence, if neuron 1 fires at time $t_1$ and neuron 2 at time $t_2$ with $|t_1 - t_2| < \delta$, the two spikes may appear simultaneous on the raster. Thus, the raster representation introduces a time quantization and tends to enhance synchronization. In gIF models, conductances are updated upon the occurrence of spikes (see Section 3.2), which are considered as such discrete events. This could correspond to the following “experiment”. Assume that we measure the spikes emitted by a set of in vitro neurons and that we use this information to update the conductances of a model, in order to see how well this model “matches” the real neurons (see [34] for a nice investigation in this spirit). Then, we would have to take into account that the information provided by the experimental raster plot is discrete, even if the membrane potential evolves continuously. The consequences of this time discretization, as well as the limit $\delta \to 0$, are developed in the discussion section.

As a consequence, one has to consider two types of variables with distinct types of dynamics. On the one hand, the membrane potential, which is the physical variable associated with neuron dynamics, evolves in continuous time. On the other hand, spikes, which are the quantities of interest in the present paper, are discrete events. To properly define this mixed dynamics and study its properties, we have to model spike times and raster plots.

2.5 Spike times

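If, at time t, $V_k(t) \ge \theta$, a spike is registered at the integer time immediately after t, called the spike time. Choosing integers for spike times is a direct consequence of setting $\delta = 1$. Thus, to each neuron k and integer n, we associate a “spiking state” defined by:

$$\omega_k(n) = \begin{cases} 1, & \text{if there exists } t \in [n-1, n) \text{ such that } V_k(t) \ge \theta,\\ 0, & \text{otherwise.} \end{cases}$$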

For convenience, and in order to simplify the notation in the mathematical developments, we call $[t]$ the largest integer that is ≤ t (thus $[t] \le t < [t] + 1$, and $[n] = n$ for any integer n). Thus, the integer immediately after t is $[t] + 1$, and we therefore have $\omega_k([t] + 1) = 1$ whenever $V_k(t) \ge \theta$. Although the characteristic events in a raster plot are spikes (the neuron fires), it is useful in subsequent developments to also consider the case when the neuron is not firing ($\omega_k(n) = 0$).
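As a rough illustration of this registration rule, the Python sketch below maps a neuron's threshold-crossing times to its spiking states on a window of integer times. The crossing times are assumed to be given (for instance by a simulation), and the helper names `spike_time` and `spiking_states` are hypothetical.

```python
import math
from typing import Iterable, List


def spike_time(t: float) -> int:
    """Integer time immediately after t, i.e. [t] + 1 with [t] = floor(t)."""
    return math.floor(t) + 1


def spiking_states(crossing_times: Iterable[float], n_min: int, n_max: int) -> List[int]:
    """Spiking states omega_k(n), n = n_min..n_max, of one neuron.

    omega_k(n) = 1 iff some crossing time t (a time with V_k(t) >= theta) satisfies
    [t] + 1 = n, i.e. t lies in [n - 1, n).
    """
    fired = {spike_time(t) for t in crossing_times}
    return [1 if n in fired else 0 for n in range(n_min, n_max + 1)]


# A crossing at t = 2.1 is registered at n = 3; one at exactly t = 4.0 at n = 5.
print(spiking_states([2.1, 4.0], 0, 5))  # [0, 0, 0, 1, 0, 1]
```

A crossing that occurs exactly at an integer time t is registered at t + 1, which follows directly from the definition of $[t]$.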

2.6 Reset

In the classical formulation of integrate and fire models, the spike occurs simultaneously with a reset of the membrane potential to some constant value $V_{\mathrm{reset}}$, called the “reset potential”. Instantaneous reset is a source of pathologies, as discussed in [32,37] and in the discussion section. Here, we consider that the reset occurs after a time delay that includes the spike duration and the refractory period. We set:

| (4) |

The reset time is taken to be an integer rather than a real number because this eases the notation and the proofs. Since the reset value is random (see below and Figure 1), this assumption has no impact on the dynamics.

Indeed, in our model, the reset value is not a constant. It is a Gaussian random variable $V_k^{\mathrm{reset}}$ with mean zero (we set the rest potential to zero without loss of generality) and variance $\sigma_R^2$. In this way, we model the spike duration and refractory period, as well as the random oscillations of the membrane potential during the refractory period. As a consequence, the value of $V_k$ when the neuron can fire again is not a constant, as it is in classical IF models. A related reference (spiking neurons with partial reset) is [38]. The assumption $\sigma_R > 0$ is necessary for our mathematical developments (see the bounds (37)). We assume $\sigma_R$ to be small, to avoid trivial and unrealistic situations where $V_k^{\mathrm{reset}} \ge \theta$ with large probability, which would lead the neuron to fire all the time. Note, however, that this assumption is not required to establish our mathematical results. We also assume that, in successive resets, the random variables $V_k^{\mathrm{reset}}$ are independent.
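To see why the reset variance should be small, one can compute the probability that a Gaussian reset value with mean 0 and standard deviation $\sigma_R$ already lies at or above the threshold θ. The sketch below assumes θ > 0 (threshold above the rest potential, which is set to 0); the function name and the numerical values are purely illustrative.

```python
import math


def prob_reset_above_threshold(theta: float, sigma_r: float) -> float:
    """P(V_reset >= theta) for V_reset ~ N(0, sigma_r**2), with sigma_r > 0.

    Uses 1 - Phi(x) = erfc(x / sqrt(2)) / 2 with x = theta / sigma_r.
    """
    if sigma_r <= 0:
        raise ValueError("sigma_r must be positive")
    return 0.5 * math.erfc(theta / (sigma_r * math.sqrt(2.0)))


# Hypothetical values (mV): threshold 20 mV above rest, increasing reset spread.
for sigma_r in (2.0, 5.0, 20.0):
    print(sigma_r, prob_reset_above_threshold(20.0, sigma_r))
```

The probability is negligible when $\sigma_R$ is a small fraction of θ and becomes substantial once $\sigma_R$ is comparable to θ.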

2.7 The shape of membrane potential during the spike

On biophysical grounds, the time course of the membrane potential during the spike includes depolarization and repolarization phases due to the non-linear effects of gated ionic channels on the conductance. In modeling, this leads to the introduction of additional variables such as activation/inactivation probabilities, as in the Hodgkin-Huxley model [35], or an adaptation current, as, e.g., in the FitzHugh-Nagumo model [39-42] (see the discussion section for extensions of our results to those models). Here, since we consider only one variable for the neuron state, the membrane potential, we need to define the spike profile, i.e., the time course of $V_k(t)$ between the threshold crossing and the reset. It turns out that the precise shape of this profile plays no role in the developments proposed here, where we concentrate on spike statistics. Indeed, a spike is registered whenever $V_k(t) \ge \theta$, and this does not depend on the spike shape. What we need is therefore to define the membrane potential evolution before the spike, given by (1), and after the spike, given by (4) (see Figure 1).

2.8 Mathematical representation of raster plots

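The “spiking pattern” of the neural network at integer time n is the vector $\omega(n) = (\omega_k(n))_{k=1}^{N}$. For $m \le n$, we write $\omega_m^n = [\omega(m), \dots, \omega(n)]$ for the ordered sequence of spiking patterns between m and n. Such sequences are called spike blocks. Additionally, for $n < l$, we write $\omega_m^n\,\omega_{n+1}^{l}$ for the concatenation of the blocks $\omega_m^n$ and $\omega_{n+1}^{l}$.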

Call $\mathcal{A} = \{0, 1\}^N$ the set of spiking patterns (alphabet). An element of $\mathcal{A}^{\mathbb{Z}}$, i.e., a bi-infinite ordered sequence of spiking patterns, is called a “raster plot”. It tells us which neurons are firing at each time $n \in \mathbb{Z}$. In experiments, raster plots are obviously finite sequences of spiking patterns, but the extension to $\mathbb{Z}$, especially the possibility of considering an arbitrarily distant past (negative times), is a key aspect of the present work. In particular, the notation $\omega_{-\infty}^{n}$ refers to spikes occurring from −∞ to n.

To each raster ω and each neuron index $j \in \{1, \dots, N\}$, we associate an ordered (generically infinite) list of “spike times” $t_j^{(r)}(\omega)$ (integer numbers) such that $t_j^{(r)}(\omega)$ is the r-th time of firing of neuron j in the raster ω. In other words, we have $\omega_j(n) = 1$ if and only if $n = t_j^{(r)}(\omega)$ for some r. We use the following convention here: the index k is used for a post-synaptic neuron, while the index j refers to pre-synaptic neurons. Spiking events are used to update the conductance of neuron k according to spikes emitted by pre-synaptic neurons. That is why we label the spike times with an index j.

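We introduce here two specific rasters which are used in the paper. We write Ω0 for the raster such that $\omega_k(n) = 0$ for all k and all $n \in \mathbb{Z}$ (no neuron ever fires), and Ω1 for the raster such that $\omega_k(n) = 1$ for all k and all $n \in \mathbb{Z}$ (each neuron fires at every integer time).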
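The objects above can be mirrored on a finite window in a few lines of Python. This is a sketch under two assumptions: the raster is truncated to times 0, …, T−1 (the paper works with bi-infinite sequences), and it is stored as a list of spiking patterns with `raster[n][k]` equal to ω_k(n). All helper names are hypothetical.

```python
from typing import List

# raster[n][k] = omega_k(n): spiking state of neuron k at integer time n,
# on a finite window n = 0, ..., T - 1 (an assumption; the paper uses n in Z).
Raster = List[List[int]]


def block(raster: Raster, m: int, n: int) -> Raster:
    """Spike block omega_m^n: spiking patterns at times m, ..., n (inclusive)."""
    return raster[m:n + 1]


def spike_times(raster: Raster, j: int) -> List[int]:
    """Ordered spike times t_j^(r) of neuron j, i.e. the n with omega_j(n) = 1."""
    return [n for n, pattern in enumerate(raster) if pattern[j] == 1]


def omega0(n_neurons: int, T: int) -> Raster:
    """Truncation of Omega_0: no neuron ever fires."""
    return [[0] * n_neurons for _ in range(T)]


def omega1(n_neurons: int, T: int) -> Raster:
    """Truncation of Omega_1: every neuron fires at every integer time."""
    return [[1] * n_neurons for _ in range(T)]


raster = [[0, 1], [1, 0], [1, 1], [0, 0]]  # N = 2 neurons, T = 4 time steps
print(spike_times(raster, 0))  # [1, 2]
print(block(raster, 1, 2))  # [[1, 0], [1, 1]]
# Concatenating omega_0^1 and omega_2^3 gives back omega_0^3.
print(block(raster, 0, 1) + block(raster, 2, 3) == block(raster, 0, 3))  # True
```

Storing patterns time-major makes spike blocks plain slices and concatenation plain list addition.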

Finally, we use the following notation borrowed from [43]. We note, for , and r integer:

| (5) |

For simplicity, we consider that the refractory period is smaller than 1, so that a neuron can fire at two consecutive time steps (i.e., one can have $\omega_k(n) = 1$ and $\omega_k(n+1) = 1$). This constraint is discussed in Section 9.2.

2.9 Representation of time-dependent functions

Throughout the paper, we use the following convention. For a real function f of t and ω, we write $f(t, \omega)$ for $f(t, \omega_{-\infty}^{[t]})$ to simplify notation. This notation accounts for the duality between variables such as the membrane potential, which evolves in continuous time, and raster plots, which are labeled by discrete time. Thus, $f(t, \omega)$ is a function of the continuous variable t and of the spike block $\omega_{-\infty}^{[t]}$, where by definition $[t] \le t$; namely, $f(t, \omega)$ depends on the spike sequences occurring before t. This constraint is imposed by causality.

2.10 Last reset time

We define $\tau_k(t, \omega)$ as the last time before t at which the membrane potential of neuron k has been reset, in the raster ω. It is −∞ if the membrane potential has never been reset. As a consequence of our choice (4), $\tau_k(t, \omega)$ is an integer fixed by t and the raster before t. The value of the membrane potential of neuron k at time t is controlled by the reset value at time $\tau_k(t, \omega)$ and by the subsequent subthreshold evolution (1) from time $\tau_k(t, \omega)$ to time t.

3 Generalized integrate and fire models

In this paper, we concentrate on an extension of (2), called the “generalized Integrate-and-Fire” (gIF) model, introduced in [31], which is closer to biology [33,34] since it takes neuronal interactions via synaptic responses more seriously into account.

3.1 Synaptic conductances

Depending on the neurotransmitter they use for synaptic transmission (AMPA, NMDA, GABA A, GABA B [44]), neurons can be excitatory (population $\mathcal{E}$) or inhibitory (population $\mathcal{I}$). This is modeled by introducing reversal potentials $E_E$ for excitatory synapses (AMPA and NMDA) and $E_I$ for inhibitory synapses (GABA A and GABA B). We focus here on one population of excitatory and one population of inhibitory neurons, although extensions to several populations may be considered as well. Also, each neuron k is submitted to a current $i_k(t)$. We assume that this current has a stochastic component that mimics synaptic noise (Section 3.5).

The variation in the membrane potential of neuron k at time t reads:

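$$C_k \frac{dV_k}{dt} = -g_L\,(V_k - E_L) - g_{k,E}(t)\,(V_k - E_E) - g_{k,I}(t)\,(V_k - E_I) + i_k(t) \tag{6}$$

where $g_L$ is a leak conductance, $E_L$ is the leak reversal potential (about −65 mV), $g_{k,E}(t)$ is the conductance of the excitatory population and $g_{k,I}(t)$ the conductance of the inhibitory population. They are given by:

$$g_{k,E}(t) = \sum_{j \in \mathcal{E}} g_{kj}(t), \qquad g_{k,I}(t) = \sum_{j \in \mathcal{I}} g_{kj}(t) \tag{7}$$

where $g_{kj}(t)$ is the conductance of the synaptic contact $j \to k$.

We may rewrite Equation (6) in the form (1) by setting

$$g_k(t) = g_L + g_{k,E}(t) + g_{k,I}(t)$$

and by taking as the current in (1) the quantity

$$g_L E_L + g_{k,E}(t)\,E_E + g_{k,I}(t)\,E_I + i_k(t).$$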

3.2 Conductance update upon a spike occurrence

The conductances in (7) depend on time t, but also on pre-synaptic spikes occurring before t. This is a general statement, which is modeled in gIF models as follows. Upon arrival of a spike from the pre-synaptic neuron j at time $t_j^{(r)}$, the membrane conductance of the post-synaptic neuron k is modified as:

| (8) |

In this equation, the quantity $G_{kj}$ characterizes the maximal amplitude of the conductance during a post-synaptic potential. We use the convention that $G_{kj} = 0$ if and only if there is no synapse between j and k. This allows us to encode the graph structure of the neural network in the matrix G with entries $G_{kj}$. Note that the $G_{kj}$’s can evolve in time due to synaptic plasticity mechanisms (see Section 9.4).

The function $\alpha_{kj}(t)$ (called the “alpha” profile [44]) mimics the time course of the synaptic conductance upon the occurrence of the spike. Classical examples are:

$$\alpha_{kj}(t) = e^{-\frac{t}{\tau_{kj}}}\,H(t) \tag{9}$$

(exponential profile) or:

$$\alpha_{kj}(t) = \frac{t}{\tau_{kj}}\,e^{-\frac{t}{\tau_{kj}}}\,H(t) \tag{10}$$

with H the Heaviside function (which mimics causality) and $\tau_{kj}$ the characteristic decay time of the synaptic response. Since t is a time, the division by $\tau_{kj}$ ensures that $\alpha_{kj}$ is dimensionless: on physical grounds (dimensionality of physical quantities), this makes the subsequent equations easier to read.

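Unlike (9), the synaptic profile (10), with $\alpha_{kj}(0) = 0$ while $\alpha_{kj}(t)$ is maximal for $t = \tau_{kj}$, allows one to smoothly delay the action of the spike on the post-synaptic neuron. More general forms of synaptic responses could be considered as well. For example, the α profile may obey a Green equation of type [45]:

$$\left(\tau_{kj}\frac{d}{dt} + 1\right)^{n} \alpha_{kj}(t) = \tau_{kj}\,\delta(t),$$

where $n = 1$ corresponds to (9), $n = 2$ to (10), and so on.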
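A minimal Python sketch of the two classical profiles, assuming the forms $e^{-t/\tau_{kj}}H(t)$ and $\frac{t}{\tau_{kj}}e^{-t/\tau_{kj}}H(t)$ and the convention H(0) = 1. A grid search confirms that the second profile vanishes at t = 0 and peaks at t = τ. The decay time is a hypothetical value in units of the spike duration.

```python
import math


def alpha_exp(t: float, tau: float) -> float:
    """Exponential profile exp(-t / tau) H(t), with the convention H(0) = 1."""
    return math.exp(-t / tau) if t >= 0 else 0.0


def alpha_delayed(t: float, tau: float) -> float:
    """Profile (t / tau) exp(-t / tau) H(t): zero at t = 0, peak value 1/e at t = tau."""
    return (t / tau) * math.exp(-t / tau) if t >= 0 else 0.0


tau = 5.0  # hypothetical synaptic decay time, in units of the spike duration
grid = [i * 0.01 for i in range(5001)]  # t in [0, 50]
t_peak = max(grid, key=lambda t: alpha_delayed(t, tau))
print(alpha_exp(0.0, tau), alpha_delayed(0.0, tau), t_peak)
```

Both functions return dimensionless values, consistent with dividing t by τ.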

3.3 Mathematical constraints on the synaptic responses

Throughout the paper, we assume that the $\alpha_{kj}$’s are positive and bounded. Moreover, we assume that:

| (11) |

for some integer d, so that $\alpha_{kj}(t)$ decays exponentially fast as $t \to +\infty$, with a characteristic time $\tau_{kj}$, the decay time of the evoked post-synaptic potential. This constraint is satisfied by all the synaptic response kernels that we know of [44,45].

This has the following consequence. For all t, integer, r integer, we have, setting , where is the fractional part:

Therefore, as ,

where is a polynomial of degree d.

We introduce the following (Hardy) notation: if a function is bounded from above, as , by a function we write: . Using this notation, we have therefore:

Proposition 1

| (12) |

as.

Additionally, the constraint (11) implies that there is some such that, for all t, for all k, j:

| (13) |

Indeed, for integer, call . Then,

Due to (11), this series converges (e.g., by the Cauchy criterion). We set:

On physical grounds, this implies that the conductance remains bounded, even if each pre-synaptic neuron fires all the time (see Equation (29) below).
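To make this boundedness concrete, consider the exponential profile $e^{-t/\tau}H(t)$. If a neuron fires at every integer time (raster Ω1) and its last spike is $x \ge 0$ time units old, the summed response is the geometric series $\sum_{n \ge 0} e^{-(x+n)/\tau} = e^{-x/\tau}/(1 - e^{-1/\tau}) \le 1/(1 - e^{-1/\tau})$. The sketch below, with a hypothetical τ and hypothetical helper names, compares the truncated series with this closed form. It is only an illustration for one profile, not the general constant of (13).

```python
import math


def alpha_exp(t: float, tau: float) -> float:
    """Exponential synaptic profile exp(-t / tau) H(t), with H(0) = 1."""
    return math.exp(-t / tau) if t >= 0 else 0.0


def all_firing_sum(x: float, tau: float, n_terms: int = 10_000) -> float:
    """Sum of alpha(x + n), n = 0, 1, ...: summed response to a neuron that fired at
    every integer time (raster Omega_1), its last spike being x time units old."""
    return sum(alpha_exp(x + n, tau) for n in range(n_terms))


def geometric_bound(tau: float) -> float:
    """Upper bound 1 / (1 - exp(-1 / tau)) of the sum above, valid for any x >= 0."""
    return 1.0 / (1.0 - math.exp(-1.0 / tau))


tau = 5.0  # hypothetical decay time (units of the spike duration)
for x in (0.1, 0.5, 1.0):
    s = all_firing_sum(x, tau)
    closed_form = math.exp(-x / tau) * geometric_bound(tau)
    print(x, s, closed_form, geometric_bound(tau))
```

The bound grows roughly like τ for large τ: slower synapses accumulate more conductance, but the total stays finite.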

3.4 Synaptic summation

Assume that Equation (8) remains valid for an arbitrary number of pre-synaptic spikes emitted by neuron j within a finite time interval (i.e., neglecting non-linear effects such as saturation due to the finite amount of neurotransmitter). Then, one obtains the following equation for the conductance at time t, upon the arrival of spikes at times $t_j^{(r)}$ between s and t:

The conductance at time s, $g_{kj}(s)$, depends on the activity of neuron j preceding s. This term is therefore unknown unless one knows the exact evolution before s. One way to circumvent this problem is to take s arbitrarily far in the past, i.e., to take $s \to -\infty$, in order to remove the dependence on initial conditions. This corresponds to the following situation. When one observes a real neural network, the time at which the observation starts, say $t_0$, is usually not the time at which the system began to exist (s in our notation). Taking s arbitrarily far in the past corresponds to assuming that the system has evolved long enough to have reached a sort of “permanent regime”, not necessarily stationary, when the observation starts. On phenomenological grounds, it is enough to take −s larger than all characteristic relaxation times in the system (e.g., leak rate and synaptic decay rate). Here, for mathematical purposes, it is easier to take the limit $s \to -\infty$.

Since $g_{kj}(t)$ depends on the raster plot up to time t via the spike times $t_j^{(r)}$, this limit only makes sense when taken “conditionally” on a prescribed raster plot ω. In other words, one can know the value of the conductances at time t only if the past spike times of the network are known. From now on, we write $g_{kj}(t, \omega)$ to make this dependence explicit.

We set

| (14) |

with the convention that so that (recall that Ω0 is the raster such that no neuron ever fires). The limit (14) exists from (13).
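For a fixed raster, the conductance can then be evaluated by summing the synaptic responses to all past spikes. The sketch below assumes linear summation of exponential profiles, $g_{kj}(t,\omega) = G_{kj}\sum_{r:\, t_j^{(r)} < t} e^{-(t - t_j^{(r)})/\tau_{kj}}$, with zero conductance in the far past, so that the raster Ω0 gives zero synaptic conductance. It also assumes the total conductance $g_L + \sum_j g_{kj}$, following (6) and (7). The function names and numbers are hypothetical.

```python
import math
from typing import Sequence, Tuple


def g_kj(t: float, spikes_j: Sequence[int], G: float, tau: float) -> float:
    """Sketch of g_kj(t, omega): sum of G * exp(-(t - s) / tau) over the spike times s
    of neuron j strictly before t (causality). With no spikes (raster Omega_0) the
    conductance is 0, matching a zero conductance in the far past."""
    return G * sum(math.exp(-(t - s) / tau) for s in spikes_j if s < t)


def g_k(t: float, g_L: float, presyn: Sequence[Tuple[Sequence[int], float, float]]) -> float:
    """Total conductance g_L + sum_j g_kj(t, omega); presyn holds (spikes_j, G_kj, tau_kj)."""
    return g_L + sum(g_kj(t, spikes, G, tau) for spikes, G, tau in presyn)


presyn = [([1, 2, 5], 2.0, 2.0), ([], 1.0, 3.0)]  # hypothetical raster and parameters
print(g_k(3.5, 0.1, presyn))  # the spike at time 5 does not contribute yet
```

Evaluating the conductance requires the whole spike history before t, which is exactly why the limit is taken conditionally on ω.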

3.5 Noise

We allow the current $i_k(t)$ in Equation (6) to contain a stochastic term corresponding to noise, so that:

$$i_k(t) = i_k^{(ext)}(t) + \sigma_B\,\xi_k(t) \tag{15}$$

where $i_k^{(ext)}(t)$ is a deterministic external current and $\sigma_B\,\xi_k(t)$ is a noise term whose amplitude is controlled by $\sigma_B$. The model admits an extension where $\sigma_B$ depends on k, but this extension is straightforward and we do not develop it here. The noise term can be interpreted as the random variation in the ionic flux of charges crossing the post-synaptic membrane per unit time, upon opening of ionic channels due to the binding of neurotransmitter.

We assume that $\xi_k = \frac{dW_k}{dt}$ is a white noise, where $W_k$ is a Wiener process, so that $W = (W_k)_{k=1}^{N}$ is an N-dimensional Wiener process. Call P the noise probability distribution and $\mathbb{E}$ the expectation under P. Then, by definition, for all k, j and all times t, s: $\mathbb{E}[\xi_k(t)] = 0$ and $\mathbb{E}[\xi_k(t)\,\xi_j(s)] = \delta_{kj}\,\delta(t - s)$, where $\delta_{kj} = 0$ if $k \neq j$, $\delta_{kk} = 1$, and $\delta(t - s)$ is the Dirac distribution.

3.6 Differential equation for the integrate regime of gIF

Summarizing, we write Equation (6) in the form:

| (16) |

where:

| (17) |

This is the most general conductance form considered in this paper.

Moreover,

| (18) |

where is the synaptic weight:

These equations hold when the membrane potential is below the threshold (Integrate regime).

Therefore, gIF models constitute rather complex dynamical systems: the vector field (r.h.s.) of the differential Equation (16) depends on an auxiliary “variable”, the past spike sequence. To properly define the evolution of $V_k$ from time t to later times, one needs to know the spikes arising before t. This is precisely what makes gIF models more interesting than LIF models. The definition of conductances introduces long-term memory effects.

IF models implement a reset mechanism on the membrane potential: if neuron k has been reset between s and t, say at time τ, then $V_k(t)$ depends only on the reset value at time τ and not on earlier values of $V_k$, as in (4). However, in the gIF model, unlike in the LIF model, there is also a dependence on the past via the conductance, and this dependence is not erased by the reset. That is why we have to consider a system with infinite memory.
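The following Python sketch integrates the subthreshold dynamics of a single neuron for a fixed pre-synaptic raster with the Euler-Maruyama scheme. It assumes the form obtained by combining (6), (7) and (15), $C_k \frac{dV_k}{dt} + g_k V_k = g_L E_L + \sum_j g_{kj} E_j + i_k^{(ext)} + \sigma_B \xi_k$, where $E_j$ is $E_E$ or $E_I$ depending on the population of j. It further assumes exponential synaptic profiles summed linearly over past spikes. All parameter values are hypothetical and dimensionless. Only the integrate regime is covered: the integration stops at the first threshold crossing, and reset is not modeled.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

# Each pre-synaptic neuron j: (E_j, spike times of j, G_kj, tau_kj) -- hypothetical inputs.
Presyn = Tuple[float, Sequence[int], float, float]


def simulate_integrate_regime(
    v0: float, t0: float, t1: float, dt: float,
    C: float, g_L: float, E_L: float,
    presyn: Sequence[Presyn], i_ext: Callable[[float], float],
    sigma_B: float, theta: float, seed: int = 0,
) -> Tuple[List[float], List[float]]:
    """Euler-Maruyama sketch of C dV = (i - g V) dt + sigma_B dW for one neuron, with
    g = g_L + sum_j g_kj and i = g_L E_L + sum_j g_kj E_j + i_ext, where
    g_kj(t) = G_kj * sum over past spikes s < t of exp(-(t - s) / tau_kj).
    The pre-synaptic raster is fixed. Stops at the first threshold crossing."""
    rng = random.Random(seed)
    t, v = t0, v0
    times, volts = [t], [v]
    for _ in range(int(round((t1 - t0) / dt))):
        g_tot, i_det = g_L, g_L * E_L + i_ext(t)
        for E_j, spikes, G, tau in presyn:
            g = G * sum(math.exp(-(t - s) / tau) for s in spikes if s < t)
            g_tot += g
            i_det += g * E_j
        dW = rng.gauss(0.0, math.sqrt(dt))  # Wiener increment over [t, t + dt]
        v += (dt * (i_det - g_tot * v) + sigma_B * dW) / C
        t += dt
        times.append(t)
        volts.append(v)
        if v >= theta:  # end of the integrate regime: a spike is emitted
            break
    return times, volts


# No noise, no synapse, constant drive: V(t) = 50 (1 - exp(-0.1 t)) reaches 20
# at t = -10 ln(0.6), about 5.1.
ts, vs = simulate_integrate_regime(0.0, 0.0, 20.0, 0.001, C=1.0, g_L=0.1, E_L=0.0,
                                   presyn=[], i_ext=lambda t: 5.0, sigma_B=0.0, theta=20.0)
print(ts[-1], vs[-1])
```

Recomputing each conductance from the full spike list keeps the sketch close to the definition; a practical implementation would update the synaptic variables recursively instead.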

3.7 The parameters space

The stochastic dynamical system (16) depends on a huge set of parameters: the membrane capacities $C_k$, $k = 1, \dots, N$; the threshold θ; the reversal potentials $E_L$, $E_E$, $E_I$; the leak conductance $g_L$; the maximal synaptic conductances $G_{kj}$, $j, k = 1, \dots, N$, which define the neural network topology; the characteristic decay times $\tau_{kj}$ of synaptic responses; the noise amplitude $\sigma_B$; and, additionally, the parameters defining the external current $i_k^{(ext)}(t)$. Although some parameters can be fixed from biology, such as $C_k$, the reversal potentials, $g_L$, …, others, such as the $G_{kj}$’s, must be allowed to vary freely in order to leave open the possibility of modeling very different neural network structures.

In this paper, we are not interested in describing properties arising for specific values of those parameters, but rather in generic properties that hold on sets of parameters. More specifically, we denote the list of all parameters by the symbol γ. This is a vector in $\mathbb{R}^K$, where K is the total number of parameters. In this paper, we assume that γ belongs to a bounded subset $\mathcal{H}$ of $\mathbb{R}^K$. Basically, we want to avoid situations where some parameters become infinite, which would be unphysical. So the limits of $\mathcal{H}$ are the limits imposed by biophysics. Additionally, we assume that $\sigma_B > 0$ and $\sigma_R > 0$. Together with physical constraints such as “conductances are positive”, these are the only assumptions made on the parameters. All mathematical results stated in the paper hold for any $\gamma \in \mathcal{H}$.

4 gIF model dynamics for a fixed raster

We assume that the raster ω is fixed, namely, the spike history is given. Then, it is possible to integrate Equation (16) (integrate regime) and to obtain explicitly the value of the membrane potential of a neuron at time t, given its value at time s. Additionally, the reset condition (4) removes the dependence of neuron k on the past prior to the last reset time $\tau_k(t, \omega)$.

4.1 Integrate regime

For $s \le t$ and $k = 1, \dots, N$, set:

| (19) |

We have:

and:

Fix two times $s < t$ and assume that, for neuron k, $V_k(u) < \theta$ for all $u \in [s, t]$, so that the membrane potential obeys (16). Then,

Integrating the previous equation between s and t, we obtain:

This equation gives the variation of the membrane potential during a period of rest (no spike) of the neuron. Note, however, that this neuron can still receive spikes from the other neurons via the update of conductances (made explicit in the previous equation by the dependence on the raster plot ω).
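The explicit solution follows from an integrating factor. The derivation below is a sketch that assumes two forms: the effective leak (19) is $\nu_k(s,t,\omega) = \exp\left(-\frac{1}{C_k}\int_s^t g_k(u,\omega)\,du\right)$, and (16) reads $C_k \frac{dV_k}{dt} + g_k(t,\omega)V_k = i_k(t,\omega)$, with $i_k(t,\omega)$ the total current, noise included.

```latex
\nu_k(s,t,\omega) = \exp\!\left(-\frac{1}{C_k}\int_s^t g_k(u,\omega)\,du\right),
\qquad \nu_k(s,s,\omega) = 1,
\qquad \frac{\partial \nu_k(s,t,\omega)}{\partial t} = -\frac{g_k(t,\omega)}{C_k}\,\nu_k(s,t,\omega);

\frac{d}{du}\left[\frac{V_k(u)}{\nu_k(s,u,\omega)}\right]
  = \frac{1}{\nu_k(s,u,\omega)}\left[\frac{dV_k}{du} + \frac{g_k(u,\omega)}{C_k}\,V_k(u)\right]
  = \frac{i_k(u,\omega)}{C_k\,\nu_k(s,u,\omega)};

V_k(t) = \nu_k(s,t,\omega)\,V_k(s) + \frac{1}{C_k}\int_s^t \nu_k(u,t,\omega)\,i_k(u,\omega)\,du,
\qquad \text{since } \frac{\nu_k(s,t,\omega)}{\nu_k(s,u,\omega)} = \nu_k(u,t,\omega).
```

With noise, the part of the integral involving $\sigma_B \xi_k$ is understood as the Itô integral $\frac{\sigma_B}{C_k}\int_s^t \nu_k(u,t,\omega)\,dW_k(u)$. Since $\nu_k$ is deterministic once ω is fixed, this is a Gaussian variable with mean zero.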

The term given by (19) is an effective leak between s and t. In the leaky integrate and fire model, it would have been equal to $e^{-\frac{t-s}{\tau_{L,k}}}$, with $\tau_{L,k}$ the membrane time constant of the LIF model. The term:

has the dimension of a voltage. It corresponds to the integration of the total current between s and t, weighted by the effective leak term. It decomposes as

where,

| (20) |

is the synaptic contribution. Moreover,

where we set:

| (21) |

the characteristic leak time of neuron k. We have included the leak reversal potential term in this “external” term for convenience. Therefore, even if there is no external current, this term is nevertheless non-zero.

The sum of the synaptic and external terms gives the deterministic contribution to the membrane potential. We write:

Finally,

| (22) |

is a noise term. This is a Gaussian process with mean 0 and variance:

| (23) |

The square root of this quantity has the dimension of a voltage.
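To make the variance formula concrete, the following sketch assumes that (22) is the stochastic integral (σ_B/C)∫_s^t ν(u,t) dB(u), so that (23) reads (σ_B/C)²∫_s^t ν(u,t)² du. The noise amplitude σ_B, the conductance function and all names are illustrative assumptions. The sketch evaluates this integral by quadrature and compares it with a Monte Carlo estimate from an Euler-Maruyama simulation of the noise-only equation. For a constant conductance g, the exact value is (σ_B/C)²(τ/2)(1 − e^{−2(t−s)/τ}) with τ = C/g.

```python
import math
import random


def noise_variance(g, s, t, C, sigma_b, n=2000):
    """Assumed form of (23): (sigma_B / C)^2 * integral of nu(u, t)^2 over [s, t] (trapezoidal rule)."""
    h = (t - s) / n
    grid = [s + j * h for j in range(n + 1)]
    tail = [0.0] * (n + 1)  # tail[j] approximates the integral of g over [grid[j], t]
    for j in range(n - 1, -1, -1):
        tail[j] = tail[j + 1] + 0.5 * h * (g(grid[j]) + g(grid[j + 1]))
    nu_sq = [math.exp(-2.0 * x / C) for x in tail]  # nu(u, t)^2 on the grid
    integral = h * (0.5 * nu_sq[0] + sum(nu_sq[1:-1]) + 0.5 * nu_sq[-1])
    return (sigma_b / C) ** 2 * integral


def monte_carlo_variance(g, s, t, C, sigma_b, paths=2000, steps=200, seed=0):
    """Euler-Maruyama estimate of Var V(t) for C dV = -g(u) V du + sigma_B dB(u), with V(s) = 0."""
    rng = random.Random(seed)
    dt = (t - s) / steps
    sd = math.sqrt(dt)  # standard deviation of a Brownian increment over dt
    finals = []
    for _ in range(paths):
        v = 0.0
        for j in range(steps):
            u = s + j * dt
            v += -g(u) * v * dt / C + (sigma_b / C) * rng.gauss(0.0, sd)
        finals.append(v)
    mean = sum(finals) / paths
    return sum((x - mean) ** 2 for x in finals) / (paths - 1)


def constant_g(u):
    """Hypothetical constant conductance, for which the variance is known in closed form."""
    return 2.0


if __name__ == "__main__":
    C, sigma_b, s, t = 1.0, 0.5, 0.0, 2.0
    tau = C / constant_g(0.0)
    exact = (sigma_b / C) ** 2 * (tau / 2) * (1 - math.exp(-2 * (t - s) / tau))
    print(exact, noise_variance(constant_g, s, t, C, sigma_b),
          monte_carlo_variance(constant_g, s, t, C, sigma_b))
```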

As a final result, for a fixed ω, the variation in membrane potential during a period of rest (no spike) of neuron k between s and t reads (subthreshold oscillations):

| (24) |

4.2 Reset

In Equation (4), as in all IF models we know of, the reset of the membrane potential has the effect of removing the dependence of on its past since is replaced by . Hence, reset removes the dependence on the initial condition in (24) provided that neuron k fires between s and t in the raster ω. As a consequence, Equation (24) holds from the “last reset time” introduced in Section 2.10 up to time t. Then, Equation (24) reads

| (25) |

where:

| (26) |

is a Gaussian process with mean zero and variance:

| (27) |

5 Useful bounds

We now prove several bounds used throughout the paper.

5.1 Bounds on the conductance

From (13), and since :

| (28) |

Therefore,

| (29) |

so that the conductance is uniformly bounded in t and ω. The minimal conductance is attained when no neuron ever fires, so that Ω0 is the “lowest conductance state”. Conversely, the maximal conductance is reached when all neurons fire all the time, so that Ω1 is the “highest conductance state”. To simplify notation, we write . This is the minimal relaxation time scale for neuron k, while is the maximal relaxation time.

| (30) |
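The two extreme conductance states can be illustrated with a toy discrete-time conductance. The sketch assumes (hypothetically) an exponential α-profile with time constant τ_syn, non-negative synaptic weights G_j, and spikes on an integer time grid; it is not a transcription of the paper's Equations (12)-(13), only a stand-in with the same structure (a leak term plus a sum of decaying spike responses). Every raster then yields a conductance between the all-silent and the all-firing values.

```python
import math


def conductance(raster, G, g_leak, tau_syn, t):
    """Toy conductance of one neuron k at integer time t:
    g_leak + sum_j G[j] * sum_{n <= t} exp(-(t - n) / tau_syn) * raster[j][n]."""
    total = g_leak
    for G_j, spikes in zip(G, raster):
        for n, fired in enumerate(spikes):
            if fired and n <= t:
                total += G_j * math.exp(-(t - n) / tau_syn)
    return total


def conductance_bounds(G, g_leak, tau_syn):
    """Lowest state (no neuron ever fires): g_leak.
    Highest state (every neuron fires at every step): g_leak + sum(G) * sum_{m >= 0} r**m,
    with r = exp(-1 / tau_syn)."""
    r = math.exp(-1.0 / tau_syn)
    return g_leak, g_leak + sum(G) / (1.0 - r)


if __name__ == "__main__":
    G, T = [0.3, 0.7, 0.2], 50  # hypothetical weights (non-negative) and horizon
    print(conductance_bounds(G, 1.0, 2.0))
    print(conductance([[n % 3 == 0 for n in range(T)] for _ in G], G, 1.0, 2.0, T - 1))
```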

5.2 Bounds on membrane potential

Now, from (19), we have, for :

| (31) |

As a consequence, exponentially fast as .

Moreover,

| (32) |

so that:

| (33) |

Thus, is uniformly bounded in .

Establishing similar bounds for requires the assumption that , but obtaining tighter bounds additionally requires knowledge of the sign of and of . Here, we only need to consider that:

In this case,

so that:

| (34) |

Consequently,

Proposition 2

| (35) |

which provides uniform bounds in s, t, ω for the deterministic part of the membrane potential.

5.3 Bounds on the noise variance

Let us now consider the stochastic part . It has zero mean, and its variance (27) obeys the bounds:

If the left-hand side is an increasing function of so that the minimum, is reached for while the maximum is reached for and is . The opposite holds if . The same argument holds mutatis mutandis for the right-hand side. We set:

| (36) |

so that:

Proposition 3

| (37) |

5.4 The limit

For fixed s and t, there are infinitely many rasters such that (recall that rasters are infinite sequences). One may argue that, taking the difference sufficiently large, the probability of such sequences should vanish. It is indeed possible to show (Section 8.1) that this probability vanishes exponentially fast with , which unfortunately means that it is positive whatever . So we have to consider cases where can go arbitrarily far into the past (this is also key to extending the present analysis to more general conductance-based models, as discussed in Section 9.3). Therefore, we have to check that the quantities introduced in the previous sections are well defined as .

Fix s real. For all ω such that (this condition ensures that k does not fire between s and t), we have, from (28), (31), , . Now, since exists, the limit

exists as well. The same holds for the external term .

Finally, since as the noise term (26) becomes in the limit:

which is a Gaussian process with mean 0 and a variance which obeys the bounds (37).

6 Continuity with respect to a raster

6.1 Definition

Due to the particular structure of gIF models, we have seen that the membrane potential at time t is a function both of t and of the full sequence of past spikes . One expects, however, the dependence on past spikes to decay as those spikes recede into the past. This issue is related to a notion of continuity with respect to a raster, which we now characterize.

Definition 1 Let m be a positive integer. The m-variation of a function is:

| (38) |

where the definition of is given in Equation (5). Hence, this notion characterizes the maximal variation of on the set of rasters whose spikes are identical from time to time (a cylinder set). It expresses the fact that one may truncate the spike history at time and make an error of at most .

Definition 2 The function is continuous if as .

Additional information is provided by the rate at which the variation converges to 0 with m. The faster this convergence, the smaller the error made when replacing an infinite raster by a spike block on a finite time horizon.
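Definition 1 can be explored by brute force on short rasters. The sketch below assumes a single neuron, a finite horizon, and rasters stored most-recent-first; the exact indexing convention of (5) may differ by one step. The hypothetical function `decaying_memory` weighs past spikes by r^l, so its m-variation is Σ_{l≥m} r^l and decays like r^m: the exponential behaviour that is established below for conductances and membrane potentials.

```python
import itertools


def m_variation(f, horizon, m):
    """Brute-force m-variation of f on single-neuron rasters of length `horizon`
    (index 0 = most recent step): sup |f(w) - f(w')| over pairs sharing their m most recent entries."""
    worst = 0.0
    for recent in itertools.product((0, 1), repeat=m):
        values = [f(recent + older) for older in itertools.product((0, 1), repeat=horizon - m)]
        worst = max(worst, max(values) - min(values))
    return worst


def decaying_memory(r):
    """Hypothetical function with exponentially fading memory: sum_l w[l] * r**l."""
    return lambda w: sum(x * r ** l for l, x in enumerate(w))


if __name__ == "__main__":
    f = decaying_memory(0.5)
    for m in range(6):
        print(m, m_variation(f, 10, m))  # roughly halves at each step
```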

6.2 Continuity of conductances

Proposition 4 The conductance is continuous in ω, for all t, for all .

Proof Fix , , integer. We have, for :

since the sets of firing times , are identical by hypothesis. So, since ,

Therefore, as , from (12) and setting ,

which converges to 0 as .

Therefore, from (17), is continuous with a variation

which converges exponentially fast to 0 as . □

6.3 Continuity of the membrane potentials

Proposition 5 The deterministic part of the membrane potential, , is continuous and its m-variation decays exponentially fast with m.

Proof In the proof, we shall establish precise upper bounds for the variation in , since they are used later on for the proof of uniqueness of a Gibbs measure (Section 7.4.1). From the previous result, it is easy to show that, for all , :

Therefore, from (31),

and is continuous in ω.

Now, the product is continuous as a product of continuous functions. Moreover,

so that:

Since, as :

we have,

which converges to 0 as .

Let us show the continuity of . We have, from (20),

The following inequality is used at several places in the paper. For a -integrable function , we have:

| (39) |

Here, it gives, for :

For the first term, we have,

Let us now consider the second term. If or , then and this term vanishes. Therefore, the supremum in the definition of is attained if and . We may assume, without loss of generality, that . Then, from (32),

So, we have, for the variation of , using (21):

so that finally,

| (40) |

with

| (41) |

| (42) |

and converges to 0 exponentially fast as .

Now, let us show the continuity of with respect to ω. We have:

where, in the last inequality, we have used that the supremum in the variation is attained for and . Finally:

| (43) |

where,

| (44) |

| (45) |

and is continuous.

As a conclusion, is continuous as the sum of two continuous functions. □

6.4 Continuity of the variance of

Using the same type of arguments, one can also prove that

Proposition 6 The variance is continuous in ω, for all t, for all .

Proof We have, from (27)

For the first term, we have that the sup in is attained for and:

For the second term, we have:

so that finally,

| (46) |

with

and continuity follows. □

6.5 Remark

Note that the variation in all quantities considered here is exponentially decaying with a time constant given by . This is physically satisfactory: the loss of memory in the system is controlled by the leak time and the decay of the post-synaptic potential.

7 Statistics of raster plots

7.1 Conditional probability distribution of

Recall that P is the joint distribution of the noise and the expectation under P. Under P, the membrane potential V is a stochastic process whose evolution below the threshold is given by Equations (24), (25), and above it by (4). It follows from the previous analysis that:

Proposition 7 Conditionally on , is Gaussian with mean:

and covariance:

where is given by (27).

Moreover, the ’s, are conditionally independent.

Proof Essentially, the proof is a direct consequence of Equations (24), (25) and the Gaussian nature of the noise . The conditional independence results from the fact that:

□

7.2 The transition probability

We now compute the probability of a spiking pattern at time , , given the past sequence .

Proposition 8 The probability of conditionally on is given by:

| (47) |

with

| (48) |

where

| (49) |

and

| (50) |

Proof We have, using the conditional independence of the ’s:

Since the ’s are conditionally Gaussian, with mean and variance , we directly obtain (47), (48).

Note that since is bounded from below by a positive quantity (see (37)), the ratio in (48) is defined for all . □
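Proposition 8 translates directly into code. The sketch assumes that π in (50) is the standard Gaussian tail, π(x) = (2π)^{−1/2}∫_x^∞ e^{−u²/2} du (consistent with π being monotonically decreasing, as used in Section 7.4.1), and that (49) is X_k = (θ − mean_k)/σ_k, where the mean and standard deviation of Proposition 7 are passed in as numbers already computed from the past. The threshold and numerical values are illustrative.

```python
import itertools
import math


def pi_tail(x):
    """Assumed form of (50): standard Gaussian tail, equal to erfc(x / sqrt(2)) / 2."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def transition_probability(pattern, means, sigmas, theta):
    """Assumed form of (47)-(49): conditionally independent neurons, neuron k firing with
    probability pi(X_k), where X_k = (theta - means[k]) / sigmas[k]."""
    p = 1.0
    for fired, mean, sd in zip(pattern, means, sigmas):
        x = (theta - mean) / sd
        p *= pi_tail(x) if fired else 1.0 - pi_tail(x)
    return p


if __name__ == "__main__":
    # Hypothetical deterministic parts and noise standard deviations for N = 3 neurons.
    means, sigmas = [0.2, 0.9, 1.1], [0.3, 0.3, 0.5]
    for w in itertools.product((0, 1), repeat=3):
        print(w, round(transition_probability(w, means, sigmas, theta=1.0), 4))
```

Summing over all 2^N patterns gives 1, which is the normalization used when checking that (47) is a system of transition probabilities.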

7.3 Chains with complete connections

The transition probabilities (47) define a stochastic process on the set of raster plots, where the underlying membrane potential dynamics is summarized in the terms and . While the integral defining these terms extends from to , where can go arbitrarily far in the past, the integrand involves the conductance, which summarizes a history dating back to . As a consequence, the transition probabilities generate a stochastic process with unbounded memory, which is thus non-Markovian. One may argue that this property results from our procedure of taking the initial condition in an infinite past , in order to remove the unresolved dependency on (Section 3.4). The alternative is thus either to keep s finite in order to have a Markovian process, in which case we have to fix arbitrarily and the probability distribution of ; or to take , removing the initial condition, at the price of considering a non-Markovian process. Actually, such processes are widely studied in the literature under the name of “chains with complete connections” [43,46-49], and several important results can be used here. So we adopt the second option. As a by-product, the knowledge of the Gibbs measure provided by this analysis allows one to fix, a posteriori, the probability distribution of .

For the sake of completeness, we give here the definition of a chain with complete connections (see [43] for more details). For , we denote by the set of sequences and by the related σ-algebra, while  is the σ-algebra associated with . is the set of probability measures on .

Definition 3 A system of transition probabilities is a family of functions

such that the following conditions hold for every :

• For every , the function is measurable with respect to .

• For every ,

A probability measure μ in is consistent with a system of transition probabilities if for all and all -measurable functions f:

Such a measure μ is called a “chain with complete connections consistent with the system of transition probabilities ”.

The transition probabilities (47) constitute such a system: the summation to 1 is obvious, while measurability follows from the continuity of , proved below. To simplify notation, we write instead of whenever no confusion can arise.
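The unbounded memory discussed in this section can be seen in a sampler. The sketch is a toy, not the gIF transition probabilities: the hypothetical `v_det_from_past` adds exponentially fading contributions from the whole recorded past (no reset, no truncation), and each new pattern is drawn neuron by neuron, which is equivalent to sampling from a product form like (47) because the neurons are conditionally independent given the past. The Gaussian-tail form of π is again an assumption.

```python
import math
import random


def pi_tail(x):
    """Assumed Gaussian tail, as in (50)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def v_det_from_past(past, k, weights, tau, v_rest):
    """Hypothetical deterministic part for neuron k: resting value plus exponentially fading
    synaptic input from the entire recorded past (the memory is never cut at a fixed depth)."""
    v = v_rest
    for age, pattern in enumerate(reversed(past)):
        decay = math.exp(-(age + 1) / tau)
        v += decay * sum(w_kj * s_j for w_kj, s_j in zip(weights[k], pattern))
    return v


def sample_raster(n_steps, weights, tau=3.0, v_rest=0.5, sigma=0.3, theta=1.0, seed=0):
    """Draw omega(1), omega(2), ... one pattern at a time: given the whole past,
    neuron k fires independently with probability pi((theta - V_k) / sigma)."""
    rng = random.Random(seed)
    past = []
    for _ in range(n_steps):
        pattern = tuple(
            int(rng.random() < pi_tail((theta - v_det_from_past(past, k, weights, tau, v_rest)) / sigma))
            for k in range(len(weights))
        )
        past.append(pattern)
    return past


if __name__ == "__main__":
    W = [[0.0, 0.4, -0.3], [0.2, 0.0, 0.1], [-0.5, 0.3, 0.0]]  # hypothetical weights
    for pattern in sample_raster(10, W):
        print(pattern)
```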

7.4 Existence of a consistent probability measure μ

In the definition above, the measure μ summarizes the statistics of spike trains from −∞ to +∞. Its marginals allow the characterization of finite spike blocks. So, μ provides the characterization of spike train statistics in gIF models. Its existence is established by a standard result in the framework of chains with complete connections, stating that a system of continuous transition probabilities on a compact space has at least one probability measure consistent with it [43]. Since π is a continuous function, the continuity of with respect to ω follows from the continuity of and the continuity of , proved in Section 6.

Therefore, there is at least one probability measure consistent with (47).

7.4.1 The Gibbs distribution

A system of transition probabilities is non-null if for all and all . Following [50], a chain with complete connections μ is a Gibbs measure consistent with the system of transition probabilities if this system is continuous and non-null. Gibbs distributions play an important role in statistical physics, as well as in ergodic theory and stochastic processes. In statistical physics, they are usually derived from the maximal entropy principle [14]. Here, we use them in a more general context that allows us to consider non-stationary processes. It turns out that the spike train statistics in the gIF model are given by such a Gibbs measure. In this section, we prove the main mathematical result of this paper (uniqueness of the Gibbs measure). The consequences for spike train characterization are discussed in the next section.

Theorem 1 For each choice of parameters, the gIF model (16) has a unique Gibbs distribution.

The proof of uniqueness is based on the following criterion due to Fernandez and Maillard [50].

Proposition 9 Let:2

and

If and , then there exists at most one Gibbs measure consistent with it.

So, to prove the uniqueness, we only have to establish that

| (51) |

| (52) |

Proof.

Recall that:

From (35), (37), we have:

| (53) |

Since π, given by (50), is monotonically decreasing, we have:

so that:

| (54) |

Finally,

which proves (51). This also proves the non-nullness of the system of transition probabilities.

.

The proof, which is rather long, is given in the appendix. □
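The non-nullness argument in the proof of (51) uses only two facts: X_k stays in a bounded interval, and π is decreasing. A minimal sketch, assuming bounds v_min ≤ mean ≤ v_max and 0 < s_min ≤ σ ≤ s_max in the spirit of (53) (the numerical values are made up) and the Gaussian-tail form of π, computes the resulting uniform lower bound on any transition probability of the product form (47).

```python
import itertools
import math


def pi_tail(x):
    """Assumed Gaussian tail, as in (50); monotonically decreasing."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def x_range(theta, v_min, v_max, s_min, s_max):
    """Range of X = (theta - V) / sigma over [v_min, v_max] x [s_min, s_max], with s_min > 0.
    X is monotone in each variable separately, so its extrema sit at the corners."""
    corners = [(theta - v) / s for v in (v_min, v_max) for s in (s_min, s_max)]
    return min(corners), max(corners)


def non_null_lower_bound(n_neurons, theta, v_min, v_max, s_min, s_max):
    """pi(x_max) <= pi(X_k) <= pi(x_min), so each factor of the product is at least
    min(pi(x_max), 1 - pi(x_min)) > 0."""
    x_min, x_max = x_range(theta, v_min, v_max, s_min, s_max)
    return min(pi_tail(x_max), 1.0 - pi_tail(x_min)) ** n_neurons


def transition_probability(pattern, means, sigmas, theta):
    """Product form (47), under the same assumptions."""
    p = 1.0
    for fired, mean, sd in zip(pattern, means, sigmas):
        x = (theta - mean) / sd
        p *= pi_tail(x) if fired else 1.0 - pi_tail(x)
    return p


if __name__ == "__main__":
    bound = non_null_lower_bound(2, 1.0, 0.0, 1.2, 0.3, 0.6)
    worst = min(transition_probability(w, (0.0, 0.0), (0.3, 0.3), 1.0)
                for w in itertools.product((0, 1), repeat=2))
    print(bound, worst)
```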

8 Consequences

8.1 The probability that neuron k does not fire in the time interval

In Section 5.4, we argued that this probability vanishes exponentially fast with . This probability is . We now prove this result.

Proposition 10 The probability that neuron k does not fire within the time interval, has the following bounds:

for some constants depending on the system parameters.

Proof We have:

where is given by (47) and obeys the bounds (54). Therefore, setting and , we have

□
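Proposition 10 follows the same logic: at each step, a silent neuron contributes a factor 1 − π(X_k) that is bounded away from 0 and 1. The sketch below, assuming X_k always lies in a fixed interval [x_min, x_max] (hypothetical values) and the Gaussian-tail form of π, gives one admissible choice of the two rate constants; the paper's constants need not coincide with these.

```python
import math


def pi_tail(x):
    """Assumed Gaussian tail, as in (50)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def silence_rate_bounds(x_min, x_max):
    """Per-step non-firing probability 1 - pi(X) lies in [1 - pi(x_min), 1 - pi(x_max)];
    returns (c1, c2) with exp(-c1 n) <= P(no spike during n steps) <= exp(-c2 n)."""
    c1 = -math.log(1.0 - pi_tail(x_min))
    c2 = -math.log(1.0 - pi_tail(x_max))
    return c1, c2


if __name__ == "__main__":
    c1, c2 = silence_rate_bounds(-0.7, 3.3)
    for n in (10, 100, 1000):
        print(n, math.exp(-c1 * n), math.exp(-c2 * n))
```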

8.2 Back to spike trains analysis with the maximal entropy principle

Here, we briefly develop the consequences of our results in relation to the statistical model estimation discussed in the introduction. A more detailed discussion will be published elsewhere (in preparation and [51]). Set:

| (55) |

with,

| (56) |

The function ϕ is a Gibbs potential [52]. Indeed, we have:

This equation emphasizes the connection with Gibbs distributions in statistical physics, which considers probability distributions on multidimensional lattices with specified boundary conditions and their behavior under space translations [52]. The correspondence with our case is that “time” plays the role of a one-dimensional space, and the “boundary conditions” are the past . Note that in our case, the partition function is equal to 1.

For simplicity, assume stationarity (this is equivalent to assuming a time-independent external current). In this case, it is sufficient to consider the potential at time .

Thanks to the bounds (53), one can make a series expansion of the functions and and rewrite the potential under the form of the expansion:

| (57) |

where is the set of non-repeated pairs of integers with and . We call the product a monomial. It is equal to 1 if and only if neuron fires at time , …, neuron fires at time . The ’s are explicit functions of the parameters γ. Due to the causal form of the potential, where the time-0 spike, , is multiplied by a function of the past , the polynomial expansion does not contain monomials of the form , (the corresponding coefficient λ vanishes).
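Monomials are simple to evaluate. In this sketch, a raster is a hypothetical dictionary mapping times n ≤ 0 to spike patterns, and a monomial is a list of (neuron, time) pairs. The counting function gives a crude upper bound, 2^{N(D+1)} − 1 nonempty subsets of (neuron, time) pairs, ignoring the causality constraint; it illustrates how fast the number of candidate terms grows with N and D.

```python
def monomial(raster, pairs):
    """Product of omega_k(n) over the (k, n) pairs; raster maps a time n <= 0 to a spike pattern.
    It equals 1 iff every listed neuron fires at its listed time."""
    return int(all(raster[n][k] for k, n in pairs))


def count_candidate_monomials(n_neurons, depth):
    """Upper bound on the number of distinct monomials with memory depth D: nonempty subsets
    of the N * (D + 1) (neuron, time) pairs, ignoring the causality constraint."""
    return 2 ** (n_neurons * (depth + 1)) - 1


if __name__ == "__main__":
    raster = {0: (1, 0), -1: (1, 1), -2: (0, 1)}  # hypothetical N = 2 raster
    print(monomial(raster, [(0, 0), (1, -1)]))
    for depth in range(4):
        print(depth, count_candidate_monomials(10, depth))
```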

Since the potential has infinite range, the expansion (57) contains infinitely many terms. One can nevertheless consider truncations to a range corresponding to truncating the memory of the process to some memory depth D. Note that although truncations with a memory depth D are approximations, the distance to the exact potential converges exponentially fast to 0 as , thanks to the continuity of the potential, with a decay rate controlled by the synaptic responses and the leak rate.

The truncated Gibbs potential has the form:

| (58) |

where l stands for and is an enumeration of the elements in , and where is the corresponding monomial. Due to the truncation, (58), unlike (55), is not normalized. Its partition function3 is not equal to 1, and its computation rapidly becomes intractable as the number of neurons and the memory depth increase.
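Footnote 3's point, that the partition function of a truncated potential depends on the past, can be seen on a toy example. The coefficients λ_l below are arbitrary illustrative values, not derived from any γ, and no instantaneous pairwise monomial is included, in line with the causal structure. The sketch normalizes exp(ψ) over the 2^N patterns at time 0 for a given past and returns Z(past) alongside the conditional probabilities.

```python
import itertools
import math


def monomial(raster, pairs):
    """Product of omega_k(n) over (k, n) in pairs; raster maps a time n <= 0 to a pattern."""
    return int(all(raster[n][k] for k, n in pairs))


def truncated_conditional(terms, past, n_neurons):
    """Normalize exp(psi) over the patterns at time 0, for a fixed past.
    terms: list of (lambda_l, pairs_l); past: dict mapping times -1..-D to patterns.
    Returns ({pattern: probability}, Z(past))."""
    weights = {}
    for pattern in itertools.product((0, 1), repeat=n_neurons):
        raster = dict(past)
        raster[0] = pattern
        psi = sum(lam * monomial(raster, pairs) for lam, pairs in terms)
        weights[pattern] = math.exp(psi)
    z = sum(weights.values())
    return {w: x / z for w, x in weights.items()}, z


# Illustrative coefficients with memory depth D = 1 (hypothetical values).
TERMS = [
    (-1.2, [(0, 0)]),           # omega_0(0)
    (-0.8, [(1, 0)]),           # omega_1(0)
    (1.5, [(0, 0), (1, -1)]),   # omega_0(0) omega_1(-1)
    (-1.5, [(1, 0), (1, -1)]),  # omega_1(0) omega_1(-1)
]

if __name__ == "__main__":
    for past in ({-1: (0, 0)}, {-1: (1, 1)}):
        probs, z = truncated_conditional(TERMS, past, 2)
        print(past, round(z, 4), {w: round(p, 3) for w, p in probs.items()})
```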

Clearly, (58) is precisely the form of potential obtained under the maximal entropy principle, where the ’s are constraints of the type “neuron is firing at time , neuron is firing at time ” and the ’s are the conjugate Lagrange multipliers. Thus, when using the maximal entropy principle to characterize spike statistics in the gIF model by expressing constraints in terms of spike events (monomials), one can at best find an approximation, which can be rather poor, especially if those constraints focus on instantaneous spike patterns () or short memory patterns. Moreover, increasing the memory depth to better approximate the true statistics leads to an exponential increase in the number of monomials, which rapidly becomes intractable. Finally, the Lagrange multipliers are rather difficult to interpret.

In contrast, the analytic form (55) depends only on a finite number of parameters (γ) constraining the neural network dynamics, which have a straightforward interpretation since they are physical quantities. This shows that, at least in the gIF model, the linear Gibbs potential (58) obtained from the maximal entropy principle is not really appropriate, even for empirical/numerical purposes, and that a form (55) where the infinite memory is replaced by could be more efficient, although non-linear.

To finish this section, let us discuss the link with the Ising model in light of the present work. The Ising model corresponds to a memoryless case, hence to . Since the causal structure of the Gibbs potential forbids monomials of the form , the expansion of the gIF-Gibbs potential then corresponds to a Bernoulli distribution where neurons are independent. The Ising model is therefore irrelevant for approximating the exact potential of the gIF model if one wants to reproduce spike statistics at the minimal discretization time scale δ without considering memory effects.

However, in real data analysis, data are usually binned, with time windows of width . Binning consists of recoding the raster plot by merging spikes. The binned raster b consists of “spikes” where if neuron k fired at least once in the time window . In the expansion (57), this corresponds to collecting all monomials corresponding to in a unique monomial. In this way, the binned potential does contain an Ising term, which mixes all spike events occurring within the time interval w. These events appear simultaneous because of binning, leading to the Ising pairwise term, while events occurring on smaller time scales are scrambled by this procedure.
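Binning as described above takes only a few lines. This sketch assumes that the window width w is an integer number of time steps δ and that raster[k][n] ∈ {0, 1}. In the usage check, neuron 0 fires at step 0 and neuron 1 at step 1; after binning with w = 3 both appear in bin 0, that is, as a coincidence of the kind an Ising pairwise term captures.

```python
def bin_raster(raster, w):
    """raster[k][n] in {0, 1} at resolution delta; returns b[k][m] = 1 iff neuron k fired at
    least once in bin m, i.e. during steps m*w, ..., (m+1)*w - 1 (w an integer >= 1)."""
    n_bins = (len(raster[0]) + w - 1) // w
    return [[int(any(spikes[m * w:(m + 1) * w])) for m in range(n_bins)] for spikes in raster]


if __name__ == "__main__":
    raster = [[1, 0, 0, 1, 0, 0],
              [0, 1, 0, 0, 0, 0]]
    print(bin_raster(raster, 3))  # the two spikes at steps 0 and 1 now share bin 0
```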

The binning effect on Gibbs potential requires, however, a more detailed description. This will be discussed elsewhere.

9 Discussion

To conclude this paper, we would like to discuss several consequences and possible extensions of this work.

9.1 The spike time discretization

In the gIF model, the membrane potential evolves continuously while the conductance is updated upon spike occurrence, spikes being considered as discrete events. Here, we discuss this time discretization. Actually, there are two distinct questions.

9.1.1 The limit of time-bin tending to 0

This limit would correspond to a case where the spike is instantaneous and modeled by a Dirac distribution. As discussed in [32], this limit raises serious difficulties. To summarize, in real neurons, firing occurs within a finite time δ corresponding to the rise and fall time of the membrane potential. This involves physicochemical processes that cannot be instantaneous. The time course of the membrane potential during the spike is described by differential equations, like Hodgkin-Huxley’s [35]. Although the time scale dt appearing in the differential equations has the mathematical meaning of being arbitrarily small, on biophysical grounds this time scale cannot be arbitrarily small, otherwise the Hodgkin-Huxley equations lose their meaning. Indeed, they correspond to an average over microscopic phenomena such as ionic channel dynamics. In particular, their time scale must be sufficiently large to ensure that the description of ionic channel dynamics (opening and closing) in terms of probabilities is valid, so dt must be larger than the characteristic time of opening-closing of ionic channels . Additionally, Hodgkin-Huxley’s equations use a Markovian approach (master equation) for the dynamics of the h, m, n gates. This requires the characteristic time dt to be considerably larger than the characteristic decay time of the time correlations between gate activities . Summarizing, we must have . Thus, on biophysical grounds, δ cannot be arbitrarily small.

In our case, the limit is harmless, however, provided we keep a non-zero refractory period, ensuring that only finitely many spikes occur in a finite time interval. Taking the limit without considering a refractory period raises mathematical problems. One can in principle have uncountably many spikes in a finite time interval, leading to the divergence of physical quantities like energy. One can also generate causal paradoxes [37]. Take a loop with two neurons, one excitatory and one inhibitory, and assume instantaneous propagation (the α profile is then represented by a Dirac distribution). Then, depending on the values of the synaptic weights, one can have a situation where neuron 1 fires and instantaneously makes neuron 2 fire, which instantaneously prevents neuron 1 from firing, and so on. So taking the limit as well as induces pathologies that are inherent not to our approach but to IF models.

9.1.2 Synchronization for distinct neurons

There is a more subtle issue, pointed out in [53]. We do not only discretize time for each neuron’s spikes; we also align the spikes emitted by distinct neurons on a discrete-time grid, as an experimental raster does. As shown in [32], this induces an artificial synchronization in gIF models with purely deterministic dynamics (no noise and reset to a constant value). As a consequence, the deterministic dynamics of gIF models generically has only stable periodic orbits, although periods can be larger than any accessible computational time in a specific region of the parameter space. Additionally, these periods increase as δ decreases. The addition of noise to the dynamics and to the reset value, as we propose in this paper, removes this synchronization effect.

9.2 Refractory period

In the definition of the model, we have assumed that the refractory period is smaller than 1. The consequence for raster plots is that one can have two consecutive 1’s in the spike sequence of a neuron. The extension to the case where is straightforward for spike statistics. Having such a refractory period forbids some sequences. For example, if , then all sequences containing two consecutive 1’s for one neuron are forbidden. If sequences containing for a given neuron, where , are forbidden, and so on. More generally, the procedure of forbidding specific (finite) spike blocks is equivalent to introducing a grammar in the spike generation. This grammar can be implemented in the Gibbs potential: forbidden sequences have a potential equal to −∞ (i.e., a zero probability). In this case, , the set of all possible rasters, becomes a subset where forbidden sequences have been removed.
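A grammar of this kind can be implemented as a potential taking the values 0 or −∞. The sketch assumes a hypothetical integer R, the number of time steps after a spike during which the same neuron cannot fire again: R = 1 forbids the block 11, and R = 2 forbids both 11 and 101, matching the examples above. Since exp(−∞) = 0, forbidden blocks receive zero weight.

```python
import math


def grammar_potential(spikes, R):
    """0.0 if the single-neuron spike sequence is admissible, -inf if two spikes are at most
    R steps apart (R = hypothetical refractory period expressed in time steps)."""
    times = [n for n, s in enumerate(spikes) if s]
    if any(later - earlier <= R for earlier, later in zip(times, times[1:])):
        return -math.inf
    return 0.0


def weight(spikes, R):
    """exp(potential): forbidden blocks get weight exp(-inf) = 0."""
    return math.exp(grammar_potential(spikes, R))


if __name__ == "__main__":
    for block in ([1, 1, 0], [1, 0, 1], [1, 0, 0, 1]):
        print(block, weight(block, 1), weight(block, 2))
```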

9.3 Beyond IF models

Let us now discuss the extension of the present work to more general models of neurons. First, one characteristic feature of integrate and fire models is the reset, which has the consequence that the memory of activity preceding the spike is lost after reset. Although in the deterministic (noiseless) case this is a simplifying feature, allowing, for example, a full characterization of the asymptotic dynamics of (discrete time) IF models [32,54], here it makes the analysis somewhat more complex. Indeed, it led us to introduce the notion of “last reset” time and, at some points in the proofs (see, e.g., Equation (39)), forced us to consider several situations (e.g., or versus and in the proof of continuity, Section 6). Conversely, considering a model where no such reset occurs would simply lead us to consider a model where , ∀ω, ∀k. This case is already covered by our formalism, and, actually, considering that , ∀ω, ∀k simplifies the proofs (for example, it eliminates the second term in Equation (39)).

As a matter of fact, the theorems established in this paper should therefore also hold without reset. But this requires replacing the firing condition (4) by another condition stating what the membrane potential does during the spike. Although it would be possible to propose an ad hoc form for the spike, it would certainly be more interesting to extend the results here to models where neuron activity depends on additional variables such as adaptation currents, as in the FitzHugh-Nagumo model [39-42], or activation-inactivation variables, as in the Hodgkin-Huxley model [35].

The present formalism affords an extension toward such models, where the neuron fires whenever its membrane potential belongs to a region of the phase space, which can be delimited by membrane potentials plus additional variables such as adaptation currents or activation-inactivation variables, and where the spike is controlled by the global dynamics of all these variables. But while here the firing of a neuron is described by the crossing of a fixed threshold, in the FitzHugh-Nagumo model, it is given by the crossing of a separatrix in the plane (voltage-adaptation current) and by a more complex “frontier” in the Hodgkin-Huxley model [2,55]. One difficulty is to precisely define this region. To our knowledge, there is no clear agreement for the Hodgkin-Huxley model (some authors [2] even suggest that the “spike region” could have a fractal frontier). The extension toward FitzHugh-Nagumo seems more manageable.

Finally, the most important difficulty in extending the results of this paper to more realistic neural networks is the definition of the synaptic spike response. In IF models, the spike is thought of as a punctual “event” (typically, an “instantaneous” pulse), while the synaptic response is described by a convolution kernel (the α-profile). This leads one to consider a somewhat artificial mixed dynamics where the membrane potential evolves continuously while spikes are discrete events. In more realistic models, one would have to consider kinetic equations for neurotransmitter release, receptor binding and opening of post-synaptic ionic channels [5,44]. Additionally, accounting for these mechanisms calls for a spatially extended model of the neuron, with time delays. In this case, all variables evolve continuously and the statistics of spike trains would be characterized by the statistics of return times in the “spike region”. These statistics are induced by some probability measure in the phase space; a natural candidate would be the Sinai-Ruelle-Bowen (SRB) measure [56-58] for stationary dynamics, or the time-dependent SRB measure for non-stationary cases, as defined, e.g., in [59]. These measures are Gibbs measures as well [60]. Here, the main mathematical property ensuring existence and uniqueness of such a measure would be uniform hyperbolicity. To our knowledge, conditions ensuring such a property have not yet been established for networks of either Hodgkin-Huxley or FitzHugh-Nagumo neurons.

9.4 Synaptic plasticity

As the results established in this paper hold for any admissible synaptic weight value, they hold as well for networks subject to synaptic plasticity mechanisms. The effects of a joint evolution of spike dynamics, depending on synaptic weight distributions, and of synaptic weights, depending on spike dynamics, have been studied in [61]. In particular, it has been shown that mechanisms such as Spike-Time-Dependent Plasticity are related to a variational principle for a quantity, the topological pressure, derived from the thermodynamic formalism of Gibbs distributions. In [61], the fact that spike train statistics were given by a Gibbs distribution was a working assumption. Therefore, the present work establishes a firm ground for [61].

Appendix

Here, we establish (52). We use the following lemma.

Lemma 1 For a collection , , we have

| (59) |

This lemma is easily proved by induction.
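Assuming (59) is the usual telescoping bound, |Π a_k − Π b_k| ≤ Σ |a_k − b_k| for 0 ≤ a_k, b_k ≤ 1, the induction can be made explicit through the identity Π a − Π b = Σ_k (b_1⋯b_{k−1})(a_k − b_k)(a_{k+1}⋯a_n), in which every prefactor lies in [0, 1]. The sketch checks the identity and the inequality on random inputs; it illustrates the assumed statement rather than reproducing the paper's proof.

```python
import math
import random


def telescoped(a, b):
    """Right-hand side of prod(a) - prod(b) = sum_k b_1...b_{k-1} (a_k - b_k) a_{k+1}...a_n."""
    return sum(math.prod(b[:k]) * (a[k] - b[k]) * math.prod(a[k + 1:]) for k in range(len(a)))


def lemma_holds(a, b, tol=1e-15):
    """Assumed statement of (59): |prod(a) - prod(b)| <= sum |a_k - b_k| when all entries lie in [0, 1]."""
    return abs(math.prod(a) - math.prod(b)) <= sum(abs(x - y) for x, y in zip(a, b)) + tol


if __name__ == "__main__":
    rng = random.Random(0)
    a = [rng.random() for _ in range(5)]
    b = [rng.random() for _ in range(5)]
    print(math.prod(a) - math.prod(b), telescoped(a, b), lemma_holds(a, b))
```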

We have, for ,

where:

Therefore, using inequality (59),

The condition implies so that:

We have

with , so that

We have now to upper bound . We have

with,

so that, from (40), (43):

| (60) |

where:

(see Equations (41), (44)) and:

(see Equations (42), (45)).

Moreover, from (37),

| (61) |

From (35),

| (62) |

Finally,

while

from (37), so that:

and, from (46),

| (63) |

Summarizing (60), (61), (62), (63)

Therefore, we have:

for constants , , .

As a consequence,

Therefore, is bounded from above by the series

which converges, uniformly in . As a consequence, in (52), and we are done.

Competing interests

The author declares that he has no competing interests.

Footnotes

1We assume that all neurons have the same firing threshold. The notion of threshold is already an approximation which is not sharply defined in Hodgkin-Huxley [35] or FitzHugh-Nagumo [40,41] models (more precisely, it is not a constant but depends on the dynamical variables [55]). Recent experiments [62-64] even suggest that there may be no real potential threshold.

2In [50], the authors use the following definition for the nm variation, which reads in our notations:

It therefore differs slightly from our definition (5), (38). The correspondence is . The definition of takes this correspondence into account.

3For , this is , ensuring that is a conditional probability . Hence, it is not a constant but a function of the past , similarly to statistical physics on lattices, where the partition function depends on the boundary conditions. Only in the case (memoryless) is this a constant.

Acknowledgements

I would like to thank both reviewers for a careful reading of the manuscript, constructive criticism, and helpful comments. I also acknowledge G. Maillard and J. R. Chazottes for invaluable advice and references. I am also grateful to O. Faugeras and T. Viéville for useful comments. This work has been supported by the ERC grant Nervi, the ANR grant KEOPS, and the European grant BrainScales.

References

- Izhikevich E. Which model to use for cortical spiking neurons? IEEE Trans. 2004;15(5):1063–1070. doi: 10.1109/TNN.2004.832719. [DOI] [PubMed] [Google Scholar]

- Guckenheimer J, Oliva R. Chaos in the Hodgkin-Huxley model. SIAM J. 2002;1:105. [Google Scholar]

- Faure P, Korn H. A nonrandom dynamic component in the synaptic noise of a central neuron. Proc. Natl. Acad. Sci. USA. 1997;94:6506–6511. doi: 10.1073/pnas.94.12.6506. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Faure P, Korn H. Is there chaos in the brain? I. Concept of nonlinear dynamics and methods of investigation. C. R. Acad. Sci., Sér. 3 Sci. Vie. 2001;324:773–793. doi: 10.1016/s0764-4469(01)01377-4. [DOI] [PubMed] [Google Scholar]

- Ermentrout GB, Terman D. Foundations of Mathematical Neuroscience. Springer, Berlin; 2010. [Google Scholar]

- Riehle A, Grün S, Diesmann M, Aertsen A. Spike synchronization and rate modulation differentially involved in motor cortical function. Science. 1997;278:1950–1953. doi: 10.1126/science.278.5345.1950.

- Grammont F, Riehle A. Precise spike synchronization in monkey motor cortex involved in preparation for movement. Exp. Brain Res. 1999;128:118–122. doi: 10.1007/s002210050826.

- Riehle A, Grammont F, Diesmann M, Grün S. Dynamical changes and temporal precision of synchronized spiking activity in monkey motor cortex during movement preparation. J. Physiol. (Paris). 2000;94:569–582. doi: 10.1016/s0928-4257(00)01100-1.

- Grammont F, Riehle A. Spike synchronization and firing rate in a population of motor cortical neurons in relation to movement direction and reaction time. Biol. Cybern. 2003;88(5):360–373. doi: 10.1007/s00422-002-0385-3.

- Rieke F, Warland D, de Ruyter van Steveninck R, Bialek W. Spikes: Exploring the Neural Code. Bradford Books, Denver, CO; 1997.

- Schneidman E, Berry M, Segev R, Bialek W. Weak pairwise correlations imply strongly correlated network states in a neural population. Nature. 2006;440(7087):1007–1012. doi: 10.1038/nature04701.

- Tkačik G, Schneidman E, Berry MJ II, Bialek W. Spin glass models for a network of real neurons. arXiv:0912.5409v1; 2009.

- Marre O, Boustani SE, Frégnac Y, Destexhe A. Prediction of spatiotemporal patterns of neural activity from pairwise correlations. Phys. Rev. Lett. 2009;102. doi: 10.1103/PhysRevLett.102.138101.

- Jaynes E. Information theory and statistical mechanics. Phys. Rev. 1957;106:620.

- Shlens J, Field G, Gauthier J, Grivich M, Petrusca D, Sher A, Litke A, Chichilnisky E. The structure of multi-neuron firing patterns in primate retina. J. Neurosci. 2006;26(32). doi: 10.1523/JNEUROSCI.1282-06.2006.

- Cocco S, Leibler S, Monasson R. Neuronal couplings between retinal ganglion cells inferred by efficient inverse statistical physics methods. Proc. Natl. Acad. Sci. USA. 2009;106(33):14058–14062. doi: 10.1073/pnas.0906705106. http://www.pnas.org/cgi/doi/10.1073/pnas.0906705106.

- Tang A, Jackson D, Hobbs J, Chen W, Smith JL, Patel H, Prieto A, Petrusca D, Grivich MI, Sher A, Hottowy P, Dabrowski W, Litke AM, Beggs JM. A maximum entropy model applied to spatial and temporal correlations from cortical networks in vitro. J. Neurosci. 2008;28(2):505–518. doi: 10.1523/JNEUROSCI.3359-07.2008.

- Roudi Y, Nirenberg S, Latham P. Pairwise maximum entropy models for studying large biological systems: when they can work and when they can’t. PLoS Comput. Biol. 2009;5(5):1–18. doi: 10.1371/journal.pcbi.1000380.

- Shlens J, Field GD, Gauthier JL, Greschner M, Sher A, Litke AM, Chichilnisky EJ. The structure of large-scale synchronized firing in primate retina. J. Neurosci. 2009;29(15):5022–5031. doi: 10.1523/JNEUROSCI.5187-08.2009.

- Ohiorhenuan IE, Mechler F, Purpura KP, Schmid AM, Hu Q, Victor JD. Sparse coding and high-order correlations in fine-scale cortical networks. Nature. 2010;466(7):617–621. doi: 10.1038/nature09178.

- Lindsey B, Morris K, Shannon R, Gerstein G. Repeated patterns of distributed synchrony in neuronal assemblies. J. Neurophysiol. 1997;78:1714–1719. doi: 10.1152/jn.1997.78.3.1714.

- Villa AEP, Tetko IV, Hyland B, Najem A. Spatiotemporal activity patterns of rat cortical neurons predict responses in a conditioned task. Proc. Natl. Acad. Sci. USA. 1999;96(3):1106–1111. doi: 10.1073/pnas.96.3.1106.

- Segev R, Baruchi I, Hulata E, Ben-Jacob E. Hidden neuronal correlations in cultured networks. Phys. Rev. Lett. 2004;92. doi: 10.1103/PhysRevLett.92.118102.

- Amari SI. Information geometry of multiple spike trains. In: Grün S, Rotter S, editors. Analysis of Parallel Spike Trains. Springer, Berlin; 2010. pp. 221–253.

- Roudi Y, Hertz J. Mean field theory for non-equilibrium network reconstruction. arXiv:1009.5946v1; 2010.

- Vasquez JC, Viéville T, Cessac B. Parametric estimation of Gibbs distributions as generalized maximum-entropy models for the analysis of spike train statistics. Research Report RR-7561, INRIA; 2011.

- Vasquez JC, Palacios AG, Marre O, Berry MJ, Cessac B. Gibbs distribution analysis of temporal correlation structure on multicell spike trains from retina ganglion cells. J. Physiol. (Paris). In press; 2011.

- Cessac B. A discrete time neural network model with spiking neurons II. Dynamics with noise. J. Math. Biol. In press; 2010. http://www.springerlink.com/content/j602452n1224x602/.

- Kravchuk K, Vidybida A. Delayed feedback causes non-Markovian behavior of neuronal firing statistics. J. Phys. A. 2010. arXiv:1012.6019.

- Vasquez JC, Viéville T, Cessac B. Entropy-based parametric estimation of spike train statistics. Research Report, INRIA; 2010. http://arxiv.org/abs/1003.3157.

- Rudolph M, Destexhe A. Analytical Integrate and Fire Neuron models with conductance-based dynamics for event driven simulation strategies. Neural Comput. 2006;18:2146–2210. doi: 10.1162/neco.2006.18.9.2146. http://www.mitpressjournals.org/doi/abs/10.1162/neco.2006.18.9.2146.

- Cessac B, Viéville T. On dynamics of integrate-and-fire neural networks with adaptive conductances. Front. Neurosci. 2008;2(2).

- Jolivet R, Lewis T, Gerstner W. Generalized integrate-and-fire models of neuronal activity approximate spike trains of a detailed model to a high degree of accuracy. J. Neurophysiol. 2004;92:959–976. doi: 10.1152/jn.00190.2004.

- Jolivet R, Rauch A, Lüscher HR, Gerstner W. Integrate-and-Fire Models with Adaptation Are Good Enough. MIT Press, Cambridge; 2006.

- Hodgkin A, Huxley A. A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 1952;117:500–544. doi: 10.1113/jphysiol.1952.sp004764.

- Lapicque L. Recherches quantitatives sur l’excitation des nerfs traitée comme une polarisation. J. Physiol. Paris. 1907;9:620–635.

- Cessac B. A view of neural networks as dynamical systems. Int. J. Bifurc. Chaos. 2010;20(6):1585–1629. http://lanl.arxiv.org/abs/0901.2203.

- Kirst C, Geisel T, Timme M. Sequential desynchronization in networks of spiking neurons with partial reset. Phys. Rev. Lett. 2009;102. doi: 10.1103/PhysRevLett.102.068101. http://link.aps.org/doi/10.1103/PhysRevLett.102.068101.

- FitzHugh R. Mathematical models of threshold phenomena in the nerve membrane. Bull. Math. Biophys. 1955;17:257–278.

- FitzHugh R. Impulses and physiological states in models of nerve membrane. Biophys. J. 1961;1:445–466. doi: 10.1016/s0006-3495(61)86902-6.

- Nagumo J, Arimoto S, Yoshizawa S. An active pulse transmission line simulating nerve axon. Proc. IRE. 1962;50:2061–2070.

- FitzHugh R. Mathematical Models of Excitation and Propagation in Nerve. McGraw-Hill, New York; 1969. Chapter 1.

- Maillard G. Introduction to Chains with Complete Connections. École Polytechnique Fédérale de Lausanne; 2007.

- Destexhe A, Mainen ZF, Sejnowski TJ. Kinetic models of synaptic transmission. In: Methods in Neuronal Modeling. MIT Press, Cambridge; 1998. pp. 1–25.

- Ermentrout B. Neural networks as spatio-temporal pattern-forming systems. Rep. Prog. Phys. 1998;61:353–430.

- Chazottes J. Entropie relative, dynamique symbolique et turbulence. PhD thesis, Université de Provence-Aix Marseille I; 1999.

- Ledrappier F. Principe variationnel et systèmes dynamiques symboliques. Z. Wahrscheinlichkeitstheor. Verw. Geb. 1974;30:185–202.

- Coelho Z, Quas A. Criteria for d-continuity. Trans. Amer. Math. Soc. 1998;350(8):3257–3268.

- Bressaud X, Fernandez R, Galves A. Decay of correlations for non Hölderian dynamics. A coupling approach. Electron. J. Probab. 1999;4(3):1–19.

- Fernández R, Maillard G. Chains with complete connections: general theory, uniqueness, loss of memory and mixing properties. J. Stat. Phys. 2005;118:555–588.

- Cessac B, Palacios A. Spike Train Statistics from Empirical Facts to Theory: The Case of the Retina. Springer, Berlin; 2011.

- Georgii HO. Gibbs Measures and Phase Transitions. de Gruyter, Berlin; 1988.

- Kirst C, Timme M. How precise is the timing of action potentials? Front. Neurosci. 2009;3:2–3. doi: 10.3389/neuro.01.009.2009.

- Cessac B. A discrete time neural network model with spiking neurons. Rigorous results on the spontaneous dynamics. J. Math. Biol. 2008;56(3):311–345. doi: 10.1007/s00285-007-0117-3.

- Cronin J. Mathematical Aspects of Hodgkin-Huxley Theory. Cambridge University Press, Cambridge; 1987.

- Sinai Y. Gibbs measures in ergodic theory. Russ. Math. Surv. 1972;27(4):21–69.

- Bowen R. Equilibrium States and the Ergodic Theory of Anosov Diffeomorphisms. Springer-Verlag, New York; 1975.

- Ruelle D. Thermodynamic Formalism. Addison-Wesley, Reading, Massachusetts; 1978.

- Ruelle D. Smooth dynamics and new theoretical ideas in nonequilibrium statistical mechanics. J. Stat. Phys. 1999;95:393–468.

- Keller G. Equilibrium States in Ergodic Theory. Cambridge University Press, Cambridge; 1998.

- Cessac B, Rostro-Gonzalez H, Vasquez JC, Viéville T. How Gibbs distribution may naturally arise from synaptic adaptation mechanisms: a model based argumentation. J. Stat. Phys. 2009;136(3):565–602. http://www.springerlink.com/content/3617162602160603.

- Naundorf B, Wolf F, Volgushev M. Unique features of action potential initiation in cortical neurons. Nature. 2006;440:1060–1063. doi: 10.1038/nature04610.

- McCormick D, Shu Y, Yu Y. Neurophysiology: Hodgkin and Huxley model - still standing? Nature. 2007;445:E1–E2. doi: 10.1038/nature05523.

- Naundorf B, Wolf F, Volgushev M. Neurophysiology: Hodgkin and Huxley model - still standing? (Reply) Nature. 2007;445:E2–E3. doi: 10.1038/nature05523.