Abstract

Detection and avoidance of impending obstacles is crucial to preventing head and body injuries in daily life. To safely avoid obstacles, locations of objects approaching the body surface are usually detected via the visual system and then used by the motor system to guide defensive movements. Mediating between visual input and motor output, the posterior parietal cortex plays an important role in integrating multisensory information in peripersonal space. We used functional MRI to map parietal areas that see and feel multisensory stimuli near or on the face and body. Tactile experiments using full-body air-puff stimulation suits revealed somatotopic areas of the face and multiple body parts forming a higher-level homunculus in the superior posterior parietal cortex. Visual experiments using wide-field looming stimuli revealed retinotopic maps that overlap with the parietal face and body areas in the postcentral sulcus at the most anterior border of the dorsal visual pathway. Starting at the parietal face area and moving medially and posteriorly into the lower-body areas, the median of visual polar-angle representations in these somatotopic areas gradually shifts from near the horizontal meridian into the lower visual field. These results suggest the parietal face and body areas fuse multisensory information in peripersonal space to guard an individual from head to toe.

Keywords: self-defense, multisensory homunculus, wearable stimulation

Obstacles are often present when we find our way through living and working environments in daily life (1–7). As we walk, our head, shoulders, elbows, hands, hips, legs, or toes may come close to many obstacles in our peripersonal space. We lower our head to get into vehicles with low door frames. We sidle through a crowd to avoid bumping into the shoulders of other pedestrians on a sidewalk. We prevent our hands and hips from hitting furniture when passing through a cluttered room (2). We watch our steps while walking up or down the stairs (4). We look a few steps ahead when walking on an uneven path to avoid tripping or stubbing our toes (4–7). In these circumstances, the brain must be able to detect obstacles that are about to impact the head, trunk, or limbs in order to coordinate appropriate avoidance actions (2). Failing to routinely detect and avoid obstacles could result in concussions or body injuries (7).

Electrophysiological studies on nonhuman primates suggested the posterior parietal cortex (PPC) plays an important role in defensive movements and obstacle avoidance (2, 8, 9). Specifically, neurons in the ventral intraparietal area of macaque monkeys respond to moving visual or tactile stimuli presented at congruent locations near or on the upper body surface, including the face and arms (8–11). A putative homolog of the macaque ventral intraparietal area in human PPC was reported by neuroimaging studies with stimuli delivered to restricted space near the face (12, 13). As obstacles could run into any body part from the ground up, neural mechanisms that only monitor obstacles approaching the face would be insufficient to guard an individual from head to toe. A complicated organization of action zones in the frontal cortex and in PPC has been suggested by recent nonhuman primate studies where electrical stimulation in different subdivisions evoked distinct movements of the face, forelimb, or hindlimb (11, 14–18). Based on the locations of movements evoked, action zones are approximately organized into overlapping areas of upper, middle, and lower spaces near the body. Other studies using tactile stimulation or retrograde neuronal tracing on nonhuman primates have also suggested that topographic organizations of multiple body parts exist in the PPC, and some subdivisions also receive visual input (19–22). A few human neuroimaging studies have shown evidence of distinct body-part representations in the superior parietal lobule (23–25). Whether a full-body multisensory organization of peripersonal space exists in the human PPC remains unknown. Searching for maps in human brains with electrophysiological techniques, such as mapping Penfield’s homunculus in primary motor and somatosensory cortices (M-I and S-I) using electrical stimulation (26), is possible in patients undergoing brain surgery. 
However, the invasive procedures used to comprehensively stimulate and probe widespread cortical regions in animals cannot be applied to humans.

We have developed wearable tactile stimulation techniques for noninvasive stimulation on the body surface from head to toe in human functional MRI (fMRI) experiments. We show that virtually the entire body, a higher-level homunculus, is represented in the superior PPC. We also show the relative topographical locations and overlaps among multiple body-part representations. Visual fMRI experiments in the same subjects using wide-field looming stimuli are then conducted to investigate the proportion of visual-tactile overlap and map visual field representations in these parietal body-part areas.

Results

Tactile Mapping Results.

Sixteen subjects participated in tactile fMRI experiments, during which they wore MR-compatible suits that delivered computer-controlled air puffs to the surface of multiple body parts (Fig. 1 and Fig. S1). Two distinct body parts were contrasted in each 256-s scan (SI Methods). The number of subjects differed across four tactile mapping paradigms because of limitations on their availability during the study. Functional images showing severe motion artifacts or no periodic activation at the stimulus frequency (eight cycles per scan) because of fatigue or sleepiness, as reported by the subjects, were excluded from further analysis. The resulting numbers of subjects included in the data analysis were: 14 for the “face vs. fingers,” 12 for the “face vs. legs,” 12 for the “lips vs. shoulders,” and 8 for the “fingers vs. toes” paradigms.

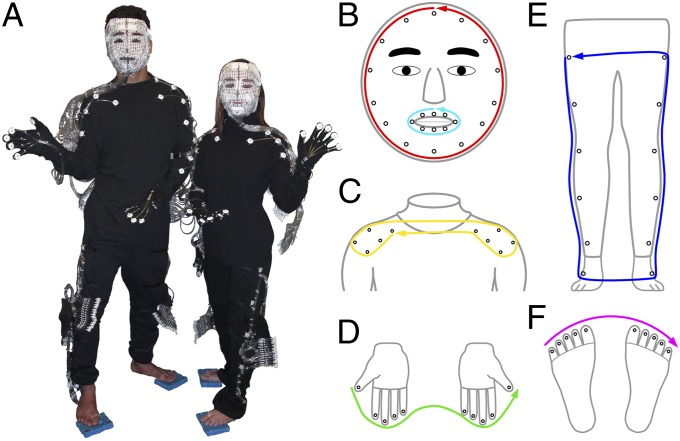

Fig. 1.

Tactile stimulation on multiple body parts. (A) Male and female tactile suits. A plastic tube ending with an elbow fitting delivers air puffs to the central hole of each white button attached to the suit. (B–F) Schematic diagrams of stimulation sites (black open circles) and traveling paths on the (B) face and lips, (C) shoulders (including part of the upper arms), (D) fingertips, (E) legs and ankles, and (F) toes.

Tactile mapping results are shown in a close-up view of the superior parietal region for each subject (Fig. 2 and Figs. S2 and S3). The activation maps are thresholded at a moderate level of statistical significance [F(2, 102) = 4.82, P = 0.01, uncorrected] across all subjects to show the greater extent of each body-part representation and overlap between adjacent representations. Surface-based regions of interest (ROIs) for each parietal body-part representation in single-subject maps were manually traced by referring to the locations and extents of significant activations in the superior parietal region on group-average maps (Fig. S4). A summary of single-subject body-part maps (Fig. S3) was created by combining respective ROI contours in Fig. S2. In the initial search of new areas, these tentative contours of ROIs were merely used to assist with interpretation of noisy activations on single-subject maps. When multiple discrete representations of a body part were activated in the superior parietal region, areas that are most consistent with the group-average maps were selected as ROIs. For example, the right hemisphere of subject 1 (S-1 in Fig. S2A) shows one additional face and two additional finger representations posterior to the selected face and finger ROIs.

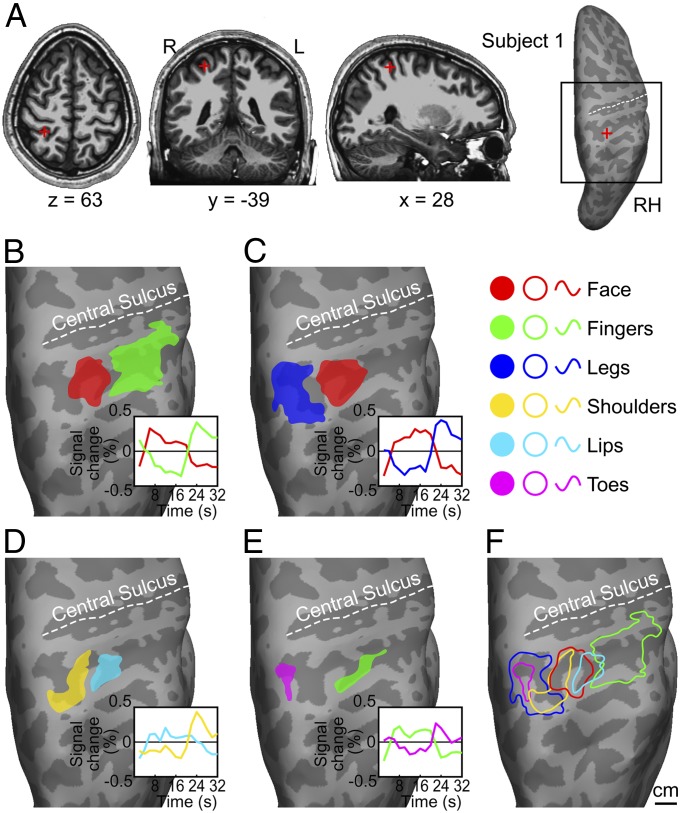

Fig. 2.

Parietal face and body areas in a representative subject. (A) Anatomical location and Talairach coordinates of the parietal face area in structural images (Left three panels) and on an inflated cortical surface (Rightmost panel). The black square indicates the location of a close-up view of the superior posterior parietal region shown below. RH, right hemisphere. (B–E) Body-part ROIs and their average signal changes (Insets) for (B) face vs. fingers scans, (C) face vs. legs scans, (D) lips vs. shoulders scans, and (E) fingers vs. toes scans. (F) A summary of parietal face and body areas. Contours were redrawn from the ROIs in B–E. To reduce visual clutter, contours of face and finger ROIs from C and E were not shown in F.

Group-average maps of the four tactile mapping paradigms were rendered with activations at the same statistical threshold [group mean F(2, 102) = 3.09, P = 0.05, uncorrected] on subject 1’s cortical surfaces (Fig. S4), showing activations limited to the precentral gyrus, central sulcus, lateral sulcus, postcentral gyrus, and postcentral sulcus bilaterally. A summary of group-average maps rendered on subject 1’s cortical surfaces (Fig. S5) was created by combining all activation contours from Fig. S4.
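As a consistency check on the tactile thresholds quoted above, the upper-tail probability of an F statistic with 2 numerator degrees of freedom has a closed form, so the reported F and P values can be compared without a statistics library. The sketch below is illustrative only: the function name is hypothetical, and it does not reproduce the analysis pipeline, which is described in SI Methods.

```python
def f_tail_prob_2dof(f_value: float, denom_dof: int) -> float:
    """Upper-tail probability P(F > f_value) for an F(2, denom_dof) distribution.

    With 2 numerator degrees of freedom the tail reduces to
    (1 + 2 * f / d) ** (-d / 2), where d is the denominator dof.
    """
    if f_value < 0 or denom_dof <= 0:
        raise ValueError("f_value must be >= 0 and denom_dof must be > 0")
    return (1.0 + 2.0 * f_value / denom_dof) ** (-denom_dof / 2.0)


# Thresholds quoted in the text for the tactile maps.
single_subject_p = f_tail_prob_2dof(4.82, 102)  # reported as P = 0.01
group_average_p = f_tail_prob_2dof(3.09, 102)   # reported as P = 0.05

print(f"{single_subject_p:.3f} {group_average_p:.3f}")
```

Both values agree with the reported uncorrected P values to within rounding.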

Talairach coordinates of the geometric center of each ROI are listed on single-subject maps (Fig. S2) and plotted in insets over individual summary maps (Fig. S3). The distributions of single-subject ROI centers, including their mean Talairach coordinates and SD of Euclidean distances from the center of each body-part cluster, are summarized in Table S1 and shown on the x-y plane in Fig. 3A.
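The cluster summary described above can be sketched as follows. This is a minimal illustration, not the procedure used for Table S1: the function name and the example coordinates are hypothetical, and it assumes that "SD of Euclidean distances" means the sample standard deviation of each ROI center's distance from the cluster mean.

```python
import math
from statistics import mean, stdev


def cluster_summary(centers):
    """Summarize one body-part cluster of ROI centers.

    centers: list of (x, y, z) Talairach coordinates, one per subject ROI.
    Returns the mean coordinate and the SD of Euclidean distances to it.
    """
    if not centers:
        raise ValueError("at least one ROI center is required")
    cluster_center = tuple(mean(c[axis] for c in centers) for axis in range(3))
    distances = [math.dist(c, cluster_center) for c in centers]
    # Sample SD needs two or more values; a single ROI has no spread.
    spread = stdev(distances) if len(distances) > 1 else 0.0
    return cluster_center, spread


# Hypothetical ROI centers (mm), for illustration only.
example_centers = [(-20.0, -40.0, 60.0), (-24.0, -44.0, 62.0), (-22.0, -36.0, 58.0)]
example_center, example_sd = cluster_summary(example_centers)
print(example_center, round(example_sd, 2))
```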

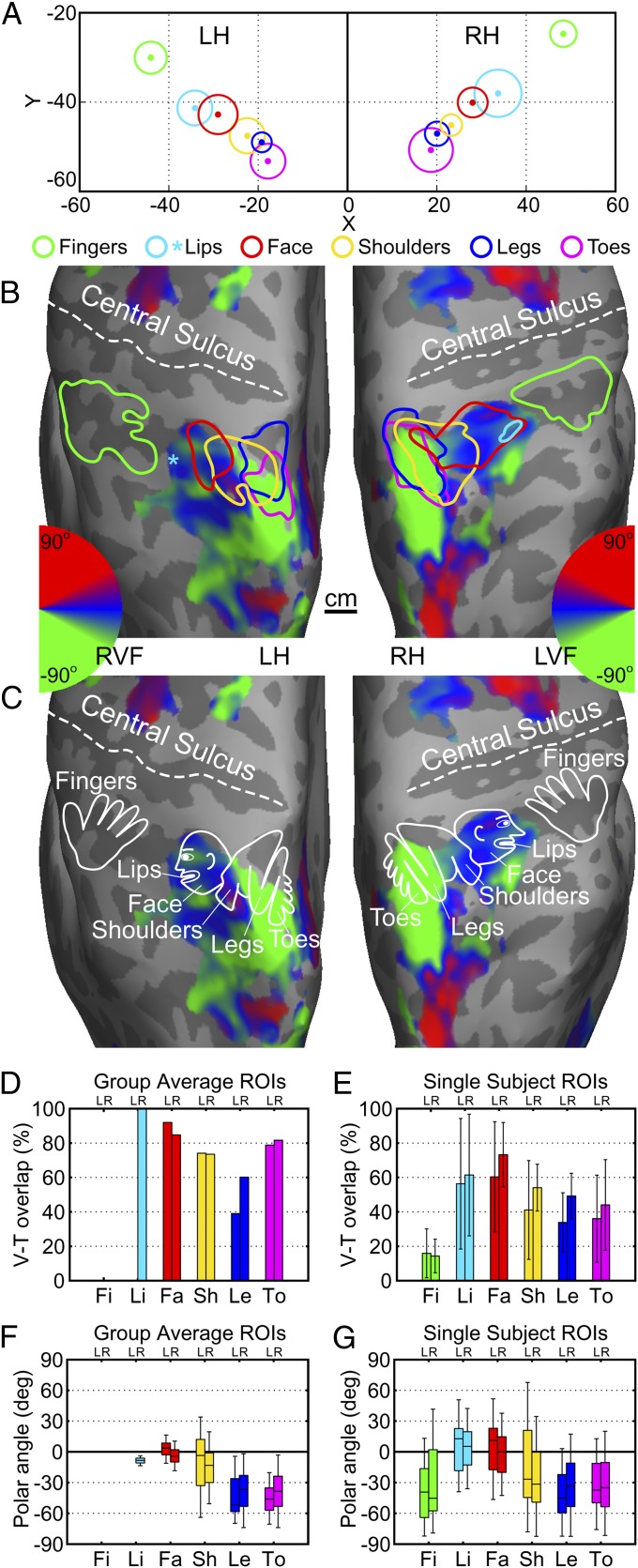

Fig. 3.

A multisensory homunculus in the superior posterior parietal cortex. (A) Distributions of Talairach coordinates of body-part ROI centers across subjects. Color dots, cluster centers; radius of each outer circle, SD of Euclidean distance from each cluster center (Table S1). (B) Contours of group-average body-part ROIs (P = 0.05, uncorrected) overlaid on group-average retinotopic maps (P = 0.05, uncorrected) rendered on subject 1’s cortical surfaces. A cyan asterisk indicates the location of average lip ROI center from the left hemispheres of subjects (n = 7) showing significant activations (Fig. S2C). Color wheels, polar angle of the contralateral visual hemifield. (C) A model of the parietal homunculus overlaid on group-average retinotopic maps. (D and E) Percentage of V-T overlap in each body-part ROI defined on group-average (D) and single-subject (E) maps. Error bar, SD. (F and G) Box plots of the distribution of polar angle within each body-part ROI outlined on group-average (F) and single-subject (G) maps. Each box represents the interquartile range, the line within each box indicates the median, and whiskers cover 90% of the distribution. LVF, left visual field; RVF, right visual field. LH and L, left hemisphere; RH and R, right hemisphere. Fi, fingers; Li, lips; Fa, face; Sh, shoulders; Le, legs; To, toes.

Body-part representations in the somatomotor cortex.

Representations of body parts are summarized in terms of their sulcal and gyral locations in the somatomotor cortex (Figs. S4 and S5) rather than using probabilistic Brodmann’s areas (e.g., 3a, 3b, 1, 2) as in other studies (27–29). Face representations overlap with the medial/superior portion of lip representations on both precentral and postcentral gyri. A distinct finger representation is located medial and superior to the superior end of the lip and face representations in the precentral gyrus bilaterally. Another finger representation is located medial and superior to the lip and face representations in the postcentral gyrus, and it extends into the ventral postcentral sulcus. We suggest that this finger representation overlaps with the ventral part of S-I digit representations (27, 28) and a parietal hand area at the confluence of ventral postcentral sulcus and anterior intraparietal sulcus (25, 30–32). An area of the toe representation partially overlaps with the superior part of the face representation in the central sulcus bilaterally. This area is inconsistent with the more medial location of toe representation depicted in Penfield’s homunculus in S-I (26) (SI Methods and SI Results). Easily detectable air puffs on the legs and shoulders did not activate S-I areas beyond the posterior part of the postcentral gyrus (Discussion, SI Methods, and SI Results). Finally, secondary somatosensory representations (S-II) of the face, lips, fingers, and shoulders were found in the lateral sulcus bilaterally.

Body-part representations in the superior PPC.

Representations of six body parts in the superior PPC are illustrated in detail for a representative subject (Fig. 2). In scans contrasting stimulation on the face and fingers (Fig. 2B), air puffs circling on the face activated a parietal face area (13) located at the superior part of the postcentral sulcus (Fig. 2A), and air puffs sweeping across fingertips activated a parietal finger area lateral and ventral to the parietal face area. In scans contrasting the face and legs (Fig. 2C), essentially the same parietal face area was activated. A parietal leg area medial to the parietal face area was activated by air puffs traveling down and up the legs. In scans contrasting the lips and shoulders (Fig. 2D), the parietal lip area was located lateral and anterior to the parietal shoulder area. In scans contrasting fingers and toes (Fig. 2E), the parietal toe area was located at the medial and superior end of the postcentral sulcus. The location of the parietal finger area in the fingers vs. toes scans was consistent with the location of the parietal finger area in the face vs. fingers scans, although the area of the former was smaller. A summary of somatotopic organization created by combining the contours of body-part ROIs from Fig. 2 B–E shows an approximate linear arrangement of parietal leg, toe, shoulder, face, lip, and finger areas from medial/dorsal to lateral/ventral parts of the postcentral sulcus (Fig. 2F). This somatotopic map also shows significant overlap between adjacent body-part representations. The parietal toe area overlaps with the medial portion of the parietal leg area. The parietal shoulder and lip areas respectively overlap with the medial and lateral portions of the parietal face area. The parietal shoulder area extends medially and posteriorly to overlap with the parietal leg and toe areas. The parietal finger area slightly overlaps with the lateral portion of the parietal lip area. 
A summary of single-subject body-part ROIs shows consistent somatotopic organization in superior PPC across subjects (Fig. S3). The parietal finger and lip areas are located lateral to the parietal face area, and the parietal shoulder, leg, and toe areas are located medially.

Group average maps (Fig. 3 B and C, and Fig. S5) and clusters of ROI centers (Fig. 3A) show bilateral homuncular organizations with an approximate linear arrangement of parietal toe, leg, shoulder, face, lip, and finger areas distributed medially to laterally along the postcentral sulcus. As shown in Fig. S5, the spatial arrangement among the parietal face, lip, and finger areas is different from the organization of the S-I/M-I homunculus in humans and nonhuman primates (26, 33), where lips and face are located ventral and lateral to digit representations.

Visual Mapping Results.

Ten subjects participated in separate visual fMRI sessions, where they directly viewed looming balls appearing to pass near their faces with the target angle slowly rotating counterclockwise or clockwise in the visual field with each subsequent ball (Fig. S6). One-fifth of the white balls randomly turned red as they loomed toward the face, and they were designated as tokens of threatening obstacles. Subjects pressed a button to make a red ball return to white as if they had successfully detected and dodged an obstacle. All but one subject achieved near-perfect task performance (Table S2). Sparse activation in subject 7’s left hemisphere (S-7 in Fig. S7) was probably a result of poor task performance on account of self-reported sleepiness.

Looming balls periodically passing around the face activated many retinotopic areas in the occipital, temporal, parietal, and frontal lobes. Fig. S7 shows contours of four body-part ROIs from Fig. S3 overlaid on single-subject retinotopic maps rendered at the same statistical significance [F(2, 230) = 4.7, P = 0.01, uncorrected] in a close-up view of the superior parietal region. Fig. S5 shows contours of group-average somatotopic ROIs overlaid on group-average retinotopic maps [group mean F(2, 230) = 3.04, P = 0.05, uncorrected] in multiple views. Group-average retinotopic maps (Fig. S5) show that looming stimuli activated areas V1, V2, V3, and V4 in the occipital lobe (34), unnamed areas beyond the peripheral borders of V1 and V2, area V6 in the parieto-occipital sulcus (35), an area in the precuneus (PCu), areas MT, MST, and FST in the temporal lobe (36), areas V3A/V3B in the dorsal occipital lobe (37), areas in the intraparietal sulcus and superior parietal lobule (38), the polysensory zone in the precentral gyrus (2), and the FEF complex in the frontal cortex (38). The most anterior retinotopic maps overlapping with the parietal face and body areas in the superior parietal region are discussed with quantitative measures below.

Overlaps between visual and tactile maps.

The level of retinotopic activations in somatotopic areas was measured by the percentage of visual-tactile (V-T) overlap in each body-part ROI defined on group-average and single-subject maps (SI Methods). These measures differ between group-average and single-subject ROIs because of the effects of spatial smoothing by group averaging and statistical thresholds selected, but the relative levels across different body-part ROIs remain consistent. The face ROIs from the face vs. legs maps and the finger ROIs from the fingers vs. toes maps were not included in the analysis because they were redundant ROIs used only as known reference points. As shown in Fig. 3 D and E, parietal face and lip areas contain the highest levels of V-T overlap in both group-average and single-subject ROIs. Lower levels of V-T overlap were observed in the posterior parts of the parietal shoulder, leg, and toe areas, as shown on group-average and single-subject maps (Fig. 3B, and Figs. S5 and S7). Parietal finger areas contain the lowest level of V-T overlap in single-subject ROIs (Fig. 3E) and no significant visual activation in the group-average ROIs (Fig. 3D).
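A minimal sketch of the V-T overlap measure follows, under the assumption that it is the percentage of surface vertices in a body-part ROI that also carry suprathreshold visual activation. The exact definition is given in SI Methods and may, for example, weight vertices by surface area; the function name and vertex indices here are hypothetical.

```python
def vt_overlap_percent(roi_vertices, visual_vertices):
    """Percentage of ROI vertices that are also visually activated.

    roi_vertices: vertex indices inside one tactile body-part ROI.
    visual_vertices: vertex indices with suprathreshold retinotopic activation.
    """
    roi = set(roi_vertices)
    if not roi:
        raise ValueError("ROI must contain at least one vertex")
    shared = roi & set(visual_vertices)
    return 100.0 * len(shared) / len(roi)


# Hypothetical vertex sets: 200 ROI vertices, 50 of them visually active.
example_overlap = vt_overlap_percent(range(0, 200), range(150, 400))
print(example_overlap)  # 25.0
```

Because the result depends on the thresholds used to define both vertex sets, only the relative levels across body-part ROIs are comparable between group-average and single-subject maps, as noted above.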

Visual field representations in body-part ROIs.

The distribution of polar-angle representations of the contralateral visual hemifield was computed from each of the group-average and single-subject ROIs (SI Methods). Starting at the parietal face area and traveling medially and posteriorly through the parietal shoulder area and into the parietal leg and toe areas (Fig. 3B), the median of polar-angle representations in these body-part ROIs gradually shifts from near the horizontal meridian into the lower visual field (Fig. 3 F and G). The distribution of polar-angle representations was compressed in group-average ROIs, but the trend of shifting into the lower visual field was consistent with the trend in single-subject ROIs.
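The box-plot summary used for these distributions (median, interquartile range, and whiskers covering 90% of the values; Fig. 3 F and G) can be sketched as below. The angle convention is an assumption made for illustration: degrees within the contralateral hemifield, with 0 at the horizontal meridian and negative values in the lower visual field. The function name and the example data are hypothetical.

```python
from statistics import quantiles


def polar_angle_box(angles_deg):
    """Median, interquartile range, and 5th-95th percentile whiskers.

    angles_deg: polar angles (degrees) of the visually activated vertices
    inside one body-part ROI; at least two values are required.
    """
    # n=20 yields cut points at 5%, 10%, ..., 95% (19 values).
    cuts = quantiles(angles_deg, n=20, method="inclusive")
    return {
        "whisker_low": cuts[0],    # 5th percentile
        "q1": cuts[4],             # 25th percentile
        "median": cuts[9],         # 50th percentile
        "q3": cuts[14],            # 75th percentile
        "whisker_high": cuts[18],  # 95th percentile
    }


# Hypothetical ROI whose angles lie mostly in the lower visual field.
example_box = polar_angle_box(list(range(-90, 11)))
print(example_box["median"])  # -40.0
```

A median that becomes more negative from one ROI to the next would correspond to the shift toward the lower visual field described above.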

Discussion

In daily life, we encounter obstacles as stationary objects that get in the way while we walk, as moving objects that loom toward us while we remain immobile, or both (2, 8, 9). In these scenarios, information about impending obstacles is usually processed by the visual system and then used by the motor system to plan appropriate avoidance movements. The intermediate stages between visual input and motor output involve awareness of one’s own body parts, estimation of the obstacle’s location relative to different body parts, and planning movements of the body parts concerned. Electrophysiological studies on nonhuman primates have suggested that the PPC and premotor cortex contain neural representations of body schema and peripersonal space (2, 39–43) that play important roles in obstacle avoidance. Neuropsychological studies on brain-damaged patients have also provided behavioral evidence of multisensory representations of space near multiple body parts (41–44). Neuroimaging studies on healthy participants have revealed neural representations of multisensory space near or on the face and hands in human PPC (12, 13, 45, 46). In this study, we used wearable tactile stimulation techniques and wide-field looming stimuli to map multisensory representations of the entire body in fMRI experiments.

Air puffs delivered to the face and body activated somatotopic maps in S-I, S-II, and M-I, as well as in the PPC; looming balls passing near the face activated retinotopic maps in the occipital, temporal, parietal, and frontal lobes. Extending from the occipital pole and the central sulcus, the mosaics of maps for vision and touch meet and overlap extensively in the superior-medial part of the postcentral sulcus (Fig. S5), which is the most anterior border that can be driven by visual stimuli in the dorsal stream, also known as the “where” or “how” pathway (47, 48). Based on this evidence, we propose a model of a multisensory homunculus as depicted in Fig. 3C. This model suggests that the parietal face area sees and feels multisensory stimuli around the head, the parietal shoulder area looks after the middle space near the trunk, and the parietal leg and toe areas guard the space near the lower extremities. As shown on single-subject and group-average maps (Figs. S3 and S5), the posterior parietal homunculus occupies a more compact region of head-to-toe (excluding the fingers) representations than the homunculus in S-I. We suggest that the close proximity among parietal face and body areas could be useful for efficiently coordinating avoidance maneuvers involving the head, trunk, and limbs while still maintaining whole-body balance during locomotion (2, 3).

As bipedal animals, we rely on visual information to navigate through complex environments (1–9, 41). Walking on a flat surface, we usually look ahead without having to directly attend to our feet or mind the exact landing location of each step. Recent behavioral studies have suggested that information in the lower visual field is important for guiding locomotion in the presence of ground obstacles or steps (4–7). For example, step lighting is essential to prevent falling in a darkened movie theater. In this study, we found that the posterior parts of the parietal leg and toe areas overlap or adjoin retinotopic areas activated by looming stimuli predominantly in the lower visual field. We suggest that they could be human homologs of a multisensory area in the caudal part of the superior parietal lobule (area PEc) of macaque monkeys (21, 22), and that they are important for visually guided locomotion. One limitation of the present study is that looming stimuli were presented near the face but not close to the lower body. In a direct-view setup, however, the trunk and lower limbs on the other side of the screen were actually aligned with the subject’s lower visual field. In future studies, multisensory stimuli presented immediately next to or on the lower limbs would be required to study the direct overlap between visual and tactile representations of the lower-body space.

Previous neurophysiological studies on macaque monkeys have shown that the majority of neurons in the ventral intraparietal area respond to multisensory stimuli near or on the upper body, including the face and arms, but not the lower body. Hindlimb representations in the medial PPC have been suggested by recent studies on nonhuman primates (15, 18, 21, 22). In this study, we found parietal leg and toe areas at the superior and medial end of the postcentral sulcus, a superior parietal region that is posterior to S-I in the postcentral gyrus driven by passive stimulation on the foot (49, 50), anterior to a posterior parietal area activated by self-generated foot movements with visual feedback (51), and consistent with a superior parietal area activated during the planning of foot movements (25). To verify that these parietal body areas were not simply primary somatosensory cortex, we conducted supplementary experiments to identify S-I representations of the shoulders, arms, legs, and toes (SI Methods, SI Results, and Figs. S8 and S9). Brushing stimulation effectively activated wider regions encompassing S-I in the postcentral gyrus than previously activated by air puffs (Fig. S8 C and D). The lack of complete S-I activations extending into the central sulcus and postcentral gyrus may be explained as follows. First, gentle air puffs rapidly circling on one body part did not result in intense or sustained stimulation at a single site. Second, punctate stimuli moving across a large region on the body surface did not always result in detectable fMRI signals from S-I neurons, which likely have smaller receptive fields than neurons in the parietal body areas. A similar effect (stronger parietal than S-I signals) was observed when comparing activations in S-I face representation and parietal face area in the present study (e.g., face representation was absent in the postcentral gyrus on group-average maps in Fig. S4A) and in our previous study (13). 
Finally, at the resolution of the functional images used in this study, the activation maps are susceptible to misalignment between functional and anatomical images as well as to group averaging. A slight shift in the extent of an activation could change how the functional data are interpreted in terms of anatomical locations (Fig. S9). Further studies with more effective stimuli and improved methods are required to resolve these limitations.

This study has added several pieces to the jigsaw puzzle of the parietal body map, but several gaps are yet to be filled. Even with 64 stimulation sites on six body parts, the map was still somewhat coarse; this can be refined by using high-density stimulation on one or more body parts in high-resolution fMRI experiments (24). Additionally, the parietal homuncular model was constructed with one distinct area for each body-part representation. Single-subject maps (Fig. S2) suggest that multiple representations of a body part exist in the superior PPC. Repeated within-subject scans are required to validate each distinct representation observed. Finally, the present study only presented looming stimuli near the face in eye-centered coordinates, which resulted in no visual activation in the parietal finger area. Previous neurophysiological and neuroimaging studies suggested that regions in the ventral premotor cortex and anterior inferior parietal cortex encode visual space near the hands, regardless of hand and gaze positions in the visual field (39, 45, 52, 53). To investigate whether other parietal body areas respond to visual stimuli in “body part-centered” coordinates (39), air puffs can be combined with fiber optic lights to deliver multisensory stimuli anchored to the surface of multiple body parts (54). Following lights at variable locations, the gaze position can be directed to or away from a body part receiving visual or tactile stimuli. If a multisensory area is activated by the same stimuli, regardless of gaze position, one may conclude that this area also contains “body part-centered” representations.

Recent studies have suggested that the motor, premotor, and posterior parietal cortices are organized into action zones for generating families of complex movements (11, 14–19, 24, 55), rather than as mere body-part catalogs. The present study suggests that a multisensory topographical representation of the space near the face and body exists in human PPC. We also found an area in the precentral gyrus that was activated both by air puffs on the face and lips and by looming stimuli (Fig. S5), which may be considered the human homolog of the polysensory zone in macaque monkeys (2). No other multisensory areas were found in the motor and premotor cortices, which could be explained by the fact that subjects only responded with button presses to token threatening targets. Realistic defensive actions, such as dodging and fending off obstacles, are usually accompanied by head movements, which would result in severe motion artifacts in fMRI data. Because human fMRI experiments restrict posture and sudden movements (24, 25, 32, 50, 51), further studies using other noninvasive neuroimaging techniques that allow moderate head motion, such as EEG and functional near-infrared spectroscopy, will be required to investigate how parietal multisensory areas coordinate with the premotor and motor areas to execute realistic defensive movements.

The most interesting finding in this study is the direct overlap between tactile and visual maps for much larger parts of the body in the superior PPC than have been previously reported. Living in crowded cities, modern humans struggle to navigate through complex structures occupied by obstacles and other people in their immediate surroundings without bumping into anything. This study suggests that the parietal face area helps to “watch your head!” and that the parietal body areas (including the shoulder, leg, and toe representations) help to “watch your shoulder!” and “watch your step!”

Methods

Subjects.

Twenty healthy subjects (8 males, 12 females; 18–30 y old) with normal or corrected-to-normal vision were paid to participate in this study. All subjects gave informed consent according to protocols approved by the Human Research Protections Program of the University of California at San Diego. Eight of the 20 subjects participated in the supplementary experiments (SI Methods).

Wearable Tactile Stimulation Techniques.

Tactile stimuli were delivered to the surface of multiple body parts in the MRI scanner using wearable tactile stimulation techniques, including a custom-built 64-channel pneumatic control system (Fig. S1) and MR-compatible tactile suits (Fig. 1). The pneumatic control system was redesigned from the Dodecapus stimulation system (56). A full-body tactile suit includes a facial mask, a turtleneck shirt, a pair of gloves, a pair of pants, two ankle pads, and two toe pads (Fig. 1). A unique mask was molded on each subject’s face using X-Lite thermoplastic sheets, and foam pads were trimmed to match each subject’s feet and toes. Sequences of computer-controlled air puffs were delivered to desired sites on the body surface via plastic tubes and nozzles embedded in the suit, including 32 sites on the mask (face and lips), 32 sites on the upper-body suit (neck, shoulders, arms, and fingertips), and 32 sites on the lower-body suit (thighs, calves, ankles, and toes). A total of 64 of these stimulation sites (Fig. 1 B–F) on the tactile suit were used in this study.

Tactile Mapping Paradigms.

In multiple tactile mapping sessions, subjects participated in two-condition, block-design scans, including face vs. fingers, face vs. legs, lips vs. shoulders, and fingers vs. toes paradigms. Each body-part pair was empirically selected so that their representations would not overlap in the postcentral sulcus, based on the results of pilot experiments contrasting air-puff stimulation on one body part with no stimulation. Each paradigm was scanned twice in the same session. Each 256-s scan consisted of eight cycles of two conditions, where sequences of air puffs were delivered to the first body part for 16 s and to the second body part for 16 s, repeatedly. Each 16-s block consisted of 160 100-ms air puffs continuously circling along a fixed path (Fig. 1 B–F) on one body part. There was no delay between air puffs delivered to two consecutive sites on a path, and all subjects reported that they felt a continuous sensation of airflow along the path, which was different from the stimulation pattern used to generate the cutaneous rabbit illusion (57). All stimulation paths started with the right-hand side of the body. The last paths on the face and legs were incomplete at the end of 16 s because 160 is not evenly divisible by the number of sites on the face or legs.
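The timing of this block design can be sketched as follows. This is a simplified illustration, not the stimulus-control code: the function names are hypothetical, each path is treated as a simple cycle through its sites, and the site counts in the example are placeholders because the number of sites per body part is not stated here.

```python
def block_site_sequence(n_sites, block_ms=16_000, puff_ms=100):
    """Site index for every air puff in one block, cycling along a fixed path.

    Returns (sequence, complete_laps, leftover_puffs); leftover_puffs > 0
    means the last pass along the path is cut short when the block ends.
    """
    if n_sites <= 0:
        raise ValueError("n_sites must be positive")
    n_puffs = block_ms // puff_ms  # 160 puffs of 100 ms in a 16-s block
    sequence = [i % n_sites for i in range(n_puffs)]
    complete_laps, leftover_puffs = divmod(n_puffs, n_sites)
    return sequence, complete_laps, leftover_puffs


def scan_blocks(n_cycles=8, block_s=16):
    """Onset (s) and condition label of every block in one two-condition scan."""
    blocks = []
    for cycle in range(n_cycles):
        start = cycle * 2 * block_s
        blocks.append((start, "first body part"))
        blocks.append((start + block_s, "second body part"))
    return blocks


# Placeholder site counts: 12 does not divide 160, 8 does.
seq12, laps12, left12 = block_site_sequence(12)
seq8, laps8, left8 = block_site_sequence(8)
blocks = scan_blocks()
print(laps12, left12, laps8, left8, blocks[-1][0] + 16)
```

With a hypothetical 12-site path, the block ends 4 puffs into a 14th pass, which illustrates why some paths were incomplete at the end of 16 s.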

Setup and Instruction for Tactile Experiments.

Subjects put on the tactile suit, walked into the MRI room, and lay supine on a cushion pad on the scanner table. Bundles of plastic tubes extending out of the pneumatic control system were connected to the corresponding tubes embedded in the tactile suit via arrays of quick connectors. Velcro and medical tape were used to stabilize stimulation sites on the shoulders, legs, ankles, and toes. The subject’s face was covered with the mask, and additional foam padding was inserted between the mask and the head coil to immobilize the head. To mask the hissing noise of air puffs, subjects wore earplugs and listened to white-noise radio through MR-compatible headphones. Subjects were instructed to close their eyes in complete darkness during the entire session and to covertly follow the path of air puffs on each body part without making any response, a task that required spatial and temporal integration of tactile motion across the body surface.

Visual Mapping Paradigm.

In a visual mapping session, each subject viewed looming stimuli in four 512-s scans, two with stimulus angle slowly advancing in the counterclockwise direction and two in the clockwise direction. In each scan, subjects fixated on a central red cross while attending to looming balls apparently passing by their faces for eight rounds at a 64-s period. Each round consisted of 40 looming balls (1.6 s per trial) that appeared at increasing (counterclockwise) or decreasing (clockwise) polar angles (in 9° steps) from the right horizon. This paradigm was a variant of the phase-encoded design used in retinotopic mapping experiments (34, 35). At the beginning of each 1.6-s trial, a white ball appeared at 5.73° eccentricity and gradually expanded and moved toward the far periphery (Fig. S6). The ball moved completely off the screen 983 ms into the trial. In 20% of all 320 trials, the white ball randomly turned red between 450 and 650 ms after it appeared. The red ball returned to white when the subject pressed a button before the next ball appeared. The reaction time to each red-ball target, accuracy, and false responses were recorded on the stimulus computer (Table S2). Each new trial started with a white ball, regardless of the subject’s response in the previous trial.
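The trial structure of one visual scan can be laid out as a short Python sketch. It is an illustration under stated assumptions rather than the stimulus program used in the study: the function and field names are hypothetical, the first ball of each round is assumed to appear at 0° (the right horizon), and red-ball targets are assumed to be exactly 20% of trials drawn uniformly at random, with the color change at an integer millisecond in the 450 to 650 ms window.

```python
import random
from typing import Dict, List, Optional

N_ROUNDS = 8           # rounds per scan (from the text)
TRIALS_PER_ROUND = 40  # looming balls per round (from the text)
TRIAL_MS = 1600        # 1.6 s per trial (from the text)
STEP_DEG = 9           # polar-angle step between trials (from the text)
TARGET_PERCENT = 20    # percentage of trials with a red-ball target (from the text)


def looming_schedule(direction: str = "ccw", seed: int = 0) -> List[Dict]:
    """Build the trial list for one 512-s scan.

    direction: "ccw" for increasing polar angle, "cw" for decreasing.
    Assumption: each round starts at 0 deg (right horizon).
    """
    rng = random.Random(seed)
    n_trials = N_ROUNDS * TRIALS_PER_ROUND            # 320 trials
    n_targets = n_trials * TARGET_PERCENT // 100      # 64 targets
    target_trials = set(rng.sample(range(n_trials), n_targets))
    sign = 1 if direction == "ccw" else -1

    trials = []
    for i in range(n_trials):
        step = i % TRIALS_PER_ROUND
        turn_red_ms: Optional[int] = None
        if i in target_trials:
            # Assumed uniform draw over the reported 450-650 ms window.
            turn_red_ms = rng.randint(450, 650)
        trials.append({
            "onset_ms": i * TRIAL_MS,
            "round": i // TRIALS_PER_ROUND,
            "polar_angle_deg": (sign * step * STEP_DEG) % 360,
            "turn_red_ms": turn_red_ms,
        })
    return trials


if __name__ == "__main__":
    ccw = looming_schedule("ccw")
    print("trials:", len(ccw))
    print("scan length (s):", (ccw[-1]["onset_ms"] + TRIAL_MS) / 1000)
    print("first angles (ccw):", [t["polar_angle_deg"] for t in ccw[:4]])
```

Because 40 steps of 9° cover exactly 360°, each 64-s round sweeps the full visual field once, which is what lets the response phase at the 64-s period be read as a preferred polar angle.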

Setup and Instruction for Visual Experiments.

Subjects’ heads were propped up (∼30° forward) by foam padding in the head coil so that they could directly view the visual stimuli back-projected onto a 35 × 26-cm screen, which was ∼15 cm from their eyes. The direct-view setup resulted in an approximate field of view of 100 × 80°. Additional foam padding was used to immobilize the subject’s head. Subjects were instructed to keep their head still and maintain central fixation while attending to looming balls during all functional scans. An MR-compatible response box was placed under the subject’s right hand.
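The reported field of view can be checked against the screen geometry with the standard visual-angle formula, 2·atan(extent / (2 × distance)). The sketch below assumes a flat screen that is centered on, and perpendicular to, the line of sight; the function name is illustrative.

```python
import math


def visual_angle_deg(extent_cm: float, distance_cm: float) -> float:
    """Full visual angle subtended by a flat extent centered on the line of sight."""
    return math.degrees(2 * math.atan(extent_cm / (2 * distance_cm)))


if __name__ == "__main__":
    distance_cm = 15.0  # approximate eye-to-screen distance (from the text)
    width_deg = visual_angle_deg(35.0, distance_cm)   # screen width (from the text)
    height_deg = visual_angle_deg(26.0, distance_cm)  # screen height (from the text)
    print(f"field of view: {width_deg:.1f} x {height_deg:.1f} deg")
```

Under these assumptions the screen subtends roughly 99° horizontally and 82° vertically, consistent with the approximate 100 × 80° stated above given that the viewing distance is itself approximate.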

Acknowledgments

We thank the University of California at San Diego functional MRI center for MRI support, the Department of Cognitive Science for administrative support, and the Center for Research in Language for laboratory and office space. This study was supported by National Institute of Mental Health Grant R01 MH081990 and by a Royal Society Wolfson Research Merit Award (United Kingdom).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1207946109/-/DCSupplemental.

References

- 1. Hall ET. The Hidden Dimension. Garden City, NY: Doubleday; 1966.

- 2. Graziano MS, Cooke DF. Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia. 2006;44(13):2621–2635. doi: 10.1016/j.neuropsychologia.2005.09.011.

- 3. Logan D, et al. The many roles of vision during walking. Exp Brain Res. 2010;206(3):337–350. doi: 10.1007/s00221-010-2414-0.

- 4. Timmis MA, Bennett SJ, Buckley JG. Visuomotor control of step descent: Evidence of specialised role of the lower visual field. Exp Brain Res. 2009;195(2):219–227. doi: 10.1007/s00221-009-1773-x.

- 5. Marigold DS. Role of peripheral visual cues in online visual guidance of locomotion. Exerc Sport Sci Rev. 2008;36(3):145–151. doi: 10.1097/JES.0b013e31817bff72.

- 6. Marigold DS, Patla AE. Visual information from the lower visual field is important for walking across multi-surface terrain. Exp Brain Res. 2008;188(1):23–31. doi: 10.1007/s00221-008-1335-7.

- 7. Catena RD, van Donkelaar P, Halterman CI, Chou LS. Spatial orientation of attention and obstacle avoidance following concussion. Exp Brain Res. 2009;194(1):67–77. doi: 10.1007/s00221-008-1669-1.

- 8. Bremmer F. Navigation in space—The role of the macaque ventral intraparietal area. J Physiol. 2005;566(Pt 1):29–35. doi: 10.1113/jphysiol.2005.082552.

- 9. Bremmer F. Multisensory space: From eye-movements to self-motion. J Physiol. 2011;589(Pt 4):815–823. doi: 10.1113/jphysiol.2010.195537.

- 10. Duhamel JR, Colby CL, Goldberg ME. Ventral intraparietal area of the macaque: Congruent visual and somatic response properties. J Neurophysiol. 1998;79(1):126–136. doi: 10.1152/jn.1998.79.1.126.

- 11. Cooke DF, Taylor CS, Moore T, Graziano MS. Complex movements evoked by microstimulation of the ventral intraparietal area. Proc Natl Acad Sci USA. 2003;100(10):6163–6168. doi: 10.1073/pnas.1031751100.

- 12. Bremmer F, et al. Polymodal motion processing in posterior parietal and premotor cortex: A human fMRI study strongly implies equivalencies between humans and monkeys. Neuron. 2001;29(1):287–296. doi: 10.1016/s0896-6273(01)00198-2.

- 13. Sereno MI, Huang RS. A human parietal face area contains aligned head-centered visual and tactile maps. Nat Neurosci. 2006;9(10):1337–1343. doi: 10.1038/nn1777.

- 14. Graziano MS, Taylor CS, Moore T. Complex movements evoked by microstimulation of precentral cortex. Neuron. 2002;34(5):841–851. doi: 10.1016/s0896-6273(02)00698-0.

- 15. Stepniewska I, Fang PC, Kaas JH. Microstimulation reveals specialized subregions for different complex movements in posterior parietal cortex of prosimian galagos. Proc Natl Acad Sci USA. 2005;102(13):4878–4883. doi: 10.1073/pnas.0501048102.

- 16. Graziano MS, Aflalo TN. Rethinking cortical organization: Moving away from discrete areas arranged in hierarchies. Neuroscientist. 2007;13(2):138–147. doi: 10.1177/1073858406295918.

- 17. Graziano MS, Aflalo TN. Mapping behavioral repertoire onto the cortex. Neuron. 2007;56(2):239–251. doi: 10.1016/j.neuron.2007.09.013.

- 18. Kaas JH, Gharbawie OA, Stepniewska I. The organization and evolution of dorsal stream multisensory motor pathways in primates. Front Neuroanat. 2011;5:34. doi: 10.3389/fnana.2011.00034.

- 19. Padberg J, et al. Parallel evolution of cortical areas involved in skilled hand use. J Neurosci. 2007;27(38):10106–10115. doi: 10.1523/JNEUROSCI.2632-07.2007.

- 20. Seelke AM, et al. Topographic maps within Brodmann’s Area 5 of macaque monkeys. Cereb Cortex. 2012;22(8):1834–1850. doi: 10.1093/cercor/bhr257.

- 21. Breveglieri R, Galletti C, Monaco S, Fattori P. Visual, somatosensory, and bimodal activities in the macaque parietal area PEc. Cereb Cortex. 2008;18(4):806–816. doi: 10.1093/cercor/bhm127.

- 22. Bakola S, Gamberini M, Passarelli L, Fattori P, Galletti C. Cortical connections of parietal field PEc in the macaque: Linking vision and somatic sensation for the control of limb action. Cereb Cortex. 2010;20(11):2592–2604. doi: 10.1093/cercor/bhq007.

- 23. Fink GR, Frackowiak RS, Pietrzyk U, Passingham RE. Multiple nonprimary motor areas in the human cortex. J Neurophysiol. 1997;77(4):2164–2174. doi: 10.1152/jn.1997.77.4.2164.

- 24. Meier JD, Aflalo TN, Kastner S, Graziano MS. Complex organization of human primary motor cortex: A high-resolution fMRI study. J Neurophysiol. 2008;100(4):1800–1812. doi: 10.1152/jn.90531.2008.

- 25. Heed T, Beurze SM, Toni I, Röder B, Medendorp WP. Functional rather than effector-specific organization of human posterior parietal cortex. J Neurosci. 2011;31(8):3066–3076. doi: 10.1523/JNEUROSCI.4370-10.2011.

- 26. Penfield W, Rasmussen T. The Cerebral Cortex of Man. New York: Macmillan; 1950.

- 27. Moore CI, et al. Segregation of somatosensory activation in the human rolandic cortex using fMRI. J Neurophysiol. 2000;84(1):558–569. doi: 10.1152/jn.2000.84.1.558.

- 28. Nelson AJ, Chen R. Digit somatotopy within cortical areas of the postcentral gyrus in humans. Cereb Cortex. 2008;18(10):2341–2351. doi: 10.1093/cercor/bhm257.

- 29. Eickhoff SB, Grefkes C, Fink GR, Zilles K. Functional lateralization of face, hand, and trunk representation in anatomically defined human somatosensory areas. Cereb Cortex. 2008;18(12):2820–2830. doi: 10.1093/cercor/bhn039.

- 30. Burton H, Sinclair RJ. Attending to and remembering tactile stimuli: A review of brain imaging data and single-neuron responses. J Clin Neurophysiol. 2000;17(6):575–591. doi: 10.1097/00004691-200011000-00004.

- 31. Ruben J, et al. Somatotopic organization of human secondary somatosensory cortex. Cereb Cortex. 2001;11(5):463–473. doi: 10.1093/cercor/11.5.463.

- 32. Hinkley LB, Krubitzer LA, Padberg J, Disbrow EA. Visual-manual exploration and posterior parietal cortex in humans. J Neurophysiol. 2009;102(6):3433–3446. doi: 10.1152/jn.90785.2008.

- 33. Kaas JH, Nelson RJ, Sur M, Lin CS, Merzenich MM. Multiple representations of the body within the primary somatosensory cortex of primates. Science. 1979;204(4392):521–523. doi: 10.1126/science.107591.

- 34. Sereno MI, et al. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science. 1995;268(5212):889–893. doi: 10.1126/science.7754376.

- 35. Pitzalis S, et al. Wide-field retinotopy defines human cortical visual area v6. J Neurosci. 2006;26(30):7962–7973. doi: 10.1523/JNEUROSCI.0178-06.2006.

- 36. Kolster H, Peeters R, Orban GA. The retinotopic organization of the human middle temporal area MT/V5 and its cortical neighbors. J Neurosci. 2010;30(29):9801–9820. doi: 10.1523/JNEUROSCI.2069-10.2010.

- 37. Smith AT, Greenlee MW, Singh KD, Kraemer FM, Hennig J. The processing of first- and second-order motion in human visual cortex assessed by functional magnetic resonance imaging (fMRI). J Neurosci. 1998;18(10):3816–3830. doi: 10.1523/JNEUROSCI.18-10-03816.1998.

- 38. Konen CS, Kastner S. Representation of eye movements and stimulus motion in topographically organized areas of human posterior parietal cortex. J Neurosci. 2008;28(33):8361–8375. doi: 10.1523/JNEUROSCI.1930-08.2008.

- 39. Graziano MS, Yap GS, Gross CG. Coding of visual space by premotor neurons. Science. 1994;266(5187):1054–1057. doi: 10.1126/science.7973661.

- 40. Serino A, Haggard P. Touch and the body. Neurosci Biobehav Rev. 2010;34(2):224–236. doi: 10.1016/j.neubiorev.2009.04.004.

- 41. Holmes NP, Spence C. The body schema and the multisensory representation(s) of peripersonal space. Cogn Process. 2004;5(2):94–105. doi: 10.1007/s10339-004-0013-3.

- 42. Cardinali L, Brozzoli C, Farnè A. Peripersonal space and body schema: Two labels for the same concept? Brain Topogr. 2009;21(3-4):252–260. doi: 10.1007/s10548-009-0092-7.

- 43. Heed T, Röder B. In: The Neural Bases of Multisensory Processes. Murray MM, Wallace MT, editors. Boca Raton, FL: CRC Press; 2011. pp. 557–580.

- 44. Làdavas E. Functional and dynamic properties of visual peripersonal space. Trends Cogn Sci. 2002;6(1):17–22. doi: 10.1016/s1364-6613(00)01814-3.

- 45. Makin TR, Holmes NP, Zohary E. Is that near my hand? Multisensory representation of peripersonal space in human intraparietal sulcus. J Neurosci. 2007;27(4):731–740. doi: 10.1523/JNEUROSCI.3653-06.2007.

- 46. Brozzoli C, Gentile G, Petkova VI, Ehrsson HH. FMRI adaptation reveals a cortical mechanism for the coding of space near the hand. J Neurosci. 2011;31(24):9023–9031. doi: 10.1523/JNEUROSCI.1172-11.2011.

- 47. Mishkin M, Ungerleider LG. Contribution of striate inputs to the visuospatial functions of parieto-preoccipital cortex in monkeys. Behav Brain Res. 1982;6(1):57–77. doi: 10.1016/0166-4328(82)90081-x.

- 48. Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15(1):20–25. doi: 10.1016/0166-2236(92)90344-8.

- 49. Di Russo F, et al. Cortical plasticity following surgical extension of lower limbs. Neuroimage. 2006;30(1):172–183. doi: 10.1016/j.neuroimage.2005.09.051.

- 50. Blatow M, et al. Clinical functional MRI of sensorimotor cortex using passive motor and sensory stimulation at 3 Tesla. J Magn Reson Imaging. 2011;34(2):429–437. doi: 10.1002/jmri.22629.

- 51. Christensen MS, et al. Watching your foot move—An fMRI study of visuomotor interactions during foot movement. Cereb Cortex. 2007;17(8):1906–1917. doi: 10.1093/cercor/bhl101.

- 52. Lloyd DM, Shore DI, Spence C, Calvert GA. Multisensory representation of limb position in human premotor cortex. Nat Neurosci. 2003;6(1):17–18. doi: 10.1038/nn991.

- 53. Ehrsson HH, Spence C, Passingham RE. That’s my hand! Activity in premotor cortex reflects feeling of ownership of a limb. Science. 2004;305(5685):875–877. doi: 10.1126/science.1097011.

- 54. Huang RS, Sereno MI. Visual stimulus presentation using fiber optics in the MRI scanner. J Neurosci Methods. 2008;169(1):76–83. doi: 10.1016/j.jneumeth.2007.11.024.

- 55. Fernandino L, Iacoboni M. Are cortical motor maps based on body parts or coordinated actions? Implications for embodied semantics. Brain Lang. 2010;112(1):44–53. doi: 10.1016/j.bandl.2009.02.003.

- 56. Huang RS, Sereno MI. Dodecapus: An MR-compatible system for somatosensory stimulation. Neuroimage. 2007;34(3):1060–1073. doi: 10.1016/j.neuroimage.2006.10.024.

- 57. Geldard FA, Sherrick CE. The cutaneous “rabbit”: A perceptual illusion. Science. 1972;178(4057):178–179. doi: 10.1126/science.178.4057.178.
