Abstract

In the US, the appropriateness of including an upper level of intake among reference values for nutrient substances was recognized in 1994, when the Food and Nutrition Board of the Institute of Medicine (IOM) specified the inclusion of an “upper safe” level among its proposed reference points for intake of nutrients and food components. By 1998, a group convened by the IOM had established a risk assessment model for setting upper intake levels for nutrients, eventually termed the tolerable upper intake levels (UL). A risk assessment framework (i.e., a scientific undertaking intended to characterize the nature and likelihood of harm resulting from human exposure to agents in the environment), as developed in other fields of study, was a logical fit for application to nutrients. Importantly, whereas risk assessment requires that information be organized in specific ways, it does not require specific scientific evaluation methods. Rather, it makes transparent and documents the decision-making that occurs given the available data and the related uncertainties. During the 1990s and beyond, the various IOM committees charged with developing UL for a range of nutrients utilized the risk assessment framework, making modifications and adjustments as dictated by the data. This experience informed the general organizational process for establishing UL but also underscored the dearth of data. For many reasons, undertaking scientific research and obtaining data about the effects of excessive intake have been challenging. It is time to consider creative and focused strategies for modeling, simulating, and otherwise studying the effects of excessive intake of nutrient substances.

Introduction

Throughout most of the 20th century, the study of nutrition and its public health applications focused on the identification of human deficiencies and requirements. Over time, the realization grew that excessive levels of intake need attention as well as inadequate intakes. This shift was spurred in large part by the increased marketing and consumption of dietary supplements, fortified foods, and so-called functional foods beginning in the late 1980s. As a result, interest in the nutrition community moved toward determining the balance between too little and too much.

This concept of a safe upper level of intake was incorporated into the establishment of nutrient reference values by the Institute of Medicine (IOM) when, in 1994, the IOM’s Food and Nutrition Board proposed that nutrient reference values should include an “upper safe” level of intake of a nutrient or food component (1). The IOM approach for nutrient reference values designated the “upper safe” level of intake as the tolerable upper level, or UL (2). The term tolerable intake was chosen to avoid implying a possible beneficial effect; it denotes a level that with high probability can be tolerated biologically by individuals but that is not a recommended intake (3). A UL is defined as the highest average daily intake of a nutrient that is likely to pose no risk of adverse health effects to almost all individuals in the general population (2). Although not the subject of this paper, other international bodies have also developed approaches for specifying upper levels of intake, notably the UK’s Committee on Medical Aspects of Food Policy (3) and more recently the European Food Safety Authority. Also, 2 scientific bodies of the United Nations have collaborated to produce a model for establishing upper levels of intake for nutrients and related substances that was intended to assist with harmonizing decisions about upper levels of intake (4).

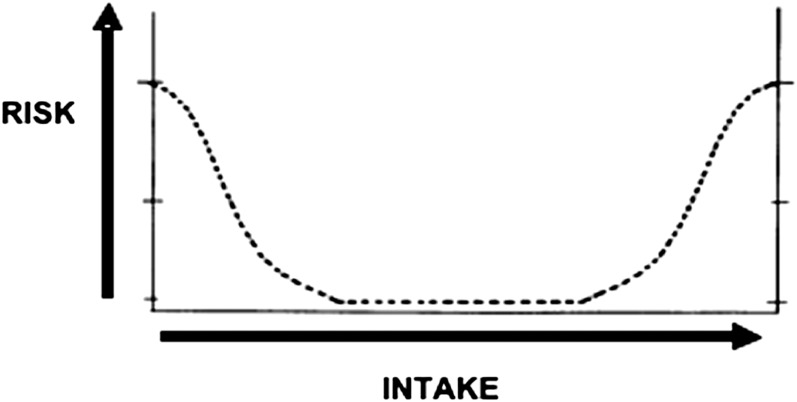

At the outset, it is important to underscore that the UL, which are reference values set within the DRI framework, are not used in the regulatory world to evaluate nutrient effects in the same way as reference values for drugs. Substances with drug-like benefits, even if of food origin, are evaluated for safety in a different way than are those with nutrient effects. Moreover, consideration of a UL is often carried out in the context of the level of intake that provides the nutritional benefit. This is because nutrients are associated with what is referred to as a dual risk, often portrayed as a curve (Fig. 1). As illustrated in Figure 1, there is risk related to getting too little and to getting too much. Specifically, the risk (y axis) is high at the left side of the figure, where intake (x axis) is sufficiently low that deficiencies are likely. As intake increases, the risk of inadequacy decreases, until intake reaches levels high enough to place persons at risk for adverse events from excessive intake. The process of establishing nutrient reference values must take both ends of this continuum into account; it is a balance between the 2 types of risk. A further challenge arises when the reference intake for one subgroup overlaps with the upper level for another group.

FIGURE 1.

Dual risk curve for nutrients; risk for the left-side curve relates to inadequate intake and risk for the right-side curve relates to excess intake.
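The shape of the dual risk curve can be sketched numerically. The snippet below is only an illustration of the concept in Figure 1: the two logistic curves, their midpoints, their steepness, and the intake scale are all hypothetical choices made for this sketch and are not drawn from any nutrient-specific data or from the IOM model.

```python
import math

# Hypothetical parameters on an arbitrary intake scale (illustration only).
INADEQUACY_MIDPOINT = 20.0   # intake at which risk of inadequacy is 50%
EXCESS_MIDPOINT = 80.0       # intake at which risk of excess is 50%
STEEPNESS = 0.3              # how sharply each risk changes with intake


def risk_of_inadequacy(intake: float) -> float:
    """Left-side curve: risk falls toward 0 as intake rises."""
    return 1.0 / (1.0 + math.exp(STEEPNESS * (intake - INADEQUACY_MIDPOINT)))


def risk_of_excess(intake: float) -> float:
    """Right-side curve: risk rises toward 1 as intake rises."""
    return 1.0 / (1.0 + math.exp(-STEEPNESS * (intake - EXCESS_MIDPOINT)))


def dual_risk(intake: float) -> float:
    """Dominant risk at a given intake: the larger of the two curves."""
    return max(risk_of_inadequacy(intake), risk_of_excess(intake))


if __name__ == "__main__":
    for intake in (0, 25, 50, 75, 100):
        print(f"intake={intake:>3}  risk={dual_risk(intake):.3f}")
```

Under these assumed parameters the risk is high at both extremes of intake and low in between, which is the balance that reference values at both ends of the continuum are meant to capture.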

Development of IOM Model for Establishing UL

The approach to establishing UL by the IOM is based on a risk assessment model. This approach is not a mathematical formula or algorithm that is applied generically to all nutrients, with data plugged in and UL produced. Rather, as articulated by the NRC in 1983 (5), the risk assessment model is a set of “scientific factors” that should be explicitly considered. It has evolved from work to describe the risk of adverse health effects associated with exposure of humans to toxic substances. Because nutrients are different from toxic substances in that they have a benefit as well as a risk, the model in practice has been modified to take this factor into account. Importantly, the risk assessment model anticipates that there will be uncertainty in the decision-making process and that scientific judgment will be needed; therefore, it emphasizes the need for documentation and transparency in the decision-making process.

The conceptual approach to UL development was initially described by an IOM subcommittee during the 1990s (3) and used as a basis for subsequent nutrient reviews in the DRI series. The approach was revisited at a workshop on the development of nutrient reference values convened in 2007 to consider lessons learned from the decade of setting DRI (6). The model is a systematic means of evaluating the probability of occurrence of adverse health effects from excessive intake based on the available data. To the extent that data are limited, scientific judgment comes into play coupled with related documentation. The goal is to establish a level of intake germane to overall public health protection. The focus is on daily intake over a lifetime, not toxic, one-time intakes or levels of intake that may be appropriate for controlled and monitored research studies.

The risk assessment model is classically outlined as a series of 4 decision-making steps. It is often more iterative than linear and can be preceded by a problem formulation step. The 4 steps are: 1) hazard identification. The assessor identifies the “hazard,” or adverse effect of interest, through a literature review; 2) hazard characterization. The assessor carries out 2 major tasks. The first is to describe the nature of the effect in the context of identifying the intake level that causes the adverse effect, along with any other related factors such as bioavailability and nutrient interactions. Whereas in an ideal world there would be an identifiable dose-response relationship, in practice, when data are insufficient to specify one, the goal is a no adverse effect level or a lowest adverse effect level. The second task is to assess uncertainty and set a UL; 3) assessment of exposure. After the available science has been used to specify the level of intake that is of concern, actual real-world exposures of the population of interest are examined to assess the risk to the population based on the conclusions of the hazard characterization step; and 4) risk characterization. The overall assessment process is described and documented, the likelihood of risk to the population of interest is discussed, caveats are highlighted, and any other scientific information relevant to the users of the assessment is included.

As noted, the risk assessment approach was specifically incorporated into the IOM model for UL (3). By its nature, the process is sensitive to uncertainty and makes use of uncertainty factors. Because the UL is intended to be an estimate of the level of intake that will protect essentially all healthy members of a population, risk assessment is structured to focus on accounting for the uncertainty that is inherent in the process. To help account for this uncertainty, an uncertainty factor may be used. In this way, UL values can, at the end of the process, be “corrected” for potential sources of uncertainty. In general, for all substances subject to risk assessments, uncertainty factors are more modest when the available data are of high quality.
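The arithmetic behind this “correction” is simple and can be sketched as follows. The sketch assumes the common convention that a starting point (a no observed adverse effect level or lowest observed adverse effect level) is divided by an uncertainty factor of 1 or more; the function name, the starting value of 1000 units/d, and the candidate factors are hypothetical and chosen only to show the direction of the effect.

```python
def corrected_upper_level(starting_point: float, uncertainty_factor: float) -> float:
    """Divide a NOAEL- or LOAEL-type starting point by an uncertainty factor.

    Both arguments are assumptions supplied by the assessor; this helper
    only performs the division and basic sanity checks.
    """
    if starting_point <= 0:
        raise ValueError("starting point must be a positive intake level")
    if uncertainty_factor < 1:
        raise ValueError("uncertainty factor is conventionally 1 or greater")
    return starting_point / uncertainty_factor


if __name__ == "__main__":
    hypothetical_noael = 1000.0  # arbitrary units per day, illustration only
    # Smaller factors correspond to higher-quality data, larger ones to weaker data.
    for factor in (1.0, 2.0, 10.0):
        ul = corrected_upper_level(hypothetical_noael, factor)
        print(f"uncertainty factor {factor:>4}: corrected value {ul:.0f} units/d")
```

A factor of 1 leaves the starting point unchanged, and larger factors move the resulting value further below the intake at which effects were (or were not) observed. Choosing the factor remains a matter of documented scientific judgment rather than calculation.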

Challenges in Establishing UL

Table 1 lists the substances for which the IOM has issued UL. The first UL issued by the IOM in 1997 were for 5 nutrients reviewed as a group as part of the new paradigm for establishing nutrient reference values known as DRI (7). The 5 nutrients were calcium, phosphorus, magnesium, vitamin D, and fluoride. The first lesson learned was, not surprisingly, that there are often limited data upon which to make decisions. Subsequent DRI reports were released between 1998 and 2004 for 5 other groups of nutrients and, when combined, these 6 reports cover ∼45 nutrients and food components. Limited data continued to be a challenge.

TABLE 1.

Nutrients for which the IOM has established UL of intake, 1997–2011

| Vitamins | Elements |
| --- | --- |
| Vitamin A | Arsenic |
| Vitamin C | Boron |
| Vitamin D | Calcium |
| Vitamin E | Chromium |
| Vitamin K | Copper |
| Thiamin | Fluoride |
| Riboflavin | Iodine |
| Niacin | Iron |
| Vitamin B-6 | Magnesium |
| Folate | Manganese |
| Vitamin B-12 | Molybdenum |
| Pantothenic acid | Nickel |
| Biotin | Phosphorus |
| Choline | Potassium |
| Carotenoids | Selenium |
|  | Silicon |
|  | Sulfate |
|  | Vanadium |
|  | Zinc |
|  | Sodium |
|  | Chloride |

IOM, Institute of Medicine; UL, tolerable upper level.

Studies specifically designed to elicit dose-response information were infrequently carried out at the time of the very first reports, and still are, and they are almost never designed relative to upper levels of intake. As a result, the initially reviewed nutrients and those that have followed make use of a no observed adverse effect level or lowest observed adverse effect level as a starting point. Further, in reviewing the text of these reports, it is clear that many of the data on adverse effects were generated as secondary outcomes in studies designed for other purposes or were gleaned from case reports. By necessity, such studies often must underpin UL development. Although some may conclude that the lack of adverse events reported in clinical trials focused on benefits is reassuring, it is not completely so. For ethical reasons, safety issues are almost always a secondary endpoint. Therefore, studies that are not designed with sufficient statistical power or duration to examine safety or adverse events, and that apply exclusion criteria, are likely to miss those persons who are at greatest risk and most likely to exhibit adverse effects. Further, monitoring and evaluation of safety endpoints are not the purpose of a study evaluating benefits, and they are often not optimally carried out in the study protocol.

Because there are ethical issues associated with conducting classic randomized controlled clinical trials to establish adverse effects, the UL often have to rely on observational studies. However, available observational studies generally are not designed to look at safety as a general matter, and signals may be missed. Additionally, the study population is often self-selected and therefore not necessarily representative of the general population. Further, safety issues may get lost in the variability or “noise” introduced by the nature of observational studies, which is reduced by the controlled nature inherent in a clinical trial. Nonetheless, such data are at times the only data available, and because setting a UL is an important tool for risk managers, scientific judgment must come into play. The 2011 IOM report on vitamin D UL (8) made use of observational data for the determination of the UL for vitamin D but incorporated uncertainty factors where it was possible given the nature of the data.

Animal models have been used to inform UL development and should be considered whenever possible, because they can provide some relevant information. Unfortunately, the type of systematic evaluations based on animal models associated with substances such as food additives, i.e., a priori hypotheses, multi-generational monitoring, pathological evaluation of all tissues, etc., are not usually conducted for nutrients. In the end, to the extent that animal models have been studied, they help to clarify biological plausibility. For example, the UL for vitamin E was based on an endpoint of hemorrhagic effects derived from animal models (9). Nonetheless, there are of course questions about the ability to extrapolate from animals to humans in general and how to translate such data into specific intakes.

Extrapolating from one age group to another is a key challenge to UL development. Information on adverse effects in older persons may not sufficiently relate to young adults. Newborns and infants are a highly vulnerable component of our population, yet they are groups for whom data are often very sparse; in addition, the ability to extrapolate from older children and adults to an infant is fraught with questions and concerns. In some cases, despite the importance of setting UL, there are simply no appropriate data. For example, at the time the 1997 nutrient reference value report for calcium and other related nutrients was issued (7), no UL were set for infants 0–12 mo for calcium, phosphorus, and magnesium (except for non-food sources). When calcium was revisited during the IOM review issued in 2011 (8), a small dataset analyzed in the late 1990s relating to the effects of excess calcium on infants was considered an important step forward and allowed the 2011 IOM report on calcium reference values to specify a UL for infants.

To date, the use of uncertainty factors has not been well resolved or clarified. Uncertainty factors are widely used for non-nutrient substances, for which there are no issues related to requirements and for which the use of 100-fold or even 1000-fold uncertainty factors, derived by extrapolation from animal studies and at times based on the severity of the effect, is not uncommon. For nutrients, a UL that is overcorrected for uncertainty in an effort to be cautious may run the risk of being lower than the Estimated Average Requirement. The UL for calcium developed in the 2011 IOM report (8) exemplifies this challenge. The UL is set at 2000–3000 mg/d for adults depending upon age and gender and based on the formation of kidney stones, whereas the requirement for calcium for these groups ranges between 800 and 1100 mg/d. The UL was not corrected for uncertainty as a safeguard, because even a modest uncertainty factor would have placed the UL below Estimated Average Requirement levels.
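The calcium example can be made concrete with a short calculation. The sketch below uses only the ranges quoted above (2000 mg/d as the low end of the adult UL and 1100 mg/d as the high end of the requirement range); the candidate uncertainty factors are hypothetical, and pairing the lowest UL with the highest requirement is a deliberate worst-case simplification, because the two values may not apply to the same age and gender group.

```python
# Values quoted in the text (mg/d); the pairing is a worst-case simplification.
CALCIUM_UL_LOW_END = 2000.0
CALCIUM_REQUIREMENT_HIGH_END = 1100.0


def corrected_value(starting_value: float, uncertainty_factor: float) -> float:
    """Apply a hypothetical uncertainty factor by simple division."""
    return starting_value / uncertainty_factor


def falls_below_requirement(value: float, requirement: float) -> bool:
    """True when a corrected upper level would sit under the requirement."""
    return value < requirement


if __name__ == "__main__":
    # Hypothetical candidate factors; 1.0 means no correction was applied.
    for factor in (1.0, 2.0, 3.0):
        value = corrected_value(CALCIUM_UL_LOW_END, factor)
        conflict = falls_below_requirement(value, CALCIUM_REQUIREMENT_HIGH_END)
        print(f"factor {factor}: {value:.0f} mg/d, below requirement: {conflict}")
```

With no correction the value stays above the requirement range, whereas under this simplification a factor as small as 2 would already push it under the top of that range, which is the kind of conflict described above.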

Finally, similar to decisions about selecting the basis (or “endpoint”) for a human requirement, the decision about which adverse effect to select as the basis for the UL when more than one type of adverse effect has been identified can be challenging. The goal is public health protection, so there is often an interest in focusing on endpoints that occur at the lowest level of intake rather than on endpoints that, e.g., may be the most severe. Further, biomarkers of adverse effect are often key to UL development. The UL for iodine offers an illustration (10). Whereas overall, people tend to be tolerant of excess iodine intake from food, high intakes from food, water, and supplements have been associated with thyroiditis, goiter, hypothyroidism, hyperthyroidism, sensitivity reactions, thyroid papillary cancer, and acute responses in some individuals. An elevated level of thyroid stimulating hormone was selected as the basis for the UL for iodine, because this measure is the first effect observed in iodine excess. It may not be clinically important but was viewed as an indicator of increased risk of developing clinical hypothyroidism.

Refinement of the Organizational and Research Frameworks

The evolution of UL has moved to the stage of refining and further specifying the organizational framework for UL development using the risk assessment approach. As more groups around the world have developed UL of some type, it has become clear that experts and qualified scientists working with the same data sets could apply scientific judgment and come to different conclusions about UL. This occurred not necessarily because they were interpreting the science differently but because they were asking different questions. This situation is well documented in the report of the joint FAO and WHO workshop on nutrient upper levels held in 2005 (4), which includes a comparison of the upper level reviews for vitamin A conducted by the European Food Safety Authority, the Food Standards Agency of the UK, and the IOM.

Thus, an organizational framework that enhances transparency has been a recent and useful advance in the UL evolution. By clarifying the nature of the types of decisions to be made and underscoring the need for documentation and transparency of decision-making, the IOM incorporation of the risk assessment process has fostered an organizational approach that is transparent. The recent emphasis on the risk characterization step, an elaboration intended to integrate and summarize the preceding steps, is seen as an important component for stakeholders that helps to clarify and explain the process and also targets special considerations and challenges encountered in developing the UL. This, in turn, will allow the users of the UL review to make judgments about applying the outcomes in ways appropriate for the situation at hand.

Also important, but lagging behind consideration of an organizational framework for UL, is development of a research framework for UL. The salient question is how the type of research that would be most useful can be identified. The development of detailed decision steps such as the key event dose-response framework (KEDRF) is relevant in this regard. The ILSI Research Foundation organized a cross-disciplinary working group to examine current approaches for assessing dose-response and identifying safe levels of intake or exposure for 4 categories of bioactive agents: food allergens, nutrients, pathogenic microorganisms, and environmental chemicals (11). As reported, the effort generated a common analytical framework, i.e., KEDRF, for systematically examining key events that occur between the initial dose of a bioactive agent and the effect of concern. Individual key events are considered with regard to factors that influence the dose-response relationship and factors that underlie variability in that relationship. The intent is to illuminate the connection between the processes occurring at the level of fundamental biology and the outcomes observed at the individual and population levels. If used with sufficient and relevant data, the framework is useful for the decision-making inherent in establishing a UL. Its use could also reduce the need to rely on uncertainty factors, which at best are blunt instruments and at worst do not fit well with nutrient substances that also must be consumed at some level to cause the desired benefit. Although use of KEDRF in the face of limited data does not compensate for lack of data, it does highlight data gaps and point to research needs. The framework has been examined using vitamin A as a case study (12). 
Other nutrients and food substances with less data could be examined using the framework to elucidate components that are missing relative to a sufficient understanding about the biology of the effect and the outcome that manifests for individuals and populations.

In short, the current risk assessment framework for UL provides a step-wise decision-making process that addresses existing uncertainties and the potential for different outcomes due to scientific judgment by fostering transparency and documentation. Frameworks such as KEDRF can contribute to refining the process. When data are adequate, these frameworks help to focus the questions and relate basic biology to health outcomes. Importantly, these science-oriented models are extremely useful in specifying data gaps. However, the existence of such frameworks does not fix or compensate for a lack of data. An elaborate set of decision-making steps does not result in an appropriate UL if there are not sufficient data to develop a UL.

Obtaining Data for UL Development: Opportunity to Think Outside the Box

Carrying out research relative to adverse effects for humans is certainly difficult and must consider ethical concerns. The research questions are also complicated by the fact that essential nutrients are subject to homeostatic mechanisms that are still not well described and may be extremely complicated and presumably have evolved to maintain a steady state in the face of many environmental challenges.

Ignoring these challenges and relying on history of use data or the integration of a series of scientific judgments is at best only a holding pattern. It should not be the desired basis for UL development. There appears to be considerable opportunity to develop creative and innovative approaches. Some of the solutions may be drawn from other fields of study, as was done for the basic risk assessment framework for organizing an approach for UL development.

Research relative to UL would benefit within the more classical nutrition research design if the following were considered: 1) more can be done to ensure that clinical trials and other types of studies related to nutrient intake are designed to incorporate more and focused efforts to monitor for adverse events. While not ideal, this can add to the knowledge base; 2) investigations targeted to methodologies for extrapolation from one age group to another are very limited and should be expanded; 3) animal models are useful for biological plausibility and for elucidating paths to better-designed and efficient studies in humans and should be further developed; 4) in vitro studies have not evolved as quickly as one would expect based on progress in the toxicology field and these can be the focus of innovative thinking relative to nutrients; and 5) efforts to explore the key components of the scientific judgment process as it relates to UL would be helpful in ensuring systematic development of UL and in clarifying for users of UL the nature of the uncertainties surrounding the UL values.

The field of nutrition has been slow to explore and adapt appropriate innovative methods of research that are being used in related fields such as toxicology, food additive evaluation, drug research, and even microbiology. The methodology possibilities listed in Table 2 were gleaned from informal discussions during a brainstorming session that was intended as background for a 2007 workshop on DRI (6) and that was based largely on a publication issued by the National Academy of Sciences entitled “Toxicity Testing in the 21st Century: A Vision and a Strategy” (13). These innovative approaches are worthy of consideration relative to UL.

TABLE 2.

Examples of innovative methodologies from non-nutrition fields of study that may be useful to establishing UL of intake

| Methodology | Description and relevance to UL |
| --- | --- |
| Mapping pathways | A tool that aids in the identification of biologic signaling pathways. The study of “systems biology” is a powerful approach to describing and understanding the fundamental mechanisms by which biologic systems operate. |
| Microarrays | A type of gene expression profiling useful when genetic response is an issue; would increase our understanding of both disease markers and molecular pathways. |
| Computational biology | A tool for modeling data and outcomes that is particularly useful for data mining and could lead to predictive computational models. |
| Physiologically based pharmacokinetic models | If developed, they may allow for better extrapolation between species as well as clarification of routes of exposure, both essential considerations for UL development. |
| High-throughput methods | Allow for economical screening of large numbers of substances in a short period but have not been explored for nutrients. |

Table created by authors based on discussions in (13). UL, tolerable upper level.

In conclusion, recent progress related to the development of UL for nutrients and food substances is impressive and has focused on providing an organized, accountable, and transparent approach and on specifying steps to better link biology with the outcomes of interest. However, data relevant to UL remain limited and present challenges for the development of UL and for reducing reliance on uncertainty factors. Moreover, it is not a matter of “just doing more” research or concluding that it cannot be done. There is now a need to consider the possibilities of newer types of innovative research that focus on the relevant questions for UL development and offer creative solutions to data gaps.

Acknowledgments

C.L.T. developed this article based on her experiences at the U.S. FDA and the WHO in Geneva. L.D.M. made contributions based on her experience as Director of the Food and Nutrition Board at the IOM as well as her earlier work at the Department of Health and Human Services. Both authors read and approved the final manuscript.

Footnotes

Abbreviations used: IOM, Institute of Medicine; KEDRF, key event dose-response framework; UL, tolerable upper level.

Since the 1940s, the NRC and then the IOM, through the Food and Nutrition Board, have issued nutrient reference values, known first as RDA and more recently as DRI.

Literature Cited

- 1. Institute of Medicine, Food and Nutrition Board. How should the recommended dietary allowances be revised? Washington, DC: National Academy Press; 1994.

- 2. Institute of Medicine, Food and Nutrition Board. Dietary reference intakes: a risk assessment model for establishing upper intake levels for nutrients. Washington, DC: National Academy Press; 1998.

- 3. Committee on Medical Aspects of Food Policy. Dietary reference values for food energy and nutrients in the United Kingdom. Report on health and social subjects, No. 41. London: Her Majesty’s Stationery Office; 1991.

- 4. WHO and FAO of the United Nations. A model for establishing upper levels of intake for nutrients and related substances; report of a joint FAO/WHO technical workshop on nutrient risk assessment. Geneva: WHO Press; 2006.

- 5. NRC, Commission on Life Sciences. Risk assessment in the federal government: managing the process. Washington, DC: National Academy Press; 1983.

- 6. Institute of Medicine, Food and Nutrition Board. The development of DRIs 1994–2004: lessons learned and new challenges: workshop summary. Washington, DC: National Academies Press; 2008.

- 7. Institute of Medicine, Food and Nutrition Board. Dietary reference intakes for calcium, phosphorus, magnesium, vitamin D and fluoride. Washington, DC: National Academy Press; 1997.

- 8. Institute of Medicine. Dietary reference intakes for calcium and vitamin D. Washington, DC: National Academies Press; 2011.

- 9. Institute of Medicine. Dietary reference intakes for vitamin C, vitamin E, selenium, and carotenoids. Washington, DC: National Academy Press; 2000.

- 10. Institute of Medicine. Dietary reference intakes for vitamin A, vitamin K, arsenic, boron, chromium, copper, iodine, iron, manganese, molybdenum, nickel, silicon, vanadium, and zinc. Washington, DC: National Academy Press; 2001.

- 11. Julien E, Boobis AR, Olin SS, ILSI Research Foundation Threshold Working Group. The key events dose-response framework: a cross-disciplinary mode-of-action based approach to examining dose-response and thresholds. Crit Rev Food Sci Nutr. 2009;49:682–9.

- 12. Ross AC, Russell RM, Miller SA, Munro IC, Rodricks JV, Yetley EA, Julien E. Application of a key events dose-response analysis to nutrients: a case study with vitamin A (retinol). Crit Rev Food Sci Nutr. 2009;49:708–17.

- 13. NRC, Board on Environmental Studies and Toxicology. Toxicity testing in the 21st century: a vision and a strategy. Washington, DC: National Academies Press; 2007.