Abstract

A critical component of how we understand a mental process is given by measuring the effect of varying the workload. The capacity coefficient (Townsend & Nozawa, 1995; Townsend & Wenger, 2004) is a measure on response times for quantifying changes in performance due to workload. Despite its precise mathematical foundation, until now rigorous statistical tests have been lacking. In this paper, we demonstrate statistical properties of the components of the capacity measure and propose a significance test for comparing the capacity coefficient to a baseline measure or two capacity coefficients to each other.

Keywords: Mental architecture, Human information processing, Capacity coefficient, Nonparametric, Race model

Measures of changes in processing, for instance deterioration, associated with increases in workload have been fundamental to many advances in cognitive psychology. Because they are particularly well suited to distinguishing the dynamic properties of systems, response time based measures such as the capacity coefficient (Townsend & Nozawa, 1995; Townsend & Wenger, 2004) and the related Race Model Inequality (Miller, 1982) are increasingly employed in both basic and applied cognitive psychology. Applications of these measures span areas as diverse as memory search (Rickard & Bajic, 2004), visual search (Krummenacher, Grubert, & Müller, 2010; Weidner & Müller, 2010), visual perception (Eidels, Townsend, & Algom, 2010; Scharf, Palmer, & Moore, 2011), auditory perception (Fiedler, Schröter, & Ulrich, 2011), flavor perception (Veldhuizen, Shepard, Wang, & Marks, 2010), multi-sensory integration (Hugenschmidt, Hayasaka, Peiffer, & Laurienti, 2010; Rach, Diederich, & Colonius, 2011) and threat detection (Richards, Hadwin, Benson, Wenger, & Donnelly, 2011).

While there have been a number of statistical tests proposed for the Race Model Inequality (Gondan, Riehl, & Blurton, 2012; Maris & Maris, 2003; Ulrich, Miller, & Schröter, 2007; Van Zandt, 2002), there has been a lack of analytical work on tests for the capacity coefficient. At this point, the only quantitative test available for the capacity coefficient is that proposed by Eidels, Donkin, Brown, and Heathcote (2010). This test is limited to testing an increase in workload from one to two sources of information. Furthermore, it relies on the assumption that the underlying channels can be modeled with the Linear Ballistic Accumulator (Brown & Heathcote, 2008). In this paper, we develop a more comprehensive statistical test for the capacity coefficient that is both nonparametric and can be applied with any number of sources of information.

The capacity coefficient, C(t), was originally developed to provide a precise measure of workload effects on processing efficiency under first-terminating processing (i.e., minimum-time processing, referred to as “OR” or disjunctive processing; Townsend & Nozawa, 1995). It complements the survivor interaction contrast (SIC), a tool that assesses mental architecture and decisional stopping rule (Townsend & Nozawa, 1995). The capacity coefficient has been extended to measure efficiency in exhaustive (AND) processing situations (Townsend & Wenger, 2004). In fact, it is possible to extend the capacity coefficient to any Boolean decision rule (e.g., Blaha, 2010; Townsend & Eidels, 2011).

The logic of the capacity coefficient is relatively straightforward. We demonstrate this logic using a comparison between a single source of information and two sources for clarity, but the reasoning readily extends to more sources and the theory section applies to the general case. Consider the experiment depicted in Fig. 1, in which a participant responds ‘yes’ if a dot appears either slightly above the mid-line of the screen, slightly below, or both and responds ‘no’ otherwise (a simple detection task). Optimally, the participant would respond as soon as she detects any dot, whether or not both were present. We refer to this as ‘first-terminating’ processing, or simply ‘OR’ processing. Alternatively, participants may wait to respond until they have determined whether or not a dot is present both above and below the mid-line. This is ‘exhaustive’ or ‘AND’ processing. In this design, AND processing is sub-optimal, but in other designs, such as when the participant must only respond ‘yes’ when both dots are present, AND processing is necessary.

Fig. 1.

An illustration of the possible stimuli in Eidels et al. (submitted for publication). The actual stimuli had much lower contrast. We refer to the condition with both the upper and lower dots present as PP (for present–present). PA indicates only the upper dot is present (for present–absent). AP indicates only the lower dot is present and AA indicates neither dot is present. In the OR task, participants responded yes to PP, AP or PA. In the AND task, participants responded yes to PP only and no otherwise.

If information about the presence of either of the dots is processed independently and in parallel, then the probability that the OR process is not complete at time t is the product of the probabilities that each dot has not yet been detected.¹ Let F be the cumulative distribution function of the completion time, and the subscript X|Y indicate that the function corresponds to the completion time of process X under stimulus condition Y. Using this notation, the prediction of the independent, parallel, first-terminating process is given by,

$$1 - F_{AB|AB}(t) = \left[1 - F_{A|AB}(t)\right]\left[1 - F_{B|AB}(t)\right] \tag{1}$$

We make the further assumption that there is no change in the speed of detecting a particular dot due to changes in the number of other sources of information (unlimited capacity). Thus, we can drop the reference to the stimulus condition,

$$1 - F_{AB}(t) = \left[1 - F_{A}(t)\right]\left[1 - F_{B}(t)\right] \tag{2}$$

We refer to a model with these collective assumptions – unlimited capacity, independent and parallel – as a UCIP model. This prediction of equality forms the basis of the capacity coefficient and plays the role of the null hypothesis in the statistical tests developed in this paper.

To derive the capacity coefficient prediction for the UCIP model, we need to put Eq. (2) in terms of cumulative hazard functions (cf. Chechile, 2003; Townsend & Ashby, 1983, pp. 248–254). We do this using the relationship H(t) = −ln(1 − F(t)), where H(t) = ∫₀ᵗ h(s) ds is the cumulative hazard function and h(t) = f(t)/(1 − F(t)) is the hazard function.

$$H_{AB}(t) = H_{A}(t) + H_{B}(t) \tag{3}$$

The capacity coefficient for OR processing is defined by the ratio of the left- and right-hand sides of Eq. (3),

$$C_{OR}(t) = \frac{H_{AB}(t)}{H_{A}(t) + H_{B}(t)} \tag{4}$$

This definition gives an easy way to compare against the baseline UCIP model performance. From Eqs. (3) and (4), we see that UCIP performance implies COR(t) = 1.
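As a numerical check of Eqs. (2) through (4), the following Python sketch assumes two exponential channels with a hypothetical rate `lam` (an illustrative value, not one taken from the experiments), builds the UCIP double-target survivor function as the product of the single-channel survivor functions, and evaluates C_OR(t) at a few time points. The helper names are illustrative only.

```python
import math

def exp_survivor(rate: float, t: float) -> float:
    """Survivor function 1 - F(t) of an exponential completion time."""
    return math.exp(-rate * t)

def cum_hazard(survivor: float) -> float:
    """Cumulative hazard H(t) = -ln(1 - F(t)), computed from the survivor value."""
    return -math.log(survivor)

def c_or(h_ab: float, h_a: float, h_b: float) -> float:
    """OR capacity coefficient, Eq. (4)."""
    return h_ab / (h_a + h_b)

lam = 0.004  # hypothetical channel rate (per ms), chosen only for illustration
for t in (100.0, 300.0, 600.0):
    s_a = exp_survivor(lam, t)
    s_b = exp_survivor(lam, t)
    s_ab = s_a * s_b  # Eq. (2): UCIP survivor function for the double-target condition
    print(t, round(c_or(cum_hazard(s_ab), cum_hazard(s_a), cum_hazard(s_b)), 6))  # 1.0
```

Because the logarithm turns the product in Eq. (2) into the sum in Eq. (3), the ratio is 1 at every t; a double-target survivor function that decays faster than the product would give C_OR(t) > 1.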

The capacity coefficient for AND processing is defined in an analogous manner, with the cumulative reverse hazard function (cf. Chechile, 2011; Townsend & Eidels, 2011; Townsend & Wenger, 2004) in place of the cumulative hazard function. The cumulative reverse hazard function, denoted by K(t), is defined as,

$$K(t) = -\int_{t}^{\infty} \frac{f(s)}{F(s)}\,ds = \ln F(t) \tag{5}$$

If the participant has detected both dots when both are present in an AND task, then he must have already detected each dot individually. If the dots are processed independently and in parallel, this means,

$$F_{AB|AB}(t) = F_{A|AB}(t)\,F_{B|AB}(t) \tag{6}$$

With the additional assumption of unlimited capacity, we have,

$$F_{AB}(t) = F_{A}(t)\,F_{B}(t) \tag{7}$$

As above, we take the natural logarithm of both sides to obtain the prediction in terms of cumulative reverse hazard functions.

$$K_{AB}(t) = K_{A}(t) + K_{B}(t) \tag{8}$$

With the cumulative reverse hazard function, relatively larger magnitude implies relatively worse performance. Thus, to maintain the interpretation of C(t) > 1 implying performance is better than UCIP, the capacity coefficient for AND processing is defined by,

$$C_{AND}(t) = \frac{K_{A}(t) + K_{B}(t)}{K_{AB}(t)} \tag{9}$$
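The AND case can be checked the same way. This Python sketch again assumes exponential channels with a hypothetical rate and time point; it evaluates C_AND(t) for the UCIP prediction of Eq. (7) and for an illustrative double-target distribution that finishes as fast as a single channel alone.

```python
import math

def exp_cdf(rate: float, t: float) -> float:
    """Cumulative distribution F(t) of an exponential completion time."""
    return 1.0 - math.exp(-rate * t)

def cum_reverse_hazard(cdf: float) -> float:
    """Cumulative reverse hazard K(t) = ln F(t) from Eq. (5); always <= 0."""
    return math.log(cdf)

def c_and(k_a: float, k_b: float, k_ab: float) -> float:
    """AND capacity coefficient, Eq. (9)."""
    return (k_a + k_b) / k_ab

lam, t = 0.004, 400.0  # hypothetical rate (per ms) and time point
f_a = f_b = exp_cdf(lam, t)
k_a, k_b = cum_reverse_hazard(f_a), cum_reverse_hazard(f_b)

# Eq. (7): under UCIP both channels must finish, so F_AB = F_A * F_B and C_AND = 1.
print(c_and(k_a, k_b, cum_reverse_hazard(f_a * f_b)))
# A hypothetical double-target condition that finishes as fast as one channel
# alone beats the UCIP baseline, so C_AND = 2.
print(c_and(k_a, k_b, cum_reverse_hazard(f_a)))
```

The second case shows why the AND ratio is inverted relative to Eq. (4): a faster double-target condition has a K_AB(t) of smaller magnitude, which pushes C_AND(t) above 1.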

With the definitions of the OR and AND capacity coefficients in hand, we now turn to issues of statistical testing. We begin by adapting the statistical properties of estimates for the cumulative hazard and cumulative reverse hazard functions to estimates of UCIP performance. Then, based on those estimates, we derive a null-hypothesis-significance test for the capacity coefficients. We do not establish the statistical properties of the capacity coefficient itself, but rather those of its components. The statistical test is based on the sampling distribution of the difference between the predicted UCIP performance and the participant’s performance when all targets are present. The “difference” form leads to more analytically tractable results than a test based on the ratio form. Nonetheless, the logic of the test is the same: if the values are statistically significant (nonzero for the difference; not one for the ratio), then the UCIP model may be rejected.

1. Theory

The capacity coefficients are based on cumulative hazard functions and reverse cumulative hazard functions. Although it would be theoretically possible to use the machinery developed for the empirical cumulative distribution function to study the empirical cumulative hazard functions, using the identities mentioned above, H(t) = −log(1 − F(t)), K(t) = log(F(t)) (cf. Townsend & Wenger, 2004), we instead use a direct estimate of the cumulative hazard function, the Nelson–Aalen (NA) estimator (e.g., Aalen, Borgan, & Gjessing, 2008). This approach greatly simplifies the mathematics because many of the properties of the estimates follow from the basic theory of martingales. There is no particular advantage of one approach over the other in terms of their statistical qualities (Aalen et al., 2008).

1.1. The cumulative hazard function

Let Y(t) be the number of responses that have not occurred as of immediately before t, let Tj be the jth element of the ordered set of response times RT, and let n be the number of response times. Then the NA estimator of the cumulative hazard function is given by,

$$\hat{H}(t) = \sum_{j:\, T_j \le t} \frac{1}{Y(T_j)} \tag{10}$$

Intuitively, this estimator results from estimating f(t) by 1/n whenever there is a response and zero otherwise, and estimating 1 − F(t) by Y(t)/n, the proportion of responses that have not yet occurred. This gives an estimate of h(t) = f(t)/(1 − F(t)) ≈ (1/n)/(Y(t)/n) = 1/Y(t) whenever there is a response and zero otherwise. Integrating across s ∈ [0, t] leads to the sum in Eq. (10) as an estimate of H(t).
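A minimal Python sketch of Eq. (10) follows. It assumes all response times are distinct (ties are treated in Section 1.3.1) and uses the sorted position of each response to obtain Y(T_j) without recounting. The function name is illustrative.

```python
import random

def nelson_aalen(rts, t):
    """Nelson-Aalen estimate of H(t), Eq. (10), assuming no tied response times.

    For the j-th smallest response time (0-based index j), Y(T_j), the number
    of responses not yet made just before T_j, equals n - j.
    """
    times = sorted(rts)
    n = len(times)
    total = 0.0
    for j, tj in enumerate(times):
        if tj > t:
            break
        total += 1.0 / (n - j)  # jump of 1 / Y(T_j) at each observed response
    return total

# Usage: with many simulated exponential response times (rate 0.004 per ms,
# a hypothetical value), the estimate at t = 250 should be near 0.004 * 250 = 1.
rng = random.Random(1)
sample = [rng.expovariate(0.004) for _ in range(2000)]
print(nelson_aalen(sample, 250.0))
```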

Aalen et al. (2008) demonstrate that this is an unbiased estimator of the true cumulative hazard function and derive the variance of the estimator based on a multiplicative intensity model. We outline the argument here, then extend the results to an estimator for redundant target UCIP model performance using data from single target trials.

We begin by representing the response times by a counting process.² At time t, we define the counting process N(t) as the number of responses that have occurred by time t. The intensity process of N(t), denoted λ(t), is the probability that a response occurs in an infinitesimal interval, conditioned on the responses before t, divided by the length of that interval. For notational convenience, we also introduce the function J(t),

$$J(t) = \begin{cases} 1, & Y(t) > 0 \\ 0, & Y(t) = 0 \end{cases} \tag{11}$$

If we introduce the martingale, M(t) = N(t) − ∫₀ᵗ λ(s) ds, then we can write the increments of the counting process as,

$$dN(t) = \lambda(t)\,dt + dM(t) \tag{12}$$

Under the multiplicative intensity model, the intensity process of N(t) can be rewritten as λ(t) = h(t)Y(t), where Y(t) is a process representing the total number of responses that have not occurred by time t and h(t) is the hazard function. This model arises from treating the response to each trial as an individual process. Each response has its own hazard rate hi(t) and an indicator process Yi(t), which is 1 if the response has not occurred by time t and 0 otherwise. If the hazard rate is the same for each response that will be included in the estimate, then the counting process N(t) follows the multiplicative intensity model with h(t) = hi(t) and Y(t) = Σi Yi(t).

Rewriting Eq. (12) using the multiplicative intensity model gives,

$$dN(t) = h(t)\,Y(t)\,dt + dM(t)$$

If we multiply by J(t)/Y(t), and let J(t)/Y(t) = 0 when Y(t) = 0, then,

$$\frac{J(t)}{Y(t)}\,dN(t) = J(t)\,h(t)\,dt + \frac{J(t)}{Y(t)}\,dM(t)$$

By integrating, we have,

$$\int_0^t \frac{J(s)}{Y(s)}\,dN(s) = \int_0^t J(s)\,h(s)\,ds + \int_0^t \frac{J(s)}{Y(s)}\,dM(s)$$

The integral on the left is the NA estimator given in Eq. (10), using integral notation. The first term on the right is our function of interest, the cumulative hazard function (up until tmax, when all responses have occurred), which we denote H*(t) = ∫₀ᵗ J(s)h(s) ds. The last term is an integral with respect to a zero mean martingale, which is in turn a zero mean martingale. Hence,

$$E\left[\hat{H}(t) - H^*(t)\right] = 0$$

Thus, the NA estimator is an unbiased estimate of the true cumulative hazard function, until tmax.

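For statistical tests involving Ĥ(t), we will also need to estimate its variance. To do so, we use the fact that the variance of a zero mean martingale is equal to the expected value of its optional variation process.³ Because Ĥ(t) − H*(t) is an integral with respect to a zero mean martingale, its optional variation process is given by,

$$\left[\hat{H} - H^*\right](t) = \int_0^t \frac{J(s)}{Y(s)^2}\,dN(s)$$

Thus,

$$\operatorname{Var}\left(\hat{H}(t) - H^*(t)\right) = E\left[\int_0^t \frac{J(s)}{Y(s)^2}\,dN(s)\right]$$

Therefore, an estimator of the variance of Ĥ(t) − H*(t) is given by,

$$\hat{\sigma}^2(t) = \sum_{j:\, T_j \le t} \frac{1}{Y(T_j)^2}$$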
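The variance estimator accumulates the squared jump sizes of Ĥ(t). The Python sketch below returns both Ĥ(t) and σ̂²(t) under the same no-ties assumption as before. The ±1.96σ̂ band in the usage line is only an illustrative pointwise normal approximation, justified asymptotically by the Gaussian limit mentioned next; it is not a procedure stated in the text.

```python
import math

def nelson_aalen_with_variance(rts, t):
    """Return (H-hat(t), sigma-hat^2(t)) for distinct response times.

    The variance estimate sums 1 / Y(T_j)^2 over the same jumps that
    contribute 1 / Y(T_j) to the Nelson-Aalen estimate.
    """
    times = sorted(rts)
    n = len(times)
    h_hat = var_hat = 0.0
    for j, tj in enumerate(times):
        if tj > t:
            break
        at_risk = n - j  # Y(T_j)
        h_hat += 1.0 / at_risk
        var_hat += 1.0 / at_risk ** 2
    return h_hat, var_hat

h, v = nelson_aalen_with_variance([1.0, 2.0, 3.0], 3.0)
# Illustrative pointwise normal-approximation band around H-hat(3).
print(h, v, (h - 1.96 * math.sqrt(v), h + 1.96 * math.sqrt(v)))
```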

The asymptotic behavior of the NA estimator is also well known. Ĥ(t) is a uniformly consistent estimator of H(t) and converges in distribution to a zero mean Gaussian martingale (Aalen et al., 2008; Andersen, Borgan, & Keiding, 1993).

1.2. The cumulative reverse hazard function

To estimate the capacity coefficient for AND processing, we must also adapt the NA estimator to the cumulative reverse hazard function. Based on the NA estimator of the cumulative hazard function, we define the following estimator,

$$\hat{K}(t) = -\sum_{j:\, T_j > t} \frac{1}{G(T_j)} \tag{13}$$

Here G(s) is the number of responses that have occurred up to and including s, so that G(s)/n estimates the cumulative distribution function. Intuitively, this corresponds to estimating K(t) by setting f(t) = 1/n whenever there is an observed response and using G(t)/n to estimate F(t), dovetailing nicely with the NA estimator of the cumulative hazard function. Each of the properties mentioned above for the NA estimator of the cumulative hazard (unbiasedness, consistency and a Gaussian limit distribution) also holds for the estimator of the cumulative reverse hazard.
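The reverse estimator mirrors the forward one: it sums over responses after t and divides by the number of responses made so far. This Python sketch assumes distinct response times; for the j-th smallest time (0-based), G(T_j) is simply j + 1. The exponential rate in the usage example is hypothetical.

```python
import math
import random

def reverse_nelson_aalen(rts, t):
    """Estimate K(t) by Eq. (13), assuming no tied response times.

    For the j-th smallest response time (0-based index j), G(T_j), the number
    of responses made up to and including T_j, equals j + 1.
    """
    total = 0.0
    for j, tj in enumerate(sorted(rts)):
        if tj > t:
            total -= 1.0 / (j + 1)  # only responses after t contribute
    return total

# Usage: compare with the true K(t) = ln(1 - exp(-rate * t)) for rate 0.004 per ms.
rng = random.Random(2)
sample = [rng.expovariate(0.004) for _ in range(2000)]
print(reverse_nelson_aalen(sample, 250.0), math.log(1.0 - math.exp(-1.0)))
```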

Similar to J(t) for the cumulative hazard estimate, we need to track whether or not the estimate of the denominator of Eq. (13) is zero,

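$$\tilde{J}(t) = \begin{cases} 1, & G(t) > 0 \\ 0, & G(t) = 0 \end{cases} \tag{14}$$

$$K^*(t) = -\int_{t}^{\infty} \tilde{J}(s)\,\frac{f(s)}{F(s)}\,ds \tag{15}$$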

Theorem 1. K̂(t) is an unbiased estimator of K*(t).

Theorem 2. An unbiased estimate of the variance of K̂(t) is given by,

$$\widehat{\operatorname{Var}}\left(\hat{K}(t)\right) = \sum_{j:\, T_j > t} \frac{1}{G(T_j)^2} \tag{16}$$

Theorem 3. K̂(t) is a uniformly consistent estimator of K(t).

Theorem 4. √n (K̂(t) − K(t)) converges in distribution to a zero mean Gaussian process as the number of response times used in the estimate increases.

1.3. Estimating UCIP performance

Having established estimators for the cumulative hazard function and cumulative reverse hazard function, we now need an estimate of the performance of the UCIP model based on the single target response times. On an OR task, the UCIP model cumulative hazard function is simply the sum of the cumulative hazard functions of each of the single target conditions (cf. Eq. (3)). In this model, the probability that a response has not occurred by time t, 1 − FUCIP(t), is the probability that none of the sub-processes has finished by time t, which is the product of the individual probabilities 1 − Fi(t). Hence,

$$H_{UCIP}(t) = -\ln\left(\prod_i \left[1 - F_i(t)\right]\right) = \sum_i H_i(t) \tag{17}$$

Based on this, a reasonable estimator for the cumulative hazard function of the race model is the sum of NA estimators of the cumulative hazard function for each single target condition,

$$\hat{H}_{UCIP}(t) = \sum_i \hat{H}_i(t) \tag{18}$$

To find the mean and variance of this estimator, we return to the multiplicative intensity model representation of the sub-processes stated above. We use the * notation as above,

$$H^*_{UCIP}(t) = \sum_i \int_0^t J_i(s)\,h_i(s)\,ds \tag{19}$$

We will also need to distinguish among the ordered response times for each single target condition. We use Tij to indicate the jth element in the ordered set of response times from condition i.

Theorem 5. ĤUCIP(t) is an unbiased estimator of H*UCIP(t).

Theorem 6. An unbiased estimate of the variance of ĤUCIP(t) is given by,

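$$\widehat{\operatorname{Var}}\left(\hat{H}_{UCIP}(t)\right) = \sum_i \sum_{j:\, T_{ij} \le t} \frac{1}{Y_i(T_{ij})^2} \tag{20}$$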

Theorem 7. ĤUCIP(t) is a uniformly consistent estimator of HUCIP(t).

Theorem 8. Let where ni is the number of response times used to estimate the cumulative hazard function of the completion time of the ith channel. Then, converges in distribution to a zero mean Gaussian process as the number of response times used in the estimate increases.
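Eqs. (18) and (20) translate directly into code: estimate each single-target condition separately and add both the estimates and their variance estimates. This Python sketch assumes distinct response times within each condition and, as the variance sum requires, independent samples across conditions. Function names are illustrative.

```python
def nelson_aalen_with_variance(rts, t):
    """Return (H-hat(t), Var-hat) for one condition, assuming no ties."""
    times = sorted(rts)
    n = len(times)
    h_hat = var_hat = 0.0
    for j, tj in enumerate(times):
        if tj > t:
            break
        h_hat += 1.0 / (n - j)        # 1 / Y_i(T_ij)
        var_hat += 1.0 / (n - j) ** 2  # 1 / Y_i(T_ij)^2
    return h_hat, var_hat

def ucip_or_estimate(single_target_rts, t):
    """Eqs. (18) and (20): sum estimates and variances over single-target conditions.

    Adding the variances relies on the conditions being independent samples.
    """
    h_ucip = var_ucip = 0.0
    for rts in single_target_rts:
        h_i, var_i = nelson_aalen_with_variance(rts, t)
        h_ucip += h_i
        var_ucip += var_i
    return h_ucip, var_ucip

print(ucip_or_estimate([[1.0, 2.0, 3.0], [1.5, 2.5]], 2.0))
```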

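Assuming a UCIP process on an AND task, the probability that a response has occurred by time t, FUCIP(t), is the probability that all of the sub-processes have finished by time t, which is the product of the individual probabilities Fi(t). Hence,

$$K_{UCIP}(t) = \ln\left(\prod_i F_i(t)\right) = \sum_i K_i(t) \tag{21}$$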

Thus, to estimate the cumulative reverse hazard function of the UCIP model on an AND task, we use the sum of NA estimators of the cumulative reverse hazard function for each single target condition,

$$\hat{K}_{UCIP}(t) = \sum_i \hat{K}_i(t) \tag{22}$$

The estimators of the UCIP cumulative reverse hazard functions retain the statistical properties of the NA estimator of the individual cumulative reverse hazard function. Because the UCIP estimator is a sum of estimators based on independent samples, the consistency, unbiasedness and Gaussian limit properties all hold for the UCIP AND estimator just as they do for the UCIP OR estimator.

Theorem 9. K̂UCIP(t) is an unbiased estimator of K*UCIP(t).

Theorem 10. An unbiased estimate of the variance of K̂UCIP(t) is given by,

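$$\widehat{\operatorname{Var}}\left(\hat{K}_{UCIP}(t)\right) = \sum_i \sum_{j:\, T_{ij} > t} \frac{1}{G_i(T_{ij})^2} \tag{23}$$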

Theorem 11. K̂UCIP is a uniformly consistent estimator of KUCIP.

Theorem 12. Let where ni is the number of response times used to estimate the cumulative reverse hazard function of the completion time of the ith channel. Then, converges in distribution to a zero mean Gaussian process as the number of response times used in the estimate increases.
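The UCIP AND estimator of Eqs. (22) and (23) follows the same pattern with the reverse estimator. This Python sketch again assumes distinct response times within each condition and independent samples across conditions; the function names are illustrative.

```python
def reverse_nelson_aalen_with_variance(rts, t):
    """Return (K-hat(t), Var-hat) from Eqs. (13) and (16), assuming no ties."""
    k_hat = var_hat = 0.0
    for j, tj in enumerate(sorted(rts)):
        if tj > t:
            completed = j + 1  # G(T_j): responses made up to and including T_j
            k_hat -= 1.0 / completed
            var_hat += 1.0 / completed ** 2
    return k_hat, var_hat

def ucip_and_estimate(single_target_rts, t):
    """Eqs. (22) and (23): sum over independent single-target conditions."""
    k_ucip = var_ucip = 0.0
    for rts in single_target_rts:
        k_i, var_i = reverse_nelson_aalen_with_variance(rts, t)
        k_ucip += k_i
        var_ucip += var_i
    return k_ucip, var_ucip

print(ucip_and_estimate([[1.0, 2.0, 3.0], [1.5, 2.5]], 1.0))
```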

1.3.1. Handling ties

In theory the underlying response time distributions are continuous, and thus there is no chance of two exactly equal response times. In practice, measurement devices and truncation limit the possible observed response times to a discrete set, so repeated values are possible. Aalen et al. (2008) suggest two ways of dealing with these repeated values. One method is to add a small random value with zero mean and small variance to each tied response time. Alternatively, one could use the number of responses at a particular time in the numerator of Eqs. (10) and (13). If we let d(t) be the number of responses that occur exactly at time t, this leads to the estimators,

$$\hat{H}(t) = \sum_{j:\, T_j \le t} \frac{d(T_j)}{Y(T_j)} \tag{24}$$

$$\hat{K}(t) = -\sum_{j:\, T_j > t} \frac{d(T_j)}{G(T_j)} \tag{25}$$

where the sums now run over the distinct observed response times Tj.
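The tie-corrected estimators can be computed by grouping identical response times. This Python sketch uses `collections.Counter` to obtain d(T_j) and updates Y and G as it sweeps the distinct times in order; the 10 ms recording resolution in the usage line is a hypothetical example.

```python
from collections import Counter

def nelson_aalen_ties(rts, t):
    """Eq. (24): add d(T_j) / Y(T_j) at each distinct response time T_j <= t."""
    counts = Counter(rts)
    at_risk = len(rts)  # Y at the first distinct time
    total = 0.0
    for time in sorted(counts):
        if time > t:
            break
        d = counts[time]
        total += d / at_risk
        at_risk -= d  # responses at this time leave the risk set
    return total

def reverse_nelson_aalen_ties(rts, t):
    """Eq. (25): subtract d(T_j) / G(T_j) at each distinct response time T_j > t."""
    counts = Counter(rts)
    completed = 0
    total = 0.0
    for time in sorted(counts):
        d = counts[time]
        completed += d  # G(T_j) counts responses up to and including T_j
        if time > t:
            total -= d / completed
    return total

# Response times recorded at a hypothetical 10 ms resolution, so ties occur.
rts = [410, 420, 420, 430, 450, 450, 450]
print(nelson_aalen_ties(rts, 430), reverse_nelson_aalen_ties(rts, 420))
```

Without ties, every d(T_j) is 1 and both functions reduce to Eqs. (10) and (13).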

1.4. Hypothesis testing

Having established an estimator of UCIP performance, we now turn to hypothesis testing. Here, we focus on a test with UCIP performance as the null hypothesis. In the following, we will use the subscript r to refer to the condition when all sources indicate a target (i.e., the redundant target condition in an OR task or the target condition on an AND task). We begin with the difference between the participant’s performance when all sources indicate a target and the estimated UCIP performance,

$$\hat{H}_r(t) - \hat{H}_{UCIP}(t) \tag{26}$$

$$\hat{K}_r(t) - \hat{K}_{UCIP}(t) \tag{27}$$

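Following the same line of reasoning given for the unbiasedness of the cumulative hazard function estimators, the expected difference is zero under the null hypothesis that Hr(t) = HUCIP(t) (Kr(t) = KUCIP(t) for AND). Furthermore, because the estimates are based on independent samples, the point-wise variance of the difference is the sum of the point-wise variances of each individual function,

$$\operatorname{Var}\left(\hat{H}_r(t) - \hat{H}_{UCIP}(t)\right) = \operatorname{Var}\left(\hat{H}_r(t)\right) + \operatorname{Var}\left(\hat{H}_{UCIP}(t)\right) \tag{28}$$

$$\operatorname{Var}\left(\hat{K}_r(t) - \hat{K}_{UCIP}(t)\right) = \operatorname{Var}\left(\hat{K}_r(t)\right) + \operatorname{Var}\left(\hat{K}_{UCIP}(t)\right) \tag{29}$$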

The difference functions converge in distribution to a Gaussian process. Hence, at any t, under the null hypothesis, the difference will be approximately normally distributed for large samples. We can then divide by the estimated standard deviation at that time so that the limit distribution is a standard normal.

In general, we may want to weight the difference between the two functions, e.g., use smaller weights for times where the estimates are less reliable. Let L(t) be a predictable weight process that is zero whenever any of Yi or Yr is zero (Gi or Gr for AND). We then define our general test statistics as,

$$Z_{OR} = \int_0^{t_{max}} L(s)\,d\left[\hat{H}_r(s) - \hat{H}_{UCIP}(s)\right] \tag{30}$$

$$Z_{AND} = \int_{\infty}^{0} L(s)\,d\left[\hat{K}_r(s) - \hat{K}_{UCIP}(s)\right] \tag{31}$$

Theorem 13. Under the null hypothesis of UCIP performance, E (ZOR) = 0.

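Theorem 14. An unbiased estimate of the variance of ZOR is given by,

$$\widehat{\operatorname{Var}}(Z_{OR}) = \sum_{j:\, T_{rj} \le t_{max}} \frac{L(T_{rj})^2}{Y_r(T_{rj})^2} + \sum_i \sum_{j:\, T_{ij} \le t_{max}} \frac{L(T_{ij})^2}{Y_i(T_{ij})^2}$$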

Theorem 15. Assume that there exist sequences of constants {an} and {cn} such that,

Further, assume that there exists some function d(s) such that for all δ,

Then (an/cn)ZOR(n) converges in distribution to a zero mean Gaussian process as the number of response times used in the estimate increases.
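The following Python sketch computes the standardized OR statistic and a two-sided p-value from the standard normal reference distribution. Several choices here are assumptions of the sketch, not the weighting used in the simulations: it uses the simplest admissible weight, L(s) = 1 while the redundant and every single-target risk set is nonempty and 0 otherwise; it orients the statistic so that positive values mean faster than UCIP, matching the signs reported in Table 1; and it assumes no tied response times. Risk sets are recounted at each jump for clarity, which costs O(n²) time.

```python
import math

def risk_set(rts, s):
    """Y(s): number of response times >= s, i.e. responses not yet made just before s."""
    return sum(1 for x in rts if x >= s)

def ucip_or_test(redundant_rts, single_target_rts):
    """Return (U_OR, two-sided p) for distinct response times.

    Assumed weight: L(s) = 1 while the redundant and every single-target
    risk set is nonempty, else 0. Positive U_OR means faster than UCIP.
    """
    samples = [redundant_rts] + list(single_target_rts)

    def weight(s):
        return 1.0 if all(risk_set(rts, s) > 0 for rts in samples) else 0.0

    z = var = 0.0
    for s in redundant_rts:  # jumps of H-hat_r enter with a plus sign
        step = weight(s) / risk_set(redundant_rts, s)
        z += step
        var += step ** 2
    for rts in single_target_rts:  # jumps of H-hat_UCIP enter with a minus sign
        for s in rts:
            step = weight(s) / risk_set(rts, s)
            z -= step
            var += step ** 2
    if var == 0.0:
        raise ValueError("no response times where all risk sets are nonempty")
    u = z / math.sqrt(var)
    p = math.erfc(abs(u) / math.sqrt(2.0))  # two-sided standard normal p-value
    return u, p

u_fast, _ = ucip_or_test([0.1, 0.2, 0.3], [[1.0, 2.0, 3.0], [1.0, 2.0, 3.0]])
u_slow, _ = ucip_or_test([5.0, 6.0, 7.0], [[1.0, 2.0, 3.0], [1.0, 2.0, 3.0]])
print(u_fast, u_slow)  # positive (better than UCIP), then negative (worse)
```

Swapping in a Harrington–Fleming style weight would only change `weight`; the jump bookkeeping and the variance sum of squared weighted jumps stay the same.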

The Harrington–Fleming weight process (Harrington & Fleming, 1982) is one possible weight function,

| (32) |

Here, S(t−) is an empirical survivor function estimated from the pooled response times from all conditions,

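$$\hat{S}(t-) = \frac{Y_r(t) + \sum_i Y_i(t)}{n_r + \sum_i n_i} \tag{33}$$

where nr is the number of response times in the condition in which all sources indicate a target.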

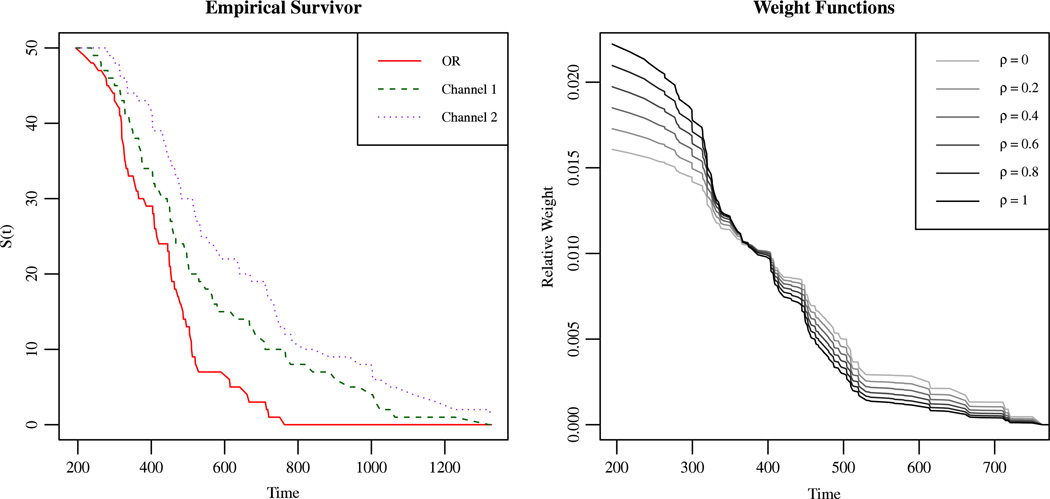

Fig. 2 depicts the relative values of the Harrington–Fleming weighting function across a range of response times and different values of ρ. Response times were sampled from a shifted Wald distribution and the estimators were based on 50 samples from each of the single channel and double target models. When ρ = 0, the test corresponds to a log-rank test (Mantel, 1966) between the estimated UCIP performance and the actual performance with redundant targets (see Andersen et al., 1993, Example V.2.1, for a discussion of this correspondence). As ρ increases, relatively more weight is given to earlier response times and less to later response times.

Fig. 2.

On the left, the empirical survivor function based on response times sampled from Wald distributions is shown. The right shows the relative values of the Harrington–Fleming (Harrington & Fleming, 1982) weighting function for the capacity test at various values of ρ.

Various other weight processes that satisfy the requirements for L(t) have also been proposed (see Aalen et al., 2008, Table 3.2, for a list). Because the limiting null distribution of the standardized statistic does not depend on the choice of weight process, it is up to the researcher to choose the most appropriate weights for a given application. In the simulation section, we use ρ = 0, essentially a log-rank (or Mann–Whitney if there are no censored response times) test of the difference between the estimated UCIP performance and the performance when all targets are present (Aalen et al., 2008; Mantel, 1966). We present the Harrington–Fleming weight function here because of its flexibility, and we use ρ = 0 in the simulation section because it reduces to tests that are more likely to be familiar to the reader. A more thorough investigation of appropriate weight functions for response time data will be an important next step, but is beyond the scope of this paper.

For a statistical test of CAND(t), we simply replace Y(t) with G(t) and reverse the bounds of integration.

Theorem 16. Under the null hypothesis of UCIP performance, E (ZAND) = 0.

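Theorem 17. An unbiased estimate of the variance of ZAND is given by,

$$\widehat{\operatorname{Var}}(Z_{AND}) = \sum_{j} \frac{L(T_{rj})^2}{G_r(T_{rj})^2} + \sum_i \sum_{j} \frac{L(T_{ij})^2}{G_i(T_{ij})^2}$$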

Theorem 18. Assume that there exist sequences of constants {an} and {cn} such that,

Further, assume that there exists some function d(s) such that for all δ,

Then (an/cn)ZAND(n) converges in distribution to a zero mean Gaussian process as the number of response times used in the estimate increases.

This leads to a reverse hazard version of the Harrington–Fleming weight function,

| (34) |

| (35) |

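Therefore, one can use the standard normal distribution for null-hypothesis-significance tests for UCIP-OR and UCIP-AND performance. Under the null hypothesis,

$$U_{OR} = \frac{Z_{OR}}{\sqrt{\widehat{\operatorname{Var}}(Z_{OR})}} \xrightarrow{d} N(0, 1) \tag{36}$$

$$U_{AND} = \frac{Z_{AND}}{\sqrt{\widehat{\operatorname{Var}}(Z_{AND})}} \xrightarrow{d} N(0, 1) \tag{37}$$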
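An analogous Python sketch for U_AND swaps risk sets for the completed counts G(s). The same caveats apply: the weight (1 while every G is positive, else 0) is the simplest admissible choice rather than the reverse Harrington–Fleming weight, ties are assumed absent, and the statistic adds the jumps of K̂_UCIP and subtracts those of K̂_r so that positive values indicate better-than-UCIP AND performance, the sign pattern reported for the AND task in Table 1.

```python
import math

def completed_set(rts, s):
    """G(s): number of response times <= s."""
    return sum(1 for x in rts if x <= s)

def ucip_and_test(redundant_rts, single_target_rts):
    """Return (U_AND, two-sided p) for distinct response times.

    Assumed weight: L(s) = 1 while the redundant and every single-target
    completed count is positive, else 0. Positive U_AND means faster than UCIP.
    """
    samples = [redundant_rts] + list(single_target_rts)

    def weight(s):
        return 1.0 if all(completed_set(rts, s) > 0 for rts in samples) else 0.0

    z = var = 0.0
    for rts in single_target_rts:  # jumps of K-hat_UCIP enter with a plus sign
        for s in rts:
            step = weight(s) / completed_set(rts, s)
            z += step
            var += step ** 2
    for s in redundant_rts:  # jumps of K-hat_r enter with a minus sign
        step = weight(s) / completed_set(redundant_rts, s)
        z -= step
        var += step ** 2
    if var == 0.0:
        raise ValueError("no response times where all completed counts are positive")
    u = z / math.sqrt(var)
    return u, math.erfc(abs(u) / math.sqrt(2.0))

u_fast, _ = ucip_and_test([0.1, 0.2, 0.3], [[1.0, 2.0, 3.0], [1.0, 2.0, 3.0]])
u_slow, _ = ucip_and_test([5.0, 6.0, 7.0], [[1.0, 2.0, 3.0], [1.0, 2.0, 3.0]])
print(u_fast, u_slow)
```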

2. Simulation

In this section, we examine the properties of the NA estimator of UCIP performance as well as the new test statistic using simulated data. To estimate type I error rates for different sample sizes, we use three different distributions for the single target completion times: exponential, shifted Wald and exGaussian.

First, we simulated results for the test statistics with 10 through 200 samples per distribution. For each sample size, the type I error rate was estimated from 1000 simulations. In each simulation, the parameters for the distribution were randomly sampled. For the exponential rate parameter, we sampled from a gamma distribution with shape 1 and rate 500. For the shifted Wald model, we sampled the shift from a gamma distribution with shape 10 and rate 0.2. Parametrizing the Wald distribution as the first passage time of a diffusion process, we sampled the drift rate from a gamma distribution with shape 1 and rate 10 and the threshold from a gamma distribution with shape 30 and rate 0.6. The rate of the exponential in the exGaussian distribution was sampled from a gamma random variable with shape 1 and rate 250. The mean of the Gaussian component was sampled from a Gaussian distribution with mean 250 and standard deviation 100 and the standard deviation was sampled from a gamma distribution with shape 10 and rate 0.2. In each simulation, response times were sampled from the corresponding distribution with the sampled parameters. Double target response times were simulated by taking two independent samples from the single channel distribution, then taking either the minimum (for OR processing) or the maximum (for AND processing) of those samples.
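The data-generating step of these simulations can be sketched with Python's standard library. Several details are assumptions of this sketch rather than statements from the text: both channels share the sampled parameters; the Wald first-passage time uses a unit diffusion coefficient, so it is inverse Gaussian with mean threshold/drift and shape threshold²; and inverse Gaussian draws use the Michael, Schucany and Haas (1976) transformation method. Note that `random.gammavariate` takes a shape and a scale, so each rate in the text is converted to scale = 1/rate. Feeding these samples to a test statistic over many replications would give the type I error estimates.

```python
import math
import random

def wald_sample(mean, shape, rng):
    """Inverse Gaussian (Wald) draw by the Michael, Schucany & Haas (1976) method."""
    y = rng.gauss(0.0, 1.0) ** 2
    x = (mean + mean ** 2 * y / (2.0 * shape)
         - mean / (2.0 * shape) * math.sqrt(4.0 * mean * shape * y + (mean * y) ** 2))
    return x if rng.random() <= mean / (mean + x) else mean ** 2 / x

def make_channel_sampler(family, rng):
    """Draw parameters as described in the text and return a channel sampler."""
    if family == "exponential":
        rate = rng.gammavariate(1.0, 1.0 / 500.0)
        return lambda: rng.expovariate(rate)
    if family == "shifted_wald":
        shift = rng.gammavariate(10.0, 1.0 / 0.2)
        drift = rng.gammavariate(1.0, 1.0 / 10.0)
        threshold = rng.gammavariate(30.0, 1.0 / 0.6)
        # Assumed unit diffusion coefficient: first passage ~ IG(threshold / drift, threshold^2).
        return lambda: shift + wald_sample(threshold / drift, threshold ** 2, rng)
    if family == "exgaussian":
        rate = rng.gammavariate(1.0, 1.0 / 250.0)
        mu = rng.gauss(250.0, 100.0)
        sigma = rng.gammavariate(10.0, 1.0 / 0.2)
        return lambda: rng.gauss(mu, sigma) + rng.expovariate(rate)
    raise ValueError(f"unknown family: {family}")

def simulate_condition(sampler, n):
    """Two single-target samples plus UCIP double-target OR (min) and AND (max) samples."""
    single_a = [sampler() for _ in range(n)]
    single_b = [sampler() for _ in range(n)]
    redundant_or = [min(sampler(), sampler()) for _ in range(n)]
    redundant_and = [max(sampler(), sampler()) for _ in range(n)]
    return single_a, single_b, redundant_or, redundant_and

data = simulate_condition(make_channel_sampler("shifted_wald", random.Random(3)), 50)
print([round(sum(part) / len(part), 1) for part in data])  # condition means
```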

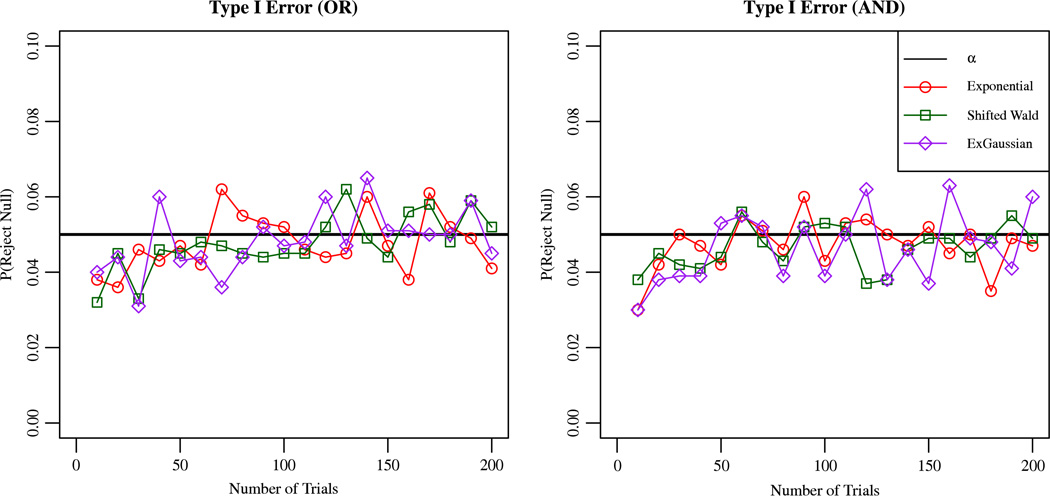

As shown in Fig. 3, the type I error rate is quite close to the chosen α = 0.05 across all three model types and for all simulated sample sizes. This indicates that, even with small sample sizes, using the standard normal distribution for the test statistic works quite well.

Fig. 3.

Type I error rates with α = 0.05 for three different channel completion times. For each model, double target response times were simulated by taking the min or max of independently sampled channel completion times for OR or AND models respectively. The error rates are based on 1000 simulations for each model with 10 through 200 trials per distribution.

Next, we examine the power as a function of the number of samples per distribution and the amount of increase or decrease in performance in the redundant target condition. To do so, we focus on models that have a simple relationship between their parameters and capacity. For OR processes, we use an exponential distribution for each individual channel, FA(t) = FB(t) = 1 − exp(−λt), so that HA(t) = HB(t) = λt. The prediction for a UCIP OR model in this case would be HAB(t) = 2λt. Thus, changes in COR(t) will directly correspond to changes in the rate parameter. To explore a range of capacity values, we used a range of multipliers ρ for the rate parameters of each channel when simulating the double target response times, FA,ρ(t) = FB,ρ(t) = 1 − exp(−ρλt), so that HAB,ρ(t) = 2ρλt. This leads to the formula HAB,ρ(t)/HAB(t) = ρ for the true capacity. For AND processes, we use FAB,ρ(t) = (1 − exp(−λt))^(2/ρ) as the distribution for simulating response times when both targets are present. Although ρ no longer corresponds directly to a multiplier on the rate parameter, this approach maintains the correspondence ρ = CAND(t).
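A Python sketch of the two generating models just described may help. The OR double-target time is the minimum of two exponentials with rate ρλ; the AND double-target time is drawn by inverting FAB,ρ(t) = (1 − exp(−λt))^(2/ρ). The rate and ρ values in the usage lines are hypothetical, and the inverse-CDF sampling approach is a choice of this sketch, not necessarily how the original simulations drew samples. The helper `true_c_and` confirms analytically that CAND(t) = ρ at any t.

```python
import math
import random

def or_redundant_sample(lam, rho, rng):
    """Double-target OR time: minimum of two exponential channels with rate rho * lam."""
    return min(rng.expovariate(rho * lam), rng.expovariate(rho * lam))

def and_redundant_cdf(lam, rho, t):
    """F_AB,rho(t) = (1 - exp(-lam * t)) ** (2 / rho)."""
    return (1.0 - math.exp(-lam * t)) ** (2.0 / rho)

def and_redundant_quantile(lam, rho, u):
    """Inverse of and_redundant_cdf, used for inverse-CDF sampling."""
    return -math.log(1.0 - u ** (rho / 2.0)) / lam

def and_redundant_sample(lam, rho, rng):
    return and_redundant_quantile(lam, rho, rng.random())

def true_c_and(lam, rho, t):
    """C_AND(t) = (K_A + K_B) / K_AB with K = ln F; equals rho for every t."""
    k_single = math.log(1.0 - math.exp(-lam * t))
    return 2.0 * k_single / math.log(and_redundant_cdf(lam, rho, t))

rng = random.Random(5)
print(or_redundant_sample(0.004, 1.5, rng), and_redundant_sample(0.004, 1.5, rng))
print(true_c_and(0.004, 1.5, 300.0))  # rho, up to rounding
```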

In general, the power of these tests may depend on the distribution of the underlying channel processes. We limit our exploration to these models because of the analytic correspondence between the rate parameter and the value of the capacity coefficient. With other distributions, the relationship between the capacity coefficient and the differences in the parameters across workload can be quite complicated. These particular results are also dependent on the weighting function used. Here, we focus on the log-rank type weight function, but many other options are possible (e.g., Aalen et al., 2008, p. 107). These power estimates apply to cases in which the magnitude of the weighted difference in hazard functions between single and double target conditions matches that predicted by the exponential models.

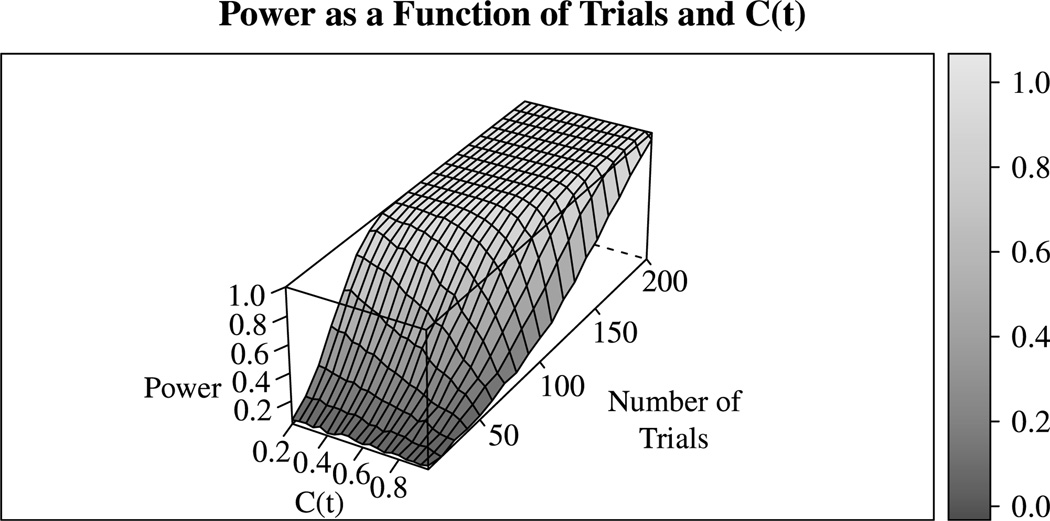

Both the UOR and UAND test statistics, applied to the corresponding OR or AND model, had the same power. Figs. 4 and 5 show the power of these tests as the capacity increases and as the number of trials used to estimate each cumulative hazard function increases. For much of the space tested, the power is quite high, with ceiling performance for nearly half of the points tested.

Fig. 4.

Power of the UOR and UAND statistics with α = 0.05 as a function of capacity and number of trials per distribution for capacity above 1. These results are based on two exponential channels, with either an OR or AND stopping rule, depending on the statistic. Using these models, the power of UOR and UAND is the same.

Fig. 5.

Power of the UOR and UAND statistics with α = 0.05 as a function of capacity and number of trials per distribution for capacity less than 1. These results are based on two exponential channels, with either an OR or AND stopping rule, depending on the statistic. Using these models, the power of UOR and UAND is the same.

With a true capacity of 1.1, the power remains quite low, even with up to 200 trials per distribution. However, with a higher number of trials, the increase in power is quite steep as a function of the true capacity. With 200 trials per distribution, the power jumps from roughly 0.5 to 0.9 as the true capacity changes from 1.1 to 1.2. On the low end of trials per distribution, i.e., 10 to 20, reasonable power is not achieved even up to a capacity of 3.0. With 30 trials per distribution, the power increases roughly linearly with the capacity, achieving reasonable power around C(t) = 2.5. The test was not as powerful with limited capacity. The power still increases with more trials and larger changes in capacity; however, the increase is much more gradual than that in the super capacity plot.

Differences in the power as a function of capacity for super and limited capacity are to be expected, given that the capacity coefficient is a ratio while the test statistics are based on differences. However, this does not fully explain the differences in power seen here. Part of the difference is due to a difference in scale: the super capacity plot has step sizes of 0.1, while the limited capacity plot has step sizes of 0.04. We chose different step sizes so that we could demonstrate a wider range of capacity values. Furthermore, the weighting function used here may be more sensitive to super capacity. The effect of the weighting function on these tests, and determining the appropriate weights if one is interested in only one of super or limited capacity, is an important future direction of this work.

3. Application

In this section we turn to data from a recent experiment using a simple dot detection task. In this study, participants were shown one of four types of stimuli. In the single dot condition, a white 0.2° dot was presented directly above or below a central fixation on an otherwise black background. In the double dot condition, the dots were presented both above and below fixation. Each trial began with a fixation cross, presented for 500 ms, followed by either a single dot stimulus, a double dot stimulus or an entirely black screen presented for 100 ms or until a response was made. Participants were given up to 4 s to respond. Each trial was followed by a 1 s inter-trial interval. On each day, participants were shown each of the four stimuli 400 times in random order.

Participants completed two days’ worth of trials with each of two different instruction sets. In one version, the OR task, participants were asked to respond “Yes” to the single dot and double dot stimuli and to respond “No” only when the entirely black screen was presented. In the other version, the AND task, participants were to respond “Yes” only to the double dot stimulus and “No” otherwise. See Fig. 1 for example stimuli. For further details of the study, see Eidels, Townsend, Hughes, and Perry (submitted for publication).

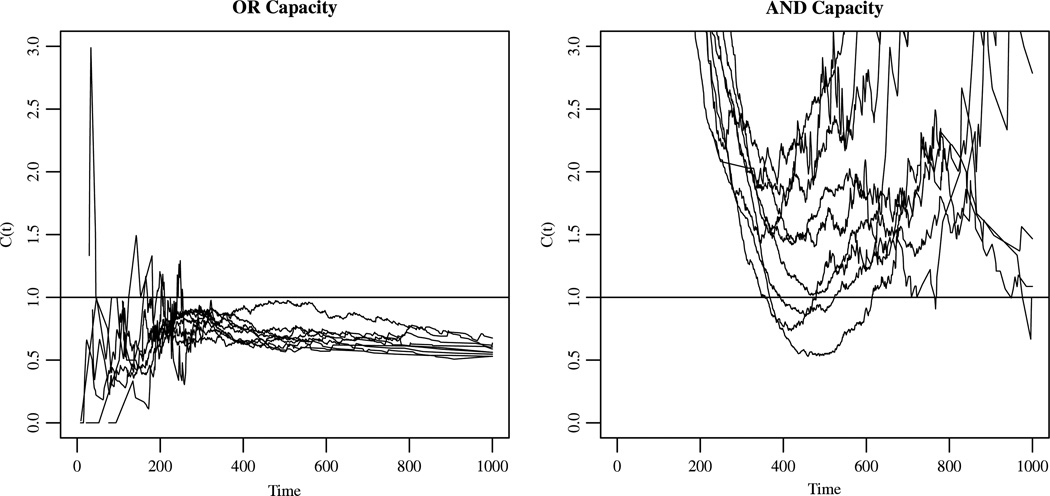

The capacity functions for each individual are shown in Fig. 6. Upon visual inspection, all participants seem to be limited capacity in the OR task. On the AND task, all of the participants had super capacity for some portion of time, with only three participants showing C(t) less than one, and those only for later times.

Fig. 6.

Capacity coefficient functions of the nine participants from Eidels et al. (submitted for publication). The left graph depicts performance on the OR task, evaluated with the OR capacity coefficient. The right graph depicts performance on the AND task, evaluated with the AND capacity coefficient.

Table 1 shows the values of the statistic for each individual. The test shows significant violations of the UCIP model for every participant on both tasks. These data are based on 800 trials per distribution, so based on the power analysis in the last section, it is no surprise that the test was significant for every participant. These data indicate that participants are doing worse than the predicted baseline UCIP processing on the OR task, and better than the UCIP baseline on the AND task. On the OR task, COR < 1 indicates either inhibition between the dot detection channels, limited processing resources, or processing worse than parallel (e.g., serial). On the AND task, CAND > 1 indicates either facilitation between the dot detection channels, increased processing resources with increased load, or processing better than parallel (e.g., coactive). Given the nature of the stimuli and previous analyses (Houpt & Townsend, 2010), it is unlikely that a failure of the parallel processing assumption explains these results. Likewise, there is no reason to believe that a change in the available processing resources would be different between the tasks, although resources may be limited for both versions (cf. Townsend & Nozawa, 1995). We believe the most likely explanation of these data is an increase in facilitation between the dot detection processes in the AND task.

Table 1.

Results of the test statistic applied to the results of the two dot experiment of Eidels et al. (submitted for publication).

| Participant | OR task | AND task |
|---|---|---|
| 1 | −10.12** | 14.50** |
| 2 | −4.41** | 13.81** |
| 3 | −8.22** | 3.88** |
| 4 | −6.85** | 18.62** |
| 5 | −9.21** | 6.05** |
| 6 | −2.72* | 22.27** |
| 7 | −5.14** | 10.49** |
| 8 | −5.91** | 11.59** |
| 9 | −5.53** | 15.75** |

Note. * p < 0.01; ** p < 0.001.
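As a quick check on the significance markers in Table 1, each statistic can be converted to a p-value with the standard normal reference distribution that the statistic approaches under the null hypothesis. This sketch assumes two-sided p-values (the table does not say which); for these particular values a one-sided test would assign the same markers. The dictionary simply copies the table.

```python
import math

# Test statistics copied from Table 1: participant -> (OR task, AND task).
table1 = {
    1: (-10.12, 14.50), 2: (-4.41, 13.81), 3: (-8.22, 3.88),
    4: (-6.85, 18.62), 5: (-9.21, 6.05), 6: (-2.72, 22.27),
    7: (-5.14, 10.49), 8: (-5.91, 11.59), 9: (-5.53, 15.75),
}

def two_sided_p(z):
    """P(|Z| >= |z|) for a standard normal Z, i.e. 2 * (1 - Phi(|z|))."""
    return math.erfc(abs(z) / math.sqrt(2.0))

def stars(p):
    """Marker convention of Table 1: ** for p < 0.001, * for p < 0.01."""
    return "**" if p < 0.001 else "*" if p < 0.01 else ""

for pid, (z_or, z_and) in table1.items():
    p_or, p_and = two_sided_p(z_or), two_sided_p(z_and)
    print(pid, f"{p_or:.1e}{stars(p_or)}", f"{p_and:.1e}{stars(p_and)}")
```

Only participant 6 on the OR task (z = −2.72, p ≈ 0.0065) falls between the two thresholds.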

4. Discussion

In this paper, we developed a statistical test for use with the capacity coefficient for both minimum-time (OR) decision rules and maximum-time (AND) decision rules. We did so by extending the properties of the Nelson–Aalen estimator of the cumulative hazard function (e.g., Aalen et al., 2008) to estimators of unlimited capacity, independent, parallel (UCIP) performance. This approach yields a test statistic that, under the null hypothesis and in the limit as the number of trials increases, has a standard normal distribution. This allows investigators to use the statistic in a variety of tests beyond a comparison against UCIP performance, such as comparing two estimated capacity coefficients.
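The Nelson–Aalen estimator underlying the test can be sketched in a few lines. This is a minimal sketch, assuming every response time is observed (no censoring) and there are no tied times; the function name `nelson_aalen` is hypothetical, and the variance expression is the standard Nelson–Aalen variance estimate (Aalen et al., 2008), a sum of 1/Y(T_j)² over observed times.

```python
import numpy as np

def nelson_aalen(rts, t):
    """Nelson-Aalen estimate of the cumulative hazard H(t) and its variance.

    H_hat(t)   = sum over observed T_j <= t of 1 / Y(T_j)
    Var_hat(t) = sum over observed T_j <= t of 1 / Y(T_j)**2
    Y(s) is the number of response times >= s (the at-risk count).
    Assumes no censoring and no tied response times.
    """
    rts = np.sort(np.asarray(rts, dtype=float))
    at_risk = rts.size - np.arange(rts.size)  # Y(T_(j)) for the j-th smallest time
    mask = rts <= t
    return np.sum(1.0 / at_risk[mask]), np.sum(1.0 / at_risk[mask] ** 2)

# Usage: exponential RTs with rate 2 have H(t) = 2t, so H(0.5) = 1.
rng = np.random.default_rng(0)
h_hat, var_hat = nelson_aalen(rng.exponential(scale=0.5, size=5000), 0.5)
print(h_hat, var_hat)
```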

As part of developing the statistic, we obtained two other important results. First, we established the properties of the estimate of UCIP performance on an OR (AND) task given by the sum of the cumulative (reverse) hazard functions estimated from single-target trials. In particular, we showed that this estimator is unbiased and consistent and that it converges in distribution to a Gaussian process.
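The UCIP prediction for the OR task, the sum of the cumulative hazard functions estimated from single-target trials, can be illustrated by simulation. This sketch assumes exponentially distributed channel completion times with hypothetical rates 1.5 and 2.5, and generates double-target trials from an independent race, so UCIP holds by construction; `na_cumhaz` is a hypothetical helper.

```python
import numpy as np

def na_cumhaz(rts, t):
    """Nelson-Aalen estimate of H(t); assumes no censoring and no ties."""
    rts = np.sort(np.asarray(rts, dtype=float))
    at_risk = rts.size - np.arange(rts.size)
    return np.sum(1.0 / at_risk[rts <= t])

rng = np.random.default_rng(1)
n, t = 4000, 0.4
rate_a, rate_b = 1.5, 2.5                              # hypothetical channel rates
single_a = rng.exponential(scale=1 / rate_a, size=n)   # single-target trials, channel A
single_b = rng.exponential(scale=1 / rate_b, size=n)   # single-target trials, channel B
# Double-target OR trials: the first of two independent channels to finish.
redundant = np.minimum(rng.exponential(scale=1 / rate_a, size=n),
                       rng.exponential(scale=1 / rate_b, size=n))

h_ucip = na_cumhaz(single_a, t) + na_cumhaz(single_b, t)  # UCIP OR prediction
h_or = na_cumhaz(redundant, t)                            # observed double-target H(t)
true_value = (rate_a + rate_b) * t                        # H_A(t) + H_B(t) = 1.6
print(h_ucip, h_or, true_value)
```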

Second, in developing the estimator for UCIP AND processing, we extended the Nelson–Aalen estimator of cumulative hazard functions to cumulative reverse hazard functions. Despite being less common than the cumulative hazard function, the cumulative reverse hazard function is used in a variety of contexts, recently including cognitive psychology (see Chechile, 2011; Eidels, Townsend et al., 2010; Townsend & Wenger, 2004). Nonetheless, we were unable to find existing work developing an NA estimator of the cumulative reverse hazard function. This estimator can also be used to estimate the reverse hazard function in the same way the hazard function is estimated by the muhaz package (Hess & Gentleman, 2010) for the statistical software R (R Development Core Team, 2011). In this method, the cumulative hazard function is estimated with the Nelson–Aalen estimator, then smoothed using (by default) an Epanechnikov kernel. The hazard function is then given by the first order difference of that smoothed function.
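A reverse-time analogue of the Nelson–Aalen estimator can be sketched by swapping the at-risk count Y(s), the number of response times at or after s, for G(s), the number at or before s, and summing over the observations later than t. This sketch assumes the convention K(t) = ln F(t), which carries a leading minus sign, and counts only observations strictly greater than t; the paper's exact boundary convention may differ, and the name `reverse_nelson_aalen` is hypothetical.

```python
import numpy as np

def reverse_nelson_aalen(rts, t):
    """Reverse-time Nelson-Aalen estimate of K(t) = ln F(t).

    K_hat(t) = -(sum over observed T_j > t of 1 / G(T_j)),
    where G(s) is the number of response times <= s (reverse-time at-risk count).
    Assumes no censoring and no tied response times.
    """
    rts = np.sort(np.asarray(rts, dtype=float))
    at_risk = np.arange(1, rts.size + 1)  # G(T_(j)) for the j-th smallest time
    return -np.sum(1.0 / at_risk[rts > t])

# Usage: rate-1 exponential RTs, so K(1) = ln(1 - e^-1), about -0.459.
rng = np.random.default_rng(2)
k_hat = reverse_nelson_aalen(rng.exponential(scale=1.0, size=5000), 1.0)
print(k_hat, np.log(1 - np.exp(-1.0)))
```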

Although the statistical test is based on the difference between predicted UCIP performance and observed performance when all targets are present, the test also applies to the capacity ratio: rejecting the null hypothesis that the difference is zero is equivalent to rejecting the hypothesis that the ratio is one. Nonetheless, we have not developed the statistical properties of the capacity coefficients themselves. Instead, we have established the small-sample and asymptotic properties of their components.
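The ratio forms of the capacity coefficients (Townsend & Nozawa, 1995; Townsend & Wenger, 2004), C_OR(t) = H_AB(t)/(H_A(t) + H_B(t)) and C_AND(t) = (K_A(t) + K_B(t))/K_AB(t), can be computed from the same estimates. This sketch assumes K(t) = ln F(t), hypothetical exponential channels, and an independent parallel race, so both ratios should be near one; for convenience the same simulated double-target trials are scored under both rules, whereas in an experiment the OR and AND tasks are separate.

```python
import numpy as np

def cum_hazard(rts, t):
    """Nelson-Aalen estimate of H(t); assumes no censoring and no ties."""
    rts = np.sort(np.asarray(rts, dtype=float))
    return np.sum(1.0 / (rts.size - np.arange(rts.size))[rts <= t])

def cum_reverse_hazard(rts, t):
    """Reverse-time analogue estimating K(t) = ln F(t); assumes no ties."""
    rts = np.sort(np.asarray(rts, dtype=float))
    return -np.sum(1.0 / np.arange(1, rts.size + 1)[rts > t])

rng = np.random.default_rng(7)
n, t = 4000, 0.5
single_a = rng.exponential(scale=1.0, size=n)   # channel A alone, rate 1 (hypothetical)
single_b = rng.exponential(scale=0.5, size=n)   # channel B alone, rate 2 (hypothetical)
a2 = rng.exponential(scale=1.0, size=n)         # channel finishing times, double-target trials
b2 = rng.exponential(scale=0.5, size=n)
or_rts = np.minimum(a2, b2)                     # OR rule: first channel to finish
and_rts = np.maximum(a2, b2)                    # AND rule: last channel to finish

c_or = cum_hazard(or_rts, t) / (cum_hazard(single_a, t) + cum_hazard(single_b, t))
c_and = ((cum_reverse_hazard(single_a, t) + cum_reverse_hazard(single_b, t))
         / cum_reverse_hazard(and_rts, t))
print(c_or, c_and)
```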

In future work, we hope to develop Bayesian counterparts to the present statistical tests. One advantage of this approach would be the ability to consider posterior distributions over the capacity coefficient in its ratio form, rather than being restricted to the difference. There are additional reasons to explore Bayesian alternatives as well. We will not repeat the various arguments in depth, but there are both practical and philosophical reasons why one might prefer such an alternative (cf. Kruschke, 2010).

We are also interested in a more thorough investigation of the weighting functions used in these tests. It could be that some weighting functions are more likely to detect super-capacity, while others are more likely to detect limited capacity. Furthermore, the effects of the weighting function are likely to vary depending on whether they are used with the cumulative hazard function or the cumulative reverse hazard function.

While the capacity coefficient has been applied in a variety of areas within cognitive psychology, the lack of a statistical test has been a barrier to its use. This work removes that barrier by establishing a general test for UCIP performance.

Acknowledgments

This work was supported by NIH-NIMH MH 057717-07 and AFOSR FA9550-07-1-0078.

Appendix

Proofs and supporting theory

A.1. The multiplicative intensity model

The theory of the NA estimator for cumulative hazard functions is based on the multiplicative intensity model for counting processes. Here, we give a brief overview of the model and the corresponding model for counting processes with reverse time. For a more thorough treatment, see Andersen et al. (1993) and Aalen et al. (2008); Aalen et al. (2009) also give a good introduction to the topic.

Suppose we have a stochastic process N(t) that tracks the number of events that have occurred up to and including time t, e.g., the number of response times less than or equal to t. The intensity λ(t) of the counting process is the probability that an event occurs in an infinitesimal interval [t, t + dt), conditioned on the information about events that have already occurred, divided by the length dt of that interval. The difference between the counting process and its cumulative intensity,

$$M(t) = N(t) - \int_0^t \lambda(s)\,ds,$$

turns out to be a martingale (Aalen et al., 2008, p. 27). Under the multiplicative intensity model, we write the intensity process of N(t) as λ(t) = h(t)Y(t), where h(t) is the hazard function and Y(t) is a process whose value at time t is determined by the information available up to immediately before t (e.g., the number of responses that have not occurred before t). Of particular interest in this work is estimating the cumulative hazard function

$$H(t) = \int_0^t h(s)\,ds.$$
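The martingale property of M(t) = N(t) − ∫ h(s)Y(s) ds can be checked numerically. This sketch assumes a hypothetical constant hazard, so that ∫_0^t Y(s) ds reduces to the sum of min(T_i, t), and compares the sample variance of M(t) with E⟨M⟩(t) = n F(t).

```python
import numpy as np

rng = np.random.default_rng(3)
rate, n, t, reps = 2.0, 50, 0.4, 20_000     # hypothetical constant hazard h(s) = rate
rts = rng.exponential(scale=1 / rate, size=(reps, n))
counts = (rts <= t).sum(axis=1)                       # N(t): responses at or before t
compensator = rate * np.minimum(rts, t).sum(axis=1)   # integral_0^t h(s) Y(s) ds
m_t = counts - compensator                            # M(t) = N(t) - cumulative intensity
expected_var = n * (1 - np.exp(-rate * t))            # E<M>(t) = n F(t)
print(m_t.mean(), m_t.var(), expected_var)
```

The mean of M(t) is close to zero, and its variance matches the expected predictable variation.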

We will also use the concept of a counting process in reverse time, N̄(t). That is, we start at some tmax, possibly infinity, and work backward through time, counting all of the events that happen at or after time t. The multiplicative model takes a similar form, λ̄(t) = k(t)G(t), where k(t) is the reverse hazard function and G(t), which takes the place of Y(t), is a process determined by all the information available after t. We will use this model when we are interested in estimating the cumulative reverse hazard function

$$K(t) = -\int_t^{\infty} k(s)\,ds = \ln F(t).$$

One important consequence of the multiplicative intensity model is that the associated martingales are locally square integrable (cf. Andersen et al., 1993, p. 78), a requirement for much of the theory below.

A.2. Martingale theory

Before giving the full proofs of the theorems in this paper, we will first summarize the theoretical content that will be necessary, particularly some of the basic properties of martingales. Intuitively, martingales can be thought of as describing the fortune of a gambler through a series of fair gambles. Because each game is fair, the expected amount of money the gambler has after each game is the amount he had before the game. At any point in the sequence, the entire sequence of wins and losses before that game is known, but the outcome of the next game, or of any future game, is unknown. More formally, a discrete time martingale is defined as follows:

Definition 1. Suppose X1, X2, … are a sequence of random variables on a probability space (Ω, ℱ, P) and ℱ1, ℱ2, … is a sequence of σ-fields in ℱ. Then {(Xn, ℱn) : n = 1, 2, …} is a martingale if:

(i) ℱn ⊂ ℱn+1,

(ii) Xn is measurable ℱn,

(iii) E(|Xn|) is finite, and

(iv) E(Xn+1|ℱn) = Xn almost surely.

Assuming Xn corresponds to the gambler’s fortune after the nth game and ℱn corresponds to the information available after the nth game, the first two conditions correspond to the notion that after a particular game, the result of that game and of all previous games is known. The third condition corresponds to the requirement that the expected amount of money a gambler has after any game is finite. The final condition corresponds to the fairness of the gambles: the expected amount of money the gambler has after one more game is the amount he already has.

Martingales can also be defined in continuous time. In this case, the index set can be, for example, the set of all times t > 0, t ∈ ℝ. Then we require ℱs ⊂ ℱt for all s < t in place of (i). Conditions (ii) and (iii) are unchanged after replacing the discretely valued index n with the continuously valued t, and the final requirement becomes E(Xt|ℱs) = Xs almost surely for all s ≤ t.

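The expectation function of a martingale is constant by definition, but the way the variability of the process changes over time can differ among martingales and is often quite important. In addition to the variance function, there are two useful ways to track this variation: the predictable and the optional variation processes. For a discrete time martingale M0 = 0, M1, M2, …, the predictable variation process sums the second moment of each increment, conditioned on the σ-field from the previous step. Formally,

$$\langle M\rangle_n = \sum_{i=1}^{n} E\left[(M_i - M_{i-1})^2 \mid \mathcal{F}_{i-1}\right]. \qquad (A.1)$$

The optional (quadratic) variation process is similar, but it sums the squared increments themselves, without conditioning,

$$[M]_n = \sum_{i=1}^{n} (M_i - M_{i-1})^2. \qquad (A.2)$$

To generalize these processes to continuous time martingales, split the interval [0, t] evenly into n subintervals, apply the discrete definitions, and take the limit (in probability) as the number of subintervals goes to infinity,

$$\langle M\rangle(t) = \lim_{n\to\infty} \sum_{k=1}^{n} E\left[\left(M\left(\tfrac{kt}{n}\right) - M\left(\tfrac{(k-1)t}{n}\right)\right)^2 \,\middle|\, \mathcal{F}_{(k-1)t/n}\right], \qquad (A.3)$$

$$[M](t) = \lim_{n\to\infty} \sum_{k=1}^{n} \left(M\left(\tfrac{kt}{n}\right) - M\left(\tfrac{(k-1)t}{n}\right)\right)^2. \qquad (A.4)$$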

For reverse hazard functions, the concept of a reverse martingale will also be useful. These processes are martingales, but with time reversed so that the martingale property is based on conditioning on the future, not the past. A sequence of random variables …, Xn, Xn+1, Xn+2, … is a reverse martingale if …, Xn+2, Xn+1, Xn, … is a martingale. The definition of a reverse martingale can be generalized to continuous time by the same procedure as a martingale.

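Zero mean martingales will be particularly useful in the proofs that follow. Just as the variance of a zero mean random variable equals its second moment, the variance of a zero mean martingale M with M(0) = 0 equals the expected value of its optional variation process and the expected value of its predictable variation process: Var(M(t)) = E([M](t)) = E(⟨M⟩(t)). Also, the stochastic integral of a predictable process H with respect to a zero mean martingale is again a zero mean martingale. Informally, we can see this property by examining a discrete approximation to the integral with [0, t] divided into n subintervals with endpoints s_k = kt/n,

$$\int_0^t H(s)\,dM(s) \approx \sum_{k=1}^{n} H(s_{k-1})\left[M(s_k) - M(s_{k-1})\right]. \qquad (A.5)$$

Then, because H(s_{k−1}) is determined by ℱ_{s_{k−1}},

$$E\left(\sum_{k=1}^{n} H(s_{k-1})\left[M(s_k) - M(s_{k-1})\right]\right) = \sum_{k=1}^{n} E\left(H(s_{k-1})\,E\left[M(s_k) - M(s_{k-1}) \mid \mathcal{F}_{s_{k-1}}\right]\right) = 0. \qquad (A.6)$$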
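The gambling picture and the discrete approximation in (A.5) and (A.6) can be simulated directly. In this sketch (all settings hypothetical), each game pays a standard normal outcome times a stake chosen from the past only, so the fortune is a discrete stochastic integral of a predictable process. Its mean should stay near zero, and its second moment should match the expected predictable and optional variations.

```python
import numpy as np

rng = np.random.default_rng(11)
reps, n_games = 100_000, 20
fortune = np.zeros(reps)        # M_n = sum of stake_k * outcome_k
predictable = np.zeros(reps)    # <M>_n: sum of E[(increment)^2 | past] = sum of stake^2
optional = np.zeros(reps)       # [M]_n: sum of realized (increment)^2
for _ in range(n_games):
    stake = np.where(fortune > 0, 1.5, 1.0)   # predictable: uses only past results
    outcome = rng.standard_normal(reps)       # fair game: mean 0, variance 1
    increment = stake * outcome
    predictable += stake ** 2
    optional += increment ** 2
    fortune += increment

print(fortune.mean(), (fortune ** 2).mean(), predictable.mean(), optional.mean())
```

With ±1 outcomes the two variation processes would coincide path by path; normal outcomes make the distinction visible while leaving their expectations equal.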

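Aalen et al. (2008) also give the predictable and optional variation processes of the integral of a predictable process, here H(t), with respect to a counting process martingale M with intensity λ(t) and counting process N(t),

$$\left\langle \int H\,dM \right\rangle(t) = \int_0^t H(s)^2\,\lambda(s)\,ds, \qquad (A.7)$$

$$\left[ \int H\,dM \right](t) = \int_0^t H(s)^2\,dN(s). \qquad (A.8)$$

Furthermore, if M1, …, Mk are orthogonal martingales (i.e., for all i ≠ j, 〈Mi, Mj〉 = 0) with intensities λj and counting processes Nj, then,

$$\left\langle \sum_{j=1}^{k} \int H_j\,dM_j \right\rangle(t) = \sum_{j=1}^{k} \int_0^t H_j(s)^2\,\lambda_j(s)\,ds, \qquad (A.9)$$

$$\left[ \sum_{j=1}^{k} \int H_j\,dM_j \right](t) = \sum_{j=1}^{k} \int_0^t H_j(s)^2\,dN_j(s). \qquad (A.10)$$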

From the reverse-time relationship, the same properties hold for reverse martingales, with the lower bound of integration set to t > 0 and the upper bound set to ∞ or tmax.

We will make use of the martingale central limit theorem for the proofs involving limit distributions of the estimators. This theorem states that, under certain assumptions, a sequence of martingales converges in distribution to a Gaussian martingale. There are various versions of the theorem that require various conditions. For our purposes, we will use the condition that there exists some positive function y(s) such that sup_{s∈[0,t]} |Y^(n)(s)/n − y(s)| converges in probability to 0, where Y^(n)(s) is as defined above (the number of responses that have not occurred before time s) when n response times are observed. With the additional assumption that the cumulative hazard function is finite on [0, t], this is sufficient for the martingale central limit theorem to hold: √n(Ĥ − H) converges in distribution to a zero mean Gaussian martingale with covariance function

$$\operatorname{cov}(s_1, s_2) = \int_0^{s_1} \frac{h(s)}{y(s)}\,ds,$$

assuming s1 is the smaller of s1 and s2 (Andersen et al., 1993, pp. 83–84). In our applications, y(s) is the survivor function of the response time random variable.
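For exponential response times with rate λ, y(s) = e^{−λs} and the limiting variance at time t is ∫_0^t λ e^{λs} ds = e^{λt} − 1. The simulation below is a sketch under hypothetical settings (λ = 1, n = 400, t = 1) that checks the sample variance of √n(Ĥ(t) − H(t)) against that value.

```python
import numpy as np

rng = np.random.default_rng(5)
rate, n, t, reps = 1.0, 400, 1.0, 2000          # hypothetical settings
rts = np.sort(rng.exponential(scale=1 / rate, size=(reps, n)), axis=1)
at_risk = n - np.arange(n)                      # Y(T_(j)) within each sorted sample
h_hat = np.where(rts <= t, 1.0 / at_risk, 0.0).sum(axis=1)   # Nelson-Aalen H_hat(t)
scaled = np.sqrt(n) * (h_hat - rate * t)        # sqrt(n) * (H_hat(t) - H(t))
# Limit variance: integral_0^t h(s) / y(s) ds with y(s) = exp(-rate * s).
limit_var = np.exp(rate * t) - 1
print(scaled.mean(), scaled.var(), limit_var)
```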

We will also need an analogous theorem for reverse martingales for the theory of cumulative reverse hazard functions. With cumulative reverse hazard functions, we can no longer assume |K(0)| < ∞, but we can assume lim_{t→∞} K(t) = 0. Thus, in place of the conditions on y(t), we will need a function g(t) such that sup_{s∈[t,tmax]} |G^(n)(s)/n − g(s)| converges in probability to 0. Furthermore, the covariance function of the limit distribution, cov(s1, s2), will depend on the larger of s1 and s2. In this case, g(s) is the cumulative distribution function of the response time random variable. Thus, if we additionally assume that |K(t)| < ∞ when t > 0, the distribution of the processes in the limit as the number of samples increases is again a Gaussian martingale (Loynes, 1969).
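By analogy with the forward case, the limiting variance of √n(K̂(t) − K(t)) would be ∫_t^∞ k(s)/g(s) ds. With k = f/F and g = F this is ∫_t^∞ f(s)/F(s)² ds = 1/F(t) − 1 for any continuous response time distribution. This closed form is our own derivation under that analogy, not a formula stated in the text; the sketch below checks it by simulation with hypothetical rate-1 exponential response times, estimating K(t) = ln F(t) with the reverse-time estimator sketched earlier.

```python
import numpy as np

rng = np.random.default_rng(6)
n, t, reps = 400, 1.0, 2000                     # hypothetical settings, rate-1 exponential RTs
rts = np.sort(rng.exponential(scale=1.0, size=(reps, n)), axis=1)
at_risk = np.arange(1, n + 1)                   # G(T_(j)) within each sorted sample
k_hat = -np.where(rts > t, 1.0 / at_risk, 0.0).sum(axis=1)   # reverse NA estimate of K(t)
F_t = 1 - np.exp(-t)
scaled = np.sqrt(n) * (k_hat - np.log(F_t))     # sqrt(n) * (K_hat(t) - K(t))
limit_var = 1 / F_t - 1                         # integral_t^inf f(s) / F(s)^2 ds
print(scaled.mean(), scaled.var(), limit_var)
```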

A.3. Proofs of theorems in the text

A.3.1. The cumulative reverse hazard function

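As a reminder,

$$\hat{K}(t) = -\int_t^{t_{max}} \frac{Q(s)}{G(s)}\,d\bar{N}(s), \qquad (A.11)$$

where Q(s) = I{G(s) > 0} and Q(s)/G(s) is taken to be 0 whenever G(s) = 0.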

Theorem 1. K̂(t) is an unbiased estimator of K*(t).

Proof. This can be derived in the same manner that Ĥ is shown to be unbiased in Aalen et al. (2008, pp. 87–88), but with time reversed. Instead of the counting process N(t) defined in Eq. (12), we use a reversed counting process N̄(t), which is 0 at some tmax and increases as t decreases. We represent the intensity of this process as λ̄(t) = k(t)G(t), where k(t) is the reverse hazard function at time t, and let M̄(t) be the reverse martingale M̄(t) = N̄(t) − ∫_t^{tmax} k(s)G(s) ds. Then, the increments of this process can be written informally as

$$d\bar{N}(t) = k(t)G(t)\,dt + d\bar{M}(t).$$

Let Q(t) = I{G(t) > 0}, with Q(t)/G(t) = 0 whenever G(t) = 0. Multiplying both sides by Q(t)/G(t),

$$\frac{Q(t)}{G(t)}\,d\bar{N}(t) = Q(t)k(t)\,dt + \frac{Q(t)}{G(t)}\,d\bar{M}(t).$$

Integrating from t to tmax and multiplying through by −1, we have

$$-\int_t^{t_{max}} \frac{Q(s)}{G(s)}\,d\bar{N}(s) = -\int_t^{t_{max}} Q(s)k(s)\,ds - \int_t^{t_{max}} \frac{Q(s)}{G(s)}\,d\bar{M}(s).$$

The left-hand side is K̂(t), the first term on the right is K*(t), and the final term is a zero mean reverse martingale. Hence, K̂(t) is an unbiased estimator of K*(t).

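Theorem 2. An unbiased estimate of the variance of K̂(t) is given by,

$$\widehat{\operatorname{Var}}\left(\hat{K}(t)\right) = \int_t^{t_{max}} \frac{Q(s)}{G(s)^2}\,d\bar{N}(s). \qquad (A.12)$$

Proof. From Theorem 1, K̂(t) − K*(t) is a zero mean reverse martingale. Thus,

$$\operatorname{Var}\left(\hat{K}(t) - K^*(t)\right) = E\left(\left[\hat{K} - K^*\right](t)\right).$$

The difference is the integral −∫_t^{tmax} Q(s)/G(s) dM̄(s) with respect to a zero mean martingale with time reversed, so its optional variation process is

$$\left[\hat{K} - K^*\right](t) = \int_t^{t_{max}} \frac{Q(s)}{G(s)^2}\,d\bar{N}(s).$$

Hence,

$$\operatorname{Var}\left(\hat{K}(t) - K^*(t)\right) = E\left(\int_t^{t_{max}} \frac{Q(s)}{G(s)^2}\,d\bar{N}(s)\right).$$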
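Theorem 2 can be checked by simulation even for small samples, where asymptotic approximations are less reliable. This sketch uses hypothetical rate-1 exponential response times with n = 50; with these settings G(t) > 0 with overwhelming probability, so Q = 1 on [t, tmax] and K* coincides with K. The Monte Carlo variance of K̂(t) should then agree with the average of the estimate ∑ 1/G(T_j)² over T_j > t.

```python
import numpy as np

rng = np.random.default_rng(8)
n, t, reps = 50, 1.0, 20_000                  # small hypothetical samples
rts = np.sort(rng.exponential(scale=1.0, size=(reps, n)), axis=1)
g = np.arange(1, n + 1)                       # G(T_(j)), the reverse-time at-risk count
after_t = rts > t
k_hat = -np.where(after_t, 1.0 / g, 0.0).sum(axis=1)          # K_hat(t) for each sample
var_hat = np.where(after_t, 1.0 / g ** 2, 0.0).sum(axis=1)    # integral of Q / G^2 dN-bar
print(k_hat.var(), var_hat.mean())
```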

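Lemma 1. The predictable variation process of K̂ − K* is ⟨K̂ − K*⟩(t) = ∫_t^{tmax} Q(s)k(s)/G(s) ds.

Proof. From the proof of Theorem 1,

$$\hat{K}(t) - K^*(t) = -\int_t^{t_{max}} \frac{Q(s)}{G(s)}\,d\bar{M}(s). \qquad (A.13)$$

Thus, the predictable variation process is (cf. Andersen et al., 1993, p. 71),

$$\left\langle \hat{K} - K^* \right\rangle(t) = \int_t^{t_{max}} \left(\frac{Q(s)}{G(s)}\right)^2 k(s)G(s)\,ds = \int_t^{t_{max}} \frac{Q(s)\,k(s)}{G(s)}\,ds. \qquad (A.14)$$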

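Theorem 3. K̂(t) is a uniformly consistent estimator of K(t).

Proof. By Lenglart’s Inequality (e.g., Andersen et al., 1993, p. 86) and Lemma 1, for any positive ε and δ,

$$P\left(\sup_{s\in[t,t_{max}]} \left|\hat{K}(s) - K^*(s)\right| > \varepsilon\right) \le \frac{\delta}{\varepsilon^2} + P\left(\int_t^{t_{max}} \frac{Q^{(n)}(s)k(s)}{G^{(n)}(s)}\,ds > \delta\right).$$

For each s ∈ [t, tmax], there is some positive probability of observing a response at or before s. Hence, as n → ∞, there will be infinitely many responses observed at or before t, so G^(n)(t) → ∞ in probability. Because Q^(n)(s) ≤ 1, k(s) < ∞, and G^(n)(s) ≥ G^(n)(t) for s ≥ t,

$$\int_t^{t_{max}} \frac{Q^{(n)}(s)k(s)}{G^{(n)}(s)}\,ds \le \frac{1}{G^{(n)}(t)}\int_t^{t_{max}} k(s)\,ds \xrightarrow{P} 0.$$

This implies that for any positive δ,

$$\lim_{n\to\infty} P\left(\int_t^{t_{max}} \frac{Q^{(n)}(s)k(s)}{G^{(n)}(s)}\,ds > \delta\right) = 0.$$

And thus, because δ can be made arbitrarily small, for any positive ε,

$$\lim_{n\to\infty} P\left(\sup_{s\in[t,t_{max}]} \left|\hat{K}(s) - K^*(s)\right| > \varepsilon\right) = 0.$$

Additionally, because Q^(n)(s) → 1 in probability for all s ∈ [t, tmax],

$$\sup_{s\in[t,t_{max}]} \left|K^*(s) - K(s)\right| \le \int_t^{t_{max}} \left(1 - Q^{(n)}(s)\right)k(s)\,ds \xrightarrow{P} 0.$$

Therefore, sup_{s∈[t,tmax]} |K̂(s) − K(s)| converges in probability to 0.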
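Uniform consistency can be illustrated by evaluating K̂ on a fine grid over [t, tmax] and watching the largest absolute error shrink as n grows. In this sketch the interval [0.5, 5], the rate-1 exponential response times, and the helper `k_hat_on_grid` are all hypothetical choices; the maximum over a finite grid is only a lower bound on the supremum over the interval.

```python
import numpy as np

def k_hat_on_grid(rts, grid):
    """Reverse Nelson-Aalen K_hat(s) = -(sum of 1/G(T_j) over T_j > s) at each grid point."""
    rts = np.sort(np.asarray(rts, dtype=float))
    w = 1.0 / np.arange(1, rts.size + 1)                     # 1 / G(T_(j))
    tail = np.append(np.cumsum(w[::-1])[::-1], 0.0)         # tail[i] = sum of w[j] for j >= i
    return -tail[np.searchsorted(rts, grid, side="right")]  # index of first T_(j) > s

rng = np.random.default_rng(9)
grid = np.linspace(0.5, 5.0, 500)              # hypothetical [t, t_max]
true_k = np.log(1 - np.exp(-grid))             # K(s) = ln F(s) for rate-1 exponential RTs
sup_error = {n: np.max(np.abs(k_hat_on_grid(rng.exponential(size=n), grid) - true_k))
             for n in (100, 1_000, 10_000)}
print(sup_error)
```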

Theorem 4. √n(K̂(t) − K(t)) converges in distribution to a zero mean Gaussian process as the number of response times used in the estimate increases.

Proof. In the limit as the number of samples n increases, for any t > 0, Q(t) = 0 only when k(t) = 0, so it is sufficient to demonstrate the convergence of √n(K̂(t) − K*(t)). First, we must satisfy the condition that there exists some g(t) such that sup_{t∈[τ,tmax]} |G(t)/n − g(t)| converges in probability to 0. This follows directly from the Glivenko–Cantelli Theorem (e.g., Billingsley, 1995, p. 269) by noting that G(t)/n is the empirical cumulative distribution function. Then we may apply the reverse martingale central limit theorem, and the conclusion follows.

A.3.2. Estimating UCIP performance

As a reminder,

| (A.15) |

| (A.16) |

| (A.17) |

Theorem 5. ĤUCIP(t) is an unbiased estimator of .

Proof.

Theorem 6. An unbiased estimate of the variance of ĤUCIP(t) is given by,

| (A.18) |

Proof. To determine the variance, we use the optional variation of the martingale. Assuming that the counting processes for each process are independent, we have the following relation (e.g., Aalen et al., 2008, p. 56),

Because, under the null hypothesis, is a zero mean martingale,

Theorem 7. ĤUCIP(t) is a uniformly consistent estimator of HUCIP(t).

Proof. Suppose ni is the number of response times used to estimate Ĥi(t). For each i, Ĥi(t) is a consistent estimator of Hi(t), so

Then,

Theorem 8. Let n = ∑_i ni, where ni is the number of response times used to estimate the cumulative hazard function of the completion time of the ith channel. Then √n(ĤUCIP(t) − HUCIP(t)) converges in distribution to a zero mean Gaussian process as the number of response times used in the estimate increases.

Proof. For the distribution to converge, Yi/n must converge in probability to some positive function yi(t) for all i. This happens as long as the proportions ni/n converge to fixed proportions pi, in which case yi(t) = pi(1 − Fi(t)). Then, we have as the limit distribution a Gaussian process with mean 0 and covariance,

$$\operatorname{cov}(s_1, s_2) = \sum_i \int_0^{\min(s_1, s_2)} \frac{h_i(s)}{y_i(s)}\,ds.$$

Theorem 9. K̂UCIP(t) is an unbiased estimator of .

Proof.

Theorem 10. An unbiased estimate of the variance of K̂UCIP(t) is given by,

| (A.19) |

Proof. By Theorem 9, is a zero mean martingale so,

Theorem 11. K̂UCIP is a uniformly consistent estimator of KUCIP.

Proof. Suppose ni is the number of response times used to estimate K̂i. For each i, K̂i is a consistent estimator of Ki, so

Then,

Theorem 12. √n(K̂UCIP(t) − KUCIP(t)) converges in distribution to a zero mean Gaussian process as the number of response times used in the estimate increases.

Proof. Let n = ∑_i ni, where ni is the number of response times used to estimate the cumulative reverse hazard function of the completion time of the ith channel. For the distribution to converge, Gi/n must converge in probability to some positive function gi(t) for all i. This happens as long as the proportions ni/n converge to fixed proportions pi, in which case gi(t) = pi Fi(t). Then, we have as the limit distribution a Gaussian process with mean 0 and covariance,

$$\operatorname{cov}(s_1, s_2) = \sum_i \int_{\max(s_1, s_2)}^{t_{max}} \frac{k_i(s)}{g_i(s)}\,ds. \qquad (A.20)$$

A.3.3. Hypothesis testing

Lemma 2. Under the null hypothesis of UCIP performance,

Proof.

Theorem 13. Under the null hypothesis of UCIP performance, E (ZOR) = 0.

Proof. According to Lemma 2, ZOR is the difference of two integrals with respect to zero mean martingales. The expectation of an integral with respect to a zero mean martingale is zero. Hence, the conclusion follows from the linearity of the expectation operator.

Theorem 14. An unbiased estimate of the variance of ZOR is given by,

Proof.

Theorem 15. Assume that there exist sequences of constants {an} and {cn} such that,

Further, assume that there exists some function d(s) such that for all δ > 0,

Then (an/cn)ZOR(n) converges in distribution to a zero mean Gaussian process as the number of response times used in the estimate increases.

Proof. Here we use the version of the martingale central limit theorem as stated in Andersen et al. (1993, Section 2.23). First, we must show that the variance process converges to a deterministic process.

Hence, from the assumptions and by the dominated convergence theorem,

The second condition holds because for all s ∈ [0, t],

Therefore, the conclusion follows from the martingale central limit theorem.

Lemma 3. Under the null hypothesis of UCIP performance,

Proof.

Theorem 16. Under the null hypothesis of UCIP performance, E (ZAND) = 0.

Proof. According to Lemma 3, ZAND is the difference of two integrals with respect to zero mean reverse martingales. The expectation of an integral with respect to a zero mean reverse martingale is zero. Hence, the conclusion follows from the linearity of the expectation operator.

Theorem 17. An unbiased estimate of the variance of ZAND is given by,

Proof.

Theorem 18. Assume that there exist sequences of constants {an} and {cn} such that,

Further, assume that there exists some function d(s) such that for all δ > 0,

Then (an/cn)ZAND(n) converges in distribution to a zero mean Gaussian process as the number of response times used in the estimate increases.

Proof.

Hence, from the assumptions and by the dominated convergence theorem,

The second condition holds because for all s ∈ [t, tmax],

Therefore, the conclusion follows from the reverse martingale central limit theorem.

Footnotes

Usually, response times are assumed to include some extra time that is not stimulus dependent, such as the time involved in pressing a response key once the participant has chosen a response. This is often referred to as base time. We do not treat the effects of base time in this work. Townsend and Honey (2007) show that base time makes little difference for the capacity coefficient when the assumed variance of the base time is within a reasonable range.

See Appendix A.1 for details.

The optional variation process is also known as the “quadratic variation process”. We use the notation [M] to indicate the optional variation process of a martingale M.

References

- Aalen, Andersen, et al. History of applications of martingales in survival analysis. Electronic Journal for History of Probability and Statistics. 2009;5(1).

- Aalen OO, Borgan Ø, Gjessing HK. Survival and event history analysis: a process point of view. New York: Springer; 2008.

- Andersen PK, Borgan Ø, Gill RD, Keiding N. Statistical models based on counting processes. New York: Springer; 1993.

- Billingsley P. Probability and measure. 3rd ed. New York: Wiley; 1995.

- Blaha LM. A dynamic Hebbian-style model of configural learning [Ph.D. thesis]. Bloomington, Indiana: Indiana University; 2010.

- Brown SD, Heathcote A. The simplest complete model of choice response time: linear ballistic accumulation. Cognitive Psychology. 2008;57:153–178. doi: 10.1016/j.cogpsych.2007.12.002.

- Chechile RA. Mathematical tools for hazard function analysis. Journal of Mathematical Psychology. 2003;47:478–494.

- Chechile RA. Properties of reverse hazard functions. Journal of Mathematical Psychology. 2011;55:203–222.

- Eidels A, Donkin C, Brown S, Heathcote A. Converging measures of workload capacity. Psychonomic Bulletin & Review. 2010;17:763–771. doi: 10.3758/PBR.17.6.763.

- Eidels A, Townsend JT, Algom D. Comparing perception of stroop stimuli in focused versus divided attention paradigms: evidence for dramatic processing differences. Cognition. 2010;114:129–150. doi: 10.1016/j.cognition.2009.08.008.

- Eidels A, Townsend JT, Hughes HC, Perry LA. Complementary relationship between response times, response accuracy, and task requirements in a parallel processing system. Manuscript submitted for publication; 2011.

- Fiedler A, Schröter H, Ulrich R. Coactive processing of dimensionally redundant targets within the auditory modality? Journal of Experimental Psychology. 2011;58:50–54. doi: 10.1027/1618-3169/a000065.

- Gondan M, Riehl V, Blurton SP. Showing that the race model inequality is not violated. Behavior Research Methods. 2012;44:248–255. doi: 10.3758/s13428-011-0147-z.

- Harrington DP, Fleming TR. A class of rank test procedures for censored survival data. Biometrika. 1982;69:133–143.

- Hess K, Gentleman R. Muhaz: hazard function estimation in survival analysis. S original by Kenneth Hess and R port by R Gentleman; 2010.

- Houpt JW, Townsend JT. The statistical properties of the survivor interaction contrast. Journal of Mathematical Psychology. 2010;54:446–453.

- Hugenschmidt CE, Hayasaka S, Peiffer AM, Laurienti PJ. Applying capacity analyses to psychophysical evaluation of multisensory interactions. Information Fusion. 2010;11:12–20. doi: 10.1016/j.inffus.2009.04.004.

- Krummenacher J, Grubert A, Müller HJ. Inter-trial and redundant-signals effects in visual search and discrimination tasks: separable pre-attentive and post-selective effects. Vision Research. 2010;50:1382–1395. doi: 10.1016/j.visres.2010.04.006.

- Kruschke JK. What to believe: Bayesian methods for data analysis. Trends in Cognitive Sciences. 2010;14:293–300. doi: 10.1016/j.tics.2010.05.001.

- Loynes RM. The central limit theorem for backwards martingales. Probability Theory and Related Fields. 1969;13:1–8.

- Mantel N. Evaluation of survival data and two new rank order statistics arising in its consideration. Cancer Chemotherapy Reports. 1966;50:163–170.

- Maris G, Maris E. Testing the race model inequality: a nonparametric approach. Journal of Mathematical Psychology. 2003;47:507–514.

- Miller J. Divided attention: evidence for coactivation with redundant signals. Cognitive Psychology. 1982;14:247–279. doi: 10.1016/0010-0285(82)90010-x.

- R Development Core Team. R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2011. ISBN: 3-900051-07-0.

- Rach S, Diederich A, Colonius H. On quantifying multisensory interaction effects in reaction time and detection rate. Psychological Research. 2011;75:77–94. doi: 10.1007/s00426-010-0289-0.

- Richards HJ, Hadwin JA, Benson V, Wenger MJ, Donnelly N. The influence of anxiety on processing capacity for threat detection. Psychonomic Bulletin and Review. 2011;18:883–889. doi: 10.3758/s13423-011-0124-7.

- Rickard TC, Bajic D. Memory retrieval given two independent cues: cue selection or parallel access? Cognitive Psychology. 2004;48:243–294. doi: 10.1016/j.cogpsych.2003.07.002.

- Scharf A, Palmer J, Moore CM. Evidence of fixed capacity in visual object categorization. Psychonomic Bulletin and Review. 2011;18:713–721. doi: 10.3758/s13423-011-0101-1.

- Townsend JT, Ashby FG. The stochastic modeling of elementary psychological processes. Cambridge: Cambridge University Press; 1983.

- Townsend JT, Eidels A. Workload capacity spaces: a unified methodology for response time measures of efficiency as workload is varied. Psychonomic Bulletin and Review. 2011;18:659–681. doi: 10.3758/s13423-011-0106-9.

- Townsend JT, Honey CJ. Consequences of base time for redundant signals experiments. Journal of Mathematical Psychology. 2007;51:242–265. doi: 10.1016/j.jmp.2007.01.001.

- Townsend JT, Nozawa G. Spatio-temporal properties of elementary perception: an investigation of parallel, serial and coactive theories. Journal of Mathematical Psychology. 1995;39:321–360.

- Townsend JT, Wenger MJ. A theory of interactive parallel processing: new capacity measures and predictions for a response time inequality series. Psychological Review. 2004;111:1003–1035. doi: 10.1037/0033-295X.111.4.1003.

- Ulrich R, Miller J, Schröter H. Testing the race model inequality: an algorithm and computer programs. Behavior Research Methods. 2007;39:291–302. doi: 10.3758/bf03193160.

- Van Zandt T. Analysis of response time distributions. In: Wixted JT, Pashler H, editors. Stevens’ handbook of experimental psychology. 3rd ed. Vol. 4. New York: Wiley Press; 2002. pp. 461–516.

- Veldhuizen MG, Shepard TG, Wang M-F, Marks LE. Coactivation of gustatory and olfactory signals in flavor perception. Chemical Senses. 2010;35:121–133. doi: 10.1093/chemse/bjp089.

- Weidner R, Muller HJ. Dimensional weighting of primary and secondary target-defining dimensions in visual search for singleton conjunction targets. Psychological Research. 2010;73:198–211. doi: 10.1007/s00426-008-0208-9.