Abstract

In April 2006, Massachusetts passed legislation aimed at achieving near-universal health insurance coverage. Key features of this legislation served as a model for the national health reform passed in March 2010. The reform gives us a novel opportunity to examine the impact of a state-wide expansion to near-universal coverage. Among hospital discharges in Massachusetts, we find that the reform decreased uninsurance, relative to other states, by 36% of its initial level. Reform affected utilization by decreasing length of stay, the number of inpatient admissions originating from the emergency room, and preventable admissions. At the same time, hospital cost growth did not increase.

Keywords: health, health care, health reform, insurance, hospitals, Massachusetts, preventive care

1. Introduction

In April 2006, the state of Massachusetts passed legislation aimed at achieving near-universal health insurance coverage. This legislation has been considered by many to be a model for the national health reform legislation passed in March 2010. In light of both reforms, it is of great policy importance to understand the impact of an expansion in coverage to near-universal levels, which is unprecedented in the United States. In theory, insurance coverage could increase or decrease the intensity and cost of health care, depending on the underlying demand for care and its impact on health care delivery. Which effect dominates in practice is an empirical question.

Although previous researchers have studied the impact of expansions in health insurance coverage, these studies have focused on specific subpopulations – the indigent, children, and the elderly (see e.g. Currie and Gruber (1996); Finkelstein (2007); Card et al. (2008); Finkelstein et al. (2012)). The Massachusetts reform gives us a novel opportunity to examine the impact of a policy that achieved near-universal health insurance coverage among the entire state population. Furthermore, the magnitude of the expansion in coverage after the Massachusetts reform is similar to the predicted magnitude of the coverage expansion in the national reform. In this paper, we are the first to use hospital data to examine the impact of this legislation on insurance coverage, patient outcomes, and utilization patterns in Massachusetts. We use a difference-in-differences strategy that compares Massachusetts after the reform to Massachusetts before the reform and to other states.

The first question we address is whether the Massachusetts reform resulted in reductions in uninsurance. We consider overall changes in coverage as well as changes in the composition of types of coverage among the entire state population and the population who were hospitalized. One potential impact of expansions in publicly subsidized coverage is to crowd-out private insurance (Cutler and Gruber (1996)). The impact of the reform on the composition of coverage allows us to consider crowd-out in the population as a whole as well as among those in the inpatient setting.

After estimating changes in the presence and composition of coverage, we turn to the impact of the reform on hospital and preventive care. We first study the intensity of care provided. Because health insurance lowers the price of health care services to consumers, a large-scale expansion in coverage has the potential to increase demand for health care services, the intensity of treatment, and cost. Potentially magnifying this effect are general equilibrium shifts in the way care is supplied due to the large magnitude of the expansion (Finkelstein (2007)). Countervailing this effect is the monopsonistic role of insurance plans in setting prices and quantities for hospital services. To the extent that health reform altered the negotiating position of insurers vis-à-vis hospitals, expansions in coverage could actually reduce the supply of services, the intensity of treatment, or costs. Furthermore, the existence of insurance itself can alter the provision of care in the hospital directly (e.g. substitution towards services that are reimbursed). Without coverage, patients could face barriers to receiving follow-up treatment (typically dispensed in an outpatient setting or as drug prescriptions), potentially reducing the efficacy of the inpatient care they receive or altering the length of time they stay in the hospital. Achieving near-universal insurance could alter length of stay and other measures of service intensity through physical limits on the number of beds in the hospital, efforts to increase throughput in response to changes in profitability, or changes in care provided when physicians face a pool of patients with more homogeneous coverage (Glied and Zivin (2002)). Given these competing hypotheses, expanded insurance coverage could raise or lower the intensity of care provided.

In addition to changes in the production process within a hospital, we are interested in the impact of insurance coverage on how patients enter the health care system and access preventive care. We first examine changes in the use of the emergency room (ER) as a point of entry for inpatient care. Because hospitals must provide at least some care, regardless of insurance status, the ER is a potentially important point of access to hospital care for the uninsured.1 When the ER is the primary point of entry into the hospital, changes in admissions from the ER can impact welfare for a variety of reasons. First, the cost of treating patients in the ER is likely higher than the cost of treating the same patient in another setting. Second, the emergency room is designed to treat acute health events. If the ER is a patient’s primary point of care, then he might not receive preventive care that could mitigate future severe and costly health events. To the extent that uninsurance led people to use the ER as a point of entry for treatment that they otherwise would have sought through another channel, we expect to see a decline in the number of inpatient admissions originating in the ER.

We also study the impact of insurance on access to care outside of the inpatient setting. Using a methodology developed by the Agency for Healthcare Research and Quality (AHRQ), we are able to study preventive care in an outpatient setting using inpatient data. We identify inpatient admissions that should not occur in the presence of sufficient preventive care. If the reform facilitated increased preventive care, then we expect a reduction in the number of inpatient admissions meeting these criteria. These measures also serve as an indirect measure of health, in the form of averted hospitalizations. We augment this analysis with data on direct measures of access to and use of outpatient and preventive care.

Finally, we turn to the impact of the reform on the cost of hospital care. We examine hospital-level measures of operating costs (e.g. overhead, salaries, and equipment) that include both fixed and variable costs. This allows us to jointly measure the direct effect of insurance on cost (the relative effect of changing the out-of-pocket price) as well as the potential for quality competition at the hospital level. In the latter case, hospitals facing consumers who are relatively less price elastic (or more quality elastic) increase use of costly services and may also increase their use of variable inputs and their investments in large capital projects in order to attract price-insensitive customers (Dranove and Satterthwaite (1992)). In the extreme, large expansions in coverage might lead to a so-called “medical arms race,” in which hospitals make investments in large capital projects to attract customers and are subsequently able to increase demand to cover these fixed costs (Robinson and Luft (1987)). The combined impact of all of these effects would be increased hospital costs as coverage approaches near-universal levels.

Our analysis relies on three main data sets. To examine the impact on coverage in Massachusetts as a whole, we analyze data from the Current Population Survey (CPS). To examine coverage among the hospitalized population, health care utilization, and preventive care, we analyze the universe of hospital discharges from a nationally representative sample of approximately 20 percent of hospitals in the United States from the Healthcare Cost and Utilization Project (HCUP) Nationwide Inpatient Sample (NIS). In addition, we use the Behavioral Risk Factor Surveillance System (BRFSS) data to augment our study of access and preventive care.

We find a variety of results pointing to an impact of the Massachusetts reform on insurance coverage, hospital care, and preventive care. First, we find a significant reduction in uninsurance both in the general population and among those who are hospitalized. In the population as a whole, we find that uninsurance declined by roughly 6 percentage points, or by about 50% of its initial level. This decline came primarily through increased coverage by employer-sponsored health insurance (ESHI), which accounted for nearly half the change, and secondarily through Medicaid and newly subsidized coverage available through the Massachusetts Connector. Turning to hospital and preventive care, we find that length of stay in the hospital fell significantly, particularly for long hospital stays. We also find a significant reduction in emergency room utilization that resulted in an inpatient admission: admissions originating in the ER declined by 5.2%. The impact on ER utilization was largest in poorer geographic areas. Our results provide mixed evidence for a decline in preventable admissions to the hospital. Without including covariates that capture patient severity, we find limited evidence that the reform reduced preventable admissions. However, in a specification that controls for patient severity, we find clear evidence that preventable admissions were reduced. Finally, we find little evidence that the Massachusetts reform affected hospital cost growth. Massachusetts hospital costs appear to have been growing faster than those in the remainder of the country prior to reform and to have continued on the same trajectory.
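As a check on the relative changes quoted here and in the abstract, the short Python sketch below divides a difference-in-differences coefficient by the pre-reform Massachusetts mean of the same outcome, using the estimates reported in Table 1. We assume this is how the percentages are computed; the helper `relative_effect` is our own illustration, not code from the study.

```python
# Sketch: express a difference-in-differences coefficient as a percent of the
# pre-reform Massachusetts mean. Inputs are copied from Table 1.

def relative_effect(coef: float, baseline_mean: float) -> float:
    """Return the DiD coefficient as a percentage of the baseline mean."""
    if baseline_mean == 0:
        raise ValueError("baseline mean must be nonzero")
    return 100.0 * coef / baseline_mean


# (MA*After coefficient, mean MA Before) from Table 1, columns (1) and (10)
table1 = {
    "uninsured": (-0.0231, 0.0643),
    "emergency_admit": (-0.0202, 0.3868),
}

for outcome, (coef, base) in table1.items():
    print(f"{outcome}: {relative_effect(coef, base):.1f}%")
```

The two results, about −35.9% and −5.2%, line up with the 36% reduction in uninsurance among discharges and the 5.2% decline in ER-originated admissions.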

In the next section, we describe the elements of the reform and its implementation, as well as the limited existing research on its impact. In the third section, we describe the data. In the fourth section, we present the difference-in-differences results for the impact of the reform on insurance coverage and hospital and preventive care. In the fifth section, we discuss the implications of our findings for national reform. In the sixth section, we conclude and discuss our continuing work in this area.

2. Description of the Reform

The recent Massachusetts health insurance legislation, Chapter 58, included several features, the most salient of which was a mandate for individuals to obtain health insurance coverage or pay a tax penalty. All individuals were required to obtain coverage, with the exception of individuals with religious objections and individuals whose incomes were too high to qualify for state health insurance subsidies but too low for health insurance to be “affordable,” as determined by the Massachusetts Health Insurance Connector Authority. For a broad summary of the reform, see McDonough et al. (2006); for details on the implementation of the reform see The Massachusetts Health Insurance Connector Authority (2008).

The reform also extended free and subsidized health insurance to low income populations in two forms: expansions in the existing Medicaid program (called “MassHealth” in Massachusetts), and the launch of a new program called CommCare. First, as part of the Medicaid expansion, the reform expanded Medicaid eligibility for children to 300 percent of poverty, and it restored benefits to special populations who had lost coverage during the 2002–2003 fiscal crisis, such as the long-term unemployed. The reform also facilitated outreach efforts to Medicaid-eligible individuals and families. Implementation of the reform was staggered, and Medicaid changes were among the first to take effect. According to one source, “Because enrollment caps were removed from one Medicaid program and income eligibility was raised for two others, tens of thousands of the uninsured were newly enrolled just ten weeks after the law was signed” (Kingsdale (2009), page w591).

Second, the reform extended free and subsidized coverage through a new program called CommCare. CommCare offered free coverage to individuals up to 150 percent of poverty and three tiers of subsidized coverage up to 300 percent of poverty. CommCare plans were sold through a new, state-run health insurance exchange.

In addition, the reform created a new online health insurance marketplace called the Connector, where individuals who did not qualify for free or subsidized coverage could purchase health insurance coverage. Unsubsidized CommChoice plans available through the Connector from several health insurers offered three regulated levels of coverage – bronze, silver, and gold. Young Adult plans with fewer benefits were also made available to individuals age 26 and younger. Individuals were also free to continue purchasing health insurance through their employers or to purchase health insurance directly from insurers.

The reform also implemented changes in the broader health insurance market. It merged the individual and small group health insurance markets. Existing community rating regulations, which required premiums to be set regardless of certain beneficiary characteristics, namely age and gender, remained in place, though the reform gave insurers new authority to price policies based on smoking status. It also required all family plans to cover young adults for at least two years beyond loss of dependent status, up to age 26.

Another important aspect of the reform was an employer mandate that required employers with more than 10 full-time employees to offer health insurance to employees and contribute a certain amount to premiums or pay a penalty. The legislation allowed employers to designate the Connector as their “employer-group health benefit plan” for the purposes of federal law.

The financing for the reform came from a number of different sources. Some funding for the subsidies was financed by the dissolution of existing state uncompensated care pools.2 Addressing costs associated with the reform remains an important policy issue.

The national health reform legislation passed in March 2010 shares many features of the Massachusetts reform, including an individual mandate to obtain health insurance coverage, new requirements for employers, expansions in subsidized care, state-level health insurance marketplaces modeled on the Massachusetts Connector, and new requirements for insurers to cover dependents to age 26, to name a few. For a summary of the national legislation, see Kaiser Family Foundation (2010). Taken together, the main characteristics of the national reform bear strong similarity to those of the Massachusetts reform, and the impact of the Massachusetts reform should offer insight into the likely impact of the national reform.

As Chapter 58 was enacted recently, there has been relatively little research on its impact to date. Long (2008) presents results on the preliminary impact of the reform from surveys administered in 2006 and 2007. Yelowitz and Cannon (2010) examine the impact of the reform on coverage using data from the March 2006–2009 Supplements to the Current Population Survey (CPS), and Long et al. (2009) perform an earlier analysis using one fewer year of the same data. Long et al. (2009) and Yelowitz and Cannon (2010) find a decline in uninsurance among the population age 18 to 64 of 6.6 and 6.7 percentage points, respectively. Yelowitz and Cannon (2010) also examine changes in self-reported health status in an effort to capture the effect of the reform on health; using this measure, they find little evidence of health effects. The NIS discharge data allow us to examine utilization and health effects in much greater detail. We also rely on the CPS for preliminary analysis. Our estimates using the CPS are similar in magnitude to the prior studies, though our sample differs in that we include all individuals under age 65 and at all income levels. Our main results, however, focus on administrative data from hospitals. This builds on the existing literature by considering coverage changes specifically among those who were hospitalized, as well as by extending the analysis beyond coverage alone to focus on hospital and preventive care.

3. Description of the Data

For our main analysis, we focus on a nationally-representative sample of hospital discharges. Hospital discharge data offer several advantages over other forms of data to examine the impact of Chapter 58. First, though hospital discharge data offer only limited information on the overall population, they offer a great deal of information on a population of great policy interest – individuals who are sick. This population is most vulnerable to changes in coverage due to the fact that they are already sick, and they disproportionately come from demographic groups that are at higher risk, such as minority groups and the indigent. Inpatient care also represents a disproportionate fraction of total health care costs. Second, hospital discharge data allow us to observe the insured and the uninsured, regardless of payer, and payer information is likely to be more accurate than it is in survey data. Third, hospital discharge data allow us to examine treatment patterns and some health outcomes in great detail. In addition, relative to the CPS, hospital discharge data allow us to examine changes in medical expenditure, subject to limitations discussed below. One disadvantage of hospital discharge data relative to the CPS is that the underlying sample of individuals in our data could have changed as a result of the reform. We use many techniques to examine selection as an outcome of the reform and to control for selection in the analysis of other outcomes.

Our data are from the Healthcare Cost and Utilization Project (HCUP) Nationwide Inpatient Sample (NIS). Each year of NIS data is a stratified sample of 20 percent of United States community hospitals, designed to be nationally representative.3 The data contain the universe of all hospital discharges, regardless of payer, for each hospital in the data in each year. Because a large fraction of hospitals appear in several years of the data, we can use hospital identifiers to examine changes within hospitals over time.

We focus on the most recently available NIS data for the years 2004 to 2008. Our full sample includes a total of 36,362,108 discharges for individuals of all ages. An advantage of these data relative to the March Supplement to the CPS is that they allow us to examine the impact of the reform quarterly instead of annually. Because some aspects of the reform, such as the Medicaid expansions, were implemented immediately after passage while others were staggered, we do not want to include the period immediately following the reform in either the After or the Before period. To be conservative, we define the After period to include all observations in the third quarter of 2007 and later. The After period represents the time beginning July 1, 2007, when one of the most salient features of the reform, the individual health insurance mandate, took effect. We denote the During period as the year from 2006 Q3 through 2007 Q2, and we use this period to analyze the immediate impact of the reform before the individual mandate took effect. The Before period includes 2004 Q1 through 2006 Q2.4
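The period definitions above amount to a simple rule on calendar quarters. The Python sketch below encodes them; the function name `reform_period` and the (year, quarter) input format are our assumptions about how one might store discharge timing, not fields of the NIS itself.

```python
def reform_period(year: int, quarter: int) -> str:
    """Assign a discharge quarter to the Before, During, or After window.

    Before: 2004 Q1 through 2006 Q2
    During: 2006 Q3 through 2007 Q2
    After:  2007 Q3 onward (individual mandate effective July 1, 2007)
    """
    if quarter not in (1, 2, 3, 4):
        raise ValueError("quarter must be between 1 and 4")
    yq = (year, quarter)  # tuples compare lexicographically: year first, then quarter
    if yq <= (2006, 2):
        return "Before"
    if yq <= (2007, 2):
        return "During"
    return "After"


for yq in [(2006, 2), (2006, 3), (2007, 2), (2007, 3)]:
    print(yq, reform_period(*yq))
```

Comparing (year, quarter) tuples avoids converting quarters to dates and keeps the window boundaries readable.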

In total, from 2004 to 2008, the data cover 42 states; Alabama, Alaska, Delaware, Idaho, Mississippi, Montana, North Dakota, and New Mexico are not available in any year because they did not provide data to the NIS. The data include the universe of discharges from a total of 3,090 unique hospitals, 48 of them in Massachusetts. The unit of observation in the data and in our main analysis is the hospital discharge. To account for the stratified sampling design, we use discharge weights in all summary statistics and regressions.

4. Difference-in-Differences Empirical Results

4.1. Impact on Insurance Coverage in the Overall and Inpatient Hospital Populations

We begin by considering the issue that was the primary motivation for the Massachusetts reform – the expansion of health insurance coverage. Before focusing on inpatient hospitalizations, we place this population in the context of the general population using data from the 2004 to 2009 March Supplements to the Current Population Survey (CPS). In most of our results, we focus on the nonelderly population because the reform was geared toward the nonelderly population (elderly with coverage through Medicare were explicitly excluded from purchasing subsidized CommCare plans, but they were eligible for Medicaid expansions if they met the income eligibility criteria).5

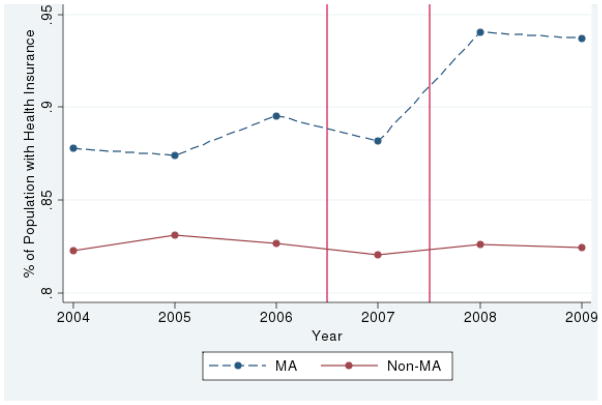

Figure 1 depicts trends in total insurance coverage of all types among the nonelderly in the CPS. The upper line shows trends in coverage in Massachusetts, and the lower line shows trends in coverage in all other states. From the upper line, it is apparent that Massachusetts started with a higher baseline level of coverage than the average among other states. The average level of coverage among the nonelderly in Massachusetts prior to the reform (2004–2006 CPS) was 88.2 percent.6 This increased to a mean coverage level of 93.8 percent in the 2008–2009 CPS.7 In contrast, the remainder of the country had relatively stable rates of nonelderly coverage: 82.7 percent pre-reform and 82.5 percent post-reform. For the entire population, including those over 65, coverage in Massachusetts went from 89.5 percent to 94.5 percent for the same periods, while the remainder of the country saw a small decline from 84.6 percent insured pre-reform to 84.4 percent insured post-reform.8

Figure 1.

Mean Coverage Rates by Year

Source: 2004–2009 March Supplements to the Current Population Survey, authors’ calculations. Vertical lines separate During and After periods.

Appendix Table A1 formalizes this comparison of means with difference-in-differences regression results from the CPS. These results suggest that the Massachusetts reform was successful in expanding health insurance coverage in the population. The estimated reduction in nonelderly uninsurance of 5.7 percentage points represents a 48 percent reduction relative to the pre-reform rate of nonelderly uninsurance in Massachusetts.9 To some, the decrease in uninsurance experienced by Massachusetts may appear small. To put this in perspective, the national reform targets a reduction in uninsurance of a similar magnitude. The Centers for Medicare and Medicaid Services, Office of the Actuary, National Health Statistics Group, predicts a decrease in uninsurance of 7.1 percentage points nationally from 2009 to 2019 (Truffer et al. (2010)).
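The 48 percent figure is the regression estimate scaled by the pre-reform Massachusetts rate. The Python sketch below, using only the group means and the MA*After coefficient from Table A1, contrasts that estimate with a raw difference-in-differences of means. It is an illustration rather than a replication: the regression also includes state and year fixed effects, so the two numbers need not coincide.

```python
# Sketch: raw difference-in-differences of the uninsured share, computed from
# the group means reported at the bottom of Table A1 (nonelderly, CPS).
means = {
    ("MA", "Before"): 0.1176,
    ("MA", "After"): 0.0612,
    ("Non-MA", "Before"): 0.1732,
    ("Non-MA", "After"): 0.1747,
}


def raw_did(m: dict) -> float:
    """(MA after - MA before) minus (non-MA after - non-MA before)."""
    ma_change = m[("MA", "After")] - m[("MA", "Before")]
    control_change = m[("Non-MA", "After")] - m[("Non-MA", "Before")]
    return ma_change - control_change


regression_estimate = -0.0571  # MA*After coefficient, Table A1 column (1)
baseline = means[("MA", "Before")]

print(f"raw DiD of means: {raw_did(means):.4f}")
print(f"regression estimate, % of MA baseline: {100 * regression_estimate / baseline:.1f}%")
```

The raw contrast comes to about −0.058, close to the regression estimate of −0.057, and the scaled estimate is roughly −48.6 percent of the pre-reform rate, consistent with the reduction of about 48 percent reported above.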

Table A1.

Insurance in CPS

Columns (1)–(6): mutually exclusive types of coverage.

| | (1) Uninsured | (2) ESHI | (3) Private Insurance Unrelated to Employment | (4) Medicaid | (5) Medicare | (6) VA/Military |
|---|---|---|---|---|---|---|
| MA × After | −0.0571 | 0.0345 | −0.0086 | 0.0351 | −0.0004 | −0.0036 |
| | [−0.0605, −0.0537]*** | [0.0283, 0.0408]*** | [−0.0106, −0.0066]*** | [0.0317, 0.0386]*** | [−0.0012, 0.0004] | [−0.0050, −0.0023]*** |
| | [−0.0604, −0.0536]+++ | [0.0286, 0.0403]+++ | [−0.0105, −0.0066]+++ | [0.0317, 0.0385]+++ | [−0.0011, 0.0004] | [−0.0051, −0.0025]+++ |
| MA × During | −0.0049 | 0.0024 | 0.0066 | −0.0005 | 0.0011 | −0.0047 |
| | [−0.0093, −0.0005]** | [−0.0016, 0.0064] | [0.0036, 0.0096]*** | [−0.0036, 0.0025] | [0.0004, 0.0019]*** | [−0.0061, −0.0034]*** |
| | [−0.0097, −0.0005]++ | [−0.0020, 0.0060] | [0.0041, 0.0102]+++ | [−0.0036, 0.0022] | [0.0004, 0.0019]+++ | [−0.006, −0.0032]+++ |
| After | 0.0007 | −0.0134 | −0.0006 | 0.0101 | 0.0023 | 0.0009 |
| | [−0.0027, 0.0042] | [−0.0197, −0.0072]*** | [−0.0026, 0.0015] | [0.0066, 0.0136]*** | [0.0015, 0.0031]*** | [−0.0004, 0.0022] |
| | [−0.0027, 0.0037] | [−0.0192, −0.0077] | [−0.0025, 0.0013]+ | [0.0068, 0.0135]+++ | [0.0016, 0.003]+++ | [−0.0003, 0.0023]+ |
| During | 0.0058 | −0.0077 | −0.0004 | 0.0023 | 0.0010 | −0.0009 |
| | [0.0014, 0.0101]** | [−0.0117, −0.0037]*** | [−0.0034, 0.0025] | [−0.0008, 0.0053] | [0.0002, 0.0018]** | [−0.0022, 0.0004] |
| | [0.0015, 0.0105]+++ | [−0.0113, −0.0033]+++ | [−0.0039, 0.0020]+ | [−0.0004, 0.0053] | [0.0003, 0.0018]+++ | [−0.0024, 0.0003]+ |
| Constant | 0.1511 | 0.6531 | 0.0459 | 0.1195 | 0.0172 | 0.0132 |
| | [0.1494, 0.1527]*** | [0.6508, 0.6555]*** | [0.0450, 0.0467]*** | [0.1180, 0.1210]*** | [0.0169, 0.0175]*** | [0.0127, 0.0137]*** |
| | [0.1164, 0.1889]+++ | [0.5922, 0.6898]+++ | [0.0449, 0.1138]+++ | [0.067, 0.1796]+++ | [0.0041, 0.0175]+++ | [0.0065, 0.0708]+++ |
| N (Nonelderly) | 1,129,221 | 1,129,221 | 1,129,221 | 1,129,221 | 1,129,221 | 1,129,221 |
| R Squared | 0.0152 | 0.0160 | 0.0030 | 0.0071 | 0.0013 | 0.0066 |
| Mean MA Before | 0.1176 | 0.7044 | 0.0534 | 0.1113 | 0.0057 | 0.0076 |
| Mean Non-MA Before | 0.1732 | 0.6358 | 0.0607 | 0.1066 | 0.0090 | 0.0147 |
| Mean MA After | 0.0612 | 0.7255 | 0.0442 | 0.1565 | 0.0077 | 0.0049 |
| Mean Non-MA After | 0.1747 | 0.6214 | 0.0602 | 0.1167 | 0.0113 | 0.0157 |

95% asymptotic CI clustered by state (first bracketed row under each coefficient): *** significant at .01, ** significant at .05, * significant at .10.

95% bootstrapped CI, blocks by state, 1000 reps (second bracketed row): +++ significant at .01, ++ significant at .05, + significant at .10.

All specifications and means weighted using sample weights.

All specifications include state fixed effects and time fixed effects for 2004 to 2009, annually. Omitted state: Alabama.

Source: CPS March Supplement 2004–2009, authors’ calculations. See text for more details.

4.1.1. Regression Results on the Impact on Uninsurance

Using the NIS data, we begin by estimating a simple difference-in-differences specification. Our primary estimating equation is:

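$$Y_{dht} = \alpha + \beta\,(\text{MA}_h \times \text{After}_t) + \gamma\,(\text{MA}_h \times \text{During}_t) + \text{HospitalFE}_h + \text{QuarterFE}_t + \varepsilon_{dht} \tag{1}$$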

where Y is an outcome variable for hospital discharge d in hospital h at time t. The coefficient of interest, β, gives the impact of the reform: the change in the outcome after the reform relative to before the reform in Massachusetts, relative to the same change in other states. Analogously, γ gives the change in the outcome during the reform relative to before the reform in Massachusetts, relative to other states. The identifying assumption is that no factors other than the reform differentially affected Massachusetts relative to other states after the reform. We also include hospital and quarterly time fixed effects. Thus, identification comes from comparing hospitals to themselves over time in Massachusetts compared to other states, after flexibly allowing for seasonality and trends over time. We include hospital fixed effects to account for the fact that the NIS is an unbalanced panel of hospitals. Without hospital fixed effects, we are concerned that changes in outcomes could be driven by changes in the sample of hospitals in either Massachusetts or control states after the reform (primarily the former, since the sample is nationally representative but is not necessarily representative within each state).10 Our preferred specification includes time and hospital fixed effects.11
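To make equation (1) concrete, here is a minimal NumPy sketch of the estimator on simulated data: weighted least squares of the outcome on MA × After and MA × During with hospital and quarter indicators, and standard errors clustered by state. The function names, the simulated panel, the use of explicit indicator columns, and the small-sample scaling are all our own choices for illustration; the authors' exact implementation is not described here.

```python
import numpy as np


def one_hot(labels: np.ndarray, drop_first: bool) -> np.ndarray:
    """Indicator matrix for each distinct label (optionally dropping the first)."""
    levels = np.unique(labels)
    if drop_first:
        levels = levels[1:]
    return (labels[:, None] == levels[None, :]).astype(float)


def twfe_did(y, ma, after, during, hospital, quarter, state, w):
    """Weighted least squares version of equation (1).

    Returns point estimates and state-clustered standard errors for
    (beta, gamma), the MA*After and MA*During coefficients.
    """
    X = np.column_stack([
        ma * after,                           # beta
        ma * during,                          # gamma
        one_hot(hospital, drop_first=False),  # hospital FE absorb MA and the intercept
        one_hot(quarter, drop_first=True),    # quarter FE absorb After and During
    ])
    sw = np.sqrt(w)
    Xw, yw = X * sw[:, None], y * sw
    bread = np.linalg.pinv(Xw.T @ Xw)  # pinv tolerates rank deficiency
    coef = bread @ (Xw.T @ yw)
    resid = yw - Xw @ coef
    meat = np.zeros_like(bread)
    clusters = np.unique(state)
    for g in clusters:
        score = Xw[state == g].T @ resid[state == g]  # X_g' W_g e_g
        meat += np.outer(score, score)
    n, k = X.shape
    G = len(clusters)
    scale = G / (G - 1) * (n - 1) / (n - k)  # small-sample correction
    vcov = scale * bread @ meat @ bread
    return coef[:2], np.sqrt(np.diag(vcov)[:2])


# Simulated panel: 10 states x 5 hospitals x 20 quarters (index 0 = 2004 Q1)
rng = np.random.default_rng(0)
n_states, hosp_per_state, n_quarters, per_cell = 10, 5, 20, 30
n_hosp = n_states * hosp_per_state
hospital = np.repeat(np.arange(n_hosp), n_quarters * per_cell)
state = hospital // hosp_per_state
quarter = np.tile(np.repeat(np.arange(n_quarters), per_cell), n_hosp)
ma = (state == 0).astype(float)                             # state 0 plays Massachusetts
during = ((quarter >= 10) & (quarter <= 13)).astype(float)  # 2006 Q3 to 2007 Q2
after = (quarter >= 14).astype(float)                       # 2007 Q3 onward
y = (0.05 * rng.normal(size=n_hosp)[hospital] + 0.001 * quarter
     - 0.02 * ma * after - 0.01 * ma * during + rng.normal(0, 0.05, hospital.size))
w = rng.uniform(1, 5, hospital.size)                        # stand-in discharge weights

coef, se = twfe_did(y, ma, after, during, hospital, quarter, state, w)
print("beta, gamma:", coef.round(4), "clustered SE:", se.round(4))
```

Because MA status never changes within a hospital, the hospital indicators absorb the MA main effect, and the quarter indicators absorb After and During, so only β and γ are reported. With millions of discharges, one would absorb the hospital effects (for example, by demeaning within hospital) rather than build an explicit indicator matrix.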

For each outcome variable of interest, we also estimate models that incorporate a vector X of patient demographics and other risk adjustment variables. We do not control for these variables in our main specifications because we are interested in measuring the impact of the reform as broadly as possible. To the extent that the reform changed the composition of the sample of inpatient discharges based on these observable characteristics, we would obscure this effect by controlling for observable patient characteristics. Beyond our main specifications, the impact of the reform on outcomes holding the patient population fixed is also highly relevant. For this reason, in other specifications, we incorporate state-of-the-art risk adjusters, and we present a number of specifications focused on understanding changes in patient composition. We return to this in more detail below. In general, however, we find that though there is some evidence of selection, it is not large enough to overturn our findings with respect to coverage or most other outcomes.

We use linear probability models for all of our binary outcomes. Under each coefficient, we report asymptotic 95 percent confidence intervals, clustered to allow for arbitrary correlation between observations within a state. Following Bertrand et al. (2004), we also report 95 percent confidence intervals obtained by block bootstrap by state, as discussed in Appendix A. In practice, the confidence intervals obtained through both methods are very similar. To conserve space, we do not report the block-bootstrapped confidence intervals in some tables.
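The block bootstrap resamples entire states, so that within-state correlation is preserved in every replicate. The Python sketch below applies the idea to a simple weighted difference-in-differences of means rather than to the full regression in equation (1), and it uses a percentile interval. Both simplifications, the function names, and the rule of redrawing replicates that lack a treated or a control state are assumptions of ours, not the procedure described in Appendix A.

```python
import numpy as np


def did_of_means(y, treated, after, w):
    """Weighted (treated after - before) minus (control after - before)."""
    def mean(mask):
        return np.average(y[mask], weights=w[mask])
    return ((mean((treated == 1) & (after == 1)) - mean((treated == 1) & (after == 0)))
            - (mean((treated == 0) & (after == 1)) - mean((treated == 0) & (after == 0))))


def block_bootstrap_ci(blocks, treated_states, reps=1000, alpha=0.05, seed=0):
    """Percentile CI from resampling whole states with replacement.

    blocks maps each state to a (y, after, w) tuple of arrays. A replicate that
    draws no treated state or no control state is redrawn, since the estimator
    is undefined without both groups (an assumption of this sketch).
    """
    rng = np.random.default_rng(seed)
    states = np.array(sorted(blocks))
    treated_set = set(treated_states)
    estimates = []
    while len(estimates) < reps:
        draw = rng.choice(states, size=len(states), replace=True)
        is_treated = np.array([s in treated_set for s in draw])
        if is_treated.all() or not is_treated.any():
            continue
        y = np.concatenate([blocks[s][0] for s in draw])
        after = np.concatenate([blocks[s][1] for s in draw])
        w = np.concatenate([blocks[s][2] for s in draw])
        treated = np.concatenate(
            [np.full(len(blocks[s][0]), int(t)) for s, t in zip(draw, is_treated)])
        estimates.append(did_of_means(y, treated, after, w))
    lo, hi = np.quantile(estimates, [alpha / 2, 1 - alpha / 2])
    return lo, hi


# Simulated data: 10 states of 500 observations; state 0 stands in for Massachusetts
rng = np.random.default_rng(1)
blocks = {}
for s in range(10):
    after = rng.integers(0, 2, 500)
    effect = -0.05 if s == 0 else 0.0
    y = 0.15 + rng.normal(0, 0.02) + effect * after + rng.normal(0, 0.1, 500)
    blocks[s] = (y, after, np.ones(500))

lo, hi = block_bootstrap_ci(blocks, treated_states=[0], reps=200)
print(f"95% block-bootstrap interval: [{lo:.4f}, {hi:.4f}]")
```

With a single treated state, the treated contrast is identical in every replicate that draws it (duplicating a state does not change its weighted means), so in this sketch the interval reflects variation across the resampled control states.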

In addition to the specifications we present here, we consider a number of robustness checks to investigate the internal and external validity of our results. We find that the conclusions presented in Table 1 are robust to a variety of alternative control groups and do not appear to be driven by unobserved factors that are unique to Massachusetts. For brevity, we present and discuss these results in Appendix B.

Table 1.

Insurance and Outcomes in the NIS

| Dependent Variable: | Mutually Exclusive Types of Coverage

|

Outcomes | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | (9) | (10) | |

| Uninsured | Medicaid | Private | Medicare | Other | CommCare | No Coverage Info | Length of Stay | Log Length of Stay | Emergency Admit | |

| MA* After | −0.0231 | 0.0389 | −0.0306 | 0.0042 | 0.0106 | 0.0124 | 0.0015 | −0.0504 | −0.0012 | −0.0202 |

| [−0.0300, −0.0162]*** | [0.0265,0.0512]*** | [−0.0378, −0.0233]*** | [0.0013,0.0070]*** | [0.0041,0.0171]*** | [0.0123,0.0124]*** | [0.0000,0.0030]** | [−0.0999, −0.0008]** | [−0.0111,0.0086] | [−0.0397, −0.0007]** | |

| [−0.0299, −0.0166]+++ | [0.0293,0.051]+++ | [−0.0385, −0.0236]+++ | [0.0014,0.0068]+++ | [0.0050,0.0181]+++ | [0.0124,0.0125]+++ | [0.0001,0.0029]++ | [−0.1026, −0.0065]++ | [−0.0113,0.0066] | [−0.0351,0.0011]+ | |

| MA* During | −0.0129 | 0.0365 | −0.0224 | −0.0003 | −0.0009 | 0.0029 | −0.0017 | −0.0037 | 0.0037 | −0.0317 |

| [−0.0176, −0.0083]*** | [0.0293,0.0437]*** | [−0.0274, −0.0173]*** | [−0.0024,0.0017] | [−0.0043,0.0026] | [0.0029,0.0029]*** | [−0.0065,0.0031] | [−0.0369,0.0294] | [−0.0022,0.0095] | [−0.0449, −0.0184]*** | |

| [−0.0177, −0.0084]+++ | [0.0302,0.0438]+++ | [−0.0277, −0.0168]+++ | [−0.0025,0.0018] | [−0.0049,0.0026] | [0.0029,0.0029]+++ | [−0.0076,0.0014] | [−0.0367,0.0238] | [−0.0026,0.0084] | [−0.0409, −0.0166]+++ | |

| N (Nonelderly) | 23,860,930 | 23,860,930 | 23,860,930 | 23,860,930 | 23,860,930 | 23,860,930 | 23,913,983 | 23,913,183 | 23,913,183 | 23,913,983 |

| R Squared | 0.0659 | 0.1148 | 0.1502 | 0.0341 | 0.0689 | 0.0249 | 0.0662 | 0.0335 | 0.0458 | 0.1088 |

|

| ||||||||||

| Mean MA Before | 0.0643 | 0.2460 | 0.5631 | 0.1073 | 0.0193 | 0.0000 | 0.0002 | 5.4256 | 1.4267 | 0.3868 |

| Mean Non-MA Before | 0.0791 | 0.2876 | 0.4978 | 0.0928 | 0.0427 | 0.0000 | 0.0020 | 5.0770 | 1.3552 | 0.3591 |

| Mean MA After | 0.0352 | 0.2594 | 0.5518 | 0.1177 | 0.0360 | 0.0165 | 0.0040 | 5.3717 | 1.4355 | 0.4058 |

| Mean Non-MA After | 0.0817 | 0.2790 | 0.4923 | 0.1020 | 0.0450 | 0.0000 | 0.0017 | 5.0958 | 1.3596 | 0.3745 |

|

| ||||||||||

| MA* After with risk adjusters | −0.0228 | 0.0374 | −0.0275 | 0.0021 | 0.0107 | 0.0124 | 0.0014 | −0.1037 | −0.0105 | −0.0220 |

| [−0.0297, −0.0158]*** | [0.0235, 0.0514]*** | [−0.0361, −0.0190]*** | [−0.0007,0.0050] | [0.0047,0.0168]*** | [0.0123,0.0124]*** | [0.0000,0.0028]** | [−0.1471, −0.0603]*** | [−0.0186, −0.0023]** | [−0.0427, −0.0012]** | |

| R Squared | 0.0939 | 0.2232 | 0.2381 | 0.2006 | 0.0761 | 0.0249 | 0.0666 | 0.3801 | 0.4038 | 0.2907 |

95% asymptotic CI clustered by state: *** significant at .01, ** significant at .05, * significant at .10.

95% bootstrapped CI, blocks by state, 1000 reps: +++ significant at .01, ++ significant at .05, + significant at .10.

All specifications and means weighted using discharge weights.

All specifications include hospital fixed effects and time fixed effects for 2004 to 2008, quarterly.

Risk adjusters include six sets of risk adjustment variables: demographic characteristics, the number of diagnoses on the discharge record, individual components of the Charlson Score measure of comorbidities, AHRQ comorbidity measures, All-Patient Refined (APR)-DRGs, and All-Payer Severity-adjusted (APS)-DRGs. See Appendix 2.

CommCare included in Other. No Coverage Info not included in any other specifications.

Length of Stay is calculated as one plus the discharge date minus the admission date. The smallest possible value is one day. Emergency Admit indicates emergency room source of admission.

Source: HCUP NIS 2004–2008 authors’ calculations. See text for more details.

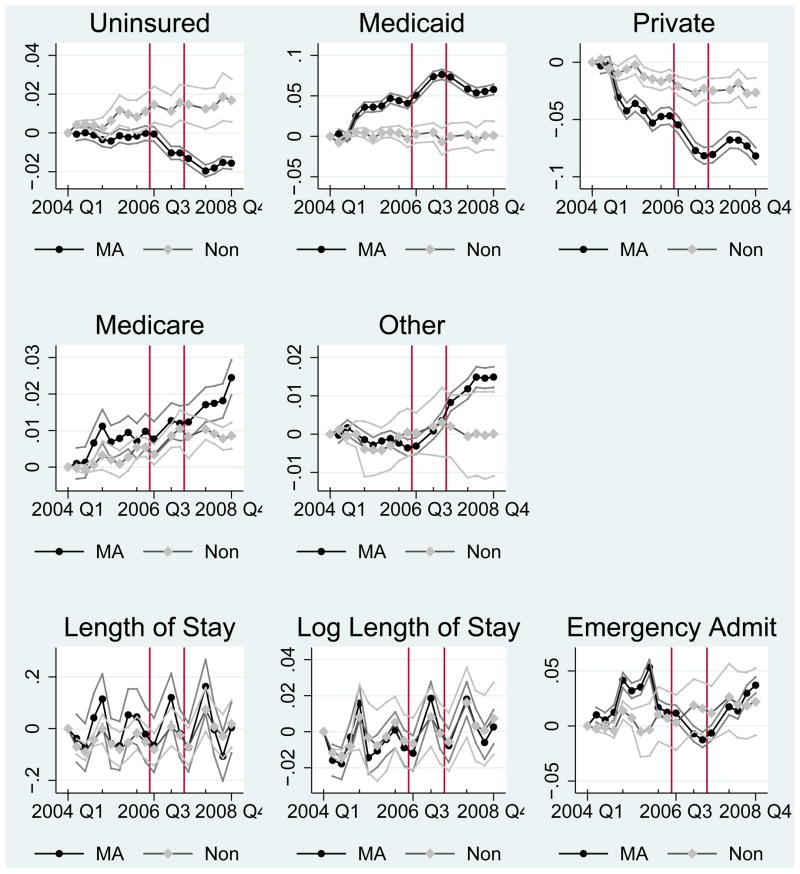

Given our short time period, we are particularly concerned about pre-trends in Massachusetts relative to control states. In Figures 2, 3, and 4, we present quarterly trends for each of our outcome variables of interest for Massachusetts and the remainder of the country. Each line and its associated confidence interval plot coefficient estimates for each quarter for Massachusetts and non-Massachusetts states from a regression that includes hospital fixed effects. The omitted category for each is the first quarter of 2004, which we set equal to 0. While the plots show slight variation, none of our outcomes of interest appear to have strong pre-reform trends in Massachusetts relative to control states that might explain our findings.12

Figure 2.

Trends in MA vs. Non-MA

Source: NIS authors’ calculations.

Trends obtained from regressions including hospital fixed effects.

95% asymptotic confidence intervals shown.

Confidence intervals clustered by state for Non–MA and robust for MA.

All data omitted in 2006 Q4 and 2007 Q4 because of MA data availability.

Vertical lines separate During and After periods.

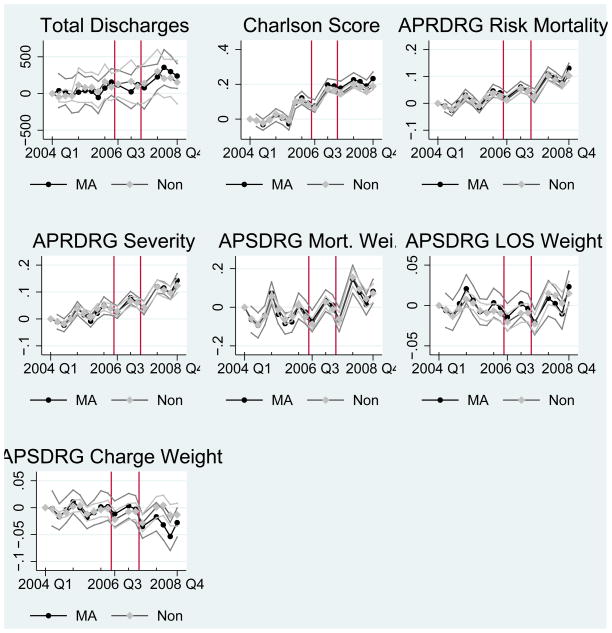

Figure 3.

Trends in MA vs. Non-MA, Hospital Quarter Level

Source: NIS authors’ calculations.

Trends obtained from regressions including hospital fixed effects.

95% asymptotic confidence intervals shown.

Confidence intervals clustered by state for Non–MA and robust for MA.

All data omitted in 2006 Q4 and 2007 Q4 because of MA data availability.

Vertical lines separate During and After periods.

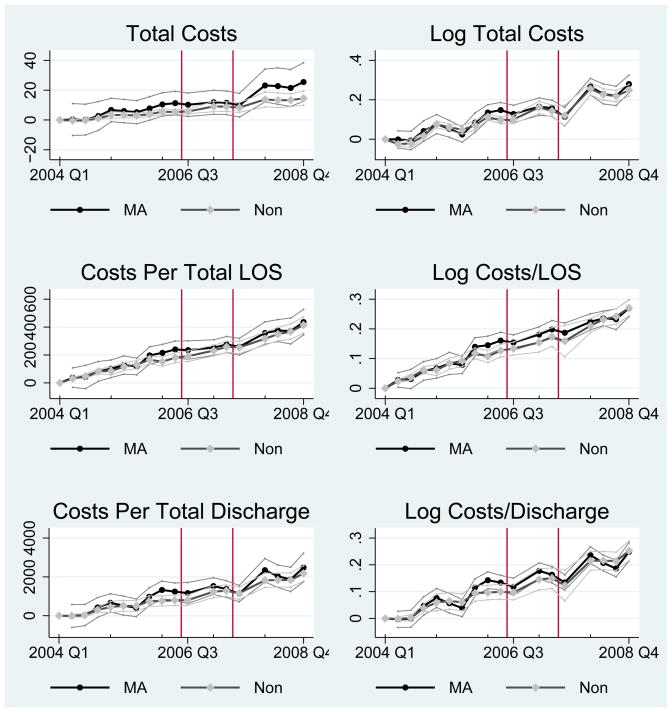

Figure 4.

Trends in MA vs. Non-MA, Hospital Quarter Level

Source: NIS authors’ calculations.

Trends obtained from regressions including hospital fixed effects.

95% asymptotic confidence intervals shown.

Confidence intervals clustered by state for Non–MA and robust for MA.

All data omitted in 2006 Q4 and 2007 Q4 because of MA data availability.

Vertical lines separate During and After periods.

4.1.2. Effects on the Composition of Insurance Coverage among Hospital Discharges

In this section, we investigate the effect of the Massachusetts reform on the level and composition of health insurance coverage in the sample of hospital discharges. We divide health insurance coverage (or lack thereof) into five mutually exclusive types – Uninsured, Medicaid, Private, Medicare, and Other. CommCare plans and other government plans such as Workers’ Compensation and CHAMPUS (but not Medicaid and Medicare) are included in Other. We estimate equation (1) separately for each coverage type and report the results in columns 1 through 5 of Table 1.13 We focus on results for the nonelderly here, and we report results for the full sample and for the elderly only in Table 5.
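Equation (1) is not reproduced in this section, so the following is only a minimal sketch of the two-way fixed-effects difference-in-differences form implied by the table notes: an outcome regressed on MA*During and MA*After with hospital and quarter fixed effects, weighted by discharge weights. It assumes a continuous stand-in outcome on simulated data; the function name `did_two_way_fe` and every simulated quantity are hypothetical, and it omits the state-clustered and bootstrapped confidence intervals reported in the tables.

```python
import numpy as np


def did_two_way_fe(y, ma, during, after, hospital, quarter, weights):
    """Weighted least squares of y on MA*During and MA*After with hospital and
    quarter fixed effects. Returns the two interaction coefficients."""
    y = np.asarray(y, dtype=float)
    ma = np.asarray(ma, dtype=float)
    n = y.size
    x_during = ma * np.asarray(during, dtype=float)  # MA*During
    x_after = ma * np.asarray(after, dtype=float)    # MA*After

    # One dummy per hospital; together they absorb the intercept and the MA main effect.
    _, h_idx = np.unique(hospital, return_inverse=True)
    hosp = np.zeros((n, h_idx.max() + 1))
    hosp[np.arange(n), h_idx] = 1.0

    # Quarter dummies with the first quarter omitted; they absorb the
    # During and After main effects.
    _, q_idx = np.unique(quarter, return_inverse=True)
    qtr = np.zeros((n, q_idx.max() + 1))
    qtr[np.arange(n), q_idx] = 1.0
    qtr = qtr[:, 1:]

    X = np.column_stack([x_during, x_after, hosp, qtr])
    # Weighted least squares: scale each row by sqrt(weight), then run OLS.
    sw = np.sqrt(np.asarray(weights, dtype=float))
    coef, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1]


# Hypothetical simulated data: 20 hospitals (the first 5 labeled "MA"),
# 20 quarters, 10 discharges per hospital-quarter.
rng = np.random.default_rng(0)
n_hosp, n_q, per_cell = 20, 20, 10
hospital = np.repeat(np.arange(n_hosp), n_q * per_cell)
quarter = np.tile(np.repeat(np.arange(n_q), per_cell), n_hosp)
ma = (hospital < 5).astype(float)
during = ((quarter >= 10) & (quarter < 14)).astype(float)
after = (quarter >= 14).astype(float)
y = (rng.normal(0.08, 0.02, n_hosp)[hospital]   # hospital effects
     + np.linspace(0.0, 0.01, n_q)[quarter]     # common time trend
     - 0.01 * ma * during                       # true MA*During effect
     - 0.02 * ma * after                        # true MA*After effect
     + rng.normal(0.0, 0.01, hospital.size))    # noise
w = rng.uniform(0.5, 2.0, hospital.size)        # stand-in discharge weights

b_during, b_after = did_two_way_fe(y, ma, during, after, hospital, quarter, w)
print(f"MA*During: {b_during:.4f}, MA*After: {b_after:.4f}")
```

Because the hospital dummies already span the constant and the MA indicator, and the quarter dummies span During and After, only the two interactions are identified separately, which is why the fixed effects "obscure" the main effects.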

Table 5.

Insurance and Outcomes by Income in NIS

| | (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | (9) |

|---|---|---|---|---|---|---|---|---|---|

| | Uninsured | Medicaid | Private | Medicare | Other | CommCare | Length of Stay | Log Length of Stay | Emergency Admit |

| Patient’s Zip Code in First (Lowest) Income Quartile (28% of sample) | | | | | | | | | |

| MA* After | −0.0359 [−0.0440,−0.0277]*** | 0.1050 [0.0873,0.1227]*** | −0.0899 [−0.1004,−0.0793]*** | 0.0059 [0.0025,0.0093]*** | 0.0148 [0.0035,0.0260]** | 0.0130 [0.0130,0.0130]*** | 0.0535 [−0.0050,0.1119]* | 0.0158 [0.0071,0.0244]*** | −0.0570 [−0.0703,−0.0436]*** |

| Mean MA Before | 0.0643 | 0.2460 | 0.5631 | 0.1073 | 0.0193 | 0.0000 | 5.6074 | 1.4402 | 0.4665 |

| Patient’s Zip Code in Second Income Quartile (26% of sample) | |||||||||

| MA* After | −0.0226 [−0.0309,−0.0143]*** | 0.0447 [0.0305,0.0590]*** | −0.0495 [−0.0595,−0.0396]*** | 0.0148 [0.0113,0.0184]*** | 0.0126 [0.0053,0.0199]*** | 0.0165 [0.0164,0.0165]*** | −0.0941 [−0.1310,−0.0572]*** | −0.0081 [−0.0153,−0.0009]** | −0.0190 [−0.0297,−0.0083]*** |

| Mean MA Before | 0.0643 | 0.2460 | 0.5631 | 0.1073 | 0.0193 | 0.0000 | 5.5681 | 1.4451 | 0.4437 |

| Patient’s Zip Code in Third Income Quartile (23% of sample) | |||||||||

| MA* After | −0.0234 [−0.0295,−0.0174]*** | 0.0217 [0.0098,0.0336]*** | −0.0106 [−0.0187,−0.0026]** | 0.0020 [−0.0010,0.0050] | 0.0103 [0.0069,0.0138]*** | 0.0130 [0.0130,0.0130]*** | −0.0890 [−0.1417,−0.0363]*** | 0.0017 [−0.0061,0.0095] | −0.0107 [−0.0392,0.0178] |

| Mean MA Before | 0.0643 | 0.2460 | 0.5631 | 0.1073 | 0.0193 | 0.0000 | 5.3885 | 1.4240 | 0.3671 |

| Patient’s Zip Code in Fourth (Highest) Income Quartile (21% of sample) | |||||||||

| MA* After | −0.0059 [−0.0159,0.0042] | 0.0006 [−0.0080,0.0093] | 0.0010 [−0.0116,0.0135] | −0.0007 [−0.0066,0.0051] | 0.0050 [0.0011,0.0089]** | 0.0090 [0.0090,0.0090]*** | −0.0911 [−0.1957,0.0136]* | −0.0185 [−0.0478,0.0108] | 0.0098 [−0.0324,0.0519] |

| Mean MA Before | 0.0643 | 0.2460 | 0.5631 | 0.1073 | 0.0193 | 0.0000 | 5.2333 | 1.4067 | 0.3189 |

95% asymptotic CI clustered by state: *** significant at .01, ** significant at .05, * significant at .10.

MA*During included but coefficient not reported. All specifications and means weighted using discharge weights.

All specifications include hospital fixed effects and time fixed effects for 2004 to 2008, quarterly.

Regressions in this table estimated in the sample of nonelderly discharges. Sample sizes vary across specifications based on availability of dependent variable.

Results for missing gender and income categories not shown.

Source: HCUP NIS 2004–2008 authors’ calculations. See text for more details.

Column 1 presents the estimated effect of the reform on the overall level of uninsurance. We find that the reform led to a 2.31 percentage point reduction in uninsurance. Both sets of confidence intervals show that the difference-in-differences impact of the reform on uninsurance is statistically significant at the 1 percent level. Since the model with fixed effects obscures the main effects of MA and After, we also report mean coverage rates in Massachusetts and other states before and after the reform. The estimated impact of Chapter 58 represents an economically significant reduction in uninsured discharges of roughly 36 percent (2.31/6.43) of the Massachusetts pre-reform mean. We present coefficients on selected covariates from this regression in column 1 of Appendix Table A3.
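The 36 percent figure is the difference-in-differences coefficient divided by the Massachusetts pre-reform mean. A minimal sketch of that arithmetic, using the MA*After coefficient on Uninsured (−0.0231, Table A3 column 1) and the Mean MA Before of 0.0643 from Table 1; the helper name is illustrative only.

```python
def relative_effect_pct(coef: float, baseline_mean: float) -> float:
    """Percent change implied by a difference-in-differences coefficient,
    measured against the pre-reform Massachusetts mean."""
    return 100.0 * coef / baseline_mean


# MA*After on Uninsured and the MA pre-reform share of uninsured discharges.
uninsured_pct = relative_effect_pct(-0.0231, 0.0643)
print(f"Change in uninsured share of discharges: {uninsured_pct:.1f}%")
```

The same ratio applies to any coefficient in Table 1 whose dependent variable is a share, provided the matching pre-reform mean is used as the denominator.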

Table A3.

Coefficients on Selected Covariates in NIS

| Dependent Variable | (1) (preferred) Uninsured | (2) (covariates) Uninsured | (2) (covariates cont.) | | (2) (covariates cont.) | | (2) (covariates cont.) | |

|---|---|---|---|---|---|---|---|---|

| MA* After | −0.0231 [−0.0300,−0.0162]*** | −0.0228 [−0.0297,−0.0158]*** | Female | −0.0394 [−0.0453,−0.0336]*** | AHRQ Comorb. cm_dmcx | −0.0220 [−0.0279,−0.0161]*** | Charlson 6 | 0.0255 [0.0201,0.0308]*** |

| MA* During | −0.0129 [−0.0176,−0.0083]*** | −0.0131 [−0.0177,−0.0085]*** | Gender Unknown | −0.0058 [−0.0256,0.0139] | AHRQ Comorb. cm_drug | 0.0720 [0.0506,0.0933]*** | Charlson 7 | 0.0086 [0.0047,0.0124]*** |

| 2004 Q2 | 0.0041 [0.0025,0.0058]*** | 0.0036 [0.0022,0.0050]*** | Black | 0.0067 [0.0019,0.0115]*** | AHRQ Comorb. cm_htn_c | −0.0009 [−0.0028,0.0010] | Charlson 8 | −0.0333 [−0.0407,−0.0258]*** |

| 2004 Q3 | 0.0044 [0.0024,0.0065]*** | 0.0037 [0.0018,0.0056]*** | Hispanic | 0.0362 [0.0159,0.0565]*** | AHRQ Comorb. cm_hypothy | −0.0115 [−0.0142,−0.0087]*** | Charlson 9 | 0.0063 [0.0020,0.0107]*** |

| 2004 Q4 | 0.0038 [0.0011,0.0065]*** | 0.0037 [0.0010,0.0064]*** | Asian | 0.0187 [0.0134,0.0240]*** | AHRQ Comorb. cm_liver | 0.0044 [0.0004,0.0084]** | Charlson 10 | 0.0558 [0.0427,0.0689]*** |

| 2005 Q1 | 0.0020 [−0.0034,0.0074] | 0.0025 [−0.0027,0.0077] | Native American | 0.0008 [−0.0120,0.0137] | AHRQ Comorb. cm_lymph | −0.0332 [−0.0408,−0.0256]*** | Charlson 11 | 0.0130 [0.0071,0.0189]*** |

| 2005 Q2 | 0.0059 [−0.0004,0.0121]* | 0.0062 [0.0002,0.0122]** | Other Race | 0.0353 [0.0167,0.0539]*** | AHRQ Comorb. cm_lytes | 0.0245 [0.0203,0.0287]*** | Charlson 12 | −0.0036 [−0.0060,−0.0012]*** |

| 2005 Q3 | 0.0112 [0.0037,0.0187]*** | 0.0109 [0.0036,0.0182]*** | Unknown Race | 0.0084 [−0.0043,0.0211] | AHRQ Comorb. cm_mets | −0.0244 [−0.0293,−0.0195]*** | Charlson 13 | −0.0193 [−0.0244,−0.0142]*** |

| 2005 Q4 | 0.0093 [0.0024,0.0162]*** | 0.0102 [0.0034,0.0170]*** | Zip in First (Lowest) Income Quartile | 0.0220 [0.0149,0.0291]*** | AHRQ Comorb. cm_neuro | −0.0144 [−0.0176,−0.0111]*** | Charlson 14 | −0.0066 [−0.0111,−0.0021]*** |

| 2006 Q1 | 0.0079 [−0.0005,0.0163]* | 0.0095 [0.0013,0.0176]** | Zip in Second Income Quartile | 0.0170 [0.0107,0.0234]*** | AHRQ Comorb. cm_obese | 0.0027 [0.0002,0.0053]** | Charlson 15 | 0.0248 [0.0188,0.0309]*** |

| 2006 Q2 | 0.0107 [0.0019,0.0195]** | 0.0118 [0.0033,0.0203]*** | Zip in Third Income Quartile | 0.0092 [0.0048,0.0137]*** | AHRQ Comorb. cm_para | −0.0439 [−0.0529,−0.0349]*** | Charlson 16 | 0.0582 [0.0479,0.0686]*** |

| 2006 Q3 | 0.0146 [0.0054,0.0237]*** | 0.0157 [0.0067,0.0247]*** | Zip in Unknown Income Quartile | 0.0470 [0.0138,0.0802]*** | AHRQ Comorb. cm_perivasc | −0.0140 [−0.0176,−0.0105]*** | Charlson 17 | −0.0008 [−0.0043,0.0028] |

| 2006 Q4 | | | Age | 0.0039 [0.0030,0.0048]*** | AHRQ Comorb. cm_psych | −0.0061 [−0.0105,−0.0018]*** | Charlson 18 | −0.0353 [−0.0488,−0.0219]*** |

| 2007 Q1 | 0.0109 [0.0030,0.0189]*** | 0.0132 [0.0055,0.0210]*** | Age Squared | −0.0001 [−0.0001,−0.0000]*** | AHRQ Comorb. cm_pulmcirc | −0.0043 [−0.0069,−0.0016]*** | Diagnosis count | −0.0047 [−0.0057,−0.0038]*** |

| 2007 Q2 | 0.0151 [0.0061,0.0241]*** | 0.0170 [0.0082,0.0258]*** | AHRQ Comorb. cm_aids | −0.0296 [−0.0385,−0.0207]*** | AHRQ Comorb. cm_renlfail | −0.0430 [−0.0535,−0.0325]*** | APRDRG Risk mortality | 0.0044 [0.0034,0.0054]*** |

| 2007 Q3 | 0.0145 [0.0049,0.0241]*** | 0.0161 [0.0064,0.0258]*** | AHRQ Comorb. cm_alcohol | 0.0901 [0.0725,0.1077]*** | AHRQ Comorb. cm_tumor | −0.0283 [−0.0334,−0.0231]*** | APRDRG Severity | 0.0013 [−0.0005,0.0030] |

| 2007 Q4 | | | AHRQ Comorb. cm_anemdef | 0.0063 [0.0038,0.0089]*** | AHRQ Comorb. cm_ulcer | −0.0489 [−0.0573,−0.0405]*** | APSDRG Mortality Weight | 0.0014 [0.0009,0.0019]*** |

| 2008 Q1 | 0.0120 [0.0018,0.0222]** | 0.0154 [0.0052,0.0256]*** | AHRQ Comorb. cm_arth | −0.0191 [−0.0251,−0.0131]*** | AHRQ Comorb. cm_valve | −0.0042 [−0.0066,−0.0017]*** | APSDRG LOS Weight | 0.0043 [0.0004,0.0083]** |

| 2008 Q2 | 0.0131 [0.0033,0.0230]** | 0.0162 [0.0063,0.0262]*** | AHRQ Comorb. cm_bldloss | −0.0188 [−0.0244,−0.0133]*** | AHRQ Comorb. cm_wghtloss | 0.0072 [0.0037,0.0107]*** | APSDRG Charge Weight | −0.0056 [−0.0083,−0.0028]*** |

| 2008 Q3 | 0.0181 [0.0060,0.0303]*** | 0.0210 [0.0088,0.0332]*** | AHRQ Comorb. cm_chf | −0.0316 [−0.0386,−0.0246]*** | Charlson 1 | 0.0422 [0.0331,0.0512]*** | ||

| 2008 Q4 | 0.0164 [0.0055,0.0273]*** | 0.0202 [0.0092,0.0313]*** | AHRQ Comorb. cm_chrnlung | −0.0157 [−0.0195,−0.0119]*** | Charlson 2 | 0.0086 [0.0058,0.0115]*** | ||

| Constant | 0.0708 [0.0645,0.0772]*** | 0.0373 [0.0196,0.0551]*** | AHRQ Comorb. cm_coag | 0.0090 [0.0070,0.0110]*** | Charlson 3 | 0.0205 [0.0139,0.0271]*** | ||

| Hospital Indicators | Yes | Yes | AHRQ Comorb. cm_depress | 0.0016 [−0.0007,0.0039] | Charlson 4 | 0.0001 [−0.0021,0.0023] | ||

| Covariates | No | Yes, cont. next cols. | ||||||

| N (Nonelderly) | 23,860,930 | 23,860,930 | AHRQ Comorb. cm_dm | −0.0570 [−0.0688,−0.0452]*** | Charlson 5 | −0.0094 [−0.0131,−0.0058]*** | ||

| R Squared | 0.0659 | 0.0939 |

95% asymptotic CI clustered by state: *** significant at .01, ** significant at .05, * significant at .10.

95% bootstrapped CI, blocks by state, 1000 reps: +++ significant at .01, ++ significant at .05, + significant at .10.

All specifications and means weighted using discharge weights.

Coefficients on hospital fixed effects not reported. Omitted categories: MA*Before, 2004 Q1, Male, White, Zip in Fourth Income Quartile.

Source: HCUP NIS 2004–2008 authors’ calculations. See text for more details.

We see from the difference-in-differences results in column 2 of Table 1 that among the nonelderly hospitalized population, the expansion in Medicaid coverage was larger than the overall reduction in uninsurance. Medicaid coverage expanded by 3.89 percentage points, and uninsurance decreased by 2.31 percentage points. Consistent with the timing of the initial Medicaid expansion, the coefficient on MA*During suggests that a large fraction of the impact of the Medicaid expansion was realized in the year immediately following the passage of the legislation. It appears that at least some of the Medicaid expansion crowded out private coverage, which decreased by 3.06 percentage points among hospital discharges. The risk-adjusted coefficient in the last row of column 2 suggests that even after controlling for selection into the hospital, our finding of crowd-out persists. All of these effects are statistically significant at the 1 percent level.

To further understand crowd-out and the incidence of the reform on the hospitalized population relative to the general population, we compare the estimates from Table 1 – coverage among those who were hospitalized – with results from the CPS – coverage in the overall population. In Appendix Table A1, we report difference-in-differences results by coverage type in the CPS. The coverage categories reported by the CPS do not map exactly to those used in the NIS. Insurance that is coded as private coverage in the NIS is divided into employer-sponsored coverage and private coverage not related to employment in the CPS. Furthermore, the Census Bureau coded the new plans available in Massachusetts, CommCare and CommChoice, as “Medicaid.”14 Thus the estimated impact on Medicaid is actually the combined effect of expansions in traditional Medicaid with increases in CommCare and CommChoice. Medicaid expansions are larger among the hospital discharge population than they are in the CPS – a 3.89 percentage point increase vs. a 3.50 percentage point increase, respectively. Furthermore, the CPS coefficient is statistically significantly lower than the NIS coefficient. It is not surprising to see larger gains in coverage in the hospital because hospitals often retroactively enroll Medicaid-eligible individuals who had not signed up for coverage.

Comparing changes in types of coverage in the NIS to changes in types of coverage in the CPS, we find that crowd-out of private coverage occurred only among the hospitalized population. In Appendix Table A1, the magnitudes of the MA*After coefficients are 0.0345 and 0.0351 for ESHI and Medicaid, respectively. That is, both employer-sponsored coverage and Medicaid, CommCare, or CommChoice coverage increased by a similar amount following the reform, and those increases were roughly equivalent to the total decline in uninsurance (5.7 percentage points). The only crowding out in Appendix Table A1 seems to be of non-group private insurance, though this effect is relatively small at 0.86 percentage points. Combining coefficients for ESHI and private insurance unrelated to employment gives us a predicted increase in private coverage (as it is coded in the NIS) of 2.59 percentage points. This is in marked contrast with the 3.54 percentage point decrease in private coverage that we observe in the NIS.
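Because the NIS pools employer-sponsored and non-group coverage into a single private category, the CPS benchmark is the sum of the two CPS coefficients. A minimal check of that arithmetic, using the coefficients quoted above; the variable names are illustrative, and the simple sum ignores the covariance between the two estimates, so it yields a point estimate only, not a standard error.

```python
# MA*After coefficients from the CPS (Appendix Table A1), as quoted in the text.
cps_eshi = 0.0345        # employer-sponsored coverage
cps_nongroup = -0.0086   # private coverage not related to employment

# NIS "Private" corresponds to the union of both CPS categories,
# so the implied change is the sum of the two coefficients.
predicted_private_pp = round(100 * (cps_eshi + cps_nongroup), 2)
print(f"Implied change in NIS-style private coverage: {predicted_private_pp:+.2f} pp")
```

The implied change is positive, whereas the NIS coefficient on private coverage is negative, which is the contrast the comparison relies on.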

Returning to the NIS, we look further at results from other specifications. Our results also indicate a statistically significant change in the share of nonelderly discharges covered by Medicare. The magnitude of the effect, however, is quite small, both in level and relative to the baseline share of nonelderly inpatient admissions covered by Medicare. Other coverage, the general category that includes other types of government coverage including CommCare, increased by a statistically significant 1.06 percentage points. We restrict the dependent variable to include only CommCare in specification 6. By definition, CommCare coverage is zero outside of Massachusetts and before the reform. CommCare increased by 1.24 percentage points. The coefficient is larger than the overall increase in Other coverage, though the difference in the coefficients is not statistically significant. As reported in specification 7, the probability of having missing coverage information also increased after the reform, but this increase was small relative to the observed increases in coverage.

4.2. Impacts on Health Care Provision

Having established the impact of the Massachusetts reform on coverage, we next turn to our primary focus: understanding the impact of achieving near-universal health insurance coverage on health care delivery and cost. In the next four subsections, we estimate equation (1) with dependent variables that capture the decision to seek care, the intensity of services provided conditional on seeking care, preventive care, and hospital costs.

4.2.1. Impact on Hospital Volume and Patient Composition

One potential impact of the reform could be to increase the use of inpatient hospital services. Whether more people accessed hospital care after the reform is of intrinsic interest, as this implies a change in welfare due to the policy (e.g., an increase in moral hazard through insurance or a decrease in ex ante barriers to accessing the hospital due to insurance). Beyond this, changes in the composition of patients present an important empirical hurdle to estimating the causal impact of the reform on subsequent measures of care delivered. If the number of patients seeking care after the reform increased and the marginal patients differed in underlying health status, changes in treatment intensity could reflect this rather than actual changes in the way care is delivered. We investigate this possibility in two ways: first, we examine changes in the number of discharges at the hospital level; second, we control for observable changes in the health of the patient pool and compare our results to specifications without controls.

In Table 2, we investigate selection into hospitals by estimating a series of specifications with the number of discharges at the hospital-quarter level as the dependent variable. In column 1 of Table 2, which includes hospital and quarterly fixed effects to mitigate the impact of changes in sample composition, the coefficient on MA*After indicates that the number of quarterly discharges for hospitals in Massachusetts was unchanged relative to other states following the reform. The coefficient estimate of 19 is small relative to the pre-reform quarterly discharge level of 5,616, and it is not statistically significant. In column 2, we re-estimate the model with the log of total discharges as the dependent variable to account for any skewness in hospital size. The coefficient on MA*After in this specification also indicates that the reform had no impact on the total volume of discharges. Columns 3 to 6 break down changes in discharges by age category (nonelderly and elderly). Among these subgroups we find no statistically significant change in total elderly or nonelderly discharges in either levels or logs. These findings suggest that any change in the composition of patients would have to have occurred through substitution since the total number of discharges remained unchanged. However, we are unlikely to pick up a relative change in discharges among the newly insured in our aggregate measure.15

Table 2.

Regressions on the Hospital-Quarter Level in NIS

| | (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | (9) | (10) | (11) | (12) | (13) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | Total Discharges | Log Total Disch. | Nonelderly Dischg. | Log Noneld. Dischg. | Elderly Dischg. | Log Elderly Dischg. | Hospital Bedsize | Charlson Score | APRDRG Risk Mortality | APRDRG Severity | APSDRG Mortality Weight | APSDRG LOS Weight | APSDRG Charge Weight |

| MA* After | 19 [−183,220] | 0.0037 [−0.0133,0.0206] | −7 [−174,160] | 0.0029 [−0.0192,0.0250] | 26 [−19,70] | 0.0115 [−0.0059,0.0289] | −21 [−62,20] | 0.0340 [0.0205,0.0476]*** | 0.0057 [−0.0050,0.0164] | 0.0062 [−0.0097,0.0220] | −0.0059 [−0.0323,0.0206] | −0.006 [−0.0141,0.0022] | −0.0195 [−0.0310, −0.0080]*** |

| N (All Ages) | 18,622 | 18,622 | 18,622 | 18,590 | 18,622 | 18,327 | 11,753 | 18,622 | 18,622 | 18,622 | 18,622 | 18,622 | 18,622 |


| Mean MA Before | 5616 | 8.4125 | 3,592 | 7.8958 | 2,023 | 7.4190 | 409 | 0.5750 | 1.5467 | 1.8892 | 1.1504 | 1.0660 | 1.0623 |

| Mean Non-MA Before | 5029 | 8.1894 | 3,454 | 7.7512 | 1,569 | 7.0394 | 372 | 0.5058 | 1.4919 | 1.8413 | 1.0992 | 1.0313 | 1.0356 |

| Mean MA After | 6769 | 8.5300 | 4,433 | 8.0369 | 2,336 | 7.5456 | 472 | 0.7325 | 1.6144 | 1.9672 | 1.2324 | 1.0758 | 1.0488 |

| Mean Non-MA After | 5389 | 8.2327 | 3,712 | 7.7908 | 1,672 | 7.0949 | 418 | 0.6518 | 1.5700 | 1.9289 | 1.2167 | 1.0457 | 1.0417 |

| | (14) | (15) | (16) | (17) | (18) | (19) |

|---|---|---|---|---|---|---|

| | Total Costs, $Mill | Log Total Costs | Total Costs/LOS | Log Costs/LOS | Total Costs/Disch. | Log Costs/Disch. |

| MA* After 2006 | 4.166 [0.767,7.566]** | −0.008 [−0.036,0.021] | 2.625 [−31.251,36.501] | 0.005 [−0.023,0.033] | 30.649 [−199.750,261.049] | −0.006 [−0.037,0.024] |

| N (All Ages) | 13,825 | 13,820 | 13,825 | 13,820 | 13,825 | 13,820 |

| Mean MA Before 2006 | 49.799 | 17.375 | 1379.904 | 7.199 | 8169.940 | 8.960 |

| Mean Non-MA Before 2006 | 41.807 | 17.114 | 1353.560 | 7.181 | 7632.107 | 8.891 |

| Mean MA After 2006 | 78.726 | 17.690 | 1685.574 | 7.400 | 9879.292 | 9.144 |

| Mean Non-MA After 2006 | 52.737 | 17.301 | 1606.186 | 7.343 | 8972.501 | 9.042 |

95% asymptotic CI clustered by state: *** significant at .01, ** significant at .05, * significant at .10.

MA*During included but coefficient not reported. All specifications and means are on hospital-quarter level, weighted by sum of discharge weights.

All specifications include hospital fixed effects and time fixed effects for 2004 to 2008, quarterly.

Hospital Bedsize is merged on to the HCUP NIS using AHA data.

Discharges with missing age included in “Total Discharges” specifications but not in “Nonelderly” or “Elderly” specifications.

Source: HCUP NIS 2004–2008 authors’ calculations. See text for more details.

To deal with changes in the patient population directly, we control for observable changes in the health of the patient pool using six sets of risk adjustment variables: demographic characteristics, the number of diagnoses on the discharge record, individual components of the Charlson Score measure of comorbidities, AHRQ comorbidity measures, All-Patient Refined Diagnosis Related Groups (APR-DRGs), and All-Payer Severity-adjusted Diagnosis Related Groups (APS-DRGs). We discuss these measures in depth in Appendix C. These measures are a valid means to control for selection if unobservable changes in health are correlated with the changes in health that we observe. We interpret our risk-adjusted specifications assuming that this untestable condition holds, with the caveat that if it does not hold, we cannot interpret our results without a model of selection.

In results presented in the second panel of Table 2, we estimate our model with a subset of our measures of patient severity as the dependent variable. For this exercise, we focus on the six severity measures that are simplest to specify as outcome variables. This allows us to observe some direct changes in population severity in Massachusetts after the reform. These results present a mixed picture of the underlying patient severity. For four of the six measures, we find no significant change in severity. Two of the severity measures, however, changed significantly after the reform. The results in specification 8 suggest that average severity, measured by the Charlson score, increased following the reform. The model of APS-DRG charge weights (specification 13) suggests the opposite. Taken together, these results and the lack of any change in total discharges do not indicate a consistent pattern of change in the patient population within a given Massachusetts hospital after the reform, relative to before the reform and relative to other states.

Despite this general picture, and in light of the results in columns 8 and 13, for all of our outcome variables we estimate the same model incorporating the vector of covariates X, which flexibly controls for all risk adjusters simultaneously. In general, our results are unchanged by the inclusion of these controls – consistent with the small estimated impact of the reform on the individual severity measures. If anything, we find that the main results are strengthened by the inclusion of covariates as we would expect with increased severity after the reform.

In column 7 of Table 2, we investigate the possibility that either hospital size increased or sampling variation led to an observed larger size of hospitals after the reform relative to before the reform by using a separate measure of hospital size as the dependent variable. This measure, Hospital Bedsize, which we link to our data from the American Hospital Association (AHA) annual survey of hospitals, reports the number of beds in each hospital. The coefficient estimate indicates that hospital size remained very similar after the reform for the hospitals in the sample in both periods. The coefficient, which is not statistically significant, suggests that if anything, there was a decrease in hospital size by 21 beds.

4.2.2. Impact on Resource Utilization and Length of Stay

Moving beyond the question of the extensive margin decision to go to the hospital or to admit a patient to the hospital, we turn to the intensity of services provided conditional on receiving care. The most direct measure of this is the impact of the reform on length of stay. As discussed earlier, we expect length of stay to increase in response to increased coverage if newly insured individuals (or their physician agents) demand more treatment – that is, if moral hazard or income effects dominate. Alternatively, we expect length of stay to decrease in response to increased coverage if newly insured individuals are covered by insurers who are better able to impact care through either quantity restrictions or prices – that is, if insurer bargaining effects dominate. Changes in insurer composition could also change the bargaining dynamic or prices paid. Expansions in Medicaid or subsidized CommCare plans might lead to relatively different payment incentives. Length of stay may also decline if insurance alters treatment decisions, potentially allowing substitution between inpatient and outpatient care or drugs that would not have been feasible without coverage.16 To investigate these competing effects, we estimate models of length of stay following equation (1) in both levels and logs of the dependent variable.

The results in specification 8 of Table 1 show that length of stay decreased by 0.05 days on a base of 5.42 days in the levels specification – a decline of approximately 1 percent. The log specification in column 9 shows a decline of only 0.12 percent. Because taking logs increases the weight on shorter stays, this difference suggests that the reform had a larger impact on longer stays. In unreported results, we also estimate models of the probability that a patient exceeds specific length of stay cutoffs. The results validate the findings in specifications 8 and 9. Patients were roughly 10 percent less likely to stay beyond 13 and/or 30 days in Massachusetts after the reform. These effects were statistically significant. The probabilities of staying beyond shorter cutoffs (2, 5, and 9 days) were unchanged. The results suggest that patients were significantly more likely to stay at least 3 days, though the magnitude of the coefficient suggests an increase of only 1 percent relative to the baseline share.
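The inference from levels versus logs can be illustrated with a hypothetical four-patient example (all stays invented): remove the same 1.5 bed-days either from the single long stay or spread across the short stays. The change in mean length of stay is identical, but the change in mean log length of stay is small when the cut falls on the long stay and large when it falls on the short ones. This is a sketch of the reasoning, not of the estimation.

```python
import math


def pct_change_in_mean(before, after):
    """Percent change in mean length of stay (levels)."""
    return 100 * (sum(after) / len(after) / (sum(before) / len(before)) - 1)


def pct_change_in_log_mean(before, after):
    """Percent change implied by the change in mean log length of stay."""
    d = (sum(math.log(a) for a in after) / len(after)
         - sum(math.log(b) for b in before) / len(before))
    return 100 * (math.exp(d) - 1)


baseline = [2.0, 3.0, 4.0, 30.0]    # hypothetical stays, in days
cut_long = [2.0, 3.0, 4.0, 28.5]    # 1.5 days removed from the long stay
cut_short = [1.5, 2.5, 3.5, 30.0]   # 1.5 days removed across the short stays

for label, after in [("long stay cut", cut_long), ("short stays cut", cut_short)]:
    print(f"{label}: levels {pct_change_in_mean(baseline, after):.2f}%, "
          f"logs {pct_change_in_log_mean(baseline, after):.2f}%")
```

When the percent decline in levels exceeds the decline in logs, as with roughly 1 percent versus 0.12 percent here, the pattern resembles the long-stay case.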

To address the concern that our estimated reduction in length of stay was driven by differential selection of healthier patients into the hospital after the reform in Massachusetts, we report results controlling for risk adjustment variables in the last row of both panels in Table 1. The estimated decreases in length of stay and log length of stay are at least twice as large in the specifications that include risk adjusters. Holding the makeup of the patient pool constant, length of stay clearly declined. The comparison between the baseline and risk-adjusted results suggests that patients requiring longer stays selected into the patient pool in post-reform Massachusetts.

One plausible mechanism for the decline in length of stay is limited hospital capacity. As with patient severity, capacity constraints are interesting in their own right, but they could bias our estimates of other reform impacts. Capacity itself is endogenous and may have changed with the reform, as we saw in the model with number of beds as the dependent variable.17

Our results can provide some insight into whether changes in length of stay seem to be related to capacity by comparing the additional capacity that resulted from the decrease in length of stay to the magnitude of the change in discharges. Because hospitals care about total changes in capacity, not just among the nonelderly, we use estimates for the change in length of stay among the entire population, a decrease of 0.06 days on a base of 5.88 days. The new, lower average length of stay is 5.88 − 0.06 = 5.82 days. With the pre-reform average of 5,616 quarterly discharges per Massachusetts hospital, this would make room for an extra (0.06 × 5,616)/(5.88 − 0.06) ≈ 58 discharges. An extra 58 discharges exceeds the estimated increase of 19 discharges (from column 1 of Table 2). We note, however, that the upper bound of the 95 percent confidence interval for the estimated change in total discharges (220) is greater than 58. Thus, decreased length of stay could have been a response to increased supply-side constraints, although this explanation would be more convincing if the point estimate for the change in the number of discharges were positive and closer to the change in the supply-side constraint of 58.18
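The capacity calculation holds total bed-days fixed and asks how many additional patients the freed bed-days could accommodate at the new, shorter average stay. A minimal sketch of that arithmetic under this simplifying assumption, using the all-ages length-of-stay change and the pre-reform Massachusetts quarterly discharge mean from Table 2; the function name is hypothetical.

```python
def extra_discharges(delta_los: float, base_los: float, discharges: float) -> float:
    """Additional discharges per hospital-quarter that a fall in average length
    of stay could accommodate, holding total bed-days fixed."""
    freed_bed_days = delta_los * discharges   # bed-days released by shorter stays
    new_los = base_los - delta_los            # average stay after the decline
    return freed_bed_days / new_los


room = extra_discharges(delta_los=0.06, base_los=5.88, discharges=5616)
print(f"Room for about {room:.0f} extra discharges per hospital-quarter")
```

The result (about 58) can then be compared with the estimated change in discharges (19) and the upper end of its confidence interval (220).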

4.2.3. Impact on Access and Preventive Care

One potentially important role of insurance is to reduce the cost of obtaining preventive care that can improve health and/or reduce future inpatient expenditures. In this case, moral hazard can be dynamically efficient by increasing up front care that results in future cost reductions (Chernew et al. (2007)). One manifestation of a lack of coverage that has received substantial attention is the use of the emergency room (ER) as a provider of last resort. If people do not have a regular point of access to the health care system and, instead, go to the emergency room only when they become sufficiently sick, such behavior can lead them to forego preventive care and potentially increase the cost of future treatment. In addition, emergency room care could be ceteris paribus more expensive to provide than primary care because of the cost of operating an ER relative to other outpatient settings. Although we do not observe all emergency room discharges, we can examine inpatient admissions from the emergency room as a rough measure of emergency room usage. A decrease in admissions from the ER after the reform is evidence that a subset of the population that previously accessed inpatient care through the emergency room accessed inpatient care through a traditional primary care channel or avoided inpatient care entirely (perhaps by obtaining outpatient care).

In specification 10 of Table 1, we examine the impact of the reform on discharges for which the emergency room was the source of admission. The reform resulted in a 2.02 percentage point reduction in the fraction of admissions from the emergency room. Relative to an initial mean in Massachusetts of 38.7 percent, this estimate represents a 5.2 percent decline in inpatient admissions originating in the emergency room. The risk-adjusted estimate reported in the bottom row of specification 10 is very similar.19
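Converting a difference-in-differences coefficient on a 0/1 outcome into a percent change is a single division by the pre-reform mean. A minimal sketch, assuming coefficients and means are expressed as fractions (for example, −0.0202 for a 2.02 percentage point drop); the helper `relative_change` is hypothetical. The same conversion reproduces the 12.2 percent figure for the lowest income quartile and the 2.7 percent figure for risk-adjusted preventable admissions discussed below.

```python
def relative_change(coef: float, baseline_mean: float) -> float:
    """Percent change implied by a coefficient on a 0/1 outcome.

    The coefficient is a change in a share; dividing it by the pre-reform
    mean rescales it to a percent of that mean.
    """
    return 100.0 * coef / baseline_mean


print(round(relative_change(-0.0202, 0.387), 1))   # -5.2  (ER admissions, Table 1)
print(round(relative_change(-0.0570, 0.4665), 1))  # -12.2 (lowest income quartile, Table 5)
print(round(relative_change(-0.0023, 0.0838), 1))  # -2.7  (overall PQI, risk adjusted, Table 3)
```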

As a further specification check, we decompose the effect by zip code income quartile.20 To the extent that income is a proxy for ex ante coverage levels, we expect larger declines in inpatient admissions originating in the ER among relatively poorer populations. We present these results in Table 5. We find that the reduction in emergency admissions was particularly pronounced among people from zip codes in the lowest income quartile. As reported in the second panel of Table 5, the coefficient estimate implies a 12.2 percent reduction (−0.0570/0.4665 ≈ −0.122), significant at the 1 percent level, in inpatient admissions from the emergency room. The effects in the top two income quartiles, on the other hand, are not statistically significantly different from zero (coefficient estimates of −0.0107 and 0.0098 for the third and fourth income quartiles, respectively). Taken together, these results suggest that the reform did reduce use of the ER as a point of entry into inpatient care, and that this effect was driven by expanded coverage, particularly among lower-income populations.

Our results focus solely on emergency room visits that resulted in a hospitalization. This group should be of particular interest to economists and policy makers. These patients are relatively sick, since an admission was ultimately deemed necessary, and would likely benefit from access to outpatient or other care. Furthermore, this is likely to be a much higher-cost group, for whom improvements in access may yield efficiency gains. Nevertheless, many ER visits do not result in admission to the hospital. In subsequent work, Miller (2011) studies the universe of ER visits in Massachusetts directly; her study is a useful complement to our results on this question. She finds reductions in ER visits, primarily for preventable and deferrable conditions that would not result in inpatient admissions.

In addition to the use of the ER, we are interested in measuring whether providing health insurance directly affects access to and use of preventive care. To investigate the impact of the reform on prevention, we use a set of measures developed by the Agency for Healthcare Research and Quality (AHRQ): the prevention quality indicators (PQIs).21 See Appendix D for more details on these measures. These measures were developed to gauge the quality of outpatient care using inpatient data, which are more readily available. The appearance in the inpatient setting of certain preventable conditions, such as appendicitis that results in perforation of the appendix or diabetes that results in lower-extremity amputation, is evidence that adequate outpatient care was not obtained. Each prevention quality measure is an indicator variable that flags the presence of a diagnosis that should not be observed in inpatient data if adequate outpatient care was obtained.

One concern in using these measures over a relatively narrow window of time is that prevention might not have any observable impact on inpatient admissions within that window. However, validation of these measures with physicians suggests that PQI admissions are likely due to short-term management of disease in an outpatient setting (e.g., cleaning and treating diabetic foot ulcers to avoid amputations due to gangrene), which we would expect to manifest within the post-reform period.22 Interpreted with a different emphasis, these measures also capture impacts on health through averted hospitalizations. We run our difference-in-differences estimator separately for each quality measure, using the binary numerator as the outcome variable and the denominator to select the sample.
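The sample-selection logic (denominator defines the sample, numerator is the 0/1 outcome) can be illustrated with a stripped-down estimator. This is a simplification, not the authors' specification: their regressions include hospital fixed effects, quarterly time fixed effects, and an MA*During term, whereas the sketch below collapses everything into a weighted 2x2 comparison of means. The `Discharge` record and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Discharge:
    ma: bool              # discharge from a Massachusetts hospital
    after: bool           # discharge falls in the post-reform period
    in_denominator: bool  # discharge is at risk for this PQI
    pqi_flag: int         # 1 if the PQI numerator condition is present, else 0
    weight: float = 1.0   # discharge weight (the paper weights all means)


def weighted_share(rows: List[Discharge]) -> float:
    """Weighted mean of the 0/1 PQI flag; fails on an empty cell."""
    total = sum(d.weight for d in rows)
    return sum(d.weight * d.pqi_flag for d in rows) / total


def did_pqi(discharges: Iterable[Discharge]) -> float:
    """(MA after - MA before) - (other states after - other states before)."""
    # The denominator selects the sample; discharges outside it are dropped.
    sample = [d for d in discharges if d.in_denominator]

    def cell(ma: bool, after: bool) -> float:
        return weighted_share([d for d in sample if d.ma == ma and d.after == after])

    return (cell(True, True) - cell(True, False)) - (cell(False, True) - cell(False, False))


# Toy data (invented for illustration): the PQI rate falls from 0.5 to 0 in MA
# and stays at 0.5 elsewhere. The last row is outside the denominator.
toy = [
    Discharge(True, False, True, 1), Discharge(True, False, True, 0),
    Discharge(True, True, True, 0), Discharge(True, True, True, 0),
    Discharge(False, False, True, 1), Discharge(False, False, True, 0),
    Discharge(False, True, True, 1), Discharge(False, True, True, 0),
    Discharge(True, True, False, 1),
]
print(did_pqi(toy))  # -0.5
```

Dropping the out-of-denominator row matters: if it were kept, the post-reform Massachusetts rate would rise from 0 to 1/3 and the estimate would change.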

Table 3 presents regression results for each of the prevention quality indicators. Each regression is a separate row of the table. In the first row, the outcome is the “Overall PQI” measure suggested by AHRQ – a dummy variable that indicates the presence of any of the prevention quality indicators on a specific discharge. PQI 02 is excluded from this measure because it has a different denominator. We find little overall effect in the base specification. One advantage of examining this measure relative to the individual component measures is that doing so mitigates concerns about multiple hypothesis testing. The following rows show that of the 13 individual PQI measures, 3 exhibit a statistically significant decrease, 9 exhibit no statistically significant change, and 1 exhibits a statistically significant increase. Taken together, these results suggest that there may have been small impacts on preventive care, but little overall effect in reducing the number of preventable admissions.
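Following the text's description, the overall measure is a single dummy that equals one when any component PQI other than PQI 02 is present on a discharge. The sketch below assumes per-discharge component flags are available in a dictionary keyed by PQI number; it mirrors the description above rather than AHRQ's official software, and `overall_pqi` is a hypothetical name.

```python
# Component PQIs listed in Table 3. PQI 02 (perforated appendix) is left out
# of the composite because, as the text notes, its denominator differs.
COMPOSITE_PQIS = ("01", "03", "05", "07", "08", "10", "11", "12", "13", "14", "15", "16")


def overall_pqi(flags: dict) -> int:
    """Return 1 if any composite component is flagged on the discharge, else 0."""
    return int(any(flags.get(code, 0) for code in COMPOSITE_PQIS))


print(overall_pqi({"02": 1}))           # 0: PQI 02 alone does not count
print(overall_pqi({"02": 1, "15": 1}))  # 1: an adult asthma admission counts
```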

Table 3.

Prevention Quality Indicators in the NIS

| Prevention Quality Indicator | Improvement? | MA*After | Improvement? | MA*After, Risk Adjusted | N | Mean, MA Before |
|---|---|---|---|---|---|---|
| PQI 90 Overall PQI | | −0.0002 [−0.0016, 0.0011] | Y | −0.0023 [−0.0036, −0.0009]*** | 17,674,454 | 0.0838 |
| PQI 01 Diabetes Short-term Comp. Admission | | −0.0001 [−0.0002, 0.0001] | | −0.0002 [−0.0005, 0.0001] | 17,674,454 | 0.0058 |
| PQI 02 Perforated Appendix Admission Rate | Y | −0.0463 [−0.0557, −0.0368]*** | Y | −0.0072 [−0.0133, −0.0012]** | 189,588 | 0.2457 |
| PQI 03 Diabetes Long-Term Comp. Admission | | 0.0000 [−0.0002, 0.0003] | Y | −0.0009 [−0.0011, −0.0007]*** | 17,674,454 | 0.0083 |
| PQI 05 COPD Admission Rate | | −0.0002 [−0.0005, 0.0001] | Y | −0.0005 [−0.0008, −0.0002]*** | 17,674,454 | 0.0097 |
| PQI 07 Hypertension Admission Rate | | 0.0001 [−0.0002, 0.0004] | | 0.0001 [−0.0002, 0.0004] | 17,674,454 | 0.0020 |
| PQI 08 CHF Admission Rate | | 0.0000 [−0.0005, 0.0005] | | −0.0003 [−0.0006, 0.0001] | 17,674,454 | 0.0109 |
| PQI 10 Dehydration Admission Rate | | 0.0000 [−0.0002, 0.0002] | Y | −0.0005 [−0.0007, −0.0003]*** | 17,674,454 | 0.0054 |
| PQI 11 Bacterial Pneumonia Admission Rate | | 0.0001 [−0.0005, 0.0006] | | 0.0004 [−0.0002, 0.0009] | 17,674,454 | 0.0172 |
| PQI 12 Urinary Tract Infection Admission Rate | | −0.0001 [−0.0004, 0.0002] | | 0.0000 [−0.0003, 0.0003] | 17,674,454 | 0.0070 |
| PQI 13 Angina without Procedure Admission Rate | N | 0.0005 [0.0004, 0.0007]*** | N | 0.0005 [0.0004, 0.0007]*** | 17,674,454 | 0.0014 |
| PQI 14 Uncontrolled Diabetes Admission Rate | | 0.0001 [−0.0001, 0.0003] | | −0.0001 [−0.0003, 0.0001] | 17,674,454 | 0.0007 |
| PQI 15 Adult Asthma Admission Rate | Y | −0.0006 [−0.0009, −0.0002]*** | Y | −0.0006 [−0.0009, −0.0003]*** | 17,674,454 | 0.0146 |
| PQI 16 Rate of Lower-extremity Amputation | Y | −0.0005 [−0.0006, −0.0004]*** | Y | −0.0006 [−0.0007, −0.0005]*** | 17,674,454 | 0.0023 |

95% asymptotic CIs, clustered by state, in brackets. *** Significant at .01, ** significant at .05, * significant at .10.

Y and N indicate statistically significant gains and losses, respectively.

MA*During is included, but its coefficient is not reported. All specifications and means are weighted using discharge weights.

All specifications include hospital fixed effects and time fixed effects for 2004 to 2008, quarterly.

Risk adjusters include six sets of risk adjustment variables: demographic characteristics, the number of diagnoses on the discharge record, individual components of the Charlson Score measure of comorbidities, AHRQ comorbidity measures, All-Patient Refined (APR)-DRGs, and All-Payer Severity-adjusted (APS)-DRGs. See Appendix 2.

Regressions in this table estimated in the sample of nonelderly discharges.

Source: HCUP NIS 2004–2008 authors’ calculations. See text for more details.
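The tally in the text (of the 13 individual PQIs, 3 decrease, 9 show no significant change, and 1 increases) can be checked directly against the 95 percent confidence intervals printed in Table 3. The sketch below labels an estimate significant when its interval excludes zero; it works from the rounded intervals in the table, and the names `BASE_CI`, `RISK_ADJ_CI`, and `tally` are our own. A decrease in preventable admissions corresponds to a "Y" (improvement) in the table.

```python
from collections import Counter

# 95% CIs for MA*After from Table 3, base specification.
BASE_CI = {
    "01": (-0.0002, 0.0001), "02": (-0.0557, -0.0368), "03": (-0.0002, 0.0003),
    "05": (-0.0005, 0.0001), "07": (-0.0002, 0.0004), "08": (-0.0005, 0.0005),
    "10": (-0.0002, 0.0002), "11": (-0.0005, 0.0006), "12": (-0.0004, 0.0002),
    "13": (0.0004, 0.0007), "14": (-0.0001, 0.0003), "15": (-0.0009, -0.0002),
    "16": (-0.0006, -0.0004),
}
# 95% CIs for MA*After from Table 3, risk-adjusted specification.
RISK_ADJ_CI = {
    "01": (-0.0005, 0.0001), "02": (-0.0133, -0.0012), "03": (-0.0011, -0.0007),
    "05": (-0.0008, -0.0002), "07": (-0.0002, 0.0004), "08": (-0.0006, 0.0001),
    "10": (-0.0007, -0.0003), "11": (-0.0002, 0.0009), "12": (-0.0003, 0.0003),
    "13": (0.0004, 0.0007), "14": (-0.0003, 0.0001), "15": (-0.0009, -0.0003),
    "16": (-0.0007, -0.0005),
}


def classify(lo: float, hi: float) -> str:
    """Label an estimate by whether its 95% CI lies entirely below or above zero."""
    if hi < 0:
        return "decrease"
    if lo > 0:
        return "increase"
    return "no significant change"


def tally(cis: dict) -> tuple:
    """Return (decreases, no significant change, increases)."""
    counts = Counter(classify(lo, hi) for lo, hi in cis.values())
    return counts["decrease"], counts["no significant change"], counts["increase"]


print(tally(BASE_CI))      # (3, 9, 1)
print(tally(RISK_ADJ_CI))  # (6, 6, 1)
```

The risk-adjusted tally shows more individual declines, in line with the second column of Table 3.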

We also estimate the model including controls for severity. If the impact of insurance or outpatient care on the presence of a PQI varies with patient severity, the small estimated effects may mask a change in the composition of the inpatient population after the reform. That is, if relatively severe patients are more likely to be hospitalized with a PQI regardless of the outpatient care they receive, then estimates that hold the patient population fixed provide a better estimate of the impact of the reform on preventive care. These results are presented in the second column of Table 3. For the overall PQI measure, the coefficient on MA*After is −0.0023 and is statistically significant at the 1 percent level. Compared to the baseline rate of PQIs (0.0838), this corresponds to a decline of 2.7 percent in preventable admissions. Results for the individual measures tell a similar story. Taken together, these results suggest that the reform had a small overall effect on preventable admissions but that, holding the severity of the population fixed, there were significant declines. We find that, if anything, the inpatient population was more severe after the reform. Thus, comparing the coefficients with and without risk adjustment suggests that the effect of the reform on reducing preventable admissions was largest among relatively less severe patients.
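The compositional argument can be made concrete with a toy two-group example. All numbers below are invented for illustration and are not estimates from the paper; the sketch also uses a single before/after comparison rather than a difference-in-differences, and holds the case mix fixed by reweighting rather than by the regression risk adjusters the paper uses. Low-severity patients see fewer PQI admissions, high-severity patients are unaffected, and the inpatient mix shifts toward high severity.

```python
# Hypothetical PQI rates and severity shares (illustrative only).
rates_before = {"low": 0.05, "high": 0.15}
rates_after = {"low": 0.04, "high": 0.15}    # only low-severity patients improve
shares_before = {"low": 0.8, "high": 0.2}
shares_after = {"low": 0.7, "high": 0.3}     # inpatient mix becomes more severe


def aggregate(rates: dict, shares: dict) -> float:
    """Population PQI rate as a share-weighted average of group rates."""
    return sum(rates[g] * shares[g] for g in rates)


# Raw change lets the case mix shift; fixed-mix change holds it at pre-reform shares.
raw_change = aggregate(rates_after, shares_after) - aggregate(rates_before, shares_before)
fixed_mix_change = aggregate(rates_after, shares_before) - aggregate(rates_before, shares_before)
print(f"{raw_change:+.3f} {fixed_mix_change:+.3f}")  # +0.003 -0.008
```

Here the raw rate rises slightly even though every group's rate is flat or falling, which is the pattern the text describes: an unadjusted estimate near zero alongside a decline once severity is held fixed, concentrated among less severe patients.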

To supplement our analysis of preventive care, we also estimate models of prevention using data from the BRFSS for 2004–2009. The BRFSS is a state-based system of health surveys that collects information on health risk behaviors, preventive practices, and health care access. For more information on the BRFSS, see Appendix E. Column 1 of Table 4 presents the difference-in-differences estimate of the impact of reform on the share of respondents reporting that they have health insurance coverage. Consistent with the results from the CPS, we find a roughly 5 percent increase in coverage in Massachusetts after the reform, relative to before the reform and relative to other states. The remaining seven columns present results relevant to outpatient and preventive care. In column 2, we see a significant increase of 1.26 percent in individuals reporting that they had a personal doctor. The reform also led to a decrease of 3.06 percentage points in individuals reporting that they could not access care due to cost. Columns 4–8 present difference-in-differences estimates of the impact of the reform on a set of direct measures of preventive care. We find little overall impact in the population; the only statistically significant estimate is for the impact of the reform on receiving a flu vaccination.

4.2.4. Impact on Hospital Costs

In this section, we investigate the impact of the reform on hospital costs. As discussed above, the cost impact depends on the relative changes in incentives facing hospitals and physicians in treatment and investment decisions. In the presence of moral hazard or income effects, we expect that the large coverage expansion in Massachusetts would lead the newly insured to seek additional care and, conditional on use, more expensive care (Pauly (1968); Manning et al. (1987); Kowalski (2009)). Insurers, however, are also able to negotiate lower prices for care and, in the case of managed care plans, to influence treatment decisions directly through quantity limits (e.g., prior authorization rules that require a physician to obtain the insurer's approval before a procedure is reimbursed) (Cutler et al. (2000)). Increased coverage through Medicaid or other insurers with relatively low reimbursement could mute the incentives for the provision of costly care. Furthermore, insurance could alter the way hospital care is produced, making transitions in care easier or facilitating substitution toward lower-cost outpatient settings or drugs. Thus, increases in coverage could instead produce a countervailing decrease in cost. Consequently, it is an empirical question whether increases in health insurance coverage among the hospitalized population raise or lower cost.

To measure hospital costs directly, we obtained hospital-level all-payer cost-to-charge ratios. Hospitals are required to report these ratios to Medicare annually. The numerator of the ratio represents the annual total costs of operating the hospital, such as overhead costs, salaries, and equipment. Costs include only the portion of hospital operations devoted to inpatient care; they do not include the cost of uncompensated care, which presumably declined with the increase in insurance coverage. The denominator of the ratio represents annual total charges across all payers, which we observe disaggregated by discharge in the NIS. Combining the ratio with our information on total charges from the NIS, we can obtain an accurate measure of total costs at the hospital level. Several papers in the economics literature measure costs at the discharge level by deflating total charges by the cost-to-charge ratio (see Almond et al. (2010)). However, since observed costs do not vary at any level finer than the hospital, this approach requires the strong assumption that the ratio of costs to charges is the same for all discharges within a hospital. Since we are interested in hospital-level costs, we need not impose this assumption, and we can focus on results at the hospital level.
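The hospital-level calculation multiplies each hospital's cost-to-charge ratio by its total charges summed over NIS discharges. The sketch below assumes one ratio per hospital (ignoring the year index of the annual Medicare reports), and its charges and ratios are invented. It also shows why discharge-level deflation adds no cost information: the deflated discharge costs sum to exactly the hospital total, and their allocation across discharges simply mirrors charges under the equal-ratio assumption.

```python
from collections import defaultdict


def hospital_costs(charges, ccr_by_hospital):
    """Total inpatient cost per hospital = cost-to-charge ratio x total charges.

    `charges` is an iterable of (hospital_id, discharge_charge) pairs; one
    ratio per hospital is assumed (the annual year index is ignored here).
    """
    totals = defaultdict(float)
    for hosp, charge in charges:
        totals[hosp] += charge
    return {h: ccr_by_hospital[h] * total for h, total in totals.items()}


def deflated_discharge_costs(charges, ccr_by_hospital):
    """Discharge-level 'costs' under the assumption of a common within-hospital ratio."""
    return [(h, ccr_by_hospital[h] * c) for h, c in charges]


# Invented example: hospital A has two discharges, hospital B has one.
charges = [("A", 10000.0), ("A", 30000.0), ("B", 20000.0)]
ccr = {"A": 0.5, "B": 0.25}
print(hospital_costs(charges, ccr))  # {'A': 20000.0, 'B': 5000.0}
```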