Abstract

Keeping track of self-executed facial expressions is essential for the ability to correctly interpret and reciprocate social expressions. However, little is known about neural mechanisms that participate in self-monitoring of facial expression. We designed a natural paradigm for social interactions where a monkey is seated in front of a peer monkey that is concealed by an opaque liquid crystal display shutter positioned between them. Opening the shutter for short durations allowed the monkeys to see each other and encouraged facial communication. To explore neural mechanisms that participate in self-monitoring of facial expression, we simultaneously recorded the elicited natural facial interactions and the activity of single neurons in the amygdala and the dorsal anterior cingulate cortex (dACC), two regions that are implicated in the decoding of others’ gestures. Neural activity in both regions was temporally locked to distinctive facial gestures, and close inspection of time lags revealed activity that either preceded (production) or lagged (monitor) initiation of facial expressions. This result indicates that single neurons in the dACC and the amygdala hold information about self-executed facial expressions and demonstrates an intimate overlap between the neural networks that participate in decoding and production of socially informative facial information.

Keywords: face-to-face interaction, spike triggered average, facial-expression production, amygdala-cingulate interaction

Accurate decoding of social interaction requires information about all participants, including one’s own behavior. We would interpret a smile differently if we knew that we smiled first (or not) and, similarly, a threatening face has a different meaning if we threatened first. Computationally, this ambiguity implies that information about the observed facial expression and one’s own expression should converge in the network to incorporate these complementary sources of information. However, little is known about the neural mechanisms that enable such integration.

Successful decoding of a facial expression should allow a rapid adequate response to the outcome that is implied by this meaningful expression. Accordingly, the amygdala, a structure associated with representing the learned valence of sensory inputs (1, 2), has been implicated in the decoding and processing of various facial expressions (3). Notably, bilateral amygdala lesion selectively impairs recognition of fear expressions (4), which possibly signal the existence of an immediate threat. In addition, amygdala responses to fear and other facial expressions are modulated by social context (5, 6) (e.g., gaze direction), suggesting that the amygdala also participates in the decoding processes of a wider range of emotionally salient facial signals.

The dorsal anterior cingulate cortex (dACC) is hypothesized to synthesize information about reinforcers with the current behavioral goal (7) and contributes to the resolution of emotional conflicts (8). Like the amygdala, the dACC responds rapidly to facial expressions (9); yet emotional valence differentially modulates amygdala and dACC responses to sad and happy facial expressions (9, 10), suggesting differential sensitivity of the dACC and amygdala to socioemotional cues. Anatomically, direct connections from the amygdala (11, 12) and the dACC (13) to motoneurons in the facial nucleus of the pons and to facial areas in the motor cortex suggest that these two regions are capable of regulating production of facial expressions.

In line with this suggestion, studies have shown the involvement of the amygdala and anterior cingulate cortex (ACC) during observation and artificial (instructed) execution of facial expressions (14–17). In addition, the dACC has been directly implicated in the volitional control of emotional vocal utterances (18). However, it is unclear whether the amygdala and the ACC are recruited during spontaneous facial interactions under natural conditions, and what the temporal relationships between single-cell activity and self-facial expressions are. We therefore recorded neuronal activity in these regions during a unique natural social interaction paradigm.

Results

To assess the roles of the amygdala and the dACC in natural social behaviors, we designed a face-to-face interaction paradigm (19). Two monkeys were seated facing each other with an opaque liquid crystal display (LCD) shutter positioned in between (Fig. 1A). Every 60 ± 15 s, the LCD shutter turned clear for 5 s (by electrical pulse, <2 ms onset/offset) to encourage spontaneous facial interactions between the monkeys (20). While the monkeys engaged in the task, we captured continuous video of their faces and simultaneously recorded the activity of neurons from the amygdala and the dACC of one of the monkeys (Fig. S1).

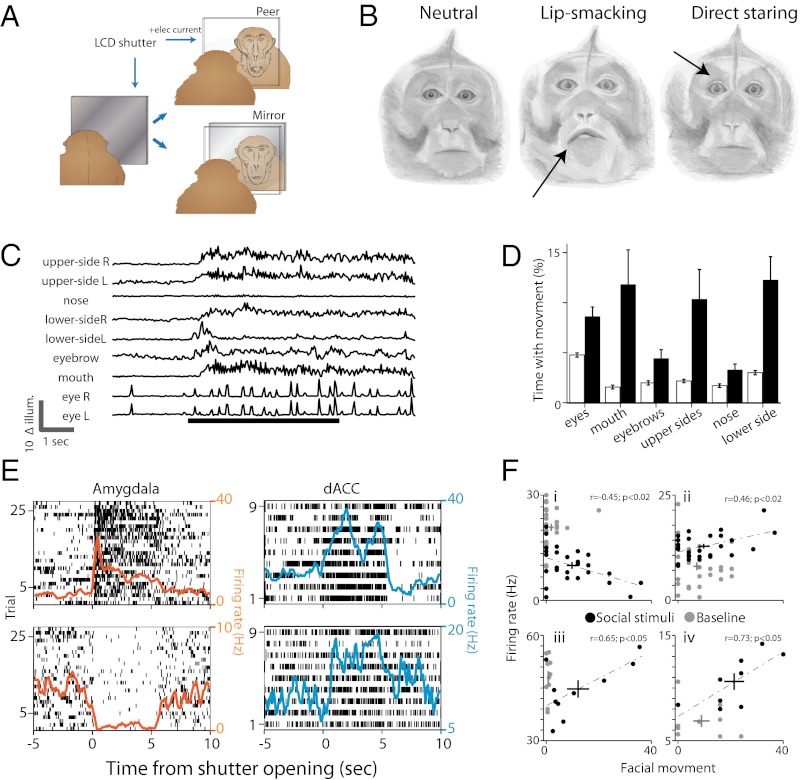

Fig. 1.

Behavioral paradigm and neural activity. (A) Monkeys were seated in front of a fast LCD shutter that became transparent for 5 s every 60 ± 15 s. Either a peer monkey or a mirror was positioned behind the LCD in different sessions. The field of view was restricted so that only the face could be seen. (B) Facial expressions remained neutral during the times the shutter was closed (Left), and we observed reoccurrence of two typical expressions upon opening of the shutter (Center, eyebrow lifting and staring; Right, lip smacking). Such expressions have been widely described in these monkeys. Drawings by Sharon Kaufman, based on real photos from our setup. (C) Illumination changes at different regions of the face increased during shutter opening (bottom black bar) and persisted after the shutter was closed. Shown are traces of illumination changes at nine different facial regions during a trial. (D) Facial movement was evident during shutter opening. Filled bars represent the proportion of time with significant movement at each facial region during shutter opening. Open bars represent the proportion during closed shutter (P < 0.001, ANOVA). (E) Two representative neurons from the amygdala (Left) and the dACC (Right) that significantly changed their firing rate when the shutter opened. Neurons (amygdala n = 58, dACC n = 46) modulated their firing rate immediately after shutter opening and either maintained their activity during the 5 s or had a phasic decaying response. Shown are raster plots overlaid by the PSTH. (F) Single neurons with firing rates (y axis) that are significantly correlated (dashed line) with facial movement only in the presence of visual stimuli (dark dots). In all four examples, the correlations between firing rate and movement during the baseline period were not significant. Plus represents center of the mass ± SEM.

Facial expression remained mostly still (Fig. 1B, Left) when the shutter was closed. Opening the shutter induced two typical (21, 22) facial expressions: (i) Direct staring (Fig. 1B, Center): consisted of widened eyelids, multiple stretches of the eyebrows, and increased eye movements. Often, direct staring was accompanied by backward flapping of the ears toward the skull. (ii) Lip smacking (Fig. 1B, Right): involved protrusion of the lips and repetitive contraction of the muscles surrounding them (orbicularis oris and mentalis). Frequently, lip smacking also involved movement of the lower cheek’s hair and backward flapping of the ears. Occurrences of direct staring and lip smacking were not independent and, in many trials, the monkeys expressed both. We validated these observations by using automatic clustering of the video content: Movies of the facial expressions were analyzed by extracting the net illumination changes (Δillum) at multiple facial regions of interest (fROI). This approach allowed automatic and unbiased assessment of facial movement at the camera's temporal resolution (40 ms). Principal component analysis of the correlations between the different fROI revealed three distinctive expression clusters that correspond to the three aforementioned facial expressions: neutral, direct staring, and lip smacking (Fig. S2). Accordingly, opening the shutter reliably induced an increase in movement in multiple fROI, in a manner that is specific to peer viewing (Fig. 1 C and D; P < 0.001, condition main effect, two-way ANOVA and post hoc comparisons; Fig. S3A).

Concurrently, shutter opening also induced rapid and tonic changes in firing rates of amygdala and dACC single neurons (Fig. 1E and Fig. S3 D and E; amygdala, n = 58; dACC, n = 46). Response duration and response latencies varied among neurons, yet we found no evidence for consistent differences between amygdala and dACC (Fig. S3 D and E). We next sought evidence that information regarding one’s own facial expression and visual input converge on single neurons in the amygdala and the dACC. To this end, we constructed a multivariate linear regression model for each neuron, with movement (Δillum) and shutter state (open/close) as predictors. The interaction factor (movement × state) was significantly correlated with the firing rate in 34% of amygdala neurons and 42% of dACC neurons (Fig. 1F), indicating that many single neurons’ responses to facial movement are conditioned on visual input of social stimuli.

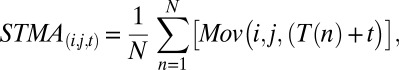

To identify neural responses that hold information about self-facial expressions, we computed spike-triggered movement averages (STMA) on movement (Δillum) in each video pixel of the monkey face (Fig. 2). STMA was evaluated from 480 ms before spike to 480 ms after spike and has two main advantages: First, it allows a hypothesis-free natural identification of which facial areas move in relation to the occurrence of spikes in a specific neuron; and second, it allows identification of which spikes precede facial movement, and which follow movement. Because of the precise locking of activity in this analysis, the results cannot be attributed to facial expression of the peer, i.e., even if a specific gesture typically reciprocates a specific peer expression, the loose functional and temporal reliability between two facial movements would be averaged out in this method. To further assure this finding, we used two conditions: peer viewing behind the shutter, and mirror viewing behind the shutter. In the peer condition, spikes that occur immediately after facial movement cannot be attributed to perception of the peer response, because such a response would require >300 ms of peer response time. In the mirror condition, spikes that occur before facial movement cannot be attributed to peer expression (there is no real peer and hence all movements are taken into account in the analysis). Notice that macaques have been repeatedly reported to fail the mirror self-recognition test (23, 24) (but see ref. 25), yet strongly respond to self views (26), in line with the increased facial expressions we observed in the mirror condition (Fig. S3B; P < 0.01, ANOVA) and comparable to the peer condition.

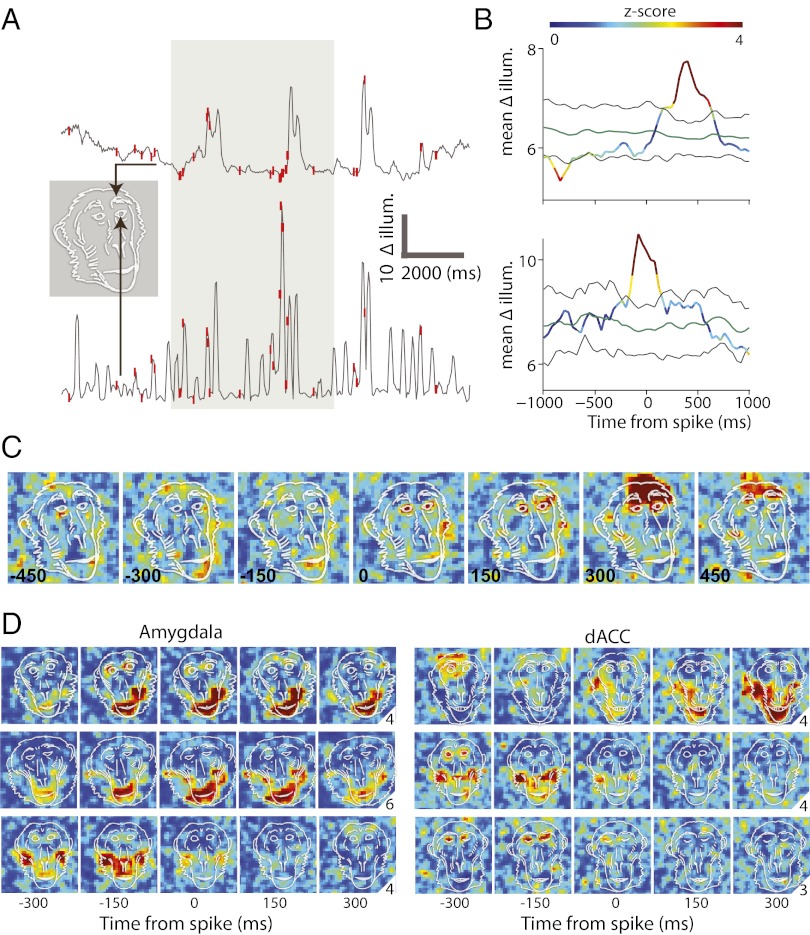

Fig. 2.

STMAs reveal locking to self facial expressions. (A) Shown are movement/illumination traces from two different pixels (arrows) taken from a single trial and overlaid by single spike discharge times (red ticks). The gray-marked area indicates shutter opening. (B) STMA was computed for the spikes that occur during shutter opening. STMA was normalized (z-scored) and color-coded by the distribution of 100 within-trial shufflings of spikes and recomputation of STMAs. Green and black lines indicate the mean and 95% confidence intervals of the shuffled STMAs. (C) STMAs were computed for all of the pixels within the frame and color-coded by the corresponding z-score value of the pixel’s STMA at each of the different time delays from spike occurrence (indicated by the number at the lower-left corners). Note that significant pixels before spike discharge (time ≤ 0; lower example) indicate that the cell fired mostly following movement, and significant pixels after spike discharge (time > 0; upper example) indicate that the cell’s spikes mostly precede movement. The overlaid monkey face is not a scheme but an exact outline of the real frame. (D) Six more examples: STMAs of three amygdala (Left) and three dACC (Right) cells overlaid by real contours of the monkey’s face in the same session. Numbers at the lower-right corner correspond to the maximum value of the color code used in each example. Most cells had an STMA with clear responses at pixels related to the mouth, eyes, or eyebrows.

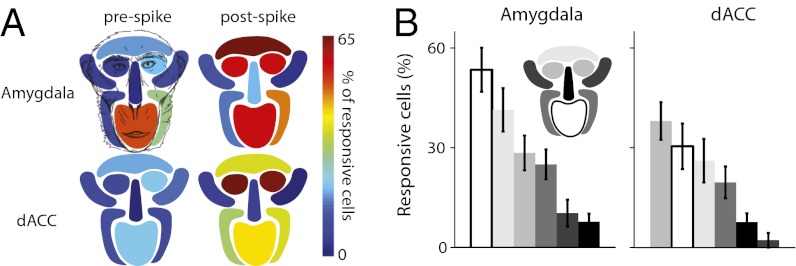

STMA values at each of the fROI indicated that movement at socially expressive facial regions was associated with coordinated firing of as many as 70% of the neurons in the dACC and the amygdala (Fig. 3A; P < 0.0001, ANOVA, Tukey–Kramer corrected). Movements of the eyes, eyebrows, and the mouth were more widely represented in these regions (Fig. 3B; P < 0.01, ANOVA for both). We did not find, however, major differences between the amygdala and the dACC. In fact, close inspection revealed only two small effects: More neurons were locked to the mouth in the amygdala (Fig. 3B, Left; P < 0.05, unpaired Student's t test) and eye-related neurons responded earlier in the dACC (Fig. 3B, Right; P < 0.05, t test), yet neither effect survived correction for multiple comparisons.

Fig. 3.

Proportion of cells with significant STMA at each facial region. (A) The proportions of cells with significant prespike STMA (from peer-monkey sessions) and postspike STMA (from mirror sessions, see main text for rationale) during shutter opening are plotted separately for each of the nine fROI. Note that the highest proportions of significant STMAs appeared at facial regions that are involved in the production of social facial expressions: eyes, eyebrows, and the mouth. (B) Same data, but sorted by proportion. Facial regions are represented by different colors according to the Inset face map.

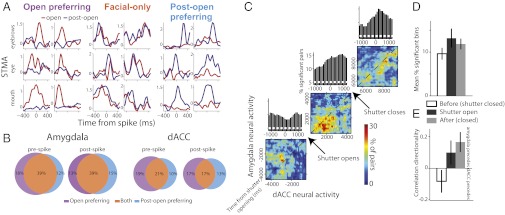

Immediately after shutter closure, the monkeys continued to produce facial expressions (Fig. 1C and Fig. S3C), suggesting that they remain in a cognitive state of social interaction for a few more seconds after the visual stimulus has been removed. This provides a time window in which our hypothesis, that neurons hold information about self expressions, can be further tested. We compared the STMA during shutter opening to that computed after shutter closure and identified open-preferring cells (cells with significant STMA only during shutter opening; Fig. 4A, Left) and postopen-preferring cells (cells with significant STMA only after the shutter is closed, up to 5 s after closure; Fig. 4A, Right). This finding suggests that STMA are modulated by visual stimuli (see also Fig. 1F). However, the proportion of cells with significant yet similar STMA at both time windows exceeded the proportion expected under independence (P < 0.001, χ2 test; Fig. 4 A, Center and B). This result suggests that the cognitive context of social interaction, and not only visual input, plays a central role in shaping STMA.

Fig. 4.

Cells hold similar information about self-expressions during and after peer viewing. (A) Normalized STMA of the eyebrows, eyes, and mouth (rows, top to bottom, two examples for each condition) during shutter opening (red) and postopening (blue). Cells were either selectively significant only during peer viewing (Left) or postshutter opening (Right). However, for most of the cells, STMA remained similar at both time epochs (Center). (B) The proportion of cells with similar STMA at open and postopen epochs (“facial only”) is significantly larger than the proportion expected from independence. Shown are the proportions for STMA related to the mouth. Highly similar results were obtained for the eyes and eyebrows. (C) jPSTH were computed for all available pairs of amygdala-dACC neurons that were recorded simultaneously (n = 57). To avoid biased estimation as a result of increased firing rate during shutter opening, we included in the analysis only pairs of neurons that had significant correlation during baseline as well. jPSTH were computed from 5 s before shutter opening to 20 s after. The percentage of pairs with significant correlation at each bin is presented for the preshutter opening (lower corner), through the opening period (middle), and in the postopening period (upper corner). Significant bins above the diagonal in such a map indicate that dACC activity tends to precede amygdala activity; conversely, significant bins below the diagonal indicate that amygdala firing preceded. The diagonal sum (the cross-correlation) appears above each matrix. (D) Direction index was computed as the correlation value (above diagonal − below diagonal)/(above diagonal + below diagonal) and indicates that opening the shutter shifted the directionality of the synchronized activity so that amygdala firing tends to precede dACC during and right after shutter opening. (E) This shift was also accompanied by a net increase in the amount of significant correlations. Both directionality and magnitude changes returned to baseline ∼15–20 s after the shutter was closed.

Finally, to exploit our simultaneous recordings from the two regions and to identify differences in functionality that were not observed in STMA comparisons, we computed joint poststimulus time histograms (jPSTH) for pairs of dACC-amygdala neurons (n = 57; Fig. 4C). We found that opening the shutter induced a net increase in synchrony level that returned to baseline 10–15 s after closure (ANOVA P < 0.01; Fig. 4D, shuffle-corrected). In addition, whereas amygdala firing followed dACC firing during baseline, shutter opening induced a change in directionality and the synchrony was reversed—dACC followed amygdala (ANOVA P < 0.01; Fig. 4E). This directional shift also returned to baseline around 5–10 s after closure.

Discussion

Adequate decoding and production of facial expressions require computational capacities that have acted as a primary selective pressure in the evolution of the primate brain (27). Here, we demonstrated that the amygdala and the dACC are recruited during epochs of direct face-to-face visual interaction and are actively engaged in the production and monitoring of social facial expressions. In addition to the well-documented involvement of dACC and amygdala neurons in decoding of observed facial expressions (6, 28–33), we have shown here that execution of facial expressions also recruits these cells. The fact that we did not detect major differences in functionality between the two regions is in line with the intimate relationships and anatomical projections between them (34, 35).

Our findings are also in line with the well-established function of this network in emotional learning and regulation, where the amygdala and the dACC signal the expected outcome of reinforced cues, but are also actively involved in regulating the expression of the conditioned response (36–38). Similarly, adaptive social behavior requires a rapid facial response to a learned social cue and, at the same time, continuous regulation of that response. Together, these observations might suggest that production of social behavior exploits the specialization of the dACC-amygdala network in regulating execution of learned cued behaviors. It is yet to be determined whether these regions are also involved in the actual learning of socially cued responses, similar to classical conditioning, for example.

Little is known about the neuronal mechanisms that control facial display and, hence, it is hard to speculate whether the coupling between amygdala/dACC activity and facial movement reflects a direct influence of these regions on facial expression or a regulatory mechanism that acts indirectly. The anatomical connections of the amygdala (12, 39) and the dACC (13) with the facial nucleus may suggest that these two brain regions engage in the actual production of facial expressions. Further support for the “direct” hypothesis can be drawn from research on the control of vocalization and ingestion, which suggests that initiation of affective signals depends on the periaqueductal gray and is mediated by inputs from the amygdala, the insula, and the medial frontal cortices to facial nuclei (18, 40–43). However, despite the apparent resemblance, lip smacking and ingestive behavior consist of different rhythmic muscle coordinations (43), suggesting that the facial nucleus functions differently in mediating the rhythmic but monotonic ingestive behaviors and the more complex social facial displays.

The alternative “indirect” interpretation (which is not mutually exclusive) is that corollary discharges from motor regions provide information about production of facial expressions at millisecond resolution. This interpretation would be similar to forward models of the motor system, where information about the produced movement is analyzed to produce predictions about the outcome (44, 45). In the current case, the outcome might be social responses of a peer, and this information, which was shown to reside in the same circuits (6, 28–33), can be integrated to form a more complete interpretation of the social scene. Our finding that facial movement and social stimuli converge on single neurons in the amygdala and the dACC is in line with this suggestion and points to a possible mechanism for how social context may shape decoding and the reciprocal response to social scenes. Further evidence could come from studies that directly measure muscle activity and/or tactile stimulation (which is elicited naturally during facial expressions) together with neural responses.

Finally, the amygdala-frontal network has been extensively implicated in the representation and regulation of conditioned behaviors on the one hand (36), and in the decoding of social signals on the other (46). It is not completely clear whether and how these lines of research converge. Both behaviors require a similar computation: contingency detection that, in turn, enables production of an adequate preparatory response. Accordingly, we found here that social interaction induced an increase in amygdala-frontal coupling, which is comparable to the increase in synchrony observed during expression of conditioned behaviors (38, 47–49). This apparent similarity might suggest that both behaviors recruit this network to serve a potentially similar computational demand, and it offers a possible functional bridge between conditioned learning and social manifestations.

Methods

Animals.

Two male Macaca fascicularis (4–7 kg) were implanted with a recording chamber (27 × 27 mm) above the right amygdala and dACC under deep anesthesia and aseptic conditions. All surgical and experimental procedures were approved and conducted in accordance with the regulations of the Weizmann Institute Animal Care and Use Committee, following National Institutes of Health regulations and those of the Association for Assessment and Accreditation of Laboratory Animal Care International. Food, water, and enrichments (e.g., fruits and play instruments) were available ad libitum during the whole period, except before medical procedures that require deep anesthesia.

MRI-Based Electrode Positioning.

Anatomical MRI scans were acquired before, during, and after the recording period. Anatomical images were acquired on a 3-Tesla MRI scanner (MAGNETOM Trio; Siemens) with a CP knee coil (Siemens). A T1-weighted 3D gradient-echo (MPRAGE) pulse sequence was acquired with a repetition time of 2,500 ms, inversion time of 1,100 ms, echo time of 3.36 ms, 8° flip angle, and two averages. Images were acquired in the sagittal plane, with a 192 × 192 matrix and 0.8 mm³ or 0.6 mm³ resolution. A scan was performed before surgery and used to align and guide the positioning of the chamber on the skull for each individual animal (by relative location of the amygdala and anatomical markers of the interaural line and the anterior commissure). After surgery, we performed another scan with two electrodes directed toward the amygdala and the dACC, and two to three observers separately inspected the images and calculated the anterior-posterior and lateral-medial borders of the amygdala and dACC relative to each of the electrode penetrations. The depth of the regions was calculated from the dura surface based on all penetration points.

Recordings.

Each session/day (n = 20), three to six microelectrodes (0.6–1.2 MΩ glass/narylene-coated tungsten; Alpha-Omega or We-Sense) were lowered inside a metal guide (Gauge 25xxtw, outer diameter: 0.51 mm, inner diameter: 0.41 mm, Cadence) into the brain by using a head tower and electrode-positioning system (Alpha-Omega). The guide was lowered to penetrate and cross the dura and stopped at 2–5 mm in the cortex. Electrodes were then moved independently into the amygdala and the dACC (we performed four to seven mapping sessions in each animal by moving slowly and identifying electrophysiological markers of firing properties tracking the anatomical pathway into the amygdala). Electrode signals were preamplified, 0.3 Hz–6 kHz band-pass filtered, and sampled at 25 kHz; on-line spike sorting was performed by using a template-based algorithm (Alpha Lab Pro; Alpha-Omega). We allowed 30 min for the tissue and signal to stabilize before starting acquisition and the behavioral protocol. At the end of the recording period, offline spike sorting was performed for all sessions to improve unit isolation (offline sorter; Plexon). Lastly, the spatial distributions of responsive cells within the amygdala and the dACC were inspected to assure that the main findings reported here represent activity that is common in wide parts of these structures (Fig. S4).

Behavioral Paradigm.

Monkeys were seated face-to-face in custom-made primate chairs with their heads 80 cm apart. The head of the monkey from which neuronal activity was recorded was fixed with a head post, whereas his companion’s head remained free. A fast LCD shutter (307 × 406 mm) was placed between the monkeys (FOS-307 × 406-PSCT-LV; Liquid Crystal Technologies) and was used to block direct visual communication during most of the experiment. Direct current of 48 V was passed through the LCD shutter for 5 s every 60 ± 15 s (random) and turned it clear. The shutter has an onset/offset rise time of <2 ms. The monkeys were strictly positioned so that they could see only the facial region of each other. Two video cameras (Provision-Isr) were used to film the monkeys continuously. Videos were captured by a frame grabber card (NI PCI-1405; National Instruments), which sent a frame-triggered digital signal to the acquisition system that was used to synchronize the videos with the neural data. Video resolution was 640 × 480 pixels, and the monkey’s face occupied ∼300 × 300 pixels. In five out of the 20 experimental sessions, we compared the monkeys’ facial responses to a banana (placed behind the shutter). When viewing the banana, a second shutter was positioned behind it to conceal the peer monkey. Each experimental session consisted of 10–27 openings of the LCD shutter. A studio condenser microphone (JM47a; Joemeek) was placed 20 cm above and to the right of the monkey’s ear and continuously recorded all possible vocal communication.

Olfactory cues were available at all times (e.g., shutter closed, shutter opened, banana viewing with the peer concealed behind it) and, hence, cannot be the source of the STMA we observed. Moreover, because the STMA computation (detailed below) relies on the precise time locking of neural activity to changes in pixel illumination (i.e., movement), the STMA cannot be attributed to olfactory cues from the peer monkey. However, it is possible that olfactory cues generated by the peer during shutter-open states provide a general context that modulates the neural activity related to self facial expressions.

In our setup, the monkeys did not emit any vocal calls, and the microphone recordings were essentially flat (Fig. S5). This quiet behavior could be the result of using pairs of monkeys that know each other well and have resided together for months.

Data Analysis.

Video frames were down-sampled to 64 × 48 pixels, and the per-pixel derivative was taken to represent changes in illumination in each pixel that reflect movement in this region. The monkeys were head-held for neural recordings and, hence, changes in illumination can only result from facial gestures. The borders of the eyes, mouth, nose, eyebrows, and upper and lower sides of the face were manually drawn on a single representative frame from each day, and the mean change in illumination at each of these fROI was computed. Because the opening and closing of the shutter could induce a momentary change in illumination (due to slightly different levels of light reflection onto the monkey face), we excluded from all analyses time segments of 500 ms from opening and closing of the shutter. Movement at an fROI was then detected if the change in illumination exceeded the 95% confidence interval (one-sided) of the preopening time. The mean percentage of time in which movement was detected was calculated and used for comparisons.
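The movement measure described above can be sketched in a few lines. This is a minimal, standard-library illustration under stated assumptions, not the code used in the study: the function names are hypothetical, the absolute frame-to-frame difference is assumed as the per-pixel derivative, and the one-sided 95% criterion is approximated by an empirical quantile of the preopening samples.

```python
# Hypothetical helper names; a sketch of the fROI movement measure.

def froi_movement(frames, mask):
    """Mean absolute frame-to-frame illumination change inside one fROI.

    frames: list of 2D lists (rows of pixel intensities), one per video frame.
    mask:   list of (row, col) pixel coordinates that belong to the fROI.
    Returns one value per frame transition, i.e. len(frames) - 1 values.
    """
    trace = []
    for prev, cur in zip(frames, frames[1:]):
        # Assumption: the derivative is taken as an absolute difference.
        diffs = [abs(cur[r][c] - prev[r][c]) for r, c in mask]
        trace.append(sum(diffs) / len(diffs))
    return trace


def one_sided_threshold(baseline, level=0.95):
    """Empirical one-sided cutoff: the `level` quantile of preopening samples."""
    ordered = sorted(baseline)
    index = min(len(ordered) - 1, int(level * len(ordered)))
    return ordered[index]


def fraction_moving(trace, threshold):
    """Proportion of samples in which movement exceeds the threshold."""
    return sum(1 for value in trace if value > threshold) / len(trace)
```

In use, `froi_movement` would be applied separately to the preopening and shutter-open segments of each trial (after dropping the 500-ms segments around shutter transitions), and `fraction_moving` would give the percentage of time with detected movement for each fROI.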

Firing rates were computed at a 1-ms resolution and smoothed with a 500-ms window. Histograms of firing rates were computed on the firing rates that precede shutter opening. Responsive times were identified when a neuron’s activity crossed the 95% confidence interval (two-sided), and the mean duration in which the neurons were responsive was obtained.
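As an illustration of this step, the sketch below computes a smoothed firing rate and flags responsive samples. The names are hypothetical, the boxcar window shape is an assumption (the text gives only the window length), and the two-sided 95% interval is approximated as the baseline mean ± 1.96 SD, which presumes roughly normal baseline rates.

```python
import statistics

# Hypothetical helper names; a sketch, not the analysis code of the study.

def smoothed_rate(spike_times_ms, duration_ms, window_ms=500):
    """Firing rate in spikes/s at 1-ms resolution, smoothed with a boxcar.

    Assumes window_ms >= 2 so that every window contains at least one bin.
    """
    counts = [0] * duration_ms
    for t in spike_times_ms:
        if 0 <= t < duration_ms:
            counts[int(t)] += 1
    # Prefix sums turn each windowed spike count into a constant-time lookup.
    prefix = [0]
    for c in counts:
        prefix.append(prefix[-1] + c)
    half = window_ms // 2
    rate = []
    for i in range(duration_ms):
        lo = max(0, i - half)
        hi = min(duration_ms, i + half)  # the window is truncated at the edges
        rate.append((prefix[hi] - prefix[lo]) * 1000.0 / (hi - lo))
    return rate


def responsive_samples(rate, baseline_rate, z=1.96):
    """Flag samples outside the baseline mean +/- z * SD (two-sided)."""
    mu = statistics.mean(baseline_rate)
    sd = statistics.pstdev(baseline_rate)
    return [abs(r - mu) > z * sd for r in rate]
```

Summing the flagged samples per neuron gives a response duration in milliseconds, which can then be averaged across neurons.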

Multivariate linear regression was performed on the neural activity before shutter opening (4,500–500 ms before opening) and during shutter opening (500–4,500 ms after opening). Net movement at each of the fROI in the different time windows, the shutter state, and the interaction of both (the product of the two variables) were included as variables. Neurons with a significant interaction factor at two or more fROI were considered integrators of visual stimuli and self facial expression.
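A regression of this form, for one neuron and one fROI, can be sketched as an ordinary least-squares fit of firing rate on movement, shutter state, and their product. The function name is hypothetical and the sketch stops at coefficient estimation; the significance test of the interaction term, which the analysis above relies on, is not reproduced here.

```python
# Hypothetical helper; fits rate = b0 + b1*mov + b2*state + b3*(mov*state).

def fit_interaction_model(movement, shutter_open, firing_rate):
    """Return [b0, b1, b2, b3] from an ordinary least-squares fit.

    movement:     net fROI movement per time window
    shutter_open: 0 (closed) or 1 (open) per time window
    firing_rate:  firing rate per time window
    Raises ZeroDivisionError if the design matrix is rank deficient.
    """
    rows = [[1.0, m, float(s), m * s] for m, s in zip(movement, shutter_open)]
    k = 4
    # Normal equations: (X'X) b = X'y
    xtx = [[sum(r[a] * r[b] for r in rows) for b in range(k)] for a in range(k)]
    xty = [sum(r[a] * y for r, y in zip(rows, firing_rate)) for a in range(k)]
    aug = [xtx[a] + [xty[a]] for a in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [x - factor * p for x, p in zip(aug[r], aug[col])]
    return [aug[a][k] / aug[a][a] for a in range(k)]
```

The last coefficient, `b3`, is the interaction factor: it measures how much the slope of firing rate against movement changes when the shutter is open.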

STMA were computed pixel-wise on the down-sampled frames:

$$\mathrm{STMA}_{i,j}(t) = \frac{1}{N} \sum_{n=1}^{N} \mathrm{Mov}_{i,j}\bigl(T(n) + t\bigr)$$

where i and j are the coordinates within the frame, t is the time from spike, Mov is the movement (change in illumination), T(n) is the time of the nth spike, and N is the number of spikes. After computing STMA on the real spike times, STMA were recomputed on shuffled spike times (100 repetitions). Spikes were shuffled within trial because the total movement observed and the total number of spikes in an individual trial could vary, and this shuffling therefore maintains the ratio between the movement and the overall momentary firing rate. We next evaluated each “voxel” of the STMA against the mean and SD of the shuffled data, and converted it into a z-score.
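The spike-triggered average and its shuffle-based normalization can be sketched for a single pixel and a single trial as follows. The names are hypothetical, time is expressed in video frames (so ±480 ms corresponds to ±12 frames at 40 ms per frame), and the shuffle is assumed to redraw each spike time uniformly within the trial, which is one plausible reading of "shuffled within trial".

```python
import random
import statistics

# Hypothetical helper names; a single-pixel, single-trial sketch.

def stma(movement, spike_idx, lags):
    """Average movement at each lag (in frames) relative to spike times."""
    out = []
    for lag in lags:
        vals = [movement[s + lag] for s in spike_idx
                if 0 <= s + lag < len(movement)]
        out.append(sum(vals) / len(vals) if vals else 0.0)
    return out


def stma_zscore(movement, spike_idx, lags, n_shuffles=100, seed=0):
    """Z-score the STMA against STMAs recomputed on shuffled spike times."""
    rng = random.Random(seed)
    observed = stma(movement, spike_idx, lags)
    shuffled = []
    for _ in range(n_shuffles):
        # Assumption: keep the spike count, redraw times within the trial.
        fake = [rng.randrange(len(movement)) for _ in spike_idx]
        shuffled.append(stma(movement, fake, lags))
    z = []
    for k, obs in enumerate(observed):
        column = [s[k] for s in shuffled]
        mu = statistics.mean(column)
        sd = statistics.pstdev(column)
        z.append((obs - mu) / sd if sd > 0 else 0.0)
    return z
```

With positive lags meaning "after the spike", a large z-score at a positive lag corresponds to spikes that precede movement, and a large z-score at a negative lag corresponds to spikes that follow movement.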

Next, we inspected the distribution of values of the STMA within each fROI to see whether it differs significantly from noise (Student’s t test P < 0.001, corrected for multiple comparisons). STMA values from an arbitrary area on the frame outside of the monkey face and with an equal number of pixels were used as baseline. Moreover, STMA were assigned as significant only if the STMA differed from noise in at least two consecutive samples. Finally, if a neuron’s STMA was assigned as significant during shutter opening but not after shutter closure, this neuron was considered an open-preferring cell. Conversely, if a neuron’s STMA was significant only after shutter closure, we defined it as a postopen-preferring cell. Lastly, neurons that had significant STMA at both time windows were considered facial-only cells, i.e., cells whose STMA is poorly modulated by the visual input.
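The two decision rules in this paragraph (at least two consecutive significant samples, and the three-way cell classification) are simple enough to state as code. The function names and label strings below are hypothetical, and the per-sample significance flags are assumed to come from the t test described above.

```python
# Hypothetical helper names; the inputs are per-sample significance flags.

def has_consecutive_run(flags, min_run=2):
    """True if at least `min_run` consecutive samples are significant."""
    run = 0
    for flag in flags:
        run = run + 1 if flag else 0
        if run >= min_run:
            return True
    return False


def classify_cell(sig_open, sig_post):
    """Label a neuron by the epochs in which its STMA is significant."""
    if sig_open and sig_post:
        return "facial-only"
    if sig_open:
        return "open-preferring"
    if sig_post:
        return "postopen-preferring"
    return "not significant"
```

For example, a neuron whose flags are `[True, True, False]` during shutter opening and `[True, False, True]` afterward would be labeled open-preferring, because only the first epoch contains two consecutive significant samples.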

jPSTH were computed as described (38, 50). Briefly, for all pairs of amygdala and dACC neurons that were recorded simultaneously, intertrial correlations were computed for firing rates at varying lags from the onset of shutter opening. Significance was assessed by using shift predictor technique by shuffling trial order of amygdala neurons and recalculating the jPSTH 100 times. Correlation at each bin was considered significant only if its value exceeded 95% of the shuffled correlations at the same bin. In addition, only clusters of 2 × 2 significant bins were considered significant to minimize accumulative error. Directionality was assessed by computing the ratio between total correlation strength (sum of all significant correlation values) above and below the diagonal of the correlation matrix: (above − below)/(above + below).
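The direction index defined at the end of this paragraph can be sketched as below. The function name is hypothetical, and the convention that "above the diagonal" means column index greater than row index is an assumption about the matrix layout; the 2 × 2 cluster criterion is assumed to have been applied already when building the significance mask.

```python
# Hypothetical helper; corr and significant are square nested lists.

def direction_index(corr, significant):
    """(above - below) / (above + below) over significant off-diagonal bins.

    corr[i][j]:        correlation value of bin (i, j) of the jPSTH matrix
    significant[i][j]: True if that bin passed the shuffle-based test
    Assumption: bins with j > i count as "above the diagonal".
    """
    above = 0.0
    below = 0.0
    n = len(corr)
    for i in range(n):
        for j in range(n):
            if i == j or not significant[i][j]:
                continue
            if j > i:
                above += corr[i][j]
            else:
                below += corr[i][j]
    total = above + below
    return (above - below) / total if total != 0 else 0.0
```

The index ranges from −1 to 1 when all summed correlations are positive, with the sign indicating which side of the diagonal carries more significant correlation.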

Acknowledgments

We thank Dr. Gil Hecht and Dr. Eilat Kahana for help with medical and surgical procedures, Dr. Edna Furman-Haran and Nachum Stern for MRI procedures, and Sharon Kaufman and Genia Brodsky for illustrations. U.L. was supported by the Kahn Family Research Center. The work was supported by Israel Science Foundation Grant 430/08, a European Union-International Reintegration Grant, and European Research Council Grant FP7-StG 281171 (to R.P.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1207662109/-/DCSupplemental.

References

- 1. LeDoux JE. Emotion circuits in the brain. Annu Rev Neurosci. 2000;23:155–184. doi: 10.1146/annurev.neuro.23.1.155.

- 2. Pape HC, Pare D. Plastic synaptic networks of the amygdala for the acquisition, expression, and extinction of conditioned fear. Physiol Rev. 2010;90(2):419–463. doi: 10.1152/physrev.00037.2009.

- 3. Fitzgerald DA, Angstadt M, Jelsone LM, Nathan PJ, Phan KL. Beyond threat: Amygdala reactivity across multiple expressions of facial affect. Neuroimage. 2006;30(4):1441–1448. doi: 10.1016/j.neuroimage.2005.11.003.

- 4. Adolphs R, Tranel D, Damasio H, Damasio A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994;372(6507):669–672. doi: 10.1038/372669a0.

- 5. Adams RB Jr, Gordon HL, Baird AA, Ambady N, Kleck RE. Effects of gaze on amygdala sensitivity to anger and fear faces. Science. 2003;300(5625):1536. doi: 10.1126/science.1082244.

- 6. Gothard KM, Battaglia FP, Erickson CA, Spitler KM, Amaral DG. Neural responses to facial expression and face identity in the monkey amygdala. J Neurophysiol. 2007;97(2):1671–1683. doi: 10.1152/jn.00714.2006.

- 7. Shackman AJ, et al. The integration of negative affect, pain and cognitive control in the cingulate cortex. Nat Rev Neurosci. 2011;12(3):154–167. doi: 10.1038/nrn2994.

- 8. Etkin A, Egner T, Kalisch R. Emotional processing in anterior cingulate and medial prefrontal cortex. Trends Cogn Sci. 2011;15(2):85–93. doi: 10.1016/j.tics.2010.11.004.

- 9. Killgore WDS, Yurgelun-Todd DA. Activation of the amygdala and anterior cingulate during nonconscious processing of sad versus happy faces. Neuroimage. 2004;21(4):1215–1223. doi: 10.1016/j.neuroimage.2003.12.033.

- 10. Blair RJ, Morris JS, Frith CD, Perrett DI, Dolan RJ. Dissociable neural responses to facial expressions of sadness and anger. Brain. 1999;122(Pt 5):883–893. doi: 10.1093/brain/122.5.883.

- 11. Applegate CD, Kapp BS, Underwood MD, McNall CL. Autonomic and somatomotor effects of amygdala central N. stimulation in awake rabbits. Physiol Behav. 1983;31(3):353–360. doi: 10.1016/0031-9384(83)90201-9.

- 12. Fanardjian VV, Manvelyan LR. Mechanisms regulating the activity of facial nucleus motoneurons—III. Synaptic influences from the cerebral cortex and subcortical structures. Neuroscience. 1987;20(3):835–843. doi: 10.1016/0306-4522(87)90244-2.

- 13. Morecraft RJ, Schroeder CM, Keifer J. Organization of face representation in the cingulate cortex of the rhesus monkey. Neuroreport. 1996;7(8):1343–1348. doi: 10.1097/00001756-199605310-00002.

- 14. Wicker B, et al. Both of us disgusted in My insula: The common neural basis of seeing and feeling disgust. Neuron. 2003;40(3):655–664. doi: 10.1016/s0896-6273(03)00679-2.

- 15. Mukamel R, Ekstrom AD, Kaplan J, Iacoboni M, Fried I. Single-neuron responses in humans during execution and observation of actions. Curr Biol. 2010;20(8):750–756. doi: 10.1016/j.cub.2010.02.045.

- 16. van der Gaag C, Minderaa RB, Keysers C. Facial expressions: What the mirror neuron system can and cannot tell us. Soc Neurosci. 2007;2(3-4):179–222. doi: 10.1080/17470910701376878.

- 17. Carr L, Iacoboni M, Dubeau MC, Mazziotta JC, Lenzi GL. Neural mechanisms of empathy in humans: A relay from neural systems for imitation to limbic areas. Proc Natl Acad Sci USA. 2003;100(9):5497–5502. doi: 10.1073/pnas.0935845100.

- 18. Jürgens U. The neural control of vocalization in mammals: A review. J Voice. 2009;23(1):1–10. doi: 10.1016/j.jvoice.2007.07.005.

- 19. Hasson U, Ghazanfar AA, Galantucci B, Garrod S, Keysers C. Brain-to-brain coupling: A mechanism for creating and sharing a social world. Trends Cogn Sci. 2012;16(2):114–121. doi: 10.1016/j.tics.2011.12.007.

- 20. Mosher CP, Zimmerman PE, Gothard KM. Videos of conspecifics elicit interactive looking patterns and facial expressions in monkeys. Behav Neurosci. 2011;125(4):639–652. doi: 10.1037/a0024264.

- 21. Redican WK. Facial expressions in nonhuman primates. In: Rosenblum LA, editor. Primate Behavior: Developments in Field and Laboratory Research. New York: Academic; 1975. pp. 103–194.

- 22. Van Hooff J. Facial expressions in higher primates. Sympos Zool Soc Lond. 1962;8:97–125.

- 23. Gallup GG Jr. Absence of self-recognition in a monkey (Macaca fascicularis) following prolonged exposure to a mirror. Dev Psychobiol. 1977;10(3):281–284. doi: 10.1002/dev.420100312.

- 24. Anderson JR. Responses to mirror image stimulation and assessment of self-recognition in mirror- and peer-reared stumptail macaques. Q J Exp Psychol. 1983;35(3):201–212. doi: 10.1080/14640748308400905.

- 25. Rajala AZ, Reininger KR, Lancaster KM, Populin LC. Rhesus monkeys (Macaca mulatta) do recognize themselves in the mirror: Implications for the evolution of self-recognition. PLoS ONE. 2010;5(9):e12865. doi: 10.1371/journal.pone.0012865.

- 26. Couchman JJ. Self-agency in rhesus monkeys. Biol Lett. 2012;8(1):39–41. doi: 10.1098/rsbl.2011.0536.

- 27. Dunbar RIM, Shultz S. Evolution in the social brain. Science. 2007;317(5843):1344–1347. doi: 10.1126/science.1145463.

- 28. Kuraoka K, Nakamura K. Responses of single neurons in monkey amygdala to facial and vocal emotions. J Neurophysiol. 2007;97(2):1379–1387. doi: 10.1152/jn.00464.2006.

- 29. Fried I, MacDonald KA, Wilson CL. Single neuron activity in human hippocampus and amygdala during recognition of faces and objects. Neuron. 1997;18(5):753–765. doi: 10.1016/s0896-6273(00)80315-3.

- 30. Anderson AK, Christoff K, Panitz D, De Rosa E, Gabrieli JD. Neural correlates of the automatic processing of threat facial signals. J Neurosci. 2003;23(13):5627–5633. doi: 10.1523/JNEUROSCI.23-13-05627.2003.

- 31. Mosher CP, Zimmerman PE, Gothard KM. Response characteristics of basolateral and centromedial neurons in the primate amygdala. J Neurosci. 2010;30(48):16197–16207. doi: 10.1523/JNEUROSCI.3225-10.2010.

- 32. Adolphs R, et al. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433(7021):68–72. doi: 10.1038/nature03086.

- 33. Hoffman KL, Gothard KM, Schmid MC, Logothetis NK. Facial-expression and gaze-selective responses in the monkey amygdala. Curr Biol. 2007;17(9):766–772. doi: 10.1016/j.cub.2007.03.040.

- 34. Ghashghaei HT, Hilgetag CC, Barbas H. Sequence of information processing for emotions based on the anatomic dialogue between prefrontal cortex and amygdala. Neuroimage. 2007;34(3):905–923. doi: 10.1016/j.neuroimage.2006.09.046.

- 35. Amaral DG, Price JL. Amygdalo-cortical projections in the monkey (Macaca fascicularis). J Comp Neurol. 1984;230(4):465–496. doi: 10.1002/cne.902300402.

- 36. Milad MR, Quirk GJ. Fear extinction as a model for translational neuroscience: Ten years of progress. Annu Rev Psychol. 2012;63(1):129–151. doi: 10.1146/annurev.psych.121208.131631.

- 37. Sierra-Mercado D, Padilla-Coreano N, Quirk GJ. Dissociable roles of prelimbic and infralimbic cortices, ventral hippocampus, and basolateral amygdala in the expression and extinction of conditioned fear. Neuropsychopharmacology. 2011;36(2):529–538. doi: 10.1038/npp.2010.184.

- 38. Livneh U, Paz R. Amygdala-prefrontal synchronization underlies resistance to extinction of aversive memories. Neuron. 2012;75(1):133–142. doi: 10.1016/j.neuron.2012.05.016.

- 39. Morecraft RJ, et al. Amygdala interconnections with the cingulate motor cortex in the rhesus monkey. J Comp Neurol. 2007;500(1):134–165. doi: 10.1002/cne.21165.

- 40. Coudé G, et al. Neurons controlling voluntary vocalization in the macaque ventral premotor cortex. PLoS ONE. 2011;6(11):e26822. doi: 10.1371/journal.pone.0026822.

- 41. Caruana F, Jezzini A, Sbriscia-Fioretti B, Rizzolatti G, Gallese V. Emotional and social behaviors elicited by electrical stimulation of the insula in the macaque monkey. Curr Biol. 2011;21(3):195–199. doi: 10.1016/j.cub.2010.12.042.

- 42. Jürgens U. Neural pathways underlying vocal control. Neurosci Biobehav Rev. 2002;26(2):235–258. doi: 10.1016/s0149-7634(01)00068-9.

- 43. Shepherd SV, Lanzilotto M, Ghazanfar AA. Facial muscle coordination in monkeys during rhythmic facial expressions and ingestive movements. J Neurosci. 2012;32(18):6105–6116. doi: 10.1523/JNEUROSCI.6136-11.2012.

- 44. Sommer MA, Wurtz RH. A pathway in primate brain for internal monitoring of movements. Science. 2002;296(5572):1480–1482. doi: 10.1126/science.1069590.

- 45. Shadmehr R, Smith MA, Krakauer JW. Error correction, sensory prediction, and adaptation in motor control. Annu Rev Neurosci. 2010;33:89–108. doi: 10.1146/annurev-neuro-060909-153135.

- 46. Adolphs R. What does the amygdala contribute to social cognition? Ann N Y Acad Sci. 2010;1191(1):42–61. doi: 10.1111/j.1749-6632.2010.05445.x.

- 47. Lesting J, et al. Patterns of coupled theta activity in amygdala-hippocampal-prefrontal cortical circuits during fear extinction. PLoS ONE. 2011;6(6):e21714. doi: 10.1371/journal.pone.0021714.

- 48. Popa D, Duvarci S, Popescu AT, Léna C, Paré D. Coherent amygdalocortical theta promotes fear memory consolidation during paradoxical sleep. Proc Natl Acad Sci USA. 2010;107(14):6516–6519. doi: 10.1073/pnas.0913016107.

- 49. Adhikari A, Topiwala MA, Gordon JA. Synchronized activity between the ventral hippocampus and the medial prefrontal cortex during anxiety. Neuron. 2010;65(2):257–269. doi: 10.1016/j.neuron.2009.12.002.

- 50. Paz R, Pelletier JG, Bauer EP, Paré D. Emotional enhancement of memory via amygdala-driven facilitation of rhinal interactions. Nat Neurosci. 2006;9(10):1321–1329. doi: 10.1038/nn1771.
