Abstract

Purpose: Iterative reconstruction methods often offer better imaging quality and allow for reconstructions with lower imaging dose than classical methods in computed tomography. However, computational speed is a major concern for these iterative methods, for which the x-ray transform and its adjoint are the two most time-consuming components. The speed issue becomes even more notable for 3D imaging such as cone-beam scans or helical scans, since the x-ray transform and its adjoint must be recomputed frequently because there is usually not enough computer memory to store the corresponding system matrix. The purpose of this paper is to optimize the algorithms for computing the x-ray transform and its adjoint, as well as their parallel computation.

Methods: The fast and highly parallelizable algorithms for the x-ray transform and its adjoint are proposed for the infinitely narrow beam in both 2D and 3D. The extension of these fast algorithms to the finite-size beam is proposed in 2D and discussed in 3D.

Results: The CPU and GPU codes are available at https://sites.google.com/site/fastxraytransform. The proposed algorithm is faster than Siddon's algorithm for computing the x-ray transform. In particular, the improvement for the parallel computation can be an order of magnitude.

Conclusions: The authors have proposed fast and highly parallelizable algorithms for the x-ray transform and its adjoint, which are extendable for the finite-size beam. The proposed algorithms are suitable for parallel computing in the sense that the computational cost per parallel thread is O(1).

Keywords: x-ray transform, discrete Radon transform, parallel algorithm, CT, GPU

INTRODUCTION

Computed tomography (CT) is perhaps the most widely used medical imaging modality. For health care purposes, reducing the imaging dose is highly desirable. A promising approach to dose reduction is iterative image reconstruction, which often offers better reconstruction quality than conventional methods, especially for low-dose scans3, 4, 5, 6, 7 and four-dimensional (4D) CT scans.8, 9, 10, 11, 12 Despite their advantages, iterative methods are not yet commonly used for clinical diagnosis. A critical obstacle is that they need to be further accelerated to meet clinical needs. To address this, various algorithms13, 14, 15 and GPU-based parallel solvers16, 17, 18, 19, 20 were developed for computing the x-ray transform and its adjoint, which are often the most computationally expensive components of the iterative methods. The focus of this paper is fast parallel algorithms for the x-ray transform and its adjoint.

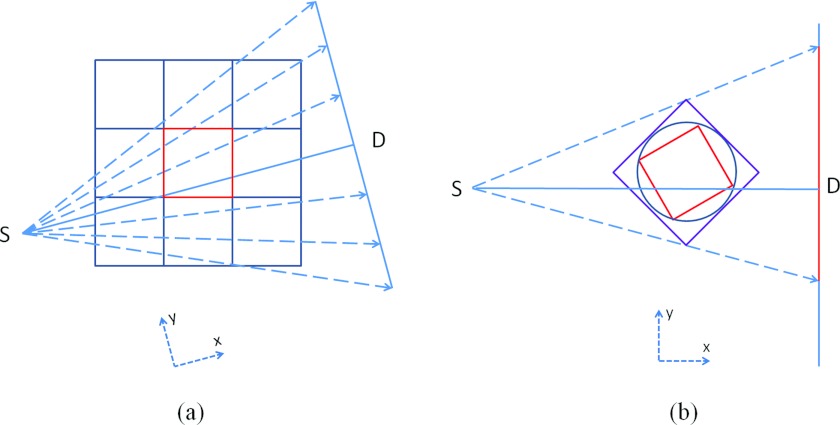

We shall first specify the notations for the discrete x-ray transform. For simplicity, let us consider a 2D grid with (Nx, Ny) pixels, illuminated with the infinitely narrow beams under the fan-beam CT setting from Nv directions, with Nd detectors for each direction. For each beam indexed by (m, n), m ≤ Nv, n ≤ Nd, the x-ray transform is to compute the summation of the intersection lengths of the beam with each pixel weighted by the attenuation coefficient of that pixel [Fig. 1a]. That is,

$$y_{mn} = \sum_{(i,j)\in D_{mn}} l_{mn,ij}\, x_{ij} \qquad (1.1)$$

where x is the attenuation coefficient, lmn,ij is the intersection length of the beam (m, n) with the pixel (i, j), and Dmn consists of the indices of pixels that have nontrivial intersections with the beam (m, n).

Figure 1.

The x-ray transform. (a) The x-ray transform is to compute the summation of the intersection lengths of the beam with each pixel weighted by its attenuation coefficient. (b) The key of the popular Siddon's algorithm (Ref. 2) is to consider the parametric line form of the intersections.

Under the same fan-beam CT setting, for each pixel indexed by (i, j), i ≤ Nx, j ≤ Ny, the adjoint of the discrete x-ray transform is to compute the summation of the intersection lengths of all the beams that nontrivially intersect with this pixel weighted by their x-ray transform data y. That is,

$$x_{ij} = \sum_{(m,n)\in D_{ij}} l_{mn,ij}\, y_{mn} \qquad (1.2)$$

where Dij consists of the indices of beams that have nontrivial intersections with the pixel (i, j).
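To make the relation between Eqs. (1.1) and (1.2) concrete, the following is a minimal C sketch in which the nonzero intersection lengths are stored in a small explicit list. This storage is purely illustrative (the point of the paper is that the lengths are computed on the fly because the system matrix does not fit in memory), and the names `Entry`, `xray_forward`, and `xray_adjoint` are hypothetical.

```c
#include <stddef.h>

/* One nonzero intersection: beam `beam` crosses pixel `pixel` with
   intersection length `len`. Beams and pixels are flattened to 1D indices. */
typedef struct { size_t beam; size_t pixel; double len; } Entry;

/* Forward transform, Eq. (1.1): y[beam] = sum over pixels of len * x[pixel]. */
void xray_forward(const Entry *e, size_t n, const double *x,
                  double *y, size_t nbeams)
{
    for (size_t b = 0; b < nbeams; b++) y[b] = 0.0;
    for (size_t k = 0; k < n; k++) y[e[k].beam] += e[k].len * x[e[k].pixel];
}

/* Adjoint transform, Eq. (1.2): out[pixel] = sum over beams of len * y[beam]. */
void xray_adjoint(const Entry *e, size_t n, const double *y,
                  double *out, size_t npixels)
{
    for (size_t p = 0; p < npixels; p++) out[p] = 0.0;
    for (size_t k = 0; k < n; k++) out[e[k].pixel] += e[k].len * y[e[k].beam];
}
```

Both routines use the same lengths; only the roles of the beam index and the pixel index are exchanged, which is why the inner product of the forward result with any data vector equals the inner product of the image with the adjoint of that data vector.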

A popular algorithm for the x-ray transform was proposed by Siddon.2 The key is the parametric line representation of the beams, so that the cost of computing the intersection lengths of each beam with a 2D or 3D domain scales as that of a 1D line. Take a 2D domain with the isocenter at the origin and unit pixel length as an example. The algorithm computes the parametric coordinates of the intersections of the beam with the line sets {x = i, −Nx/2 ≤ i ≤ Nx/2} and {y = i, −Ny/2 ≤ i ≤ Ny/2}, sorts these coordinates, and then finds the nontrivially intersected pixels together with the intersection length for every two consecutive parametric coordinates [Fig. 1b]. Therefore, the computational complexity for each beam is still with respect to 1D, i.e., O(Nx), the total complexity is roughly O(Nx·Nv·Nd), and the complexity of its ideal parallel version is O(Nx) per thread. However, this algorithm for infinitely narrow beams does not generalize to finite-size beams, and it does not directly apply to the parallel computation of the adjoint x-ray transform either.

In this paper, we will study the fast and highly parallelizable algorithms for the x-ray transform and its adjoint for both the infinitely narrow beam and the finite-size beam, and then present the numerical results to demonstrate the efficiency of the proposed algorithms.

ALGORITHMS FOR THE X-RAY TRANSFORM

2D version

Let us first consider the intersection of a beam with two end points (x1, y1) and (x2, y2), with a 2D domain D of (Nx, Ny) pixels and the pixel size (dx, dy). Without loss of generality, let Nx = Ny, dx = dy = 1, and the isocenter be at the origin. Let us also define the columns of the domain to be the following, which consists of the centers of pixels,

| (2.1) |

and similarly the rows of the domain to be

| (2.2) |

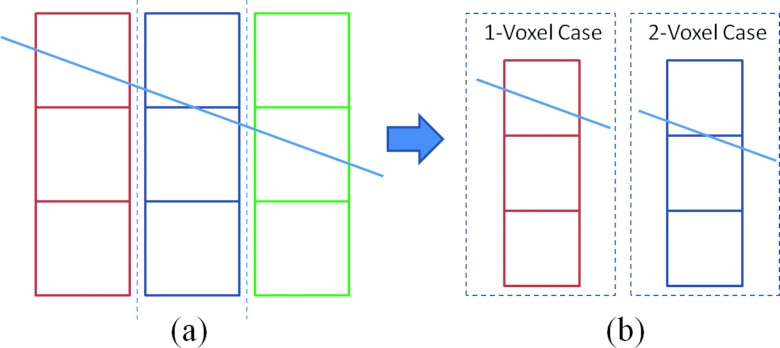

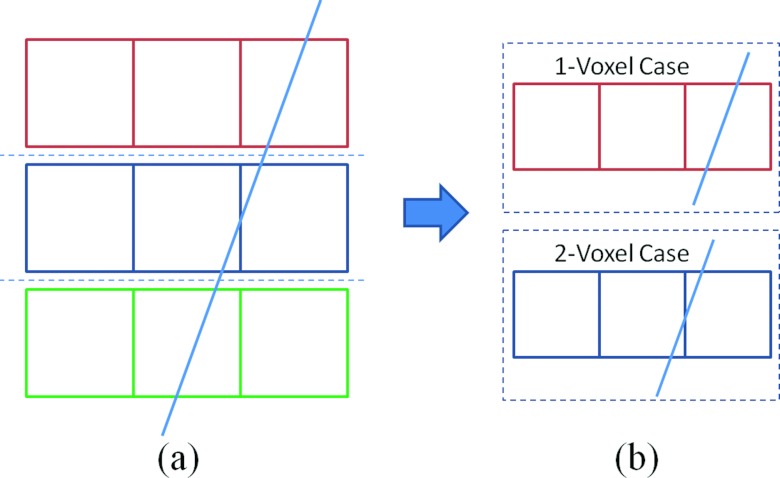

The key for the proposed algorithm is the observation that when the absolute slope of the beam is smaller than 1, i.e., |x2−x1|>|y2−y1|, the beam intersects each column 2.1 for at most two pixels (Fig. 2); otherwise, the beam intersects each row 2.2 for at most two pixels (Fig. 3). (In general, the classification criterion should be dy/dx>|y2−y1|/|x2−x1| instead. However, for the simplicity of presentation, dx = dy = 1 is assumed in the following discussion.)

Figure 2.

Fast algorithm for the x-ray transform. (a) When |x2−x1|>|y2−y1|, it is natural to divide the 2D domain D into the columns; (b) within each column, the beam intersects nontrivially with only at most two pixels, which allows for an efficient parallelization with the O(1) computation per thread.

Figure 3.

Fast algorithm for the x-ray transform. (a) When |x2−x1|≤|y2−y1|, it is natural to divide the 2D domain D into the rows; (b) within each row, the beam intersects nontrivially with only at most two pixels, which allows for an efficient parallelization with the O(1) computation per thread.

When |x2−x1|>|y2−y1|, it is natural to divide the 2D domain D into the columns 2.1 [Fig. 2a]. Within each column, the beam intersects nontrivially with only at most two pixels, which is essential for the efficient parallelization. Moreover, the indices and the intersection lengths can be conveniently determined. That is, for the ith column, we first compute the y-coordinates of the intersections of the beam with two vertical boundaries of this column, i.e.,

| (2.3) |

with

| (2.4) |

and then take the greatest integers that are smaller than yi− and yi+, i.e.,

| (2.5) |

which are exactly the y-indices of the intersecting pixel candidates. If Yi+ = Yi−, there is only one intersecting pixel provided 1 ≤ Yi− ≤ Ny [Fig. 2b], i.e.,

| (2.6) |

if Yi+ ≠ Yi−, there are two consecutive intersecting pixels provided 1 ≤ Yi−,Yi+ ≤ Ny [Fig. 2b], i.e.,

| (2.7) |

Similarly, when |x2−x1|≤|y2−y1|, it is natural to divide the 2D domain D into the rows 2.2 [Fig. 3a]. Again, within each row, the beam intersects nontrivially with only at most two pixels [Fig. 3b]. We will skip the algorithm details since it is similar to the case with |x2−x1|>|y2−y1|.

For the convenience of implementation, the algorithm for the 2D x-ray transform with |x2−x1|>|y2−y1| is summarized in Appendix C. For details of the CPU and GPU implementations in C see Ref. 1.

Here, for the convenience of the presentation, dx = dy = 1 is assumed. If dx = dy ≠ 1, the algorithm still works after normalization, i.e., after dividing all lengths by dx. If dx ≠ dy, a few corresponding changes need to be made. For example, the criterion on whether to divide the domain into columns or rows compares the absolute slope of the beam with |dy/dx| instead of 1. Without further mention, the situation is similar for the following 3D algorithm.

The computational complexity of this algorithm is O(Nx) for each beam. It is faster than Siddon's algorithm2 mainly due to its simplicity and no required sorting, for which the numerical verification will be presented. More importantly, when considering the parallel version, the complexity of the new algorithm is O(1) per parallel thread, since the intersection of the beam for each row/column can be computed in parallel. In contrast, the complexity is O(Nx) per parallel thread for the parallelized Siddon's algorithm. In this aspect, this new algorithm is more suitable for parallel computing.

3D version

The previous 2D algorithm for the x-ray transform can be extended to 3D. Let us similarly consider the intersection of a beam with two end points (x1, y1, z1) and (x2, y2, z2), with a 3D domain of (Nx, Ny, Nz) voxels and the voxel size (dx, dy, dz). Without loss of generality, let Nx = Ny, dx = dy = 1, and the isocenter be at the origin. Let us also define the x-planes of the domain

| (2.8) |

the y-planes of the domain

| (2.9) |

and the z-planes of the domain

| (2.10) |

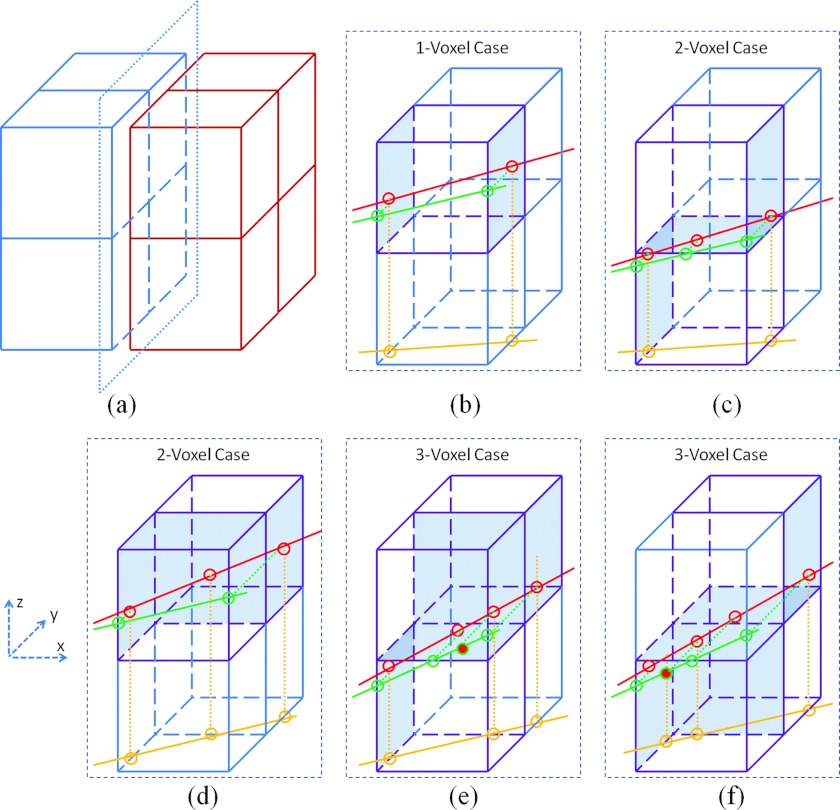

Again, the key for the proposed fast algorithm is the observation that when |x2−x1|>|y2−y1| and |x2−x1|>|z2−z1|, the beam intersects each x-plane 2.8 for at most four voxels (Fig. 4); when |y2−y1|≥|x2−x1| and |y2−y1|>|z2−z1|, the beam intersects each y-plane 2.9 for at most four voxels; when |z2−z1|≥|x2−x1| and |z2−z1|≥|y2−y1|, the beam intersects each z-plane 2.10 for at most four voxels. In the following, let us only consider the first situation, since the other two cases can be derived similarly.
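The three-way classification above can be written as a short C helper. This is a minimal sketch that assumes unit voxel size in all directions; the names `SliceAxis` and `dominant_axis` are hypothetical. The three conditions in the text are mutually exclusive and cover every beam direction, so the last case can be returned without a further test.

```c
#include <math.h>

typedef enum { SLICE_X, SLICE_Y, SLICE_Z } SliceAxis;

/* Pick the family of planes used to slice the domain for the beam from
   (x1, y1, z1) to (x2, y2, z2), following the inequalities in the text. */
SliceAxis dominant_axis(double x1, double y1, double z1,
                        double x2, double y2, double z2)
{
    double ax = fabs(x2 - x1), ay = fabs(y2 - y1), az = fabs(z2 - z1);
    if (ax > ay && ax > az)  return SLICE_X;  /* x-planes */
    if (ay >= ax && ay > az) return SLICE_Y;  /* y-planes */
    return SLICE_Z;                           /* az >= ax and az >= ay */
}
```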

Figure 4.

Fast algorithm for the x-ray transform in 3D. (a) when |x2−x1|>|y2−y1| and |x2−x1|>|z2−z1|, it is natural to divide the 3D domain D into the x-planes, and the intersections can be up to three consecutive voxels contained in a 2 × 2 “box”; (b) the case with one intersecting voxel along both y-direction (when viewed in the x-y projection plane) and z-direction (when viewed in the x-z projection plane); (c) the case with one intersecting voxel along y-direction and two intersecting voxels along z-direction; (d) the case with two intersecting voxels along y-direction and one intersecting voxel along z-direction; (e) and (f) the cases with two intersecting voxels along both y-direction and z-direction (Fig. 5).

In the case with |x2−x1|>|y2−y1| and |x2−x1|>|z2−z1|, it is natural to divide the 3D domain into the x-planes 2.8 [Fig. 4a]. Inspired by the 2D case, we view the projection of the intersection into the x-y plane (i.e., along y-direction of the x-plane) and the x-z plane (i.e., along z-direction of the x-plane). That is, for the voxels within each x-plane, the beam intersects nontrivially with only at most two consecutive voxels along the y-direction, and at most two consecutive voxels along the z-direction as well, which is again essential for the efficient parallelization.

In fact, the intersections can be at most three consecutive voxels contained in a 2 × 2 “box” [Fig. 4a], which can be classified into five cases shown in Figs. 4b, 4c, 4d, 4e, 4f. The cases with one or two intersecting voxels are similar to the 2D case, i.e., one intersecting voxel along both y-direction (when viewed in the x-y projection plane) and z-direction (when viewed in the x-z projection plane) [Fig. 4b], one intersecting voxel along y-direction and two intersecting voxels along z-direction [Fig. 4c], and two intersecting voxels along y-direction and one intersecting voxel along z-direction [Fig. 4d]. In these cases, the indices and the weights can be determined with the similar 2D formulas as 2.6, 2.7.

The situation is slightly more complicated when there are two intersecting voxels along both y-direction and z-direction [Figs. 4e, 4f]. To determine the intersection indices and lengths for the ith x-plane, we first compute yi+, yi−, Yi+, Yi− through 2.3, 2.4, 2.5, and the intersecting ratio of the projected beam along the y-direction

| (2.11) |

then similarly determine zi+, zi−, Zi+, Zi− and the intersecting ratio of the projected beam along the z-direction as follows:

| (2.12) |

with

| (2.13) |

| (2.14) |

and

| (2.15) |

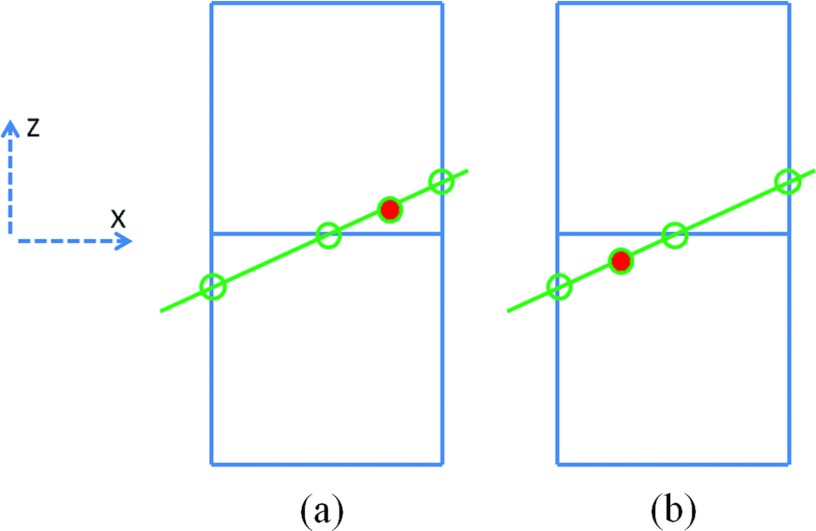

When ry>rz [Fig. 4e], the y-direction intersecting point is above the z-direction intersecting point when both are viewed along the z-direction in the x-z projection plane [Fig. 5a]. Therefore, the indices and the weights of three intersecting voxels are

| (2.16) |

Otherwise, when ry ≤ rz [Fig. 4f], the y-direction intersecting point is below the z-direction intersecting point when both are viewed along the z-direction in the x-z projection plane [Fig. 5b]. Therefore, the indices and the weights of three intersecting voxels are

| (2.17) |

Figure 5.

Projection view of Figs. 4e, 4f into the x-z plane. (a) When ry>rz [Fig. 4e], the y-direction intersecting point is above the z-direction intersecting point when both are viewed along the z-direction in the x-z projection plane; (b) when ry≤rz [Fig. 4f], the y-direction intersecting point is below the z-direction intersecting point when both are viewed along the z-direction in the x-z projection plane.

For the convenience of implementation, the algorithm for the 3D x-ray transform with |x2−x1|>|y2−y1| and |x2−x1|>|z2−z1| is summarized in Appendix D. For details of the CPU and GPU implementations in C see Ref. 1.

As a result, the computational complexity of this algorithm for 3D is also O(Nx) for each beam. It is again faster than Siddon's algorithm2 mainly due to its simplicity and no required sorting, for which the numerical verification will be also presented next. Moreover, when considering the parallel version, the complexity of the new algorithm in 3D is again O(1) per parallel thread, since the intersection of the beam for each 2D plane can be computed in parallel. In contrast, the complexity is O(Nx) per parallel thread for the parallelized Siddon's algorithm. In this aspect, this new algorithm is more suitable for parallel computing in 3D.

Generalization for the finite-size beam

For improved accuracy of the x-ray transform, it is sometimes necessary to take the finite size of the beam into account, e.g., when the width of the beam is larger than the pixel size. Unlike the Siddon algorithm, the proposed new algorithms have a natural and simple extension for the finite-size beam.

For simplicity, let us take the 2D case with the same notations as in Sec. 2A as an example. The key for the fast and highly parallelizable algorithm is similar to that for the infinitely narrow beam. That is, when the absolute slope of the central beam line of this finite-size beam is smaller than 1, i.e., |x2−x1|>|y2−y1|, we divide the 2D domain into the columns 2.1 and compute the intersection of the beam with each column individually. As a result, the parallelization is efficient, since the nontrivial intersection of the beam with each column is still at most a few pixels as long as the beam is still fairly focused. Even in the rare cases when the beam size is significantly larger than the pixel size, this algorithm is still suitable for the parallelization.

In this case with |x2−x1|>|y2−y1|, within each column 2.1, the indices of all intersecting pixels can be determined from the two pixels at which the boundary lines of the finite-size beam intersect the column, which serve as the lower and upper bounds. Then the intersection area of the beam with each nontrivially intersecting pixel can be computed with an efficient geometric formula given in Appendix B. For details of the CPU and GPU implementations in C see Ref. 1.

Similarly in 3D, the 3D domain can be divided into x-planes 2.8, y-planes 2.9, and z-planes 2.10 according to the slopes of the beam. The intersecting voxels within each plane can be specified by the lower and upper bounding voxels from two directions. For example, when considering x-planes, the lower and upper bounding voxels are from the y-direction and the z-direction. However, an efficient 3D formula to compute the intersection volume of the beam with each nontrivially intersecting voxel needs to be supplied.

In the following, the extension to the finite-size beam is implemented in 2D. Overall, in the case with finite-size beams, the computational complexity of the new algorithms is still O(Nx) for each finite-size beam, and the complexity of their parallel version is again O(1) per parallel thread.

ALGORITHMS FOR THE ADJOINT X-RAY TRANSFORM

2D version

By the nature of the adjoint x-ray transform 1.2, its computation can be done in parallel at least for each pixel. In this aspect, the efficient algorithm for the adjoint x-ray transform will be quite different from that for the x-ray transform. For simplicity, let us start with the fan-beam setting [Fig. 6a] using the same notations as in Sec. 2A, and we shall consider the adjoint x-ray transform for a fixed pixel. For the notations, let (xc, yc) be the coordinate of the center of the pixel, SO be the distance from the source to the isocenter, SD be the distance from the source to the detection line, dy be the detector width, and ϕ be the scan angle. Without loss of generality, let dx = dy = 1 for the pixel size.

Figure 6.

Fast parallelizable algorithm for the adjoint x-ray transform. (a) As the first parallelization step, the computation for the adjoint x-ray transform is done in parallel for each pixel. (b) As the second parallelization step, for a fixed pixel, the computation is done in parallel for each scan view, for which the contribution from this scan view to this pixel only comes from the detectors that lie in the shadow region that is bounded above and below by the beams passing through four corners of the pixel. Therefore, the computational complexity is O(1) per parallel thread, assuming the summation of the contribution from all views is relatively negligible.

For a fixed pixel and a fixed scanning direction, the contribution from this scan to this pixel only comes from the detectors that lie in the shadow region that is bounded above and below by the beams passing through four corners of the pixel [Fig. 6b]. To put this into the algorithm, we first rotate the coordinate system back to the original position for the ease of the computation, so that the detector is perpendicular to the x-axis. As a result, the new coordinate (xr, yr) of the center of the pixel is

| (3.1) |

Then we determine the lower and upper bounds of the contributing detectors. Due to the rotation, the pixel may not be aligned with the coordinate axes. Therefore, the beams passing through the four corners need to be computed, and then their minimum and maximum are taken as the lower and upper bounds. However, for computational efficiency, another way is to consider the circle that encloses this pixel, and then the upright rhombus that encloses this circle [Fig. 6b]. Then the diagonal of this rhombus is also the unit length. Therefore, the lower and upper index bounds of the contributing detectors are

| (3.2) |

Once the contributing detectors are found, which are often only a few, the efficient geometric formula given in Appendix A can be used to compute the intersection length of the beam from these detectors with the pixel. Please note that the offset of the detection plane with respect to the isocenter can be conveniently taken care of by adding the offset distance yos to Eq. 3.2.

For the convenience of implementation, the algorithm for the 2D adjoint x-ray transform with the fan-beam setting that takes yos into account is summarized in Appendix E. For details of the CPU and GPU implementations in C see Ref. 1.

The computational complexity of this algorithm is O(Nv) for each pixel, and the overall complexity is O(Nx·Ny·Nv). In its parallel version, the contribution of the scans from different directions to the same pixel can be computed in parallel, and then summed together. Therefore, the complexity of this algorithm is O(1) per parallel thread, given that the time for the summation is relatively negligible. In this aspect, this algorithm is suitable for parallel computing of the adjoint x-ray transform. Finally, although the algorithm is presented for the fan-beam scanning setting, it can be easily modified for other scanning settings.

3D version

This algorithm can be easily extended for the 3D case. Without loss of generality, let us only consider the cone-beam scan. For the notations, let (xc, yc, zc) be the coordinate of the center of the voxel, (na, nb) and (dy, dz) be the number of detectors and the detector width along the y-direction and the z-direction (two orthonormal directions of the detection plane), and ϕ be the scan angle. Without loss of generality, let us also assume dx = dy = 1 for the voxel size.

Similar to the 2D case, for a fixed voxel and a fixed scanning direction, the contribution from this scan to this voxel only comes from the detectors that lie in the shadow region on the 2D detection plane that is bounded all around by the beams passing through the corners of the voxel. Therefore, we compute the rotated voxel coordinate through 3.1, find the detection bound along y-direction and z-direction through

| (3.3) |

and

| (3.4) |

and then compute the intersection length for the detectors only within this localized region. Please note that for helical scans, we simply need to subtract the incremental z-change from zc to get the transformed coordinate zr.

Here, the method for computing the length of the intersection line of the infinitely narrow beam with a voxel in 3D is rather preliminary. That is, we calculate the coordinates at which the beam intersects the surfaces of the voxel, and then compute the distance between the two intersection points. Dedicated geometric formulas will be sought in a future study.

For the convenience of implementation, the algorithm for the 3D adjoint x-ray transform with the helical-scan setting that takes yos into account is summarized in Appendix F. For details of the CPU and GPU implementations in C see Ref. 1.

Again, the computational complexity of this algorithm for 3D is still O(Nv) for each voxel, and the overall complexity is O(Nx·Ny·Nz·Nv). When considering the parallel version, the complexity of this algorithm in 3D is also O(1) per parallel thread, provided that the time for the summation of the contributions from Nv views to a voxel is relatively negligible.

Generalization for the finite-size beam

The extension of this algorithm for the finite-size beam is again straightforward. That is, for a fixed voxel and a fixed scan direction, the detectors with nontrivial contribution to the voxel can be localized through the same method, e.g., Eqs. 3.2, 3.4, and then the efficient geometric formulas need to be supplied.

Here, we implement the 2D version for the finite-size beam based on the geometric formula in Appendix B for computing the intersection area of the beam with each nontrivially intersecting pixel. For details of the CPU and GPU implementations in C see Ref. 1.

RESULTS

The following results are based on Intel E6850 CPU (3.00 GHz), and the parallel computing results are based on NVIDIA GeForce GTX 460. The results can be reproduced with the online codes.1

The 3D computations are performed with a typical cone-beam setting. The parameters of the cubic domain with the side length 250 are Nx = 256, Ny = 256, Nz = 192, dx = 0.98, dy = 0.98, dz = 1.30; the parameters of the detection plane are Na = 512, Nb = 384, dy = 0.776, dz = 0.776; the parameters of the scan are SO = 1000, SD = 1500, Nv = 668. The 2D fan-beam computations are performed with the central slice of the detection plane, i.e., Nx = 256, Ny = 256, dx = 0.98, dy = 0.98, Nd = 512, dy = 0.776 (detector width), SO = 1000, SD = 1500, and Nv = 668. Here, the unit of length is millimeter.

For the x-ray transform, the comparison of computational efficiency in 2D and 3D is performed between the popular Siddon's algorithm2 and the proposed algorithm, and also between their parallel versions, for the case with infinitely narrow beams. In addition, the result with the proposed algorithm for the finite-size beam in 2D is also presented. The Siddon algorithm is parallelized for each beam, and each parallel thread carries out the computation of the intersection of this beam with the 2D or 3D domain. The results are summarized in Table 1. Here, the 2D parallel computing result suggests that the new algorithm is an order of magnitude faster than the Siddon algorithm (0.05 s versus 0.61 s), which is consistent with the difference in their computational complexity per parallel thread, i.e., O(1) versus O(Nx). However, the gain in speed is reduced to two- to threefold in 3D (36 s versus 83 s), which is possibly due to the saturated parallelizability of the current GPU card (GeForce GTX 460).

Table 1.

Computational time (Unit: seconds). The first, second, and third columns represent the benchmark algorithm (Siddon), the proposed new algorithm (New), and the proposed new algorithm for the finite-size beam (New-FB), respectively, for the x-ray transform. The fourth and fifth columns represent the proposed new algorithm (Adjoint) and the proposed new algorithm for the finite-size beam (Adjoint-FB), respectively, for the adjoint x-ray transform. For rows, “2D” and “2D-p” denote the 2D computations and their parallel versions for the given fan-beam setting; “3D” and “3D-p” denote the 3D computations and their parallel versions for the given cone-beam setting.

| | Siddon | New | New-FB | Adjoint | Adjoint-FB |
|---|---|---|---|---|---|
| 2D | 4.8 | 2.6 | 8.6 | 4.7 | 6.7 |
| 2D-p | 0.61 | 0.05 | 0.18 | 0.07 | 0.09 |
| 3D | 3102 | 1960 | … | 12 770 | … |
| 3D-p | 83 | 36 | … | 238 | … |

For the adjoint x-ray transform, the computation in 2D and 3D is performed with the proposed algorithm for the case with infinitely narrow beams. Please note that the current method for computing the intersection length of a voxel with a beam in 3D is rather preliminary, and a more efficient method should improve the speed considerably. In addition, the result with the proposed algorithm for the finite-size beam in 2D is also presented. The results are summarized in Table 1.

CONCLUSION AND FUTURE WORK

In this paper, an algorithm faster than the popular Siddon's algorithm has been proposed for computing the x-ray transform, particularly for its parallel computation. This fast algorithm can also be extended for the finite-size beam. In addition, a fast and highly parallelizable algorithm has been proposed for computing the adjoint x-ray transform, which can also be extended for the finite-size beam. The proposed algorithms are suitable for parallel computing in the sense that their computational complexity is O(1) per parallel thread.

The proposed fast algorithms will serve as the critical building blocks to develop the iterative reconstruction methods for the low-dose CT imaging, such as breast imaging,21, 22 cardiac imaging,23 four-dimensional CT,24 multienergy CT,25 and radiation therapy.26, 27, 28, 29

The future work will also include the search of an efficient geometric formula for computing the intersecting volume of a finite-size beam with a voxel in order to extend the proposed algorithm for the 3D adjoint x-ray transform with the finite-size beam. In addition, more efficient geometric formulas for computing the intersecting length and area will be researched in order to further accelerate the proposed algorithm for computing the x-ray transform with the finite-size beam or the adjoint x-ray transform, especially in 3D.

ACKNOWLEDGMENT

This work is partially supported by National Institutes of Health (NIH)/NIBIB Grant No. EB013387.

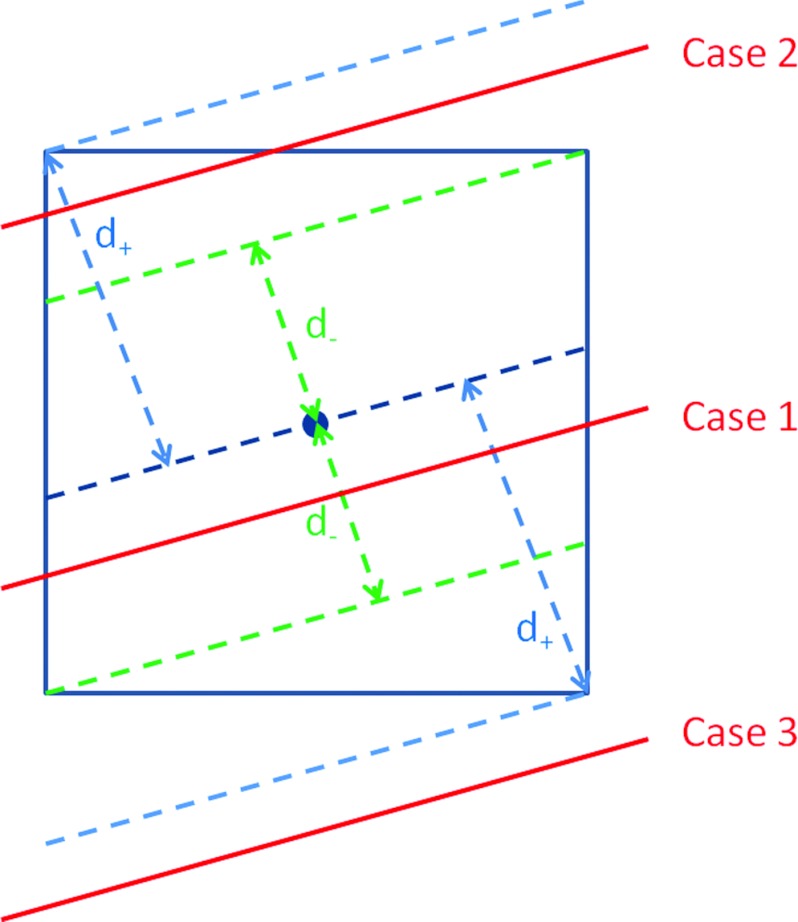

APPENDIX A: INTERSECTING LINE FORMULA IN 2D

Here, we present an efficient computation of the length of the intersection line of the infinitely narrow beam with a pixel, given the line formula ax + by = c with normalized coefficients (i.e., a² + b² = 1), and (x0, y0) as the coordinate of the pixel center. Without loss of generality, we still assume dx = dy = 1.

The distance from the pixel center to the beam is utilized to divide the computation into three cases (Fig. 7). Let a2 = |a| and b2 = |b|. The distance from the pixel center to the beam is d = |ax0 + by0 − c|, and we define d+ = (a2 + b2)/2 and d− = |a2 − b2|/2. Then the geometric formula is

| (A1) |

Figure 7.

An efficient geometric formula for computing the length of the intersection line of the infinitely narrow beam with a pixel. The computation can be conveniently divided into three cases according to the distance from the pixel center to the beam.
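As Eq. (A1) is not reproduced in this text, the following C sketch uses a standard closed form for the chord that a line cuts from a unit square, organized into the same three cases by d, d+, and d−. It may differ in form from the authors' formula, and the function name `pixel_chord_length` is hypothetical. The chord is zero when d ≥ d+, constant when d ≤ d−, and decreases linearly in between.

```c
#include <math.h>

/* Length of the intersection of the line a*x + b*y = c (with a*a + b*b = 1)
   with the unit pixel centered at (x0, y0). */
double pixel_chord_length(double a, double b, double c, double x0, double y0)
{
    double a_abs = fabs(a), b_abs = fabs(b);
    double d  = fabs(a * x0 + b * y0 - c);   /* center-to-line distance */
    double dp = 0.5 * (a_abs + b_abs);       /* d+: distance to the far corners */
    double dm = 0.5 * fabs(a_abs - b_abs);   /* d-: distance to the near corners */

    if (d >= dp) return 0.0;                 /* line misses the pixel */
    if (d <= dm)                             /* line crosses two opposite edges */
        return 1.0 / (a_abs > b_abs ? a_abs : b_abs);
    return (dp - d) / (a_abs * b_abs);       /* line cuts off a corner */
}
```

The order of the tests matters for axis-aligned lines: when a or b is zero, d+ equals d−, so one of the first two branches always applies and the division in the last branch is never reached.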

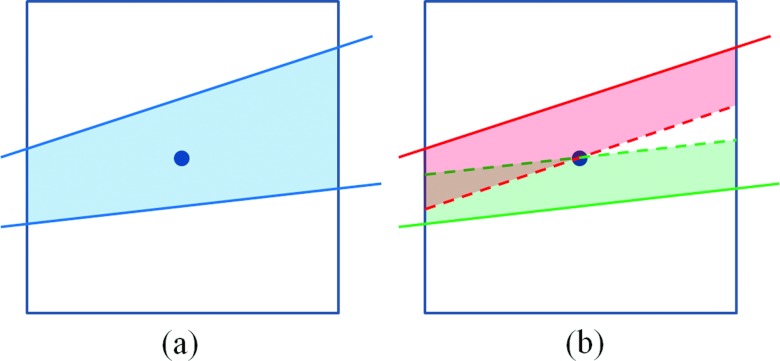

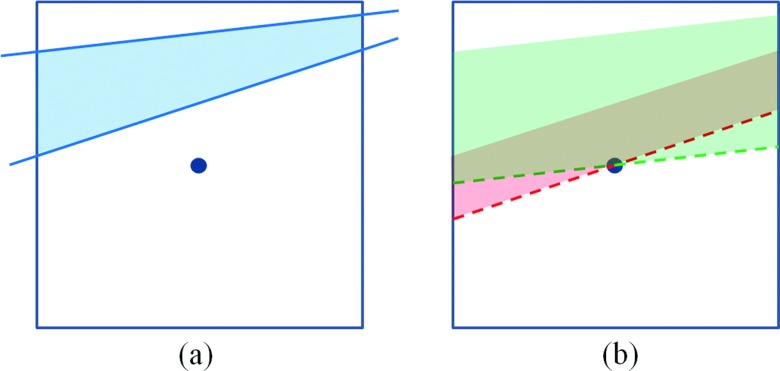

APPENDIX B: INTERSECTING AREA FORMULA IN 2D

Here, we present an efficient computation of the area of the intersection region of the finite-size beam with a pixel, given two boundary lines of the beam prescribed by a1x + b1y = c1 and a2x + b2y = c2 with normalized coefficients (i.e., a1² + b1² = a2² + b2² = 1), and (x0, y0) as the coordinate of the pixel center (Figs. 8 and 9). Without loss of generality, we still assume dx = dy = 1.

Figure 8.

When two sides of the beam are at the different side with respect to the pixel center, the area of the intersection of the beam with the pixel [the colored region in (a)] is equal to the area sum of two parallelograms [two colored regions in (b)], which can be individually computed based on the method in Fig. 7.

Figure 9.

When two sides of the beam are at the same side with respect to the pixel center, the area of the intersection of the beam with the pixel [the colored region in (a)] is equal to the absolute area difference of two parallelograms [two colored regions in (b)], which can be individually computed based on the method in Fig. 7.

Based on the method in Appendix A, we first compute the length of each boundary line of the beam l, and then each area can be individually computed by

| (B1) |

Finally, the total area of the intersection region is given by

| (B2) |

APPENDIX C: ALGORITHM FOR THE 2D X-RAY TRANSFORM

For the convenience of implementation, the proposed algorithm for the 2D x-ray transform is summarized as follows for the case with |x2-x1|>|y2-y1|, where the C language is used with the convention that the indices start from 0 instead of 1. Please note that dx = dy = 1 is assumed. For details of the CPU and GPU implementations in C see Ref. 1

| ********************************* |

| y = 0; nx2 = nx/2; ny2 = ny/2; |

| if (ABS(x1-x2)>ABS(y1-y2)) |

| { ky = (y2-y1)/(x2-x1); |

| for (ix = 0; ix<nx; ix++) |

| { xx1 = ix-nx2; xx2 = xx1+1; |

| if (ky>=0) /* order the crossings so that yy1<=yy2 */ |

| {yy1 = y1+ky*(xx1-x1)+ny2; yy2 = y1+ky*(xx2-x1)+ny2;} |

| else |

| {yy1 = y1+ky*(xx2-x1)+ny2; yy2 = y1+ky*(xx1-x1)+ny2;} |

| c1 = floor(yy1); c2 = floor(yy2); |

| if (c2==c1) /* one intersecting pixel in this column */ |

| { if (c1>=0&&c1<=ny-1) |

| {iy = c1; y += sqrt(1+ky*ky)*x[iy*nx+ix];} |

| } |

| else /* two consecutive intersecting pixels */ |

| { if (c1>=-1&&c1<=ny-1) |

| { if (c1>=0) |

| {iy = c1; y += ((c2-yy1)/(yy2-yy1))*sqrt(1+ky*ky)* |

| x[iy*nx+ix];} |

| if (c2<=ny-1) |

| {iy = c2; y += ((yy2-c2)/(yy2-yy1))*sqrt(1+ky*ky)* |

| x[iy*nx+ix];} |

| } |

| } |

| } |

| } |

| ********************************* |

APPENDIX D: ALGORITHM FOR THE 3D X-RAY TRANSFORM

For convenience of implementation, the proposed algorithm for the 3D x-ray transform is summarized below for the case |x2−x1| > |y2−y1| and |x2−x1| > |z2−z1|, written in C with indices starting from 0 rather than 1. Note that dx = dy = 1 is assumed, while the voxel size in z is dz. In the listing, (x1, y1, z1) and (x2, y2, z2) are the two endpoints of the ray, x denotes the volume array, and y denotes the accumulated line integral. For details of the CPU and GPU implementations in C, see Ref. 1.

*********************************

    /* x: volume array (nx*ny*nz, x index fastest); y: accumulated line integral.
       Only the y condition is tested below; |x2-x1|>|z2-z1| is assumed as stated in the text. */
    y = 0; nx2 = nx/2; ny2 = ny/2; nz2 = nz/2;
    if (ABS(x1-x2) > ABS(y1-y2))
    {   ky = (y2-y1)/(x2-x1);
        kz = (z2-z1)/(x2-x1);
        for (ix = 0; ix < nx; ix++)
        {   xx1 = ix-nx2; xx2 = xx1+1;
            if (ky >= 0)
            {   yy1 = y1+ky*(xx1-x1)+ny2; yy2 = y1+ky*(xx2-x1)+ny2; }
            else
            {   yy1 = y1+ky*(xx2-x1)+ny2; yy2 = y1+ky*(xx1-x1)+ny2; }
            cy1 = floor(yy1); cy2 = floor(yy2);
            if (kz >= 0)
            {   zz1 = (z1+kz*(xx1-x1))/dz+nz2; zz2 = (z1+kz*(xx2-x1))/dz+nz2; }
            else
            {   zz1 = (z1+kz*(xx2-x1))/dz+nz2; zz2 = (z1+kz*(xx1-x1))/dz+nz2; }
            cz1 = floor(zz1); cz2 = floor(zz2);
            if (cy2 == cy1)
            {   if (cy1 >= 0 && cy1 <= ny-1)
                {   if (cz2 == cz1)                 /* one voxel in this slab */
                    {   if (cz1 >= 0 && cz1 <= nz-1)
                        {   iy = cy1; iz = cz1; y += sqrt(1+ky*ky+kz*kz)*x[iz*ny*nx+iy*nx+ix]; }
                    }
                    else                            /* crossing in z only: two voxels */
                    {   if (cz1 >= -1 && cz1 <= nz-1)
                        {   rz = (cz2-zz1)/(zz2-zz1);
                            if (cz1 >= 0)
                            {   iy = cy1; iz = cz1; y += rz*sqrt(1+ky*ky+kz*kz)*x[iz*ny*nx+iy*nx+ix]; }
                            if (cz2 <= nz-1)
                            {   iy = cy1; iz = cz2; y += (1-rz)*sqrt(1+ky*ky+kz*kz)*x[iz*ny*nx+iy*nx+ix]; }
                        }
                    }
                }
            }
            else
            {   if (cy1 >= -1 && cy1 <= ny-1)
                {   if (cz2 == cz1)                 /* crossing in y only: two voxels */
                    {   if (cz1 >= 0 && cz1 <= nz-1)
                        {   ry = (cy2-yy1)/(yy2-yy1);
                            if (cy1 >= 0)
                            {   iy = cy1; iz = cz1; y += ry*sqrt(1+ky*ky+kz*kz)*x[iz*ny*nx+iy*nx+ix]; }
                            if (cy2 <= ny-1)
                            {   iy = cy2; iz = cz1; y += (1-ry)*sqrt(1+ky*ky+kz*kz)*x[iz*ny*nx+iy*nx+ix]; }
                        }
                    }
                    else                            /* crossings in both y and z: three voxels */
                    {   if (cz1 >= -1 && cz1 <= nz-1)
                        {   ry = (cy2-yy1)/(yy2-yy1); rz = (cz2-zz1)/(zz2-zz1);
                            if (ry > rz)            /* voxels (cy1,cz1), (cy1,cz2), (cy2,cz2) */
                            {   if (cy1 >= 0 && cz1 >= 0)
                                {   iy = cy1; iz = cz1; y += rz*sqrt(1+ky*ky+kz*kz)*x[iz*ny*nx+iy*nx+ix]; }
                                if (cy1 >= 0 && cz2 <= nz-1)
                                {   iy = cy1; iz = cz2; y += (ry-rz)*sqrt(1+ky*ky+kz*kz)*x[iz*ny*nx+iy*nx+ix]; }
                                if (cy2 <= ny-1 && cz2 <= nz-1)
                                {   iy = cy2; iz = cz2; y += (1-ry)*sqrt(1+ky*ky+kz*kz)*x[iz*ny*nx+iy*nx+ix]; }
                            }
                            else                    /* voxels (cy1,cz1), (cy2,cz1), (cy2,cz2) */
                            {   if (cy1 >= 0 && cz1 >= 0)
                                {   iy = cy1; iz = cz1; y += ry*sqrt(1+ky*ky+kz*kz)*x[iz*ny*nx+iy*nx+ix]; }
                                if (cy2 <= ny-1 && cz1 >= 0)
                                {   iy = cy2; iz = cz1; y += (rz-ry)*sqrt(1+ky*ky+kz*kz)*x[iz*ny*nx+iy*nx+ix]; }
                                if (cy2 <= ny-1 && cz2 <= nz-1)
                                {   iy = cy2; iz = cz2; y += (1-rz)*sqrt(1+ky*ky+kz*kz)*x[iz*ny*nx+iy*nx+ix]; }
                            }
                        }
                    }
                }
            }
        }
    }

*********************************

APPENDIX E: ALGORITHM FOR THE 2D ADJOINT X-RAY TRANSFORM

For convenience of implementation, the proposed algorithm for the 2D adjoint x-ray transform in the fan-beam setting is summarized below, written in C with indices starting from 0 rather than 1. Here, the detector offset distance y_os is taken into account. In the listing, x denotes the value accumulated for the pixel centered at (xc, yc), and y denotes the projection data. For details of the CPU and GPU implementations in C, see Ref. 1.

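*********************************

    /* x: value accumulated for the pixel centered at (xc,yc);
       y: projection data (nv views, nd detector cells per view) */
    x = 0; nd2 = nd/2;
    for (iv = 0; iv < nv; iv++)
    {   xr = cos_phi[iv]*xc+sin_phi[iv]*yc;      /* pixel center rotated into the frame of view iv */
        yr = -sin_phi[iv]*xc+cos_phi[iv]*yc;
        tmp = SD/((xr+SO)*d_y);                  /* scale from yr to detector-cell units */
        nd_max = floor((yr+1)*tmp-y_os+nd2);     /* range of detector cells that may see the pixel */
        nd_min = floor((yr-1)*tmp-y_os+nd2);
        for (id = MAX(0,nd_min); id <= MIN(nd_max,nd-1); id++)
        {   /* l: intersection length of ray (iv,id) with the pixel;
               its computation is not shown in this listing */
            if (l > 0) { x += l*y[iv*nd+id]; }
        }
    }

*********************************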

APPENDIX F: ALGORITHM FOR THE 3D ADJOINT X-RAY TRANSFORM

For convenience of implementation, the proposed algorithm for the 3D adjoint x-ray transform in the helical-scan setting is summarized below, written in C with indices starting from 0 rather than 1. Here, the detector offset distance y_os is taken into account. In the listing, x denotes the value accumulated for the voxel centered at (xc, yc, zc), and y denotes the projection data. For details of the CPU and GPU implementations in C, see Ref. 1.

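*********************************

    /* x: value accumulated for the voxel centered at (xc,yc,zc);
       y: projection data (nv views, na-by-nb detector cells per view) */
    x = 0; na2 = na/2; nb2 = nb/2; nd = na*nb;
    d = sqrt((1+dz*dz)/2);
    for (iv = 0; iv < nv; iv++)
    {   xr = cos_phi[iv]*xc+sin_phi[iv]*yc;      /* voxel center rotated into the frame of view iv */
        yr = -sin_phi[iv]*xc+cos_phi[iv]*yc;
        zr = zc-z[iv];                           /* height relative to the source position of view iv */
        tmp = SD/((xr+SO)*d_y);
        na_max = floor((yr+1)*tmp-y_os+na2);
        na_min = floor((yr-1)*tmp-y_os+na2);
        tmp = SD/((xr+SO)*d_z);
        nb_max = floor((zr+d)*tmp+nb2);          /* zr, not zc: rows are measured relative to the source */
        nb_min = floor((zr-d)*tmp+nb2);
        for (ib = MAX(0,nb_min); ib <= MIN(nb_max,nb-1); ib++)
        {   for (ia = MAX(0,na_min); ia <= MIN(na_max,na-1); ia++)
            {   /* l: intersection length of ray (iv,ia,ib) with the voxel;
                   its computation is not shown in this listing */
                if (l > 0) { x += l*y[iv*nd+ib*na+ia]; }
            }
        }
    }

*********************************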

References

1. https://sites.google.com/site/fastxraytransform.
2. Siddon R. L., “Fast calculation of the exact radiological path for a three-dimensional CT array,” Med. Phys. 12, 252–255 (1985). doi:10.1118/1.595715
3. Chen G. H., Tang J., and Leng S. H., “Prior image constrained compressed sensing (PICCS): A method to accurately reconstruct dynamic CT images from highly undersampled projection data sets,” Med. Phys. 35, 660–663 (2008). doi:10.1118/1.2836423
4. Jia X., Lou Y., Li R., Song W. Y., and Jiang S. B., “Cone beam CT reconstruction from undersampled and noisy projection data via total variation,” Med. Phys. 37, 1757–1760 (2010). doi:10.1118/1.3371691
5. Sidky E. Y. and Pan X. C., “Image reconstruction in circular cone-beam computed tomography by constrained, total-variation minimization,” Phys. Med. Biol. 53, 4777–4807 (2008). doi:10.1088/0031-9155/53/17/021
6. Wang J., Li T. F., and Xing L., “Iterative image reconstruction for CBCT using edge-preserving prior,” Med. Phys. 36, 252–260 (2009). doi:10.1118/1.3036112
7. Yu H. Y. and Wang G., “Compressed sensing based interior tomography,” Phys. Med. Biol. 54, 2791–2805 (2009). doi:10.1088/0031-9155/54/9/014
8. Vedam S. S., Keall P. J., Kini V. R., Mostafavi H., Shukla H. P., and Mohan R., “Acquiring a four-dimensional computed tomography dataset using an external respiratory signal,” Phys. Med. Biol. 48, 45–62 (2003). doi:10.1088/0031-9155/48/1/304
9. Low D., Nystrom M., Kalinin E., Parikh P., Dempsey J., Bradley J., Mutic S., Wahab S., Islam T., Christensen G., Politte D., and Whiting B., “A method for the reconstruction of four-dimensional synchronized CT scans acquired during free breathing,” Med. Phys. 30, 1254–1263 (2003). doi:10.1118/1.1576230
10. Keall P. J., Starkschall G., Shukla H., Forster K. M., Ortiz V., Stevens C. W., Vedam S. S., George R., Guerrero T., and Mohan R., “Acquiring 4D thoracic CT scans using a multislice helical method,” Phys. Med. Biol. 49, 2053–2067 (2004). doi:10.1088/0031-9155/49/10/015
11. Rietzel E., Pan T., and Chen G. T., “Four-dimensional computed tomography: Image formation and clinical protocol,” Med. Phys. 32, 874–889 (2005). doi:10.1118/1.1869852
12. Li T., Schreibmann E., Thorndyke B., Tillman G., Boyer A., Koong A., Goodman K., and Xing L., “Radiation dose reduction in four-dimensional computed tomography,” Med. Phys. 32, 3650–3660 (2005). doi:10.1118/1.2122567
13. Zhao H. and Reader A. J., “Fast ray-tracing technique to calculate line integral paths in voxel arrays,” in Proceedings of the IEEE Nuclear Science Symposium and Medical Imaging Conference (2003), pp. 2808–2812.
14. De Man B. and Basu S., “Distance-driven projection and backprojection in three dimensions,” Phys. Med. Biol. 49, 2463–2475 (2004). doi:10.1088/0031-9155/49/11/024
15. Long Y., Fessler J. A., and Balter J. M., “3-D forward and back-projection for x-ray CT using separable footprints,” IEEE Trans. Med. Imaging 29, 1839–1850 (2010). doi:10.1109/TMI.2010.2050898
16. Xu F. and Mueller K., “Accelerating popular tomographic reconstruction algorithms on commodity PC graphics hardware,” IEEE Trans. Nucl. Sci. 52, 654–663 (2005). doi:10.1109/TNS.2005.851398
17. Zhao X., Bian J., Sidky E. Y., Cho S., Zhang P., and Pan X., “GPU-based 3D cone-beam CT image reconstruction: Application to micro CT,” in Proceedings of the IEEE Nuclear Science Symposium and Medical Imaging Conference (2007), pp. 3922–3925.
18. Knaup M., Steckmann S., and Kachelrieß M., “GPU-based parallel-beam and cone beam forward- and backprojection using CUDA,” in Proceedings of the IEEE Nuclear Science Symposium and Medical Imaging Conference (2008), pp. 5153–5157.
19. Xu W. and Mueller K., “A performance-driven study of regularization methods for GPU-accelerated iterative CT,” in Proceedings of the High Performance Image Reconstruction Workshop (Beijing, China, 2009).
20. Jia X., Dong B., Lou Y., and Jiang S. B., “GPU-based iterative cone-beam CT reconstruction using tight frame regularization,” Phys. Med. Biol. 56, 3787–3807 (2011). doi:10.1088/0031-9155/56/13/004
21. Ducote J. L. and Molloi S., “Quantification of breast density with dual energy mammography: An experimental feasibility study,” Med. Phys. 37, 793–801 (2010). doi:10.1118/1.3284975
22. Sechopoulos I., “X-ray scatter correction method for dedicated breast computed tomography,” Med. Phys. 39, 2896–2903 (2012). doi:10.1118/1.4711749
23. Hsieh J., Londt J., Vass M., Li J., Tang X., and Okerlund D., “Step-and-shoot data acquisition and reconstruction for cardiac x-ray computed tomography,” Med. Phys. 33, 4236–4248 (2006). doi:10.1118/1.2361078
24. Gao H., Cai J. F., Shen Z., and Zhao H., “Robust principal component analysis-based four-dimensional computed tomography,” Phys. Med. Biol. 56, 3181–3198 (2011). doi:10.1088/0031-9155/56/11/002
25. Gao H., Yu H., Osher S., and Wang G., “Multi-energy CT based on a prior rank, intensity and sparsity model (PRISM),” Inverse Probl. 27, 115012 (2011). doi:10.1088/0266-5611/27/11/115012
26. Jiang S. B., Wolfgang J., and Mageras G. S., “Quality assurance challenges for motion-adaptive radiation therapy: Gating, breath holding, and four dimensional computed tomography,” Int. J. Radiat. Oncol., Biol., Phys. 71, S103–S107 (2008). doi:10.1016/j.ijrobp.2007.07.2386
27. Niu T., Al-Basheer A., and Zhu L., “Quantitative cone-beam CT imaging in radiation therapy using planning CT as a prior: First patient studies,” Med. Phys. 39, 1991–2000 (2012). doi:10.1118/1.3693050
28. Wang J., Li T., Liang Z., and Xing L., “Dose reduction for kilovoltage cone-beam computed tomography in radiation therapy,” Phys. Med. Biol. 53, 2897–2909 (2008). doi:10.1088/0031-9155/53/11/009
29. Xing L., Thorndyke B., Schreibmann E., Yang Y., Li T. F., Kim G. Y., Luxton G., and Koong A., “Overview of image-guided radiation therapy,” Med. Dosim. 31, 91–112 (2006). doi:10.1016/j.meddos.2005.12.004