Abstract

Prior research indicates that pigeons do not prefer an alternative that provides a sample (for matching-to-sample) over an alternative that does not provide a sample (i.e., there is no indication of which comparison stimulus is correct). However, Zentall and Stagner (2010) showed that when delay of reinforcement was controlled, pigeons had a strong preference for matching over pseudo-matching (in which there was a sample but it did not indicate which comparison stimulus was correct). Experiment 1 of the present study replicated and extended the results of the Zentall and Stagner study by including an identity relation between the sample and one of the comparison stimuli in both the matching and pseudo-matching tasks. In Experiment 2, when the two tasks were equated for probability of reinforcement, we found no systematic preference for matching over pseudo-matching. Thus, it appears that in the absence of differential reinforcement, the information provided by a sample that signals which of the two comparison stimuli is correct is insufficient to produce a preference for that alternative.

Keywords: information, conditioned reinforcement, choice, matching, pseudo-matching, pigeons

For many years comparative psychologists have asked whether animals prefer information over its absence (Prokasy, 1956; Roper & Zentall, 1999; Wyckoff, 1952). In all of these procedures, animals can choose between an alternative that produces cues signaling whether reinforcement will or will not occur and an alternative that does not produce such cues. The general finding is that animals prefer to obtain the cues, even when obtaining them does not affect the probability or rate of reinforcement. However, there is little evidence that these preferences reflect a preference for information as defined by classical information theory (Berlyne, 1957; Hendry, 1969).

According to classical information theory, the amount of information transmitted is a function of the degree to which uncertainty is reduced. Uncertainty reduction should be maximal when, before an initial choice, the outcome is totally uncertain (50% reinforcement for choice) and, following the choice, the probability of reinforcement either increases to 100% or decreases to 0%. Any shift of the overall probability of reinforcement away from 50%, whether upward or downward, should reduce the amount of information transmitted because there is less initial uncertainty to be reduced. From this perspective, information should be symmetrical: a cue for reinforcement should provide just as much information as a cue for the absence of reinforcement.
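The uncertainty-reduction argument can be made concrete with the binary entropy function. This is a sketch that assumes Shannon's measure in bits and a cue that perfectly predicts the outcome. Neither assumption is stated explicitly above.

```latex
H(p) = -p\log_2 p - (1-p)\log_2(1-p)

H(0.5) = -0.5\log_2 0.5 - 0.5\log_2 0.5 = 0.5 + 0.5 = 1 \text{ bit}

H(0.875) = H(0.125) = -0.125\log_2 0.125 - 0.875\log_2 0.875
         \approx 0.375 + 0.169 = 0.544 \text{ bits}
```

A perfectly predictive cue removes all of this initial uncertainty, so the information it transmits equals H(p). That quantity is maximal at 50% and identical for 87.5% and 12.5%.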

Roper and Zentall (1999) tested this prediction of information theory by manipulating the overall probability of reinforcement. They started with an overall probability of reinforcement of 50%. One alternative provided, on a random 50% of trials, a cue that always predicted reinforcement and, on the remaining trials, a cue that signaled the absence of reinforcement. The other alternative provided one of two cues on each trial, each associated with 50% reinforcement. Under these conditions they found a strong preference for the alternative that provided cues for reinforcement and its absence. Consistent with information theory, Roper and Zentall found that increasing the overall probability of reinforcement associated with the two alternatives to 87.5% decreased the preference for the alternative associated with the discriminative stimuli. (In this condition, one alternative provided a cue for reinforcement 87.5% of the time and a cue for the absence of reinforcement 12.5% of the time, whereas the other alternative provided one of two cues on each trial, each associated with 87.5% reinforcement.) Contrary to information theory, however, lowering the overall probability of reinforcement to 12.5% should also have decreased the preference for the discriminative-stimulus alternative, but it actually increased it. (In this condition, one alternative provided a cue for reinforcement 12.5% of the time and a cue for the absence of reinforcement 87.5% of the time, whereas the other alternative provided one of two cues on each trial, each associated with 12.5% reinforcement.)

An alternative theory was proposed by Dinsmoor (1983). He suggested that animals prefer the alternative that produces the better conditioned reinforcer. For example, a good conditioned reinforcer such as a signal for 100% reinforcement would be preferred over a poorer conditioned reinforcer such as a signal for an uncertain 50% reinforcement. The asymmetry found by Roper and Zentall (1999) is consistent with a conditioned reinforcement interpretation because the signal for 100% reinforcement would be compared with the stimuli of the uncertain alternative. When the overall probability of reinforcement associated with the two alternatives increased (to 87.5%), the difference in the probability of reinforcement between the two conditioned reinforcers became relatively small (100% − 87.5% = 12.5%). Thus, the preference for the conditioned reinforcer decreased. However, when the overall probability of reinforcement associated with the two alternatives decreased (to 12.5%), the difference in the probability of reinforcement between the two conditioned reinforcers became relatively large (100% − 12.5% = 87.5%). Thus, the preference for the conditioned reinforcer increased. It appears, then, that preference for discriminative stimuli may be controlled by the preference for a cue that reliably predicts reinforcement, that is, a conditioned reinforcer.
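The opposing predictions of the two accounts for the three probabilities used by Roper and Zentall (1999) can be tabulated in a short Python sketch. It assumes that information is measured as Shannon entropy in bits. It also assumes that conditioned-reinforcement strength can be indexed by the simple difference in reinforcement probability used in the paragraph above. The function names are illustrative and not taken from any published analysis.

```python
import math


def binary_entropy(p: float) -> float:
    """Shannon entropy (bits) of a two-outcome event with probability p.

    A perfectly predictive cue removes all of this uncertainty, so this is
    the information it transmits under classical information theory.
    """
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def conditioned_reinforcement_gap(p: float) -> float:
    """Difference between the 100% signal and the p-valued nondiscriminative
    cues, following the subtraction in the text (e.g., 1.0 - 0.875 = 0.125)."""
    return 1.0 - p


def compare_predictions(probabilities):
    """Return (p, information in bits, conditioned-reinforcement gap) rows."""
    return [(p, round(binary_entropy(p), 3), conditioned_reinforcement_gap(p))
            for p in probabilities]


if __name__ == "__main__":
    for p, info, gap in compare_predictions([0.125, 0.5, 0.875]):
        print(f"p = {p:.3f}  information = {info:.3f} bits  gap = {gap:.3f}")
```

The information column is symmetric about 50%, whereas the gap column grows steadily as the overall probability falls. This is the asymmetry the conditioned reinforcement account uses to explain the 12.5% result.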

To fully account for the results of Roper and Zentall (1999), one must also posit that the negative effect of the stimulus associated with the absence of food (inhibition) does not detract from the discriminative-stimulus alternative sufficiently to counteract the effects of conditioned reinforcement. In support of this hypothesis, Stagner and Zentall (2010) found that pigeons will prefer discriminative stimuli even when the ratio of stimuli associated with the absence of reinforcement to stimuli associated with reinforcement was 4 to 1 and the nondiscriminative-stimulus alternative provided 2.5 times as much food as the discriminative-stimulus alternative.

Recently, Roberts, Feeney, McMillan, MacPherson, Musolino, and Petter (2009, Exp. 4) modified the task used by Roper and Zentall (1999) by replacing the simple successive discrimination in the terminal link with a conditional discrimination. Pigeons were offered an initial-link choice between a conditional discrimination (matching-to-sample) in which they could obtain close to 100% reinforcement and the same task but without a sample, that is, with a simultaneous discrimination in which they could obtain only about 50% reinforcement because there was no cue to indicate which of the comparison stimuli was correct on each trial. Surprisingly, the pigeons showed little preference for the matching task that would have provided them with almost twice as much reinforcement.

Zentall and Stagner (2010) argued that differential delay of reinforcement may have played a role in the finding by Roberts et al. (2009). Typically, in matching-to-sample research, a fixed number of responses to the sample is required, or the sample is presented for a fixed duration, before the comparison stimuli are presented. However, in an attempt to make the sample and no-sample tasks comparable, Roberts et al. presented the sample and the two comparison stimuli at the same time. After choosing either task, therefore, the pigeons could immediately choose one of the comparison stimuli. If a pigeon chose the sample-absent alternative, it could choose one of the comparison stimuli and possibly receive reinforcement immediately, though of course only 50% of the time. If the pigeon chose the matching-to-sample task, however, obtaining a high probability of reinforcement required it to attend to and identify the sample and then locate the correct (matching) comparison stimulus, which would have taken additional time. As pigeons are known to have relatively steep delay-discounting functions (Green, Myerson, Holt, Slevin, & Estle, 2004), it may not be surprising that they were relatively indifferent between the higher probability of delayed reinforcement (that they could obtain with the matching-to-sample alternative) and the immediate 50% reinforcement (that they could obtain with the sample-absent alternative).
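The delay-discounting argument can be illustrated with a worked equation. This is a sketch under stated assumptions. It uses the common hyperbolic discounting form, multiplies value by the probability of reinforcement, and treats the discounting rate k as a free, hypothetical parameter. None of these choices is specified in the studies discussed here.

```latex
% Discounted value of a reinforcer of amount A, probability p, delay D (s):
V = \frac{pA}{1 + kD}

% Indifference between matching (p_m, extra delay D^*) and the
% sample-absent alternative (p_s, no extra delay):
\frac{p_m A}{1 + kD^{*}} = p_s A
\quad\Longrightarrow\quad
D^{*} = \frac{1}{k}\left(\frac{p_m}{p_s} - 1\right)

% With p_m \approx 1.0 and p_s = 0.5:
D^{*} \approx \frac{1}{k}
```

Under these assumptions, the larger k is (the steeper the discounting), the shorter the additional delay needed to offset the roughly twofold advantage in probability of reinforcement.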

To test this hypothesis, Zentall and Stagner (2010) equated the nominal delay to reinforcement for the two tasks by using a pseudo sample, rather than no sample, in the alternative task. If a pigeon chose the matching task, it received a sample for 5 s followed by presentation of the comparison stimuli, and choice of the matching comparison stimulus was reinforced. If the pigeon chose the pseudo-matching task, it also received a sample for 5 s followed by comparison stimuli. In this task, however, neither comparison stimulus matched the sample, and the color of the sample did not indicate which comparison stimulus was correct. Thus, choice of the pseudo-matching alternative resulted in 50% reinforcement. Under those conditions, the pigeons showed a clear preference for the matching-to-sample alternative. When delay to reinforcement is controlled, then, pigeons do show a preference for an alternative that provides a sample they can use to choose the correct comparison stimulus over one that does not.

The advantage of using a conditional discrimination (Zentall & Stagner, 2010) over a simple successive discrimination (Roper & Zentall, 1999) is that although the two conditional stimuli provide information in the form of a cue that signals which comparison stimulus is correct, the comparison stimuli are not differentially associated with reinforcement. Thus, they should not become differential conditioned reinforcers, as is the case with a simple successive discrimination in which one stimulus is associated with reinforcement and the other with the absence of reinforcement.

The purpose of the present experiments was to further explore pigeons' sensitivity to the relation between the sample and the comparison stimuli. In Experiment 1, we asked whether the results found by Zentall and Stagner (2010) were obtained because the pigeons preferred the task in which one of the comparison stimuli matched the sample (i.e., there was an identity relation between them). The alternative was that the matching task provided a conditional stimulus that signaled which comparison stimulus was correct. In Experiment 2, we asked whether pigeons would prefer a matching task even if it was not associated with a higher probability of reinforcement. That is, would they prefer a task in which reinforcement was contingent on choice of the comparison stimulus that matched the sample over a pseudo-matching task in which the sample did not indicate which comparison was correct, when the two tasks were equated for probability of reinforcement? This is another way of asking whether pigeons prefer information (in the classic sense) over its absence. In the case of matching-to-sample, however, there should be no differential conditioned reinforcer, as there was in the Roper and Zentall (1999) experiment. In addition, the probability and delay of reinforcement should be the same for the two tasks. If pigeons prefer information, they should choose the alternative that provides them with a matching task over one that yields the same probability of reinforcement but does not provide information about which comparison stimulus is correct.

Experiment 1

In Experiment 1 we tested the hypothesis that preference for the alternative that provided a matching-to-sample task depended on the identity relation between the sample and one of the comparison stimuli. In this experiment, one alternative provided an identity matching task in which choice of the comparison stimulus that matched the sample was reinforced, whereas the other alternative provided a pseudo-matching task in which one of the comparison stimuli matched the sample but choice of either comparison stimulus was reinforced on a random 50% of the trials.

Method

Subjects

The subjects were eight unsexed White Carneau pigeons ranging from 5-8 years of age. They were retired breeders purchased from the Palmetto Pigeon Plant, Sumter, South Carolina. The pigeons had all served as subjects in a previous experiment involving daily reversals of a simultaneous hue discrimination. The pigeons were kept on a 12:12-h light/dark cycle and were maintained at 80-85% of their free-feeding body weight. The pigeons had free access to grit and water, and were cared for in accordance with the University of Kentucky's animal care guidelines.

Apparatus

A standard (LVE/BRS, Laurel, Md.) test chamber was used, with inside measurements of 35 cm high, 30 cm long, and 35 cm across the response panel. The response panel in the chamber had a horizontal row of three response keys, 25 cm above the floor. The rectangular keys (2.5 cm high × 3.0 cm wide) were separated from each other by 1.0 cm. Behind each key was a 12-stimulus inline projector (Industrial Electronics Engineering, Van Nuys, Calif.). The projectors displayed a white plus and a white line-drawn circle on a black background, as well as red, yellow, blue, green, and white hues (Kodak Wratten Filter Nos. 26, 9, 38, 60, and no filter, respectively). In the chamber, the bottom of the center-mounted feeder (filled with Purina Pro Grains) was 9.5 cm from the floor. When the feeder was raised, it was illuminated by a 28 V, 0.04 A lamp. A 28 V, 0.1 A houselight was centered above the response panel, and an exhaust fan was mounted on the outside of the chamber to mask extraneous noise. A microcomputer in the adjacent room controlled the experiment.

Procedure

Pretraining

All pigeons received two pretraining sessions in which they were required to peck once at each discriminative stimulus on each key (yellow, red, green, blue), as well as white on the center key and circle and plus on the side keys, for 1.5 s access to mixed-grain reinforcement. Each session consisted of five presentations of each stimulus in each position, for a total of 75 trials per session.

Training

All trials began with a white light presented on the center key. A single peck to the white stimulus illuminated one or both of the side keys. On forced matching trials, the plus, for example, appeared on one of the side keys. One peck extinguished the plus and illuminated, for example, a red or green sample on the center key for 5.0 s. The sample was then turned off, and red and green comparison stimuli appeared on the side keys. Choice of the comparison color that matched the sample was reinforced and started the 10-s intertrial interval (ITI), whereas choice of the other comparison led directly to the ITI. There were 32 forced matching trials per session.

On forced pseudo-matching trials, the circle, for example, appeared on the other side key. One peck extinguished the circle and illuminated, for example, a blue or yellow stimulus on the center key for 5.0 s. The stimulus was then turned off, and blue and yellow comparison stimuli appeared on the side keys. On a random half of these trials, choice of either comparison stimulus was reinforced and started the ITI. On the remaining half of the pseudo-matching trials, the comparison choice was not reinforced and led directly to the ITI. There were 32 forced pseudo-matching trials per session.

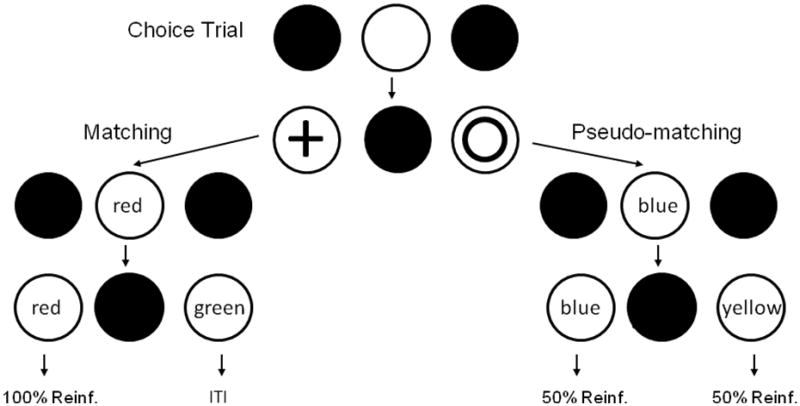

On choice trials, both the plus and the circle were presented on the side keys following the response to the white center key. A single peck to either shape stimulus was followed by the contingencies associated with that stimulus on forced trials. There were 32 choice trials in each session, randomly mixed among the forced trials. The design of Experiment 1 appears in Figure 1. For a given pigeon, the shape stimuli appeared on the same side keys throughout the experiment. The assignment of shapes to sides, as well as the assignment of colors to the matching and pseudo-matching tasks, was counterbalanced over subjects. The pigeons received 16 training sessions.

Figure 1.

Design of Experiment 1: Choice trials. Pigeons could choose between matching and pseudo-matching. The red and blue samples on the third line were, on other trials, green and yellow, respectively. The spatial location of the comparison colors (left and right) was counterbalanced over trials (fourth line). Shapes and colors were counterbalanced over subjects.
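The session structure described in the procedure can be sketched as a trial generator in Python. This is a minimal illustration under several assumptions. The color sets represent one hypothetical counterbalancing assignment. Comparison side is drawn at random rather than strictly counterbalanced. Exactly 16 of the 32 forced pseudo-matching trials are designated as reinforced. How reinforcement was scheduled on choice trials is not modeled. The function and field names are illustrative.

```python
import random

# Hypothetical color assignment for one pigeon; in the experiment, colors
# (and shape-to-side assignments) were counterbalanced over subjects.
MATCHING_COLORS = ("red", "green")
PSEUDO_COLORS = ("blue", "yellow")


def build_session(n_per_type=32, seed=None):
    """Return one session's trials (forced matching, forced pseudo-matching,
    and choice trials) in random order."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_per_type):
        # Simplification: sample color and left-key comparison are random.
        trials.append({"type": "forced_matching",
                       "sample": rng.choice(MATCHING_COLORS),
                       "left_comparison": rng.choice(MATCHING_COLORS)})
    # Assumption: exactly half of the forced pseudo-matching trials are
    # designated as reinforced, independent of which comparison is chosen.
    half = n_per_type // 2
    flags = [True] * half + [False] * (n_per_type - half)
    rng.shuffle(flags)
    for reinforced in flags:
        trials.append({"type": "forced_pseudo",
                       "sample": rng.choice(PSEUDO_COLORS),
                       "reinforced": reinforced})
    trials.extend({"type": "choice"} for _ in range(n_per_type))
    rng.shuffle(trials)  # choice trials are randomly mixed among forced trials
    return trials
```

For example, `build_session(seed=1)` returns 96 trials (32 of each type) in random order.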

Results and Discussion

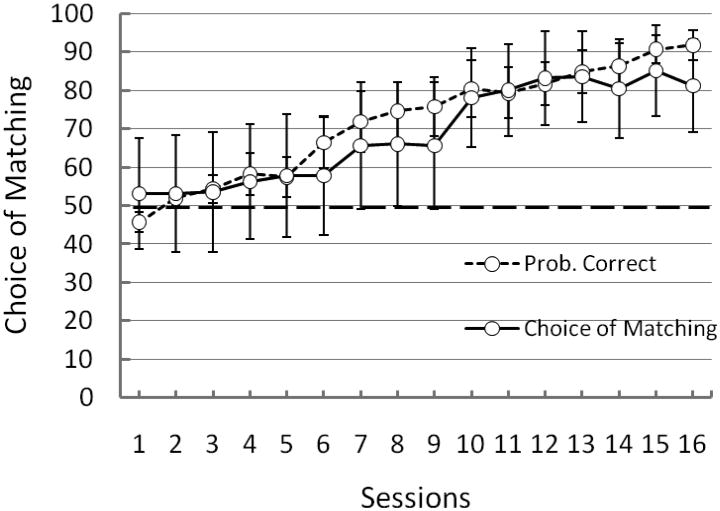

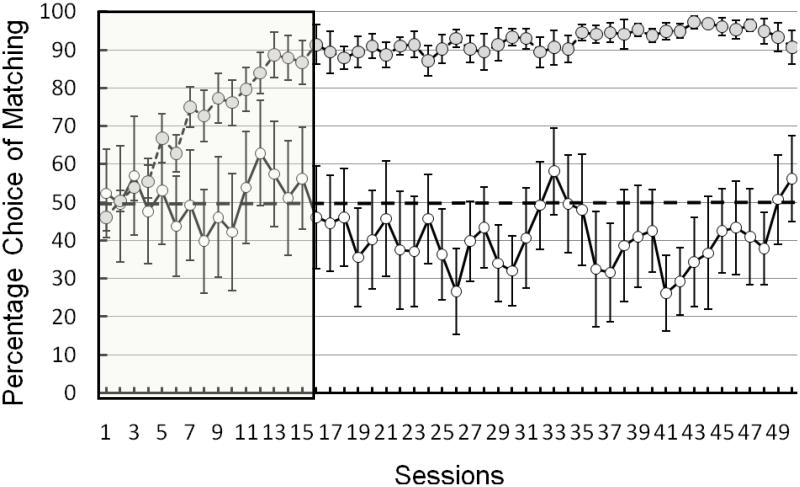

The pigeons acquired the matching task quickly (see Figure 2). By Session 15 they were performing at 90.6% correct. In parallel with their acquisition of matching, the pigeons showed a preference for the matching over the pseudo-matching alternative. By Session 15 they chose the matching alternative on 85.2% of the choice trials. A t-test performed on the matching accuracy scores pooled over the last 5 sessions of training indicated that the pigeons were performing significantly above chance, t(7) = 8.93, p < .0001. A t-test performed on the pigeons' choice of the matching alternative pooled over the last 5 sessions of training indicated that they had a strong preference for that alternative, t(7) = 2.72, p = .03. Finally, we asked if matching accuracy was greater than the preference for the matching alternative. When pooled over the last 5 sessions of training, the difference between matching accuracy and matching preference was not statistically significant, t < 1.

Figure 2.

Experiment 1: Preference for the matching alternative over the pseudo-matching alternative as a function of training session (solid lines). Matching accuracy on forced trials (dashed lines).

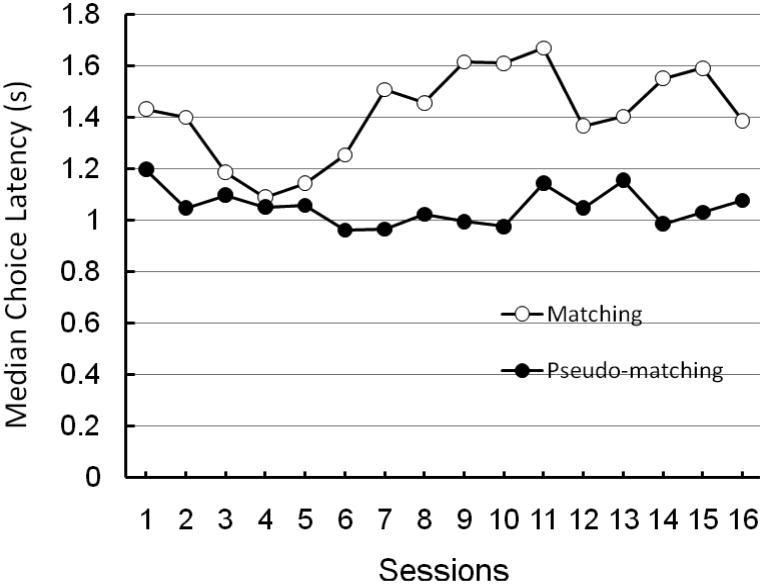

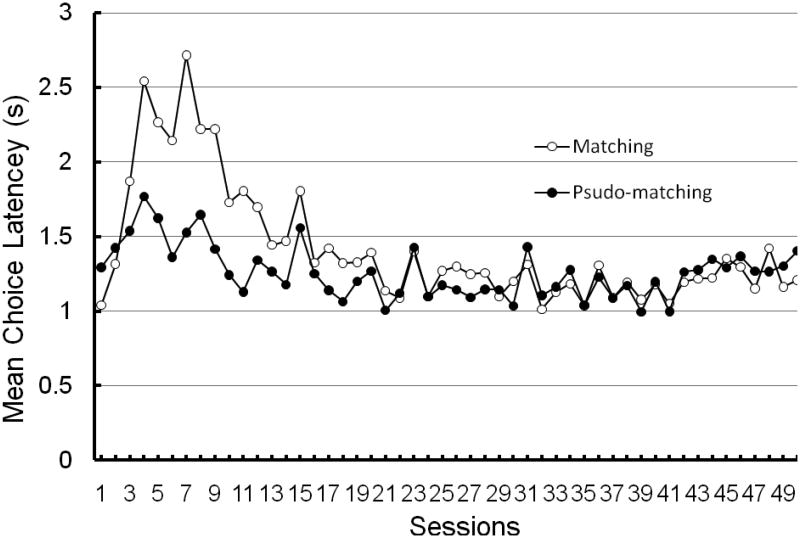

An examination of the comparison choice latencies pooled over all sessions indicated that they were longer on matching trials (1.41 s) than on pseudo-matching trials (1.05 s) (see Figure 3). A correlated t-test performed on the choice latencies pooled over training sessions indicated that this difference was significant, t(7) = 3.76, p = .007. To assess the terminal difference in comparison choice latency, the latency data were also pooled over the last 6 training sessions. A correlated t-test indicated that the difference in latencies between matching (1.49 s) and pseudo-matching (1.07 s) trials was also significant, t(7) = 2.75, p = .028. Thus, the pigeons took somewhat longer (about 0.4 s) to make their comparison choices on matching trials than on pseudo-matching trials. However, this difference in comparison choice latency cannot account for the preference for the matching alternative. If anything, the longer delay to reinforcement on matching trials should have reduced the preference for matching over pseudo-matching trials.

Figure 3.

Experiment 1: Comparison choice latencies on matching and pseudo-matching trials as a function of training session.

These results were quite similar to those of Zentall and Stagner (2010), who reported matching accuracy of 81.3% at Session 15 and choice of the matching alternative over the pseudo-matching alternative of 82.8% at that point in training. Thus, the results reported by Zentall and Stagner were not due to the absence, in their pseudo-matching task, of an identity match between the sample and one of the comparison stimuli. When both the matching and pseudo-matching tasks involved a potential identity relation, as in the present study, preference for the matching alternative was at least as strong as it was in the Zentall and Stagner study.

The present results also confirm that when delay to reinforcement is carefully controlled, pigeons are sensitive to the probability of reinforcement and will choose to see a sample rather than no sample or a pseudo sample. Together with the results of Zentall and Stagner, these results indicate that a particular finding by Roberts et al. (2009, Experiment 4) was very likely due to the simultaneous matching procedure that they used. Roberts et al. found that pigeons do not prefer a sample (that could result in near 100% reinforcement) over the absence of a sample (that could result in only 50% reinforcement). When the pigeons chose the matching-task alternative, they would have had to identify the sample color and then look for the matching comparison color on that trial (sometimes on the left, sometimes on the right). When they chose the alternative that did not include a sample, by contrast, they could have selected one of the comparison stimuli without regard to its identity. The difference in choice latency between these two chains could have been 1-2 s.

A question unrelated to the purpose of the present experiment is what the pigeons chose on forced pseudo-matching trials. Recall that these trials were designated as reinforced or not reinforced independent of comparison choice. One possibility is that the pigeons showed evidence of generalized matching. That is, choice of the matching comparison was reinforced whenever the colors associated with the identity matching task appeared, so the pigeons might have generalized choice of the matching comparison to pseudo-matching trials. However, no evidence of generalized matching was found on pseudo-matching trials. Over the last 10 sessions of training on forced pseudo-matching trials, five of the eight pigeons showed a strong position bias. Four of these preferred the right key (mean = 99.2%) and one preferred the left key (100%). The remaining three pigeons showed a strong color bias, with one pigeon each preferring red (100%), green (100%), and blue (96.5%). The position biases would have been expected because the pigeons could have anticipated pecking their preferred comparison location at the time of pseudo-sample presentation. The color preferences, however, were not anticipated. Pecking the preferred comparison color would have required locating that color before choosing it, thereby incurring a small additional delay.

Experiment 2

Roper and Zentall (1999) found that pigeons preferred discriminative stimuli of equal frequency (one stimulus associated with 100% reinforcement or a different stimulus associated with 0% reinforcement) over nondiscriminative stimuli (both stimuli associated with 50% reinforcement) in spite of the fact that the alternatives were associated with an equal overall rate of reinforcement.

According to information theory (Berlyne, 1957; Hendry, 1969), this preference may result from a preference for information over the absence of information. However, Dinsmoor (1983) proposed that the preference resulted from the strong conditioned reinforcement provided by the stimulus associated with 100% reinforcement.

As already noted, Roper and Zentall (1999) offered support for the conditioned reinforcement hypothesis. They found that although the pigeons showed a decrease in preference for the discriminative stimulus alternative when the probability of reinforcement associated with each alternative was increased (consistent with information theory), they found an increase in preference for the discriminative stimulus alternative when the probability of reinforcement associated with each alternative was decreased (contrary to information theory but consistent with conditioned reinforcement).

The design of Experiment 1 provides an alternative means of distinguishing between information theory and conditioned reinforcement. This requires that the matching and pseudo-matching alternatives be followed by similar, rather than different, probabilities of reinforcement. In this design, the initial choice is followed by a conditional discrimination. In Roper and Zentall's design, it was followed sometimes by a simple conditioned reinforcer (a stimulus followed by 100% reinforcement) and at other times by a simple conditioned inhibitor (a stimulus followed by 0% reinforcement). With a conditional discrimination, there should be no differential conditioned reinforcement and thus no differential preference. On the other hand, if information theory is correct, the pigeons should prefer informative samples over uninformative samples, even if the probability of reinforcement is equated.

The purpose of Experiment 2 was to determine if pigeons will prefer an alternative that provides them with information in the form of a sample that indicates which comparison stimulus is correct, over a sample that provides no information but is associated with the same probability of reinforcement. If information has value for pigeons, one might expect them to prefer the matching alternative, especially during the acquisition of the matching task.

Method

Subjects

The subjects were eight unsexed White Carneau pigeons ranging from 5-8 years of age. They were retired breeders purchased from the Palmetto Pigeon Plant, Sumter, South Carolina. The pigeons were kept on a 12:12-h light/dark cycle and were maintained at 80-85% of their free-feeding body weight. They had free access to grit and water and were cared for in accordance with the University of Kentucky's animal care guidelines. All of the pigeons had taken part in a previous experiment in which they had learned a simultaneous discrimination between red and yellow key lights and between blue and green key lights.

Apparatus

The apparatus was the same as that used for Experiment 1.

Procedure

Pretraining

All pigeons received two pretraining sessions in which they were required to peck once at each discriminative stimulus on each key (yellow, red, green, blue), as well as white on the center key and circle and plus on the side keys, for 1.5 s access to mixed-grain reinforcement. Each session consisted of five presentations of each stimulus in each position, for a total of 75 trials per session.

Equating for Reinforcement

All trials began with a white light presented on the center key. A single peck to the white stimulus illuminated one or both of the side keys. On forced matching trials, the plus appeared on one of the side keys. One peck extinguished the plus and illuminated a red or green sample on the center key for 5 s. The sample was then turned off, and red and green comparison stimuli appeared on the side keys. Choice of the comparison hue that matched the sample was reinforced and started the 10-s ITI, whereas choice of the other comparison led directly to the ITI. There were 32 forced matching trials per session.

On forced pseudo-matching trials, the circle appeared on the other side key. One peck extinguished the circle and illuminated a blue or yellow pseudo sample on the center key for 5 s. The pseudo sample was then turned off, and blue and yellow comparison stimuli appeared on the side keys. If the trial was designated as one with reinforcement, a response to either comparison key was reinforced (independent of the hue of the pseudo sample) and started the 10-s ITI. If the trial was designated as one without reinforcement, neither comparison response was reinforced, and the response led directly to the 10-s ITI. There were 32 forced pseudo-matching trials per session.

The number of forced pseudo-matching trials on which choice was reinforced was contingent upon the number of correct forced matching trials in the previous session. On the first training session, the number of reinforced pseudo-matching trials was set at 16 (i.e., 50% of the 32 trials). The pigeons were acquiring the matching task and would show improvement from session to session. To compensate, one additional reinforcement was added to the number of reinforced pseudo-matching trials if the number of correct forced matching trials was odd, and two additional reinforcements were added if it was even. For example, if a pigeon was correct on 24 of the forced matching trials in one session, it would receive reinforcement on 26 of the 32 pseudo-matching trials on the following day. The same approximate ratio of reinforcement was applied to choice trials whenever the pseudo-matching alternative was chosen.
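The session-to-session rule for setting the number of reinforced pseudo-matching trials can be written as a small Python function. This is a sketch of the rule as described. The cap at 32 (the number of pseudo-matching trials per session) is an assumption not stated in the text. The function names are hypothetical.

```python
TRIALS_PER_TYPE = 32            # forced pseudo-matching trials per session
FIRST_SESSION_REINFORCERS = 16  # 50% of 32 on the first training session


def pseudo_reinforcers(prev_correct=None, n_trials=TRIALS_PER_TYPE):
    """Number of reinforced forced pseudo-matching trials for the next session,
    given the number of correct forced matching trials in the previous one."""
    if prev_correct is None:  # first training session
        return FIRST_SESSION_REINFORCERS
    bonus = 1 if prev_correct % 2 == 1 else 2  # odd: +1, even: +2
    # Assumption: the count cannot exceed the number of pseudo-matching trials.
    return min(prev_correct + bonus, n_trials)


def schedule(correct_by_session):
    """Reinforcer counts for sessions 1..N+1, given matching scores for 1..N."""
    return [pseudo_reinforcers()] + [pseudo_reinforcers(c) for c in correct_by_session]
```

With the example from the text, `pseudo_reinforcers(24)` gives 26. In effect, the rule sets the count to the smallest even number greater than the previous session's matching score. This keeps the pseudo-matching task slightly ahead of a pigeon that is still improving.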

On choice trials, both the plus and the circle were presented on the side keys following the response to the white center key. A single peck to either shape stimulus was followed by the contingencies associated with that stimulus on forced trials. There were 32 choice trials in each session, randomly mixed among the forced trials. For a given pigeon, the shape stimuli appeared on the same side keys throughout the experiment. The assignment of shapes to sides, as well as the assignment of colors to the matching and pseudo-matching tasks, was counterbalanced over subjects. The pigeons received 50 sessions of training.

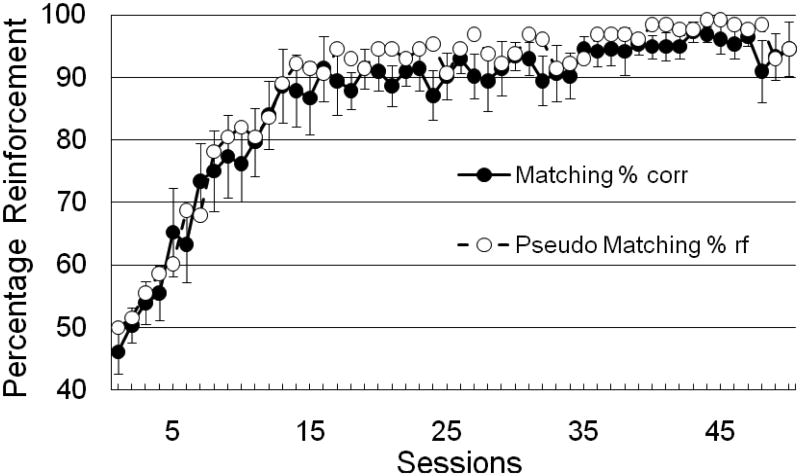

Results

In an attempt to equate the probability of reinforcement on matching and pseudo-matching trials, the number of pseudo-matching trials on which choice of either comparison was reinforced in any session depended on the number of correct matching trials in the preceding session. To confirm that this procedure resulted in comparable probabilities of reinforcement, the probability of reinforcement on forced matching and pseudo-matching trials was plotted as a function of session (see Figure 4). As can be seen in the figure, the probability of reinforcement associated with the matching and the pseudo-matching tasks was approximately the same throughout training.

Figure 4.

Experiment 2: Matching accuracy on forced trials as a function of training session (filled circles) and probability of reinforcement on forced pseudo-matching trials (open circles).

When the matching task and the pseudo-matching task were equated for probability of reinforcement, no consistent preference was found on choice trials (see Figure 5). Although individual pigeons showed large fluctuations in preference over sessions, if anything, the pigeons tended to favor the pseudo-matching alternative. The mean preference for the matching alternative when pooled over the 50 training sessions was 43.7%, a level that was not significantly different from 50%, t(7) < 1. The mean preference for the matching alternative pooled over the last 10 training sessions was 39.8%, a level that once again was not significantly different from chance, t(7) = 1.07.

Figure 5.

Experiment 2: Preference for the matching alternative over the pseudo-matching alternative (open circles; error bars = ±sem) and accuracy on matching choice trials (filled circles) as a function of training session.

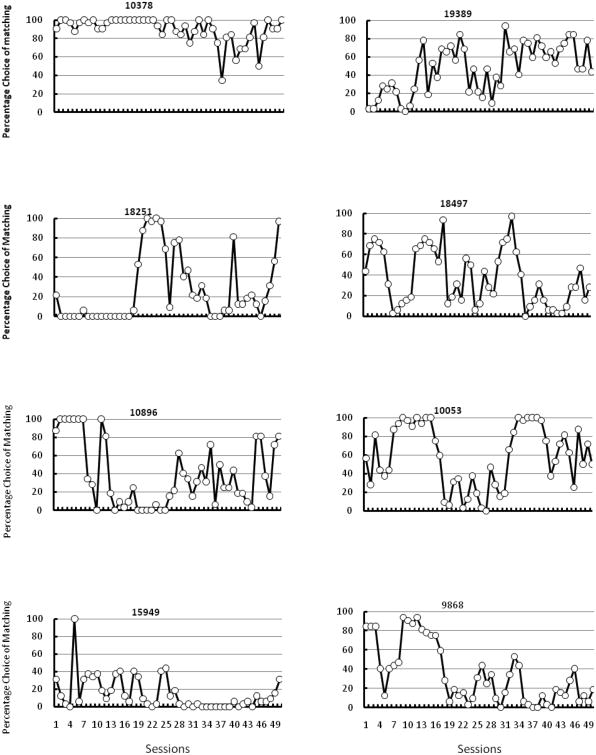

To examine individual differences and changes in preference for the matching alternative, the results for individual pigeons are presented in Figure 6. As can be seen in the figure, there was no consistent preference over training either within or between pigeons. Pigeon 10378 showed a somewhat consistent preference for the matching alternative, but that preference became inconsistent toward the end of training. Pigeon 10053 was initially inconsistent but after a few sessions of training preferred the matching alternative. It then preferred the pseudo-matching alternative, returned to the matching alternative, and became inconsistent toward the end of training. Pigeon 19389 originally preferred the pseudo-matching alternative. After showing a preference for the matching alternative for a few sessions, it returned to the pseudo-matching alternative and then to the matching alternative at the end of training. Pigeon 18497 was quite inconsistent throughout training but overall showed a somewhat stronger preference for the pseudo-matching alternative. Pigeon 10896 preferred the matching alternative at the start of training, then showed a strong preference for the pseudo-matching alternative, and then showed an erratic preference, mostly for the pseudo-matching alternative. Pigeon 15949 showed a mostly consistent preference for the pseudo-matching alternative, especially toward the end of training. Finally, Pigeon 9868 mostly preferred the matching alternative early in training but mostly preferred the pseudo-matching alternative later in training.

Figure 6.

Experiment 2: Preference for the matching alternative over the pseudo-matching alternative for individual pigeons.

One could argue that, because the probability of reinforcement in the pseudo-matching task was equated to that in the matching task, once the matching task was acquired to a high degree, whatever response bias the pigeons had developed in the pseudo-matching task would have been reinforced most of the time. Thus, it would be primarily during acquisition of the matching task that the samples in the matching task would be more informative than the samples in the pseudo-matching task. For this reason, in Figure 5, we placed a box around the matching acquisition sessions (the 15 sessions in which matching accuracy was below 90%) to indicate where the samples in the matching task should have been most informative relative to the samples in the pseudo-matching task. As can be seen in the figure, there was no indication of a reliable preference for the matching alternative over those acquisition sessions.

Matching accuracy on choice trials on which the matching alternative was chosen also appears in Figure 5. As one might expect, those data are quite similar to matching accuracy on forced matching trials (presented in Figure 4).

When pooled over all sessions in Experiment 2, comparison choice latencies were numerically longer on matching trials (1.42 s) than on pseudo-matching trials (1.26 s) (see Figure 7), but a correlated t-test indicated that this difference was not significant, t < 1. When pooled over the last 6 sessions, the difference in latencies between matching (1.49 s) and pseudo-matching (1.07 s) trials was also not significant by a correlated t-test, t < 1. However, choice latencies on matching trials appeared to be longer early in training, during the sessions in which matching was acquired. For this reason, a separate correlated t-test was conducted on the matching and pseudo-matching latencies from the first 15 training sessions. That analysis, too, indicated that the difference (1.89 s matching, 1.42 s pseudo-matching) was not statistically significant, t(3) = 2.0, p > .05. Furthermore, following matching acquisition, the comparison choice latencies on matching and pseudo-matching trials were virtually indistinguishable.
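A correlated (paired) t-test, as used for the latency comparisons above, is a one-sample t-test on each subject's difference score. The sketch below assumes one mean latency per pigeon per trial type. The latencies are hypothetical, not the values behind Figure 7, and `paired_t` is an illustrative name.

```python
import math
import statistics


def paired_t(x, y):
    """Correlated-samples t: a one-sample t-test of the within-subject differences against 0."""
    if len(x) != len(y):
        raise ValueError("x and y must contain one value per subject")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return statistics.mean(diffs) / se, n - 1


# Hypothetical per-pigeon mean latencies in seconds (NOT the study's data).
matching = [1.9, 1.3, 1.6, 1.2, 1.5, 1.1, 1.4, 1.4]
pseudo = [1.3, 1.4, 1.2, 1.3, 1.1, 1.2, 1.3, 1.2]
t, df = paired_t(matching, pseudo)
print(f"t({df}) = {t:.2f}")
```

Because each pigeon contributes a latency in both conditions, working with difference scores removes between-pigeon variation in overall response speed.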

Figure 7.

Experiment 2: Comparison choice latencies on matching and pseudo-matching trials as a function of training session.

Discussion

The purpose of Experiment 2 was to determine whether pigeons would prefer the matching task over the pseudo-matching task if the probability of reinforcement was (approximately) equal for the two tasks. We asked whether pigeons would prefer an informative sample that consistently allowed them to choose the comparison stimulus that would provide reinforcement over an uninformative sample for which reinforcement did not depend on which comparison stimulus was chosen. We found that when the probability of reinforcement was equated, the pigeons showed no consistent preference for the matching alternative. Thus, under the present conditions, in the absence of a clear conditioned reinforcer, information per se appears to play little role in pigeons' choice.

A surprising outcome of Experiment 2 was the relatively unstable preference for the matching alternative shown by most of the pigeons. We expected that the pigeons would either show indifference by choosing each alternative about half of the time or show idiosyncratic but consistent preferences for one alternative or the other. Instead, most pigeons showed strong, consistent preferences over blocks of sessions but then often reversed them, a pattern that is difficult to explain.

In Experiment 2, we found no reliable difference between latencies on matching and pseudo-matching trials, whereas in Experiment 1 latencies were somewhat longer on matching trials than on pseudo-matching trials. It is not obvious why this difference did not appear in Experiment 2. Latencies on matching trials were somewhat longer in Experiment 1 than in Experiment 2, whereas latencies on pseudo-matching trials were somewhat shorter in Experiment 1 than in Experiment 2. Comparison choice latencies do appear to be longer on matching trials during acquisition (compare the early training sessions in the two experiments). Because the preference for the matching alternative appeared very quickly in Experiment 1, training was not extended as it was in Experiment 2, so a larger share of the Experiment 1 latencies may have come from the acquisition phase.

General Discussion

The results of Experiment 1 confirm and extend the results of Zentall and Stagner (2010). They indicate that the earlier preference for the matching task over the pseudo-matching task did not depend on the fact that one of the comparison stimuli in the matching task matched the sample, whereas neither comparison stimulus in the pseudo-matching task matched the pseudo-sample. In both Zentall and Stagner (2010) and Experiment 1, when delay to reinforcement was controlled, the pigeons showed a strong preference for matching over pseudo-matching.

The results of Experiment 2 indicate that in the absence of differential reinforcement, pigeons do not appear to prefer an alternative that provides a cue that informs them which comparison stimulus is correct. Thus, in contrast to the results of Roper and Zentall (1999), when the initial choice does not provide a clear conditioned reinforcer, but instead provides a conditional stimulus (or an occasion setter, a stimulus such as a sample that modulates responding to another stimulus; see Holland, 1983), that alternative is not preferred by pigeons. This finding offers additional support for the conditioned reinforcement account (Dinsmoor, 1983), which bases the pigeon's preference on the change in predictive value of the stimuli that follow the choice.

According to the conditioned reinforcement account, a stimulus will be preferred if it is a better predictor of reinforcement when it is present than when it is absent. When the terminal link consists of a simple successive discrimination, as in Roper and Zentall (1999), the positive discriminative stimulus predicts reinforcement better after the initial choice is made (100%) than before it is made (50%), whereas selection of the other alternative does not improve prediction of reinforcement: the probability is 50% both before and after that alternative is selected.

However, if one adopts the conditioned reinforcement account, one must assume that the negative stimulus (associated with the absence of reinforcement) plays less of a role than the positive stimulus. That is, the aversive properties of the conditioned inhibitor must not outweigh the reinforcing effects of the conditioned reinforcer.
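The asymmetry assumption in the conditioned reinforcement account can be made concrete with a toy calculation. This is our own illustration, not a model from the cited work. It assumes 50% overall reinforcement and scores each alternative by the expected change in predicted reinforcement that follows the choice. It also introduces a hypothetical weight, `neg_weight`, on decreases in prediction. With equal weighting, the informative and uninformative alternatives tie; only when the negative stimulus counts for less does the informative alternative come out ahead.

```python
def alternative_value(outcomes, baseline=0.5, neg_weight=1.0):
    """Expected change in the predicted probability of reinforcement after a choice.

    outcomes: (probability the stimulus follows the choice,
               probability of reinforcement given that stimulus) pairs.
    Decreases in prediction are scaled by neg_weight (a hypothetical parameter);
    neg_weight < 1 means the negative stimulus counts for less than the positive one.
    """
    total = 0.0
    for p_stimulus, p_reinforcement in outcomes:
        change = p_reinforcement - baseline
        if change < 0:
            change *= neg_weight
        total += p_stimulus * change
    return total


# Informative alternative: S+ (100%) on half the trials, S- (0%) on the other half.
informative = [(0.5, 1.0), (0.5, 0.0)]
# Uninformative alternative: a single stimulus that still predicts 50%.
uninformative = [(1.0, 0.5)]

for w in (1.0, 0.5):
    print(f"neg_weight={w}: informative={alternative_value(informative, neg_weight=w)}, "
          f"uninformative={alternative_value(uninformative, neg_weight=w)}")
```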

According to conditioned reinforcement theory, the value of a signal for reinforcement is determined by the probability of reinforcement in the presence of the signal relative to the probability of reinforcement in its absence (Gibbon & Balsam, 1981; Jenkins, Barnes, & Barrera, 1981; Stagner & Zentall, 2010; Zentall & Stagner, 2011). Thus, if the overall probability of reinforcement goes down (i.e., the signal for reinforcement occurs less often) but the signal remains a good predictor of reinforcement, the conditioned reinforcer should increase in value. Conversely, if the signal for reinforcement occurs frequently, it is not very informative because, relative to its absence, it does not do much better at predicting reinforcement.
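The trade-off described for conditioned reinforcement theory can be illustrated with one deliberately simple formalization. This formalization is assumed here rather than taken from the cited papers, which differ in detail. The value of a perfectly reliable signal is taken as P(reinforcement | signal) divided by the overall probability of reinforcement. We use the overall probability as the denominator because, in this toy case, reinforcement never occurs without the signal, so a ratio to the signal's absence would be undefined. The function name is hypothetical.

```python
def signal_value(p_signal, p_rf_given_signal=1.0, p_rf_without_signal=0.0):
    """Ratio of P(reinforcement | signal) to the overall P(reinforcement).

    p_signal: how often the signal occurs (hypothetical values below).
    Returns how many times more likely reinforcement is when the signal is present.
    """
    p_overall = p_signal * p_rf_given_signal + (1 - p_signal) * p_rf_without_signal
    if p_overall == 0:
        raise ValueError("reinforcement never occurs, so the ratio is undefined")
    return p_rf_given_signal / p_overall


# A perfectly reliable signal that occurs more or less often.
for p in (0.9, 0.5, 0.2):
    print(f"signal on {p:.0%} of trials -> value ratio {signal_value(p):.2f}")
```

As the signal becomes rarer while remaining a perfect predictor, the ratio grows. When the signal is nearly always present, the ratio approaches 1, meaning the signal predicts little beyond what is already expected.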

Classical information theory views information as symmetrical: a signal for the absence of food should have just as much information value as a signal for the presence of food. However, consistent with the results of Roper and Zentall (1999), Fantino and his colleagues (Case, Fantino, & Wixted, 1985; Fantino & Case, 1983; Fantino, Case, & Altus, 1983; Fantino & Silberberg, 2010) have shown that humans prefer “good news” (conditioned reinforcement) over “no news,” but also prefer “no news” over “bad news” (a presumed conditioned inhibitor). That is, they do not prefer information if it is associated with a bad outcome. In contrast, Lieberman, Cathro, Nichol, and Watson (1997) found that humans will prefer bad news over no news as long as the information is useful.
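The symmetry claim of classical information theory follows from treating information as the reduction in Shannon entropy (in bits) about whether reinforcement will occur. The sketch below assumes a 50% prior, as in the introduction's example. A cue that raises the probability to 100% and a cue that lowers it to 0% each remove exactly one bit. A prior other than 50% starts with less than one bit of uncertainty to remove. The function name `entropy_bits` is our own.

```python
import math


def entropy_bits(p):
    """Shannon entropy (bits) of a binary outcome that occurs with probability p."""
    if p in (0.0, 1.0):
        return 0.0  # a certain outcome carries no uncertainty
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


prior = 0.5
# "Good news" moves P(reinforcement) to 1; "bad news" moves it to 0.
gain_good = entropy_bits(prior) - entropy_bits(1.0)
gain_bad = entropy_bits(prior) - entropy_bits(0.0)
print(gain_good, gain_bad)  # 1.0 1.0

# With a 25% prior there is less uncertainty to reduce in the first place.
print(round(entropy_bits(0.25), 3))  # 0.811
```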

More recent models of information theory include a temporal parameter, such that the information value of a conditioned stimulus or signal includes the degree to which the onset of the signal reduces the expected time to the next reinforcement (Balsam & Gallistel, 2009). This view of information theory is similar to delay-reduction theory (Fantino, 1969) because it considers not just the absolute delay to reinforcement but the rate of reinforcement predicted by the signal for reinforcement relative to its absence. It has even been suggested that the function of a conditioned reinforcer is not to strengthen behavior but to predict when and where the primary reinforcer is available, a role that Rachlin (1976) has referred to as the discriminative stimulus hypothesis of conditioned reinforcement (see also Shahan, 2010).
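The temporal information account and delay-reduction theory both compare waiting times with and without the signal. The sketch below uses a common simplified statement of each, which may differ in detail from the cited formulations. Delay reduction is expressed as the proportion of the overall wait removed by the signal's onset. Informativeness is expressed as the base-2 logarithm of the expected wait in the context divided by the expected wait once the signal has appeared. Function names and timings are hypothetical.

```python
import math


def delay_reduction(context_wait, signal_wait):
    """Proportion of the mean wait for reinforcement removed by the signal's onset.

    context_wait: mean time to reinforcement measured without regard to the signal.
    signal_wait: mean time to reinforcement measured from signal onset.
    """
    return (context_wait - signal_wait) / context_wait


def informativeness_bits(context_wait, signal_wait):
    """log2 of the ratio of expected waits; 0 bits means the signal adds nothing."""
    return math.log2(context_wait / signal_wait)


# Hypothetical timings (seconds): a 60-s mean wait overall, 15 s once the signal comes on.
print(delay_reduction(60.0, 15.0))       # 0.75
print(informativeness_bits(60.0, 15.0))  # 2.0
```

Both quantities grow as the wait after signal onset shrinks relative to the overall wait, which is why the two views make similar predictions. They differ in whether the comparison is expressed as a proportion of time saved or as a logarithmic ratio.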

How these theories would deal with the results of Experiment 2 of the present study is not clear. If they view the comparison stimuli as conditioned reinforcers, in the sense that they have been similarly associated with reinforcement, then they would predict that the pigeons would be indifferent between the two alternatives, because each comparison stimulus in either matching or pseudo-matching was associated with the same probability of reinforcement. If, however, the conditioned reinforcers were considered to be the successive presentation of the sample and the correct comparison stimulus (i.e., a successive compound stimulus), then both theories would predict a preference for the matching alternative over the pseudo-matching alternative, because in the matching task the two stimuli together would predict a higher probability of reinforcement than the pseudo-sample and either comparison in the pseudo-matching task. Although these theories have not addressed conditional discrimination procedures, if, as these theories suggest, organisms learn what, where, and when events occur in the environment (Gallistel & Gibbon, 2002), then they should predict that matching would be preferred over pseudo-matching.
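To make the two readings of the conditioned reinforcer concrete, here is a toy enumeration under stated assumptions. It assumes two comparison colors, equally likely red and green samples, and a hypothetical post-acquisition matching accuracy `a`. It further assumes that pseudo-matching is reinforced with that same probability regardless of choice, which is our simplification of "equated." All function names are hypothetical. The sketch computes the probability of reinforcement given the chosen comparison alone, and given the sample-comparison compound.

```python
from collections import defaultdict
from fractions import Fraction

HALF = Fraction(1, 2)


def trial_types(task, a):
    """Yield (sample, chosen comparison, P(trial type), P(reinforcement | trial type)).

    Toy assumptions: red and green samples are equally likely; in matching, the
    pigeon picks the matching comparison with probability a and only that choice
    is reinforced; in pseudo-matching, a single pseudo-sample is shown and either
    choice is reinforced with probability a (equated reinforcement).
    """
    if task == "matching":
        for sample in ("red", "green"):
            other = "green" if sample == "red" else "red"
            yield sample, sample, HALF * a, Fraction(1)
            yield sample, other, HALF * (1 - a), Fraction(0)
    elif task == "pseudo":
        for choice in ("red", "green"):
            yield "pseudo", choice, HALF, a
    else:
        raise ValueError(f"unknown task: {task}")


def p_reinforced(task, a, key):
    """P(reinforcement | key(sample, choice)) for every value of key that can occur."""
    mass, reinforced = defaultdict(Fraction), defaultdict(Fraction)
    for sample, choice, p, p_rf in trial_types(task, a):
        if p == 0:
            continue  # skip trial types that never happen (e.g. errors when a == 1)
        k = key(sample, choice)
        mass[k] += p
        reinforced[k] += p * p_rf
    return {k: reinforced[k] / mass[k] for k in mass}


def by_comparison(sample, choice):
    return choice


def by_sample_and_comparison(sample, choice):
    return (sample, choice)


a = Fraction(9, 10)  # hypothetical post-acquisition matching accuracy
print(p_reinforced("matching", a, by_comparison))             # each comparison: 9/10
print(p_reinforced("pseudo", a, by_comparison))               # each comparison: 9/10
print(p_reinforced("matching", a, by_sample_and_comparison))  # matching pairs 1, errors 0
print(p_reinforced("pseudo", a, by_sample_and_comparison))    # every pair: 9/10
```

Under these assumptions, the individual comparison stimuli predict reinforcement equally in the two tasks, which is the indifference prediction. In contrast, the sample-plus-correct-comparison compound in the matching task predicts reinforcement with certainty, better than any compound in the pseudo-matching task, which is the prediction that matching should be preferred.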

The present results add to the growing evidence that although pigeons prefer stimuli that differentially predict reinforcement and its absence, this preference is not due to the formal information value of those stimuli: when the conditioned reinforcement value of the comparison stimuli is controlled, the information value of the sample-comparison stimulus combination is insufficient to maintain a preference for the matching alternative.

Acknowledgments

This research was supported by Grant HD060996 from the National Institute of Child Health and Human Development.

References

- Balsam P, Gallistel CR. Temporal maps and informativeness in associative learning. Trends in Neurosciences. 2009;32:73–78. doi: 10.1016/j.tins.2008.10.004.

- Berlyne DE. Uncertainty and conflict: A point of contact between information-theory and behavior-theory concepts. The Psychological Review. 1957;64:329–339. doi: 10.1037/h0041135.

- Case DA, Fantino E, Wixted J. Human observing: Maintained by negative informative stimuli only if correlated with improvement in response efficiency. Journal of the Experimental Analysis of Behavior. 1985;43:289–300. doi: 10.1901/jeab.1985.43-289.

- Dinsmoor JA. Observing and conditioned reinforcement. The Behavioral and Brain Sciences. 1983;6:693–728.

- Dinsmoor JA, Browne MP, Lawrence CE. A test of the negative stimulus as a reinforcer of observing. Journal of the Experimental Analysis of Behavior. 1972;18:79–85. doi: 10.1901/jeab.1972.18-79.

- Fantino E. Choice and rate of reinforcement. Journal of the Experimental Analysis of Behavior. 1969;12:723–730. doi: 10.1901/jeab.1969.12-723.

- Fantino E, Case DA. Human observing: Maintained by stimuli correlated with reinforcement but not extinction. Journal of the Experimental Analysis of Behavior. 1983;40:193–210. doi: 10.1901/jeab.1983.40-193.

- Fantino E, Case DA, Altus D. Observing reward-informative and –uninformative stimuli by normal children of different ages. Journal of Experimental Child Psychology. 1983;36:437–452.

- Fantino E, Silberberg A. Revisiting the role of bad news in maintaining human observing behavior. Journal of the Experimental Analysis of Behavior. 2010;93:157–170. doi: 10.1901/jeab.2010.93-157.

- Gallistel CR, Gibbon J. The symbolic foundations of conditioned behavior. Mahwah, NJ: Lawrence Erlbaum Associates; 2002.

- Gibbon J, Balsam P. Spreading association in time. In: Locurto CM, Terrace HS, Gibbon J, editors. Autoshaping and conditioning theory. New York: Academic Press; 1981. pp. 219–253.

- Green L, Myerson J, Holt DD, Slevin JR, Estle SJ. Discounting of delayed food rewards in pigeons and rats: Is there a magnitude effect? Journal of the Experimental Analysis of Behavior. 2004;81:39–50. doi: 10.1901/jeab.2004.81-39.

- Hendry DP. Introduction. In: Hendry DP, editor. Conditioned reinforcement. Homewood, IL: Dorsey; 1969.

- Jenkins HM, Barnes RA, Barrera FJ. Why autoshaping depends on trial spacing. In: Locurto CM, Terrace HS, Gibbon J, editors. Autoshaping and conditioning theory. New York: Academic Press; 1981. pp. 255–284.

- Lieberman DA, Cathro JS, Nichol K, Watson E. The role of S2 in human observing behavior: Bad news is sometimes better than no news. Learning and Motivation. 1997;28:20–42.

- Prokasy WF. The acquisition of observing responses in the absence of differential external reinforcement. Journal of Comparative and Physiological Psychology. 1956;49:131–134. doi: 10.1037/h0046740.

- Rachlin H. Behavior and learning. San Francisco: W. H. Freeman; 1976.

- Roberts WA, Feeney MC, McMillan N, MacPherson K, Musilino E, Petter M. Do pigeons (Columba livia) study for a test? Journal of Experimental Psychology: Animal Behavior Processes. 2009;35:129–142. doi: 10.1037/a0013722.

- Roper KL, Zentall TR. Observing behavior in pigeons: The effect of reinforcement probability and response cost using a symmetrical choice procedure. Learning and Motivation. 1999;30:201–220.

- Shahan TA. Conditioned reinforcement and response strength. Journal of the Experimental Analysis of Behavior. 2010;93:269–289. doi: 10.1901/jeab.2010.93-269.

- Stagner JP, Zentall TR. Suboptimal choice behavior by pigeons. Psychonomic Bulletin & Review. 2010;17:412–416. doi: 10.3758/PBR.17.3.412.

- Wyckoff LB. The role of observing responses in discrimination learning: Part 1. Psychological Review. 1952;59:431–442. doi: 10.1037/h0053932.

- Zentall TR, Stagner JP. Pigeons prefer conditional stimuli over their absence: A comment on Roberts et al. (2009). Journal of Experimental Psychology: Animal Behavior Processes. 2010;36:506–509. doi: 10.1037/a0020202.

- Zentall TR, Stagner JP. Maladaptive choice behavior by pigeons: An animal analog of gambling (sub-optimal human decision making behavior). Proceedings of the Royal Society B: Biological Sciences. 2011;278:1203–1208. doi: 10.1098/rspb.2010.1607.