Abstract

Health literacy is associated with a person’s capacity to find, access, contextualize, and understand information needed for health care-related decisions. The level of health literacy thus has an influence on an individual’s health status. It can be argued that low health literacy is associated with poor health status. Health care literature (eg, pamphlets, brochures, postcards, posters, forms) is published by public and private organizations worldwide to provide information to the general public. The ability to read, use, and understand this literature is critical to the successful application of the knowledge it disseminates. This study assessed the readability, suitability, and usability of health care literature associated with concussion and traumatic brain injury published by the United States Centers for Disease Control and Prevention. The Flesch–Kincaid Grade Level, Flesch Reading Ease, Gunning Fog, Simple Measure of Gobbledygook, and Suitability Assessment of Materials indices were used to assess 40 documents obtained from the Centers for Disease Control and Prevention website. The documents analyzed were targeted towards the general public. It was found that in order to be read properly, on average, these documents needed more than an eleventh grade/high school level education. This was consistent with the findings of other similar studies. However, the qualitative Suitability Assessment of Materials index showed that, on average, the usability and suitability of these documents were superior. Hence, it was concluded that formatting, illustrations, layout, and graphics play a pivotal role in improving health care-related literature and, in turn, promoting health literacy. Based on the comprehensive literature review and assessment of the 40 documents associated with concussion and traumatic brain injury, recommendations have been made for improving the readability, suitability, and usability of health care-related documents. The recommendations are presented in the form of an incremental improvement process cycle and a list of dos and don’ts.

Keywords: readability, usability, suitability, CDC health literature, concussion, traumatic brain injury

Introduction

The American Medical Association reported that one in every three patients in the United States (US) has basic or below basic health literacy.1 Inefficiencies and ineffectiveness in delivering health care due to poor health literacy cost US$58 billion per year.1 Within the context of this study, health literacy can be best defined as the degree to which individuals have the capacity to access, process, and understand basic health information and obtain services needed to make appropriate health-related choices.2 MedlinePlus identified that when a person’s health literacy is limited, (s)he has difficulty doing things such as filling out forms, finding providers, and understanding the specifics of medication.3 The objectives of the Healthy People 2020 project include the improvement of health literacy amongst the population.4 Specifically, the project aims to increase the proportion of persons who report that their health care provider always gave them easy-to-understand instructions related to their illness or health condition.

Naidu showed that on an international level, health literacy has an influence on an individual’s health status and that low health literacy is related to low health status.5 Further studies by Lauder et al on patients aged ≥ 65 years determined that low health literacy had a strong relationship with mortality.6 They reported that within 67.8 months of a treatment, 39.4% of patients with low health literacy died as opposed to 18.9% with high health literacy. Osborn et al further clarified the relationship between health literacy and mortality.7 They indicated that literacy was a significant predictor of human immunodeficiency virus patients’ nonadherence to a medicine regimen. Proposed solutions to the prevalence of low health literacy vary; they place the onus on both the patient and the health care provider to ensure effective health understanding. Research by Hay concerning the use of the Internet by patients showed that while health literacy has improved, so has the need for patients to empower themselves to use this new technology more effectively and to take charge of their own health.8 These materials were designed for the general public. Davis et al identified that the average reading ability in the US is equal to that of an eighth grader in the US school system.9 A survey conducted by the National Assessment of Adult Literacy identified that about 40% of the 19,000 subjects surveyed were below the basic level of proficiency in prose, document, and quantitative literacy skills.10 This study aims to add to the growing body of literature about the importance of improving health literacy, which in turn aids better patient care. This is achieved by examining the readability of pamphlets and brochures related to concussion and traumatic brain injury available on the Centers for Disease Control and Prevention (CDC) website for the general public.

The paper is structured to present a review of existing studies on the readability of health care materials. The focus is then limited to concussion and traumatic brain injury publications from CDC. This is followed by a presentation of the analyses of the documents collected. The results of the analyses provide the basis for recommendations on improving the readability of health care materials.

Readability and health literacy

Parker et al specified that multiple governmental organizations such as the Joint Commission on Accreditation of Health Care Organizations and the National Committee for Quality Assurance are focused on creating guidelines for patient readability of materials.11 Williams et al put forth that health literacy is a problem worthy of research because of the gap that exists between the literacy skills of the average American and the readability level of patient education materials published for public consumption.12 McCray put forth that reading grade level has emerged as a standard for understanding health care document readability.13 Doak et al suggested modifying terminology to use common words and phrases, larger font sizes, and larger space between lines in text.14 They felt that this would make materials easier to read for people whose reading ability falls below the average grade level.

A standard instrument to measure health literacy is the Test of Functional Health Literacy in Adults. In a study of urban public hospitals, the Test of Functional Health Literacy in Adults determined that health literacy was significantly higher in patients aged ≥ 60 years than in younger patients.15 While age influences readability, Gazmararian et al constructed a study to understand readability’s influence.16 They showed that discharge instructions, consent forms, and medical education brochures often are written at levels exceeding patients’ reading skills.

Readability studies of health care literature

Several studies that examined the readability of health care-related materials targeted at the general public were reviewed. These studies indicated that health care-related materials are not as readable as desired. That is, they do not cater to the reading and literacy skills of their target audience. Hendrickson et al examined 27 pediatric oral health pamphlets or brochures.17 These materials were meant for parents of young children. The indices used for assessment were: (1) readability: using the Flesch–Kincaid Grade Level, Flesch Reading Ease, and Simple Measure of Gobbledygook (SMOG) indices; (2) thoroughness: inclusion of topics important to young children’s oral health; (3) textual framework: frequency of complex phrases and use of pictures, diagrams, and bulleted text within materials; and (4) terminology: frequency of difficult words and dental jargon. These materials were from commercial, government, industry, and private nonprofit sources within the US. Hendrickson et al noticed that the materials they analyzed had varied levels of readability. The materials were not always well suited for their target patient population. They also noticed that the government materials were more readable as compared to those published by commercial and industry entities.17

There are several studies that evaluated the readability of health care-related material by comparing it with the readability of local newspapers. Nicoll and Harrison compared the readability of health-related pamphlets with English national newspapers.18 They found that the pamphlets were not as readable as desired for the targeted audience. The authors felt that some of the specialist and commercially produced pamphlets were difficult to read. The authors suggested that readability formulae could be useful in the initial assessment of health care-related literature. In a similar study, Oates and Oates analyzed health care pamphlets intended for parents.19 They used the Flesch Reading Ease and Flesch–Kincaid Grade Level indices for their analysis. The parameters examined were (1) readability and (2) human interest. Further, Herreid described that in fast-paced novels, where characters’ names abound, the human interest score could be around 20.20 In readable nonfiction magazines, such as Time or Newsweek, it ranges between six and eight. Oates and Oates compared the readability scores of the health care pamphlets with those for daily Sydney newspapers.19 They observed that (1) pamphlets produced by government agencies were more readable when compared to pamphlets produced by nongovernment agencies, (2) pamphlets produced with input from the parents (target audience) were the most readable of all, (3) pamphlets produced by government agencies had higher human interest scores when compared to pamphlets produced by nongovernment agencies, (4) pamphlets produced with input from the parents had the highest human interest scores of all, and (5) the readability and human interest scores of government-produced pamphlets were similar to those of the daily Sydney newspapers. Oates and Oates put forth that health care literature for parents needs to be easy to understand. They suggested involving parents in the production of the pamphlets to help improve their readability, human interest scores, and thus effectiveness.19

Murphy et al conducted a study to compare the readability of 99 adult-subject information leaflets for medical research projects with the readability of nine New Zealand newspaper editorials and nine popular magazine articles.21 They calculated the Flesch Reading Ease, Flesch–Kincaid Grade Level, and Gunning Fog indices using a computerized grammar check. They found that (1) the subject information leaflets were easier to read as compared to newspaper editorials, but more difficult to read when compared to popular magazine articles, and (2) the level of secondary school education required to read the leaflets averaged at 2 years, which is equivalent to a form four rating. Newspaper editorials had a form four rating, while magazine articles had a form one rating. They concluded that readability scores are useful in assessing the ease with which information can be read. Along the same lines, Wong audited the readability of patient information leaflets intended for marketing proprietary antiepileptic drugs in the United Kingdom.22 The study compared twelve leaflets with six antiepileptic drug articles from medical journals and six headline articles from United Kingdom newspapers. Readability was judged using the scores obtained on the Gunning Fog and Flesch Reading Ease indices. Through these readability indices, this study established that the leaflets were suitable for the reading age of the general adult population. The Audit Commission in the United Kingdom recommends that patient information leaflets should be audited by health professionals using a formal readability test.22

Amini et al assessed the readability of American Academy of Pediatric Dentistry patient education brochures.23 They compared the readability of these brochures with the readability recommended by health education experts. This assessment used the Gunning Fog and Flesch Reading Ease indices to measure the readability of the association’s brochures. They found that the association’s brochures were above the sixth grade reading level recommended by the health experts. Thus the authors put forth that readability formulae could be used to help improve the reading ease of health education materials.23 In another study, Grossman et al assessed the readability of consent forms used to describe clinical oncology protocols.24 They analyzed 137 consent forms at the Johns Hopkins Oncology Center. The indices used to measure readability were the Flesch Reading Ease, Flesch–Kincaid Grade Level, and Gunning Fog indices. The authors concluded that from a patient point-of-view, the consent forms were more difficult to read than desired. They put forth that the consent process could be improved by increasing the readability of the consent forms. Mumford examined the readability of 24 health care-related information leaflets, designed by nurses.25 These leaflets were meant for patients. He used the Flesch Reading Ease index, Gunning Fog index, and SMOG readability formula for his assessment. Through the scores obtained, he established that patients could not comprehend the information on the leaflets easily. Mumford mentioned that the results of his study were similar to the results of other related studies he had reviewed.

Clauson et al assessed the readability of dietary supplement leaflets used for diabetes mellitus and chronic fatigue syndrome.26 These leaflets were meant for patients. They used the Flesch–Kincaid Grade Level and Health Information Readability Analyzer to evaluate readability. They recorded that these leaflets were written above the sixth grade reading level recommended by experts and thus were more difficult to read than desired. They also put forth that the Health Information Readability Analyzer was a better tool for measuring readability as compared to the Flesch–Kincaid Grade Level.

Collins et al identified that according to Health Insurance Portability and Accountability Act (HIPAA) regulations, agencies must furnish patients with authorizations that they can read and understand.27 They put forth that half of the American population reads at an eighth grade level. The authors studied HIPAA templates provided by 51 institutional review boards to judge if they catered to this reading level. They used the Flesch Reading Ease index, the Dale–Chall formula, and the Fry Readability Graph method to measure readability. Their results showed that the mean readability of the templates, estimated with the Flesch Reading Ease index and the Fry Readability Graph method, was at high school reading level or above. Similarly, the readability using the Dale–Chall formula was at a ninth grade level. They concluded that the HIPAA authorization forms were difficult to read for the targeted population and patients were being asked to read health care-related material that was higher than their reading level. Osborne et al identified that there was only one study that evaluated the readability of advance directives.28 Advance directives help people convey how they wish to receive end-of-life care.29 This study evaluated ten advance directive documents using the Flesch–Kincaid Grade Level and Gunning Fog indices. The average reading level of these documents was found to be equivalent to the reading level of an adult who has completed 14 grade levels of education. Osborne et al asserted that people often read two to five levels below their highest level of education. Thus the advance directives were not ideal for the general public.28 Breese and Burman evaluated the readability of privacy practices forms from the top 185 health care institutions in the US.30 HIPAA articulates that these forms must be in plain language. SMOG, Flesch–Kincaid Grade Level, and Flesch Reading Ease indices were used to measure readability. They concluded that (1) the privacy notifications were lengthier than desired, (2) the font size used was smaller than desired, and (3) these forms shared characteristics with major medical literature journals, which indicated a higher reading level requirement and greater language complexity.

Using the aforementioned studies as examples, it is plausible to assume that most health care-related literature is less readable than desired. In other words, health care-related literature is difficult for the average patient to read and comprehend. The studies indicate that, on average, the general public finds it difficult to read and understand health care-related brochures and pamphlets. Agencies designing these pamphlets and brochures do not seem to be evaluating their material with respect to the target audience. Furthermore, readability assessment can be performed using well-established indices such as those listed earlier.

Sampling of studies in health care

Badarudeen and Sabharwal presented the importance and relevance of health literacy as the single best predictor of an individual’s health.31 They put forth that Medicaid, the US health care program meant for people with low income and resources, costs about four times more for those who do not have adequate health literacy. The authors also established that adequate health literacy facilitates improved communication between the health care provider and the patient, which in turn improves health care quality. They suggested raising awareness among health care providers about the importance of designing health care-related material for the general public.

Banasiak and Meadows-Oliver studied the readability of six websites that provided information on asthma.32 They chose websites that had previously been evaluated for quality and accuracy of information. The authors used the Flesch–Kincaid Grade Level to evaluate readability. Through their study they found that the average readability of the websites corresponded to a grade level of 9.73, which is high for an average consumer. They put forth that the desired reading level should not be higher than the eighth grade level. Paasche-Orlow et al conducted a study to test the readability of informed consent forms provided by institutional review boards.33 They surveyed 114 US medical school websites to evaluate institutional review board readability standards and the informed consent form templates using Flesch–Kincaid Grade Level. The average readability of the templates was at the tenth grade level, two grades higher than the desired level. Paasche-Orlow et al concluded that the institutional review boards’ informed consent forms fall short of their own readability standard, and are not designed based on the local literacy rate. Berland et al evaluated health information on breast cancer, obesity, and childhood asthma available on the internet.34 They focused on English and Spanish language search engines and websites. The authors evaluated the accessibility of 14 websites, quality of 25 websites, and content provided by one search engine. Thirty-four physicians were on the review board and followed a prestructured review list. They evaluated quality, coverage, and accuracy of key clinical elements. The Fry Readability Graph method was used for evaluation. They determined that (1) using search engines to search simple terms was not efficient, (2) the coverage of key information on English and Spanish language websites was poor and inconsistent, (3) the accuracy of key clinical elements was good, and (4) a high reading level was required to comprehend web-based health-related information.

Walsh and Volsko studied the readability of Internet-based health care information about the top five medical related causes of death in the US.35 They studied the websites of the American Heart Association, American Cancer Society, American Lung Association, American Diabetes Association, and American Stroke Association. The authors gathered 100 consumer health information articles and assessed their readability using the SMOG, Gunning Fog, and Flesch–Kincaid Grade Level indices. They determined that Internet-based information was written above the level recommended by the US Department of Health and Human Services. They recommended that health care providers adhere to these standards, which could help improve patient comprehension of health care-related information.

Jukkala et al evaluated the readability of the Clinical Microsystem Assessment Tool.36 The Clinical Microsystem Assessment Tool is used to assess the quality of high-performance health care tools. They used the Flesch Reading Ease index for their evaluation and determined that the tool was very difficult to read. They also conducted an analysis using SMOG and found that 14.71 years of education was required to understand the content. Considering the diversity within the health care staff, they concluded that the Clinical Microsystem Assessment Tool had its limitations and did not necessarily cater to the whole workforce. Pizur-Barnekow et al examined the readability of 94 documents provided by nine agencies that participate in the Birth to Three program.37 The documents used for the study provide information about the nature of the program, financials, intervention planning, and programmatic consent of the families. The Flesch–Kincaid Grade Level, Flesch Reading Ease, and SMOG indices were used for this analysis. The authors determined that a majority of the documents were above the required fifth grade reading level and did not take into account the users’ purpose, interests, and cultural background, which can have an influence on reading ability.

Sampling of readability studies performed for the US CDC

The preceding section presented the work done to evaluate the readability of health care information materials targeted at the general public. In the current section, the focus is on similar readability analyses conducted on health care-related documents published by CDC. Melman et al conducted a study involving 150 parents/caretakers.38 They reported that a majority of the subjects had an inadequate reading level and were not able to comprehend vaccine information pamphlets issued by CDC. Davis et al conducted a study with 522 subjects on the polio vaccine information pamphlets issued by CDC in 1994.39 They reported that the readability level of the pamphlets was higher than desired. It was suggested that the information material be modified to a level equivalent to a third or fourth grade level as opposed to the eighth grade level available then. In a similar study, Davis et al established that it is important to include instructional graphics in addition to simplifying text to make information pamphlets more understandable.40

Lagasse et al assessed the readability of the online information CDC had issued on H1N1/09 influenza.41 They concluded that it was crucial to modify the reading level of information based on the technical know-how of the target population. They also underlined that factors like formatting, font, and layout should be looked into to enhance the readability and accessibility of such material. Sugerman et al conducted a study to assess the readability of material used to communicate emergency health risk information.42 They had 1802 persons respond to a survey. They realized that a majority of the respondents found it difficult to recall, comprehend, and comply with the technical messages presented in the survey as opposed to the nontechnical messages. This suggested that using simple language and words could help improve the readability of health care documents.

Through two trials at an emergency department, Merchant et al compared patient comprehension of rapid human immunodeficiency virus pretest information.43 They compared two methods of information delivery: in-person discussion and a tablet computer-based discussion. “Do you know about rapid human immunodeficiency virus testing?” was the topic of discussion for this test. The content for the discussion was based on material for pretest information as suggested by CDC. Participants were asked to complete a questionnaire. The questionnaires were scored, and the Wilcoxon rank-sum test was used to compare the scores from both types of pretesting. After analyzing the scores, the authors found that the video discussion could substitute for an in-person discussion. Therefore, it is plausible to assume that people find it easier to understand and assess visual information.

CDC’s concussion and traumatic brain injury related publications

CDC is a US federal agency. It is an integral component of the US Department of Health and Human Services.44 On its website, CDC states that it is dedicated to protecting health and promoting quality of life through the prevention and control of disease, injury, and disability. The CDC website is one of the primary ways in which it communicates with the general public. On average, the website gets about 41 million hits monthly. This number goes up to more than a hundred million annually.45 A wide spectrum of users, including health care providers, scientists, researchers, public health professionals, and students, may refer to this resource for health-related information. CDC’s efforts are focused on the following five areas:46

Supporting state and local health departments,

Improving global health,

Implementing measures to decrease leading causes of death,

Strengthening surveillance and epidemiology, and

Reforming health policies.

According to the website, users can find health information on a wide variety of topics. These may include but are not limited to the following:

Data and statistics

Emergencies and disasters

Healthy living

Life stages and populations

Workplace safety and health

Diseases and conditions

Environmental health

Injury, violence, and safety

Travelers’ health.

The topics related to injury, safety, and violence could be further divided into the subtopics listed in Table 1. This paper focuses on the subtopics of concussion and traumatic brain injury.

Table 1.

Subtopics covered under the topic of injury, safety, and violence on the Centers for Disease Control and Prevention website

| Subtopics of injury, safety, and violence | |
|---|---|
| Burns/fire safety | Poisoning |
| Falls/drowning | School health/safety/bullying |
| Hospital visit/field triage | Sexual violence |
| Injury/safety – multitopic | Sports health/safety |
| Intimate partner relationship/violence | Stress management |
| Maltreatment | Suicide |
| Motor vehicle safety | Concussion/traumatic brain injury |
| Online violence | Youth violence/risks |

Research questions

The readability of concussion and traumatic brain injury literature published by CDC has not been assessed adequately. In order to fill in this gap, this research effort tried to answer the following research questions:

What are the mean and standard deviation of the Flesch–Kincaid Grade Level, Flesch Reading Ease, Gunning Fog, Suitability Assessment of Materials (SAM), and SMOG indices for 40 selected concussion and traumatic brain injury publications from the US CDC?

What are the median, mode, and range of the Flesch–Kincaid Grade Level, Flesch Reading Ease, Gunning Fog, SAM, and SMOG indices for 40 selected concussion and traumatic brain injury publications from the US CDC?
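As a minimal sketch of how the statistics named in these research questions could be computed, the following Python snippet uses only the standard library. The function name `describe` and the five sample scores are hypothetical illustrations and are not data from this study. The sketch also assumes the sample (n − 1) form of the standard deviation, which the paper does not specify.

```python
import statistics

def describe(scores):
    """Return the descriptive statistics named in the research questions."""
    return {
        "mean": statistics.mean(scores),
        "median": statistics.median(scores),
        "mode": statistics.mode(scores),
        # Assumption: sample standard deviation (divides by n - 1).
        "sd": statistics.stdev(scores),
        "range": max(scores) - min(scores),
        "min": min(scores),
        "max": max(scores),
    }

# Hypothetical grade-level scores, for illustration only.
example_scores = [6.0, 8.0, 8.0, 10.0, 13.0]
summary = describe(example_scores)
```

The same function could be applied once per index, giving one column of a descriptive table for each readability measure.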

Data collection

The health care literature associated with concussion and traumatic brain injury was collected from the CDC website (http://www.cdc.gov/injuryviolencesafety/) on June 10, 2012, starting with the category titled injury, safety, and violence. This topic consisted of 16 subtopics (Table 1). The study concentrated on the subtopic of concussion and traumatic brain injury. A total of 40 documents associated with this subtopic were downloaded in Adobe® Acrobat™ (.pdf; Adobe Systems Inc, San Jose, CA) format. The count of documents by specific subject is shown in Table 2. The titles of the documents assessed are listed in Appendix A. These documents were then converted into plain text files. Sentences that were fragmented due to the conversion process were realigned by removing extra line breaks and/or white space. No other changes were made to the text.

Table 2.

The number of documents in the health care literature associated with concussion and traumatic brain injury

| Subject | n |
|---|---|
| Concussion | 22 |
| Traumatic brain injury | 18 |
| Total | 40 |
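The realignment step described in the data collection paragraph (removing extra line breaks and white space left by the conversion to plain text) could be sketched as follows. This is an illustration only, not the procedure actually used in the study. The function name `realign_text` is hypothetical, and the sketch assumes that blank lines mark paragraph boundaries in the converted text.

```python
import re

def realign_text(raw):
    """Rejoin sentences that were fragmented by PDF-to-text conversion."""
    # Assumption: one or more blank lines separate paragraphs.
    paragraphs = re.split(r"\n\s*\n", raw)
    cleaned = []
    for para in paragraphs:
        # Collapse line breaks and repeated white space inside a paragraph.
        text = re.sub(r"\s+", " ", para).strip()
        if text:
            cleaned.append(text)
    return "\n\n".join(cleaned)

sample = "A concussion is a type\nof   brain injury.\n\n\nSeek care\nright away."
result = realign_text(sample)
```

Because the words themselves are left untouched, a step of this kind changes only where sentences break, which is what the readability indices depend on.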

Data analysis

Consistent with the studies reviewed in previous sections, and in particular with the recommendations of the National Institutes of Health at the US Department of Health and Human Services,47 the following indices were used to evaluate the 40 documents collected: Flesch–Kincaid Grade Level, Flesch Reading Ease, Gunning Fog, and SMOG.46 These indices were calculated using the Readability Studio™ software (version 2012.0.1) (Oleander Software, Vandalia, OH). The SAM index, however, was calculated manually.

Flesch–Kincaid Grade Level and Flesch Reading Ease index

Calderon et al maintained that Flesch–Kincaid Grade Level and Flesch Reading Ease formulas are most commonly used to assess readability.48 The Flesch–Kincaid Grade Level score rates text on a US school grade level. The Flesch Reading Ease index rates text on a 100-point scale. The higher the score, the easier it is to understand the document. The Flesch–Kincaid Grade Level score is given by (0.39 × ASL) + (11.8 × ASW) − 15.59.49 The Flesch Reading Ease index score is given by 206.835 − (1.015 × ASL) − (84.6 × ASW).49 In the preceding formulas, ASL denotes the number of words divided by the number of sentences and ASW denotes the number of syllables divided by the number of words. The results of the descriptive analyses associated with Flesch–Kincaid Grade Level and Flesch Reading Ease index ratings of the 40 selected documents are presented in Table 3.

Table 3.

Descriptive analysis associated with the Flesch–Kincaid Grade Level and Flesch Reading Ease indices

| Statistic | Flesch–Kincaid Grade Level | Flesch Reading Ease |
|---|---|---|
| Mean | 11.1 | 49.5 |
| Median | 11.6 | 48.0 |
| Mode | 7.4 | 35.0 |
| Standard deviation | 3.9 | 17.4 |
| Range | 17.4 | 70.0 |
| Minimum | 2.6 | 20.0 |
| Maximum | 20.0 | 90.0 |

It was found that the mean Flesch–Kincaid Grade Level for the 40 documents assessed was 11.1, standard deviation was 3.9, median was 11.6, mode was 7.4, and range was 17.4. It was found that the average Flesch Reading Ease index for the 40 documents was 49.5, standard deviation was 17.4, median was 48.0, mode was 35.0, and range was 70.0.
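The two Flesch formulas given in this section can be written as a short Python sketch. The function names and the word, sentence, and syllable counts in the example are hypothetical; counting syllables reliably is a separate problem that dedicated software such as Readability Studio handles and that is not attempted here.

```python
def flesch_kincaid_grade(words, sentences, syllables):
    """Flesch-Kincaid Grade Level: (0.39 x ASL) + (11.8 x ASW) - 15.59."""
    asl = words / sentences   # average sentence length (words per sentence)
    asw = syllables / words   # average syllables per word
    return 0.39 * asl + 11.8 * asw - 15.59

def flesch_reading_ease(words, sentences, syllables):
    """Flesch Reading Ease: 206.835 - (1.015 x ASL) - (84.6 x ASW)."""
    asl = words / sentences
    asw = syllables / words
    return 206.835 - 1.015 * asl - 84.6 * asw

# Hypothetical passage: 100 words, 5 sentences, 150 syllables.
grade = flesch_kincaid_grade(100, 5, 150)
ease = flesch_reading_ease(100, 5, 150)
```

For this hypothetical passage, ASL is 20 and ASW is 1.5, so the grade level works out to about 9.9 and the reading ease to about 59.6. The two scores move in opposite directions: longer sentences and longer words raise the grade level and lower the reading ease.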

Gunning Fog index

The Gunning Fog index was the first, and is now a well-known, readability indicator.50 The index correlates certain quantifiable aspects of a text with its grade level.50 Most people are comfortable reading two grades below their highest attained grade.50 The Gunning Fog index grade level is given by:51

$$\text{Gunning Fog grade level} = 0.4 \left[ \frac{w}{s} + 100 \cdot \frac{w_3}{w} \right]$$

In this equation, $w$ is the number of words, $w_3$ is the number of words with at least three syllables, and $s$ is the number of sentences. The results of the descriptive analysis associated with the Gunning Fog index are presented in Table 4.

Table 4.

Descriptive analysis associated with the Gunning Fog index

| Statistic | Gunning Fog |
|---|---|
| Mean | 11.3 |
| Median | 11.0 |
| Mode | 9.6 |
| Standard deviation | 3.1 |
| Range | 12.3 |
| Minimum | 5.0 |
| Maximum | 17.3 |

It was found that the mean Gunning Fog index for the 40 documents assessed was 11.3, standard deviation was 3.1, median was 11.0, mode was 9.6, and range was 12.3.
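A minimal Python sketch of the Gunning Fog calculation follows. It assumes the standard published form of the index, 0.4 × [(w / s) + 100 × (w3 / w)], where w is the number of words, w3 the number of words with at least three syllables, and s the number of sentences. The function name and the example counts are hypothetical.

```python
def gunning_fog(words, complex_words, sentences):
    """Gunning Fog grade level from word, complex-word, and sentence counts."""
    average_sentence_length = words / sentences
    percent_complex = 100 * (complex_words / words)
    return 0.4 * (average_sentence_length + percent_complex)

# Hypothetical passage: 100 words, 10 of them with three or more syllables,
# spread over 5 sentences.
fog = gunning_fog(words=100, complex_words=10, sentences=5)
```

With these counts, the average sentence length is 20 and 10% of the words are complex, giving a grade level of about 12.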

SAM

The SAM index assesses not only the readability, but also the usability and suitability of written materials. To compute the SAM index, a written material is evaluated based on 22 factors on a scale ranging from zero to three. The overall rating based on the 22 factors is calculated in percent. Materials with an overall rating of 70%–100% are deemed superior. Materials with an overall rating of 40%–69% are deemed adequate. Materials with an overall rating of 0%–39% are deemed not suitable. The 22 factors and the associated scoring sheet are copyrighted material. However, additional details associated with calculating the SAM index can be found in the work of Doak et al.14 The results of the descriptive analysis associated with the SAM index are presented in Table 5.

Table 5.

Descriptive analysis associated with the Suitability Assessment of Materials index

| Statistic | Suitability Assessment of Materials |
|---|---|
| Mean | 72.2% |
| Median | 72.0% |
| Mode | 72.0% |
| Standard deviation | 6.0% |
| Range | 23.0% |
| Minimum | 61.0% |
| Maximum | 84.0% |

The mean SAM index for the 40 documents assessed was 72.2% (standard deviation 6.0%, median 72.0%, mode 72.0%, range 23.0%).
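The SAM rating bands can be expressed as a small Python sketch. It only maps an overall percentage to the three categories described in the text. It does not reproduce the copyrighted 22-factor scoring sheet. The function name `classify_sam` is hypothetical, and treating any value of at least 70 as superior and any value of at least 40 as adequate is an assumption about how fractional percentages fall between the stated bands.

```python
def classify_sam(percent: float) -> str:
    """Map an overall SAM rating (in percent) to its suitability category."""
    if not 0 <= percent <= 100:
        raise ValueError("SAM rating must be between 0 and 100 percent")
    if percent >= 70:
        return "superior"
    if percent >= 40:
        return "adequate"
    return "not suitable"


# Summary statistics reported in Table 5.
reported = {"minimum": 61.0, "mean": 72.2, "median": 72.0, "maximum": 84.0}
ratings = {name: classify_sam(value) for name, value in reported.items()}

for name, category in ratings.items():
    print(f"{name}: {reported[name]}% -> {category}")
```

Applied to the reported statistics, the mean and median fall in the superior band while the minimum falls in the adequate band.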

SMOG

The SMOG index estimates the years of education needed to fully understand a piece of writing.52 SMOG is widely used, particularly for checking health messages. To calculate SMOG for a document with more than 30 sentences, count the words of three or more syllables (polysyllables) in three ten-sentence samples from the text. SMOG grade level is given by:53

$$\text{SMOG grade level} = 3 + \sqrt{p_s}$$

In cases where there are fewer than 30 sentences, SMOG grade level is given by:53

$$\text{SMOG grade level} = 3 + \sqrt{p + p_a\,(30 - s)}$$

In these equations, $p_s$ is the number of polysyllables in a sample of 30 sentences, $p_a$ is the average number of polysyllabic words per sentence, $p$ is the total number of polysyllables, and $s$ is the total number of sentences. To calculate SMOG, all abbreviations must be expanded and all numbers pronounced in full to determine whether they are polysyllabic. The results of the descriptive analysis associated with the SMOG index are presented in Table 6.

Table 6.

Descriptive analysis associated with the Simple Measure of Gobbledygook index

| Statistic | Simple Measure of Gobbledygook |
|---|---|
| Mean | 12.8 |
| Median | 13.0 |
| Mode | 14.4 |
| Standard deviation | 2.5 |
| Range | 10.4 |
| Minimum | 7.6 |
| Maximum | 18.0 |

The mean SMOG index for the 40 documents assessed was 12.8 (standard deviation 2.5, median 13.0, mode 14.4, range 10.4).
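The two SMOG cases can be sketched in Python as follows. The sketch assumes the commonly used simplified form of the formula: the grade level is 3 plus the square root of the polysyllable count in a 30-sentence sample. For texts with fewer than 30 sentences, it assumes the count is first increased by the average number of polysyllables per sentence multiplied by the number of sentences short of 30. The function names and example counts are hypothetical, and the precise constants used in the study may differ.

```python
import math


def smog_from_sample(polysyllables_in_sample: int) -> float:
    """SMOG grade for a 30-sentence sample (simplified form, assumed)."""
    if polysyllables_in_sample < 0:
        raise ValueError("polysyllable count cannot be negative")
    return 3 + math.sqrt(polysyllables_in_sample)


def smog_short_text(polysyllables: int, sentences: int) -> float:
    """SMOG grade for a text with fewer than 30 sentences.

    The polysyllable count is scaled up to a 30-sentence equivalent by adding
    (average polysyllables per sentence) * (sentences short of 30).
    """
    if not 0 < sentences < 30:
        raise ValueError("use smog_from_sample for texts of 30 or more sentences")
    if polysyllables < 0:
        raise ValueError("polysyllable count cannot be negative")
    average_per_sentence = polysyllables / sentences
    adjusted_count = polysyllables + average_per_sentence * (30 - sentences)
    return 3 + math.sqrt(adjusted_count)


# Hypothetical counts, not taken from the documents assessed.
full_sample_grade = smog_from_sample(100)
short_text_grade = smog_short_text(polysyllables=50, sentences=15)
print(f"{full_sample_grade:.1f} {short_text_grade:.1f}")
```

In this sketch, a short text with the same density of polysyllables per sentence as a 30-sentence sample receives the same grade, because the adjustment extrapolates the count to 30 sentences.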

Discussion

The mean ratings of the Flesch–Kincaid Grade Level, Gunning Fog, and SMOG indices indicate that reading the documents assessed requires more than an eleventh grade education. The mean rating of the Flesch Reading Ease index showed that the documents assessed were difficult to read. The median and mode of the ratings associated with the indices also confirmed these appraisals. These findings are consistent with those of many studies included in the “Readability studies of health care literature” section above. Furthermore, the wide ranges of these indices showed that some documents required an advanced reader, whereas others could be read by readers with only rudimentary skills. Several authors state that the average American reads at an eighth or ninth grade level.54–56 Furthermore, Badarudeen and Sabharwal reported that several health care organizations have recommended that the readability of patient education materials be no higher than a sixth to eighth grade level.31 Hence, the readability of the documents assessed is not adequate and needs to be improved.
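The size of the gap between the mean grade levels and the recommended ceiling can be made explicit with a short Python sketch. The mean values are those reported for the three grade-level indices, and the eighth grade target is the upper end of the recommendation cited above. The variable and function names are hypothetical.

```python
# Upper end of the sixth-to-eighth grade recommendation cited in the text.
RECOMMENDED_MAX_GRADE = 8.0

# Mean grade levels reported for the 40 documents assessed.
mean_grade_levels = {
    "Flesch-Kincaid Grade Level": 11.1,
    "Gunning Fog": 11.3,
    "SMOG": 12.8,
}


def grades_above_target(mean_grades, target=RECOMMENDED_MAX_GRADE):
    """Return how many grade levels each mean exceeds the target by."""
    return {name: round(grade - target, 1) for name, grade in mean_grades.items()}


gaps = grades_above_target(mean_grade_levels)
for name, gap in gaps.items():
    print(f"{name}: {gap} grade levels above the recommended maximum")
```

Even against the most lenient end of the recommendation, each mean exceeds the target by at least three grade levels.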

The mean, median, and mode associated with the SAM index show that the documents assessed were superior in terms of usability and suitability. The lowest SAM rating was 61%. Hence, it can be concluded that the documents assessed were at least adequate in terms of usability and suitability. This conclusion presents a paradox: although the documents assessed were found not to be very readable, they were very usable. A plausible explanation for this paradox could be that while the text included in these documents was relatively hard to read, the layout, graphics/illustrations, and format made them very usable. In order to test this hypothesis, future research should try to evaluate and compare readability and usability for other types of documents from various sources. Lastly, the SAM index should be used in conjunction with the various other indices to assess both usability and readability of health care-related documents.

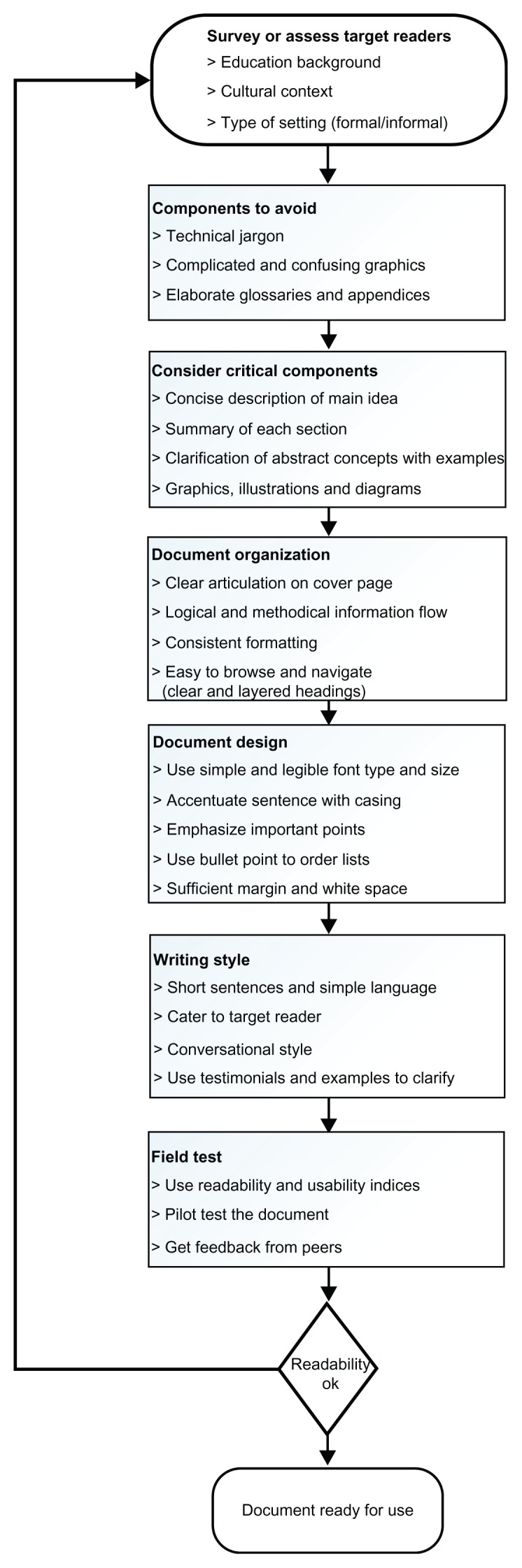

Based on the literature review and analyses of the 40 documents assessed for this study, recommendations were formulated to improve the readability, usability, and suitability of health care-related documents geared towards the general public. These recommendations have been supplemented by guidelines from the National Institutes of Health, Doak et al, Reinhard et al, and McKinney and Kurtz-Rossi.14,47,57,58 Figure 1 presents these recommendations in the form of an incremental improvement process cycle. The diagram can be used as a step-by-step checklist for designing health care information materials for a general public with varied reading levels, and it also provides a methodology for improving the readability of existing health care-related documents. Additionally, the recommendations were compiled into a list of dos and don’ts, which is presented in Table 7.

Figure 1.

Recommendations to improve the readability, usability, and suitability of health care-related documents geared towards the general public.

Table 7.

Dos and don’ts for improving the readability, usability, and suitability of health care-related documents geared towards the general public

| Dos | Don’ts |
|---|---|
| ✓ Assess target readers | ✘ Use technical and profession-specific terms |
| ✓ Think and write like the target readers | ✘ Use images that are unclear and will not photocopy or print well |
| ✓ Convey central focus of the document to the reader | ✘ Use complicated illustrations |
| ✓ Adopt methods to effectively provide concise information, eg, summaries and lists | ✘ Use fancy fonts |
| ✓ Use examples | ✘ Cram information together with no white space |
| ✓ Include graphics: diagrams, graphs, and illustrations pertinent to the audience | ✘ Capitalize all the information |
| ✓ Use techniques to grab the readers’ attention, eg, images, diagrams, text emphasis, headers | |
| ✓ Use techniques to emphasize important information | |
| ✓ Provide relevant definitions | |
| ✓ Expand on abbreviations | |
| ✓ Design a well-articulated cover/title page | |
| ✓ Provide methodical explanations | |
| ✓ Make the document easy to navigate through | |
| ✓ Make the document easy to read by using simple terms, short sentences, legible font, appropriate font size, adequate white space, and margins | |
| ✓ Always field test the document | |

Conclusion

This study highlights the importance of evaluating the readability of health care-related literature geared towards the general public. The varying levels of health literacy, and the significant portion of the general population at or below the basic literacy level, reinforce the need for such studies. The recommendations based on the literature review and data analyses can be used to improve the readability, usability, and suitability of health care-related literature.

Appendix A.

Titles of documents assessed

Heads Up: Concussion in Youth Sports – A Fact Sheet for Athletes

Heads Up: Concussion in High School Sports – A Fact Sheet for Athletes

Heads Up: Concussion in Youth Sports – Signs and Symptoms and Action Plan

Heads Up: Concussion in High School Sports – Signs and Symptoms and Action Plan

Heads Up: Concussion in Youth Sports – A Fact Sheet for Coaches

Concussion in Sports

Heads Up to Schools: Know Your Concussion ABCs

Heads Up: Preventing Concussion

Heads Up: Concussion in Youth Sports – What Should You Do

Concussion – Main Message Poster

Parent/Athlete Concussion Information Sheet

Heads Up: Concussion in Youth Sports – A Fact Sheet for Parents

Heads Up: Concussion in High School Sports – A Fact Sheet for Parents

Heads Up: Concussion in Youth Sports – Signs and Symptoms

A Quiz For Coaches, Athletes, and Parents

Concussion – Signs Symptoms Poster

Heads Up: Concussion in High School Sports – Wallet Card

Check for Safety – A Home Fall Prevention Checklist for Older Adults

What You Can Do to Prevent Falls

Protect the Ones You Love – Falls

What You Can Do To Prevent Falls: Have Your Vision Checked

Home and Community – Fall Prevention Strategies

Sports, Recreation, and Exercise

Get the Stats on Traumatic Brain Injury in the United States

Facts about Concussion and Brain Injury

Preventing Traumatic Brain Injury

Preventing Traumatic Brain Injury in Older Adults – Information for Family Members and Other Caregivers

Preventing Traumatic Brain Injury in Older Adults

Help Seniors Live Better, Longer: Prevent Brain Injury

Help Seniors Live Better, Longer: Prevent Brain Injury – Signs and Symptoms

Signs and Symptoms of Traumatic Brain Injury

Help Seniors Live Better, Longer: Prevent Brain Injury – Key Facts

What is a Concussion?

Concussion: A Fact Sheet for Teachers, Counselors, and School Professionals

Concussion: A Fact Sheet for Parents

Returning to School After a Concussion

Concussion Signs and Symptoms Checklist

Signs and Symptoms of a Concussion

Traumatic Brain Injury

Victimization of Persons with Traumatic Brain Injury or Other Disabilities

Disclosure

The authors report no conflicts of interest in this work.

References

- 1. O’Reilly K. American Medical News. The ABCs of health literacy. Mar 19, 2012. [Accessed July 21, 2012]. Available from: http://www.ama-assn.org/amednews/2012/03/19/prsa0319.htm.

- 2. Schneider JM. Health literacy – matching patients to the right materials. J Hosp Librariansh. 2006;6(2):99–105.

- 3. MedlinePlus. Health literacy. Dec 21, 2011. [Accessed July 20, 2012]. Available from: http://www.nlm.nih.gov/medlineplus/healthliteracy.html.

- 4. HealthyPeople.gov. Health communication and health information technology. 2012. [Accessed July 20, 2012]. Available from: http://www.healthypeople.gov/2020/topicsobjectives2020/objectiveslist.aspx?topicid=18.

- 5. Naidu A. Health literacy. Whitireia Nurs J. 2008;(15):39–46.

- 6. Lauder B, Gabel-Jorgensen N. Health literacy and mortality among elderly persons. Home Healthcare Nurse. 2008;26(4):253–254.

- 7. Osborn CY, Paasche-Orlow MK, Davis TC, Wolf MS. Health literacy: an overlooked factor in understanding HIV health disparities. Am J Prev Med. 2007;33(5):374–378. doi: 10.1016/j.amepre.2007.07.022.

- 8. Hay L. In practice. Perspect Public Health. 2009;129(4):156. doi: 10.1177/17579139091290040305.

- 9. Davis TC, Mayeaux EJ, Fredrickson D, Bocchini JA Jr, Jackson RH, Murphy PW. Reading ability of parents compared with reading level of pediatric patient education materials. Pediatrics. 1994;93(3):460–468.

- 10. Kirsch IS, Jungeblut A, Jenkins L, Kolstad A. Adult Literacy in America: A First Look at the Findings of the National Adult Literacy Survey. 3rd ed. Washington, DC: Department of Education; 2002.

- 11. Parker RM, Ratzan SC, Lurie N. Health literacy: a policy challenge for advancing high-quality health care. Health Aff (Millwood). 2003;22(4):147–153. doi: 10.1377/hlthaff.22.4.147.

- 12. Williams MV, Davis T, Parker RM, Weiss BD. The role of health literacy in patient-physician communication. Fam Med. 2002;34(5):383–389.

- 13. McCray AT. Promoting health literacy. J Am Med Inform Assoc. 2005;12(2):152–163. doi: 10.1197/jamia.M1687.

- 14. Doak CC, Doak LG, Root JH. Teaching Patients with Low Literacy Skills. 2nd ed. Philadelphia, PA: JB Lippincott; 1996.

- 15. Williams MV, Parker RM, Baker DW, et al. Inadequate functional health literacy among patients at two public hospitals. JAMA. 1995;274(21):1677–1682.

- 16. Gazmararian JA, Baker DW, Williams MV, et al. Health literacy among Medicare enrollees in a managed care organization. JAMA. 1999;281(6):545–551. doi: 10.1001/jama.281.6.545.

- 17. Hendrickson RL, Huebner CE, Riedy CA. Readability of pediatric health materials for preventive dental care. BMC Oral Health. 2006;6(1):14. doi: 10.1186/1472-6831-6-14.

- 18. Nicoll A, Harrison C. The readability of health-care literature. Dev Med Child Neurol. 1984;26(5):596–600. doi: 10.1111/j.1469-8749.1984.tb04497.x.

- 19. Oates MS, Oates RK. Readability of health care literature. Aust Paediatr J. 1989;25(1):35–38. doi: 10.1111/j.1440-1754.1989.tb01410.x.

- 20. Herreid CF. The way of Flesch: the art of writing readable cases. J Coll Sci Teach. 2002;31(5):288–291.

- 21. Murphy J, Gamble G, Sharpe N. Readability of subject information leaflets for medical research. N Z Med J. 1994;107(991):509–510.

- 22. Wong IC. Readability of patient information leaflets on antiepileptic drugs in the UK. Seizure. 1999;8(1):35–37. doi: 10.1053/seiz.1998.0220.

- 23. Amini H, Casamassimo PS, Lin HL, Hayes JR. Readability of the American Academy of Pediatric Dentistry patient education materials. Pediatr Dent. 2007;29(5):431–435.

- 24. Grossman SA, Piantadosi S, Covahey C. Are informed consent forms that describe clinical oncology research protocols readable by most patients and their families? J Clin Oncol. 1994;12(10):2211–2215. doi: 10.1200/JCO.1994.12.10.2211.

- 25. Mumford ME. A descriptive study of the readability of patient information leaflets designed by nurses. J Adv Nurs. 1997;26(5):985–991. doi: 10.1046/j.1365-2648.1997.00455.x.

- 26. Clauson KA, Zeng-Treitler Q, Kandula S. Readability of patient and health care professional targeted dietary supplement leaflets used for diabetes and chronic fatigue syndrome. J Altern Complement Med. 2010;16(1):119–124. doi: 10.1089/acm.2008.0611.

- 27. Collins N, Novotny NL, Light A. A cross-section of readability of Health Information Portability and Accountability Act authorizations required with health care research. J Allied Health. 2006;35(4):223–225.

- 28. Osborne H, Hochhauser M. Readability and comprehension of the introduction to the Massachusetts Health Care Proxy. Hosp Top. 1999;77(4):4–6. doi: 10.1080/00185869909596531.

- 29. MedlinePlus. Advance directives. May 11, 2007. [Accessed August 15, 2012]. Available from: http://www.nlm.nih.gov/medlineplus/advancedirectives.html.

- 30. Breese P, Burman W. Readability of notice of privacy forms used by major health care institutions. JAMA. 2005;293(13):1593–1594. doi: 10.1001/jama.293.13.1593.

- 31. Badarudeen S, Sabharwal S. Assessing readability of patient education materials: current role in orthopaedics. Clin Orthop Relat Res. 2010;468(10):2572–2580. doi: 10.1007/s11999-010-1380-y.

- 32. Banasiak N, Meadows-Oliver M. Readability of asthma web sites. J Pediatr Health Care. 2011;25(5):e24.

- 33. Paasche-Orlow MK, Taylor HA, Brancati FL. Readability standards for informed-consent forms as compared with actual readability. New Engl J Med. 2003;348(8):721–726. doi: 10.1056/NEJMsa021212.

- 34. Berland GK, Elliott MN, Morales LS, et al. Health information on the Internet: accessibility, quality, and readability in English and Spanish. JAMA. 2001;285(20):2612–2621. doi: 10.1001/jama.285.20.2612.

- 35. Walsh TM, Volsko TA. Readability assessment of internet-based consumer health information. Respir Care. 2008;53(10):1310–1315.

- 36. Jukkala AM, Patrician PA, Northen A, Block V. Readability and usefulness of the Clinical Microsystem Assessment Tool. J Nurs Care Qual. 2011;26(2):186–191. doi: 10.1097/NCQ.0b013e318209fa2f.

- 37. Pizur-Barnekow K, Patrick T, Rhyner PM, Cashin S, Rentmeester A. Readability of early intervention program literature. Topics Early Child Spec Educ. 2011;31(1):58–64.

- 38. Melman ST, Kaplan JM, Caloustian ML, Weinberger JA, Smith J, Anbar RD. Readability of the childhood immunization information forms. Arch Pediatr Adolesc Med. 1994;148(6):642–644. doi: 10.1001/archpedi.1994.02170060096019.

- 39. Davis TC, Bocchini JA Jr, Fredrickson D, et al. Parent comprehension of polio vaccine information pamphlets. Pediatrics. 1996;97(6 Pt 1):804–810.

- 40. Davis TC, Fredrickson DD, Arnold C, Murphy PW, Herbst M, Bocchini JA. A polio immunization pamphlet with increased appeal and simplified language does not improve comprehension to an acceptable level. Patient Educ Couns. 1998;33(1):25–37. doi: 10.1016/s0738-3991(97)00053-0.

- 41. Lagasse LP, Rimal RN, Smith KC, et al. How accessible was information about H1N1 flu? Literacy assessments of CDC guidance documents for different audiences. PLoS One. 2011;6(10):e23583. doi: 10.1371/journal.pone.0023583.

- 42. Sugerman DE, Keir JM, Dee DL, et al. Emergency health risk communication during the 2007 San Diego wildfires: comprehension, compliance, and recall. J Health Commun. 2012;17(6):698–712. doi: 10.1080/10810730.2011.635777.

- 43. Merchant RC, Gee EM, Clark MA, Mayer KH, Seage GR 3rd, Degruttola VG. Comparison of patient comprehension of rapid HIV pre-test fundamentals by information delivery format in an emergency department setting. BMC Public Health. 2007;7:238. doi: 10.1186/1471-2458-7-238.

- 44. Centers for Disease Control and Prevention. About CDC. Jun 4, 2012. [Accessed July 21, 2012]. Available from: http://www.cdc.gov/about/

- 45. Centers for Disease Control and Prevention. About CDC.gov. Dec 5, 2011. [Accessed July 21, 2012]. Available from: http://www.cdc.gov/Other/about_cdcgov.html.

- 46. CDC. CDC – About CDC: our history, our story. Jan 11, 2010. [Accessed July 24, 2012]. Available from: http://www.cdc.gov/about/history/ourstory.htm.

- 47. MedlinePlus. How to write easy-to-read health materials. Jul 11, 2011. [Accessed July 20, 2012]. Available from: http://www.nlm.nih.gov/medlineplus/etr.html.

- 48. Calderon JL, Morales LS, Liu H, Hays RD. Variation in the readability of items within surveys. Am J Med Qual. 2006;21(1):49–56. doi: 10.1177/1062860605283572.

- 49. Terblanche M, Burgess L. Examining the readability of patient-informed consent forms. Open Access J Clin Trials. 2010;2010:157–162.

- 50. Herman S. The index of gunning. Global Cosmetic Industry. 2000;167(1):34–35.

- 51. Sparks BI 3rd. Readability of ophthalmic literature. Optom Vis Sci. 1993;70(2):127–130. doi: 10.1097/00006324-199302000-00008.

- 52. McLaughlin GH. SMOG grading – a new readability formula. J Reading. 1969;12:639–646.

- 53. Nore GWE. Clear Lines: How to Compose and Design Clear Language Documents for the Workplace. Toronto: Frontier College; 1991.

- 54. Wilson JF. The crucial link between literacy and health. Ann Intern Med. 2003;139(10):875–878. doi: 10.7326/0003-4819-139-10-200311180-00038.

- 55. Kasabwala K, Agarwal N, Hansberry DR, Baredes S, Eloy JA. Readability assessment of patient education materials from the American Academy of Otolaryngology – Head and Neck Surgery Foundation. Otolaryngol Head Neck Surg. 2012;147(3):466–471. doi: 10.1177/0194599812442783.

- 56. Wilson M. Readability and patient education materials used for low-income populations. Clin Nurse Spec. 2009;23(1):33–40. doi: 10.1097/01.NUR.0000343079.50214.31.

- 57. Reinhard SC, Scala MA, Stone R. Writing easy-to-read materials. Issue Brief Cent Medicare Educ. 2007;1(2):1–4.

- 58. McKinney J, Kurtz-Rossi S. Family Health and Literacy: A Guide to Easy-to-Read Health Education Materials and Web Sites for Families. Boston, MA: World Education; 2006.