Abstract

Two auditory lexical decision experiments were conducted to determine whether facilitation can be obtained when a prime and a target share word-initial phonological information. Subjects responded “word” or “nonword” to monosyllabic words and nonwords controlled for frequency. Each target was preceded by the presentation of either a word or nonword prime that was identical to the target or shared three, two, or one phonemes from the beginning. The results showed that lexical decision times decreased when the prime and target were identical for both word and nonword targets. However, no facilitation was observed when the prime and target shared three, two, or one initial phonemes. These results were found when the interstimulus interval between the prime and target was 500 msec or 50 msec. In a second experiment, no differences were found between primes and targets that shared three, one, or zero phonemes, although facilitation was observed for identical prime-target pairs. The results are compared to recent findings obtained using a perceptual identification paradigm. Taken together, the findings suggest several important differences in the way lexical decision and perceptual identification tasks tap into the information-processing system during auditory word recognition.

Researchers concerned with issues in word recognition and lexical access have relied on the lexical decision paradigm to answer a number of fundamental questions about the representation of words in memory and the processes used to contact these representations in language processing. This paradigm requires subjects to determine as quickly as possible whether a stimulus item is a word or a nonword. Early research using lexical decision examined structural effects of visually presented lexical items on the speed of classifying these items as words or nonwords (Snodgrass & Jarvella, 1972; Stanners & Forbach, 1973; Stanners, Forbach, & Headley, 1971). In other research, the lexical decision task has been used to investigate the effects of frequency on classification time (Rubenstein, Garfield, & Millikan, 1970; Rubenstein, Lewis, & Rubenstein, 1971; Stanners, Jastrzembski, & Westbrook, 1975) and the status of morphologically related items in memory (Stanners, Neiser, Hernon, & Hall, 1979; Stanners, Neiser, & Painton, 1979; Taft & Forster, 1975, 1976).

The basic design of the paradigm has also been extended to examine the priming effects of associated items on lexical decision times. Meyer and Schvaneveldt (1971) found that subjects were faster at classifying a letter string (e.g., DOCTOR) as a word if the preceding letter string was an associated word (e.g., NURSE) than if the preceding letter string was an unassociated word (e.g., BUTTER). Since Meyer and Schvaneveldt’s original study, priming in the lexical decision paradigm has been used to test models of semantic facilitation (Antos, 1979; Norris, 1984; O’Connor & Forster, 1981), as well as to study processes involved in word recognition and lexical access (Chambers, 1979; Meyer, Schvaneveldt, & Ruddy, 1975; Schuberth & Eimas, 1977; Schvaneveldt, Meyer, & Becker, 1976; Shulman, Hornak, & Sanders, 1978).

Research examining priming effects in the lexical decision task has primarily been concerned with items that are semantically associated. Moreover, most of the research has been conducted using visually presented stimuli. Such priming effects are assumed to reflect semantic facilitation resulting from some form of association between two related items (but see James, 1975, and Shulman & Davison, 1977, regarding the role of semantic information in lexical decision). Several studies, however, have observed facilitation for phonemically and orthographically related words (Hillinger, 1980; Jakimik, Cole, & Rudnicky, 1985; Meyer, Schvaneveldt, & Ruddy, 1974), suggesting that priming in lexical decision encompasses more than semantic associations between items. Using pairs of words that rhyme (e.g., BRIBE-TRIBE), Meyer et al. (1974) and Hillinger (1980) found that visual lexical decisions were facilitated when the words were phonemically similar. Meyer et al. (1974) presented stimulus items visually and found that subjects responded more rapidly to word pairs that were similar both graphemically and phonemically (BRIBE-TRIBE) than to control pairs (BREAK-DITCH). In addition, these researchers observed slower responses when the pairs shared only graphemic similarity (TOUCH-COUCH). Hillinger (1980) also reported rhyming facilitation when the first item in the pair was presented auditorily and when the rhymes were graphemically dissimilar (EIGHT-MATE). Based on these findings, Hillinger argued that rhyming facilitation is a result of spreading activation between entries in a physical as opposed to a semantic access file.

A number of researchers have suggested that the lexical decision task involves considerable postaccess processing (see Balota & Chumbley, 1984; Jakimik et al., 1985). Jakimik et al. (1985) reported a study in which subjects made a lexical decision to spoken one-syllable stimulus items. Jakimik et al. were interested in examining the effects of orthographic similarity on lexical decisions. In their experiments, subjects made lexical decisions to spoken monosyllabic targets preceded by a prime that was: (1) phonologically and orthographically related (mess preceded by message), (2) only phonologically related (deaf preceded by definite), (3) only orthographically related (fig preceded by fighter), or (4) unrelated (pill preceded by blanket). Jakimik et al. found that lexical decisions to monosyllabic words and nonwords were facilitated only when the preceding polysyllabic words were related both phonologically and orthographically. These researchers concluded that spelling plays a role in lexical decision. Furthermore, they argued that lexical decision may involve substantial postrecognition processing since information from the lexicon (e.g., spelling) affects lexical decision time. The conclusion that lexical decisions reflect postrecognition processes has a number of implications for the use of the lexical decision task in studying the course of word recognition processes. Moreover, this conclusion is consistent with questions raised by other researchers concerning the specific nature of facilitation effects obtained with this task (Balota & Chumbley, 1984; Fischler, 1977; Kiger & Glass, 1983; Neely, 1976, 1977a, 1977b).

Facilitation obtained for two items that are phonetically or phonologically related can be explained in terms of a theory of word recognition developed by Marslen-Wilson and Welsh (1978). Cohort theory (Marslen-Wilson & Tyler, 1980; Marslen-Wilson & Welsh, 1978) proposes that a “cohort” of all the words beginning with a particular acoustic-phonetic sequence will be activated during the initial stage of the word recognition process. Members of this “word-initial cohort” are then deactivated by an interaction of top-down knowledge and continued bottom-up processing of acoustic-phonetic information until only the word to be recognized remains activated.

Recently, Slowiaczek, Nusbaum, and Pisoni (1985) described a model developed to formalize the time course of cohort activation in cohort theory. The model they developed (MACS) suggests the way in which the acoustic-phonetic representation of a prime word could facilitate recognition of a target word. Specifically, the process of matching encoded sensory information to lexical representations is described in MACS. The model assumes that words are recognized one phoneme at a time in left-to-right sequence from the beginning of the stimulus. According to the model, the spoken stimulus activates phoneme units that serve as input to word units. Words are processing units that accumulate activation over time from the phoneme inputs. Word units can be in one of three activation states. First, word units are activated when a match exists between the currently activated phoneme unit and the word unit. Second, a word unit is deactivated if the currently activated phoneme unit is not part of that word unit. Finally, at the end of a stimulus, when no phoneme units are activated, activation of word units decays at a constant rate.

At the end of a stimulus, the amount of residual activation that remains for a given candidate in the cohort depends on the point at which the word candidate was deactivated. Moreover, for isolated word recognition, the deactivation point is dependent on the amount of phonological overlap that exists between the input stimulus and that cohort candidate. Because of this residual activation, one might expect to find facilitation in an auditory lexical decision task in which a target item is preceded by a stimulus that shares word-initial phonological information with the target (i.e., plan-pride, prone-pride, price-pride). Specifically, targets that share word-initial phonological information with preceding primes are assumed to be included in the word-initial cohort that is activated during recognition of the prime. Under these circumstances, the target is activated and deactivated while the prime is being processed. The amount of residual activation remaining for the target item, as a result of processing the prime, should depend on the amount of phonological overlap between the prime and target, and this residual activation could subsequently facilitate target processing.
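The prediction described above can be illustrated with a minimal sketch. It is not the MACS implementation: the function names, the `gain` and `decay` values, and the phoneme transcriptions are assumptions chosen for illustration, and the sketch simplifies the model by letting a word unit stop accumulating at the first mismatch and decay linearly only after the prime has ended.

```python
def shared_initial_phonemes(prime, target):
    """Count phonemes shared from the beginning, stopping at the first mismatch."""
    count = 0
    for p, t in zip(prime, target):
        if p != t:
            break
        count += 1
    return count


def residual_activation(prime, target, gain=1.0, decay=0.1, isi_steps=0):
    """Activation left on the target's word unit after the prime is processed.

    gain, decay, and isi_steps are hypothetical values, not MACS parameters.
    """
    # The target unit accumulates activation for each matching phoneme and
    # stops accumulating at the first mismatch (its deactivation point).
    activation = gain * shared_initial_phonemes(prime, target)
    # After the stimulus ends, activation decays at a constant rate.
    return max(0.0, activation - decay * isi_steps)


# Assumed transcriptions for the examples in the text (target: pride).
PRIDE = ["p", "r", "aɪ", "d"]
primes = {
    "plan": ["p", "l", "æ", "n"],
    "prone": ["p", "r", "oʊ", "n"],
    "price": ["p", "r", "aɪ", "s"],
    "pride": ["p", "r", "aɪ", "d"],
}
residuals = {
    name: residual_activation(seq, PRIDE, isi_steps=5)
    for name, seq in primes.items()
}
```

Under these assumptions, residual activation for the target grows with the number of shared initial phonemes, which is the ordering that the facilitation prediction relies on. With a long enough delay, the residual reaches zero regardless of overlap.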

Experiment 1 was designed to determine whether phonological priming can be obtained in an auditory lexical decision task when the prime and target share word-initial phonological information. The model of cohort activation (Slowiaczek et al., 1985) suggests that reaction time to a target should be facilitated when the target item is preceded by a prime that shares phonological information. Moreover, as the overlap between phonological information in the prime and the target increases, the amount of facilitation observed in classifying the target as a word or a nonword should increase.

In order to test these predictions, we presented subjects with pairs of items that were phonologically related at the beginning. Specifically, the primes in the present study were words and nonwords that were related to target words and nonwords in one of the following ways: (1) prime was identical, (2) first, second, and third phonemes were the same, (3) first and second phonemes were the same, or (4) first phonemes were the same. In the identical-prime condition, we predicted that the prime should facilitate recognition of the target item. With respect to the shared-phoneme conditions, if overlap between the phonemes at the beginning of the prime and target results in higher levels of residual activation, then we would expect to find facilitation of the target for each of the shared-phoneme conditions. The condition sharing three phonemes should be faster than the two-shared-phonemes condition, which, in turn, should be faster than the one-shared-phoneme condition. Furthermore, priming should occur for word targets based on the amount of phonological overlap between the prime and the target, regardless of the lexical status of the prime. For nonword targets, initial phonological similarity should also facilitate the lexical decision, since a cohort is activated based on the acoustic-phonetic information, without reference to the lexical status of the item. However, nonwords should be recognized as nonwords when no candidates remain activated. The fact that nonwords are anomalous to the recognition system may result in slower response times overall compared to those for word targets, regardless of the lexical status of the prime. In addition, high-frequency items should be responded to faster than low-frequency items and word items should be responded to faster than nonword items. These predictions should replicate the frequency and lexicality effects normally found in lexical decision experiments.

EXPERIMENT 1

Method

Subjects

Forty-two undergraduate students were obtained from a paid subject pool maintained in the Speech Research Laboratory at Indiana University. Subjects were paid $3.00 for their participation in the experiment. All subjects were native speakers of English with no known history of hearing loss or speech disorder.

Materials

Ninety-eight monosyllabic words (49 high frequency and 49 low frequency) were obtained using the Kučera and Francis (1967) computational norms (see Appendix for complete list of the target items). High-frequency words had a frequency count greater than 100. The frequency count for low-frequency words was less than 10. In addition, 98 nonwords were formed from each of the 98 words by changing one phoneme in the word (e.g., best-besk). The position of the changed phoneme was balanced across all possible positions in the words.

Each of these 196 target items was then paired with seven separate primes. Each prime was related to a target in one of the following ways: (1) an identical word, (2) a word with the same first, second, and third phonemes, (3) a nonword with the same first, second, and third phonemes, (4) a word with the same first and second phonemes, (5) a nonword with the same first and second phonemes, (6) a word with the same first phoneme, and (7) a nonword with the same first phoneme. Table 1 lists some examples of word and nonword targets and their corresponding primes.

Table 1.

Examples of Target Items and Their Corresponding Primes Used in Experiment 1

| Targets | Identical | Word3 | Word2 | Word1 | Nonword3 | Nonword2 | Nonword1 |
|---|---|---|---|---|---|---|---|
| Words: High Frequency | | | | | | | |
| black | black | bland | bleed | burnt | /blæt/ | /blim/ | /brεm/ |
| drive | drive | dried | drug | dot | /draɪl/ | /drʌt/ | /dalf/ |
| Nonwords: High Frequency | | | | | | | |
| /blæf/ | /blæf/ | blank | blind | big | /blæʃ/ | /blʌz/ | /bʌv/ |
| /praɪv/ | /praɪv/ | prime | | point | /praɪk/ | /prɪl/ | /poɪl/ |
| Words: Low Frequency | | | | | | | |
| bald | bald | balls | bought | bank | /bɔlf/ | /bɔʃ/ | /brɪl/ |
| dread | dread | dress | drill | dove | /drεn/ | /drʌb/ | /dʌs/ |
| Nonwords: Low Frequency | | | | | | | |
| /bʌld/ | /bʌld/ | bulb | bust | bride | /bʌln/ | /bʌp/ | /braɪf/ |
| /drɪd/ | /drɪd/ | drip | drag | desk | /drɪs/ | /drʌs/ | /dist/ |
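The prime-type labels used in Table 1 follow from the number of word-initial phonemes shared by the prime and target. A minimal sketch of that labeling is given below; the function names and the phoneme transcriptions are assumptions introduced for illustration and do not come from the original stimulus-preparation procedure.

```python
def shared_initial_phonemes(prime, target):
    """Count phonemes shared from the beginning, stopping at the first mismatch."""
    count = 0
    for p, t in zip(prime, target):
        if p != t:
            break
        count += 1
    return count


def prime_type(prime, target, prime_is_word):
    """Return a Table 1 style label such as 'Identical', 'Word3', or 'Nonword1'."""
    if prime == target:
        return "Identical"
    prefix = "Word" if prime_is_word else "Nonword"
    return f"{prefix}{shared_initial_phonemes(prime, target)}"


# Assumed transcriptions for the first row of Table 1 (target: black).
BLACK = ["b", "l", "æ", "k"]
labels = [
    prime_type(["b", "l", "æ", "k"], BLACK, True),        # black
    prime_type(["b", "l", "æ", "n", "d"], BLACK, True),   # bland
    prime_type(["b", "l", "i", "d"], BLACK, True),        # bleed
    prime_type(["b", "ɝ", "n", "t"], BLACK, True),        # burnt
    prime_type(["b", "l", "æ", "t"], BLACK, False),       # /blæt/
]
```

Comparing phoneme sequences rather than spellings matters here, because the manipulation concerns phonological rather than orthographic overlap.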

A male speaker recorded the target and prime items in a sound-attenuated IAC booth (Controlled Acoustical Environments, No. 106648) using an Electro-Voice D054 microphone and an Ampex AG500 tape deck. The stimulus items were produced in the carrier sentence “Say the word ––––– please” to control for abnormal durations when words are produced in isolation. The stimulus items were digitized at a sampling rate of 10 kHz using a 12-bit A/D converter and then excised from the carrier sentence using a digital speech waveform editor (WAVES) on a PDP-11/34 computer (Luce & Carrell, 1981). The range of durations of the digitized target items was 330–600 msec for high-frequency words, 365–635 msec for high-frequency nonwords, 308–640 msec for low-frequency words, and 365–630 msec for low-frequency nonwords. The range of durations of the digitized prime items was 306–640 msec for the word primes and 312–626 msec for the nonword primes. The target items and their corresponding primes were stored digitally as stimulus files on computer disk for later playback to subjects in the experiment.

Procedure

Subjects were run in groups of four or fewer. The presentation of stimuli and collection of data were controlled on-line by a PDP-11/34 computer. Signals were output via a 12-bit D/A converter and low-pass filtered at 4.8 kHz. Subjects heard the stimuli at 75-dB SPL with background noise at 45-dB SPL over a pair of TDH-39 headphones. Subjects were asked to perform a lexical decision task for the 196 test items. The subject responded “word” or “nonword” as quickly and as accurately as possible after the presentation of each target stimulus item by pressing one of two appropriately labeled buttons located on a response console interfaced to the computer.

A typical trial sequence proceeded as follows: First, a cue light was presented for 500 msec at the top of the subject’s response box to alert the subject that the trial was beginning. Then there was a 1,000-msec pause, followed by an auditory presentation of the prime item. The subject was not required to respond overtly to the presentation of the prime. An interstimulus interval of 500 msec intervened between the prime and the presentation of the target item. The subject responded “word” or “nonword” to the presentation of the target item on each trial. Immediately following the subject’s response, the computer indicated which response was correct by illuminating a feedback light above the appropriate response button. The subject’s response (i.e., “word” vs. “nonword”) was recorded, as well as the response latency. Latencies were measured from the onset of the target item to the subject’s response.
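The timing of a trial can be laid out as a short sketch. It assumes the 1,000-msec pause begins when the cue light goes off; the function and key names are hypothetical, and the 400-msec prime duration is an arbitrary value within the reported range.

```python
CUE_MS = 500     # cue light duration
PAUSE_MS = 1000  # pause between cue offset and prime onset


def trial_timeline(prime_duration_ms, isi_ms=500):
    """Event times (msec from trial start) for one prime-target trial."""
    prime_onset = CUE_MS + PAUSE_MS
    prime_offset = prime_onset + prime_duration_ms
    target_onset = prime_offset + isi_ms
    return {
        "prime_onset": prime_onset,
        "prime_offset": prime_offset,
        "target_onset": target_onset,
        # Onset-to-onset interval between prime and target.
        "soa": target_onset - prime_onset,
    }


def response_latency(response_time_ms, timeline):
    """Latency measured from target onset, as in the procedure."""
    return response_time_ms - timeline["target_onset"]


timeline = trial_timeline(prime_duration_ms=400)
latency = response_latency(3400, timeline)
```

Because the interstimulus interval is measured from prime offset, the onset-to-onset interval varies with prime duration even when the interstimulus interval is fixed.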

Six subjects were run in each of seven stimulus sets for a total of 42 subjects. Subjects received 98 word and 98 nonword targets, half of which were low frequency and half of which were high frequency. There was an equal number of words primed by each of the seven prime types. The distribution of primes for nonword targets was the same as for the word targets. The prime-target pairs were counterbalanced across the seven stimulus sets. Presentation of prime-target pairs was randomized for each session, and subjects were never presented with the same target or prime item on any of the 196 stimulus trials.
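One way to realize this kind of counterbalancing is a Latin-square rotation of prime types across stimulus sets. The sketch below is an assumption about how such an assignment could be constructed, not a record of the lists actually used; `prime_for` and `build_set` are hypothetical names.

```python
from collections import Counter

PRIME_TYPES = [
    "Identical", "Word3", "Nonword3", "Word2", "Nonword2", "Word1", "Nonword1",
]
N_SETS = 7
N_PER_CATEGORY = 49  # targets in each frequency-by-lexicality category


def prime_for(item_index, set_index):
    """Rotate prime types so each target gets a different prime type in each set."""
    return PRIME_TYPES[(item_index + set_index) % len(PRIME_TYPES)]


def build_set(set_index):
    """Prime-type assignment for the 49 targets of one category in one set."""
    return [prime_for(i, set_index) for i in range(N_PER_CATEGORY)]


# Each set contains every prime type equally often within a category.
counts_per_set = [Counter(build_set(s)) for s in range(N_SETS)]
# Across the seven sets, each target is paired with all seven prime types.
types_for_first_item = {prime_for(0, s) for s in range(N_SETS)}
```

With 49 targets per category and seven prime types, each prime type occurs seven times per category in every set, so each subject hears every target exactly once while every prime-target pairing is still tested across subjects.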

Results and Discussion

The data were analyzed with respect to two dependent measures: response accuracy (word vs. nonword) and response latency. Mean response times and error rates were calculated across subjects and conditions.

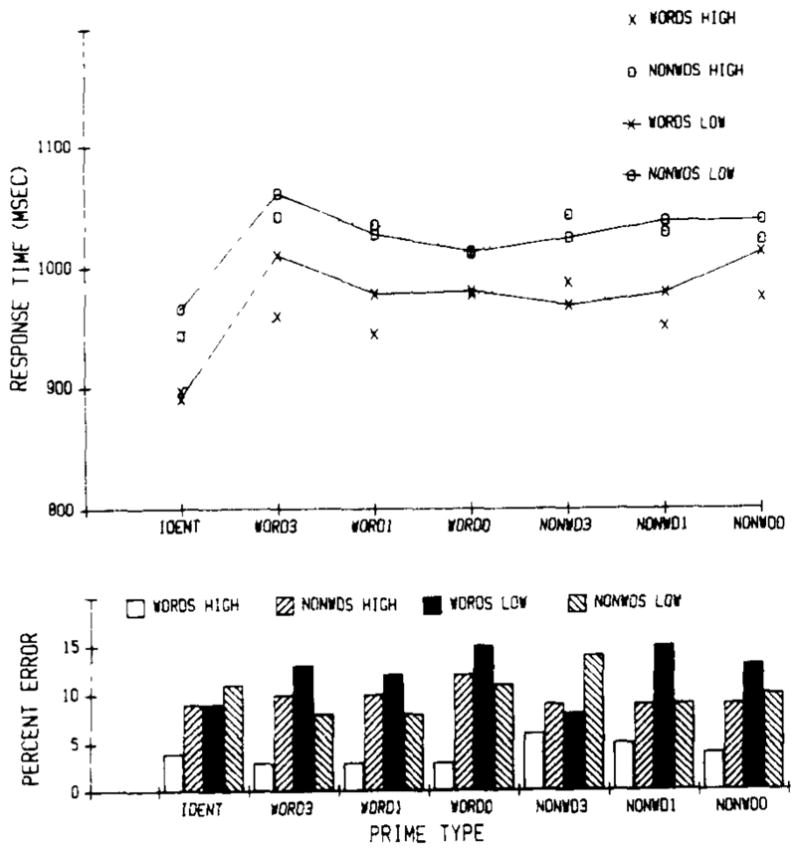

The main results are shown in Figure 1. The top half of the figure displays mean response times, and the bottom half shows percent errors for the four types of target items as a function of the seven prime types.

Figure 1.

Response times (top panel) and error rates (bottom panel) of four types of target items (high-frequency words, high-frequency nonwords, low-frequency words, and low-frequency nonwords) for the seven prime types from Experiment 1.

As typically found in lexical decision tasks, analyses of variance revealed that the lexicality (word-nonword) main effect was significant for response time data [F(1,41) = 42.78, p < .0001] and percent error data [F(1,41) = 10.16, p < .002]. The mean response time was 968 msec for words and 1,041 msec for nonwords. The mean percent error was 5.6 for words and 8.3 for nonwords.

In addition, as expected, we observed a significant frequency effect on response times [F(1,41) = 29.16, p < .0001] and error rates [F(1,41) = 35.62, p < .0001]. High-frequency items were responded to faster and more accurately than were low-frequency items. The overall mean response time was 990 msec for high-frequency target items and 1,019 msec for low-frequency items, and the mean percent error was 5.8 for high-frequency targets and 9.2 for low-frequency targets.

A frequency × lexicality interaction was also obtained [F(1,41) = 24.21, p < .0001, for response time, and F(1,41) = 30.84, p < .0001, for error data]. This interaction revealed a differential frequency effect for word and nonword items. One-way ANOVAs on this interaction for response times confirmed that high- and low-frequency items were significantly different for word targets [F(1,41) = 47.19, p < .0001], but not for nonword targets [F(1,41) = .61, n.s.]. Note in Figure 1 that the curve for high-frequency words shows consistently faster response times than does the curve for low-frequency words. The curves for nonwords derived from high- and low-frequency words show that this frequency effect was not observed for nonword targets.

The overall analysis of variance also revealed a main effect of prime type on response times [F(6,246) = 34.75, p < .0001]. This effect was not found in the analysis of the error rates (F < 1). In addition, a lexicality × prime type interaction was observed for response times [F(6,246) = 2.49, p < .02] and error rates [F(6,246) = 2.93, p < .008]. Separate one-way ANOVAs on the response time data revealed that prime type was significant for the word targets [F(6,246) = 15.51, p < .0001] and the nonword targets [F(6,246) = 23.05, p < .0001].

Planned comparisons were conducted to test for differences across prime types. Results of these comparisons revealed that the difference between the identical-prime type and all other prime types was significant [F(1,246) = 145.1, p < .01]. No significant difference was found, however, between the word prime types (Word3, Word2, Word1) and the nonword prime types (Nonword3, Nonword2, Nonword1) [F(1,246) < 1]. Planned comparisons examining differences between shared-phoneme word prime types revealed a significant difference for Word3 versus Word2 [F(1,246) = 7.739, p < .01] and Word3 versus Word1 [F(1,246) = 8.05, p < .01]. The direction of these effects, however, was opposite to the predicted direction. The mean response time for Word3 was 1,038.11 msec compared to mean response times of 1,008.95 msec for Word2 and 1,008.37 msec for Word1. No significant differences were found between any of the other word or nonword shared-phoneme prime types.
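The degrees of freedom in the F ratios reported above are consistent with a fully within-subjects analysis on 42 subjects. The following sketch shows that arithmetic; it assumes a standard repeated-measures error term (effect by subjects), and the function name is hypothetical.

```python
def repeated_measures_df(n_subjects, n_levels):
    """Degrees of freedom for a within-subjects effect and its error term."""
    df_effect = n_levels - 1
    df_error = (n_levels - 1) * (n_subjects - 1)
    return df_effect, df_error


# Two-level factors (lexicality, frequency) with 42 subjects.
two_level_df = repeated_measures_df(n_subjects=42, n_levels=2)
# Seven-level prime type factor with 42 subjects.
prime_type_df = repeated_measures_df(n_subjects=42, n_levels=7)
```

A planned comparison is a single-degree-of-freedom contrast, so testing it against the prime type error term gives the F(1,246) form seen in the comparisons above.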

To summarize, the results of this experiment revealed a pattern of main effects typically found in earlier lexical decision experiments. Specifically, we found that words were responded to faster than nonwords and that high-frequency items were responded to faster than low-frequency items. In addition, we found a main effect of prime type on the classification of word and nonword targets. However, this main effect was primarily due to the facilitation of targets preceded by identical primes. We did not find facilitation in response time for either word or nonword targets as the phonological overlap between the prime and the target increased. Moreover, the results suggest that interference may be operative as phonological overlap increases between word primes and their corresponding targets.

The failure to find facilitation in a lexical decision task based on phonological similarity is inconsistent with our earlier predictions based on cohort theory and the model of cohort activation (MACS). The failure to obtain phonological priming across the different prime types may have been due to several factors, including the interstimulus interval used in the experiment. Although semantic facilitation has been obtained in several different experimental paradigms at stimulus onset asynchronies (SOAs) of up to 2,000 msec (Neely, 1977b; Seidenberg, Tanenhaus, Leiman, & Bienkowski, 1982; Tanenhaus, Leiman, & Seidenberg, 1979), the effects of phonological priming may be substantially more fragile and, therefore, much shorter in duration.

However, the latency data obtained in an additional experiment using a 50-msec ISI replicated the results found in Experiment 1 when a 500-msec ISI was used. In the 50-msec ISI experiment, we found effects of lexicality (word vs. nonword) and frequency (high vs. low) on the classification of word and nonword targets. In addition, when the prime and target were identical, facilitation in response time to the target was observed. However, as in the original experiment, facilitation was only observed when the target and prime were identical. Facilitation due to phonological similarity was not observed in the lexical decision task when the prime and target shared word-initial phonemes. In fact, some evidence suggesting interference for primes that share initial phonological information was obtained. However, we cannot safely conclude that facilitation did not occur for the primes that shared phonemes until we examine the effects of a “neutral” prime on lexical decision time. Experiment 2 included this control condition so that appropriate comparisons could be drawn.

EXPERIMENT 2

Method

Subjects

Forty-two additional undergraduates were obtained from the same subject pool used in the first experiment. Subjects were paid $3.50 for their participation in the experiment. None of the subjects in Experiment 2 had participated in Experiment 1.

Materials

The stimuli used in Experiment 2 were identical to the stimuli used in Experiment 1, with the following exception. The Word2 and Nonword2 prime types were re-paired with the 196 target items in order to create Word0 and Nonword0 (unrelated) conditions. Therefore, each prime in Experiment 2 was related to a target in one of the following seven ways: (1) an identical word or nonword, (2) a word with the same first, second, and third phonemes, (3) a nonword with the same first, second, and third phonemes, (4) a word with the same first phoneme, (5) a nonword with the same first phoneme, (6) a word with no phonemes in common, and (7) a nonword with no phonemes in common. The unrelated baseline conditions (Word0 and Nonword0) only allow assessment of priming for similar primes relative to dissimilar primes. This experiment does not assess the effect of unrelated primes on the target response relative to unprimed targets. The results of an experiment in which no primes are used as the baseline may reveal inhibition for the unrelated prime condition.

Procedure

The procedure for Experiment 2 was identical to the procedure used in Experiment 1, except that the interstimulus interval between the prime and the target was shortened to 50 msec.

Results and Discussion

The data were analyzed with regard to response accuracy and response latency. Mean response times were calculated across subjects and conditions. The main results are shown in Figure 2. The top half of the figure displays averaged response times, and the bottom half displays percent errors for the four types of target stimuli as a function of the seven prime types.

Figure 2.

Response times (top panel) and error rates (bottom panel) of four types of target items for the seven prime types from Experiment 2.

Overall analyses of variance were performed separately for the error data and the response latency data. Both analyses revealed effects of frequency [F(1,41) = 5.81, p < .02, for response latency data, and F(1,41) = 58.64, p < .0001, for error data] and lexicality [F(1,41) = 33.32, p < .0001, for response latency data, and F(1,41) = 6.99, p < .01, for error data]. As expected, word targets were responded to faster and more accurately than were nonword targets, and high-frequency items were responded to faster and more accurately than were low-frequency items. In addition, a frequency × lexicality interaction was found for the error data [F(1,41) = 38.11, p < .0001]. The overall analysis on response times also revealed a main effect of prime type [F(6,246) = 12.43, p < .0001].

Planned comparisons were performed to test differences across the seven prime types. The test comparing the identical-prime type to all other prime types was significant [F(1,246) = 69.24, p < .01]. However, the difference between the word primes (Word0, Word1, Word3) and the nonword primes (Nonword0, Nonword1, Nonword3) and the difference between the unrelated primes (Word0, Nonword0) and the primes that shared one or three phonemes (Word1, Word3, Nonword1, Nonword3) were not significant. Therefore, inclusion of a neutral prime (Word0 and Nonword0) in the design did not reveal a facilitatory (or inhibitory) effect in the lexical decision task for phonologically similar primes, relative to dissimilar primes, extending those results obtained in Experiment 1. Although some inhibition may be present in the shared-phoneme conditions, relative to an unprimed condition, the results obtained with the unrelated prime suggest the absence of a phonological priming effect in the lexical decision task. Furthermore, none of the comparisons between pairs of shared-phoneme primes was significant (e.g., Word3 vs. Word0, Word3 vs. Word1, Nonword1 vs. Nonword0, etc.).

GENERAL DISCUSSION

The present experiments replicated a number of well-known effects reported in the word recognition literature. First, we found substantial lexicality and frequency effects. These are routinely observed in lexical decision experiments. Second, we found facilitation in response to a target when it was preceded by an identical prime. This effect is not unlike repetition effects observed in the literature (Feustel, Shiffrin, & Salasoo, 1982; Forster & Davis, 1983; Jackson & Morton, 1984; Scarborough, Cortese, & Scarborough, 1977). However, we found no evidence of facilitation in response to targets preceded by primes that shared word-initial phonological information with the target when the length of the interstimulus interval was 50 or 500 msec. This was the case regardless of the lexical status of the prime and the target. These results reveal that partial phonological information does not facilitate response to a target in a lexical decision task, and they are inconsistent with the predictions derived from the cohort activation assumption of cohort theory. Furthermore, these results are inconsistent with results showing facilitation in this task when the prime and target items shared semantic information or were associatively related. Thus, semantic facilitation effects may be logically separated from acoustic-phonetic or phonological effects (see Jakimik et al., 1985).

The fact that we did not find phonological priming appears to be in conflict with the results obtained by Hillinger (1980), Jakimik et al. (1985), and Meyer et al. (1975). However, an examination of the differences in the stimuli employed in these previous experiments can clarify the pattern of results. The studies by Hillinger (1980) and Meyer et al. (1975) used primes and targets that rhymed. None of our stimulus pairs rhymed. In the present study, we were interested in the effect of word-initial phonological similarity on lexical decision times. Furthermore, the phonological similarity between Meyer et al.’s prime-target pairs generally involved phonological overlap for more than 50% of the items (i.e., the final 75% of four-phoneme prime-target items was similar, and the final 66% of three-phoneme items was similar). In the present study, the percentage of phonologically similar information between primes and targets varied from 0% to 75% of the items. Therefore, the phonological priming in these earlier studies may be due to the percentage of shared information or the fact that the items shared phonological information from the end of each item. In the Jakimik et al. (1985) study, the target item was the first syllable of the prime (e.g., nap-napkin). The phonological priming effects observed in their study may be due, in part, to overall syllabic similarity and the fact that the entire target item (nap) appeared intact in the prime (napkin). Our prime-target items were designed to test predictions derived from cohort theory’s emphasis on the primacy of left-to-right processing in auditory word recognition. The fact that we did not observe facilitation may be due to the specific constraints on the degree of phonological overlap between the primes and targets used in our studies.

The present findings, therefore, do not provide support for the primary activation assumption of cohort theory or for the model of cohort activation developed by Slowiaczek et al. (1985). We found no evidence of facilitation to classify target items as the amount of phonological similarity between the beginning of a prime and target increased. Although these results raise questions regarding the activation of a list of word candidates based on word-initial acoustic-phonetic information during word recognition, the failure to find a phonological priming effect may be due to the paradigm chosen rather than the cohort activation assumption.

It is of some interest, therefore, to compare the results obtained in the present series of experiments using the lexical decision task with recent findings of Slowiaczek et al. (1985), who reported phonological priming in a perceptual identification task. In the Slowiaczek et al. study, subjects identified isolated English words presented in white noise at various signal-to-noise ratios. In a primed session, each target word was preceded by a prime that was identical to the target word, was unrelated to the target word, or shared one, two, or three phonemes with the beginning of the target word. They found increased priming effects as the phonemic overlap between the prime and target word increased. In the present studies using a lexical decision task, priming based on partial phonological similarity was not observed. The only evidence of facilitation occurred when the prime and target were identical items. Moreover, some evidence for inhibition was observed in the lexical decision task when the prime and target shared partial phonological information.

Several explanations of the differences in the results of the two studies are suggested, based on an examination of the processes used in perceptual identification and lexical decision tasks. First, the two tasks require subjects to make different types of responses. In the perceptual identification task, subjects must use the phonological information in the signal in order to identify the segments and subsequently recognize the word. In the lexical decision task, subjects must classify the target as a word or a nonword. The classification processes involved in lexical decision may be operative at a point at which the phonological information has already been replaced by a more abstract lexical representation. Second, in the perceptual identification task, the response set is usually quite large, including all of the words the subject knows. In the lexical decision task, the response set includes only two responses—word and nonword. A third difference between the two tasks is that the targets in the identification task were degraded by white noise, whereas the targets in the lexical decision task were presented in the clear. The presence of the noise in the perceptual identification task results in greater stimulus degradation and may force subjects to attend more to the phonological information in the signal. Finally, in the perceptual identification task, subjects are not under any time pressure in making their responses. In the lexical decision task, on the other hand, subjects are instructed to respond as quickly and accurately as possible, which encourages subjects to adopt a strategy of not using all available phonological information provided by the preceding prime.

These explanations, based on response differences or the presence of noise in the perceptual identification task, are consistent with previous research in the visual word recognition literature suggesting that the lexical decision task may involve processes in addition to those necessary to simply locate information in the lexicon (Balota & Chumbley, 1984; Clarke & Morton, 1983; James, 1975; Neely, 1977b; Seidenberg, Waters, Sanders, & Langer, 1984; Shulman & Davison, 1977).

Although not predicted by the activation assumption, some evidence for the operation of inhibition was observed as phonological similarity increased for certain prime-target pairs in Experiments 1 and 2. This inhibition, although not evident under all conditions, may be due to competition among phonologically similar candidates. Because cohort theory describes only those processes involved in the activation of lexical candidates, it does not postulate mechanisms to account for inhibition or competition among lexical candidates. To the extent that these particular effects can be replicated and generalized to other paradigms (e.g., naming), cohort theory could be modified to predict such effects. This modification would involve the development of a decision mechanism subsequent to the lexical activation component of the model where such inhibitory effects could occur.

In conclusion, the results of the present series of experiments do not support the cohort activation assumption derived from cohort theory. However, although the failure to find phonological priming in a lexical decision task is inconsistent with the predictions derived from the cohort activation assumption, these results indicate ways in which the lexical decision paradigm can be used to test assumptions about word recognition processing. The present results, combined with our earlier findings demonstrating phonological priming in perceptual identification, suggest several important differences in what lexical decision and perceptual identification tasks are measuring about the processing of information in word recognition and lexical access. The differences observed with these tasks involve the availability of different kinds of information concerning the internal organization and phonological structure of words and nonwords. Hence, these tasks are differentially sensitive to experimental manipulations based on the phonological similarity of successive items.

APPENDIX.

Target Items Used in Experiments 1 and 2

| High-Frequency Words | | | | | | |
|---|---|---|---|---|---|---|
| think | black | hand | must | drive | told | went |
| small | place | great | still | state | group | want |
| mind | help | glass | field | blood | change | close |
| land | trees | class | plan | built | brown | stage |
| cold | tried | range | bring | growth | start | stand |
| stock | sent | trade | trial | friend | chance | placed |
| truth | spent | plant | sense | green | speak | price |

| High-Frequency Nonwords | | | | | | |
|---|---|---|---|---|---|---|
| /bɪnk/ | /blæf/ | /hɔnd/ | /mʌnt/ | /praɪv/ | /tæld/ | /wɪnt/ |
| /smol/ | /plef/ | /gref/ | /stæl/ | /spet/ | /prup/ | /lant/ |
| /mʌnd/ | /hεlk/ | /glɪs/ | /mild/ | /blɪd/ | /kendʒ/ | /klop/ |
| /lɪnd/ | /troz/ | /klæg/ | /plʌn/ | /mɪlt/ | /graʊn/ | /stεdʒ/ |
| /dʒold/ | /graɪd/ | /rɪndʒ/ | /brɪv/ | /gloθ/ | /spart/ | /smænd/ |
| /stik/ | /sɪnt/ | /dred/ | /draɪl/ | /frεld/ | /bæns/ | /pleft/ |
| /trʌθ/ | /spont/ | /glænt/ | /sεls/ | /grib/ | /spʌk/ | /praɪl/ |

| Low-Frequency Words | | | | | | |
|---|---|---|---|---|---|---|
| bald | belch | smug | creep | flock | halt | hint |
| scanned | slate | spade | wrist | blend | blows | blunt |
| dense | dread | dusk | grips | mask | slack | sped |
| trades | clicked | clocks | crisp | kills | plots | scout |
| slips | stunned | tricks | cracks | hunch | prop | slick |
| twin | wink | brute | pinch | snack | cling | bins |
| bolt | gram | scars | stole | clause | quill | clashed |

| Low-Frequency Nonwords | | | | | | |
|---|---|---|---|---|---|---|
| /bʌld/ | /bεld/ | /smʌf/ | /krɪp/ | /slak/ | /kɔlt/ | /hoʊnt/ |
| /skʌnd/ | /klet/ | /spad/ | /dɪst/ | /brεnd/ | /blov/ | /plʌnt/ |
| /dεls/ | /drɪd/ | /Iʌsk/ | /grɪks/ | /mosk/ | /klæk/ | /smεd/ |
| /predʒ/ | /blɪkt/ | /glaks/ | /krɪlp/ | /kɪlf/ | /flats/ | /skot/ |
| /stɪps/ | /stind/ | /trɪfs/ | /præks/ | /hʌltʃ/ | /pras/ | /slʌk/ |
| /kwɪn/ | /wank/ | /brɪt/ | /pæntʃ/ | /snæf/ | /krɪŋ/ | /lɪnz/ |
| /bolf/ | /glæm/ | /skæz/ | /stεl/ | /kliz/ | /kwaɪl/ | /klæft/ |

Acknowledgments

The research reported here was supported by NIH Grant NS-12179 to Indiana University in Bloomington. We would like to thank Paul A. Luce for assistance in recording the stimuli and for his comments on the manuscript. We also thank Joseph Stemberger for several suggestions.

References

- Antos SJ. Processing facilitation in a lexical decision task. Journal of Experimental Psychology: Human Perception & Performance. 1979;5:527–545.

- Balota DA, Chumbley JI. Are lexical decisions a good measure of lexical access? The role of word frequency in the neglected decision stage. Journal of Experimental Psychology: Human Perception & Performance. 1984;10:340–357. doi: 10.1037//0096-1523.10.3.340.

- Chambers SM. Letter and order information in lexical access. Journal of Verbal Learning & Verbal Behavior. 1979;18:225–241.

- Clarke R, Morton J. Cross modality facilitation in tachistoscopic word recognition. Quarterly Journal of Experimental Psychology. 1983;35A:79–96.

- Feustel TC, Shiffrin RM, Salasoo A. Episodic and lexical contributions to the repetition effect in word identification. Journal of Experimental Psychology: General. 1982;112:309–346. doi: 10.1037//0096-3445.112.3.309.

- Fischler I. Associative facilitation without expectancy in a lexical decision task. Journal of Experimental Psychology: Human Perception & Performance. 1977;3:18–26.

- Forster KI, Davis C. Unpublished manuscript. 1983. Repetition priming and frequency attenuation in lexical access.

- Hillinger ML. Priming effects with phonemically similar words: The encoding-bias hypothesis reconsidered. Memory & Cognition. 1980;8:115–123. doi: 10.3758/bf03213414.

- Jackson A, Morton J. Facilitation of auditory word recognition. Memory & Cognition. 1984;12:568–574. doi: 10.3758/bf03213345.

- Jakimik J, Cole RA, Rudnicky AI. Sound and spelling in spoken word recognition. Journal of Memory & Language. 1985;24:165–178.

- James CT. The role of semantic information in lexical decisions. Journal of Experimental Psychology: Human Perception & Performance. 1975;104:130–136.

- Kiger JI, Glass AL. The facilitation of lexical decisions by a prime occurring after the target. Memory & Cognition. 1983;11:356–365. doi: 10.3758/bf03202450.

- Kučera H, Francis WN. Computational analysis of present-day American English. Providence, RI: Brown University Press; 1967.

- Luce PA, Carrell TD. Creating and editing wave-forms using WAVES (Research on Speech Perception, Progress Report No. 7) Bloomington, IN: Speech Research Laboratory, Department of Psychology, Indiana University; 1981.

- Marslen-Wilson WD, Tyler LK. The temporal structure of spoken language understanding. Cognition. 1980;8:1–71. doi: 10.1016/0010-0277(80)90015-3.

- Marslen-Wilson WD, Welsh A. Processing interactions and lexical access during word recognition in continuous speech. Cognitive Psychology. 1978;10:29–63.

- Meyer DE, Schvaneveldt RW. Facilitation in recognizing pairs of words: Evidence of a dependence between retrieval operations. Journal of Experimental Psychology. 1971;90:227–234. doi: 10.1037/h0031564.

- Meyer DE, Schvaneveldt RW, Ruddy MG. Functions of graphemic and phonemic codes in visual word recognition. Memory & Cognition. 1974;2:309–321. doi: 10.3758/BF03209002.

- Meyer DE, Schvaneveldt RW, Ruddy MG. Loci of contextual effects in visual word recognition. In: Rabbitt PMA, Dornic S, editors. Attention and performance V. New York: Academic Press; 1975.

- Neely JH. Semantic priming and retrieval from lexical memory: Evidence for facilitatory and inhibitory processes. Memory & Cognition. 1976;4:648–654. doi: 10.3758/BF03213230.

- Neely JH. The effects of visual and verbal satiation on a lexical decision task. American Journal of Psychology. 1977a;90:447–459.

- Neely JH. Semantic priming and retrieval from lexical memory: Roles of inhibitionless spreading activation and limited capacity attention. Journal of Experimental Psychology: General. 1977b;106:226–254.

- Norris D. The mispriming effect: Evidence of an orthographic check in the lexical decision task. Memory & Cognition. 1984;12:470–476. doi: 10.3758/bf03198308.

- O’Connor RE, Forster KI. Criterion bias and search sequence bias in word recognition. Memory & Cognition. 1981;9:78–92. doi: 10.3758/bf03196953.

- Rubenstein H, Garfield L, Millikan JA. Homographic entries in the internal lexicon. Journal of Verbal Learning & Verbal Behavior. 1970;9:487–494.

- Rubenstein H, Lewis SS, Rubenstein MA. Homographic entries in the internal lexicon: Effects of systematicity and relative frequency of meanings. Journal of Verbal Learning & Verbal Behavior. 1971;10:57–62.

- Scarborough DL, Cortese C, Scarborough HS. Frequency and repetition effects in lexical memory. Journal of Experimental Psychology: Human Perception & Performance. 1977;3:1–17.

- Schuberth RE, Eimas PD. Effects of context on the classification of words and nonwords. Journal of Experimental Psychology: Human Perception & Performance. 1977;3:27–36.

- Schvaneveldt RW, Meyer DE, Becker CA. Lexical ambiguity, semantic context, and visual word recognition. Journal of Experimental Psychology: Human Perception & Performance. 1976;2:243–256. doi: 10.1037//0096-1523.2.2.243.

- Seidenberg MS, Tanenhaus MK, Leiman JM, Bienkowski M. Automatic access of the meanings of ambiguous words in context: Some limitations of knowledge-based processing. Cognitive Psychology. 1982;14:489–537.

- Seidenberg MS, Waters GS, Sanders M, Langer P. Pre- and postlexical loci of contextual effects on word recognition. Memory & Cognition. 1984;12:315–328. doi: 10.3758/bf03198291.

- Shulman HG, Davison TCB. Control properties of semantic coding in a lexical decision task. Journal of Verbal Learning & Verbal Behavior. 1977;16:91–98.

- Shulman HG, Hornak R, Sanders E. The effects of graphemic, phonetic, and semantic relationships on access to lexical structures. Memory & Cognition. 1978;6:115–123.

- Slowiaczek LM, Nusbaum HC, Pisoni DB. Unpublished manuscript. 1985. Acoustic-phonetic priming in auditory word recognition.

- Snodgrass JG, Jarvella RJ. Some linguistic determinants of word classification times. Psychonomic Science. 1972;27:220–222.

- Stanners RF, Forbach GB. Analysis of letter strings in word recognition. Journal of Experimental Psychology. 1973;98:31–35.

- Stanners RF, Forbach GB, Headley DB. Decision and search processes in word-nonword classification. Journal of Experimental Psychology. 1971;90:45–50.

- Stanners RF, Jastrzembski JE, Westbrook A. Frequency and visual quality in a word-nonword classification task. Journal of Verbal Learning & Verbal Behavior. 1975;14:259–264.

- Stanners RF, Neiser JJ, Hernon WP, Hall R. Memory representation for morphologically related words. Journal of Verbal Learning & Verbal Behavior. 1979;18:399–412.

- Stanners RF, Neiser JJ, Painton S. Memory representation for prefixed words. Journal of Verbal Learning & Verbal Behavior. 1979;18:733–743.

- Taft M, Forster KI. Lexical storage and retrieval of prefixed words. Journal of Verbal Learning & Verbal Behavior. 1975;14:638–647.

- Taft M, Forster KI. Lexical storage and retrieval of polymorphemic and polysyllabic words. Journal of Verbal Learning & Verbal Behavior. 1976;15:607–620.

- Tanenhaus MK, Leiman JM, Seidenberg MA. Evidence for multiple stages in the processing of ambiguous words in syntactic contexts. Journal of Verbal Learning & Verbal Behavior. 1979;18:427–440.