Abstract

Current theories of medial temporal lobe (MTL) function focus on event content as an important organizational principle that differentiates MTL subregions. Perirhinal and parahippocampal cortices may play content-specific roles in memory, whereas hippocampal processing is alternately hypothesized to be content specific or content general. Despite anatomical evidence for content-specific MTL pathways, empirical data for content-based MTL subregional dissociations are mixed. Here, we combined functional magnetic resonance imaging with multiple statistical approaches to characterize MTL subregional responses to different classes of novel event content (faces, scenes, spoken words, sounds, visual words). Univariate analyses revealed that responses to novel faces and scenes were distributed across the anterior–posterior axis of MTL cortex, with face responses distributed more anteriorly than scene responses. Moreover, multivariate pattern analyses of perirhinal and parahippocampal data revealed spatially organized representational codes for multiple content classes, including nonpreferred visual and auditory stimuli. In contrast, anterior hippocampal responses were content general, with less accurate overall pattern classification relative to MTL cortex. Finally, posterior hippocampal activation patterns consistently discriminated scenes more accurately than other forms of content. Collectively, our findings indicate differential contributions of MTL subregions to event representation via a distributed code along the anterior–posterior axis of MTL that depends on the nature of event content.

Keywords: hippocampus, novelty, parahippocampal cortex, perirhinal cortex, spatial memory

Introduction

The medial temporal lobe (MTL) plays an essential role in episodic memory (Gabrieli 1998; Eichenbaum and Cohen 2001; Squire et al. 2004; Preston and Wagner 2007); yet, it remains an open question how MTL subregions differentially subserve episodic memory. Anatomical evidence suggests that event content might be an important organizing principle for differentiating MTL subregional function, with the nature of to-be-remembered information influencing MTL subregional engagement.

Predominant inputs from ventral visual areas to perirhinal cortex (PRc) and dorsal visual areas to parahippocampal cortex (PHc) suggest that these regions may differentially support memory for visual objects and visuospatial information, respectively (Suzuki and Amaral 1994; Suzuki 2009). While several neuropsychological (Bohbot et al. 1998; Epstein et al. 2001; Barense et al. 2005, 2007; Lee, Buckley, et al. 2005; Lee, Bussey, et al. 2005) and neuroimaging studies in humans (Pihlajamaki et al. 2004; Sommer et al. 2005; Awipi and Davachi 2008; Lee et al. 2008) have revealed functional differences between PRc and PHc along visual object and visuospatial domains, other evidence suggests distributed processing of event content across subregional boundaries. In particular, encoding responses have been observed for scenes, faces, and objects in human PRc (Buffalo et al. 2006; Dudukovic et al. 2011; Preston et al. 2010) and PHc (Bar and Aminoff 2003; Aminoff et al. 2007; Bar et al. 2008; Litman et al. 2009).

Existing evidence thus suggests 2 distinct possibilities for the nature of content representation in PRc and PHc: one consisting of well-defined PRc and PHc functional modules that exhibit specialized and preferential responding to specific event content, and an alternative in which PRc and PHc represent multiple forms of event content. Recent studies have attempted to reconcile these conflicting accounts by demonstrating content-based representational gradients along the anterior–posterior axis of MTL cortex (Litman et al. 2009; Staresina et al. 2011). Encoding responses specific to visual object information have been observed in the anterior extent of PRc, while posterior regions of PHc show encoding responses specific to visuospatial information (Staresina et al. 2011). Interestingly, however, a transitional zone between anterior PRc and posterior PHc contributed to encoding of both visual object and visuospatial information. These findings suggest that discrete functional boundaries may not exist within MTL cortex; rather, different forms of event content may evoke a graded pattern of response along the MTL cortical axis, with content-specific responses being more likely in the anterior and posterior extents of MTL cortex. Notably, the particular distribution of such representational gradients may differ greatly depending on the nature of the event content (Litman et al. 2009).

Neuroimaging research on content representation in the MTL has almost exclusively employed standard univariate measures of response preferences that consider content sensitivity as a function of the maximal response within a specific region. However, such univariate statistical techniques overlook the possibility that weaker nonmaximal responses represent important information about event content (Haxby et al. 2001; Norman et al. 2006; Harrison and Tong 2009; Serences et al. 2009). Unlike standard univariate analyses, multivariate analysis of neuroimaging data examines the entire pattern of response within a region of interest (ROI) and is not necessarily limited to responses within a region that reach a predefined statistical threshold (Norman et al. 2006; Poldrack 2006; Kriegeskorte et al. 2008). These methods have proved a powerful tool for understanding the nature of representational codes for different forms of perceptual content in higher order visual centers in the brain (Haxby et al. 2001; Kriegeskorte and Bandettini 2007; Kriegeskorte et al. 2007; MacEvoy and Epstein 2009, 2011). For example, patterns of response in ventral visual regions that project to the MTL discriminate between multiple categories of visual stimuli (including houses, faces, and objects) even in regions that respond maximally to only one category of stimuli, suggesting widely distributed and overlapping representational codes for visual content in these regions (Haxby et al. 2001).

Given evidence for a distributed coding of event content in content-selective visual regions, it may follow that representational coding in MTL cortical regions that receive direct input from these regions may also be distributed. In support of this view, recent evidence has shown that patterns of activation within PHc discriminate between nonpreferred classes of content, including faces and objects, even in the most posterior aspects of the region (Diana et al. 2008). This finding suggests that representational gradients along the anterior–posterior axis of MTL cortex do not sufficiently describe the distribution of content representation in this region. Thus, the precise nature of representational codes for different forms of event content in MTL cortex remains an important open question.

Evidence for the nature of content representation in the hippocampus is similarly mixed. Selective hippocampal damage impairs memory for visuospatial information while sparing memory for nonspatial information (Cipolotti et al. 2006; Bird et al. 2007, 2008; Taylor et al. 2007), suggesting a content-specific hippocampal role in spatial memory (Kumaran and Maguire 2005; Bird and Burgess 2008). Alternatively, the hippocampus may contribute to memory in a domain-general manner given the convergence of neocortical inputs onto hippocampal subfields (Davachi 2006; Knierim et al. 2006; Manns and Eichenbaum 2006; Diana et al. 2007). In support of this view, neuroimaging evidence has revealed hippocampal activation that is generalized across event content (Prince et al. 2005; Awipi and Davachi 2008; Staresina and Davachi 2008; Preston et al. 2010).

The application of multivariate statistical techniques to understand content coding in hippocampus has been limited to a single report to date (Diana et al. 2008; for discussion of related findings, see Rissman and Wagner 2012). In the study by Diana et al., hippocampal activation patterns demonstrated poor discrimination of scene and visual object content, suggesting that hippocampal representations are not sensitive to the modality of event content. However, that study examined the pattern of response across the entire hippocampal region. As in MTL cortex, one possibility is that different regions along the anterior–posterior axis of the hippocampus might demonstrate distinct representational codes for specific forms of event content. Animal research has shown that the anatomical connectivity and function of the ventral (anterior in the human) and dorsal (posterior in the human) hippocampus are distinct (Swanson and Cowan 1977), with the dorsal hippocampus being particularly implicated in spatial learning tasks (Moser MB and Moser EI 1998). Representational codes in the human brain might also reflect such anatomical and functional differences along the anterior–posterior hippocampal axis, with distinct spatial codes being most prevalent in the posterior hippocampus.

To provide an in-depth characterization of content representation in human MTL, we combined high-resolution functional magnetic resonance imaging (hr-fMRI) with both univariate and multivariate statistical approaches. Univariate analyses assessed responses to different classes of novel event content within anatomically defined MTL subregions. Importantly, by utilizing auditory (spoken words and sounds) and visual (faces, scenes, and visual words) content, the current study aimed to broaden our knowledge of content representation in the human MTL beyond the visual domain. As a complement to these univariate approaches, multivariate pattern classifiers trained on data from MTL subregions assessed whether distributed activity in each subregion discriminated between distinct content classes, including “nonpreferred” content. Representational similarity analysis (RSA) (Kriegeskorte and Bandettini 2007; Kriegeskorte et al. 2008) further characterized the representational distance between exemplars from the same content class and between exemplars from different content classes to determine whether MTL subregions maintain distinctive codes for specific forms of information content. Given existing evidence for gradations in content sensitivity that cross anatomical boundaries, we examined univariate and multivariate responses within individual anatomically defined MTL subregions as well as the distribution of novelty responses along the anterior–posterior axis of the hippocampus and parahippocampal gyrus to test for content-based representational gradients.

By combining multiple statistical approaches with hr-fMRI, the present study aimed to provide a more precise characterization of content representation in human hippocampus and MTL cortex than afforded by previous research. In particular, univariate and multivariate methods each index different aspects of the neural code. The use of both analysis methods in the current study provides a means to directly compare findings derived from these different approaches to present a comprehensive picture of representational coding in MTL subregions.

Materials and Methods

Participants

Twenty-five healthy right-handed volunteers participated after giving informed consent in accordance with a protocol approved by the Stanford Institutional Review Board. Participants received $20/h for their involvement. Data from 19 participants were included in the analyses (age 18–23 years, mean = 20.4 ± 1.7 years; 7 females), with data from 6 participants being excluded due to failure to respond on more than 20% of trials (3 participants), scanner spiking during functional runs (1 participant), and excessive motion (2 participants).

Behavioral Procedures

During functional scanning, participants performed a target detection task with 5 classes of stimuli: grayscale images of scenes, grayscale images of faces, visually presented words referencing common objects (white text on a black background; Arial 48 point), spoken words referencing common objects, and environmental sounds (e.g., jet engine, door creaking, water gurgling). During scanning, stimuli were generated using PsyScope (Cohen et al. 1993) on an Apple Macintosh computer and back-projected via a magnet-compatible projector onto a screen that could be viewed through a mirror mounted above the participant's head. Participants responded with an optical button pad held in their right hand.

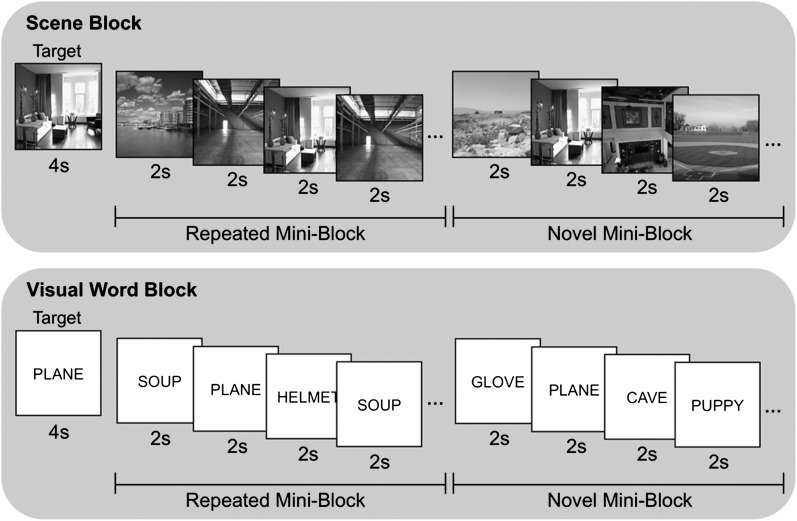

During 8 blocked-design functional runs, participants viewed or heard novel and repeated stimuli from each of the 5 stimulus classes while performing a target detection task (Fig. 1). Each run consisted of 5 stimulus-class blocks, one for each of the 5 stimulus classes, along with baseline blocks. At the start of each stimulus-class block, a cuing stimulus appeared for 4 s that represented the target for that block. Following this target cuing, 2 repeated and 2 novel miniblocks of the stimulus class were presented in random order. During novel miniblocks, participants were presented with 8 stimuli (1 target and 7 trial-unique novel stimuli) in a random order; each stimulus was presented for 2 s, and participants indicated with a yes/no key press whether the stimulus was the target. Repeated miniblocks also consisted of 8 stimuli, including one target, with presentation and response procedures identical to novel miniblocks. However, in repeated miniblocks, each of the 7 nontarget stimuli was one of 2 repeated stimuli that were used throughout the entire experiment. Participants viewed the 2 repeated stimuli from each class 20 times each prior to scanning.

Figure 1.

During functional scanning, participants performed target detection on novel and repeated stimuli from 5 classes: faces, scenes, sounds, spoken words, and visual words. At the beginning of a stimulus class block, a target stimulus appeared, followed by 2 novel and 2 repeated miniblocks in random order.

Novel and repeated miniblocks lasted 16 s each; thus, each stimulus-class block had a duration of 68 s (4-s target, 2 × 16-s novel miniblocks, and 2 × 16-s repeated miniblocks). Across the entire experiment, participants performed the target detection task for 16 novel and 16 repeated blocks from each stimulus class. The presentation order of the stimulus-class blocks within each functional run was determined by 1 of 3 random orders, counterbalanced across participants. One 16-s baseline task block occurred at the beginning and end of each functional run. During baseline blocks, participants performed an arrow detection task; on each of 8 trials, an arrow was presented for 2 s, and participants indicated by key press whether the arrow pointed to the left or right.
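As a consistency check on the timing described above, the short Python sketch below recomputes block, run, and volume counts from the stated parameters. It assumes that blocks within a run are contiguous with no gaps and that the 768 volumes reported in the acquisition section include the 3 discarded volumes per run; neither assumption is stated explicitly in the text.

```python
# Timing arithmetic for the blocked design, using values stated in the text.
TR_S = 4.0               # repetition time of the functional scans (s)
CUE_S = 4.0              # target cue at the start of each stimulus-class block
MINIBLOCK_S = 16.0       # 8 stimuli x 2 s
N_NOVEL, N_REPEATED = 2, 2
N_CLASSES = 5
BASELINE_BLOCK_S = 16.0
N_BASELINE_BLOCKS = 2    # one at the start and one at the end of each run
N_DISCARDED = 3          # field-map frame plus the following 2 volumes
N_RUNS = 8

class_block_s = CUE_S + (N_NOVEL + N_REPEATED) * MINIBLOCK_S
run_task_s = N_CLASSES * class_block_s + N_BASELINE_BLOCKS * BASELINE_BLOCK_S
volumes_per_run = run_task_s / TR_S + N_DISCARDED   # assumes no gaps between blocks
total_volumes = N_RUNS * volumes_per_run
novel_timepoints_per_class = N_RUNS * N_NOVEL * MINIBLOCK_S / TR_S

print(class_block_s, run_task_s, volumes_per_run, total_volumes, novel_timepoints_per_class)
```

Under these assumptions the stated values agree: 68-s stimulus-class blocks, 96 volumes per run, 768 volumes in total, and 64 novel-block volumes per content class, which matches the 64 time points per condition used for classification.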

fMRI Acquisition

Imaging data were acquired on a 3.0-T Signa whole-body MRI system (GE Medical Systems, Milwaukee, WI) with a single-channel custom-made transmit/receive head coil. Head movement was minimized using a “bite bar” and additional foam padding. Prior to functional imaging, high-resolution, T2-weighted, flow-compensated spin-echo structural images (repetition time [TR] = 3000 ms; echo time [TE] = 68 ms; 0.43 × 0.43 mm in-plane resolution) were acquired in 22 3-mm-thick oblique coronal slices oriented perpendicular to the main axis of the hippocampus, allowing visualization of hippocampal subfields and MTL cortices. These high-resolution imaging parameters optimized coverage across the entire length of MTL but precluded collection of whole-brain imaging data.

Functional images were acquired using a high-resolution T2*-sensitive gradient echo spiral in/out pulse sequence (Glover and Law 2001) with the same slice locations as the structural images (TR = 4000 ms; TE = 34 ms; flip angle = 90°; field of view = 22 cm; 1.7 × 1.7 × 3.0 mm resolution). Prior to functional scanning, a high-order shimming procedure, based on spiral acquisitions, was utilized to reduce B0 heterogeneity (Kim et al. 2002). Critically, spiral in/out methods are optimized to increase signal-to-noise ratio (SNR) and blood oxygen level–dependent contrast-to-noise ratio in uniform brain regions while reducing signal loss in regions compromised by susceptibility-induced field gradients (SFG) (Glover and Law 2001), including the anterior MTL. Compared with other imaging techniques (Glover and Lai 1998), spiral in/out methods result in less signal dropout and greater task-related activation in MTL (Preston et al. 2004), allowing targeting of structures that have previously proven difficult to image due to SFG.

A total of 768 functional volumes were acquired for each participant over 8 scanning runs. To obtain a field map for correction of magnetic field heterogeneity, the first time frame of the functional time series was collected with an echo time 2 ms longer than all subsequent frames. For each slice, the map was calculated from the phase of the first 2 time frames and applied as a first-order correction during reconstruction of the functional images. In this way, blurring and geometric distortion were minimized on a per-slice basis. In addition, correction for off-resonance due to breathing was applied on a per-time-frame basis using phase navigation (Pfeuffer et al. 2002). This initial volume, together with the following 2 volumes of each scan (a total of 12 s), was then discarded to allow for T1 stabilization.
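The field-map step can be illustrated with a minimal sketch: the phase difference Δφ between two complex images whose echo times differ by ΔTE gives the off-resonance frequency Δf = Δφ / (2π ΔTE). The function name and the sign convention (phase accruing as +2π·Δf·TE) are assumptions for illustration, and the per-slice first-order correction applied during reconstruction is not reproduced.

```python
import numpy as np

DELTA_TE_S = 0.002  # the first frame's TE is 2 ms longer than later frames (from the text)

def field_map_hz(frame_long_te, frame_short_te, delta_te_s=DELTA_TE_S):
    """Off-resonance map in Hz from two complex-valued images of the same slice.

    Assumes phase accrues as +2*pi*f*TE, so the phase difference between the
    longer- and shorter-TE images is 2*pi*f*delta_te, wrapped into (-pi, pi].
    """
    # The conjugate product cancels the common phase and leaves the difference.
    dphi = np.angle(frame_long_te * np.conj(frame_short_te))
    return dphi / (2.0 * np.pi * delta_te_s)
```

With ΔTE = 2 ms, phase wrapping limits the unambiguous range of this estimate to ±1/(2ΔTE) = ±250 Hz.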

Preprocessing of fMRI Data

Data were preprocessed using SPM5 (Wellcome Department of Imaging Neuroscience, London, UK) and custom Matlab routines. An artifact repair algorithm (http://cibsr.stanford.edu/tools/ArtRepair/ArtRepair.htm [date last accessed; 28 August 2007]) was first implemented to detect and remove noise from individual functional volumes using linear interpolation of the immediately preceding and following volumes in the time series. Functional images were then corrected to account for the differences in slice acquisition times by interpolating the voxel time series using sinc interpolation and resampling the time series using the center slice as a reference point. Functional volumes were then realigned to the first volume in the time series to correct for motion. A mean T2*-weighted volume was computed during realignment, and the T2-weighted anatomical volume was coregistered to this mean functional volume. Functional volumes were high pass filtered to remove low frequency drift (longer than 128 s) before being converted to percentage signal change in preparation for univariate statistical analyses or z-scored in preparation for multivoxel pattern analysis (MVPA).
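The interpolation-based repair and the two normalizations mentioned above can be sketched in NumPy as follows. The helper names are hypothetical, the rule ArtRepair uses to flag bad volumes is not reproduced (flagged indices are taken as input), and normalizing each voxel over the whole array, rather than, say, separately per run, is an assumption.

```python
import numpy as np

def repair_volumes(ts, bad):
    """Replace flagged volumes of a (time x voxel) array by linear interpolation
    between the nearest unflagged volumes before and after them."""
    ts = np.asarray(ts, dtype=float)
    bad_idx = np.array(sorted(set(bad)), dtype=int)
    good_idx = np.setdiff1d(np.arange(ts.shape[0]), bad_idx)
    out = ts.copy()
    for v in range(ts.shape[1]):
        out[bad_idx, v] = np.interp(bad_idx, good_idx, ts[good_idx, v])
    return out

def percent_signal_change(ts):
    """Express each voxel's time series as percent deviation from its own mean."""
    mean = ts.mean(axis=0)
    return 100.0 * (ts - mean) / mean

def zscore(ts):
    """Standardize each voxel's time series to zero mean and unit variance."""
    return (ts - ts.mean(axis=0)) / ts.std(axis=0)
```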

Univariate fMRI Analyses

Voxel-based statistical analyses were conducted at the individual participant level according to a general linear model (Worsley and Friston 1995). A statistical model was calculated with regressors for novel and repeated miniblocks for each stimulus class. In this model, each miniblock was treated as a boxcar, which was convolved with a canonical hemodynamic response function.
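A minimal sketch of a boxcar regressor convolved with a canonical hemodynamic response function and fit by ordinary least squares. The double-gamma parameters (response shape 6, undershoot shape 16, undershoot ratio 1/6) mirror commonly used SPM defaults but are written out here as assumptions rather than a call into SPM; onsets, run length, the 0.1-s convolution grid, and sampling at each scan's onset (rather than a reference slice) are illustrative simplifications.

```python
import math
import numpy as np

def double_gamma_hrf(t):
    """Double-gamma HRF (assumed SPM-like shape): a gamma(6, 1) response minus
    1/6 of a gamma(16, 1) undershoot, scaled to unit sum."""
    t = np.asarray(t, dtype=float)
    def gamma_pdf(x, shape):
        return np.where(x > 0, x ** (shape - 1) * np.exp(-x) / math.gamma(shape), 0.0)
    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()

def block_regressor(onsets_s, duration_s, n_scans, tr_s=4.0, dt_s=0.1):
    """Boxcar for the given miniblock onsets, convolved with the HRF on a fine
    time grid and then sampled once per TR."""
    n_fine = int(round(n_scans * tr_s / dt_s))
    boxcar = np.zeros(n_fine)
    for onset in onsets_s:
        start = int(round(onset / dt_s))
        stop = int(round((onset + duration_s) / dt_s))
        boxcar[start:stop] = 1.0
    hrf = double_gamma_hrf(np.arange(0.0, 32.0, dt_s))
    predicted = np.convolve(boxcar, hrf)[:n_fine]
    return predicted[:: int(round(tr_s / dt_s))]

# Toy GLM: one 16-s miniblock regressor plus a constant term.
reg = block_regressor(onsets_s=[20.0, 100.0], duration_s=16.0, n_scans=50)
X = np.column_stack([reg, np.ones_like(reg)])
y = 2.5 * reg + 10.0                       # noiseless synthetic voxel
beta, *_ = np.linalg.lstsq(X, y, rcond=None)
```

On the noiseless toy voxel, the fitted betas recover the simulated amplitude (2.5) and baseline (10.0).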

To implement group-level analyses, we used a nonlinear diffeomorphic transformation method (Vercauteren et al. 2009) implemented in the software package MedINRIA (version 1.8.0; ASCLEPIOS Research Team, France). Specifically, each participant's anatomically defined MTL ROIs were aligned with those of a representative “target” subject using a diffeomorphic deformation algorithm that implements a biologically plausible transformation respecting the boundaries dictated by the ROIs. Anatomically defined ROIs were demarcated on the T2-weighted high-resolution in-plane structural images for each individual participant, using techniques adapted for analysis and visualization of MTL subregions (Pruessner et al. 2000, 2002; Zeineh et al. 2000, 2003; Olsen et al. 2009; Preston et al. 2010). A single participant's structural image was then chosen as the target, and all other participants' images were warped into a common space in a manner that maintained the between-region boundaries. To maximize the accuracy of registration within local regions and minimize distortion, separate registrations were performed for left hippocampus, right hippocampus, left MTL cortex, and right MTL cortex. Compared with standard whole-brain normalization techniques, this ROI alignment or “ROI-AL-Demons” approach results in more accurate correspondence of MTL subregions across participants and higher statistical sensitivity (e.g., Kirwan and Stark 2007; Yassa and Stark 2009).

The transformation matrix generated from the anatomical data for each region was then applied to modestly smoothed (3 mm full-width at half-maximum) beta images derived from the first-level individual participant analysis modeling novel and repeated stimuli for each content class. To assess how novelty-based MTL responses vary as a function of information content, 2 anatomically based ROI approaches were implemented. For the first analysis, parameter estimates for novel and repeated blocks for each of the 5 stimulus classes were extracted from 5 anatomically defined ROIs: PRc, PHc, entorhinal cortex (ERc), anterior hippocampus, and posterior hippocampus. Group-level repeated measures analysis of variance (ANOVA) was used to test for differences in activation between novel blocks for each of the stimulus classes in each of the ROIs. Subsequent pairwise comparisons between content classes further characterized the stimulus sensitivity in each region.
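Smoothing kernels are specified by full-width at half-maximum; the corresponding Gaussian standard deviation is σ = FWHM / (2√(2 ln 2)) ≈ FWHM / 2.355. The sketch converts the 3-mm kernel to σ in millimeters and, assuming smoothing is applied on the 1.7 × 1.7 × 3.0 mm functional grid (the grid after warping to the target space may differ), to voxel units.

```python
import math

def fwhm_to_sigma(fwhm):
    """Gaussian standard deviation corresponding to a given FWHM (same units)."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

SMOOTH_FWHM_MM = 3.0
VOXEL_MM = (1.7, 1.7, 3.0)  # assumed grid: native functional voxel size

sigma_mm = fwhm_to_sigma(SMOOTH_FWHM_MM)
sigma_vox = tuple(sigma_mm / size for size in VOXEL_MM)
```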

In the present study, ERc did not demonstrate significant task-based modulation for any condition. Given the putative role of the ERc in the relay of sensory information to the hippocampus (Knierim et al. 2006; Manns and Eichenbaum 2006), the lack of task-based modulation despite the diversity of stimulus content may be somewhat surprising. To address the possibility that signal dropout in ERc might account for these null findings, we calculated the SNR observed during the baseline task within each anatomical ROI. Pairwise comparisons between ROIs revealed that posterior MTL regions exhibited higher SNR relative to anterior regions (all P < 0.01); posterior hippocampus had the highest SNR (mean = 9.17, standard error [SE] = 0.30), followed by PHc (7.06 ± 0.30), anterior hippocampus (5.82 ± 0.21), and finally ERc (3.03 ± 0.21) and PRc (2.80 ± 0.18). Notably, SNR within ERc and PRc did not significantly differ (P > 0.2); yet, the present findings reveal above-baseline responding to multiple experimental conditions in PRc. Thus, signal dropout in anterior MTL remains a possible but inconclusive explanation for our lack of findings in ERc.
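The text does not state how SNR was computed. One common definition is temporal SNR: the mean of each voxel's time series divided by its standard deviation, here restricted to baseline volumes and averaged over the voxels of an ROI. The sketch below implements that definition as an assumption, not as the authors' procedure; the function name is hypothetical.

```python
import numpy as np

def roi_tsnr(ts, baseline_mask):
    """Mean temporal SNR across the voxels of an ROI, using baseline volumes only.

    ts: (time x voxel) array; baseline_mask: boolean vector over time points.
    """
    base = np.asarray(ts, dtype=float)[np.asarray(baseline_mask, dtype=bool)]
    # Per-voxel mean over time divided by the sample standard deviation over time.
    tsnr = base.mean(axis=0) / base.std(axis=0, ddof=1)
    return float(tsnr.mean())
```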

Because of the lack of task-based modulation of ERc, we focused our subsequent analyses of MTL cortical activation on the PRc and PHc ROIs. Region × content interactions, comparing PRc with PHc and anterior with posterior hippocampus, examined whether content sensitivity differed across the anterior–posterior axis of MTL cortex and hippocampus, respectively. A parallel set of analyses assessed differences between novel and repeated blocks for each class of content. Where appropriate, alpha-level adjustment was calculated using a Huynh–Feldt correction for nonsphericity.
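For a single within-subjects factor, the repeated measures F test and the Huynh–Feldt correction can be sketched as follows. The sketch computes the Greenhouse–Geisser epsilon from the double-centered covariance of the conditions and then applies the Huynh–Feldt formula for a one-group design, capped at 1; corrected degrees of freedom are the uncorrected ones multiplied by epsilon. It handles only one factor, so the region × content interactions would need a two-factor extension, and p values (which require an F distribution, e.g. from SciPy) are omitted. The function name is hypothetical.

```python
import numpy as np

def rm_anova_oneway(data):
    """One-way repeated measures ANOVA on an (n_subjects x k_conditions) array.

    Returns F, the uncorrected (df_effect, df_error), and the Greenhouse-Geisser
    and Huynh-Feldt epsilons.
    """
    y = np.asarray(data, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_cond = n * np.sum((y.mean(axis=0) - grand) ** 2)
    ss_subj = k * np.sum((y.mean(axis=1) - grand) ** 2)
    ss_err = np.sum((y - grand) ** 2) - ss_cond - ss_subj
    df_cond, df_err = k - 1, (n - 1) * (k - 1)
    f_stat = (ss_cond / df_cond) / (ss_err / df_err)

    # Greenhouse-Geisser epsilon from the double-centered covariance matrix.
    cov = np.cov(y, rowvar=False)
    centre = np.eye(k) - np.ones((k, k)) / k
    s = centre @ cov @ centre
    gg = np.trace(s) ** 2 / ((k - 1) * np.sum(s * s))
    # Huynh-Feldt adjustment for a single-group design, capped at 1.
    hf = (n * (k - 1) * gg - 2) / ((k - 1) * (n - 1 - (k - 1) * gg))
    return f_stat, (df_cond, df_err), gg, min(hf, 1.0)
```

For example, `F, (df1, df2), gg, hf = rm_anova_oneway(data)` followed by `(hf * df1, hf * df2)` gives the Huynh–Feldt-corrected degrees of freedom.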

A second anatomical ROI approach examined the distribution of novelty-based responses across the anterior–posterior axis of MTL cortex and hippocampus. To perform this analysis, the length of MTL cortex was divided into 11 anatomical ROIs defined using the representative target participant as the model. The placement of the ROIs along the anterior–posterior axis was selected to maintain the anatomical boundary between PRc and PHc. Each ROI was 4.5-mm long; however, due to the hemispheric asymmetry in length of the parahippocampal gyrus in the model subject, the anterior-most ROI of PRc was 3-mm long in the left hemisphere and 6-mm long in the right hemisphere. Similarly, the longitudinal axis of the hippocampus was divided into 9 ROIs based on the model participant, and the placement of the ROIs was selected to maintain the anatomical boundaries between the hippocampal head and body and between the hippocampal body and tail. Each ROI was again 4.5-mm long; however, due to the particular anatomy of the model subject, the posterior-most ROI in the hippocampal tail was 3-mm long in the right hemisphere and 6-mm long in the left hemisphere. Repeated measures ANOVA assessed novelty-based activation (measured as both the response to novel stimulus blocks relative to baseline and the difference between novel and repeated blocks) as a function of content and anterior–posterior position along the axis of each structure. For both MTL cortex and hippocampus, one participant was excluded from this analysis because the slice prescription did not include the anterior-most aspect of the MTL region. For all analyses, hemisphere (left, right) was included as a within-subjects factor; however, because the effect of hemisphere did not interact significantly with any effect of interest (all P > 0.1), it is not considered in the Results. Moreover, the lack of any observable effect of hemisphere suggests that the size discrepancy between the model participant's left and right MTL ROIs had no significant impact on the observed pattern of results.

Multivariate Pattern Analysis of fMRI Data

In addition to the preceding univariate statistical analyses, we used MVPA to determine the sensitivity of MTL subregions (anterior hippocampus, posterior hippocampus, PRc, and PHc) to different forms of event content. Pattern classification analyses were implemented using the Princeton MVPA toolbox and custom code for MATLAB. MVPA was performed at the individual participant level using the functional time series in native space. Classification was performed for each anatomical ROI separately and included all voxels within each ROI.

MVPA classification was performed by first creating a regressor matrix to label each time series image according to the experimental condition to which it belonged (e.g., novel faces, novel scenes, novel visual words, etc.). Classification was restricted to novel stimulus blocks, and there was an equal number of time points in each condition in the analysis (64 time points per condition). For each anatomical ROI, we assessed how accurately the classifier could discriminate between the stimulus classes. Classification performance for each ROI for each participant was assessed using an 8-fold cross-validation procedure that implemented a regularized logistic regression algorithm (Bishop 2006; Rissman et al. 2010) to train the classifier. Data from 7 scanning runs were used for classifier training, and the remaining run was used as test data to assess the generalization performance of the trained classifier. This process was iteratively repeated 8 times, once for each of the possible configurations of training and testing runs. Ridge penalties were applied to each cross-validation procedure to provide L2 regularization. The penalties were selected based on performance during classification over a broad range of penalties, followed by a penalty optimization routine that conducted a narrower search for the penalty term that maximized classification accuracy (Rissman et al. 2010). Classifications performed for the purpose of L2 penalty selection were applied only to training data to avoid peeking at test data. The final cross-validated classification was performed once the optimal penalties were selected. The classification performances across the iterative training were then averaged to obtain the final pattern classification performance for each ROI for each participant.
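The cross-validation scheme can be sketched with a small NumPy implementation. It substitutes a plain gradient-descent multinomial logistic regression with an L2 penalty for the Princeton MVPA toolbox classifier, and it replaces the broad-then-narrow penalty search with a single grid chosen by inner leave-one-run-out cross-validation on the training runs only. Function names, learning rate, iteration count, penalty grid, and the synthetic data are all assumptions for illustration.

```python
import numpy as np

def fit_ridge_logreg(X, y, n_classes, penalty, n_iter=200, lr=0.1):
    """Multinomial logistic regression fit by batch gradient descent on
    mean cross-entropy + 0.5 * penalty * ||W||^2 (bias is not penalized)."""
    n, d = X.shape
    W, b = np.zeros((d, n_classes)), np.zeros(n_classes)
    Y = np.eye(n_classes)[y]                       # one-hot targets
    for _ in range(n_iter):
        logits = X @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        P = np.exp(logits)
        P /= P.sum(axis=1, keepdims=True)
        W -= lr * (X.T @ (P - Y) / n + penalty * W)
        b -= lr * (P - Y).mean(axis=0)
    return W, b

def predict(W, b, X):
    return np.argmax(X @ W + b, axis=1)

def leave_one_run_out(X, y, runs, n_classes, penalty):
    """Train on all runs but one, test on the held-out run; mean accuracy over folds."""
    accs = []
    for r in np.unique(runs):
        train, test = runs != r, runs == r
        W, b = fit_ridge_logreg(X[train], y[train], n_classes, penalty)
        accs.append(np.mean(predict(W, b, X[test]) == y[test]))
    return float(np.mean(accs))

def nested_cv_accuracy(X, y, runs, n_classes, penalties):
    """Outer leave-one-run-out CV; in each outer fold the penalty is chosen by an
    inner leave-one-run-out CV that sees only the training runs."""
    accs = []
    for r in np.unique(runs):
        train, test = runs != r, runs == r
        best = max(penalties, key=lambda lam: leave_one_run_out(
            X[train], y[train], runs[train], n_classes, lam))
        W, b = fit_ridge_logreg(X[train], y[train], n_classes, best)
        accs.append(np.mean(predict(W, b, X[test]) == y[test]))
    return float(np.mean(accs))

# Synthetic demo: 8 runs x 5 classes x 8 volumes (64 per class), 30 "voxels".
rng = np.random.default_rng(0)
n_runs, n_classes, per_run, n_vox = 8, 5, 8, 30
y = np.tile(np.repeat(np.arange(n_classes), per_run), n_runs)
runs = np.repeat(np.arange(n_runs), n_classes * per_run)
X = rng.normal(size=(n_classes, n_vox))[y] + rng.normal(size=(y.size, n_vox))
acc = nested_cv_accuracy(X, y, runs, n_classes, penalties=[0.01, 0.1, 1.0])
```

Keeping penalty selection inside each outer fold is what prevents the held-out run from influencing the choice of penalty.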

To more closely examine the underlying activation patterns driving MVPA classification performance, we constructed confusion matrices indicating how often the MVPA classifier categorized voxel patterns correctly and how often it confused the voxel patterns with each other class of content. The goal of this analysis was to determine the distribution of classification errors for each class of stimuli (i.e., if a stimulus block was not correctly categorized, what stimulus class did the classifier identify it as). To do so, we constructed confusion matrices for each ROI from each participant and averaged them across the group. We then normalized each row of a given confusion matrix (representing one stimulus class) by dividing each cell of the matrix by the proportion of correctly classified test patterns for that stimulus category. This normalization procedure yielded values along the matrix diagonal equal to 1, and the resulting off-diagonal values indicate confusability relative to the correct class of content. For example, stimulus classes that were highly confusable with the correct stimulus class would also yield values close to 1.
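The row normalization described above amounts to dividing each row of the group-averaged confusion matrix by its own diagonal entry. A minimal sketch with hypothetical helper names; rows are true classes and columns are predicted classes (an assumed convention), and a class with no correct classifications would leave its row undefined.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows = true class, columns = predicted class; each row holds the proportion
    of that class's test patterns assigned to each predicted class."""
    cm = np.zeros((n_classes, n_classes))
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm / cm.sum(axis=1, keepdims=True)

def normalize_by_diagonal(cm):
    """Divide each row by its diagonal (proportion correct for that class), so the
    diagonal becomes 1 and off-diagonal cells express confusability relative to
    correct classification."""
    cm = np.asarray(cm, dtype=float)
    return cm / np.diag(cm)[:, None]
```

At the group level, as in the text, participants' matrices would be averaged first, e.g. `normalize_by_diagonal(np.mean(np.stack(cms), axis=0))`.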

To determine whether the level of confusability between stimulus classes was significantly different from chance, we scrambled the MVPA regressor matrix for each ROI for each participant so that each image of the time series was given a random condition label. Using Monte Carlo simulation (1000 iterations), we then created a null distribution of classification performance for each stimulus class based on the randomly labeled data as well as a null distribution of classifier confusion matrices. Classifier confusion values that lay outside of the confidence intervals based on the null distributions were determined to be significant. The alpha level of the confidence intervals was chosen based on Bonferroni correction for each of 80 statistical tests of significance performed across all anatomical ROIs (α = 10⁻³).
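A generic sketch of the label-scrambling procedure: shuffle condition labels, recompute the statistic of interest on each of 1000 iterations, and ask whether the observed value falls outside the central (1 − α) interval of the resulting null distribution. Whether the original intervals were one- or two-sided is not stated; the two-sided version here is an assumption, as are the helper names and the toy statistic.

```python
import numpy as np

ALPHA = 1e-3  # Bonferroni-based level stated in the text for 80 tests

def permutation_null(labels, statistic, n_iter=1000, seed=0):
    """Null distribution: statistic recomputed on n_iter random permutations of labels."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    return np.array([statistic(rng.permutation(labels)) for _ in range(n_iter)])

def outside_null_interval(observed, null, alpha=ALPHA):
    """True if observed lies outside the central (1 - alpha) interval of the null."""
    lo, hi = np.quantile(null, [alpha / 2.0, 1.0 - alpha / 2.0])
    return bool(observed < lo or observed > hi)

# Toy example: difference in means between two labelled groups of values.
values = np.r_[np.zeros(20), np.full(20, 5.0)]
labels = np.r_[np.zeros(20, dtype=int), np.ones(20, dtype=int)]

def mean_difference(lab):
    return values[lab == 1].mean() - values[lab == 0].mean()

null = permutation_null(labels, mean_difference)
observed = mean_difference(labels)
```

With 1000 iterations and α = 10⁻³, the two-sided interval bounds sit essentially at the minimum and maximum of the null, so an observed value must be more extreme than nearly every simulated value to count as significant.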

Representational Similarity Analysis of fMRI Data

To more precisely characterize the underlying representational structure for each form of stimulus content within MTL subregions, we examined responses to individual blocks of novel content using RSA (Kriegeskorte and Bandettini 2007; Kriegeskorte et al. 2008). We compared the patterns evoked by individual stimuli within and across content classes by considering the voxelwise responses observed for each novel miniblock viewed by the participants. Each novel miniblock contained the same configuration of 8 stimuli across participants (though the miniblocks were seen in different orders across participants). Here, we considered each miniblock to represent an “exemplar” of a content class (Kriegeskorte et al. 2008) and constructed a separate general linear model with individual regressors for every miniblock of novel content. We first performed this analysis within the anatomically defined PHc, PRc, posterior hippocampus, and anterior hippocampus ROIs. To understand how representational structure changes as a function of position along the anterior–posterior axis of MTL, we also performed this analysis within each anterior–posterior segment of MTL cortex and hippocampus.

Representational dissimilarity matrices (RDMs) were constructed for each MTL subregion for each individual participant. Each cell in the RDM indicates the Pearson linear correlation distance (1 − r) between voxelwise parameter estimates for any given pair of novel miniblocks. Individual participants' RDMs were averaged across the group. To better visualize the dissimilarities between stimulus class exemplars, we applied metric multidimensional scaling (MDS) to the group-averaged RDMs, which resulted in a 2D characterization of the representational space of each region (Edelman 1998; Kriegeskorte et al. 2008). Metric MDS minimizes Kruskal's normalized “STRESS1” criterion to represent each stimulus class exemplar as a point in, here, 2D space so that the rank order of linear distances between points matches the rank order of dissimilarities between exemplars in each RDM.
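Constructing a correlation-distance RDM and projecting it into two dimensions can be sketched as below. As a swap, the sketch uses classical (Torgerson) MDS, which has a closed-form eigendecomposition solution, rather than the iterative stress-minimizing MDS described in the text; the resulting configurations can differ. Function names are hypothetical.

```python
import numpy as np

def correlation_rdm(patterns):
    """RDM of Pearson correlation distance (1 - r) between the rows of an
    (exemplar x voxel) array of parameter estimates."""
    return 1.0 - np.corrcoef(patterns)

def classical_mds(rdm, n_dims=2):
    """Classical (Torgerson) MDS: double-center the squared dissimilarities and
    scale the leading eigenvectors by the square roots of their eigenvalues."""
    d2 = np.asarray(rdm, dtype=float) ** 2
    n = d2.shape[0]
    j = np.eye(n) - np.ones((n, n)) / n
    b = -0.5 * j @ d2 @ j
    evals, evecs = np.linalg.eigh(b)               # ascending eigenvalues
    order = np.argsort(evals)[::-1][:n_dims]       # keep the largest n_dims
    return evecs[:, order] * np.sqrt(np.clip(evals[order], 0.0, None))
```

A group-level RDM, as in the text, would be the element-wise mean of the participants' RDMs, e.g. `np.mean(np.stack(rdms), axis=0)`, before projection.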

Based on these 2D representations of the RDMs, we calculated the mean within-class linear distance for each form of stimulus content as well as the mean cross-class linear distances for each pair of content classes. This analysis allowed us to determine whether exemplars from the same class of stimulus content (e.g., face miniblock A vs. face miniblock B) were clustered together in the representational structure of a given MTL subregion and whether the representation of those exemplars was distinct from exemplars from other content classes (e.g., the distance between face miniblock A and scene miniblock A). Monte Carlo simulation was used to assess whether within-class and cross-class linear distances were significantly different from the distances expected by chance. For each of 1000 iterations, the exemplar labels for each row and column of individual participant RDMs were randomly scrambled. These scrambled RDMs were averaged across participants and transformed using metric MDS to obtain null distributions of within-class and cross-class linear distances. Linear distances that lay outside of confidence intervals based on the null distributions were determined to be significant. The alpha level of the confidence intervals was chosen based on Bonferroni correction for each of 15 statistical tests of significance performed within all anatomical ROIs (α = 10⁻²).
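Given 2D coordinates and a class label for each exemplar, the within-class and cross-class summaries reduce to averages over the pairwise Euclidean distance matrix. For 5 content classes this yields 5 within-class and 10 cross-class values, presumably the 15 tests referred to above. The helper is hypothetical, and the null distribution (which in the text scrambles RDM labels before averaging and MDS) is not reproduced here.

```python
import numpy as np
from itertools import combinations

def class_distances(coords, labels):
    """Mean within-class and cross-class Euclidean distances between exemplar
    points (e.g. 2D MDS coordinates). Returns dicts keyed by class and class pair."""
    coords = np.asarray(coords, dtype=float)
    labels = np.asarray(labels)
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    classes = sorted(set(labels.tolist()))
    within, cross = {}, {}
    for c in classes:
        idx = np.flatnonzero(labels == c)
        iu = np.triu_indices(idx.size, k=1)        # each unordered pair once
        within[c] = float(dist[np.ix_(idx, idx)][iu].mean())
    for a, b in combinations(classes, 2):
        cross[(a, b)] = float(dist[np.ix_(labels == a, labels == b)].mean())
    return within, cross
```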

Results

Behavioral Performance

Percent correct performance on the target detection task averaged 97.4 (SE = 0.41) for spoken words, 97.5 (0.68) for faces, 98.1 (0.34) for scenes, 96.5 (0.48) for sounds, and 98.7 (0.23) for visual words. A repeated measures ANOVA revealed an effect of block type (novel, repeated: F1,18 = 7.25, P = 0.02), an effect of stimulus content (spoken words, faces, scenes, sounds, and visual words: F4,72 = 3.99, P = 0.01), but no interaction (F < 1.0). Performance for novel blocks (97.9, 0.21) was superior to performance for repeated blocks (97.4, 0.35). Pairwise comparisons revealed superior performance for visual word blocks relative to spoken word, face, and sound blocks (all t > 2.20, P < 0.05) as well as superior performance during scene blocks relative to spoken word and sound blocks (all t > 2.10, P < 0.05).

Analyses of reaction times (RTs) revealed effects of novelty (F1,18 = 29.58, P < 0.001), stimulus content (F4,72 = 150.32, P < 0.001), and an interaction between novelty and content (F4,72 = 3.12, P = 0.04). RTs for repeated blocks (670 ms, SE = 20 ms) were faster than those for novel blocks (699 ms, 23). Significant differences in RTs were observed between all stimulus classes (all t > 2.65, P < 0.05), with the fastest RTs for visual word blocks (512 ms, 19), followed by scene (570 ms, 21), face (603 ms, 26), spoken word (832 ms, 23), and sound (895 ms, 34) blocks. The novelty × content interaction reflected faster RTs for repeated than for novel blocks in all stimulus classes (all t > 2.40, P < 0.05) except sounds, for which RTs did not differ between repeated and novel blocks (t18 = 1.69). Performance on the baseline arrows task averaged 96.6% correct (SE = 0.75%).

Content Sensitivity within Anatomically Defined MTL ROIs

We first assessed whether activation during novel stimulus blocks varied based on content using a standard univariate analysis approach employed in several prior studies examining content-specific responding in MTL. Parameter estimates for novel blocks from each anatomically defined MTL ROI (ERc, PRc, PHc, anterior hippocampus, and posterior hippocampus) were subjected to repeated measures ANOVA for an effect of content. Within MTL cortex, significant task-based modulation was observed only in PHc and PRc; we did not observe significant modulation of ERc activation for any condition or stimulus class (all F < 1), and therefore, we did not consider this region in any further analyses.
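The content effect within each ROI is a one-way repeated measures ANOVA across the 5 content classes. The sketch below assumes a participants × conditions array of parameter estimates and applies no sphericity correction. With 19 participants and 5 classes, it yields the degrees of freedom (4, 72) reported in this section. The function name is hypothetical.

```python
import numpy as np

def rm_anova_oneway(data):
    """One-way repeated measures ANOVA (no sphericity correction).

    data: (n_participants, k_conditions), e.g. novel-block parameter estimates
    for the 5 content classes in one ROI. Returns (F, df_effect, df_error).
    """
    data = np.asarray(data, dtype=float)
    n, k = data.shape
    grand = data.mean()
    ss_cond = n * ((data.mean(axis=0) - grand) ** 2).sum()   # between-condition variance
    ss_subj = k * ((data.mean(axis=1) - grand) ** 2).sum()   # between-participant variance
    ss_total = ((data - grand) ** 2).sum()
    ss_err = ss_total - ss_cond - ss_subj                    # condition x participant residual
    df_cond, df_err = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_err / df_err)
    return f, df_cond, df_err
```

A P value can then be obtained from the F distribution, for example with `scipy.stats.f.sf(F, df_effect, df_error)`. Removing participant variance from the error term is what distinguishes this from a between-subjects ANOVA.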

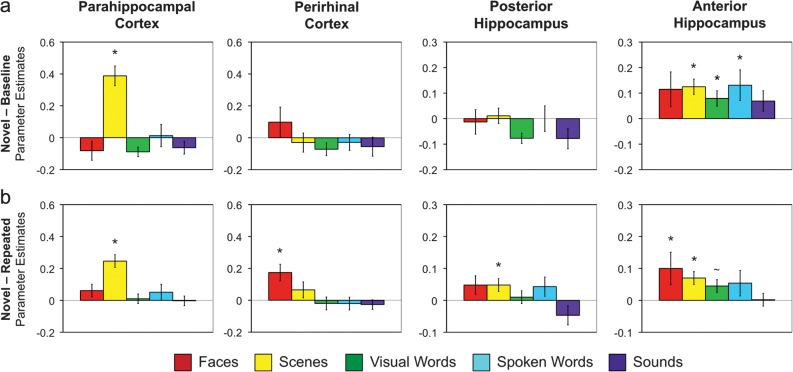

PHc activation during novel stimulus blocks demonstrated a significant main effect of content (F4,72 = 22.82, P < 0.001). Among the 5 stimulus classes, only novel scenes elicited a significant response above baseline (t18 = 5.98, P < 0.001; Fig. 2a). Pairwise comparisons revealed that PHc activation for novel scenes was greater than activation for novel stimuli of all other stimulus classes (all t > 5.95, P < 0.001). Similar effects were observed for a parallel analysis assessing differences in PHc activation between novel and repeated stimuli for each class of content (Fig. 2b). The difference in activation for novel relative to repeated stimuli demonstrated a significant effect of content (F4,72 = 7.39, P < 0.001), with the novel–repeated difference being significant only for scenes (t18 = 5.92, P < 0.001).

Figure 2.

Response to novel event content in anatomically defined MTL ROIs (PHc, PRc, posterior hippocampus, and anterior hippocampus). (a) Parameter estimates representing activation during novel content blocks relative to baseline. Error bars represent standard error of the mean. Asterisks indicate significant differences from baseline (P < 0.05); tilde indicates a trend for difference (P < 0.10). (b) Difference in parameter estimates between novel and repeated content blocks. Error bars represent standard error of the mean. Asterisks indicate significant differences between novel and repeated blocks (P < 0.05); tilde indicates a trend for difference (P < 0.10).

In PRc, activation during novel stimulus blocks was not different from baseline (all t < 1.1) and did not vary based on information content (F4,72 = 2.01, P = 0.12; Fig. 2a). When considering the difference in activation between novel and repeated blocks, however, a significant effect of content was observed in PRc (F4,72 = 4.03, P < 0.01; Fig. 2b), with the novel–repeated difference being significant only for faces (t18 = 3.84, P = 0.001). Pairwise comparisons revealed that the novel–repeated difference in activation was greater for faces than for visual words, spoken words, and sounds (all t > 3.0, P < 0.05), with a trend for a difference from scenes (t18 = 1.89, P = 0.08). Finally, the apparent difference in content sensitivity in PHc and PRc was confirmed by a significant region × content interaction, both when considering responses to novel stimuli in isolation (F4,72 = 24.96, P < 0.001) and when considering differences between novel and repeated stimuli (F4,72 = 4.90, P = 0.005).
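The region × content interaction tests here come from a two-way, fully within-participant design. The sketch below computes only the interaction F, using the participant × region × content residual as the error term, and assumes a (participants, regions, content) array with no sphericity correction. With 19 participants, 2 regions, and 5 classes, this gives df = (4, 72). The function name is hypothetical.

```python
import numpy as np

def rm_interaction_f(data):
    """Interaction F for a two-way within-participant design.

    data: (n_participants, a_levels, b_levels), e.g. region x content.
    Returns (F, df_interaction, df_error); no sphericity correction.
    """
    y = np.asarray(data, dtype=float)
    n, a, b = y.shape
    gm = y.mean()
    m_s = y.mean(axis=(1, 2))[:, None, None]   # participant means
    m_a = y.mean(axis=(0, 2))[None, :, None]   # factor A (region) means
    m_b = y.mean(axis=(0, 1))[None, None, :]   # factor B (content) means
    m_ab = y.mean(axis=0)[None, :, :]          # A x B cell means
    m_sa = y.mean(axis=2)[:, :, None]          # participant x A means
    m_sb = y.mean(axis=1)[:, None, :]          # participant x B means
    ss_ab = n * ((m_ab - m_a - m_b + gm) ** 2).sum()
    resid = y - m_sa - m_sb - m_ab + m_a + m_b + m_s - gm   # participant x A x B residual
    ss_sab = (resid ** 2).sum()
    df_ab = (a - 1) * (b - 1)
    df_sab = (n - 1) * df_ab
    return (ss_ab / df_ab) / (ss_sab / df_sab), df_ab, df_sab
```

As a sanity check, for a 2 × 2 design this F equals the squared one-sample t statistic on each participant's difference of differences.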

Within hippocampus, novelty-based activation was observed primarily in the anterior extent. Specifically, in anterior hippocampus, activation during novel stimulus blocks did not differ based on information content (F < 1.0; Fig. 2a) and was significantly above baseline for scenes (t18 = 4.32, P < 0.001), spoken words (t18 = 2.36, P < 0.05), and visual words (t18 = 2.47, P < 0.05). When comparing anterior hippocampus activation for novel relative to repeated stimuli, again there were no significant differences across stimulus content (F4,72 = 1.16, P = 0.33; Fig. 2b), with significant effects observed for face (t18 = 2.06, P = 0.05) and scene (t18 = 3.58, P < 0.01) stimuli.

By contrast, posterior hippocampal activation during novel stimulus blocks did not differ from baseline for any class of stimuli (all t < 0.5; Fig. 2a). Although posterior hippocampal activation differed significantly between novel and repeated scenes (t18 = 2.16, P = 0.04), there was only a trend for an effect of content (F4,72 = 2.71, P = 0.06; Fig. 2b), and no pairwise comparison between content classes reached significance (all t < 1.5). The apparent difference in novelty-based responding in anterior and posterior hippocampus was supported by a main effect of region when considering responses to novel stimuli in isolation (F4,72 = 39.22, P < 0.001) and when comparing differences between novel and repeated stimuli (F4,72 = 20.13, P < 0.001); however, because this finding was not accompanied by a region × content interaction, interpretive caution is warranted. Finally, anterior hippocampus demonstrated a different pattern of content sensitivity from MTL cortical regions. Relative to PHc, this difference was reflected in a significant region × content interaction for novel stimuli (F4,72 = 38.59, P < 0.001) and for the difference between novel and repeated stimuli (F4,72 = 7.54, P < 0.001); relative to PRc, region × content interactions showed only trends (novel: F4,72 = 2.08, P = 0.10; novel–repeated: F4,72 = 2.26, P = 0.09).

Distribution of Content Sensitivity across PRc and PHc

The preceding results assume that content sensitivity is uniform within anatomically defined MTL subregions. It is possible, however, that content sensitivity does not adhere to discrete anatomical boundaries but rather is distributed across anatomical subregions. This possibility would further suggest that content sensitivity within anatomical subregions should be heterogeneous. To address this hypothesis, we examined content-sensitive novelty responses in MTL cortex and hippocampus as a function of position along the anterior–posterior axis of each structure (Figs 3 and 4). (For a similar analysis performed within PRc and PHc individually, see Supplementary Results.)

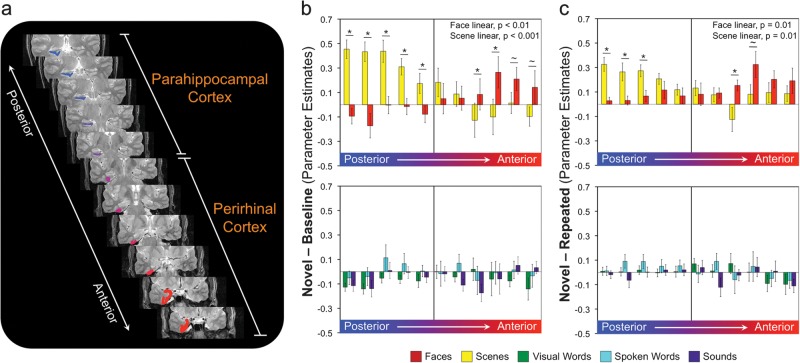

Figure 3.

Responses to novel event content along the anterior–posterior axis of the parahippocampal gyrus. (a) Coronal slices through parahippocampal gyrus with anatomical ROIs represented as color-coded regions in the right hemisphere. (b) Top: parameter estimates for novel face and scene blocks relative to baseline in each of the anatomically defined ROIs along the anterior–posterior axis of MTL cortex. Bottom: parameter estimates for novel visual word, sound, and spoken word blocks relative to baseline. (c) Top: parameter estimates for novel–repeated face and scene blocks along the anterior–posterior axis of MTL cortex. Bottom: novel–repeated parameter estimates for visual words, sounds, and spoken words. Error bars represent standard error of the mean. Asterisks indicate significant pairwise differences (P < 0.05); tilde indicates a trend for difference (P < 0.10).

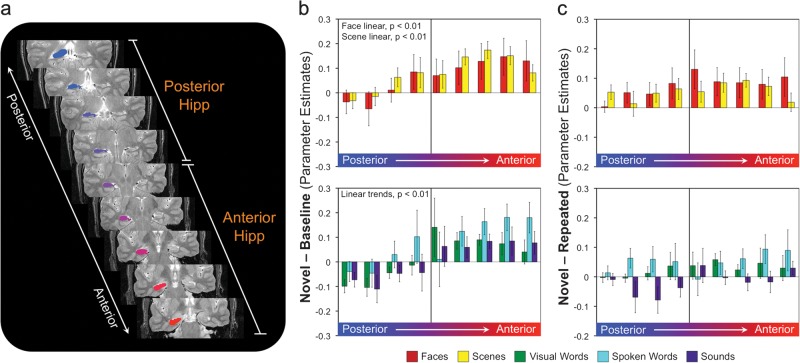

Figure 4.

Responses to novel event content along the anterior–posterior axis of the hippocampus. (a) Coronal slices through hippocampus with anatomical ROIs represented as color-coded regions in the right hemisphere. (b) Top: parameter estimates for novel face and scene blocks relative to baseline in each of the anatomically defined ROIs along the anterior–posterior axis of hippocampus. Bottom: parameter estimates for novel visual word, sound, and spoken word blocks relative to baseline. (c) Top: parameter estimates for novel–repeated face and scene blocks along the anterior–posterior axis of hippocampus. Bottom: novel–repeated parameter estimates for visual words, sounds, and spoken words. Error bars represent standard error of the mean. Asterisks indicate significant pairwise differences (P < 0.05); tilde indicates a trend for difference (P < 0.10).

Within MTL cortex, activation for novel stimuli demonstrated a significant main effect of content (F4,68 = 4.89, P < 0.005) and an interaction between anterior–posterior position and content (F40,680 = 6.15, P < 0.001; Fig. 3b). The main effect of content was reflected by greater activation for novel scenes relative to spoken words, visual words, and sounds (all t > 2.9, P < 0.005). Moreover, responses to novel scenes demonstrated a significant linear trend along the anterior–posterior axis (F1,17 = 34.50, P < 0.001), with maximal activation in the posterior MTL cortex and decreasing as one moves anteriorly. The opposite linear trend was observed for novel faces (F1,17 = 10.07, P < 0.01), with maximal activation in anterior regions and decreasing as one moves posteriorly. No other class of content demonstrated significant linear trends along the anterior–posterior axis of MTL cortex (all F < 1.6).
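The linear trends along the anterior–posterior axis can be tested with a polynomial contrast. A minimal sketch follows, assuming one row per participant with ROI positions in a fixed spatial order and evenly spaced contrast weights. Each participant's data are reduced to one contrast score, the scores are tested against zero, and F(1, n − 1) = t². With 18 participants this gives the F1,17 degrees of freedom reported here. The function name is hypothetical.

```python
import numpy as np

def linear_trend_f(data):
    """Linear trend across ordered positions, tested across participants.

    data: (n_participants, n_positions), positions ordered along the axis
    (e.g. posterior -> anterior). Returns (F, 1, n - 1, mean contrast score);
    a positive mean means values increase along the given order.
    """
    y = np.asarray(data, dtype=float)
    n, k = y.shape
    weights = np.arange(k) - (k - 1) / 2.0     # centred, evenly spaced linear weights
    scores = y @ weights                        # one contrast score per participant
    t = scores.mean() / (scores.std(ddof=1) / np.sqrt(n))
    return t ** 2, 1, n - 1, scores.mean()
```

Scaling the weights does not change t, so any evenly spaced, zero-sum weights give the same F. The sign of the mean score distinguishes the opposing scene (posterior-maximal) and face (anterior-maximal) gradients.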

When considering the difference in activation between novel and repeated blocks, a similar distribution was observed across MTL cortex, with a significant main effect of content (F4,68 = 4.10, P < 0.01) as well as a significant interaction between anterior–posterior position and content (F40,680 = 2.80, P < 0.05; Fig. 3c). The effect of content in this case was reflected by a greater difference between novel and repeated stimuli for scenes and faces relative to all other forms of stimulus content (all t > 1.7, P < 0.05). The interaction between position and content was reflected by a decreasing scene response from posterior to anterior (F1,17 = 8.36, P = 0.01) and an increasing face response from posterior to anterior (F1,17 = 8.42, P = 0.01). No other class of content demonstrated significant linear trends (all F < 2.1). Notably, these functional gradients in MTL cortex were not the result of variability across participants in the location of the anterior–posterior boundary between PRc and PHc (see Supplementary Results).

Distribution of Content Sensitivity across Anterior and Posterior Hippocampus

We performed similar analyses examining activation for novel stimuli along the anterior–posterior axis of the hippocampus (Fig. 4). Within hippocampus, we observed a main effect of anterior–posterior position (F8,136 = 6.88, P < 0.001) but did not observe an effect of content (F4,68 = 1.01, P = 0.39) or a content × position interaction (F32,544 = 1.02, P = 0.40). Significant linear trends were observed for all content classes (all F1,17 > 11.81, P < 0.01), with activation for novel stimuli increasing from posterior to anterior hippocampus (Fig. 4b). When considering the difference in activation for novel and repeated stimuli (Fig. 4c), only a trend for an effect of position (F8,136 = 2.67, P = 0.06) was observed, reflecting greater novel–repeated differences in the 4 anterior-most hippocampal positions compared with the 3 most posterior positions (all t > 2.4). These differences were not reflected in a significant linear trend for any class of content (all F1,17 < 2.35). For similar analyses performed at the level of individual participants, see Supplementary Results.

Multivariate Pattern Classification in MTL Subregions

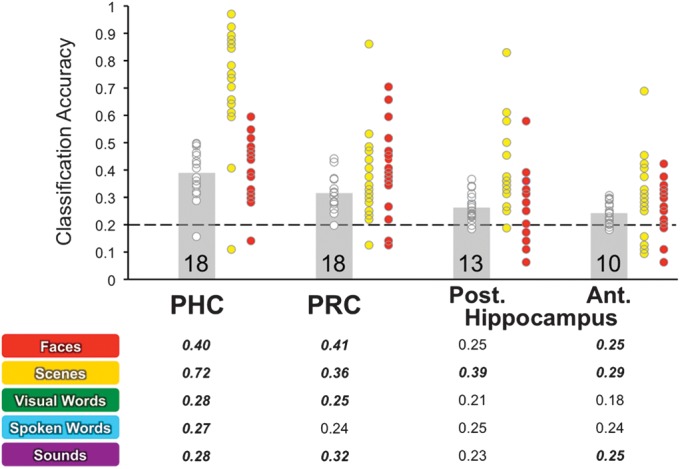

Using MVPA, we examined whether each MTL subregion carries sufficient information about a specific class of content to distinguish it from other categories of information, providing an additional measure of content sensitivity distinct from standard univariate measures. For each region—PHc, PRc, anterior hippocampus, and posterior hippocampus—we trained a classifier to differentiate between novel stimulus blocks from each of the 5 content classes and tested classification accuracy using a cross-validation procedure. Overall, classification accuracy (Fig. 5, gray bars) was significantly above chance (20%) using data from each MTL subregion (all t18 > 4.67, P < 0.001).
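The classifier type and cross-validation folds are not specified in this section, so the sketch below is a hypothetical stand-in that illustrates only the logic of cross-validated 5-way classification. It uses leave-one-run-out folds and a correlation-based nearest-centroid rule, and it assumes every content class appears in each training fold. Chance is 1/5 = 20%. The function name is hypothetical.

```python
import numpy as np

def correlation_classifier_cv(patterns, labels, runs):
    """Leave-one-run-out cross-validated nearest-centroid classification.

    Hypothetical stand-in classifier: each test pattern receives the label of the
    training-class centroid it correlates with most strongly.
    patterns: (n_blocks, n_voxels); labels, runs: length n_blocks.
    Returns (overall accuracy, true labels, predicted labels).
    """
    patterns = np.asarray(patterns, dtype=float)
    labels = np.asarray(labels)
    runs = np.asarray(runs)
    classes = np.unique(labels)
    true, pred = [], []
    for held_out in np.unique(runs):
        train, test = runs != held_out, runs == held_out
        # Class centroids estimated from training runs only
        centroids = np.stack([patterns[train & (labels == c)].mean(axis=0) for c in classes])
        for x, y in zip(patterns[test], labels[test]):
            r = [np.corrcoef(x, c)[0, 1] for c in centroids]
            true.append(y)
            pred.append(classes[int(np.argmax(r))])
    true, pred = np.array(true), np.array(pred)
    return float(np.mean(true == pred)), true, pred
```

Returning the true and predicted labels alongside accuracy makes it easy to build the per-class hit rates and confusion matrices reported later in this section.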

Figure 5.

MVPA classification accuracy in anatomically defined MTL subregions. Top: overall classification accuracy across the 5 classes of event content in each anatomical region. Gray bars indicate the overall mean classification accuracy across participants. Chance classification performance is indicated by the dashed line. White circles represent overall classification accuracy for individual participants. Numbers indicate the number of individual participants with above chance classification accuracy. Yellow circles indicate individual participant accuracies for scenes, and red circles indicate individual participant accuracies for faces. Bottom: classification accuracies for each content class expressed as the proportion of hits across participants. Significant classification accuracy is indicated by bold/italics.

We also determined the number of participants whose overall classification performance lay significantly outside of an assumed binomial distribution of performance given a theoretical 20% chance-level accuracy. Overall performance in the top 5% of the binomial distribution was considered above chance. The binomial test revealed that the number of participants with above chance classification performance was greater in PRc (n = 18) and PHc (n = 18) than in anterior (n = 13) and posterior hippocampus (n = 10). Superior classification performance in MTL cortical regions relative to hippocampus was further revealed by repeated measures ANOVA assessing the difference in classification accuracy across regions. A significant main effect of region was observed when comparing classification accuracy for anterior hippocampus with PRc (F1,18 = 42.84, P < 0.001) and PHc (F1,18 = 90.53, P < 0.001) and when comparing classification accuracy for posterior hippocampus with PRc (F1,18 = 23.85, P < 0.001) and PHc (F1,18 = 50.68, P < 0.001).
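The per-participant binomial criterion can be computed exactly. The sketch below finds the smallest number of correct classifications whose upper-tail probability under 20% chance is at most 0.05. The number of test patterns per participant is not given in this section, so `n_tests` is an assumed input. For example, with a hypothetical 10 test patterns, 5 or more correct classifications would be needed. The function names are hypothetical.

```python
from math import comb

def binomial_upper_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k, n + 1))

def critical_correct(n_tests, chance=0.2, alpha=0.05):
    """Smallest number of correct classifications in the top alpha of the
    chance-level binomial distribution (None if no count qualifies)."""
    for k in range(n_tests + 1):
        if binomial_upper_tail(k, n_tests, chance) <= alpha:
            return k
    return None
```

Dividing the returned count by `n_tests` gives the accuracy a single participant must exceed to count toward the above-chance totals shown in Figure 5.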

We also considered individual classification accuracies for each class of information content to determine whether certain classes of content evoked more consistent and meaningful patterns of activation within MTL subregions than others and whether classification of individual classes of content differed by region (Fig. 5). While classification accuracy in PHc was significantly above chance for all classes of stimulus content (all t18 > 2.91, P < 0.01), repeated measures ANOVA revealed a significant main effect of content (F4,72 = 53.23, P < 0.001), with classification accuracy for novel scenes being greater than all other classes of content (all t18 > 7.51, P < 0.001) and classification accuracy for novel faces being greater than that for visual words, spoken words, and sounds (all t18 > 3.71, P < 0.001).

In PRc, classification accuracy exceeded chance for all stimulus classes (all t > 2.90, P < 0.05) except spoken words (t18 = 1.81, P = 0.09). A significant main effect of content on classification accuracy was also observed in PRc (F4,72 = 6.31, P < 0.001), with greater classification accuracy for novel faces and scenes relative to visual words and spoken words (all t > 2.60, P < 0.05) and greater accuracy for novel sounds relative to visual words (t18 = 2.29, P = 0.04). When considering classification accuracies for individual classes of content across PHc and PRc, a significant region × content interaction was observed (F4,72 = 25.07, P < 0.001).

In posterior hippocampus, repeated measures ANOVA revealed a main effect of content on classification accuracies (F4,72 = 6.74, P < 0.001), with only the classification of novel scenes being significantly above chance (t18 = 5.20, P < 0.001) and being significantly better than classification of every other class of content (all t18 > 2.88, P < 0.05). In contrast, classification accuracies in anterior hippocampus were above chance for novel faces, scenes, and sounds (all t > 2.56, P < 0.05). A main effect of content on classification accuracies was further observed in anterior hippocampus (F4,72 = 2.91, P < 0.05), with lower classification accuracy for visual words compared with all other stimulus classes (all t > 2.51, P < 0.05). When comparing classification accuracies for individual classes of content across anterior and posterior hippocampus, we observed trends for a main effect of region (F1,18 = 3.97, P = 0.06) and a region × content interaction (F4,72 = 2.49, P = 0.08), suggesting modest differences in the representation of novel information content across the long axis of the hippocampus.

We also investigated the possibility that higher classification performance in MTL cortical subregions was driven primarily by their ability to discriminate preferred content identified in the univariate analyses (i.e., novel faces in PRc and novel scenes in PHc). Three follow-up analyses interrogated subregional pattern classification performance: one analysis omitting novel faces from classification, one omitting novel scenes, and one omitting both novel faces and scenes from classification training and testing. Importantly, classification performance in PHc and PRc remained greater than that of hippocampal subregions despite the omission of preferred subregional content (Supplementary Figs S1–S3). For additional details on these analyses, see Supplementary Results.

Multivariate Pattern Confusion in MTL Subregions

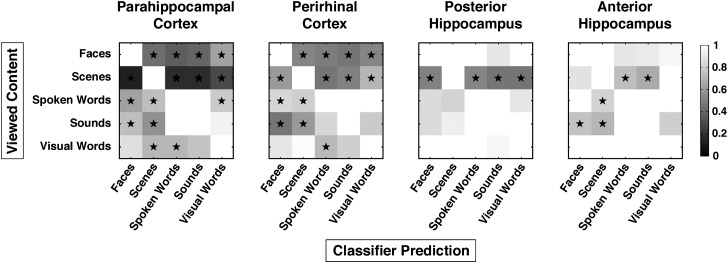

The preceding results suggest a substantial difference between MTL cortical subregions and hippocampus in their ability to classify different forms of stimulus content. Classification accuracy alone, however, provides only a limited view of representational coding in MTL subregions. We also examined MVPA classifier confusion matrices to determine how often the classifier confused different forms of stimulus content, which provided a measure of the similarity between voxel patterns evoked by different forms of event content. Specifically, we constructed MVPA confusion matrices for each anatomical region indicating how often voxel patterns for each stimulus class were classified correctly, and if incorrectly classified, what form of content a given voxel pattern was labeled as (Fig. 6).

Figure 6.

MVPA classifier confusion matrices in anatomically defined MTL subregions. Each row displays classifier performance on the test patterns drawn from each of the 5 content classes. For a given content class, the cells in each row indicate the proportion of trials on which those test patterns were classified as each of the 5 content classes, normalized to the proportion of correctly classified test patterns for that stimulus class. Therefore, values along the diagonal are always equal to 1. Grayscale intensity along each row indicates confusability relative to the correct class of content. Test patterns that were highly confusable with the correctly classified content would yield values close to 1 (off-diagonal white squares). Stars indicate when classifier confusion values lay outside of the confidence intervals derived from null distributions of classification performance based on Monte Carlo simulation. The alpha level of the confidence intervals was chosen based on Bonferroni correction for each of the statistical tests performed across all anatomical ROIs (α = 10⁻³).
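The normalization described in the Figure 6 caption can be sketched as follows. The sketch assumes lists of true and predicted labels, such as those returned by a cross-validation loop. Each row is first converted to proportions and then divided by its diagonal entry. A class that is never classified correctly would produce a division by zero, and this sketch does not guard against that case. The function name is hypothetical.

```python
import numpy as np

def normalized_confusion(true, pred, classes):
    """Confusion matrix scaled so that every diagonal entry equals 1.

    Rows are true classes, columns predicted classes. Each row is converted to
    proportions of that class's test patterns, then divided by the proportion
    classified correctly; off-diagonal values near 1 mean the class was labeled
    as another class about as often as it was labeled correctly.
    """
    index = {c: i for i, c in enumerate(classes)}
    counts = np.zeros((len(classes), len(classes)))
    for t, p in zip(true, pred):
        counts[index[t], index[p]] += 1
    props = counts / counts.sum(axis=1, keepdims=True)   # row-wise proportions
    return props / np.diag(props)[:, None]               # divide each row by its hit rate
```

For instance, a class labeled correctly 50% of the time and as one other class the remaining 50% would yield an off-diagonal value of 1, the "white square" case described in the caption.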

In PHc, voxel patterns evoked by novel faces and scenes were distinct from patterns evoked by other classes of content, as indicated by lower cross-content confusion values than would be expected by chance (all P < 10⁻³). By comparison, voxel patterns evoked by spoken words were significantly dissimilar from those evoked by faces, scenes, and visual words (all P < 10⁻³) but not sounds. Similarly, voxel patterns evoked by novel sounds were dissimilar from those evoked by faces and scenes (all P < 10⁻³) but not spoken words, consistent with an overlapping representation of spoken words and sounds in PHc that is distinct from novel face and scene visual content. Voxel patterns evoked by novel visual words were dissimilar from those of spoken words and scenes (all P < 10⁻³).

Similar to PHc, voxel patterns evoked by novel faces and scenes in PRc were distinct from those evoked by other classes of content (all P < 10⁻³). Voxel patterns evoked by novel spoken words were distinct from those of faces and scenes (all P < 10⁻³) but not sounds or visual words. The same pattern was observed for responses to novel sounds, which were distinct from those evoked by faces and scenes (all P < 10⁻³) but not spoken or visual words. Voxel patterns evoked by novel visual words were distinct from those of spoken words (P < 10⁻³) but not faces, scenes, or sounds. Together, evidence from the classifier confusion matrices indicates that PHc and PRc contain representationally distinct codes for novel faces and scenes, while the representation of different forms of auditory content is highly overlapping.

In anterior hippocampus, voxel patterns evoked by visual and auditory content were somewhat distinct from each other: voxel patterns evoked by novel spoken words and novel sounds differed from those evoked by scenes (all P < 10⁻³), and voxel patterns evoked by novel sounds also differed from those evoked by faces (P < 10⁻³). Voxel patterns evoked by different forms of visual event content in anterior hippocampus did not significantly differ based on the criterion threshold, reflecting less distinct representation of visual content in this region. In contrast, the voxel patterns evoked by novel scenes in posterior hippocampus differed from those of all other classes of event content (all P < 10⁻³), indicating a distinct representation of scene information in this region. The voxel patterns evoked by novel faces, spoken words, sounds, and visual words in posterior hippocampus did not significantly differ from one another based on the criterion threshold.

Representational Similarity Analysis in MTL Subregions

The preceding classifier confusion analysis characterizes when voxelwise patterns evoked by each form of event content are different from other content classes. This analysis, however, does not directly assess whether such differences arise solely from the distinct representation of exemplars from different content classes (i.e., low cross-class similarity) or whether such differences also result from highly similar representations of exemplars within a given stimulus class (i.e., high within-class similarity). To further interrogate the pattern of results observed in our MVPA analyses, we employed RSA (Kriegeskorte and Bandettini 2007; Kriegeskorte et al. 2008) to measure the representational distance between evoked responses for each content class exemplar and all other exemplars in the experiment. The correlation distances between exemplars were visualized in 2 dimensions using MDS (Edelman 1998; Kriegeskorte et al. 2008). This characterization of the data enabled us to measure the voxelwise pattern similarity between any 2 exemplars as their linear distance in the 2D space. Thus, RSA not only provides a means to directly compare the representational similarity between exemplars from different forms of content but also provides a means to directly measure the representational similarity of exemplars within a class.

Moreover, RSA extends upon the MVPA classifier confusion analysis by measuring within- and cross-class representational similarity not only within individual MTL subregions but also as a function of the anterior–posterior position along MTL cortex and hippocampus. Importantly, the univariate analyses identified gradients of content-sensitive responding in the MTL. It is possible that the multivoxel patterns most important for representing any given form of event content might also be distributed in a nonuniform manner within or across MTL subregions. If true, RSA performed across an entire region might fail to find distinct representational codes for different classes of content, while a consideration of the representational codes along the anterior–posterior axis might demonstrate clear distinctions between content classes. By constructing representational similarity matrices for each anatomical ROI segment of MTL cortex and hippocampus, we sought to more precisely identify positions along the anterior–posterior MTL axis where voxel patterns evoked by different forms of event content are distinct. This analysis approach yielded a rich set of data, and here, we have focused our reporting on the set of findings that help elucidate the representational codes underlying our MVPA findings. The full results of the representational similarity analyses are available from the authors upon request.

Distinct Face and Scene Representations in PHc and PRc

First, we considered face and scene representation within MTL cortical subregions, which were revealed to be distinct from other forms of content using MVPA. RSA revealed smaller within-class linear distances between novel face exemplars and between novel scene exemplars in PHc than would be expected by chance (Fig. 7a; all P < 10⁻²), indicating a highly clustered within-class representational structure for both forms of event content within this region. The distinct representation of scene content in PHc was further supported by significantly larger cross-class linear distances between novel scene exemplars and novel face, spoken word, and sound exemplars (all P < 10⁻²). In contrast, within-class linear distances between novel face exemplars and between novel scene exemplars in PRc did not reach significance (all P > 10⁻²), despite evidence from MVPA suggesting highly distinct face and scene voxel patterns in the region. Furthermore, we observed significantly larger cross-class distance only between voxel patterns evoked by novel scenes and those evoked by spoken words in PRc (P < 10⁻²).

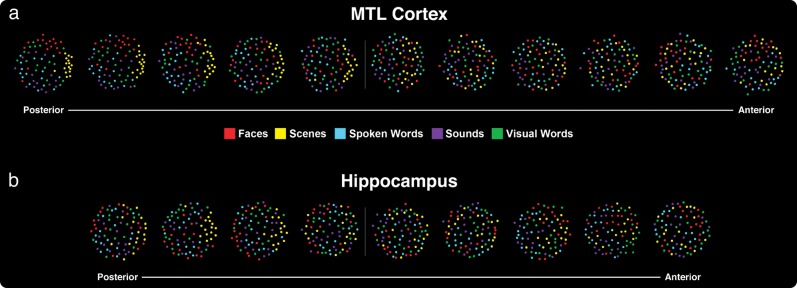

Figure 7.

Neural pattern distances between novel content exemplars visualized by MDS in (a) MTL cortex and (b) hippocampus. Each content class exemplar (i.e., a novel miniblock) is represented by a colored dot in the panels for each MTL subregion. Dots placed close together in the 2D space indicate that those 2 exemplars were associated with a similar pattern of activation. Dots placed farther apart indicate that those 2 exemplars were associated with more distinct activation patterns. The tables below each plot indicate the mean within-class linear distance for each content class and the mean cross-class linear distance between each pair of novel content classes. Bold values indicate linear distances that lay outside of confidence intervals derived from null distributions of within-class and cross-class linear distances based on Monte Carlo simulation. The alpha level of the confidence intervals was chosen based on Bonferroni correction for each of the statistical tests performed for all anatomical ROIs (α = 10⁻²). Crosses indicate linear distances that were significantly smaller than expected by chance, reflecting greater similarity in the activation patterns evoked by content class exemplars. Asterisks indicate linear distances that were significantly larger than expected by chance, reflecting more distinct representation of individual exemplars.

When examining face and scene representation as a function of anterior–posterior position along the axis of MTL cortex, we found that the distinctiveness of face and scene representations was localized primarily in the most posterior positions (Fig. 8a). In the 3 posterior-most ROIs (corresponding to the posterior aspect of PHc), we observed within-class distances between individual face and between individual scene exemplars that were smaller than expected by chance (all P < 10⁻²), indicating a distinct representational code for both face and scene content classes in posterior MTL cortex. The distinct representation of scene content continued anteriorly, with significantly smaller within-class distances for individual scene exemplars in the 5 most posterior ROIs in MTL cortex (all P < 10⁻²). Moreover, in the posterior-most ROI, we observed significantly larger cross-class distances between voxel patterns evoked by face and scene exemplars and those evoked by each other form of content except visual words (all P < 10⁻²). These significantly larger cross-class distances disappeared one by one as we moved anteriorly (Fig. 8a) and were absent by the middle slice in MTL cortex, corresponding to posterior PRc. Finally, we observed significant within-class clustering of face exemplars in the anterior-most ROI corresponding to PRc (P < 10⁻²), although this effect was absent in every other anterior MTL cortical ROI; we did not observe significant within-class clustering of scene exemplars in any anterior MTL cortical ROI.

Figure 8.

Neural pattern distances between novel content exemplars visualized by MDS along the anterior–posterior axis of (a) MTL cortex and (b) hippocampus. Each content class exemplar (i.e., a novel miniblock) is represented by a colored dot in the panels for each MTL subregion. Dots placed close together in the 2D space indicate that those 2 exemplars were associated with a similar pattern of activation. Dots placed farther apart indicate that those 2 exemplars were associated with more distinct activation patterns. Results tables for each plot are available from the authors upon request.

Scene Representation in Hippocampus

MVPA of hippocampal responses revealed accurate discrimination of voxel patterns evoked by scenes from those evoked by each other class of content. Moreover, the classifier confusion analysis revealed that voxelwise responses to scenes were distinct from other forms of content in posterior hippocampus, which otherwise demonstrated high confusion between all other forms of content.

Using RSA to investigate voxel patterns evoked in the entire posterior hippocampal region, we did not find evidence for a distinct representation of scene content, as the within-class distance between individual scene exemplars did not reach our criterion threshold (Fig. 7b; P > 10⁻²). Moreover, significant cross-class linear distances were observed only between voxel patterns evoked by scenes and those evoked by faces in posterior hippocampus (P < 10⁻²) but not other forms of event content (all P > 10⁻²). Although we found few effects of representational distance to explain the distinctiveness of scenes in our MVPA analysis when examining the posterior hippocampus as a whole, we considered whether such distinct coding of scene content might be found in specific locations along the hippocampal axis (Fig. 8b). Indeed, significantly smaller within-class distances were present between individual scene exemplars in the second and third posterior-most ROIs of hippocampus (all P < 10⁻²). In both ROIs, this effect was accompanied by a significantly larger cross-class distance between scene and face exemplars (all P < 10⁻²), while a significantly larger cross-class distance between scenes and visual words was additionally observed in the second posterior-most ROI (P < 10⁻²). Such representationally distinct coding of scene content was not observed in the anterior-most ROIs of hippocampus, nor were there significant within-class linear distances for any other class of stimuli in any portion of hippocampus (all P > 10⁻²). Together, these observations in hippocampus show that the distinctive representation of scene content is explained primarily by the presence of a consistent spatial code in the posterior extent of this region.

Representation of Auditory Content in MTL

MVPA revealed that voxel patterns evoked by spoken words and sounds in PHc and PRc are distinct from patterns evoked by visual forms of content but not from one another. One possibility is that, because spoken words and sounds were the only auditory stimuli presented to participants, they were distinguished from visual content on the basis of sensory modality. However, this finding does not necessarily entail that these forms of auditory content share a common representational structure in PHc and PRc. To directly address how auditory content is represented in MTL cortex, we compared voxel patterns evoked by individual spoken word and sound exemplars using RSA.

In PHc, within-class linear distances were significantly smaller than would be expected by chance for spoken word exemplars (Fig. 7a; P < 10⁻²) but not for sound exemplars. However, individual sound exemplars showed significant within-class clustering in the third posterior-most ROI of MTL cortex (Fig. 8a; P < 10⁻²). Cross-class distances between voxel patterns evoked by spoken words and sounds were also significantly smaller than chance (P < 10⁻²), indicating highly overlapping representations of these content forms in PHc. This overlap was evident in the 4 posterior-most ROIs of PHc, each of which showed significantly smaller cross-class distances between spoken word and sound exemplars than would be expected by chance (all P < 10⁻²). Moreover, auditory content was distinct from scene content in the 4 posterior-most ROIs and from face content in the 2 posterior-most ROIs. In these ROIs, cross-class distances between both forms of auditory content and the corresponding visual content were significantly larger than chance (all P < 10⁻²). In contrast, voxel patterns evoked by spoken words and sounds in PRc demonstrated neither significant within-class representational similarity nor significant cross-class clustering with one another (all P > 10⁻²). This suggests that these 2 forms of content do not share a common representational structure in PRc. The pattern held both when RSA was performed on PRc as a whole and when it was performed on the anterior-most MTL cortical ROI corresponding to PRc. Together, these findings suggest that representations of auditory content overlap extensively throughout PHc and are increasingly distinguished from visual content toward the posterior extremity of MTL cortex.
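Cross-class distances can be tested in two directions. A distance smaller than chance indicates overlapping codes, as reported for spoken words and sounds in PHc. A distance larger than chance indicates distinct codes, as reported for auditory versus scene content. This is a minimal sketch built on assumptions that this section does not state: Euclidean distance between exemplar patterns, chance estimated by shuffling content labels, and 999 permutations. The data are synthetic, and the function names are hypothetical. It is not the study's exact procedure.

```python
import numpy as np

def mean_cross_class_distance(patterns, labels, a, b):
    """Mean Euclidean distance between every exemplar of class a and every exemplar of class b."""
    pa = patterns[labels == a]
    pb = patterns[labels == b]
    # (n_a, 1, n_vox) - (1, n_b, n_vox) broadcasts to all a-b pairs.
    diffs = pa[:, None, :] - pb[None, :, :]
    return float(np.linalg.norm(diffs, axis=-1).mean())

def cross_class_permutation_test(patterns, labels, a, b, n_perm=999, seed=0):
    """Return the observed a-b distance and one-tailed P values for it being
    smaller (overlapping codes) or larger (distinct codes) than chance."""
    rng = np.random.default_rng(seed)
    observed = mean_cross_class_distance(patterns, labels, a, b)
    null = np.array([
        mean_cross_class_distance(patterns, rng.permutation(labels), a, b)
        for _ in range(n_perm)
    ])
    p_smaller = (np.sum(null <= observed) + 1) / (n_perm + 1)
    p_larger = (np.sum(null >= observed) + 1) / (n_perm + 1)
    return observed, p_smaller, p_larger

# Synthetic data (hypothetical): spoken words and sounds share one "auditory"
# pattern, scenes have another; every exemplar adds independent noise.
rng = np.random.default_rng(2)
n_vox = 40
auditory = rng.normal(0.0, 1.0, n_vox)
scene = rng.normal(0.0, 1.0, n_vox)
patterns = np.vstack([
    auditory + rng.normal(0.0, 0.5, (8, n_vox)),  # spoken words
    auditory + rng.normal(0.0, 0.5, (8, n_vox)),  # sounds
    scene + rng.normal(0.0, 0.5, (8, n_vox)),     # scenes
])
labels = np.array(["spoken_word"] * 8 + ["sound"] * 8 + ["scene"] * 8)

_, p_overlap, _ = cross_class_permutation_test(patterns, labels, "spoken_word", "sound")
_, _, p_distinct = cross_class_permutation_test(patterns, labels, "spoken_word", "scene")
print(f"spoken word vs sound, smaller than chance: P = {p_overlap:.3f}")
print(f"spoken word vs scene, larger than chance:  P = {p_distinct:.3f}")
```

Reporting both tails from a single null distribution shows how one analysis can reveal overlap between the auditory classes and, at the same time, their separation from visual content.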

When we examined the voxel patterns evoked by spoken words and sounds across the anterior–posterior hippocampal axis, we found significantly smaller cross-class distances between spoken word and sound exemplars in the second and third anterior-most ROIs and in the posterior-most ROI (Fig. 8b; all P < 10⁻²). The MVPA confusion matrices had indicated a high overall degree of classifier confusion in hippocampus but did not identify the source of poor performance for any particular class of content. Here, RSA within segmented hippocampal ROIs revealed that the 2 forms of auditory content were highly confusable because they evoked similar distributed patterns of response. However, unlike in PHc, this effect was not accompanied by consistently larger cross-class distances between auditory content and face or scene visual content (all P > 10⁻²).
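The ROI-wise analyses depend on dividing a region into consecutive segments along its anterior–posterior axis. The segmentation procedure is not described in this section. As a purely hypothetical illustration, the sketch below forms equal-count bins of voxels sorted by their y coordinate, assuming a RAS-oriented space in which y increases anteriorly. The choice of 4 ROIs and the coordinates are made up for the example.

```python
import numpy as np

def segment_along_axis(y_coords, n_rois):
    """Assign each voxel an ROI index from 0 (posterior-most) to n_rois - 1
    (anterior-most) by splitting voxels, sorted by y, into near-equal groups.
    Assumes y increases toward anterior (RAS convention)."""
    order = np.argsort(y_coords, kind="stable")
    roi = np.empty(len(y_coords), dtype=int)
    for k, chunk in enumerate(np.array_split(order, n_rois)):
        roi[chunk] = k
    return roi

# Hypothetical y coordinates (mm) for 8 hippocampal voxels.
y = np.array([-40, -10, -35, -20, -15, -30, -25, -5])
roi = segment_along_axis(y, 4)
print(roi)  # [0 3 0 2 2 1 1 3]

# Per-ROI patterns (exemplars x voxels) could then be obtained with
# patterns[:, roi == k] and passed to within- or cross-class distance tests.
```

Equal-count bins keep the number of voxels comparable across segments, which affects distance magnitudes. Anatomically defined or slice-based boundaries would be equally reasonable alternatives.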

Discussion

Whether MTL subregions make distinct contributions to episodic memory remains a topic of considerable debate. In the present study, we combined hr-fMRI (Carr et al. 2010) with both univariate and multivariate statistical measures to investigate whether event content differentiates the function of hippocampus and MTL cortical subregions. First, our findings revealed a distributed code for event content in PRc and PHc that crosses anatomical boundaries, despite significant differences in responding to novel versus repeated items for only one stimulus class in each region (novel faces in PRc and novel scenes in PHc). In particular, multivariate analysis of responses to novel content showed that PRc and PHc contain distinct representational codes for faces and scenes. Second, we observed a dissociation in content representation along the anterior–posterior axis of the hippocampus. Anterior hippocampus demonstrated peak amplitude responses that were content general; moreover, the spatial pattern of response in this region did not discriminate between different forms of event content. In contrast, posterior hippocampus did not demonstrate significant peak amplitude responses for novel stimuli from any content class but did show a distributed coding of scene content that was representationally distinct from other content classes. By taking advantage of the complementary aspects of univariate and multivariate approaches, the present data provide new insights into the nature of representational coding in the MTL.

Content Representation in MTL Cortex

While many studies have focused on content-based dissociations between PRc and PHc (Pihlajamaki et al. 2004; Lee, Buckley, et al. 2005; Sommer et al. 2005; Lee et al. 2008; see also Dudukovic et al. 2011), several recent reports have observed encoding responses for visual object and visuospatial information in human PRc (Buffalo et al. 2006; Litman et al. 2009; Preston et al. 2010) as well as PHc (Bar and Aminoff 2003; Aminoff et al. 2007; Bar et al. 2008; Litman et al. 2009). In the present study, PRc novelty responses were maximal for faces, while PHc demonstrated maximal novelty responses to scenes, consistent with previous reports of content-based dissociations between PRc and PHc. However, when examining the distribution of novelty-based responses across MTL cortex, a response to novel scenes was observed in posterior PRc, indicating that processing of scene information is not unique to PHc. Notably, these representational gradients were evident at the level of individual participants (see Supplementary Results).

These findings complement recent reports of greater responses to visual object content in anterior PRc and to visuospatial content in posterior PHc, with a mixed response to scene, object, and face content in a transitional zone at the border between PHc and PRc (Litman et al. 2009; Staresina et al. 2011). Such findings have led to the conclusion that discrete functional boundaries do not exist in MTL cortex. They have also prompted the further speculation that selective responses to a single content class are limited to the anterior and posterior extents of MTL cortex. However, as discussed below, our multivariate findings suggest that distributed representations of event content can be observed at the extreme ends of MTL cortex.

MVPA revealed significant differentiation of event content in PRc and PHc, when treated as 2 separate regions, both across the group and in the majority of participants. Importantly, successful classification was observed even when preferred content (i.e., novel faces and scenes) was removed from classifier training and testing (see Supplementary Results). Further consideration of the classifier confusion matrices showed that PRc and PHc maintain distinct codes for face and scene content, because those stimuli were significantly differentiated from all other forms of event content. However, as indicated by the present findings and prior reports (Litman et al. 2009; Staresina et al. 2011), clear functional boundaries between PHc and PRc may not exist. The mixed representation of event content revealed by MVPA may therefore partly reflect the fact that this analysis treated these regions as 2 distinct areas. Critically, in the present study, we used RSA to examine how patterns of activation represent different forms of event content both within PRc and PHc as anatomically defined regions and as a function of anterior–posterior position along the axis of MTL cortex.

In PRc, RSA revealed significant within-class clustering for face content in the anterior-most portion of this region. Although the effects did not reach our threshold for correction for multiple comparisons, there was also evidence, consistent with the MVPA results, for distinctive scene representations both in PRc as a whole (P = 0.004) and in the most posterior aspect of PRc (P = 0.008). Moreover, the MVPA confusion matrices showed clear distinctions between the representation of face and scene content in PRc. MVPA may have emphasized distinctive face and scene codes by placing greater weight on voxels from the anterior and posterior regions of PRc, respectively, making these effects more apparent in the classifier confusion matrices. Our RSA findings are nonetheless informative in that they converge with our univariate findings in PRc. Both demonstrate a predominantly face-selective response in anterior PRc combined with a scene-sensitive response in the posterior aspect of this region.

When we considered PHc as a whole region in the RSA, we observed significant within-class clustering of multiple forms of content, including faces and scenes. Moreover, face and scene representations were significantly distinct from other stimulus classes. When we considered patterns of activation within individual ROIs along the anterior–posterior extent of PHc, the distinctive representation of faces and scenes was most prominent in the posterior aspect of the region and became gradually less distinct toward its anterior portion. Notably, the distinctive representation of faces was observed in PHc despite the absence of an above-baseline response to faces in the univariate analysis. Similarly, while univariate analysis showed no evidence for above-baseline responding to auditory content in PHc, RSA revealed a representation of auditory content that was distinct from visual content, again most evident in the posterior extent of PHc. Representational distinctions for multiple content classes in posterior PHc run counter to the hypothesis that content coding would be most scene selective at this extreme end of PHc. Thus, the present data indicate that the distributed representation of event content in MTL cortex is not confined to a transitional zone at the border between PRc and PHc (Litman et al. 2009; Staresina et al. 2011) but is also evident in posterior PHc.

It is possible that the differences in novelty-based responding observed in MTL cortex result from differences in low-level perceptual features of the stimuli used in the present study rather than differences based on encoding of conceptual information about different categories of stimuli. Because one of our goals was to assess MTL responses to a wide variety of auditory and visual event content, we did not control for perceptual differences between classes of stimuli. However, representational gradients for visual object and visuospatial information are evident in MTL cortex even when perceptual features are equated across content domains (Staresina et al. 2011). Moreover, previous work examining content representation in ventral temporal cortex has shown that patterns of nonmaximal responses that discriminate between different forms of event content are not dependent on the low-level characteristics of the stimuli, such as luminance, contrast, and spatial frequency (Haxby et al. 2001). Collectively, these converging findings suggest that distributed coding of event content observed here extends beyond simple differences in the perceptual features of events.

Content Representation in Anterior Hippocampus

Several observations of functional dissociations between anterior and posterior hippocampus are present in the neuroimaging literature (Prince et al. 2005; Strange et al. 2005; Chua et al. 2007; Awipi and Davachi 2008; Poppenk et al. 2010). However, few studies have considered the possible representational basis for such dissociations. The present findings indicate that dissociations between anterior and posterior hippocampus may result from differences in content-based representational coding between these 2 regions.

A prevailing view of MTL function proposes that hippocampus plays a domain-general role in episodic memory by binding content-specific inputs from MTL cortex into integrated memory representations (Davachi 2006; Manns and Eichenbaum 2006; Diana et al. 2007). Consistent with this view, domain-general encoding and retrieval responses have been observed in hippocampus relative to content-specific processing in MTL cortex (Awipi and Davachi 2008; Staresina and Davachi 2008; Diana et al. 2010). Human electrophysiological evidence also suggests an invariant representation of perceptual information in hippocampal neurons relative to MTL cortex (Quian Quiroga et al. 2009).