Abstract

Fallible human judgment may lead clinicians to make mistakes when assessing whether a patient is improving following treatment. This article provides a narrative review of selected studies in psychology that describe errors potentially relevant when a physician assesses a patient's response to treatment. Comprehension may be distorted by subjective preconceptions (lack of double blinding). Recall may fail through memory lapses (unwanted forgetfulness) and tacit assumptions (automatic imputation). Evaluations may be further compromised by the effects of random chance (regression to the mean). Expression may be swayed by unjustified overconfidence following conformist groupthink (group polarization). An awareness of these five pitfalls may help clinicians avoid some errors in medical care when determining whether a patient is improving.

KEY WORDS: medical error, fallible reasoning, judgement and decisions, human psychology, patient outcomes, symptom changes

INTRODUCTION

Rigorous follow-up is an important yet fallible element of effective medical care. Two of the many mistakes to avoid are incorrectly concluding that a patient is worsening (when the patient is not) and incorrectly concluding that a patient is improving (when the patient is not). The frequency of these two errors is unknown because rigorous data are rarely collected in everyday practice or published in the scientific literature. Some degree of error is inevitable because of the inherent limitations in perception exhibited by patients, as reviewed earlier.1 The consequences of fallible patient self-report are hard to predict and can create either an unduly pessimistic or an unduly optimistic impression. The net result may be the abandonment of effective treatments (e.g., switching antibiotics when the patient was actually improving) or missed opportunities to discontinue needless treatments (e.g., persisting with acid suppressors when the patient actually had constipation).

Fallible patient self-report is not the only source of error at follow-up. Some medical errors reflect the propagation of mistakes that originate with the patient; for example, if a patient states "my knee pain is better after my arthroscopy", the clinician might be prone to exaggerate the effectiveness of the operation. However, another set of errors is created by the clinician, since the professional providing the treatment is often the same person who checks whether the treatment was effective. This type of inherent vested interest is common among clinicians yet would not be accepted from athletes or other professionals judging their own performance.2 Case studies suggest, moreover, that self-serving subjectivity is more easily recognized in others than in oneself3,4 and that formal conflict-of-interest disclosures do not eliminate the problem.5

Clinicians may believe that their judgment about patient outcomes is reliable because they practice in an impartial manner. The science of cognitive psychology indicates, however, that human error occurs even without misguided incentives, deviant personalities, or financial conflicts of interest. That is, fallible professional judgment can arise despite the best of intentions, insight, and integrity. The purpose of this narrative review is to summarize five concepts from psychological science that are standard in psychology textbooks and that might inform judgments made by clinicians who assess patients at follow-up (Table 1). We focus on specific pitfalls that have counterintuitive features, more than 500 citations on PsycINFO, and relevance to health, yet rarely appear in standard medical textbooks or MEDLINE searches.

Table 1.

Avoiding Errors When Checking Patients at Follow-up

| Error | Example of the error | Solution | Example of the solution |
|---|---|---|---|
| Lack of double blinding | Doctor: “At a glance I can tell you’re better.” | Try to stay impartial | Doctor: “Are you feeling better, worse, or the same?” |
| Unwanted forgetfulness | Doctor: “What was your glucose before the diet?” | Use diaries and careful records | Doctor: “Record your glucose values for our next visit.” |
| Automatic imputation | Doctor: “I’ve only seen patients do well with this.” | Be scrupulous about missing information | Doctor: “I ask my nurse to call every patient afterwards.” |
| Regression to the mean | Doctor: “Why didn’t the next surgery go as well?” | Anticipate repeated events to be less extreme | Doctor: “Your recovery was exceptional, but it may be less impressive next time.” |
| Group polarization | Doctor: “She’s better.” Team: “We all agree.” | Encourage differing opinions | Doctor: “Set me straight if I’m wrong.” Team: “Some of us are not sure.” |

Lack of Double Blinding

A lack of double blinding is an easily understood pitfall for clinicians because it is the counterpart of the placebo response for patients. The core issue is that preconceptions on the part of an evaluator can cause a participant to behave in ways that subtly reinforce those beliefs.6 Telling teachers that their class is enriched with gifted students, for example, leads to greater gains in scholastic achievement than in an average class over the same time interval.7 Similarly, a psychiatrist would need almost superhuman objectivity to check whether intense psychotherapy sessions improved the patient beyond the effects of standard treatment alone. Arguably, a degree of positive self-belief may be indispensable for sustaining a career during difficult times when patients are terminally ill and treatments are generally ineffective.

One classic demonstration of the need for double blinding involved an elaborate study of young scientists who attempted to train genetically identical albino rats to run through a simple maze for a food pellet reward.8 By random assignment, half the scientists were told that they had especially bright rats, whereas the other half were told they had relatively dull rats. After training, each scientist conducted ten testing trials with their rat and recorded the number of successful completions of the maze. Consistent with experimenter bias, scientists assigned bright rats reported more successes on average than scientists assigned dull rats (2.3 vs. 1.5, p = 0.01). These results are especially interesting because the young scientists had no financial conflict of interest and had received standardized instructions on the importance of scientific rigor.

Double blinding is an effective method for eliminating the conscious and subconscious distortions related to preconceptions in clinical science.9 However, double blinding is not likely to become a major element in mainstream medicine because physicians need to know the details of individual patient treatments.10 The major problems occur, perhaps, when a lack of double blinding is coupled with other cognitive traps such as confirmation bias.11,12 One corrective strategy is to invite second opinions from an impartial colleague.13–15 Group practices with patient hand-offs might, in theory, also help attenuate this bias if different physicians have different preconceptions.16 Finally, formal third-party report cards of patient outcomes may provide impartial benchmarks for frequent, objective, and clinically important outcomes.17

Unwanted Forgetfulness

The fallibility of the clinician's own memory may lead to further mistakes when evaluating a patient’s response to treatment. Clinicians sometimes forget simple items such as where they have parked their own car, yet recalling a medical patient is substantially more difficult because each patient has many features.18,19 The cognitive demands at follow-up become even greater because of the need to make paired comparisons (akin to remembering where a car was parked both today and last month) and to manage more than one patient (analogous to being a valet who must remember multiple cars parked on multiple different days). It is no wonder, for example, that assessing improvements in a patient’s rash might be difficult when running a follow-up clinic for patients with acne or psoriasis.20

The field of memory science is rife with studies of fallibility, including one clever demonstration involving highly experienced professional air traffic controllers tested in their domain of expertise.21 The basic task was to review a dynamic air traffic pattern presented on a standard instrument display panel and provide flight instructions to individual aircraft. The air traffic controllers were then questioned at random points and asked to recall the position and altitude of 5 designated aircraft from a field of about 13 at the time. The main finding was that the air traffic controllers made many mistakes, particularly when trying to recall numerical altitudes rather than geographic positions (error rates of 24 % vs. 16 %, p < 0.005). Yet, when surveyed, the air traffic controllers rated altitude and position as the two most important pieces of data for a safe recommendation.

One way to avoid unwanted forgetfulness is to maintain careful records and computerized reminders.22,23 Doing so can be laborious and cumbersome, and it requires a reliable retrieval system.24 Automatic recording systems can be helpful, such as glucometers with built-in memory chips and digital cameras that can retrieve years of past images.25 Communication strategies that include electronic messaging can also provide an unambiguous record that can be reviewed long afterwards to check what was and was not mentioned.26,27 Another corrective strategy is to write down, during the initial patient contact, the specific outcomes expected at the time of the subsequent follow-up.28 A final strategy is to tell the patient in advance the specific questions most likely to be asked at the next follow-up appointment.29

Automatic Imputation

Patient follow-up is also marked by a degree of attrition, whereby some doctors and patients do not meet for a second contact.30 Automatic imputation describes the widespread presumption that such missing observations are generally normal, unremarkable, and reasonably disregarded. In an emergency department, a clinician’s natural tendency might be to overlook the lack of a repeat visit by a patient, presume that all is fine, and believe that treatment was effective.31 In a stroke unit, a medical intern might similarly be aware of a patient’s neurological deficits, presume that little further improvement is possible, and underestimate how much recovery occurs over a year of rehabilitation. In both cases, gaps in hand-offs and discontinuities of care can promote an unduly optimistic or pessimistic impression of a patient’s outcome.32

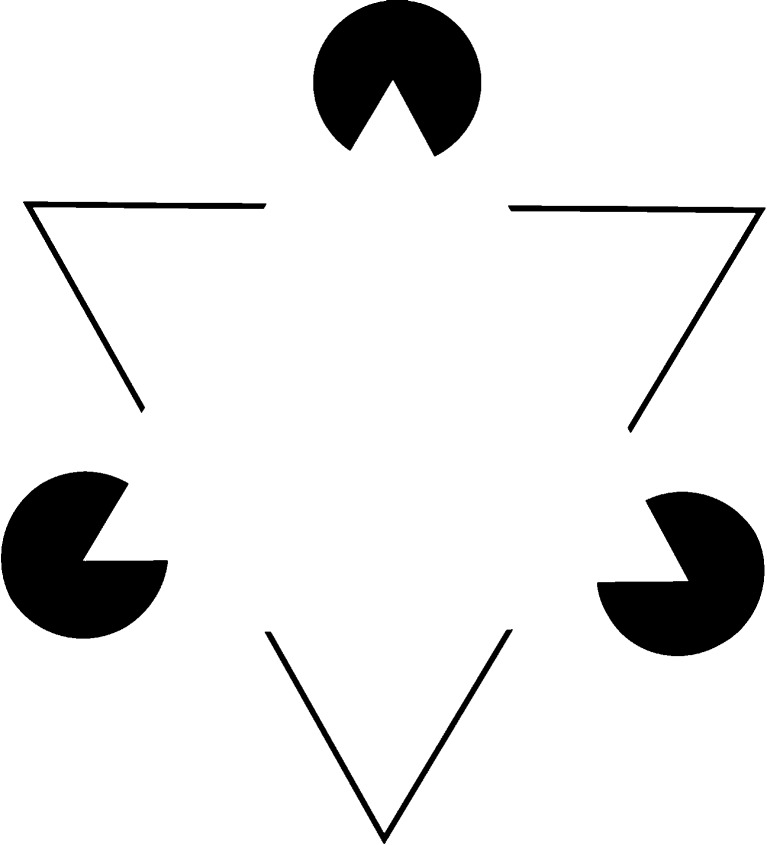

Experiments in Gestalt psychology provide remarkable examples of such unconscious automatic imputation. A classic demonstration involves optical illusions, such as a Kanizsa image (Fig. 1), presented to university students on stimulus cards for about 2 minutes. After a short delay, participants are asked to describe what they had seen.33 As expected, about 70 %–90 % of participants report seeing a white equilateral triangle in the Kanizsa image even though no such shape is actually present. People are misled because a simple white triangle would neatly account for what is present and what is absent in an otherwise complex presentation. Apparently, human cognition can create false information even when distortions due to communication and memory are almost completely eliminated.

Figure 1.

Kanizsa figure containing an array of three black chevrons and three incomplete circles. Most observers see a white equilateral triangle that seems to float in front of the six black shapes on the page, although no such triangle is actually present.

The way to avoid automatic imputation is to be meticulous about detecting and correcting missing information. Sometimes the gap is a missing piece of data for an identified patient; for example, a pre-operative diabetic patient with a normal morning blood sugar, a delay in surgery, and a missing evening blood sugar that is mistakenly presumed to be normal (but is actually quite low).34 At other times, the gap is that the entire patient is missing; for example, a patient who is discharged uneventfully after an asthma exacerbation but who is subsequently readmitted to another hospital for an asthma relapse. In medicine, wishful thinking predisposes clinicians to overlook both types of lapses.35 One promise of computerized medical records with integrated decision support is to mitigate such gaps through automatic monitoring and alerts.

Regression to the Mean

Perfect data would not resolve all problems related to follow-up assessments because of the additional bias of regression-to-the-mean, defined as the statistical tendency for extreme observations to be followed by less extreme ones because of random variation.36 The counterintuitive nature of this principle has contributed to the widespread popularity of futile remedies for centuries. The core problem arises because patients seek treatment when sick and clinicians respond with interventions to reduce suffering, even though many illnesses are self-limited. At follow-up, both patient and clinician may attribute an observed recovery to the intervening treatment rather than to the natural course of disease. Such faulty reasoning likely underlies the earlier popularity of many antiquated treatments, including skull trepanation for migraine headaches, bloodletting for pneumonia, and massive doses of vitamins for the common cold.
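The mechanism can be made concrete with a small simulation of patients who receive no treatment at all. This is a minimal sketch under hypothetical assumptions, not a model from the cited studies: each patient has a stable underlying symptom severity plus independent day-to-day fluctuation, and a patient presents for care only when the measured score exceeds an arbitrary threshold. All parameter values (mean 50, spreads of 10, threshold 70) are illustrative.

```python
import random
import statistics


def simulate_untreated_follow_up(n_patients=10_000, threshold=70.0, seed=1):
    """Return (mean score at presentation, mean score at follow-up).

    Hypothetical model: score = stable severity + day-to-day noise.
    No treatment is given, so any change between visits is due to chance alone.
    """
    rng = random.Random(seed)
    at_presentation, at_follow_up = [], []
    for _ in range(n_patients):
        severity = rng.gauss(50, 10)               # stable underlying severity
        first_score = severity + rng.gauss(0, 10)  # score on a random day
        if first_score > threshold:                # only extreme-looking days prompt a visit
            second_score = severity + rng.gauss(0, 10)  # untreated follow-up score
            at_presentation.append(first_score)
            at_follow_up.append(second_score)
    return statistics.mean(at_presentation), statistics.mean(at_follow_up)


before, after = simulate_untreated_follow_up()
print(f"Mean score when seeking care: {before:.1f}")
print(f"Mean score at follow-up:      {after:.1f}")
```

In this model the follow-up mean falls well below the presenting mean yet stays above the population average of 50, because part of each extreme presenting score reflected a transiently bad day. Any treatment given between the two visits would appear effective even though, by construction, it did nothing.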

One demonstration of people's failure to account for regression-to-the-mean involves graduate students analyzing the flight instructor paradox.37 In essence, the paradox describes how pilots sometimes make an exemplary landing, receive praise, and then perform worse on their next flight.38 Similarly, pilots sometimes make a poor landing, receive criticism, and improve on their subsequent flight. A naïve interpretation might mistakenly conclude that positive reinforcement leads to complacency whereas negative reinforcement leads to diligence (contrary to learning theory). In the laboratory demonstration, sophisticated participants shown this paradox generally provided explanations based on mistaken beliefs about learning theory, and no participant offered an explanation that mentioned regression-to-the-mean.

Regression-to-the-mean persists in medical care even when treatments are initiated on an elective basis.39 For example, patients who undergo bilateral carpal tunnel surgery often notice that the second operation does not go as well as the first.40 The disappointing outcomes might be mistakenly attributed to a worsening of the patient's disease or a decline in quality of care. Another explanation, however, is that patients willing and able to undergo a second operation are an elite subset who experienced above-average outcomes following the initial surgery (and are thus likely to do less well, on average, the next time). The complete solution to regression-to-the-mean would require infallible treatments; in the interim, clinicians need to continually remind themselves about the pervasive element of uncertainty influencing outcomes.41–43

Group Polarization

The assessment of a patient’s course is often conducted as a group process; however, the presumed wisdom of the crowd is no panacea without independent thinking. Group polarization is defined as the unwanted tendency for people who share similar attitudes to become more extreme and entrenched following mutual discussion. Some remarkable blunders in military combat have occurred when a leader was surrounded by sycophants or a mob mentality that left no room for dissenting opinions (which has, in the past, resulted in thousands of deaths).44 Similarly, students in naturopathic colleges can sometimes become increasingly distrustful of conventional vaccinations even though vaccines are not a part of their formal curriculum.45 Studies in psychology suggest, furthermore, that group polarization can also arise in repeated everyday tasks.

One controlled demonstration of group polarization involved college students playing small-stakes blackjack in a casino-like setting.46 By random assignment, some students played 20 rounds as isolated individuals with no dialogue between players. Other students played 20 rounds in a group setting where a consensus determined how much to wager on each game. The main finding was that the average wager increased by about 50 % following group dialogue compared with isolated play (51 cents vs. 33 cents, p < 0.005). One explanation for this shift is that social dialogue is sometimes skewed by a few vocal, eloquent, or exceptional participants. Apparently, achieving a consensus among those with no strong prior beliefs does not always yield a simple average and can, instead, cause shifts in risk-taking attitudes.

The way to avoid group polarization is to start with sufficient diversity among the members so that errors are more likely to be canceled than reinforced.47–49 The optimal size of the group is likely a compromise among many factors, although some research suggests that even two added individuals are an improvement over one solitary judge.50 The best means of achieving a group consensus has not been established, and other research suggests that forcing a consensus is not always necessary.51 The tradition of scientific peer review is an implicit effort to avoid group polarization, since a small number of independent reviewers, often far fewer than the number of authors listed on the article, scrutinize the conclusions of the author group. A tradition of training in diverse locations is an analogous countermeasure, as it allows the clinician to better distinguish truth from local opinion.52
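The idea that diversity lets errors cancel, and that adding judges helps, can be illustrated with a small simulation. This is a minimal sketch under hypothetical assumptions, not a model from the cited studies: each judge's estimate of a patient's true status equals the truth plus an error, split into a part shared by every judge (for example, a common local opinion) and a part private to each judge. Estimates are simply averaged with no discussion, and the parameter values are illustrative, chosen so that each individual judge is about equally accurate in both scenarios.

```python
import random
import statistics


def mean_absolute_error(n_judges, shared_sd, private_sd, n_cases=5_000, seed=3):
    """Mean absolute error of the averaged estimate of n_judges.

    shared_sd: spread of an error common to every judge (like-minded group).
    private_sd: spread of an error unique to each judge (independent views).
    """
    rng = random.Random(seed)
    errors = []
    for _ in range(n_cases):
        truth = rng.gauss(0, 1)                 # patient's actual status
        shared_error = rng.gauss(0, shared_sd)  # same for all judges on this case
        estimates = [truth + shared_error + rng.gauss(0, private_sd)
                     for _ in range(n_judges)]
        errors.append(abs(statistics.mean(estimates) - truth))
    return statistics.mean(errors)


# Per-judge error variance is about 1.0 in both scenarios (0.9**2 + 0.44**2 ≈ 1.0).
scenarios = {"diverse group": (0.0, 1.0), "like-minded group": (0.9, 0.44)}
for label, (shared_sd, private_sd) in scenarios.items():
    solo = mean_absolute_error(1, shared_sd, private_sd)
    trio = mean_absolute_error(3, shared_sd, private_sd)
    print(f"{label}: one judge {solo:.2f}, average of three {trio:.2f}")
```

In the diverse scenario, averaging one judge with two added judges cuts the typical error substantially; in the like-minded scenario most of the error is shared, so averaging removes little of it even though each judge is individually just as accurate.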

CONCLUSION

This article reviews five concepts from psychology that are relevant when a clinician is checking a patient’s response to treatment (Table 1). In many cases the patient's change is blatant, and small fallibilities in judgment will not lead to faulty decisions. In other cases, however, the situation is uncertain and skilled judgment is crucial. Patients value a clinician who can reach the right decision swiftly. An awareness of specific patterns of mistakes might lead to better clinical outcomes and fewer complications in follow-up care. Indeed, some of the most difficult pitfalls to avoid are the ones that people do not recognize. Each of the pitfalls reviewed in this article has at least one solution that can be applied if clinicians are aware of their own fallibility and plan to see patients following treatment.

The main limitation of our review is that it is not a systematic review of all psychology research. The field is broad and variable, so a formal meta-analysis would likely fail simple tests of heterogeneity. We selected concepts that have stood the test of time and therefore did not include recent findings that have generated early enthusiasm but not widespread replication. Our selective approach, moreover, offered only a few examples for each step in the underlying chain of reasoning (comprehension, recall, evaluation, and expression). We focused on counterintuitive concepts relevant to how people perceive changes rather than concepts important when evaluating one patient at a single point in time. Finally, the dearth of clinical trials indicates a need for further medical research examining such issues in everyday clinical domains.

Narrative reviews draw on rigorous science, yet their synthetic structure involves subjective interpretation. Condensing a century of research into a succinct list for clinicians, therefore, requires countless choices about which studies to exclude, which clinical analogues to offer, and which potential applications to discuss. The current review provides a framework for understanding potential errors in judgment, some language for identifying otherwise nebulous misgivings, and scholarly background on the underlying basic science. The current review does not indicate the frequency of the errors, the effectiveness of potential countermeasures, or the ultimate impact of decision science on improving clinical outcomes. These important issues need future research, and the answers do not yet appear in the medical literature.

Acknowledgements

This project was supported by the Canada Research Chair in Medical Decision Sciences, the Canadian Institutes of Health Research, and the Sunnybrook Research Institute. We thank the following individuals for helpful comments on earlier drafts of this article: William Chan, Edward Etchells, Lee Ross, Tom MacMillan, Steven Shadowitz, John Staples, and Jacob Udell.

Conflict of Interest

The authors declare that they do not have a conflict of interest. The funding organizations had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; and preparation, review, or approval of the manuscript.

References

1. Redelmeier DA, Dickinson VM. Determining whether a patient is feeling better: pitfalls from the science of human perception. J Gen Intern Med. 2011;26(8):900–6. doi: 10.1007/s11606-011-1655-3.

2. Dana J, Loewenstein G. A social science perspective on gifts to physicians from industry. JAMA. 2003;290(2):252–5. doi: 10.1001/jama.290.2.252.

3. Steinman MA, Shlipak MG, McPhee SJ. Of principles and pens: attitudes of medicine housestaff toward pharmaceutical industry promotions. Am J Med. 2001;110:551–7. doi: 10.1016/S0002-9343(01)00660-X.

4. Choudhry NK, Stelfox HT, Detsky AS. Relationships between authors of clinical practice guidelines and the pharmaceutical industry. JAMA. 2002;287(5):612–7. doi: 10.1001/jama.287.5.612.

5. Loewenstein G, Sah S, Cain DM. The unintended consequences of conflict of interest disclosure. JAMA. 2012;307(7):669–70. doi: 10.1001/jama.2012.154.

6. Jones EE. Interpreting interpersonal behavior: the effects of expectancies. Science. 1986;234(4772):41–6. doi: 10.1126/science.234.4772.41.

7. Rosenthal R, Jacobson L. Teachers’ expectancies: determinants of pupils’ IQ gains. Psychol Rep. 1966;19(1):115–8. doi: 10.2466/pr0.1966.19.1.115.

8. Rosenthal R, Fode KL. The effect of experimenter bias on the performance of the albino rat. Behav Sci. 1963;8(3):183–9. doi: 10.1002/bs.3830080302.

9. Wigal JK, Stout C, Kotses H, Creer TL, Fogle K, Gayheart L, Hatala J. Experimenter expectancy in resistance to respiratory air flow. Psychosom Med. 1997;59:318–22. doi: 10.1097/00006842-199705000-00015.

10. Leblanc VR, Brooks LR, Norman GR. Believing is seeing: the influence of a diagnostic hypothesis on the interpretation of clinical features. Acad Med. 2002;77(10):S67–9. doi: 10.1097/00001888-200210001-00022.

11. Lord CG, Ross L, Lepper MR. Biased assimilation and attitude polarization: the effects of prior theories on subsequently considered evidence. J Pers Soc Psychol. 1979;37(11):2098–109. doi: 10.1037/0022-3514.37.11.2098.

12. Mumma GH. Effects of three types of potentially biasing information on symptom severity judgements for major depressive episode. J Clin Psychol. 2002;58:1327–45. doi: 10.1002/jclp.10046.

13. Gertman PM, Stackpole DA, Levenson DK, Manuel BM, Brennan RJ, Janko GM. Second opinions for elective surgery: the mandatory Medicaid program in Massachusetts. N Engl J Med. 1980;302(21):1169–74. doi: 10.1056/NEJM198005223022103.

14. Epstein JI, Walsh PC, Sanfilippo F. Clinical and cost impact of second-opinion pathology: review of prostate biopsies prior to radical prostatectomy. Am J Surg Pathol. 1996;20(7):851–7. doi: 10.1097/00000478-199607000-00008.

15. Newman EA, Guest AB, Helvie MA, Roubidoux MA, Chang AE, Kleer CG, Diehl KM, Cimmino VM, Pierce L, Hayes D, Newman LA, Sabel SM. Changes in surgical management resulting from case review at a breast cancer multidisciplinary tumor board. Cancer. 2006;107:2346–51. doi: 10.1002/cncr.22266.

16. Broom DH. Familiarity breeds neglect? Unanticipated benefits of discontinuous primary care. Fam Pract. 2003;20(5):503–7. doi: 10.1093/fampra/cmg501.

17. Jamtvedt G, Young JM, Kristoffersen DT, O’Brien MA, Oxman AD. Audit and feedback: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2006;(2):CD000259.

18. Claessen HFA, Boshuizen HPA. Recall of medical information by students and doctors. Med Educ. 1985;19:61–7. doi: 10.1111/j.1365-2923.1985.tb01140.x.

19. Coughlin LD, Patel VL. Processing of critical information by physicians and medical students. J Med Educ. 1987;62:818–28. doi: 10.1097/00001888-198710000-00005.

20. Eva KW. The aging physician: changes in cognitive processing and their impact on medical practice. Acad Med. 2002;77(10):S1–6. doi: 10.1097/00001888-200210001-00002.

21. Gronlund SD, Ohrt DD, Dougherty MR, Perry JL, Manning CA. Role of memory in air traffic control. J Exp Psychol Appl. 1998;4(3):263–80. doi: 10.1037/1076-898X.4.3.263.

22. Shachak A, Hadad-Dayagi M, Ziv A, Reis S. Primary care physicians’ use of an electronic medical record system: a cognitive task analysis. J Gen Intern Med. 2009;24(3):341–8. doi: 10.1007/s11606-008-0892-6.

23. Cebul RD, Love TE, Jain AK, Hebert CJ. Electronic health records and quality of diabetes care. N Engl J Med. 2011;365(9):825–33. doi: 10.1056/NEJMsa1102519.

24. Wimmers PF, Schmidt HG, Verkoeijen PPJL, Wiel MWJ. Inducing expertise effects in clinical case recall. Med Educ. 2005;39:949–57. doi: 10.1111/j.1365-2929.2005.02250.x.

25. Strowig S, Raskin P. Improved glycemic control in intensively treated type 1 diabetic patients using blood glucose meters with storage capacity and computer-assisted analyses. Diabetes Care. 1998;21(10):1694–8. doi: 10.2337/diacare.21.10.1694.

26. Patt MR, Houston TK, Jenckes MW, Sands DZ, Ford DE. Doctors who are using e-mail with their patients: a qualitative exploration. J Med Internet Res. 2003;5(2):e9. doi: 10.2196/jmir.5.2.e9.

27. Sharman SJ, Garry M, Jacobson JA, Loftus EF, Ditto PH. False memories for end-of-life decisions. Health Psychol. 2008;27(2):291–6. doi: 10.1037/0278-6133.27.2.291.

28. Morris PE, Fritz CO. How to improve your memory. Psychologist. 2006;19(10):608–11.

29. Martinali J, Bolman C, Brug J, Borne B, Bar F. A checklist to improve patient education in a cardiology outpatient setting. Patient Educ Couns. 2001;42:321–8. doi: 10.1016/S0738-3991(00)00126-9.

30. Sims AC. Importance of a high tracing-rate in long-term medical follow-up studies. Lancet. 1973;302(7826):433–5. doi: 10.1016/S0140-6736(73)92287-3.

31. Cox A, Rutter M, Yule B, Quinton D. Bias resulting from missing information: some epidemiological findings. Br J Prev Soc Med. 1977;31(2):131–6. doi: 10.1136/jech.31.2.131.

32. Berner ES, Graber ML. Overconfidence as a cause of diagnostic error in medicine. Am J Med. 2008;121(5A):S2–23. doi: 10.1016/j.amjmed.2008.01.001.

33. Rock I, Anson R. Illusory contours as the solution to a problem. Perception. 1979;8(6):665–81. doi: 10.1068/p080665.

34. Dresselhaus TR, Luck J, Peabody JW. The ethical problem of false positives: a prospective evaluation of physician reporting in the medical record. J Med Ethics. 2002;28:291–4. doi: 10.1136/jme.28.5.291.

35. Szauter KM, Ainsworth MA, Holden MD, Mercado AC. Do students do what they write and write what they do? The match between the patient encounter and patient note. Acad Med. 2006;81(10 Suppl):S44–7. doi: 10.1097/00001888-200610001-00012.

36. Pitts SR, Adams RP. Emergency department hypertension and regression to the mean. Ann Emerg Med. 1998;31:214–8. doi: 10.1016/S0196-0644(98)70309-9.

37. Kahneman D, Tversky A. On the psychology of prediction. Psychol Rev. 1973;80(4):237–51. doi: 10.1037/h0034747.

38. Tversky A, Kahneman D. Judgment under uncertainty: heuristics and biases. Science. 1974;185(4157):1124–31. doi: 10.1126/science.185.4157.1124.

39. Whitney CW, Koroff M. Regression to the mean in treated versus untreated chronic pain. Pain. 1992;50:281–5. doi: 10.1016/0304-3959(92)90032-7.

40. Afshar A, Yekta Z. Subjective improvement of the hands in sequential bilateral carpal tunnel surgery. J Plast Reconstr Aesthet Surg. 2010;63(2):e193–4. doi: 10.1016/j.bjps.2009.02.070.

41. Bland JM, Altman DG. Some examples of regression towards the mean. BMJ. 1994;309(6957):780. doi: 10.1136/bmj.309.6957.780.

42. Morton V, Torgerson DJ. Effects of regression to the mean on decision making in health care. BMJ. 2003;326:1083–4. doi: 10.1136/bmj.326.7398.1083.

43. Greenwood MC, Rathi J, Hakim AJ, Scott DL, Doyle DV. Regression to the mean using the disease activity score in eligibility and response criteria for prescribing TNF-α inhibitors in adults with rheumatoid arthritis. Rheumatology. 2007;46:1165–7. doi: 10.1093/rheumatology/kem109.

44. Janis IL. Victims of groupthink: a psychological study of foreign-policy decisions and fiascoes. Boston: Houghton Mifflin Co.; 1972. p 277.

45. Busse JW, Wilson K, Campbell C. Attitudes towards vaccination among chiropractic and naturopathic students. Vaccine. 2008;26(49):6237–43. doi: 10.1016/j.vaccine.2008.07.020.

46. Blascovich J, Ginsburg GP, Veach TL. A pluralistic explanation of choice shifts on the risk dimension. J Pers Soc Psychol. 1975;31(3):422–9. doi: 10.1037/h0076479.

47. Unsworth CA, Thomas SA, Greenwood KM. Decision polarization among rehabilitation team recommendations concerning discharge housing for stroke patients. Int J Rehabil Res. 1997;20:51–69. doi: 10.1097/00004356-199703000-00005.

48. Christensen C, Larson JR, Abbott A, Ardolino A, Franz T, Pfeiffer C. Decision making of clinical teams: communication patterns and diagnostic error. Med Decis Making. 2000;20:45–50. doi: 10.1177/0272989X0002000106.

49. Schulz-Hardt S, Brodbeck FC, Mojzisch A, Kerschreiter R, Frey D. Group decision making in hidden profile situations: dissent as a facilitator for decision quality. J Pers Soc Psychol. 2006;91(6):1080–93. doi: 10.1037/0022-3514.91.6.1080.

50. Soll JB, Larrick RP. Strategies for revising judgment: how (and how well) people use others' opinions. J Exp Psychol Learn Mem Cogn. 2009;35(3):780–805. doi: 10.1037/a0015145.

51. Rangel EK. Clinical ethics and the dynamics of group decision-making: applying the psychological data to decisions made by ethics committees. HEC Forum. 2009;21(2):207–28. doi: 10.1007/s10730-009-9096-7.

52. Ozgediz D, Roayaie K, Debas H, Schecter W, Farmer D. Surgery in developing countries: essential training in residency. Arch Surg. 2005;140:795–800. doi: 10.1001/archsurg.140.8.795.