Abstract

In this paper we describe our approach to understanding wrongdoing in medical research and practice, which involves the statistical analysis of coded data from a large set of published cases. We focus on understanding the environmental factors that predict the kind and the severity of wrongdoing in medicine. Through review of empirical and theoretical literature, consultation with experts, the application of criminological theory, and ongoing analysis of our first 60 cases, we hypothesize that 10 contextual features of the medical environment (including financial rewards, oversight failures, and patients belonging to vulnerable groups) may contribute to professional wrongdoing. We define each variable, examine data supporting our hypothesis, and present a brief case synopsis from our study that illustrates the potential influence of the variable. Finally, we discuss limitations of the resulting framework and directions for future research.

Keywords: professional misconduct, professional wrongdoing, moral psychology, professional ethics

Throughout much of the 20th century, the field of moral psychology was largely uncharted. Piaget (1965) and Kohlberg (1981; Kohlberg & Candee, 1984) were pioneers in developing descriptions of moral reasoning based on systematic observation and interviews, and for many years moral psychology was nearly synonymous with the study of moral reasoning. By the end of the 20th century, however, even Kohlberg’s own students and colleagues had moved in new directions (Gibbs, 2003; Murphy & Gilligan, 1980; Snarey, 1985).

As the study of moral action grows more sophisticated, it becomes more unreasonable to expect that any one construct, much less any one study, will explain the majority of variance in moral action. Contributions to moral psychology have come from biological and evolutionary (Haidt, 2007; Sinnott-Armstrong, 2008a), industrial-organizational (Helton-Fauth et al., 2003; Trevino, Butterfield, & McCabe, 1998; Trevino, Weaver, & Reynolds, 2006), developmental (Gibbs, 2003; Rest, Narvaez, Bebeau, & Thoma, 1999; Turiel, 1983), personality (Antes et al., 2007; Munro, Bore, & Powis, 2005), philosophical (Appiah, 2008; Sinnott-Armstrong, 2008b), social (Milgram, 1963, 1965, 1974; Zimbardo, 2007), and social-learning psychology (Bandura, Underwood, & Fromson, 1975).

As one small contribution to the field, we aim to study wrongdoing in medical research and practice. We are particularly interested in exploring the environmental factors that contribute to wrongdoing. We have identified 14 primary forms of wrongdoing in medical research (such as fabricating data and failing to disclose known risks to participants) and 15 in medical practice (such as fraudulent billing and negligent care of patients) (DuBois, Kraus, & Vasher, in press).

Reasonable approaches to studying professional wrongdoing include interview studies with those who have engaged in and those who have observed wrongdoing (Davis & Riske, 2002; Koocher & Keith-Spiegel, 2010); correlational surveys assessing multiple constructs with test batteries (Martinson, Anderson, Crain, & De Vries, 2006; Mumford et al., 2006); and analyses of reports from oversight bodies (Davis, Riske-Morris, & Diaz, 2007). Each of these approaches yields different information and tells a different part of the story (Wilson, 1998).

In this paper we describe our approach to understanding professional wrongdoing in medical research and practice, focusing on the development of our predictor variables, that is, the environmental factors that contribute to wrongdoing. We present our criteria for variable inclusion, describe the 10 variables that meet our inclusion criteria, and discuss the data supporting their inclusion. We also include brief case synopses that illustrate the potential influence of each variable. Finally, we discuss limitations of the resulting framework and directions for future research.

This article is meant to serve two purposes: first, to provide content validation of our predictor variables; second, to provide readers with an overview of prominent work done on the environmental factors that may contribute to professional wrongdoing.

Historiometry and the Context of Variable Development

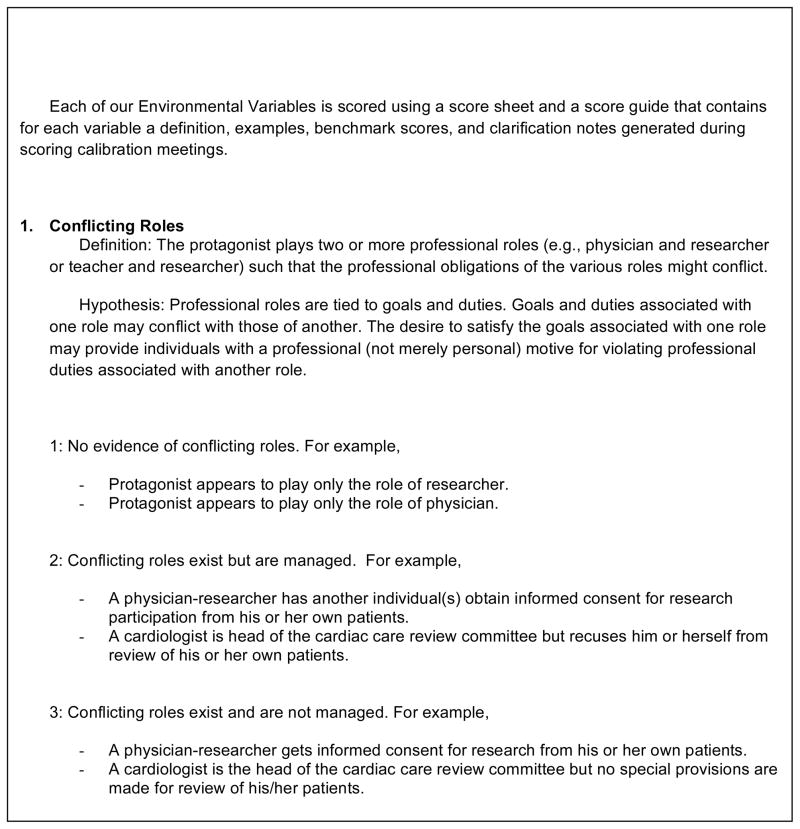

We are employing a historiometric method to understand and predict wrongdoing in health care research and practice. Historiometry involves reviewing a sufficiently large number of historical narratives about individual lives or events to enable coding and quantitative statistical analysis (Deluga, 1997; Mumford, 2006; Simonton, 1990, 1999, 2003; Suedfeld & Bluck, 1988). Analysis may be restricted to descriptive statistics, but may also include tests of significant differences between cases and even modeling using regression analyses. We are in the process of studying 120 cases of wrongdoing in medical research and practice, rating the kinds and the severity of the wrongdoing and the degree to which certain environmental variables are present. The kinds of wrongdoing are determined by three team members using a taxonomy developed for the project; the severity of wrongdoing is determined by five independent raters using a 6-item scale; and the predictor variables are rated by three team members using a 9-page benchmark scoring guide. Figure 1 provides an excerpt of the benchmark scoring guide. This approach will enable us to generate rich descriptive data and predictive models.

Figure 1.

Excerpt from the Environmental Factors Scoring Guide

We adopted a historiometric approach focusing on the professional environment for the following reasons: (1) it is ethically appropriate; (2) it is scientifically feasible; (3) the data come from real world settings; (4) environmental factors can be altered by institutions more easily than individual variables; and (5) historiometry has demonstrated predictive validity in similar studies of complex behavior.

Ethical Appropriateness

Many real-world experiments examining ethical behavior in health care practice or research settings would be unethical. For example, imagine randomly assigning physicians either to a control group or to a “treatment group” in which they receive kickbacks for the use of suboptimal surgical equipment. Alternately, imagine designing an observational study in which researchers adopt false identities, visit physicians’ offices to gather data on environmental factors such as the presence of conflicts of interest or vulnerable patients, and place hidden cameras and audio-recording devices to detect unethical behavior. Of course, either approach would put us in the company of those whose professional misconduct deserves observation and study. In contrast, in our historiometric study, we use as source material only published accounts of events that have already occurred. Therefore, we are complicit neither in the occurrence of the events nor in any violation of privacy and confidentiality.

Scientific Feasibility

Other proposed approaches to the study of professional misconduct have significant scientific limitations. Interview studies involving individuals who have engaged in or observed misconduct depend upon the reliability of self-report, accuracy of individuals’ understanding of their own motivations, and the willingness of individuals to volunteer to rehash negative experiences (Davis & Riske, 2002). Analyses of reports from oversight bodies are unlikely to yield useful environmental findings for the prevention of misconduct as these reports rarely provide rich descriptions of the environment and rather focus heavily on details proving the guilt of a particular individual (Davis et al., 2007); furthermore, the most detailed reports are frequently treated as highly confidential and are protected (Kohler & Bernhard, 2010). Given the extensive media reporting of misconduct in health care settings, it is feasible to develop case narratives that include sufficient detail regarding the professional environment for historiometric analysis. In producing our first 60 case synopses we have drawn material from an average of 35 articles per case.

Real-world Settings

As discussed, historiometry derives its cases from published reports of real accounts. The narratives we construct using multiple published sources include rich descriptions of events in their actual contexts. While experimental approaches undoubtedly have the advantage of controlling variables, they risk generalizing poorly to specific real-world settings. For example, experimental and survey data suggest that wrongdoing may occur in response to perceived institutional injustice (Keith-Spiegel & Koocher, 2005; Martinson et al., 2006; Martinson, Crain, De Vries, & Anderson, 2010); however, in the 60 cases that we have analyzed to date, this variable has appeared only to a modest degree in two cases, and it has no value in predicting wrongdoing. This does not suggest that “retribution” or restoring justice is not a real dynamic in wrongdoing but rather suggests that it may be so only in some contexts and perhaps not in the context of the sorts of wrongdoing that attract the attention of oversight boards or reporters.

Environmental Variables

Individual variables certainly play a role in professional wrongdoing. For example, data suggest that cynicism and a robust sense of self-entitlement correlate with diminished ethical problem solving and increased sanctioning of wrongdoing (Antes et al., 2007; Mumford, Connelly, Helton, Strange, & Osburn, 2001). However, these data do not unequivocally suggest that institutions should screen potential employees to eliminate such traits from their labor force; doing so could put them at significant legal risk and would encourage applicants to engage in test-savvy behavior to game the system. Further, such personality traits are difficult to change. In contrast, it may be more feasible to implement preventive measures related to environmental conditions (Committee on Assessing Integrity in Research Environments, 2002).

Predictive Validity

As a method, historiometry has successfully produced predictive models for understanding complex phenomena including destructive leadership (Deluga, 1997; Mumford, 2006), creative productivity (Simonton, 1984), and even the conditions for acts of military aggression (Suedfeld & Bluck, 1988). This gives us confidence that historiometry has the potential to be similarly predictive for environmental factors that contribute to professional wrongdoing in the health care environment.

Method

Inclusion Criteria

For purposes of our historiometric study, we aimed to identify environmental variables that meet the following inclusion criteria:

They are contextual features of the medical research and practice environments that might reasonably be identified and addressed by those who investigate instances of professional wrongdoing (e.g., investigative reporters, prosecutors, and ethics scholars). Thus, we do not focus on motivating factors such as the “intrinsic rewards” of doing research, e.g., satisfying intellectual curiosity or obtaining a publication; the former cannot be reasonably observed, and the latter is ubiquitous in research and cannot (or arguably should not) be changed.

They are “mid-level” features of the environment, that is, they are more specific than “moral climate” (a general description which could encompass many things) and less specific than so-called “kickbacks,” which are just one type of financial incentive for wrongdoing. The rationale behind focusing on mid-level features is the need to balance an interest in identifying fairly specific features of the environment that might be addressed by educators or policy makers with the need to limit the number of variables for statistical analysis (regressions and structural equation modeling) given our modest expected sample size (n=120). For example, a multiple regression with an alpha level of .05, a modest effect size (f²) of .15, a power level of .80, and 10 predictors would require a sample of 118.

They are supported by empirical studies and illustrated by cases. Although the study of professional wrongdoing is relatively young, we decided to track a variable only if it is supported by at least some empirical data including experimental studies, but also descriptive studies and published case reports.

They are supported by criminological theory. Popular criminal theory requires one to demonstrate a suspect had means, motive, and opportunity (MMO) in order to prove guilt (Maguire, Reiner, & Morgan, 2007). These are broadly considered essential “causal factors” in the production of criminal wrongdoing, and evidence that another suspect had MMO must be shared with the defense as exculpatory evidence (Jones, 2010). We apply this theory as a unifying theory in the selection of variables for two reasons. First, the empirical evidence supporting our variables lacks a coherent overarching framework, instead drawing from theories of behaviorism, social-cognition, social psychology, and others. A unifying theory is thus useful in understanding what the variables share in common. Second, the MMO theory is so widely embraced in law and popular culture (appearing regularly in television crime shows) that we expect oversight personnel and reporters to attend to and describe factors that demonstrate MMO when they describe cases of wrongdoing in reports and published stories. We have added to the MMO framework the idea of exculpability (hence we hereafter refer to MMO/E). While courts and investigative reporters may focus on exculpatory factors or extenuating circumstances insofar as they may diminish culpability, we focus on them as factors that may increase the likelihood of a behavior occurring.
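The sample-size figure cited under the mid-level criterion above can be checked with a short power calculation. What follows is a minimal sketch, not the procedure the authors report using. It assumes the conventional fixed-model F test that R² equals zero, with noncentrality λ = f² × N, numerator degrees of freedom equal to the number of predictors, and denominator degrees of freedom N − predictors − 1. All function names are hypothetical, and the incomplete beta function is a standard continued-fraction approximation written with the standard library only.

```python
import math


def _guard(value, tiny=1e-300):
    """Keep a continued-fraction term away from zero."""
    return value if abs(value) > tiny else tiny


def _beta_cf(a, b, x, max_iter=500, eps=1e-14):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    c = 1.0
    d = 1.0 / _guard(1.0 - (a + b) * x / (a + 1.0))
    h = d
    for m in range(1, max_iter + 1):
        even = m * (b - m) * x / ((a + 2 * m - 1.0) * (a + 2 * m))
        odd = -(a + m) * (a + b + m) * x / ((a + 2 * m) * (a + 2 * m + 1.0))
        for num in (even, odd):
            d = 1.0 / _guard(1.0 + num * d)
            c = _guard(1.0 + num / c)
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def betainc(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def f_cdf(f, u, v, noncentrality=0.0):
    """CDF of the (noncentral) F distribution as a Poisson mixture of betas."""
    x = u * f / (u * f + v)
    half = noncentrality / 2.0
    weight, total, j = math.exp(-half), 0.0, 0
    while True:
        total += weight * betainc(u / 2.0 + j, v / 2.0, x)
        j += 1
        weight *= half / j
        if (j > half and weight < 1e-15) or j > 1000:
            return total


def f_critical(alpha, u, v):
    """Upper-alpha critical value of the central F distribution (bisection)."""
    lo, hi = 0.0, 1.0
    while f_cdf(hi, u, v) < 1.0 - alpha:
        hi *= 2.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if f_cdf(mid, u, v) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    return hi


def regression_power(n, n_predictors, f2, alpha):
    """Power of the overall F test, assuming lambda = f2 * n."""
    u, v = n_predictors, n - n_predictors - 1
    return 1.0 - f_cdf(f_critical(alpha, u, v), u, v, noncentrality=f2 * n)


def required_sample_size(f2, n_predictors, alpha=0.05, target_power=0.80):
    """Smallest n whose power reaches the target (simple upward search)."""
    n = n_predictors + 2  # smallest n with at least 1 denominator df
    while regression_power(n, n_predictors, f2, alpha) < target_power:
        n += 1
    return n


if __name__ == "__main__":
    print(required_sample_size(f2=0.15, n_predictors=10))
```

Calling `required_sample_size(0.15, 10)` searches for the smallest N whose power reaches .80, which can then be compared with the figure of 118 reported above. Small discrepancies are possible if a different noncentrality convention is assumed.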

Our approach to selecting variables to track in our historiometric study involved three steps that were completed repeatedly and dialectically rather than in discrete sequential stages.

Literature Review

Using PsycINFO, we conducted reviews that drew from multiple traditions and sub-disciplines of psychology that address moral behavior and professionalism. We used LexisNexis to identify empirical literature from the fields of law and criminology. Throughout the past three years of the project we have also used a “snowballing” technique, examining the reference lists of seminal articles. Initially, the principal investigator and one research assistant conducted a broad literature review using general terms such as “misconduct,” “misbehavior,” and “wrongdoing.” Once a list of potential variables was developed using the literature and inclusion criteria, extensive targeted reviews were completed for each selected variable and variables were refined.

Consultation with Experts

Over the past three years, we have held multiple discussions with psychologists from diverse sub-specialties and areas of expertise and have had several grants undergo peer review. Input from these activities has shaped the directions of our literature reviews, helped us to narrow our inclusion criteria, and contributed to the development of the definitions for each specific variable. Key consultants are named in our acknowledgements section.

Case Analysis and Rating

Throughout the writing of our first 60 cases of wrongdoing, we used the MMO/E framework to determine whether any environmental factors not identified in our literature review appeared to meet our inclusion criteria. Preliminary analysis of cases using benchmark scoring also led to the reduction of other variables; benchmark scoring indicated, for example, that some variables are ubiquitous and could not be scored meaningfully even using a simple 3-point scoring system (see Figure 1). We describe several variables that we eliminated from our study in the Discussion section.

Results

Through review of the literature, consultation with experts, and ongoing analysis of our first 60 cases, we have identified 10 variables that meet the inclusion criteria described above. We name and define each variable, and then provide a rationale for the variable using the MMO/E framework, present highlights from studies involving the variable, and present one case from our historiometric study in which the variable appeared prominently (usually receiving a score of 3—see Figure 1) and may have played a causal role in the wrongdoing. Table 1 provides a list of the 10 variables with an indication of how they might increase the likelihood of wrongdoing within the MMO/E framework.

Table 1.

A Criminological Analysis of How Environmental Factors Enable Wrongdoing in Medical Research

| Environmental Factor | Means* | Motive | Opportunity | Exculpatory |
|---|---|---|---|---|
| 1. Conflicting roles | Positions provide authority to play multiple roles in relationship to patients | Divides loyalties | Increases access to patient-participants and trust | Presents professional rather than personal motives |
| 2. Wrongdoing financially rewarded | | Need or greed | | |
| 3. Others benefit from wrongdoing | | Decreases others’ motive to intervene; provides motive for complicity | Increases protagonist’s opportunity through decreased oversight | Wrongdoing could be altruistically motivated to benefit others |
| 4. Protagonist punished for right behavior | | Motivated to avoid punishment | | |
| 5. Others penalized for right behavior | | | Fear of punishment decreases likelihood of others to intervene | |
| 6. Mistreatment of protagonist | | Retribution or desire to address a perceived injustice | | |
| 7. Ambiguous norms | | | Peers and others less likely to police ambiguous behaviors | Protagonist may not recognize behavior is wrong |
| 8. Vulnerable victims | | Social stigma may motivate inferior protections | Vulnerabilities may decrease ability to protect self | |
| 9. Oversight failure | | | Absence of oversight enables wrongdoing without consequences to protagonist | |
| 10. Protagonist in authority over co-workers | Greater control over financial and personnel resources | | Reduced oversight | |

* We assume most professionals have the means to misbehave given that they have credentials, access to clients/participants, access to payment agencies, etc. Playing multiple professional roles and enjoying a position of authority simply increase means.

Conflicting Roles

We refer to “conflicting roles” when a protagonist plays two or more professional roles such that the professional obligations of the various roles might clash. For example, individuals may play the roles of treating physician and clinical researcher.

MMO/E rationale

Professional roles are tied to goals, duties, and expectations. The desire to satisfy the goals associated with one role may provide an individual with a professional (not merely personal) motive for violating the duties associated with another professional role. For example, while physicians have a fiduciary obligation to prioritize a patient’s well-being when making treatment decisions, serving as principal investigator may create an incentive to enroll the patient in a placebo-controlled trial, even when the risk of receiving placebo may not be in the patient’s best interests. Moreover, playing conflicting roles may present professionals with greater means and opportunity to misbehave. For example, a clinical researcher who is also a treating physician has greater access to patients and may enjoy greater levels of trust from patients than a non-clinician.

Background studies

Role conflict has been traced to high job dissatisfaction, which in turn has been shown to affect deviance (Sims, 2010; Sorensen & Sorensen, 1974). Lidz et al. (2009) found that physicians often put patients’ medical interests first by falsifying inclusion criteria to inappropriately enroll patients in studies the physician views as potentially beneficial. This could compromise the validity of study results. On the other hand, the Office of the Inspector General’s report, Recruiting Human Subjects: Pressures in Industry-Sponsored Clinical Research (2000), expressed concern with the practice of physicians enrolling their own patients and was particularly concerned that patients who do not meet inclusion criteria for a study might be enrolled in order to meet enrollment goals. Indeed, the possibility that physicians might exploit relationships to enroll or retain patients as participants could be increased by the widespread “therapeutic misconception” (the tendency of patients to confuse clinical research with individualized therapy or unreasonably expect clinical benefits; Appelbaum, Lidz, & Grisso, 2004), and by the fact that many patients report that a recommendation by their physician to enroll in a study means as much as a financial incentive to do so (Roberts et al., 2002).

In a meta-study, Cassel (1988) examined research being conducted in long-term care settings. She identified several situations in which physician-researchers were faced with competing norms. For example, one researcher identified a possibly suicidal elderly patient and had to decide between maintaining participant confidentiality (a prima facie norm in research and something specifically promised in this study) and promoting patient welfare (Cassel, 1988).

Case illustration

Sherrell Aston, MD, was chairman and surgeon director of the department of plastic surgery at Manhattan Eye, Ear and Throat Hospital (MEETH). He also oversaw surgical fellows (Hamra, 1996). In 1996, under Aston’s direction, several fellows initiated a study to compare two face-lift procedures; a different procedure was performed on each side of a patient’s face. Despite the clearly experimental nature of performing different surgical procedures on each side of the face to determine which is superior, Aston did not judge this activity to be research. He instead claimed that the fellows were conducting a “clinical project to evaluate surgical procedures” (Hilts, 1998). All the patients involved paid for their face-lifts, and some were unaware that two different procedures were being performed and compared (Hilts, 1998). In this case, Aston clearly occupied the professional roles of both physician and researcher. In failing to fulfill the ethical obligations of informed consent, Aston placed the goals of research (namely, scientific discovery) ahead of respect for patient autonomy; it is very likely that many patients would have wanted to know that two different procedures were being performed on opposite sides of their face and would have declined participation in such an experiment if it had been accurately described.

Wrongdoing Financially Rewarded

Financial rewards for wrongdoing exist when wrongdoing will generate additional revenue whether through direct payments, the prospect of significant returns on stocks, or other things of clear material value. The rewards may arise through ordinary financial relationships (such as fee-for-service payments) or the special voluntary relationships with industry that are labeled financial conflicts of interest.

MMO/E rationale

While money may not be the root of all evil, it has a long history of motivating both good actions (such as ordinary work) and wrongdoing – from bank robberies to embezzlement to murder for hire. Physicians enter into many different financial relationships with patients, government and private third party payers, and industries. These relationships present a potential motive to prioritize personal financial profit over patients’ best interests. Crucially, the influence of a motive need not be conscious and deliberate.

Background studies

Within the context of medical research, financial ties to industry may lead to suppression of non-optimal research results, incomplete or misleading descriptions and interpretations of trial results, and premature termination of clinical trials, as well as ghostwriting, a relaxation of scientific standards, and recruiting unqualified research subjects (Cohen & Siegel, 2005; Tereskerz, 2003).

Physicians’ financial ties to pharmaceutical and device manufacturers can bias medical decision making and potentially harm patients (Kassirer, 2006). Research has shown that physician acceptance of gifts from industry is associated with significant negative outcomes such as misinformation about medication; increased rates of prescription, preference and rapid prescribing of new drugs; making formulary requests for new and more expensive medications without proven advantages over existing medications; and non-rational prescribing practices (Wazana, 2000). When physicians are financially invested in hospitals or other medical services, they tend to make treatment decisions that maximize profits through self-referrals and the overutilization of services (Hollingsworth et al., 2010; Kouri, Parsons, & Alpert, 2002; Mitchell, 2005, 2007, 2008). Findings also indicate that physicians who own health care facilities are more likely than other physicians to refer well-insured patients to their own facilities and refer Medicaid patients to other facilities (Cram, Vaughan-Sarrazin, & Rosenthal, 2007; Gabel et al., 2008; Kahn, 2006; Mitchell, 2005). Physicians are sometimes unaware of how financial relationships can influence their independent judgment (Campbell, 2007; Dana & Loewenstein, 2003), and they frequently believe themselves to be unaffected by these relationships (Chren, 1999; Wazana, 2000).

Case illustration

Patrick Chan, MD, was a successful neurosurgeon who specialized in spinal fusion surgery, a procedure performed on patients with broken backs or spines badly damaged by disease or deformity that requires the use of high-tech medical devices (Blackwell, 2007). It was determined that in order to increase profits, Chan solicited and accepted kickbacks in the form of gifts, fraudulent consulting contracts, and support for fake research studies in exchange for using certain companies’ devices (Dembner, 2008; Gambrell, 2008; Moore, 2008). He was also found guilty of performing unnecessary surgeries in his quest to make money (Blackwell, 2007). Not only were Chan’s financial conflicts of interest unmanaged (financial interests were disclosed neither to his employer nor to his patients), but Chan also seemed to actively seek out financial arrangements that rewarded misconduct.

Others Benefit from Wrongdoing

Others—for example, employers, funding agencies, or clients—may stand to gain in some significant manner when professionals engage in wrongdoing. For example, a hospital may profit when a physician bills for a large volume of unnecessary procedures.

MMO/E rationale

First, superiors or institutions that benefit from an individual’s wrongdoing may encourage or pressure the individual to misbehave. Such pressure may be subtle or overt and may, for example, encourage a physician to overuse or misuse medical services or a researcher to enroll people into studies who do not meet inclusion criteria. Second, superiors, institutions, or others who are in a position to provide oversight, ensure correction, or act as whistleblowers may be motivated to turn a blind eye to the wrongdoing rather than intervene to end it if they might stand to benefit. Third, an individual may be altruistically motivated to misbehave when it benefits others. For example, in order to benefit a patient or group of patients, a physician might change a billing category (e.g., for patients with limited health insurance). A physician might also charge insured patients more than uninsured patients, misrepresent the difference to insurance companies, or routinely excuse patients from routine copayments and deductibles.

Background studies

Physicians’ loyalty to patients’ well-being and the honest practice of medicine may be threatened when medical judgments and decisions are influenced by the interests of other people – employers, funding agencies, and even patients (Rodwin, 1993). Behavior is influenced by not only one’s own norms for behavior, but also by the culture, goals, and expectations of coworkers and the organization where one works (Vardi & Weitz, 2004; Vardi & Wiener, 1996). Especially among peers (as opposed to supervisors), work group norms are powerful and promote a group loyalty that discourages the reporting of others’ misconduct (Greenberger, Miceli, & Cohen, 1987). Co-workers or those in positions of oversight may not be motivated to stop a peer’s misconduct unless that misconduct directly harms them in some way, and others’ awareness of how they might benefit from a peer’s misconduct provides additional incentives for encouraging or ignoring wrongdoing (Victor, Trevino, & Shapiro, 1993).

Case illustration

Felix Vasquez-Ruiz, MD, was a general practitioner who worked in the Chicago area in the late 1980s and 90s. Vasquez-Ruiz regularly targeted Spanish-speaking immigrants and administered thousands of unnecessary tests in order to fraudulently charge taxpayer-supported health programs including Medicare and Medicaid (“Family doctor charged with $400,000 insurance fraud,” 2000; United States vs Vasquez-Ruiz, 2001). The clinic where he worked was owned and operated by John and Yolanda Todd, Vasquez-Ruiz’s sister-in-law and her husband; the Todds provided Vasquez-Ruiz with office space, advertising, and employees for little or no cost. Vasquez-Ruiz performed a significant number of unnecessary tests while working within entities owned by the Todds – the same entities that received the insurance reimbursement (United States vs Vasquez-Ruiz, 2001). As his employers, the Todds were in the position to blow the whistle on Vasquez-Ruiz’s fraud but may have been unwilling to do so because of the direct financial benefits they received.

Protagonist Penalized for Right Behavior

Punishment of a professional may include termination of employment, bullying, or denial of pay increases, promotions, or access to resources. An individual may be punished, or based on observations may assume that he or she will be punished, for doing the right thing (e.g., prioritizing patients’ best interests over institutional profits).

MMO/E rationale

According to behavioral theory, punishment (actual, perceived, or anticipated) provides a motive for avoiding a certain behavior. Thus, it can be hypothesized that wrongdoing may be the result of punishment for right behaviors.

Background studies

Behavioral research indicates that punishing a behavior decreases the likelihood of that behavior (Jansen, 1985). Research has shown that physicians are often reluctant to disclose medical errors to patients, reflecting their fear of litigation—one form of penalty (Gallagher, Waterman, Ebers, Fraser, & Levinson, 2003). Alternately, superiors may have a history of punishing people who do not cooperate with institutional wrongdoing or who fail to deliver desired results at any cost. Management research has shown that an organization’s (formal or informal) reward system determines group norms and influences behavior as employees seek to do activities that are rewarded and avoid those that are punished (Jansen, 1985). Swazey, Anderson, and Lewis (1993) noted that the manner in which a department or institution deals with suspected research misconduct is not only crucial to the integrity of the research produced, it may additionally shape the behavior of trainees.

Case illustration

Dr. Cowardman mistakenly operated on the right eye of a five-year-old girl who needed surgery to repair a muscle in her left eye. He later altered the girl’s charts to justify surgeries on both eyes. When his error came to light and the family sued, he said he did not disclose his medical error and take responsibility for it because he feared he might lose his license and his malpractice insurance rates would rise significantly.1

Others Penalized for Right Behavior

Parties other than the person directly engaged in wrongdoing (e.g., institutions, clients, research sponsors) may be penalized for doing the right thing (e.g., exercising appropriate oversight or reporting unprofessional behavior). For example, a hospital administrator may not report known Medicare billing fraud by a physician for fear that the hospital will be fined.

MMO/E rationale

Actual, perceived, or anticipated punishment for right behaviors may increase the likelihood of wrongdoing by increasing the motive of others to ignore wrongdoing, thereby increasing opportunity. Additionally, the threat of penalty may lead others to encourage or pressure an individual to misbehave, or individuals may be altruistically motivated to misbehave when it prevents others from being punished.

Background studies

Case studies and surveys have found that fear of retaliation is a serious concern of potential whistle-blowers (Henik, 2008). Hence, whistle-blower statutes in the United States have provisions that protect whistle-blowers from retaliation. In a 1993 study of misconduct in academic research, students and faculty members were asked whether they felt that they could report cases of suspected misconduct at their institution without expecting retaliation. Fifty-three percent of students and 26 percent of faculty members responded that they could not report suspected misconduct by a faculty member without experiencing retaliation (Swazey et al., 1993). This perception exists even though data suggest that intervening can be done with few negative repercussions: Koocher and Keith-Spiegel (2010) found that in cases where researchers who were aware of misconduct did take action or get involved in some way, the outcomes were more often positive than negative and scientific wrongdoing was often corrected as a result of their actions.

Case illustration

During the 1980s, E. Donnall Thomas, MD, was conducting research on graft versus host disease (GvHD) in patients with leukemia under Protocol 126 at the Fred Hutchinson Cancer Research Center (the Hutch). Patients being treated under this experimental protocol had unexpectedly high rates of relapse and graft failure (Wilson & Heath, 2001a). Not only did this study involve inadequately managed financial conflicts of interest and inadequate informed consent (Wilson & Heath, 2001a, 2001b), but over the course of several years, institutional review board (IRB) members failed to voice concerns regarding the study for fear of retaliation from Thomas or punishment by institutional leaders. Hutch IRB members complained to federal officials that investigators “lied to, intimidated, ignored and punished” IRB members (Clamon, 2003). In this case, other environmental factors (such as failure of oversight) could arguably have been mitigated had it not been for these threats of penalties against IRB members for drawing attention to problems with the study.

Mistreatment of Protagonist

Individuals may act in a retaliatory manner or violate norms of ethical behavior when they perceive that they are being mistreated, whether this perception is based in fact or not. For example, a researcher who feels that a study protocol was unfairly reviewed by an IRB may find ways to bypass the IRB when conducting future research.

MMO/E rationale

When people feel they are being treated unfairly, this can create a motive to misbehave in order to “restore justice” or can create a perception that ethical rules no longer apply.

Background studies

Empirical research on organizational justice has demonstrated a strong association between perceptions of the fairness of employers’ policies and employees’ ethical behavior (Colquitt, Conlon, Wesson, Porter, & Ng, 2001; Colquitt, Noe, & Jackson, 2002; Skarlicki & Folger, 1997; Vardi & Weitz, 2004). For example, Greenberg (1990) observed the responses of factory workers who received pay cuts during a period of slow business. He found that when pay cuts were presented in such a way that employees perceived them as unfair, employee theft rates increased. In a later experiment, he changed the rate students were paid for completing simple work – in some cases, after the rate had supposedly been agreed upon. Students whose pay rate was changed after the agreement more often stole extra money when given the opportunity (Greenberg, 1993).

In a 2004 study that asked biomedical and social behavioral scientists to rate the performance of their own IRB compared to their “ideal” IRB, Keith-Spiegel, Koocher, and Tabachnick (2006) found attributes that demonstrate fairness (procedural justice, interactional justice, absence of bias, and pro-science sensitivity) to be most important to investigators. Based on these findings, Keith-Spiegel and Koocher (2005) hypothesize that investigators who perceive bias and unjust actions by IRBs may more frequently engage in research wrongdoing and describe several real (anonymized) scenarios in which this occurred. In a large national survey of researchers at both early and middle career stages, Martinson et al. (2006) found that scientists are more likely to engage in questionable research practices and research misconduct when they believe they are being treated unfairly by their institutions. A follow-up study of biomedical and social science faculty at 50 top U.S. research universities found that perceptions of fair treatment in the workplace are positively associated with self-report of behaviors that are aligned with ethical norms and negatively associated with self-report of misconduct (Martinson et al., 2010).

Case illustration

In the late 1960s, Stanislaw Burzynski, MD, developed an alternative cancer treatment he called “antineoplastons.” Burzynski wanted to conduct research on patients at Baylor University; however, when he was unable to secure IRB approval, he left Baylor and began treating patients with antineoplastons in a private practice setting. He was eventually investigated by the Food and Drug Administration (FDA) for conducting clinical trials without appropriate review and for shipping the antineoplastons outside of Texas, which was banned under the terms of an earlier court order (Malisow, 2009). In the 1990s, the National Cancer Institute (NCI) began clinical trials of antineoplastons. At first Burzynski collaborated with NCI, but eventually he came to believe that the institute was intentionally trying to sabotage his results, accused it of doing so, and ended the collaboration (Malisow, 2009). In response to his perception that the government was attempting to obstruct his research, Burzynski continued the research outside of formal oversight structures, setting up his own clinical trials (Lynn, 1998; Malisow, 2009).

Ambiguous Professional Norms

Right behavior may not always be clearly addressed by professional ethics or the law. This may arise from a relative lack of guidance on a matter; for example, until very recently there has been limited formal guidance regarding research with people with cognitive impairments. Or, there may be a lack of consensus regarding how to interpret and apply relevant norms; for example, there are competing interpretations of laws relating to physician abandonment of patients. Moral ambiguity can also be present when a relevant law or regulation exists but enforcement is inconsistent; for example, different states have different laws regarding physician-assisted suicide, and in many states where the practice is not legalized physicians are rarely if ever prosecuted.

MMO/E rationale

Ambiguous norms may be exculpatory: In some circumstances it may be difficult to determine the right course of action, and in such cases, an individual may not realize that a particular action constitutes wrongdoing. Moreover, ambiguous norms may increase opportunity for wrongdoing by enabling individuals to claim innocence when violating ethical norms that are widely recognized but as yet unwritten or inconsistently enforced.

Background studies

Research has shown that in situations where the right thing to do is unclear, people seek moral guidance from those around them, that is, they look at what is commonly done (Lapinski & Rimal, 2005). Although there is much agreement regarding the norms of science, the results of a survey of over 3,000 NIH-funded scientists demonstrate high levels of normative dissonance, suggesting that the environments in which scientists work are in conflict with their own beliefs about right behavior (Anderson, Martinson, & De Vries, 2007). While these scientists deeply subscribe to scientific norms, they believe their own behavior is somewhat less than the ideal and perceive others’ behavior as even less normative (Anderson et al., 2007). The power of this dissonance may be particularly significant in ambiguous circumstances, where the tendency is to look to peers for behavioral examples, particularly if there is a fear of competitive disadvantage (Anderson et al., 2007).

While the Uniform Requirements for authorship established in 1985 by the International Committee of Medical Journal Editors (ICMJE) were endorsed by numerous journals and institutions, evidence suggests that these guidelines have not changed authorship practice as much as was hoped, nor have they truly been adopted as a universal standard for authorship practices (DuBois & Dueker, 2009; Jones, 2003). A review of the instructions to contributors of 234 biomedical journals found that 41 percent provided absolutely no guidance about authorship, while the remaining journals’ guidelines varied widely; some were based on outdated versions of the ICMJE criteria (Wager, 2007).

Federal regulations for pediatric research (45 CFR 46, subpart D) do not provide a standard for “minor increase over minimal,” and “minimal” risks are to be held to the objective standard of the “risks of daily life.” IRB chairpersons were surveyed regarding their interpretation and application of federal pediatric risk standards to certain research procedures; their determination of whether certain interventions offered prospect of direct benefit; and their application of the federal definition of minimal risk in categorizing risk (Shah, Whittle, Wilfond, Gensler, & Wendler, 2004). The data show significant variability in the application of the federal pediatric risk/benefit categories, including interpretations that are inconsistent with federal regulations or misjudged actual risks.

Case illustration

In the late 1990s, P. Trey Sunderland, III, MD, was a leading Alzheimer’s disease researcher and director of the geriatric psychiatry research unit at the National Institute of Mental Health (NIMH). A congressional report found reasonable grounds to conclude that Sunderland received improper compensation from Pfizer for work he completed using NIMH resources, namely providing the pharmaceutical company with spinal fluid samples and clinical data (Lenzer, 2006). Then-NIH director Harold Varmus, MD, encouraged public-private collaborations to accelerate the pace of research, but employee compliance with guidelines regarding disclosure of payments from industry was not effectively monitored or enforced (Holden, 2006; Weiss, 2006). Additionally, although there were more than 660 NIH laboratories with repositories of human body fluids and tissues, there was no formal inventory or tracking system and no uniform, centralized, and mandatory authority for handling the samples, and these samples were shared without the explicit permission of the individuals who provided them (Agres, 2006). This case demonstrates both a failure to clearly enforce policies regarding disclosure of financial relationships and a slow pace in the development of regulations in an emerging area of concern to research (sharing of human biological samples).

Particularly Vulnerable Victims

While everyone is vulnerable to harm, particularly vulnerable victims are those who deviate from an “idealized empowered client” (i.e., a healthy, educated, financially stable member of the majority class living in the community) through deficits of power or social stigma. Factors that might contribute to deficits in power include cognitive impairments, being poor, lacking education, or being institutionalized (National Bioethics Advisory Commission, 2001).

MMO/E rationale

Deficits of power may diminish patients’ or participants’ ability to protect themselves, and social stigma may decrease the likelihood that others will offer protections. These factors may increase opportunity for wrongdoing; vulnerable individuals may stand out as easy targets to those who want to use others to promote their own interests.

Background studies

Data show that children, people of color, the economically disadvantaged, and the elderly are disproportionately victims of violent crimes, sexual assault, and other forms of abuse – including abuse by health care professionals. Snyder (2000) examined sexual assault rates and found that juveniles were significantly more likely to be victims of sexual assault; 67 percent of all sexual assault victims were under 18 at the time of the crime. The 2009 National Crime Victimization Survey found that African Americans were more likely than whites to be victims of each type of violent crime measured (rape, sexual assault, robbery, aggravated assault, and simple assault) (Truman & Rand, 2010). Age was also a predictor for overall violent victimization, with the youngest group measured (12–15 years of age) having the highest rates; rates steadily declined with age (Truman & Rand, 2010). A government-funded study analyzing census and survey data found that women living in economically disadvantaged neighborhoods suffered intimate partner violence at more than twice the rate of women in more advantaged neighborhoods (6% versus 2%) (Benson & Fox, 2004). A population-based telephone survey in Pittsburgh found African-American elders (age 60 years and older) to be at greater risk than non-African American elders for both psychological mistreatment and financial exploitation even when controlling for age, education, cognitive function, household composition, and other key covariates (Beach, Schulz, Castle, & Rosen, 2010).

Certain groups are also vulnerable to exploitation by health care professionals. A telephone survey of a random sample of 577 nurses and nursing aides working in long-term care facilities (both intermediate care and skilled nursing facilities) examined rates of abuse observed and committed by staff members (Pillemer & Moore, 1989). Thirty-six percent of respondents reported witnessing at least one incident of physical abuse by another staff member, and 81 percent observed at least one incident of psychological abuse. Ten percent reported committing one or more acts of physical abuse themselves, and 40 percent reported committing at least one act of psychological abuse (Pillemer & Moore, 1989).

Case illustration

During 1999 and 2000, Shaul Debbi, MD, built a thriving ophthalmology practice treating mentally ill residents of New York City’s adult homes (Levy, 2002b, 2003b). Federal prosecutors found that during an 18-month period, Debbi performed almost 50 operations on more than 30 residents of one home – even though these patients often had no current complaints or prior history of eye problems (Levy, 2002b; “New York: Doctor charged for eye surgery on mentally ill,” 2002). Almost all the residents of this particular home could legally sign a consent form for medical procedures, and persuading them to do so was not difficult (Levy, 2002a). One former employee of the home reported that residents were told that if they did not see the doctor, they would not get their allowance (Levy, 2002a). Debbi intentionally recommended surgery for the more confused and delusional residents, and they usually consented (Levy, 2003a). New York State requires that adult homes notify the relatives of residents if they undergo any medical procedures, yet in many instances, residents’ families claimed that they were never told of the surgeries (Levy, 2002a). The prosecution submitted notes written by Debbi in which he indicated how easy it would be to obtain consent from each patient, including phrases such as “Smart. Do not invite,” and, “Confused. Must invite” (Usborne, 2003).

Oversight Failure

Many individuals and offices have responsibilities for providing oversight in medical research and practice. In research, the FDA, IRBs, data and safety monitoring boards (DSMBs), sponsored program offices, and mentors provide oversight through prospective reviews, audits, or simple observation; in practice, quality care committees, state medical boards, federal auditors, and colleagues provide oversight for clinical practice, billing, and many other aspects of medicine. Oversight failures can range from failure to provide prospective review, audits, or enforcement to active concealment of known wrongdoing.

MMO/E rationale

Failures of oversight can create opportunities for wrongdoing to continue undetected and unpunished, perhaps increasing the likelihood that individuals will act upon their motives to misbehave.

Background studies

Research suggests that an organization’s structure and the degree of employee oversight are significant factors in predicting and promoting ethical behavior (Ferrell & Skinner, 1988). Incidents of unethical behavior are strongly correlated with opportunities to misbehave (Zey-Ferrell & Ferrell, 1982). Research has also shown that formal policies and infrastructure that facilitate the reporting of unethical behavior increase both employee awareness of potential ethical problems and the reporting of these problems to supervisors (Barnett, 1992; Barnett, Cochran, & Taylor, 1993). A review of U.S. Office of Research Integrity (ORI) cases of misconduct concluded that research misconduct is positively correlated with lax supervision and poor mentoring by mentors and senior colleagues (Wright, Titus, & Cornelison, 2008).

Case illustration

During the 1960s, Austin R. Stough, MD, a prison physician in Oklahoma, conducted drug trials for large pharmaceutical companies and harvested blood for plasma centers using inmates (Rugaber, 1969). During his most productive years, Stough presided over 130 different studies for 37 different drug companies, an estimated 25–50 percent of all experiments conducted in the US during that period (Hornblum, 1998). Stough’s experiments were roundly criticized as dangerous; he was accused of lacking medical competence, utilizing vastly undertrained technicians, and keeping poor records. His work occurred in an atmosphere of isolation and secrecy, and government and industry oversight was lax at best (Rugaber, 1969). An executive from Cutter Laboratories acknowledged that routine visits to monitor Stough’s trials had identified gross contamination and sloppy examination rooms, but Stough was never sanctioned (Rugaber, 1969). The same executive cited Stough’s government contacts as the reason he continued to receive hundreds of thousands of dollars to conduct drug trials (Rugaber, 1969). Gene Stipe, an Oklahoma state senator (and early critic of Stough), was influential in passing a bill that allowed Stough to continue his research in Oklahoma prisons. Investigative journalists later discovered that immediately prior to his sudden change of heart, Stipe began receiving a monthly retainer of $1,000 from an organization partially owned by Stough (Rugaber, 1969).

Protagonist in Authority over Co-Workers

Leadership positions grant authority over peers. This authority may come from holding a particular office or appointment, or it may represent the moral authority that comes with being very prominent or trusted in a particular field. For example, authority may derive from being a principal investigator of a research project, a department head or chairperson, a recognized leader in a scientific or medical field, or the owner of a medical practice.

MMO/E rationale

Authority allows control over information, resources, or the behavior of others, which may increase opportunity for wrongdoing. Leaders may also be subjected to less scrutiny, either because they are trusted or because they report to fewer superiors, again increasing opportunity. Leaders may be overextended, providing an extenuating circumstance for some mild forms of wrongdoing such as providing inadequate oversight or failing to meet quality standards. Finally, leaders have significant motives to engage in wrongdoing in order to maintain or grow their income and protect their reputation and position of power.

Background studies

Padilla, Hogan, and Kaiser (2007) have described the “toxic triangle”: destructive leaders (with resources), susceptible followers, and conducive environments. Research has shown that persons with narcissistic personality traits may gravitate toward leadership and authority roles due to a belief that they possess an extraordinary ability to influence or are superior to peers (Judge, LePine, & Rich, 2006). A positive correlation has been found between narcissism and deviant or counterproductive workplace behavior (Padilla et al., 2007; Penny & Spector, 2002). For example, studies have found salespersons with narcissistic personalities to be more comfortable with ethically questionable behavior (Judge et al., 2006; Soyer, Rovenpor, & Kopelman, 1999). A historiometric study of charismatic leaders found narcissism to be not only predictive of the need for power but also correlated with destructive behaviors (O’Connor, Mumford, Clifton, Gessner, & Connelly, 1995).

In role-playing experiments, persons exhibiting high social dominance orientation (the degree to which individuals desire and support strict hierarchy between social groups) were more likely to gain authority and more likely to sanction misbehavior (Son Hing, Bobocel, Zanna, & McBride, 2007). Such individuals may also have a coercive influence on the behavior of their subordinates. Both tendencies are particularly pronounced in hierarchical environments, such as academic medicine (Son Hing et al., 2007; Tepper, 2007). If the person in power displays unethical qualities or permits unethical behavior, a culture that permits unethical behavior is very likely to develop (Kemper, 1966).

Studies have also shown that people are inclined to obey authority. Milgram (1963) demonstrated this in his notorious studies; of Milgram’s 40 research subjects, 26 obeyed orders to the end, proceeding to punish an unseen learner until they reached the most potent shock available on the shock generator. Only five subjects stopped when the victim began pounding the wall – at the 300-volt mark. Half of nurses surveyed reported that they had carried out a physician order that they thought could be harmful to a patient (Krackow & Blass, 1995).

Data also suggest that leaders attract less oversight. This may in part be due to the fact that others fear whistle-blowing when superiors are involved. A 2010 survey of over 200 NIH-funded researchers found that the overall odds of intervening in cases of suspected wrongdoing were significantly lower in cases where the suspect was senior to the respondent (Koocher & Keith-Spiegel, 2010).

Case illustration

In early 2000, Chae Hyun Moon, MD, and a colleague established a lucrative cardiology practice at Redding Medical Center in California. Moon’s practice, based largely on fictitious disease diagnoses and unnecessary surgical procedures, produced a significant amount of income for the medical center (Russell & Wallace, 2002). Because of both his influence on the hospital’s finances and his roles as medical director and director of cardiology, Moon’s unscrupulous behavior went unchallenged and unreported for many years. In fact, as medical director, Moon would have been the first person to review any complaints about an individual physician’s practice.

Discussion

Ultimately, we decided upon the 10 environmental variables discussed above because they meet our criteria as being (a) contextual features of the medical research and practice environments that might reasonably be identified and addressed by institutions; (b) mid-level features of the environment; (c) supported by empirical studies and actual cases; and (d) supported by criminological theory (MMO/E), which serves to unite the disparate variables. Several other potential variables were explored but eventually excluded from our historiometric study. We will briefly discuss three of these factors: secrecy, non-financial conflicts of interest, and social dynamics.

Secrecy

We had originally planned to include “secrecy” as a separate factor but ultimately decided to consider it a dimension of oversight failures insofar as secrecy may describe a lack of reasonable transparency within an organization. This restriction in focus was justified by two considerations. First, individual secrecy – hiding one’s misdeeds – is ubiquitous; few people commit wrongdoing openly. If a variable appears in all cases, it cannot be used to explain or predict the kinds or severity of cases. Second, oversight failures can be identified and remedied, whereas the tendency of individuals toward secrecy when engaging in wrongdoing is not easily addressed apart from increasing transparency and oversight.

Non-financial Conflicts of Interest

Non-financial conflicts of interest are present in any professional environment and motivate both wrong and right behavior. In medical research and practice environments, prestige, recognition, and awards are certainly strong motivators and can influence the actions of individuals for whom financial gain is not important (Davis et al., 2007; Hansen & Hansen, 1993; Levinsky, 2002). Although publishing, tenure, promotions, and grants can translate to financial gain, their motivating power cannot be reduced to financial gain. Even the quest for the production of knowledge (a “good” aim in itself) can be a strong motivator for scientists’ unethical behavior insofar as it can conflict with the interests of patients or research participants. Thus, it is reasonable to hypothesize that non-financial conflicts of interest may play a role in wrongdoing. However, because they are intrinsic to the enterprise, they too are ubiquitous and therefore of little predictive value.

Social Dynamics

Several social dynamics may be very important for understanding professional wrongdoing, including modeling by leadership (Mumford et al., 2007), competition (Anderson, Ronning, De Vries, & Martinson, 2007; Davis et al., 2007), and obedience to authority (Trevino et al., 1998). These seem to be complex phenomena involving individual factors, environmental factors, and the interaction between them (Trevino et al., 2006).

Our 10 environmental variables may explain some of the influence of social dynamics on wrongdoing. For example, when leaders model wrongdoing this may create ambiguous norms and may decrease the likelihood that they provide proper oversight; competition may be engaged primarily in pursuit of financial rewards; and obedience may occur out of fear of punishment. Thus, we will capture variables that may be correlated with social dynamics. However, individual personality traits also affect how and how much these social factors influence behavior (Trevino et al., 2006; Zimbardo, 2007), and these cannot be captured in our historiometric study because we have found that such individual factors are not reliably reported. Moreover, each of these social dynamics may have some dimension that cannot be reduced to the interaction of individual traits with the 10 environmental variables. For example, modeling may simply and directly lead to unconscious imitation; competition may involve its own intrinsic rewards for some individuals (the pleasures of striving and of winning); and obedience to perceived authority may operate even when no threat of punishment is perceived.

We believe that research aimed at understanding professional wrongdoing must include the study of social dynamics. However, historiometric methods are poorly suited for this task because written narratives on professional wrongdoing rarely contain detailed descriptions of social dynamics. To the extent that dynamics such as competition are mentioned, it is difficult to determine the relative degree to which they exist. For example, most research environments are competitive, but only in some environments is competition explicitly fostered and collaboration thwarted as a result. The inability to capture certain social dynamics is simply a limitation of our study.

Conclusions

As noted, a historiometric approach to understanding wrongdoing will have limitations based on the kinds of information provided in published reports of misbehavior as well as limitations pertaining to the number of variables that can be considered in a study with modest sample sizes. Nevertheless, we have identified 10 environmental variables that can be identified by administrators and policy makers, are supported by empirical literature and an MMO/E theoretical framework, and can be quantified using benchmark scoring. Above all, we will study these variables as they occur in real-life cases and in sufficiently large numbers to permit both correlation testing and multiple regression modeling.

As we have explored our preliminary data we have yet to be surprised when a variable is significantly correlated with wrongdoing; as noted above, there are good reasons to believe that each of the 10 variables we are tracking will be predictive of wrongdoing. In contrast, we have been surprised to find that some variables very rarely appear in cases, and they tend to appear randomly (in a manner not predictive of any type or severity of wrongdoing). However, the scientific community encourages empirical research using a variety of methods for precisely this reason: One cannot know a priori what causes complex behaviors such as professional wrongdoing, and one may find different results using different methods and study populations.

The practical implications of our research will vary greatly depending on which variables we find predict behavior, but we expect our results to be useful in crafting recommendations for professional ethics education, ethics policy and oversight, and future research.

Acknowledgments

This paper was supported by grants UL1RR024992 and 1R21RR026313 from the NIH-National Center for Research Resources (NCRR) and a seed grant from the BF Charitable Foundation. We thank Drs. Michael Mumford, Marvin Berkowitz, and John Chibnall for discussions that have led to the refinement of the psychological variables described in this paper.

Footnotes

1. This is a slightly fictionalized version of a case in our historiometric study. In the real case upon which this vignette is modeled, the physician did not explicitly mention the fear of punishment for disclosing medical errors he committed because he refused to discuss the cases brought before the medical board.

Contributor Information

James M. DuBois, Bander Center for Medical Business Ethics, Saint Louis University.

Kelly Carroll, Gnaegi Center for Health Care Ethics, Saint Louis University.

Tyler Gibb, Gnaegi Center for Health Care Ethics, Saint Louis University.

Elena Kraus, Bander Center for Medical Business Ethics, Saint Louis University.

Timothy Rubbelke, Gnaegi Center for Health Care Ethics, Saint Louis University.

Meghan Vasher, Gnaegi Center for Health Care Ethics, Saint Louis University.

Emily E. Anderson, Neiswanger Institute for Bioethics and Health Policy, Stritch School of Medicine, Loyola University Chicago.

References

- Agres T. Senior NIH scientist faulted: Sunderland improperly shared thousands of human tissue samples with drug company for $280,000 in consulting fees. The Scientist. 2006 Jun 14. Retrieved from http://classic.the-scientist.com/news/print/23643/

- Anderson MS, Martinson BC, De Vries R. Normative dissonance in science: Results from a national survey of U.S. scientists. Journal of Empirical Research on Human Research Ethics. 2007;2(4):3–14. doi: 10.1525/jer.2007.2.4.3.

- Anderson MS, Ronning EA, De Vries R, Martinson BC. The perverse effects of competition on scientists’ work and relationships. Science and Engineering Ethics. 2007;13(4):437–461. doi: 10.1007/s11948-007-9042-5.

- Antes AL, Brown RP, Murphy ST, Waples EP, Mumford MD, Connelly S, Devenport LD. Personality and ethical decision-making in research: The role of perceptions of self and others. Journal of Empirical Research on Human Research Ethics. 2007;2(4):15–34. doi: 10.1525/jer.2007.2.4.15.

- Appelbaum PS, Lidz CW, Grisso T. Therapeutic misconception in clinical research: Frequency and risk factors. IRB: Ethics and Human Research. 2004;26(2):1–8.

- Appiah KA. Experiments in ethics. Cambridge, MA: Harvard University Press; 2008.

- Bandura A, Underwood B, Fromson M. Disinhibition of aggression through diffusion of responsibility and dehumanization of victims. Journal of Research in Personality. 1975;9:253–269.

- Barnett T. A preliminary investigation of the relationship between selected organizational characteristics and external whistleblowing by employees. Journal of Business Ethics. 1992;11(12):949–959.

- Barnett T, Cochran D, Taylor G. The internal disclosure policies of private-sector employers: An initial look at their relationship to employee whistleblowing. Journal of Business Ethics. 1993;12(2):127–136.

- Beach SR, Schulz R, Castle NG, Rosen J. Financial exploitation and psychological mistreatment among older adults: Differences between African Americans and non-African Americans in a population-based survey. Gerontologist. 2010;50(6):744–757. doi: 10.1093/geront/gnq053.

- Benson ML, Fox GL. When violence hits home: How economics and neighborhood play a role. National Institute of Justice Research in Brief. Washington, DC: U.S. Department of Justice, Office of Justice Programs; 2004. Retrieved from https://www.ncjrs.gov/pdffiles1/nij/205004.pdf

- Blackwell T. MD faces kickback allegations. National Post (Canada); 2007. Aug 4, p. A1.

- Campbell EG. Doctors and drug companies--scrutinizing influential relationships. New England Journal of Medicine. 2007;357(18):1796–1797. doi: 10.1056/NEJMp078141.

- Cassel CK. Ethical issues in the conduct of research in long term care. Gerontologist. 1988;28(Suppl):90–96. doi: 10.1093/geront/28.suppl.90.

- Chren MM. Interactions between physicians and drug company representatives. American Journal of Medicine. 1999;107(2):182–183. doi: 10.1016/s0002-9343(99)00189-8.

- Clamon JB. The search for a cure: Combating the problem of conflicts of interest that currently plagues biomedical research. Iowa Law Review. 2003;89(1):235–271.

- Cohen JJ, Siegel EK. Academic medical centers and medical research: the challenges ahead. Journal of the American Medical Association. 2005;294(11):1367–1372. doi: 10.1001/jama.294.11.1367.

- Colquitt JA, Conlon DE, Wesson MJ, Porter COLH, Ng KY. Justice at the millennium: A meta-analytic review of 25 years of organizational justice research. Journal of Applied Psychology. 2001;86(3):425–445. doi: 10.1037/0021-9010.86.3.425.

- Colquitt JA, Noe R, Jackson C. Justice in teams: Antecedents and consequences of procedural justice climate. Personnel Psychology. 2002;55(1):83–109.

- Cram P, Vaughan-Sarrazin MS, Rosenthal GE. Hospital characteristics and patient populations served by physician owned and non physician owned orthopedic specialty hospitals. BMC Health Services Research. 2007;7:155. doi: 10.1186/1472-6963-7-155.

- Committee on Assessing Integrity in Research Environments. Integrity in research: Creating an environment that promotes responsible conduct. Washington, DC: National Academies Press; 2002.

- Dana J, Loewenstein G. A social science perspective on gifts to physicians from industry. Journal of the American Medical Association. 2003;290(2):252–255. doi: 10.1001/jama.290.2.252.

- Davis MS, Riske-Morris M, Diaz SR. Causal factors implicated in research misconduct: Evidence from ORI case files. Science and Engineering Ethics. 2007;13(4):395–414. doi: 10.1007/s11948-007-9045-2.

- Davis MS, Riske ML. Preventing scientific misconduct: Insights from “convicted offenders”. In: Steneck NH, Scheetz M, editors. Investigating research integrity: Proceedings of the first ORI research conference on research integrity. Rockville, MD: Office of Research Integrity; 2002. pp. 143–149.

- Deluga RJ. Relationship among American presidential charismatic leadership, narcissism, and rated performance. Leadership Quarterly. 1997;8(1):49–65.

- Dembner A. Plea bolsters kickback case against Mass. medical firm. The Boston Globe. 2008 Jan 4:D1.

- DuBois JM, Dueker JM. Teaching and assessing the responsible conduct of research: A Delphi consensus panel report. The Journal of Research Administration. 2009;40(1):49–70.

- DuBois JM, Kraus EY, Vasher M. The development of a taxonomy of wrongdoing in medical practice and research. American Journal of Preventive Medicine. In press. doi: 10.1016/j.amepre.2011.08.027.

- Family doctor charged with $400,000 insurance fraud. The Associated Press State & Local Wire; 2000. Dec 21.

- Ferrell O, Skinner S. Ethical behavior and bureaucratic structure in marketing research organizations. Journal of Marketing Research. 1988;25(1):103–109.

- Gabel JR, Fahlman C, Kang R, Wozniak G, Kletke P, Hay JW. Where do I send thee? Does physician-ownership affect referral patterns to ambulatory surgery centers? Health Affairs. 2008;27(3):w165–174. doi: 10.1377/hlthaff.27.3.w165.

- Gallagher TH, Waterman AD, Ebers AG, Fraser VJ, Levinson W. Patients’ and physicians’ attitudes regarding the disclosure of medical errors. Journal of the American Medical Association. 2003;289(8):1001–1007. doi: 10.1001/jama.289.8.1001.

- Gambrell J. Ark surgeon pleads guilty to accepting kickback on equipment sale. The Associated Press State & Local Wire; 2008. Jan 4. Retrieved from LexisNexis database.

- Gibbs JC. Moral development and reality: Beyond the theories of Kohlberg and Hoffman. Thousand Oaks, CA: Sage Publications, Inc; 2003.

- Greenberg J. Employee theft as a reaction to underpayment inequity: The hidden cost of pay cuts. Journal of Applied Psychology. 1990;75(5):561–568.

- Greenberg J. Stealing in the name of justice: Informational and interpersonal moderators of theft reactions to underpayment inequity. Organizational Behavior and Human Decision Processes. 1993;54(1):81–103.

- Greenberger DB, Miceli MP, Cohen DJ. Oppositionists and group norms: The reciprocal influence of whistle-blowers and co-workers. Journal of Business Ethics. 1987;6(7):527–542.

- Haidt J. The new synthesis in moral psychology. Science. 2007;316(5827):998–1002. doi: 10.1126/science.1137651.

- Hamra ST. Is there a difference? A prospective study comparing lateral and standard SMAS face lifts with extended SMAS and composite rhytidectomies. Plastic and Reconstructive Surgery. 1996;98(7):1144–1147. doi: 10.1097/00006534-199612000-00001.

- Hansen BC, Hansen KD. Managing conflict of interest in faculty, federal government, and industrial relations. In: Cheney D, editor. Ethical issues in research. Frederick, MD: University Publishing Group; 1993. pp. 127–137.

- Helton-Fauth W, Gaddis B, Scott G, Mumford M, Devenport L, Connelly S, Brown R. A new approach to assessing ethical conduct in scientific work. Accountability in Research. 2003;10(4):205–228. doi: 10.1080/714906104.

- Henik E. Mad as hell or scared stiff? The effects of value conflict and emotions on potential whistle-blowers. Journal of Business Ethics. 2008;80(1):111–119.

- Hilts PJ. Study or human experiment? Face-lift project stirs ethical concerns. The New York Times. 1998 Jun 21:25–26.

- Holden C. NIH researcher charged with conflict of interest. ScienceNOW. 2006 Dec 5; Retrieved from http://news.sciencemag.org/sciencenow/2006/12/05-01.html.

- Hollingsworth JM, Ye Z, Strope SA, Krein SL, Hollenbeck AT, Hollenbeck BK. Physician-ownership of ambulatory surgery centers linked to higher volume of surgeries. Health Affairs. 2010;29(4):683–689. doi: 10.1377/hlthaff.2008.0567.

- Hornblum AM. Acres of skin: Human experiments at Holmesburg prison: A true story of abuse and exploitation in the name of medical science. New York, NY: Routledge, Inc; 1998.

- Jansen E, Von Glinow MA. Ethical ambivalence and organizational reward systems. The Academy of Management Review. 1985;10(4):814–822.

- Jones AH. Can authorship policies help prevent scientific misconduct? What role for scientific societies? Science and Engineering Ethics. 2003;9(2):243–256. doi: 10.1007/s11948-003-0011-3.

- Jones C. A reason to doubt: The suppression of evidence and the inference of innocence. Journal of Criminal Law & Criminology. 2010;100(2):415–474.

- Judge T, LePine J, Rich B. Loving yourself abundantly: Relationship of the narcissistic personality to self- and other perceptions of workplace deviance, leadership, and task and contextual performance. Journal of Applied Psychology. 2006;91(4):762–776. doi: 10.1037/0021-9010.91.4.762.

- Kahn CN III. Intolerable risk, irreparable harm: The legacy of physician-owned specialty hospitals. Health Affairs. 2006;25(1):130–133. doi: 10.1377/hlthaff.25.1.130.

- Kassirer JP. When physician-industry interactions go awry. Journal of Pediatrics. 2006;149(1 Suppl):S43–46. doi: 10.1016/j.jpeds.2006.04.051.

- Keith-Spiegel P, Koocher GP. The IRB paradox: Could the protectors also encourage deceit? Ethics & Behavior. 2005;15(4):339–349. doi: 10.1207/s15327019eb1504_5.

- Keith-Spiegel P, Koocher GP, Tabachnick B. What scientists want from their research ethics committee. Journal of Empirical Research on Human Research Ethics. 2006;1(1):67–82. doi: 10.1525/jer.2006.1.1.67.

- Kemper T. Representative roles and the legitimization of deviance. Social Problems. 1966;13:288–298.

- Kohlberg L. Essays in moral development: The philosophy of moral development. San Francisco, CA: Harper & Row; 1981.

- Kohlberg L, Candee D. On the relationship of moral judgment to moral action. In: Kurtines WM, Gewirtz JL, editors. Morality, moral behavior, and moral development. New York, NY: Wiley; 1984. pp. 52–73.

- Kohler J, Bernhard B. Serious medical errors, little public information; Transparency is forsaken in the name of patient confidentiality. St. Louis Post-Dispatch; 2010. Aug 1, p. A9.

- Koocher GP, Keith-Spiegel P. Peers nip misconduct in the bud. Nature. 2010;466(7305):438–440. doi: 10.1038/466438a.

- Kouri BE, Parsons RG, Alpert HR. Physician self-referral for diagnostic imaging: Review of the empiric literature. American Journal of Roentgenology. 2002;179(4):843–850. doi: 10.2214/ajr.179.4.1790843.

- Krackow A, Blass T. When nurses obey or defy inappropriate physician orders: Attributional differences. Journal of Social Behavior and Personality. 1995;10(3):585–594.

- Lapinski MK, Rimal RN. An explication of social norms. Communication Theory. 2005;15(2):127–147.

- Lenzer J. Researcher received undisclosed payments of 300,000 dollars from Pfizer. British Medical Journal. 2006;333(7581):1237. doi: 10.1136/bmj.39062.603495.DB.

- Levinsky NG. Nonfinancial conflicts of interest in research. New England Journal of Medicine. 2002;347(10):759–761. doi: 10.1056/NEJMsb020853.

- Levy CJ. Voiceless, defenseless and a source of cash. The New York Times; 2002a. Apr 30, p. A1.

- Levy CJ. Warrant issued for eye doctor who operated on mentally ill. The New York Times; 2002b. May 16, p. B3.

- Levy CJ. Doctor admits he did needless surgery on the mentally ill. The New York Times; 2003a. May 20, p. B1.

- Levy CJ. Doctor gets prison for fraud after exploiting mentally ill. The New York Times; 2003b. Sep 4, p. B10.

- Lidz CW, Appelbaum PS, Joffe S, Albert K, Rosenbaum J, Simon L. Competing commitments in clinical trials. IRB: Ethics and Human Research. 2009;31(5):1–6.

- Lynn C. To save my life. Health. 1998;12(6):112.

- Maguire M, Reiner R, Morgan R. The Oxford handbook of criminology. New York, NY: Oxford University Press; 2007.

- Malisow C. Cancer doctor Stanislaw Burzynski sees himself as a crusading researcher, not a quack. Houston Press; 2009. January. Retrieved from http://www.houstonpress.com/2009-01-01/news/cancer-doctor-stanislaw-burzynski-sees-himself-as-a-crusading-researcher-not-a-quack/