Abstract

Speakers convey meaning not only through words, but also through gestures. Although children are exposed to co-speech gestures from birth, we do not know how the developing brain comes to connect meaning conveyed in gesture with speech. We used functional magnetic resonance imaging (fMRI) to address this question and scanned 8- to 11-year-old children and adults listening to stories accompanied by hand movements, either meaningful co-speech gestures or meaningless self-adaptors. When listening to stories accompanied by both types of hand movements, both children and adults recruited inferior frontal, inferior parietal, and posterior temporal brain regions known to be involved in processing language not accompanied by hand movements. There were, however, age-related differences in activity in posterior superior temporal sulcus (STSp), inferior frontal gyrus, pars triangularis (IFGTr), and posterior middle temporal gyrus (MTGp) regions previously implicated in processing gesture. Both children and adults showed sensitivity to the meaning of hand movements in IFGTr and MTGp, but in different ways. Finally, we found that hand movement meaning modulates interactions between STSp and other posterior temporal and inferior parietal regions for adults, but not for children. These results shed light on the developing neural substrate for understanding meaning contributed by co-speech gesture.

During conversation, speakers convey meaning not only through spoken language, but also through hand movements—that is, through co-speech gestures—and both children and adults glean meaning from the gestures speakers produce (for review, see Hostetter, 2011). These gestures are fundamentally tied to language, even at the earliest stages of development (Iverson & Thelen, 1999), leading Bates and Dick (2002) to hypothesize a shared neural substrate for processing meaning from speech and from gesture. As evidence in support of this hypothesis, both electrophysiological (Habets, Kita, Shao, Ozyurek, & Hagoort, 2010; Holle & Gunter, 2007; Kelly, Creigh, & Bartolotti, 2010; Kelly, Kravitz, & Hopkins, 2004; Özyürek, Willems, Kita, & Hagoort, 2007; Wu & Coulson, 2005; Wu & Coulson, 2007a; Wu & Coulson, 2007b) and neuroimaging (Green et al., 2009; Holle, Gunter, Rüschemeyer, Hennenlotter, & Iacoboni, 2008; Holle, Obleser, Rueschemeyer, & Gunter, 2010; Skipper, Goldin-Meadow, Nusbaum, & Small, 2007; Straube, Green, Bromberger, & Kircher, 2011; Straube, Green, Weis, Chatterjee, & Kircher, 2009; Willems, Özyürek, & Hagoort, 2007; 2009; for review, see Willems & Hagoort, 2007) studies of adult listeners demonstrate that co-speech gesture influences neural processes in the same bilateral frontal-temporal-parietal network that is involved in comprehending language without gesture.

However, studies of adults cannot tell us how the developing brain processes gesture. Indeed, although gesture and speech form an integrated system from infancy (Butcher & Goldin-Meadow, 2000; Fenson et al., 1994; Özçalişkan & Goldin-Meadow, 2005), age-related changes in gesture comprehension and production are apparent throughout childhood (Botting, Riches, Gaynor, & Morgan, 2010; Mohan & Helmer, 1988; Stefanini, Bello, Caselli, Iverson, & Volterra, 2009). Pointing and referential gestures accompany and often precede the emergence of spoken language, and can be used to predict later spoken language ability (Acredolo & Goodwyn, 1988; Capirci, Iverson, Pizzuto, & Volterra, 1996; Morford & Goldin-Meadow, 1992; Rowe & Goldin-Meadow, 2009). Preschoolers continue to develop the ability to comprehend and produce symbolic gestures (Göksun, Hirsh-Pasek, & Golinkoff, 2010; Kidd & Holler, 2009; Kumin & Lazar, 1974; McNeil, Alibali, & Evans, 2000; Mohan & Helmer, 1988) and pantomime (Boyatzis & Watson, 1993; Dick, Overton, & Kovacs, 2005; O’Reilly, 1995; Overton & Jackson, 1973). Moreover, young children can use information from iconic gestures to learn concepts (e.g., the spatial concept “under”; McGregor, Rohlfing, Bean, & Marschner, 2009). During early and later childhood, children are developing both the ability to produce gestures to accompany narrative-level language (Demir, 2009; Riseborough, 1982; So, Demir, & Goldin-Meadow, 2010), and the ability to comprehend and take advantage of gestural information that accompanies spoken words (Thompson & Massaro, 1994), instructions (Church, Ayman-Nolley, & Mahootian, 2004; Goldin-Meadow, Kim, & Singer, 1999; Perry, Berch, & Singleton, 1995; Ping & Goldin-Meadow, 2008; Singer & Goldin-Meadow, 2005; Valenzeno, Alibali, & Klatzky, 2003), and narrative explanations (Kelly & Church, 1997; Kelly & Church, 1998).
Taken together, these data suggest that gesture development is a process that extends at least into later childhood, and coincides with the development of language at multiple levels from earliest spoken word production to narrative comprehension.

These age-related changes at the behavioral level leave open the possibility that children and adults differ in the neurobiological mechanisms that underlie gesture-speech integration—defined as the construction of a unitary semantic interpretation from separate auditory (speech) and visual (gesture) sources. The goal of this study is to explore these potential differences. We investigate how functional specialization seen in the way the adult brain processes co-speech gesture emerges over childhood. We ask, in particular, whether the developing brain recruits the same regions as the adult brain but to a different degree, recruits different regions entirely, or accomplishes gesture-speech integration through different patterns of connectivity among brain regions.

We explore this question in the context of current theories of brain development that emphasize both increasing functional specialization of individual brain regions, and increasing functional interaction of those regions with age (Johnson, Grossmann, & Kadosh, 2009). We also situate our work within a body of functional magnetic resonance imaging (fMRI) studies examining gesture in adults. These studies have found that the following brain regions, comprising a frontal-temporal-parietal network for processing language, are also sensitive to gestures that accompany speech: the anterior inferior frontal gyrus (IFG; in particular pars triangularis; IFGTr), posterior superior temporal gyrus (STGp) and sulcus (STSp), supramarginal gyrus (SMG) of the inferior parietal lobule, and posterior middle temporal gyrus (MTGp; Green et al., 2009; Holle et al., 2008; Holle et al., 2010; Skipper et al., 2007; Straube et al., 2011; Straube et al., 2009; Willems et al., 2007; 2009).

Three of these regions—STSp, IFGTr, and MTGp—have been the focus of investigations attempting to determine how the brain processes the semantic contribution of co-speech gesture (Green et al., 2009; Holle et al., 2008; Willems et al., 2007). The findings with respect to the STSp have been controversial. The STSp is known to be preferentially active for biologically relevant motions (Beauchamp, Lee, Haxby, & Martin, 2003; Grossman et al., 2000), and shows increasing sensitivity to these motions with age (Carter & Pelphrey, 2006). In previous work, we have found that the STSp is not particularly sensitive to the meaning conveyed by hand movements (Dick, Goldin-Meadow, Hasson, Skipper, & Small, 2009). However, Holle and colleagues (2008, p. 2020, p. 2010) have suggested that the left STSp processes “the interaction of gesture and speech in comprehension” and that this interaction “has to occur on a semantic level.” Our goal here is to replicate the finding that the STSp shows age-related changes in processing biologically relevant information from hand motion and, in the process, explore whether it is sensitive to the meaning of those hand movements during this later childhood developmental period.

Two other regions, IFGTr and MTGp, consistently show sensitivity to the semantic relation between hand movements and speech. For example, both left (Green et al., 2009; Willems et al., 2007) and right (Green et al., 2009) IFGTr respond more strongly to gestures that are semantically incongruent with, or unrelated to, speech than to gestures that are congruent. In contrast, left MTGp responds more strongly when pantomimes mismatch speech than when iconic gestures mismatch speech (Willems et al., 2009), suggesting sensitivity to hand movements that have an unambiguous meaning. Thus, both regions—IFGTr and MTGp—are sensitive to how hand movements relate to speech in adults (Straube et al., 2011), but this sensitivity may change during development as a child learns these relationships. It is therefore possible that IFGTr and MTGp will show age-related changes in how they process the meaning relation between speech and gesture.

Finally, there is increasing interest in determining not only how the brain develops in terms of specialization of individual brain regions, but also how it organizes into functionally specialized networks (Bitan et al., 2007; Dick, Solodkin, & Small, 2010; Fair et al., 2007a; 2007b; Karunanayaka et al., 2007). Investigating interactions among brain regions can contribute important information to our understanding of the biological mechanisms relating speech to gesture. For example, Willems and colleagues (2009) showed that functional interactions between the STSp/MTGp regions and other regions of the frontal-temporal-parietal language network are modulated by semantic congruency between pantomimes and speech. These interactions might therefore be important in developing gesture-speech integration processes. The findings also suggest that the contribution of these posterior temporal regions to gesture semantics might be missed unless they are examined in the context of the network in which they are situated.

We report here an fMRI experiment in which children, ages 8–11 years, and adults listened to stories accompanied by hand movements that were either meaningfully related to the speech (iconic and metaphoric co-speech gestures), or not meaningfully related (self-adaptive movements, such as scratching or adjusting a shirt collar). We focused on 8- to 11-year-old children because children in this age range have been found to differ from adults in how they integrate representational (iconic and metaphoric) gestures with narrative-level language (Kelly & Church, 1997; 1998).

We ask three questions: (1) Is the area of the posterior superior temporal sulcus that is most sensitive to biological movements of the hands in adults equivalently active in children? (2) Are the brain regions that are sensitive to meaning in hand movements in adults (i.e., IFGTr and MTGp, and possibly STSp) equivalently sensitive in children? (3) Does the connectivity of a frontal-temporal-parietal network for processing language and gesture show age-related changes that are moderated by gesture meaning?

Method

Participants

Twenty-four adults (12 females, range = 18–38 years; M age = 23.0 [5.6] years) and nine children (7 females, range = 8–11 years, M age = 9.5 [0.9] years) participated. One additional child was excluded for excessive motion (23% of timepoints > 1 mm; Johnstone et al., 2006). Participants were native English speakers, right-handed (according to the Edinburgh handedness inventory; Oldfield, 1971), with normal hearing and vision, and no history of neurological or psychiatric illness. All adult participants gave written informed consent. Participants under 18 years gave assent and informed consent was obtained from a parent. The Institutional Review Board of the Biological Sciences Division of The University of Chicago approved the study.

Stimuli

Functional scans were acquired while participants passively viewed adaptations of Aesop’s Fables (M = 53 s). Although the overall study contained four conditions, only two are reported here (analysis of the remaining two conditions can be found in Dick et al., 2009; and Dick et al., 2010): (1) Gesture, in which participants viewed a storyteller while she made natural co-speech gestures, primarily metaphoric or iconic gestures bearing a meaningful relationship to the speech; (2) Self-Adaptor, in which the storyteller performed self-grooming hand movements unrelated to the meaning of the speech.

Each participant heard two stories per condition separated by 16 s Baseline fixation. Audio was delivered through headphones (85 dB SPL). Video was presented through a back-projection mirror. Following each run, participants responded to questions about each story to confirm that they were paying attention. Mean accuracy was high for both adults (Gesture M = 88.8% [20.1%]; Self-Adaptor M = 76.1% [25.0%]) and children (Gesture M = 73.8% [25.4%]; Self-Adaptor M = 64.8% [35.1%]), with no significant group or condition differences or interaction (all p’s > .05, uncorrected; for adults, the difference in accuracy across conditions approached significance; t(23) = 1.93, p = .07). The results suggest both groups paid attention to the stories.

Data Acquisition and Analysis

MRI scans were acquired at 3-Tesla with a standard quadrature head coil (General Electric, Milwaukee, WI, USA). High-resolution T1-weighted images were acquired (120 axial slices, 1.5 × .938 × .938 mm). For functional scans, thirty sagittal slices (5.00 × 3.75 × 3.75 mm) were acquired using spiral blood oxygenation level dependent (BOLD) acquisition (TR/TE = 2000 ms/25 ms, FA = 77°). The first four scans of each run were discarded.

Special steps were taken to ensure that children were properly acclimated to the scanner environment. Following Byars et al. (2002), we included a “mock” scan during which children practiced lying still in the scanner while listening to prerecorded scanner noise. When children felt confident to enter the real scanner, the session began.

Analysis Steps

Two analyses were performed: (1) a traditional “block” analysis to compare differences in focal activity, and (2) a network analysis using structural equation modeling (SEM) to compare differences in the pattern of connectivity between brain regions comprising a network. The analysis steps closely follow those used in Dick et al. (2010), and are detailed below.

Preprocessing

Preprocessing steps were conducted using Analysis of Functional Neuroimages software (AFNI; http://afni.nimh.nih.gov) on the native MRI images. For each participant, image processing consisted of (1) three-dimensional motion correction using weighted least-squares alignment of three translational and three rotational parameters, and registration to the first non-discarded image of the first functional run, and to the anatomical volumes; (2) despiking and mean normalization of the time series; (3) inspection and censoring of time points occurring during excessive motion (> 1 mm; Johnstone et al., 2006); (4) modeling of sustained hemodynamic activity within a story via regressors corresponding to the conditions, convolved with a gamma function model of the hemodynamic response derived from Cohen (1997). We also included linear and quadratic drift trends, and six motion parameters obtained from the spatial alignment procedure. This analysis resulted in regression coefficients (beta weights) and associated t statistics measuring the reliability of the coefficients. False Discovery Rate (FDR; Benjamini & Hochberg, 1995; Genovese, Lazar, & Nichols, 2002) statistics were calculated to correct for multiple comparisons at the individual participant level (applicable for the SEM analysis); (5) to remove additional sources of spurious variance unlikely to represent signal of interest, we regressed from the time series signal from both lateral ventricles, and from bilateral white matter (Fox et al., 2005).
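The FDR correction mentioned in step (4) can be illustrated with a minimal Python sketch of the Benjamini and Hochberg (1995) step-up procedure. This is not the AFNI implementation used in the study; the function name `benjamini_hochberg` and the p-values are made up for illustration.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return booleans marking which p-values survive FDR control at level q."""
    m = len(p_values)
    if m == 0:
        return []
    # Indices of the p-values in ascending order.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest 1-based rank k whose p-value satisfies p_(k) <= (k / m) * q.
    largest_k = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            largest_k = rank
    # All p-values ranked at or below k are declared significant.
    significant = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= largest_k:
            significant[idx] = True
    return significant


# Hypothetical per-voxel p-values for one participant (not data from the study).
voxel_p = [0.001, 0.008, 0.039, 0.041, 0.20, 0.74]
mask = benjamini_hochberg(voxel_p, q=0.05)
```

Here only the first two hypothetical voxels survive. The others have uncorrected p < .05 but exceed their rank-scaled thresholds.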

Time series assessment and temporal re-sampling in preparation for SEM

Due to counterbalancing, story conditions differed slightly in length (i.e., across participants the same stories were used in different conditions). Because SEM analyzes covariance structures, the time series must be the same length across individuals and conditions, and this required a temporal resampling of the data. To standardize time series length, we first imported time series from significant voxels (p < .05; FDR corrected) in predefined ROIs (see below), and removed outlying voxels (> 10% signal change). We averaged the signal to achieve a representative time series across the two runs for each ROI for each condition (Gesture and Self-Adaptor; baseline time points were excluded). We then re-sampled these averaged time series down (from a maximum of 108 s) to 92 s using a locally weighted scatterplot smoothing (LOESS) method. In this method each re-sampled data point is estimated with a weighted least squares function, giving greater weight to actual time points near the point being estimated, and less weight to points farther away (Cleveland & Devlin, 1988). The output of this resampling was a time series with an equal number of time points for each group for each condition. Non-significant Box’s M tests indicated no differences in the variance-covariance structure of the re-sampled and original data. The SEM analysis was conducted on these re-sampled time-series.
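The resampling step can be sketched in Python as a simplified locally weighted linear regression with tricube weights. This is an illustration, not the routine used in the study. The function name `loess_resample`, the `span` value, and the input series are assumptions; the only values taken from the text are the 108 s and 92 s durations and the 2 s TR, which give 54 and 46 samples.

```python
def loess_resample(values, n_out, span=0.3):
    """Resample evenly spaced `values` to `n_out` points with local linear fits."""
    n_in = len(values)
    if n_in < 3 or n_out < 2:
        raise ValueError("need at least 3 input points and 2 output points")
    # Number of neighbours per local fit; `span` is an assumed smoothing fraction.
    k = max(3, min(n_in, round(span * n_in)))
    resampled = []
    for j in range(n_out):
        # Location of output point j on the original (0 .. n_in - 1) time axis.
        x0 = j * (n_in - 1) / (n_out - 1)
        nearest = sorted(range(n_in), key=lambda i: abs(i - x0))[:k]
        h = max(abs(i - x0) for i in nearest)  # local bandwidth
        # Tricube weights: observed points near x0 count more than distant ones.
        w = [(1.0 - (abs(i - x0) / h) ** 3) ** 3 for i in nearest]
        sw = sum(w)
        mx = sum(wi * i for wi, i in zip(w, nearest)) / sw
        my = sum(wi * values[i] for wi, i in zip(w, nearest)) / sw
        sxx = sum(wi * (i - mx) ** 2 for wi, i in zip(w, nearest))
        sxy = sum(wi * (i - mx) * (values[i] - my) for wi, i in zip(w, nearest))
        # Weighted least-squares line evaluated at x0 (flat if only one point has weight).
        slope = sxy / sxx if sxx > 1e-12 else 0.0
        resampled.append(my + slope * (x0 - mx))
    return resampled


# Made-up ROI time series: 54 samples (108 s at TR = 2 s) reduced to 46 samples (92 s).
original = [0.1 * t for t in range(54)]
shorter = loess_resample(original, n_out=46)
```

Because each output point comes from a local line fit, a purely linear input such as the one above is reproduced on the new time grid.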

Signal-to-noise ratio and analysis

We carried out a Signal-to-Noise Ratio (SNR) analysis to determine if there were any cortical regions where, across participants and groups, it would be impossible to find experimental effects simply due to high noise levels (Parrish, Gitelman, LaBar, & Mesulam, 2000). We present the details and results in the Supplemental Materials. The analysis suggested that SNR was equivalent across adults and children, and was sufficient to detect differences within and across age groups.

Second-Level Analysis: Differences in Activity Across Age Group and Condition

We conducted second-level group analysis on a two-dimensional surface rendering of the brain constructed in Freesurfer (http://surfer.nmr.mgh.harvard.edu; Dale, Fischl, & Sereno, 1999; Fischl, Sereno, & Dale, 1999). Note that although children and adults do show differences in brain morphology, Freesurfer has been used to successfully create surface representations for children (Tamnes et al., 2009), and even neonates (Pienaar, Fischl, Caviness, Makris, & Grant, 2008). Further, in the age range we investigate here, atlas transformations similar to the kind used by Freesurfer have been shown to lead to robust results without errors when comparing children and adult functional images (Burgund et al., 2002; Kang, Burgund, Lugar, Petersen, & Schlaggar, 2003). Using AFNI, we interpolated regression coefficients, representing percent signal change, to specific vertices on the surface representation of the individual’s brain. Image registration across the group required an additional standardization step accomplished with icosahedral tessellation and projection (Argall, Saad, & Beauchamp, 2006). The functional data were smoothed on the surface (4 mm FWHM) and imported to a MySQL relational database (http://www.mysql.com/). The R statistical package (version 2.6.2; http://www.R-project.org) was then used to query the database and analyze the information stored in these tables (Hasson, Skipper, Wilde, Nusbaum, & Small, 2008). Finally, we created an average of the cortical surfaces in Freesurfer on which to display the results of the whole-brain analysis.

We conducted whole-brain mixed (fixed and random) effects Condition (repeated measure; 2) × Age Group (2) × Participant (33) Analysis of Variance (ANOVA) on the normalized regression coefficients (statistical outliers, defined as signal > 3 SDs from the mean of transverse temporal gyrus, were removed, representing < 1% of the data). Given the unequal sample size across age group, the Welch correction for unequal variances was used to examine the age group and condition differences, and interaction. To control for the family-wise error (FWE) rate for multiple comparisons, we clustered the data using a non-parametric permutation method. This method proceeds by resampling the data under the null hypothesis without replacement, making no assumptions about the distribution of the parameter in question (Hayasaka & Nichols, 2003; Nichols & Holmes, 2002). Using this method, we determined a minimum cluster size (e.g., taking cluster sizes above the 95th percentile of the random distribution controls for the FWE at the p < .05 level). Reported clusters used a per-surface-vertex threshold of p < .01 and controlled for the FWE rate of p < .05. For regions characterized by a significant age group by condition interaction on the whole-brain, we further explored the nature of the interaction in an anatomically defined region of interest (ROI) analysis (described in the Results section).
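The cluster-size cutoff can be illustrated with a short Python sketch. It assumes the permutation step has already produced, for each resampling, the largest suprathreshold cluster under the null hypothesis. The function names, the one-dimensional stand-in for surface adjacency, and all numbers are hypothetical and are not the study's implementation.

```python
import math


def largest_cluster(suprathreshold):
    """Length of the longest run of True values.

    A 1-D stand-in for a cluster of contiguous surface vertices that pass
    the per-vertex threshold; a real surface would use mesh adjacency.
    """
    best = run = 0
    for flag in suprathreshold:
        run = run + 1 if flag else 0
        best = max(best, run)
    return best


def cluster_size_cutoff(null_max_sizes, alpha=0.05):
    """Smallest null value with at least (1 - alpha) of null maxima at or below it."""
    ordered = sorted(null_max_sizes)
    index = math.ceil((1.0 - alpha) * len(ordered)) - 1
    return ordered[index]


# Hypothetical largest-cluster sizes from 10 permutations (real analyses use many more).
null_max_sizes = [3, 5, 2, 8, 4, 6, 3, 7, 5, 4]
cutoff = cluster_size_cutoff(null_max_sizes)

# An observed map is reported only if its cluster is larger than the cutoff.
observed = largest_cluster([False, True, True, True, False, True])
is_significant = observed > cutoff
```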

Second Level Analysis: Differences in Network Connectivity

The goal of the network analysis was to determine if functional interactions among perisylvian regions showed age differences that were moderated by the meaning of hand movements accompanying speech. Network analysis was performed with SEM using Mplus software following the procedural steps outlined in Dick et al. (2010), Solodkin et al. (2004) and McIntosh and Gonzalez-Lima (1994). These steps are presented below.

Specification of structural equation model

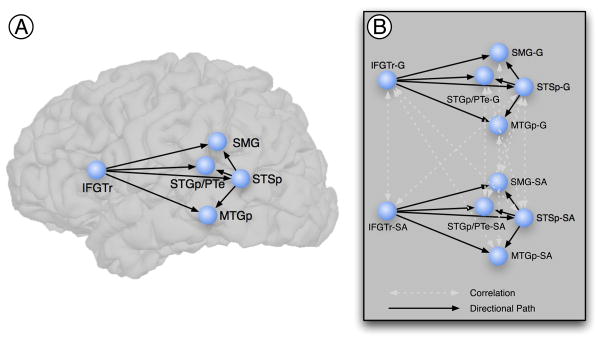

The specification of a theoretical anatomical model requires the definition of the nodes of the network (i.e., ROIs) and the directional connections (i.e., paths) among them. Our theoretical model represents a compromise between the complexity of the neural systems implementing language and gesture comprehension and the interpretability of the resulting model. Although a complex model might account for most or all known anatomical connections, it would be nearly impossible to interpret (McIntosh & Gonzalez-Lima, 1992; McIntosh et al., 1994). Further, our hypotheses focused on directional interactions of IFGTr and STSp on SMG, STGp/PTe, and MTGp regions, all previously implicated in processing gesture (reviewed in the Introduction; Figure 1A).

Figure 1.

Regions and directional connections specified in the structural equation model. A. The structural equation model specified seven directional connections (black single-headed arrows) from the inferior frontal gyrus, and from posterior superior temporal sulcus, to inferior parietal and posterior superior and middle temporal regions. The age group by condition interaction was assessed for these pathways. Anatomical regions of interest were defined on each individual brain following the anatomical conventions outlined in Table 1. B. The full model dealt with the inherent correlation, due to the within-subjects design, of the time series across conditions by modeling these correlations (white double-headed arrows). The full model was fit to both age groups and to both hemispheres. IFGTr = Inferior frontal gyrus (pars triangularis); SMG = Supramarginal gyrus; STGp/PTe = Posterior superior temporal gyrus and planum temporale; STSp = Posterior superior temporal sulcus; MTGp = Posterior middle temporal gyrus.

Connectivity among the regions was constrained by known anatomical connectivity in macaques (Schmahmann & Pandya, 2006). ROIs were defined on each individual surface representation using an automated parcellation procedure in Freesurfer (Desikan et al., 2006; Fischl et al., 2004), incorporating the neuroanatomical conventions of Duvernoy (Duvernoy, 1991). We manually edited the default parcellation to delineate the posterior portions of the predefined temporal regions. The regions are anatomically defined in Table 1. Surface interpolation of functional data inherently results in spatial smoothing across contiguous ROIs (and potentially spurious covariance). To avoid this, surface ROIs were imported to the native MRI space, and for SEM the time series were not spatially smoothed.

Table 1.

Anatomical Description of the Cortical Regions of Interest

| ROI | Anatomical Structure | Brodmann’s Area | Delimiting Landmarks |
|---|---|---|---|
| IFGTr | Pars triangularis of the inferior frontal gyrus | 45 | A = A coronal plane defined as the rostral end of the anterior horizontal ramus of the sylvian fissure; P = Vertical ramus of the sylvian fissure; S = Inferior frontal sulcus; I = Anterior horizontal ramus of the sylvian fissure |
| SMG | Supramarginal gyrus | 40 | A = Postcentral sulcus; P = Sulcus intermedius primus of Jensen; S = Intraparietal sulcus; I = Sylvian fissure |
| STGp/PTe | Posterior portion of the superior temporal gyrus and planum temporale | 22, 42 | A = A vertical plane drawn from the anterior extent of the transverse temporal gyrus; P = Angular gyrus; S = Supramarginal gyrus; I = Dorsal aspect of the upper bank of the superior temporal sulcus |
| MTGp | Posterior middle temporal gyrus | 21 | A = A vertical plane drawn from the anterior extent of the transverse temporal gyrus; P = Temporo-occipital incisure; S = Superior temporal sulcus; I = Inferior temporal sulcus |
| STSp | Posterior superior temporal sulcus | 22 | A = A vertical plane drawn from the anterior extent of the transverse temporal gyrus; P = Angular gyrus and middle occipital gyrus and sulcus; S = Angular and superior temporal gyrus; I = Middle temporal gyrus |

Note. A = Anterior; P = Posterior; S = Superior; I = Inferior; M = Medial; L = Lateral. Based on the automated parcellation from Freesurfer and the anatomical conventions of Duvernoy (1999).

The complete structural equation model, in addition to specifying the regions and the seven directional paths (Figure 1A), also models the correlations (double-headed arrows) between the conditions, which accounts for the within-subjects nature of the design. For example, because the same participants received both the Gesture and Self-Adaptor conditions, we explicitly modeled the covariance for each region (e.g., the correlation between IFGTr-G and IFGTr-SA). Based on modification indices, a small number of additional correlations were modeled across conditions to improve model fit. The complete model is given in Figure 1B, with paths of interest denoted by black single-headed arrows, and correlations denoted by white double-headed arrows. The same model was estimated separately for both age groups and for both hemispheres. Following the separate estimation and analysis of fit of the model, the “stacked model” or multiple group approach was used to assess the age group by condition interaction (see below).

We next proceeded to statistical construction of the structural equation models, which required the following steps:

Generation of covariance matrix

For each age group, we generated a variance-covariance matrix based on the mean time series from active voxels (p < .05, FDR corrected on the individual participant) across all participants, for all ROIs. One covariance matrix per group, per condition was generated.
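As a rough illustration of this step, the Python sketch below computes a sample variance-covariance matrix from a set of ROI time series. The ROI labels come from the model, but the function name `covariance_matrix` and the numbers are made up. In the study one such matrix was generated per group and per condition from the resampled mean time series.

```python
def covariance_matrix(roi_series):
    """Sample variance-covariance matrix (n - 1 denominator) for ROI time series.

    `roi_series` maps an ROI label to a list of signal values; all lists
    must have the same length. Returns the label order and the matrix.
    """
    names = list(roi_series)
    n = len(roi_series[names[0]])
    if n < 2 or any(len(roi_series[r]) != n for r in names):
        raise ValueError("all ROI series must share the same length (at least 2)")
    # Center each series on its own mean.
    centered = {}
    for r in names:
        mean = sum(roi_series[r]) / n
        centered[r] = [v - mean for v in roi_series[r]]
    # Entry (a, b) is the covariance between ROI a and ROI b.
    matrix = [
        [sum(x * y for x, y in zip(centered[a], centered[b])) / (n - 1) for b in names]
        for a in names
    ]
    return names, matrix


# Made-up signal values for two ROIs in one condition (not data from the study).
series = {"IFGTr": [1.0, 2.0, 3.0, 4.0], "STSp": [2.0, 4.0, 6.0, 8.0]}
labels, cov = covariance_matrix(series)
```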

Solution of structural equations

We used maximum likelihood to obtain a solution for each path coefficient representing the connectivity between network ROIs. The best solution minimizes the difference between the observed and predicted covariance matrices.

Goodness of fit between the predicted and observed variance-covariance matrices

Model fit was assessed against a $\chi^2$ distribution with $q(q+1)/2 - p$ degrees of freedom ($q$ = number of nodes; $p$ = number of unknown coefficients). Good model fit is obtained if the null hypothesis (specifying observed covariance matrix = predicted covariance matrix) is not rejected (Barrett, 2007).
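The degrees-of-freedom formula counts the unique entries of the covariance matrix and subtracts the number of estimated coefficients. A minimal Python sketch follows. The function name and the example values of q and p are hypothetical and are not the counts from the model reported here.

```python
def model_degrees_of_freedom(q, p):
    """Degrees of freedom for the chi-square test of SEM fit.

    q: number of nodes (observed variables) in the model.
    p: number of unknown (freely estimated) coefficients.
    """
    unique_moments = q * (q + 1) // 2  # variances plus unique covariances
    df = unique_moments - p
    if df < 0:
        raise ValueError("model is under-identified: more unknowns than moments")
    return df


# Hypothetical example: 10 nodes and 20 unknown coefficients.
example_df = model_degrees_of_freedom(q=10, p=20)
```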

Comparison between models

The age group by condition interaction was assessed using the “stacked model” approach (McIntosh & Gonzalez-Lima, 1994). In the “stacked model” approach, both age groups and conditions are simultaneously fit to the same model, with the null hypothesis that there is no age group by condition interaction. That is, the null hypothesis specifies $(b_{\text{Adult, Gesture}} - b_{\text{Adult, Self-Adaptor}}) - (b_{\text{Child, Gesture}} - b_{\text{Child, Self-Adaptor}}) = 0$, where $b$ is the path coefficient for the specific pathway of interest. In the alternative model, paths of interest are allowed to differ. The differences between the degree of fit for the null model and the alternative model are assessed with reference to a critical $\chi^2$ value ($\chi^2_{\text{crit}} = 3.84$ for $df = 1$). A significant difference implies that better model fit is achieved when the paths are allowed to vary across groups, indicating the null model (specifying no interaction) should be rejected. Rejection of the null model indicates that the age difference for a particular path is moderated by the meaningfulness of the hand movements (i.e., an age group by condition interaction).
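The model comparison can be sketched in Python as a chi-square difference test with one degree of freedom. This is an illustration under stated assumptions, not the Mplus procedure used in the study. The function names and the two fit statistics are hypothetical; only the critical value of 3.84 comes from the text.

```python
import math

CHI2_CRIT_DF1 = 3.84  # critical value quoted in the text (alpha = .05, df = 1)


def chi2_sf_df1(x):
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    if x < 0:
        raise ValueError("chi-square statistic must be non-negative")
    return math.erfc(math.sqrt(x / 2.0))


def interaction_test(chi2_null, chi2_alternative):
    """Compare the constrained (null) model with the model that frees one path.

    Returns the chi-square difference, its p-value, and whether the null
    model (no age group by condition interaction) is rejected.
    """
    difference = chi2_null - chi2_alternative
    return difference, chi2_sf_df1(difference), difference > CHI2_CRIT_DF1


# Hypothetical fit statistics for one pathway (not values from the study).
diff, p_value, reject_null = interaction_test(chi2_null=25.0, chi2_alternative=18.0)
```

With these made-up values the difference of 7.0 exceeds 3.84, so the null model would be rejected for that pathway.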

Results

Activity within age group and within condition compared to resting baseline

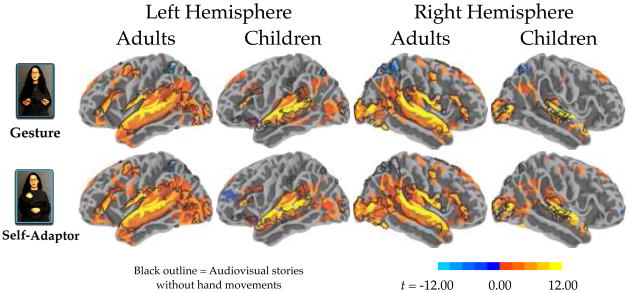

We first examined both positive (“activation”) and negative (“deactivation”) signal changes relative to resting baseline. These contrasts showed activation in frontal, inferior parietal, and temporal regions, and deactivation in posterior cingulate, precuneus, cuneus, lateral superior parietal cortex, and lingual gyrus (Figure 2). These findings are comparable to prior work in both adults and children (Karunanayaka et al., 2007; Price, 2010). For children, left anterior insula responded significantly for Gesture and Self-Adaptor conditions, and significant deactivation was observed in anterior middle frontal gyrus for the Self-Adaptor condition. To put the patterns in context, the brain regions active when these same participants listened to and watched stories told without hand movements are outlined in black in Figure 2 (see Dick et al., 2010). Note that the areas active without hand movements are a subset of those active with hand movements.

Figure 2.

Whole-brain analysis results for each condition compared to Baseline for both adults and children. The individual per-vertex threshold was p < .01 (corrected FWE p < .05). The black outline represents activation of audiovisual story comprehension without gestures relative to a resting baseline (from Dick et al., 2010, using the same sample of participants).

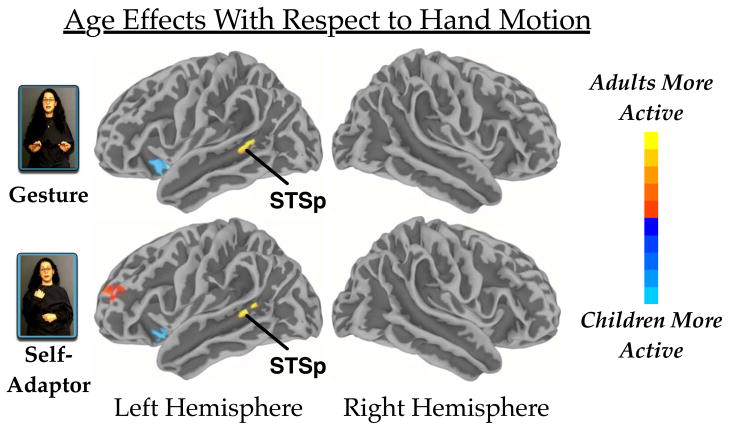

Age-related differences in processing hand movements

Our first research question addressed whether the region of STSp that is most responsive to biologically relevant motion in hand movements in adults is equally active in children. To address this question, we identified those areas that displayed an age difference on the whole brain that was not also moderated by meaning. The results of this whole-brain analysis showed that adults showed more activity than children in STSp in both the Gesture and Self-Adaptor conditions. There was no interaction in this region (Table 2 and Figure 2), suggesting that STSp is more active in adults than children when processing moving hands whether or not the hands convey meaning.

Table 2.

Regions showing reliable differences across age groups, and for the Age by Condition interaction.

| Region | Talairach x | Talairach y | Talairach z | BA | CS | MI |
|---|---|---|---|---|---|---|
| Gesture: Adults > Children | | | | | | |
| L. Precuneus | −9 | −59 | 43 | 7 | 305 | 0.06 |
| L. Superior temporal sulcus | −48 | −44 | 5 | 22 | 279 | 1.09 |
| Gesture: Children > Adults | | | | | | |
| L. Insula | −29 | 15 | −10 | 13/47 | 528 | 0.36 |
| L. Medial superior frontal gyrus | −7 | 43 | 34 | 6 | 292 | 0.17 |
| Self-Adaptor: Adults > Children | | | | | | |
| L. Anterior cingulate gyrus | −6 | 20 | 28 | 32 | 888 | 0.19 |
| L. Posterior cingulate gyrus | −4 | −24 | 27 | 23 | 1137 | 0.46 |
| L. Superior frontal gyrus | −30 | 51 | 15 | 10 | 465 | 0.19 |
| L. Superior temporal sulcus | −48 | −45 | 6 | 22 | 267 | 1.11 |
| R. Posterior cingulate gyrus | 6 | −14 | 32 | 23/24 | 513 | 0.06 |
| Self-Adaptor: Children > Adults | | | | | | |
| L. Insula | −28 | 16 | −11 | 13/47 | 287 | 0.23 |
| Interaction [Adult: Gesture – Self-Adaptor] - [Children: Gesture – Self-Adaptor] | | | | | | |
| L. Middle temporal gyrus | −61 | −38 | −13 | 21 | 336 | 0.39 |
| R. Inferior frontal gyrus | 54 | 30 | 9 | 45 | 274 | 0.44 |

Note. Individual voxel threshold p < .01, corrected (FWE p < .05). Center of mass defined by Talairach and Tournoux coordinates in the volume space. BA = Brodmann Area. CS = Cluster size in number of surface vertices. MI = Maximum intensity (in terms of percent signal change).

In addition to STSp, we found other statistically reliable age differences in focal activity in several regions of the medial aspect of the cortex for processing biological hand movements. The left precuneus (for Gesture) and the left anterior and posterior cingulate (for Self-Adaptor) were more active for adults, and the medial superior frontal gyrus (for Gesture; see Table 2) was more active for children. Age differences were also found for both Gesture and Self-Adaptor in the left anterior insula (greater for children), and the left anterior middle and superior frontal gyrus (greater for adults). In other regions sensitive to gestures in adults (namely IFGTr and MTGp), age-related differences in processing hand movements were found, but were moderated by the meaning of these hand movements (see below).

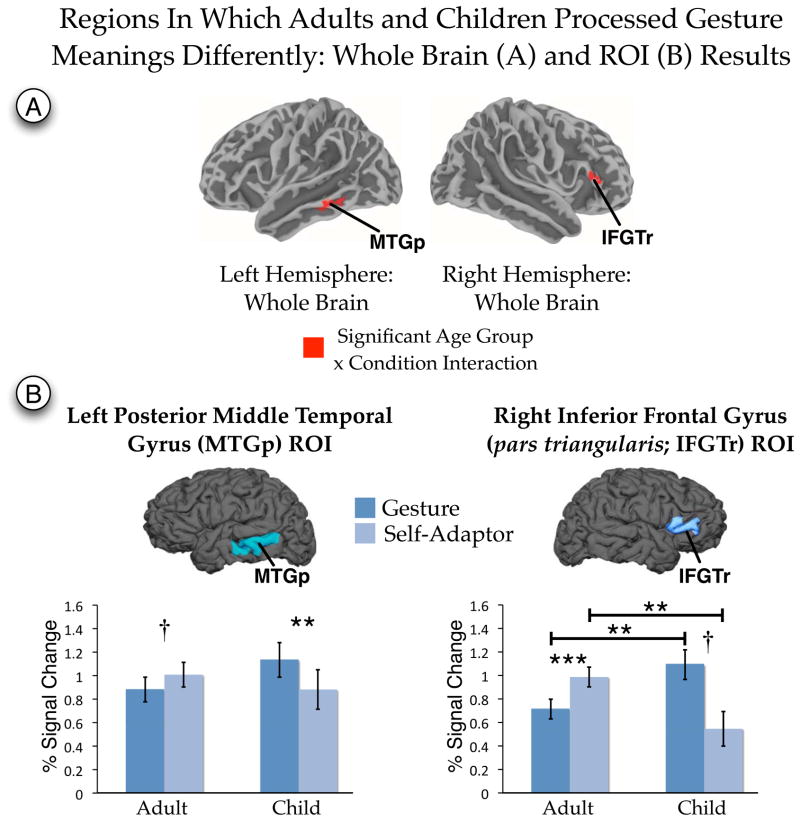

Age-related differences in processing meaningful vs. meaningless hand movements

Our second research question asked whether the brain processed the meaning of hand movements in comparable ways in children and adults. To address this question, we identified those areas that displayed an age group by condition interaction on the whole brain. Clusters showing a significant interaction were found in two regions (right IFGTr and left MTGp), indicating that the age differences found in these regions (Table 2 and Figure 4) for processing hand movements were moderated by whether the movements conveyed meaning. To characterize the nature of the interaction, we further explored these differences using an anatomical ROI approach.

Figure 4.

Age group by condition interaction reported for the analysis of the whole brain (A) and for anatomically defined regions of interest (ROI; B). Whole brain results met a per-vertex threshold of p < .01 (corrected FWE p < .05). Regions of interest results are FDR corrected for multiple comparisons. Anatomical regions of interest were defined on each individual brain following the anatomical conventions outlined in Table 1. IFGTr = Inferior frontal gyrus (pars triangularis). MTGp = Posterior middle temporal gyrus. ** p < .01 (corrected). *** p < .001 (corrected). † p < .05 (uncorrected).

As a first step in the ROI analysis, the right IFGTr and left MTGp were defined on each individual surface representation (Table 1). Following the method outlined in Mitsis, Iannetti, Smart, Tracey and Wise (2008), to reduce the influence of noise the data within the ROI were thresholded to include only the most reliable values (the top quartile of t values defined from the first-level regression). This procedure increases sensitivity by ensuring that the most reliable values contribute to the summary statistic of the ROI (Mitsis et al., 2008). We were also concerned about Type I error rate and about the unequal sample sizes across age groups. As recommended by Krishnamoorthy, Lu and Mathew (2007), to alleviate these concerns we calculated the standard errors and confidence intervals for each effect using a bootstrap approach. We adapted the procedure in Venables and Ripley (2010, p. 164) to the mixed-effects regression model and resampled the residuals. In this procedure, the linear mixed-effects model is fit to the data, the residuals are resampled with replacement, and new model coefficients are estimated. This process is iterated 5000 times to define the standard errors of each estimate. The bootstrap standard errors are used to calculate the 95% confidence intervals (CI) of the beta estimates and significance tests (t values).
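The residual-resampling steps described above can be illustrated with a minimal sketch. It is not the analysis code used in the study: it assumes an ordinary least-squares line in place of the linear mixed-effects model, hypothetical percent-signal-change values, and a normal-approximation interval (estimate ± 1.96 × bootstrap SE). The function names `fit_line` and `residual_bootstrap_se` are illustrative only.

```python
import random
import statistics


def fit_line(x, y):
    """Ordinary least-squares intercept and slope for y = a + b * x."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def residual_bootstrap_se(x, y, n_boot=5000, seed=0):
    """Bootstrap SE and approximate 95% CI of the slope by resampling residuals."""
    rng = random.Random(seed)
    intercept, slope = fit_line(x, y)
    fitted = [intercept + slope * xi for xi in x]
    residuals = [yi - fi for yi, fi in zip(y, fitted)]
    boot_slopes = []
    for _ in range(n_boot):
        # New pseudo-data: fitted values plus residuals drawn with replacement.
        y_star = [fi + rng.choice(residuals) for fi in fitted]
        boot_slopes.append(fit_line(x, y_star)[1])
    se = statistics.stdev(boot_slopes)
    # Assumption: normal-approximation interval built from the bootstrap SE.
    return slope, se, (slope - 1.96 * se, slope + 1.96 * se)


# Hypothetical data: condition coded 0 = Self-Adaptor, 1 = Gesture.
condition = [0, 0, 0, 0, 1, 1, 1, 1]
signal = [0.10, 0.25, 0.18, 0.22, 0.40, 0.35, 0.52, 0.45]
estimate, se, ci = residual_bootstrap_se(condition, signal)
print(f"slope = {estimate:.4f}, bootstrap SE = {se:.4f}, 95% CI = {ci}")
```

With a 0/1 condition code, the slope is simply the difference between the two condition means, so the bootstrap SE here plays the role of the standard error of a Gesture vs. Self-Adaptor contrast.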

Results are shown in Figure 4 and, except where noted, survived an FDR correction for multiple comparisons (Benjamini & Hochberg, 1995). The results revealed a significant interaction for both ROIs (left MTGp: t(31) = −2.88, p = .007, d = 1.03; 95% CI = −0.57 to −0.19; right IFGTr: t(31) = −3.89, p = .0005, d = 1.40; 95% CI = −1.26 to −0.43). Decomposing the interaction, we found that, for MTGp, activity in Gesture was greater than in Self-Adaptor for children (t(8) = −3.54, p = .008, d = 2.50; 95% CI = −0.40 to −0.12). The difference for adults, in which Self-Adaptor was greater than Gesture, did not reach statistical significance after FDR correcting for multiple comparisons (t(23) = 2.29, p = .03 uncorrected, d = .96; 95% CI = 0.02 to 0.23). Comparisons between children and adults within each condition did not reach significance even without the multiple comparison correction (children vs. adults within Gesture, p = .18, 95% CI = −0.10 to 0.59; children vs. adults within Self-Adaptor, p = .46, 95% CI = −0.49 to 0.22).
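To make concrete how a test can be significant uncorrected yet fail the FDR correction, the following is a minimal sketch of the Benjamini and Hochberg (1995) step-up procedure. The p-values are hypothetical, not those of the present study, because the exact family of tests entering the correction is not restated here; the function name `benjamini_hochberg` is illustrative.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans: True where the test survives FDR control at level q."""
    m = len(p_values)
    # Indices of the tests ordered from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k such that p_(k) <= (k / m) * q.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    # Reject every hypothesis whose rank is at or below k_max.
    survives = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= k_max:
            survives[idx] = True
    return survives


# Hypothetical family of five tests.
p_family = [0.001, 0.012, 0.035, 0.045, 0.20]
print(benjamini_hochberg(p_family))
```

In this hypothetical family, the third and fourth tests have uncorrected p < .05 but exceed their rank-based thresholds (.03 and .04), so they do not survive the correction.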

For IFGTr, all post-hoc comparisons were significant and survived the correction for multiple comparisons except one. Activity in Gesture was greater than in Self-Adaptor for children (t(8) = −2.32, p = .049, d = 1.64; 95% CI = −1.04 to −0.09), but less than in Self-Adaptor for adults (t(23) = 4.15, p = .0004, d = 1.73; 95% CI = 0.15 to 0.43), although the Gesture vs. Self-Adaptor comparison for children did not reach statistical significance after FDR correcting for multiple comparisons. There were, in addition, differences between children and adults: children displayed more activity than adults during Gesture (t(32) = 2.82, p = .008, d = 1.00; 95% CI = 0.12 to 0.67), but less activity than adults during Self-Adaptor (t(32) = −2.89, p = .007, d = 1.02; 95% CI = −0.76 to −0.15). Note that no significant activation survived the cluster correction for children in the whole-brain analysis in IFGTr (Figure 2). However, in the ROI analysis, both children and adults showed significant above-baseline activity in both Gesture and Self-Adaptor conditions (smallest t(8) = 3.77, p = .006, d = 1.25; Figure 4B), indicating that this region responded above baseline for both age groups for both conditions.

In summary, the significant interaction revealed in the whole-brain analysis and further explored in the ROI analysis suggests that the left MTGp and right IFGTr are sensitive to whether a hand movement conveys meaning. However, this sensitivity differs across age.

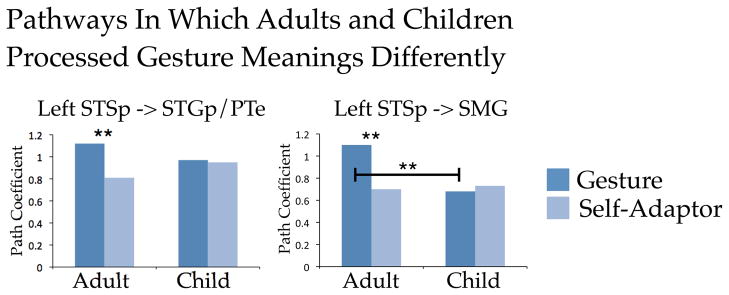

Age-related differences in connectivity: SEM analysis

The SEM analysis addressed the third aim: to explore functional pathways where children might process gesture meanings differently from adults. First, the models for each hemisphere were assessed separately, and good model fit was obtained for each age group (Left Hemisphere: Adults: χ²(df = 8) = 13.04, p = .11; Children: χ²(df = 8) = 5.03, p = .75; Right Hemisphere: Adults: χ²(df = 8) = 4.06, p = .85; Children: χ²(df = 8) = 10.50, p = .23). These results indicate that the hypothesized models should be retained for both hemispheres. In addition, we examined the squared multiple correlations (which provide a measure of explained variance) and found a high degree of explained variance (i.e., on average 69% [SD = 20%] for the left hemisphere; 60% [SD = 24%] for the right hemisphere).
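As a check on how the reported fit statistics map onto their p-values, the sketch below computes the upper-tail chi-square probability for 8 degrees of freedom using the closed-form series that holds for even degrees of freedom. The function name `chi2_sf_even_df` is ours and is not taken from any SEM package; a non-significant value (p > .05) is read here, as in the text, as no reliable misfit between the model and the data.

```python
import math


def chi2_sf_even_df(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variable with even df."""
    if df <= 0 or df % 2:
        raise ValueError("this closed form holds only for positive even df")
    half = x / 2.0
    term, total = 1.0, 1.0
    # Sum of (x/2)^i / i! for i = 0 .. df/2 - 1, built up term by term.
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


# Chi-square values reported for the four models (df = 8 each).
reported = {
    "Left, adults": 13.04,
    "Left, children": 5.03,
    "Right, adults": 4.06,
    "Right, children": 10.50,
}
for label, chi2 in reported.items():
    print(f"{label}: chi2(8) = {chi2:.2f}, p = {chi2_sf_even_df(chi2, 8):.2f}")
```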

After establishing good fit between the model and the data, we examined whether the meaningfulness of the hand movements moderated age differences for each path. Figure 5 presents the results of this analysis. Whether the hand movements were meaningful (Gesture) or not (Self-Adaptor) moderated age differences for two connections: the left STSp -> SMG pathway and the left STSp -> STGp/PTe pathway (χ²(df = 1) = 4.81, p = .02 and χ²(df = 1) = 6.32, p = .01, respectively). This finding suggests that interaction between these regions may play a role over developmental time in how the meaning of hand movements is processed.

Figure 5.

Results of the structural equation modeling analysis for the seven pathways of interest. Path coefficients for each pathway were statistically assessed for the age group by condition interaction. Significant interactions were found for the pathways left STSp -> SMG and left STSp -> STGp/PTe. SMG = Supramarginal gyrus; STGp/PTe = Posterior superior temporal gyrus and planum temporale; STSp = Posterior superior temporal sulcus. * p < .05. ** p < .01.

Discussion

Our results demonstrate that, as in the adult brain, hand movements that are meaningful (i.e., gestures) influence neural processes in the child brain in the frontal, temporal, and parietal brain regions that are also involved in processing language. This finding is consistent with a partially overlapping neural substrate for processing gesture and speech in children and adults (Bates & Dick, 2002). However, we also found clear differences, reflected in both focal activity and in network connectivity, in how the developing brain responds to co-speech gesture. We found age-related differences in BOLD activity in STSp, IFGTr, and MTGp, regions implicated in processing hand movements (both meaningful and meaningless). Both children and adults showed sensitivity to the meaning of those hand movements in IFGTr and MTGp (but not STSp), but the children’s patterns differed from the adults’. Finally, using network analysis, we found age-related differences in how meaningful vs. meaningless hand movements are processed and realized through interactions between STSp and other posterior temporal and inferior parietal regions of a frontal-temporal-parietal language network. The results underscore three points: (1) children and adults differ in how they process co-speech gestures; (2) these differences may reflect developmental changes in the functional specialization of individual brain regions; and (3) the differences may also reflect increasing connectivity between the relevant regions during development. We explore each of these findings in turn.

(1) STSp responds more strongly to hand movements in adults than in children, but it is not sensitive to the meaning contributed by the hand movements in either group

In the whole-brain analysis, we found that adults recruited the upper bank of the left STSp more strongly than children when processing both gesture and self-adaptor movements (Figure 3). STSp is implicated in processing biologically relevant motion (Beauchamp et al., 2003; Grossman et al., 2000), and this region becomes more sensitive to biologically relevant motion with age (Carter & Pelphrey, 2006). In previous work, we found that the STSp responds more strongly to speech with hand movements than to audiovisual speech without hand movements (Dick et al., 2009), and that there are no age-related differences in left STSp for processing audiovisual speech without hand movements (Dick et al., 2010). In the current study, we found (in the same sample studied by Dick et al., 2010) age-related differences for processing speech with hand movements, whether or not those movements conveyed meaning. Thus, with age, left STSp increases activity in response to hand movements, but the meaning does not moderate the amount of activity (see Willems et al., 2007; Willems et al., 2009, for complementary findings).

Figure 3.

Main effects of age group reported for both conditions for the analysis of the whole-brain. Colors in the red spectrum indicate greater activity for adults. Colors in the blue spectrum indicate greater activity for children. The figure identifies activation differences in the upper bank of the posterior superior temporal sulcus (STSp). The individual per-vertex threshold was p < .01 (corrected FWE p < .05).

(2) IFGTr and MTGp show age-related changes to the meaning of hand movements

In contrast to STSp, we found that the meaningfulness of hand movements was related to age-related changes in the BOLD response in right IFGTr and left MTGp (i.e., there was an Age Group by Condition interaction; Figure 4). The adults in our study displayed similar patterns to those reported in the literature. Previous work on adults has found that (1) activity in both regions is correlated with successful recall of previously viewed meaningful (left MTGp and right anterior IFG) and non-meaningful (right anterior IFG) hand movements (Straube et al., 2009); (2) activity in the left MTGp is not affected by the meaning of iconic gesture (Holle et al., 2008; Willems et al., 2009); (3) activity in the right IFGTr is greater for hand movements that are not related to speech than for hand movements that are speech-related (Green et al., 2009). In other words, in adults, activity in these regions is equivalent (MTGp) or greater (IFGTr) for meaningless hand movements than for meaningful hand movements.

The children in our study displayed a different pattern—the BOLD response was stronger for Gesture than for Self-Adaptor in left MTGp, and showed a trend in the same direction in right IFGTr. Thus, unlike adults who displayed less or equivalent activity in these regions when processing meaningful (as opposed to meaningless) hand movements, the children in our study displayed more activity for meaningful hand movements.

The heightened activity for meaningful over meaningless hand movements in children but not adults may be related to developmental changes in how meanings are activated and selected within context. MTGp and IFGTr have been shown in adults to be involved in long-term storage of amodal lexical representations (MTGp) and in the selection and controlled retrieval of lexical representations in context (IFGTr; Lau, Phillips & Poeppel, 2008). Empirical work has found that both left MTGp and right IFGTr are recruited during language processing when the task requires semantic activation of multiple potentially relevant word meanings, such as when listeners must process the meaning of a homonym (Hoenig & Scheef, 2009), or the meaning of a semantically ambiguous sentence (Rodd, Davis, & Johnsrude, 2005; Zempleni, Renken, Hoeks, Hoogduin, & Stowe, 2007). The left MTGp is implicated in lexical activation for language (Binder, Desai, Graves, & Conant, 2009; Doehrmann & Naumer, 2008; Lau et al., 2008; Martin & Chao, 2001; Price, 2010 for reviews) and gesture (Kircher et al., 2009; Straube et al., 2011; Straube et al., 2009), and in the storage and representation of action knowledge (Kellenbach, Brett, & Patterson, 2003; Lewis, 2006). The region is also modulated by the semantic congruency of auditory and visual stimuli depicting actions (Galati et al., 2008). The right IFGTr is implicated in semantic selection, with activity increasing as a function of demand on semantic selection (Hein et al., 2007; Rodd et al., 2005). 
In particular, the right IFGTr is linked to the inhibition of irrelevant or inappropriate meanings or responses (Hoenig & Scheef, 2009; Jacobson, Javitt, & Lavidor, 2011; Lenartowicz, Verbruggen, Logan, & Poldrack, 2011), and this region responds particularly strongly when input is visual (Verbruggen, Aron, Stevens, & Chambers, 2010), when visual input conflicts semantically with auditory input (Hein et al., 2007), or when the input elicits the activation of multiple potential meanings in spoken language (e.g., during comprehension of figurative language, Lauro, Tettamanti, Cappa, & Papagno, 2008; during the linking of distant semantic relations, Rodd, Davis, & Johnsrude, 2005, Zempleni, Renken, Hoeks, Hoogduin, & Stowe, 2007; or during semantic revision, Stowe, 2005).

Importantly from the point of view of our findings, developmental changes have been found in both of these areas. In an fMRI study investigating semantic judgments in 9- to 15-year-olds, Chou and colleagues (2006) found that activity in both left MTGp and right IFGTr increases as a function of age. Thus, our finding that activity in left MTGp and right IFGTr is greater when children process meaningful (as opposed to meaningless) hand movements is consistent with two facts—that these areas are involved in the selection and activation of relevant meanings in adults, and that activation in these areas shows age-related change during semantic tasks.

We now consider our results in relation to the development of gesture-speech integration. We found that children activated right IFGTr more than adults when processing gestures, but less than adults when processing self-adaptors. Further, children recruited left MTGp more when processing gestures than when processing self-adaptors, and displayed a trend in the same direction in right IFGTr. The heightened activation we see in children when processing gesture may reflect the effort they are expending as they retrieve and select semantic information from long-term memory and integrate that information with information conveyed in gesture (cf. Chou et al., 2006; Hein et al., 2007; Rodd et al., 2005; Willems et al., 2009). In this regard, it is worth noting that attending to information conveyed in both gesture and speech has been shown to be effortful for 8- to 10-year-old children (Kelly & Church, 1998). When children recall information conveyed in gesture, they recall the information conveyed in the accompanying speech only 60% of the time. In contrast, when adults recall information conveyed in gesture, they recall the information conveyed in both modalities 93% of the time (Kelly & Church, 1998). The fact that attending to information in two modalities is effortful for children could explain the increased activity we see in the brain regions that are responsible for retrieving and selecting semantic information when children are asked to process stories accompanied by gesture.

Why then do the children exhibit less activity when processing self-adaptors than when processing gestures? Perhaps children do not even attempt to integrate self-adaptors with speech, which would also lead to decreased activation due to lack of attention to self-adaptors. We know from electrophysiological evidence that adults do process self-adaptors (Holle & Gunter, 2007; Experiment 3). Adults may be trying to understand the pragmatic or contextual meaning of self-adaptors (e.g., tugging the collar may provide information about social discomfort or anxiety; Waxer, 1977), or they may be trying to ignore these meaningless hand movements as irrelevant to the spoken message (Holle & Gunter, 2007). Both processes would increase demands on semantic selection (Dick et al., 2009; Green et al., 2009; Holle & Gunter, 2007), resulting in more activity in right IFGTr for self-adaptors than for gestures in adults, and more activity for self-adaptors in adults than in children. In contrast, when speech is accompanied by self-adaptors, 8- to 10-year-old children tend to focus on the message conveyed in speech, ignoring the kinds of information often conveyed in self-adaptors (Bugental, Kaswan, & Love, 1970; Morton & Trehub, 2001; Reilly & Muzekari, 1979). This would explain less activity for self-adaptors compared to gestures in children.

In summary, the results we report confirm previous findings showing that left MTGp and right IFGTr, brain regions implicated in the activation and selection of relevant meanings within a semantic context, are activated when children and adults process stories accompanied by gesture. Our results take the phenomenon one step further by showing different activation patterns in these brain regions in children and adults, suggesting that the regions are still developing during middle to late childhood.

(3) The developing brain processes the meaning of hand movements through different patterns of connectivity within a frontal-temporal-parietal network

Our connectivity analysis revealed that the left STSp -> SMG pathway and the left STSp -> STGp/PTe pathway showed an age difference that was moderated by the semantic relation between gesture and speech (Figure 5). This finding points to the developmental importance of these functional connections for processing gesture meaning. For both pathways, connectivity was stronger when processing gestures than when processing self-adaptors in adults, but there was equivalent connectivity for processing gestures and self-adaptors in children. Recall that our earlier analysis of age differences in BOLD amplitude revealed greater activity in STSp in adults than in children. However, in contrast to Holle and colleagues (2008, p. 2020, p. 2010), we found no evidence that hand movements are processed “at a semantic level” in STSp. STSp was more active in adults than in children for hand movements whether or not they conveyed meaning (i.e., gestures vs. self-adaptors), which we took as evidence that STSp responds to hand motion rather than hand meaning. Nevertheless, when we look at connectivity with STSp, we do find that meaning modulates interactions between STSp and STGp/PTe and between STSp and SMG. Thus, in addition to processing biological motion, the STSp may be playing a role in connecting information from the visual modality with information from the auditory modality, particularly when processing actions (Barraclough, Xiao, Baker, Oram, & Perrett, 2005). From this perspective, STSp works in concert with posterior temporal and inferior parietal cortices involved in auditory language comprehension to construct meaning from gesture and speech. However, this process appears to require time to develop, as children do not show the modulation in connectivity between STSp and posterior temporal/inferior parietal regions as a function of meaning that we see in adults.

The developing brain uses additional regions to process hand movements with speech

We also found that children recruited regions not recruited by adults. In particular, left anterior insula was active for children, but not for adults, when processing both gestures and self-adaptors (Figure 3). The anterior insula is late-developing (Shaw et al., 2008) and may be important for the emerging understanding of emotions conveyed through facial expressions (Lobaugh, Gibson, & Taylor, 2006)—an interpretation that is supported by the fact that we also see activity in this region when children are processing speech without gestures (Figure 2, black outline). The anterior medial superior frontal gyrus was also more active in children than adults when processing gestures (Table 2). Wang and colleagues (Wang, Conder, Blitzer, & Shinkareva, 2010) found that adults displayed less activity than children in this region when making inferences about a speaker’s communicative intent. They hypothesized that the decrease of activation with age reflects greater automatization of inference-making over time. Our findings suggest a similar developmental progression for interpreting the communicative intent of gestures.

Significance of findings for the neurobiology of cognitive development

Several authors have suggested that two fundamental patterns mark functional brain development: increasing the functional specialization of individual brain regions, and increasing the connectivity among brain regions (Fair et al., 2007a; 2007b; Fair et al., 2009; Johnson et al., 2009; Sporns, 2011). We have found evidence for these general developmental patterns in processing co-speech gesture. The regions we investigated here have also been found to display structural changes into early adulthood (Luciana, 2010), with age-related changes in cortical volume (Shaw et al., 2008) and in the integrity of fiber pathways (Schmithorst, Wilke, Dardzinski, & Holland, 2005). These neuroanatomical changes may explain our findings, although maturational factors of this sort are likely to interact with experience. The children we studied have had considerable experience with both speech and gesture. It would be interesting to determine in future work how speech and gesture are processed in the brains of younger children who have had less experience with both modalities. With these caveats in mind, our results provide support for the notion that the developing neural substrate for processing meaning from co-speech gesture is characterized by both increasing functional specialization of brain regions, and increasing interactive specialization among those regions.

Supplementary Material

Acknowledgments

Research supported by NIH F32DC008909(ASD), R01NS54942(AS), and P01HD040605(SGM and SLS). Thanks to James Jaccard for structural equation modeling suggestions, and to Michael Andric, Uri Hasson, and Pascale Tremblay for helpful comments.

References

- Acredolo L, Goodwyn S. Symbolic gesturing in normal infants. Child Development. 1988;59:450–466.

- Argall BD, Saad ZS, Beauchamp MS. Simplified intersubject averaging on the cortical surface using SUMA. Human Brain Mapping. 2006;27(1):14–27. doi: 10.1002/hbm.20158.

- Barraclough NE, Xiao D, Baker CI, Oram MW, Perrett DI. Integration of visual and auditory information by superior temporal sulcus neurons responsive to the sight of actions. Journal of Cognitive Neuroscience. 2005;17(3):377–391. doi: 10.1162/0898929053279586.

- Barrett P. Structural equation modeling: Adjudging model fit. Personality and Individual Differences. 2007;42:815–824.

- Bates E, Dick F. Language, gesture, and the developing brain. Developmental Psychobiology. 2002;40:293–310. doi: 10.1002/dev.10034.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. FMRI responses to video and point-light displays of moving humans and manipulable objects. Journal of Cognitive Neuroscience. 2003;15(7):991–1001. doi: 10.1162/089892903770007380.

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 1995;57(1):289–300.

- Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cerebral Cortex. 2009;19:2767–2796. doi: 10.1093/cercor/bhp055.

- Bitan T, Cheon J, Lu D, Burman DD, Gitelman DR, Mesulam M-M, Booth JR. Developmental changes in activation and effective connectivity in phonological processing. Neuroimage. 2007;38:564–575. doi: 10.1016/j.neuroimage.2007.07.048.

- Botting N, Riches N, Gaynor M, Morgan G. Gesture production and comprehension in children with specific language impairment. British Journal of Developmental Psychology. 2010;28:51–69. doi: 10.1348/026151009x482642.

- Boyatzis CJ, Watson MW. Preschool children’s symbolic representation objects through gestures. Child Development. 1993;64:729–735.

- Bugental DE, Kaswan JW, Love LR. Perception of contradictory meanings conveyed by verbal and nonverbal channels. Journal of Personality and Social Psychology. 1970;16(4):647–55. doi: 10.1037/h0030254.

- Burgund ED, Kang HC, Kelly JE, Buckner RL, Snyder AZ, Petersen SE, Schlaggar BL. The feasibility of a common stereotactic space for children and adults in fmri studies of development. Neuroimage. 2002;17:184–200. doi: 10.1006/nimg.2002.1174.

- Butcher C, Goldin-Meadow S. Gesture and the transition from one- to two-word speech: When hand and mouth come together. In: McNeill D, editor. Language and gesture. New York: Cambridge University Press; 2000. pp. 235–257.

- Byars AW, Holland SK, Strawsburg RH, Bommer W, Dunn RS, Schmithorst VJ, Plante E. Practical aspects of conducting large-scale functional magnetic resonance imaging studies in children. Journal of Child Neurology. 2002;17:885–889. doi: 10.1177/08830738020170122201.

- Capirici O, Iverson JM, Pizzuto E, Volterra V. Gestures and words during the transition to two-word speech. Journal of Child Language. 1996;23:645–673.

- Carter EJ, Pelphrey KA. School-Aged children exhibit domain-specific responses to biological motion. Social Neuroscience. 2006;1(3):396–411. doi: 10.1080/17470910601041382.

- Chou TL, Booth JR, Burman DD, Bitan T, Bigio JD, Lu D, Cone NE. Developmental changes in the neural correlates of semantic processing. Neuroimage. 2006;29(4):1141–1149. doi: 10.1016/j.neuroimage.2005.09.064.

- Church RB, Ayman-Nolley S, Mahootian S. The role of gesture in bilingual education: Does gesture enhance learning? International Journal of Bilingual Education and Bilingualism. 2004;7(4):303–319.

- Cleveland WS, Devlin SJ. Locally weighted regression: An approach to regression analysis by local fitting. Journal of the American Statistical Association. 1988;83:596–610.

- Cohen MS. Parametric analysis of fmri data using linear systems methods. Neuroimage. 1997;6(2):93–103. doi: 10.1006/nimg.1997.0278.

- Dale AM, Fischl B, Sereno MI. Cortical surface-based analysis I: Segmentation and surface reconstruction. Neuroimage. 1999;9(2):179–194. doi: 10.1006/nimg.1998.0395.

- Demir E. Unpublished Doctoral Dissertation. Chicago, IL, USA: The University of Chicago; 2009. A tale of two hands: Development of narrative structure in children’s speech and gesture and its relation to later reading skill.

- Desikan RS, Ségonne F, Fischl B, Quinn BT, Dickerson BC, Blacker D, Killiany RJ. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage. 2006;31(3):968–80. doi: 10.1016/j.neuroimage.2006.01.021.

- Dick AS, Goldin-Meadow S, Hasson U, Skipper JI, Small SL. Co-Speech gestures influence neural activity in brain regions associated with processing semantic information. Human Brain Mapping. 2009;30:3509–3526. doi: 10.1002/hbm.20774.

- Dick AS, Overton WF, Kovacs SL. The development of symbolic coordination: Representation of imagined objects, executive function, and theory of mind. Journal of Cognition and Development. 2005;6(1):133–161.

- Dick AS, Solodkin A, Small SL. Neural development of networks for audiovisual speech comprehension. Brain and Language. 2010;114:101–114. doi: 10.1016/j.bandl.2009.08.005.

- Doehrmann O, Naumer MJ. Semantics and the multisensory brain: How meaning modulates processes of audio-visual integration. Brain Research. 2008;1242:136–50. doi: 10.1016/j.brainres.2008.03.071.

- Duvernoy HM. The human brain: Structure, three-dimensional sectional anatomy and MRI. New York: Springer-Verlag; 1991.

- Fair DA, Cohen AL, Dosenbach NUF, Church JA, Miezin FM, Barch DM, Schlaggar BL. The maturing architecture of the brain’s default network. Proceedings of the National Academy of Sciences. 2007a;105(10):4028–4032. doi: 10.1073/pnas.0800376105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fair DA, Cohen AL, Power JD, Dosenbach NUF, Church JA, Miezin FM, Petersen SE. Functional brain networks develop from a “local to distributed” organization. Plos Computational Biology. 2009;5(5):e1000381. doi: 10.1371/journal.pcbi.1000381. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fair DA, Dosenbach NUF, Church JA, Cohen AL, Brahmbhatt S, Miezin FM, Schlaggar BL. Development of distinct control networks through segregation and integration. Proceedings of the National Academy of Sciences. 2007b;104(33):13507–13512. doi: 10.1073/pnas.0705843104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fenson L, Dale PS, Reznick JS, Bates E, Thal DJ, Pethick SJ. Variability in early communicative development. Monographs of the Society for Research in Child Development. 1994;59(5) Serial No. 242. [PubMed] [Google Scholar]

- Fischl B, Sereno MI, Dale AM. Cortical surface-based analysis II: Inflation, flattening, and a surface-based coordinate system. Neuroimage. 1999;9(2):195–207. doi: 10.1006/nimg.1998.0396.

- Fischl B, van der Kouwe A, Destrieux C, Halgren E, Ségonne F, Salat DH, Dale AM. Automatically parcellating the human cerebral cortex. Cerebral Cortex. 2004;14(1):11–22. doi: 10.1093/cercor/bhg087.

- Fox MD, Snyder AZ, Vincent JL, Corbetta M, Van Essen DC, Raichle ME. The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proceedings of the National Academy of Sciences. 2005;102(27):9673–9678. doi: 10.1073/pnas.0504136102.

- Galati G, Committeri G, Spitoni G, Aprile T, Di Russo F, Pitzalis S, Pizzamiglio L. A selective representation of the meaning of actions in the auditory mirror system. Neuroimage. 2008;40(3):1274–86. doi: 10.1016/j.neuroimage.2007.12.044.

- Genovese CR, Lazar NA, Nichols T. Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage. 2002;15:870–878. doi: 10.1006/nimg.2001.1037.

- Goldin-Meadow S, Kim S, Singer M. What the teacher’s hands tell the student’s mind about math. Journal of Educational Psychology. 1999;91(4):720–730.

- Göksun T, Hirsh-Pasek K, Golinkoff R. How do preschoolers express cause in gesture and speech? Cognitive Development. 2010;25:56–68. doi: 10.1016/j.cogdev.2009.11.001.

- Green A, Straube B, Weis S, Jansen A, Willmes K, Konrad K, Kircher T. Neural integration of iconic and unrelated coverbal gestures: A functional MRI study. Human Brain Mapping. 2009;30:3309–3324. doi: 10.1002/hbm.20753.

- Grossman E, Donnelly M, Price R, Pickens D, Morgan V, Neighbor G, Blake R. Brain areas involved in perception of biological motion. Journal of Cognitive Neuroscience. 2000;12(5):711–720. doi: 10.1162/089892900562417.

- Habets B, Kita S, Shao Z, Ozyurek A, Hagoort P. The role of synchrony and ambiguity in speech-gesture integration during comprehension. Journal of Cognitive Neuroscience. 2010;23:1845–1854. doi: 10.1162/jocn.2010.21462.

- Hasson U, Skipper JI, Wilde MJ, Nusbaum HC, Small SL. Improving the analysis, storage and sharing of neuroimaging data using relational databases and distributed computing. Neuroimage. 2008;39(2):693–706. doi: 10.1016/j.neuroimage.2007.09.021.

- Hayasaka S, Nichols TE. Validating cluster size inference: Random field and permutation methods. Neuroimage. 2003;20(4):2343–56. doi: 10.1016/j.neuroimage.2003.08.003.

- Hein G, Doehrmann O, Müller NG, Kaiser J, Muckli L, Naumer MJ. Object familiarity and semantic congruency modulate responses in cortical audiovisual integration areas. Journal of Neuroscience. 2007;27(30):7881–7887. doi: 10.1523/JNEUROSCI.1740-07.2007.

- Hoenig K, Scheef L. Neural correlates of semantic ambiguity processing during context verification. Neuroimage. 2009;45(3):1009–19. doi: 10.1016/j.neuroimage.2008.12.044.

- Holle H, Gunter TC. The role of iconic gestures in speech disambiguation: ERP evidence. Journal of Cognitive Neuroscience. 2007;19(7):1175–1192. doi: 10.1162/jocn.2007.19.7.1175.

- Holle H, Gunter TC, Rüschemeyer SA, Hennenlotter A, Iacoboni M. Neural correlates of the processing of co-speech gestures. Neuroimage. 2008;39(4):2010–2024. doi: 10.1016/j.neuroimage.2007.10.055.

- Holle H, Obleser J, Rueschemeyer S-A, Gunter TC. Integration of iconic gestures and speech in left superior temporal areas boosts speech comprehension under adverse listening conditions. Neuroimage. 2010;49:875–884. doi: 10.1016/j.neuroimage.2009.08.058.

- Hostetter AB. When do gestures communicate? A meta-analysis. Psychological Bulletin. 2011;137(2):297–315. doi: 10.1037/a0022128.

- Iverson JM, Thelen E. Hand, mouth, and brain: The dynamic emergence of speech and gesture. Journal of Consciousness Studies. 1999;6(11–12):19–40.

- Jacobson L, Javitt DC, Lavidor M. Activation of inhibition: Diminishing impulsive behavior by direct current stimulation over the inferior frontal gyrus. Journal of Cognitive Neuroscience. 2011. doi: 10.1162/jocn_a_00020.

- Johnson MH, Grossmann T, Kadosh KC. Mapping functional brain development: Building a social brain through interactive specialization. Developmental Psychology. 2009;45(1):151–159. doi: 10.1037/a0014548.

- Johnstone T, Ores Walsh KS, Greischar LL, Alexander AL, Fox AS, Davidson RJ, Oakes TR. Motion correction and the use of motion covariates in multiple-subject fMRI analysis. Human Brain Mapping. 2006;27:779–788. doi: 10.1002/hbm.20219.

- Kang HC, Burgund ED, Lugar HM, Petersen SE, Schlaggar BL. Comparison of functional activation foci in children and adults using a common stereotactic space. Neuroimage. 2003;19(1):16–28. doi: 10.1016/s1053-8119(03)00038-7.

- Karunanayaka PR, Holland SK, Schmithorst VJ, Solodkin A, Chen EE, Szaflarski JP, Plante E. Age-related connectivity changes in fMRI data from children listening to stories. Neuroimage. 2007;34:349–360. doi: 10.1016/j.neuroimage.2006.08.028.

- Kellenbach ML, Brett M, Patterson K. Actions speak louder than functions: The importance of manipulability and action in tool representation. Journal of Cognitive Neuroscience. 2003;15(1):30–46. doi: 10.1162/089892903321107800.

- Kelly SD. Broadening the units of analysis in communication: Speech and nonverbal behaviours in pragmatic comprehension. Journal of Child Language. 2001;28(2):325–349. doi: 10.1017/s0305000901004664.

- Kelly SD, Church RB. Can children detect conceptual information conveyed through other children’s nonverbal behaviors? Cognition and Instruction. 1997;15(1):107–134.

- Kelly SD, Church RB. A comparison between children’s and adults’ ability to detect conceptual information conveyed through representational gestures. Child Development. 1998;69(1):85–93.

- Kelly SD, Creigh P, Bartolotti J. Integrating speech and iconic gestures in a Stroop-like task: Evidence for automatic processing. Journal of Cognitive Neuroscience. 2010;22(4):683–94. doi: 10.1162/jocn.2009.21254.

- Kelly SD, Kravitz C, Hopkins M. Neural correlates of bimodal speech and gesture comprehension. Brain and Language. 2004;89(1):253–260. doi: 10.1016/S0093-934X(03)00335-3.

- Kidd E, Holler J. Children’s use of gesture to resolve lexical ambiguity. Developmental Science. 2009;12(6):903–13. doi: 10.1111/j.1467-7687.2009.00830.x.

- Kircher T, Straube B, Leube D, Weis S, Sachs O, Willmes K, Green A. Neural interaction of speech and gesture: Differential activations of metaphoric co-verbal gestures. Neuropsychologia. 2009;47(1):169–179. doi: 10.1016/j.neuropsychologia.2008.08.009.

- Krishnamoorthy K, Lu F, Mathew T. A parametric bootstrap approach for ANOVA with unequal variances: Fixed and random models. Computational Statistics & Data Analysis. 2007;51(12):5731–5742.

- Kumin L, Lazar M. Gestural communication in preschool children. Perceptual and Motor Skills. 1974;38:708–710.

- Lau EF, Phillips C, Poeppel D. A cortical network for semantics: (De)constructing the N400. Nature Reviews Neuroscience. 2008;9(12):920–933. doi: 10.1038/nrn2532.

- Lauro LJ, Tettamanti M, Cappa SF, Papagno C. Idiom comprehension: A prefrontal task? Cerebral Cortex. 2008;18(1):162–70. doi: 10.1093/cercor/bhm042.

- Lenartowicz A, Verbruggen F, Logan GD, Poldrack RA. Inhibition-related activation in the right inferior frontal gyrus in the absence of inhibitory cues. Journal of Cognitive Neuroscience. 2011. doi: 10.1162/jocn_a_00031.

- Lewis JW. Cortical networks related to human use of tools. The Neuroscientist. 2006;12(3):211. doi: 10.1177/1073858406288327.

- Lobaugh NJ, Gibson E, Taylor MJ. Children recruit distinct neural systems for implicit emotional face processing. Neuroreport. 2006;17(2):215–219. doi: 10.1097/01.wnr.0000198946.00445.2f.

- Luciana M. Adolescent brain development: Current themes and future directions [special issue]. Brain and Cognition. 2010;72. doi: 10.1016/j.bandc.2009.11.002.

- Martin A, Chao LL. Semantic memory and the brain: Structure and processes. Current Opinion in Neurobiology. 2001;11(2):194–201. doi: 10.1016/s0959-4388(00)00196-3.

- McGregor KK, Rohlfing KJ, Bean A, Marschner E. Gesture as a support for word learning: The case of under. Journal of Child Language. 2009;36(4):807–28. doi: 10.1017/S0305000908009173.

- McIntosh AR, Gonzalez-Lima F. Structural modeling of functional visual pathways mapped with 2-deoxyglucose: Effects of patterned light and foot shock. Brain Research. 1992;578:75–86. doi: 10.1016/0006-8993(92)90232-x.

- McIntosh AR, Gonzalez-Lima F. Structural equation modeling and its application to network analysis in functional brain imaging. Human Brain Mapping. 1994;2:2–22.

- McIntosh AR, Grady CL, Ungerleider LG, Haxby JV, Rapoport SI, Horwitz B. Network analysis of cortical visual pathways mapped with PET. The Journal of Neuroscience. 1994;14(2):655–666. doi: 10.1523/JNEUROSCI.14-02-00655.1994.

- McNeil NM, Alibali MW, Evans JL. The role of gesture in children’s comprehension of spoken language: Now they need it, now they don’t. Journal of Nonverbal Behavior. 2000;24(2):131–150.

- Mitsis GD, Iannetti GD, Smart TS, Tracey I, Wise RG. Regions of interest analysis in pharmacological fMRI: How do the definition criteria influence the inferred result? Neuroimage. 2008;40(1):121–132. doi: 10.1016/j.neuroimage.2007.11.026.

- Mohan B, Helmer S. Context and second language development: Preschooler’s comprehension of gestures. Applied Linguistics. 1988;9(3):275–292.

- Morford M, Goldin-Meadow S. Comprehension and production of gesture in combination with speech in one-word speakers. Journal of Child Language. 1992;19:559–580. doi: 10.1017/s0305000900011569.

- Morton JB, Trehub SE. Children’s understanding of emotion in speech. Child Development. 2001;72(3):834–43. doi: 10.1111/1467-8624.00318.

- Nichols T, Holmes AP. Nonparametric permutation tests for functional neuroimaging: A primer with examples. Human Brain Mapping. 2002;15(1):1–25. doi: 10.1002/hbm.1058.

- Oldfield RC. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia. 1971;9(1):97–113. doi: 10.1016/0028-3932(71)90067-4.

- O’Reilly AW. Using representations: Comprehension and production of actions with imagined objects. Child Development. 1995;66(4):999–1010.

- Overton WF, Jackson JP. The representation of imagined objects in action sequences: A developmental study. Child Development. 1973;44:309–314.

- Özçalişkan Ş, Goldin-Meadow S. Gesture is at the cutting edge of early language development. Cognition. 2005;96:B101–B113. doi: 10.1016/j.cognition.2005.01.001.

- Özyürek A, Willems RM, Kita S, Hagoort P. On-line integration of semantic information from speech and gesture: Insights from event-related brain potentials. Journal of Cognitive Neuroscience. 2007;19(4):605–616. doi: 10.1162/jocn.2007.19.4.605.

- Parrish TB, Gitelman DR, LaBar KS, Mesulam M. Impact of signal-to-noise on functional MRI. Magnetic Resonance in Medicine. 2000;44:925–932. doi: 10.1002/1522-2594(200012)44:6<925::aid-mrm14>3.0.co;2-m.

- Perry M, Berch D, Singleton J. Constructing shared understanding: The role of nonverbal input in learning contexts. Journal of Contemporary Legal Issues. 1995;6:213–235.

- Pienaar R, Fischl B, Caviness V, Makris N, Grant PE. A methodology for analyzing curvature in the developing brain from preterm to adult. International Journal of Imaging Systems and Technology. 2008;18(1):42–68. doi: 10.1002/ima.v18:1.

- Ping RM, Goldin-Meadow S. Hands in the air: Using ungrounded iconic gestures to teach children conservation of quantity. Developmental Psychology. 2008;44(5):1277–87. doi: 10.1037/0012-1649.44.5.1277.

- Price CJ. The anatomy of language: A review of 100 fMRI studies published in 2009. Annals of the New York Academy of Sciences. 2010;1191:62–88. doi: 10.1111/j.1749-6632.2010.05444.x.

- Reilly SS, Muzekari LH. Responses of normal and disturbed adults and children to mixed messages. Journal of Abnormal Psychology. 1979;88(2):203–8. doi: 10.1037//0021-843x.88.2.203.

- Riseborough MG. Meaning in movement: An investigation into the interrelationship of physiographic gestures and speech in seven-year-olds. British Journal of Psychology. 1982;73:497–503. doi: 10.1111/j.2044-8295.1982.tb01831.x.

- Rodd JM, Davis MH, Johnsrude IS. The neural mechanisms of speech comprehension: fMRI studies of semantic ambiguity. Cerebral Cortex. 2005;15(8):1261–1269. doi: 10.1093/cercor/bhi009.

- Rowe ML, Goldin-Meadow S. Early gesture selectively predicts later language learning. Developmental Science. 2009;12(1):182–7. doi: 10.1111/j.1467-7687.2008.00764.x.