Abstract

Objective

The purpose of this paper is to describe an evidence-based practice (EBP) curriculum incorporated throughout a chiropractic doctoral program and the study used to evaluate the effects of the curriculum on EBP knowledge, attitudes, and self-assessed skills and behaviors in chiropractic students.

Methods

In a prospective cohort design, students from the last entering class under an old curriculum were compared to students in the first 2 entering classes under a new EBP curriculum at the University of Western States. The assessment instruments were developed for this study and included a knowledge exam, self-appraisals of behaviors and skills, and practice attitudes. ANOVA was performed using a 3-cohort × 2-quarter repeated cross-sectional factorial design to assess the effect of successive entering classes and stage of the students’ education.

Results

The knowledge exam showed a statistically significant cohort effect, with each succeeding cohort scoring higher (P < .001). Self-appraised behavior showed a similar pattern of cohort and quarter effects, with more time spent accessing databases such as PubMed. Students rated their skills higher in the 11th quarter than in the 9th quarter. All cohorts rejected a set of sentinel misconceptions about the application of scientific literature (practice attitudes).

Conclusions

An evidence-based practice curriculum can be successfully implemented in a chiropractic-training program. The implementation of the EBP curriculum at this institution resulted in acquisition of knowledge necessary to access and interpret scientific literature, the retention and improvement of skills over time, and the enhancement of self-reported behaviors favoring utilization of quality online resources.

Keywords: Evidence-Based Practice, Outcomes Assessment, Education, Professional, Chiropractic

INTRODUCTION

Sackett et al1 define evidence-based practice (EBP) as the integration of the best available research evidence with clinical expertise and consideration of patient values. They assert that the well-trained clinician should be able to pose clinically relevant questions and access the clinically relevant literature to find, appraise, and use the best available evidence in routine clinical care. Population-based outcome studies have documented that patients who receive evidence-based therapy have better outcomes than patients who do not.1–5 Chiropractic educators have also recognized that an important goal of chiropractic clinical education should be to teach specific EBP skills to chiropractic students, interns, and doctors.6,7 However, a survey on the prevalence of EBP teaching published in 2000 revealed that few of the 18 responding chiropractic colleges worldwide required interns to routinely generate clinical research questions or conduct literature searches.8 There is a dearth of outcomes research on EBP in chiropractic education. A 2004 literature review identified only 4 studies in the chiropractic arena, most of which measured only student self-assessment of skills.9

In 2004, the National Center for Complementary and Alternative Medicine at the National Institutes of Health recognized the importance of enhancing EBP skills in institutions training complementary and alternative medicine (CAM) practitioners with the release of a grant initiative using its R25 funding mechanism: “CAM Practitioner Research Education Project Grant Partnership.” Its specific purpose was “to increase the quality and quantity of the research content in the curricula at CAM institutions in the United States where CAM practitioners are trained….enhance CAM practitioners’ exposure to, understanding of, and appreciation of the evidenced-based biomedical research literature and approaches to advancing scientific knowledge.”10

This funding opportunity was the impetus for the University of Western States (UWS) to incorporate EBP throughout its chiropractic curriculum. The principal goal of this project was to train doctors of chiropractic to develop the knowledge, skills, and attitudes needed to implement the EBP model in practice. To this end, a partnership was formed with the Oregon Health & Science University to train faculty and to design and implement a program, fully integrated across the chiropractic curriculum, that develops EBP knowledge, skills, and behaviors. The process also included curriculum development aimed at formalizing EBP skills in research and critical thinking courses, integrating EBP applications throughout the chiropractic program, and training students to apply EBP in formulating patient care. Until now, published studies in chiropractic education have focused on single-workshop or single-course outcomes.11–16 In contrast, this study measures outcomes from a major revision of a chiropractic curriculum, spanning all 4 years and crossing departments. Because we could find no existing comprehensive EBP curriculum in the chiropractic literature,17 the project team had to develop a new chiropractic EBP curriculum from scratch.

The purpose of this report is to describe the new curriculum and to compare learning outcomes between students educated in the pre-EBP curriculum and students educated in the new EBP curriculum. Our hypothesis was that the new curriculum would improve EBP knowledge, attitudes, and self-assessed skills and behaviors.

METHODS

Design and Protocol

A prospective cohort design was used to evaluate the effectiveness of the new EBP curriculum. We compared the last entering class of students under the old curriculum (control cohort) with students in the first 2 classes matriculating under the new curriculum (intervention cohorts). Cohort 1 included students enrolled in September 2005 and January 2006 (Table 1). This cohort served as the control group. All curricular changes started the following academic year and were instituted throughout the 12-quarter program, starting after Cohort 1 passed through each course or clinical phase of the curriculum. In this way, Cohort 1 students had no direct exposure to the new curriculum. Cohort 2 (2006 – 2007) included the first students to receive the new curriculum. For Cohort 3 (2007 – 2008), the program was more entrenched with some targeted curricular updates incorporated.

Table 1.

Baseline characteristics of first-year students

| Characteristic | Total (n = 339) | Cohort 1 (n = 93) | Cohort 2 (n = 132) | Cohort 3 (n = 114) | P-value |
|---|---|---|---|---|---|
| Matriculation date | | 2005–2006 | 2006–2007 | 2007–2008 | |
| Age | 27.4 ± 5.0 | 27.6 ± 4.7 | 28.6 ± 5.4 | 26.0 ± 4.9 | .065 |
| Female (%) | 36 | 31 | 42 | 33 | .183 |
| White non-Hispanic (%) | 83 | 82 | 84 | 82 | .269 |
| Openness Scale | 3.9 ± 0.5 | 3.9 ± 0.5 | 3.9 ± 0.5 | 3.9 ± 0.5 | .916 |
| Bachelor’s degree or higher (%) | 71 | 78 | 71 | 65 | .100 |
| # prior research methods courses | 2.5 ± 2.7 (n = 329) | 2.2 ± 2.3 (n = 92) | 2.3 ± 2.3 (n = 128) | 3.0 ± 3.3 (n = 109) | .051 |
| # prior probability & statistics courses | 1.7 ± 1.3 (n = 329) | 1.6 ± 1.5 (n = 92) | 1.6 ± 1.1 (n = 125) | 1.9 ± 1.2 (n = 112) | .269 |
| Prior college within 2 years (%) | 93 | 85 | 97 | 93 | .008 |
| Prior job in health care (%) | 36 | 34 | 43 | 29 | .063 |
| Reason for selecting the college (%) | | | | | .450 |
| Reputation | 24 | 20 | 26 | 24 | |
| EBP teaching philosophy | 40 | 47 | 34 | 42 | |
| Other | 36 | 33 | 40 | 34 | |
| Attitudes on scientific literature* | | | | | |
| Important to read literature 2–3 hr/wk | 5.4 ± 1.3 | 5.6 ± 1.4 | 5.2 ± 1.3 | 5.5 ± 1.3 | .098 |
| Interpretation skills high CME priority | 4.9 ± 1.1 | 4.8 ± 1.2 | 4.9 ± 1.1 | 5.1 ± 1.1 | .157 |

Mean ± SD or %. CME, continuing medical education.

*Evaluated on a 1 to 7 Likert scale with 1 = strongly disagree, 4 = neither, 7 = strongly agree.

Student testing was not designed to evaluate learning from a single course or set of courses. Instead, it evaluated the effects of the complete new EBP curriculum incorporated throughout the chiropractic doctoral program. The primary outcome was an objective EBP knowledge exam score. Secondary outcomes included self-assessment of EBP skills and behaviors, as well as attitudes related to EBP. Outcomes were assessed at the end of the 9th and 11th quarters. The first follow-up was administered after a limited “on-campus” clinical experience and a year after the critical thinking and EBP core courses. The final administration followed most of the outpatient clinical internship and two quarters of journal club. Baseline data were collected before any exposure to EBP material in either the old or the new curriculum. The baseline questionnaire included an assessment of EBP attitudes.

Arrangements were made to administer the questionnaires during class time. Because the test did not pertain to any specific course, there was no single course in which to administer it. At baseline, an investigator introduced the project to the students and asked them to complete the instrument. The questionnaires were collected anonymously, and students were asked to create an identification code they could remember so that data could be tracked across time. All data were secured in the University’s Division of Research. Students were given the right to refuse participation. The study was approved by the University of Western States Institutional Review Board (FWA 851).

New EBP Curriculum Intervention

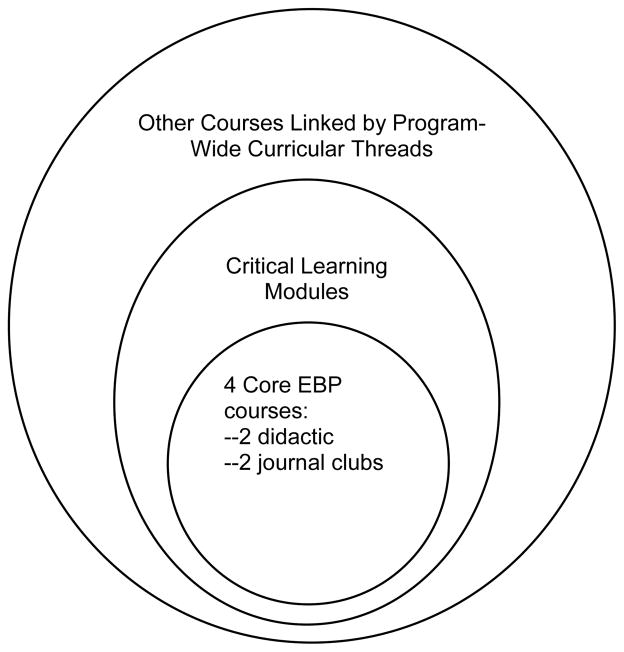

The new EBP curriculum was the program intervention. A paper describing EBP learning objectives and competencies has been published elsewhere.17 This curriculum document was based on 5 standards adopted by the Sicily conference on evidence-based medicine.18 The new curriculum is divided into pre-/peri-clinical courses and clinic-based training. The pre-/peri-clinical curriculum is organized conceptually around 3 concentric rings (Figure 1). The center ring is composed of 4 core EBP courses. The first 2 are didactic in nature, and the last 2 are modeled after journal clubs. The intermediate ring is a cluster of 1st- and 2nd-year courses that contain critical learning modules dealing with specific EBP skills and knowledge. These modules complement the core EBP courses. The larger outer ring consists of the rest of the basic science, diagnosis, and management courses, linked by program-wide EBP curricular threads through the 4 years of the chiropractic program. In some cases, these threads are composed of actual assignments (eg, literature searches and paper assessments); in other cases, the thread is simply a purposeful effort to use basic EBP language and principles in teaching content specific to each discipline (eg, microbiology, orthopedics, physical therapy, manual therapy).

Fig 1.

Curriculum development

The new curriculum differed from the old in 3 ways: 1) the research courses were converted to EBP courses whose content emphasized the user rather than the doer of clinical research, 2) the number of hours in the core courses was increased, and 3) a network of EBP teaching and learning threads was woven through the regular curriculum, crossing the usual course, divisional, and teaching-year boundaries.

Evaluation Instruments

Questionnaire development and evaluation are described in detail in a companion paper.19 The Program Evaluation Committee identified relevant EBP domains. Finding no instruments that fully met program assessment needs, the Committee developed a questionnaire to evaluate EBP knowledge, attitudes, self-assessed skills, and self-assessed behaviors. The primary program outcome was performance on the objective knowledge component. The secondary outcomes on attitudes, skills, and behaviors are listed in Tables 1 and 2.

Knowledge (primary outcome)

A detailed description of the development and psychometric characteristics of this measure is presented in a companion paper.19 Version 1.0 of the knowledge exam, which consisted of 20 multiple-choice items covering 10 domains of EBP knowledge, was used for this report. The 10 domains were research questions and finding evidence; biostatistics; study design and validity; critical appraisal of therapy studies, diagnostic studies, preventive studies, harm studies, prognosis studies, and systematic reviews; and clinical application. Across all knowledge items, the internal consistency (KR-20) of the 9th- and 11th-quarter knowledge exam scores was 0.53 and 0.60, respectively. KR-20 is a lower-bound estimate of test reliability.20 Scores are reported as the percentage of items answered correctly.
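The KR-20 coefficient can be computed directly from a matrix of dichotomously scored (0/1) responses. The sketch below is a minimal illustration and not the authors’ code. It assumes rows are examinees, columns are items, and the total-score variance uses the population (divide-by-N) form; some software uses the sample variance instead, which shifts the result slightly. The function names `kr20` and `percent_correct` are hypothetical, and the toy data are invented for illustration, not study data.

```python
from statistics import pvariance


def kr20(responses: list[list[int]]) -> float:
    """Kuder-Richardson Formula 20 for items scored 0 (wrong) or 1 (right).

    responses: one row per examinee, one column per item.
    Population (divide-by-N) variances are used throughout (an assumption).
    """
    n_people = len(responses)
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("KR-20 needs at least two items")

    # Sum of item variances p * q, where p is the proportion answering correctly.
    sum_pq = 0.0
    for j in range(n_items):
        p = sum(row[j] for row in responses) / n_people
        sum_pq += p * (1 - p)

    # Variance of the examinees' total scores.
    var_total = pvariance([sum(row) for row in responses])
    if var_total == 0:
        raise ValueError("total scores have zero variance")

    return (n_items / (n_items - 1)) * (1 - sum_pq / var_total)


def percent_correct(row: list[int]) -> float:
    """Exam score as the percentage of items answered correctly."""
    return 100 * sum(row) / len(row)


# Toy data (not study data): 4 examinees x 3 items.
toy = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
print(round(kr20(toy), 2))                # 0.75
print(round(percent_correct(toy[1]), 1))  # 66.7
```

With only 20 items spread over 10 domains, the sum of item variances is large relative to total-score variance, so modest KR-20 values like those reported are unsurprising.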

Self-Rated Skills and Behavior (secondary outcomes)

This component was devoted to self-appraisal of one’s ability to apply EBP knowledge. The skills self-appraisal consisted of four 7-point Likert-scale questions asking respondents to appraise their understanding of basic biostatistical concepts and their ability to find, critically appraise, and integrate clinical research into their clinical practice. The behavior self-appraisal included 3 items asking respondents to evaluate the time spent reading original research and accessing PubMed, and how often they applied EBP methods to patient care. Because these items were not constructed to form an overall scale, items were examined individually.

Attitudes (secondary outcomes)

This section consisted of nine 7-point Likert-scale items that focused on attitudes toward weighing research evidence against expert and clinical opinion, whether all types of evidence are equal, the need to access and stay abreast of the most current information, and the ability to critically review research literature. Because these items were not constructed to form an overall scale, items were examined individually.

Only two questions are reported for the baseline administration (Table 1), because they did not require any programmatic knowledge or experience to understand. Only five of the items were included in Table 2; before performing the final analysis, we decided that the other four were too ambiguous for meaningful interpretation. For example, consider “Research evidence is more important than clinical experience in choosing the best treatment for a patient.” Either agreeing or disagreeing with this statement could reflect a positive EBP attitude, depending on the context the respondent had in mind.

Table 2.

Outcomes at 9th and 11th quarter for the three student cohorts

| Outcome and quarter | Cohort 1 (9th: n = 81; 11th: n = 41) | Cohort 2 (9th: n = 108; 11th: n = 71) | Cohort 3 (9th: n = 116; 11th: n = 61) | Cohort (P) | Quarter (P) | Interaction (P) | Post hoc cohort pairwise |
|---|---|---|---|---|---|---|---|
| Knowledge exam (0–100 possible) | | | | | | | |
| 9th | 44.2 ± 11.2 | 48.6 ± 14.8 | 55.3 ± 14.2 | <.001 | .028 | .748 | C1-2, C1-3, C2-3 |
| 11th | 46.7 ± 12.6 | 50.9 ± 17.1 | 59.8 ± 15.1 | | | | |
| Behaviors self-appraisal | | | | | | | |
| Time spent each month accessing PubMed or similar databases (1 = “none”, 2 = “≤1 hr”, 3 = “> 1 hr but < 5 hr”, 4 = “5 to 10 hr”, 5 = “10+ hr”) | | | | | | | |
| 9th | 2.1 ± 0.8 | 2.3 ± 0.7 | 2.5 ± 0.8 | <.001 | <.001 | .999 | C1-2, C1-3, C2-3 |
| 11th | 2.3 ± 0.7 | 2.6 ± 0.8 | 2.8 ± 0.9 | | | | |
| Time spent each month reading original research articles (1 = “none”, 2 = “≤1 hr”, 3 = “> 1 hr but < 5 hr”, 4 = “5 to 10 hr”, 5 = “10+ hr”) | | | | | | | |
| 9th | 2.1 ± 0.9 | 2.2 ± 0.8 | 2.4 ± 0.8 | .005 | <.001 | .562 | C1-3, C2-3 |
| 11th | 2.7 ± 0.9 | 2.6 ± 0.7 | 2.8 ± 0.7 | | | | |
| How frequently student uses specific methods and approach of evidence-based practice (1 = “never”, 2 = “rarely”, 3 = “1–2%”, 4 = “>2% but <5%”, 5 = “5–10%”, 6 = “10+%”) | | | | | | | |
| 9th | 4.3 ± 1.8 | 3.4 ± 1.6 | 4.1 ± 1.6 | <.001 | .063 | .806 | C1-2, C2-3 |
| 11th | 4.6 ± 1.6 | 3.6 ± 1.5 | 4.5 ± 1.4 | | | | |
| Skills self-appraisal (1–7 ordinal scale; 1 = “not at all competent”, 7 = “very competent”) | | | | | | | |
| Ability to identify, find, and retrieve relevant research-related information | | | | | | | |
| 9th | 5.0 ± 1.2 | 4.9 ± 1.2 | 5.0 ± 1.1 | .358 | .001 | .374 | |
| 11th | 5.2 ± 1.3 | 5.2 ± 1.0 | 5.5 ± 1.0 | | | | |
| Ability to critically evaluate whether or not a research study was well done | | | | | | | |
| 9th | 4.8 ± 1.2 | 4.6 ± 1.1 | 4.7 ± 1.2 | .304 | .037 | .221 | |
| 11th | 4.8 ± 1.4 | 4.8 ± 1.1 | 5.2 ± 1.0 | | | | |
| Ability to integrate research findings into teaching or clinical decision-making | | | | | | | |
| 9th | 5.0 ± 1.0 | 4.9 ± 1.1 | 4.9 ± 1.0 | .675 | .001 | .641 | |
| 11th | 5.3 ± 1.1 | 5.1 ± 1.0 | 5.3 ± 0.9 | | | | |
| Ability to understand basic statistical concepts used in scientific research | | | | | | | |
| 9th | 5.0 ± 1.2 | 4.6 ± 1.2 | 4.4 ± 1.3 | .007 | .156 | .312 | C1-2, C1-3 |
| 11th | 4.8 ± 1.4 | 4.3 ± 1.4 | 4.5 ± 1.1 | | | | |
| Practice attitudes (1–7 ordinal scale; 1 = “disagree strongly”, 4 = “neither agree or disagree”, 7 = “agree strongly”) | | | | | | | |
| It is very important for a chiropractor to spend 2 to 3 hr/wk reading current clinical research literature | | | | | | | |
| 9th | 5.3 ± 1.3 | 4.2 ± 1.4 | 4.7 ± 1.8 | <.001 | .566 | .453 | C1-2, C1-3, C2-3 |
| 11th | 5.1 ± 1.4 | 4.3 ± 1.4 | 4.6 ± 1.5 | | | | |
| A high priority for CME should be for education in skills that can be used to interpret research findings | | | | | | | |
| 9th | 4.5 ± 1.4 | 4.0 ± 1.3 | 4.5 ± 1.4 | .031 | .261 | .658 | C1-2, C2-3 |
| 11th | 4.3 ± 1.3 | 4.0 ± 1.4 | 4.2 ± 1.5 | | | | |
| Article abstracts contain all study information you need to know in most cases | | | | | | | |
| 9th | 3.2 ± 1.5 | 3.6 ± 1.3 | 3.0 ± 1.4 | .080 | .788 | .365 | |
| 11th | 3.3 ± 1.7 | 3.4 ± 1.6 | 3.3 ± 1.6 | | | | |
| It is more effective to read a text than keep up with literature because many findings are later contradicted | | | | | | | |
| 9th | 2.7 ± 1.3 | 2.9 ± 1.3 | 2.7 ± 1.1 | .046 | .544 | .549 | |
| 11th | 2.6 ± 1.1 | 3.1 ± 1.3 | 2.7 ± 1.2 | | | | |
| Most of the time, a good case study will help you understand how to treat a condition better than a RCT | | | | | | | |
| 9th | 3.3 ± 1.4 | 3.4 ± 1.3 | 2.9 ± 1.4 | .001 | .718 | .018 | C1-3, C2-3 |
| 11th | 3.9 ± 1.5 | 3.1 ± 1.4 | 2.8 ± 1.4 | | | | |

Mean ± SD. CME, continuing medical education; RCT, randomized controlled trial; C1-2, C1-3, C2-3, pairwise comparisons of Cohort 1 vs Cohort 2, Cohort 1 vs Cohort 3, and Cohort 2 vs Cohort 3.

Statistical significance: P-values are presented for the main effects of cohort (comparison of 3 cohorts) and quarter (comparison of 9th and 11th quarters), and for the cohort × quarter interaction effect. The post hoc Sidak test was used to correct for multiple comparisons among the three cohorts; statistically significant pairwise comparisons (P < .05) are noted. Post hoc tests were conducted only if the cohort main effect was significant at the .05 level.

Statistical Analysis

Baseline characteristics were tabulated by cohort and compared for differences between groups (Table 1). Analysis of variance (ANOVA) was used for scaled variables, and the chi-square test was used for categorical data. Outcomes (Table 2) were analyzed with ANOVA using a 3-cohort × 2-quarter factorial design. We examined the main effect of cohort (comparison of three cohorts), the main effect of quarter (comparison of 9th and 11th quarters), and the cohort × quarter interaction. When the cohort main effect was statistically significant, we compared the three cohorts pairwise in a post hoc analysis using a Sidak correction for multiple comparisons. Statistically significant pairwise comparisons (P < .05) are noted in Table 2.
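The Sidak correction can be expressed in two equivalent ways: as a stricter per-comparison threshold, or as adjusted P values compared against .05. The sketch below assumes m = 3 pairwise comparisons (C1-2, C1-3, C2-3). The helper names are hypothetical, and statistical packages such as SPSS apply the adjustment internally, so this only illustrates the arithmetic.

```python
def sidak_alpha(family_alpha: float, m: int) -> float:
    """Per-comparison threshold that keeps the familywise error rate at family_alpha."""
    return 1 - (1 - family_alpha) ** (1 / m)


def sidak_adjust(p: float, m: int) -> float:
    """Sidak-adjusted P value for one of m comparisons, capped at 1."""
    return min(1.0, 1 - (1 - p) ** m)


M = 3  # three pairwise cohort comparisons
print(round(sidak_alpha(0.05, M), 4))   # 0.017
print(round(sidak_adjust(0.01, M), 4))  # 0.0297
```

For example, an unadjusted pairwise P of .01 becomes roughly .030 after adjustment, which is still below .05. Equivalently, it falls below the per-comparison threshold of about .017.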

Note that we could not treat the two levels of the quarter factor (9th and 11th quarters) as repeated measures in the analysis, because participants used their identification codes inconsistently. Baseline covariates were also excluded from the analysis because baseline and follow-up data could not be linked. Treating the follow-up quarters as independent samples in this repeated cross-sectional design does not bias the main effect of quarter, although the significance test is likely more conservative.21

For the primary outcome, the knowledge test score, the mean difference (MD) and 95% confidence intervals are included in the text. For added perspective, we computed the effect size as the standardized mean difference (SMD) using Cohen’s d.22
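Effect sizes can be checked against a table of summary statistics by computing Cohen’s d from two groups’ means, SDs, and sizes using the pooled SD. The sketch below is illustrative only. It uses the 9th-quarter knowledge scores for Cohorts 1 and 3 from Table 2, whereas the SMDs reported in the Results pool both follow-up quarters, so the value will not match them exactly. The 95% CI here uses a large-sample normal approximation rather than the ANOVA-based intervals reported in the text. All function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def pooled_sd(s1: float, n1: int, s2: float, n2: int) -> float:
    """Pooled standard deviation of two independent groups."""
    return sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))


def cohens_d(m1: float, s1: float, n1: int, m2: float, s2: float, n2: int) -> float:
    """Standardized mean difference (group 1 minus group 2) using the pooled SD."""
    return (m1 - m2) / pooled_sd(s1, n1, s2, n2)


def mean_diff_ci(m1, s1, n1, m2, s2, n2, level=0.95):
    """Mean difference with a normal-approximation confidence interval."""
    md = m1 - m2
    se = pooled_sd(s1, n1, s2, n2) * sqrt(1 / n1 + 1 / n2)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return md, md - z * se, md + z * se


# 9th-quarter knowledge exam (Table 2): (mean, SD, n).
c3 = (55.3, 14.2, 116)
c1 = (44.2, 11.2, 81)
print(round(cohens_d(*c3, *c1), 2))  # 0.85
md, lo, hi = mean_diff_ci(*c3, *c1)
print(round(md, 1), round(lo, 1), round(hi, 1))  # 11.1 7.4 14.8
```

By Cohen’s conventional benchmarks (about 0.2 small, 0.5 medium, 0.8 large), this single-quarter comparison already falls in the large range.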

An additional analysis was conducted to determine whether attitudes toward EBP differed between baseline and the follow-up quarters. Attitudinal differences were evaluated as the main effect of quarter in a 3-cohort × 3-quarter ANOVA that added baseline to the analysis. Finally, the relationship of knowledge with attitudes and with self-appraised behaviors and skills was assessed using Pearson’s r at both follow-ups (9th and 11th quarters).

Because of the large sample size, we had >90% power to detect even a modest 0.4 between-groups effect size at the two-sided .05 level of significance.22 Statistical significance was set at .05 for all tests. Analysis was performed using SPSS 19.0 (Chicago, IL) and Stata 11.2 (College Station, TX).
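The power statement above can be approximated with a two-sided, two-sample z test. The sketch below rests on several assumptions:

- equal variances;
- a normal approximation in place of the exact noncentral t distribution;
- group sizes equal to each cohort’s combined 9th- and 11th-quarter respondents from Table 2 (122 for Cohort 1 and 177 for Cohort 3, the smallest pairwise comparison).

The authors’ own calculation may have used different inputs, and the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_power(d: float, n1: int, n2: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-sample test for standardized effect d."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Expected test statistic under the alternative (noncentrality).
    delta = d / sqrt(1 / n1 + 1 / n2)
    # Probability of rejecting in either tail.
    return z.cdf(delta - z_crit) + z.cdf(-delta - z_crit)


# Cohort 1 (81 + 41) vs Cohort 3 (116 + 61), effect size 0.4.
power = two_sample_power(0.4, 122, 177)
print(power > 0.9)  # True
```

Under these assumptions, every pairwise cohort comparison has at least this sample size, so the >90% figure is plausible for d = 0.4.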

RESULTS

The 3 study cohorts included 370 students, of whom 92% (339) completed the baseline survey, 82% (305) the 9th-quarter survey, and 49% (180) the 11th-quarter survey (Table 1). The participants had a mean age of 27 years, and two-thirds were male. There was one notable difference among cohorts: the new-curriculum students were more likely to have attended college within 2 years of matriculation (P = .008). Cohort 3 also reported a slightly greater number of research methods courses before entering the program, but the small cohort differences relative to the large within-group variability suggest little effect on outcomes (MD = 0.7 to 0.8, SD = 2.3 to 3.3, P = .051). The reasons for attending UWS and attitudes on scientific literature were well balanced across cohorts.

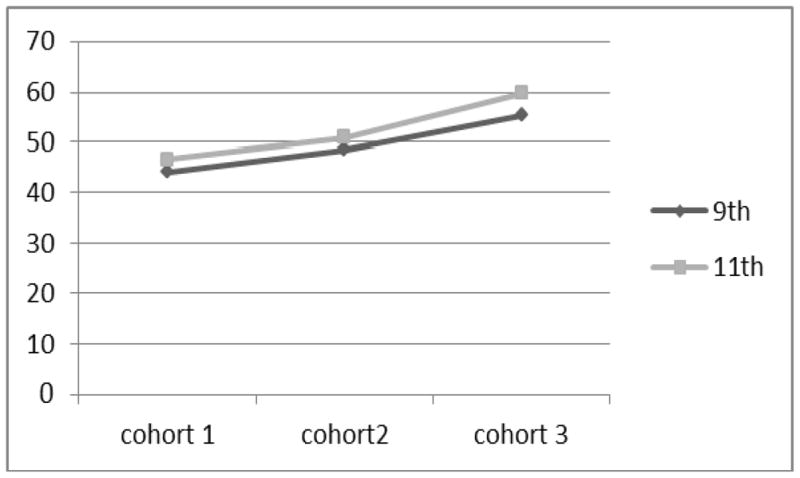

Knowledge Exam

The primary outcome, knowledge exam score, is shown in Figure 2 and Table 2. There was a statistically significant cohort effect (P < .001), such that each subsequent cohort performed better than the previous ones (pairwise P < .05). The greatest difference was between Cohort 3 and the control group, Cohort 1: mean difference (MD) = 11.8 (95% CI = 7.7 to 15.9), with an SMD of 0.9, which is considered a large effect size.22 Cohort 2 also performed better than the control Cohort 1: MD = 4.5 (95% CI = 0.4 to 8.5), with a small SMD of 0.3. The difference between the two new-curriculum cohorts favored Cohort 3 over Cohort 2: MD = 7.3 (95% CI = 3.6 to 11.0), a moderate effect size (SMD = 0.5). There was also a small effect of quarter, with students performing better in 11th than in 9th quarter (P < .05): MD = 3.1 (95% CI = 0.4 to 5.8), SMD = 0.2. The cohort × quarter interaction effect was not significant.
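The mean differences above can be cross-checked against Table 2. The minimal sketch below assumes that each cohort’s overall mean is the respondent-weighted average of its 9th- and 11th-quarter means; the unweighted marginal means from the ANOVA would give slightly different values. Under that assumption it reproduces the reported cohort and quarter MDs to one decimal place. This is an arithmetic check, not the authors’ analysis code.

```python
# Table 2 knowledge exam: (mean, n) at 9th and 11th quarter for each cohort.
table2 = {
    "C1": [(44.2, 81), (46.7, 41)],
    "C2": [(48.6, 108), (50.9, 71)],
    "C3": [(55.3, 116), (59.8, 61)],
}


def weighted_mean(cells):
    """Respondent-weighted mean across (mean, n) cells."""
    total_n = sum(n for _, n in cells)
    return sum(m * n for m, n in cells) / total_n


# Cohort means pooled over the two follow-up quarters.
means = {cohort: weighted_mean(cells) for cohort, cells in table2.items()}
for a, b in [("C2", "C1"), ("C3", "C1"), ("C3", "C2")]:
    print(f"{a} - {b}: MD = {means[a] - means[b]:.1f}")
# C2 - C1: MD = 4.5
# C3 - C1: MD = 11.8
# C3 - C2: MD = 7.3

# Quarter means pooled over the three cohorts.
q9 = weighted_mean([cells[0] for cells in table2.values()])
q11 = weighted_mean([cells[1] for cells in table2.values()])
print(f"11th - 9th: MD = {q11 - q9:.1f}")  # 3.1
```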

Fig 2.

Mean knowledge exam scores (100-point scale) for the three cohorts at 9th and 11th quarter.

Behaviors Self-Appraisal

Time spent accessing online databases such as PubMed shifted toward more than 1 hour per month. The pattern paralleled that of the knowledge exam score, with statistically significant cohort and quarter effects (P < .001) and later cohorts reporting more use than earlier ones. Similarly, cohort and quarter effects were significant for time spent reading journal articles: Cohort 3 read more than the other two cohorts, and students read more in 11th quarter than in 9th quarter. There was also a cohort effect for use of an EBP approach. In this case, however, Cohort 2 reported less use, while Cohort 3 and the control cohort had comparable results.

Skills Self-Appraisal

All 3 cohorts tended to rate their competency in research retrieval, critical appraisal, and integration into practice as slightly above the midway point between “not at all competent” and “very competent.” Students felt they had somewhat more skill in 11th quarter than in 9th quarter (P < .05), but there were virtually no differences between cohorts. The exception was self-appraised competence in statistics: the control cohort reported better understanding than the cohorts that received some statistical training (P = .007). There was no trend in statistical understanding across quarters.

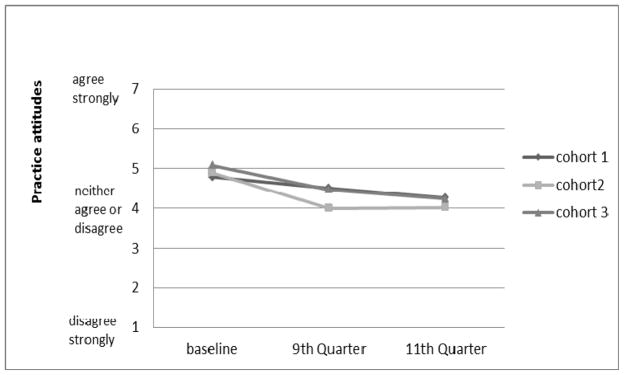

Practice Attitudes

Student attitudes tended to be slightly favorable to EBP, within 1.5 units of the neutral stance (4) on the 7-point Likert scale. Interestingly, the control cohort had the most favorable attitude toward reading the literature (P < .001), and Cohort 2 was more ambivalent about prioritizing research interpretation skills for continuing education (P = .031). All three cohorts disagreed with the propositions that an abstract contains all relevant information, that texts are more effective than original articles, and that a case study is more informative than a randomized trial. There was a cohort × quarter interaction (P = .018). The control cohort had a less favorable attitude toward the randomized trial in 11th quarter than in 9th quarter, while the opinions of the other cohorts remained stable across quarters.

Time Trends in Attitudes

Attitudes toward spending 2 to 3 hours per week reading scientific literature and toward making EBP continuing education a high priority both declined between baseline and the follow-ups (P < .001). The change in attitude toward reading was −0.7 (−1.0 to −0.5) in 9th quarter and −0.8 (−1.1 to −0.5) in 11th quarter. The change in attitude toward continuing education, shown in Figure 3, was −0.6 (−0.9 to −0.4) in 9th quarter and −0.8 (−1.1 to −0.5) in 11th quarter.

Fig 3.

Mean ratings of attitude toward priority of EBP continuing education (1 to 7 Likert scale) for the three cohorts at baseline, 9th quarter, and 11th quarter.

Knowledge Correlates

Knowledge scores were only weakly correlated with the attitude and self-appraised competency variables in both the 9th and 11th quarters. All correlations were |r| < 0.3, and only two variables reached |r| > 0.2.

DISCUSSION

Outcomes

The data from this study point toward mixed results for the first 2 cohorts of the new EBP-enriched curriculum. The main success is reflected in the favorable trend of the primary outcome: EBP knowledge scores improved over the first years of the new curriculum (Table 2). Each cohort scored better than the previous one, with a moderate to large effect-size advantage for Cohort 3 over the others. The improvement in EBP knowledge is consistent with what others have reported in systematic reviews of the health education literature.23,24 These results are particularly encouraging because the knowledge tests were given nine months or longer after the main EBP conceptual courses were taught. In the literature, by contrast, assessment usually occurs soon after an EBP course or workshop, for example, two weeks after completion in the recent study by Windish.25 Our data may therefore offer insight into the retention of this material, not only its initial understanding.

Interestingly, the average score on Windish’s25 biostatistics and study design exam was 58%, which was strikingly similar to our average scores (44.2% to 59.8%). Although we are comparing different tests in different populations (medical residents vs chiropractic interns), these relatively low averages in both populations show how difficult this material is to learn and retain. The superior performance of Cohort 3 over Cohort 2, the first students to receive the new curriculum, might be explained by increasing breadth and depth of EBP material, increased faculty experience, and/or changing expectations as the new curriculum became entrenched. The picture should become clearer as Cohorts 4 through 8 complete the program.

While “leakage” of the test content and questions over time could theoretically have accounted for some of the apparent improvement in the intervention cohorts, this seems relatively unlikely because the exams were not tied to grades, advancement, or even individual recognition or self-esteem (students were not notified of their individual scores). We took steps to ensure exam security by using multiple proctors and accounting for all exam forms. In addition, had leakage been a significant problem, the within-cohort improvement from 9th to 11th quarter would be expected to approach the magnitude of the improvement seen across cohorts.

Also of note was a modest improvement in behavior and knowledge in just two quarters (quarter effect in Table 2). This contrasts with a decline in statistical and research knowledge reportedly seen in senior medical residents compared to junior residents.26 Our success may, in part, be due to the continued exposure to this knowledge base through the journal club courses positioned in our 4th year of training. Alternatively, the change could be related to sampling error introduced if poorer students did not return the 11th quarter survey. The improved test scores could also simply reflect test-taking experience, although the fact that the tests were given approximately six months apart makes this less likely. The differences in behavior between 9th and 11th quarters cannot be explained by differential assignments requiring journal articles across cohorts.

Despite improving knowledge and changing behavior, there were generally no notable differences between cohorts in skills self-appraisal. Students accessed more information but did not feel more competent in retrieving and understanding research literature. In fact, the cohorts with more EBP training felt slightly less competent in understanding statistics than the control cohort did. Experience over time did seem to affect skills self-appraisal to some degree: students reported greater confidence in 11th quarter than in 9th quarter on every item except statistical understanding. Perhaps our findings reflect that the new-curriculum students have developed an appreciation of the complexities of modern research reporting25 and a better understanding of their own limitations. Although data are not available on chiropractors, Horton and Switzer27 report that physicians are able to understand only about 21% of research articles.

Favorable trends were also seen in self-reported behavior, with increasing use of databases such as PubMed and more reading of research articles. What is not clear, however, is whether these behaviors simply resulted from more mandatory coursework or reflect true self-directed inquiry. To be truly meaningful, the search ethic must be internalized. Unfortunately, some of the data from the attitudes survey cast doubt on whether this has happened.

To our surprise, the control cohort was more likely than the new-curriculum students to agree that “reading current scientific literature is important,” even though the new-curriculum students had greater reading responsibilities. In fact, appreciation for reading scientific literature was highest at baseline, before any exposure to the program. A similar decline was apparent in the waning belief that critical appraisal skills were a priority for continuing education (Figure 3). This may be related to the stress of a demanding program. There may also be an element of creeping nihilism. It may take some years for EBP to become fully integrated into university culture and to be seen as a practice standard rather than an additional rite of passage. Finally, much of the critical assessment training relentlessly exposes the flaws, many of them serious, in research studies. Unless that experience is counterbalanced by seeing EBP usefully applied in a clinic setting, the luster of keeping up with the literature begins to fade.

The findings regarding attitudes are complex and difficult to explain. They reflect a disconnect between increasing knowledge and decreasing priority given to keeping up with the literature. On the other hand, some of the findings were more congruent. For example, Cohort 3, which had the best overall knowledge scores, felt that a case study was not more relevant than a randomized trial to understanding a condition. This is an important distinction for graduates to appreciate, especially in areas where case studies outnumber RCTs, as with conservative care for spinal canal stenosis.28

Overall, we expected knowledge proficiency to be more strongly correlated with the various attitudes, skills, and behaviors. Surprisingly, this was not the case. The data reflect significant improvement in knowledge, but a lag in attitudes and a lack of clarity about whether we are achieving our behavioral objectives.

In a systematic review of RCTs and nonrandomized trials, Coomarasamy and Khan24 reported that single-course educational programs could improve EBP knowledge, but not attitudes and behavior. In contrast, programs that integrated EBP activities into a clinic setting achieved better outcomes in all three domains. Although our integrated program is not comparable to a single course, its penetration into the clinic setting was weak, especially in its first years of implementation. Floor clinicians did receive training in EBP skills, but the usual barriers of time and resources remain obstacles. Until this last critical component of the program is effectively implemented in the university clinics, improvement in attitudes and behaviors may remain problematic.

Limitations

An innovation of our EBP program was its global evaluation, as opposed to assessment of an individual course (eg, Lasater et al29). This gives a broader picture of the program but requires broader examination, and it makes it more difficult to identify which curricular elements are related to outcomes. We partly addressed this difficulty by using a curricular map that shows where test content is taught. Using the map and the knowledge questionnaire results, we were able to identify a concept that was being conveyed incorrectly in the classroom.19

Follow-up also became a challenge because the survey was not a required element of any particular course evaluation and there was no designated setting for questionnaire administration. Furthermore, because of the anonymity protocol, students who were unavailable during questionnaire administration could not be identified. The resulting low 11th-quarter follow-up rate may have biased the quarter effects. However, the direction of any bias is indeterminate. The apparent follow-up rate is also misleading because it does not account for dropouts and leaves of absence. The 11th-quarter response rate has since been improved by contacting students who do not sign a class attendance list. The questionnaire has also been made mandatory, but students retain the right to refuse the use of their data for research purposes.

The 4-year length of the chiropractic education program limited the number of cohorts we could follow. We have since received a second R25 grant to further build the EBP program. Data will be collected on additional cohorts, allowing us to assess time trends over eight cohorts. These data will show the effects of ongoing improvement in the student curriculum and of the expansion of faculty training. Ultimately, we need to see changes in practice behavior. Hence, ongoing assessment of graduates' practice activities and EBP needs is an essential part of our program evaluation and refinement.

Another potential limitation is that we used self-report measures to assess participants’ behaviors and skills. Written items and multiple-choice questions are appropriate for assessing core clinical knowledge,30 but they assess only one component of the EBP skill set. Self-assessment of skills and behavior, although of value, has two weaknesses. It is prone to recall bias, and participants may factor in other variables that affect how they perceive their own behavior.31 However, self-report was the most feasible data collection method available. More time-intensive and expensive methods of assessing skills and behaviors (eg, observation) have their own potential sources of bias and error.

CONCLUSION

The implementation of a broad-based EBP curriculum in a chiropractic training program is feasible and can result in 1) the acquisition of knowledge necessary to access and interpret scientific literature, 2) the retention and improvement of these skills over time, and 3) the enhancement of self-reported behaviors favoring utilization of quality online resources. It remains to be seen whether EBP skills and behaviors can be translated into private practice.

Acknowledgments

FUNDING SOURCES

This study was supported by the National Center for Complementary and Alternative Medicine (NCCAM) at the National Institutes of Health (grant no. R25 AT002880) titled “Evidence-Based Practice: Faculty & Curriculum Development.” The contents of this publication are the sole responsibility of the authors and do not necessarily reflect the official views of NCCAM.

Footnotes

Conflicts of interest

None.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Contributor Information

Mitchell Haas, Associate Vice President of Research, Center for Outcomes Studies, University of Western States, Portland, Oregon, USA.

Michael Leo, Consulting Statistician, Kaiser Permanente Center for Health Research, Portland, Oregon, USA.

David Peterson, Professor of Chiropractic Sciences, University of Western States, Portland, Oregon 97230, USA.

Ron LeFebvre, Professor of Clinical Sciences, University of Western States, Portland, Oregon, USA.

Darcy Vavrek, Assistant Professor of Research, Center for Outcomes Studies, University of Western States, Portland, Oregon, USA.

References

1. Sackett DL, Straus SE, Richardson WS, Rosenberg W, Haynes RB. Evidence-based medicine: how to practice and teach EBM. 2nd ed. London: Churchill Livingstone; 2000. pp. 1–280.

2. Krumholz HM, Radford MJ, Ellerbeck EF, Hennen J, Meehan TP, Petrillo M, Wang Y, Jencks SF. Aspirin for secondary prevention after acute myocardial infarction in the elderly: prescribed use and outcomes. Ann Intern Med. 1996;124:292–8. doi: 10.7326/0003-4819-124-3-199602010-00002.

3. Krumholz HM, Radford MJ, Wang Y, Chen J, Heiat A, Marciniak TA. National use and effectiveness of beta-blockers for the treatment of elderly patients after acute myocardial infarction: National Cooperative Cardiovascular Project. JAMA. 1998;280:623–9. doi: 10.1001/jama.280.7.623.

4. Mitchell JB, Ballard DJ, Whisnant JP, Ammering CJ, Samsa GP, Matchar DB. What role do neurologists play in determining the costs and outcomes of stroke patients? Stroke. 1996;27:1937–43. doi: 10.1161/01.str.27.11.1937.

5. Wong JH, Findlay JM, Suarez-Almazor ME. Regional performance of carotid endarterectomy. Appropriateness, outcomes, and risk factors for complications. Stroke. 1997;28:891–8. doi: 10.1161/01.str.28.5.891.

6. Delaney PM, Fernandez CE. Toward an evidence-based model for chiropractic education and practice. J Manipulative Physiol Ther. 1999;22:114–8. doi: 10.1016/s0161-4754(99)70117-x.

7. Ebrall P, Eaton S, Hinck G, Kelly B, Nook B, Pennacchio V. Chiropractic education: towards best practice in four areas of the curriculum. Chiropr J Aust. 2009;39:87–91.

8. Rose KA, Adams A. A survey of the use of evidence-based health care in chiropractic college clinics. J Chiropr Educ. 2000;14:71–7.

9. Fernandez CE, Delaney PM. Evidence-based health care in medical and chiropractic education: a literature review. J Chiropr Educ. 2004;18:103–15.

10. CAM Practitioner Research Education Project Grant Partnership (PAR-04-097) [Internet]. Bethesda, MD: National Institutes of Health; 2004 [cited 2012 Mar 20]. Available from: http://www.nlm.nih.gov/bsd/uniform_requirements.html.

11. Green B, Johnson C. Teaching clinical epidemiology in chiropractic: a first-year course in evidence-based health care. J Chiropr Educ. 1999;13:18–9.

12. Green B. Letters to the editor for teaching critical thinking and professional communication. J Chiropr Educ. 2001;15:8–9.

13. Fernandez C, Delaney P. Applying evidence-based health care to musculoskeletal patients as an educational strategy for chiropractic interns (a one-group pretest-posttest study). J Manipulative Physiol Ther. 2004;27:253–61. doi: 10.1016/j.jmpt.2004.02.004.

14. Smith M, Long C, Henderson C, Marchiori D, Hawk C, Meeker W, Killinger L. Report on the development, implementation, and evaluation of an evidence-based skills course: a lesson in incremental curricular change. J Chiropr Educ. 2004;18:116–26.

15. Feise RJ, Grod JP, Taylor-Vaisey A. Effectiveness of an evidence-based chiropractic continuing education workshop on participant knowledge of evidence-based health care. Chiropr Osteopat. 2006;14:18. doi: 10.1186/1746-1340-14-18. PMC1560147.

16. Green BN, Johnson CD. Use of a modified journal club and letters to editors to teach critical appraisal skills. J Allied Health. 2007;36:47–51.

17. LeFebvre R, Peterson D, Haas M, Gillette R, Novak C, Tapper J, Muench J. Training the evidence-based practitioner: University of Western States document on standards and competencies. J Chiropr Educ. 2011;25:30–7. doi: 10.7899/1042-5055-25.1.30.

18. Dawes M, Summerskill W, Glasziou P, Cartabellotta A, Martin J, Hopayian K, Porzsolt F, Burls A, Osborne J. Sicily statement on evidence-based practice. BMC Med Educ. 2005;5:1. doi: 10.1186/1472-6920-5-1.

19. Leo M, Peterson D, Haas M, LeFebvre R. Development and psychometric evaluation of a chiropractic evidence-based practice questionnaire. J Manipulative Physiol Ther. 2012;35. doi: 10.1016/j.jmpt.2012.10.011. In press.

20. Dunn G. Design and analysis of reliability studies: the statistical evaluation of measurement errors. New York: Oxford University Press; 1989.

21. Zimmerman DW. A note on interpretation of the paired-samples t test. J Educ Behav Stat. 1997;22:349–60.

22. Cohen J. Statistical power analysis for the behavioural sciences. London: Academic Press; 1969.

23. Taylor R, Reeves B, Ewings P, Binns S, Keast J, Mears R. A systematic review of the effectiveness of critical appraisal skills training for clinicians. Med Educ. 2000;34:120–5. doi: 10.1046/j.1365-2923.2000.00574.x.

24. Coomarasamy A, Khan KS. What is the evidence that postgraduate teaching in evidence based medicine changes anything? A systematic review. BMJ. 2004;329:1017–9. doi: 10.1136/bmj.329.7473.1017.

25. Windish DM. Brief curriculum to teach residents study design and biostatistics. Evid Based Med. 2011;16:100–4. doi: 10.1136/ebm.2011.04.0011.

26. Windish DM, Huot SJ, Green ML. Medicine residents’ understanding of the biostatistics and results in the medical literature. JAMA. 2007;298:1010–22. doi: 10.1001/jama.298.9.1010.

27. Horton NJ, Switzer SS. Statistical methods in the journal. N Engl J Med. 2005;353:1977–9. doi: 10.1056/NEJM200511033531823.

28. Stuber K, Sajko S, Kristmanson K. Chiropractic treatment of lumbar spinal stenosis: a review of the literature. J Chiropr Med. 2009;8:77–85. doi: 10.1016/j.jcm.2009.02.001. PMC2780929.

29. Lasater K, Salanti S, Fleishman S, Coletto J, Hong J, Lore R, Hammerschlag R. Learning activities to enhance research literacy in a CAM college curriculum. Altern Ther Health Med. 2009;15:46–54.

30. Ilic D. Assessing competency in evidence based practice: strengths and limitations of current tools in practice. BMC Med Educ. 2009;9:53. doi: 10.1186/1472-6920-9-53. PMC2728711.

31. Loza W, Green K. The Self-Appraisal Questionnaire: a self-report measure for predicting recidivism versus clinician-administered measures: a 5-year follow-up study. J Interpers Violence. 2003;18:781–97. doi: 10.1177/0886260503253240.