Abstract

For least squares regression, Efron et al. (2004) proposed an efficient solution path algorithm, the least angle regression (LAR). They showed that a slight modification of the LAR leads to the whole LASSO solution path. Both the LAR and LASSO solution paths are piecewise linear. Recently Wu (2011) extended the LAR to generalized linear models and the quasi-likelihood method. In this work we extend the LAR further to handle Cox’s proportional hazards model. The goal is to develop a solution path algorithm for the elastic net penalty (Zou and Hastie (2005)) in Cox’s proportional hazards model. This goal is achieved in two steps. First we extend the LAR to optimizing the log partial likelihood plus a fixed small ridge term. Then we define a path modification, which leads to the solution path of the elastic net regularized log partial likelihood. Our solution path is exact and piecewise determined by ordinary differential equation systems.

Key words and phrases: Cox’s proportional hazards model, elastic net, LARS, LASSO, ordinary differential equation, solution path algorithm

1. Introduction

The main goal of survival analysis is to characterize the dependence of the survival time Y on a covariate vector X = (X1, . . . , Xp)T. Cox’s proportional hazards model (Cox (1972)) assumes that the hazard function h(y|x) of a subject with covariate vector x takes the form

$$h(y \mid x) = h_0(y)\exp(\beta^{\mathsf T}x), \tag{1.1}$$

where h0(y) is a completely unspecified baseline hazard function and β = (β1, . . ., βp)T. In practice, not all covariates necessarily contribute to predicting survival outcomes. Thus, another goal of survival analysis is to identify important risk factors and quantify their risk contributions. As survival data with many predictors are prevalent in clinical trial studies, risk factor identification has become more important than ever for analyzing high-dimensional survival data. The problem is to select a submodel of (1.1) by providing a sparse estimate of β.

There are many model selection techniques in the literature and most of them have been successfully extended to survival analysis. They include such classical methods as the best-subset selection and stepwise selection. More recently, Tibshirani (1996) proposed to use the L1 penalty to regularize least squares regression; sparse estimate of the regression parameter is made possible due to the L1 penalty’s singularity at the origin. This technique was named the least absolute shrinkage and selection operator (LASSO), and later extended to the Cox proportional hazards model in Tibshirani (1997). However the LASSO penalty leads to biased estimates for true non-zero coefficients. To alleviate this bias issue, Fan and Li (2001) proposed the SCAD penalty, which is symmetric and piecewise quadratic. It is linear around the origin and flattens out near the two ends; in between, it is smoothly connected by two quadratic pieces. They showed that asymptotically the SCAD penalized estimate behaves like the oracle estimate were the true sparsity pattern known a priori. The oracle property of the SCAD was later extended to survival models in Fan and Li (2002). The adaptive-LASSO was proposed for least squares regression by Zou (2006), and for Cox’s proportional hazards model by Zhang and Lu (2007), and its oracle properties were established as well. There are many other techniques available for variable selection, including the elastic net (Zou and Hastie (2005)). See Fan and Lv (2010) and references therein for an overview of variable selection methods.

A novel least angle regression (LAR) solution path algorithm was proposed in Efron et al. (2004). The LAR produces a piecewise linear solution path for the least squares regression. They showed that slight modifications of the LAR lead to the LASSO and Forward Stagewise linear regression solution paths. Together they are called LARS. For data {(yi, zi), i = 1, . . . , n} with zi = (zi1, . . . , zip)T ∈ ℝp, ordinary least squares (OLS) regression solves

$$\min_{w\in\mathbb R^p}\ \frac12\sum_{i=1}^n\big(y_i - z_i^{\mathsf T}w\big)^2 \tag{1.2}$$

to estimate w = (w1, . . . , wp)T. Applying location and scale transformations if necessary, we assume without loss of generality that for j = 1, . . . , p, and .

For OLS, the LAR provides a solution path w(t) indexed by t ∈ [0, ∞). It starts at the origin with w(0) = 0; for large enough t, w(t) is the same as the full solution to (1.2). The intermediate solution path is piecewise linear; over each piece, it moves along the direction that keeps the correlation between the current residuals and each active predictor equal in absolute value. Denote the jth predictor vector by z(j) = (z1j , . . . , znj)T, and define the residual vector at t by e(w(t)) = (e1(w(t)), . . . , en(w(t)))T with $e_i(w(t)) = y_i - z_i^{\mathsf T}w(t)$ for i = 1, . . . , n. Then along the LAR solution path w(t), the current correlation e(w(t))Tz(j) has the same absolute value for each active predictor j. Note that

$$\frac{\partial}{\partial w_j}\,\frac12\sum_{i=1}^n\big(y_i - z_i^{\mathsf T}w\big)^2\bigg|_{w=w(t)} = -\sum_{i=1}^n z_{ij}\,e_i(w(t)) = -e(w(t))^{\mathsf T}z_{(j)}.$$

This implies that the least squares objective has first-order partial derivatives of the same absolute value for each active predictor along the LAR solution path. Mathematically,

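$$\left|\frac{\partial}{\partial w_j}\frac12\sum_{i=1}^n\big(y_i - z_i^{\mathsf T}w\big)^2\right|_{w=w(t)} = \left|\frac{\partial}{\partial w_{j'}}\frac12\sum_{i=1}^n\big(y_i - z_i^{\mathsf T}w\big)^2\right|_{w=w(t)} \tag{1.3}$$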

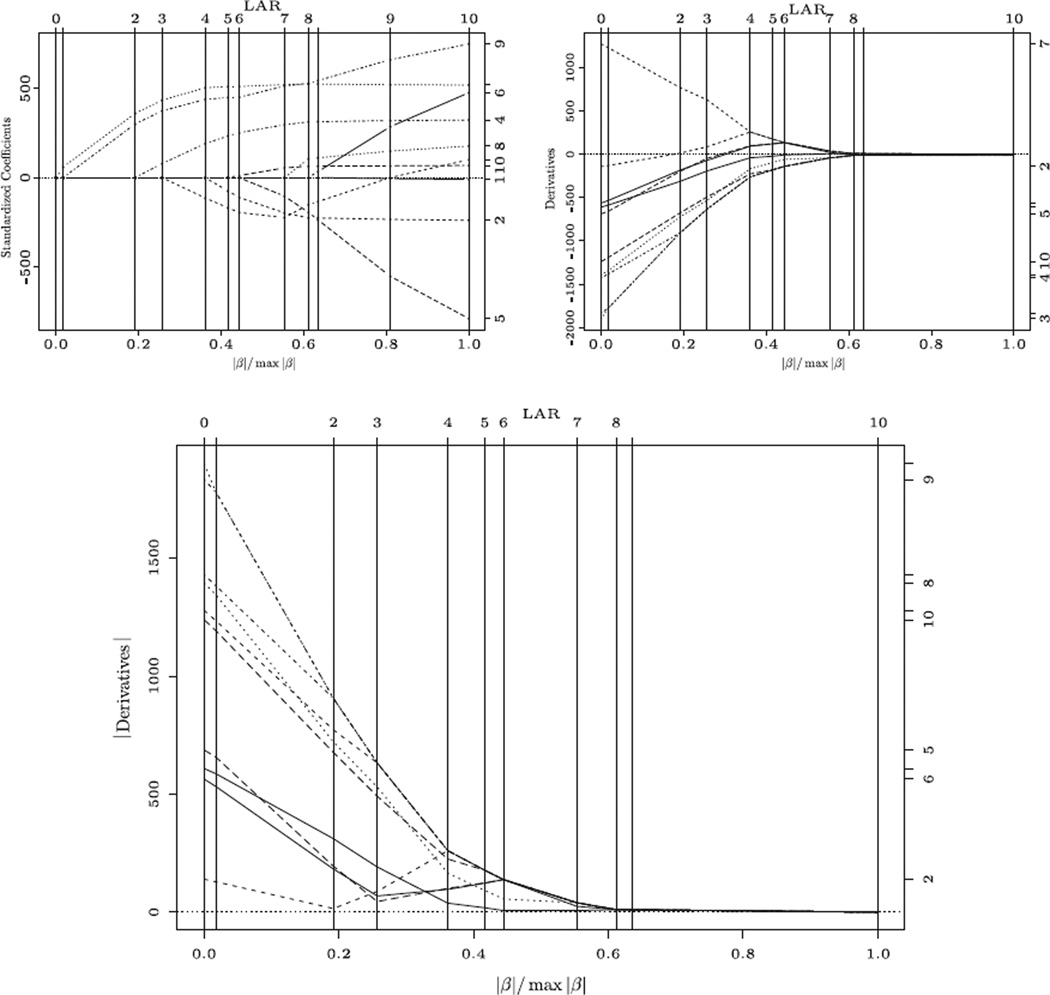

for any j and j′ in the active set at t. For the diabetes data in the R package lars, we plot the LAR solution path in the top left panel of Figure 1. The first-order partial derivatives along the LAR solution path are shown in the top right panel of Figure 1, and their absolute values are given in the bottom panel. One sees that, at the end of each LAR step, a new predictor joins the group of active predictors, sharing the honor of having the same largest absolute value of the first-order partial derivatives. The LAR algorithm terminates at the full OLS estimate of (1.2) when all the first-order partial derivatives are exactly zero. Based on this observation, Wu (2011) proposed an extension to handle generalized linear models and, more generally, the quasi-likelihood method.

Figure 1.

LAR path of diabetes data: the top left panel plots the LAR path wj(t) against the relative one-norm |w(t)|/|w(∞)| for each predictor j = 1, 2, . . . , 10; the top right panel and the bottom panel plot the first-order partial derivative of the objective in (1.2) with respect to wj and its absolute value, respectively, along the LAR path, for different predictors.

In this work, we extend the LAR to Cox’s proportional hazards model. With the elastic net penalty in mind, we add a fixed small ridge term to the log partial likelihood function and call this extension CoxLAR-ridge. When the ridge term is exactly zero, we have the original log partial likelihood and call the corresponding algorithm CoxLAR. As in Efron et al. (2004) and Wu (2011), we show that the CoxLAR-ridge can be slightly modified to give the whole solution path of its LASSO regularized counterpart; we call it CoxEN because the LASSO penalty together with a small ridge term gives the elastic net penalty. With the ridge term set to zero, the CoxEN includes the CoxLASSO, the LASSO regularized log partial likelihood, as a special case. Collectively, we refer to these algorithms as CoxLARS, in the same spirit as LARS in Efron et al. (2004). Besides the different model, another difference from Wu (2011) is that we include a ridge penalty term to accommodate the more general elastic net penalty. The elastic net penalty is highly desirable in that it can select more predictors than the sample size, whereas the number of predictors selected by the LASSO is at most the sample size. See Zou and Hastie (2005) for more discussion of this issue.

Previously Park and Hastie (2007) provided a solution path algorithm for L1-regularized generalized linear models and Cox’s proportional hazards model. Their algorithm is based on the predictor-corrector method of convex optimization. In their R package “glmpath”, one may choose an extremely small bound on the arc length (L1 norm) of each step to obtain an exact solution path. In this case, it essentially uses a warm start each time to compute the exact solution on a fine grid of the tuning parameter and connects these exact solutions by straight lines. They still need to solve many optimization problems, one at each grid point of the tuning parameter, and they did not address how the solution changes as the tuning parameter changes. Our new algorithms CoxLAR and CoxLASSO answer this question: the solution path propagates according to ordinary differential equation (ODE) systems. Thus the commonly used fourth-order Runge-Kutta method can be used to solve these ODE systems and obtain the whole CoxLARS solution paths. Other papers on solution path algorithms include Hastie et al. (2004), Rosset and Zhu (2007), Zou (2008), Friedman, Hastie, and Tibshirani (2008), Yuan and Zou (2009), and references therein. In particular, Zou (2008) proposed an efficient adaptive shrinkage method for Cox’s proportional hazards model and adapted the LARS to provide a piecewise linear solution path.

The rest of the article is organized as follows. Section 2 presents our new algorithm CoxLARS. Properties of the CoxLARS are given in Section 3. Numerical examples in Section 4 illustrate how our new algorithm works with data sets. A summary is given in Section 5. The appendix gives all technical proofs.

2. Extension of LARS: CoxLARS

Consider a sample of n subjects. Let Ti and Ci be the failure time and censoring time, respectively, for subject i = 1, . . . , n. Write Yi = min(Ti, Ci) and let the censoring indicator be δi = I(Ti ≤ Ci). Denote the covariate vector of the ith subject by xi = (xi1, . . . , xip)T. Assume that Ti and Ci are conditionally independent given covariate vector xi, and that the censoring mechanism is noninformative. Our data set is {(xi, yi, δi), i = 1, . . . , n}.

Assume the data come from model (1.1). For simplicity, we suppose there are no ties in the observed failure times, otherwise techniques in Breslow (1974) may be used. The log partial likelihood is given by

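$$\ell(\beta) = \sum_{i=1}^n\delta_i\Big\{x_i^{\mathsf T}\beta - \log\sum_{j\in R_i}\exp(x_j^{\mathsf T}\beta)\Big\}, \tag{2.1}$$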

where Ri = {j = 1, . . . , n : yj ≥ yi} denotes the risk set just before the time yi.
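For reference, (2.1) can be evaluated directly from its definition. The following is a minimal NumPy sketch, not the author's code; it assumes no tied failure times, as in the text, and the function name is hypothetical. The log-sum-exp shift only guards against overflow and does not change the value.

```python
import numpy as np

def cox_log_partial_likelihood(beta, X, y, delta):
    """Log partial likelihood (2.1); assumes no tied failure times."""
    eta = X @ beta                               # linear predictors x_i^T beta
    ll = 0.0
    for i in np.flatnonzero(delta):              # only observed failures contribute
        risk = y >= y[i]                         # risk set R_i = {j : y_j >= y_i}
        m = eta[risk].max()                      # shift for a stable log-sum-exp
        ll += eta[i] - (m + np.log(np.exp(eta[risk] - m).sum()))
    return ll

# At beta = 0 each failure i contributes -log|R_i|, so here the value is -log(3 * 2 * 1).
X = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
y = np.array([1.0, 2.0, 3.0])
delta = np.array([1, 1, 1])
print(cox_log_partial_likelihood(np.zeros(2), X, y, delta), -np.log(6.0))
```

With a censored middle subject (delta = [1, 0, 1]) only the first and third risk sets contribute, giving −log 3 at β = 0.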

Note that when the elastic net penalty (Zou and Hastie, 2005) is considered, we are solving

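$$\min_{\beta}\ \Big\{-\ell(\beta) + \frac{\gamma}{2}\sum_{j=1}^p\beta_j^2 + \lambda\sum_{j=1}^p|\beta_j|\Big\}, \tag{2.2}$$

where ℓ(β) denotes the log partial likelihood (2.1), and γ ≥ 0 and λ ≥ 0 are two regularization parameters. In order to incorporate the elastic net into our consideration, we include a small ridge penalty term and set

$$L(\beta) = -\ell(\beta) + \frac{\gamma}{2}\sum_{j=1}^p\beta_j^2 \tag{2.3}$$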

for some fixed small γ ≥ 0. Note that this reduces to the LASSO penalized counterpart when γ = 0. It is known that the LASSO penalty can select at most n predictors in the p > n case. However, as long as γ > 0, we can select more than n predictors by solving (2.2) when p > n. Our approach is similar to the LARS-EN algorithm proposed in Zou and Hastie (2005), which adapts the LARS algorithm to obtain the elastic net solution path for each fixed ridge term.

We use t to index our solution path. As motivated by (1.3), our extension CoxLAR-ridge seeks a solution path β(t) of (2.3) that satisfies

$$|b_j(\beta(t))| = |b_{j'}(\beta(t))| \tag{2.4}$$

for any two predictors j and j′ that are active at t.

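For L(β), denote its vector of first-order partial derivatives by b(β) = (b1(β), . . . , bp(β))T and its matrix of second-order partial derivatives by M(β) = (mjk(β))1≤j,k≤p, where bj(β) = ∂L(β)/∂βj and mjk(β) = ∂²L(β)/∂βj∂βk. Writing $\pi_{il}(\beta) = \exp(x_l^{\mathsf T}\beta)\big/\sum_{r\in R_i}\exp(x_r^{\mathsf T}\beta)$ for l ∈ Ri, these are given by

$$b_j(\beta) = -\sum_{i=1}^n\delta_i\Big\{x_{ij} - \sum_{l\in R_i}\pi_{il}(\beta)\,x_{lj}\Big\} + \gamma\beta_j,$$

$$m_{jk}(\beta) = \sum_{i=1}^n\delta_i\Big\{\sum_{l\in R_i}\pi_{il}(\beta)\,x_{lj}x_{lk} - \Big(\sum_{l\in R_i}\pi_{il}(\beta)\,x_{lj}\Big)\Big(\sum_{l\in R_i}\pi_{il}(\beta)\,x_{lk}\Big)\Big\} + \gamma I_{\{j=k\}}$$

for 1 ≤ j, k ≤ p, where I{j=k} = 1 if j = k and 0 otherwise.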
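The derivative formulas can be checked numerically. The sketch below is a hypothetical NumPy helper, assuming the ridge term is written as (γ/2)Σβj² (so the Hessian carries γ on its diagonal) and that there are no tied failure times; it returns L(β), b(β), and M(β) and compares them with central finite differences.

```python
import numpy as np

def cox_objective_grad_hess(beta, X, y, delta, gamma):
    """L(beta) = -loglik(beta) + (gamma/2)*||beta||^2 (assumed ridge scaling),
    with gradient b(beta) and Hessian M(beta); no tied failure times."""
    eta = X @ beta
    L = 0.5 * gamma * beta @ beta
    b = gamma * beta
    M = gamma * np.eye(X.shape[1])
    for i in np.flatnonzero(delta):
        risk = y >= y[i]                         # risk set R_i
        shift = eta[risk].max()
        w = np.exp(eta[risk] - shift)
        pi = w / w.sum()                         # weights pi_il, l in R_i
        xbar = pi @ X[risk]                      # sum_l pi_il x_l
        L -= eta[i] - shift - np.log(w.sum())
        b = b - (X[i] - xbar)
        M = M + (X[risk].T * pi) @ X[risk] - np.outer(xbar, xbar)
    return L, b, M

rng = np.random.default_rng(1)
X = rng.standard_normal((20, 3))
y = rng.exponential(size=20)
delta = (rng.uniform(size=20) < 0.7).astype(int)
beta = np.array([0.3, -0.2, 0.1])
L0, b0, M0 = cox_objective_grad_hess(beta, X, y, delta, 0.5)
eps = 1e-6
fd = np.array([(cox_objective_grad_hess(beta + eps * e, X, y, delta, 0.5)[0]
                - cox_objective_grad_hess(beta - eps * e, X, y, delta, 0.5)[0]) / (2 * eps)
               for e in np.eye(3)])
print(np.max(np.abs(fd - b0)))   # only finite-difference error remains
```

The same finite-difference idea applied to b(β) checks M(β).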

At t with solution β(t), denote the corresponding active index set by 𝒜(β(t)) and, interchangeably, by 𝒜t. For any two index sets 𝒜 and ℬ, vector b, and matrix M, let b𝒜 be the sub-vector of b consisting of those elements with index in 𝒜, and M𝒜,ℬ be the sub-matrix of M consisting of those elements with row index in 𝒜 and column index in ℬ. When 𝒜 = {j} is a singleton, we write Mj,ℬ, similarly M𝒜,k when ℬ = {k}. Denote the complement of 𝒜 by 𝒜c = {1, . . . , p} \ 𝒜.

Note that, at any t with active predictor set 𝒜, the corresponding solution component is set to zero for any inactive predictor, namely βj (t) = 0 for any j ∉ 𝒜. Thus it is enough to find how the solution coefficient components, corresponding to active predictors βj(t) with j ∈ 𝒜, are updated. Recall that our desired solution path should be such that the active predictors have the same absolute value of the first-order partial derivatives as at (2.4). Thus, as t grows, |bj(β(t))| decreases at the same speed for j ∈ 𝒜. Assume that in a small neighborhood of t, the active set 𝒜t remains the same as 𝒜, say. Note that

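$$\frac{d}{dt}\,b_{\mathcal A}(\beta(t)) = M_{\mathcal A,\mathcal A}(\beta(t))\,\frac{d\beta_{\mathcal A}(t)}{dt} \tag{2.5}$$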

since the active set 𝒜t remains the same as 𝒜 and thus β𝒜c (t) = 0 in a small neighborhood of t.

According to (2.4),

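$$\frac{d}{dt}\,b_{\mathcal A}(\beta(t)) = -c(t)\,\operatorname{sign}\big(b_{\mathcal A}(\beta(t))\big) \tag{2.6}$$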

for some c(t) > 0. Here the negative sign on the right-hand side ensures that |bj(β(t))| is decreasing in t for each j ∈ 𝒜. Furthermore, (2.6) guarantees that the |bj(β(t))|, j ∈ 𝒜, decrease at the same speed. Note that we can think of t as a function of τ, with the solution path indexed by τ. With an appropriate choice of t(τ), (2.6) holds with t replaced by τ and c(t) replaced by a constant. Different choices of c(t) lead to different indexing systems of the same solution path. Thus, without loss of generality, we set c(t) ≡ 1 in (2.6) and write

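$$\frac{d}{dt}\,b_{\mathcal A}(\beta(t)) = -\operatorname{sign}\big(b_{\mathcal A}(\beta(t))\big) \tag{2.7}$$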

In fact this turns out to be a good choice in that t here is simply related to the maximum absolute value of the first-order derivatives at t, as we shall see later.
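The link between t and the largest absolute derivative can be checked in one line. As a sketch, assuming each active j satisfies (2.7) componentwise and that sign(bj(β(t))) stays constant on each piece:

$$\frac{d}{dt}\big|b_j(\beta(t))\big| = \operatorname{sign}\big(b_j(\beta(t))\big)\,\frac{d}{dt}b_j(\beta(t)) = -\operatorname{sign}\big(b_j(\beta(t))\big)^2 = -1, \qquad j\in\mathcal A_t .$$

Hence |bj(β(t))| = |bj(β(t0))| − (t − t0) for an active predictor present from t0, and a predictor joining at a transition point tk starts from |bj(β(tk))| = −tk. Starting from t0 = −maxj |bj(0)|, every active predictor therefore has |bj(β(t))| = −t, which is the identity t = −maxj |bj(β(t))| used in Section 2.1.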

Based on (2.5) and (2.7), the solution path should satisfy

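$$\frac{d\beta_{\mathcal A}(t)}{dt} = -\big(M_{\mathcal A,\mathcal A}(\beta(t))\big)^{-1}\operatorname{sign}\big(b_{\mathcal A}(\beta(t))\big) \tag{2.8}$$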

Recall that β𝒜c (t) = 0. These completely define the path updating direction dβ(t)/dt. Thus for any t* > t, we may take a tentative solution path piece

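$$\tilde\beta_{\mathcal A^c}(t^*) = 0, \qquad \tilde\beta_{\mathcal A}(t^*) = \beta_{\mathcal A}(t) - \int_t^{t^*}\big(M_{\mathcal A,\mathcal A}(\tilde\beta(u))\big)^{-1}\operatorname{sign}\big(b_{\mathcal A}(\tilde\beta(u))\big)\,du. \tag{2.9}$$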

With this tentative solution path piece, we implicitly assume that the active set remains the same between t and t*. Assume that, at the start with t, |bj (β(t))| = |bj′(β(t))| for any j, j′ ∈ 𝒜. Then (2.9) guarantees that |bj(β̃(t*))| = |bj′(β̃(t*))| for any j, j′ ∈ 𝒜 along the tentative solution path piece β̃(t*) for t* > t and, further, that |bj(β̃ (t*))| is decreasing in t* for j ∈ 𝒜. Thus, as t* increases, some inactive predictor m ∉ 𝒜 may have |bm(β̃ (t*))| ≥ |bj(β̃ (t*))| for j ∈ 𝒜. Whenever this happens, the active predictor set has changed and we cannot use (2.9) any more. For any j ∉ 𝒜, define

$$T_j = \min\big\{t^* > t : |b_j(\tilde\beta(t^*))| = |b_m(\tilde\beta(t^*))|\big\}, \tag{2.10}$$

where m is any member of the active predictor set 𝒜. Then the active set changes at T = minj∉𝒜 Tj from 𝒜 to 𝒜 ∪ {j*}, where j* = argminj∉𝒜 Tj.
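To make the piece (2.9) concrete, the sketch below evaluates the direction (2.8) and advances the tentative piece by one classical RK4 step. It is a minimal NumPy illustration under stated assumptions: the ridge term is taken as (γ/2)Σβj², there are no tied failure times, sign(b𝒜) is held fixed over the piece (it cannot change while the common value |bj| = −t stays positive), and all function names are hypothetical. The final check confirms the defining property (2.7): over a step of length h, b𝒜 decreases by h·sign(b𝒜).

```python
import numpy as np

def grad_hess(beta, X, y, delta, gamma):
    """b(beta), M(beta) for -loglik + (gamma/2)||beta||^2 (no tied failure times)."""
    eta = X @ beta
    b, M = gamma * beta, gamma * np.eye(X.shape[1])
    for i in np.flatnonzero(delta):
        risk = y >= y[i]
        w = np.exp(eta[risk] - eta[risk].max())
        pi = w / w.sum()
        xbar = pi @ X[risk]
        b = b - (X[i] - xbar)
        M = M + (X[risk].T * pi) @ X[risk] - np.outer(xbar, xbar)
    return b, M

def direction(beta, A, s, X, y, delta, gamma):
    """Path updating direction (2.8): -M_AA^{-1} s on A, zero off A."""
    _, M = grad_hess(beta, X, y, delta, gamma)
    d = np.zeros_like(beta)
    d[A] = -np.linalg.solve(M[np.ix_(A, A)], s)
    return d

def rk4_piece_step(beta, A, s, h, X, y, delta, gamma):
    """One classical RK4 step along the tentative piece (2.9), with s = sign(b_A) fixed."""
    f = lambda bt: direction(bt, A, s, X, y, delta, gamma)
    k1 = f(beta)
    k2 = f(beta + 0.5 * h * k1)
    k3 = f(beta + 0.5 * h * k2)
    k4 = f(beta + h * k3)
    return beta + h * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0

rng = np.random.default_rng(3)
X = rng.standard_normal((30, 4))
y = rng.exponential(size=30)
delta = np.ones(30, dtype=int)
A = [0, 2]
beta0 = np.array([0.2, 0.0, -0.1, 0.0])         # zero off the active set
b0, _ = grad_hess(beta0, X, y, delta, 0.3)
s = np.sign(b0[A])
h = 0.01
beta1 = rk4_piece_step(beta0, A, s, h, X, y, delta, 0.3)
b1, _ = grad_hess(beta1, X, y, delta, 0.3)
print(b1[A] - (b0[A] - h * s))                  # close to zero, as (2.7) requires
```

Transition points Tj in (2.10) can then be located by monitoring |bj| for inactive j after each such step.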

2.1. Algorithm CoxLAR(-ridge)

The previous discussion leads us to our extension CoxLAR(-ridge) algorithm that is systematically presented next.

We initialize our solution path by identifying the predictor j so that the objective function L(β) changes fastest with respect to βj beginning at β = 0; set

$$t_0 = -\max_{1\le j\le p}|b_j(\mathbf 0)| \tag{2.11}$$

This specially defined t0, together with (2.7), leads to t = –maxj |bj (β(t))| along our solution path. Our solution path begins with β(t0) = 0; the corresponding initial active predictor set is 𝒜t0 = {argmaxj |bj(0)|}.
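The initialization only needs b(0). At β = 0 every risk-set weight is 1/|Ri| and the ridge gradient vanishes whatever its scaling, so t0 does not depend on γ. A minimal sketch (hypothetical function name, no tied failure times):

```python
import numpy as np

def initialize_path(X, y, delta):
    """t0 of (2.11) and the initial active set; b(0) = -sum_i delta_i (x_i - mean of x over R_i)."""
    b0 = np.zeros(X.shape[1])
    for i in np.flatnonzero(delta):
        risk = y >= y[i]                         # risk set R_i
        b0 -= X[i] - X[risk].mean(axis=0)        # equal weights 1/|R_i| at beta = 0
    t0 = -np.max(np.abs(b0))
    active = [int(np.argmax(np.abs(b0)))]
    return t0, active, b0

X = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
y = np.array([1.0, 2.0, 3.0])
delta = np.array([1, 1, 1])
t0, active, b0 = initialize_path(X, y, delta)
print(t0, active)   # -1.5 [0]
```

Here b(0) = (−1.5, −1/6), so the first predictor enters first and the path starts at t0 = −1.5.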

Given t0, β(t0), and 𝒜t0, we update our solution path using (2.9) until a new variable joins the active set at some t1(> t0) to be determined. We may temporarily update the solution using

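$$\tilde\beta_{\mathcal A_{t_0}^c}(t) = 0,\qquad \tilde\beta_{\mathcal A_{t_0}}(t) = -\int_{t_0}^{t}\big(M_{\mathcal A_{t_0},\mathcal A_{t_0}}(\tilde\beta(u))\big)^{-1}\operatorname{sign}\big(b_{\mathcal A_{t_0}}(\tilde\beta(u))\big)\,du \tag{2.12}$$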

for t > t0. Here β̃(t) is a temporary solution path defined for any t > t0. For any j ∉ 𝒜t0, let

$$T_j = \min\big\{t > t_0 : |b_j(\tilde\beta(t))| = |b_m(\tilde\beta(t))|\big\}, \tag{2.13}$$

where m ∈ 𝒜t0. Then

$$t_1 = \min_{j\notin\mathcal A_{t_0}} T_j \tag{2.14}$$

is a transition point because the set of active predictor variables changes there.

The CoxLAR-ridge algorithm updates by setting

$$\beta(t) = \tilde\beta(t) \tag{2.15}$$

for all t ∈ [t0, t1]. The active predictor set stays the same for t ∈ [t0, t1), namely 𝒜t = 𝒜t0. At t1, we update the active predictor set by setting 𝒜t1 = 𝒜t0 ∪ {j ∉ 𝒜t0: Tj = t1}.

At t = t1, the number of active predictors is two. Due to (2.5), (2.8), (2.12), (2.13) and (2.14), solution β(t1) satisfies |bj(β(t1))| = |bj′(β(t1))| > |bk(β(t1))| for any k ∉ 𝒜t1 and any j, j′ ∈ 𝒜t1.

The CoxLAR-ridge algorithm continues with the updated t1, β(t1), and 𝒜t1, proceeding according to Algorithm 1. Note that at the end of the mth CoxLAR-ridge step, the transition point tm, solution β(tm), and active predictor set 𝒜tm satisfy tm = −|bj(β(tm))| for any j ∈ 𝒜tm, and |bj(β(tm))| = |bj′(β(tm))| > |bk(β(tm))| for any k ∉ 𝒜tm and any j, j′ ∈ 𝒜tm.

At the end of the (p – 1)th CoxLAR-ridge step in Step 2 of Algorithm 1, all predictors are active. Then, in Step 3, the CoxLAR solution path moves along a direction such that the absolute values of the first-order partial derivatives decrease at the same speed until all the first-order partial derivatives are exactly zero, which happens at t = 0. The solution at t = 0 exactly corresponds to the full solution argminβ L(β), just as the LAR solution ends at the full OLS estimate. This completes our CoxLAR-ridge solution path. When the ridge term in L(β) is exactly zero by setting γ = 0, we are essentially working directly with the original log partial likelihood function and the CoxLAR-ridge is also the CoxLAR in this case.

Remark 1. Note that the instantaneous path updating direction is given by $-\big(M_{\mathcal A_t,\mathcal A_t}(\beta(t))\big)^{-1}\operatorname{sign}\big(b_{\mathcal A_t}(\beta(t))\big)$. For least squares regression, the objective function is exactly quadratic, and thus M𝒜t,𝒜t depends only on the active set 𝒜t, not on the current solution β𝒜t(t). Moreover, sign(b𝒜t(β(t))) does not change in a small neighborhood of t. Hence, within a small neighborhood of t, the instantaneous path updating direction is constant for least squares regression. This explains the piecewise linearity of the LAR path (Efron et al. (2004)) and of solution paths in more general settings (Rosset and Zhu (2007)). For the log partial likelihood, in contrast, M𝒜t,𝒜t(β(t)) changes with β(t), so the path is generally not piecewise linear.
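A quick numerical illustration of Remark 1, under the same assumptions as before (hypothetical helper names, no tied failure times, γ = 0 here): for least squares the Hessian is ZᵀZ at every w, so the direction for a fixed active set and sign vector never changes, whereas the Cox Hessian M(β) moves with β, so the same active set and signs give different directions at two points.

```python
import numpy as np

def cox_hessian(beta, X, y, delta, gamma):
    """M(beta) for -loglik + (gamma/2)||beta||^2, assuming no tied failure times."""
    eta = X @ beta
    M = gamma * np.eye(X.shape[1])
    for i in np.flatnonzero(delta):
        risk = y >= y[i]
        w = np.exp(eta[risk] - eta[risk].max())
        pi = w / w.sum()
        xbar = pi @ X[risk]
        M += (X[risk].T * pi) @ X[risk] - np.outer(xbar, xbar)
    return M

def updating_direction(M, A, s):
    """Instantaneous direction -(M_AA)^{-1} s on the active set A."""
    return -np.linalg.solve(M[np.ix_(A, A)], s)

rng = np.random.default_rng(2)
X = rng.standard_normal((25, 3))
y = rng.exponential(size=25)
delta = np.ones(25, dtype=int)
A, s = [0, 1], np.array([-1.0, 1.0])

# Same active set and signs, two values of beta with beta_j = 0 off A.
d_at_0 = updating_direction(cox_hessian(np.zeros(3), X, y, delta, 0.0), A, s)
d_at_b = updating_direction(cox_hessian(np.array([0.8, -0.5, 0.0]), X, y, delta, 0.0), A, s)
print(d_at_0, d_at_b)   # the two directions differ, so the Cox path bends
```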

Algorithm 1. CoxLAR(-ridge) for the Cox’s proportional hazards model.

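Step 1. Initialize by setting t0 = −maxj |bj(0)|, β(t0) = 0, and 𝒜t0 = {argmaxj |bj(0)|}.

Step 2. For m = 0, 1, . . . , p − 2, take the tentative solution path

$$\tilde\beta_{\mathcal A_{t_m}^c}(t) = 0,\qquad \tilde\beta_{\mathcal A_{t_m}}(t) = \beta_{\mathcal A_{t_m}}(t_m) - \int_{t_m}^{t}\big(M_{\mathcal A_{t_m},\mathcal A_{t_m}}(\tilde\beta(u))\big)^{-1}\operatorname{sign}\big(b_{\mathcal A_{t_m}}(\tilde\beta(u))\big)\,du$$

for t ≥ tm. Let tm+1 = minj∉𝒜tm Tj, where

$$T_j = \min\big\{t > t_m : |b_j(\tilde\beta(t))| = |b_k(\tilde\beta(t))|\big\}, \qquad k\in\mathcal A_{t_m}.$$

Update the solution path with β(t) = β̃(t) for t ∈ [tm, tm+1]. Set 𝒜t = 𝒜tm for t ∈ [tm, tm+1) and 𝒜tm+1 = 𝒜tm ∪ {j ∉ 𝒜tm : Tj = tm+1}.

Step 3. At the end of Step 2, 𝒜tp−1 is {1, 2, . . . , p}. Next take

$$\beta(t) = \beta(t_{p-1}) - \int_{t_{p-1}}^{t}\big(M(\beta(u))\big)^{-1}\operatorname{sign}\big(b(\beta(u))\big)\,du$$

and 𝒜t = {1, 2, . . . , p} for t between tp−1 and tp = 0.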
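Putting Steps 1 to 3 together, the following is a rough, self-contained sketch of Algorithm 1, assuming the ridge term (γ/2)Σβj², no tied failure times, and the "one at a time" condition. It is not the author's implementation, which used Matlab's adaptive solver: it swaps in fixed-step classical RK4 for each ODE piece and locates each transition point Tj by bisecting the step length, comparing the largest inactive |bj| with the mean of the active |bj|. All function names are hypothetical.

```python
import numpy as np

def grad_hess(beta, X, y, delta, gamma):
    """b(beta), M(beta) for L = -loglik + (gamma/2)||beta||^2, no tied failure times."""
    eta = X @ beta
    b, M = gamma * beta, gamma * np.eye(X.shape[1])
    for i in np.flatnonzero(delta):
        risk = y >= y[i]
        w = np.exp(eta[risk] - eta[risk].max())
        pi = w / w.sum()
        xbar = pi @ X[risk]
        b = b - (X[i] - xbar)
        M = M + (X[risk].T * pi) @ X[risk] - np.outer(xbar, xbar)
    return b, M

def rk4_step(beta, A, s, h, X, y, delta, gamma):
    """One classical RK4 step of d beta_A/dt = -M_AA^{-1} s, with beta fixed at 0 off A."""
    def f(bt):
        d = np.zeros_like(bt)
        M = grad_hess(bt, X, y, delta, gamma)[1]
        d[A] = -np.linalg.solve(M[np.ix_(A, A)], s)
        return d
    k1 = f(beta)
    k2 = f(beta + 0.5 * h * k1)
    k3 = f(beta + 0.5 * h * k2)
    k4 = f(beta + h * k3)
    return beta + h * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0

def coxlar_ridge(X, y, delta, gamma=0.5, n_steps=400, n_bisect=40):
    """Approximate CoxLAR-ridge path: returns beta at t = 0 and the knots (t, beta, A)."""
    p = X.shape[1]
    beta = np.zeros(p)
    b, _ = grad_hess(beta, X, y, delta, gamma)
    t = -np.max(np.abs(b))                       # Step 1: t0
    A = [int(np.argmax(np.abs(b)))]
    h = -t / n_steps                             # base step size
    knots = [(t, beta.copy(), list(A))]

    def joined(bv, act):
        # True if some inactive |b_j| has reached the common active level
        inactive = [j for j in range(p) if j not in act]
        return bool(inactive) and np.abs(bv[inactive]).max() >= np.abs(bv[act]).mean()

    while t < 0:                                 # Steps 2 and 3
        s = np.sign(b[A])                        # fixed on a piece since |b_A| = -t > 0
        step = min(h, -t)
        new = rk4_step(beta, A, s, step, X, y, delta, gamma)
        bn, _ = grad_hess(new, X, y, delta, gamma)
        if joined(bn, A):
            lo, hi = 0.0, step                   # bisect the step to locate T_j
            for _ in range(n_bisect):
                mid = 0.5 * (lo + hi)
                bm, _ = grad_hess(rk4_step(beta, A, s, mid, X, y, delta, gamma),
                                  X, y, delta, gamma)
                lo, hi = (lo, mid) if joined(bm, A) else (mid, hi)
            step = hi
            new = rk4_step(beta, A, s, step, X, y, delta, gamma)
            bn, _ = grad_hess(new, X, y, delta, gamma)
            inactive = [j for j in range(p) if j not in A]
            A = A + [inactive[int(np.argmax(np.abs(bn[inactive])))]]
            knots.append((t + step, new.copy(), list(A)))
        t = t + step
        beta, b = new, bn
    return beta, knots

rng = np.random.default_rng(0)
n, p = 40, 3
X = rng.standard_normal((n, p))
T = rng.exponential(1.0 / np.exp(X @ np.array([1.0, -1.0, 0.5])))
C = rng.uniform(0.0, 3.0, size=n)
y, delta = np.minimum(T, C), (T <= C).astype(int)
beta_end, knots = coxlar_ridge(X, y, delta, gamma=0.5)
b_end, _ = grad_hess(beta_end, X, y, delta, 0.5)
print(len(knots), np.max(np.abs(b_end)))         # gradient should be near zero at t = 0
```

Because the path ends at argmin L(β), a near-zero gradient at t = 0 is a cheap sanity check on the step size and the transition-point search.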

2.2. Cox-LASSO modification

Efron et al. (2004) showed that the whole LASSO regularized least squares regression solution path can be obtained by a slight modification of the LAR. This is confirmed by Wu (2011). Next we define our Cox-LASSO modification and prove that CoxLAR-ridge with the Cox-LASSO modification produces the whole elastic net regularized solution path for Cox’s proportional hazards model; this follows by noting that adding a LASSO penalty to L(β) yields the elastic net penalized log partial likelihood function in (2.2).

Consider the LASSO regularized counterpart of (2.3),

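$$\min_{\beta}\ \Big\{L(\beta) + \lambda\sum_{j=1}^p|\beta_j|\Big\}, \tag{2.16}$$

which is exactly the same as (2.2), and is equivalent to

$$\min_{\beta}\ L(\beta)\quad\text{subject to}\quad \sum_{j=1}^p|\beta_j|\le s, \tag{2.17}$$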

where two regularization parameters λ ≥ 0 and s ≥ 0 are in some one-to-one correspondence.

Let β̂ be a LASSO solution to (2.16). We can show that the sign of any nonzero component β̂j must disagree with the sign of the current derivative bj(β̂); see Lemma 2 in Section 3.

Suppose t = t* at the end of a CoxLAR-ridge step and that we have a new active set 𝒜*. At the next CoxLAR-ridge step with t ∈ [t*, T] for some T to be determined, our solution path moves along the tentative solution path

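$$\tilde\beta_{(\mathcal A^*)^c}(t) = 0,\qquad \tilde\beta_{\mathcal A^*}(t) = \beta_{\mathcal A^*}(t^*) - \int_{t^*}^{t}\big(M_{\mathcal A^*,\mathcal A^*}(\tilde\beta(u))\big)^{-1}\operatorname{sign}\big(b_{\mathcal A^*}(\tilde\beta(u))\big)\,du \tag{2.18}$$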

for t ≥ t*. The end point T is given by T = minj∉𝒜* Tj, where

$$T_j = \min\big\{t > t^* : |b_j(\tilde\beta(t))| = |b_m(\tilde\beta(t))|\big\}$$

for any m ∈ 𝒜*.

For some j ∈ 𝒜*, β̃j(t) may have changed sign at some point between t* and T, in which case the sign restriction given in Lemma 2 must have been violated. We set Sj = min{t ∈ (t*, ∞) : β̃j(t) = 0} for j ∈ 𝒜*, where β̃j(t) is the jth component of β̃ (t) defined by (2.18). If S = minj∈𝒜* Sj < T, β̃(T) defined by (2.18) cannot be a LASSO regularized solution to (2.16) since the sign restriction in Lemma 2 has already been violated. The Cox-LASSO modification can be applied to ensure that we can get the LASSO regularized solution to (2.16).

Cox-LASSO modification: If S < T, stop the ongoing CoxLAR-ridge step at S and remove j̃ from the active set 𝒜* by setting 𝒜S = 𝒜* \ {j̃}, where j̃ is chosen such that Sj̃ = S. At the new transition point S, the new path updating direction is calculated using (2.8) based on the new active predictor set 𝒜* \ {j̃}.
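When the tentative piece (2.18) is available only on a time grid, as in Section 4, each Sj can be approximated by the first sign change of β̃j along the grid, with linear interpolation inside the bracketing interval. The helper below is a hypothetical sketch of that check; a predictor that has just joined starts at exactly zero, so a zero at the first grid point is deliberately not counted.

```python
import numpy as np

def first_zero_crossing(ts, path, active):
    """Approximate S = min_j S_j over active j, where S_j is the first t > ts[0] at which
    path[:, j] reaches zero; returns (S, j_tilde), or (inf, None) if nothing crosses."""
    best_S, best_j = np.inf, None
    for j in active:
        v = path[:, j]
        for k in range(1, len(ts)):
            if v[k - 1] * v[k] < 0 or (v[k] == 0 and v[k - 1] != 0):
                # linear interpolation of the zero inside [ts[k-1], ts[k]]
                S = ts[k - 1] + (ts[k] - ts[k - 1]) * v[k - 1] / (v[k - 1] - v[k])
                if S < best_S:
                    best_S, best_j = S, j
                break                                # only the first crossing matters
    return best_S, best_j

ts = np.linspace(0.0, 1.0, 5)                        # grid over [t*, t* + 1]
path = np.array([[1.0, 0.0, 0.3],
                 [0.5, 0.2, 0.1],
                 [0.0, 0.4, -0.1],
                 [-0.5, 0.6, -0.3],
                 [-1.0, 0.8, -0.5]])
S, j_tilde = first_zero_crossing(ts, path, active=[0, 1, 2])
T = 0.9                                              # hypothetical next joining time
if S < T:
    print("drop predictor", j_tilde, "at", S)        # the Cox-LASSO modification applies
```

Column 1 starts at zero (a newly joined predictor) and is ignored; column 2 crosses first, at about t = 0.375.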

Theorem 1 guarantees that the Cox-LASSO modification leads to the LASSO regularized solution path to (2.16), which is the LASSO regularized log partial likelihood (CoxLASSO) when γ = 0, and the elastic net regularized log partial likelihood (CoxEN) when γ > 0. We use CoxLARS to refer to CoxLAR, CoxLAR-ridge, CoxLASSO, and CoxEN.

Note that at each transition point of our CoxLARS solution path, two kinds of events can happen: either an inactive predictor joins the active predictor set or an active predictor is removed from the active predictor set. As in Efron et al. (2004), we assume a “one at a time” condition holds. With the “one at a time” condition, at each transition point t* only a single event can happen, namely, either one inactive predictor variable becomes active or one currently active predictor variable becomes inactive.

Theorem 1. Under the Cox-LASSO modification, and assuming the “one at a time” condition, the CoxLAR-ridge algorithm yields the LASSO regularized solution path to (2.16).

Remark 2. For simplicity we make the “one at a time” assumption. But, even when the “one at a time” condition does not hold, a CoxLASSO/CoxEN solution path is still available. The same discussion in Efron et al. (2004) applies. In applications, some slight jittering may be applied, if necessary, to ensure the “one at a time” condition holds.

2.3. Updating via ODE

Our CoxLARS algorithm involves an essential piecewise updating step

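$$\tilde\beta_{\mathcal A_{t^*}^c}(t) = 0,\qquad \tilde\beta_{\mathcal A_{t^*}}(t) = \beta_{\mathcal A_{t^*}}(t^*) - \int_{t^*}^{t}\big(M_{\mathcal A_{t^*},\mathcal A_{t^*}}(\tilde\beta(u))\big)^{-1}\operatorname{sign}\big(b_{\mathcal A_{t^*}}(\tilde\beta(u))\big)\,du \tag{2.19}$$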

beginning at a transition point t* with solution β(t*) and active predictor set 𝒜t*.

Note that the piecewise solution path (2.19) can be easily obtained by setting β̃j(t) = 0 for j ∉ 𝒜t* and t > t*, and solving the following ordinary differential equation (ODE) system

$$\frac{d\tilde\beta_{\mathcal A_{t^*}}(t)}{dt} = -\big(M_{\mathcal A_{t^*},\mathcal A_{t^*}}(\tilde\beta(t))\big)^{-1}\operatorname{sign}\big(b_{\mathcal A_{t^*}}(\tilde\beta(t))\big)$$

with initial value condition β̃𝒜t* (t)|t=t* = β𝒜t* (t*). This is a standard initial-value ODE system, and there are many efficient methods to solve it, for example the Euler method, the backward Euler method, the midpoint method, and the family of Runge-Kutta methods, among many others. The most commonly used member of the Runge-Kutta family is the fourth-order Runge-Kutta method. See Atkinson, Han, and Stewart (2009) for a comprehensive introduction to methods for solving ordinary differential equations. Our numerical examples employ the Matlab ODE solver “ode45”, which implements an explicit Runge-Kutta (4,5) formula (the Dormand-Prince pair) with adaptive step size control.
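For readers without Matlab, the classical fixed-step fourth-order Runge-Kutta method mentioned above takes only a few lines. This is a generic sketch, not ode45's adaptive Dormand-Prince scheme; for a CoxLARS piece, f(t, β𝒜) would return −(M𝒜,𝒜(β))⁻¹ sign(b𝒜), which does not depend on t explicitly. The check uses dy/dt = −y, whose exact solution is e^{−t}.

```python
import math

def rk4_integrate(f, t0, y0, t1, n_steps):
    """Classical fourth-order Runge-Kutta for dy/dt = f(t, y) with a fixed step."""
    h = (t1 - t0) / n_steps
    t, y = t0, y0
    for _ in range(n_steps):
        k1 = f(t, y)
        k2 = f(t + h / 2, y + h / 2 * k1)
        k3 = f(t + h / 2, y + h / 2 * k2)
        k4 = f(t + h, y + h * k3)
        y = y + h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t = t + h
    return y

approx = rk4_integrate(lambda t, y: -y, 0.0, 1.0, 1.0, 100)
print(approx, math.exp(-1.0))   # agree to about ten decimal places
```

The same function works for NumPy arrays, since it only uses addition and scalar multiplication. Halving the step reduces the error by roughly a factor of 16, the signature of a fourth-order method.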

3. Properties of CoxLARS

In this section, we establish some properties of our CoxLARS path, and prove Theorem 1.

With the “one at a time” condition, at each transition point t* either one inactive predictor becomes active or one active predictor becomes inactive. For the first type, the active set changes from 𝒜 to 𝒜* = 𝒜 ∪ {j*} for some j* ∉ 𝒜. We show in Lemma 1 that this new active predictor joins in a “correct” manner. Lemma 1 applies to CoxLARS.

Lemma 1. For any transition point t* during the CoxLARS solution path, if predictor j* is the only addition to the active set at t* with β (t*) and active set changing from 𝒜 to 𝒜* = 𝒜∪{j*}, then the path updating direction at t* has its j*th component disagreeing in sign with the current derivative bj* (β(t*)).

Lemma 1 is a key property for showing that the Cox-LASSO modification leads to the LASSO or elastic net regularized log partial likelihood solution path, because it ensures that, at any transition point, the new predictor variable enters in a “correct” manner. This “correct” manner is required by the LASSO penalty, as seen in Lemma 2.

Next we extend Lemmas 7–10 of Efron et al. (2004) to Cox’s proportional hazards model. Our Lemmas 2–5 concern properties of the LASSO regularized solution path for (2.16) or equivalently (2.17), and as a result they lead to the proof of Theorem 1. For any s ≥ 0, we denote the unique solution of (2.17) by β̂ = β̂(s), which is continuous in s; uniqueness is due to the convexity of $\sum_{j=1}^p|\beta_j|$ and the strict convexity of L(β). Throughout, we use the hat notation to designate a solution of (2.16), equivalently (2.17). For any s ≥ 0, let 𝒩s ≡ 𝒩(β̂(s)) ≜ {j : β̂j(s) ≠ 0} denote the index set of nonzero components of β̂(s). Our goal is to show that the nonzero set 𝒩s is also the active predictor set that determines the CoxLARS path updating direction.

Let β̂ be a solution of (2.16). We show that any non-zero component β̂j must disagree in sign with the current first-order derivative.

Lemma 2. A LASSO regularized solution β̂ to (2.16) satisfies sign(β̂j) = −sign (bj(β̂)) for any j ∈ 𝒩 (β̂).

Let 𝒮 be an open interval of the s axis, with infimum $\underline{s}$, within which the nonzero set 𝒩s of β̂(s) remains constant, 𝒩s = 𝒩 for s ∈ 𝒮 and some 𝒩.

Lemma 3. For s ∈ {$\underline{s}$} ∪ 𝒮, the LASSO regularized estimate β̂(s) of (2.17) updates along the CoxLARS path updating direction.

Lemma 4. For an open interval 𝒮 with a constant nonzero set 𝒩 during the LASSO regularized path β̂(s) of (2.17), let $\underline{s}$ = inf(𝒮). Then for s ∈ 𝒮 ∪ {$\underline{s}$}, the first-order derivatives of L(β) at β̂(s) satisfy |bj(β̂(s))| = maxl=1,2,...,p |bl(β̂(s))| for j ∈ 𝒩 and |bj(β̂(s))| ≤ maxl=1,2,...,p |bl(β̂(s))| for j ∉ 𝒩.

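Let $\underline{s}$ denote such a point, $\underline{s}$ = inf(𝒮) as in Lemma 4, with the LASSO regularized solution β̂ to (2.17), current derivatives bj(β̂) for j = 1, 2, . . . , p, and maximum absolute derivative D̂(β̂) = maxj=1,2,...,p |bj(β̂)|. Let 𝒜1 = {j : β̂j ≠ 0}, 𝒜0 = {j : β̂j = 0 and |bj(β̂)| = D̂(β̂)}, and 𝒜10 = 𝒜1 ∪ 𝒜0. Take β(θ) = β̂ + θd for some vector d ∈ ℝp, T(θ) = L(β(θ)), and $S(\theta) = \sum_{j=1}^p|\beta_j(\theta)|$. Let $\dot T(0)$ and $\dot S(0)$ denote the right derivatives of T(θ) and S(θ) at θ = 0.

Lemma 5. At $\underline{s}$, we have

$$\dot T(0) \ge -\hat D(\hat\beta)\,\dot S(0), \tag{3.1}$$

with equality only if dj = 0 for j ∉ 𝒜10 and sign(dj) = −sign(bj(β̂)) for j ∈ 𝒜0. If so,

$$T(\theta) = T(0) - \hat D(\hat\beta)\big\{S(\theta) - S(0)\big\} + \frac{\theta^2}{2}\,d_{\mathcal A_{10}}^{\mathsf T}M_{\mathcal A_{10},\mathcal A_{10}}(\hat\beta)\,d_{\mathcal A_{10}} + o(\theta^2). \tag{3.2}$$

Lemma 5 implies that, at any transition point, the active predictor set of the LASSO regularized solution to (2.17) is a subset of 𝒜10. With the LASSO regularization, we are minimizing L(β) subject to a constraint on the one-norm of β. In a small neighborhood β̂ + θd around β̂, we are minimizing T(θ) subject to an upper bound on S(θ). The first part of Lemma 5 implies that the instantaneous rate of change of T(θ) relative to S(θ) is at least −D̂(β̂). For β(θ), its one-norm S(θ) is increasing in θ as long as $-\sum_{j\in\mathcal A_{10}}\operatorname{sign}(b_j(\hat\beta))\,d_j > 0$, and the best instantaneous relative rate of change is achieved by moving along β̂ + θd as long as dj = 0 for j ∉ 𝒜10 and sign(dj) = −sign(bj(β̂)) for j ∈ 𝒜0. In particular, sign(dj) = −sign(bj(β̂)) for j ∈ 𝒜0 requires that the coefficient of any new active predictor variable disagree in sign with the corresponding current first-order partial derivative. This is ensured by Lemma 1 and the “one at a time” condition.

The second part of Lemma 5 provides second-order information on the relative change of T(θ) with respect to S(θ). As we only care about direction, assume $-\sum_{j\in\mathcal A_{10}}\operatorname{sign}(b_j(\hat\beta))\,d_j = \Delta$ for some Δ > 0. Note that $\dot T(0) = -\hat D(\hat\beta)\,\Delta$ for every such d with dj = 0 for j ∉ 𝒜10. Then we need to find the most efficient direction d to decrease T(θ) among all possible directions d satisfying $-\sum_{j\in\mathcal A_{10}}\operatorname{sign}(b_j(\hat\beta))\,d_j = \Delta$ and sign(dj) = −sign(bj(β̂)) for j ∈ 𝒜0. In terms of the second-order information, we need to solve

$$\min_{d}\ d_{\mathcal A_{10}}^{\mathsf T}M_{\mathcal A_{10},\mathcal A_{10}}(\hat\beta)\,d_{\mathcal A_{10}} \quad\text{subject to}\quad -\sum_{j\in\mathcal A_{10}}\operatorname{sign}(b_j(\hat\beta))\,d_j = \Delta,\ \ \operatorname{sign}(d_j) = -\operatorname{sign}(b_j(\hat\beta))\ (j\in\mathcal A_0),\ \ d_j = 0\ (j\notin\mathcal A_{10}) \tag{3.3}$$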

with a fixed Δ > 0 to select the optimal solution updating direction d. It turns out that the optimal solution to (3.3) is exactly given by our CoxLARS path updating direction as proved next.

Lemma 6. Our CoxLARS path updating direction (2.8) solves (3.3).
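A short Lagrange-multiplier calculation indicates why (2.8) is the natural candidate. This is only a sketch, not the appendix proof: it assumes M = M𝒜10,𝒜10(β̂) is positive definite (for example when γ > 0), sets aside the sign constraints on 𝒜0 (which the text handles through Lemma 1 and the "one at a time" condition), and writes s = sign(b𝒜10(β̂)).

$$\begin{aligned}
&\min_{d}\ d^{\mathsf T}Md \quad\text{subject to}\quad -s^{\mathsf T}d = \Delta,\\
&\nabla_d\Big\{d^{\mathsf T}Md + 2\mu\,(s^{\mathsf T}d + \Delta)\Big\} = 2Md + 2\mu s = 0 \;\Longrightarrow\; d = -\mu M^{-1}s,\\
&-s^{\mathsf T}d = \mu\, s^{\mathsf T}M^{-1}s = \Delta \;\Longrightarrow\; \mu = \frac{\Delta}{s^{\mathsf T}M^{-1}s} > 0,\\
&d^\star = -\frac{\Delta}{s^{\mathsf T}M^{-1}s}\,M^{-1}s .
\end{aligned}$$

Since the objective is strictly convex and the constraint is linear, this stationary point is the minimizer, and it is a positive multiple of −M⁻¹s, the direction in (2.8) with active set 𝒜10. Only the direction matters, so the scale Δ is immaterial.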

4. Numerical Examples

In this section, we use numerical examples to demonstrate how CoxLARS works. In our implementation we first calculate t0 and then set δt = −t0/K, where K is some large positive number; in our examples we use K = 2,000. In addition to the transition points tk, we evaluate the solution on a grid with spacing δt. More specifically, for each piece of our solution path over [tk, tk+1], we calculate our solution β(t) at t = tk + mδt for m = 1, 2, . . . , ⌊(tk+1 − tk)/δt⌋, where ⌊a⌋ denotes the integer part of a, even though the CoxLARS solution paths are defined for every t ∈ [t0, 0].
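The evaluation grid on one path piece can be written down directly. This is a small sketch with a hypothetical function name; the toy values below (t0 = −2, K = 16) are chosen so that δt = 0.125 is exact in binary floating point, whereas the examples in the text use K = 2,000.

```python
import math

def grid_on_piece(t_k, t_k1, dt):
    """Evaluation points t_k + m*dt for m = 1, ..., floor((t_{k+1} - t_k)/dt)."""
    m_max = math.floor((t_k1 - t_k) / dt)
    return [t_k + m * dt for m in range(1, m_max + 1)]

t0, K = -2.0, 16
dt = -t0 / K                                   # delta t = -t0 / K
print(grid_on_piece(-1.0, -0.5, dt))           # [-0.875, -0.75, -0.625, -0.5]
```

With a general δt the last grid point may fall short of tk+1; the transition points themselves are stored separately, as the text describes.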

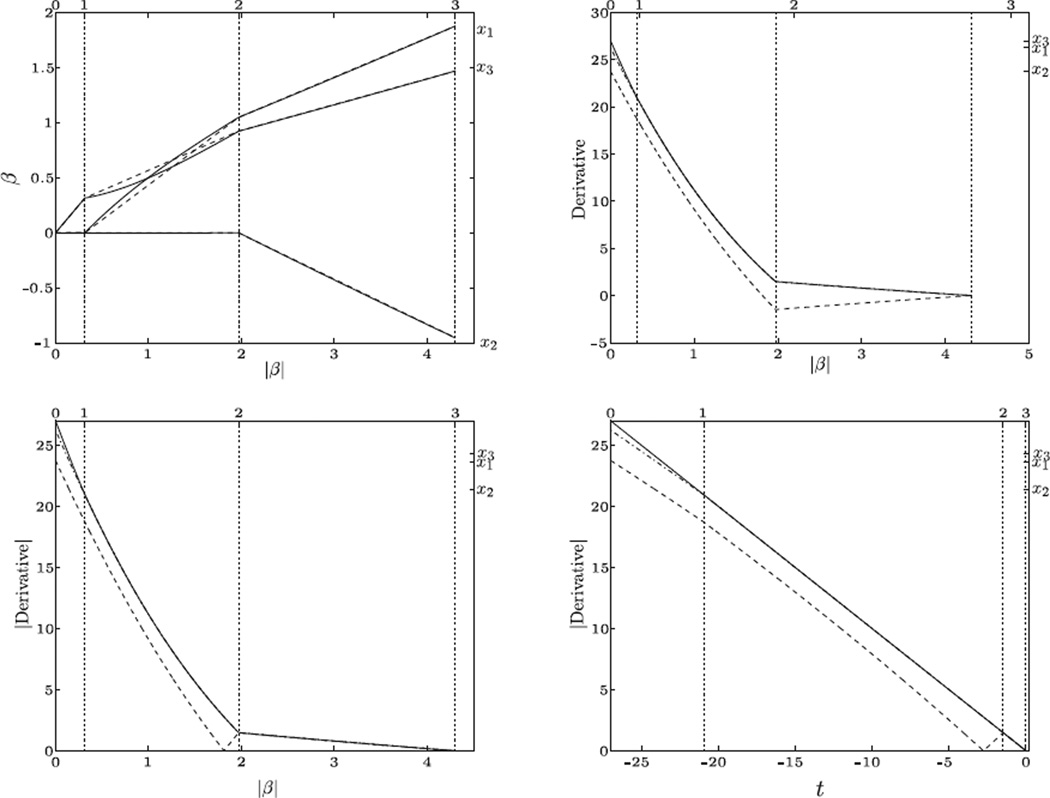

Example 1. We use a simulated dataset to demonstrate that the true LASSO regularized solution path is not piecewise linear. We set p = 3 and n = 40. The predictor covariates were generated as X ~ 𝒩 (0, Σ), where Σ is the variance-covariance matrix with (i, j)th element 1 if i = j, and 0.9 otherwise. Conditional on X = (x1, x2, x3)T, the lifetime was generated from model (1.1) with a constant baseline hazard function h0(y) = 1 and true regression coefficient vector β = (2, −2, 2.5)T. The censoring time was uniformly distributed over [0, 8] and the corresponding censoring rate is 32.3%. We applied the CoxLASSO (that is, with ridge parameter γ = 0).
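A data-generating sketch of this design follows. It is an illustration, not the author's script: with h0(y) = 1 the hazard exp(xᵀβ) is constant in y, so the lifetime given x is exponential with that rate. The seed is arbitrary, and the censoring fraction it produces will generally differ from the 32.3% reported for the data set used in the text.

```python
import numpy as np

def simulate_example1(n=40, seed=0):
    """Design of Example 1: equicorrelated (0.9) Gaussian covariates, h0(y) = 1,
    beta = (2, -2, 2.5), censoring ~ Uniform[0, 8]."""
    rng = np.random.default_rng(seed)
    Sigma = np.full((3, 3), 0.9)
    np.fill_diagonal(Sigma, 1.0)
    X = rng.multivariate_normal(np.zeros(3), Sigma, size=n)
    beta = np.array([2.0, -2.0, 2.5])
    T = rng.exponential(scale=1.0 / np.exp(X @ beta))   # lifetime: rate exp(x^T beta)
    C = rng.uniform(0.0, 8.0, size=n)                   # censoring time
    y = np.minimum(T, C)
    delta = (T <= C).astype(int)                        # 1 = observed failure
    return X, y, delta

X, y, delta = simulate_example1()
print(X.shape, 1 - delta.mean())   # observed censoring fraction for this seed
```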

The CoxLASSO solution path is shown by the solid lines in the top left panel of Figure 2. The dashed straight lines are obtained by connecting the solutions at the transition points. The true LASSO regularized solution path is clearly not piecewise linear. The first-order partial derivatives along the CoxLASSO solution path are shown in the top right panel of Figure 2. The absolute values of the first-order partial derivatives along the CoxLASSO solution path are shown in the bottom two panels of Figure 2, with different horizontal axis scales: the bottom left panel is plotted with respect to the one-norm of β(t), while the bottom right panel uses t. A straight diagonal line is observed in the bottom right panel since our CoxLARS ensures that t = −maxj=1,...,p|bj(β(t))|.

Figure 2.

CoxLASSO path of Example 1: the top left panel plots the CoxLASSO path β(t) with respect to the one-norm |β(t)|; the top right panel plots the first-order derivatives bj(β(t)) with respect to |β(t)|; the bottom left and right panels plot |bj(β(t))| along the CoxLASSO path with respect to |β(t)| and t, respectively.

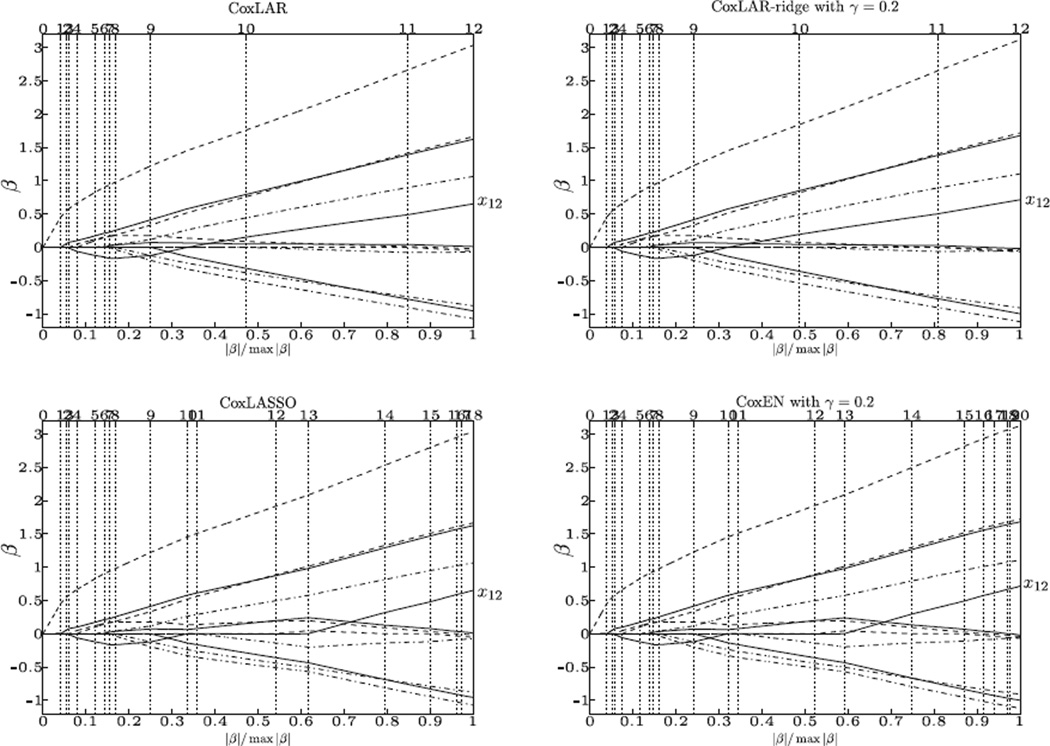

Example 2. Here we demonstrate how the Cox-LASSO modification leads to the CoxLASSO path when γ = 0 and the CoxEN path when γ > 0. We chose n = 200 and p = 12. The predictor covariates X were generated from N(0, Σ), with the (i, j) element of Σ being 1 when i = j, 0.3 when 1 ≤ i, j ≤ 11 and |i – j| = 1, $(-0.18)^{i+1}$ when j = 12 and 1 ≤ i ≤ 11, and $(-0.18)^{j+1}$ when i = 12 and 1 ≤ j ≤ 11. Conditional on covariates, the lifetime was generated from model (1.1) with h0(y) = 1 and true coefficient vector given by (−0.8, 1.6, −0.8, 1, 0, 1.5, −1.2, 3, 0, 0, 0, 0.5)T. The censoring time was generated from Uniform[0, 10], leading to a censoring rate of 30.5%. In general, the Cox-LASSO modification may never be triggered, in which case the CoxLAR and CoxLASSO paths are exactly the same. We designed Example 2 to show the effect of the Cox-LASSO modification.

CoxLARS solution paths are shown in Figure 3. When γ = 0, solution paths of the CoxLAR and CoxLASSO are given in the top left and bottom left panels, respectively. The CoxLAR path shows that the coefficient of variable X12 switches sign between the 9th and 10th transition points. Thus a new transition point is added to the CoxLASSO solution path, in which the coefficient corresponding to X12 is kept at zero between the 10th and 13th transition points. When we add a small ridge term by setting γ = 0.2, the corresponding paths are shown in the right panels of Figure 3. A similar phenomenon is observed.

Figure 3.

CoxLARS paths of Example 2.

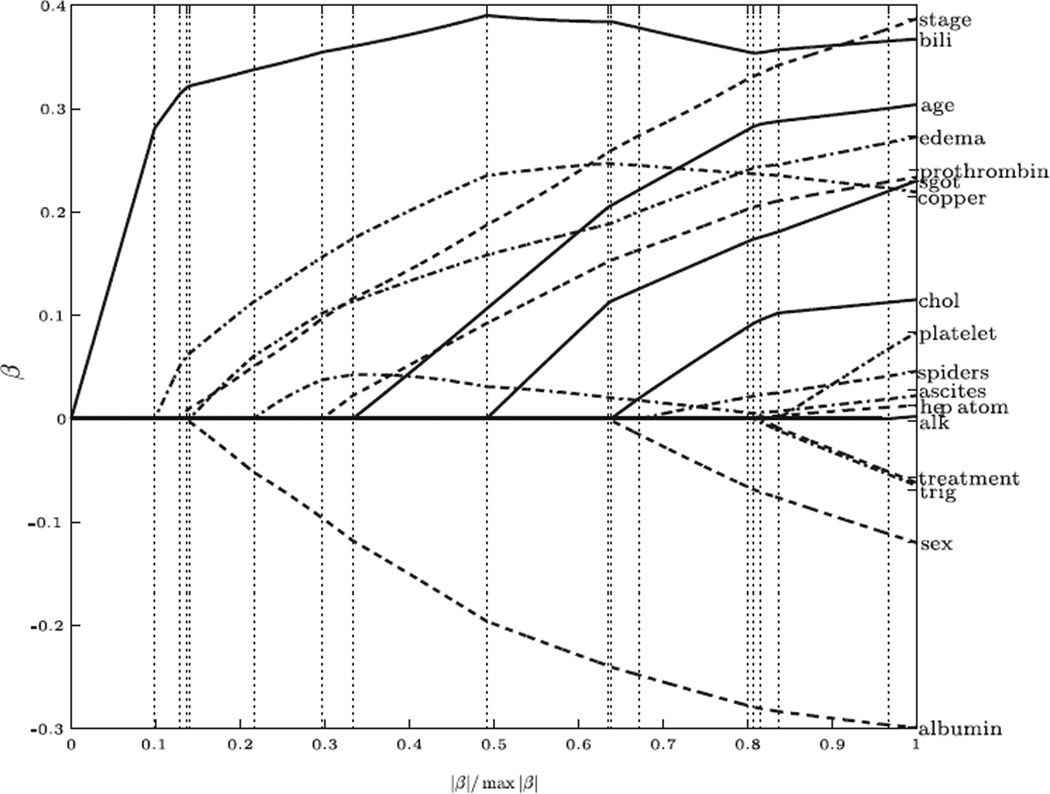

Example 3. The primary biliary cirrhosis data were collected in the Mayo Clinic trial on primary biliary cirrhosis of the liver conducted between 1974 and 1984; see Therneau and Grambsch (2001). This study included a total of 424 patients. Clinical, biochemical, serological, and histological parameters were collected for each patient. Before the end of the follow-up, 125 patients died. We study the dependence of the survival time on seventeen covariates: continuous variables are age (in years), albumin (albumin in g/dl), alk (alkaline phosphatase in units/litre), bili (serum bilirubin in mg/dl), chol (serum cholesterol in mg/dl), copper (urine copper in μg/day), platelets (platelets per cubic ml/1,000), prothrombin (prothrombin time in seconds), sgot (liver enzyme in units/ml), and trig (triglycerides in mg/dl); categorical variables are ascites (0 denotes absence of ascites and 1 denotes presence of ascites), edema (0 denotes no oedema, 0.5 denotes untreated or successfully treated oedema, and 1 denotes unsuccessfully treated oedema), hepatom (0 denotes absence of hepatomegaly and 1 denotes presence of hepatomegaly), sex (0 denotes male and 1 denotes female), spiders (0 denotes absence of spiders and 1 denotes presence of spiders), stage (histological stage of disease, graded 1, 2, 3, or 4), and treatment (1 for control and 2 for treatment). See Dickson et al. (1989) for more detailed information.

After excluding patients with any missing values, 276 patients remain, of whom 111 died before the end of follow-up. We standardized each predictor to have mean zero and variance one and applied CoxLARS to the standardized data with all seventeen variables included. With ridge parameter γ = 0, the CoxLAR and CoxLASSO produced the same solution path (see Figure 4); that is, no coefficient on the CoxLAR path changed sign.
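The preprocessing described above (complete-case filtering followed by standardization) can be sketched as follows. The function name is hypothetical, and missing values are assumed to be coded as NaN. Scaling by the population standard deviation (`ddof=0`) is also an assumption, since the text does not say which variance estimator was used.

```python
import numpy as np


def complete_case_standardize(X, time, status):
    """Drop rows with any missing covariate, then center and scale each column.

    Assumptions: missing values are np.nan, and scaling uses the population
    standard deviation (ddof=0), so every column ends with variance one.
    """
    X = np.asarray(X, dtype=float)
    keep = ~np.isnan(X).any(axis=1)          # complete cases only
    Xc = X[keep]
    Z = (Xc - Xc.mean(axis=0)) / Xc.std(axis=0)
    return Z, np.asarray(time)[keep], np.asarray(status)[keep]
```

A column with zero variance would cause a division by zero, so such a column would need to be dropped before scaling.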

Figure 4.

CoxLARS path of the PBC data with γ = 0.

5. Discussion

In this work, we have proposed CoxLAR(-ridge), an extension of the LAR to Cox’s proportional hazards model. The CoxLAR(-ridge) solution paths are determined by ODE systems. We have shown that a CoxLASSO modification of CoxLAR(-ridge) yields the exact solution path of the corresponding LASSO (elastic net) regularized problem. Because the solution path evolves according to ODE systems, efficient ODE solvers can be used to develop a solution path package.
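The Python sketch below makes the ODE system concrete. It computes the gradient b(β) and Hessian M(β) of the negative log partial likelihood plus a ridge term, then forms the path updating direction indicated in the proof of Lemma 1. Under that direction, active coefficients move along −M𝒜,𝒜(β)⁻¹ sign(b𝒜(β)) and inactive ones stay fixed.

This is an illustrative sketch, not the author's code. It assumes:

- the unnormalized partial likelihood;
- a ridge term of the form (γ/2)‖β‖²;
- Breslow-style risk sets {k : y_k ≥ y_i}.

The function names are hypothetical. With this direction, d b𝒜(β(t))/dt = M𝒜,𝒜 β̇𝒜 = −sign(b𝒜), so every active |bj| decreases at unit rate, mirroring the equiangular property of the LAR.

```python
import numpy as np


def cox_objective(beta, X, time, status, gamma=0.0):
    """Negative log partial likelihood plus (gamma / 2) * ||beta||^2 (assumed scaling)."""
    eta = X @ beta
    val = 0.5 * gamma * beta @ beta
    for i in np.flatnonzero(status):
        risk = time >= time[i]                  # Breslow-style risk set at event i
        m = eta[risk].max()                     # log-sum-exp shift for stability
        val -= eta[i] - (m + np.log(np.exp(eta[risk] - m).sum()))
    return val


def cox_grad_hess(beta, X, time, status, gamma=0.0):
    """Gradient b(beta) and Hessian M(beta) of cox_objective."""
    p = X.shape[1]
    eta = X @ beta
    w = np.exp(eta - eta.max())                 # a common factor cancels in the ratios
    b = gamma * beta
    M = gamma * np.eye(p)
    for i in np.flatnonzero(status):
        risk = time >= time[i]
        wr, Xr = w[risk], X[risk]
        xbar = wr @ Xr / wr.sum()               # weighted covariate mean over the risk set
        b = b - (X[i] - xbar)
        M = M + (Xr.T * wr) @ Xr / wr.sum() - np.outer(xbar, xbar)
    return b, M


def coxlar_direction(beta, active, X, time, status, gamma=0.0):
    """d beta / dt = -M_AA^{-1} sign(b_A) on the active set A, zero elsewhere."""
    b, M = cox_grad_hess(beta, X, time, status, gamma)
    A = np.asarray(active)
    d = np.zeros_like(beta)
    d[A] = -np.linalg.solve(M[np.ix_(A, A)], np.sign(b[A]))
    return d
```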

LARS is attractive because of its speed, which comes from the piecewise linearity of its solution path. For Cox’s proportional hazards model, however, the solution path is not piecewise linear because of the nature of the log partial likelihood, as demonstrated by Example 1. This makes CoxLARS more difficult to implement. We have currently implemented a basic version of our algorithm using the fourth-order Runge-Kutta method, which works fairly well. In addition, the high-dimensional variable selection literature commonly assumes that the regression coefficients are sparse, so there is often little need to compute the whole solution path. Instead, a BIC criterion can be evaluated as we progress along the solution path to identify an optimal solution, after which the algorithm can be terminated, as done in Wu (2011).
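A classical fourth-order Runge-Kutta step, together with a BIC-based stopping rule, might look like the minimal Python sketch below. Several parts are assumptions for illustration and not necessarily the choices made in Wu (2011):

- the BIC form, 2·(negative log partial likelihood) + df·log(number of events), with df supplied by the caller (for example, the active-set size);
- the "patience" rule for stopping early.

The function names are hypothetical. In a path computation, `f(t, y)` would be the path updating direction on the current active set.

```python
import numpy as np


def rk4_step(f, t, y, h):
    """One classical fourth-order Runge-Kutta step for y' = f(t, y)."""
    k1 = f(t, y)
    k2 = f(t + h / 2, y + (h / 2) * k1)
    k3 = f(t + h / 2, y + (h / 2) * k2)
    k4 = f(t + h, y + h * k3)
    return y + (h / 6) * (k1 + 2 * k2 + 2 * k3 + k4)


def bic(neg_loglik, df, n_events):
    """Assumed BIC form: 2 * negative log partial likelihood + df * log(n_events)."""
    return 2.0 * neg_loglik + df * np.log(n_events)


def bic_stop_index(bic_values, patience=3):
    """Return the index of the smallest BIC, consuming values in path order and
    stopping once BIC has not improved for `patience` consecutive points
    (a hypothetical early-termination rule)."""
    best_k, best_v, since = None, np.inf, 0
    for k, v in enumerate(bic_values):      # may be a lazy generator along the path
        if v < best_v:
            best_k, best_v, since = k, v, 0
        else:
            since += 1
            if since >= patience:
                break                       # the rest of the path is never computed
    return best_k
```

Passing a generator that yields BIC values as the path is traced means the path beyond the stopping point is never computed. That is the saving the sparsity argument above relies on.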

Acknowledgements

The author thanks Jianqing Fan and Chuanshu Ji for mentoring and longtime encouragement. The author also thanks Dennis Boos, Jingfang Huang, Yufeng Liu, John Monahan, and Leonard Stefanski for helpful comments and encouragement. This work is supported in part by NSF grants DMS-0905561 and DMS-1055210, NIH/NCI grant R01-CA149569, and an NCSU Faculty Research and Professional Development Award. The content is solely the author’s responsibility and does not necessarily represent the official views of the NSF, NIH, or NCI.

Appendix

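Proof of Lemma 1. The new path updating direction defined using the new active predictor set 𝒜* = 𝒜 ∪ {j*} is given by $-M_{\mathcal{A}^*,\mathcal{A}^*}(\beta(t^*))^{-1}\,\mathrm{sign}(b_{\mathcal{A}^*}(\beta(t^*)))$. Using the formula for inverting a block matrix, the j*th component of this path updating direction is given by

$$\frac{1}{\eta}\left[M_{j^*,\mathcal{A}}(\beta(t^*))\,M_{\mathcal{A},\mathcal{A}}(\beta(t^*))^{-1}\,\mathrm{sign}\big(b_{\mathcal{A}}(\beta(t^*))\big) - \mathrm{sign}\big(b_{j^*}(\beta(t^*))\big)\right], \tag{A.1}$$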

where $\eta = M_{j^*,j^*}(\beta(t^*)) - M_{j^*,\mathcal{A}}(\beta(t^*))\,M_{\mathcal{A},\mathcal{A}}(\beta(t^*))^{-1}M_{\mathcal{A},j^*}(\beta(t^*)) > 0$ because M(β) is positive definite when n > p and x(j), j = 1, 2, . . . , p, are linearly independent. The first term in (A.1) involves $M_{\mathcal{A},\mathcal{A}}(\beta(t^*))^{-1}\,\mathrm{sign}(b_{\mathcal{A}}(\beta(t^*)))$, which is exactly the opposite of the path updating direction calculated at t* using the old active set 𝒜, that is, ignoring the addition of predictor variable j*.
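The block-inverse step in the proof of Lemma 1 can be checked numerically. Assume, as the proof indicates, that the direction on the enlarged active set 𝒜* = 𝒜 ∪ {j*} is −M𝒜*,𝒜*⁻¹ sign(b𝒜*). Then the Schur complement η gives its j*th component as η⁻¹[Mj*,𝒜 M𝒜,𝒜⁻¹ sign(b𝒜) − sign(bj*)]. The Python sketch below compares that formula against a direct solve on a random positive definite matrix. The function names are hypothetical.

```python
import numpy as np


def jstar_component_direct(M, s, jstar):
    """j*-th component of -M^{-1} s from a full solve; M and s are already
    restricted to the enlarged active set A* = A + {j*}."""
    return -np.linalg.solve(M, s)[jstar]


def jstar_component_schur(M, s, active, jstar):
    """Same component via the block-inverse (Schur complement) formula
    eta^{-1} * (M_{j*,A} M_{A,A}^{-1} s_A - s_{j*}); also returns eta."""
    A = list(active)
    M_AA = M[np.ix_(A, A)]
    M_jA = M[jstar, A]
    eta = M[jstar, jstar] - M_jA @ np.linalg.solve(M_AA, M[A, jstar])
    value = (M_jA @ np.linalg.solve(M_AA, s[A]) - s[jstar]) / eta
    return value, eta
```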

Consider ignoring the new active variable j* and updating the path along the path updating direction evaluated using the old active predictor set 𝒜. This leads to another solution path piece β̄(t) defined by

$$\dot{\bar\beta}_{\mathcal{A}}(t) = -M_{\mathcal{A},\mathcal{A}}(\bar\beta(t))^{-1}\,\mathrm{sign}\big(b_{\mathcal{A}}(\bar\beta(t))\big), \qquad \bar\beta_{\mathcal{A}^c}(t) = 0, \qquad \bar\beta(t^*) = \beta(t^*),$$

when t is inside a small neighborhood [t* − Δt, t* + Δt]. The neighborhood is chosen such that neither the solution component β̄j(t) nor the first-order partial derivative bj(β̄(t)) changes sign for t ∈ [t* − Δt, t* + Δt] and j ∈ 𝒜. Consequently, when t ∈ [t* − Δt, t* + Δt],

for j ∈ 𝒜 due to (2.5) and (2.8). Note that, for t ∈ [t* − Δt, t* + Δt],

| (A.2) |

due to the definition of β̄(t) (because β̄j(t) = 0 for j ∉ 𝒜 and t ∈ [t* − Δt, t* + Δt]).

Recall that β(t) = β̄(t) for t ∈ [t* − Δt, t*]; that is, our CoxLARS solution matches β̄(t) exactly on this interval. Note that the definition of CoxLARS implies that

| (A.3) |

for any j ∈ 𝒜 and t ∈ [t* − Δt, t*). That is, the absolute value of the first-order partial derivative for predictor j* is smaller than those of the active predictors in 𝒜 for t ∈ [t* − Δt, t*), and, by the definition of j*, it catches up with them at t*.

Lemma 1 can be proved by contradiction. If our claim is wrong, then

due to (A.1) and the fact that η > 0. This means that

. The fact that β̄(t) = β(t) for t ∈ [t* − Δt, t*] implies that there exists some 0 < ε < Δt such that

| (A.4) |

due to continuity. By noting (A.2) and for j ∈ 𝒜 and t ∈ (t* − ε, t*), (A.4) contradicts the conclusion that the predictor variable j* has a smaller absolute value of the first-order partial derivative than active predictors in 𝒜 for t ∈ [t* − Δt, t*) and that it catches up with active predictors in 𝒜 at t*. This completes our proof.

Proof of Lemma 2. For any j ∈ 𝒩(β̂), differentiating the objective function in (2.16) with respect to βj gives

| (A.5) |

which has to be equal to zero at β̂ because β̂ is the corresponding optimal solution. This completes the proof by noting that λ ≥ 0 and, when λ = 0, for all j.
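The stationarity condition used in Lemma 2 can be illustrated on a simple stand-in. For an objective of the form L(β) + λ‖β‖₁, every nonzero coefficient at the optimum satisfies bj(β̂) = −λ sign(β̂j), so sign(β̂j) = −sign(bj(β̂)) whenever λ > 0. Zero coefficients satisfy |bj(β̂)| ≤ λ.

The Python sketch below replaces the Cox objective by a convex quadratic whose gradient Qβ − c plays the role of b(β). It solves the problem by cyclic coordinate descent with soft thresholding, which is a swapped-in solver and not the CoxLARS algorithm, and then checks both conditions. The names are hypothetical.

```python
import numpy as np


def soft_threshold(z, lam):
    """sign(z) * max(|z| - lam, 0)."""
    return np.sign(z) * max(abs(z) - lam, 0.0)


def lasso_quadratic_cd(Q, c, lam, n_sweeps=500):
    """Cyclic coordinate descent for 0.5 * beta'Q beta - c'beta + lam * ||beta||_1.

    The quadratic stands in for the penalized Cox objective; its gradient
    b(beta) = Q beta - c plays the role of the first-order partial derivatives.
    """
    beta = np.zeros(len(c))
    for _ in range(n_sweeps):
        for j in range(len(c)):
            r = c[j] - Q[j] @ beta + Q[j, j] * beta[j]   # excludes beta_j's own term
            beta[j] = soft_threshold(r, lam) / Q[j, j]
    return beta
```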

Proof of Lemma 3. Note that β̂(s) is the optimal solution to (2.17) and that its nonzero set 𝒩s is constant for s ∈ 𝒮; denote it by 𝒩. Then β̂𝒩(s) also minimizes

| (A.6) |

subject to

| (A.7) |

where sj = sign(bj(β̂(s))), j = 1, 2, . . . , p, denotes the sign of the current first-order partial derivatives, s = (s1, s2, . . . , sp)T, and the second sign constraint is due to Lemma 2. Here xi𝒩 is the sub-vector of xi with indices in 𝒩. Note that the inequality constraint in (2.17) can be replaced by the corresponding equality constraint as long as s is less than the one-norm of the full solution argminβ L(β). This justifies (A.7). Note further that the optimal solution β̂𝒩(s) lies strictly inside the simplex (A.7) since β̂j(s) ≠ 0 for j ∈ 𝒩 and s ∈ 𝒮. This, together with the strict convexity of the objective function L(β𝒩), implies that the condition sign(β̂j) = −sj for j ∈ 𝒩 can be dropped. Consequently β̂𝒩(s) solves

By introducing a Lagrange multiplier λ, we get

| (A.8) |

which is equal to 0 at β̂𝒩 = β̂𝒩(s) because β̂𝒩(s) is the corresponding optimal solution.

Now consider two different values s(1) and s(2) in 𝒮 with s < s(1) < s(2). The corresponding Lagrange multipliers are denoted by λ(1) and λ(2), and they satisfy λ(1) > λ(2). Substituting them into (A.8) and taking the difference, we get

| (A.9) |

Note that β̂𝒩c (s) = 0 for any s ∈ 𝒮. Thus (A.9) is the same as

| (A.10) |

Dividing both sides of (A.10) by s(2) − s(1) and letting s(2) → s(1), we get

| (A.11) |

where . Noting that for s ∈ 𝒮, (A.11) becomes , which leads to . Noting that λ′(s) < 0, this shows that for any s ∈ 𝒮, the solution of (2.17) progresses along the CoxLARS path updating direction. It also holds for s due to continuity.

Proof of Lemma 4. Due to (A.5), |bj(β̂(s))| = |bj′(β̂(s))| for any j, j′ ∈ 𝒩. Thus it is enough to prove that |bl(β̂(s))| ≤ |bj(β̂(s))| for any l ∉ 𝒩, j ∈ 𝒩, and s ∈ 𝒮 ∪ {s}. We first prove this for s ∈ 𝒮, by contradiction. Suppose there are some j* ∉ 𝒩 and some s* ∈ 𝒮 such that

| (A.12) |

Let d = (d1, d2, . . . , dp)T with dj = −sign(β̂j(s*)) (= sign(bj(β̂(s*))), due to Lemma 2) for j ∈ 𝒩, dj* = −n𝒩 sign(bj*(β̂(s*))), and dj′ = 0 for j′ ∈ (𝒩 ∪ {j*})c, where n𝒩 denotes the size of 𝒩.

Consider L(β̂(s*) + ud) as a function of u. Its derivative is given by

| (A.13) |

When u = 0, the right side of (A.13) becomes

| (A.14) |

where j ∈ 𝒩, and the negativity is due to (A.12). Note that minj∈𝒩 |β̂j(s*)| > 0 since s* ∈ 𝒮. When 0 < u < minj∈𝒩 |β̂j(s*)|, the above definition of d gives ‖β̂(s*) + ud‖1 = ‖β̂(s*)‖1, so β̂(s*) + ud remains feasible for (2.17). However, (A.14) then contradicts the fact that β̂(s*) is an optimal solution to (2.17). This proves our claim for s ∈ 𝒮. Our claim holds at s simply due to continuity.
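The two properties of the direction d in the proof of Lemma 4 can be verified numerically:

- for 0 < u < minj∈𝒩 |β̂j(s*)|, the one-norm of β̂(s*) + ud is unchanged;
- the directional derivative bᵀd at u = 0 equals n𝒩(|bj| − |bj*|), which is negative when |bj*| exceeds the common value |bj| on 𝒩, as supposed in (A.12).

The Python values below are made up for illustration and are chosen to satisfy the sign relation of Lemma 2 on 𝒩. The helper name is hypothetical.

```python
import numpy as np


def lemma4_direction(beta_hat, b, nonzero, jstar):
    """Direction d from the proof of Lemma 4: d_j = -sign(beta_hat_j) on the
    nonzero set N, d_{j*} = -|N| * sign(b_{j*}), and zero elsewhere."""
    d = np.zeros_like(beta_hat)
    N = list(nonzero)
    d[N] = -np.sign(beta_hat[N])
    d[jstar] = -len(N) * np.sign(b[jstar])
    return d


beta_hat = np.array([0.7, -0.4, 0.0, 0.0])   # N = {0, 1}
b = np.array([-1.0, 1.0, 1.5, 0.2])          # |b_j| = 1 on N, |b_{j*}| = 1.5 > 1
d = lemma4_direction(beta_hat, b, [0, 1], 2)
```

Here d = (−1, 1, −2, 0). Moving along d shrinks both nonzero coefficients by u while the j* coefficient grows by 2u, which keeps the one-norm fixed and strictly lowers the objective to first order.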

Proof of Lemma 5. Note that due to Lemma 2 and . Thus, due to Lemma 4 and the above definition of 𝒜0, we have

which is analogous to Equation (5.40) of Efron et al. (2004). It is enough to consider all d satisfying − , which corresponds to Ṡ(0) > 0. Thus we need in order to maximize R(d). In this case we have

| (A.15) |

which is < D̂(β̂) unless dj = 0 for , since |bj(β̂) | < D̂ (β̂) for . This proves (3.1). In this case a second order Taylor expansion leads to (3.2).

Proof of Lemma 6. The positive definiteness of M𝒜10,𝒜10 implies that (3.3) is equivalent to

| (A.16) |

For (A.16), we combine the two constraints and solve the simpler version

| (A.17) |

Afterward, we show that the solution to (A.17) satisfies the sign constraint in (A.16). By introducing a Lagrange multiplier for (A.17), we solve

| (A.18) |

Differentiating the objective function in (A.18) with respect to d𝒜10 and solving for d𝒜10, we get the optimal solution (λ/2)(M𝒜10,𝒜10(β̂))⁻¹ sign(b𝒜10(β̂)). Since the Lagrange multiplier λ < 0, this is a positive multiple of our CoxLARS path updating direction. Note that the “one at a time” condition implies that 𝒜0 is a singleton. Consequently, this optimal solution satisfies the sign constraint in (A.16) due to Lemma 1.
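The Lagrange-multiplier step can be illustrated on a generic problem of the same shape, assumed here for illustration. Minimize dᵀMd subject to a single linear constraint sᵀd = c, with M positive definite and c < 0. Setting the gradient of dᵀMd − λ(sᵀd − c) to zero gives d = (λ/2)M⁻¹s with λ = 2c/(sᵀM⁻¹s) < 0, which is a positive multiple of −M⁻¹s.

The Python sketch below checks three things:

- feasibility;
- optimality against feasible perturbations;
- proportionality to −M⁻¹s.

The function name is hypothetical.

```python
import numpy as np


def min_quadratic_linear_constraint(M, s, c):
    """Minimize d'M d subject to s'd = c for positive definite M.

    Stationarity of d'M d - lam * (s'd - c) gives 2 M d = lam * s, so
    d = (lam / 2) M^{-1} s, and the constraint fixes lam = 2c / (s'M^{-1}s).
    """
    Minv_s = np.linalg.solve(M, s)
    lam = 2.0 * c / (s @ Minv_s)
    return 0.5 * lam * Minv_s, lam
```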

Proof of Theorem 1. Theorem 1 can be proved by induction as in Efron et al. (2004). Lemmas 2–5 extend Lemmas 7–10 of Efron et al. (2004), which are the key results for establishing that the LASSO modification yields the LASSO solutions, and parallel extensions of their Constraints 1–4 on page 437 are straightforward. We omit the details.

References

- Atkinson K, Han W, Stewart DE. Numerical Solution of Ordinary Differential Equations. Hoboken, New Jersey: John Wiley; 2009.

- Breslow N. Covariance analysis of censored survival data. Biometrics. 1974;30:89–99.

- Cox DR. Regression models and life-tables (with discussion). J. Roy. Statist. Soc. Ser. B. 1972;34:187–220.

- Dickson ER, Grambsch PM, Fleming TR, Fisher LD, Langworthy A. Prognosis in primary biliary cirrhosis: model for decision making. Hepatology. 1989;10:1–7. doi: 10.1002/hep.1840100102.

- Efron B, Hastie T, Johnstone I, Tibshirani R. Least angle regression (with discussion). Ann. Statist. 2004;32:409–499.

- Fan J, Li R. Variable selection via penalized likelihood. J. Amer. Statist. Assoc. 2001;96:1348–1360.

- Fan J, Li R. Variable selection for Cox’s proportional hazards model and frailty model. Ann. Statist. 2002;30:74–99.

- Fan J, Lv J. A selective overview of variable selection in high dimensional feature space. Statist. Sinica. 2010;20:101–148.

- Friedman J, Hastie T, Tibshirani R. Regularization paths for generalized linear models via coordinate descent. Technical Report. 2008.

- Hastie T, Rosset S, Tibshirani R, Zhu J. The entire regularization path for the support vector machine. J. Machine Learning Research. 2004;5:1391–1415.

- Park MY, Hastie T. l1-regularization path algorithm for generalized linear models. J. Roy. Statist. Soc. Ser. B. 2007;69:659–677.

- Rosset S, Zhu J. Piecewise linear regularized solution paths. Ann. Statist. 2007;35.

- Therneau TM, Grambsch PM. Modeling Survival Data: Extending the Cox Model. Springer; 2001.

- Tibshirani R. Regression shrinkage and selection via the lasso. J. Roy. Statist. Soc. Ser. B. 1996;58:267–288.

- Tibshirani R. The lasso method for variable selection in the Cox model. Statistics in Medicine. 1997;16:385–395. doi: 10.1002/(sici)1097-0258(19970228)16:4<385::aid-sim380>3.0.co;2-3.

- Wu Y. An ordinary differential equation-based solution path algorithm. Journal of Nonparametric Statistics. 2011;23:185–199. doi: 10.1080/10485252.2010.490584.

- Yuan M, Zou H. Efficient global approximation of generalized nonlinear l1 regularized solution paths and its applications. J. Amer. Statist. Assoc. 2009;104:1562–1574.

- Zhang HH, Lu W. Adaptive lasso for Cox’s proportional hazards model. Biometrika. 2007;94:691–703.

- Zou H. The adaptive lasso and its oracle properties. J. Amer. Statist. Assoc. 2006;101:1418–1429.

- Zou H. A note on path-based variable selection in the penalized proportional hazards model. Biometrika. 2008;95:241–247.

- Zou H, Hastie T. Regularization and variable selection via the elastic net. J. Roy. Statist. Soc. Ser. B. 2005;67:301–320.