Abstract

This report highlights research projects relevant to binaural and spatial hearing in adults and children. In the past decade we have made progress in understanding the impact of bilateral cochlear implants (BiCIs) on performance in adults and children. However, BiCI users typically do not perform as well as normal-hearing (NH) listeners. In this paper we describe the benefits of BiCIs compared with a single CI, focusing on measures of spatial hearing and speech understanding in noise. We highlight the fact that the devices in the two ears of a BiCI user are not coordinated, so binaural spatial cues that are available to NH listeners are not available to BiCI users. Through the use of research processors that carefully control the stimulus delivered to each electrode in each ear, we are able to preserve binaural cues and deliver them with fidelity to BiCI users. Results from those studies are also discussed, with a focus on the effect of age at onset of deafness and on the plasticity of binaural sensitivity. Our work with children has expanded both in the number of subjects tested and in the age range included: we have now tested dozens of children ranging in age from 2 to 14 years. Our findings suggest that spatial hearing abilities emerge with bilateral experience. While we originally focused on studying performance in the free field, which approximates real-world listening, more recently we have also begun to conduct studies with children under carefully controlled binaural stimulation conditions. We have also studied language acquisition and speech perception and production in young CI users. Finally, a running theme of this research program is the systematic investigation of the numerous factors that contribute to spatial and binaural hearing in BiCI users.
By using CI simulations (with vocoders) and studying NH listeners under degraded listening conditions, we are able to tease apart limitations due to the hardware and software of CI systems from limitations due to neural pathology.

1. Introduction to Binaural and Spatial Hearing

The ability of human listeners to function in auditory environments depends in part on the extent to which the auditory system is able to determine the location of sound sources and extract the meaning of those sources. These tasks are relevant to children, who spend time every day in noisy environments such as classrooms and playgrounds. They are also relevant to adults, who often spend long periods in complex auditory environments such as restaurants and multi-talker meeting rooms. The auditory mechanisms that enable listeners to accomplish these tasks are generally thought to involve binaural processing.

One advantage that binaural hearing provides in a normal auditory system is improved sound localization in the horizontal plane. Horizontal-plane localization abilities stem primarily from acoustic cues arising from differences in the arrival time and level of stimuli at the two ears. Localization of unmodulated signals up to approximately 1500 Hz is known to depend on the interaural time difference (ITD) arising from disparities in the fine structure of the waveform (Rayleigh, 1907). The prominent cue for localization of high-frequency signals is the interaural level difference (ILD) (Rayleigh, 1877; Durlach and Colburn, 1978; Blauert, 1997). However, it is also well established that, for higher-frequency signals, ITD information can be transmitted by imposing a slow modulation, or envelope, on the carrier (e.g., Henning, 1974; Bernstein, 2001). The use of modulated signals with high-frequency carriers may be highly relevant to stimulus coding by cochlear implant (CI) processors, which utilize envelope cues and relatively high stimulation rates (Seligman et al., 1984; McDermott et al., 1992; Wilson et al., 1991; Skinner et al., 1994; Vandali et al., 2000; Wilson and Dorman, 2008a).
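The envelope-ITD idea in the paragraph above can be illustrated numerically. The sketch below is a minimal example, assuming NumPy is available; the sampling rate, carrier frequency, and modulation frequency are arbitrary illustrative choices, not values from any study cited here. It delays only the envelope of a sinusoidally amplitude-modulated (SAM) tone with a 4-kHz carrier in one ear, leaving the carrier fine structure identical in both ears, and recovers the delay by cross-correlating rectified, smoothed envelopes. The function names `sam_tone` and `envelope_itd` are hypothetical, and rectification plus a moving average is a simplified stand-in for more careful envelope extraction (e.g., a Hilbert transform).

```python
import numpy as np


def sam_tone(fs, dur_s, fc_hz, fm_hz, env_delay_s=0.0):
    """SAM tone with a high-frequency carrier; only the envelope is delayed."""
    t = np.arange(int(round(fs * dur_s))) / fs
    envelope = 0.5 * (1.0 - np.cos(2 * np.pi * fm_hz * (t - env_delay_s)))
    return envelope * np.sin(2 * np.pi * fc_hz * t)


def envelope_itd(left, right, fs, max_lag_s=1e-3, smooth_ms=1.0):
    """Estimate the envelope ITD in seconds (positive = right-ear envelope lags)."""
    # Envelope: full-wave rectification followed by a moving-average low-pass filter.
    win = np.ones(max(1, int(round(fs * smooth_ms / 1000.0))))
    win /= win.size
    env_l = np.convolve(np.abs(left), win, mode="same")
    env_r = np.convolve(np.abs(right), win, mode="same")
    env_l -= env_l.mean()
    env_r -= env_r.mean()
    n = env_l.size
    max_lag = int(round(fs * max_lag_s))
    lags = np.arange(-max_lag, max_lag + 1)
    # Normalized cross-correlation: mean of env_l[i] * env_r[i + k] over the overlap.
    xcorr = [
        np.dot(env_l[max(0, -k):n - max(0, k)], env_r[max(0, k):n - max(0, -k)]) / (n - abs(k))
        for k in lags
    ]
    return lags[int(np.argmax(xcorr))] / fs
```

With `fs = 16000`, a 250-Hz modulator, and `env_delay_s=500e-6` in the right ear, the estimate should land on (or within one sample of) the imposed 500-µs delay, even though the carrier waveforms in the two ears are identical.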

A second advantage that binaural hearing provides to normal-hearing (NH) listeners is the ability to understand speech in noisy situations, in particular when the target speech and noise are presented from different locations in the horizontal plane. When target speech and masker are spatially separated, half of the binaural advantage comes from the “better ear effect” (also known as the “monaural head shadow effect”), whereby the signal-to-noise ratio is increased in one ear because the listener’s head attenuates the noise (Zurek, 1993). Another advantage, the binaural squelch effect, depends on the ability of the auditory system to utilize binaural aspects of the signal, including differences between the ITDs and ILDs of the target speech and the masker (Bronkhorst, 2000); squelch provides better source segregation because the target and masker are perceived at different locations. A third effect is “binaural summation,” whereby activation of both ears makes a sound presented from the front easier to hear because the signals at the two ears are summed. Finally, for amplitude-modulated signals such as speech, ITD cues are also available from differences in the timing of the envelopes (slowly varying amplitude) of the stimuli.

Up until approximately 10 years ago, unilateral cochlear implantation was the standard of care. CIs were designed to enable speech communication and language acquisition in deaf individuals. Although fairly successful at providing cues necessary for speech and language functions, unilateral CI users continue to report having difficulty understanding speech in noise and localizing sounds. Thus, in the past decade, there has been a shift in clinical options such that many clinics now offer bilateral CIs (BiCIs) in an effort to alleviate the difficulties reported under unilateral listening conditions. BiCI users can generally localize sounds and understand speech in noise better when using both of their CIs compared with a unilateral listening condition. However, on these tasks, they generally perform worse than NH listeners.

Research conducted in the Binaural Hearing and Speech Lab (BHSL; www.waisman.wisc.edu/bhl) at the University of Wisconsin-Madison’s Waisman Center focuses on the spatial hearing issues described thus far. We discuss findings showing the successes observed with bilateral vs. unilateral listening modes. However, we also focus on the challenges observed in BiCI users, and thus on the future directions needed to further improve patients’ performance. To set the stage for discussing that work, it is important to identify the factors that we believe limit performance and create the gap between NH listeners and CI users:

Hardware- and software-based limitations: BiCI users are essentially fit with two separate monaural systems. Speech processing strategies in clinical processors utilize pulsatile, non-simultaneous multi-channel stimulation, whereby a bank of bandpass filters divides the incoming signal into a small number of frequency bands (ranging from 12 to 22) and sends specific frequency ranges to individual electrodes. The envelope of the signal is extracted from the output of each filter and is used to set stimulation levels for each frequency band; the fine structure is thus discarded. Note also that the two processors operate independently: each responds to signals above the noise floor at its own time (neither responds when no sound is present), and there can be very small differences between the processors’ time bases. This likely results in random jitter in the ITD of the envelope and of the carrier pulses (van Hoesel, 2004; Laback et al., 2004; Wilson and Dorman, 2008b). In addition, the typical microphone placement above the pinna does not maximize the capture of directional cues. Microphone characteristics, independent automatic gain control, and compression settings distort the monaural and interaural level cues that would otherwise be present. Thus, speech processors that capture the directional cues available to NH listeners and deliver them with fidelity to CI users are not available. The value of developing speech processing strategies that provide binaural cues depends on knowing the extent to which BiCI users are sensitive to, and able to utilize, binaural cues. Further, for patients with good sensitivity under simple stimulation conditions, such as with one pair of interaural electrodes, we need to know how they would perform under more realistic, complex stimulation conditions that mimic aspects of real-world listening.
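The filter-and-envelope front end described under hardware- and software-based limitations can be sketched as follows. This is a simplified illustration, assuming NumPy; it splits the input into log-spaced bands by masking FFT bins (a stand-in for a clinical filter bank) and extracts each band’s envelope by rectification and moving-average smoothing. The function name, channel count, band edges, and smoothing window are hypothetical defaults, and the compression and pulse-mapping stages of real processors are omitted. Each envelope row retains none of the band’s fine structure, which is exactly the information that envelope-based strategies discard.

```python
import numpy as np


def channel_envelopes(signal, fs, n_channels=12, f_lo=200.0, f_hi=8000.0, lp_ms=4.0):
    """Return (band_edges_hz, envelopes), with one envelope row per channel."""
    edges = np.geomspace(f_lo, f_hi, n_channels + 1)  # log-spaced band edges
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    win = np.ones(max(1, int(round(fs * lp_ms / 1000.0))))
    win /= win.size  # moving-average low-pass for envelope smoothing
    envelopes = np.empty((n_channels, signal.size))
    for ch in range(n_channels):
        in_band = (freqs >= edges[ch]) & (freqs < edges[ch + 1])
        # "Bandpass filter" by keeping only this channel's FFT bins.
        band = np.fft.irfft(np.where(in_band, spectrum, 0.0), n=signal.size)
        # Rectify and smooth: this envelope would set the stimulation level on the
        # channel's electrode; the band's fine structure is discarded here.
        envelopes[ch] = np.convolve(np.abs(band), win, mode="same")
    return edges, envelopes
```

For a 1-kHz tone sampled at 16 kHz, nearly all of the envelope energy falls in the single channel whose band contains 1 kHz.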

Surgical-based limitations: The anatomical positioning of the electrode array is such that the most apical electrode typically lies near a place on the basilar membrane with a best frequency of 1,000 Hz or higher (e.g., Stakhovskaya et al., 2007). Furthermore, surgical insertion is not precise enough to guarantee that the electrode arrays in the two ears are matched in insertion depth. This is likely to cause imprecise matching of inputs at the two ears, because electrodes that stimulate matched places in the two cochleae may be assigned stimuli from different frequency ranges (e.g., van Hoesel, 2004). The importance of matching the interaural place of stimulation for binaural sensitivity is not well understood, and the sparse literature on this topic motivates further study of the effect of electrode match vs. mismatch on binaural sensitivity.
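To give a sense of scale for interaural insertion-depth mismatch, the sketch below uses Greenwood’s (1990) frequency-position function for the human cochlea, F = 165.4(10^(2.1x) − 0.88), where x is the fractional distance from the apex. The 35-mm cochlear length, the example insertion depths, and the function names are assumptions for illustration only; actual cochlear lengths vary across individuals, and an electrode excites neurons over a spread of places rather than at a single point.

```python
import math

# Greenwood (1990) human parameters: F = A * (10**(a * x) - k),
# where x is the fractional distance from the apex (0 = apex, 1 = base).
GREENWOOD_A = 165.4
GREENWOOD_ALPHA = 2.1
GREENWOOD_K = 0.88
COCHLEA_LENGTH_MM = 35.0  # assumed length; real cochleae vary between individuals


def greenwood_hz(mm_from_apex, length_mm=COCHLEA_LENGTH_MM):
    """Characteristic frequency (Hz) at a given distance from the apex."""
    x = mm_from_apex / length_mm
    return GREENWOOD_A * (10 ** (GREENWOOD_ALPHA * x) - GREENWOOD_K)


def place_mismatch_octaves(insertion_left_mm, insertion_right_mm, length_mm=COCHLEA_LENGTH_MM):
    """Octave difference (right re: left) between the characteristic frequencies at
    two electrodes whose insertion depths are measured from the base."""
    f_left = greenwood_hz(length_mm - insertion_left_mm, length_mm)
    f_right = greenwood_hz(length_mm - insertion_right_mm, length_mm)
    return math.log2(f_right / f_left)
```

Under these assumptions, a 3-mm difference in insertion depth (20 mm vs. 17 mm from the base) corresponds to roughly two-thirds of an octave between the two electrodes’ characteristic frequencies.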

Limitations due to pathology in the auditory systems of people who are deaf: Both peripheral and central degeneration due to lack of stimulation are probable (for review see Shepherd and McCreery, 2006). At fairly peripheral levels of the auditory system, degradation in the size and function of spiral ganglion cells following a prolonged period of auditory deprivation is known to occur (Leake et al., 1999; Coco et al., 2007). Profound deafness in the early developmental period seems to result in loss of the normal tonotopic organization of primary auditory cortex, although there is some reversal following reactivation of afferent input (e.g., Kral et al., 2009). We are interested in this potential reactivation and in the restoration of perceptual sensitivity to sensory input. While sensory systems demonstrate the most plasticity during infancy, when neural architecture is still being established, plasticity is known to continue into adulthood. Neural systems remain capable of substantial reorganization in response to altered input, whether caused by trauma or arising through the adaptive process known as perceptual learning (Irvine and Wright, 2005; Dahmen and King, 2007). In our ongoing studies we are investigating the effect of age at onset of deafness on sensitivity to binaural stimuli and on spatial hearing abilities.

The research reviewed in this paper was conducted by members of the Binaural Hearing and Speech Lab at the University of Wisconsin-Madison’s Waisman Center. In this lab, the approach taken towards understanding the successes and limitations of BiCI users is to conduct studies using methods that vary in stimulus control and naturalness. Stimulus control can be exerted at a number of stages and ranges from tightly controlled electrical pulse trains delivered by custom research processors to poorly controlled stimuli processed by clinical speech processors. As control over stimulation increases, a second parameter is varied: stimulus naturalness, ranging from unrealistic non-speech stimuli, such as electrical pulse trains presented to single pairs of electrodes, to the most realistic stimuli, speech presented under complex multi-source listening conditions. The novelty of this work is the combination of control with naturalness. The approaches are applied to populations of adults and children who have normal acoustic hearing (NH) or who use BiCIs. In this review paper we summarize data published in recent years and present some novel data collected in both adults and children.

2. Studies in Adult Listeners

2.1. Free Field Studies

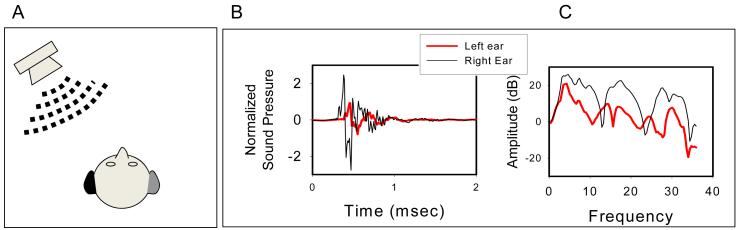

Free-field studies in adults with BiCIs and clinical processors have focused on understanding the extent to which patients are able to perform sound localization tasks and how well they can understand speech in the presence of interferers (maskers) whose locations vary relative to the target speech. These studies exemplify methodological approaches that exert little control over the stimulus once it is played from the loudspeaker, transmitted in a room, and processed through the CI microphones, processors, and electrode arrays. However, the approach in these studies focuses on mimicking “real world” listening situations. The early studies by Litovsky and colleagues (Litovsky et al., 2006a, 2009) were conducted in sound field rooms with multi-loudspeaker arrays, where speech understanding in 4-talker babble was assessed using the Bamford-Kowal-Bench Speech-in-Noise (BKB-SIN) test (Etymotic Research Inc., 2005). The BKB-SIN test is based on the QuickSIN test described by Killion et al. (2004). The benefit of using BiCIs vs. one CI was measured in patients whose deafness had an onset during adulthood and who received both CIs during the same procedure (simultaneous bilateral implantation). Data for unilateral and bilateral listening modes were compared for conditions in which the target speech and masker were co-located (both at 0°, in front) or spatially separated (target in front and masker on the side). Speech reception thresholds were measured using an adaptive procedure, estimating the signal-to-noise ratio (SNR) at which speech intelligibility reached 50% correct. Different effects were observed depending on which ear was active during the monaural condition. A schematic diagram of the three masker configurations combined with each of the three listening modes is shown in Figure 1.
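As an illustration of how an adaptive procedure can home in on the SNR for 50% correct, the sketch below implements a generic 1-down/1-up staircase, which converges on the 50% point of the psychometric function. It is not the scoring rule of the BKB-SIN test; the function name, starting SNR, step size, and reversal counts are hypothetical, and `respond` stands for any function that presents a trial at a given SNR and returns whether the response was correct.

```python
def staircase_srt(respond, start_snr_db=10.0, step_db=2.0, n_reversals=8,
                  n_average=6, max_trials=200):
    """Generic 1-down/1-up adaptive track; returns the mean SNR of the last reversals."""
    snr = start_snr_db
    direction = 0  # 0 = no trial yet, -1 = SNR moving down, +1 = SNR moving up
    reversals = []
    for _ in range(max_trials):
        # Correct response -> harder (lower SNR); incorrect -> easier (higher SNR).
        new_direction = -1 if respond(snr) else 1
        if direction != 0 and new_direction != direction:
            reversals.append(snr)
            if len(reversals) == n_reversals:
                break
        direction = new_direction
        snr += new_direction * step_db
    if len(reversals) < n_average:
        raise RuntimeError("track did not produce enough reversals")
    return sum(reversals[-n_average:]) / n_average
```

With a deterministic toy listener that is correct whenever the SNR is at or above −3 dB, the track oscillates between −4 and −2 dB and the estimate is −3 dB; a real listener’s probabilistic responses would make the track noisier.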
The primary benefit from having BiCIs can be attributed to the monaural “better ear” or “monaural head shadow” cue, which arises when the monaural condition uses the ear with the poorer SNR, and the contralateral ear, with a better SNR, is added (activated) to create the bilateral listening mode. This benefit is measured by comparing speech intelligibility in conditions 5 and 2, or 9 and 3. The effect size was highly variable across patients (greater than 10 dB in some), with an average of 5.5 dB after 6 months of use with BiCIs (Litovsky et al., 2009). A smaller effect is also seen when the monaural condition uses the ear with the better SNR and the ear with the poorer SNR is added to create the bilateral listening condition (known as the squelch effect). This benefit is measured by comparing speech intelligibility in conditions 8 and 2, or 6 and 3, and averaged 2 dB. A third effect, known as binaural “summation,” is observed when both target and interferer are in front and unilateral vs. bilateral listening conditions are compared; the addition of the second ear improves speech reception thresholds, in this case by an average of 2.5 dB. This benefit is measured by comparing speech intelligibility in conditions 4 and 1, or 7 and 1. Performance of BiCI users in these sound field studies was not compared directly with that of NH listeners, and only thresholds were obtained rather than full psychometric functions.

Figure 1. Schematic diagram of the 9 possible conditions (3 masker configurations × 3 listening modes). Binaural listening conditions (1, 2, 3) are in the top row, left-ear conditions (4, 5, 6) in the middle row, and right-ear conditions (7, 8, 9) in the bottom row. Target and masker in front occur in the three conditions in the left column (1, 4, 7). Masker on the left is shown in the conditions in the middle column (2, 5, 8), and masker on the right in the three conditions in the right column (3, 6, 9).
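The three comparisons described for the free-field studies map directly onto the condition numbers in Figure 1. The sketch below, a minimal example with a hypothetical function name, computes head shadow, squelch, and summation from speech reception thresholds (SRTs, in dB SNR) for the nine conditions. Each benefit is the SRT with one ear minus the SRT with both ears, so a positive value means that adding the second ear lowered (improved) the threshold; averaging the left-masker and right-masker comparisons is an assumption of this sketch, not necessarily how the cited studies pooled their data.

```python
def binaural_benefits(srt_db):
    """srt_db maps Figure 1 condition numbers (1-9) to SRTs in dB SNR.

    Returns benefits in dB; positive = the second ear improved the SRT.
    """
    # Head shadow: poorer-SNR ear alone vs. both ears (5 vs. 2, 9 vs. 3).
    head_shadow = ((srt_db[5] - srt_db[2]) + (srt_db[9] - srt_db[3])) / 2
    # Squelch: better-SNR ear alone vs. both ears (8 vs. 2, 6 vs. 3).
    squelch = ((srt_db[8] - srt_db[2]) + (srt_db[6] - srt_db[3])) / 2
    # Summation: target and masker in front, one ear vs. both ears (4 vs. 1, 7 vs. 1).
    summation = ((srt_db[4] - srt_db[1]) + (srt_db[7] - srt_db[1])) / 2
    return {"head_shadow": head_shadow, "squelch": squelch, "summation": summation}
```

For example, hypothetical SRTs of 4 dB in condition 5 and −2 dB in condition 2 would contribute a 6-dB head-shadow benefit on the left-masker side.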

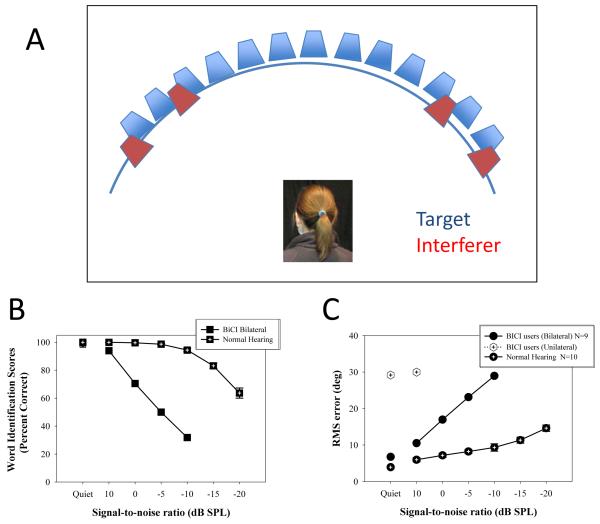

Using a different paradigm, our work has also focused on measuring performance at fixed SNR and using psychometric functions to evaluate change in speech identification as SNR becomes worse (Agrawal, 2008; Agrawal et al., 2008). In addition, the ability to understand speech in noise was tested with competitors on both the right and left, thus rendering the “head shadow” weak or absent. Figure 2A shows a schematic representation of the stimulus configuration in these experiments (note that the Target was presented from 0° in front). Figure 2B shows data from 9 BiCI users and 10 NH subjects, tested on identification of words from the CNC corpus. What is somewhat striking about the results in Figure 2B is the steep decline in performance seen in BiCI users compared with NH subjects. Effectively, the SNR at which the two groups decline to 60% correct is almost 20 dB different (just below 0 for BiCI users and near −20 for NH listeners). This difference between the two populations suggests that while the use of BiCIs is quite helpful for speech unmasking as described above, in a listening situation with maskers presented from both the right and left hemifields, the ability of BiCI users to hear speech in noise is markedly worse than that of NH listeners.
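Reading a criterion point, such as the SNR at which performance falls to 60% correct, off a psychometric function can be sketched with a logistic model. The logistic form is an assumption made for illustration: the function names are hypothetical, the fitting step is omitted, and the midpoint, slope, floor, and ceiling would in practice be estimated from each listener’s or group’s data.

```python
import math


def logistic_pc(snr_db, midpoint_db, slope_per_db, floor=0.0, ceiling=1.0):
    """Proportion correct as a logistic function of SNR (dB)."""
    return floor + (ceiling - floor) / (1.0 + math.exp(-slope_per_db * (snr_db - midpoint_db)))


def snr_at_criterion(pc, midpoint_db, slope_per_db, floor=0.0, ceiling=1.0):
    """Invert the logistic: the SNR (dB) at which proportion correct equals pc."""
    p = (pc - floor) / (ceiling - floor)
    if not 0.0 < p < 1.0:
        raise ValueError("criterion must lie strictly between floor and ceiling")
    return midpoint_db + math.log(p / (1.0 - p)) / slope_per_db
```

Evaluating `snr_at_criterion(0.6, ...)` for two separately fitted functions and taking the difference gives a group comparison of the kind described for Figure 2B.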

Figure 2. Panel A shows the experimental setup, with 11 loudspeakers positioned in the horizontal plane at 10° intervals (spanning ±50°). In addition, loudspeakers positioned at +35°, +55°, −35°, and −55° were used to present the competing (masking) speech. Panel B shows data from the word identification task for BiCI users and NH listeners; percent correct is plotted as a function of SNR. Panel C shows data for the sound localization measure, with RMS errors plotted as a function of SNR.

The same patients were also tested on sound localization in a quiet listening environment (Litovsky et al., 2006a, 2009), similar to the testing conducted by most labs. Based on results such as these, it has become commonplace to argue that BiCIs provide significant benefits for sound localization. An important caveat regarding previous studies with BiCI users is the lack of a “real world” listening component. In realistic listening situations, localization is typically accomplished in the presence of interfering sources. Because listeners spend much of their time in complex auditory environments, where multiple sources compete for attention and abundant echoes can provide competing spatial cues in the localization process, we expanded our paradigm to investigate sound localization in BiCI users in the presence of speech interferers. Thus, a listening environment akin to the “cocktail party” was created, in which the task was to localize speech sounds in the presence of competing speech (Agrawal et al., 2008; Agrawal, 2008). Figure 2A shows the experimental setup; monosyllabic consonant-vowel-consonant words were randomly presented from one of 11 loudspeakers positioned in the horizontal plane at 10° intervals (spanning ±50°). For sound localization testing, performance was measured in quiet and at SNRs ranging from 0 to −20 dB. Listeners could not see the loudspeakers, which were hidden behind a curtain. To avoid “edge effects,” listeners were permitted to respond out to ±90°. Figure 2C shows results from 9 BiCI patients and 10 NH listeners, with root-mean-square (RMS) localization error plotted as a function of SNR. In quiet (left-most data points), BiCI users and NH listeners have RMS errors that are very small and nearly identical.
The benefit of BiCIs can be seen in quiet by comparing the filled symbols with the open symbols (unilateral performance); errors are significantly higher in the unilateral mode. What is striking is the effect of interfering speech on RMS errors, which increase much more severely for BiCI users than for NH listeners as SNR decreases. While NH listeners can overcome interferers at an SNR of −20 dB and continue to perform fairly well (RMS errors of ~15°), BiCI users show a steep increase in errors, such that RMS error is near 30° at an SNR of −10 dB.
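The localization metric used above, RMS error, is the square root of the mean squared difference between target and response azimuths. A minimal sketch follows; the function name is hypothetical, and the loudspeaker list simply encodes the 11 positions at 10° spacing described in the text. Responses are not clipped to the loudspeaker range, consistent with listeners being allowed to respond out to ±90°.

```python
import math

SPEAKERS_DEG = list(range(-50, 51, 10))  # 11 loudspeakers at 10° spacing, spanning ±50°


def rms_error(targets_deg, responses_deg):
    """Root-mean-square localization error in degrees."""
    if not targets_deg or len(targets_deg) != len(responses_deg):
        raise ValueError("need equal, non-zero numbers of targets and responses")
    squared = [(r - t) ** 2 for t, r in zip(targets_deg, responses_deg)]
    return math.sqrt(sum(squared) / len(squared))
```

For example, responses of +10° and −10° to two targets at 0° give an RMS error of 10°.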

A remarkably similar effect of SNR was observed when speech identification was measured as a function of SNR under conditions similar to those used for testing localization. As with localization, performance in quiet was similar for NH and BiCI users. However, the introduction of interferers resulted in a marked decrease in the performance of BiCI users, which dropped from 100% correct in quiet to ~30% correct at an SNR of −10 dB, in contrast with NH listeners, whose performance remained near 90% correct at −10 dB SNR. It is important to note that care was taken to ensure that stimuli were always above audibility thresholds for CI users. In addition, while data from unilateral listening are not shown here, results from this study demonstrated that bilateral listening resulted in better performance than unilateral listening. Because this finding is not novel and has been reported in numerous previous studies, here we chose to focus on performance in bilateral listening conditions compared with that of NH listeners. While BiCI users perform at a level similar to that of NH listeners in quiet, in the presence of interferers their performance is greatly reduced compared with NH listeners. This finding highlights a gap in performance between the populations, and a goal of future work is to close that gap. In the next few sections we describe our studies on binaural sensitivity, in which we attempt to capture the factors that might contribute to the reduced performance observed in BiCI users in the free field.

2.2. Maximizing Binaural Sensitivity

Our long-term goal is to identify the factors responsible for the gap in performance between BiCI users and NH listeners, and thus to develop stimulation strategies that will overcome (or lessen) that gap. Before embarking on such an engineering feat, it is necessary to understand which aspects of binaural cues are usable by BiCI users and thus worth preserving and restoring. Many factors can be responsible for this gap, so we are systematically working towards understanding how we can provide BiCI users with stimuli that will render them sensitive to binaural cues. One might consider a clinically based approach to research, whereby patients use their clinical processors during testing. In this case, the fact that the processors are not coordinated between the ears means that patients do not receive binaural cues with fidelity; however, the testing situation is “realistic.” Studies with clinical processors are important because they provide a baseline from which the field tries to improve with new coding strategies. At the other extreme, we might consider using technical tools that enable tight control over the stimuli that reach individual electrodes within the electrode arrays in the right and left ears, and that present ITD information in a way that is not possible with clinical processors. This approach gives the experimenter an opportunity to manipulate stimuli at various stages of processing and to identify the conditions under which binaural advantages occur for BiCI users. With controlled stimuli, stages of the clinical processors can be progressively omitted. For example, the microphone response can be bypassed by using a direct line input. One can also omit the clinical processors and control the CIs at the single-electrode level. This staged removal and manipulation of stimulus control can be achieved through the use of custom-made research processors.
In our studies, we use this approach to identify binaural pairs of electrodes, and to stimulate them using electrically pulsed signals that do not bear particular resemblance to “real world” stimuli, hence the “naturalness” of the stimuli is fairly low. Once we understand how binaural sensitivity is achieved under this set of conditions we may be in a better position to design binaural processors for BiCI users.

In the section below we focus on work in our lab that uses bilaterally coordinated research devices to control ITDs between the CIs. The simplest and most controlled acoustic stimulus is the sine tone. The closest stimulus to a sine tone in electrical hearing is a constant-amplitude pulse train on a single electrode. Across ears, one would want to use single electrodes that elicit the same loudness. In acoustic hearing, equal loudness at the two ears yields an auditory image that is perceived to be in the center of the head. While this is somewhat more complicated in electrical hearing, we start with loudness-balanced electrodes, so that when they are activated simultaneously there is a greater chance of obtaining a “centered” auditory image. Several further steps are likely to maximize binaural (ITD or ILD) sensitivity in BiCI users; these are described below.

2.2.1. Interaural place matching

First, the pair of electrodes should ideally excite the same tonotopic area in each cochlea, because differences between the ears in insertion depth and neural survival may lead to interaural differences in the site of stimulation. ITD sensitivity has been shown to decrease with interaural tonotopic mismatch (Long et al., 2003; Poon et al., 2009). Since implantation depths and neural survival are likely to differ between the two ears in BiCI users, some way to test the effect of tonotopic match or mismatch is necessary. Prior work (van Hoesel et al., 1993; van Hoesel and Tyler, 2003; van Hoesel et al., 2008) has shown that, when studying ITD sensitivity, selecting electrodes that are matched by pitch between the ears yields results similar to those obtained with place matching based on X-ray images. Because labs vary in the methods used to determine pitch-matched electrodes, here we describe our exact approach.

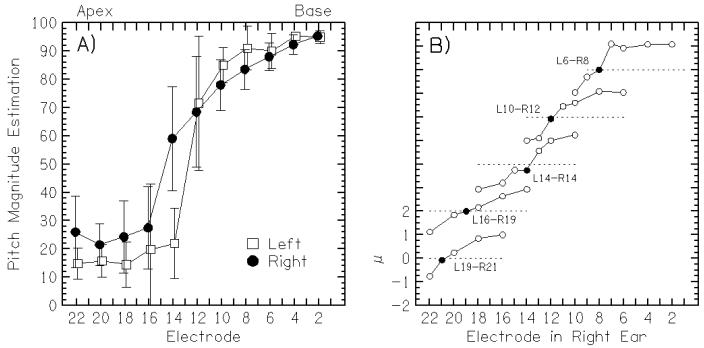

Our pitch-matching method consists of two stages: a pitch magnitude estimation and a direct left-right pitch comparison. Constant-amplitude pulse trains that elicit the same loudness in both ears are used as stimuli. They often have the same duration and rate as the stimuli used in the subsequent binaural tests; however, for reasons that are not well understood, the parameters needed for testing within a given subject sometimes shift over time. Using a method of constant stimuli, a single electrode chosen from the even-numbered electrodes in either ear is presented, and listeners rate the pitch of the stimulus on a scale from 1 (low pitch) to 100 (high pitch). Listeners are encouraged to use a similar scale for both ears and to use the full scale. The results from one listener performing the pitch magnitude estimation task are shown in Fig. 3A, where the listener’s pitch magnitude estimate for each electrode tested in each ear is plotted, ordered from apex to base (high to low electrode numbers). The judgments are highly variable, so pitch-matched electrode pairs can only be roughly estimated from this task. Therefore, a direct left-right pitch comparison experiment is performed using the estimated pitch-matched pairs from the magnitude estimation, with the intention of improving the quality of the pitch match. In each trial, the listener is presented with a constant-amplitude pulse train in one ear followed by one in the other ear, both at the same rate and loudness. The pitch comparison task tests electrodes that are typically within ±2 electrodes of the estimated pitch-matched pair. This is a 2-interval, 5-alternative forced-choice task in which listeners are asked whether the second sound is “much higher,” “higher,” “the same,” “lower,” or “much lower” in pitch than the first sound.
A metric, μ, is calculated to help select the pitch-matched pair; it is the weighted sum of the number of responses in each response category. Responses of “much higher” are given a weight of 2, “higher” = 1, “same” = 0, “lower” = −1, and “much lower” = −2. The pair with μ closest to zero is considered the best pitch-matched pair. The pitch comparison results for the same listener as in Fig. 3A are shown in Fig. 3B. The listener was tested on 5 electrodes in the left ear (19, 16, 14, 10, and 6), each compared with a number of electrodes in the right ear, and a pitch comparison score (μ) was computed for each combination. For each left-ear electrode, the pitch-matched electrode in the right ear was the one with μ closest to 0, shown by the filled symbol; the open symbols show more poorly matched electrodes, whose μ values lie further from zero. Note, for example, that the middle pitch-matched pair (left 14, right 14) was not considered matched in the magnitude estimation but was the best match in the direct comparison, which justifies the need for the pitch comparison task. Also, listeners in our pitch comparison tests often have difficulty using the “same” response because the sounds in the right and left ears differ greatly in quality, which justifies the calculation of μ.
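The two-stage pitch-matching logic can be sketched as follows. This is a minimal illustration with hypothetical function names and data structures: stage 1 pairs each left-ear electrode with the right-ear electrode whose mean magnitude rating is closest, and stage 2 computes μ as the weighted sum of response counts and picks the candidate with |μ| closest to zero. The sign of μ depends on which ear is presented first, and ties are broken by taking the first candidate encountered; both are assumptions of this sketch.

```python
from statistics import mean

# Weights for the 5-alternative responses ("second sound is ... than the first").
WEIGHTS = {"much higher": 2, "higher": 1, "same": 0, "lower": -1, "much lower": -2}


def estimate_pairs(left_ratings, right_ratings):
    """Stage 1: map each left electrode to the right electrode whose mean
    pitch-magnitude rating (1-100 scale) is closest."""
    right_means = {e: mean(r) for e, r in right_ratings.items()}
    return {
        left_e: min(right_means, key=lambda right_e: abs(right_means[right_e] - mean(ratings)))
        for left_e, ratings in left_ratings.items()
    }


def pitch_score(counts):
    """Stage 2 metric mu: weighted sum of the response counts in each category."""
    return sum(WEIGHTS[category] * n for category, n in counts.items())


def best_pitch_match(candidates):
    """candidates maps right electrode -> response counts against one left electrode.
    Returns (best right electrode, {electrode: mu})."""
    scores = {e: pitch_score(c) for e, c in candidates.items()}
    return min(scores, key=lambda e: abs(scores[e])), scores
```

For example, a candidate that drew 3 “higher,” 4 “same,” and 3 “lower” responses has μ = 0 and would be chosen over candidates judged consistently higher or lower.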

Figure 3. In panel A, pitch magnitude estimation scores are shown for each electrode in the left ear (open squares) and right ear (closed circles). In panel B, direct pitch comparison scores (μ) are shown for a range of electrodes in the right ear for each of 5 electrodes in the left ear (19, 16, 14, 10, and 6). The pitch-matched electrode in the right ear is the one closest to μ = 0 and is shown by a closed symbol.

2.2.2. Centering

Perceptual centering of an auditory image refers to the perception we experience with a stereo effect, i.e., when a sound presented to the two ears is heard in the center of the head. Centering is important because, in acoustic hearing, an auditory image resulting from binaural stimulation that is perceived “inside the head” (an intracranial image) but shifted towards one of the two ears reduces sensitivity to ITDs and ILDs (Yost, 1974; Yost and Dye, 1988) and to interaural decorrelation (Pichora-Fuller and Schneider, 1991; Li et al., 2009). It is therefore our goal, whenever possible, to maximize centering so that binaural sensitivity can be optimally measured. We take great care to ensure that binaural stimuli are not perceived by the listener as separate sounds in the two ears, but rather result in stereo hearing and elicit a percept fused into a single sound that is centered when the ITD is 0. In our experience, a pair of electrodes perceived to have the same pitch and loudness can, when activated simultaneously at the most comfortable level (C), produce an intracranial image that is not centered (Goupell et al., 2011). Alternatively, there may be a difference in loudness between the ears (Goupell et al., 2011; Litovsky et al., 2010; Jones et al., 2011). This might occur because of asymmetrical recruitment of auditory nerve fibers in the two ears. Previously, we used a centering procedure in which the two members of a left-right electrode pair were stimulated with a train of simultaneous pulses at a current level equal to 90% of the dynamic range, and the listener reported the location of the image. Typically, the image was perceived to be closer to one ear than the other, in which case the pulse amplitude was reduced in that ear until the image shifted to the center.
While this is similar to what may happen in a clinical setting, this centering procedure may be highly variable. Therefore, we recently adopted a more rigorous lateralization paradigm. Listeners are given an interface depicting a face, on which they report the intracranial image position (or positions, when multiple images are heard). Selecting the current levels used to create electric ILDs requires a specific procedure, because patients vary in their dynamic ranges and sensitivity to ILDs. First, the C level is found for each ear at each electrode, defined as the current level, in current units (CU), that the patient reports to be “comfortable, speech-level-like.” Second, the C levels of the electrode pair used for ILDs are treated as the “baseline CU,” and the CU in the right and left ears are changed relative to that baseline, typically by 0, ±2, ±5, and ±10 CU. For example, if the baseline is 200 CU in the right ear and 210 CU in the left ear, the listener would be expected to perceive a centered auditory image at those values, so 200R-210L would be the ILD = 0 condition; relative to those values, level differences are imposed that shift the auditory image to the right or left. Ten trials per condition are tested; the reported positions are converted to a scale of −10 (left) to +10 (right) and fit with a four-parameter function of the form:

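$$y = A\,\mathrm{erf}\!\left(\frac{x-\mu_x}{\sqrt{2}\,\sigma}\right) + \mu_y$$

where x is the ILD, y is the reported intracranial position, and A, μx, σ and μy are the parameters optimized to fit the data. The x-intercept of this fit is rounded to the nearest whole number and used to adjust the levels of the right and left ears.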
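The centering step (finding where the fitted curve crosses zero and shifting the baseline by the rounded value) can be sketched in Python. This is a minimal illustration, not the lab's analysis code. It assumes that the four-parameter function is an error-function (cumulative-Gaussian) curve, y = A·erf((x − μx)/(√2σ)) + μy, that the parameters have already been fitted (e.g., by nonlinear least squares), and it adopts a hypothetical convention in which a positive ILD means the right ear is raised by that many CU. All function names are illustrative.

```python
import math


def lateralization_curve(x, A, mu_x, sigma, mu_y):
    """Assumed four-parameter model: y = A * erf((x - mu_x) / (sqrt(2) * sigma)) + mu_y."""
    return A * math.erf((x - mu_x) / (math.sqrt(2.0) * sigma)) + mu_y


def x_intercept(A, mu_x, sigma, mu_y, lo=-50.0, hi=50.0, tol=1e-9):
    """ILD (in CU) at which the fitted curve crosses y = 0, i.e., a centered image.

    Solved by bisection because Python's math module has no inverse erf.
    """
    f_lo = lateralization_curve(lo, A, mu_x, sigma, mu_y)
    f_hi = lateralization_curve(hi, A, mu_x, sigma, mu_y)
    if f_lo * f_hi > 0:
        raise ValueError("fitted curve does not cross zero within [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = lateralization_curve(mid, A, mu_x, sigma, mu_y)
        if f_lo * f_mid <= 0:
            hi = mid                # root lies in [lo, mid]
        else:
            lo, f_lo = mid, f_mid   # root lies in [mid, hi]
    return 0.5 * (lo + hi)


def recentered_baseline(right_cu, left_cu, A, mu_x, sigma, mu_y):
    """Hypothetical convention: a positive ILD means the right ear is raised by that many CU."""
    shift = round(x_intercept(A, mu_x, sigma, mu_y))
    return right_cu + shift, left_cu


# Usage with illustrative (not fitted) parameters and the 200R-210L baseline from the text
new_right, new_left = recentered_baseline(200, 210, A=10.0, mu_x=2.3, sigma=3.0, mu_y=0.0)
```

With these made-up parameters the curve crosses zero at an ILD of 2.3 CU, which rounds to 2, so the new baseline would be 202R-210L under the assumed sign convention.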

2.2.3. Rate limitations and envelope shape

It is important to recognize that the stimuli used in our experiments are selected so as to maximize ITD sensitivity. For constant-amplitude pulse trains, sensitivity to ITD has been shown to be best at low pulse rates (~100 pps) and to worsen as stimulation rate increases (e.g., Laback and Majdak, 2008; van Hoesel et al., 2009). Physiological experiments with binaural electric hearing have shown a similar rate limitation (Goupell et al., 2009). This rate limitation is thought to be related to a similar phenomenon seen in acoustic hearing, called binaural adaptation (Hafter and Buell, 1990). Although Hafter et al. (1983) showed that there were also rate limitations for constant-amplitude ILDs in the typically developed auditory system, such a phenomenon has not yet been tested in CI listeners. Understanding the mechanisms involved in rate limitation may be highly important at the clinical level: if binaural speech-processing strategies are to be implemented with the goal of preserving sensitivity to binaural cues, stimulation rate may have to be reconsidered. Many patients today prefer high stimulation rates (>1000 pps) for speech understanding; however, those rates would not be useful for binaural sensitivity. When considering rate sensitivity as it relates to binaural hearing and speech understanding, we have thus far assumed that binaural sensitivity is to the “fine structure,” or rapidly fluctuating waveform, of the stimulus. Alternatively, listeners may be sensitive to interaural differences in the slowly varying “envelope.” An envelope arises when a high-rate periodic pulse train is modulated by a slower signal such as a tone. Such an amplitude-modulated (AM) signal can restore ITD sensitivity, although the recovery from the rate limitation may be only partial (van Hoesel et al., 2009).
This might be explained by the fact that these tests assumed that loudness growth across the ears followed a constant change in dynamic range, which was used to apply the AM. If that assumption did not hold, spurious ILDs may have been introduced into the AM stimuli, adding localization blur and thus reducing ITD detection sensitivity (Goupell et al., 2011).
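To make the contrast between a low-rate constant-amplitude train and a high-rate AM train concrete, the sketch below builds left- and right-ear lists of pulse times and relative amplitudes. It is a minimal illustration under stated assumptions: the ITD is applied as a whole-waveform delay of the right ear (so it is carried by both pulse timing and envelope), the envelope is sinusoidal, and the rates, durations and 100-Hz modulation rate are illustrative choices. It does not model electrode current levels or the loudness mapping that could introduce the spurious ILDs mentioned above.

```python
import math


def am_envelope(t, mod_rate_hz, mod_depth):
    """Sinusoidal envelope ranging from (1 - mod_depth) at troughs to 1 at peaks."""
    return 1.0 - mod_depth * 0.5 * (1.0 - math.sin(2.0 * math.pi * mod_rate_hz * t))


def binaural_pulse_trains(rate_pps, duration_s, itd_s, mod_rate_hz=None, mod_depth=1.0):
    """Return (left, right) lists of (pulse_time_s, relative_amplitude).

    The right-ear train is a delayed copy of the left-ear train, so the ITD is
    carried by the pulse timing and, when AM is applied, by the envelope too.
    A positive itd_s means the left ear leads.
    """
    n_pulses = int(round(rate_pps * duration_s))
    left, right = [], []
    for k in range(n_pulses):
        t = k / rate_pps
        amp = 1.0 if mod_rate_hz is None else am_envelope(t, mod_rate_hz, mod_depth)
        left.append((t, amp))
        right.append((t + itd_s, amp))
    return left, right


# Low-rate (100 pps) constant-amplitude train with a 400-us ITD (illustrative values)
low_left, low_right = binaural_pulse_trains(100, 0.3, 400e-6)
# High-rate (1000 pps) train whose ITD is also carried by a 100-Hz sinusoidal envelope
am_left, am_right = binaural_pulse_trains(1000, 0.3, 400e-6, mod_rate_hz=100)
```

Delaying the whole right-ear train keeps the two ears' amplitudes identical pulse for pulse, so this sketch contains no ILD by construction; any ILD in a real device would have to come from the level mapping.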

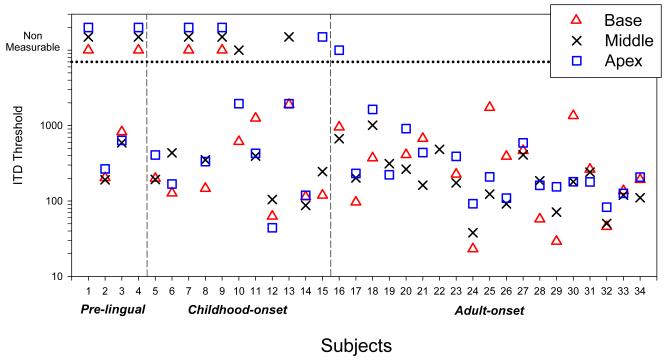

2.3. ITD and ILD Sensitivity

Figure 4 shows ITD sensitivity measured in a left-right discrimination task for 100-pps constant-amplitude pulse trains, collected from 34 BiCI users over the last 8 years. The listeners have various hearing histories and etiologies. One notable effect is that of age at onset of deafness. Individuals with pre-lingual or childhood onset of deafness, all of whom received their CIs as adults, performed worse than individuals with adult-onset deafness. This finding suggests that early auditory input plays a role in the establishment of binaural sensitivity. Whether the degradation is driven by peripheral factors (e.g., degraded auditory nerve connectivity) or central factors (e.g., degraded binaural circuitry) is yet to be determined. Another obvious feature of these data is the wide range of performance within each group. This range is larger than the variability in ITD thresholds typically seen in acoustic hearing, whether using high-frequency carriers modulated at a low frequency or low-frequency carriers (e.g., Bernstein and Trahiotis, 1994; 1998; Wright and Fitzgerald, 2001; Laback et al., 2007). Our recent work also suggests that ILD sensitivity varies across listeners, although all subjects appear to have ILD discrimination and lateralization sensitivity (Litovsky et al., 2010). There are many possible reasons for this increased variability, including duration of acoustic hearing, duration of binaural input, duration of deafness, time between implants, and many others. One of the major areas of research in the BHSL has been to study the effects of previous hearing history on binaural sensitivity with BiCIs.

Figure 4. ITD just-noticeable-difference thresholds in a left-right discrimination task for 34 BiCI users, using a 100-pps constant-amplitude pulse train. Each listener’s threshold represents the smallest ITD relative to 0 μs that could be reliably discriminated. Data from three subject groups of unequal size are shown: prelingual onset of deafness (left), childhood onset of deafness (middle) and adult onset of deafness (right). For each subject, up to three data points are shown with different symbols, for the three possible places of stimulation along the cochlear array (base, middle, apex).

One such study compared ITD and ILD lateralization between pre-lingually and post-lingually deafened users (Litovsky et al., 2010). We found that all eight post-lingually deafened BiCI users could perceive a monotonic change in intracranial position for both ITDs and ILDs; however, the three pre-lingually deafened listeners did not perceive a change in intracranial position for ITDs. Furthermore, in Jones et al. (2008, 2009) we examined sensitivity to the ITD of a probe pulse train in a simulated multi-source environment. We found reduced ITD sensitivity, yet thresholds were frequently well within the range that would be usable in real life, even under test conditions that were expected to be quite challenging.

2.4. Binaural masking level differences and interaural decorrelation

BiCI listeners can demonstrate binaural masking level differences (BMLDs), i.e., an improvement in the detection of an out-of-phase tone in diotic noise compared with an in-phase tone (Long et al., 2006; Lu et al., 2010). For signals presented on a single electrode pair, using stimuli processed with an electric version of a “realistic” amplitude compression function typical of clinical processing strategies, BMLDs were about 9 dB, which is within the range of effects seen in listeners with acoustic hearing. This led us to ask whether a positive BMLD would also occur with multi-electrode stimulation. Because the interaural decorrelation in the dichotic stimulus is encoded solely in the instantaneous amplitudes of the envelope (Goupell and Litovsky, 2011), stimulation of adjacent electrodes (either diotic or dichotic) is likely to interfere with envelope encoding through current spread and channel interactions. Indeed, Lu et al. (2011) showed that BMLDs were greatly reduced, from 9 dB to near zero, after the inclusion of adjacent masking electrodes. The degree of channel interaction, estimated from auditory nerve evoked potentials in three subjects, was significantly and negatively correlated with BMLD. These findings suggest that if channel interactions can be reduced, BiCI users may show improved performance on perceptual tasks that rely on binaural mechanisms.
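The cue behind the BMLD is interaural decorrelation: adding an antiphasic tone (N0Sπ) to diotic noise makes the two ears' waveforms differ, whereas an in-phase tone (N0S0) leaves them identical. The sketch below illustrates this with acoustic waveforms only (in BiCI users the corresponding cue lives in the electrode envelopes, which this code does not model). The sampling rate, tone frequency, tone level and helper names are illustrative assumptions, and the BMLD helper simply expresses the definition as a threshold difference.

```python
import math
import random


def interaural_correlation(left, right):
    """Normalized interaural correlation at zero lag (no mean removal)."""
    num = sum(a * b for a, b in zip(left, right))
    den = math.sqrt(sum(a * a for a in left) * sum(b * b for b in right))
    return num / den


def noise_plus_tone(noise, tone, antiphasic):
    """Diotic noise (N0) plus a tone that is in phase (S0) or inverted in the right ear (Spi)."""
    sign = -1.0 if antiphasic else 1.0
    left = [n + s for n, s in zip(noise, tone)]
    right = [n + sign * s for n, s in zip(noise, tone)]
    return left, right


def bmld_db(threshold_n0s0_db, threshold_n0spi_db):
    """BMLD in dB: how much lower (better) the N0Spi threshold is than the N0S0 threshold."""
    return threshold_n0s0_db - threshold_n0spi_db


# Illustrative acoustic waveforms: 1 s of Gaussian noise at 10 kHz plus a 500-Hz tone
rng = random.Random(1)
fs = 10000
noise = [rng.gauss(0.0, 1.0) for _ in range(fs)]
tone = [0.5 * math.sin(2.0 * math.pi * 500.0 * i / fs) for i in range(fs)]
rho_s0 = interaural_correlation(*noise_plus_tone(noise, tone, antiphasic=False))
rho_spi = interaural_correlation(*noise_plus_tone(noise, tone, antiphasic=True))
```

Here `rho_s0` stays at 1 while `rho_spi` drops noticeably below 1; that drop is what a listener exploits to detect the antiphasic tone at a lower level.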

2.5. Acoustic simulations of BiCI listening in Adults

Noise- and sine-excited channel vocoders have been used to simulate the signals presented to CI users (e.g., Shannon et al., 1995). Although most vocoder studies examine speech understanding in the absence of binaural cues, a few have used vocoders as BiCI simulations. Depending on the simulation characteristics and the amount of experience with the simulation, comparisons between CI users and NH listeners typically show that the best CI listeners can approach the performance of NH listeners tested with the simulation. However, CI users remain, on average, worse than NH listeners (e.g., Friesen et al., 2001; Stickney et al., 2004; Goupell et al., 2009). The type of vocoder used depends on the question of interest. When appropriately selected, vocoders can be an instrumental tool for understanding the mechanisms underlying CI users’ performance, because they provide a baseline against which performance can be compared across groups. For questions about temporal cues, where a faithful representation of the temporal envelope is imperative, a sine vocoder may be most appropriate. For questions about spectral cues, such as current spread, a noise vocoder may be most appropriate.
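A channel vocoder of the kind described above can be sketched with NumPy. This is a minimal illustration, not the processing used in the cited studies. Assumptions: log-spaced bands from 100 Hz to 8 kHz, brick-wall FFT band-pass filters, Hilbert envelopes computed via the FFT with no envelope low-pass filter (real vocoders usually smooth the envelope), and sine carriers at each band's geometric center. Switching `carrier` between `"sine"` and `"noise"` shows the design choice discussed in the paragraph.

```python
import numpy as np


def band_edges(n_channels, f_lo=100.0, f_hi=8000.0):
    """Logarithmically spaced band edges (an assumed spacing)."""
    return f_lo * (f_hi / f_lo) ** (np.arange(n_channels + 1) / n_channels)


def bandpass_fft(x, fs, lo, hi):
    """Zero-phase brick-wall band-pass filter applied in the frequency domain."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[(freqs < lo) | (freqs >= hi)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


def hilbert_envelope(x):
    """Magnitude of the analytic signal, computed with the FFT."""
    n = len(x)
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x) * h))


def vocode(x, fs, n_channels, carrier="sine", seed=0):
    """Channel vocoder: each band's envelope is re-imposed on a sine or noise carrier."""
    rng = np.random.default_rng(seed)
    edges = band_edges(n_channels)
    t = np.arange(len(x)) / fs
    out = np.zeros(len(x))
    for lo, hi in zip(edges[:-1], edges[1:]):
        env = hilbert_envelope(bandpass_fft(x, fs, lo, hi))
        if carrier == "sine":
            carrier_wave = np.sin(2 * np.pi * np.sqrt(lo * hi) * t)  # geometric band center
        elif carrier == "noise":
            carrier_wave = bandpass_fft(rng.standard_normal(len(x)), fs, lo, hi)
        else:
            raise ValueError("carrier must be 'sine' or 'noise'")
        out += env * carrier_wave
    return out


# Usage: vocode a 1-s, 1-kHz tone (illustrative input) with 8 channels
fs = 16000
tone = np.sin(2 * np.pi * 1000 * np.arange(fs) / fs)
sine_vocoded = vocode(tone, fs, n_channels=8, carrier="sine")
noise_vocoded = vocode(tone, fs, n_channels=8, carrier="noise")
```

Because the 1-kHz input falls in a single band, the sine-vocoded output is essentially one sinusoid at that band's center frequency, which shows how a sine vocoder discards the original fine structure while keeping the band envelope.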

Garadat et al. (2009) measured spatial release from masking in NH listeners using a sine vocoder with 4, 8, or 16 bands. The signals were presented over headphones with non-individualized head-related transfer functions to provide virtual localization cues. We found that the advantage of separated compared with co-located speech sources depended on the number of channels, with spatial release from masking increasing as the number of channels decreased. We also found that the order of processing, vocoding and then applying the head-related transfer function (which includes fine-structure ITDs) versus applying the head-related transfer function and then vocoding (which can remove fine-structure ITDs), did not produce significantly different spatial release from masking. Garadat et al. (2010) also measured spatial release from masking in NH listeners in the presence of spectral holes, as might occur with uneven neural degeneration along the length of the cochlea. In general, holes decreased speech intelligibility and the advantage of binaural hearing. Mid-frequency holes disrupted speech reception thresholds most, whereas high-frequency holes disrupted spatial release most.
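Spatial release from masking is a difference of two speech reception thresholds. Because a lower SRT is better, the co-located SRT minus the separated SRT gives a positive value when separation helps. The short sketch below spells out that sign convention. The condition labels and SRT values are made up only to exercise the functions; they are not data from Garadat et al.

```python
def spatial_release_db(srt_colocated_db, srt_separated_db):
    """Spatial release from masking: SRT improvement (dB) when target and masker are separated.

    Lower SRTs are better, so a positive value means separation helped.
    """
    return srt_colocated_db - srt_separated_db


def srm_by_condition(srts):
    """Map each condition label to its SRM, given (co-located SRT, separated SRT) pairs in dB."""
    return {cond: spatial_release_db(co, sep) for cond, (co, sep) in srts.items()}


# Made-up SRTs used only to exercise the functions
example_srm = srm_by_condition({"cond_a": (2.0, -3.0), "cond_b": (-1.0, -4.5)})
```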

Besides speech understanding experiments, it is possible to simulate pulsatile CI stimulation using bandlimited acoustic pulse trains. Recent work in our lab has used these simulations to understand factors that may limit the performance of BiCI users on binaural tasks. In Kan et al. (2011b), we simulated interaural frequency mismatch using acoustic pulse trains and measured the lateralization and discrimination of ITDs and ILDs. ILDs could be lateralized and discriminated at all mismatches, whereas ITDs could be lateralized and discriminated only for smaller mismatches (< 6 mm). For discrimination, thresholds increased systematically with increasing interaural frequency mismatch. This result is consistent with our findings in BiCI users (Kan et al., 2011a), in whom interaural mismatch is likely to occur. The advantage of simulating basic psychophysical stimuli in NH listeners is that performance is more consistent across individuals. Therefore, studying NH listeners can help us understand binaural mechanisms without the potential confounds introduced by the highly variable findings seen in BiCI users.
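Interaural mismatch expressed in millimeters can be converted to carrier frequencies with a cochlear place-frequency map. The sketch below uses the Greenwood (1990) function for the human cochlea, F = 165.4 (10^(0.06x) − 0.88) with x in mm from the apex. Whether Kan et al. used this exact map, and the 4-kHz reference frequency in the usage line, are assumptions made for illustration.

```python
import math

# Greenwood (1990) place-frequency map for the human cochlea, F = A * (10**(a*x) - k),
# with x in mm from the apex; a = 0.06 per mm assumes a 35-mm cochlear length.
GREENWOOD_A = 165.4
GREENWOOD_a = 0.06
GREENWOOD_k = 0.88


def place_to_freq_hz(x_mm):
    return GREENWOOD_A * (10.0 ** (GREENWOOD_a * x_mm) - GREENWOOD_k)


def freq_to_place_mm(f_hz):
    return math.log10(f_hz / GREENWOOD_A + GREENWOOD_k) / GREENWOOD_a


def mismatched_pair_hz(ref_freq_hz, mismatch_mm):
    """Carrier frequencies for two ears whose cochlear places differ by mismatch_mm.

    A positive mismatch moves the second ear's place toward the base (higher frequency).
    """
    x_ref = freq_to_place_mm(ref_freq_hz)
    return ref_freq_hz, place_to_freq_hz(x_ref + mismatch_mm)


# Illustrative: a 4-kHz reference with a 6-mm interaural mismatch
left_hz, right_hz = mismatched_pair_hz(4000.0, 6.0)
```

Working in millimeters rather than hertz keeps the mismatch comparable across cochlear regions, because equal distances along the cochlea correspond to roughly equal frequency ratios above a few hundred hertz.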

3. Studies in Children

3.1 Free Field Studies

In recent years, growing numbers of children have received BiCIs in an effort to improve their ability to segregate speech from background noise and to localize sounds. BiCIs in children became more common clinically based on evidence from studies with adult patients demonstrating bilateral benefit, such as those discussed above (see also van Hoesel & Tyler, 2003; van Hoesel, 2004; Litovsky et al., 2004; 2009). Children and adults differ, however, particularly in the experience they undergo while transitioning to CI use. Many adults with CIs lost their hearing post-lingually (suddenly or progressively) after having experienced sound through acoustic hearing. In these adults, bilateral stimulation takes advantage of auditory mechanisms that had been accustomed to sound and are being re-activated with electric hearing. Most importantly, early activation of binaural circuits with acoustic hearing may mean that the ability to localize sound, and the intrinsic knowledge and skill set associated with localization, were established before the onset of deafness. The majority of children who use CIs, however, are diagnosed with severe-to-profound hearing loss at birth or during the first few years of life. The children we have studied have, for the most part, received little or no reliable acoustic input before using CIs; thus, their developing auditory circuitry is unlikely to have established or maintained the mechanisms necessary for spatial hearing. In addition, these children are less likely to have established automatic and rapid sound localization abilities similar to those of children with normal hearing.

The experience in the BHSL during the past decade has led the investigators to believe that the binaural abilities (e.g., to segregate speech from background noise and to benefit from the availability of spatial cues) of the pediatric BiCI population are compromised relative to those observed in NH children. Our data to date (Litovsky et al., 2006b; Misurelli et al., 2011) suggest that the primary spatial cue used by children with BiCIs for speech-masker unmasking is the head shadow (monaural) cue. Thus, their ability to use spatial cues exists, but is dominated by their ability to use a single ear rather than by the ability to integrate inputs from the two ears. It is possible that binaural integration is acquired with listening experience. As discussed below, children perform better when using BiCIs than when listening unilaterally. Similarly, localizing sounds is a skill that needs to be learned over time. This is consistent with anecdotal reports from some children who, upon bilateral activation, said that they did not understand the concept of ‘where sounds are coming from’, and it is supported by behavioral research in animal models such as the ferret (e.g., King et al., 2007).

3.1.1. Speech intelligibility in noise in children with bilateral cochlear implants (BiCIs)

While many studies in the literature test children while they are wearing their BiCIs vs. when only one of the devices is activated, the test with a single CI may be an “unusual” condition for children whose listening strategies reflect everyday use of two implants. For this reason, we were interested in studying children as they transition from using a single CI to becoming BiCI users. Here we present unpublished data from a prospective longitudinal study of speech intelligibility in 10 children (ages 5 to 10 years) who were recruited while they were unilateral CI users and were thus tested in their pre-bilateral “baseline” listening condition. Some of the children wore a hearing aid (HA) in the non-implanted ear, and others did not. After receiving the second CI, each child returned to the lab for follow-up testing at 3 months and 12 months after activation of the second CI. The children were recruited from several geographic locations throughout the United States; thus, device manufacturer and CI center varied among participants. Demographic information regarding individuals and details regarding the types of CI devices used can be found in Table 1. For a detailed discussion of participant characteristics, please refer to Godar & Litovsky (2010), who reported spatial hearing measures from these same children. Speech intelligibility was tested using a child-friendly, 4-alternative forced-choice method (Litovsky, 2005; Johnstone and Litovsky, 2006; Garadat and Litovsky, 2007; Litovsky et al., 2006b). Speech reception thresholds (SRTs) were measured in Quiet and in the presence of interfering background speech. Note that in this approach a lower SRT indicates better performance, because the level at which the target words can be correctly identified is lower. Targets consisted of a closed set of 25 energy-normalized spondees spoken by a male voice.
The background speech consisted of sentences from the Harvard IEEE corpus, spoken by a female voice and overlaid to create 2-talker maskers. Target words were always presented from the loudspeaker in front (0°), while the background speech was presented either from the front (0°) or from a loudspeaker at 90° on the side of either the first CI or the second CI, the side depending on which ear was implanted first. Prior to testing, children were familiarized with each of the 25 target words and an associated picture. To measure speech perception rather than vocabulary knowledge, only targets that the child could associate with the matching picture were included in the test. Exact procedures and methods for estimating SRTs using bootstrapping techniques are described in detail in the aforementioned studies from our lab.

Table 1. Participant demographics and CI devices.

| Group | Subject | Gender | Etiology | Age at 1st CI activation (yr;mo) | Age at 2nd CI activation (yr;mo) | Months between 1st & 2nd CI | Contralateral HA at baseline | 1st CI | 2nd CI |
|---|---|---|---|---|---|---|---|---|---|
| CI-HA at baseline | CIAP | F | Progressive, cause unknown | 3;6 | 5;2 | 20 | Oticon DigiFocusSP; Left ear | Nucleus 24C Advance; Right ear | Nucleus 24C Advance; Left ear |
| CI-HA at baseline | CIAQ | M | Connexin-26 | 3;1 | 8;1 | 60 | Widex Senso P38; Left ear | Nucleus 24C; Right ear | Nucleus 24C Advance; Left ear |
| CI-HA at baseline | CIBA | M | Connexin-26 | 3;7 | 10;2 | 79 | Phonak Supero 412; Right ear | Nucleus 24; Left ear | Nucleus Freedom; Right ear |
| CI-HA at baseline | CIBH | M | Mondini Malformation | 2;5 | 7;0 | 55 | Phonak P4AZ; Right ear | Med-El Combi; Left ear | Med-El Pulsar; Right ear |
| CI-HA at baseline | CIBK | M | Connexin-26 | 2;1 | 7;1 | 60 | Oticon DigiFocus II; Left ear | Nucleus 24C; Right ear | Nucleus Freedom; Left ear |
| CI-HA at baseline | CIBM | M | Progressive, viral cause suspected | 3;9 | 8;0 | 49 | Sonic Innovations Digital BTE; Right ear | Nucleus 24; Left ear | Nucleus Freedom; Right ear |
| UniCI at baseline | CIAW | M | Prenatal CMV exposure | 1;2 | 5;5 | 51 | NA | Nucleus 24C; Right ear | Nucleus Freedom; Left ear |
| UniCI at baseline | CIAY | M | Progressive, bilateral ear infection | 5;2 | 5;11 | 9 | NA | Nucleus 24C Advance; Right ear | Nucleus 24C Advance; Left ear |
| UniCI at baseline | CIBG | M | Unknown | 1;2 | 5;5 | 39 | NA | Nucleus 24; Right ear | Nucleus Freedom; Left ear |
| UniCI at baseline | CIBJ | F | Progressive, cause unknown | 3;9 | 8;0 | 49 | NA | Advanced Bionics CII/HiFocus; Left ear | Advanced Bionics HiRes/90K; Right ear |

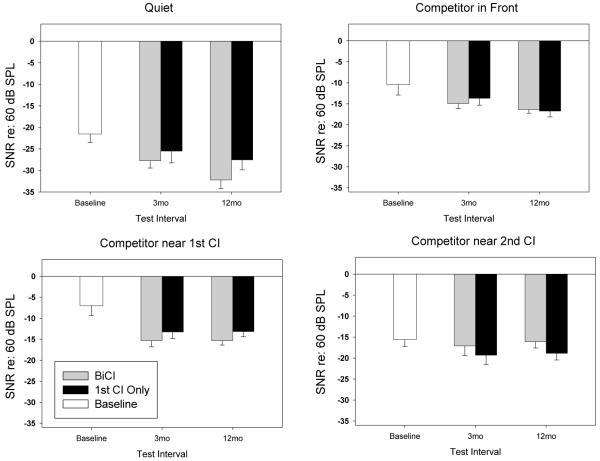

Figure 5 shows mean SRT values normalized relative to the level of the front competitor (60 dB SPL). Each panel compares SRTs for one testing condition (Quiet, Front, Near First CI or Near Second CI) at the Baseline test visit and at the 3- and 12-month intervals, when children listened either bilaterally or with only their first CI. SRTs shifted to more negative SNR values with additional listening experience, especially in the Quiet condition and in the condition with competitors near the first CI. A one-way repeated-measures analysis of variance (ANOVA) was conducted for each listening condition, with significance set at p < 0.05. Significant improvement in SRTs within 3 months of bilateral activation was seen in the Quiet condition and in the condition with background speech near the first CI. At the 12-month visit, there was also a significant improvement in SRT in the bilateral listening mode with competitors in Front (mean reduction in SRT of ~6 dB). An even greater reduction in SRT at the 12-month visit was measured in this listening mode in the Quiet condition (improvement of ~10.66 dB). However, in both the bilateral and unilateral listening modes, the smallest reduction in SRT at the 12-month visit was measured when the speech competitors were located near the second CI. This condition resulted in minimal and non-significant change in SRT over time.
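The per-condition analysis can be illustrated with a one-way repeated-measures ANOVA written out from its sums of squares. This is a minimal sketch, not the statistics package used for the study: each row holds one child's SRTs at every visit (e.g., Baseline, 3-month, 12-month), no sphericity correction (such as Greenhouse-Geisser) is applied, and the p-value is left out; it would come from the F distribution with (df_effect, df_error) degrees of freedom, for example via `scipy.stats.f.sf`. The example scores are made up.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: list of per-subject lists, each holding that subject's score at every
    level of the within-subject factor (e.g., the three test visits).
    Returns (F, df_effect, df_error).
    """
    n = len(data)        # subjects
    k = len(data[0])     # levels of the within-subject factor
    grand = sum(sum(row) for row in data) / (n * k)
    level_means = [sum(row[j] for row in data) / n for j in range(k)]
    subject_means = [sum(row) / k for row in data]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_effect = n * sum((m - grand) ** 2 for m in level_means)
    ss_subjects = k * sum((m - grand) ** 2 for m in subject_means)
    ss_error = ss_total - ss_effect - ss_subjects   # subject-by-level residual
    df_effect, df_error = k - 1, (k - 1) * (n - 1)
    F = (ss_effect / df_effect) / (ss_error / df_error)
    return F, df_effect, df_error


# Made-up scores (3 subjects x 3 visits), only to exercise the function
F, df_effect, df_error = rm_anova_oneway([[1, 2, 4], [2, 3, 3], [3, 4, 5]])
```

Removing the between-subject sum of squares from the error term is what distinguishes this from an ordinary one-way ANOVA, and it is why repeated testing of the same children gives more statistical power despite large individual differences.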

Figure 5. Results from 10 children whose SRTs were tested at three intervals. Group means and standard errors are shown relative to the 60-dB SPL competitor level for the Baseline (CI+HA or UniCI), BiCI and 1st CI Only listening modes at the 3-month and 12-month test intervals.

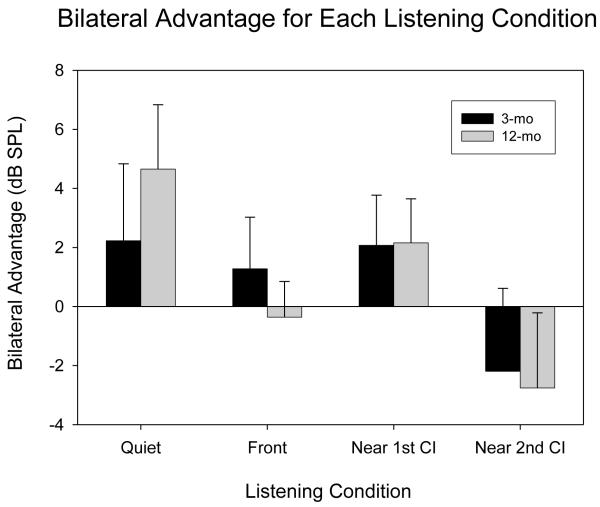

In addition to investigating change in SRT over time, a second question of interest in this study was whether the use of BiCIs results in improved speech understanding compared with the unilateral listening mode. One way to approach this question is to calculate a Bilateral Advantage by subtracting the SRTs obtained in the BiCI listening mode from those obtained in the unilateral listening mode. Thus, positive values denote better performance in the BiCI condition, while negative values indicate a disadvantage in the BiCI listening mode. Bilateral Advantage values for the 3- and 12-month visits are displayed in Figure 6. Because of large variability among the children, the results were not statistically significant; nonetheless, two noticeable trends emerged. First, a within-subject t-test showed that, in the Quiet condition, the increase in Bilateral Advantage over time approached significance at the 12-month visit [t(9)=2.134, p=0.062]. This suggests that the children in this study continued to make gains in speech understanding in quiet throughout the first year of experience with BiCIs. Second, there was a noticeable difference between the Bilateral Advantage scores for the two conditions with a lateral competitor (near first CI and near second CI). While a bilateral benefit of about 2 dB was measured at both the 3- and 12-month visits when the competitor was located on the same side as the first CI, the opposite was true when the competitor was located on the same side as the second CI. In the condition with competitors near the second CI, a mean disadvantage of 2.19 dB at 3 months and 2.76 dB at 12 months was measured. For the competitor location on the side of the second CI, the benefit of head shadow was greatest in the unilateral listening mode, when there was no amplification on the contralateral side.
The additional amplification provided by the second CI in the BiCI listening mode appears to have interfered with the “head shadow” listening that the child had previously been able to take advantage of. Conversely, for the condition with competitors near the first CI, the addition of the second CI offered a new head shadow benefit: with the competitor on the side of the first CI, the second CI appears to have allowed “head shadow” listening with the ear farther from the competitor. In summary, we found that, in a group of children who transitioned from using a single CI to using BiCIs, speech understanding improved over a 12-month period, as did the bilateral advantage that they demonstrated. Because these improvements were observed under specific listening conditions, we recommend that those conditions be considered when clinical evaluations are made regarding BiCIs in similar populations of patients.
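The Bilateral Advantage and the within-subject comparison across visits can be written out in a few lines. This is a minimal sketch: the helper names and the three-child SRTs are made up for illustration, and only the t statistic and its degrees of freedom are computed. A two-tailed p-value would come from the t distribution with n − 1 degrees of freedom (for example, `scipy.stats.ttest_rel` returns both the statistic and the p-value).

```python
import math


def bilateral_advantage(srt_unilateral_db, srt_bilateral_db):
    """Per-child Bilateral Advantage (dB): unilateral SRT minus BiCI SRT; positive favors BiCIs."""
    return [u - b for u, b in zip(srt_unilateral_db, srt_bilateral_db)]


def paired_t(x, y):
    """Within-subject (paired) t statistic for mean(x - y) against 0; returns (t, df)."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)   # sample variance
    return mean / math.sqrt(var / n), n - 1


# Made-up SRTs (dB) for three children, only to exercise the functions
adv_3mo = bilateral_advantage([0.0, -2.0, 1.0], [-2.0, -3.0, 1.0])
adv_12mo = bilateral_advantage([0.0, -2.0, 1.0], [-3.0, -5.0, -2.0])
t_stat, df = paired_t(adv_12mo, adv_3mo)
```

With 10 children, as in the study, the same calculation yields 9 degrees of freedom, matching the t(9) reported above.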

Figure 6. Bilateral Advantage group averages for the 10 children who were tested at three intervals. SRTs obtained in the BiCI listening mode were subtracted from those obtained in the UniCI listening mode, so positive values denote better performance in the BiCI condition and negative values denote a disadvantage in the BiCI listening mode. Values are compared for the 3- and 12-month visits.

3.1.2. Spatial hearing in children with BiCIs

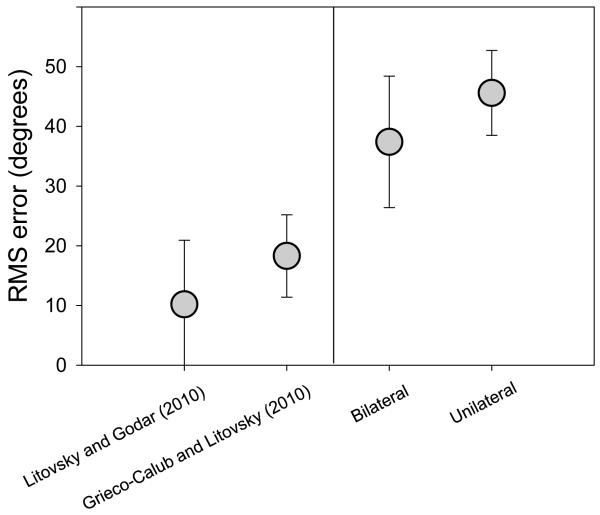

Our lab has also focused on measuring the emergence of spatial hearing skills in young BiCI users. To achieve this goal, we have developed a number of behavioral approaches that are age-appropriate and task-specific. The goal has been to measure children’s ability either to localize source positions (accuracy) or to discriminate between source positions (acuity). Accuracy may reflect the extent to which a child has been able to develop a spatial-hearing “map.” Localization tasks, in which children have to point to where a sound is perceived, may also be somewhat difficult and involve cognitive input or executive function, compared with discrimination tasks. Data from children with normal hearing are sparse, although several studies on the topic have been conducted recently, focusing on children between the ages of 4 and 10 years. Figure 7 (left) summarizes data from two published studies. Grieco-Calub & Litovsky (2010) tested seven 5-year-old children with NH and found RMS errors ranging from 9° to 29° (mean 18.3° ± 6.9° SD). Litovsky & Godar (2010) tested nine 4- to 5-year-old children with NH and reported RMS errors ranging from 1.4° to 38° (mean 10.2° ± 10.72° SD). These values overlap with those obtained in adults but tend to be higher, suggesting that some children reach adult-like sound localization by age 4-5 years, whereas others undergo a more protracted period of maturation (see also Litovsky, 2011 for review).

Figure 7. Summary of published studies in which sound localization was measured in children and errors were quantified as root-mean-square (RMS) error. Left: data from two studies in which children with normal hearing were tested (Grieco-Calub & Litovsky, 2010; Litovsky & Godar, 2010). Right: data from Grieco-Calub & Litovsky (2010) for children with BiCIs, tested either when using both CIs or when using a single CI (see also Litovsky, 2011 for review).

With the data from normal-hearing children as context, we can assess data obtained from BiCI users. In addition to data from children with normal hearing, Grieco-Calub and Litovsky (2010) reported RMS errors for 21 children ages 5-14 years who used BiCIs. The two bars on the right of Figure 7 show average results when the children were tested in either the bilateral or the unilateral listening mode. RMS errors in 11 of the 21 children were smaller when both CIs were activated than in the unilateral listening mode, suggesting a bilateral benefit. In the bilateral listening mode, RMS errors ranged from 19° to 56°. Compared with the NH children, BiCI users rarely identified the correct source location, and their data suggest an internal “map” of space that is perhaps more blurred and less acutely developed. Finally, it is important to recognize that RMS error is only one metric for evaluating performance on sound localization tasks, and it may not fully represent the spatial hearing abilities and listening strategies of these children.
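RMS error and the proportion of correct identifications capture different aspects of a localization run, which is one reason RMS error alone may not represent a child's abilities. The sketch below computes both from lists of target and response angles. The helper names and the three-trial example are illustrative, and it assumes responses are recorded as the angle of the chosen loudspeaker.

```python
import math


def rms_error_deg(targets_deg, responses_deg):
    """Root-mean-square localization error across trials, in degrees."""
    if not targets_deg or len(targets_deg) != len(responses_deg):
        raise ValueError("need equal-length, non-empty target and response lists")
    squared = [(r - t) ** 2 for t, r in zip(targets_deg, responses_deg)]
    return math.sqrt(sum(squared) / len(squared))


def proportion_correct(targets_deg, responses_deg):
    """Fraction of trials on which the chosen loudspeaker was the target loudspeaker."""
    hits = sum(1 for t, r in zip(targets_deg, responses_deg) if t == r)
    return hits / len(targets_deg)


# Made-up three-trial example: one response is 20 degrees off target
targets = [0, 20, -20]
responses = [0, 40, -20]
rms = rms_error_deg(targets, responses)
pc = proportion_correct(targets, responses)
```

Because errors are squared before averaging, a single large miss inflates the RMS error considerably even when most responses are exactly correct, as in this example.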

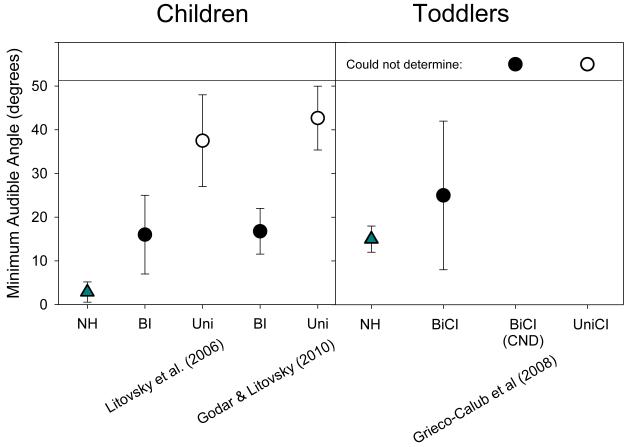

Another means of quantifying spatial hearing is to measure acuity, that is, the listener’s ability to discriminate between two source locations. Threshold is often defined as the minimum audible angle (MAA), the smallest angle between two source locations that can be reliably discriminated. MAA thresholds undergo significant maturation during infancy and childhood (Litovsky, 1997; 2011; Litovsky and Madell, 2009), mostly during the time window in which BiCI recipients might typically experience deafness and/or periods of unilateral hearing. In this task, children are trained to respond in a way that indicates whether they perceive a sound as coming from the right or the left. In some of our studies we investigated acuity in older children who were typically 5-10 years old at testing and had received their second CI at age 4 years or older (Litovsky et al., 2006; Godar and Litovsky, 2010); in other studies we investigated toddlers who had received their second CI by age 12 months (Grieco-Calub et al., 2008; Grieco-Calub and Litovsky, 2012). Results from a number of studies to date are summarized in Figure 8. In the older children (left panel of Figure 8), performance was significantly better (lower MAAs) when children used both CIs than in the unilateral listening mode; however, MAAs were higher than in NH children. Among the toddlers (right panel of Figure 8), some children were able to perform the task while others were not, with no obvious factor differentiating between them. Future work is needed to determine the source of this variability. One potential source is the task itself. We used a head-orienting behavior, which works well with infants but may be too uninteresting for toddlers. In our new work we are using a ‘reaching for sound’ task that we believe to be more ecologically valid.
Because children are motivated to participate on each trial and must use their spatial hearing skills to succeed on the task, we may be able to measure a bilateral benefit that is more consistent and reliable even at the early age of 2-3 years (Litovsky et al., 2011).
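One common way to turn discrimination data into an MAA is to interpolate percent correct across the tested angles and report the angle at which a criterion is first reached. The sketch below does this with linear interpolation. The 70.7% criterion (the point a 2-down/1-up adaptive staircase converges on) and the example data are assumptions for illustration; the studies cited above may have used different procedures and criteria.

```python
def maa_threshold(angles_deg, pct_correct, criterion=70.7):
    """Smallest angle at which linearly interpolated percent correct reaches the criterion.

    angles_deg must be sorted in ascending order. Returns None if the criterion is
    never reached. The 70.7% default is an assumed criterion, not one from the text.
    """
    pairs = list(zip(angles_deg, pct_correct))
    if pairs[0][1] >= criterion:
        return float(pairs[0][0])
    for (a0, p0), (a1, p1) in zip(pairs, pairs[1:]):
        if p0 < criterion <= p1:
            return a0 + (criterion - p0) * (a1 - a0) / (p1 - p0)
    return None


# Made-up percent-correct data at four separation angles
maa = maa_threshold([5, 10, 20, 40], [50, 60, 80, 95])
```

Returning `None` when the criterion is never reached mirrors the toddlers who could not perform the task: for them, no threshold can be defined within the tested range.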

Figure 8. MAA thresholds from several studies, with children ranging in age from 3.5-16 years (Litovsky et al., 2006) or 5-10 years (Godar and Litovsky, 2010), and a group of 2-year-old toddlers (Grieco-Calub et al., 2008). In the two groups of children who use BiCIs (left panel), performance was significantly better (lower MAAs) when tested in the bilateral listening mode (filled circles) than in the unilateral listening mode (open circles). For these same children, MAA thresholds were nonetheless higher than those measured in children with normal hearing (triangles).

3.2 Children: Binaural Sensitivity

The approach that we use to measure binaural sensitivity gives us tight control over interaural cues and allows us to selectively stimulate basal, middle or apical electrodes in the two ears. Because this method requires pitch matching, loudness balancing and fusion of the sounds in the two ears, these studies are challenging to conduct in adults, and even more so in children. Recently we have begun to test binaural sensitivity in a small group of children who were bilaterally implanted between the ages of 4 and 10 years. Our early observations suggest that psychophysical measurements of pitch, loudness and fusion can be obtained in children as in adults. One important challenge is the difficulty of the task and the high level of concentration required to extract information from what are often subtle auditory cues. Another is these children’s lack of experience with perceiving clear, describable auditory “images,” explaining them, or consistently rating them on an arbitrary scale (as for pitch and loudness). For example, when it comes to binaural hearing per se, nearly all of the children have never experienced a true binaural stimulus, and their anecdotal reports lead us to believe that they do not generally perceive sounds as centered in their head when listening through their clinical speech processors. After meticulously ensuring that stimuli produce auditory images that are fused, centered, and loudness- and pitch-balanced across the ears, we have recorded percepts of auditory images that are lateralized after the introduction of ITD cues. Preliminary data from 7 children to date (Litovsky, 2011) suggest that lateralization is achieved more readily with ILD cues than with ITDs. Because these data are preliminary, the effect needs to be studied in greater detail.

3.3. Acoustic simulations of CI listening in children

As discussed above in relation to studies with adult listeners, vocoders have historically been used to simulate the signals presented to CI users (e.g., Shannon et al., 1995). The advantage of this approach is that we can study basic psychophysical phenomena in an auditory system that has not been compromised by neural degeneration or by the various etiologies responsible for deafness. As a result, data from NH listeners are generally more consistent across individuals. Very little work has been done with vocoders in children; hence, our lab has recently begun to implement this approach in our studies. The first such study in the field (Eisenberg et al., 2000) asked how children develop the ability to understand speech from envelope cues alone. The authors compared speech understanding in NH 5- to 7-year-olds, 10- to 12-year-olds, and adults using a noise vocoder. Using speech stimuli designed for children, they found no differences between the adults and the older children, but the younger children were significantly worse at understanding the vocoded speech: they needed a greater number of spectral channels to reach a level of speech understanding comparable to that of the older children and adults. Since it has been demonstrated that envelope cues alone are sufficient for understanding speech, it is important to determine the rate at which this skill develops. One possible problem with the Eisenberg et al. (2000) study is that adults adapt to vocoded speech over both fast and slow time courses (Rosen et al., 1999; Davis et al., 2005), and it is unclear whether children adapt at the same rate. If children adapt to vocoded speech at a different rate than adults, then the comparison across ages could be confounded. In a recent study in our lab (Draves et al., 2011), twenty 8- to 10-year-old children and adults were given feedback after trying to understand words and sentences processed with an 8-channel noise vocoder.
We found that children and adults showed the same rate of improvement across listening conditions, providing evidence that acute comparisons across groups are valid, both for this study and for Eisenberg et al. (2000). In these studies it is also important to ask whether developmental factors contribute differently to speech intelligibility and to speech discrimination, since the former may be more susceptible to vocoding. Bertoncini et al. (2011) showed that six-month-olds could discriminate 16-channel sine-vocoded vowel-consonant-vowel (VCV) stimuli, and Bertoncini et al. (2009) found no difference in performance among 5-, 6-, and 7-year-olds.
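To make the vocoder manipulation concrete, the following is a minimal sketch of a channel (noise) vocoder in the spirit of Shannon et al. (1995): the signal is split into contiguous frequency bands, the temporal envelope of each band is extracted, and that envelope modulates band-limited noise, so spectral detail is limited to the number of channels and temporal fine structure is discarded. The band edges (log-spaced from 200 to 7000 Hz), the second-order filters, the 160-Hz envelope cutoff, and all function names are illustrative assumptions, not the parameters used by Eisenberg et al. (2000) or Draves et al. (2011).

```python
import math
import random


def channel_edges(n_channels, f_lo=200.0, f_hi=7000.0):
    """Log-spaced band edges in Hz (an illustrative choice; published vocoders vary)."""
    ratio = (f_hi / f_lo) ** (1.0 / n_channels)
    return [f_lo * ratio ** i for i in range(n_channels + 1)]


def bandpass(x, f_lo, f_hi, fs):
    """Second-order band-pass biquad (RBJ 'constant 0 dB peak gain' design)."""
    f0 = math.sqrt(f_lo * f_hi)             # geometric centre of the band
    q = f0 / (f_hi - f_lo)
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b0, b2 = alpha / a0, -alpha / a0        # b1 is zero for this design
    a1, a2 = -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0
    y, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for xn in x:
        yn = b0 * xn + b2 * x2 - a1 * y1 - a2 * y2
        y.append(yn)
        x2, x1 = x1, xn                     # shift input history
        y2, y1 = y1, yn                     # shift output history
    return y


def envelope(x, fs, cutoff=160.0):
    """Full-wave rectification followed by a one-pole low-pass smoother."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff / fs)
    env, state = [], 0.0
    for xn in x:
        state += a * (abs(xn) - state)
        env.append(state)
    return env


def noise_vocode(x, fs, n_channels=8, f_lo=200.0, f_hi=7000.0, seed=0):
    """Keep each band's envelope; replace its fine structure with band-limited noise."""
    if not f_hi < fs / 2:
        raise ValueError("upper band edge must lie below the Nyquist frequency")
    if not x:
        return []
    rng = random.Random(seed)
    edges = channel_edges(n_channels, f_lo, f_hi)
    out = [0.0] * len(x)
    for lo, hi in zip(edges[:-1], edges[1:]):
        env = envelope(bandpass(x, lo, hi, fs), fs)
        carrier = bandpass([rng.gauss(0.0, 1.0) for _ in x], lo, hi, fs)
        for i, (e, c) in enumerate(zip(env, carrier)):
            out[i] += e * c
    # Rescale so the vocoded signal has the same RMS level as the input.
    rms_in = math.sqrt(sum(v * v for v in x) / len(x))
    rms_out = math.sqrt(sum(v * v for v in out) / len(out))
    if rms_out > 0.0:
        out = [v * rms_in / rms_out for v in out]
    return out


fs = 16000
tone = [math.sin(2.0 * math.pi * 1000.0 * n / fs) for n in range(1600)]
vocoded = noise_vocode(tone, fs, n_channels=8)
```

Lowering `n_channels` reduces the spectral detail available to the listener, which is the kind of manipulation used to ask how many channels listeners of different ages need.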

It is also important to know whether the difference between adults and children is due to ongoing maturation of the auditory system or to differences in language development. Nittrouer et al. (2009) tested native English-speaking adults and 7-year-olds with 4- and 8-channel noise-vocoded speech, as well as non-native English-speaking adults. Native-speaking adults performed best, followed by native-speaking children and then by non-native-speaking adults. Because the non-native adults, whose auditory systems are mature, were the worst at understanding vocoded speech, this result suggests that the differences between adults and children are due to language development rather than to maturity of the auditory system. As with adults, comparisons between children with CIs and NH children listening to vocoded speech show that the NH group provides an upper bound for CI listeners’ performance (Dorman et al., 2000). The fact that children can understand speech through vocoders as well as adults, at least under some conditions, suggests that this is a valid tool for asking questions about the limitations of CIs when comparing BiCI users and NH listeners. Our current work is moving in that direction, and we hope to make contributions in that area in the near future.

3.4. Language Development and Speech Production

A relatively new area of research in our lab involves language acquisition (receptive and expressive) and speech production in young CI users. In our first study on language development (Grieco-Calub et al., 2009), we assessed the time course of spoken word recognition in 2-year-old children who use CIs. Both BiCI and unilateral CI users were tested, along with age-matched NH peers, using familiar auditory labels. Children were tested in quiet or in the presence of speech competitors at a signal-to-noise ratio (SNR) of +10 dB. Eye movements to target objects were digitally recorded, and word recognition performance was quantified by measuring each child’s reaction time and accuracy. Children with CIs were less accurate and took longer to visually fixate on target objects than their age-matched NH peers. Both groups of children showed reduced performance in the presence of the speech competitors. The performance of NH children listening in noise was remarkably similar to that of CI users listening in quiet, suggesting that CI users function as if “listening in noise” even when there is no background interference. This finding underscores a primary difference in real-world functioning between these populations. Finally, there was no difference in this study between BiCI and unilateral CI users, suggesting that the benefits of BiCIs may emerge only under other testing conditions, for example when target and masker are presented from different locations (not tested here), or perhaps at other SNRs.
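Accuracy and reaction time in eye-tracking word-recognition tasks are often derived from frame-by-frame gaze codes, as in looking-while-listening paradigms. The sketch below shows one simplified way to compute them; the 300-1800 ms analysis window, the gaze labels, the trial format, and all function names are hypothetical assumptions for illustration, not details taken from Grieco-Calub et al. (2009). Accuracy is the proportion of target-or-distractor looking that falls on the target within the window; reaction time is computed only on trials where the child was fixating the distractor at word onset, as the latency of the first shift that lands on the target.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional, Tuple

# Hypothetical analysis window (ms after target-word onset).
WINDOW_MS = (300, 1800)


@dataclass
class Sample:
    t_ms: int   # time relative to target-word onset
    gaze: str   # "target", "distractor", or "away"


def trial_accuracy(samples: List[Sample]) -> Optional[float]:
    """Proportion of target + distractor looking in the window that is on the target."""
    lo, hi = WINDOW_MS
    in_win = [s.gaze for s in samples if lo <= s.t_ms <= hi]
    on_target, on_distractor = in_win.count("target"), in_win.count("distractor")
    total = on_target + on_distractor
    return on_target / total if total else None


def trial_rt(samples: List[Sample]) -> Optional[int]:
    """Latency of the first shift off the distractor, if it lands on the target in the window."""
    after_onset = sorted((s for s in samples if s.t_ms >= 0), key=lambda s: s.t_ms)
    if not after_onset or after_onset[0].gaze != "distractor":
        return None  # only distractor-initial trials yield a reaction time
    lo, hi = WINDOW_MS
    for s in after_onset:
        if s.gaze != "distractor":
            return s.t_ms if s.gaze == "target" and lo <= s.t_ms <= hi else None
    return None  # never shifted away from the distractor


def summarize(trials: List[List[Sample]]) -> Tuple[Optional[float], Optional[float]]:
    """Mean accuracy and mean reaction time across one child's trials."""
    accs = [a for a in map(trial_accuracy, trials) if a is not None]
    rts = [r for r in map(trial_rt, trials) if r is not None]
    return (mean(accs) if accs else None, mean(rts) if rts else None)
```

Trials that contribute no reaction time (for example, target-initial trials) return `None` and are simply left out of the mean.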

Our second series of studies on language acquisition is longitudinal. Until recently, age at implantation and length of experience with the CI were thought to play an important role in predicting a child’s linguistic development, especially when unilateral implantation was the “standard of care.” The growing number of children with BiCIs introduces new variables, such as length of bilateral listening experience and time between activation of the two CIs. Our lab has been testing children with BiCIs (n=45; ages 4-9) and following them longitudinally on two standardized measures, the Test of Language Development (TOLD) and the Leiter International Performance Scale-Revised (Leiter-R), to evaluate their expressive/receptive language and IQ/memory, respectively (Hess et al., 2012a). Preliminary results show that, although inter-subject variability is large, speech and language development improves as a function of both hearing age (amount of listening experience with the first CI at the time of testing) and bilateral experience (amount of listening experience with BiCIs at the time of testing). Effects of age at implantation were observed for speech and language scores, as well as for IQ scores. We suspect that more detailed, non-standardized testing of speech perception, language comprehension, and language production will reveal additional differences over time relative to outcomes with a single implant. While continuing the longitudinal measures, we are applying novel testing paradigms, such as the reaching-for-sound methods used in our spatial hearing tasks (see section 3.1.2), to investigate speech perception in very young, early-implanted children (Hess et al., 2012b).
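For a child implanted sequentially, the experience variables mentioned above are linked: hearing age equals the inter-implant delay plus bilateral experience, so the two predictors cannot vary fully independently. The short sketch below makes the definitions explicit; it assumes each interval is measured from device activation dates and uses an average year of 365.25 days, and the function names and example dates are hypothetical.

```python
from datetime import date


def years_between(start: date, end: date) -> float:
    """Elapsed time in years, using an average year length of 365.25 days."""
    return (end - start).days / 365.25


def experience_measures(test: date, first_ci: date, second_ci: date) -> dict:
    """Hearing age, bilateral experience, and inter-implant delay at one test session."""
    if not first_ci <= second_ci <= test:
        raise ValueError("expected first CI <= second CI <= test date")
    return {
        "hearing_age_yr": years_between(first_ci, test),             # experience with first CI
        "bilateral_experience_yr": years_between(second_ci, test),   # experience with both CIs
        "inter_implant_delay_yr": years_between(first_ci, second_ci),
    }


m = experience_measures(date(2012, 1, 1), date(2008, 1, 1), date(2010, 1, 1))
```

Here the child has four years of hearing age, of which roughly two were bilateral.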

Finally, speech produced by children with CIs is generally less intelligible and less accurate than that of NH children. Our work on speech production has focused on the extent to which children with CIs are able to produce speech sounds distinctly. Previous studies have reported that children with CIs produce less acoustic contrast between phonemes than NH children, but those studies included both correct and incorrect productions. In Todd et al. (2011), we compared the extent of contrast between correct productions of /s/ and /ʃ/ by children with CIs to that of (1) NH children matched on chronological age and (2) NH children matched on duration of auditory experience. Spectral peaks and means were calculated from the frication noise of productions of /s/ and /ʃ/. The children with CIs produced less contrast than both groups of NH children. Thus, although adult listeners judged the CI users’ productions to be ‘correct,’ these productions still differed somewhat from the speech sounds produced by NH children. These differences in speech production between children with CIs and NH children may be due to differences in auditory feedback.
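Spectral peak and spectral mean (the power-weighted centroid of the spectrum) are standard descriptors of fricative noise. Because /s/ generally concentrates its energy at higher frequencies than the postalveolar sibilant, the difference between a speaker's two means is one way to index contrast. The sketch below computes both measures from a single Hann-windowed frame using a direct DFT; the frame length, window, and function names are illustrative assumptions, and the analysis in Todd et al. (2011) may differ in its details, such as how frames are chosen or how spectra are estimated. Pure tones stand in for frication noise only so that the output can be checked.

```python
import cmath
import math


def power_spectrum(frame):
    """One-sided power spectrum of a Hann-windowed frame via a direct DFT."""
    n = len(frame)
    window = [0.5 - 0.5 * math.cos(2.0 * math.pi * i / n) for i in range(n)]
    x = [s * w for s, w in zip(frame, window)]
    return [
        abs(sum(x[i] * cmath.exp(-2j * math.pi * k * i / n) for i in range(n))) ** 2
        for k in range(n // 2 + 1)
    ]


def spectral_peak_and_mean(frame, fs):
    """Return (peak frequency, power-weighted mean frequency) in Hz."""
    power = power_spectrum(frame)
    freqs = [k * fs / len(frame) for k in range(len(power))]
    total = sum(power)
    if total == 0.0:
        raise ValueError("frame contains no energy")
    mean_hz = sum(f * p for f, p in zip(freqs, power)) / total
    peak_hz = freqs[max(range(len(power)), key=power.__getitem__)]
    return peak_hz, mean_hz


fs, n = 16000, 256
high = [math.cos(2.0 * math.pi * 4000.0 * i / fs) for i in range(n)]  # stand-in for an /s/-like frame
low = [math.cos(2.0 * math.pi * 2500.0 * i / fs) for i in range(n)]   # stand-in for a lower-frequency sibilant
contrast_hz = spectral_peak_and_mean(high, fs)[1] - spectral_peak_and_mean(low, fs)[1]
```

Applied to real sibilant tokens, a smaller difference between the two spectral means would indicate less contrast between the two sounds.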

4. Summary

This paper was intended as an overview of recent and ongoing work in our lab. We are using a variety of methodological approaches to understand binaural hearing in NH listeners and BiCI users. We are interested in (i) the extent to which the performance of adults who receive BiCIs can be maximized, and the effect of age at onset of deafness on their performance; (ii) the development of binaural and spatial hearing in children, and the factors that limit their performance; (iii) the implementation of stimulation approaches that preserve binaural cues and deliver them to BiCI users, thereby improving spatial hearing in this population; and (iv) the ways in which our research can contribute to clinical evaluation, diagnosis, and post-implant rehabilitation.

Acknowledgements

The work described in these pages was supported by the following NIH-NIDCD grants: 5R01-DC003083 (PI: Litovsky); 5R01-DC008365 (PI: Litovsky); 4K99/R00-DC010206 (PI: Goupell); 1F32-DC008452 (PI: Grieco-Calub); 2P30-HD003352 (PI: Seltzer). Cochlear Americas, Advanced Bionics, and MED-EL have provided support for travel for some of the toddlers in our studies. In addition, Cochlear Americas has lent equipment and software and provided technical advice on the projects using direct electrical stimulation.

REFERENCES

- Agrawal S. Spatial hearing abilities in adults with bilateral cochlear implants [unpublished doctoral dissertation]. Madison, WI: University of Wisconsin-Madison; 2008.

- Agrawal S, Litovsky R, van Hoesel R. Effect of background noise and uncertainty of the auditory environment on localization in adults with cochlear implants. Presented at the 2008 MidWinter Meeting of the Association for Research in Otolaryngology; 2008.

- Bernstein LR. Auditory processing of interaural timing information: new insights. J Neurosci Res. 2001;66(6):1035–1046. doi: 10.1002/jnr.10103.

- Bernstein LR, Trahiotis C. Detection of interaural delay in high-frequency sinusoidally amplitude-modulated tones, two-tone complexes, and bands of noise. J Acoust Soc Am. 1994;95:3561–3567. doi: 10.1121/1.409973.

- Bertoncini J, Nazzi T, Cabrera L, Lorenzi C. Six-month-old infants discriminate voicing on the basis of temporal envelope cues (L). J Acoust Soc Am. 2011;129:2761–2764. doi: 10.1121/1.3571424.

- Bertoncini J, Serniclaes W, Lorenzi C. Discrimination of speech sounds based upon temporal envelope versus fine structure cues in 5- to 7-year-old children. J Speech Lang Hear Res. 2009;52:682–695. doi: 10.1044/1092-4388(2008/07-0273).

- Blauert J. Spatial Hearing: The Psychophysics of Human Sound Localization. Revised ed. Cambridge, MA: The MIT Press; 1997.

- Bronkhorst AW. The cocktail party phenomenon: a review of research on speech intelligibility in multiple-talker conditions. Acustica. 2000;86(1):117–128.

- Coco A, Epp SB, Fallon JB, Xu J, Millard RE, Shepherd RK. Does cochlear implantation and electrical stimulation affect residual hair cells and spiral ganglion neurons? Hear Res. 2007;225(1-2):60–70. doi: 10.1016/j.heares.2006.12.004.

- Dahmen JC, King AJ. Learning to hear: plasticity of auditory cortical processing. Curr Opin Neurobiol. 2007;17(4):456–464. doi: 10.1016/j.conb.2007.07.004.

- Davis MH, Johnsrude IS, Hervais-Adelman A, Taylor K, McGettigan C. Lexical information drives perceptual learning of distorted speech: evidence from the comprehension of noise-vocoded sentences. J Exp Psychol Gen. 2005;134:222–241. doi: 10.1037/0096-3445.134.2.222.

- Dorman MF, Loizou PC, Kemp LL, Kirk KI. Word recognition by children listening to speech processed into a small number of channels: data from normal-hearing children and children with cochlear implants. Ear Hear. 2000;21:590–596. doi: 10.1097/00003446-200012000-00006.

- Draves G, Goupell MJ, Litovsky RY. Vocoded speech understanding in children and adults. Presented at the Wisconsin Speech-Language Pathology and Audiology Annual Convention; Appleton, WI; February 2011.

- Durlach N, Colburn S. Binaural phenomena. In: Carterette E, Friedman M, editors. Handbook of Perception. Vol. IV. New York: Academic; 1978. pp. 365–466.

- Eisenberg LS, Shannon RV, Martinez AS, Wygonski J, Boothroyd A. Speech recognition with reduced spectral cues as a function of age. J Acoust Soc Am. 2000;107:2704–2710. doi: 10.1121/1.428656.

- Friesen LM, Shannon RV, Baskent D, Wang X. Speech recognition in noise as a function of the number of spectral channels: comparison of acoustic hearing and cochlear implants. J Acoust Soc Am. 2001;110(2):1150–1163. doi: 10.1121/1.1381538.

- Garadat S, Litovsky RY. Speech intelligibility in free field: spatial unmasking in preschool children. J Acoust Soc Am. 2007;121:1047–1055. doi: 10.1121/1.2409863.

- Garadat SN, Litovsky RY, Yu G, Zeng FG. Role of binaural hearing in speech intelligibility and spatial release from masking using vocoded speech. J Acoust Soc Am. 2009;126:2522–2535. doi: 10.1121/1.3238242.

- Garadat SN, Litovsky RY, Yu G, Zeng FG. Effects of simulated spectral holes on speech intelligibility and spatial release from masking under binaural and monaural listening. J Acoust Soc Am. 2010;127:977–989. doi: 10.1121/1.3273897.

- Godar SP, Litovsky RY. Experience with bilateral cochlear implants improves sound. Otol Neurotol. 2010;31(8):1287–1292. doi: 10.1097/MAO.0b013e3181e75784.

- Goupell MJ, Hancock KE, Majdak P, Laback B, Delgutte B. Binaurally-coherent jitter improves neural and perceptual ITD sensitivity in normal and electric hearing. In: Lopez-Poveda EA, Palmer AR, Meddis R, editors. The Neurophysiological Bases of Auditory Perception. London: Springer; 2009. pp. 303–313.

- Goupell MJ, Litovsky RY. Dynamic binaural detection in bilateral cochlear-implant users: implications for processing schemes. Presented at the 34th MidWinter Meeting of the Association for Research in Otolaryngology; Baltimore, MD; 2011.

- Grieco-Calub T, Litovsky RY, Werner LA. Using the observer-based psychophysical procedure to assess localization acuity in toddlers who use bilateral cochlear implants. Otol Neurotol (invited paper in special issue). 2008;29(2):235–239. doi: 10.1097/mao.0b013e31816250fe.

- Grieco-Calub T, Litovsky RY. Sound localization skills in children who use bilateral cochlear implants and in children with normal acoustic hearing. Ear Hear. 2010;31(5):645–656. doi: 10.1097/AUD.0b013e3181e50a1d.