Abstract

In this paper we study the formal algebraic structure underlying the intrinsic classification algorithm, recently introduced in Singer et al. (SIAM J. Imaging Sci. 2011, accepted), for classifying noisy projection images of similar viewing directions in three-dimensional cryo-electron microscopy (cryo-EM). This preliminary classification is of fundamental importance in determining the three-dimensional structure of macromolecules from cryo-EM images. Inspecting this algebraic structure we obtain a conceptual explanation for the admissibility (correctness) of the algorithm and a proof of its numerical stability. The proof relies on studying the spectral properties of an integral operator of geometric origin on the two-dimensional sphere, called the localized parallel transport operator. Along the way, we continue to develop the representation theoretic set-up for three-dimensional cryo-EM that was initiated in Hadani and Singer (Ann. Math. 2010, accepted).

Keywords: Representation theory, Differential geometry, Spectral theory, Optimization theory, Mathematical biology, 3D cryo-electron microscopy

1 Introduction

The goal in cryo-EM is to determine the three-dimensional structure of a molecule from noisy projection images taken at unknown random orientations by an electron microscope, i.e., a random computed tomography (CT) problem. Determining three-dimensional structures of large biological molecules remains vitally important, as witnessed, for example, by the 2003 Chemistry Nobel Prize, co-awarded to R. MacKinnon for resolving the three-dimensional structure of the Shaker K+ channel protein [1, 4], and by the 2009 Chemistry Nobel Prize, awarded to V. Ramakrishnan, T. Steitz and A. Yonath for studies of the structure and function of the ribosome. The standard procedure for structure determination of large molecules is X-ray crystallography. The challenge in this method often lies more in the crystallization itself than in the interpretation of the X-ray results, since many large molecules, including various types of proteins, have so far withstood all attempts to crystallize them.

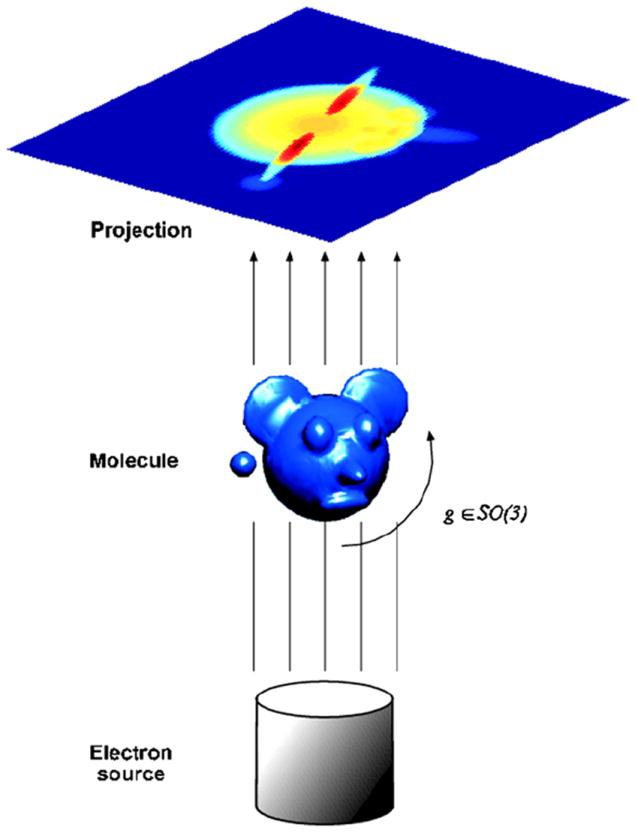

Cryo-EM is an alternative approach to X-ray crystallography. In this approach, samples of identical molecules are rapidly immobilized in a thin layer of vitreous ice (that is, non-crystalline ice). The cryo-EM imaging process produces a large collection of tomographic projections, corresponding to many copies of the same molecule, each immobilized in a different and unknown orientation. The intensity of the pixels in a given projection image is correlated [5] with the line integrals of the electric potential induced by the molecule along the path of the imaging electrons (see Fig. 1). The goal is to reconstruct the three-dimensional structure of the molecule from such a collection of projection images. The main problem is that the highly intense electron beam damages the molecule and, therefore, it is problematic to take projection images of the same molecule at known different directions, as in the case of classical CT.¹ In other words, a single molecule is imaged only once, resulting in an extremely low signal-to-noise ratio (SNR), mostly due to shot noise induced by the maximal allowed electron dose.

Fig. 1.

Schematic drawing of the imaging process: every projection image corresponds to some unknown spatial orientation of the molecule

1.1 Mathematical Model

Instead of thinking of a multitude of molecules immobilized in various orientations and observed by an electron microscope held in a fixed position, it is more convenient to think of a single molecule, observed by an electron microscope from various orientations. Thus, an orientation describes a configuration of the microscope instead of that of the molecule.

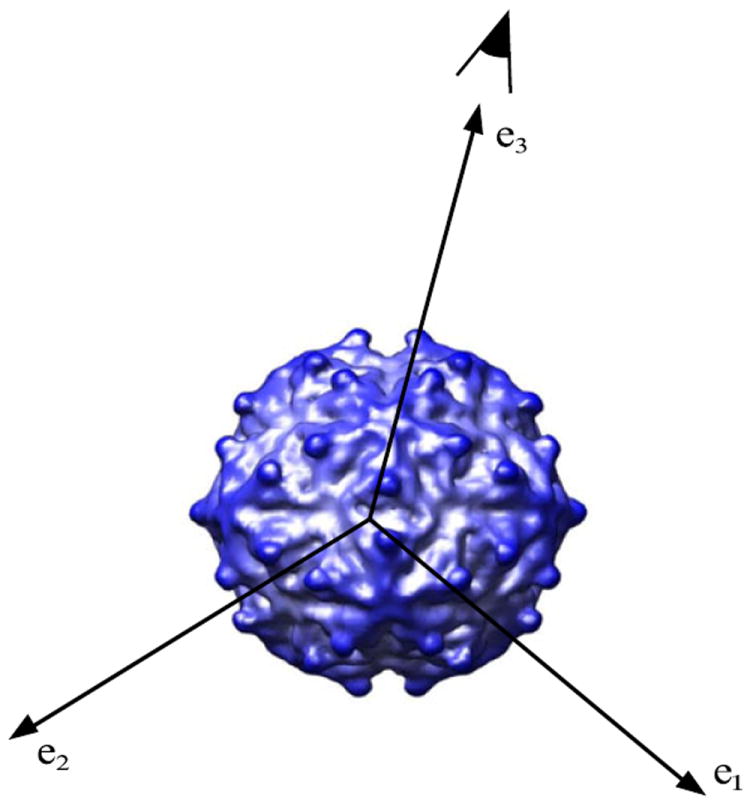

Let (V, (·, ·)) be an oriented three-dimensional Euclidean vector space. The reader can take V to be ℝ3 and (·, ·) to be the standard inner product. Let X = Fr(V) be the oriented frame manifold associated to V; a point x ∈ X is an orthonormal basis x = (e1, e2, e3) of V compatible with the orientation. The third vector e3 is distinguished, denoted by π(x) and called the viewing direction. More concretely, if we identify V with ℝ3, then a point in X can be thought of as a matrix belonging to the special orthogonal group SO(3), whose first, second and third columns are the vectors e1, e2 and e3 respectively.

Using this terminology, the physics of cryo-EM is modeled as follows:

- The molecule is modeled by a real valued function ϕ : V → ℝ, describing the electromagnetic potential induced by the charges in the molecule.

- A spatial orientation of the microscope is modeled by an orthonormal frame x ∈ X. The third vector π(x) is the viewing direction of the microscope and the plane spanned by the first two vectors e1 and e2 is the plane of the camera, equipped with the coordinate system of the camera (see Fig. 2).

- The projection image obtained by the microscope when observing the molecule from a spatial orientation x is a real valued function I : ℝ2 → ℝ, given by the X-ray projection along the viewing direction:

$$I(p, q) = \int_{\mathbb{R}} \phi(p e_1 + q e_2 + r e_3)\, dr,$$

for every (p, q) ∈ ℝ2.
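A minimal numerical sketch of the projection model may help fix conventions. It assumes that ϕ can be evaluated pointwise, stores a frame as a 3 × 3 matrix whose columns are e1, e2, e3 (the identification of X with SO(3) above), and replaces the line integral by a Riemann sum over a truncated range. The function names, the truncation `r_max` and the Gaussian test potential are illustrative choices, not part of the model.

```python
import numpy as np

def project(phi, frame, p, q, r_max=8.0, n_r=4001):
    """Riemann-sum approximation of I(p, q) = ∫ phi(p e1 + q e2 + r e3) dr on [-r_max, r_max].

    phi maps an array of points of shape (m, 3) to m values;
    frame is a 3x3 matrix whose columns are (e1, e2, e3).
    """
    e1, e2, e3 = frame[:, 0], frame[:, 1], frame[:, 2]
    r = np.linspace(-r_max, r_max, n_r)
    points = p * e1 + q * e2 + r[:, None] * e3   # samples along the line through pixel (p, q)
    return float(np.sum(phi(points)) * (r[1] - r[0]))

center = np.array([0.3, -0.2, 0.5])

def blob(v):
    """Hypothetical test potential: a unit Gaussian centred at `center`."""
    return np.exp(-np.sum((v - center) ** 2, axis=1))

th = 0.4   # a frame rotated about the first axis
frame = np.array([[1.0, 0.0, 0.0],
                  [0.0, np.cos(th), -np.sin(th)],
                  [0.0, np.sin(th), np.cos(th)]])
print(project(blob, frame, 0.5, -1.0))
```

For the Gaussian blob the projection is known in closed form, √π · exp(−(p − (c, e1))² − (q − (c, e2))²) with c the centre, which gives a quick check of the quadrature.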

Fig. 2.

A frame x = (e1, e2, e3) modeling the orientation of the electron microscope, where π(x) = e3 is the viewing direction and the pair (e1, e2) establishes the coordinates of the camera

The data collected from the experiment are a set of N projection images P = {I1, …, IN}. Assuming that the potential function ϕ is generic,² in the sense that each image Ii ∈ P can originate from a unique frame xi ∈ X, the main problem of cryo-EM [7, 13] is to reconstruct the (unique) unknown frame xi ∈ X associated with each projection image Ii ∈ P.

1.2 Class Averaging

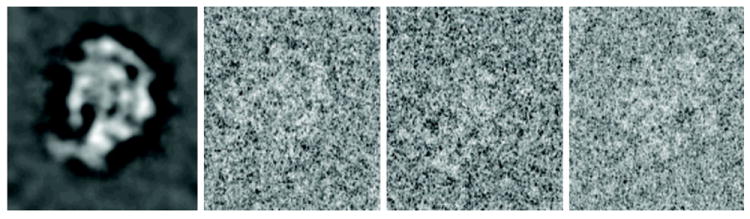

As projection images in cryo-EM have extremely low SNR³ (see Fig. 3), a crucial initial step in all reconstruction methods is “class averaging” [2]. Class averaging is the grouping of a large data set of noisy raw projection images into clusters, such that images within a single cluster have similar viewing directions. Averaging rotationally aligned noisy images within each cluster results in “class averages”; these are images that enjoy a higher SNR and are used in later cryo-EM procedures, such as the angular reconstitution procedure [11, 12], which requires better quality images. Finding consistent class averages is challenging due to the high level of noise in the raw images.

Fig. 3.

The leftmost image is a clean simulated projection image of the E. coli 50S ribosomal subunit. The other three images are real electron microscope images of the same subunit

The starting point for the classification is the idea that visual similarity between projection images suggests vicinity between viewing directions of the corresponding (unknown) frames. The similarity between images Ii and Ij is measured by their invariant distance (introduced in [6]) which is the Euclidean distance between the images when they are optimally aligned with respect to in-plane rotations, namely

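$$d(I_i, I_j) = \min_{g \in SO(2)} \lVert I_i - g \triangleright I_j \rVert, \tag{1.1}$$

where

$$(g \triangleright I)(p, q) = I\big(g^{-1}(p, q)\big)$$

for any function I : ℝ2 → ℝ.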
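A discrete version of (1.1) is convenient when images are sampled on a polar grid: a rotation by a multiple of 2π/n_angles is then an exact cyclic shift along the angular axis. The sketch below assumes such a grid (rows indexed by radius, columns by angle), restricts the minimum to these grid rotations, and weights each ring by √r so that the norm approximates the L² norm on the plane up to a constant factor. The minimizing shift is a discrete stand-in for the optimal rotation that realizes the minimum. The names are hypothetical.

```python
import numpy as np

def rotate(img, k):
    """g ▷ I for g = rotation by 2πk/n_angles on a polar grid (rows: radii, columns: angles).

    Since (g ▷ I)(r, α) = I(r, α - 2πk/n_angles), the rotation is a cyclic shift of the columns.
    """
    return np.roll(img, k, axis=1)

def invariant_distance(img_i, img_j, radii):
    """Discrete analogue of (1.1): min over grid rotations of a weighted L2 distance.

    The weights sqrt(r) approximate the area element r dr dα up to a constant factor.
    Returns (distance, k) where k is the minimizing shift.
    """
    weights = np.sqrt(radii)[:, None]
    dists = np.array([np.linalg.norm(weights * (img_i - rotate(img_j, k)))
                      for k in range(img_j.shape[1])])
    k_best = int(np.argmin(dists))
    return float(dists[k_best]), k_best

rng = np.random.default_rng(0)
radii = np.linspace(0.05, 1.0, 20)
img_j = rng.standard_normal((20, 64))
img_i = rotate(img_j, 5)                         # I_i is an exact in-plane rotation of I_j
print(invariant_distance(img_i, img_j, radii))   # (0.0, 5)
```

Swapping the arguments returns the shift 64 − 5 = 59, that is, the inverse rotation, in line with (1.3).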

One can choose a threshold value ε such that d(Ii, Ij) ≤ ε suggests that the corresponding frames xi and xj have nearby viewing directions. The threshold ε defines an undirected graph G = (Vertices, Edges) with vertices labeled by the numbers 1, …, N and an edge connecting vertex i with vertex j if and only if the invariant distance between the projection images Ii and Ij is at most ε, namely

$$\{i, j\} \in \mathrm{Edges} \iff d(I_i, I_j) \le \varepsilon.$$

In an ideal noiseless world, the graph G acquires the geometry of the unit sphere S(V), namely, two images are connected by an edge if and only if their corresponding viewing directions are close on the sphere, in the sense that they belong to some small spherical cap of opening angle a = a(ε).
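The graph G can be read off a precomputed matrix of invariant distances. The sketch below assumes such a symmetric N × N NumPy array, here called `dist` (a hypothetical input), and returns each edge {i, j} once, as a tuple with i < j.

```python
import numpy as np

def build_edges(dist, eps):
    """Edges of G: pairs (i, j) with i < j and d(I_i, I_j) <= eps."""
    upper = np.triu(dist <= eps, k=1)   # drop the diagonal and count each pair once
    i, j = np.nonzero(upper)
    return set(zip(i.tolist(), j.tolist()))

dist = np.array([[0.0, 0.1, 0.5],
                 [0.1, 0.0, 0.2],
                 [0.5, 0.2, 0.0]])
print(sorted(build_edges(dist, eps=0.2)))   # [(0, 1), (1, 2)]
```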

However, the real world is far from ideal as it is governed by noise; hence, it often happens that two images of completely different viewing directions have small invariant distance. This can happen when the realizations of the noise in the two images match well for some random in-plane rotation, leading to spurious neighbor identification. Therefore, the naïve approach of averaging the rotationally aligned nearest neighbor images can sometimes yield a poor estimate of the true signal in the reference image.

To summarize, from this point of view the main problem is to distinguish the good edges from the bad ones in the graph G or, in other words, to distinguish the true neighbors from the false ones (called outliers). The existence of outliers is the reason why the classification problem is non-trivial. We emphasize that without excluding the outliers, averaging rotationally aligned images of small invariant distance (1.1) yields a poor estimate of the true signal, rendering the problem of three-dimensional reconstruction from cryo-EM images infeasible. In this respect, the class averaging problem is of fundamental importance.

1.3 Main Results

In [8], we introduced a novel algorithm, referred to in this paper as the intrinsic classification algorithm, for classifying noisy projection images of similar viewing directions. The main appealing property of this algorithm is its extreme robustness to noise and to the presence of outliers; in addition, it enjoys efficient time and space complexity. These properties are explained thoroughly in [8], which also includes a large number of numerical experiments.

In this paper we study the formal algebraic structure that underlies the intrinsic classification algorithm. Inspecting this algebraic structure we obtain a conceptual explanation for the admissibility (correctness) of the algorithm and a proof of its numerical stability, thus putting it on firm mathematical grounds. The proof relies on the study of a certain integral operator Th on X, of geometric origin, called the localized parallel transport operator. Specifically:

- Admissibility amounts to the fact that the maximal eigenspace of Th is a three-dimensional complex Hermitian vector space and that there is a canonical identification of Hermitian vector spaces between this eigenspace and the complexified vector space W = ℂV.

- Numerical stability amounts to the existence of a spectral gap which separates the maximal eigenvalue of Th from the rest of the spectrum, which enables one to obtain a stable numerical approximation of the corresponding eigenspace and of other related geometric structures.

The main technical result of this paper is a complete description of the spectral properties of the localized parallel transport operator. Along the way, we continue to develop the mathematical set-up for cryo-EM that was initiated in [3], thus further elucidating the central role played by representation theoretic principles in this scientific discipline.

The remainder of the introduction is devoted to a detailed description of the intrinsic classification algorithm and to an explanation of the main ideas and results of this paper.

1.4 Transport Data

A preliminary step is to extract certain geometric data from the set of projection images, called (local) empirical transport data.

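When computing the invariant distance between images Ii and Ij, we also record the rotation in SO(2) that realizes the minimum in (1.1) and denote this special rotation by T̃ (i, j), that is,

$$\tilde{T}(i, j) = \arg\min_{g \in SO(2)} \lVert I_i - g \triangleright I_j \rVert, \tag{1.2}$$

noting that

$$\tilde{T}(j, i) = \tilde{T}(i, j)^{-1}, \tag{1.3}$$

since rotations act on images isometrically, so that ‖Ii − g ▷ Ij‖ = ‖Ij − g⁻¹ ▷ Ii‖.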

The main observation is that in an ideal noiseless world the rotation T̃ (i, j) can be interpreted as a geometric relation between the corresponding frames xi and xj, provided the invariant distance between the corresponding images is small. This relation is expressed in terms of parallel transport on the sphere, as follows: define the rotation

$$T(x_i, x_j) \in SO(2)$$

as the unique solution of the equation

$$x_i \triangleleft T(x_i, x_j) = t_{\pi(x_i), \pi(x_j)}(x_j), \tag{1.4}$$

where tπ(xi), π(xj) is the parallel transport along the unique geodesic on the sphere connecting the point π(xj) with π(xi) or, in other words, the rotation in SO(V) that takes the vector π(xj) to π(xi) along the shortest path on the sphere, and the action ◁ is defined by

$$x \triangleleft \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix} = \big(\cos\theta\, e_1 + \sin\theta\, e_2,\; -\sin\theta\, e_1 + \cos\theta\, e_2,\; e_3\big)$$

for every x = (e1, e2, e3). The precise statement is that the rotation T̃ (i, j) approximates the rotation T (xi, xj) when {i, j} ∈ Edges. This geometric interpretation of the rotation T̃ (i, j) is suggested by a combination of mathematical and empirical considerations that we proceed to explain.
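When the frames are known, the geometric rotation T(xi, xj) of (1.4) can be computed directly, which is useful for generating noiseless synthetic transport data. The sketch below assumes frames stored as 3 × 3 matrices with columns (e1, e2, e3), realizes the parallel transport as the rotation about the common normal of π(xj) and π(xi) (Rodrigues' formula, valid for non-antipodal viewing directions), and reads T off the upper-left 2 × 2 block of xiᵀ t(xj), identified with the unit complex number cos θ + i sin θ. The helper names are hypothetical.

```python
import numpy as np

def random_frame(rng):
    """A Haar-random frame in SO(3), stored as a matrix with columns (e1, e2, e3)."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q @ np.diag(np.sign(np.diag(r)))   # fix the QR signs: Haar-distributed on O(3)
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]                 # move to SO(3) without breaking uniformity
    return q

def transport_rotation(a, b):
    """Rotation taking unit vector a to unit vector b along the great circle (assumes a != -b)."""
    v = np.cross(a, b)
    c = float(a @ b)
    k = np.array([[0.0, -v[2], v[1]],
                  [v[2], 0.0, -v[0]],
                  [-v[1], v[0], 0.0]])
    return np.eye(3) + k + (k @ k) / (1.0 + c)

def transport(x, y):
    """T(x, y) as a unit complex number, solving x ◁ T(x, y) = t_{π(x),π(y)}(y) as in (1.4)."""
    ty = transport_rotation(y[:, 2], x[:, 2]) @ y   # parallel transport of y to the fiber over π(x)
    m = x.T @ ty                                     # block-diag(T, 1) expressed in the frame x
    return complex(m[0, 0], m[1, 0])                 # cos θ + i sin θ

rng = np.random.default_rng(0)
x, y = random_frame(rng), random_frame(rng)
t_xy = transport(x, y)
print(abs(t_xy))                                     # 1 up to rounding
print(np.isclose(transport(y, x), np.conj(t_xy)))    # True: T(y, x) = T(x, y)^(-1)
```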

On the mathematical side: the rotation T (xi, xj) is the unique rotation of the frame xi around its viewing direction π(xi), minimizing the distance to the frame xj. This is a standard fact from differential geometry (a direct proof of this statement appears in [8]).

On the empirical side: if the function ϕ is “nice”, then the optimal alignment T̃ (i, j) of the projection images is correlated with the optimal alignment T (xi, xj) of the corresponding frames. This correlation of course improves as the distance between π(xi) and π(xj) becomes smaller. A quantitative study of the relation between T̃ (i, j) and T (xi, xj) involves considerations from image processing; thus it is beyond the scope of this paper.

To conclude, the “empirical” rotation T̃ (i, j) approximates the “geometric” rotation T (xi, xj) only when the viewing directions π(xi) and π(xj) are close, in the sense that they belong to some small spherical cap of opening angle a. The latter “geometric” condition is correlated with the “empirical” condition that the corresponding images Ii and Ij have small invariant distance. When the viewing directions π(xi) and π(xj) are far from each other, the rotation T̃ (i, j) is no longer related to parallel transport on the sphere. For this reason, we consider only rotations T̃ (i, j) for which {i, j} ∈ Edges and call this collection the (local) empirical transport data.

1.5 The Intrinsic Classification Algorithm

The intrinsic classification algorithm accepts as an input the empirical transport data {T̃ (i, j) : {i, j} ∈ Edges} and produces as an output the Euclidean inner products {(π(xi), π(xj)) : i, j = 1, …, N}. Using these inner products, one can identify the true neighbors in the graph G as the pairs {i, j} ∈ Edges for which the inner product (π(xi), π(xj)) is close to 1. The formal justification of the algorithm requires the empirical assumption that the frames xi, i = 1, …, N are uniformly distributed in the frame manifold X, according to the unique normalized Haar measure on X. This assumption corresponds to the situation where the orientations of the molecules in the ice are distributed independently and uniformly at random.

The main idea of the algorithm is to construct an intrinsic model, denoted by WN, of the Hermitian vector space W = ℂV which is expressed solely in terms of the empirical transport data.

The algorithm proceeds as follows:

- Step 1 (Ambient Hilbert space): consider the standard N-dimensional Hilbert space

$$\mathcal{H}_N = \mathbb{C}^N.$$

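- Step 2 (Self-adjoint operator): identify ℝ2 with ℂ and consider each rotation T̃ (i, j) as a complex number of unit norm. Define the N × N complex matrix T̃N : HN → HN by putting the rotation T̃ (i, j) in the (i, j) entry for every {i, j} ∈ Edges, and zero elsewhere:

$$\tilde{T}_N(i, j) = \begin{cases} \tilde{T}(i, j), & \{i, j\} \in \mathrm{Edges}, \\ 0, & \text{otherwise}. \end{cases}$$

Notice that the matrix T̃N is self-adjoint by (1.3), since the inverse of a complex number of unit norm is its complex conjugate.

- Step 3 (Intrinsic model): the matrix T̃N induces a spectral decomposition

$$\mathcal{H}_N = \bigoplus_{\lambda} \mathcal{H}_N(\lambda).$$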

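Theorem 1

There exists a threshold λ0 such that

$$\dim \bigoplus_{\lambda > \lambda_0} \mathcal{H}_N(\lambda) = 3.$$

Define the Hermitian vector space

$$\mathcal{W}_N = \bigoplus_{\lambda > \lambda_0} \mathcal{H}_N(\lambda).$$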

- Step 4 (Computation of the Euclidean inner products): the Euclidean inner products {(π(xi), π(xj)) : i, j = 1, …, N} are computed from the vector space WN, as follows: for every i = 1, …, N, denote by φi ∈ WN the vector

$$\varphi_i = \mathrm{pr}_i^{*}(1),$$

where pri : WN → ℂ is the projection on the ith component and pri* : ℂ → WN is the adjoint map. In addition, for every frame x ∈ X, x = (e1, e2, e3), denote by δx ∈ W the (complex) vector e1 − ie2.
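The four steps can be exercised end to end on synthetic, noiseless data. The sketch below is an illustration under strong assumptions: it draws Haar-random frames, builds the edge set directly from the true viewing directions (a cap of opening angle a, with h = 1 − cos a), fills T̃N with the exact rotations T(xi, xj) of (1.4) instead of empirical ones, and takes the eigenvectors of the three largest eigenvalues as a basis of WN. In Step 4 it uses the identity |⟨δx, δy⟩| = 1 + (π(x), π(y)) together with ‖δx‖² = 2, normalizing by ‖φi‖ ‖φj‖ rather than committing to an explicit constant. All function names are hypothetical.

```python
import numpy as np

def random_frames(n, rng):
    """n Haar-random frames; frames[i][:, k] holds the vector e_{k+1} of frame i."""
    frames = np.empty((n, 3, 3))
    for idx in range(n):
        q, r = np.linalg.qr(rng.standard_normal((3, 3)))
        q = q @ np.diag(np.sign(np.diag(r)))   # Haar-distributed on O(3)
        if np.linalg.det(q) < 0:
            q[:, 0] = -q[:, 0]                 # move to SO(3) without breaking uniformity
        frames[idx] = q
    return frames

def transport_pairs(xi, xj):
    """T(x_i, x_j) as unit complex numbers for stacks of frames of shape (P, 3, 3).

    Solves x_i ◁ T = t(x_j) as in (1.4); t rotates π(x_j) onto π(x_i) about their
    common normal (Rodrigues' formula, valid when π(x_i) != -π(x_j)).
    """
    a, b = xj[:, :, 2], xi[:, :, 2]                    # π(x_j), π(x_i)
    v = np.cross(a, b)
    c = np.einsum("pk,pk->p", a, b)[:, None]
    w = xj[:, :, 0]                                    # e1 of x_j
    vw = np.cross(v, w)
    tw = w + vw + np.cross(v, vw) / (1.0 + c)          # e1 of t(x_j)
    return (np.einsum("pk,pk->p", xi[:, :, 0], tw)
            + 1j * np.einsum("pk,pk->p", xi[:, :, 1], tw))

def intrinsic_classification(frames, h):
    """Steps 2-4 on noiseless transport data.

    Returns (eigenvalues of T̃_N / N in descending order,
             estimated inner products (π(x_i), π(x_j))).
    """
    n = len(frames)
    views = frames[:, :, 2]
    edges = views @ views.T > 1.0 - h          # stand-in for G: true neighbours only
    np.fill_diagonal(edges, False)
    i, j = np.nonzero(edges)
    t_mat = np.zeros((n, n), dtype=complex)    # Step 2: T̃_N, zero off the edges
    t_mat[i, j] = transport_pairs(frames[i], frames[j])
    evals, evecs = np.linalg.eigh(t_mat)       # Step 3: eigenvalues in ascending order
    basis = evecs[:, -3:]                      # orthonormal basis of W_N
    proj = basis @ basis.conj().T              # proj[j, i] = <φ_i, φ_j>
    norms = np.sqrt(np.real(np.diag(proj)))    # ||φ_i||
    ratio = np.abs(proj) / np.outer(norms, norms)
    return evals[::-1] / n, 2.0 * ratio - 1.0  # Step 4 in normalized form

rng = np.random.default_rng(0)
frames = random_frames(1000, rng)
evals, est = intrinsic_classification(frames, h=0.5)
true = frames[:, :, 2] @ frames[:, :, 2].T
print(evals[:4])                                      # three close leading values, then a drop
print(np.corrcoef(est.ravel(), true.ravel())[0, 1])   # close to 1
```

With real images the transport data are noisy and the graph contains outliers; the sketch only shows how the pieces fit together once T̃N is available.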

The upshot is that the intrinsic vector space WN consisting of the collection of vectors φi ∈ WN, i = 1, …, N is (approximately⁴) isomorphic to the extrinsic vector space W consisting of the collection of vectors δxi ∈ W, i = 1, …, N, where xi is the frame corresponding to the image Ii, for every i = 1, …, N. This statement is the content of the following theorem:

Theorem 2

There exists a unique (approximated) isomorphism of Hermitian vector spaces such that

for every i = 1, …, N.

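The above theorem enables us to express, in intrinsic terms, the Euclidean inner products between the viewing directions, as follows. We start with the following identity from linear algebra (which will be proved in the sequel):

$$(\pi(x), \pi(y)) = \lvert \langle \delta_x, \delta_y \rangle \rvert - 1, \tag{1.5}$$

for every pair of frames x, y ∈ X, where 〈·, ·〉 is the Hermitian product on W = ℂV, given by

$$\langle \alpha \otimes u, \beta \otimes v \rangle = \alpha \bar{\beta}\, (u, v), \qquad \alpha, \beta \in \mathbb{C},\ u, v \in V;$$

we obtain the following relation: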

| (1.6) |

for every i, j = 1, …, N. We note that the derivation of Relation (1.6) from Relation (1.5) uses Theorem 2. Finally, Relation (1.6) implies that, although we do not know the frame associated with each projection image, we are still able to compute the inner product between the viewing directions of every pair of such frames from the intrinsic vector space WN which, in turn, can be computed from the images.
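Identity (1.5) is easy to check numerically. The sketch below writes it in the form (π(x), π(y)) = |⟨δx, δy⟩| − 1, stores frames as 3 × 3 matrices with columns (e1, e2, e3), and uses the Hermitian product that is linear in the first argument (the absolute value makes the choice of convention immaterial). The helper names are hypothetical.

```python
import numpy as np

def random_frame(rng):
    """A Haar-random frame in SO(3), stored as a matrix with columns (e1, e2, e3)."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q @ np.diag(np.sign(np.diag(r)))
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]
    return q

def delta(frame):
    """δ_x = e1 - i e2 ∈ W = ℂV."""
    return frame[:, 0] - 1j * frame[:, 1]

def hermitian(u, w):
    """<u, w> = Σ_k u_k conj(w_k): linear in u, antilinear in w."""
    return complex(np.sum(u * np.conj(w)))

rng = np.random.default_rng(0)
x, y = random_frame(rng), random_frame(rng)
lhs = float(x[:, 2] @ y[:, 2])                     # (π(x), π(y))
rhs = abs(hermitian(delta(x), delta(y))) - 1.0     # |<δ_x, δ_y>| - 1
print(np.isclose(lhs, rhs))                        # True
```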

1.6 Structure of the Paper

The paper consists of three sections besides the introduction.

In Sect. 2, we begin by introducing the basic analytic set-up which is relevant for the class averaging problem in cryo-EM. Then, we proceed to formulate the main results of this paper, which are: a complete description of the spectral properties of the localized parallel transport operator (Theorem 3), the spectral gap property (Theorem 4) and the admissibility of the intrinsic classification algorithm (Theorems 5 and 6).

In Sect. 3, we prove Theorem 3: in particular, we develop all the representation theoretic machinery that is needed for the proof.

Finally, in the Appendix, we give the proofs of all technical statements which appear in the previous sections.

2 Preliminaries and Main Results

2.1 Set-up

Let (V, (·, ·)) be a three-dimensional, oriented, Euclidean vector space over ℝ. The reader can take V = ℝ3 equipped with the standard orientation and (·, ·) to be the standard inner product. Let W = ℂV denote the complexification of V. We equip W with the Hermitian product 〈·, ·〉 : W × W → ℂ, induced from (·, ·), given by

$$\langle \alpha \otimes u, \beta \otimes v \rangle = \alpha \bar{\beta}\, (u, v), \qquad \alpha, \beta \in \mathbb{C},\ u, v \in V.$$

Let SO(V) denote the group of orthogonal transformations with respect to the inner product (·, ·), preserving the orientation. Let S(V) denote the unit sphere in V, that is, S(V) = {υ ∈ V : (υ, υ) = 1}. Let X = Fr(V) denote the manifold of oriented orthonormal frames in V, that is, a point x ∈ X is an orthonormal basis x = (e1, e2, e3) of V compatible with the orientation.

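We consider two commuting group actions on the frame manifold: a left action of the group SO(V), given by

$$g \triangleright (e_1, e_2, e_3) = (g e_1, g e_2, g e_3),$$

and a right action of the special orthogonal group SO(3), given by

$$(e_1, e_2, e_3) \triangleleft g = \Big(\sum_{k=1}^{3} g_{k1} e_k,\ \sum_{k=1}^{3} g_{k2} e_k,\ \sum_{k=1}^{3} g_{k3} e_k\Big),$$

for g = (gkl) ∈ SO(3).

We distinguish the copy of SO(2) inside SO(3) consisting of matrices of the form

$$\begin{pmatrix} \cos\theta & -\sin\theta & 0 \\ \sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{pmatrix},$$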

and consider X as a principal SO(2) bundle over S(V) where the fibration map π : X → S(V) is given by π(e1, e2, e3) = e3. We call the vector e3 the viewing direction.

2.2 The Transport Data

Given a point υ ∈ S(V), we denote by Xυ the fiber of the frame manifold lying over υ, that is, Xυ = {x ∈ X : π(x) = υ}. For every pair of frames x, y ∈ X such that π(x) ≠ ± π(y), we define a matrix T (x, y) ∈ SO(2), characterized by the property

$$x \triangleleft T(x, y) = t_{\pi(x), \pi(y)}(y),$$

where tπ(x),π(y) : Xπ(y) → Xπ(x) is the morphism between the corresponding fibers, given by the parallel transport mapping along the unique geodesic in the sphere S(V) connecting the points π(y) with π(x). We identify ℝ2 with ℂ and consider T (x, y) as a complex number of unit norm. The collection of matrices {T (x, y)} satisfies the following properties:

- Symmetry: for every x, y ∈ X, we have T (y, x) = T (x, y)−1, where the right hand side of the equality coincides with the complex conjugate of T (x, y). This property follows from the fact that the parallel transport mapping satisfies:

$$t_{\pi(y), \pi(x)} = t_{\pi(x), \pi(y)}^{-1}.$$

- Invariance: for every x, y ∈ X and element g ∈ SO(V), we have T (g ▷ x, g ▷ y) = T (x, y). This property follows from the fact that the parallel transport mapping satisfies:

$$t_{g\pi(x), g\pi(y)}(g \triangleright y) = g \triangleright t_{\pi(x), \pi(y)}(y),$$

for every g ∈ SO(V).

- Equivariance: for every x, y ∈ X and elements g1, g2 ∈ SO(2), we have T (x ◁ g1, y ◁ g2) = g1⁻¹ T (x, y) g2. This property follows from the fact that the parallel transport mapping satisfies:

$$t_{\pi(x), \pi(y)}(y \triangleleft g) = t_{\pi(x), \pi(y)}(y) \triangleleft g,$$

for every g ∈ SO(2).

The collection {T (x, y)} is referred to as the transport data.

2.3 The Parallel Transport Operator

Let H = C^∞(X) denote the Hilbertian space of smooth complex valued functions on X (here, the word Hilbertian means that H is not complete),⁵ where the Hermitian product is the standard one, given by

$$\langle f_1, f_2 \rangle = \int_X f_1(x)\, \overline{f_2(x)}\, dx,$$

for every f1, f2 ∈ H, where dx denotes the normalized Haar measure on X. In addition, H supports a unitary representation of the group SO(V) × SO(2), where the action of an element g = (g1, g2) sends a function s ∈ H to a function g · s, given by

$$(g \cdot s)(x) = s\big(g_1^{-1} \triangleright x \triangleleft g_2\big),$$

for every x ∈ X.

Using the transport data, we define an integral operator T : H → H as

$$T(s)(x) = \int_X T(x, y)\, s(y)\, dy,$$

for every s ∈ H (the integrand is defined for almost every y, since π(y) = ±π(x) only on a set of measure zero). The properties of the transport data imply the following properties of the operator T :

- The symmetry property implies that T is self-adjoint.

- The invariance property implies that T commutes with the SO(V) action, namely T (g · s) = g · T (s) for every s ∈ H and g ∈ SO(V).

- The implication of the equivariance property will be discussed later, when we study the kernel of T.

The operator T is referred to as the parallel transport operator.

2.3.1 Localized Parallel Transport Operator

The operator which arises naturally in our context is a localized version of the transport operator. Let us fix a real number a ∈ [0, π], designating an opening angle of a spherical cap on the sphere and consider the parameter h = 1 − cos(a), taking values in the interval [0, 2].

Given a choice of this parameter, we define an integral operator Th : H → H as

$$T_h(s)(x) = \int_{B(x, a)} T(x, y)\, s(y)\, dy, \tag{2.1}$$

where B(x, a) = {y ∈ X : (π(x), π(y)) > cos(a)}. Similar considerations as before show that Th is self-adjoint and, in addition, commutes with the SO(V) action. Finally, note that the operator Th should be considered as a localization of the operator of parallel transport discussed in the previous paragraph, in the sense that now only frames with close viewing directions interact through the integral (2.1). For this reason, the operator Th is referred to as the localized parallel transport operator.
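A short computation explains why h is the natural parameter. The normalized Haar measure on X pushes forward under π to the normalized area measure on S(V), and B(x, a) is the preimage of a spherical cap of opening angle a, so

$$\operatorname{vol}\big(B(x, a)\big) = \frac{1}{4\pi}\int_0^{2\pi}\!\!\int_0^{a} \sin\theta \, d\theta \, d\varphi = \frac{1 - \cos a}{2} = \frac{h}{2}.$$

Since |T(x, y)| = 1, it follows that |Th(s)(x)| ≤ (h/2) sup|s| for every s ∈ H; in particular, every eigenvalue of Th has absolute value at most h/2.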

2.4 Spectral Properties of the Localized Parallel Transport Operator

We focus our attention on the spectral properties of the operator Th, in the regime h ≪ 1, since this is the relevant regime for the class averaging application.

Theorem 3

The operator Th has a discrete spectrum λn(h), n ∈ ℕ, such that dim H (λn(h)) = 2n + 1, for every h ∈ (0, 2]. Moreover, in the regime h ≪ 1, the eigenvalue λn(h) has the asymptotic expansion

$$\lambda_n(h) = \frac{h}{2} - \frac{n(n+1) - 1}{8}\, h^2 + O(h^3).$$

For a proof, see Sect. 3.

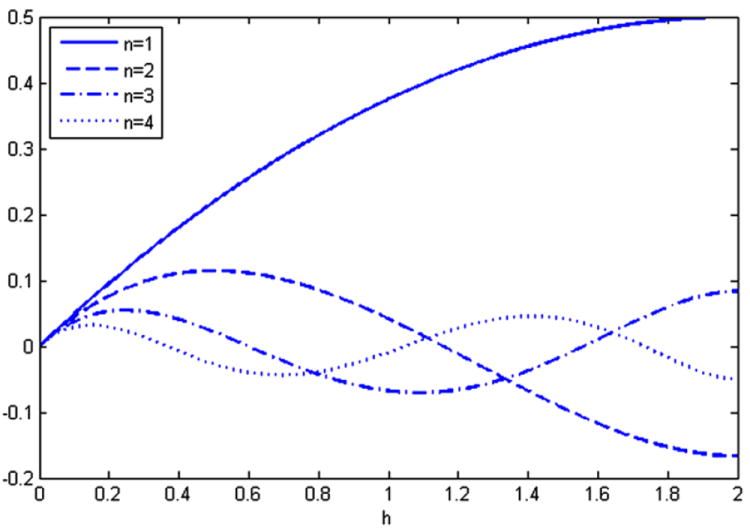

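In fact, each eigenvalue λn(h), as a function of h, is a polynomial of degree n + 1. In Sect. 3, we give a complete description of these polynomials by means of a generating function. To get some feeling for the formulas that arise, we list the first four eigenvalues:

$$\begin{aligned} \lambda_1(h) &= \frac{h}{2} - \frac{h^2}{8}, \\ \lambda_2(h) &= \frac{h}{2} - \frac{5h^2}{8} + \frac{h^3}{6}, \\ \lambda_3(h) &= \frac{h}{2} - \frac{11h^2}{8} + \frac{25h^3}{24} - \frac{15h^4}{64}, \\ \lambda_4(h) &= \frac{h}{2} - \frac{19h^2}{8} + \frac{27h^3}{8} - \frac{119h^4}{64} + \frac{7h^5}{20}. \end{aligned}$$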

The graphs of λi(h), i = 1, 2, 3, 4 are given in Fig. 4.

Fig. 4.

The first four eigenvalues of the operator Th, presented as functions of the variable h ∈ [0, 2]

2.4.1 Spectral Gap

Noting that λ2(h) attains its maximum at h = 1/2, we have

Theorem 4

For every value of h ∈ [0, 2], the maximal eigenvalue of Th is λ1(h). Moreover, for every value of h ∈ [0, 1/2], there is a spectral gap G (h) of the form

$$G(h) = \lambda_1(h) - \lambda_2(h).$$

For a proof, see the Appendix. Note that the main difficulty in proving the second statement is to show that λn(h) ≤ λ2(h) for every n ≥ 2 and h ∈ [0, 1/2], which appears evident from Fig. 4.

Consequently, by the asymptotic expansion of Theorem 3, in the regime h ≪ 1 the spectral gap behaves like

$$G(h) = \frac{h^2}{2} + O(h^3).$$

2.5 Main Algebraic Structure

We proceed to describe an intrinsic model 𝒲 of the Hermitian vector space W, which can be computed as the eigenspace associated with the maximal eigenvalue of the localized parallel transport operator Th, provided h ≪ 1. Using this model, the Euclidean inner products between the viewing directions of every pair of orthonormal frames can be computed.

- Extrinsic model: for every point x ∈ X, let us denote by δx : ℂ → W the unique complex morphism sending 1 ∈ ℂ to the complex vector e1 − ie2 ∈ W.

- Intrinsic model: we define 𝒲 to be the eigenspace of Th associated with the maximal eigenvalue, which, by Theorems 3 and 4, is three-dimensional. For every point x ∈ X, there is a map

$$\varphi_x = \left(\mathrm{ev}_x|_{\mathcal{W}}\right)^{*} : \mathbb{C} \to \mathcal{W},$$

where evx : H → ℂ is the evaluation morphism at the point x, namely,

$$\mathrm{ev}_x(f) = f(x),$$

for every f ∈ H. The pair (𝒲, {φx : x ∈ X}) is referred to as the intrinsic model of the vector space W.

The algebraic structure that underlies the intrinsic classification algorithm is the canonical morphism

defined by

for every x ∈ X. The morphism τ induces an isomorphism of Hermitian vector spaces between W, equipped with the collection of natural maps {δx : ℂ → W}, and 𝒲, equipped with the collection of maps {φx : ℂ → 𝒲}. This is summarized in the following theorem:

Theorem 5

The morphism τ maps W isomorphically, as an Hermitian vector space, onto the subspace 𝒲 ⊂ H. Moreover,

for every x ∈ X.

For a proof, see the Appendix (the proof uses the results and terminology of Sect. 3).

Using Theorem 5, we can express in intrinsic terms the inner product between the viewing directions associated with every ordered pair of frames. The precise statement is

Theorem 6

For every pair of frames x, y ∈ X, we have

| (2.2) |

for any choice of complex numbers υ, u ∈ ℂ of unit norm.

For a proof, see the Appendix. Note that substituting υ = u = 1 in (2.2) we obtain (1.6).

2.6 Explanation of Theorems 1 and 2

We end this section with an explanation of the two main statements that appeared in the introduction. The explanation is based on inspecting the limit when the number of images N goes to infinity. Provided that the corresponding frames are independently drawn from the normalized Haar measure on X (empirical assumption), in the limit the transport matrix T̃N, suitably normalized (divided by N), approaches the localized parallel transport operator Th : H → H, for some small value of the parameter h. This implies that the spectral properties of T̃N for large values of N are governed by the spectral properties of the operator Th when h lies in the regime h ≪ 1. In particular:

- The statement of Theorem 1 is explained by the fact that the maximal eigenvalue of Th has multiplicity three (see Theorem 3) and that there exists a spectral gap G(h) ~ h²/2 separating it from the rest of the spectrum (see Theorem 4). The latter property ensures that the numerical computation of this eigenspace makes sense.

- The statement of Theorem 2 is explained by the fact that the vector space WN is a numerical approximation of the theoretical vector space 𝒲, together with Theorem 5.

3 Spectral Analysis of the Localized Parallel Transport Operator

In this section we study the spectral properties of the localized parallel transport operator Th, mainly focusing on the regime h ≪ 1. But first we need to introduce some preliminaries from representation theory.

3.1 Isotypic Decompositions

The Hilbert space H, as a unitary representation of the group SO(2), admits an isotypic decomposition

$$\mathcal{H} = \bigoplus_{k \in \mathbb{Z}} \mathcal{H}_k, \tag{3.1}$$

where a function s ∈ Hk if and only if s(x ◁ g) = g^k s(x), for every x ∈ X and g ∈ SO(2). In turn, each Hilbert space Hk, as a representation of the group SO(V), admits an isotypic decomposition

$$\mathcal{H}_k = \bigoplus_{n \ge 0} \mathcal{H}_{n,k}, \tag{3.2}$$

where Hn,k denotes the component which is a direct sum of copies of the unique irreducible representation of SO(V) which is of dimension 2n + 1. A particularly important property is that each irreducible representation which appears in (3.2) comes up with multiplicity one. This is summarized in the following theorem:

Theorem 7

(Multiplicity one) If n < |k| then Hn,k = 0. Otherwise, Hn,k is isomorphic to the unique irreducible representation of SO(V) of dimension 2n + 1.

For a proof, see the Appendix.

The following proposition is a direct implication of the equivariance property of the operator Th and follows from Schur’s orthogonality relations on the group SO(2):

Proposition 1

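We have

$$T_h\big|_{\mathcal{H}_k} = 0 \ \text{ for every } k \neq -1, \qquad T_h(\mathcal{H}_{-1}) \subset \mathcal{H}_{-1}.$$

Consequently, from now on, we will consider Th as an operator from H−1 to H−1. Moreover, since for every n ≥ 1, Hn, −1 is an irreducible representation of SO(V) and since Th commutes with the group action, by Schur’s Lemma Th acts on Hn, −1 as a scalar operator, namely

$$T_h\big|_{\mathcal{H}_{n,-1}} = \lambda_n(h)\, \mathrm{Id}.$$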

The remainder of this section is devoted to the computation of the eigenvalues λn(h). The strategy of the computation is to choose a point x0 ∈ X and a “good” vector un ∈ Hn, −1 such that un(x0) ≠ 0 and then to use the relation

$$T_h(u_n)(x_0) = \lambda_n(h)\, u_n(x_0),$$

which implies that

$$\lambda_n(h) = \frac{T_h(u_n)(x_0)}{u_n(x_0)}. \tag{3.3}$$

3.2 Set-up

Fix a frame x0 ∈ X, x0 = (e1, e2, e3). Under this choice, we can safely identify the group SO(V) with the group SO(3) by sending an element g ∈ SO(V) to the unique element h ∈ SO(3) such that g ▷ x0 = x0 ◁ h. Hence, from now on, we will consider the frame manifold equipped with commuting left and right actions of SO(3).

Consider the following elements in the Lie algebra so(3):

The elements Ai, i = 1, 2, 3 satisfy the relations

Let (H, E, F) be the following sl2 triple in the complexified Lie algebra ℂso(3):

Finally, let (HL, EL, FL) and (HR, ER, FR) be the associated (complexified) vector fields on X induced from the left and right action of SO(3) respectively.

3.2.1 Spherical Coordinates

We consider the spherical coordinates of the frame manifold ω : (0, 2π) × (0, π) × (0, 2π) → X, given by

We have the following formulas.

- The normalized Haar measure on X is given by the density

$$\frac{1}{8\pi^2} \sin\theta \, d\varphi \, d\theta \, d\alpha.$$

- The vector fields (HL, EL, FL) are given by

- The vector fields (HR, ER, FR) are given by

3.3 Choosing a Good Vector

3.3.1 Spherical Functions

Consider the subgroup T ⊂ SO(3) generated by the infinitesimal element A3. For every k ∈ ℤ and n ≥ |k|, the Hilbert space Hn,k admits an isotypic decomposition with respect to the left action of T:

$$\mathcal{H}_{n,k} = \bigoplus_{m=-n}^{n} \mathcal{H}_{n,k}^{m},$$

where a function s belongs to the summand indexed by m if and only if

$$s(e^{-tA_3} \triangleright x) = e^{imt}\, s(x) \quad \text{for every } x \in X,\ t \in \mathbb{R}.$$

Functions in these summands are usually referred to in the literature as (generalized) spherical functions. Our plan is to choose, for every n ≥ 1, a spherical function un ∈ Hn,−1 and exhibit a closed formula for its generating function.

Then, we will use this explicit generating function to compute un (x0) and Th (un) (x0) and use (3.3) to compute λn(h).

3.3.2 Generating Function

For every n ≥ 0, let ψn be the unique spherical function in the summand of Hn,0 with m = 0 such that ψn(x0) = 1. These functions are the well known spherical harmonics on the sphere. Define the generating function

$$G_{0,0} = \sum_{n \ge 0} \psi_n\, t^n.$$

The following theorem is taken from [10].

Theorem 8

The function G0,0 admits the following formula:

Take un = EL FR ψn. Note that indeed un lies in the summand of Hn,−1 with m = 1, and define the generating function

$$G_{1,-1} = \sum_{n \ge 1} u_n\, t^n.$$

It follows that G1, −1 = EL FR G0,0. Direct calculation, using the formula in Theorem 8, reveals that

| (3.4) |

It is enough to consider G1, −1 when φ = α = 0. We use the notation G1, −1 (θ, t) = G1, −1 (0, θ, 0, t). By (3.4)

| (3.5) |
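Assuming, as the normalization ψn(x0) = 1 and the identification of ψn with spherical harmonics suggest, that ψn is the Legendre polynomial Pn evaluated at the cosine of the angle between π(x) and π(x0), the generating function G0,0 reduces to the classical series Σn Pn(c) tⁿ = (1 − 2ct + t²)^(−1/2), valid for |t| < 1. The sketch below checks this identity numerically with NumPy's Legendre module; the truncation `n_terms` is an arbitrary choice and the function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre

def legendre_series(c, t, n_terms=200):
    """Truncated generating series Σ_{n < n_terms} P_n(c) t^n (converges for |t| < 1, |c| <= 1)."""
    return legendre.legval(c, t ** np.arange(n_terms))

def legendre_closed_form(c, t):
    """Closed form (1 - 2ct + t^2)^(-1/2) of the Legendre generating function."""
    return 1.0 / np.sqrt(1.0 - 2.0 * c * t + t * t)

c = np.linspace(-1.0, 1.0, 9)
print(np.allclose(legendre_series(c, 0.5), legendre_closed_form(c, 0.5)))   # True
```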

3.4 Computation of un (x0)

Observe that

Direct calculation reveals that

Since , we obtain

| (3.6) |

3.5 Computation of Th (un) (x0)

Recall that h = 1 − cos(a).

Using the definition of Th, we obtain

Using the spherical coordinates, the integral on the right hand side can be written as

First

| (3.7) |

where the third equality uses the invariance property of the transport data and the second equality uses the equivariance property of the transport data.

Second, since we have

| (3.8) |

Combining (3.7) and (3.8), we conclude

| (3.9) |

where the second equality uses the fact that x0 ◁ eθ A2 is the parallel transport of x0 along the unique geodesic connecting π(x0) with π(x0 ◁ eθ A2).

Denote

Define the generating function and observe that

Direct calculation reveals that

| (3.10) |

3.6 Proof of Theorem 3

Expanding I (h, t) with respect to the parameter t reveals that the function In(h) is a polynomial in h of degree n + 1. Then, using (3.3), we get

In principle, it is possible to obtain a closed formula for λn(h) for every n ≥ 1.

3.6.1 Quadratic Approximation

We want to compute the first three terms in the Taylor expansion of λn(h):

$$\lambda_n(h) = \lambda_n(0) + \lambda_n'(0)\, h + \tfrac{1}{2}\lambda_n''(0)\, h^2 + O(h^3).$$

We have

Observe that

Direct computation, using Formula (3.10), reveals that

Combining all the above yields the desired formula

This concludes the proof of the theorem.

Acknowledgments

The first author would like to thank Joseph Bernstein for many helpful discussions concerning the mathematical aspects of this work. He also thanks Richard Askey for his valuable advice about Legendre polynomials. The second author is partially supported by Award Number R01GM090200 from the National Institute of General Medical Sciences. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute of General Medical Sciences or the National Institutes of Health. This work is part of a project conducted jointly with Shamgar Gurevich, Yoel Shkolnisky and Fred Sigworth.

Appendix: Proofs

A.1 Proof of Theorem 4

The proof is based on two technical lemmas.

Lemma 1

The following estimates hold:

There exists h1 ∈ (0, 2] such that λn(h) ≤ λ1(h), for every n ≥ 1 and h ∈ [0, h1].

There exists h2 ∈ (0, 2] such that λn(h) ≤ λ2(h), for every n ≥ 2 and h ∈ [0, h2].

The proof appears below.

Lemma 2

The following estimates hold:

There exists N1 such that λn(h) ≤ λ1(h), for every n ≥ N1 and h ∈ [h1, 2].

There exists N2 such that λn(h) ≤ λ2(h), for every n ≥ N2 and h ∈ [h2, 1/2].

The proof appears below.

Granting the validity of these two lemmas we can finish the proof of the theorem.

First we prove that λn(h) ≤ λ1(h), for every n ≥ 1 and h ∈ [0, 2]: By Lemmas 1 and 2, we get λn(h) ≤ λ1(h) for every h ∈ [0, 2] when n ≥ N1. Then we verify directly that λn(h) ≤ λ1(h) for every h ∈ [0, 2] in the finitely many cases when n < N1.

Similarly, we prove that λn(h) ≤ λ2(h) for every n ≥ 2 and h ∈ [0, 1/2]: By Lemmas 1 and 2, we get λn(h) ≤ λ2(h) for every h ∈ [0, 1/2] when n ≥ N2. Then we verify directly that λn(h) ≤ λ2(h) for every h ∈ [0, 1/2] in the finitely many cases when 2 ≤ n < N2.

This concludes the proof of the theorem.

A.2 Proof of Lemma 1

The strategy of the proof is to reduce the statement to known facts about Legendre polynomials.

Recall h = 1 − cos(a). Here, it will be convenient to consider the parameter z = cos(a), taking values in the interval [−1, 1].

We recall that Legendre polynomials Pn(z), n ∈ ℕ appear as the coefficients of the generating function
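For reference, the classical identity meant here is $\sum_{n \ge 0} P_n(z)\, t^n = (1 - 2zt + t^2)^{-1/2}$ for $z \in [-1, 1]$ and $|t| < 1$. A minimal plain-Python sketch that checks it numerically, computing $P_n$ with Bonnet's recursion $(n+1)P_{n+1}(z) = (2n+1)zP_n(z) - nP_{n-1}(z)$; the function names are ours, not from the paper:

```python
import math

def legendre_values(z, n_max):
    """Return [P_0(z), ..., P_{n_max}(z)] via Bonnet's recursion
    (n + 1) P_{n+1}(z) = (2n + 1) z P_n(z) - n P_{n-1}(z)."""
    p = [1.0, z]
    for n in range(1, n_max):
        p.append(((2 * n + 1) * z * p[n] - n * p[n - 1]) / (n + 1))
    return p[: n_max + 1]

def truncated_series(z, t, n_max=200):
    """Partial sum of the generating function: sum_{n <= n_max} P_n(z) t^n."""
    return sum(pn * t ** n for n, pn in enumerate(legendre_values(z, n_max)))

def closed_form(z, t):
    """Closed form (1 - 2 z t + t^2)^(-1/2), valid for |t| < 1."""
    return 1.0 / math.sqrt(1.0 - 2.0 * z * t + t * t)

print(truncated_series(0.5, 0.5), closed_form(0.5, 0.5))
```

Since $|P_n(z)| \le 1$ on $[-1, 1]$, the truncation error is at most $|t|^{n_{\max}+1}/(1 - |t|)$, which is negligible for the values of $t$ used here.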

Let

Consider the generating function

The function J(z, t) admits the following closed formula:

(A.1)

Using (A.1), we get for n ≥ 2

where Qn(z) = (−1)ⁿ Pn(z). In order to prove the lemma, it is enough to show that there exists z0 ∈ (−1, 1] such that for every z ∈ [−1, z0] the following inequalities hold:

Qn (z) ≤ Q3 (z) for every n ≥ 3.

Qn (z) ≤ Q2 (z) for every n ≥ 2.

Qn (z) ≤ Q1 (z) for every n ≥ 1.

Qn (z) ≤ Q0 (z) for every n ≥ 0.

These inequalities follow from the following technical proposition.

Proposition 2

Let n0 ∈ ℕ. There exists z0 ∈ (−1, 1] such that Qn(z) ≤ Qn0(z), for every z ∈ [−1, z0] and n ≥ n0.

The proof appears below.

Set h0 = 1 + z0 and take h1 = h2 = h0. Granting Proposition 2, one verifies that Jn(z) ≤ J2(z) for n ≥ 2 and z ∈ [−1, z0], which implies that λn(h) ≤ λ1(h) for n ≥ 2 and h ∈ [0, h0]; similarly, Jn(z) ≤ J3(z) for n ≥ 3 and z ∈ [−1, z0], which implies that λn(h) ≤ λ2(h) for n ≥ 3 and h ∈ [0, h0].

This concludes the proof of the lemma.

A.2.1 Proof of Proposition 2

Denote by $a_1 < a_2 < \dots < a_n$ the zeros of $Q_n(\cos(a))$ in $(0, \pi)$, and by $\mu_1 < \mu_2 < \dots < \mu_{n-1}$ the points in $(0, \pi)$ at which $Q_n(\cos(a))$ attains its local extrema.

The following properties of the polynomials Qn follow from known facts about Legendre polynomials, which can be found, for example, in [9] (Properties 1 and 2 can also be verified directly):

Property 1: $a_i < \mu_i < a_{i+1}$, for $i = 1, \dots, n-1$.

Property 2: $Q_n(-1) = 1$ and $\partial_z Q_{n+1}(-1) < \partial_z Q_n(-1) < 0$, for $n \in \mathbb{N}$.

Property 3: $|Q_n(\cos(\mu_i))| \ge |Q_n(\cos(\mu_{i+1}))|$, for $i = 1, \dots, \lfloor n/2 \rfloor$.

Property 4: $(i - 1/2)\pi/n \le a_i \le i\pi/(n+1)$, for $i = 1, \dots, \lfloor n/2 \rfloor$.

Property 5: , for a ∈ [0, π].
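Properties 2 and 4 lend themselves to a quick numerical sanity check; this is not a substitute for the references. Since $Q_n = \pm P_n$, the zeros $a_i$ of $Q_n(\cos(a))$ are those of $P_n(\cos(a))$. The sketch below computes $P_n$ and $P_n'$ with Bonnet's recursion and the standard identity $P_{n+1}' = P_{n-1}' + (2n+1)P_n$, confirms $Q_n(-1) = 1$ and $\partial_z Q_n(-1) = -n(n+1)/2$ (strictly decreasing in $n$), and locates the first $\lfloor n/2 \rfloor$ zeros by a grid scan followed by bisection in order to test the bounds of Property 4. The helper names and the cut-off $n \le 30$ are our own choices.

```python
import math

def legendre_and_derivative(z, n_max):
    """Lists [P_0(z), ..., P_{n_max}(z)] and [P_0'(z), ..., P_{n_max}'(z)].
    Bonnet: (n+1) P_{n+1} = (2n+1) z P_n - n P_{n-1};
    derivatives: P_{n+1}' = P_{n-1}' + (2n+1) P_n."""
    p, dp = [1.0, z], [0.0, 1.0]
    for n in range(1, n_max):
        p.append(((2 * n + 1) * z * p[n] - n * p[n - 1]) / (n + 1))
        dp.append(dp[n - 1] + (2 * n + 1) * p[n])
    return p[: n_max + 1], dp[: n_max + 1]

def legendre_p(n, z):
    """Scalar P_n(z) by Bonnet's recursion."""
    if n == 0:
        return 1.0
    prev, cur = 1.0, z
    for k in range(1, n):
        prev, cur = cur, ((2 * k + 1) * z * cur - k * prev) / (k + 1)
    return cur

def first_zero_angles(n, count, steps=2000):
    """First `count` zeros a_1 < a_2 < ... of a -> P_n(cos a) in (0, pi/2),
    located by a sign-change scan on a uniform grid followed by bisection."""
    grid = [k * (math.pi / 2) / steps for k in range(steps + 1)]
    vals = [legendre_p(n, math.cos(a)) for a in grid]
    zeros = []
    for k in range(steps):
        if len(zeros) == count:
            break
        if vals[k] * vals[k + 1] < 0:
            lo, hi, f_lo = grid[k], grid[k + 1], vals[k]
            for _ in range(60):
                mid = 0.5 * (lo + hi)
                f_mid = legendre_p(n, math.cos(mid))
                if f_lo * f_mid <= 0:
                    hi = mid
                else:
                    lo, f_lo = mid, f_mid
            zeros.append(0.5 * (lo + hi))
    return zeros

# Property 2 at z = -1 for Q_n(z) = (-1)^n P_n(z).
N = 30
p, dp = legendre_and_derivative(-1.0, N)
Q_at_minus_one = [(-1) ** n * p[n] for n in range(N + 1)]
dQ_at_minus_one = [(-1) ** n * dp[n] for n in range(N + 1)]
print(Q_at_minus_one[:4], dQ_at_minus_one[:4])
print(first_zero_angles(8, 4))
```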

Granting these facts, we can finish the proof.

By Properties 1 and 4,

We assume that n is large enough so that, for some small ε > 0,

In particular, this is the situation when n0 ≥ N, for some fixed N = Nε. By Property 5

Let a0 ∈ (0, π) be such that , for every a < a0. Take z0 = cos(a0).

Finally, in the finitely many cases where n0 ≤ n ≤ N, the inequality Qn(z) ≤ Qn0(z) can be verified directly.

This concludes the proof of the proposition.

A.3 Proof of Lemma 2

We have the following identity:

(A.2)

for every h ∈ [0, 2]. The proof of (A.2) is by direct calculation:

Since Th(x, y) = Th(y, x)⁻¹ (symmetry property), we get

Substituting a = cos⁻¹(1 − h), we get the desired formula.

On the other hand,

(A.3)

From (A.2) and (A.3) we obtain the following upper bound:

(A.4)

Now we can finish the proof.

First estimate: We know that λ1(h) = h/2 − h²/8; hence, one can verify directly that there exists N1 such that for every n ≥ N1 and h ∈ [h1, 2], which implies by (A.4) that λn(h) ≤ λ1(h) for every n ≥ N1 and h ∈ [h1, 2].

Second estimate: We know that λ2(h) = h/2 − 5h²/8 + h³/6; therefore, one can verify directly that there exists N2 such that for every n ≥ N2 and h ∈ [h2, 1/2], which implies by (A.4) that λn(h) ≤ λ2(h) for every n ≥ N2 and h ∈ [h2, 1/2].
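The two closed forms quoted in these estimates can be cross-checked against each other. Their difference is λ1(h) − λ2(h) = h²/2 − h³/6 = h²(3 − h)/6, which is nonnegative on [0, 2] (consistent with λ2(h) ≤ λ1(h) in Theorem 4) and behaves like h²/2 as h → 0. A plain-Python sketch of this arithmetic; the function names are ours:

```python
def lambda1(h):
    """lambda_1(h) = h/2 - h^2/8, as stated in the proof of Lemma 2."""
    return h / 2 - h ** 2 / 8

def lambda2(h):
    """lambda_2(h) = h/2 - 5 h^2/8 + h^3/6, as stated in the proof of Lemma 2."""
    return h / 2 - 5 * h ** 2 / 8 + h ** 3 / 6

def spectral_gap(h):
    """lambda_1(h) - lambda_2(h), which simplifies to h^2 (3 - h) / 6."""
    return lambda1(h) - lambda2(h)

grid = [2 * k / 1000 for k in range(1001)]  # h in [0, 2]
print(min(spectral_gap(h) for h in grid), lambda1(2.0))
```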

This concludes the proof of the lemma.

A.4 Proof of Theorem 5

We begin by proving that τ maps W = CV isomorphically, as a Hermitian space, onto H(λmax(h)).

The crucial observation is that H(λmax(h)) coincides with the isotypic subspace H1,−1 (see Sect. 3). Consider the morphism α, given by

The first claim is that Im α ⊂ H−1, namely, that , for every υ ∈ W, x ∈ X and g ∈ SO(2). Denote by 〈·, ·〉std the standard Hermitian product on C. Now write

The second claim is that α is a morphism of SO(V) representations, namely, that , for every υ ∈ W, x ∈ X and g ∈ SO(V). This statement follows from

Consequently, the morphism α maps W isomorphically, as a unitary representation of SO(V), onto H1,−1, which is the unique copy of the three-dimensional representation of SO(V) in H−1. In turn, this implies that α, and hence τ, are isomorphisms of Hermitian spaces up to a scalar. In order to complete the proof, it is enough to show that

This follows from

where dυ denotes the normalized Haar measure on the five-dimensional sphere S(W).

Next, we prove that τ ∘ δx = φx, for every x ∈ X. The starting point is the equation , which follows from the definition of the morphism α and the fact that Im α = W. This implies that . The statement now follows from

This concludes the proof of the theorem.

A.5 Proof of Theorem 6

We use the following notation: for every frame x = (e1, e2, e3) ∈ X, we denote by δ̃x : C → V the map given by δ̃x(p + iq) = p e1 + q e2. We observe that δx(υ) = δ̃x(υ) − iδ̃x(iυ), for every υ ∈ C.

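We proceed with the proof. Let x, y ∈ X, and let υ ∈ V be a unit vector in Im δ̃x ∩ Im δ̃y (two planes in the three-dimensional space V always intersect nontrivially). Choose unit vectors υx, υy ∈ C such that δ̃x(υx) = δ̃y(υy) = υ.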

Write

(A.5)

For every frame z ∈ X and vector υz ∈ C, the following identity can easily be verified:

This implies that

Combining these identities with (A.5), we obtain

Since υ ∈ Im δ̃x ∩ Im δ̃y, it follows that (π(x) × υ, υ) = (υ, π(y) × υ) = 0. In addition,

Thus, we obtain that 〈δx(υx), δy(υy)〉 = 1 + (π(x), π(y)). Since the right hand side is always ≥ 0 it follows that

(A.6)

Now, notice that the left-hand side of (A.6) does not depend on the choice of the unit vectors υx and υy.
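The identity 〈δx(υx), δy(υy)〉 = 1 + (π(x), π(y)) can be spot-checked numerically. The sketch below assumes V = ℝ³ with the standard inner product, frames x = (e1, e2, e3) that are right-handed orthonormal bases with π(x) = e3, and a Hermitian product on ℂ³ that is linear in the first slot (with the opposite convention the value turns out to be the same here). It builds δ̃x and δx literally from the definitions at the start of this proof and takes υ = π(x) × π(y)/|π(x) × π(y)|, which lies in Im δ̃x ∩ Im δ̃y for generic x, y. All function names are hypothetical.

```python
import math
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cross(u, v):
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]

def normalize(u):
    n = math.sqrt(dot(u, u))
    return [a / n for a in u]

def random_frame(rng):
    """A random right-handed orthonormal frame (e1, e2, e3), with e3 = e1 x e2."""
    e3 = normalize([rng.gauss(0.0, 1.0) for _ in range(3)])
    w = [rng.gauss(0.0, 1.0) for _ in range(3)]
    e1 = normalize([wi - dot(w, e3) * ci for wi, ci in zip(w, e3)])
    return e1, cross(e3, e1), e3

def delta_tilde(frame, c):
    """delta~_x(p + iq) = p e1 + q e2, a real vector in V = R^3."""
    e1, e2, _ = frame
    return [c.real * a + c.imag * b for a, b in zip(e1, e2)]

def delta(frame, c):
    """delta_x(c) = delta~_x(c) - i delta~_x(i c), a vector in C^3."""
    re_part = delta_tilde(frame, c)
    im_part = delta_tilde(frame, 1j * c)
    return [r - 1j * s for r, s in zip(re_part, im_part)]

def herm(u, w):
    """Hermitian product on C^3, linear in the first slot (assumed convention)."""
    return sum(a * b.conjugate() for a, b in zip(u, w))

def pair_value(x, y):
    """<delta_x(v_x), delta_y(v_y)> for a unit vector v in Im delta~_x ∩ Im delta~_y."""
    v = normalize(cross(x[2], y[2]))          # orthogonal to pi(x) and pi(y)
    vx = complex(dot(v, x[0]), dot(v, x[1]))  # so that delta~_x(vx) = v
    vy = complex(dot(v, y[0]), dot(v, y[1]))  # so that delta~_y(vy) = v
    return herm(delta(x, vx), delta(y, vy))

rng = random.Random(0)
x, y = random_frame(rng), random_frame(rng)
print(pair_value(x, y), 1.0 + dot(x[2], y[2]))
```

Replacing υ by −υ flips the signs of both υx and υy, so the product is unchanged, in line with the remark that the left-hand side of (A.6) does not depend on this choice.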

To finish the proof, we use the isomorphism τ which satisfies τ ∘ δx = φx for every x ∈ X, and get

This concludes the proof of the theorem.

A.6 Proof of Proposition 7

The basic observation is that H, as a representation of SO(V) × SO(3), admits the following isotypic decomposition:

where Vn is the unique irreducible representation of SO(V) of dimension 2n + 1, and, similarly, Un is the unique irreducible representation of SO(3) of dimension 2n + 1. This assertion follows essentially from the Peter–Weyl theorem for the regular representation of SO(3).

This implies that the isotypic decomposition of Hk takes the following form:

where is the weight k space with respect to the action SO(2) ⊂ SO(3). The statement now follows from the following standard fact about the weight decomposition:
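For the reader's convenience, here is the standard fact in the form we take to be intended (the symbol $U_n^{(k)}$ for the weight-$k$ subspace of $U_n$ is our notation). The weights of $SO(2)$ on $U_n$ are $-n, \dots, n$, each occurring with multiplicity one, so that

```latex
\dim U_n^{(k)} =
\begin{cases}
1, & |k| \le n, \\
0, & |k| > n,
\end{cases}
\qquad\text{hence}\qquad
H_k \cong \bigoplus_{n \ge |k|} V_n \otimes U_n^{(k)} \cong \bigoplus_{n \ge |k|} V_n .
```

In particular, for k = −1 the three-dimensional representation V1 occurs exactly once, in agreement with the proof of Theorem 5.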

This concludes the proof of the proposition.

Footnotes

We remark that there are other methods, like single- or multi-axis tilt EM tomography, where several lower dose/higher noise images of a single molecule are taken from known directions. These methods are used, for example, when one has an organic object in vitro or a collection of different objects in the sample. There is a rich literature for this field, starting with the work of Crowther, DeRosier and Klug in the early 1960s.

This assumption about the potential ϕ can be omitted in the context of the class averaging algorithm presented in this paper. In particular, the algorithm can be applied to potentials describing molecules with symmetries which do not satisfy the “generic” assumption.

SNR stands for Signal-to-Noise Ratio, which is the ratio between the squared L2 norm of the signal and the squared L2 norm of the noise.

This approximation improves as N grows.

In general, in this paper, we will not distinguish between a Hilbertian vector space and its completion; the correct choice between the two will be clear from the context.

Communicated by Peter Olver.

Contributor Information

Ronny Hadani, Email: hadani@math.utexas.edu, Department of Mathematics, University of Texas at Austin, Austin C1200, USA.

Amit Singer, Email: amits@math.princeton.edu, Department of Mathematics and PACM, Princeton University, Fine Hall, Washington Road, Princeton NJ 08544-1000, USA.

References

- 1. Doyle DA, Cabral JM, Pfuetzner RA, Kuo A, Gulbis JM, Cohen SL, Chait BT, MacKinnon R. The structure of the potassium channel: molecular basis of K+ conduction and selectivity. Science. 1998;280:69–77. doi: 10.1126/science.280.5360.69.

- 2. Frank J. Three-Dimensional Electron Microscopy of Macromolecular Assemblies: Visualization of Biological Molecules in Their Native State. Oxford Press; Oxford: 2006.

- 3. Hadani R, Singer A. Representation theoretic patterns in three-dimensional cryo-electron microscopy I: The intrinsic reconstitution algorithm. Ann Math. 2010. doi: 10.4007/annals.2011.174.2.11. Accepted. A PDF version can be downloaded from http://www.math.utexas.edu/~hadani.

- 4. MacKinnon R. Potassium channels and the atomic basis of selective ion conduction, 8 December 2003, Nobel Lecture. Biosci Rep. 2004;24(2):75–100. doi: 10.1007/s10540-004-7190-2.

- 5. Natterer F. The Mathematics of Computerized Tomography. Classics in Applied Mathematics. SIAM; Philadelphia: 2001.

- 6. Penczek PA, Zhu J, Frank J. A common-lines based method for determining orientations for N > 3 particle projections simultaneously. Ultramicroscopy. 1996;63:205–218. doi: 10.1016/0304-3991(96)00037-x.

- 7. Singer A, Shkolnisky Y. Three-dimensional structure determination from common lines in cryo-EM by eigenvectors and semidefinite programming. SIAM J Imaging Sci. 2011. doi: 10.1137/090767777. Accepted.

- 8. Singer A, Zhao Z, Shkolnisky Y, Hadani R. Viewing angle classification of cryo-electron microscopy images using eigenvectors. SIAM J Imaging Sci. 2011. doi: 10.1137/090778390. Accepted. A PDF version can be downloaded from http://www.math.utexas.edu/~hadani.

- 9. Szegő G. Orthogonal Polynomials. Colloquium Publications, Vol. XXIII. American Mathematical Society, Providence; 1939.

- 10. Taylor ME. Noncommutative Harmonic Analysis. Mathematical Surveys and Monographs, Vol. 22. American Mathematical Society, Providence; 1986.

- 11. Vainshtein B, Goncharov A. Determination of the spatial orientation of arbitrarily arranged identical particles of an unknown structure from their projections. Proc 11th Intern Congr on Elec Micro. 1986:459–460.

- 12. Van Heel M. Angular reconstitution: a posteriori assignment of projection directions for 3D reconstruction. Ultramicroscopy. 1987;21(2):111–123. doi: 10.1016/0304-3991(87)90078-7.

- 13. Wang L, Sigworth FJ. Cryo-EM and single particles. Plant Physiol. 2006;21:8–13. doi: 10.1152/physiol.00045.2005.