Abstract

Because some users of a Hybrid short-electrode cochlear implant (CI) lose their low-frequency residual hearing after receiving the CI, we tested whether increasing the CI speech processor frequency allocation range to include lower frequencies improves speech perception in these individuals. A secondary goal was to see if pitch perception changed after experience with the new CI frequency allocation. Three subjects who had lost all residual hearing in the implanted ear were recruited to use an experimental CI frequency allocation with a lower frequency cutoff than their current clinical frequency allocation. Speech and pitch perception results were collected at multiple time points throughout the study. In general, subjects showed little or no improvement for speech recognition with the experimental allocation when the CI was worn with a hearing aid in the contralateral ear. However, all three subjects showed changes in pitch perception that followed the changes in frequency allocations over time, consistent with previous studies showing that pitch perception changes upon provision of a CI.

Keywords: short-electrode, Hybrid, electro-acoustic stimulation, cochlear implant, pitch, speech perception, plasticity

Introduction

The multi-channel long-electrode cochlear implant (CI) has been highly successful in improving speech perception performance for people with severe-to-profound hearing loss, despite the limited functionality of seven to eight independent channels of information (Friesen et al., 2001). However, CI users have great difficulty identifying musical melodies (Gfeller et al., 2006; Kong et al., 2005) or listening to a talker in background noise (Friesen et al., 2001; Nelson et al., 2003; Turner et al., 2004), both tasks that require greater frequency resolution than speech perception in quiet.

Recently, a new approach to cochlear implantation has been developed that uses soft surgery techniques and shallower electrode array insertions to minimize surgical trauma to the apex of the cochlea and preserve residual low-frequency hearing (Gantz and Turner, 2003, 2004; von Ilberg et al., 1999; Gstoettner et al., 2004; Kiefer et al., 2004). This approach allows residual acoustic hearing in the low frequencies to be combined with electric hearing in the high frequencies in the same ear, called electro-acoustic stimulation (EAS). Different EAS designs have been implemented by multiple cochlear implant manufacturers (e.g., Cochlear Corporation and MED-EL) over the past ten years, all with the same goal of preserving acoustic hearing in the low frequencies while providing high-frequency speech and sound perception by electrically stimulating the basal region of the cochlea. Research has shown that speech perception scores using EAS can be similar to those obtained with long-electrode CIs (Gstoettner et al., 2006; Reiss et al., 2008; Dorman et al., 2009). A further advantage of EAS is that by preserving residual low-frequency hearing, frequency resolution is improved, as evidenced by improved music perception abilities and better speech perception in background babble compared to electric stimulation alone (Turner et al., 2004; Helbig et al., 2008; Lorens et al., 2008; Dorman and Gifford, 2010; Gfeller et al., 2006). A similar benefit is seen when a CI is combined with acoustic input via a hearing aid (HA) in the non-implanted ear (Kong et al., 2005).

However, not all CI users with EAS maintain their residual acoustic hearing in the implanted ear after surgery. In clinical trials with the S8 short-electrode Hybrid array using six intracochlear electrodes, residual hearing was preserved to within 10 dB of pre-surgical thresholds for 70% of patients (Gantz et al., 2009). Among the remaining patients, threshold shifts of 20-30 dB occurred in 20% of all subjects, and hearing was lost completely in 10%. For partial insertion of full-length implants, hearing preservation rates are similar, with approximately 66% of patients having hearing preserved within 10 dB of pre-surgical thresholds (Gstoettner et al., 2008). Research also indicates that the loss of residual hearing in the implanted ear is correlated with reduced benefit of EAS for speech perception in noise (Gantz et al., 2009).

One approach for such patients is to re-implant with a full-length electrode array; this has proved to be effective, but there are risks associated with a second surgery. Another approach is to change the programming strategy to compensate for the lost residual hearing. Currently, the default programming strategy for short-electrode CIs is to set the frequency-to-electrode allocation in the cochlear implant program to complement the residual acoustic hearing range in the implanted ear, with minimal overlap or gap between the two. For example, if the usable residual low-frequency hearing range is from 125-750 Hz, using this approach, the electric range of the short-electrode CI would be set to 750-8000 Hz. Additionally, the acoustic range for the contralateral ear is typically programmed independent of the implant ear. In this study, we aimed to determine what type of programming strategy would be optimal for short-electrode CI users who have lost residual hearing in the implanted ear. Would a lower frequency experimental program, i.e., a frequency allocation that provides the full range of frequencies to replace the lost residual hearing, provide better speech perception scores than a narrower frequency allocation? Or would the increased overlap with the residual hearing in the contralateral, non-implanted ear, as well as decreased spectral resolution as a result of wider analysis filters for each available channel in the electrode array, lead to poorer performance?

A secondary goal was to determine if spectral shifts in the CI program also induced shifts in pitch perception. Previous work has shown that pitch perception can shift by as much as two octaves over months to years of experience with a Hybrid CI (Reiss et al., 2007, 2008). Pitch perception shifts over time may explain why pitch perception measured in standard long-electrode CI users is one to three octaves lower than expected based on the electrode location on the basilar membrane (Greenwood, 1990; Blamey et al., 1996; Dorman et al., 1994; Boex et al., 2006). The pitch shift is likely driven by spectral discrepancies between CI frequency allocations and residual hearing (Reiss et al., 2008), or by spectral discrepancies between two CIs in subjects using bilateral cochlear implants (Reiss et al., 2011). In fact, more recent studies have shown pitch perception measured immediately after implantation to be more closely aligned with predictions based on cochlear location (Eddington et al., 1978; McDermott et al., 2009; Carlyon et al., 2010). For the subjects in this study, who have already had years of experience with the CI, will changes in the frequency allocation again lead to changes in the perceived pitch of the implant electrodes?

These two questions were addressed in a single experiment. Three short-electrode CI users who had lost all residual hearing in the implanted ear were recruited for this study. All subjects were fit with an experimental CI program, providing a lower frequency limit and a wider frequency range than their clinical listening program, and used this for at least 2.5 months. Speech and pitch perception data were collected at multiple intervals throughout the study to determine the effect of altering the CI frequency allocation on speech perception and pitch perception.

Methods

Experimental design

Three subjects (S1, S2, and S3) with a Nucleus Hybrid S8 short-electrode CI participated in this study. Candidacy for the Hybrid S8 clinical trial was based on the following criteria: pre-operative CNC word scores of 10-50% in the worse (to be implanted) ear and <60% in the better ear, audiogram profiles of mild-moderate sensorineural hearing loss below 500 Hz and profound hearing loss at higher frequencies, and post-lingual onset of deafness. Generally, these subjects had previously tried hearing aids with no success, and had speech recognition scores that exceeded the candidacy criteria for a traditional long-electrode CI. All three subjects were implanted at the University of Iowa and subsequently lost significant amounts of residual hearing (i.e., greater than 30 dB HL) in the implanted ear within the first year of implant use. As a result, the subjects discontinued use of a HA in the ear ipsilateral to the CI.

The study consisted of three visits. At the first visit, all subjects were using their CI programmed with a relatively narrow frequency allocation (e.g., 688-7938 Hz) plus their own HA in the contralateral ear. Each subject was provided with a loaner CI processor that was programmed with an experimental CI frequency allocation. The experimental program was created by lowering the lower frequency boundary to effectively provide a wider frequency range (e.g., 188-7938 Hz) through the CI. Speech and pitch perception tests were administered to the subjects on the day of fitting. Clinical speech perception tests were administered first using both the experimental and the clinical programs immediately after device programming. Subjects were given at least an hour of listening time before pitch perception testing (typically over the lunch hour). Then pitch perception testing was administered, followed by consonant and vowel perception tests, if there was time. At the end of the first visit, subjects were instructed to wear the experimental program exclusively up to the third visit (approximately 2.5 to 5 months after fitting). Subjects returned for the second visit after one to two months, at which time only pitch perception testing was conducted (with the exception of S3, who was reprogrammed again at the second visit and underwent additional speech testing with the reprogrammed experimental map in addition to pitch testing). At the final visit, the speech and pitch perception tests were re-administered using both the experimental and the clinical programs. In addition, at the conclusion of the study, the subjects’ speech processors were reprogrammed according to their subjective preferences for the experimental or clinical program.
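The trade-off between a wider frequency range and wider per-channel analysis filters can be illustrated with a short calculation. The sketch below assumes, purely for illustration, that the allocation range is divided into six logarithmically spaced bands, one per electrode; the actual filter boundaries used by the clinical fitting software may differ, and the function names are hypothetical.

```python
import math

def log_spaced_edges(low_hz, high_hz, n_channels=6):
    """Band edges for n_channels log-spaced analysis bands (illustrative assumption)."""
    ratio = (high_hz / low_hz) ** (1.0 / n_channels)
    return [low_hz * ratio ** i for i in range(n_channels + 1)]

def octaves_per_channel(low_hz, high_hz, n_channels=6):
    """Width of each band in octaves when the range is split evenly on a log axis."""
    return math.log2(high_hz / low_hz) / n_channels

# Example allocations taken from the text: clinical vs. experimental
clinical = (688.0, 7938.0)
experimental = (188.0, 7938.0)

for name, (lo, hi) in (("clinical", clinical), ("experimental", experimental)):
    edges = [round(e) for e in log_spaced_edges(lo, hi)]
    print(name, edges, f"{octaves_per_channel(lo, hi):.2f} octaves per channel")
```

Under this assumption, lowering the lower boundary from 688 Hz to 188 Hz widens each of the six bands from roughly 0.6 to roughly 0.9 octaves.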

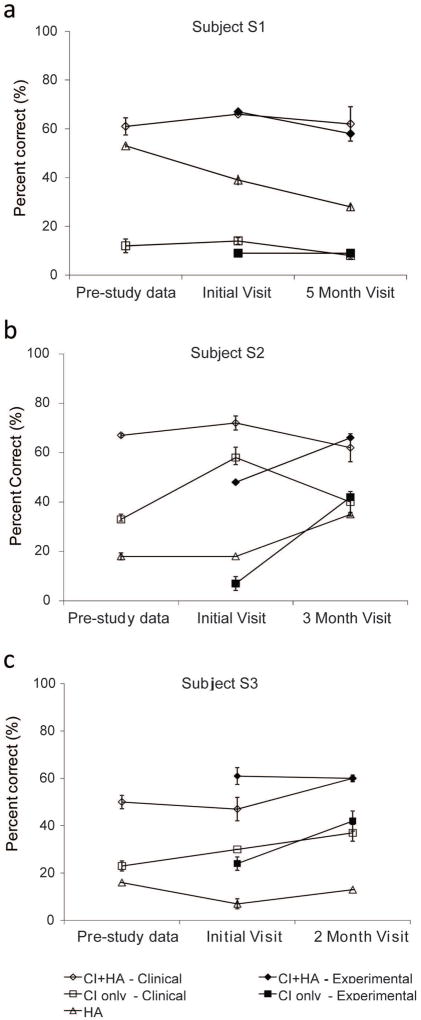

Unaided audiometric thresholds and sound field thresholds using the cochlear implant and hearing aid devices were measured at the first visit. Unaided pure tone audiometric thresholds for the contralateral ear were obtained using insert earphones for octave and inter-octave frequencies from 125-8000 Hz. Aided audiometric thresholds for the CI and HA were measured in the sound field using narrowband noise at all octave frequencies from 250-4000 Hz for each subject. Individual audiometric thresholds collected in unaided and aided conditions are shown in Figure 1, panels a-c. Throughout the study, unaided audiometric thresholds did not vary by more than 10 dB across the measured frequencies for any subject.

Figure 1. Aided and unaided audiometric thresholds for subjects S1, S2, and S3.

A. Subject S1. B. Subject S2. C. Subject S3. Frequency is shown along the x-axis from 0.125 to 8.0 kHz and threshold is shown (in dB HL) along the y-axis. Unaided thresholds obtained using insert earphones in the contralateral ear are shown by the filled circles. Aided sound field thresholds for the CI are indicated by the squares, and for the hearing aid, by the diamonds.

Hearing history and CI and HA programming information are described in detail for each subject as follows.

Subject S1

Subject S1 was a male subject with a history of noise exposure and progressive hearing loss, bilaterally, since the age of 38. This subject was implanted with a Nucleus Hybrid S8 short-electrode CI in the left ear on September 15, 2006 at the age of 63. Pre-operative thresholds below 1000 Hz for the implanted ear ranged between 25-60 dB. In the contralateral ear, he wore a Phonak Claro behind-the-ear (BTE) hearing aid. The subject experienced a complete loss of hearing in the implanted ear by three months of CI use. During the first 19 months of CI use, the CI was programmed with three different programs of 188-7938 Hz, 563-7938 Hz, and 688-7938 Hz. The subject reported a preference for the program from 688-7938 Hz.

At the initial study visit, the subject was fit with an experimental program with a wider frequency range of 188 to 7938 Hz. The subject wore the experimental program for five months during the field trial. At the conclusion of the study, the subject reported a preference for the frequency allocation of 688-7938 Hz in the clinical program over the 188-7938 Hz experimental program. The subject was then fit with two clinical programs, 438-7938 Hz and 688-7938 Hz, and a year later, the subject reported primarily using the frequency allocation of 688-7938 Hz.

Subject S2

Subject S2 was a female subject with a history of bilateral, progressive sensorineural hearing loss since the age of 39. This individual was implanted on June 10, 2005 in the right ear with a Nucleus Hybrid short-electrode CI at age 55. Pre-operative thresholds below 1000 Hz in the implanted ear ranged from 20-80 dB. The participant experienced hearing fluctuations in the implanted ear during the first 6 to 12 months following implantation, which resulted in a complete loss of residual hearing by 24 months. During the first 23 months of implant use, the subject was given trial programs with frequency ranges of 688-7938 Hz and 1063-7938 Hz. From 23-33 months of implant use, the subject’s preferred CI listening program was set with a frequency range of 563 to 7938 Hz.

The subject wore an Oticon SY1 in-the-ear (ITE) hearing aid in the contralateral ear.

At the first visit, the experimental program was fit using a frequency range of 188 to 7938 Hz. The subject wore this experimental program for approximately three months. At the conclusion of the study, the subject reportedly preferred the existing clinical program with a frequency allocation of 563-7938 Hz over the 188-7938 Hz experimental program.

Subject S3

Subject S3 was a male subject with a reported history of noise exposure and sensorineural hearing loss, bilaterally, since the age of 48. Pre-operative thresholds below 1000 Hz in the implanted ear ranged from 30-60 dB. This subject was implanted with a Nucleus Hybrid S8 short-electrode CI in the left ear on November 21, 2003 at the age of 69 and experienced a decrease in residual hearing within three months following activation of his CI. During the first 24 months of CI use, the subject was given the opportunity to try different frequency ranges of 188-7938, 688-7938, and 3063-7938 Hz. From 24-58 months of CI use, the CI was programmed using a frequency range of 688-7938 Hz.

The subject wore a Widex Senso Diva SD-9M BTE hearing aid in the contralateral ear.

At the initial study visit, the experimental program was fit using a frequency range of 188-7938 Hz. The subject reportedly was unable to tolerate this program and experienced significant difficulty understanding speech in everyday listening situations; the subject reverted to wearing his clinical program after less than a week.

In response to the subject’s feedback, the low-frequency boundary of the experimental program was changed from 188 to 438 Hz at the second visit, which occurred one month after the initial fitting. The new experimental program was acceptable to the subject, as he reported a remarkable subjective improvement using the frequency range of 438-7938 Hz compared to both the previous experimental program (i.e., 188-7938 Hz) and his previous clinical program (i.e., 688-7938 Hz). In total, the subject wore this new experimental MAP for 2.5 months. At the conclusion of the study, the experimental program using a frequency range of 438-7938 Hz was the subject’s preferred listening program.

Cochlear implant and hearing aid programming and verification

Following programming of the experimental frequency allocation, T- and C-levels were re-measured for all active electrodes in the Hybrid S8 device (out of 6 electrodes total) using standard clinical procedures. As for other Nucleus devices, electrodes are numbered from the basal to apical direction, with electrode 6 representing the most apical and lowest pitched electrode at approximately 10 mm insertion depth. All other CI programming parameters including stimulation rate, pulse width, and maxima were set identically across conditions and identical to the subjects’ clinical programs. Subjects were given two versions of the experimental program to use: a program where all pre-processing algorithms such as noise reduction and directional microphones were disabled, and an identically set program with Autosensitivity to be used in between visits for listening in noisy situations. The program without any pre-processing was used for speech perception testing at each visit during the study.

Because the study participants had their own HA in the contralateral ear, the fitting of this HA was verified using a NAL-NL1 hearing aid prescription (Byrne et al., 2001) at the initial study visit. Real ear measurements were obtained using a Verifit real ear analyzer for a speech input presented at 65 dB SPL and for a Maximum Power Output (MPO) setting at 85 dB SPL. For all subjects, the HA fit was determined to be appropriate as the output of the HA met the low-frequency NAL-NL1 targets (i.e., 250 and 500 Hz).

Finally, aided sound field thresholds were measured using the CI-only and HA-only to verify the programming of each device along the speech spectrum (refer to Figure 1a-c). Sound field thresholds were obtained at all octave frequencies in dB HL from 250 to 4000 Hz using narrow band noise presented from a clinical audiometer. All speech perception and audiometric threshold testing was performed in a sound-treated IAC booth in the Audiology department of the University of Iowa Hospitals and Clinics.

Speech perception

The Consonant-Nucleus-Consonant (CNC) monosyllabic word recognition test (Tillman & Carhart, 1966) and the Hearing In Noise Test (HINT; Nilsson, Soli, and Sullivan, 1994) were administered to assess speech perception abilities using the experimental and clinical programs. For the CNC word test, the stimuli consisted of two lists of 50 recorded CNC words presented in quiet. All stimuli were presented in the sound field at 70 dB SPL[C] at a distance of 1 m. The percentage of correctly repeated words, ranging from 0 to 100, represented each subject’s performance. CNC word scores were collected using the subject’s cochlear implant plus contralateral hearing aid (CI+HA), cochlear implant alone (CI-only), and contralateral hearing aid alone (HA-only). All subjects completed the CNC word test at the initial and final visits of the study.

Next, the HINT in noise was administered using recorded materials. HINT sentences were presented at 70 dB SPL[C] and the noise was an eight-talker babble presented at either +5 or +10 dB signal-to-noise ratio (SNR). The SNR was determined individually for each subject such that ceiling and floor effects were avoided. For example, the SNR that elicited sentence scores between 20% and 80% was selected and used throughout the study. Four lists of 10 sentences each were presented per condition and lists were randomized across subjects. Speech and noise were presented from a front-facing loudspeaker at 1 m distance. HINT sentences were scored by percent correct and ranged from 0 (poor) to 100 (excellent). The HINT was administered using the same conditions as CNC words: CI+HA, CI-only, and HA-only. Due to time constraints while testing, the HA-only condition at the initial study visit was not completed for subject S3.

Two subjects (S1 and S3) were also tested on consonant and vowel perception. For consonant perception testing, 16 consonants were presented in an /a/-consonant-/a/ context and spoken by four different talkers (Turner et al., 1995). Stimuli were presented from a front-facing loudspeaker at 63 dB SPL. In most cases, the consonant set was presented twice, and scores were averaged over the two presentations. Performance was tested under two conditions: CI+HA, and CI-only. In both conditions, the implant ear was plugged to ensure that no sound was reaching that ear. For vowel perception testing, 12 vowel sounds were presented in a /h/-vowel-/d/ context, with each vowel spoken by 20 different talkers (Henry et al., 2005). Performance was tested similarly as for consonants; however, due to time constraints, data was typically obtained only for the acoustic plus electric condition and for one repetition only.

In contrast to the CNC word recognition and HINT sentence tests, consonant and vowel perception tests were administered using the clinical programs at the start of the study, and using the experimental program at the conclusion of the study. This method was implemented to balance time constraints and to ensure that each subject had at least three months of listening experience with the program to be tested at the time of assessment. Note that consonant and vowel perception testing was completed for subjects S1 and S3 only. Subject S2 was unable to complete this testing due to testing fatigue. Results from the consonant and vowel testing were analyzed for place, manner and voicing confusions (Miller and Nicely, 1955). Vowel results were analyzed for differences in formant and duration discrimination (Xu et al., 2005).

For all speech perception tests, the contralateral ear in the CI-only condition was both plugged and muffed (to provide attenuation of greater than 30 dB, especially important for testing Hybrid subjects with mild low-frequency hearing loss in the contralateral ear).

Pitch Perception

Electric-to-acoustic pitch matches were conducted using a computer to control both electric and acoustic stimulus presentations. Electric stimuli were delivered to the CI using NIC2 cochlear implant research software (Cochlear) via the implant programming interface. Stimulation of each electrode consisted of a pulse train of 25 μsec biphasic pulses presented at 1200 pps with a total duration of 500 ms. The pulse rate of 1200 pps per electrode was selected to reduce the effects of any temporal cues on pitch. The electrode ground was set to monopolar stimulation with both the ball and plate electrodes active (MP1+2). The level of the electric stimulation for each electrode was set to a “medium loud and comfortable” current level.

Acoustic stimuli were delivered using a sound card and headphones. Acoustic tones were presented to the contralateral ear and set to “medium loud and comfortable” levels. Loudness was balanced across all tone frequencies. Then, each CI electrode was loudness balanced with the acoustic tones to reduce loudness effects on electric-to-acoustic pitch comparisons.

Generally, a two-interval, forced-choice constant-stimulus procedure was used. One interval contained the electric pulse train delivered to a particular electrode in the implant ear, and the other interval contained the acoustic tone delivered to the non-implanted ear, with the order of presentation varied. The electric and acoustic stimuli were each 500 ms in duration and separated by a 500 ms inter-stimulus interval. The patient was instructed to indicate on a touch screen the interval that contained the higher pitched stimulus. Trials were repeated by holding the stimulated electrode constant and varying the acoustic tone frequency in ¼ octave steps in pseudorandom sequence to reduce possible order effects (Reiss et al., 2007, 2011). Specifically, due to the difficulty of the electric-to-acoustic comparison, it has been observed in all subjects that the previous comparison tone influences the response to the subsequent comparison tone, such that the pitch of the electrode is judged to be higher in pitch with a descending sequence than with an ascending sequence (refer to Table 1). Thus, the sequence is counterbalanced to “average” out these effects, with the first half of the pseudorandom sequence mirrored by the second half. In addition, the first tone frequency in the sequence is always selected to be the highest audible frequency, because the tone at the upper frequency boundary cannot be counterbalanced; otherwise the highest tone frequency will always be preceded by a lower-frequency tone that increases the likelihood that this tone is judged as higher in pitch than the electric tone. This measure is likely to reduce the effects of acoustic tone frequency range on pitch match psychometric functions that were reported previously (Carlyon et al., 2010), although further study is needed to verify this. The sequence itself is selected from a subset of a Latin square set of sequences. An example sequence is shown in Table 1; the number of audible tone frequencies determines the length of the sequence, with each tone frequency repeated 6 times in this case. The exact same tone sequence was used in each run and session. Due to time constraints, pitch matches were conducted for three or four electrodes only and limited to those electrodes within the residual hearing frequency range of the non-implanted ear.
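The ¼-octave tone set and the mirrored ordering can be sketched as follows. The first-half ordering is copied from trials 0-50 of Table 1; truncating the tone frequencies to whole Hz is an assumption inferred from the tabulated values, and the function names are hypothetical. How the first half is drawn from the Latin square is not reproduced here.

```python
import math
from collections import Counter

def quarter_octave_tones(low_hz=125.0, high_hz=2000.0):
    """Tone frequencies in 1/4-octave steps, truncated to whole Hz (assumed from Table 1)."""
    n_steps = round(4 * math.log2(high_hz / low_hz))
    return [math.floor(low_hz * 2 ** (k / 4)) for k in range(n_steps + 1)]

def mirrored_sequence(first_half):
    """Counterbalance order effects: the second half replays the first half in reverse."""
    return list(first_half) + list(reversed(first_half))

# First half of the example sequence (trials 0-50 in Table 1)
FIRST_HALF = [
    2000, 1681, 1414, 1189, 1000, 840, 707, 594, 500, 420, 353, 297, 250, 210, 176, 148, 125,
    1189, 594, 297, 148, 1414, 707, 353, 176, 1681, 840, 420, 210, 2000, 1000, 500, 250, 125,
    707, 210, 840, 250, 1000, 297, 1189, 353, 1414, 420, 125, 1681, 500, 148, 2000, 594, 176,
]

tones = quarter_octave_tones()
sequence = mirrored_sequence(FIRST_HALF)
repeats = Counter(sequence)

print(len(tones), "tones,", len(sequence), "trials")
print("repetitions per tone:", set(repeats.values()))
```

With 17 audible tones and three passes through the set in the first half, mirroring yields 102 trials with each tone presented six times, and the sequence begins at the highest audible frequency as described above.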

Table 1. An example sequence of tone frequencies used in the electric-to-acoustic pitch comparison, and the responses.

Columns 1-3 indicate the trial number, the acoustic tone frequency, and the subject response, respectively. The sequence is selected from a subset of a Latin square sequence, with the second half mirroring the first half of the sequence to average out observed sequence effects. The range of audible tone frequencies determines the length of the sequence. Subject responses of 1 indicate that the acoustic tone was higher in pitch than the electric tone, and responses of 0 indicate that the acoustic tone was lower in pitch. The exact same tone sequence was used in each run and session (in this case, for all electrodes for subject S3); the responses correspond to the data shown in Figure 4.

| Trial | Acoustic frequency (Hz) | Response (0=Lower, 1=Higher) |
|---|---|---|
| 0 | 2000 | 1 |
| 1 | 1681 | 1 |
| 2 | 1414 | 1 |
| 3 | 1189 | 0 |
| 4 | 1000 | 0 |
| 5 | 840 | 0 |
| 6 | 707 | 0 |
| 7 | 594 | 0 |
| 8 | 500 | 0 |
| 9 | 420 | 0 |
| 10 | 353 | 0 |
| 11 | 297 | 0 |
| 12 | 250 | 0 |
| 13 | 210 | 0 |
| 14 | 176 | 0 |
| 15 | 148 | 0 |
| 16 | 125 | 0 |
| 17 | 1189 | 1 |
| 18 | 594 | 1 |
| 19 | 297 | 0 |
| 20 | 148 | 0 |
| 21 | 1414 | 1 |
| 22 | 707 | 1 |
| 23 | 353 | 0 |
| 24 | 176 | 0 |
| 25 | 1681 | 1 |
| 26 | 840 | 1 |
| 27 | 420 | 0 |
| 28 | 210 | 0 |
| 29 | 2000 | 1 |
| 30 | 1000 | 1 |
| 31 | 500 | 0 |
| 32 | 250 | 0 |
| 33 | 125 | 0 |
| 34 | 707 | 1 |
| 35 | 210 | 0 |
| 36 | 840 | 1 |
| 37 | 250 | 0 |
| 38 | 1000 | 1 |
| 39 | 297 | 0 |
| 40 | 1189 | 1 |
| 41 | 353 | 1 |
| 42 | 1414 | 1 |
| 43 | 420 | 1 |
| 44 | 125 | 0 |
| 45 | 1681 | 1 |
| 46 | 500 | 1 |
| 47 | 148 | 0 |
| 48 | 2000 | 1 |
| 49 | 594 | 0 |
| 50 | 176 | 0 |
| 51 | 176 | 0 |
| 52 | 594 | 1 |
| 53 | 2000 | 1 |
| 54 | 148 | 0 |
| 55 | 500 | 1 |
| 56 | 1681 | 1 |
| 57 | 125 | 0 |
| 58 | 420 | 1 |
| 59 | 1414 | 1 |
| 60 | 353 | 0 |
| 61 | 1189 | 1 |
| 62 | 297 | 1 |
| 63 | 1000 | 1 |
| 64 | 250 | 0 |
| 65 | 840 | 1 |
| 66 | 210 | 0 |
| 67 | 707 | 1 |
| 68 | 125 | 0 |
| 69 | 250 | 1 |
| 70 | 500 | 1 |
| 71 | 1000 | 1 |
| 72 | 2000 | 1 |
| 73 | 210 | 0 |
| 74 | 420 | 1 |
| 75 | 840 | 1 |
| 76 | 1681 | 1 |
| 77 | 176 | 0 |
| 78 | 353 | 1 |
| 79 | 707 | 1 |
| 80 | 1414 | 1 |
| 81 | 148 | 0 |
| 82 | 297 | 1 |
| 83 | 594 | 1 |
| 84 | 1189 | 1 |
| 85 | 125 | 0 |
| 86 | 148 | 0 |
| 87 | 176 | 0 |
| 88 | 210 | 1 |
| 89 | 250 | 0 |
| 90 | 297 | 1 |
| 91 | 353 | 1 |
| 92 | 420 | 1 |
| 93 | 500 | 1 |
| 94 | 594 | 1 |
| 95 | 707 | 1 |
| 96 | 840 | 1 |
| 97 | 1000 | 1 |
| 98 | 1189 | 1 |
| 99 | 1414 | 1 |
| 100 | 1681 | 1 |
| 101 | 2000 | 1 |

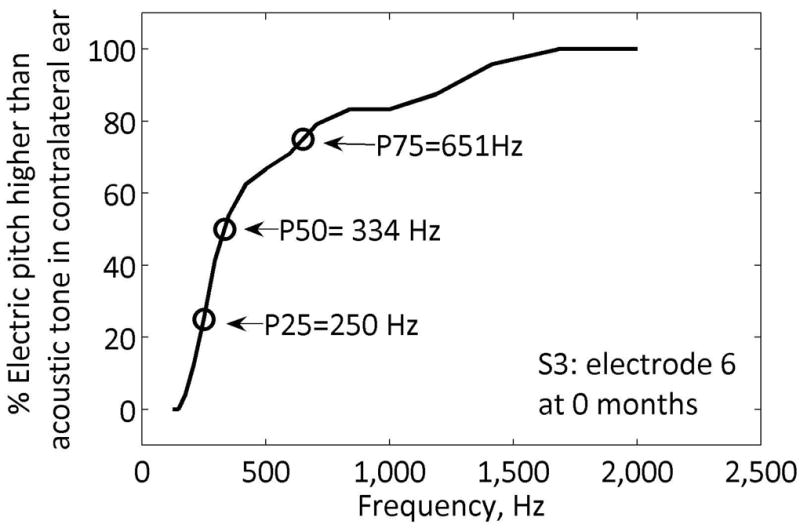

The averaged pitch-match responses were used to construct psychometric functions for each cochlear implant electrode. The range of pitch-matched frequencies was computed as those falling between the 25% and 75% points on the psychometric function (refer to Figure 2). For a pitch match result to be considered valid, the psychometric function had to reach 100%, i.e., at least one acoustic tone had to be judged as higher in pitch than the electrode 100% of the time. In some cases (i.e., for subject S2 at the second visit), the electric stimulation produced a pitch sensation too high-pitched for the subject to consistently rank any acoustic tones as always higher in pitch, due to the upper limit of the residual low-frequency hearing. If this occurred, the pitch matches were recorded as “out of range”.

Figure 2. Example acoustic-to-electric pitch match for a cochlear implant electrode.

The percentage of responses for which the electric pitch was higher than the acoustic tone pitch is plotted versus tone frequency. Arrows indicate the 25%, 50%, and 75% points.
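A minimal sketch of how a psychometric function and its 25%, 50%, and 75% points could be derived from trial responses is given below. The response coding follows Table 1 (1 = acoustic tone judged higher than the electrode). Linear interpolation on a log-frequency axis and the use of the first upward crossing are assumptions made for illustration, since the text does not specify how the criterion points were located; the trial data are hypothetical.

```python
from collections import defaultdict

def psychometric(trials):
    """Proportion of 'acoustic higher' responses per tone frequency, sorted by frequency."""
    counts = defaultdict(lambda: [0, 0])  # freq -> [n_higher, n_trials]
    for freq, resp in trials:
        counts[freq][0] += resp
        counts[freq][1] += 1
    return [(f, counts[f][0] / counts[f][1]) for f in sorted(counts)]

def crossing(curve, criterion):
    """First frequency at which the curve reaches the criterion (log-frequency interpolation)."""
    if curve[0][1] >= criterion:
        return float(curve[0][0])
    for (f0, p0), (f1, p1) in zip(curve, curve[1:]):
        if p0 < criterion <= p1:
            frac = (criterion - p0) / (p1 - p0)
            return f0 * (f1 / f0) ** frac
    return None  # criterion never reached

def is_valid(curve):
    """Validity rule from the text: at least one tone judged higher 100% of the time."""
    return max(p for _, p in curve) == 1.0

# Hypothetical responses: (tone frequency in Hz, 1 if the tone was judged higher)
trials = [(250, 0), (250, 0), (500, 0), (500, 1),
          (1000, 1), (1000, 1), (2000, 1), (2000, 1)]

curve = psychometric(trials)
low, mid, high = (crossing(curve, c) for c in (0.25, 0.50, 0.75))
print("valid" if is_valid(curve) else "out of range", low, mid, high)
```

If `is_valid` returns false, the match would be recorded as "out of range" rather than reported as a frequency range.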

Results

Speech Perception

CNC and HINT Tests

CNC word and HINT sentence testing was conducted for both the clinical and experimental programs at the start and the conclusion of the study. If speech perception data was available prior to the onset of the study, this was also plotted for reference. Statistical significance for the CNC and HINT speech perception tests was determined based on the binomial model using critical difference scores at a 95% confidence level (Thornton and Raffin, 1978). For the CNC word test, statistical significance was calculated from a 100 word list (Carney and Schlauch, 2007), and for HINT sentences, this was calculated from a 208 item list (i.e., 52 items times 4 lists each).
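To give a sense of how score differences relate to list length under a binomial model, the sketch below uses a pooled two-proportion normal approximation. This is a stand-in chosen for illustration and is not the published Thornton and Raffin critical-difference tables, so borderline cases may be classified differently; the function name and the 1.96 criterion (two-sided 95%) are assumptions.

```python
import math

def scores_differ(pct_a, pct_b, n_items, z_crit=1.96):
    """Two-proportion z-test (pooled, normal approximation) for two percent-correct scores."""
    p_a, p_b = pct_a / 100.0, pct_b / 100.0
    pooled = (p_a + p_b) / 2.0  # both scores are based on the same number of items
    se = math.sqrt(pooled * (1.0 - pooled) * 2.0 / n_items)
    if se == 0.0:
        return False  # both scores at floor or both at ceiling
    return abs(p_a - p_b) / se > z_crit

# Item counts from the text: 100 for CNC words, 208 for HINT sentences
print(scores_differ(72, 48, 100))   # large difference between two CNC scores
print(scores_differ(67, 66, 100))   # small difference between two CNC scores
print(scores_differ(74, 62, 208))   # moderate difference between two HINT scores
```

Because the standard error shrinks with the number of items, the same percentage-point difference is more likely to be flagged for the 208-item HINT score than for the 100-item CNC score.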

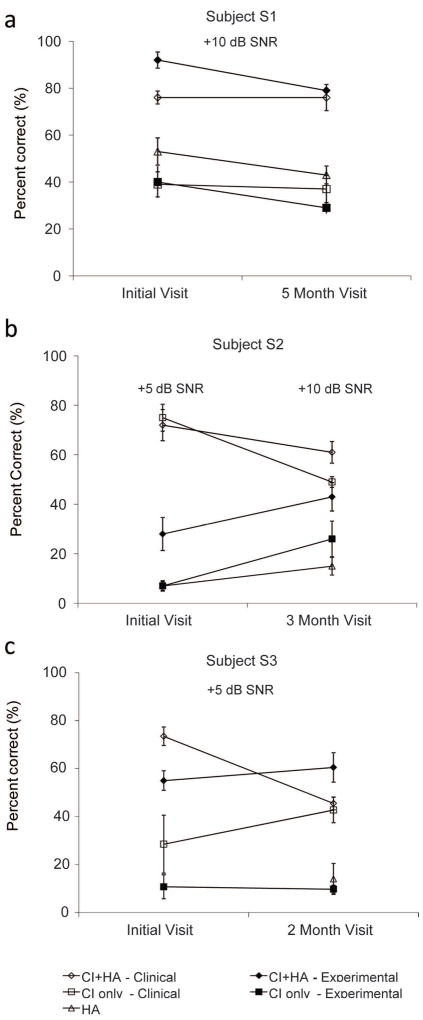

Figure 3 shows CNC word recognition results for all three subjects for the following conditions: CI+HA, CI-only, and HA-only. For the CI+HA condition, no benefit was observed with the experimental CI program compared to the clinical program for any subject (refer to filled versus open diamonds, as shown in Figure 3). For subject S1, as seen in Figure 3a, scores initially did not differ between the two programs (67% with the experimental program versus 66% for the clinical program), and scores did not change for either program over time (58% for the experimental and 62% for the clinical program). For subject S2 (see Figure 3b), a crossover effect was observed. Initially, scores with the clinical program were significantly higher (72%) than scores with the experimental program (48%). However, performance improved significantly over time with the experimental program (66%) and a decrement in scores was observed with the clinical program (62%). Compared to use of the clinical map at the start of the study, scores were lower for both conditions, but not significantly different. For subject S3 (Figure 3c), performance with the final experimental program (61%) was the same as performance with the clinical program at the end of the study (60%). In summary, after listening experience with the experimental program, none of the subjects performed better with the experimental program compared to their best performance with the clinical program using both the CI and HA.

Figure 3. CNC word recognition results over the duration of the study.

a. Subject S1. b. Subject S2. c. Subject S3. For each subject, the CNC percent correct scores are shown prior to starting the study (approximately -12 months), at the start of the study (initial visit or 0 months), and at the end of the study (2-5 months). Open symbols indicate scores with the clinical frequency allocation, filled symbols indicate scores with the experimental frequency allocation. Diamonds indicate CI+HA scores, squares indicate CI only scores, and triangles indicate HA-alone scores.

Performance for the CI-only condition also did not show a significant improvement with the experimental program compared to the clinical program after listening experience (filled versus open squares in Figure 3). In addition, performance deteriorated significantly when the HA was removed compared to the CI+HA condition. The trends for each subject were similar to those seen in the CI+HA condition, though the overall scores were lower. Scores for subject S2 improved significantly over time using the experimental program in the CI-only condition (i.e., from 6% to 42%), but scores at the end of the study did not differ significantly from those with the clinical program (40%). For subjects S1 and S3, no significant change was observed in CI-only scores between the conditions.

HA-only results are shown in Figure 3 by the triangles. For subject S2, HA-only scores fluctuated over time. This subject’s scores improved significantly over time by 17%, even though the HA programming parameters and acoustic hearing in that ear were unchanged. For the other two subjects, no significant change was observed in HA-only scores throughout the study.

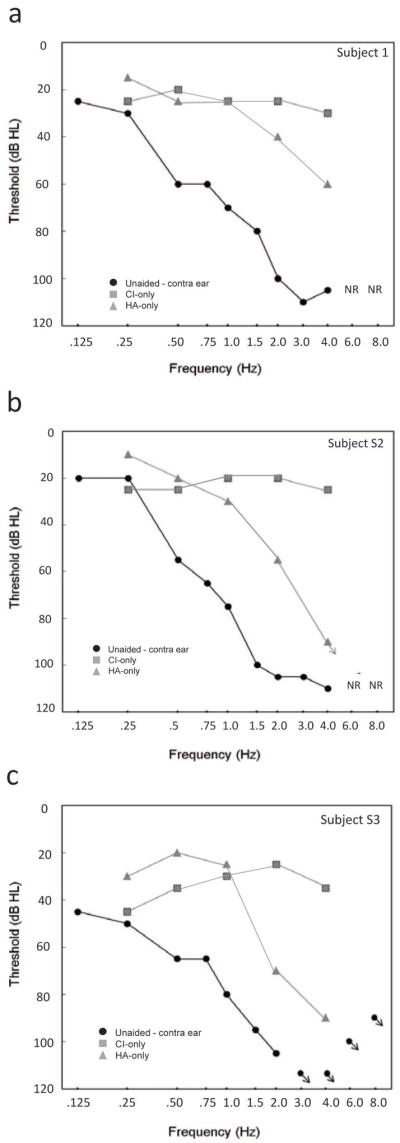

Figure 4 shows the results for the HINT sentence test in noise for all three subjects. Subjects were tested at different SNRs, as indicated in the text within the figures. In the CI+HA condition, all subjects showed either no difference or a decrement in performance with the experimental program compared to the clinical program. As shown in Figure 4a, subject S1 initially performed best at +10 dB SNR with the experimental program (92%), but over time performance dropped to 80%, which was not significantly different from the clinical program at the last visit (76%). Subject S2 initially performed significantly better with the clinical program (72%) than with the experimental program (28%), but over time, performance with the experimental program improved to 43% after three months (Figure 4b). However, this subject was unable to perform the HINT testing with either program at the original +5 dB SNR at the conclusion of the study, and instead performed the test at +10 dB SNR. Thus, performance for this subject was best with the clinical program at the start of the study. As shown in Figure 4c, subject S3 showed a crossover similar to that of subject S2. More specifically, scores decreased significantly over time with the clinical program (74 vs. 46%), with no change for the experimental program (55 vs. 62%). However, subject S3’s best score with the experimental program (62%) was significantly worse than the best score with the clinical program at the start of the study (74%). Thus, performance for this subject was also best with the clinical program.

Figure 4. HINT sentence-in-noise recognition results over the duration of the study.

a. Subject S1. b. Subject S2. c. Subject S3. For each subject, the HINT scores are shown at the start of the study (initial visit or 0 months) and at the end of the study (3-5 months). Open symbols indicate scores with the clinical frequency allocation, and filled symbols indicate scores with the experimental frequency allocation. Diamonds indicate CI+HA scores, squares indicate CI-only scores, and triangles indicate HA-only scores.

CI-only scores on the HINT sentences in noise showed a different pattern for each subject (refer to the squares in Figure 4a-c). For subject S1, scores were not significantly different between the experimental and clinical conditions. Subject S2 showed a crossover effect, i.e., scores with the experimental condition improved while scores with the clinical condition worsened. For subject S3, scores improved significantly over time for the clinical program only. Using the experimental program, CI-only scores were significantly lower than the CI+HA scores for all subjects at both visits. However, for the clinical program, CI-only scores were not consistently lower than CI+HA scores. That is, subject S2 had similar CI-only (75%) and CI+HA (72%) scores at the start of the study, while subject S3 performed similarly in the CI-only and CI+HA conditions at the end of the study (43 vs. 46%, respectively). HA-only scores decreased significantly for S1, but not for S2, over the duration of the study (see triangles in Figure 4). HA-only scores could not be obtained for S3 at the initial visit due to time constraints.

Speech Perception

Consonant and Vowel Tests

Consonant and vowel perception scores were also measured for the clinical and experimental programs. While performance cannot be directly compared between programs because they were not tested on the same day, the results provide some indication of the differences in speech information available to subjects with each program.

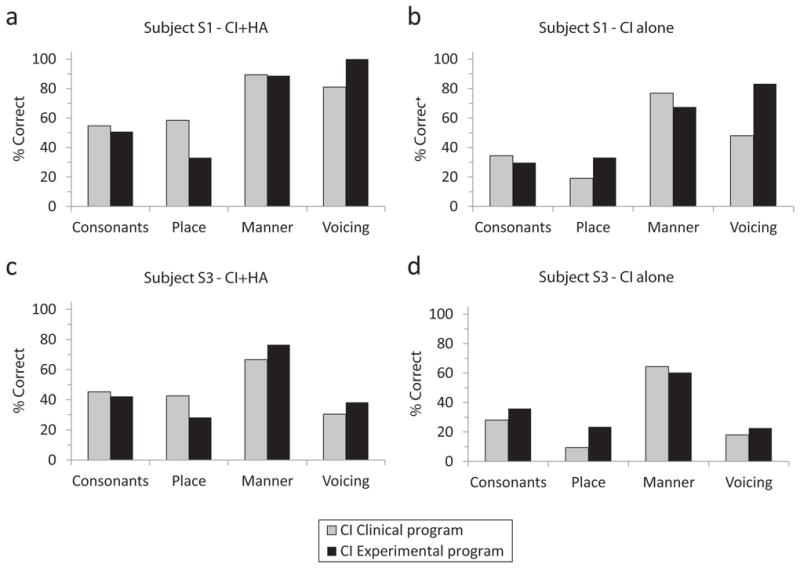

Consonant perception results are shown for subjects S1 and S3 in Figure 5. Performance for the CI+HA condition decreased in each individual when switching from the clinical to the experimental program. However, when the scores were broken down by feature, both subjects showed greater transmission of voicing at a cost to transmission of place information in the CI+HA condition.

Figure 5. Consonant recognition results compared for the clinical and experimental maps for subjects S1 and S3.

Each panel shows consonant and individual feature (place, manner, and voicing) scores for each subject and condition. Gray bars indicate the clinical frequency allocation and black bars indicate the experimental frequency allocation. Subject S1 scores are shown for CI+HA in (a) and CI alone in (b). Subject S3 scores are shown for CI+HA in (c) and CI alone in (d).

Consistent with results from the CNC and HINT speech recognition tests, there was a decrement in consonant perception performance when the HA was removed and the CI was tested alone. Performance for the CI-only condition decreased with the experimental program compared to the clinical program for subject S1, but increased slightly for subject S3. When broken down by feature, the scores for both place and voicing improved at a cost to manner.
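The feature breakdown described above can be illustrated with a short sketch. It assumes that the feature scores are relative information transmitted in the sense of Miller and Nicely (1955), which the reference list suggests but this section does not state; they could equally be feature percent-correct scores. The function name, the four-consonant set, and the confusion counts are hypothetical toy values, not data from this study.

```python
import math
from collections import defaultdict

def relative_info_transmitted(confusions, feature_of):
    """Relative transmitted information for one feature (assumed Miller-Nicely style).

    confusions: dict mapping (stimulus, response) -> count.
    feature_of: dict mapping each consonant to its category on the feature.
    """
    # Collapse consonant confusions into feature-category confusions.
    joint = defaultdict(float)
    for (stim, resp), n in confusions.items():
        joint[(feature_of[stim], feature_of[resp])] += n
    total = sum(joint.values())
    row = defaultdict(float)  # stimulus category totals
    col = defaultdict(float)  # response category totals
    for (s, r), n in joint.items():
        row[s] += n
        col[r] += n
    # Mutual information between stimulus and response categories, in bits.
    transmitted = sum(
        (n / total) * math.log2(n * total / (row[s] * col[r]))
        for (s, r), n in joint.items() if n > 0
    )
    # Stimulus entropy is the most information that could be transmitted.
    stim_entropy = -sum(
        (n / total) * math.log2(n / total) for n in row.values() if n > 0
    )
    return transmitted / stim_entropy if stim_entropy > 0 else 0.0

# Toy listener (hypothetical): voicing is always heard correctly, place is at chance.
toy_confusions = {
    ("b", "b"): 5, ("b", "d"): 5, ("p", "p"): 5, ("p", "t"): 5,
    ("d", "d"): 5, ("d", "b"): 5, ("t", "t"): 5, ("t", "p"): 5,
}
voicing = {"b": "voiced", "d": "voiced", "p": "voiceless", "t": "voiceless"}
place = {"b": "labial", "p": "labial", "d": "alveolar", "t": "alveolar"}

voicing_score = relative_info_transmitted(toy_confusions, voicing)
place_score = relative_info_transmitted(toy_confusions, place)
```

In this toy case all voicing information and no place information is transmitted, an extreme version of the voicing-versus-place tradeoff reported for the CI+HA condition.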

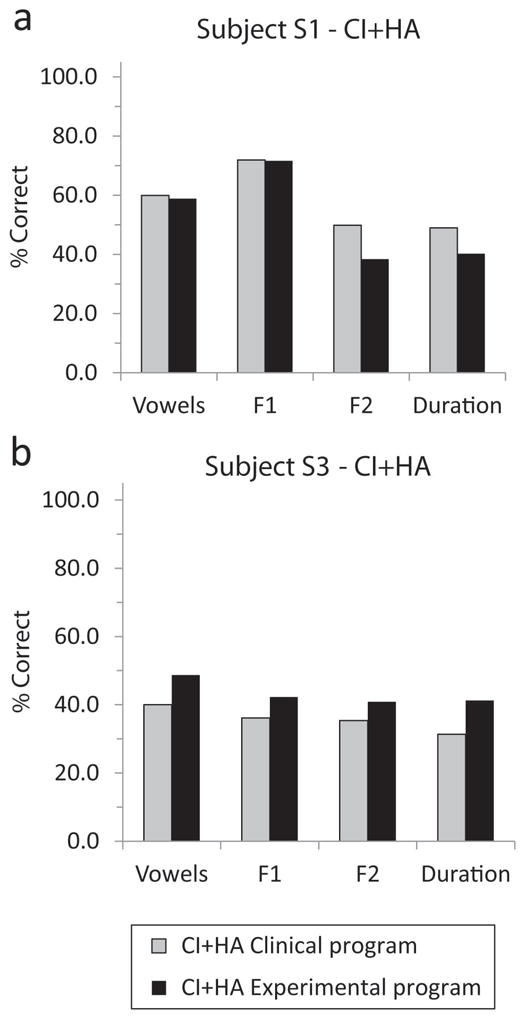

Figure 6 shows vowel perception results for subjects S1 and S3. Subject S3 showed improvement in vowel recognition in the CI+HA condition when using the experimental program compared to the clinical program, and showed improved coding of all features consistent with this overall improvement. Subject S1 did not show any improvement in overall vowel recognition score. When this subject's score was broken down by feature, F1 transmission was unaffected, but F2 and duration transmission decreased, consistent with a loss of high-frequency information as the cost of increased low-frequency information.

Figure 6. Vowel recognition results compared for the clinical and experimental maps for subjects S1 and S3.

Each panel shows vowel and individual feature (F1, F2, and duration) scores for each subject and condition. Gray bars indicate the clinical frequency allocation and black bars indicate the experimental frequency allocation. Subject S1 scores are shown for CI+HA in (a) and subject S3 scores are shown for CI+HA in (b).

Changes in Pitch Perception with New MAPs

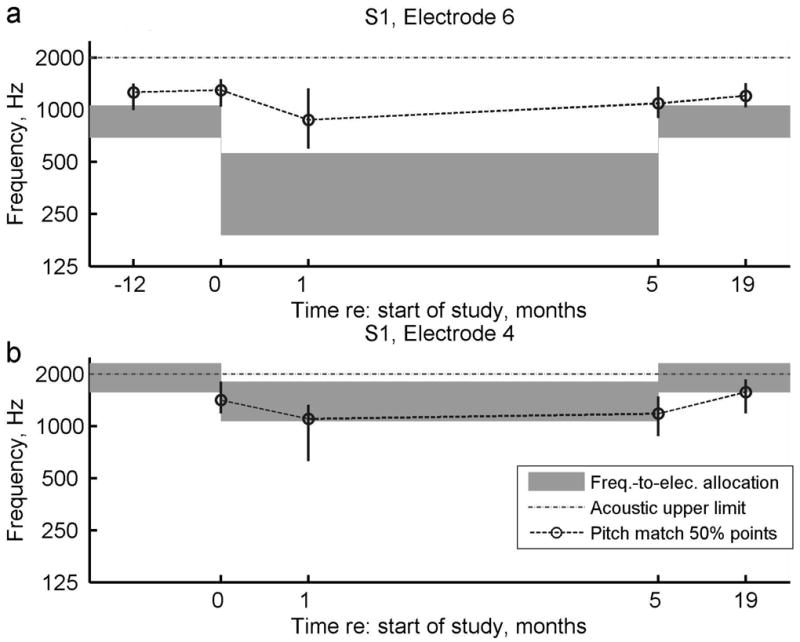

The pitch match results for all three subjects are shown in Figures 7-9. Each figure shows the pitch match for each electrode over time, as well as the corresponding electrode frequency allocations in the shaded regions for comparison. The points indicate the 50% points of the pitch match, and the vertical lines indicate the 25-75% ranges of the pitch match. The lines connecting the 50% points over time are plotted to help visualize the trends and do not necessarily indicate the true time course of the changes. Statistical significance of differences between pitch matches at different times was evaluated using non-parametric bootstrap estimation of 95% confidence intervals (Efron and Tibshirani, 1993; Wichmann and Hill, 2001).
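As an illustration of the bootstrap comparison, the following is a minimal sketch and not the analysis code used in the study. It assumes that each pitch match is summarized by counts of "acoustic tone higher" responses at a set of comparison frequencies, that the 50% point is found by linear interpolation on log frequency, and that a shift between two sessions is significant when the 95% percentile interval of the bootstrapped shift excludes zero. All function names and all response counts are hypothetical.

```python
import math
import random

def fifty_percent_point(freqs_hz, n_higher, n_trials):
    """Frequency at which P('acoustic tone higher') first crosses 0.5."""
    props = [h / n for h, n in zip(n_higher, n_trials)]
    for i in range(len(freqs_hz) - 1):
        p0, p1 = props[i], props[i + 1]
        if p0 < 0.5 <= p1:
            frac = (0.5 - p0) / (p1 - p0)
            lo, hi = math.log2(freqs_hz[i]), math.log2(freqs_hz[i + 1])
            return 2 ** (lo + frac * (hi - lo))
    return None  # the pitch could not be bracketed in this frequency range

def resample_counts(n_higher, n_trials, rng):
    """Resample the binary trial responses with replacement at each frequency."""
    counts = []
    for h, n in zip(n_higher, n_trials):
        trials = [1] * h + [0] * (n - h)
        counts.append(sum(rng.choice(trials) for _ in range(n)))
    return counts

def bootstrap_shift_ci(freqs_hz, session_a, session_b, n_trials, n_boot=1000, seed=0):
    """95% percentile interval of the pitch shift (octaves) from session A to B."""
    rng = random.Random(seed)
    shifts = []
    for _ in range(n_boot):
        a = fifty_percent_point(freqs_hz, resample_counts(session_a, n_trials, rng), n_trials)
        b = fifty_percent_point(freqs_hz, resample_counts(session_b, n_trials, rng), n_trials)
        if a is not None and b is not None:
            shifts.append(math.log2(b / a))
    if not shifts:
        raise ValueError("no bootstrap sample produced a bracketed pitch match")
    shifts.sort()
    lower = shifts[int(0.025 * len(shifts))]
    upper = shifts[min(len(shifts) - 1, int(0.975 * len(shifts)))]
    return lower, upper

# Hypothetical counts of 'acoustic tone higher' responses out of 20 trials each.
freqs = [250, 354, 500, 707, 1000, 1414]
trials = [20] * 6
before = [0, 1, 3, 12, 19, 20]   # session with the clinical allocation
after = [1, 11, 18, 20, 20, 20]  # session after lowering the allocation

ci_low, ci_high = bootstrap_shift_ci(freqs, before, after, trials)
significant_drop = ci_high < 0  # whole interval lies below zero octaves
```

With these toy counts the entire interval lies below zero, which under the stated assumptions would count as a significant downward pitch shift.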

Figure 7. Pitch match results for subject S1 over the duration of the experiment.

a. Pitch changes over time for electrode 6. b. Pitch changes for electrode 4. For reference, pitch matches from 12 months prior to the study and 19 months after starting the study are also shown when available. Circles indicate the center or 50% point of the pitch-match range, vertical lines indicate the 25-75% range, and dashed lines indicate pitch match trends over time. Gray bars indicate the frequency-to-electrode allocations for comparison. Dotted gray lines indicate the upper frequency limit of the acoustic hearing.

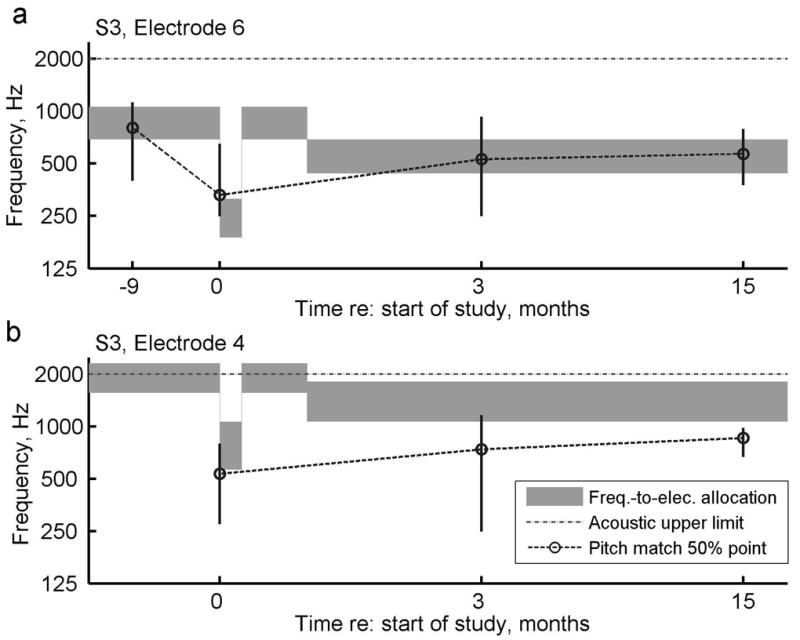

Figure 9. Pitch match results for subject S3 over the duration of the experiment.

a. Pitch changes over time for electrode 6. b. Pitch changes for electrode 4. For reference, pitch matches from 9 months prior to the study and 15 months after starting the study are also shown when available. Circles indicate the center or 50% point of the pitch-match range, vertical lines indicate the 25-75% range, and dashed lines indicate pitch match trends over time. Gray bars indicate the frequency-to-electrode allocations for comparison. Dotted gray lines indicate the upper frequency limit of the acoustic hearing.

Generally, all three subjects had pitch match centers and ranges that tended to follow the frequency allocation trends over time, with some small differences across subjects. Figure 7a shows that for subject S1, the pitch of electrode six dropped slightly at 1 month after introduction of the experimental program, and increased slowly over time after the subject reverted to the original program at 5 months; however, the changes over time were not significant for this electrode. In addition, the pitch of electrode six was always perceived as slightly higher than the frequency range allocated to that electrode. In comparison, the pitch of electrode four showed significant changes both at 1 month versus 0 months (after the experimental program was introduced), and at 1 year versus 5 months (after the subject reverted to the original program; Figure 7b). Unlike electrode six, electrode four was always perceived as slightly lower than the allocated frequency range, even overlapping in pitch with electrode six. The difference in offsets between electrodes could be explained in two ways. Either there was an incomplete adaptation to the frequency allocations for this subject, or electrodes that were initially widely separated in pitch dropped in pitch over time and aligned with the lowest audible frequencies. In other words, over time the same pitch was perceived on all electrodes, a trend that has been observed in some long-electrode CI users (Reiss, unpublished).

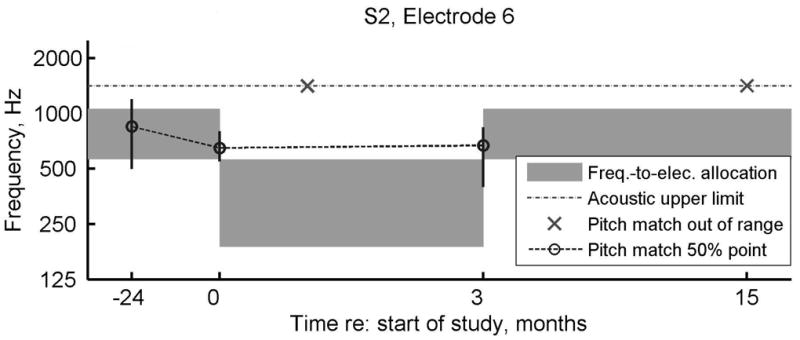

As shown in Figure 8, subject S2 had pitch changes that were smaller than predicted from the change in frequency allocation. Initially, the pitch perceived by this subject was in the middle of the range for the clinical frequency allocation (refer to the gray shaded area in the left part of Figure 8). After the introduction of the experimental program, the pitch changed rapidly, but remained slightly higher than the new frequency allocation (as shown by circles versus the gray shaded area in the middle of Figure 8). In addition, for some time points, the electrode pitch could not be bracketed within the residual acoustic frequency range, suggesting high variability or unreliability for this subject. This subject also reported sleepiness and inability to focus at these particular sessions. The changes were not significantly different over time except at 15 months versus 3 months (after the subject reverted to the original program).

Figure 8. Pitch match results for subject S2 over the duration of the experiment for electrode 6.

For reference, pitch matches from 24 months prior to the study and 15 months after starting the study are also shown. Circles indicate the center or 50% point of the pitch-match range, vertical lines indicate the 25-75% range, and dashed lines indicate pitch match trends over time. Gray bars indicate the frequency-to-electrode allocations for comparison. Dotted gray lines indicate the upper frequency limit of the acoustic hearing, and x symbols indicate when electrode pitch could not be matched within the acoustic hearing frequency range.

Figure 9 shows that subject S3 had pitch changes that closely followed the frequency allocation changes, especially for electrode six (Figure 9a). Electrode four may have also followed the frequency allocation changes, but showed incomplete or slow adaptation at the end of the study (Figure 9b). The changes were significant for electrodes six and four at 0 months (when the experimental program was introduced) versus 1 year prior to the study, and for electrode four at 3 months (when the subject reverted to the original program) versus 0 months.

Notably, for subjects S2 and S3, the measured pitch changes for electrode six occurred on the same day as the fitting of the new frequency allocation (i.e., zero months, as shown in Figures 8 and 9a). More specifically, the pitch changes occurred within 3 to 4 hours after fitting the experimental program in both subjects. In contrast, subject S1 generally had pitch changes that lagged behind the frequency allocation changes. More specifically, the pitch changes for this subject occurred within one month after introducing the experimental program for both electrodes six and four (Figures 7a, b) and several months after reversion to the clinical programming for electrode four (Figure 7b). The one exception was a rapid pitch change within one day on electrode six that occurred at the five-month visit after resetting the CI frequency allocation to the subject’s clinical program (Figure 7a).

Discussion

Overall, for the subjects in this study, use of a lower frequency experimental program compared to a clinical program did not improve speech perception outcomes. Performance on CNC word recognition tests in quiet for the CI+HA condition did not differ between the two programs after listening experience of at least 2.5 months with each program. For HINT sentences in noise, performance was variable among all three subjects and showed a decrement using the experimental program for two of the subjects. In addition, for all speech materials, performance was always better in the CI+HA condition compared to the CI-only condition, consistent with studies of EAS patients with a HA in the same ear as the CI (Gstoettner et al., 2004; Turner et al., 2004; Kiefer et al., 2005; Lorens et al., 2008; Dorman and Gifford, 2010), as well as studies of long-electrode CI users with a HA in the contralateral ear (Armstrong et al., 1997; Tyler et al., 2002; Ching et al., 2004; Flynn & Schmidtke, 2004; Dunn et al., 2005).

The consonant and vowel perception results were not directly comparable across programs because testing was conducted on different days. However, they may provide an indication of the differences in speech information transmission between programs. For the two subjects tested on consonant perception, performance was slightly worse using the experimental program compared to the clinical program. For vowel perception, performance varied between the two subjects tested, in that subject S1 showed no change with the 188 Hz frequency allocation and subject S3 showed slightly improved vowel recognition with the 438 Hz frequency allocation. When broken down by feature, different features were transmitted with the experimental program than with the clinical program, especially for consonants. More consonant voicing information was transmitted with a lower frequency experimental program, while less place information was transmitted, consistent with the presence of more low-frequency information at the cost of frequency resolution of high-frequency information. These tradeoffs, which arise from the limited number of available electrodes, might be avoided if more electrodes become available in future EAS electrode designs.

At the conclusion of the study, only subject S3 showed an improvement with the experimental program for vowel perception in quiet. Subject S3 was also the only participant who subjectively preferred the experimental program, and elected to use this program as his everyday listening program instead of reverting to his clinical program. It is important to emphasize that this subject received a different frequency allocation for the experimental program than the other subjects (the low frequency cutoff was set at 438 Hz instead of 188 Hz). It is possible that this subject preferred the final experimental program because it was perceived as a great improvement over the initial experimental program of 188 Hz, which caused a significant adverse reaction by the subject. The other two subjects, S1 and S2, chose to revert to the existing clinical program based on their subjective impressions and the speech perception results. For subjects S1 and S2, it is possible that residual hearing in the contralateral ear provides good audibility and speech understanding when complemented by a higher frequency clinical allocation provided through the CI, and that further manipulations of the CI frequency range are not beneficial. Alternatively, it is possible that the duration of the study was not long enough to provide the listening experience necessary for speech perception to improve.

Pitch perception, on the other hand, changed as predicted by the lowering of the frequency allocation in the subject’s program. All subjects showed downward shifts in pitch compared to their contralateral acoustic reference after experience with the experimental program. Surprisingly, some of these shifts occurred rather quickly within a few hours of testing with the experimental program, especially for subjects S2 and S3, who had the longest duration of experience with the CI. This finding contrasts with the slow pitch shifts seen over time in newly implanted Hybrid short-electrode CI users, which occur on a scale of months to years after implantation (Reiss et al., 2007). After a slower initial adaptation to the unnatural temporal and spectral excitation patterns produced by the CI, new frequency allocations may induce faster pitch shifts in experienced CI users, much like the fast perceptual adaptation seen within minutes in the normal visual system with prisms in human subjects (e.g., von Helmholtz, 1962; Held, 1965). More rapid changes in experienced CI users may explain why adaptation to speech perception with new frequency allocations occurs relatively rapidly, within three months in some users (Fu et al., 2005).

These preliminary findings suggest that changes in pitch follow the changes in frequency-to-electrode allocation and are consistent with the hypothesis that pitch plasticity occurs in order to minimize perceived spectral mismatches between multiple inputs, such as between acoustic and electric inputs or even bilateral electric inputs (Reiss et al., 2008; Reiss et al., 2011). Initially, when patients are first implanted, the frequency-to-electrode allocations often do not match the cochlear place frequencies of the neurons that are actually stimulated. This results in a discrepancy between acoustically and electrically stimulated frequencies, which appears to be resolved by pitch plasticity that causes the pitch perceived through each CI electrode to eventually align with the frequencies allocated to that electrode. In the case of experienced CI users, when frequency-to-electrode allocations are changed, the brain again hears pitches stimulated electrically that do not match those heard acoustically, especially if the pitch perception had adapted to the previous frequency-to-electrode allocation. Our data suggest that in experienced CI users, the brain is again able to adapt the pitch perceived by each electrode to match the sound frequencies allocated to that electrode, possibly even more rapidly or by a different physiological mechanism than in new CI users.
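To give a sense of the size of the place-frequency mismatch discussed above, the following sketch applies the Greenwood (1990) frequency-position function for humans. The cochlear duct length of 35 mm and the 10 mm insertion depth measured from the base are illustrative assumptions rather than measurements from these subjects, and pairing the 188 Hz experimental cutoff with the electrode array tip is a simplification.

```python
import math

def greenwood_hz(x_from_apex):
    """Greenwood (1990) human map.

    x_from_apex is the fractional distance along the basilar membrane,
    0 at the apex and 1 at the base.
    """
    return 165.4 * (10 ** (2.1 * x_from_apex) - 0.88)

def place_frequency_hz(depth_from_base_mm, length_mm=35.0):
    """Place frequency at a given depth from the base (assumed duct length)."""
    return greenwood_hz(1.0 - depth_from_base_mm / length_mm)

# Assumed geometry: array tip 10 mm from the base of a 35 mm cochlear duct.
tip_place_hz = place_frequency_hz(10.0)

# Mismatch, in octaves, between that place frequency and a 188 Hz
# low-frequency cutoff such as the one used in the experimental program.
mismatch_octaves = math.log2(tip_place_hz / 188.0)
```

Under these assumptions the tip lies near the 5 kHz place, several octaves above the lowest allocated frequency, which indicates the scale of mismatch that pitch plasticity would need to resolve.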

All of the subjects in this study showed pitch changes following experience with an experimental program. Given the limited number of subjects in this study, it is not yet clear whether changes in speech perception were associated with changes in pitch perception.

Clinical Implications

Currently, the default programming strategy for traditional or full-length CI users is to provide the full range of frequencies to the implanted ear, which often overlaps with the residual hearing in the contralateral ear. For EAS users, the default strategy is to complement the residual hearing in the implanted ear. Few studies have investigated whether CI programs should overlap or complement the residual hearing in the contralateral ear in either population. A study of normal-hearing listeners using simulations of EAS found superior performance when minimizing the gap between the acoustic and electric frequency ranges for speech in quiet and in noise (Dorman et al., 2005). However, that study did not look at the effects of increasing or changing the overlap between the acoustic and electric stimulation. Other studies of EAS have shown optimal speech recognition in noise when there is less overlap between the acoustic and electric frequency ranges, though there is variability in this effect across subjects (Wilson et al., 2002; James et al., 2006; Vermiere et al., 2008; Karsten et al., submitted). In particular, Vermiere et al. (2008) compared the performance of four EAS users with CI frequency allocations overlapping or complementing the acoustic frequency range. The frequency range and gain of the HA were also varied. Three of four subjects performed best on speech recognition tests in a background of speech-shaped noise when the CI frequency allocations had a higher low-frequency cutoff, complementing the acoustic frequency range, and when the frequency range and gain of the HA amplification were maximized. This benefit was largest for +10 and +15 dB SNR. However, the study was limited by the in-the-ear HA used with EAS, which limited the gain and the ability to meet amplification targets. The benefit of decreased overlap was greatest for the subject with the best preserved low-frequency thresholds. In comparison, the one subject who did not benefit from decreased overlap had the poorest low-frequency thresholds, and thus was unlikely to have obtained optimum speech recognition benefit from the in-the-ear HA. This subject likely benefited from a full, overlapping frequency range because low-frequency information was not sufficiently amplified acoustically.

Another study (Karsten et al., submitted) also found a detriment of increased spectral overlap between the CI and the residual acoustic hearing for understanding speech in the presence of background talkers, suggesting that the negative effects of overlap apply for both speech-shaped and babble noise. The present study found that speech perception in quiet was minimally affected by a broader CI frequency allocation, likely because of the redundancy of speech information across frequency, especially for consonants. In contrast, for speech perception in noise, two out of three subjects (S2 and S3) performed worse when a broader CI frequency allocation was used in combination with a HA in the contralateral ear, even after 2-3 months of experience with the broader frequency allocation. These findings suggest a potential interference effect of overlap of the CI with acoustic hearing in the contralateral ear, which may overwhelm any advantage offered by providing a broader frequency range to the CI. The data from this study agree with those of previous EAS studies showing better speech perception results in noise using decreased spectral overlap between a CI and HA in the same ear (Wilson et al., 2002; James et al., 2006; Vermiere et al., 2008; Karsten et al., submitted). Similar benefits of decreased spectral overlap between binaural inputs for speech perception in noise have also been seen in normal-hearing listeners, hearing-impaired listeners, and bilateral CI users (Rand, 1974; Franklin, 1975; Loizou et al., 2003). Thus, the results from this study as well as previous studies indicate that less spectral overlap of the CI with the residual acoustic hearing may be better for both partial-insertion CIs combined with a HA in the ipsilateral ear (EAS) and full-length CIs combined with a HA in the contralateral ear (bimodal stimulation).

Acknowledgments

We would like to thank the dedicated research subjects at the University of Iowa who participated in this study. We also thank Bruce Gantz and the Iowa Cochlear Implant team for providing audiometric data and for assisting with patient recruitment, Sue Karsten for help in obtaining some of the pitch-matching data, Aaron Parkinson and Cochlear Corporation for providing the research equipment for the pitch-matching experiments, and Arik Wald, who helped with the custom programming of the research software. We also thank two anonymous reviewers for their very helpful comments on this manuscript. Funding for this research was provided by NIDCD grants F32 DC009157, R01 DC000377, and P50 DC00242 awarded to the University of Iowa.

References

- Armstrong M, Pegg P, James C, Blamey P. Speech perception in noise with implant and hearing aid. Am J Otol. 1997;18(6 Supplement):S140–S141.

- Blamey PJ, Dooley GJ, Parisi ES, Clark GM. Pitch comparisons of acoustically and electrically evoked auditory sensations. Hear Res. 1996;99(1-2):139–50. doi: 10.1016/s0378-5955(96)00095-0.

- Boex C, Baud L, Cosendai G, Sigrist A, Kos MI, Pelizzone M. Acoustic to electric pitch comparisons in cochlear implant subjects with residual hearing. J Assoc Res Otolaryngol. 2006;7(2):110–24. doi: 10.1007/s10162-005-0027-2.

- Byrne D, Dillon H, Ching T, Katsch R, Keidser G. NAL-NL1 procedure for fitting nonlinear hearing aids: Characteristics and comparisons with other procedures. J Am Acad Audiol. 2001;12(1):37–51.

- Carlyon RP, Macherey O, Frijns JH, Axon PR, Kalkman RK, Boyle P, Baguley DM, Briggs J, Deeks JM, Briaire JJ, Barreau X, Dauman R. Pitch comparisons between electric stimulation of a cochlear implant and acoustic stimuli presented to a normal-hearing contralateral ear. J Assoc Res Otolaryngol. 2010;11(4):625–40. doi: 10.1007/s10162-010-0222-7.

- Carney E, Schlauch RS. Critical difference table for word recognition testing derived using computer simulation. J Speech Lang Hear Res. 2007;50:1203–1209. doi: 10.1044/1092-4388(2007/084).

- Ching TY, Incerti P, Hill M. Binaural benefits for adults who use hearing aids and cochlear implants in opposite ears. Ear Hear. 2004;25(1):9–21. doi: 10.1097/01.AUD.0000111261.84611.C8.

- Dorman MF, Gifford RH, Lewis K, et al. Word recognition following implantation of conventional and 10 mm Hybrid electrodes. Audiol Neurootol. 2009;14:181–189. doi: 10.1159/000171480.

- Dorman MF, Gifford RH. Combining acoustic and electric stimulation in the service of speech recognition. Int J Audiol. 2010;49(12):912–9. doi: 10.3109/14992027.2010.509113.

- Dorman MF, Smith M, Smith L, Parkin JL. The pitch of electrically presented sinusoids. J Acoust Soc Am. 1994;95(3):1677–9. doi: 10.1121/1.408558.

- Dorman MF, Spahr AJ, Loizou PC, et al. Acoustic simulations of combined electric and acoustic hearing (EAS). Ear Hear. 2005;26:371–80. doi: 10.1097/00003446-200508000-00001.

- Dunn CC, Tyler RS, Witt SA. Benefit of wearing a hearing aid on the unimplanted ear in adult users of a cochlear implant. J Speech Lang Hear Res. 2005;48(3):668–680. doi: 10.1044/1092-4388(2005/046).

- Eddington DK, Dobelle WH, Brackmann DE, Mladejovsky MG, Parkin JL. Auditory prostheses research with multiple channel intracochlear stimulation in man. Ann Otol Rhinol Laryngol. 1978;87(6 Pt 2):1–39.

- Efron B, Tibshirani R. An introduction to the bootstrap. New York: Chapman and Hall; 1993.

- Flynn MC, Schmidtke T. Benefits of bimodal stimulation for adults with a cochlear implant. International Congress Series. 2004;1273:227–230.

- Franklin B. The effect of combining low- and high-frequency passbands on consonant recognition in the hearing impaired. J Speech Lang Hear Res. 1975;18(4):719–27. doi: 10.1044/jshr.1804.719.

- Friesen LM, Shannon RV, Baskent D, et al. Speech recognition in noise as a function of the number of spectral channels: Comparison of acoustic hearing and cochlear implants. J Acoust Soc Am. 2001;110:1150–1163. doi: 10.1121/1.1381538.

- Fu QJ, Nogaki G, Galvin JJ 3rd. Auditory training with spectrally shifted speech: implications for cochlear implant patient auditory rehabilitation. J Assoc Res Otolaryngol. 2005;6(2):180–189. doi: 10.1007/s10162-005-5061-6.

- Gantz BJ, Hansen MR, Turner CW, et al. Hybrid 10 clinical trial: preliminary results. Audiol Neurootol. 2009;14(Suppl):32–38. doi: 10.1159/000206493.

- Gantz BJ, Turner CW. Combining acoustic and electric hearing. Laryngoscope. 2003;113:1726–1730. doi: 10.1097/00005537-200310000-00012.

- Gantz BJ, Turner CW. Combining acoustic and electric speech processing: Iowa/Nucleus Hybrid Implant. Acta Otolaryngol. 2004;124:344–347. doi: 10.1080/00016480410016423.

- Gfeller K, Olszewski C, Turner CW, Gantz B. Music perception with cochlear implants and residual hearing. Audiol Neurootol. 2006;11(Suppl 1):12–15. doi: 10.1159/000095608.

- Greenwood D. A cochlear frequency-position function for several species--29 years later. J Acoust Soc Am. 1990;87(6):2592–2605. doi: 10.1121/1.399052.

- Gstoettner W, Helbig S, Maier N, Kiefer J, Radeloff A, Adunka OF. Ipsilateral electro acoustic stimulation of the auditory system: results of long-term hearing preservation. Audiol Neurootol. 2006;11(Suppl 1):49–56. doi: 10.1159/000095614.

- Gstoettner W, Kiefer J, Baumgartner WD, Pok S, Peters S, Adunka O. Hearing preservation in cochlear implantation for electro acoustic stimulation. Acta Otolaryngol. 2004;124(4):348–52. doi: 10.1080/00016480410016432.

- Gstoettner WK, van de Heyning P, O’Connor AF, Morera C, Sainz M, Vermeire K, et al. Electric acoustic stimulation of the auditory system: results of a multi-centre investigation. Acta Otolaryngol. 2008;128(9):968–75. doi: 10.1080/00016480701805471.

- Helbig S, Baumann U, Helbig M, von Malsen–Waldkirch N, Gstoettner W. A new combined speech processor for electric and acoustic stimulation – eight months experience. ORL J Otorhinolaryngol Relat Spec. 2008;70(6):359–65. doi: 10.1159/000163031.

- Held R. Plasticity in sensory-motor systems. Sci Am. 1965;213:84–94. doi: 10.1038/scientificamerican1165-84.

- Henry BA, Turner CW, Behrens A. Spectral peak resolution and speech recognition in quiet: normal hearing, hearing impaired, and cochlear implant listeners. J Acoust Soc Am. 2005;118(2):1111–21. doi: 10.1121/1.1944567. [DOI] [PubMed] [Google Scholar]

- James C, Fraysse B, Deguine O, et al. Combined Electroacoustic Stimulation in Conventional Candidates for Cochlear Implantation. Audiol Neurotol. 2006;11(Suppl.1):57–62. doi: 10.1159/000095615. [DOI] [PubMed] [Google Scholar]

- Kiefer J, Gstoettner W, Baumgartner W, Pok SM, Tillein J, et al. Conservation of low-frequency hearing in cochlear implantation. Acta Otolaryngol. 2004;124(3):272–80. doi: 10.1080/00016480310000755a.

- Kiefer J, Pok M, Adunka O, Sturzebecher E, Baumgartner W, Schmidt M, et al. Combined electric and acoustic stimulation of the auditory system: Results of a clinical study. Audiol Neurootol. 2005;10:134–144. doi: 10.1159/000084023.

- Kong YY, Stickney GS, Zeng FG. Speech and melody recognition in binaurally combined acoustic and electric hearing. J Acoust Soc Am. 2005;117:1351–61. doi: 10.1121/1.1857526.

- Loizou PC, Mani A, Dorman MF. Dichotic speech recognition in noise using reduced spectral cues. J Acoust Soc Am. 2003;114(1):475–83. doi: 10.1121/1.1582861.

- Lorens A, Polak M, Piotrowska A, Skarzynski H. Outcomes of treatment of partial deafness with cochlear implantation: a DUET study. Laryngoscope. 2008;118(2):288–94. doi: 10.1097/MLG.0b013e3181598887.

- McDermott HJ, Sucher C, Simpson A. Electro-acoustic stimulation. Acoustic and electric comparisons. Audiol Neurootol. 2009;14(Suppl 1):2–7. doi: 10.1159/000206489.

- Miller GA, Nicely PE. An analysis of perceptual confusions among some English consonants. J Acoust Soc Am. 1955;27:338–352.

- Nelson PB, Jin SH, Carney AE, Nelson DA. Understanding speech in modulated interference: cochlear implant users and normal-hearing listeners. J Acoust Soc Am. 2003;113(2):961–8. doi: 10.1121/1.1531983.

- Nilsson M, Soli SD, Sullivan JA. Development of the hearing in noise test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am. 1994;95(2):1085–99. doi: 10.1121/1.408469.

- Rand TC. Letter: Dichotic release from masking for speech. J Acoust Soc Am. 1974;55(3):678–80. doi: 10.1121/1.1914584.

- Reiss LA, Gantz BJ, Turner CW. Cochlear implant speech processor frequency allocations may influence pitch perception. Otol Neurotol. 2008;29(2):160–167. doi: 10.1097/mao.0b013e31815aedf4.

- Reiss LA, Lowder ML, Karsten SA, Turner CW, Gantz BJ. Effects of extreme tonotopic mismatches between bilateral cochlear implants on electric pitch perception: A case study. Ear Hear. 2011;32(4):536–40. doi: 10.1097/AUD.0b013e31820c81b0.

- Reiss LAJ, Turner CW, Erenberg SR, Gantz BJ. Changes in pitch with a cochlear implant over time. J Assoc Res Otolaryngol. 2007;8(2):241–57. doi: 10.1007/s10162-007-0077-8.

- Thornton AR, Raffin MJM. Speech discrimination scores modeled as a binomial variable. J Speech Hear Res. 1978;21:507–518. doi: 10.1044/jshr.2103.507.

- Tillman TW, Carhart R. An expanded test for speech discrimination utilizing CNC monosyllabic words: Northwestern University Auditory Test No. 6. Technical Report SAM-TR-66-55. 1966. doi: 10.21236/ad0639638.

- Turner CW, Gantz BJ, Vidal C, Behrens A. Speech recognition in noise for cochlear implant listeners: Benefits of residual acoustic hearing. J Acoust Soc Am. 2004;115:1729–1735. doi: 10.1121/1.1687425.

- Turner CW, Souza PE, Forget LN. Use of temporal envelope cues in speech recognition by normal and hearing-impaired listeners. J Acoust Soc Am. 1995;97:2568–76. doi: 10.1121/1.411911.

- Tyler RS, Parkinson AJ, Wilson BS, Witt S, Preece JP, Noble W. Patient utilizing a hearing aid and a cochlear implant: Speech perception and localization. Ear Hear. 2002;23(2):98–105. doi: 10.1097/00003446-200204000-00003.

- Vermeire K, Anderson I, Flynn M, Van de Heyning P. The influence of different speech processor and hearing aid settings on speech perception outcomes in electric acoustic stimulation patients. Ear Hear. 2008;29:76–86. doi: 10.1097/AUD.0b013e31815d6326.

- von Helmholtz HEF. In: Treatise on Physiological Optics. Southall JPC, editor. Dover; 1962. Originally published 1909.

- von Ilberg C, Kiefer J, Tillein J, Pfenningdorff T, Hartmann R, Sturzebecher E, Klinke R. Electro-acoustic stimulation of the auditory system. ORL J Otorhinolaryngol Relat Spec. 1999;61:334–40. doi: 10.1159/000027695.

- Wichmann FA, Hill NJ. The psychometric function: II. Bootstrap-based confidence intervals and sampling. Perception and Psychophysics. 2001;63(8):1314–1329. doi: 10.3758/bf03194545.

- Wilson B, Wolford R, Lawson D, Schatzer R. Additional perspectives on speech reception with combined electric and acoustic stimulation. Speech Processors for Auditory Prostheses (Third Quarterly Progress Report N01-DC-2-1002). 2002. Retrieved from www.rti.org/abstract.cfm?pubid-1168.

- Xu L, Thompson CS, Pfingst BE. Relative contributions of spectral and temporal cues to speech recognition. J Acoust Soc Am. 2005;117(5):3255–3267. doi: 10.1121/1.1886405.