Abstract

Quantitative researchers distinguish between causal and effect indicators. What are the analytic problems when both types of measures are present in a quantitative reasoned action analysis? To answer this question, we use data from a longitudinal study to estimate the association between two constructs central to reasoned action theory: behavioral beliefs and attitudes toward the behavior. The belief items are causal indicators that define a latent variable index while the attitude items are effect indicators that reflect the operation of a latent variable scale. We identify the issues when effect and causal indicators are present in a single analysis and conclude that both types of indicators can be incorporated in the analysis of data based on the reasoned action approach.

Keywords: effect indicators, causal indicators, measurement models, Integrative Model

Quantitative researchers distinguish between causal and effect indicators. Effect indicators (also called reflective indicators) are linear functions of an unobserved theoretical construct that explains the observed covariation between the items (MacCallum and Browne 1993; Borsboom, Mellenbergh, and Van Heerden 2003). For example, “self-esteem” is the underlying construct that determines the items used to measure this psychological trait, such as “I feel that I am a person of worth, at least on an equal basis with others” (Judge et al. 1998). Items like this are selected because they are assumed to have a single common cause, the latent construct self-esteem, and a battery of items with a single common cause is unidimensional (Streiner 2003). Causal indicators are different in a fundamental way. With causal indicators (also called formative indicators), the observed items define the latent construct, so the arrows of causality in the causal indicator measurement model are reversed compared with effect indicators (Edwards and Bagozzi 2000; Diamantopoulos, Riefler, and Roth 2008; Brown 2006).

Indicators of a latent construct and indicators that define a cumulative index reflect two major components of the “Integrative Model of Behavioral Prediction” (IM): the underlying beliefs and the direct measures (see the article by Ajzen and also Bleakley and Hennessy, this issue). This paper shows how the distinction between causal and effect indicators is a fundamental measurement property of the IM, demonstrates how these two types of measurement models should be simultaneously analyzed, and highlights the statistical and practical issues in doing so.

Defining Causal and Effect Indicators

Measurement models for effect indicators (Kline 2005) have causal arrows going from the latent construct to the observed variables. However, the latent construct is not the only cause of the indicator; a second cause is random error. Thus, there are two sources of variability in the observed items: (a) systematic variation from the latent construct and (b) variation from item idiosyncratic (i.e., statistically independent) errors. One conclusion from this is that indicators of the same construct should be highly correlated among themselves and should be essentially interchangeable if equally reliable (MacKenzie, Podsakoff, and Jarvis 2005; Jarvis, MacKenzie, and Podsakoff 2003). In addition, effect indicators should not be highly correlated with the indicators of other, less theoretically related latent variables, because items that violate this rule cannot form a unidimensional measure (Reise and Henson 2003).

In contrast, measurement models for causal indicators have causal arrows going from the observed variables to the latent construct and the regression coefficients attached to these arrows show the change in the latent construct due to a one unit change in the indicators (Bollen and Lennox 1991). To clarify the difference between effect and causal indicators, examples of the causal indicator situation are helpful. One application of causal indicators is measuring physical ability or disability, often motivated by an interest in the evaluation of quality of life in geriatric populations (Fayers et al. 1997). For example, Van Boxel and colleagues (Van Boxel et al. 1995) measured functional abilities related to daily living (the “Activities of Daily Living” [ADL] item battery) in a hospital sample of rehabilitation patients. The ADL consists of a check list of abilities such as “walking outdoors,” “climbing stairs,” “bathing,” and “lifting.” Similarly, Ringdal and colleagues (Ringdal et al. 1999) used a battery of negatively worded dichotomous items such as “need help with eating or dressing,” “trouble taking a long walk,” or “unable to do work or housework” to evaluate functional disabilities in a sample of cancer patients. In a public health context, Carey & Schroder (Carey and Schroder 2002) used a dichotomous check list of eighteen true-false items to measure knowledge of HIV/AIDS that contained such items as “coughing and sneezing do not spread HIV,” “there is a vaccine that can stop adults from getting HIV,” and “having sex with more than one partner can increase a person’s chance of being infected with HIV.” A final example is Schneider and colleagues’ (Schneider, Jacoby, and Coggburn 1997) analysis of states’ adoption patterns of Medicaid services. They found a systematic pattern of services adopted calculated from a check list of all possible services. 
Note that in all of these cases, the value of the latent variables of “functional ability”, “functional impairment”, “HIV knowledge”, and “comprehensiveness of Medicaid services” is defined by scores in the inventory of (dis)abilities, knowledge, or adopted policies.[1] The essential difference between the two types of indicators can be summarized as:

If the measures represent defining characteristics that collectively explain the meaning of the construct, a formative-indicator measurement model should be specified. However, if the measures are manifestations of the construct in the sense that they are each determined by it, a reflective-indicator model is appropriate (MacKenzie, Podsakoff and Jarvis, 2005, p. 713).
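The contrast can also be written as a pair of equations. The following is only a sketch in conventional structural equation notation; the symbols are introduced here for illustration and are not taken from the studies cited above. For effect indicators, each observed item $x_i$ depends on the latent construct $\eta$ through a loading $\lambda_i$ plus an item-specific error $\varepsilon_i$:

$$
x_i = \lambda_i \eta + \varepsilon_i, \qquad i = 1, \dots, p
$$

For causal indicators, the direction is reversed: the latent construct is a weighted combination of the observed items, with weights $\gamma_i$ and a disturbance $\zeta$:

$$
\eta = \gamma_1 x_1 + \gamma_2 x_2 + \dots + \gamma_q x_q + \zeta
$$

In the first form the items share a common cause and so must covary; in the second form nothing in the equation constrains the correlations among the $x_i$.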

Causal versus Effect Indicators: Does it make a Difference?

The difference between causal and effect indicators has important implications for research practice because with causal indicators there need be no a priori expectation about either the sign or magnitude of the correlations between the observed items. In the case of functional disability, for example, specific medical conditions might be correlated with some specific disability but not others: arthritis in the legs might impair ability to do housework but have no effect on the ability to cook a meal. Similarly, correct (or incorrect) knowledge about how HIV is transmitted might be totally unrelated to knowledge about the existence of a HIV vaccine or about the efficacy of antibiotics in reducing or eliminating infection. Thus, it will be difficult to predict the correlations between the items of the ADL in a sample of patients or between HIV knowledge items in a sample of adolescents. In summary, effect and causal indicators have different implications for the psychometric analysis of a battery of questionnaire items, as Fayers and Hand (1997, p. 146) summarize:

A scale based on effect indicators with high correlations may be expected to be unidimensional, representing a single consistent construct. This is not so with causal indicators. There need not be any sense of a unidimensional homogeneous construct and this has led some authors to describe them as ‘composite scales’.

Other researchers (Reise and Henson 2003; Streiner 2003) make the same distinction between types of indicators and come to identical conclusions concerning the unpredictable correlations between causal indicators as opposed to effect indicators. Some researchers (Streiner 2003; Diamantopoulos and Siguaw 2006) also classify latent measures constructed using effects indicators as “scales” and those constructed using causal indicators as “indices.” These two labels are useful and will be applied here.
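The difference in expected correlation patterns can be illustrated with a small simulation. This is a minimal sketch on made-up data, not an analysis of any study cited here: the function names, the unit loadings, the unit-weighted index, and the sample size are all assumptions chosen for illustration.

```python
import random


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def simulate(n=5000, seed=1):
    """Generate two effect indicators and two causal indicators (hypothetical data)."""
    rng = random.Random(seed)

    # Effect indicators: each item is the latent construct plus independent error,
    # so the items must correlate through their common cause.
    eta = [rng.gauss(0, 1) for _ in range(n)]
    e1 = [v + rng.gauss(0, 1) for v in eta]
    e2 = [v + rng.gauss(0, 1) for v in eta]

    # Causal indicators: items are generated independently of one another,
    # and the index is defined as their (here unit-weighted) sum.
    c1 = [rng.gauss(0, 1) for _ in range(n)]
    c2 = [rng.gauss(0, 1) for _ in range(n)]
    index = [a + b for a, b in zip(c1, c2)]

    return {
        "effect_r": pearson(e1, e2),
        "causal_r": pearson(c1, c2),
        "causal_item_index_r": pearson(c1, index),
    }


result = simulate()
print(result)
```

Under these assumptions the two effect indicators correlate at about .5 in expectation, while the two causal indicators are essentially uncorrelated with each other even though each is strongly related to the index they define.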

Differentiating Between Causal and Effect Indicators

Jarvis, MacKenzie and Podsakoff (2003, p. 203) suggested a seven item check list that can be used to determine if a particular measurement item is a causal or effect indicator. To be a causal indicator, the measure: (1) should be conceptualized as defining a characteristic of the latent construct, (2) should change the latent construct if the item itself changes, (3) should not change if the latent construct changes, (4) does not necessarily share a “common theme” with other indicators, (5) if eliminated, changes the conceptual domain of the construct, (6) if changed, may not necessarily have any relation with changes in other causal indicators of the same latent construct, and (7) the causal indicator does not have “the same antecedents and consequences”.

To evaluate their plausibility, test standards (1) through (3) rely either on a strong a priori theory or on a “mental experiment” of the type described by MacKenzie, Podsakoff and Jarvis (2005, p. 713) and by Bollen and Ting (Bollen and Ting 2000):

…a researcher imagines a shift in the latent variable and then judges whether a simultaneous shift in all the observed variables is likely. If so, then this is consistent with an effect indicator specification. Alternatively, if the researcher imagines a shift in an observed variable as leading to a shift in the latent variable even if there is no change in the other indicators, then this is consistent with a causal indicator model (Bollen and Ting 2000, p. 4).

But the other test standards have empirical consequences that can be considered as validation tests for the assumptions. For example, test standard (4) implies that internal consistency of causal indicator items need not be high, test standard (5) implies that measures of internal consistency (e.g., alpha) do not necessarily decline when an item is eliminated, test standard (6) implies that the correlations between causal indicators are unpredictable, and test standard (7) implies that causal determinants of the causal indicators need not (but may) have independent variables in common.
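The internal consistency checks implied by test standards (4) and (5) can be sketched in a few lines. The code below computes Cronbach's alpha and alpha-if-item-deleted using the standard formula; the function names and the tiny demonstration data set are hypothetical and are not drawn from any study discussed here.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha; `items` is a list of item columns (one list of scores per item)."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's summed score
    item_variance_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


def alpha_if_deleted(items):
    """Alpha recomputed with each item dropped in turn."""
    return [cronbach_alpha(items[:i] + items[i + 1:]) for i in range(len(items))]


# Hypothetical responses from five people to three items.
# The first two items move together; the third is only weakly related to them.
demo = [
    [1, 2, 3, 4, 5],
    [1, 2, 3, 4, 5],
    [3, 1, 4, 1, 5],
]

print(round(cronbach_alpha(demo), 3))
print([round(a, 3) for a in alpha_if_deleted(demo)])
```

In this made-up example, dropping the third item raises alpha rather than lowering it, which is the kind of pattern that would be unremarkable for a set of causal indicators but a warning sign for a set of effect indicators.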

Measurement Modeling and the Integrative Model

In this paper we focus on the attitudinal components of the IM although the analysis is applicable to all three of the predictors of intentions.[2] We are first concerned with the measurement models of the underlying behavioral beliefs and the direct measures of attitudes. Because behavioral beliefs are beliefs that performing the target behavior leads to specific consequences (Ajzen and Fishbein 2000; Fishbein 2004), they conform to the test standards discussed above that identify causal indicators: the more positive/negative outcomes are expected, the more positive/negative is the belief index. In addition, there is no reason for the items to be internally consistent across respondents because the items reflect many different domains. Relative to the behavior of interest here (i.e., having vaginal sex in the next 12 months), the belief items relate to romantic relationships, parental responses to behavior, the likelihood of STD/HIV infection or pregnancy, responses of significant others to the respondent’s sexual behavior, respondent self-perceptions, and the respondent’s and sex partner’s sexual pleasure (see below for details). Therefore, they are causal indicators in the sense that they should define a summative index of positive and negative expectations.[3]

The direct measures, on the other hand, are considered as effects indicators. These items should be internally consistent and should be highly correlated because they are a set of unidimensional items reflecting the operation of the latent variable: attitude toward the target behavior. In addition, we estimate the coefficients of the path between the behavioral belief index and the direct measure of attitudes, the path connecting the direct attitude measure and intentions to have sex, and the path predicting behavior from intentions. Our hypotheses for these parameters are discussed below.

METHODS and MEASURES

The Annenberg Sex and Media Study (ASAMS)

This paper uses data from the ASAMS longitudinal survey of adolescents from the greater Philadelphia area. ASAMS was a five-year investigation of the relationship between exposure to sex in the media and self-reported sexual behavior in adolescents. It was designed to investigate the extent to which exposure to sexual content in the media shapes adolescents’ sexual development. In ASAMS, the analytic variables used were those of the IM, so ASAMS contains direct measures of intentions, attitudes, perceived normative pressure, and self-efficacy with respect to the target behavior of “my having vaginal sex in the next 12 months” as well as measures of the behavioral, normative, and efficacy beliefs.

ASAMS Study Design

The first wave of data collection occurred in the spring and summer of 2005; wave 2 occurred 1 year later in the spring and summer of 2006, and wave 3 in the summer of 2007. Only data from waves 2 and 3 are used for this analysis because there is more variance in the behavioral dependent variable. Adolescents were recruited through print and radio advertisements, direct mail, and word of mouth to complete the survey. Eligibility criteria for the initial survey included age at the time of the survey (14, 15, or 16) and race/ethnicity (White, African-American, or Hispanic). A quota sampling design was used to achieve equal strata of adolescents by age, gender, and race. In practice, Hispanic adolescents were difficult to locate and recruit, and are underrepresented in the sample. Written parental consent and teen assent were collected for all participants and study protocols were approved by the University of Pennsylvania IRB.

The web-based survey was accessible from any computer with internet access. Participants were given the option of taking the survey at the University or an off-site location (e.g., home, school, or community library). Enrolled adolescents were given a password to access the survey, as well as an ID number and personal password to ensure confidentiality and privacy protection. On average adolescents took one hour to complete the survey and were given compensation of $25 for completing each wave of the survey. Respondents who completed all three waves received an additional $25.

After submitting participant assent/parental consent forms, 547 adolescents completed the survey at wave 1. Ninety-two percent of the sample was retained in wave 2 (N = 501), and of those respondents, 95% (N = 474) completed the survey in wave 3. Eighty-seven percent of the respondents in the initial sample were successfully re-contacted in all waves and 94% of the initial sample participated in at least two of the three waves. Because of their relatively small sample sizes, 14 adolescents of “other” ethnicity are excluded from the present analysis, resulting in a final sample of 457 youth from waves 2 and 3. The analysis sample is 63% female, 44% African American, 44% White, and 12% Hispanic. The mean participant age at wave 2 is 15.98 years (SD = .81). At wave 3, 49% of the respondents reported having had sex in the last 12 months.

Measures

The ASAMS survey collected direct measures of intention, attitudes, perceived normative pressure and self efficacy with respect to engaging in sexual intercourse in the next 12 months. In addition, it assessed the behavioral, normative, and self efficacy beliefs that were assumed to underlie these constructs. The belief items were identified through formative elicitation research conducted prior to the development of the survey. Open-ended questions were used to identify the outcomes, referents, barriers, and facilitators that were most salient in this population with respect to personally engaging in vaginal sexual intercourse in the next 12 months.

For effect indicators, we used five semantic differential items to assess attitudes. All items were scored on a seven point scale with “-3” indicating “Extremely”, “-2” indicating “Quite”, “-1” indicating “Slightly”, “0” indicating “Neither”, “1” indicating “Slightly”, “2” indicating “Quite” and “3” indicating “Extremely”. The common item stem was: My having sexual intercourse in the next 12 months would be…. The semantic differential end-points were Bad-Good (B-G), Foolish-Wise (F-W), Unpleasant-Pleasant (U-P), Not Enjoyable-Enjoyable (N-E), and Harmful-Beneficial (H-B).

For the indicators of behavioral beliefs, we used 12 outcome expectancy items identified during the formative research. They were scored on a seven point scale from “1” indicating “Extremely Unlikely” to “7” indicating “Extremely Likely.” The common item stem was: If I have sexual intercourse in the next 12 months, it would…. The outcomes were: 1) Make me feel as though someone had taken advantage of me, 2) Make me feel good about myself, 3) Hurt my relationship with my partner, 4) Increase the quality of my relationship with my partner, 5) Increase feeling of intimacy between me and my partner, 6) Give me pleasure, 7) Make my parents mad, 8) Please my partner, 9) Get me or my partner pregnant, 10) Gain the respect of my friends, 11) Give me a STD or HIV/AIDS, and 12) Make my friends think badly of me. Items that referred to negative outcomes were multiplied by -1 to reverse the values although item 9) was not reversed because this was specifically evaluated as a positive outcome by the respondents in a separate question.
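The reverse-coding step can be sketched as follows. This is an illustration only: the set of reversed items is inferred here from the wording of the outcomes (items 1, 3, 7, 11, and 12), with item 9 left unreversed as described above, and the unit-weighted sum is a simplification that stands in for the estimated weights of the causal indicator model. The function names are hypothetical.

```python
# Assumption: item numbers treated as negative outcomes, inferred from item wording.
# Item 9 (pregnancy) is deliberately excluded, following the description in the text.
REVERSED_ITEMS = {1, 3, 7, 11, 12}


def score_beliefs(responses):
    """Reverse-code negative outcomes.

    `responses` maps item number (1-12) to a rating from 1 (Extremely Unlikely)
    to 7 (Extremely Likely). Reversed items are multiplied by -1.
    """
    scored = {}
    for item, rating in responses.items():
        if not 1 <= rating <= 7:
            raise ValueError(f"item {item}: rating {rating} is outside 1..7")
        scored[item] = -rating if item in REVERSED_ITEMS else rating
    return scored


def unit_weighted_index(responses):
    """Illustrative summative index: the plain sum of the (reverse-coded) items."""
    return sum(score_beliefs(responses).values())


# Hypothetical respondent who answers at the scale midpoint on every item.
midpoint = {item: 4 for item in range(1, 13)}
print(score_beliefs(midpoint))
print(unit_weighted_index(midpoint))
```

Multiplying by -1 keeps the original 1 to 7 spacing while flipping the direction, so that a higher index value always means more favorable expectations.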

Intention to have vaginal sex was measured with the average of three items: I am willing to have sexual intercourse in the next 12 months, I will have sexual intercourse in the next 12 months, and I intend to have sexual intercourse in the next 12 months. Each response was coded on a seven point scale from “-3” indicating “Extremely Unlikely” to “3” indicating “Extremely Likely”. The alpha for the summed items was .96 (M = .08, SD = 2.26).

Statistical Analysis

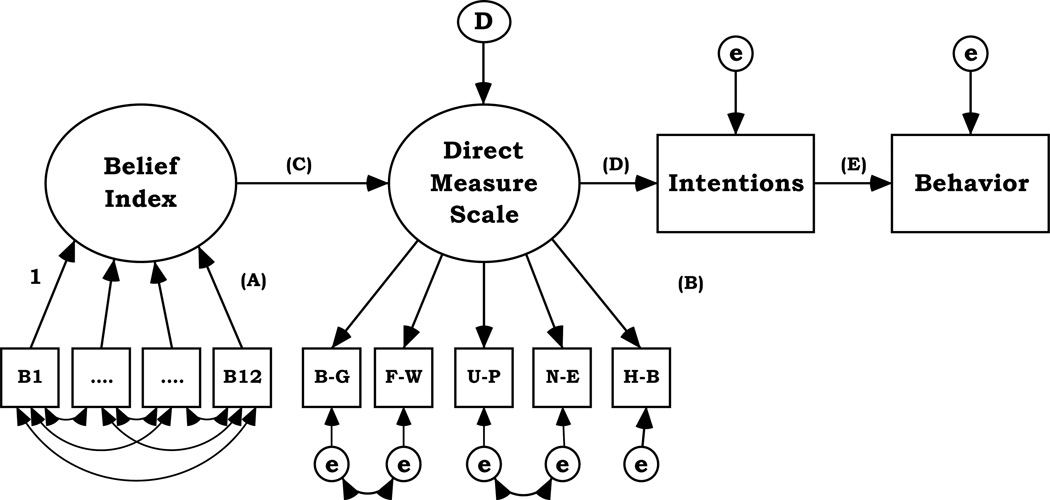

The generic model of the analysis applied to the attitude components of the IM is shown in Figure 1. The Belief Index is defined by the 12 outcome expectancy causal indicator items labeled as B1 to B12 in the figure. The double headed arrows between the causal indicator items reflect the unanalyzed correlations between the items. The regression coefficients connecting the Belief Index with the causal belief items are the (A) parameters. To define the scale of the latent index, one of the regression coefficients must be set to a value of “1” (Brown 2006). Here we set a positive outcome (“give me pleasure”) as the referent and this calibrates the metric of the Belief Index such that higher values imply positive expectancies and lower values imply negative ones.

Figure 1. The Generic Measurement Model for the Attitude Component of the Integrative Model

The other latent variable in the model is the Direct Measure Scale that predicts the associations between the five semantic-differential effect indicator items (B-G, F-W, U-P, N-E, and H-B). The regression coefficients predicting these variables from the Direct Measure Scale (i.e., “lambdas” in confirmatory factor analysis terminology) are the (B) parameters. If the direct measure items are unidimensional, all these coefficients should be large and statistically significant.

The correlation between the Belief Index and the Direct Measure Scale is the (C) parameter and between the Direct Measure Scale and intentions is the (D) parameter. We predict large positive values for both these parameters. However, the (C) parameter should be larger than the (D) parameter because under the IM beliefs are the major determinant of direct measures while attitude is only one of the three determinants of intentions. The (E) parameter is the association between intentions in wave 2 and sexual behavior at wave 3.

Preliminary analysis of the semantic differential items showed that to attain a good fit to this component of the measurement model, the error terms between the Bad-Good and Foolish-Wise pair and the Unpleasant-Pleasant and Not Enjoyable-Enjoyable pair need to be correlated. This makes sense in this context because these pairs may reflect the operation of a latent instrumental outcome common factor in the first case and a latent experiential outcome common factor in the second (Gerbing and Anderson 1984; Bollen and Paxton 1998).

Because the Direct Measure items are ordinal and the sexual behavior outcome is dichotomous, we used the Mplus program (Muthén and Muthén 2006). When Mplus encounters ordinal indicators it implements a mean- and variance-adjusted weighted least squares estimator (WLSMV) that has been shown to have excellent statistical qualities even with small samples (Flora and Curran 2004). The estimator assumes a probit (i.e., Z score) metric on the unobserved latent variables.

RESULTS

Table 1(A) shows the correlations between the semantic differential direct measure items. All the correlations are positive, all are discernable from zero, and all are .54 or greater. This correlational pattern is consistent with the assumption of a unidimensional effects indicator measurement model. In contrast, the correlations between the behavioral belief items in Table 1(B) are more variable and include non-significant values (16 of 66 correlations) and low values (31 of 66 correlations are less than .20 in absolute magnitude). The items as a whole also have a lower internal consistency based on Cronbach’s alpha even though the number of behavioral belief items is larger than the number of semantic differential items, a situation that should favor the larger item set (Sijtsma 2009). This correlational pattern is consistent with the assumption of a causal indicator measurement model.

Table 1.

(A) Correlations Between the Direct Measures of Attitude

| Item | #1 | #2 | #3 | #4 |
|---|---|---|---|---|
| #2 | 0.747 | | | |
| #3 | 0.688 | 0.592 | | |
| #4 | 0.646 | 0.545 | 0.897 | |
| #5 | 0.660 | 0.599 | 0.598 | 0.583 |

#1: Bad-Good. #2: Foolish-Wise. #3: Unpleasant-Pleasant. #4: Not Enjoyable-Enjoyable. #5: Harmful-Beneficial. All correlations are discernable from zero. Alpha is .87.

(B) Correlations Between the Behavioral Beliefs Items

| Item | #1 | #2 | #3 | #4 | #5 | #6 | #7 | #8 | #9 | #10 | #11 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| #2 | −0.222 (0.000) | | | | | | | | | | |
| #3 | 0.398 (0.000) | −0.132 (0.004) | | | | | | | | | |
| #4 | −0.175 (0.000) | 0.279 (0.000) | −0.058 (0.211) | | | | | | | | |
| #5 | −0.081 (0.082) | 0.336 (0.000) | −0.035 (0.455) | 0.483 (0.000) | | | | | | | |
| #6 | −0.392 (0.000) | 0.439 (0.000) | −0.201 (0.000) | 0.397 (0.000) | 0.429 (0.000) | | | | | | |
| #7 | 0.246 (0.000) | −0.101 (0.030) | 0.288 (0.000) | 0.004 (0.929) | 0.142 (0.002) | 0.003 (0.947) | | | | | |
| #8 | −0.104 (0.026) | 0.266 (0.000) | −0.101 (0.030) | 0.402 (0.000) | 0.461 (0.000) | 0.483 (0.000) | 0.140 (0.003) | | | | |
| #9 | −0.264 (0.000) | 0.207 (0.000) | −0.375 (0.000) | 0.051 (0.271) | 0.021 (0.653) | 0.152 (0.001) | −0.224 (0.000) | 0.004 (0.929) | | | |
| #10 | 0.038 (0.413) | 0.535 (0.000) | 0.118 (0.011) | 0.133 (0.004) | 0.163 (0.000) | 0.134 (0.004) | −0.009 (0.839) | 0.196 (0.000) | 0.009 (0.852) | | |
| #11 | 0.440 (0.000) | −0.088 (0.061) | 0.524 (0.000) | −0.029 (0.536) | −0.070 (0.135) | −0.263 (0.000) | 0.272 (0.000) | −0.067 (0.153) | −0.536 (0.000) | 0.108 (0.021) | |
| #12 | 0.517 (0.000) | −0.207 (0.000) | 0.341 (0.000) | −0.110 (0.018) | 0.042 (0.368) | −0.223 (0.000) | 0.363 (0.000) | −0.109 (0.020) | −0.289 (0.000) | −0.077 (0.099) | 0.358 (0.000) |

Each entry is the correlation, with its significance level in parentheses. Item list is #1: Make me feel as though someone had taken advantage of me. #2: Make me feel good about myself. #3: Hurt my relationship with my partner. #4: Increase the quality of my relationship with my partner. #5: Increase feeling of intimacy between me and my partner. #6: Give me pleasure. #7: Make my parents mad. #8: Please my partner. #9: Get me or my partner pregnant. #10: Gain the respect of my friends. #11: Give me a STD or HIV/AIDS. #12: Make my friends think badly of me. Alpha is .75.

Measurement Modeling and the IM: The Generic Model

The results for the measurement model analysis are shown in Table 2. Two findings stand out. First, the magnitudes of the causal indicator coefficients are not consistent, but this should not be surprising given that each regression coefficient reflects a partial effect, conditional on the other predictors. After all, causal indicator measurement models are just multiple regression equations in disguise. Second, four of the regression coefficients linking the causal indicators to the latent index are not discernable from zero. This is probably due to multicollinearity between the indicators. Even though the variance inflation factors (VIF) for the Belief Index items are all 1.94 or less, the determinant of the correlation matrix of the 12 causal indicators is .03, very close to zero and a reliable indication of multicollinearity for the correlation matrix as a whole (Johnston 1984; Rockwell 1975). This result highlights another difference between effect and causal indicators: effects indicators should be highly correlated due to their common cause but highly correlated items are a potential problem with causal indicators because highly correlated indicators may indicate redundant items (MacKenzie, Podsakoff, and Jarvis 2005).
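Both multicollinearity diagnostics mentioned above can be computed directly from a correlation matrix. The sketch below is illustrative rather than a reproduction of the reported analysis: the function names and the small three-variable matrix are hypothetical. It uses the identity that the VIF for variable j equals the j-th diagonal element of the inverse correlation matrix, which in turn equals the determinant of the matrix with row and column j removed divided by the determinant of the full matrix.

```python
def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]  # work on a copy
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            return 0.0  # numerically singular
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def vif(corr):
    """Variance inflation factor for each variable in a correlation matrix."""
    full = determinant(corr)
    factors = []
    for j in range(len(corr)):
        # Drop row j and column j, then take the ratio of determinants.
        minor = [[v for c, v in enumerate(row) if c != j]
                 for r, row in enumerate(corr) if r != j]
        factors.append(determinant(minor) / full)
    return factors


# Hypothetical correlation matrix: variables 1 and 2 correlate .5, variable 3 is unrelated.
demo = [
    [1.0, 0.5, 0.0],
    [0.5, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]

print(determinant(demo))  # 0.75
print(vif(demo))
```

A determinant of 1 indicates completely uncorrelated variables and a determinant of 0 indicates perfect linear dependence, so values near zero signal collinearity in the matrix as a whole even when no single VIF is large.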

Table 2.

Results for the Attitude Arm of the Integrative Model

| Outcome Expectancies (Stem: If I have sexual intercourse in the next 12 months, it would…) | (A) Parameters. Independent Variable: Outcome Expectancies. Dependent Variable: Belief Index | (C) Parameter. Independent Variable: Belief Index. Dependent Variable: Direct Measure Scale | (D) Parameter. Independent Variable: Direct Measure Scale. Dependent Variable: Intentions | (E) Parameter. Independent Variable: Intentions. Dependent Variable: Behavior at Wave 3 |
|---|---|---|---|---|
| Make me feel that someone had taken advantage of me | −.12 | .77 (R2 = .59) | .50 (R2 = .25) | .43 (R2 = .19) |
| Make me feel good about myself | .33 | | | |
| Hurt my relationship with my partner | −.13 | (B) Parameters (Stem: My having sexual intercourse in the next 12 months would be…) | Lambdas for Direct Measure Items | |
| Increase the quality of my relationship with my partner | .15 | Bad-Good | .92 | |
| Increase feelings of intimacy | −.04 | Foolish-Wise | .85 | |
| Give me pleasure | .41* | Unpleasant-Pleasant | .86 | |
| Make my parents mad | −.07 | Not Enjoyable-Enjoyable | .81 | |
| Please my partner | .13 | Harmful-Beneficial | .81 | |
| Get me or my partner pregnant | .05 | | | |
| Gain the respect of my friends | −.04 | | | |
| Give me a STD or HIV/AIDS | −.18 | | | |
| Make my friends think badly of me | −.12 | | | |

N = 460. Entries are standardized coefficients for the measurement models and correlations for Parameters (C), (D), and (E).

\* Unstandardized coefficient constrained to unity to set metric of latent index, no significance test possible. The coefficients in bold italic are discernable from zero. Goodness of Fit: N = 457, χ2 = 143, df = 42, p < .05, Tucker-Lewis Index = .97, RMSEA = .072

There is a significant path coefficient of .77 from the Belief Index to the Direct Measure Scale: as the Belief Index increases, so do positive attitudes toward sex. The Direct Measure of attitudes is itself correlated with intentions (.50). This value is reasonably less than the Belief Index/Direct Measure Scale correlation because, as discussed previously, attitude is only one of the three main predictors of intentions in the IM while the underlying beliefs are the sole predictors of the Direct Measure Scale. The association between intentions at wave 2 and behavior at wave 3 is .43. Finally, the fit of the model is good. This is because the causal indicator belief items are correlated exogenous variables and thus contribute no misfit; the only misfit in the model must come from the latent Direct Measure measurement model and the correlation between the Direct Measure and intentions. That part of the generic model fits very well.

Specifying Causal Indicator Measurement Models

The standard advice when analyzing structural equation models that include latent variables is to perform the analysis in two distinct steps (Anderson and Gerbing 1988; McDonald 2010). First the measurement models and the inter-correlations between the latent variables are estimated simultaneously to attain a good fit and then the structural parameters that explain the correlations between the latent variables are added. This logic eliminates confusion about the sources of misfit in any particular SEM: is the misfit due to the measurement properties of the latent variables or is it due to misspecification of the structural (e.g., associational) relationships between the latent variables?

This two-step approach cannot be used in our case because the causal indicator measurement model for the behavioral belief items is under-identified. With our 12 belief items there are 78 variances and covariances in the observed input data matrix (i.e., (12*13)/2), but 89 parameters to be estimated: 12 variances, 66 covariances, and 11 regression coefficients, leaving negative degrees of freedom (78 − 89 = −11). What is worse is that this is a general result: causal indicator models always have negative degrees of freedom because the entire data matrix is composed of exogenous variables (absent variance or covariance restrictions) and the correlations between the indicators use all the degrees of freedom available (Brown 2006).[4] Thus, causal indicator measurement models alone never permit the estimation of the regression coefficients linking the indicators to the construct and it is only within a context of other effect indicators that it is possible to estimate measurement models that include a causal indicator latent construct. One way to think about pure causal indicator measurement models is to consider them as under-identified saturated models, although in a formal statistical sense such a statement is an oxymoron.
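The parameter count in this paragraph generalizes to any number of causal indicators. The helper below is a small sketch that follows the counting convention used in the text (one regression coefficient fixed to 1 to set the metric); the function name is hypothetical.

```python
def causal_indicator_df(p):
    """Count observed moments, free parameters, and degrees of freedom
    for a stand-alone causal indicator measurement model with p indicators."""
    observed = p * (p + 1) // 2        # variances and covariances in the data matrix
    variances = p                      # one variance per exogenous indicator
    covariances = p * (p - 1) // 2     # every pair of indicators is free to covary
    coefficients = p - 1               # one coefficient is fixed to 1 for scaling
    estimated = variances + covariances + coefficients
    return observed, estimated, observed - estimated


print(causal_indicator_df(12))  # (78, 89, -11)
```

Because the indicator variances and covariances alone use up every observed moment, the degrees of freedom under this counting are always the negative of the number of free regression coefficients, whatever the number of indicators.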

Causal Versus Effects Indicators: It Makes a Difference

Researchers confronted with check-list or inventory type measures that accumulate to define a trait, severity of a condition, or aggregate beliefs about a specific behavior seem to be in a quandary given these results. Given the inability to estimate causal indicator models as pure measurement constructs apart from a more complex model, they may feel that they have only two choices: (1) to ignore any kind of unidimensionality measure based on correlations and rely solely on face-validity, or (2) to hope that an effects indicator model will fit their underlying belief items. This latter condition is possible because check-list or inventory type items may be highly (and convergently) correlated such that they pose no problems when used in an effect indicator model. In addition, the summative model of causal indicators may also be consistent with the operation of a general latent variable. For example, “knowledge indices” may reflect the accumulation of facts and important unobserved constructs like “cognitive ability.” It is not a coincidence that the early history of factor analysis was concerned with the problem of estimating a generalized “ability factor” from responses to verbal and mathematical test items (Kaplan 2000; Shipley 2002; Law and Wong 1999) and this logic is similar to the HIV knowledge items discussed earlier. Thus, in some cases, the distinction between effect and causal indicators may be less important than is implied in statistical and methodological discussions which tend to emphasize their differences.

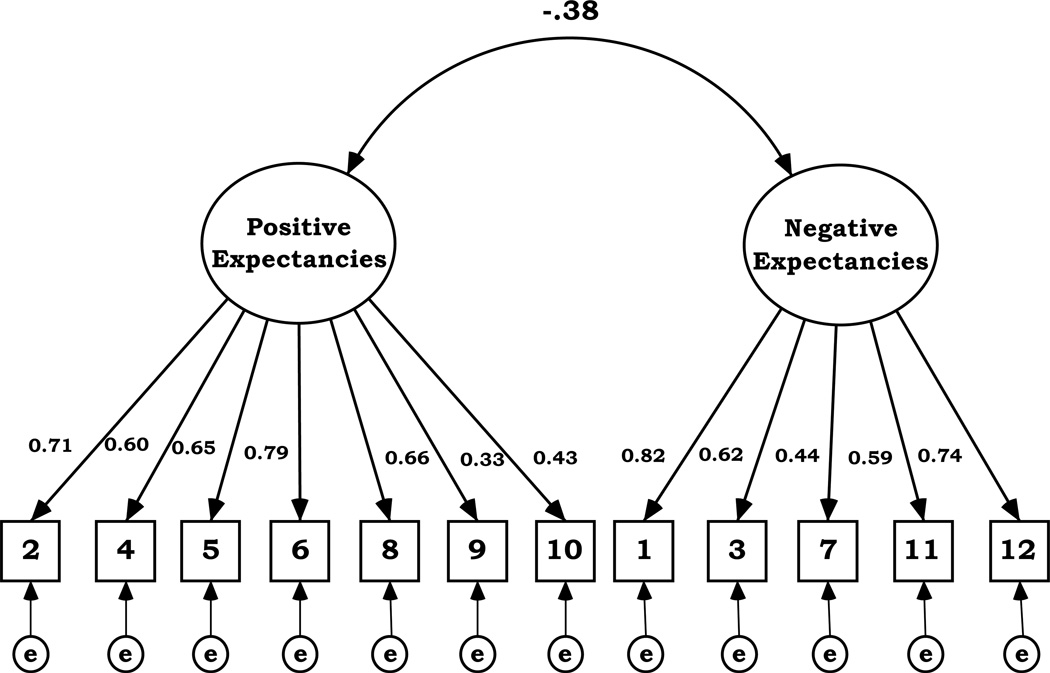

Suppose we now took the latent variable effects indicator approach and applied it to the 12 behavioral belief items, testing the hypothesis that the items act as effect (and not causal) indicators. We separate the items into positive and negative outcomes (as the items were originally elicited), so the measurement model consists of two correlated factors. The results of this analysis are shown in Figure 2. In this example, there is little evidence that the belief items reflect two unidimensional latent variables in the effects indicator sense. Although all the factor loadings are significant, the loadings vary widely between items, the χ2 between the fitted and actual covariance matrix is large and significant, and the overall fit of the model is poor. These results corroborate the advice of Fayers et al. (1997, p. 405):

The distinction between the two types of indicators is of fundamental importance to the design and validation of new instruments, particularly when they are intended to combine multiple items into summary scales. Effect indicators may lead to homogeneous summary scales with high reliability coefficients, whilst causal indicators should be treated with greater caution.

Figure 2. Behavioral Belief Items Treated as a Two-Factor Effect Indicator Measurement Model.

Notes: N = 460. All coefficients are standardized and discernible from zero. Goodness of Fit: χ² = 596, df = 28, p < .05, Tucker-Lewis Index = .69, RMSEA = .21. Item list is: #1: Make me feel as though someone had taken advantage of me. #2: Make me feel good about myself. #3: Hurt my relationship with my partner. #4: Increase the quality of my relationship with my partner. #5: Increase feeling of intimacy between me and my partner. #6: Give me pleasure. #7: Make my parents mad. #8: Please my partner. #9: Get me or my partner pregnant. #10: Gain the respect of my friends. #11: Give me a STD or HIV/AIDS. #12: Make my friends think badly of me.
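As a rough check on the fit statistics reported for Figure 2, the RMSEA point estimate can be recovered from the reported χ², degrees of freedom, and sample size. The sketch below assumes the commonly used formula, the square root of max(χ² − df, 0) / (df × (N − 1)). The function name `rmsea` is ours for illustration, and the software used for the original analysis may apply a slightly different correction (for example, N rather than N − 1).

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Point estimate of RMSEA from a model chi-square, its df, and sample size.

    Assumes the common formula sqrt(max(chi2 - df, 0) / (df * (n - 1))).
    """
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Values taken from the notes to Figure 2.
value = rmsea(chi2=596.0, df=28, n=460)
print(round(value, 2))  # 0.21
```

Under these assumptions the computed value agrees with the reported RMSEA of .21, well above the values usually regarded as indicating acceptable fit.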

CONCLUSIONS

The IM and its earlier variants have been used to model intentions for a range of health behaviors including condom use (Albarracín et al. 2001; Sheeran and Taylor 1999), smoking (Van De Ven et al. 2007), exercise and physical activity (Hagger et al. 2001; Hausenblas, Carron, and Mack 1997), healthy eating (Conner, Norman, and Bell 2002), binge drinking (Cooke, Sniehotta, and Schüz 2007), and other behaviors (Hardeman et al. 2002). When the beliefs underlying the attitudinal, normative, or self-efficacy measures are included in analysis models, which are typically some variant of structural equation modeling, both causal and effect measurement models can be included as necessary, insofar as the underlying belief items pass the “mental experiment” and the other test standards described above that require them to be treated as part of a causal indicator measurement model.

Acknowledgments

An earlier version of this paper was given at the meetings of the American Evaluation Association, session on Advancing Valid Measurement in Evaluation, Baltimore, 2007. This publication was made possible by Grant Number 5R01HD044136 from the National Institute of Child Health and Human Development (NICHD).

Biographies

Dr. Michael Hennessy is a researcher at the Annenberg Public Policy Center. His major interests are the integration of structural equation modeling and intervention program/behavioral theory, growth curve analysis of longitudinal data, and using factorial surveys to design intervention programs. He has published articles in such journals as Evaluation Review, Structural Equation Modeling, Psychology Health & Medicine, The American Journal of Evaluation, Evaluation and the Health Professions, Journal of Sex Research, and AIDS and Behavior. Dr. Hennessy was previously employed by the Centers for Disease Control, has held faculty positions at the University of Hawaii and Emory University, and has worked in the private sector doing evaluation research. He received his Ph.D. from Northwestern University and his M.P.H. from the School of Public Health, Emory University.

Dr. Amy Bleakley is a Senior Research Analyst of Health and Political Communication at the Annenberg Public Policy Center of the University of Pennsylvania. Her research focuses on evaluating the effects of media on health risk behaviors and using theory to create evidence-based health interventions. Dr. Bleakley has methodological and statistical expertise in survey research, structural equation modeling, and theory testing. Her work has been published in such academic journals as the American Journal of Public Health, Archives of Pediatric and Adolescent Medicine, Health Education and Behavior, the Journal of Sex Research, and Media Psychology. Dr. Bleakley received her M.P.H. and Ph.D. in Sociomedical Sciences from Columbia University.

Dr. Martin Fishbein was the Harry C. Coles Jr. Distinguished Professor in Communication at the Annenberg School for Communication, and Director of the Health Communication Program at the Annenberg Public Policy Center. Developer of the Theory of Reasoned Action, Dr. Fishbein contributed over 200 articles and chapters to professional books and journals, and has authored or edited six books. Dr. Fishbein’s research interests included attitude theory and measurement, communication and persuasion, behavioral prediction and change and behaviors in field and laboratory settings including studies of the effectiveness of health-related behavior change interventions. He was president of both the Society for Consumer Psychology and the Interamerican Psychological Society.

Footnotes

Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the NICHD.

Other examples from business and economics research can be found in Diamantopoulos and Winklhofer (2001).

In our experience, if any of the three sets of IM underlying belief items do not scale, it is usually the underlying behavioral beliefs because they are both more heterogeneous and more numerous than the normative or efficacy/control belief items in all the reasoned action data sets we have analyzed.

Our approach is consistent with the suggestion of Jarvis, MacKenzie, and Podsakoff (2003, p. 202) that TRA belief items are causal indicators, although in their paper this is noted only as a potential application of such a measurement model.

For some researchers (Howell, Breivik, and Wilcox 2007), this is a fatal flaw because the measurement properties of a causal indicator index can never be evaluated apart from a more comprehensive structural model. However, effect indicator measurement models with only two items are similarly underidentified, yet we know of no demands to eliminate their use in data analysis.
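The identification point in this footnote can be illustrated with the usual counting rule for a single-factor effect indicator model. The sketch below assumes that the factor variance is fixed to 1, that errors are uncorrelated, and that only the covariance structure is modeled; the helper name `one_factor_df` is hypothetical.

```python
def one_factor_df(p: int) -> int:
    """Degrees of freedom for a one-factor effect indicator model with p items.

    Assumes the factor variance is fixed to 1 and errors are uncorrelated,
    so the free parameters are p loadings and p error variances.
    """
    unique_moments = p * (p + 1) // 2  # variances and covariances of the p items
    free_parameters = 2 * p            # p loadings + p error variances
    return unique_moments - free_parameters


for p in (2, 3, 4):
    print(p, one_factor_df(p))
# 2 -1  (underidentified when estimated in isolation)
# 3 0   (just identified)
# 4 2   (overidentified)
```

Under these assumptions a two-item model has negative degrees of freedom, so it too can be estimated only with added constraints or as part of a larger model.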

References

- Ajzen Icek, Fishbein Martin. Attitudes and the attitude-behavior relation: reasoned and automatic processes. In: Stroebe W, Hewstone M, editors. European Review of Social Psychology. New York: John Wiley & Sons Ltd; 2000.

- Albarracín Dolores, Johnson Blair, Fishbein Martin, Muellerleile Paige. Theories of reasoned action and planned behavior as models of condom use: A meta-analysis. Psychological Bulletin. 2001;127(1):142–161. doi: 10.1037/0033-2909.127.1.142.

- Anderson James, Gerbing David. Structural equation modeling in practice: a review and recommended two-step approach. Psychological Bulletin. 1988;103(3):411–423.

- Bollen Kenneth, Lennox Richard. Conventional wisdom on measurement: a structural equation perspective. Psychological Bulletin. 1991;110:305–314.

- Bollen Kenneth, Paxton Pamela. Detection and determinants of bias in subjective measures. American Sociological Review. 1998;63(3):465–478.

- Bollen Kenneth, Ting Kwok-fai. A tetrad test for causal indicators. Psychological Methods. 2000;5(1):3–22. doi: 10.1037/1082-989x.5.1.3.

- Borsboom Denny, Mellenbergh Gideon, VanHeerden Jaap. The theoretical status of latent variables. Psychological Review. 2003;110(2):203–219. doi: 10.1037/0033-295X.110.2.203.

- Brown Timothy. Confirmatory factor analysis for applied research. New York: Guilford; 2006.

- Carey Michael, Schroder Kerstin. Development and psychometric evaluation of the brief HIV Knowledge Questionnaire. AIDS Education and Prevention. 2002;14(2):172–182. doi: 10.1521/aeap.14.2.172.23902.

- Conner Mark, Norman Paul, Bell Russell. The theory of planned behavior and healthy eating. Health Psychology. 2002;21(2):194–201.

- Cooke Richard, Sniehotta Falko, Schüz Benjamin. Predicting binge-drinking behaviour using an extended TPB: examining the impact of anticipated regret and descriptive norms. Alcohol and Alcoholism. 2007;42:84–91. doi: 10.1093/alcalc/agl115.

- Diamantopoulos Adamantios, Riefler Petra, Roth Katharina. Advancing formative measurement models. Journal of Business Research. 2008;61:1203–1218.

- Diamantopoulos Adamantios, Siguaw Judy. Formative versus reflective indicators in organizational measure development: a comparison and empirical illustration. British Journal of Management. 2006;17:263–282.

- Diamantopoulos Adamantios, Winklhofer Heidi. Index construction with formative indicators: an alternative to scale development. Journal of Marketing Research. 2001;38(1):269–277.

- Edwards Jeffrey, Bagozzi Richard. On the nature and direction of relationships between constructs and measures. Psychological Methods. 2000;5(2):155–174. doi: 10.1037/1082-989x.5.2.155.

- Fayers P, Hand D. Factor analysis, causal indicators, and quality of life. Quality of Life Research. 1997;6:139–150. doi: 10.1023/a:1026490117121.

- Fayers Peter, Hand David, Bjordal Kristin, Groenvold Mogens. Causal indicators in quality of life research. Quality of Life Research. 1997;6:393–406. doi: 10.1023/a:1018491512095.

- Fishbein Martin. Intentional behavior. Encyclopedia of Applied Psychology. 2004;2:329–334.

- Flora David, Curran Patrick. An empirical evaluation of alternative methods of estimation for confirmatory factor analysis with ordinal data. Psychological Methods. 2004;9:466–491. doi: 10.1037/1082-989X.9.4.466.

- Gerbing David, Anderson James. On the meaning of within-factor correlated measurement errors. Journal of Consumer Research. 1984;11:572–580.

- Hagger Martin, Chatzisarantis Nikos, Biddle Stuart, Orbell Sheina. Antecedents of children’s physical activity intentions and behavior: predictive validity and longitudinal effects. Psychology and Health. 2001;16:391–407.

- Hardeman Wendy, Johnston Marie, Johnston Derek, Bonetti Debbie, Wareham Nicholas, Kinmonth Ann. Application of the theory of planned behaviour in behaviour change interventions: A systematic review. Psychology and Health. 2002;17(2):123–158.

- Hausenblas Heather, Carron Albert, Mack Diane. Application of the theories of reasoned action and planned behavior to exercise behavior: A meta-analysis. Journal of Sport and Exercise Psychology. 1997;19(1):36–51.

- Howell Roy, Breivik Einar, Wilcox James. Reconsidering formative measurement. Psychological Methods. 2007;12(2):205–218. doi: 10.1037/1082-989X.12.2.205.

- Jarvis Cheryl, MacKenzie Scott, Podsakoff Philip. A critical review of construct indicators and measurement model misspecification in marketing and consumer research. Journal of Consumer Research. 2003;30:199–218.

- Johnston John. Econometric methods. New York: McGraw Hill; 1984.

- Judge Timothy, Locke Edwin, Durham Cathy, Kluger Avraham. Dispositional effects on job and life satisfaction: the role of core evaluation. Journal of Applied Psychology. 1998;83(1):17–34. doi: 10.1037/0021-9010.83.1.17.

- Kaplan David. Structural equation modeling. Thousand Oaks, CA: Sage; 2000.

- Kline Rex. Principles and practice of structural equation modeling. New York: Guilford; 2005.

- Law Kenneth, Wong Chi-Sum. Multidimensional constructs in structural equation analysis: an illustration using the job perception and job satisfaction constructs. Journal of Management. 1999;25:143–160.

- MacCallum Robert, Browne Michael. The use of causal indicators in covariance structure models: some practical issues. Psychological Bulletin. 1993;114(3):533–541. doi: 10.1037/0033-2909.114.3.533.

- MacKenzie Scott, Podsakoff Philip, Jarvis Cheryl. The problem of measurement model misspecification in behavioral and organizational research and some recommended solutions. Journal of Applied Psychology. 2005;90(4):710–730. doi: 10.1037/0021-9010.90.4.710.

- McDonald Roderick. Structural models and the art of approximation. Perspectives on Psychological Science. 2010;5(6):675–686. doi: 10.1177/1745691610388766.

- Muthén Linda, Muthén Bengt. Mplus user's guide. Los Angeles, CA: Muthén & Muthén; 2006.

- Reise Steven, Henson James. A discussion of modern versus traditional psychometrics as applied to personality assessment scales. Journal of Personality Assessment. 2003;81(2):93–103. doi: 10.1207/S15327752JPA8102_01.

- Ringdal K, Ringdal G, Kaasa S, Bjordal K, Wisløff F, Sundstrøm S, Hjermstad M. Assessing the consistency of psychometric properties of the HRQoL scales within the EORTC QLQ-C30 across populations by means of the Mokken scaling model. Quality of Life Research. 1999;8:25–43. doi: 10.1023/a:1026419414249.

- Rockwell Richard. Assessment of multicollinearity: the Haitovsky test of the determinant. Sociological Methods and Research. 1975;3:308–320.

- Schneider Saundra, Jacoby William, Coggburn Jerrell. The structure of bureaucratic decisions in the American states. Public Administration Review. 1997;57(3):240–249.

- Sheeran Paschal, Taylor Steven. Predicting intentions to use condoms: a meta-analysis and comparison of the theories of reasoned action and planned behavior. Journal of Applied Social Psychology. 1999;29(8):1624–1675.

- Shipley Bill. Cause and correlation in biology: a user’s guide to path analysis, structural equations and causal inference. Cambridge: Cambridge University Press; 2002.

- Sijtsma Klaas. On the use, the misuse, and the very limited usefulness of Cronbach’s alpha. Psychometrika. 2009;74:101–120. doi: 10.1007/s11336-008-9101-0.

- Streiner David. Being inconsistent about consistency: when coefficient alpha does and doesn’t matter. Journal of Personality Assessment. 2003;80(3):217–222. doi: 10.1207/S15327752JPA8003_01.

- Streiner David. Starting at the beginning: an introduction to coefficient alpha and internal consistency. Journal of Personality Assessment. 2003;80(1):99–103. doi: 10.1207/S15327752JPA8001_18.

- Van Boxel Yvonne, Roest Frits, Bergen Michael, Stam Henk. Dimensionality and hierarchical structure of disability measurement. Archives of Physical Medicine and Rehabilitation. 1995;76:1152–1155. doi: 10.1016/s0003-9993(95)80125-1.

- Van De Ven Monique, Engels Rutger, Otten Roy, Van Den Eijnden Regina. A longitudinal test of the theory of planned behavior predicting smoking onset among asthmatic and non-asthmatic adolescents. Journal of Behavioral Medicine. 2007;30:435–445. doi: 10.1007/s10865-007-9119-2.