Abstract

Advantageous economic decision making requires flexible adaptation of gain‐based and loss‐based preference hierarchies. However, where the neuronal blueprints for economic preference hierarchies are kept and how they may be adapted remains largely unclear. Phasic cortical dopamine release likely mediates flexible adaptation of neuronal representations. In this PET study, cortical‐binding potential (BP) for the D2‐dopamine receptor ligand [11C]FLB 457 was examined in healthy participants during multiple sessions of a probabilistic four‐choice financial decision‐making task with two behavioral variants. In the changing‐gains/constant‐losses variant, the implicit gain‐based preference hierarchy was unceasingly changing, whereas the implicit loss‐based preference hierarchy was constant. In the constant‐gains/changing‐losses variant, it was the other way around. These variants served as paradigms, respectively, contrasting flexible adaptation versus maintenance of loss‐based and gain‐based preference hierarchies. We observed that in comparison with the constant‐gains/changing‐losses variant, the changing‐gains/constant‐losses variant was associated with a decreased D2‐dopamine receptor‐BP in the right lateral frontopolar cortex. In other words, lateral frontopolar D2‐dopamine receptor stimulation was specifically increased during continuous adaptation of mental representations of gain‐based preference hierarchies. This finding provides direct evidence for the existence of a neuronal blueprint of gain‐based decision‐making in the lateral frontopolar cortex and a crucial role of local dopamine in the flexible adaptation of mental concepts of future behavior. Hum Brain Mapp, 2013. © 2011 Wiley Periodicals, Inc.

Keywords: dopamine, feedback‐learning, frontopolar, FLB, imaging, gambling

INTRODUCTION

Which is the lesser evil? Which grass is greener? In everyday decision making, our preference is often based on differences in expected losses or gains. According to economic science [Machina, 1987], financial preference hierarchies can be formally expressed as a rank order of positive or negative expected values (+EV; −EV; e.g., +EV(action A) > +EV(action B) > … or −EV(action A) < −EV(action B) < …). Hence, the capability to form and adapt cortical representations of gain‐based and loss‐based preference hierarchies critically depends on the ability to integrate and relate positive and negative expected values of multiple alternative actions. Therefore, it would appear that high‐level associative areas of the prefrontal cortex, which are interconnected with limbic, attentional, and memory networks, are particularly apt for this task. Yet, exactly where gain‐ or loss‐based preference hierarchies are cortically represented and how adequate adaptation of those blueprints takes place remains largely unclear.

Prior research in this domain primarily used a four‐deck card game called the Iowa Gambling Task (IGT), which was devised to mimic real‐life human decision‐making with uncertain financial outcomes [Bechara et al., 1994]. The specific implicit goal of this task is to overcome a preference for decks that are associated with higher gains, yet even higher losses, thus resulting in a net loss. Advantageous decision making in the IGT further demands shifting preference toward decks offering lower gains, yet even lower losses, resulting in a net gain. Functional imaging studies using the IGT frequently found that activation of the ventromedial prefrontal cortex (vmPFC) was predictive of good performance in this task [Christakou et al., 2009; Lawrence et al., 2009; Northoff et al., 2006]. Moreover, patients with lesions of the vmPFC tend to perseverate on the decks with high gains but net losses [Bechara et al., 1994]. Generally speaking, research using the IGT supports a specific role of the vmPFC in updating mental representations of loss‐based preference hierarchies in decision making. However, which cortical area contains such a mental blueprint of gain‐based preference hierarchies is much less clear, and this question may in fact not be answerable using the IGT. Indeed, recent evidence stemming from functional imaging studies using different tasks points toward a specific role of the lateral frontopolar cortex (lFPC, lateral Brodmann area 10) in signaling choice preference in gain‐based decision making [Boorman et al., 2009; Roiser et al., 2010].

It is widely accepted that prefrontal dopaminergic stimulation generally plays a crucial role in the flexible adaptation of behavior [Cools and Robbins, 2004; Durstewitz and Seamans, 2008]. Although not unanimously [Euteneuer et al., 2009; Turnbull et al., 2006], studies in patients with Parkinson's disease or schizophrenia have found impaired performance in loss‐based decision making that was unbiased by working‐memory deficits, thus pointing toward an interference of dopamine with vmPFC function [Kobayakawa et al., 2008; Mimura et al., 2006; Pagonabarraga et al., 2007; Perretta et al., 2005; Rossi et al., 2010; Sevy et al., 2007; Shurman et al., 2005]. An increasingly influential theory of prefrontal cortex dopaminergic function formulates a specific role of cortical D2 receptor stimulation in the adaptation of cortical representations [Cohen et al., 2002; Durstewitz and Seamans, 2008]. A high level of D2 receptor stimulation is thought to favor flexible adaptation of cortical representations. In contrast, a low level of D2 receptor stimulation likely stabilizes cortical representations, leading to robust maintenance of the status quo [Cohen et al., 2002; Durstewitz and Seamans, 2008].

In this study, our goal was to investigate prefrontal dopaminergic stimulation during gain‐ and loss‐based decision‐making using similar behavioral tasks during PET using [11C]FLB 457, a chemical compound with great affinity for D2 receptors, which allows evaluation of extrastriatal dopamine release [Aalto et al., 2005]. Consequently, we felt it desirable to use a “two‐way” IGT of sorts. Therefore, we derived two variants of a novel four‐deck card game. In the changing‐gains/constant‐losses variant, mean gain‐based differences between decks were continuously changing, whereas mean loss‐based differences were kept constant. In the constant‐gains/changing‐losses variant, it was the other way around. Hence, these variants served as behavioral paradigms, which mutually contrasted a continuously high demand for flexible adaptation of mental representations (e.g., changing‐gains) with a rapidly decreasing demand to adapt mental representations (e.g., constant‐gains) of the gain‐based and loss‐based preference hierarchy.

We hypothesized that continuous adaptation of mental representations of loss‐based preference hierarchies would be associated with a relatively increased level of D2 receptor stimulation (i.e., release of dopamine) in the vmPFC. In contrast, we hypothesized that continuous adaptation of mental representations of gain‐based preference hierarchies is coupled with higher levels of D2 receptor stimulation (i.e., release of dopamine) in the lFPC. These hypotheses were tested using [11C]FLB 457 PET in a within‐subject repeated measures design across eight healthy individuals.

MATERIALS AND METHODS

Participants

Eight right‐handed healthy male college students (age, 21 ± 1.3 years; college education, 2 ± 1.2 years) participated in the study after being recruited by advertisement. Exclusion criteria were recent illicit substance use, a known psychiatric or neurological disorder or first‐degree relatives diagnosed with one, and any medical disorder likely to lead to cognitive impairment. After complete description of the study to the participants, written informed consent was obtained. The study was approved by the Research Ethics Committees for the Centre for Addiction and Mental Health of the University of Toronto.

Behavioral Task

Basic task structure.

Participants performed a four‐deck card game involving monetary gains and losses, which required choosing cards from a horizontal array of four stacks, so as to maximize payout. The stacks formed a pseudo‐randomized implicit hierarchy: each stack differed in average net return value from the next best stack in the hierarchy. Each turn, two random stacks were shaded and the other two remained available (see Fig. 1). Using their right hand, participants had to pick one of the two available stacks by pressing one of two buttons. Not pressing a button within 4 s resulted in a “penalty buzz” and a penalty of 100 Canadian cents. After pressing the button, the selected card flipped and revealed the return value for this trial (ranging from −40 to +40 Canadian cents). To maximize payout, participants had to learn the implicit hierarchy and pick a card from the stack with the higher average net return value (correct choice). Participants were advised that each stack contained an equal number of winning and losing cards (0.5 probability of picking a winning or a losing card for every stack), but that average positive or negative return values could differ between stacks. Across the experiment, all four stacks were available in similar frequencies. We decided to gray out two stacks, so that participants were forced to continuously explore stacks and “retest” the validity of a preliminary hierarchy.

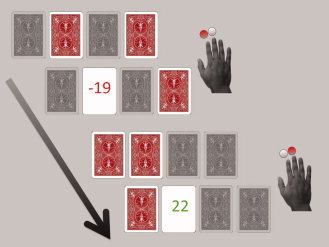

Figure 1.

Basic structure of the probabilistic financial decision‐making task. Each turn, two random stacks were shaded and two remained available. Participants had to pick one stack by pressing one of two buttons. After pressing the button, the selected card flipped and revealed the return value for this trial.
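The trial structure described in this subsection can be sketched in Python. This is a minimal illustration under stated assumptions, not the task software used in the study: the per‐stack mean magnitudes, the ±5 cent jitter, and all function names are hypothetical. Only the four stacks, the two available stacks per turn, the 0.5 win probability, and the −40 to +40 cent range are taken from the text.

```python
import random

N_STACKS = 4
MAX_ABS_RETURN = 40  # cents; range stated in the text

# Hypothetical mean gain/loss magnitudes per stack (cents), for illustration only.
MEAN_GAIN = [30, 25, 20, 15]
MEAN_LOSS = [10, 15, 20, 25]


def draw_available(rng):
    """Pick the two stacks that remain available (the other two are shaded)."""
    return sorted(rng.sample(range(N_STACKS), 2))


def draw_card(stack, rng):
    """Return a card value in cents: a win or a loss with probability 0.5 each."""
    jitter = rng.randint(-5, 5)  # hypothetical spread around the stack mean
    if rng.random() < 0.5:
        value = MEAN_GAIN[stack] + jitter
    else:
        value = -(MEAN_LOSS[stack] + jitter)
    # Keep the value inside the range reported for the task.
    return max(-MAX_ABS_RETURN, min(MAX_ABS_RETURN, value))


def expected_net_return(stack):
    """Average net return of a stack, given equal win and loss probability."""
    return 0.5 * MEAN_GAIN[stack] - 0.5 * MEAN_LOSS[stack]


def correct_choice(available):
    """The correct choice is the available stack with the higher net return."""
    return max(available, key=expected_net_return)
```

Because only two stacks are offered per turn, a participant can only compare stacks pairwise, which is why the full hierarchy has to be inferred across many trials.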

Behavioral variants.

Two variants were performed on separate days (with 6.7 ± 7 days in between) in a counterbalanced order. In the changing‐gains/constant‐losses variant (Fig. 2, left column), the relative difference between two stacks with respect to positive return values (i.e., rank in gain‐based hierarchy) was continuously changing, whereas the relative difference with respect to negative return values (i.e., rank in loss‐based hierarchy) was kept constant. In the constant‐gains/changing‐losses variant, it was the other way around (Fig. 2, right column).

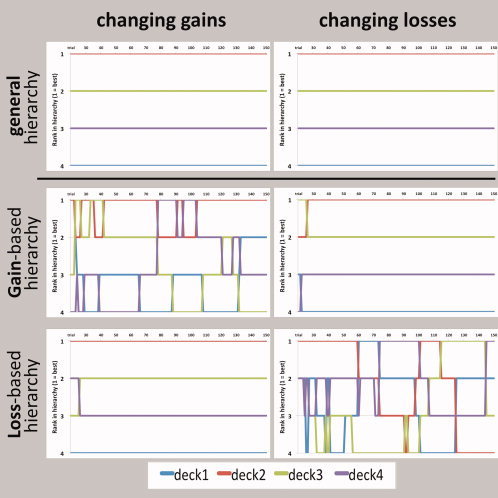

Figure 2.

Behavioral variants of the task. Exemplary data from single sessions. The general hierarchies (top row) were constant and the same for both variants. Left column: In the changing‐gains variant, the rank of a certain deck in the gain‐based hierarchy (middle row) was unceasingly changing, whereas the rank in the loss‐based hierarchy (bottom row) was constant. Right column: In the changing‐losses variant, it was the other way around.

The resulting respective differences in average net return value—which defined the implicit hierarchy—were the same for both variants. A series of nine sessions per variant was performed on separate days, in counterbalanced order. Each session consisted of 150 trials. Between sessions, there was a fixed pause of 90 s during which the participants were told to close their eyes and wait for a warning sound announcing the imminent start of the next session.

Performance‐based adaptation of task difficulty.

To minimize individual differences in learning performance, we established an algorithm to adapt task difficulty to performance (number of trials to learning criterion) during the experiment. The learning criterion was defined as 27 correct choices in 30 consecutive trials for all variants. We prepared 12 difficulty levels for each session by varying the respective differences in average net return value between one deck and the next best in the implicit hierarchy. Participants started with an intermediate level. If the learning criterion was met in the first 100 trials, difficulty level increased by 1. If the learning criterion was met in the last 50 trials, the level stayed the same for the next session. If the learning criterion was never met, difficulty level decreased by 1.
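The adaptation rule above can be written as a short Python sketch. Several details are assumptions made for illustration: the criterion is read as at least 27 correct choices within any sliding window of 30 consecutive trials, levels are numbered 1 to 12, and the level is clamped at both ends. The function names are hypothetical.

```python
from collections import deque

CRITERION_CORRECT = 27   # correct choices required ...
CRITERION_WINDOW = 30    # ... within this many consecutive trials
N_LEVELS = 12            # difficulty levels prepared per session
EARLY_CUTOFF = 100       # criterion met within the first 100 trials


def trial_of_criterion(correct):
    """Return the 1-based trial at which the criterion is first met, or None."""
    window = deque(maxlen=CRITERION_WINDOW)
    for trial, is_correct in enumerate(correct, start=1):
        window.append(bool(is_correct))
        if len(window) == CRITERION_WINDOW and sum(window) >= CRITERION_CORRECT:
            return trial
    return None


def next_level(level, correct):
    """Difficulty level for the next session, given this session's choices."""
    reached = trial_of_criterion(correct)
    if reached is None:
        step = -1          # criterion never met: easier
    elif reached <= EARLY_CUTOFF:
        step = 1           # criterion met early: harder
    else:
        step = 0           # criterion met in the last 50 trials: unchanged
    # Clamping to the available levels is an assumption, not stated in the text.
    return max(1, min(N_LEVELS, level + step))
```

For example, a session of 150 correct choices would raise the level by one, whereas a session in which the criterion is first met at trial 127 would leave it unchanged.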

After they completed the experiment for each day, participants had to rate the following items on a visual analogue scale: liking of the experiment, performance, reliance on negative return values, reliance on positive return values, and confidence of having learned the correct hierarchy. Rating results are found in Supporting Information Figure S1.

Positron Emission Tomography

PET scans were obtained with a high resolution PET CT, Siemens‐Biograph HiRez XVI (Siemens Molecular Imaging, Knoxville, TN) operating in 3D mode with an in‐plane resolution of ∼4.6 mm full width at half‐maximum. To minimize participants' head movements in the PET scanner, we used a custom‐made thermoplastic facemask together with a head‐fixation system (Tru‐Scan Imaging, Annapolis). Before each emission scan, following the acquisition of a scout view for accurate positioning of the participant, a low dose (0.2 mSv) CT scan was acquired and used for attenuation correction.

[11C]FLB 457 (half‐life: 20.4 min) was injected into the left antecubital vein over 60 s, and emission data were then acquired over a period of 90 min in 15 one‐minute frames and 15 five‐minute frames [Olsson et al., 2004]. The injected amount was 10 ± 0.5 mCi for the changing‐gains/constant‐losses variant; 10 ± 0.6 mCi for the constant‐gains/changing‐losses variant.
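The frame schedule and the radioactive decay implied by the stated half‐life can be checked with a few lines of Python. This is only an arithmetic sketch; the function names are hypothetical, and it does not reproduce any part of the actual reconstruction pipeline.

```python
HALF_LIFE_MIN = 20.4  # carbon-11 half-life in minutes, as stated in the text

# 15 one-minute frames followed by 15 five-minute frames
frame_durations = [1] * 15 + [5] * 15


def frame_times(durations):
    """Return (start, end) times in minutes for consecutive frames."""
    times, start = [], 0
    for duration in durations:
        times.append((start, start + duration))
        start += duration
    return times


def remaining_fraction(t_min, half_life=HALF_LIFE_MIN):
    """Fraction of the injected activity left after t_min minutes of decay."""
    return 0.5 ** (t_min / half_life)


frames = frame_times(frame_durations)
total_minutes = frames[-1][1]  # end of the last frame
```

The 30 frames add up to the stated 90 min, and by the end of the scan less than 5% of the injected activity remains, which is one reason later frames are longer than early ones.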

High‐resolution MRI (GE Signa 1.5 T, T1‐weighted images, and 1‐mm slice thickness) of each participant's brain was acquired and transformed into standardized stereotaxic space using nonlinear automated feature‐matching to the MNI template [Collins et al., 1994; Robbins et al., 2004]. PET frames were summed, registered to the corresponding MRI, and transformed into standardized stereotaxic space using the transformation parameters of the individual structural MRIs [Collins et al., 1994; Robbins et al., 2004]. Voxelwise [11C]FLB 457 binding potential (BP) was calculated using a simplified reference tissue (cerebellum) method [Gunn et al., 1997; Lammertsma and Hume, 1996; Sudo et al., 2001] to generate statistical parametric images of change in BP [Aston et al., 2000]. This method uses the residuals of the least‐squares fit of the compartmental model to the data at each voxel to estimate the standard deviation of the BP estimate. The individual level of feedback‐learning was included in the statistical model (general linear model) as a nuisance variable [Gschwandtner et al., 2001]. Parametric images of [11C]FLB 457 BP were smoothed with an isotropic Gaussian of 6‐mm full width at half‐maximum to accommodate intersubject anatomical variability. A threshold level of t > 4.5 was considered significant (t‐test, p < 0.05, two‐tailed) corrected for multiple comparisons [Friston et al., 1997; Worsley et al., 1996] for the regions with a priori hypothesis (i.e., frontal pole/BA 10/SFG). The volume of interest was extracted using the WFU PickAtlas and included 12,537 voxels and 100,296 mm3 [Friston et al., 1997].
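As a quick consistency check on the volume of interest reported above, the voxel count and total volume can be related in Python. The voxel edge length is not stated in the text; the 2‐mm isotropic value below is only what the two reported numbers imply if voxels are cubic.

```python
N_VOXELS = 12_537       # voxels in the volume of interest (from the text)
VOLUME_MM3 = 100_296    # total volume in cubic millimetres (from the text)

# Volume of a single voxel implied by the two reported numbers.
voxel_volume_mm3 = VOLUME_MM3 / N_VOXELS

# Edge length under the assumption of cubic (isotropic) voxels.
voxel_edge_mm = round(voxel_volume_mm3 ** (1 / 3), 6)
```

Under that assumption each voxel measures 8 mm3, so the 8 mm3 extent reported for the lFPC peak in the PET results would correspond to a single voxel.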

RESULTS

Behavior

Average duration of the behavioral experiment (nine sessions) was 83 ± 6 min for both variants. Because of the adaptation algorithm (for individual levels per session, see Supporting Information Table S1), we observed similar respective average learning curves with relatively low variability for both variants (see Fig. 3). Average percent correct choices per bin of 10 trials increased from 58% ± 9% to 85% ± 12% in the changing‐gains/constant‐losses variant and from 54% ± 7% to 85% ± 14% in the constant‐gains/changing‐losses variant. Overall, payout was similar in both variants (changing‐gains/constant‐losses: 1999 ± 299; constant‐gains/changing‐losses: 1441 ± 760 Canadian cents).
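The learning‐curve measure used here, percent correct choices per bin of 10 trials, can be computed as in the following Python sketch. The function name and the requirement that the session length be a multiple of the bin size are assumptions for illustration.

```python
def percent_correct_per_bin(correct, bin_size=10):
    """Percent correct choices in consecutive, non-overlapping bins of trials."""
    if len(correct) % bin_size != 0:
        raise ValueError("number of trials must be a multiple of bin_size")
    return [
        100.0 * sum(bool(c) for c in correct[i:i + bin_size]) / bin_size
        for i in range(0, len(correct), bin_size)
    ]
```

Applied to one 150‐trial session, this yields 15 values; averaging each bin across sessions and participants would give a curve of the kind summarized above.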

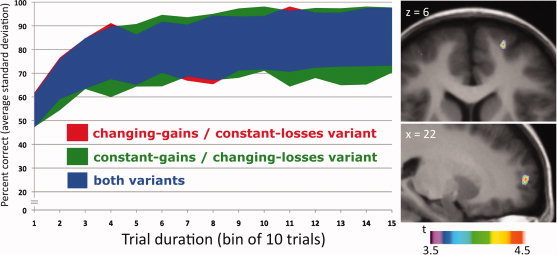

Figure 3.

Results. The graph on the left depicts the learning curves for both variants of the task, that is, average ± standard deviation of percent correct choices (vertical axis) per bin of 10 trials (horizontal axis). The pictures on the right show statistical parametric maps of the change in [11C]FLB 457 BP overlaid upon a transverse (top) and a sagittal (bottom) section of the average MRI of all subjects in standardized stereotaxic space. The performance of the changing‐gains/constant‐losses variant was associated with decreased [11C]FLB 457 BP (i.e., increased dopamine release) in the right lFPC (x = 22, y = 60, z = 6; t = 4.62; P < 0.05 corr.) compared to the constant‐gains/changing‐losses variant.

PET Results

Performing the changing‐gains/constant‐losses variant was associated with decreased [11C]FLB 457 BP in the right lFPC (X = 22; Y = 60; Z = 6; 8 mm3; t = 4.62; p < 0.05, corrected for multiple comparisons) compared to the constant‐gains/changing‐losses variant (see Fig. 3). The mean BP of [11C]FLB 457 extracted from a spherical region of interest (r = 6 mm) centered at the statistical peak revealed by the parametric map was 0.668 ± 0.023 and 0.582 ± 0.030, respectively [t(7) = 2.428, p = 0.023, one‐tailed].

When using a less conservative threshold (uncorrected for multiple comparisons), the constant‐gains/changing‐losses variant was associated with a decreased BP in the left vmPFC (X = −6; Y = 60; Z = −22; t = 4.46) compared to the changing‐gains/constant‐losses variant. The mean BP of [11C]FLB 457 extracted from a spherical region of interest (r = 6 mm) centered at the statistical peak revealed by the parametric map was 0.585 ± 0.053 during the constant‐gains/changing‐losses variant and 0.640 ± 0.036 during the changing‐gains/constant‐losses variant [t(7) = 0.488, p = 0.320, one‐tailed], signifying a trend decrease in BP associated with the adaptation of loss‐based preference hierarchies.

DISCUSSION

This study provides preliminary evidence that the adaptation of gain‐based preference hierarchies (“Which grass is greener?”) depends on D2‐receptor stimulation in the right lFPC. [11C]FLB 457 BP was investigated while participants performed two behavioral variants of a probabilistic financial decision‐making task resulting in similar overall payouts. In both variants, participants equally learned to make optimal decisions based on a hierarchy of positive or negative expected values, respectively. As a benefit for the reliability of the results, especially in a study with a relatively small number of observations, we observed only minor variance in individual learning curves. We ascribe this to the fact that task difficulty was adapted to performance during the experiment and that participants were relatively homogeneous with respect to age and educational background.

When interpreting the decrease of BP in the changing‐gains/constant‐losses variant relative to the constant‐gains/changing‐losses variant, one has to consider that behaviorally, these variants contrast a decreasing demand to adapt mental representations of a gain‐based preference hierarchy with a constantly high demand for adaptation in the futile attempt to form an accurate gain‐based preference hierarchy. We therefore argue that our findings reflect a continuously increased D2‐receptor stimulation with continuous demand to adapt gain‐based preference hierarchies in the changing‐gains/constant‐losses variant in contrast to a “decreasingly increased” D2‐receptor stimulation with a decreasing demand to adapt gain‐based preference hierarchies in the constant‐gains/changing‐losses variant.

This conclusion is not only based on anatomical a priori information, but also on the premise that with [11C]FLB 457 PET, we are only able to describe a decrease in BP, not an increase due to a hypothetical decrease of synaptic dopamine levels below baseline [Frankle et al., 2010].

We also report a trend decrease of [11C]FLB 457 BP in the vmPFC associated with the adaptation of loss‐based preference hierarchies. Although not surviving the correction for multiple comparisons, this result may strengthen the assumption that the vmPFC plays an important role in loss‐based decision‐making coming from studies using the original IGT [Bechara et al., 1994]. Moreover, it may corroborate studies describing an influence of dopaminergic stimulation on loss‐based decision‐making in the IGT [Kobayakawa et al., 2008; Mimura et al., 2006; Pagonabarraga et al., 2007; Perretta et al., 2005; Rossi et al., 2010; Sevy et al., 2007; Shurman et al., 2005].

Generally, the findings underscore the notion that prefrontal D2‐receptor stimulation plays an important role in decision‐making by facilitating the adaptation of future behavior [Cools and Robbins, 2004].

This study generally supports the role of the lFPC in future‐oriented, goal‐directed behavior. In functional imaging studies, activation of the lFPC has been reported for many high‐level tasks, such as rule learning [Strange et al., 2001], postretrieval evaluation in episodic memory retrieval [Shallice et al., 1994], and memory‐based guidance of visual selection [Soto et al., 2007]. Specific sustained activation of the lFPC has also been described during strategic monitoring of the environment for intention‐relevant cues in a prospective memory task [Reynolds et al., 2009]. According to Burgess et al. [2007], the lFPC is primarily engaged in contextual episodic memory associated with stimulus‐independent manipulation of central representations. Of particular interest with regard to the present study is the implication of the lFPC in relational integration [Bunge et al., 2009; Christoff et al., 2001]. Our task specifically required participants to mentally rank card decks according to their expected values. In this context, the ability to make transitive relational inferences (if “deck A is better than deck B” and “deck B is better than deck C” then “deck A is better than deck C”) certainly constitutes a behavioral advantage.

Of note, two recent fMRI studies directly implicate the lFPC in guiding behavior according to neural representations of value hierarchy of behavioral options. One suggests that the lFPC mediates a behavioral switch in reward‐based decisions [Boorman et al., 2009], when empirical evidence is in favor of doing so. The other found that adaptive reward learning was highly correlated with activity in the lateral frontal pole in a probabilistic reward‐learning task [Roiser et al., 2010].

One may argue that the common theme of the suggested functions of the lFPC is that they require online comparison of ongoing events with longer‐range strategies or hypotheses in goal‐directed behavior. Our findings would certainly be in line with this proposition.

Supporting information

Additional Supporting Information may be found in the online version of this article.


Acknowledgements

We thank all the staff of the CAMH‐PET imaging center for their assistance in carrying out the studies. The authors declare that they have no relevant conflicts of interest.

REFERENCES

- Aalto S, Bruck A, Laine M, Nagren K, Rinne JO (2005): Frontal and temporal dopamine release during working memory and attention tasks in healthy humans: A positron emission tomography study using the high‐affinity dopamine D2 receptor ligand [11C]FLB 457. J Neurosci 25: 2471–2477.

- Aston JA, Gunn RN, Worsley KJ, Ma Y, Evans AC, Dagher A (2000): A statistical method for the analysis of positron emission tomography neuroreceptor ligand data. Neuroimage 12: 245–256.

- Bechara A, Damasio AR, Damasio H, Anderson SW (1994): Insensitivity to future consequences following damage to human prefrontal cortex. Cognition 50: 7–15.

- Boorman ED, Behrens TE, Woolrich MW, Rushworth MF (2009): How green is the grass on the other side? Frontopolar cortex and the evidence in favor of alternative courses of action. Neuron 62: 733–743.

- Bunge SA, Helskog EH, Wendelken C (2009): Left, but not right, rostrolateral prefrontal cortex meets a stringent test of the relational integration hypothesis. Neuroimage 46: 338–342.

- Burgess PW, Gilbert SJ, Dumontheil I (2007): Function and localization within rostral prefrontal cortex (area 10). Philos Trans R Soc Lond B Biol Sci 362: 887–899.

- Christakou A, Brammer M, Giampietro V, Rubia K (2009): Right ventromedial and dorsolateral prefrontal cortices mediate adaptive decisions under ambiguity by integrating choice utility and outcome evaluation. J Neurosci 29: 11020–11028.

- Christoff K, Prabhakaran V, Dorfman J, Zhao Z, Kroger JK, Holyoak KJ, Gabrieli JD (2001): Rostrolateral prefrontal cortex involvement in relational integration during reasoning. Neuroimage 14: 1136–1149.

- Cohen JD, Braver TS, Brown JW (2002): Computational perspectives on dopamine function in prefrontal cortex. Curr Opin Neurobiol 12: 223–229.

- Collins DL, Neelin P, Peters TM, Evans AC (1994): Automatic 3D intersubject registration of MR volumetric data in standardized Talairach space. J Comput Assist Tomogr 18: 192–205.

- Cools R, Robbins TW (2004): Chemistry of the adaptive mind. Philos Trans A Math Phys Eng Sci 362: 2871–2888.

- Durstewitz D, Seamans JK (2008): The dual‐state theory of prefrontal cortex dopamine function with relevance to catechol‐o‐methyltransferase genotypes and schizophrenia. Biol Psychiatry 64: 739–749.

- Euteneuer F, Schaefer F, Stuermer R, Boucsein W, Timmermann L, Barbe MT, Ebersbach G, Otto J, Kessler J, Kalbe E (2009): Dissociation of decision‐making under ambiguity and decision‐making under risk in patients with Parkinson's disease: A neuropsychological and psychophysiological study. Neuropsychologia 47: 2882–2890.

- Frankle WG, Mason NS, Rabiner EA, Ridler K, May MA, Asmonga D, Chen CM, Kendro S, Cooper TB, Mathis CA, et al. (2010): No effect of dopamine depletion on the binding of the high‐affinity D2/3 radiotracer [11C]FLB 457 in the human cortex. Synapse 64: 879–885.

- Friston KJ, Buechel C, Fink GR, Morris J, Rolls E, Dolan RJ (1997): Psychophysiological and modulatory interactions in neuroimaging. Neuroimage 6: 218–229.

- Gschwandtner U, Aston J, Renaud S, Fuhr P (2001): Pathologic gambling in patients with Parkinson's disease. Clin Neuropharmacol 24: 170–172.

- Gunn RN, Lammertsma AA, Hume SP, Cunningham VJ (1997): Parametric imaging of ligand‐receptor binding in PET using a simplified reference region model. Neuroimage 6: 279–287.

- Kobayakawa M, Koyama S, Mimura M, Kawamura M (2008): Decision making in Parkinson's disease: Analysis of behavioral and physiological patterns in the Iowa gambling task. Mov Disord 23: 547–552.

- Lammertsma AA, Hume SP (1996): Simplified reference tissue model for PET receptor studies. Neuroimage 4(3, Pt 1): 153–158.

- Lawrence NS, Jollant F, O'Daly O, Zelaya F, Phillips ML (2009): Distinct roles of prefrontal cortical subregions in the Iowa Gambling Task. Cereb Cortex 19: 1134–1143.

- Machina MJ (1987): Decision‐making in the presence of risk. Science 236: 537–543.

- Mimura M, Oeda R, Kawamura M (2006): Impaired decision‐making in Parkinson's disease. Parkinsonism Relat Disord 12: 169–175.

- Northoff G, Grimm S, Boeker H, Schmidt C, Bermpohl F, Heinzel A, Hell D, Boesiger P (2006): Affective judgment and beneficial decision making: Ventromedial prefrontal activity correlates with performance in the Iowa Gambling Task. Hum Brain Mapp 27: 572–587.

- Olsson H, Halldin C, Farde L (2004): Differentiation of extrastriatal dopamine D2 receptor density and affinity in the human brain using PET. Neuroimage 22: 794–803.

- Pagonabarraga J, Garcia‐Sanchez C, Llebaria G, Pascual‐Sedano B, Gironell A, Kulisevsky J (2007): Controlled study of decision‐making and cognitive impairment in Parkinson's disease. Mov Disord 22: 1430–1435.

- Perretta JG, Pari G, Beninger RJ (2005): Effects of Parkinson disease on two putative nondeclarative learning tasks: Probabilistic classification and gambling. Cogn Behav Neurol 18: 185–192.

- Reynolds JR, West R, Braver T (2009): Distinct neural circuits support transient and sustained processes in prospective memory and working memory. Cereb Cortex 19: 1208–1221.

- Robbins S, Evans AC, Collins DL, Whitesides S (2004): Tuning and comparing spatial normalization methods. Med Image Anal 8: 311–323.

- Roiser JP, Stephan KE, den Ouden HE, Friston KJ, Joyce EM (2010): Adaptive and aberrant reward prediction signals in the human brain. Neuroimage 50: 657–664.

- Rossi M, Gerschcovich ER, de Achaval D, Perez‐Lloret S, Cerquetti D, Cammarota A, Ines Nouzeilles M, Fahrer R, Merello M, Leiguarda R (2010): Decision‐making in Parkinson's disease patients with and without pathological gambling. Eur J Neurol 17: 97–102.

- Sevy S, Burdick KE, Visweswaraiah H, Abdelmessih S, Lukin M, Yechiam E, Bechara A (2007): Iowa gambling task in schizophrenia: A review and new data in patients with schizophrenia and co‐occurring cannabis use disorders. Schizophr Res 92: 74–84.

- Shallice T, Fletcher P, Frith CD, Grasby P, Frackowiak RS, Dolan RJ (1994): Brain regions associated with acquisition and retrieval of verbal episodic memory. Nature 368: 633–635.

- Shurman B, Horan WP, Nuechterlein KH (2005): Schizophrenia patients demonstrate a distinctive pattern of decision‐making impairment on the Iowa Gambling Task. Schizophr Res 72: 215–224.

- Soto D, Humphreys GW, Rotshtein P (2007): Dissociating the neural mechanisms of memory‐based guidance of visual selection. Proc Natl Acad Sci USA 104: 17186–17191.

- Strange BA, Henson RN, Friston KJ, Dolan RJ (2001): Anterior prefrontal cortex mediates rule learning in humans. Cereb Cortex 11: 1040–1046.

- Sudo Y, Suhara T, Inoue M, Ito H, Suzuki K, Saijo T, Halldin C, Farde L (2001): Reproducibility of [11C]FLB 457 binding in extrastriatal regions. Nucl Med Commun 22: 1215–1221.

- Turnbull OH, Evans CE, Kemish K, Park S, Bowman CH (2006): A novel set‐shifting modification of the Iowa gambling task: Flexible emotion‐based learning in schizophrenia. Neuropsychology 20: 290–298.

- Worsley KJ, Marrett S, Neelin P, Vandal AC, Friston KJ, Evans AC (1996): A unified statistical approach for determining significant signals in images of cerebral activation. Hum Brain Mapp 4: 58–73.
