Abstract

Objectives: To explore whether structuring a literature search request form according to an evidence-based medicine (EBM) anatomy elicits more information, improves precision of search results, and is acceptable to participating librarians.

Methods: A multicenter before-and-after study involved six libraries. Data from 195 minimally structured forms collected over four months (Phase 1) were compared with data from 185 EBM-structured forms collected over a further four-month period following a brief training intervention (Phase 2). A survey of librarians' attitudes toward using the EBM-structured forms was conducted early in Phase 2.

Results: A total of 380 request forms (185 EBM-structured and 195 minimally structured) were analyzed using SPSS. A statistically significant Pearson correlation was found between use of the EBM-structured form and complexity of the search strategy (P = 0.002). The correlation between clinical requests handled by the EBM-structured form and fewer items retrieved was also statistically significant (P = 0.028). However, librarians rated minimally structured forms more highly than EBM-structured forms against all dimensions except informativeness.

Conclusions: Although use of the EBM-structured forms is associated with more precise searches and more detailed search strategies, considerable work remains on making these forms acceptable to both librarians and users. Nevertheless, with increased familiarity and improved training, information retrieval benefits could be translated into more effective search practice.

BACKGROUND

The study of questions provides an “insight into the mental activities of participants engaged in problem solving or decision making” [1]. From a library and information science (LIS) perspective, questions are often viewed as manifestations of information needs. Within the specific context of clinical decision making, the identification of, and subsequent satisfaction of, information needs can lead to accurate diagnosis, selection of the most appropriate course of treatment, and, ultimately, improved health status. The recent emergence of evidence-based medicine (EBM), described as a new paradigm for medicine [2], has resulted in a renewed interest in the questioning behavior of clinicians, as a prerequisite to their timely and appropriate decision making. EBM seeks to isolate, from the plethora of new information that threatens to swamp the health professional, that small proportion of the knowledgebase (estimated as no more than 1% of the whole) required for the practice of patient care; hence the maxim “drowning in information, thirsting for evidence” [3]. Thus, the search for information is restricted to scenarios of immediate concern to an individual's own practice [4].

Proponents of EBM have identified the first of five sequential stages of the process as being that whereby “information needs from practice are converted into focused, structured questions” [5]. This process is facilitated by the use of an “anatomy” of a question [6], whereby the original problem is deconstituted into four parts: the Patient or Population, an Intervention or Exposure, measurable Outcomes and, optionally, a Comparison. This technique was originally proposed as a self-help tool for clinicians, most specifically medical students. It has subsequently been used as a template for search preparation and strategy development in search skills sessions [7]. The investigators in a British Library–funded project, Automated Retrieval of Clinically-relevant Evidence (AuRACLE) [8], decided to explore a previously advanced hypothesis [9] that the structured anatomy of a question would also prove valuable in the clinician-intermediary pre-search interaction. This article attempts to relate the findings of the AuRACLE Project to the broader context of questioning behavior.

LITERATURE REVIEW

Studies of the reference interview

Over many years, the reference interview has evolved as a mechanism for mediating interactions between information seekers and the systems, manual or automated, that serve them. Specifically, with the increasing availability of computerized bibliographic information systems, the “pre-search reference interview” became an important stratagem for targeting what was perceived initially as an expensive but powerful addition to the reference librarian's armory. One commentator has argued that the advent of the online or computer reference service has changed both the setting for the reference interview and the relationship between the librarian and the client [10]. Librarians have been taught to apply more scientific methods to dealing with the user's information needs, methods that arguably could also be applied in traditional reference services. Other commentators [11–12] have argued the corollary of this application, namely that traditional reference interview skills make computer search strategies more effective. Nevertheless, the literature on the role of questions in the reference interview has been relatively sparse. The review by White [13] found that only Lynch [14] and Ingwersen and Kaae [15] have considered questions librarians asked during the reference interview in some detail. Both these studies were conducted in a public library setting. Similarly, although a number of authors have proposed the use of a structured approach to the reference interview [16], few have chosen to apply this to the health information field.

Factors studied in relation to the reference interview have included the number of questions per interview, the mode of questions (closed or open, probes), the nature of probes (echo or confirmation; clarification, echo, and extension), and content sought. White has devised a typology to represent the content objectives of questions [17]:

▪ problem (the reason for soliciting the information or underlying context for the question)

▪ subject (what the question is about)

▪ service requested (what the client wants to know about the subject)

▪ external constraints (situational constraints that may affect choice or packaging of information)

▪ internal constraints (characteristics of the questioner that may influence choice or packaging of information; e.g., knowledge of the subject matter)

▪ prior search history

▪ output (characteristics of the search output; e.g., number of items, elements in the format)

▪ search strategy (e.g., Boolean logic, database characteristics)

▪ logistics/closure (information related to the logistics of doing the search)

Typically, literature request forms contained a number of open-ended elements (e.g., for a free-text description of the problem or subject) and a number of closed, limited response items (e.g., language, year-range, types of material) that mainly governed the characteristics of the search output. The authors investigated the effect of imposing a structure or anatomy on the description of the problem or subject, more typically handled as an open-ended element.

Certain factors in the interaction between librarian and clinical staff make an already complicated communication process even more complex. For example, a study of fifty-nine information exchanges between a librarian and hospital staff [18] revealed a high prevalence of technical jargon; 29% of the information exchanges contained some sort of jargon, whether acronyms, book and periodical identification, online searching, or eponyms. However, the study concluded that the ability of librarians to use highly technical language to communicate with health care professionals might cause the latter to view librarians as members of the medical team. Use of a structured anatomy to elicit a more complete picture of the originating information need (and thus a greater technical component) may improve communication and move the librarian toward a more active role in the delivery of patient-specific, evidence-based health information.

Studies of clinical questioning behavior

Various studies reported that clinicians asked 1 question of medical knowledge for every 2 patients seen (primary care physicians [19]), 2 questions for every 3 patients seen (office practice physicians [20]), or 2.4 questions for every 10 patients seen (family practice [21]). Of these questions, a consistently observed 30% [22–23] were pursued for an answer through a wide variety of information sources. Information sources consulted included human sources (e.g., colleagues and pharmacists), printed sources (e.g., textbooks and personal reprint collections), or computer-based sources (e.g., MEDLINE). Those questions presented to computer-based sources were likely to constitute only a minute proportion of the original set of patient-derived questions (possibly less than 1% [24], extrapolated from Gorman [25]). Online searching (by which term the authors also include the use of CD-ROM databases) has been characterized [26] as having moderate relevance and usefulness and high validity, but as necessitating a large amount of time and effort to extract information. This searching might be conducted by an intermediary, typically a medical librarian, or, increasingly, by the clinical end user.

The goals of the medical librarian in supporting clinical practice through intermediary searching might be described as threefold:

▪ to maximize the relevance and usefulness of literature search results

▪ to optimize retrieval of high-quality research studies to answer a question derived from a patient-centered clinical question [27]

▪ to make the pre-search interview and interaction with database sources as efficient and productive as possible

Optimally, a search is conducted with requester and intermediary working in tandem—the former supplying detailed clinical knowledge and immediate relevance feedback, the latter using information skills in command language, search logic, and search result limitation methods. However, it often proves impractical for clinician and librarian to conduct a “real time” search together either at the point of request or on a subsequent occasion. Typically, therefore, a clinician-intermediary interaction in requesting a literature search involves a brief interview (less than five minutes), conducted either via face-to-face contact or over the telephone, with details recorded on a minimally structured literature search request form.

METHODS

The authors sought to explore whether structuring the literature search request form according to an evidence-based medicine (EBM) anatomy would (1) elicit more information that subsequently might be used in a search strategy, (2) improve the precision of search results, and (3) prove acceptable to participating librarians. Reports of user satisfaction from the clinicians would also be very important. However, the authors considered a positive reaction from the librarians to be prerequisite, in terms of both implementation and compliance, to obtaining user views. If the librarians could not see a benefit from a change in procedure, it was unlikely that their users would seek to instigate such a change.

Study design

To demonstrate an effect of using an EBM-structured literature search request form, compared with a minimally structured form, a randomized controlled trial would have been the study design of choice. Users of literature search services would be randomized to receive an interview using either an EBM-structured or a minimally structured form. Such an option was discussed at length by the research team but dismissed on the grounds of impracticality. It would be impossible to have the same information professionals contemporaneously offering EBM-structured and minimally structured variants of the form, because of the possible acquisition of question-handling skills. Similarly, intra-service contamination, particularly likely where small teams were involved, might result if colleagues received differential training in the reference interview. Randomization by library, rather than by requester or librarian, would require a much larger sample than study logistics allowed.

A before-and-after design was used involving six multidisciplinary libraries in the Midlands of England. Participants included two university health sciences libraries, one health service research library, and three district hospital libraries serving clinicians, undergraduate and postgraduate health sciences students, and researchers. All library staff involved in delivering inquiry services to users were included, irrespective of whether they were professionally qualified or paraprofessional. No attempt was made to control for age of staff or years of library experience.

One hundred ninety-five minimally structured forms were collected over a four-month period (Phase 1). A brief onsite training program, centering on use of the EBM-structured form, was then delivered to each site. Upon completion of the training program, participating libraries were admitted to Phase 2, again over a four-month period. One hundred eighty-five EBM-structured forms were collected during Phase 2.

The results of a search were sent to each requester with (1) an assessment of search results form and (2) a duplicate copy of search results, on which they were to mark relevant articles. Both these items were then to be returned to the library for analysis. Data collected in this manner included:

▪ User satisfaction—users marked on a linear scale how well the references answered their question.

▪ User perceptions—users could add qualitative comments about search strengths, weaknesses, and possible improvements into a free-text feedback section.

▪ Relevance—upon receipt of the search results, users marked those references thought to be relevant to their request and an overall figure for relevance was calculated as a percentage of the total number of items retrieved by the search. For example, if two references from a search of 100 references were marked by the user, the relevance would be 2%.
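The relevance figure described in the last item above is a simple proportion. A minimal sketch of the calculation follows; the function name and the input checks are illustrative assumptions and are not taken from the study's own procedures.

```python
def relevance_percentage(marked_relevant: int, total_retrieved: int) -> float:
    """Share of retrieved references that the requester marked as relevant, in percent."""
    if total_retrieved <= 0:
        raise ValueError("total_retrieved must be positive")
    if not 0 <= marked_relevant <= total_retrieved:
        raise ValueError("marked_relevant must lie between 0 and total_retrieved")
    return 100.0 * marked_relevant / total_retrieved


# The worked example from the text: 2 marked references out of 100 retrieved.
print(relevance_percentage(2, 100))  # 2.0
```

Because the denominator is the number of items retrieved, this figure corresponds to what the article elsewhere calls the precision of the search results.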

In addition, a crude measure of complexity of search strategy was obtained by quantifying the overall length of the saved strategy. Aside from the effect of the type of form used, the topics of searches were assumed to be equally complex in the before and after periods. The use of nearly 200 forms in each period was expected to reduce the likelihood of any imbalance.

Shortly after starting to collect data for Phase 2, the research team approached twenty participating librarians at the six sites with a questionnaire asking for their views about using the EBM-structured form compared to the previously used, minimally structured form.

The EBM-structured literature search form

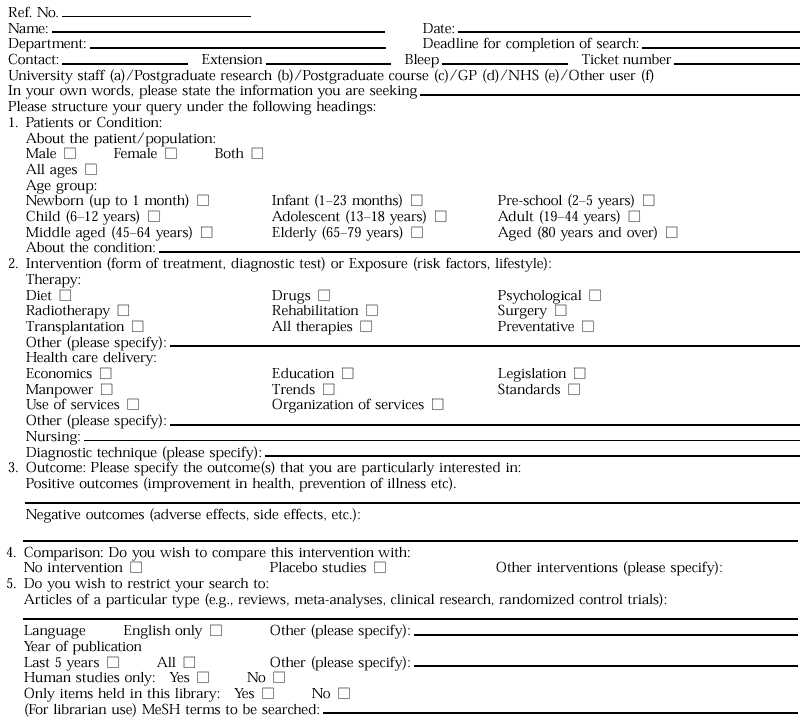

The EBM-structured literature search form sought to harness the EBM paradigm through inclusion of four sections that corresponded to the anatomy of the question. Firstly, the Patient/Population was subdivided into sections to elicit both the Patient and the Condition for a specific question (e.g., elderly and diabetes-mellitus-non-insulin-dependent). A check box was provided to make explicit the age-specific definitions used by the MEDLINE database. Next, came the Intervention section, supplemented by those subheadings that related to either therapeutic or diagnostic interventions or other most commonly used aspects. Then, the requester was prompted for specific Outcomes of interest, with positive and negative outcomes being solicited separately. Finally, the basis for Comparison, be it controlled by placebo or with an alternative intervention, was recorded.

A copy of the EBM-structured form is included in the appendix. The minimally structured form differed from this form only in that it had a single free-text box instead of the four structured Problem, Intervention, Outcomes, and Comparison boxes. An abbreviated online version of the EBM-structured form is available on the Web [28].

Training program

Training in the basics of evidence-based medicine—in recognizing the main types of questions and applying a corresponding methodological filter and in deconstituting a request into an EBM anatomy—was tailored to the needs of individual sites. The purpose was to prepare staff to use the EBM-structured literature form with additional training components being regarded as supplementary. In three cases, a half-day workshop was delivered at participating sites. In a further two instances, staff were split for logistical reasons between two back-to-back sessions over an afternoon. The remaining site opted to use the EBM-structured literature forms following a short verbal briefing.

RESULTS

A total of 380 information request forms, EBM-structured (N = 185) and minimally structured (N = 195), were analyzed using SPSS for Windows version 6, against variables identified above. Pearson correlations were sought, a level of P < 0.05 being used to define significance. In recognition of the fact that the EBM anatomy was designed for “clinical” inquiries, an independent assessment (i.e., blinded as to outcomes) was made to evaluate, simply on the basis of the content of the request, whether requests were suitable for this paradigm (i.e., clinical) or not (i.e., nonclinical). A sensitivity analysis was then conducted to see whether findings were significant specifically within this limited context.
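The analysis itself was run in SPSS. As a rough illustration only, a Pearson correlation between a two-valued form-type indicator and a numeric variable can be sketched in plain Python as below. The 0/1 coding of form type, the function names, and the toy data are all assumptions made for this example; none of the values are study data.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 3:
        raise ValueError("need two sequences of equal length, at least 3 items")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic (n - 2 degrees of freedom) for testing r against zero."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


# Hypothetical toy data, NOT the study's data.
# form_type: 0 = minimally structured form, 1 = EBM-structured form (assumed coding).
form_type = [0, 0, 0, 0, 1, 1, 1, 1]
strategy_length = [3, 4, 2, 5, 6, 5, 7, 4]

r = pearson_r(form_type, strategy_length)
print(round(r, 3), round(t_statistic(r, len(form_type)), 3))
```

Converting the t statistic into a P value requires the t distribution (from tables or a statistics package), which this sketch leaves out. For a coefficient of 0.1549 with N = 380, `t_statistic` gives roughly 3.05 on 378 degrees of freedom.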

Analysis of literature request forms

The variables collected were analyzed using SPSS for Windows, and statistically significant correlations were found between the following.

Use of the EBM-structured form and complexity of the search strategy.

Analysis of all 380 forms found a statistically significant correlation (P = 0.002, R = 0.1549, N = 380) between use of the EBM-structured form and the complexity of the search strategy. Using an EBM-structured form elicited more information concerning the nature of the information request. A typical information request would have details of both a population and an intervention: for example, dietary therapy (Intervention) used for children and adolescents with insulin dependent diabetes (Population).

However, an EBM anatomy–elicited request might supplement this information with details of both positive (improvement in health, prevention of diabetic complications) and/or negative outcomes (hyper- and hypoglycemic episodes). Optimally, it might include forms of comparison within the question (e.g., studies comparing one dietary regime with another, studies comparing tight dietary control with no control, etc.). In either of these instances, a standard Boolean relationship, Population and Intervention, was enhanced as the more complex Population and Intervention and Outcome.
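The move from a two-concept to a three-concept Boolean strategy can be sketched as follows. This is an illustrative sketch under stated assumptions, not the project's software: the class and function names are hypothetical, the search terms are plain-language phrases taken from the example above rather than MEDLINE vocabulary, and combining every filled-in part with AND (including the optional comparison) is an assumption.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class QuestionAnatomy:
    """Parts of the EBM question anatomy; any part may be left empty."""
    patient: List[str] = field(default_factory=list)
    condition: List[str] = field(default_factory=list)
    intervention: List[str] = field(default_factory=list)
    outcomes: List[str] = field(default_factory=list)
    comparison: List[str] = field(default_factory=list)


def or_group(terms: List[str]) -> str:
    """Join alternative terms for one concept with OR, inside parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_strategy(q: QuestionAnatomy) -> str:
    """AND together one OR-group for each part of the anatomy that was filled in."""
    parts = [q.patient, q.condition, q.intervention, q.outcomes, q.comparison]
    return " AND ".join(or_group(p) for p in parts if p)


# A typical request: population and intervention only.
basic = QuestionAnatomy(
    patient=["children", "adolescents"],
    condition=["insulin dependent diabetes"],
    intervention=["dietary therapy"],
)

# The same request with outcomes elicited by the structured form.
enriched = QuestionAnatomy(
    patient=["children", "adolescents"],
    condition=["insulin dependent diabetes"],
    intervention=["dietary therapy"],
    outcomes=["diabetic complications", "hypoglycemic episodes"],
)

print(build_strategy(basic))
print(build_strategy(enriched))
```

The second strategy carries one more ANDed concept than the first, which is the sense in which the elicited request is more complex and, in principle, more restrictive.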

Effect of EBM-structured forms on clinical requests.

A goal of information support to evidence-based health care is to retrieve fewer items without adversely affecting relevance judgments (i.e., to provide increased precision). This was, in fact, found with regard to clinical requests handled by the EBM-structured form, which were associated with fewer items retrieved (P = 0.028, R = −0.1614, N = 185) but not with fewer relevant items retrieved. This was the case even though overall, across EBM-structured and minimally structured forms and across clinical and nonclinical requests, there was a significant correlation between the total number of items retrieved and the number of relevant items retrieved (P = 0.005, R = 0.3764, N = 55). However, numbers were small because relevance data were available in only fifty-five cases. When clinical questions were handled by the minimally structured form, there was no significant correlation with the number of items retrieved.

Opinions on the EBM-structured request form.

Clearly, it would be of little practical benefit for EBM-structured request forms to be shown to be effective if the librarians responsible for implementing them found them unusable. Four of the twenty librarians (20%) felt that users had reacted negatively to the EBM-structured forms. Fourteen of the librarians (70%) felt that the minimally structured forms were easier to use, compared with two librarians (10%) in favor of the EBM-structured forms. Similarly, eleven (55%) felt that the minimally structured forms were quicker to complete, compared with only one (5%) for the EBM-structured forms. However, a corollary was that twelve of the librarians (60%) felt that the EBM-structured forms provided more information about the users' needs, with none feeling this way about the minimally structured forms. In all cases, the general impression of the forms was elicited from the librarians, as the focus was on the acceptability of the intervention as a whole and not on individual experiences associated with their use.

DISCUSSION

The practice of evidence-based health care and the support of clinical decision making has required that databases such as MEDLINE be used in a more precise manner than was typical for primarily educational processes. It has necessitated the following:

▪ a greater complexity of search strategy resulting from the elicitation of more detail about the originating information need (i.e., greater specificity of request)

▪ a reduction in the number of items retrieved without a corresponding decrease in the number of relevant items (i.e., improved precision of results)

It could be seen from the above that such conditions pertained only where an EBM-structured literature request form was used in conjunction with a clinical request. To implement this at a service level required that librarians be trained to filter clinical questions, appropriate to the EBM anatomy approach, from more general educational questions. Richardson and Wilson [29] differentiated between two “shapes” of question: foreground and background. Background questions were of the type “What is this disorder?”; “What causes it?”; “How does it present?”; and “What treatment options exist?”; while foreground questions had the specific components of the four-part anatomy. Furthermore, a lack of prior knowledge or experience of a particular condition or situation characterized background questions, whereas foreground questions related to a need for advanced decisions between treatment options. Practice further necessitated that librarians be able to translate inquiries of clinical origin into three- or four-part questions, not merely for those questions that related to the more classical therapy and diagnosis types but also for those of etiology, prognosis, clinical quality, and cost-effectiveness [30].

The project steering group debated whether the experimental version of the form should utilize the Patient-Intervention-Outcome-Comparison anatomy on its own (as a direct alternative to the free-text recording of inquiries) or whether it should also include a free-text box. The ultimate decision, for reasons relating to the unsuitability of the anatomy for certain types of question, was to include a free-text box. Clearly, a major consideration should be that a literature search form could accommodate all kinds of literature search requests regardless of whether the inquiries were clinical or not. It would not be desirable for the decision over where to put information to take so long that it impaired the user-intermediary interaction.

This decision had the following effects on the project:

1. It made it more difficult to distinguish the respective effects of the control and the experimental form. As a consequence, it was difficult to isolate whether the experimental form provided an alternative to, or merely an enhancement of, the control form.

2. In many cases, details from the free-text box were repeated within the anatomy section of the form. This may have led to users and intermediaries feeling that the form was less useful and took longer to complete.

3. In other cases, details from the free-text box were omitted from the anatomy even though they fell naturally within one or more of the purpose-designed boxes. This may have adversely affected perceptions of the utility of the EBM anatomy.

4. Additionally, one of the strengths of the anatomy was to prompt for more detail where one or more elements had not been made explicit. This could only be done at the time of the information request. Examination of some of the forms suggested that free-text requests might have been translated into the anatomy after the initial reference interview had taken place. In such cases, the benefit of the EBM form would be limited to the searching phase and would not extend to the whole information process as intended.

Notwithstanding the above, there were also a number of benefits from the inclusion of the free-text box on the experimental form:

1. The free-text box allowed users to describe the request in their own words before it was disaggregated into search concepts for the literature-searching process. If the intermediary had recorded a request directly into the vocabulary of the database—or, in this case, the necessarily arbitrary structure of the anatomy—arguably, this might have denied access to a wealth of supporting detail.

2. The free-text box provided a level of detail that would otherwise be lost by the anatomy structure. In particular, it often contained contextual information such as the purpose of the information request.

3. The presence of both free-text and anatomy on the same request form gave an extra opportunity to analyze the extent to which translation into the anatomy had been carried out successfully.

A further limitation of the study was that no attempt was made to control for differences between the library staff involved in the project. These differences would include different styles in the reference interview, different degrees of familiarity with the form, and, indeed, different search techniques. Nevertheless, as this study was pragmatic, conducted in a workplace setting, such confounding variables were inevitable and pertained to both the before and after phases of the study.

Librarians found the EBM-structured forms useful for clinical questions and appreciated prompts like “age group” to focus the query. However, many requests were for nonclinical questions, and the form was not considered as relevant when users sought a general “overview” rather than the answer to a precise clinical question. Several librarians felt that the form was repetitive and contained terms such as “intervention” that were unfamiliar to users. Although the form encouraged users to focus their questions, the retention of the free-text box often led to duplication of details. Preference by librarians for the minimally structured forms, against all dimensions except informativeness, might initially be viewed as disheartening by those wishing to implement an evidence-based approach. Nevertheless, EBM-structured request forms were of relatively recent introduction when compared with long-standing practice. The rationale for redesigning the form was not outright simplification but rather enhancement through the incorporation of several explicit information prompts. There was clearly the potential to increase popularity of the forms through increased familiarity and targeted training interventions.

CONCLUSIONS

The implications of the findings can be divided into those for health information practice and those for health information research. They may also have a more general bearing on information practice in connection with definitions of user need and the search request interview. Certainly, a major premise of the AuRACLE project is that lessons learned through the exemplar of medicine may be readily transferable to other subject domains. For the practitioner, one can conclude:

▪ That using an EBM-structured form can elicit more detail, result in more complex and specific search strategies, and thus improve precision of retrieval. This finding is, in fact, quite similar to findings from a sibling study that looks at the effect of structured abstracts on information retrieval [31]. One may hypothesize that enhanced structuring both at the time of request and at the time of database interaction can help to minimize “noise” at important points along the mediated search request process.

Researchers need to:

▪ examine more closely the training needs of librarians in changing their roles from passive recipients of information requests to active participants in defining and refining the clinical query and to develop appropriate training programs to meet such needs;

▪ investigate the utility of different interfaces aimed at the end user in order to replicate the process of elicitation that, as demonstrated, can yield improved dividends in the literature search process; and

▪ develop indicators that will help librarians to “triage” requests according to whether they may fit appropriately within a clinical question paradigm. This may, in turn, provide a means for rationalizing the conflicting demands of end-user training and mediated searching faced by health information practitioners.

Acknowledgments

The AuRACLE project team would like to thank the librarians and users who participated in this study.

APPENDIX

Mediated literature search form

Footnotes

*The Automated Retrieval of Clinically-relevant Evidence (AuRACLE) Project was supported by a research grant from the British Library.

REFERENCES

1. White MD. Questions in reference interviews. J Doc. 1998;54(4):443–65.

2. Evidence Based Medicine Working Group. Evidence based medicine: a new approach to teaching the practice of medicine. JAMA. 1992 Nov 4;268(17):2420–5. doi: 10.1001/jama.1992.03490170092032.

3. Booth A. In search of the evidence: informing effective practice. J Clin Effectiveness. 1996;1(1):25–9.

4. Rosenberg W, Donald A. Evidence based medicine: an approach to clinical problem solving. BMJ. 1995 Apr 29;310(6987):1122–6. doi: 10.1136/bmj.310.6987.1122.

5. Sackett DL, Rosenberg WMC. On the need for evidence based medicine. J Pub Hlth Med. 1995 Sep;17(3):330–4.

6. Richardson WS, Wilson MC, Nishikawa J, Hayward RS. The well-built clinical question: a key to evidence based decisions. ACP J Club. 1995;123(3):A12–3.

7. Snowball R. Using the clinical question to teach search strategy: fostering transferable conceptual skills in user education by active learning. Health Libr Rev. 1997 Sep;14(3):167–72.

8. Ford N, Booth A, Miller D, O'Rourke A, Ralph J, Turnock E. Developing an automated extensible reference service. (British Library Research and Innovation Report 75). London, U.K.: British Library, 1998.

9. Booth A. Teaching evidence-based medicine: lessons for information professionals. IFMH Inform. 1995;6(2):5–6.

10. Hurych J. The professional and the client: the reference interview revisited. Ref Libr. 1982;5(6):199–205.

11. Auster E. User satisfaction with the online negotiation interview: contemporary concern in traditional perspective. RQ. 1983;23(1):47–59.

12. Somerville AN. The place of the reference interview in computer searching: the academic setting. Online. 1977;1(4):14–23.

13. White. op. cit., 447.

14. Lynch MJ. Reference interviews in public libraries. Libr Q. 1978;48:131–8.

15. Ingwersen P, Kaae S. User-librarian negotiations and information search procedures in public libraries: analysis of verbal protocols: final research report. Copenhagen, Denmark: Royal School of Librarianship, 1980.

16. Somerville AN. The pre-search reference interview: a step by step guide. Database. 1982;5(1):32–8.

17. White. op. cit., 451.

18. Shultz SM. Medical jargon: ethnography of language in a hospital library. Med Ref Serv Q. 1996;15(3):41–7. doi: 10.1300/J115V15N03_04.

19. Gorman PN, Helfand M. Information seeking in primary care: how physicians choose which clinical questions to pursue and which to leave unanswered. Med Decis Making. 1995;15(2):113–9. doi: 10.1177/0272989X9501500203.

20. Covell DG, Uman GC, Manning PR. Information needs in office practice: are they being met? Ann Intern Med. 1985 Oct;103(4):596–9. doi: 10.7326/0003-4819-103-4-596.

21. Barrie AR, Ward AM. Questioning behaviour in general practice: a pragmatic study. BMJ. 1997 Dec 6;315(7121):1512–5. doi: 10.1136/bmj.315.7121.1512.

22. Gorman. op. cit., 113.

23. Covell. op. cit., 596.

24. Barrie. op. cit., 1512.

25. Gorman. op. cit., 114.

26. Smith R. What clinical information do doctors need? BMJ. 1996 Oct 26;313(7064):1062–8. doi: 10.1136/bmj.313.7064.1062.

27. Cole C, Kennedy L, Carter S. The optimization of online searches through the labelling of a dynamic situation-dependent information need: the reference interview and online searching for undergraduates doing a social science assignment. Info Proc & Mgmnt. 1996;32(6):709–17.

28. ScHARR. Library information request. [Web document]. Sheffield, U.K.: University of Sheffield. <http://panizzi.shef.ac.uk/auracle/form.html>.

29. Richardson WS, Wilson MC. On questions, background and foreground. Evidence Based Healthcare Newsletter. 1997 Nov;17:8–9.

30. Hicks A, Booth A, Sawers C. Becoming ADEPT: delivering distance learning on evidence-based medicine for librarians. Health Libr Rev. 1998 Sep;15(3):175–84. doi: 10.1046/j.1365-2532.1998.1530175.x.

31. Booth A, O'Rourke AJ. The value of structured abstracts in information retrieval from MEDLINE. Health Libr Rev. 1997 Sep;14(3):157–66.