Abstract

Most theories of categorization emphasize how continuous perceptual information is mapped to categories. However, equally important are the informational assumptions of a model, that is, the type of information subserving this mapping. This is crucial in speech perception where the signal is variable and context-dependent. This study assessed the informational assumptions of several models of speech categorization, in particular, the number of cues that are the basis of categorization and whether these cues represent the input veridically or have undergone compensation. We collected a corpus of 2880 fricative productions (Jongman, Wayland & Wong, 2000) spanning many talker- and vowel-contexts and measured 24 cues for each. A subset was also presented to listeners in an 8AFC phoneme categorization task. We then trained a common classification model based on logistic regression to categorize the fricative from the cue values, and manipulated the information in the training set to contrast 1) models based on a small number of invariant cues; 2) models using all cues without compensation; and 3) models in which cues underwent compensation for contextual factors. Compensation was modeled by Computing Cues Relative to Expectations (C-CuRE), a new approach to compensation that preserves fine-grained detail in the signal. Only the compensation model achieved accuracy similar to that of listeners and showed the same effects of context. Thus, even simple categorization metrics can overcome the variability in speech when sufficient information is available and compensation schemes like C-CuRE are employed.

1. Categorization and Information

Work on perceptual categorization encompasses diverse domains like speech perception, object identification, music perception and face recognition. These are unified by the insight that categorization requires mapping from one or more continuous perceptual dimensions to a set of meaningful categories, and it is often assumed that the principles governing this may be common across domains (e.g., Goldstone & Kersten, 2003; though see Medin, Lynch & Solomon, 2000).

The most important debates concern the memory representations used to distinguish categories, contrasting accounts based on boundaries (e.g., Ashby & Perrin, 1988), prototypes (e.g., Homa, 1984; Posner & Keele, 1968; Reed, 1972), and sets of exemplars (Hintzman, 1986; Medin & Schaffer, 1978; Nosofsky, 1986). Such representations are used to map individual exemplars, described by continuous perceptual cues, onto discrete categories. But these are only part of the story. Equally important for any specific type of categorization (e.g. speech categorization) is the nature of the perceptual cues.

There has been little work on this within research on categorization. What has been done emphasizes the effect of categories on perceptual encoding. We know that participants’ categories can alter how individual cue-values along dimensions like hue are encoded (Hansen, Olkkonen, Walter & Gegenfurtner, 2006; Goldstone, 1995). For example, a color is perceived as more yellow in the context of a banana than a pear. Categories may also warp distance within a dimension as in categorical perception (e.g. Liberman, Harris, Hoffman & Griffith, 1957; Goldstone, Lippa & Shiffrin, 2001) though this has been controversial (Massaro & Cohen, 1983; Schouten, Gerrits & Van Hessen, 2003; Roberson, Hanley & Pak, 2009; Toscano, McMurray, Dennhardt & Luck, 2010). Finally, the acquisition of categories can influence the primitives or dimensions used for categorization (Schyns & Rodet, 1997; Oliva & Schyns, 1997).

While there has been some work examining how categories affect continuous perceptual processing, there has been little work examining the other direction, whether the type of information that serves as input to categorization matters. Crucially, does the nature of the perceptual dimensions constrain or distinguish theories of categorization? In fact, some approaches (e.g. Soto & Wasserman, 2010) argue that we can understand much about categorization by abstracting away from the specific perceptual dimensions.

Nonetheless, we cannot ignore this altogether. Smits, Jongman and Sereno (2006), for example, taught participants auditory categories along either resonance-frequency or duration dimensions. The distribution of the exemplars was manipulated to contrast boundary-based, prototype and statistical accounts. While boundaries fit well for frequency categories, duration categories required a hybrid of boundary and statistical accounts. Thus, the nature of the perceptual dimension may matter for distinguishing theoretical accounts of categorization.

Beyond the matter of the cue being encoded, a second issue, and the focus of this study, is whether and how perceptual cues are normalized during categorization. Perceptual cues are affected by multiple factors, and it is widely, though not universally, accepted that perceptual systems compensate for these sources of variance. For example, in vision, to correctly perceive hue, observers compensate for light-source (McCann, McKee & Taylor, 1976); in music, pitch is computed relative to a tonic note (relative pitch); and in speech, temporal cues like duration may be calibrated to the speaking rate (Summerfield, 1981), while pitch is computed relative to the talker’s pitch range (Honorof & Whalen, 2005).

Many theories of categorization do not address the relationship between compensation and categorization. Compensation is often assumed to be a low-level autonomous process occurring prior to and independently of categorization (though see Mitterer & de Ruiter, 2008). Moreover, in laboratory learning studies, it doesn’t matter whether perceptual cues undergo compensation. Boundaries, prototypes and exemplars can be constructed with either type of information, and most experiments control for factors that demand compensation like lighting.

However, there are conditions under which such assumptions are unwarranted. First, if the input dimensions were context-dependent and categorization was difficult, compensation could make a difference in whether a particular model of categorization could classify the stimuli with the same accuracy as humans. This is unlikely to matter in laboratory-learning tasks where categorization is relatively unambiguous, but it may be crucial for real-life category systems like speech in which tokens cannot always be unambiguously identified. Here, a model’s accuracy may be as much a product of the information in the input as the nature of the mappings.

Second, if compensation is a function of categorization we cannot assume autonomy. Color constancy, for example, is stronger at hue values near category prototypes (Kulikowski & Vaitkevicius, 1997). In speech, phonemes surrounding a target phoneme affect the same cues, such that the interpretation of a cue for one phoneme may depend on the category assigned to others (Pisoni & Sawusch, 1974; Whalen, 1989; Smits, 2001a,b; Cole, Linebaugh, Munson & McMurray, 2010; though see Nearey, 1990). Such bidirectional effects imply that categorization and compensation are not independent and models of categorization must account for both, something that few models in any domain have considered (though see Smits, 2001a,b).

Finally, and perhaps most importantly, some theories of categorization make explicit claims about the nature of the information leading to categorization. In vision, Gibsonian approaches (Gibson, 1966) and Geon theory (e.g. Biederman, 1995) posit invariant cues for object recognition; while in speech perception, the theory of acoustic invariance (Stevens & Blumstein, 1978; Blumstein & Stevens, 1980; Lahiri, Gewirth & Blumstein, 1984) and quantal theory (Stevens, 2002; Stevens & Keyser, 2010) posit invariant cues for some phonetic distinctions (cf. Sussman, Fruchter, Hilbert & Sirosh, 1998). Other approaches, such as the version of exemplar theory posited in speech, explicitly claim that while there may be no invariant perceptual cues, category representations can cope with this without normalization (e.g. Pisoni, 1997). In such theories, normalization prior to categorization is not necessary, and this raises the possibility that normalization does not occur at all as part of the categorization process.

Any theory of categorization can be evaluated on two levels: the mechanisms which partition the perceptual space, and the nature of the perceptual space. This latter construct refers to the informational assumptions of a theory and is logically independent from the categorization architecture. For example, one could build a prototype theory on either raw or normalized inputs, and exemplars could be represented in either format. In Marr’s (1982) levels of analysis, the informational assumptions of a theory can be seen as part of the first, computational level of analysis, where the problem is defined in terms of input/output relationships, while the mechanism of categorization may be best described at the algorithmic level. However, when the aforementioned conditions are met, understanding the informational assumptions of a theory may be crucial for evaluating it. Contrasting with Marr, we argue that levels of analysis may constrain each other: one must properly characterize a problem to distinguish solutions.

Speech perception presents a compelling domain in which to examine these issues, as all of the above conditions are met. Thus, the purpose of this study is to evaluate the informational assumptions of several approaches to speech categorization, and to ask what kind of information is necessary to support listener-like categorization. This was done by collecting a large dataset of measurements on a corpus of speech tokens, and manipulating the input to a series of categorization models to determine what informational structure is necessary to obtain listener-like performance. While our emphasis is on theories of speech perception, consistent with a history of work relating speech categories to general principles of categorization1, this may also uncover principles that are relevant to categorization more broadly.

The remainder of this introduction will discuss the classic problems in speech perception, and the debate over normalization. We then present a new approach to compensation which addresses concerns about whether fine-grained acoustic detail is preserved. Finally, we describe the speech categories we investigated, the eight fricatives of English. Section 2 presents the empirical work that is the basis of our modeling: a corpus of 2880 fricatives, with measurements of 24 cues for each, and listeners’ categorization for a subset of them. Sections 3 and 4 then present a series of investigations in which the input to a standard categorization model is manipulated to determine which informational account best yields listener-like performance.

1.1 The Information Necessary for Speech Perception

Speech perception is increasingly being described as a problem of mapping from continuous acoustic cues to categories (e.g. Oden & Massaro, 1978; Nearey, 1997; Holt & Lotto, 2010). We take a relatively theory-neutral approach to what a cue is, defining a cue as a specific measurable property of the speech signal that can potentially be used to identify a useful characteristic like the phoneme category or the talker. Our use of this term is not meant to imply that a specific cue is actually used in speech perception, or that a given cue is the fundamental property that is encoded during perception. Cues are merely a convenient way to measure and describe the input.

A classic framing in speech perception is the lack of invariance problem: the signal lacks invariant cues for categorical distinctions like phonemes. Most speech cues are context-dependent and there are few, if any, that invariantly signal a given phonetic category. There is debate on how to solve this problem (e.g. Fowler, 1996; Ohala, 1996; Lindblom, 1996) and about the availability of invariant cues (e.g. Blumstein & Stevens, 1980; Lahiri et al., 1984; Sussman et al., 1998). But there is little question that this is a fundamental issue that theories of speech perception must address. Thus, the information in the signal to support categorization is of fundamental importance to theories of speech perception.

As a result of this, a common benchmark for theories of speech perception is accuracy, the ability to separate categories. This benchmark is applied to complete theories (e.g. Johnson, 1997; Nearey, 1990; Maddox, Molis & Diehl, 2002; Smits, 2001a; Hillenbrand & Houde, 2003), and even to phonetic analyses of particular phonemic distinctions (e.g., Stevens & Blumstein, 1978; Blumstein & Stevens, 1980, 1981; Forrest, Weismer, Milenkovic & Dougall, 1988; Jongman, Wayland & Wong, 2000; Werker et al., 2007). The difficulty of attaining this benchmark means that sometimes accuracy is all that is needed to validate a theory. Thus, speech meets our first condition: categorization is difficult and the information available to it matters.

Classic approaches to the lack of invariance problem posited normalization or compensation processes for coping with specific sources of variability. In speech, normalization is typically defined as a process that factors out systematic but phonologically non-distinctive acoustic variability (i.e., systematic variability that does not distinguish phonemes) for the purposes of identifying phonemes or words. Normalization is presumed to operate on the perceptual encoding prior to categorization, and classic normalization processes include rate (e.g., Summerfield, 1981) and talker (Nearey, 1978; see chapters in Johnson & Mullenix, 1997) normalization. Not all systematic variability is non-phonological, however: the acoustic signal at any point in time is always affected by the preceding and subsequent phonemes, due to a phenomenon known as coarticulation (e.g., Delattre, Liberman & Cooper, 1955; Öhman, 1966; Fowler & Smith, 1986; Cole et al., 2010). As a result, the term compensation has often been invoked as a more general term to describe both normalization (e.g. compensating for speaking rate) and processes that cope with coarticulation (e.g. Mann & Repp, 1981). While normalization generally describes processes at the level of perceptual encoding, compensation can be accomplished either pre-categorically or as part of the categorization process (e.g. Smits, 2001b). Due to this greater generality, we use the term compensation throughout this paper.

A number of studies show that listeners compensate for coarticulation in various domains (e.g. Mann & Repp, 1981; Pardo & Fowler, 1997; Fowler & Brown, 2000). Crucially, the fact that portions of the signal are affected by multiple phonemes raises the possibility that how listeners categorize one phoneme may affect how subsequent or preceding cues are interpreted. For example, consonants can alter the formant frequencies listeners use to categorize vowels (Öhman, 1966). Do listeners compensate for this variability by categorizing the consonant and then interpreting the formant frequencies differently on the basis of the consonant? Or do they compensate for coarticulation by tracking low-level contingencies between the cues for consonants and vowels or higher-level contingencies between phonemes? Studies on this offer conflicting results (Mermelstein, 1978; Whalen, 1989; Nearey, 1990; Smits, 2001a).

Clearer evidence for such bidirectional processes comes from work on talker identification. Nygaard, Sommers and Pisoni (1994), for example, showed that learning to classify talkers improves speech perception, and a number of studies suggest that visual cues about a talker’s gender affect how auditory cues are interpreted (Strand, 1999; Johnson, Strand & D’Imperio, 1999). Thus, interpretation of phonetic cues may be conditioned on judgments of talker identity. As a whole, then, there is ample interest in, and some evidence for, the claim that compensation and categorization are interactive, which is the second condition under which informational factors are important for categorization.

Compensation is not a given, however. Some forms of compensation may not fully occur prior to lexical access. Talker voice, or indexical, properties of the signal (which do not contrast individual phonemes and words) affect a word’s recognition (Creel, Aslin & Tanenhaus, 2008; McLennan & Luce, 2005) and memory (Palmeri, Goldinger & Pisoni, 1993; Bradlow, Nygaard & Pisoni, 1999). Perhaps most tellingly, speakers’ productions gradually reflect indexical detail in auditory stimuli they are shadowing (Goldinger, 1998), suggesting that such detail is part of the representations that mediate perception and production. Thus, compensation for talker voice is not complete: indexical factors are not (completely) removed from the perceptual representations used for lexical access and may even be stored with lexical representations (Pisoni, 1997). This challenges the necessity of compensation as a precursor to categorization.

Thus, informational factors are essential to understanding speech categorization: the signal is variable and context-dependent; compensation may be dependent on categorization, but may also be incomplete. As a result, it is not surprising that some theories of speech perception make claims about the information necessary to support categorization.

On one extreme, although many researchers have abandoned hope of finding invariant cues (e.g. Ohala, 1996; Lindblom, 1996), for others, the search for invariance is ongoing. A variety of cues have been examined, such as burst onset spectra (Blumstein & Stevens, 1981; Kewley-Port & Luce, 1984) or locus equations (Sussman et al., 1998; Sussman & Shore, 1996) for place of articulation in stop consonants, and duration ratios for voicing (e.g. Port & Dalby, 1982; Pind, 1995). Most importantly, Quantal Theory (Stevens, 2002; Stevens & Keyser, 2010) posits that speech perception harnesses specific invariant cues for some contrasts (particularly manner of articulation, e.g., the b/w distinction). Invariance views, broadly construed, then, make the informational assumptions that 1) a small number of cues should suffice for many types of categorization; and 2) compensation is not required to harness them.

On the other extreme, exemplar approaches (e.g., Johnson, 1997; Goldinger, 1998; Pierrehumbert, 2001, 2003; Hawkins, 2003) argue that invariant cues are neither necessary nor available. If the signal is represented faithfully and listeners store many exemplars of each word, context-dependencies can be overcome without compensation. Each exemplar in memory is a holistic chunk containing both the contextually conditioned variance and the context, and is matched in its entirety to incoming speech. Because of this, compensation is not needed and may impede listeners by eliminating fine-grained detail that helps sort things out (Pisoni, 1997). Broadly construed, then, exemplar approaches make the informational assumptions that 1) input must be encoded in fine-grained detail with all available cues; and 2) compensation or normalization does not occur.

Finally, in the middle, lie a range of theoretical approaches that do not make strong informational claims. For lack of a better term, we call these cue-integration approaches, and they include the Fuzzy Logical Model, FLMP (Oden, 1978; Oden & Massaro, 1978), the Normalized a Posteriori Probability model, NAPP (Nearey, 1990), the Hierarchical Categorization of coarticulated phonemes, HICAT (Smits, 2001a,b), statistical learning models (McMurray, Aslin & Toscano, 2009a; Toscano & McMurray, 2010), and connectionist models like TRACE (Elman & McClelland, 1986). Most of these can be characterized as prototype models, though they are also sensitive to the range of variation. All assume that multiple (perhaps many) cues participate in categorization, and that these cues must be represented more or less veridically. However, few make strong claims about whether explicit compensation of some form occurs (although many implementations use raw cue-values for convenience). In fact, given the high-dimensional input, normalization may not be needed—categories may be separable with a high-dimensional boundary in raw cue-space (e.g., Nearey, 1997) and these models have been in the forefront of debates as to whether compensation for coarticulation is dependent on categorization (e.g., Nearey, 1990, 1992, 1997; Smits, 2001a,b). Thus it is an open question whether compensation is needed in such models.

Across theories, two factors describe the range of informational assumptions. Invariance accounts can be distinguished from exemplar and cue-integration accounts on the basis of number of cues (and their invariance). The other factor is whether cues undergo compensation or not. On this, exemplar and invariance accounts argue that cues do not undergo explicit compensation, while cue-integration models appear more agnostic. Our goal is to contrast these informational assumptions using a common categorization model. However, this requires a formal approach to compensation, which is not currently available. Thus, the next section describes several approaches to compensation and elaborates a new, generalized approach which builds on their strengths to offer a more general and formally well-specified approach based on Computing Cues Relative to Expectations (C-CuRE).

1.2 Normalization, Compensation and C-CuRE

Classic normalization schemes posit interactions between cues that allow the perceptual system to remove the effects of confounding factors like speaker and rate. These are bottom-up processes motivated by articulatory relationships and signal processing. Such accounts are most associated with work on vowel categorization (e.g., Rosner & Pickering, 1994; Hillenbrand & Houde, 2003), though to some extent complex cue-combinations like locus equations (Sussman et al., 1998) or CV ratios (Port & Dalby, 1982), also fall under this framework. Such approaches offer concrete algorithms for processing the acoustic signal, but they have not led to broader psychological principles for compensation.

Other approaches emphasize principles at the expense of computational specificity. Fowler’s (1984; Fowler & Smith, 1986; Pardo & Fowler, 1997) gestural parsing posits that speech is coded in terms of articulatory gestures, and that overlapping gestures are parsed into underlying causes. So for example, when a partially nasalized vowel precedes a nasal stop, the nasality gesture is assigned to the stop (a result of anticipatory coarticulation), since English does not use nasalized vowels contrastively (as does French), and the vowel is perceived as more oral (Fowler & Brown, 2000). As part of direct realist accounts, gestural parsing only compensates for coarticulation—the initial gestural encoding overcomes variation due to talker and rate.

Gow (2003) argues that parsing need not be gestural. His feature-cue parsing suggests that similar results can be achieved by grouping principles operating over acoustic features. This too has been primarily associated with coarticulation—variation in talker and/or rate is not discussed. However, the principle captured by both accounts is that by grouping overlapping acoustic cues or gestures, the underlying properties of the signal can be revealed (Ohala, 1981).

In contrast, Kluender and colleagues argue that low-level auditory mechanisms may do some of the work of compensation. Acoustic properties (like frequency) may be interpreted relative to other portions of the signal: a 1000 Hz tone played after a 500 Hz tone will sound higher than after an 800 Hz tone. This is supported by findings that non-speech events (e.g. pure tones) can create seemingly compensatory effects on speech (e.g. Lotto & Kluender, 1998; Holt, 2006; Kluender, Coady & Kiefte, 2003; though see Viswanathan, Fowler & Magnuson, 2009). Thus, auditory contrast, either from other events in the signal or from long-term expectations about cues (Kluender et al., 2003), may alter the information available in the signal.

Parsing and contrast accounts offer principles that apply across many acoustic cues, categories and sources of variation. However, they have not been formalized in a way that permits a test of the sufficiency of such mechanisms to support categorization of speech input. All three accounts also make strong representational claims (articulatory vs. auditory), and a more general approach to compensation may be more useful (Ohala, 1981).

In developing a principled, yet computationally specific approach to compensation, one final concern is the role of fine phonetic detail. Traditional approaches to normalization assumed bottom-up processes that operate autonomously to clean up the signal before categorization, stripping away factors like talker or speaking rate2. However, research shows that such seemingly irrelevant detail is useful to phonetic categorization and word recognition. Word recognition is sensitive to within-category variation in voice onset time (Andruski, Blumstein & Burton, 1994; McMurray et al, 2002, 2008a), indexical detail (Creel et al, 2005, Goldinger, 1998), word-level prosody (Salverda, Dahan & McQueen, 2003), coarticulation (Marslen-Wilson & Warren, 1994; Dahan, Magnuson, Tanenhaus & Hogan, 2001), and alternations like reduction (Connine, 2004; Connine, Ronbom & Patterson, 2008) and assimilation (Gow, 2003). In many of these cases, such detail facilitates processing by allowing listeners to anticipate upcoming material (Martin & Bunnel, 1981, 1982; Gow, 2001, 2003), resolve prior ambiguity (Gow, 2003; McMurray, Tanenhaus & Aslin, 2009b) and disambiguate words faster (Salverda et al, 2003). Such evidence has led some to reject normalization altogether in favor of exemplar approaches (e.g. Pisoni, 1997; Port, 2007) which preserve continuous detail.

What is needed is a compensation scheme which is applicable across different cues and sources of variance, is computationally well-specified, and can retain and harness fine-grained acoustic detail. Cole et al. (2010; McMurray, Cole & Munson, in press) introduced such a scheme in an analysis of vowel coarticulation; we develop it further as a more complete account of compensation. This account, Computing Cues Relative to Expectations (C-CuRE), combines grouping principles from parsing accounts with the relativity of contrast accounts.

Under C-CuRE, incoming acoustic cues are initially encoded veridically, but as different sources of variance are categorized, cues are recoded in terms of their difference from expected values. Consider a stop-vowel syllable. The fundamental frequency (F0) at the onset of the vowel is a secondary cue for voicing. In the dataset we describe here, F0 at vowel onset had a mean of 149 Hz for voiced sounds and 163 Hz for voiceless ones, though it was variable (SDvoiced=43.2; SDvoiceless=49.8). Thus F0 is informative for voicing, but any given F0 is difficult to interpret. An F0 of 154 Hz, for example, could be high for a voiced sound or low for a voiceless one. However once the talker is identified (on the basis of other cues or portions of the signal) this cue may become more useful. If the talker’s average F0 was 128 Hz, then the current 154 Hz is 26 Hz higher than expected and likely the result of a voiceless segment. Such an operation removes the effects of talker on F0 by recoding F0 in terms of its difference from the expected F0 for that talker, making it more useful for voicing judgments.
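
The arithmetic of this example can be written out as a minimal sketch. The category means and standard deviations, the 154 Hz token and the 128 Hz talker mean are the values given above; the function and variable names are illustrative assumptions, not part of the original analysis.

```python
# Category statistics for F0 at vowel onset, as reported in the text (Hz).
MEAN_VOICED, SD_VOICED = 149.0, 43.2
MEAN_VOICELESS, SD_VOICELESS = 163.0, 49.8


def z_score(value, mean, sd):
    """Distance of a cue value from a category mean, in SD units."""
    return (value - mean) / sd


def relative_to_expectation(observed, expected):
    """Recode a cue as its signed deviation from the expected value."""
    return observed - expected


token_f0 = 154.0        # the ambiguous token from the text
talker_mean_f0 = 128.0  # expected F0 once the talker is identified

# The raw value lies within a fraction of an SD of both category means,
# so on its own it says little about voicing.
z_voiced = z_score(token_f0, MEAN_VOICED, SD_VOICED)
z_voiceless = z_score(token_f0, MEAN_VOICELESS, SD_VOICELESS)

# Recoded relative to the talker, the same token is clearly high.
deviation = relative_to_expectation(token_f0, talker_mean_f0)
print(deviation)  # 26.0
```

The sign convention here (observed minus expected) matches the description of the token as "26 Hz higher than expected".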

C-CuRE is similar to both versions of parsing in that it partials out influences on the signal at any time point. It also builds on auditory-contrast approaches by positing that acoustic cues are coded as the difference from expectations. However, it is also more general than these theories. Unlike gestural and feature-cue parsing, talker is parsed from acoustic cues the same way coarticulation is; unlike contrast accounts, expectations can be based on abstractions and there is an explicit role for categorization (phonetic categories, talkers, etc.) in compensation.

C-CuRE is straightforward to implement using linear regression. To do this, first a regression equation is estimated predicting the cue-value from the factor(s) being parsed out, for example, F0 as a function of talker gender. Next, for any incoming speech token, this formula is used to generate the expected cue-value given what is known (e.g., if the speaker is known), and this expected value is subtracted from the actual cue value. The residual becomes the estimate of contrast or deviation from expectations. In terms of linear regression, then, parsing in the C-CuRE framework separates the variance in any cue into components: the regression formula generates expectations, and the remaining (residual) variance is available to do perceptual work.
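
As a rough illustration of this procedure, the following sketch fits a one-predictor least-squares regression and returns the residuals. The F0 values and the 0/1 coding of gender are invented for illustration, and the helper names are assumptions; nothing here reproduces the actual analysis code.

```python
from statistics import mean


def fit_simple_regression(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    return intercept, slope


def residuals(x, y, intercept, slope):
    """Actual cue value minus the value expected from the regression."""
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]


# Hypothetical F0 values (Hz); gender is dummy-coded 0 = male, 1 = female.
gender = [0, 0, 0, 1, 1, 1]
f0 = [110.0, 120.0, 130.0, 200.0, 210.0, 220.0]

intercept, slope = fit_simple_regression(gender, f0)
f0_relative = residuals(gender, f0, intercept, slope)

print(intercept, slope)  # expected F0 for males, and the female-male difference
print(f0_relative)       # F0 recoded relative to gender-based expectations
```

With a single dichotomous predictor the fitted values are simply the two group means, so the residuals express each token's F0 relative to what is expected for that talker's gender.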

When the independent factors are dichotomous (e.g. male/female), the regression predictions will be based on the cell means of each factor. This could lead to computational intractability if the regression had to capture all combinations of factors. For example, a vowel’s first formant frequency (F1) is influenced by the talker’s gender, the voicing of neighboring consonants, and the height of the subsequent vowel. If listeners required cell-means of the four-way <talker> × <initial voicing> × <final voicing> × <vowel height> contrast to generate expectations it is unlikely that they could track all of the possible combinations of influences on a cue. However, Cole et al (2010; McMurray et al, in press) demonstrated that by performing the regression hierarchically (e.g., first partialing out the simple effect of talker, then the simple effect of voicing, then vowel height, and so on), substantial improvements can be made in the utility of the signal using only simple effects, without needing higher-order interactions.
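
The hierarchical strategy can be sketched as successive subtraction of simple-effect means, each step operating on the residuals of the previous one. The F1 values, factor labels and function names below are hypothetical and chosen only to make the steps visible. If each of four factors were dichotomous, a full crossing would require 2^4 = 16 cell means, whereas this sequential scheme estimates only two means per factor.

```python
from collections import defaultdict


def partial_out(values, labels):
    """Subtract from each value the mean of all values sharing its label."""
    sums, counts = defaultdict(float), defaultdict(int)
    for value, label in zip(values, labels):
        sums[label] += value
        counts[label] += 1
    means = {label: sums[label] / counts[label] for label in sums}
    return [value - means[label] for value, label in zip(values, labels)]


def hierarchical_parse(values, factors):
    """Partial out each factor in turn, passing residuals down the chain."""
    residual = list(values)
    for labels in factors:
        residual = partial_out(residual, labels)
    return residual


# Hypothetical F1 values (Hz) for eight tokens in a balanced design.
f1 = [475.0, 485.0, 515.0, 525.0, 575.0, 585.0, 615.0, 625.0]
talker = ["M", "M", "M", "M", "F", "F", "F", "F"]
voicing = ["voiced", "voiced", "voiceless", "voiceless"] * 2

# First remove the simple effect of talker, then that of voicing.
f1_relative = hierarchical_parse(f1, [talker, voicing])
print(f1_relative)  # [-5.0, 5.0, -5.0, 5.0, -5.0, 5.0, -5.0, 5.0]
```

In this toy design the two influences are additive, so simple effects recover the token-level deviations exactly; with real cues the residuals would only approximate what a full interaction model could remove.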

In sum, C-CuRE offers a somewhat new approach to compensation that is computationally well specified, yet principled. It maintains a continuous representation of cue values and does not discard variation due to talker, coarticulation and the like. Rather C-CuRE capitalizes on this variation to build representations for other categories. It is neutral with respect to whether speech is auditory or gestural, but consistent with principles from both parsing approaches, and with the notion of contrast in auditory contrast accounts. Finally, C-CuRE explicitly demands a categorization framework: compensation occurs as the informational content of the signal is interpreted relative to expectations driven by categories.

The goal of this project is to evaluate the informational assumptions of theories of speech categorization in terms of compensated vs. uncompensated inputs, a question at the computational level (Marr, 1982). Testing this quantitatively requires that we assume a particular form of compensation, a solution properly described at the algorithmic level, and existing forms of compensation do not have the generality or computational specificity to be applied. C-CuRE offers such a general, yet implementable compensation scheme and as a result, our test of compensation at the information level also tests this specific processing approach.

Such a test has only been examined in limited form by Cole et al (2010). This study used parsing in the C-CuRE framework to examine information used to anticipate upcoming vowels, and did not examine compensation for variance in a target segment. It showed that the recoding of formant values as the difference from expectations on the basis of talker and intervening consonant was necessary to leverage coarticulatory information for anticipating upcoming vowels. This validated C-CuRE’s ability to harness fine-grained detail, but did not address its generality as only two cues were examined (F1 and F2); these cues were similar in form (both frequencies); only a small number of vowels were used; and results were not compared to listener data. Thus, it is an open question as to how well C-CuRE scales to dozens of cues representing different signal components (e.g. amplitudes, durations and frequencies) in the context of a larger set of categories, and it is unclear whether its predictions match listeners.

1.3 Logic and Overview

Our goal is to contrast three informational accounts: 1) that a small number of invariant cues distinguishes speech categories; 2) that a large number of cues is sufficient without compensation; and 3) that compensation must be applied. To accomplish this, we measured a set of cues from a corpus of speech sounds, and used them to train a generic categorization model. This was compared to listener performance on a subset of that corpus. By manipulating the cues available to the model and whether or not compensation was applied, we assessed the information required to yield listener performance.

One question that arises is which phonemes to use. Ideally, they should be difficult to classify, as accuracy is likely to be a distinguishing factor. There should also be a large number of categories for a more realistic test. For a fair test, the categories should have a mix of cues in which some have been posited to be invariant and others more contextually determined. Finally, C-CuRE suggests that the ability to identify context (e.g. the neighboring phoneme) underlies compensation. Thus, during perceptual testing, it would be useful to be able to separate the portion of the stimulus that primarily cues the phoneme categories of interest from portions that primarily cue contextual factors. The fricatives of English meet these criteria.

1.4 Phonetics of Fricatives

English has eight fricatives created by partially obstructing the airflow through the mouth (see Table 1). They are commonly defined by three phonological features: sibilance, place of articulation and voicing. There are four places of articulation, each of which can be either voiced or voiceless. Fricatives produced at the alveolar ridge (/s/ or /z/ as in sip and zip) or at the post-alveolar position (/∫/ as in ship or /ʒ/ as in genre) are known as sibilants due to their high-frequency spectra; labiodental (/f/ or /v/ as in face and vase) and interdental fricatives (/θ/ or /ð/ as in think and this) are non-sibilants. The fact that there are eight categories makes categorization a challenging but realistic problem for listeners and models. As a result, listeners are not at ceiling even for naturally produced unambiguous tokens (LaRiviere, Winitz & Herriman, 1975; You, 1979; Jongman, 1989; Tomiak, 1990; Balise & Diehl, 1994), particularly for the non-sibilants (/f, v, θ, ð/) where accuracy estimates range from 43% to 99%.

Table 1.

The eight fricatives of English can be classified along two dimensions: voicing (whether the vocal folds are vibrating or not) and place of articulation. Fricatives produced with alveolar and post-alveolar places of articulation are known as sibilants, others are non-sibilants.

| Class | Place of Articulation | Voiceless IPA | Voiceless Examples | Voiced IPA | Voiced Examples |
|---|---|---|---|---|---|
| Non-sibilants | Labiodental | f | fat, fork | v | van, vase |
| Non-sibilants | Interdental | θ | think, thick | ð | this, those |
| Sibilants | Alveolar | s | sick, sun | z | zip, zoom |
| Sibilants | Post-alveolar | ∫ | ship, shut | ʒ | Jacques, genre |

Fricatives are signaled by a large number of cues. Place of articulation can be distinguished by the four spectral moments (mean, variance, skew and kurtosis of the frequency spectrum of the frication) (Forrest, et al., 1988; Jongman, et al., 2000), and by spectral changes in the onset of the subsequent vowel, particularly the second formant (Jongman et al, 2000; Fowler, 1994). Duration and amplitude of the frication are related to place of articulation, primarily distinguishing sibilants from non-sibilants (Behrens & Blumstein, 1988; Baum & Blumstein, 1987; Crystal & House, 1988; Jongman et al., 2000; Strevens, 1960). Voicing is marked by changes in duration (Behrens & Blumstein, 1988; Baum & Blumstein, 1987; Jongman et al., 2000) and spectral properties (Stevens, Blumstein, Glicksman, Burton, & Kurowski, 1992; Jongman et al, 2000). Thus, there are large numbers of potentially useful cues.

This offers fodder for distinguishing invariance and cue-integration approaches on the basis of the number of cues, and across fricatives we see differences in the utility of a small number of cues. The four sibilants (/s, z, ∫, ʒ/) can be distinguished with 97% accuracy by just the four spectral moments (Forrest et al, 1988). Thus, an invariance approach may be sufficient for sibilants. On the other hand, most studies have failed to find any single cue that distinguishes non-sibilants (/f, v, θ, ð/) (Maniwa, Jongman & Wade, 2008, 2009; though see Nissen & Fox, 2005), and Jongman et al.’s (2000) discriminant analysis using 21 cues only achieved 66% correct. Thus, non-sibilants may require many information sources, and possibly compensation.

In this vein, virtually all fricative cues are dependent on context including talker identity (Hughes & Halle, 1956; Jongman et al, 2000), the adjacent vowel (Soli, 1981; Jongman et al, 2000; LaRiviere et al., 1975; Whalen, 1981) and socio-phonetic factors (Munson, 2007; Munson et al, 2006; Jongman, Wang & Sereno, 2000). This is true even for cues like spectral moments that have been posited to be relatively invariant.

Thus, fricatives represent an ideal platform for examining the informational assumptions of models of speech categorization. They are difficult to categorize, and listeners can potentially utilize a large number of cues to do so. Both invariant and cue-combination approaches may be appropriate for some fricatives, but the context dependence of many, if not all cues, raises the possibility that compensation is necessary. Given the large number of cues, it is currently uncertain what information will be required for successful categorization.

1.5 Research Design

We first collected a corpus of fricative productions and measured a large set of cues in both the frication and vocalic portion of each. Next, we presented a subset of this corpus to listeners in an identification experiment with and without the vocalic portion. While this portion contains a number of secondary cues to fricative identity, it is also necessary for accurate identification of the talker (Lee, Dutton & Ram, 2010) and vowel, which is necessary for compensating in the C-CuRE framework. The complete corpus of measurements, including the perceptual results, is available in the online supplement. Finally, we implemented a generic categorization model using logistic regression (see also Cole et al, 2010; McMurray et al, in press), which is inspired by Nearey’s (1990, 1997) NAPP model and Smits’ (2001a,b) HICAT model. This model was trained to predict the intended production (not listeners’ categorizations, as in NAPP and some versions of HICAT) from particular sets of cues in either raw form or after parsing. The model’s performance was then compared to listeners’ to determine what informational structure is needed to create their pattern of responding. This was used to contrast three informational accounts distinguished by the number of cues and the presence or absence of compensation.

2.0 Empirical Work

2.1 The Corpus

The corpus of fricatives for this study was based on the recordings and measurements of Jongman et al (2000) with additional measurements of ten new cues on these tokens.

2.1.1 Methods and Measurements

Jongman et al (2000) analyzed 2873 recordings of the 8 English fricatives /f, v, θ, ð, s, z, ∫, ʒ/. Fricatives were produced in the initial position of a CVC syllable in which the vowel was /i, e, æ, ɑ, o, u/, and the final consonant was /p/. Twenty speakers (10 female) produced each CVC three times in the carrier phrase “Say ___ again”. This led to 8 (fricatives) × 6 (vowels) × 3 (repetitions) × 20 (speakers), or 2880 tokens, of which 2873 were analyzed. All recordings were sampled at 22 kHz (16 bit quantization, 11 kHz low-pass filter). The measurements reported here are all of the original measurements of Jongman et al. (2000, the JWW database)3, although some cues were collapsed (e.g. spectral moments at two locations). We also measured 10 new cues from these tokens to yield a set of 24 cues for each fricative. A complete list is shown in Table 2, and Figure 1 shows a labeled waveform and spectrogram of a typical fricative recording. Details on the measurements of individual cues and the transformations applied to them can be found in Appendix A.

Table 2.

Summary of the cues included in the present study. JWW indicates cues that were previously reported by Jongman et al (2000). Also shown are several derived cues included in a subset of the analysis. The “cue for” column indicates the phonological feature typically associated with each cue.

| Cue | Variable | Noise Cue | Description | Cue for | Source |
|---|---|---|---|---|---|
| Peak Frequency | MaxPF | * | Frequency with highest amplitude | Place | JWW |
| Frication Duration | DURF | * | Duration of frication | Voicing | JWW |
| Vowel Duration | DURV | | Duration of vocalic portion | Voicing | JWW |
| Frication RMS | RMSF | * | Amplitude of frication | Sibilance | JWW |
| Vowel RMS | RMSV | | Amplitude of vocalic portion | Normalization | JWW |
| F3 narrow-band amplitude (frication) | F3AMPF | * | Amplitude of frication at F3 | Place | New |
| F3 narrow-band amplitude (vowel) | F3AMPV | | Amplitude of vowel at F3 | Place | New |
| F5 narrow-band amplitude (frication) | F5AMPF | * | Amplitude of frication at F5 | Place | New |
| F5 narrow-band amplitude (vowel) | F5AMPV | | Amplitude of vowel at F5 | Place | New |
| Low Frequency Energy | LF | * | Mean RMS below 500 Hz in frication | Voicing | New |
| Pitch | F0 | | Fundamental frequency at vowel onset | Voicing | New |
| First Formant | F1 | | First formant frequency of vowel | Voicing | New |
| Second Formant | F2 | | Second formant frequency of vowel | Place | JWW |
| Third Formant | F3 | | Third formant frequency of vowel | Place | New |
| Fourth Formant | F4 | | Fourth formant frequency of vowel | Unknown | New |
| Fifth Formant | F5 | | Fifth formant frequency of vowel | Unknown | New |
| Spectral Mean | M1 | * | Spectral mean at three windows in frication noise (onset, middle, offset) | Place/Voicing | JWW |
| Spectral Variance | M2 | * | Spectral variance at three windows in frication noise | Place | JWW |
| Spectral Skewness | M3 | * | Spectral skewness at three windows in frication noise | Place/Voicing | JWW |
| Spectral Kurtosis | M4 | * | Spectral kurtosis at three windows in frication noise | Place | JWW |
| Transition Mean | M1trans | | Spectral mean in window including end of frication and vowel onset | Place | JWW |
| Transition Variance | M2trans | | Spectral variance in window including end of frication and vowel onset | Place | JWW |
| Transition Skewness | M3trans | | Spectral skewness in window including end of frication and vowel onset | Place | JWW |
| Transition Kurtosis | M4trans | | Spectral kurtosis in window including end of frication and vowel onset | Place | JWW |

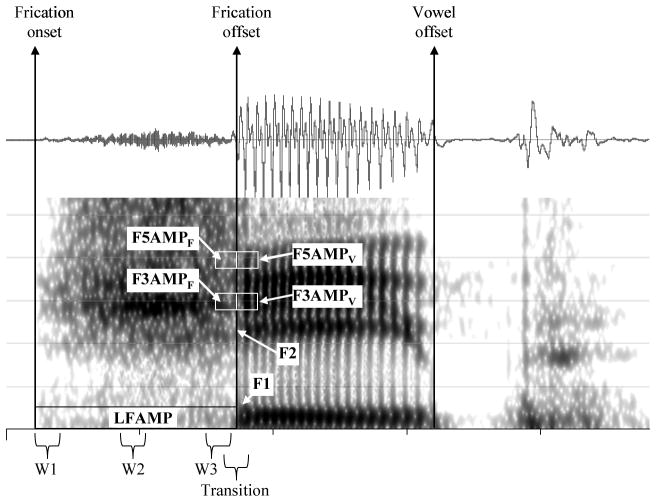

Figure 1.

Annotated waveform and spectrogram of a typical sample in the corpus, /∫ip/ ‘sheep’. Annotations indicate a subset of the cues that are described in Table 2.

We deliberately left out compound or relative cues (based on two measured values) like locus equations or duration ratios to avoid introducing additional forms of compensation into our dataset. We did include the independent measurements that contribute to such cues (e.g. duration of the vowel and consonant separately). Compound cues are discussed (and modeled in a similar framework) in the Online Supplement, Note #6.

The final set of 24 cues represents, to the best of our knowledge, all simple cues that have been proposed for distinguishing place, voicing or sibilance in fricatives, and also includes a number of cues not previously considered (such as F3, F4 and F5, and low frequency energy).

2.1.2 Results

Since the purpose of this corpus is to examine the information available to categorization, we do not report a complete phonetic analysis. Instead, we offer a brief analysis that characterizes the information in this dataset, asking which cues could be useful for fricative categorization and how context (talker and vowel) affects them. A complete analysis is found in the Online Supplement, Note #1; see also Jongman et al. (2000) for extensive analyses of the original measures.

Our analyses consisted of a series of hierarchical linear regressions. In each one, a single cue was the dependent variable, and the independent variables were a set of dummy codes4 for fricative identity (7 variables). Each regression first partialed out the effect of talker (19 dummy codes) and vowel (5 dummy codes), before entering the fricative terms into the model. We also ran individual regressions breaking fricative identity down into phonetic features (sibilance, place of articulation and voicing). Table 3 displays a summary.
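The hierarchical procedure described above can be illustrated with a minimal Python sketch using NumPy. It assumes ordinary least squares, with the context factors entered first and fricative identity second. The function names (`dummy_code`, `r_squared`, `r2_change`) and the toy values are hypothetical and are not taken from the original analysis.

```python
import numpy as np

def dummy_code(labels):
    """Dummy-code a factor with k levels as k-1 indicator columns."""
    levels = sorted(set(labels))
    return np.array([[1.0 if lab == lev else 0.0 for lev in levels[1:]]
                     for lab in labels])

def r_squared(predictors, y):
    """R^2 of an ordinary least-squares fit of y on predictors plus an intercept."""
    X = np.column_stack([np.ones(len(y)), predictors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    residual = y - X @ beta
    return 1.0 - float(residual @ residual) / float(((y - y.mean()) ** 2).sum())

def r2_change(y, talker, vowel, fricative):
    """Return (R^2 for context alone, R^2 change when fricative identity is added)."""
    context = np.column_stack([dummy_code(talker), dummy_code(vowel)])
    full = np.column_stack([context, dummy_code(fricative)])
    r2_context = r_squared(context, y)
    return r2_context, r_squared(full, y) - r2_context

# Hypothetical toy data: 2 talkers x 2 vowels x 2 fricatives, one cue in Hz.
talker = ["t1"] * 4 + ["t2"] * 4
vowel = ["i", "i", "u", "u"] * 2
fricative = ["s", "sh"] * 4
cue = np.array([5010., 2980., 4815., 2795., 5290., 3320., 5085., 3105.])

r2_context, r2_fricative = r2_change(cue, talker, vowel, fricative)
print(f"context R^2 = {r2_context:.3f}, fricative R^2 change = {r2_fricative:.3f}")
```

In this toy example the cue is dominated by fricative identity, so most of the variance is attributed to the second step; the actual regressions used 19 talker codes, 5 vowel codes and 7 fricative codes.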

Table 3.

Summary of regression analyses examining effects of speaker (20), vowel (6) and fricative (8) for each cue. Shown are R2change values. Missing values were not significant (p>.05). The final column shows secondary analyses examining individual contrasts. Each cue is given the appropriate letter code if the effect size was Medium or Large (R2change>.05). A few exceptions with smaller effect sizes are marked because there were few robust cues to non-sibilants. Sibilant vs. non-sibilant (/s, z, ∫, ʒ/ vs. /f, v, θ, ð/) is coded as S; voicing is coded as V; place of articulation in non-sibilants (/f, v/ vs. /θ, ð/) is coded as Pn; and place of articulation in sibilants (/s, z/ vs. /∫, ʒ/) is coded as Ps.

| Cue | Context: Speaker (df=19,2860) | Context: Vowel (df=5,2855) | Fricative Identity (df=7,2848) | Cue for |
|---|---|---|---|---|
| MaxPF | 0.084* | | 0.493* | S, Ps |
| DURF | 0.158* | 0.021* | 0.469* | S, V |
| DURV | 0.475* | 0.316* | 0.060* | V |
| RMSF | 0.081* | | 0.657* | S, V |
| RMSV | 0.570* | 0.043* | 0.011* | |
| F3AMPF | 0.070* | 0.028* | 0.483* | S, Ps |
| F3AMPV | 0.140* | 0.156* | 0.076* | Pn¹, Ps |
| F5AMPF | 0.077* | 0.012* | 0.460* | S |
| F5AMPV | 0.203* | 0.040* | 0.046* | |
| LF | 0.117* | 0.004+ | 0.607* | S, V |
| F0 | 0.838* | 0.007* | 0.023* | |
| F1 | 0.064* | 0.603* | 0.082* | V² |
| F2 | 0.109* | 0.514* | 0.119* | S, Pn, Ps |
| F3 | 0.341* | 0.128* | 0.054* | Pn |
| F4 | 0.428* | 0.050* | 0.121* | Pn, Ps |
| F5 | 0.294* | 0.045* | 0.117* | Pn, Ps |
| M1 | 0.122* | | 0.425* | V, Ps |
| M2 | 0.036* | | 0.678* | S, V, Ps |
| M3 | 0.064* | | 0.387* | S, Ps |
| M4 | 0.031* | | 0.262* | Ps |
| M1trans | 0.066* | 0.043* | 0.430* | S, V, Ps |
| M2trans | 0.084* | 0.061* | 0.164* | Pn³, Ps |
| M3trans | 0.029* | 0.079* | 0.403* | S, V, Pn, Ps |
| M4trans | 0.031* | 0.069* | 0.192* | S, Pn, Ps |

Note: + p<.05; * p<.0001; ¹ R2change=.043; ² R2change=.045; ³ R2change=.038.

Every cue was affected by fricative identity. While effect sizes ranged from large (10 cues had R2change>.40) to very small (RMSV, the smallest: R2change=.011), all were highly significant. Even cues that were originally measured to compensate for variance in other cues (e.g. vowel duration to normalize fricative duration) had significant effects. Interestingly, two of the new measures (F4 and F5) had surprisingly large effects.

A few cues could clearly be attributed to one feature over others, although none were associated with a single feature. The two duration measures and low frequency energy were largely associated with voicing; RMSF and F5AMPF were largely affected by sibilance; and the formant frequencies, F2, F4 and F5 had moderate effects of place of articulation for sibilants and non-sibilants. However, the bulk of the cues were correlated with multiple features.

While few cues were uniquely associated with one feature, most features had strong correlates. Many cues were sensitive to place of articulation in sibilants, suggesting an invariance approach may be successful for distinguishing sibilants. However, there were few cues for place in non-sibilants (F4, F5, and the 3rd and 4th moments in the transition). These showed only moderate to low effect sizes (none greater than .1), and were context-dependent. Thus, categorizing non-sibilants may require at least cue-integration, and potentially, compensation.

We next asked if any cues appeared more invariant than others. That is, are there cues that are correlated with a single feature (place of articulation, sibilance or voicing), but not with context? There is no standard for what statistically constitutes an invariant cue, so we adopted a simple criterion based on Cohen and Cohen’s (1983) definition of effect sizes as small (R2 <.05), medium (.05<R2<.15) and large (R2>.15): a cue is invariant if it had a large effect of a single feature (sibilance, place of articulation, voicing) and at most, small effects of context.
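This criterion can be written out as a small Python sketch. The function names are hypothetical, the handling of values exactly at .05 and .15 is an assumption (the text leaves the boundaries open), and "a large effect of a single feature" is read here as "a large effect of at least one individual feature". The example values for peak frequency are those reported later in this section.

```python
def effect_size(r2):
    """Label an R^2 value as small, medium or large (boundary handling is assumed)."""
    if r2 < 0.05:
        return "small"
    if r2 <= 0.15:
        return "medium"
    return "large"

def is_invariant(feature_r2, context_r2, allow_medium_context=False):
    """Apply the invariance criterion to one cue.

    feature_r2: R^2 for each phonetic feature (e.g. place, sibilance, voicing).
    context_r2: R^2 for each contextual factor (e.g. talker, vowel).
    allow_medium_context: if True, use the relaxed criterion that tolerates
    medium-sized context effects.
    """
    allowed = {"small", "medium"} if allow_medium_context else {"small"}
    has_large_feature = any(effect_size(v) == "large" for v in feature_r2.values())
    context_ok = all(effect_size(v) in allowed for v in context_r2.values())
    return has_large_feature and context_ok

# Peak frequency (MaxPF); the vowel effect was not significant and is omitted.
maxpf_features = {"place": 0.483, "sibilance": 0.260, "voicing": 0.004}
maxpf_context = {"talker": 0.084}

print("strict criterion:", is_invariant(maxpf_features, maxpf_context))
print("relaxed criterion:",
      is_invariant(maxpf_features, maxpf_context, allow_medium_context=True))
```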

No cue met this definition. Contextual factors (talker and vowel) accounted for a significant portion of the variance in every cue, particularly cues in the vocalic portion. However, relaxing this criterion to allow moderate context effects yielded several.

Peak frequency (MaxPF) was highly correlated with place of articulation (R2change=.483), less so with sibilance (R2=.260) (it distinguishes /s/ from /∫/), and virtually uncorrelated with voicing (R2=.004). While it was moderately related to talker (R2=.084), it was not related to vowel. The narrow-band amplitudes in the frication (F3AMPF and F5AMPF) showed a similar pattern. Amplitude at F3 had a strong relationship to place (R2=.450; /s/ vs. /∫/), and a smaller relationship to sibilance (R2=.239); while amplitude at F5 was related to sibilance (R2change=.394) but not place within either class (non-sibilants: R2change<.001; sibilants: R2change=.02). Neither was strongly related to voicing (F3AMPF: R2change=.002; F5AMPF=.024) and they were only moderately affected by context (F3AMPF: R2change=.098; F5AMPF=.089).

Finally, the upper spectral moments in the frication were strongly associated with fricative identity (M2: R2change=.68; M3: R2change=.39; M4: R2change=.26) (primarily place of articulation), and only moderately with context (M2: R2change=.04; M3: R2change=.07; M4: R2change=.03). This was true to a lesser extent for M1 (Fric.: R2change=.42; Context: R2change=.12).

In sum, every cue was useful for distinguishing fricatives, although most were related to multiple phonetic features, and every cue was affected by context. There were several highly predictive cues that met a liberal criterion for invariance. Together, they may be sufficient for categorization, particularly given the large number of potentially supporting cues.

2.2 Perceptual Experiment

The perceptual experiment probed listeners’ categorization of a subset of the corpus. We assessed overall accuracy and variation in accuracy across talkers and vowels on the complete syllable and the frication alone. Excising the vocalic portion eliminates some secondary cues to fricatives, but also reduces the ability to categorize the vowel and talker, which is required for compensation in C-CuRE. Thus, the difference between the frication-only and complete-syllable conditions may offer a crucial platform for model comparison.

2.2.1 Methods

The 2880 fricatives in the corpus were too many for listeners to classify in a reasonable amount of time, so this was trimmed to include 10 talkers (5 female), 3 vowels (/i, ɑ, u/), and the second repetition. This left 240 stimuli which were identified twice by each listener. The presence or absence of the vocalic portion was manipulated between-subjects.

Procedure

Listeners were tested in groups of two to four. Stimuli were played from disk over Sony (MDR-7506) headphones, using BLISS (Mertus, 1989). Stimuli were presented in random order at 3-s intervals. Listeners responded by circling one of 9 alternatives (f, v, th, dh, s, z, sh, zh, or ‘other’) on answer sheets. Participants were asked to repeat a few words with /θ, ð, ∫, ʒ/ in initial position to ensure they were aware of the difference between these sounds.

Participants

Forty Cornell University students (20 females) participated. Twenty served in each condition (complete-syllable vs. frication-only). All were native speakers of English with no known speech or hearing impairments. Participants were paid for their participation.

2.2.2 Results

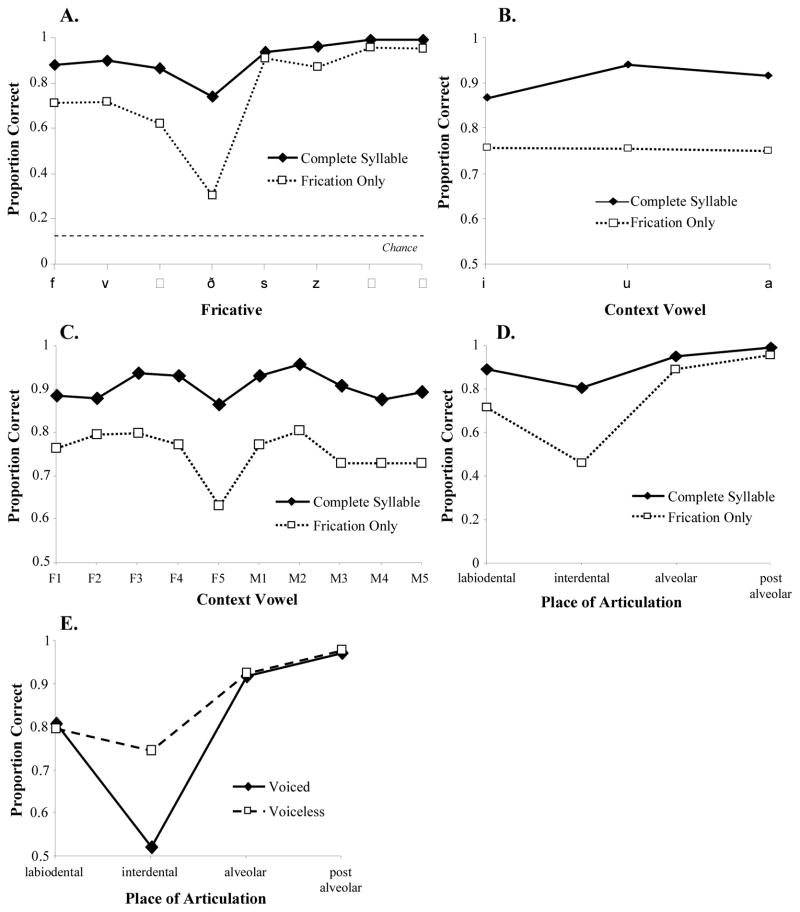

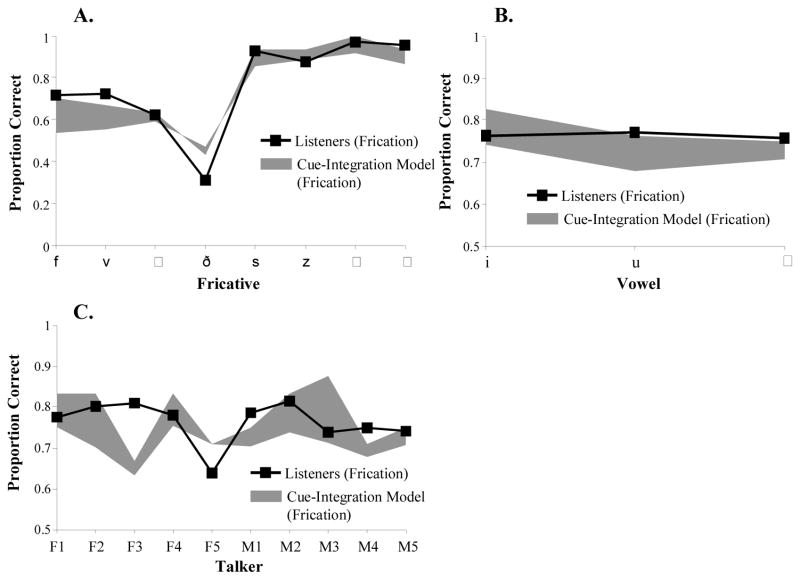

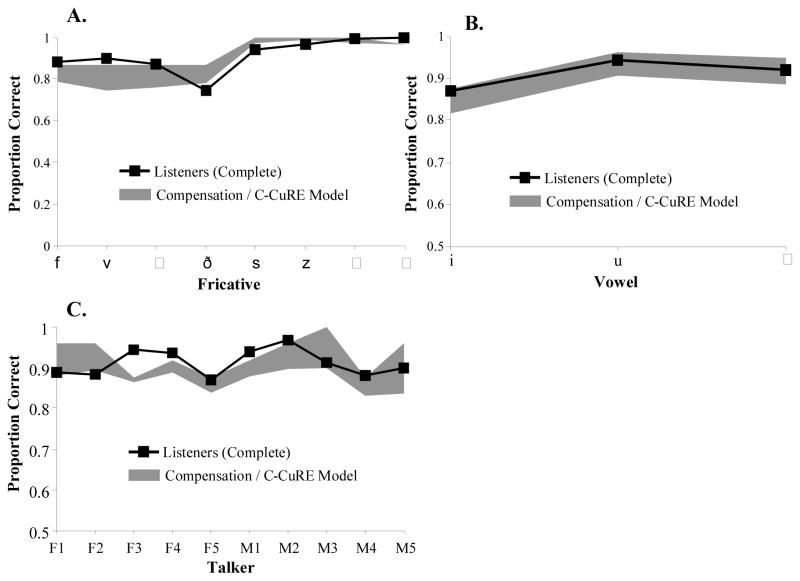

Figure 2 shows a summary of listeners’ accuracy. In the complete-syllable condition, listeners were highly accurate overall (M=91.2%), particularly on the sibilants (M=97.4%), while in the frication-only condition, performance dropped substantially (M=76.3%). There were also systematic effects of vowel (Figure 2B) and talker (Figure 2C) on accuracy. It was necessary to characterize which of these effects were reliable to identify criteria for model evaluation. However, this proved challenging given that our dependent measure has eight possibilities (eight response categories), and the independent factors included condition, talker, vowel, place and voicing. Since we only needed to identify diagnostic patterns, we simplified this by focusing on accuracy and collapsing the dependent measure into a single binary variable: correct or incorrect (though see Supplementary Note 3 for a more descriptive analysis of the confusion matrices).

Figure 2.

Listeners’ performance (proportion correct) on an 8AFC fricative categorization task. A) Performance on each of the eight fricatives as a function of condition. B) Performance across fricatives as a function of vowel and condition. C) Performance by condition and speaker. D) Performance as a function of place of articulation and condition. E) Performance as a function of place of articulation and voicing across conditions.

We used generalized estimating equations with a logistic linking function to conduct the equivalent of a repeated measures ANOVA (Lipsitz, Kim & Zhao, 2006). Talker, vowel, place and voicing were within-subjects factors; syllable-type (complete-syllable vs. frication-only) was between-subjects. Since we only had two repetitions of each stimulus per subject, the complete model (subject, five factors and interactions) was almost fully saturated. Thus, the context factors (talker and vowel) were included as main effects, but did not participate in interactions.

There was a significant main effect of syllable-type (Wald χ2(1)=69.9, p<.0001) with better performance in the complete-syllable condition (90.8% vs. 75.4%) for every fricative (Figure 2A). Vowel (Figure 2B) had a significant main effect (Wald χ2(2)=12.1, p=.02): fricatives preceding /i/ had the lowest performance followed by those preceding /ɑ/, and then /u/; and both /i/ and /ɑ/ were significantly different from /u/ (/i/: Wald χ2(1)=12.1, p=.001; /ɑ/: Wald χ2(1)=5.5, p=.019). Talker was also a significant source of variance (Talker: Wald χ2(9)=278.1, p<.0001), with performance by talker ranging from 75.0% to 88.2% (Figure 2C).

Place of articulation was highly significant (Wald χ2(3)=312.5, p<.0001). Individual comparisons against the postalveolars (which showed the best performance) showed that all three places of articulation were significantly worse (Labiodental: Wald χ2(1)=72.2, p<.0001; Interdental: Wald χ2(1)=75.0, p<.0001; Alveolar: Wald χ2(1)=51.6, p<.0001), though the large difference between sibilants and non-sibilants was the biggest component of this effect. A similar place effect was seen in both syllable-types (Figure 2D), though attenuated in complete-syllables, leading to a place × condition interaction (Wald χ2(3)=12.0, p=.008).

The main effect of voicing was significant (Wald χ2(1)=6.2, p=.013): voiceless fricatives were identified better than voiced fricatives. This was driven by the interdentals (Figure 2E), leading to a significant voicing × place interaction (Wald χ2(3)=47.0, p<.0001). The voicing effect was also enhanced in the frication-only condition, where voiceless sounds were 8.9% better, relative to the complete-syllable condition where the difference was 2.1%, a significant voicing × syllable-type interaction (Wald χ2(1)=4.1, p=.042). The three-way interaction (voicing × place × syllable-type) was not significant (Wald χ2(3)=5.9, p=.12).

Follow-up analyses separated the data by syllable-type. Complete details are presented in the Online Supplement, Note #2, but several key effects should be mentioned. First, talker was significant for both conditions (complete-syllable: Wald χ2(9)=135.5, p<.0001; frication-only: Wald χ2(9)=196.9, p<.0001), but vowel was only significant in complete-syllables (complete-syllable: Wald χ2(1)=71.0, p<.0001; frication-only: Wald χ2(2)=.9, p=.6). Place of articulation was significant in both conditions (complete-syllable: Wald χ2(3)=180.5, p<.0001; frication-only: Wald χ2(3)=189.8, p<.0001), although voicing was only significant in the frication-only condition (complete-syllable: Wald χ2(1)=.08, p=.7; frication-only: Wald χ2(1)=15.0, p<.0001).

To summarize, we found that 1) performance without the vocalic portion was substantially worse than with it, though performance in both cases was fairly good; 2) accuracy varied across talkers; 3) sibilants were easier to identify than non-sibilants but there were place differences even within sibilants; and 4) the vowel identity affected performance, but only in the complete-syllable condition. This may be due to two factors. First, particular vowels may alter secondary cues in the vocalic portion in a way that misleads listeners (for /ɑ/ and /i/) or helps them (for /u/). Alternatively, the identity of the vowel may cause subjects to treat the cues in the frication noise differently. This may be particularly important for /u/—its lip rounding has a strong effect on the frication. As a result, listeners’ ability to identify the vowel (and thus account for these effects) may offer a benefit for /u/ that is not seen for the unrounded vowels.

2.3 Discussion

The acoustic analysis revealed that every cue was useful for categorizing fricatives, but all were affected by context. However, the handful of nearly invariant cues raises the possibility that uncompensated cues, particularly in combination, may be sufficient for separating categories. Our perceptual study also revealed consistent differences across talkers, vowels and fricatives in accuracy. The presence or absence of the vocalic portion had the largest effect. This hints at compensation using C-CuRE mechanisms, since this difference may be due both to secondary cues to the fricative and to listeners’ ability to identify the talker and the vowel as a basis of compensation. This may also account for the effect of context vowels on accuracy in the complete-syllable condition but not in the frication-only condition.

3. Computational Approach

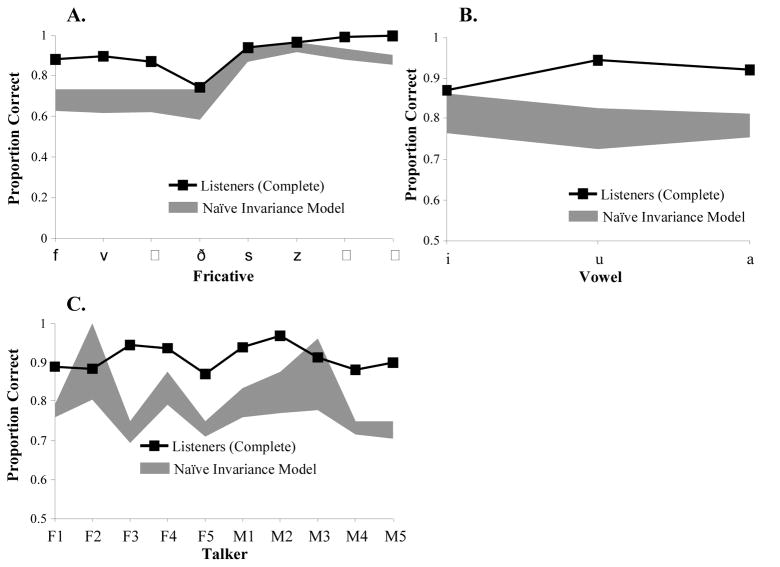

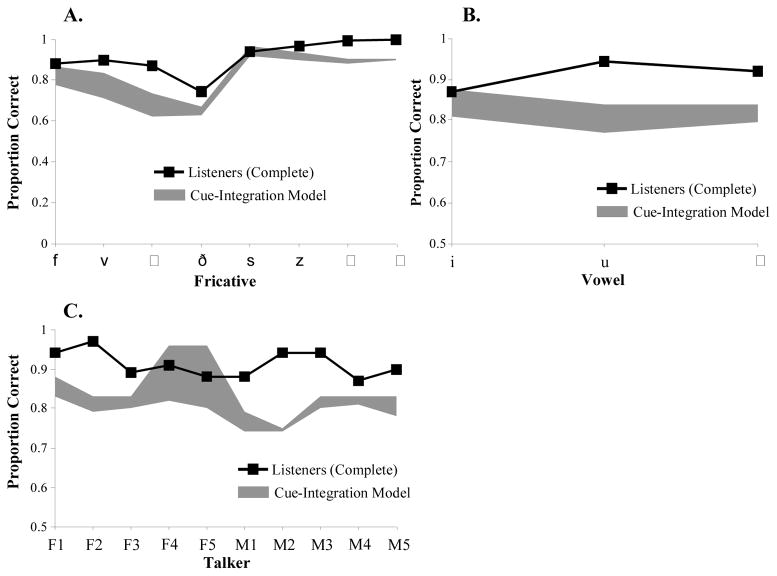

Our primary goal was to determine what information is needed to separate fricative categories at listener-like levels. We thus employed multinomial logistic regression as a simple, common model of phoneme categorization that is theoretically similar to several existing approaches (Oden & Massaro, 1978; Nearey, 1990; Smits, 2001; Cole et al., 2010). We varied its training set to examine three sets of informational assumptions:

Naïve Invariance: This model used the small number of cues that were robustly correlated with fricative identity and less with context. Cues did not undergo compensation: if cues are invariant with respect to context, this should not be required.

Cue-Integration: This model used every cue available, without compensation. This is consistent with the informational assumptions of exemplar approaches, and is an unexamined assumption of cue-integration models like NAPP (Nearey, 1997).

Compensation: This model used every cue, but after the effects of talker and vowel on these cues had been accounted for using C-CuRE.
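As a rough illustration of the input to the Compensation model, a cue can be recoded as its difference from the value expected for a given talker and vowel. The sketch below assumes that expectations come from an additive least-squares fit on dummy-coded talker and vowel, in the spirit of the regressions in Section 2.1.2. The names `dummy_code` and `ccure_residuals` and the toy values are hypothetical, and this is not presented as the exact procedure used for the models reported here.

```python
import numpy as np

def dummy_code(labels):
    """Dummy-code a factor with k levels as k-1 indicator columns."""
    levels = sorted(set(labels))
    return np.array([[1.0 if lab == lev else 0.0 for lev in levels[1:]]
                     for lab in labels])

def ccure_residuals(cue, talker, vowel):
    """Recode a cue relative to the value expected for its talker and vowel."""
    X = np.column_stack([np.ones(len(cue)), dummy_code(talker), dummy_code(vowel)])
    beta, *_ = np.linalg.lstsq(X, cue, rcond=None)
    expected = X @ beta          # expectation given the context alone
    return cue - expected        # continuous deviation from that expectation

# Hypothetical toy data: 2 talkers x 2 vowels x 2 fricatives, one cue in Hz.
talker = ["t1"] * 4 + ["t2"] * 4
vowel = ["i", "i", "u", "u"] * 2
fricative = ["s", "sh"] * 4
cue = np.array([5010., 2980., 4815., 2795., 5290., 3320., 5085., 3105.])

relative_cue = ccure_residuals(cue, talker, vowel)
for label, value in zip(fricative, relative_cue):
    print(label, round(float(value), 1))
```

The residuals remain continuous, so fine-grained differences between fricatives are retained while the portion of the cue predictable from talker and vowel is removed.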

It may seem a foregone conclusion that compensation will yield the best performance: it has the most information and involves the most processing. However, our acoustic analysis suggests there is substantial information in the raw cues to support fricative categorization, and no one has tested the power of integrating 24 cues for supporting categorization. Thus, uncompensated cues may be sufficient. Moreover, compensation in C-CuRE is not optimized to fricative categorization; it could transform the input in ways that hurt categorization. Finally, the goal is not necessarily the best performance, but listener-like performance: none of the models are optimized to the listeners’ responses and they may or may not show such effects.

The next section describes the categorization model and its assumptions. We then describe how we instantiated each of our three hypotheses in terms of specific sets of cues.

3.1 Logistic Regression as a model of Phoneme Categorization

Our model is based on work by Nearey (1990, 1997; see also Smits, 2001a,b; Cole et al., 2010; McMurray, et al., in press) which uses logistic regression as a model of listeners’ mappings between acoustic cues and categories. Logistic regression first weights and combines multiple cues linearly. This is transformed into a probability (e.g. the probability of an /s/ given the cues). Weights are determined during training to optimally separate categories (see Hosmer & Lemeshow, 2000, for a tutorial). These parameters allow the model to alter both the location of the boundary in a multi-dimensional space and the amount each cue participates in categorization.

Logistic regression typically uses a binary dependent variable (e.g. /s/ vs. /∫/) as in (1).

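$$P(\text{/s/}) = \frac{e^{\beta_0 + \beta_1 x_1 + \dots + \beta_n x_n}}{1 + e^{\beta_0 + \beta_1 x_1 + \dots + \beta_n x_n}} \tag{1}$$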

Here, the exponential term is a linear function of the independent factors (cues: x1…xn) weighted by their regression coefficients (β’s). Multinomial logistic regression generalizes this to map cues to any number of categories. Consider, for example, a model built to distinguish /s/, /z/, /f/ and /v/. First, there are separate regression parameters for each of the four categories, except one, the reference category5 (in this case, /v/). The exponential of each of these linear terms is in (2)

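$$E_c = e^{\beta_{c0} + \beta_{c1} x_1 + \dots + \beta_{cn} x_n}, \quad c \in \{s, z, f\} \tag{2}$$

These are then combined to yield a probability for any given category.

$$P(c) = \frac{E_c}{1 + E_s + E_z + E_f} \tag{3}$$

The probability of the reference category is

$$P(v) = \frac{1}{1 + E_s + E_z + E_f} \tag{4}$$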

Thus, if there are 10 cues, the logistic regression requires 10 parameters plus an intercept for each category (minus one). Thus, a multinomial logistic regression mapping 10 cues to four categories requires 33 parameters. Typically these are estimated using gradient descent methods that maximize the likelihood of the data given the parameters.
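The mapping from cues to category probabilities, and the parameter count, can be sketched in plain Python. This is a minimal illustration of a multinomial logistic model with a reference category; the function names and the weights below are hypothetical and are not estimates from the corpus.

```python
import math

def category_probabilities(cues, weights, reference):
    """Multinomial logistic probabilities with one reference category.

    cues: list of cue values x1..xn.
    weights: dict mapping each non-reference category to (intercept, [betas]).
    reference: label of the reference category, which has no parameters.
    """
    exps = {cat: math.exp(b0 + sum(b * x for b, x in zip(betas, cues)))
            for cat, (b0, betas) in weights.items()}
    denominator = 1.0 + sum(exps.values())
    probs = {cat: e / denominator for cat, e in exps.items()}
    probs[reference] = 1.0 / denominator
    return probs

def n_parameters(n_cues, n_categories):
    """One intercept plus one weight per cue, for every category but the reference."""
    return (n_cues + 1) * (n_categories - 1)

# Hypothetical weights for a two-cue model distinguishing /s/, /z/, /f/ and /v/.
weights = {
    "s": (0.5, [1.2, -0.4]),
    "z": (0.1, [0.8, 0.9]),
    "f": (-0.3, [-1.0, -0.2]),
}
probs = category_probabilities([0.7, -1.1], weights, reference="v")
print(probs)
print(n_parameters(10, 4))
```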

The models used here were first trained to map the dataset of acoustic measurements to the intended production. This is overgenerous. The model knows both the cues in the acoustic signal, and the category the speaker intended to produce—something which learners may not always have access to. However, by training it on intended productions, not listener data, its match to listeners’ performance must come from the information in the input, as the model is not trained to match listeners, only to achieve the best categorization it can with the input.

We held out from training the 240 tokens used in the perception experiment. Thus, training consisted of 2880 - 240 = 2640 tokens. After estimating the parameters, we used them to determine the likelihood of each category for each token in the perceptual experiment.

3.1.1 Evaluating the models

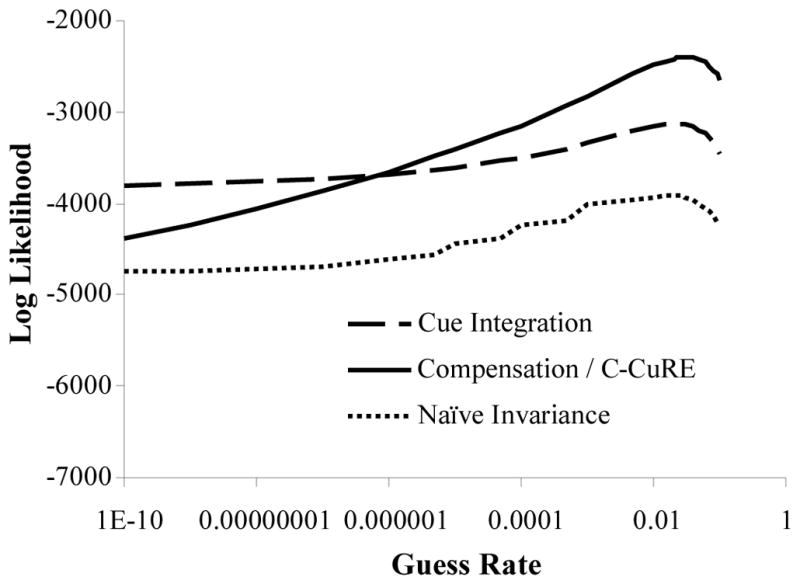

Evaluating logistic models is tricky. There is no agreed upon measure for model comparison (like R2 in linear regression). Moreover, this study compares models that use only a handful of cues to those that use many, so the comparison should compensate for the increased power of the more complex models. Finally, model fit (e.g. how well the model predicts the training data) is less important than its ability to yield listener-like performance (on which it was not trained). Thus, we evaluated our models in three ways: Bayesian Information Criterion (BIC); estimated performance by experimental condition; and the likelihood of the human data given the model. We discuss the first two here, and discuss the final measure in Section 4.4 where it is used.

Bayesian Information Criterion (BIC; Schwarz, 1978) is used for selecting among competing models. BIC is sensitive to the number of free parameters and the sample-size. It is usually computed using (5), which provides an asymptotic approximation for large sample sizes.

$$\mathrm{BIC} = -2\ln(L) + k\ln(n) \tag{5}$$

Here, L is the likelihood of the model, k is the number of free parameters, and n is the number of samples. Given two models, the one with the lower BIC is preferred.

BIC can be used in two ways. It is primarily used to compare two models’ fit to the training data. Secondarily, it offers an omnibus test of model fit. To do this, the model is first estimated with no independent variables. This “intercept-only” model should have little predictive value, but if one response was a priori more likely, it could perform above chance. Next, the independent factors are added and the two models are compared using BIC to determine if the addition of the variables offers any real advantage.
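As an illustration of the omnibus comparison, the following sketch computes BIC for an intercept-only model and for a model with predictors on a toy dataset. The data, the predicted probabilities and the assumption of two cues are all hypothetical; only the BIC formula itself (−2 ln L + k ln n) is standard.

```python
import math

def log_likelihood(probs, labels):
    """Log-likelihood of the observed category labels under predicted probabilities."""
    return sum(math.log(p[y]) for p, y in zip(probs, labels))

def bic(log_lik, n_params, n_samples):
    """Bayesian Information Criterion; lower values are preferred."""
    return -2.0 * log_lik + n_params * math.log(n_samples)

# Toy data: six tokens, three categories (all values are made up for illustration).
labels = [0, 0, 1, 1, 2, 2]

# Intercept-only model: it can only predict the base rate of each category.
intercept_only = [[1 / 3, 1 / 3, 1 / 3] for _ in labels]

# Hypothetical model with two cues: (2 cues + 1 intercept) * (3 - 1) = 6 parameters.
full = [[0.8, 0.1, 0.1], [0.7, 0.2, 0.1],
        [0.1, 0.8, 0.1], [0.2, 0.7, 0.1],
        [0.1, 0.1, 0.8], [0.1, 0.2, 0.7]]

bic_null = bic(log_likelihood(intercept_only, labels), n_params=2, n_samples=len(labels))
bic_full = bic(log_likelihood(full, labels), n_params=6, n_samples=len(labels))

print(bic_null, bic_full)
print("cues help" if bic_full < bic_null else "cues do not justify their parameters")
```

In this toy case the added cues improve the likelihood enough to outweigh the penalty for four extra parameters, so the model with predictors has the lower BIC.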

Categorization performance can be computed from logistic regression models and is analogous to the listener data. This estimated listener performance can be compared as a function of experimental condition (e.g. as a function of fricative, talker or vowel), for a qualitative match to listeners. If one of the models shows similar effects of talker, vowel, or fricative this may offer a compelling case for this set of informational assumptions.

Crucially, this relies on the ability to generate data analogous to listener categorization from the logistic model. While the logistic formula yields a probability of each of the categories for any given set of cues, there is debate about how best to map this to listener performance.

For any token, the optimal decision rule is to choose the most likely category as the response (Nearey & Hogan, 1986).⁶ This implies that listeners always choose the same category for repetitions of the same token (even if that category is only marginally more likely than the alternatives). This seems unrealistic: in our experiment listeners responded identically to each repetition only 76.4% of the time in the frication-only condition and 90.5% in complete-syllables (close to the average accuracies). Thus, a more realistic approach is to use the probabilities generated by the model as the probability the listener chose each category (as in Nearey, 1990; Oden & Massaro, 1978).

The discrete-choice rule generally yields better performance than the probabilistic rule (typically about 10% in these models) and listeners likely lie between these extremes. This could be modeled with something like the Luce-choice rule (Luce, 1959), which includes a temperature parameter controlling how “winner-take-all” the decision is. However, we had no independent data on the listeners’ decision criteria, and since models were fit to the intended production, not to the perceptual response, we could not estimate this during training. We thus report both the discrete-choice and probabilistic decision rules for each model as a range, with the discrete-choice as the upper limit and the probabilistic rule as the lower limit.
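The two decision rules, and an intermediate rule of the kind mentioned above, can be sketched as follows. The probabilities are toy values, and the exponent-based temperature in `luce_choice` is one common parameterization that we assume here for illustration; it is not a rule fit in this study.

```python
def discrete_choice_accuracy(probs, correct):
    """Proportion correct if the most likely category is always chosen."""
    hits = 0
    for p, c in zip(probs, correct):
        best = max(range(len(p)), key=lambda i: p[i])
        hits += int(best == c)
    return hits / len(probs)

def probabilistic_accuracy(probs, correct):
    """Expected proportion correct if responses are sampled from the model's probabilities."""
    return sum(p[c] for p, c in zip(probs, correct)) / len(probs)

def luce_choice(p, temperature):
    """Sharpen (temperature < 1) or flatten (temperature > 1) a probability vector.

    temperature = 1 returns the probabilistic rule; values near 0 approach
    the winner-take-all discrete-choice rule. (Assumed parameterization.)
    """
    powered = [q ** (1.0 / temperature) for q in p]
    total = sum(powered)
    return [q / total for q in powered]

# Toy model output for three tokens and three categories (hypothetical values).
probs = [[0.6, 0.3, 0.1], [0.4, 0.5, 0.1], [0.2, 0.2, 0.6]]
correct = [0, 0, 2]

upper = discrete_choice_accuracy(probs, correct)   # upper limit of the reported range
lower = probabilistic_accuracy(probs, correct)     # lower limit of the reported range
middle = probabilistic_accuracy([luce_choice(p, 0.5) for p in probs], correct)
print(lower, middle, upper)
```

Reporting the pair (`lower`, `upper`) corresponds to the range described in the text; the intermediate value shows how a temperature below 1 moves a model between the two rules.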

Finally, neither method offers a direct fit to the perceptual data. BIC is based on the training data, and performance-based measures are analogous to perceptual data but offer no way to quantitatively relate them. Thus, in Section 4.4, we describe a method of comparing models based on the likelihood that the perceptual data was generated by each model.

3.1.2 Theoretical Assumptions of logistic regression as a categorization model

As a model of the interface between continuous cues and phoneme categories, logistic regression makes a number of simplifications. First, it assumes linear boundaries in cue-space (unless interaction terms are included). However, Nearey (1990) has shown that this can be sufficient for some speech categories. Similarly, cue combination is treated as a linear process. However, weighting-by-reliability in vision (e.g. Jacobs, 2002; Ernst & Banks, 2002) also assumes linear combinations, and this has been tested in speech as well (Toscano & McMurray, 2010). Given the widespread use of this assumption in similar models in speech (e.g. Oden & Massaro, 1978; Nearey, 1997; Smits, 2001b), this seems uncontroversial. Moreover, lacking hypotheses about particular nonlinearities or interaction terms, the use of a full complement of interactions and nonlinear transformations may add too many parameters to fit effectively.

Second, while there are more complicated ways to model categorization, many of these approaches are related to logistic regression. For example, a network that uses no hidden units and the softmax activation function is identical to logistic regression, and an exemplar model in which speech is compared to all available exemplars will be highly similar to our approach.

Third, logistic regression can be seen as instantiating the outcome of statistical learning (e.g. Werker et al, 2007) as its categories are derived from the statistics of the cues in the input. However, many statistical learning approaches in speech perception (e.g. McMurray et al, 2009a; Maye, Werker & Gerken, 2002) assume unsupervised learning, while logistic regression is supervised: the learner has access to both cues and categories. We are not taking a strong stance on learning; both are likely at work in development. Logistic regression is simply a useful tool for extracting the maximum categorization value from the input in order to compare informational hypotheses.

Finally, our use of logistic regression as a common categorization platform intentionally simplifies the perceptual processes proposed in models of speech perception. However, this allows us to test assumptions about the information that contributes to categorization. By modeling phoneme identification using the same framework, we can understand the unique contributions of the informational assumptions made by each class of models.

3.2 Hypotheses and datasets

3.2.1 Naïve Invariance Model

The naïve invariance model asked whether a small number of uncompensated cues are sufficient for classification. Prior studies have asked similar questions for fricatives (Forrest et al, 1988; Jongman et al, 2000) using discriminant analysis, and results have been good, though imperfect. This has not yet been attempted with the more powerful logistic regression, and we have many more cues (particularly for non-sibilants). Thus, it would be premature to rule out such hypotheses.

Section 2.1.2 suggested a handful of cues that are somewhat invariant with respect to context (Table 4). These nine cues distinguish voicing and sibilance in all fricatives, and place of articulation in sibilants. We did not find any cues that were even modestly invariant for place of articulation in non-sibilants. Thus, we added four additional cues: two with relatively high R² values for place of articulation but also for context (F4 and F5), and two that were less strongly associated with place but also less strongly associated with context (M3trans and M4trans). These were located in the vocalic portion, offering a way for the naïve invariance model to account for the differences between the frication-only and complete-syllable conditions in the perceptual experiment: the loss of these cues should lead to bigger decrements for non-sibilants and smaller decrements for sibilants. As our selection of this cue-set was made solely by statistical reliability (rather than a theory of production), and we did not use any compound cues, we term this a naïve invariance approach.

Table 4.

Summary of the 9 cues that were relatively invariant with respect to speaker and vowel as well as four non-invariant cues that were included because they provided the best information about place of articulation in non-sibilants. R² values are change statistics taken from analyses presented in Section 2.

| Cue | Cue for | Context Effects |
|---|---|---|
| MaxPF | Place in sibilants (R²=.504) | Speaker: Moderate (R²=.084); Vowel: n.s. |
| DURF | Voicing (R²=.40) | Speaker: Large (R²=.16); Vowel: Small (R²=.021) |
| RMSF | Sibilance (R²=.419) | Speaker: Moderate (R²=.081); Vowel: n.s. |
| F3AMPF | Place in sibilants (R²=.44); Sibilance (R²=.24) | Speaker: Moderate (R²=.07); Vowel: Small (R²=.028) |
| F5AMPF | Sibilance (R²=.39) | Speaker: Moderate (R²=.07); Vowel: Small (R²=.012) |
| LF | Voicing (R²=.48) | Speaker: Moderate (R²=.11); Vowel: Small (R²=.004) |
| M1 | Place in sibilants (R²=.55) | Speaker: Moderate (R²=.122); Vowel: n.s. |
| M2 | Sibilance (R²=.44); Place in sibilants (R²=.34) | Speaker: Small (R²=.036); Vowel: n.s. |
| M3 | Place in sibilants (R²=.37) | Speaker: Moderate (R²=.064); Vowel: n.s. |
| Non-invariant cues to place in non-sibilants | | |
| F4 | Place in non-sibilants (R²=.083) | Speaker: Large (R²=.43); Vowel: Small (R²=.05) |
| F5 | Place in non-sibilants (R²=.082) | Speaker: Large (R²=.29); Vowel: Small (R²=.045) |
| M3trans | Place in non-sibilants (R²=.061) | Speaker: Small (R²=.029); Vowel: Moderate (R²=.079) |
| M4trans | Place in non-sibilants (R²=.062) | Speaker: Small (R²=.031); Vowel: Small (R²=.069) |

3.2.2 Cue-integration Model

The cue-integration hypothesis suggests that if sufficient cues are encoded in detail, their combination is sufficient to overcome variability in any one cue. This is reflected in the informational assumptions of exemplar approaches (e.g. Goldinger, 1998; Pierrehumbert, 2001, 2003) and it is an unexamined assumption in many cue-integration models. It is possible that in a high-dimensional input-space (24 cues), there are boundaries that distinguish the eight fricatives.

Our use of logistic regression as a categorizer is a potentially problematic assessment of exemplar accounts, as it is clearly more akin to a prototype model than true exemplar matching. However, if the speech signal is compared with the entire “cloud” of exemplars, then the decision of an exemplar model for any input will reflect an aggregate of all the exemplars, a “Generic Echo” (Goldinger, 1998, p. 254), allowing it to show prototype-like effects (Pierrehumbert, 2003). In contrast, if the signal is compared to only a smaller number of tokens, this breaks down. Ultimately, formal models will be required to determine the optimal decision rule for exemplar models in speech. However, given our emphasis on information, logistic regression is a reasonable test: it maps closely to both cue-integration models and some versions of exemplar theory and is sufficiently powerful to permit good categorization.

Thus, we instantiated the cue-integration assumptions by using all 24 cues with no compensation for talker or vowel. To account for the difference between the frication-only and complete-syllable condition, we eliminated the 14 cues found in the vocalic portion (Table 2), asking whether the difference in performance was due to the loss of additional cues.

3.2.3 Compensation/C-CuRE Model

The final dataset tests the hypothesis that compensation is required to achieve listener-like performance. This is supported by our phonetic analysis suggesting that all of the cues were somewhat context-sensitive. To construct this dataset, all 24 cues were processed to compensate for the effects of the talker and vowel on each one. While the goal of this dataset was to test compensation in general, this was instantiated using C-CuRE because it is, to our knowledge, the only general-purpose compensation scheme that is computationally specific, can be applied to any cue, and can accomplish compensation without discarding fine-grained detail in the signal (and may enable greater use of it: Cole et al, 2010).

To construct the C-CuRE model, all 24 cues were first subjected to individual regressions in which each cue was the dependent variable and talker and vowel were independent factors. These two factors were represented by 19 and 5 dummy variables, respectively: one for each talker or vowel (minus one).⁷ After the regression equation was estimated, individual cue-values were recoded as standardized residuals. This recodes each cue as the difference between the actual cue value and the value that would be predicted for that talker and vowel.
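The recoding step can be sketched for a single cue as follows. This is a minimal illustration under stated assumptions, not the code used in the study: the toy cue values, the helper names, and the choice to standardize by the residual standard deviation (residual sum of squares divided by n minus the number of regression parameters) are all ours, and statistical packages differ in how they define standardized residuals.

```python
import math

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def dummy_row(talker, vowel, talkers, vowels):
    """Intercept plus one dummy per talker and per vowel (minus one reference level each)."""
    return ([1.0]
            + [1.0 if talker == t else 0.0 for t in talkers[1:]]
            + [1.0 if vowel == v else 0.0 for v in vowels[1:]])

def ccure_residuals(values, talker_ids, vowel_ids):
    """Recode one cue as standardized residuals after regressing out talker and vowel."""
    talkers, vowels = sorted(set(talker_ids)), sorted(set(vowel_ids))
    X = [dummy_row(t, v, talkers, vowels) for t, v in zip(talker_ids, vowel_ids)]
    k = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(X, values)) for i in range(k)]
    beta = solve(xtx, xty)                       # ordinary least squares coefficients
    resid = [y - sum(b * x for b, x in zip(beta, r)) for r, y in zip(X, values)]
    sd = math.sqrt(sum(e * e for e in resid) / (len(resid) - k))
    return [e / sd for e in resid]

# Toy cue: two talkers x two vowels x two repetitions (hypothetical values).
values = [10.0, 12.0, 14.0, 16.0, 20.0, 22.0, 24.0, 26.0]
talker_ids = ["A"] * 4 + ["B"] * 4
vowel_ids = ["i", "i", "a", "a"] * 2

z = ccure_residuals(values, talker_ids, vowel_ids)
print(z)
```

In the toy data the large talker difference and the smaller vowel difference are removed, so tokens that were 10 units apart in raw terms end up with the same relative values; what remains is each token's deviation from what its talker and vowel predict.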

The use of context in C-CuRE offers an additional route to account for differences between the complete-syllable and frication-only condition. While excising the vocalic portion eliminates a lot of useful first order cues (as in the cue-integration model), it would also impair parsing. This is because it would be difficult to identify the talker or vowel from the frication alone. Indeed, Lee et al. (2010) showed that the vocalic portion supports much more robust identification of gender than the frication, even when only six pitch pulses were present. Thus, if fricative categorization is contingent on identifying the talker or vowel, we should see an additional decrement in the frication-only condition due to the absence of this information.

Our use of regression for compensation complicates model comparison using BIC. How do we count the additional parameters? In the cue-integration model, each cue corresponds to seven degrees of freedom (one parameter for each category minus one). In contrast, the compensation/C-CuRE model uses additional degrees of freedom in the regressions for each cue: 19 df for talkers, 5 for vowels, and an intercept. Thus, instead of 7×24 parameters, the complete C-CuRE model now has 32×24 parameters, suggesting a substantial penalty.
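The arithmetic behind this potential penalty can be made explicit with a short sketch. The variable names are ours, and the use of the 2640 training tokens as the BIC sample size is an assumption made for illustration.

```python
import math

n_cues = 24
n_categories = 8

# Logistic regression: one weight per cue for each category minus one.
per_cue_logistic = n_categories - 1              # 7

# C-CuRE regression for each cue: talker dummies, vowel dummies, and an intercept.
per_cue_ccure = 19 + 5 + 1                       # 25

logistic_only = n_cues * per_cue_logistic
with_ccure = n_cues * (per_cue_logistic + per_cue_ccure)
print(logistic_only, with_ccure)                 # 7 x 24 versus 32 x 24

# Extra BIC penalty if every C-CuRE parameter were counted (assumed n = 2640).
extra_penalty = (with_ccure - logistic_only) * math.log(2640)
print(extra_penalty)
```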

However, there are three reasons why such a penalty would be ill advised. First, the free parameters added by C-CuRE do not directly contribute to categorization, nor are they optimized when the categorization model is trained. These parameters are fit to a different problem (the relationship between contextual factors and cues), and are not manipulated to estimate the logistic regression model. So while they are parameters in the system, they are not optimized to improve categorization. In fact, it is possible that the transformations imposed by C-CuRE impede categorization or make categorization look less like listeners, as C-CuRE is removing the effect of factors like vowel and talker that we found to affect listener performance.