Abstract

We examined all 208 closed cases involving official findings of research misconduct published by the US Office of Research Integrity from 1992 to 2011 to determine how often scientists mention in a retraction or correction notice that there was an ethical problem with an associated article. Of these cases, 75 cited at least one published article affected by misconduct, for a total of 174 articles. For 127 of these 174 articles, we found both the article and a retraction or correction statement. Because eight of the 127 published statements consisted simply of the word ‘retracted,’ our analysis focused on the remaining 119, for which a more detailed retraction or correction was published. Of these 119 statements, only 41.2% mentioned ethics at all (and only 32.8% named a specific ethical problem such as fabrication, falsification or plagiarism). The other 58.8% did not mention ethics, and some of them described the reason for retraction or correction as error, loss of data or replication failure when misconduct was actually at issue. Among the published statements in response to an official finding of misconduct (within the time frame studied), the proportion that mentioned ethics was significantly higher in recent years than in earlier years, as was the proportion that named a specific problem. To promote research integrity, scientific journals should consider adopting policies concerning retractions and corrections similar to the guidelines developed by the Committee on Publication Ethics. Funding agencies and institutions should take steps to ensure that articles affected by misconduct are retracted or corrected.

INTRODUCTION

Research misconduct often has wide-ranging, detrimental impacts on the scientific community. An article containing fabricated or falsified data (or involving plagiarism) may be read, cited and relied upon by many people, thereby propagating errors and inaccuracies. To prevent fraudulent work from corrupting the scientific literature, it is important to retract or correct articles affected by misconduct. This can be an onerous task when an author has published many fraudulent articles. For example, in 2002 a committee at Bell Laboratories found that physicist Jan Hendrik Schön had fabricated data in numerous articles with various coauthors; 28 of these articles were eventually retracted.1 Even retracting or correcting a single article can present problems for journal editors, because many journals require all authors to agree to the retraction or correction.1

Retractions and corrections are usually linked electronically to the original article in research databases so that readers will not inadvertently use or cite unreliable work. Unfortunately, scientists often continue to cite retracted articles long after the retraction has been published.2 Various online resources can be used to find retracted articles, such as PubMed and Retraction Watch, a blog that reports on retractions of scientific articles.3

When an article is retracted or corrected due to misconduct, how often do scientists state this in the text of the retraction or correction notice? Although several studies have examined retractions, none has addressed this question directly. A recent study of 742 retracted articles published between 2000 and 2010 found that 73.5% were retracted for error and 26.6% for fraud (fabrication, falsification or plagiarism).4 This study also found that the incidence of all retractions is increasing, as is the incidence of retractions due to fraud.4 Of articles retracted ostensibly for error, 18.1% gave ambiguous reasons.4 An earlier study of 395 retracted articles found that 61.9% were retracted for error, 27.1% for misconduct and 11.1% for reasons that could not be categorised.5 Another study of 235 retracted articles found that 38.7% were retracted for error, 36.6% for misconduct or presumed misconduct, 16.2% for inability to replicate results, and 8.5% for other reasons.6 Hence, estimates are available for the proportion of all retracted articles that were retracted because of misconduct. However, these studies did not link retracted articles with actual findings of misconduct. Thus, for the smaller set of retractions (or corrections) published in response to findings of misconduct, there are no estimates of the proportion in which the authors described the misconduct by mentioning ethics or citing a specific ethical problem.

The main objective of our study was to determine, among retractions or corrections of articles affected by misconduct, how frequently scientists mention ethics or describe a specific ethical problem such as fabrication, falsification or plagiarism. A secondary objective was to determine whether the proportion of statements that are forthcoming about ethical problems varies with the type of misconduct (fabrication, falsification or plagiarism), the type of statement (retraction or correction), the journal’s impact factor or the calendar time period (year of publication of the retraction or correction).

To obtain evidence relevant to answering our research questions, we examined cases involving official findings of misconduct closed by the US Office of Research Integrity (ORI) from 1992 to 2011. ORI is responsible for overseeing research integrity in studies conducted or funded by US Public Health Service (PHS) agencies, including the US National Institutes of Health. If a local institution makes a finding of misconduct involving PHS research, it reports the case to the ORI, which reviews the evidence and makes its own determination. When the ORI makes an official finding, the respondent may appeal the case or accept the finding and enter into an agreement with the ORI in which he or she accepts specific sanctions, such as being ineligible to receive federal research funding for a particular period of time (ie, debarment). If there are articles affected by the misconduct, ORI may require the authors to retract or correct those publications as part of the agreement. Usually, official findings involve research misconduct, but in some instances they may only involve other administrative actions.

MATERIALS AND METHODS

The closed cases that we examined were available on the ORI website. Cases closed from 2007 to 2011 were available via the Case Summaries link.7 Cases closed before 2007 were available via the Case Summaries link and the ORI Newsletter link.8 Published ORI closed cases discuss the findings against the respondent and mention various affected documents, including grant applications, clinical research records, informed consent forms, surveys or questionnaires, abstracts presented at scientific meetings, master’s theses or doctoral dissertations, curricula vitae, and published articles. For our study, we focused on articles that were published in the scientific literature and linked to an ORI closed case. We searched for these publications via PubMed, Google and official journal websites. After finding the publication, we searched for a retraction or correction. Most of the time, these were available through a link in PubMed, but sometimes we had to locate the publication by another route and determine whether it had been retracted or corrected.

We developed a coding system for classifying retractions and corrections. We determined whether a retraction or correction: (i) provided a description of the reason for the retraction or correction, (ii) mentioned ethical problems with the publication and (iii) named the specific ethical problem, such as fabrication, falsification or plagiarism. We defined ‘mentioning an ethical problem’ as mentioning specific problems (such as fabrication, falsification or plagiarism) or mentioning general ethical concerns (such as misconduct, lack of integrity or unethical behaviour). We used the US Office of Science and Technology Policy (OSTP) definition of fabrication, falsification and plagiarism, which has been adopted by all federal agencies. The OSTP defines fabrication as ‘making up data or results and recording or reporting them’; falsification as ‘manipulating research materials, equipment, or processes, or changing or omitting data or results such that the research is not accurately represented in the research record’; and plagiarism as ‘the appropriation of another person’s ideas, processes, results, or words without giving appropriate credit.’9 The OSTP definition also states that fabrication, falsification and plagiarism do not include honest errors or differences of opinion.9
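The coding rules above can be summarised as a small record type. The Python sketch below only illustrates the logic, assuming one record per statement; the class and field names are hypothetical, and the actual coding was done by reading each statement, not by software. It makes explicit that, under the definition used here, naming a specific problem is one way of mentioning ethics, so code (ii) can never be ‘no’ when code (iii) is ‘yes’.

```python
from dataclasses import dataclass

# The three specific problems in the OSTP definition.
SPECIFIC_PROBLEMS = frozenset({"fabrication", "falsification", "plagiarism"})


@dataclass(frozen=True)
class CodedStatement:
    """Hypothetical record for one coded retraction or correction statement."""
    gives_reason: bool             # code (i): a reason for the retraction/correction is given
    problems_named: frozenset      # specific problems named in the text, if any
    general_ethics_concern: bool   # e.g. 'misconduct', 'lack of integrity', 'unethical behaviour'

    @property
    def names_specific_problem(self) -> bool:
        # code (iii): fabrication, falsification or plagiarism is named
        return bool(self.problems_named & SPECIFIC_PROBLEMS)

    @property
    def mentions_ethics(self) -> bool:
        # code (ii): a specific problem OR a general ethical concern is mentioned
        return self.names_specific_problem or self.general_ethics_concern


s = CodedStatement(True, frozenset({"falsification"}), False)
print(s.mentions_ethics, s.names_specific_problem)  # True True
```

A statement that mentions only ‘misconduct’ would be coded as mentioning ethics without naming a specific problem.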

After the retractions and corrections had been coded, the coded data were reviewed blindly a second time to ensure consistency. Any discrepancies between the initial and the subsequent coding were resolved.

We also collected data on the year that the retraction or correction was published, the journal’s impact factor at the time, the year of the ORI case report, and the type of misconduct described in the ORI case report: (a) fabrication only, (b) falsification only, (c) plagiarism only, (d) fabrication and falsification only, (e) fabrication and plagiarism only, (f) falsification and plagiarism only or (g) fabrication, falsification and plagiarism.

Our analysis focused on two endpoints: the proportion of published statements that mentioned ethics and the proportion that named a specific ethical problem. We investigated whether these proportions varied with the type of misconduct (plagiarism involved or not), the type of statement published (retraction or correction), the year in which the statement was published and the impact factor of the journal. We treated involvement of plagiarism as a distinct type of misconduct because plagiarism is conceptually different from fabrication and falsification: plagiarism usually involves copying text or figures, whereas fabrication and falsification involve some form of data manipulation. We collected data on journal impact factor because a previous study found that impact factor is positively associated with the development of journal ethics policies.10 Finally, our analysis also adjusted for possible correlations due to the same person being involved in multiple instances of misconduct.
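The collapse of the seven misconduct categories, (a) to (g), into the binary ‘plagiarism involved’ predictor can be written out as below. This Python sketch is illustrative; the dictionary and function names are hypothetical, and the per-category counts are taken from Table 1, where categories (e) and (f) do not appear (the listed counts already sum to 119).

```python
# Categories (a)-(g) from the ORI case reports, as listed in the Methods.
CATEGORIES = {
    "a": {"fabrication"},
    "b": {"falsification"},
    "c": {"plagiarism"},
    "d": {"fabrication", "falsification"},
    "e": {"fabrication", "plagiarism"},
    "f": {"falsification", "plagiarism"},
    "g": {"fabrication", "falsification", "plagiarism"},
}


def plagiarism_involved(category: str) -> int:
    """Binary predictor: 1 if the category includes plagiarism, else 0."""
    return int("plagiarism" in CATEGORIES[category])


# Counts per category from Table 1; (e) and (f) did not occur.
TABLE1_COUNTS = {"a": 5, "b": 53, "c": 7, "d": 48, "g": 6}

n_total = sum(TABLE1_COUNTS.values())
n_plagiarism = sum(n for cat, n in TABLE1_COUNTS.items() if plagiarism_involved(cat))
print(n_plagiarism, n_total)  # 13 119
```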

We used SAS software (V.9.2, SAS Institute, Cary, North Carolina, USA) to perform statistical analyses. Both endpoints of interest were binary: ethics was mentioned (yes/no) and a specific ethical problem was named (yes/no). Our analyses examined associations between several predictors and each of these two endpoints separately. We investigated two binary predictors: type of statement (retraction/correction) and involvement of plagiarism (yes/no); and two continuous predictors: publication year and impact factor. Initially, we assessed the importance of each predictor alone. When evaluating the association between a binary predictor and one of the binary endpoints, the data were summarised in a two-by-two table and statistical significance was judged by applying Fisher’s exact test. When evaluating the association between a continuous predictor and one of the binary endpoints, we used the Mann–Whitney–Wilcoxon (MWW) test to compare the distribution of the continuous predictor across the two levels of the binary endpoint. Finally, we used multiple logistic regression to assess the effect of each predictor (binary or continuous) in the presence of the other predictors in case the importance of one decreased (or increased) after adjusting for another. In this joint regression analysis, we employed generalised estimating equations with a first-order autoregressive correlation matrix to account for possible correlations due to some cases of misconduct involving the same person. All statistical tests were two-sided and were performed at the usual α=0.05 significance level.
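As an illustration of how the Mann–Whitney–Wilcoxon comparison works for a continuous predictor such as publication year, the Python sketch below ranks the pooled values (giving tied years their average rank) and converts the rank sum into a two-sided p value using the normal approximation with the usual tie correction. It is not the authors’ SAS code: it omits the continuity correction that some software applies by default, and the example years are hypothetical, since per-statement years are not reported here.

```python
from math import erfc, sqrt


def midranks(values):
    """Rank values 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i..j
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney(x, y):
    """Return (U for x, two-sided p) using the tie-corrected normal approximation."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    # Tie correction: sum of (t^3 - t) over groups of t tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u1 - n1 * n2 / 2) / sqrt(var_u)
    return u1, erfc(abs(z) / sqrt(2))  # two-sided p = 2 * (1 - Phi(|z|))


# Hypothetical publication years, for illustration only.
mentioned = [2003, 2005, 2006, 2008, 2010]
not_mentioned = [1995, 1998, 2000, 2003, 2004]
u, p = mann_whitney(mentioned, not_mentioned)
print(u, round(p, 3))
```

Here `x` plays the role of the publication years of statements that mentioned ethics and `y` those of statements that did not; U counts the pairs in which the first group’s year is later, with ties counted as one half.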

RESULTS

We reviewed all 208 closed cases involving official findings of misconduct handled by the ORI from 1992 to 2011, of which 75 cited at least one published article affected by the misconduct. These 75 cases cited a total of 174 articles. Of these 174 cited articles, we found both the article and a retraction/correction for 127, the article but no retraction/correction for 27, and neither the article nor a retraction/correction for 20. Since eight of the 127 statements consisted simply of the word ‘retracted,’ our analysis focused on the remaining 119 articles for which a more substantial retraction or correction statement was published.

The 119 statements we examined were published between 1989 and 2011 in journals with impact factors (where available) ranging from 1.25 to 38.86. There were fewer corrections (20.2%, 24/119) than retractions (79.8%, 95/119), and only 10.9% (13/119) of these statements were associated with ORI findings that involved plagiarism. Less than half of the statements (41.2%, 49/119) mentioned ethics at all and only about a third (32.8%, 39/119) named a specific ethical problem (see table 1 for details).
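The sample flow and headline percentages in the two paragraphs above follow directly from the reported counts. The Python sketch below simply recomputes them (no new data are involved), which makes each denominator explicit; the constant names are illustrative.

```python
CITED_ARTICLES = 174
WITH_STATEMENT, ARTICLE_ONLY, NEITHER_FOUND = 127, 27, 20
ONE_WORD_RETRACTIONS = 8

assert WITH_STATEMENT + ARTICLE_ONLY + NEITHER_FOUND == CITED_ARTICLES
analysed = WITH_STATEMENT - ONE_WORD_RETRACTIONS  # statements longer than one word


def pct(count: int, total: int = analysed) -> float:
    """Percentage rounded to one decimal place, as reported in the text."""
    return round(100 * count / total, 1)


counts = {
    "retractions": 95,
    "corrections": 24,
    "plagiarism involved": 13,
    "mentioned ethics": 49,
    "named a specific problem": 39,
}
for label, k in counts.items():
    print(f"{label}: {k}/{analysed} = {pct(k)}%")
```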

Table 1.

Distribution of variables among 119 published statements regarding retractions or corrections of scientific articles associated with ORI findings of misconduct

| Variable | Range | Median | Mean (SD) |
|---|---|---|---|
| Year statement was published | 1989–2011 | 2003 | 2002.2 (5.7) |
| Journal impact factor* | 1.25–38.86 | 7.15 | 10.33 (8.69) |

| Variable | Category | N† (%) |
|---|---|---|
| Type of statement | Retraction | 95 (79.8) |
| | Correction | 24 (20.2) |
| Type of misconduct | Plagiarism only | 7 (5.9) |
| | Fabrication only | 5 (4.2) |
| | Falsification only | 53 (44.5) |
| | Fabrication and falsification | 48 (40.3) |
| | All three types | 6 (5.0) |
| Did statement mention ethics? | Yes | 49 (41.2) |
| | No | 70 (58.8) |
| Did statement name a specific ethical problem? | Yes | 39 (32.8) |
| | No | 80 (67.2) |

*Only available for 112 of the 119 publications.

†N=sample size.

ORI, Office of Research Integrity.

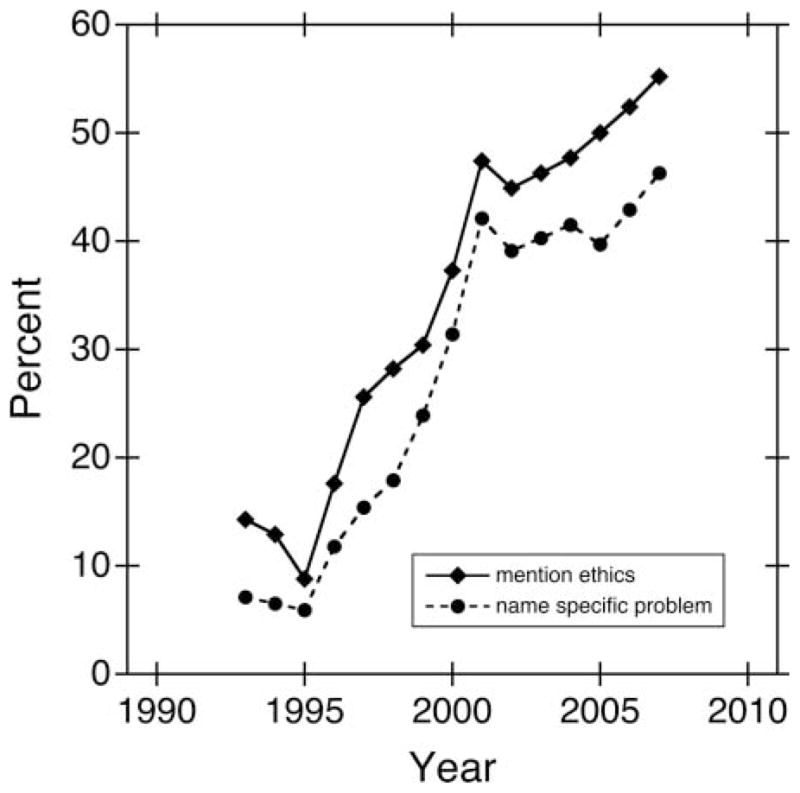

Within the time period studied, the proportion of statements that mentioned ethics was higher in recent years than in earlier years (figure 1). On average, the date of publication of the retraction or correction notice was more than 4 years later for statements mentioning ethics (late 2004) than for statements not mentioning ethics (early 2000, p<0.001, MWW test). Also, the proportion of statements that mentioned ethics was higher among retractions (47.4%) than among corrections (16.7%, p=0.010, Fisher’s exact test). However, neither journal impact factor nor involvement of plagiarism was predictive of ethics being mentioned. After simultaneously adjusting for all predictors and accounting for multiple cases involving the same person, the year in which the retraction or correction was published remained statistically significant (p=0.008) but the type of statement (retraction or correction) did not (p=0.188) (see table 2 for details).
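The retraction-versus-correction comparison above is a two-by-two table whose cell counts can be back-calculated from the reported figures: 47.4% of 95 retractions is 45, 16.7% of 24 corrections is 4, and 45 + 4 = 49 statements mentioned ethics. The Python sketch below applies a two-sided Fisher’s exact test to that table, using the common convention of summing the probabilities of all tables with the same margins that are no more likely than the observed one. It is an illustration, not the authors’ SAS analysis, and it has not been checked against their output.

```python
from math import comb


def table_prob(a, row1, col1, n):
    """Hypergeometric probability that the top-left cell equals a, given fixed margins."""
    return comb(col1, a) * comb(n - col1, row1 - a) / comb(n, row1)


def fisher_exact_two_sided(table):
    """Two-sided Fisher's exact test for a 2x2 table [[a, b], [c, d]]."""
    (a, b), (c, d) = table
    row1, col1, n = a + b, a + c, a + b + c + d
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    p_obs = table_prob(a, row1, col1, n)
    # Small relative tolerance so tables with numerically equal probability are counted.
    p = sum(
        table_prob(x, row1, col1, n)
        for x in range(lo, hi + 1)
        if table_prob(x, row1, col1, n) <= p_obs * (1 + 1e-7)
    )
    return min(p, 1.0)


# Rows: retractions, corrections; columns: ethics mentioned (yes, no).
ethics_by_type = [[45, 95 - 45], [4, 24 - 4]]
print(round(fisher_exact_two_sided(ethics_by_type), 3))
```

The same function applies to the specific-problem endpoint, whose table back-calculates to [[36, 59], [3, 21]] (37.9% of 95 and 12.5% of 24).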

Figure 1.

Proportion of retractions and corrections mentioning ethics or naming a specific problem over time. A simple moving average based on a window width of 9 years provides a smoothed estimate of the percent of retractions and corrections that mentioned ethics (solid curve, diamonds) or named a specific ethical problem (dashed curve, circles) as a function of the year in which the retraction or correction was published.
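The smoothing described in the Figure 1 caption can be sketched as follows. The caption does not say whether the 9-year average is taken over annual percentages or over individual statements; this Python sketch assumes the latter (the percentage of all statements published within 4 years either side of a given year) and uses hypothetical input, so it illustrates the idea rather than reproducing the figure.

```python
def centred_moving_percent(records, width=9):
    """Percentage of flagged statements in a centred window of `width` years.

    records: iterable of (year, flag) pairs, where flag is True if the statement
    mentioned ethics (or named a specific problem). Returns {year: percent} for
    every year in which at least one statement was published.
    """
    records = list(records)
    half = width // 2
    result = {}
    for year in sorted({y for y, _ in records}):
        window = [flag for y, flag in records if abs(y - year) <= half]
        result[year] = 100 * sum(window) / len(window)
    return result


# Hypothetical data for illustration only.
example = [(2000, False), (2003, True), (2006, True)]
print(centred_moving_percent(example))
```

Pooling statements within the window gives each statement equal weight; averaging annual percentages instead would give each year equal weight, which matters when some years contain very few statements.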

Table 2.

Estimated regression coefficients (β) and p values (p) under a multiple logistic regression model for the proportion of articles mentioning ethics or naming a specific ethical problem*

| Explanatory variable | β (ethics was mentioned) | p Value (ethics was mentioned) | β (a specific ethical problem was named) | p Value (a specific ethical problem was named) |
|---|---|---|---|---|
| Plagiarism was involved (1=yes, 0=no) | −0.932 | 0.456 | −0.425 | 0.746 |
| Type of statement published (1=retraction, 0=correction) | 0.468 | 0.188 | 0.608 | 0.168 |
| Year statement was published (1989–2011) | 0.132 | 0.008 | 0.192 | 0.008 |
| Journal impact factor (1.25–38.86) | 0.023 | 0.597 | 0.066 | 0.252 |

*For each endpoint, the logistic model included a linear term for each explanatory variable (plus an overall mean) and it assumed a first-order autoregressive correlation matrix. Seven of the 119 articles were excluded because no impact factor was available for the journal in which the publication appeared.
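Because the coefficients in Table 2 are on the log-odds scale, exponentiating a coefficient gives the estimated multiplicative change in the odds of the endpoint per one-unit change in the predictor, holding the other predictors fixed. The short Python sketch below does this for the publication-year coefficients; it is only arithmetic on the reported β values, and the per-decade figure additionally assumes that the linear-in-year model holds across the whole 1989–2011 range.

```python
from math import exp

# Publication-year coefficients from Table 2 (log-odds per calendar year).
BETA_YEAR = {"ethics mentioned": 0.132, "specific problem named": 0.192}


def odds_ratio(beta: float, units: float = 1.0) -> float:
    """Multiplicative change in the odds for a change of `units` in the predictor."""
    return exp(beta * units)


for endpoint, beta in BETA_YEAR.items():
    print(f"{endpoint}: OR per year {odds_ratio(beta):.2f}, "
          f"per decade {odds_ratio(beta, 10):.1f}")
```

On these point estimates, the odds of a statement mentioning ethics rise by roughly 14% per year (about 3.7-fold over a decade), and the odds of naming a specific problem by roughly 21% per year (about 6.8-fold over a decade).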

Similarly, the proportion of statements that went further and actually named a specific ethical problem was higher in recent years than in earlier years (figure 1). The average publication date was roughly 4 years later for statements that named a specific ethical problem (late 2004) than for statements that did not (late 2000, p=0.001, MWW test). There was marginal evidence that the average impact factor was lower for statements that named a specific ethical problem (8.8) than for statements that did not (11.0, p=0.024, MWW test) and that the proportion of statements naming a specific ethical problem was higher among retractions (37.9%) than among corrections (12.5%, p=0.027, Fisher’s exact test), but plagiarism was not predictive of a specific problem being named. After simultaneously adjusting for all predictors and accounting for within-person correlations, the year in which the retraction or correction was published was still statistically significant (p=0.008), but impact factor (p=0.252) and statement type (p=0.168) were no longer significant (see table 2 for details).

Finally, rather than examining the proportion of statements that named a specific ethical problem among all 119 statements, we focused on the subset of 49 statements that mentioned ethics. Conditional on having mentioned ethics at all, 79.6% (39/49) of the statements went on to name a specific ethical problem and 20.4% (10/49) did not. Given that ethics was mentioned, the proportion of statements naming a specific ethical problem did not depend on any of the predictors considered (plagiarism, type of statement, year of statement or impact factor).
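Because, under the coding definition, every statement that named a specific ethical problem also mentioned ethics, the overall proportion naming a specific problem factors into the proportion mentioning ethics multiplied by this conditional proportion:

$$
\frac{39}{119} \;=\; \frac{49}{119} \times \frac{39}{49} \;\approx\; 0.412 \times 0.796 \;\approx\; 0.328
$$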

DISCUSSION

The most important finding of our study is that scientists frequently do not fully and honestly explain why an article associated with research misconduct is being retracted or corrected. Of the articles that were retracted or corrected after an ORI finding of misconduct (with more than a one-word retraction statement), only 41.2% indicated that misconduct (or some other ethical problem) was the reason for the retraction or correction, and only 32.8% identified the specific ethical concern (such as fabrication, falsification or plagiarism). In some cases, authors appear to have misstated the reasons for retraction or correction, describing the problem as error, loss of data or failure to replicate results when, in fact, misconduct was at issue. Of the 103 retraction notices we found (the 95 analysed above plus the eight one-word notices), 7.8% (8/103) consisted of a bare notice of retraction without any further explanation. (A list of all the articles we examined is available upon request.)

While admitting publicly that one has been associated with an article involving research misconduct can be embarrassing, one could argue that authors should fully explain why an article is being retracted or corrected, especially when misconduct by at least one of the authors is involved. Honesty and transparency require scientists to tell the whole truth when retracting or correcting an article, so that others can evaluate their work and decide whether parts of the research unaffected by misconduct can be trusted and whether any of the coauthors are at fault.2, 11

Besides concerns about embarrassment, one reason why retraction or correction notices may fail to mention misconduct or other ethical concerns related to the article is that authors and journal editors may be wary of legal liability. If the misconduct investigation has not concluded when the retraction or correction is published, the authors or editors may be concerned that the accused party could sue them for libel, because mentioning misconduct in the retraction or correction notice may constitute a false accusation. To deal with these legal concerns, authors and editors should only mention misconduct in a retraction or correction notice when there has been an official finding of misconduct.

Journal policies can play an important role in promoting honesty and transparency in retraction or correction notices. Unfortunately, it appears that few journals have policies pertaining to retractions or corrections. One study found that only 17% of 122 high-impact biomedical journals had policies regarding retractions or corrections.11 The retraction policy adopted by journals published by the American Society for Microbiology, for example, states that retractions are appropriate for ‘major errors or breaches of ethics that, for example, may call into question the source of the data or the validity of the results and conclusions of an article.’12 The policy also states that a retraction must be accompanied by an explanatory letter signed by all authors.12

In our opinion, all journals should adopt policies on retractions and corrections to promote greater honesty and transparency. The Committee on Publication Ethics (COPE) has developed extensive guidelines for retractions and corrections, which can serve as a model for journal policies.13 COPE recommends that editors consider retracting articles when there is clear evidence that the findings are unreliable due to misconduct or honest error. Editors may also retract articles that duplicate work previously published elsewhere without proper cross-referencing or justification (ie, redundant publication). Corrections are appropriate when only a small portion of an otherwise reliable publication is misleading. Retraction notices should be freely available, published promptly in electronic and print versions, and electronically linked to the retracted article. Retraction notices should state who is retracting the article and why (eg, for misconduct or error). Journal editors may retract articles even if some or all of the authors do not agree to the retraction.13

Another troubling finding of our study is that, of the 174 articles associated with research misconduct, 27 (15.5%) appear to have been published but never retracted or corrected. These articles are still available in the scientific literature but are not marked by a notice of retraction or correction on the journal’s website or in research databases, despite official findings of misconduct. While it is possible that in some instances the misconduct associated with these articles did not undermine the reliability of the data or the conclusions, and therefore did not warrant a retraction or correction, this explanation is not likely to be true in all cases. Thus, scientists may be relying on articles for which there have been official findings of misconduct.

Although only journals have the authority to retract or correct articles, we also encourage funding agencies and institutions to take steps to ensure that articles affected by misconduct are retracted or corrected. ORI could take legal action against researchers who do not honour an agreement to retract or correct an article associated with an official finding of misconduct. If an individual has been debarred for 5 years, ORI could make debarment indefinite if the individual does not make a good faith effort to honour the agreement. Institutions that make findings of misconduct could also require employees to retract or correct articles. If an employee refuses to meet such requirements, the institution could terminate his or her employment. ORI and institutions could also inform the journal that an article is associated with misconduct, if the authors do not retract it.

An encouraging finding of our study is that retractions and corrections pertaining to articles associated with misconduct appear to be becoming more honest and transparent over time. Within the timeframe studied, the proportion of retractions and corrections that mentioned ethics was significantly higher in recent years than in earlier years, as was the proportion that named a specific problem. One possible explanation for this trend is that scientists are becoming more aware of the importance of protecting the integrity of the research as a result of efforts by federal agencies, journals, universities and others to promote responsible conduct in research, and they are therefore deciding to write retractions and corrections with greater candour. Alternatively, this trend may simply reflect a change in the behaviour of editors. These possible explanations are speculation on our part, however, and more research is needed on how efforts to promote research integrity are affecting publication practices.

One limitation of our study is that we drew our articles from ORI cases involving official findings of misconduct, and this sample may not be representative of the larger collection of all articles associated with research misconduct. Cases that reach ORI may involve more egregious transgressions than cases that do not reach ORI. However, we have no way of addressing that issue because institutional misconduct proceedings are usually kept confidential to protect the rights of the accused and others involved.

In addition, because ORI cases involve biomedical research funded by the PHS (mostly National Institutes of Health studies), our sample may not be representative of articles published in the physical, engineering or social sciences, since the pressure to obtain funding and produce results may be more intense in biomedicine. Biomedical researchers may also have more conflicts of interest than researchers in other scientific disciplines. While we recognise this as an important limitation, we do not think it significantly affected our results. Researchers in the physical, engineering and social sciences also face significant pressures related to funding and the need to produce results, and they too must deal with conflicts of interest. Moreover, biomedical researchers share norms, values and professional interests with researchers in other disciplines. Thus, we believe that the behaviour of biomedical researchers with regard to publication ethics is not markedly different from the behaviour of scientists in general. Others might argue, however, that higher funding levels may induce more research misconduct in biomedicine than in other scientific disciplines, which could potentially limit the generalisability of our results.

Another limitation of our study is that the sample size was small, which limited the statistical power of the analysis. With a larger sample of retractions and corrections resulting from misconduct, we might have been able to detect statistically significant associations that were missed in this sample. While we recognise the importance of this issue, we were limited by the available data, namely the closed cases involving official findings of misconduct published by ORI. Although the National Science Foundation also publishes closed misconduct cases, these do not include enough detail to locate specific articles associated with misconduct.14 We are not aware of any other public databases of research misconduct that would allow us to collect data relevant to our study.

Footnotes

Contributors DBR designed the study, collected the data, interpreted the results and drafted the manuscript. GED analysed the data, interpreted the results and drafted the manuscript.

Disclaimer This article is the work product of employees of the National Institute of Environmental Health Sciences (NIEHS), National Institutes of Health (NIH); however, the statements, opinions or conclusions contained herein do not necessarily represent the statements, opinions or conclusions of NIEHS, NIH or the United States government.

Competing interests None.

Provenance and peer review Not commissioned; externally peer reviewed.

References

- 1. Claxton LD. Scientific authorship. Part 1. A window into scientific fraud? Mutat Res. 2005;589:17–30. doi: 10.1016/j.mrrev.2004.07.003.

- 2. Van Noorden R. Science publishing: the trouble with retractions. Nature. 2011;478:26–8. doi: 10.1038/478026a.

- 3. Retraction Watch. http://retractionwatch.wordpress.com/ (accessed 23 Mar 2012).

- 4. Steen RG. Retractions in the scientific literature: is the incidence of research fraud increasing? J Med Ethics. 2011;37:249–53. doi: 10.1136/jme.2010.040923.

- 5. Nath SB, Marcus SC, Druss BG. Retractions in the research literature: misconduct or mistakes? Med J Aust. 2006;185:152–4. doi: 10.5694/j.1326-5377.2006.tb00504.x.

- 6. Budd JM, Sievert M, Schultz TR, et al. Effects of article retraction on citation and practice in medicine. Bull Med Libr Assoc. 1999;87:437–43.

- 7. ORI. Case Summaries. http://ori.hhs.gov/case_summary (accessed 21 Feb 2012).

- 8. ORI. Newsletter. http://ori.hhs.gov/newsletters (accessed 21 Feb 2012).

- 9. OSTP. Federal research misconduct policy. Federal Register. 2000;65:76262.

- 10. Resnik DB, Patrone D, Peddada S, et al. Research misconduct policies of social science journals and impact factor. Account Res. 2010;17:79–84. doi: 10.1080/08989621003641181.

- 11. Atlas MC. Retraction policies of high-impact biomedical journals. J Med Libr Assoc. 2004;92:242–50.

- 12. Journal of Virology. Instructions to Authors. http://jvi.asm.org/site/misc/journal-ita_org.xhtml#06 (accessed 17 Apr 2012).

- 13. Committee on Publication Ethics. Retraction guidelines. http://www.publicationethics.org/files/retraction%20guidelines.pdf (accessed 25 Jun 2012).

- 14. National Science Foundation. Search Case Closeout Memoranda. http://www.nsf.gov/oig/search/ (accessed 23 Mar 2012).