Abstract

Mathematical models have long been used for prediction of dynamics in biological systems. Recently, several efforts have been made to render these models patient specific. One way to do so is to employ techniques to estimate parameters that enable model-based prediction of observed quantities. Knowledge of variation in parameters within and between groups of subjects has the potential to provide insight into biological function. Often it is not possible to estimate all parameters in a given model, in particular if the model is complex and the data are sparse. However, it may be possible to estimate a subset of model parameters, reducing the complexity of the problem. In this study, we compare three methods that allow identification of parameter subsets that can be estimated given a model and a set of data. These methods are used to estimate patient specific parameters in a model predicting baroreceptor feedback regulation of heart rate during head-up tilt. The three methods are: structured analysis of the correlation matrix, analysis via singular value decomposition followed by QR factorization, and identification of the subspace closest to the one spanned by eigenvectors of the model Hessian. Results showed that all three methods facilitate identification of a parameter subset. The "best" subset was obtained using the structured correlation method, though this method was also the most computationally intensive. Subsets obtained using the other two methods were easier to compute, but analysis revealed that the final subsets contained correlated parameters. In conclusion, to avoid lengthy computations, these three methods may be combined for efficient identification of parameter subsets.

Keywords: Parameter estimation, inverse problem, subset selection, simulation and modeling, nonlinear heart rate model, medical applications, patient specific models

1 Introduction

Mathematical models have long been used to better understand cardiovascular dynamics, but only recently have they been used in conjunction with analysis of patient specific data. Some efforts have been made to develop patient specific models for prediction of blood flow and pressure in networks of arteries [37,51,52,62,69]. Most of these models use patient specific geometry to predict blood flow and pressure in arterial vessels or networks, e.g., in preparation for or when studying the impact of a given surgery. For example, a model could use blood flow values obtained from magnetic resonance measurements combined with a 3D fluid dynamics model to predict the distribution of flow and wall-shear stress in a given artery before and after inserting a stent. While this type of model provides excellent predictions in specified domains, it cannot easily be expanded to analyze dynamics associated with the full system. An example of a systems-level model is one that predicts the impact of blood pressure changes in response to a stress challenge such as head-up tilt. Models designed for this purpose typically utilize compartmental formulations, and thus ordinary differential equations. While compartment models have been around for a long time (e.g., Guyton's elaborate model of the overall physiological control system developed in 1972 [26]), only a few attempts have been made to integrate models with clinical studies. One problem is that systems models often strive to incorporate as many physiological principles as possible, enabling excellent prediction of the system dynamics, but at the expense of complex models that describe population rather than patient specific dynamics. One way to render models patient specific is to use them to describe the essential mechanisms governing the dynamics, and to combine these with parameter estimation, allowing prediction of a set of parameters and associated initial conditions.
This approach is based on the assumption that, for all subjects studied, the system dynamics can be modeled by the same biological mechanisms, but that the system may be tuned differently for each subject, reflecting the different strengths of the individual mechanisms constituting the system.

Knowledge of how parameters change within and between groups of subjects has the potential to improve diagnosis and treatment strategies. For example, in [47] we showed that in aging and hypertension the afferent baroreflex gain is reduced, the sympathetic delay is increased, and the parasympathetic dampening of the sympathetic response is increased. Moreover, we showed that modulation of these parameters allowed us to "treat" the model in place of the patient, making the healthy elderly young, and the hypertensive elderly healthy. These parameters reflect quantities that are desirable to assess but cannot easily be measured directly.

Estimation of model parameters involves solution of the inverse problem: given a model and data, estimate the model parameters [3]. This problem is difficult to solve, and typically no unique analytical or numerical solution can be found [71]. Therefore, most studies addressing parameter estimation techniques use examples involving a "correct" model, good initial parameter values, and a comprehensive set of data, see e.g., [12]. In practice, however, only some parameters can be estimated given a model and available observations. Determining which parameters can be estimated reliably given a model and observations is the task of parameter identification [45]. Following suggestions by Liang [40], we propose to use sensitivity and correlation analysis for this purpose.

More specifically, we test three parameter identification methods using a nonlinear differential equations model developed to predict regulation of heart rate during head-up tilt. This model was chosen because it contains complex nonlinear dynamics, a large number of parameters, and sparse data. Data for this model are considered sparse since only one output quantity (heart rate) is measured, though it is sampled at a high temporal resolution. The model is complex since it contains nonlinear dynamics as well as several time scales, including fast inter-beat dynamics and slow dynamics associated with changes observed during head-up tilt. The model is developed from first principles describing the underlying mechanisms, with model parameters representing physiological quantities including gain and timing associated with the firing of afferent neurons, and time scales related to changes in the neurotransmitters acetylcholine and noradrenaline.

The article is organized as follows. In section 2 we introduce and discuss three parameter identification methods and the parameter estimation procedure. Section 3 derives the example model, discusses how simulated data are generated, and shows results of applying the three parameter identification methods to the proposed model. Finally, in section 4 we discuss our findings.

2 Parameter identification and estimation

Parameters are identifiable if it is possible to determine their values given a model and a complete set of data [3,45]. However, for most systems data are not available for all states, and even given data for all states, the model may not be structurally identifiable [45]. For many systems only a subset of model outputs can be measured, and therefore only a subset of parameters may be identifiable. Parameters identified from partial data are sometimes denoted conditionally identifiable [45,56,71].

The history of parameter identifiability and estimation is voluminous. Some of the first discussions of identifiability and estimability can be found in early work by Koopmans and Reiersol [39] and Fisher [21], who introduced these concepts in statistics and econometrics. Subsequently, development took place in the field of system theory in engineering, in particular for applications described by linear state space models [2,43]. Along with these developments, theories and refined concepts took form. Berman and Schoenfeld [7] were among the first to address the actual identifiability problem, although they did not use this term. The term 'structural identifiability' appeared for the first time in 1970, in a paper by Bellman and Astrom [6] on compartmental systems. This paper spun off a stream of papers on identifiability in the 1970s and 1980s; see for example [1,11,13,25,32]. These methods are still discussed extensively today (see e.g., [58,61,64,68] and the recent review by Miao et al. [45] that summarizes seminal contributions to parameter identification).

Structural identifiability provides a priori information about the model parameters. It is a necessary but not sufficient condition for successful parameter estimation from real data, which are typically incomplete (only a limited number of states can be measured, though they may be measured with a high resolution) and noisy. The fact that the model may not be "correct" further complicates the concept [61,64]. Thus, a number of practical identifiability methods have recently been proposed, as summarized in the review by Miao et al. [45], and methods for analysis of sensitivities and confidence intervals have been discussed in the chapter by Banks et al. [3], though more work is needed to obtain efficient and reliable methods. Parameter identification must be accompanied by reliable methods for estimation of model parameters. A large number of techniques exist, including Monte Carlo methods [28,29,68], various forms of principal component methods [55], local [33,35,53,56,63] and global [33,44,59,61] methods, as well as Kalman filtering methods [4,8,31].

In this study, we devise three techniques for practical identification of parameter subsets and apply them to a mathematical model predicting heart rate regulation during head-up tilt [46,48,49]. The heart rate model is described by a system of nonlinear delay differential equations that cannot be solved in closed form. Therefore, we use numerical techniques to solve the differential equations and the associated parameter identification and estimation problems. The model uses measured blood pressure data as an input and predicts heart rate as an output. In agreement with Ljung [42], we use the term "subset selection" to denote the process of identifying a parameter subset that can be estimated given the model and a data set, and the term "parameter estimation" to denote the optimization involved in computing parameter values that minimize the least squares error between computed and measured outputs. The latter is done using both simulated and real values of heart rate.

All parameter identification methods discussed here are derived for models that can be described by a nonlinear system of differential equations of the form

$$\frac{dx}{dt} = f(t, x; \theta), \tag{1}$$

where $f : \mathbb{R}^{1+n+q} \to \mathbb{R}^n$, $t \in \mathbb{R}$ denotes time, $x \in \mathbb{R}^n$ denotes the state vector, and $\theta \in \mathbb{R}^q$ the parameter vector. Associated with the states we assume an output vector $y \in \mathbb{R}^m$ corresponding to the available data. We assume that this output can be computed algebraically as a function of the time $t$, the states $x$, and the model parameters $\theta$, i.e.,

$$y = g(t, x; \theta), \tag{2}$$

where $g : \mathbb{R}^{1+n+q} \to \mathbb{R}^m$. Associated with each component of the model output $y$ we assume the existence of a set of data $Y$ sampled at times $t_i$, where the sampling rate may vary between output components. To solve the inverse problem, we assume that the model outputs can be evaluated at the times where the data are sampled. The data and the corresponding model outputs are collected in the vectors

$$Y = (Y_1, Y_2, \ldots, Y_K)^T, \tag{3}$$

$$y = (y_1, y_2, \ldots, y_K)^T, \tag{4}$$

where $Y_\ell$ and $y_\ell$ denote the $\ell$'th component of the vectors $Y$ and $y$, respectively, $\ell = 1, \ldots, K$, and $K$ denotes the total number of observations.

Given the model (1) and its associated output (2), for all ℓ, we assume that the ℓ’th component of the data satisfies the statistical model

$$Y_\ell = y_\ell(\theta) + \varepsilon_\ell, \tag{5}$$

where $\varepsilon_\ell$ denotes the measurement error associated with $y_\ell$. We assume that the errors can be represented by independent identically distributed random variables with zero mean and finite variance [3,66]. Given this assumption, parameters may be estimated by solving the inverse problem in the least squares sense, i.e., by minimizing the least squares error between computed and measured values of the model output; if the errors are in addition normally distributed, this coincides with the maximum likelihood estimate. The least squares error $J$, which also approximates the model variance $\sigma^2$, is given by

$$J = \frac{1}{K - q} R^T R = \frac{1}{K - q} \sum_{\ell=1}^{K} r_\ell^2, \tag{6}$$

where $R = (r_1, r_2, \ldots, r_K)^T$, $q$ is the number of parameters, and $r_\ell$ denotes the model residual for the $\ell$'th observation. The latter is defined by

$$r_\ell = Y_\ell - y_\ell(\theta). \tag{7}$$
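As a concrete illustration of (5)-(7), the sketch below generates synthetic observations from a hypothetical two-parameter output $y(t) = a e^{-bt}$ (not the heart rate model) and evaluates $J$ at the true parameters. Under the i.i.d. error assumption, $J$ should then be close to the noise variance $\sigma^2$. The function names are illustrative only.

```python
import numpy as np

def residuals(Y, y_model):
    """Residuals r_l = Y_l - y_l(theta), cf. (7)."""
    return np.asarray(Y, dtype=float) - np.asarray(y_model, dtype=float)

def least_squares_cost(Y, y_model, q):
    """J = R^T R / (K - q), cf. (6); estimates the error variance sigma^2."""
    R = residuals(Y, y_model)
    K = R.size
    if K <= q:
        raise ValueError("need more observations than parameters (K > q)")
    return float(R @ R) / (K - q)

# Hypothetical toy output y(t) = a * exp(-b t); NOT the heart rate model.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 5.0, 200)                      # sampling times t_i
a, b = 2.0, 0.7
y_true = a * np.exp(-b * t)
sigma = 0.05
Y = y_true + sigma * rng.standard_normal(t.size)   # statistical model (5)

J = least_squares_cost(Y, y_true, q=2)
print(f"J = {J:.5f}, sigma^2 = {sigma**2:.5f}")
```

Dividing by $K - q$ rather than $K$ makes $J$ an unbiased variance estimate for linear models; for $K \gg q$ the difference is negligible.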

2.1 Sensitivity analysis

Classically [18,22], the sensitivity of the model output $y$ to the model parameters $\theta$ is computed from the so-called sensitivity matrix

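$$S = \frac{\partial y}{\partial \theta}, \qquad S_{\ell,i} = \frac{\partial y_\ell}{\partial \theta_i}, \quad \ell = 1, \ldots, K, \; i = 1, \ldots, q. \tag{8}$$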

Sensitivities can be computed analytically [65], using automatic differentiation [16], or using finite differences [53]. For simple problems, the first two approaches are preferable, since they are more accurate, but for complex problems, it is often necessary to use finite differences to compute the sensitivities.
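A minimal forward-difference sketch of (8) is given below. It assumes a hypothetical callable `model(theta)` returning the $K$ model outputs at the sampling times; for an ODE model this callable would wrap the numerical solver, and the accuracy of the resulting sensitivities is then limited by the solver tolerance as well as by the step size. The test function is an algebraic toy model chosen so that the columns of $S$ can be compared with analytic derivatives.

```python
import numpy as np

def sensitivity_matrix(model, theta, h=1e-6):
    """Forward-difference approximation of S[l, i] = dy_l/dtheta_i, cf. (8).

    model: hypothetical callable mapping a length-q parameter vector to
    the K model outputs at the sampling times.
    """
    theta = np.asarray(theta, dtype=float)
    y0 = np.asarray(model(theta), dtype=float)
    S = np.empty((y0.size, theta.size))
    for i in range(theta.size):
        step = h * max(1.0, abs(theta[i]))       # step scaled with parameter size
        theta_step = theta.copy()
        theta_step[i] += step
        S[:, i] = (np.asarray(model(theta_step), dtype=float) - y0) / step
    return S

# Toy model y(t) = a * exp(-b t) with theta = (a, b); analytic S for comparison.
t = np.linspace(0.0, 5.0, 50)
model = lambda th: th[0] * np.exp(-th[1] * t)
theta = np.array([2.0, 0.7])
S = sensitivity_matrix(model, theta)
S_exact = np.column_stack([np.exp(-theta[1] * t),
                           -theta[0] * t * np.exp(-theta[1] * t)])
print(np.max(np.abs(S - S_exact)))   # small: truncation error is O(h)
```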

For a given parameter and a given model output, the sensitivities $S_i$, $i = 1, \ldots, q$ (the columns of the matrix (8)), reflect how sensitive the model output is to changes in the value of the parameter at the instances of measurement. Since model parameters and model outputs may have different units, it may be advantageous to compute relative sensitivities, defined as

$$\tilde{S}_{\ell,i} = \frac{\partial y_\ell}{\partial \theta_i} \frac{\theta_i}{y_\ell}. \tag{9}$$

Furthermore, it may be difficult to compare parameter sensitivities directly across the various instances of measurement. Consequently, we compute ranked sensitivities ($\bar{S}_i$, $i = 1, \ldots, q$) by imposing a norm across all points (along each column of $S$ or $\tilde{S}$). To do so, we use the two-norm, i.e., we define

$$\bar{S}_i = \|S_i\|_2 = \left( \sum_{\ell=1}^{K} S_{\ell,i}^2 \right)^{1/2}. \tag{10}$$

It should be noted that ranked sensitivities can also be computed from the relative sensitivities S̃.
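The relative sensitivities (9) and ranked sensitivities (10) amount to a column scaling followed by a column norm. The sketch below assumes nonzero model outputs $y_\ell$ (required by (9)) and uses the hypothetical toy model $y(t) = a e^{-bt}$, for which the relative sensitivities are known in closed form: 1 for $a$ and $-bt$ for $b$.

```python
import numpy as np

def relative_sensitivities(S, theta, y):
    """S~[l, i] = S[l, i] * theta_i / y_l, cf. (9); assumes all y_l != 0."""
    return S * np.asarray(theta)[None, :] / np.asarray(y)[:, None]

def ranked_sensitivities(S):
    """Two-norm of each column of S (or S~), cf. (10)."""
    return np.linalg.norm(S, axis=0)

# Toy model y(t) = a * exp(-b t), theta = (a, b), with analytic S.
t = np.linspace(0.0, 5.0, 50)
theta = np.array([2.0, 0.7])
y = theta[0] * np.exp(-theta[1] * t)
S = np.column_stack([np.exp(-theta[1] * t),
                     -theta[0] * t * np.exp(-theta[1] * t)])

S_rel = relative_sensitivities(S, theta, y)
S_bar = ranked_sensitivities(S_rel)
order = np.argsort(S_bar)[::-1]          # indices from most to least sensitive
print(S_bar, order)
```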

The resulting ranked sensitivities can be sorted from the most to the least sensitive, and parameters may be separated into two groups with $\rho$ sensitive and $q - \rho$ insensitive parameters. Insensitive parameters cannot be estimated; consequently, the subset of identifiable parameters should be found among the sensitive parameters.

To our knowledge, no theoretically based technique exists to separate sensitive from insensitive parameters. In a recent paper [63], the authors used relative sensitivity analysis combined with analysis of measurement uncertainties to identify sensitive parameters. In that study the parameters separated naturally into two distinct groups (sensitive and insensitive parameters). However, for the heart rate model studied here, the ranked sensitivities approximately follow a straight line when depicted on a log scale, without any clear demarcation (see Fig. 4).

Fig. 4.

Normalized ranked sensitivities computed for the log-scaled parameters. Note that sensitivities decrease almost linearly and that all sensitivities are larger than the computational bound of $10^{-3}$.

We therefore used the computational accuracy to separate "sensitive" from "insensitive" parameters: if the differential equations are solved numerically with an absolute error $\mathcal{O}(10^{-\chi})$ and sensitivities are computed using finite differences, then the error of the sensitivities is $\mathcal{O}(10^{-\chi/2})$ [54]. Parameters with sensitivities smaller than this bound should be included in the set of insensitive parameters. The insensitive parameters should not be included in further analysis since, as discussed by Gutenkunst et al. [29,30], they can give rise to "sloppy" models.
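The accuracy-based cutoff described above can be sketched as follows. The sketch assumes the ranked sensitivities have already been computed and are on the scale that the bound refers to (e.g., normalized as in Fig. 4); the parameter names and numbers in the example are made up for illustration and are not results for the heart rate model.

```python
import numpy as np

def split_by_accuracy(S_bar, chi, names):
    """Split parameters by the finite-difference error bound 10**(-chi/2),
    appropriate when the ODEs are solved with absolute error ~10**(-chi)."""
    bound = 10.0 ** (-chi / 2.0)
    sensitive = [n for n, s in zip(names, S_bar) if s > bound]
    insensitive = [n for n, s in zip(names, S_bar) if s <= bound]
    return sensitive, insensitive, bound

# Illustrative (made-up) ranked sensitivities; solver error ~1e-6, so bound 1e-3.
names = ["p1", "p2", "p3", "p4", "p5"]
S_bar = np.array([0.9, 0.2, 4e-3, 5e-4, 1e-6])
sensitive, insensitive, bound = split_by_accuracy(S_bar, chi=6, names=names)
print(bound, sensitive, insensitive)
```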

When using the sensitivity analysis proposed here with a nonlinear model, one should be aware that the analysis is local, i.e., that results depend on the actual values of the parameters. Thus, the better the initial estimates of the parameters, the more accurate the analysis. If the method is used for parameters with a high uncertainty, it may be beneficial to repeat the analysis for multiple parameter samples, e.g., using a global sensitivity method as proposed in [38]. In addition, sensitivity analysis could be repeated using optimized parameter values. It should be kept in mind that we use this analysis to identify insensitive parameters; thus it is particularly important to investigate whether insensitive parameters remain insensitive when the parameters are sampled at different values.

2.2 Subset selection

In addition to being insensitive, parameters may be correlated, see [14,32,45,63] and references therein. As discussed by Miao et al. [45], two classes of methods can be used to reveal parameter correlations: structural identification, which is applied directly to the model (without data) to analyze whether the model parameters can be identified given the system of equations, i.e., given information about all states defined by the model; and practical identification, which aims at determining a subset of parameters that can be identified given the model and available data. Structural identifiability is a necessary condition for practical identifiability, though typically, as we do here, the model is assumed to be structurally identifiable. Thus, the aim is to devise practical identifiability methods. The importance of practical identifiability stems from the fact that for many "real" models only partial data are available. Thus, even if the model is structurally identifiable, it may only be possible to estimate a small subset of model parameters, and it may be of biological importance to determine which parameters can be included in this subset.

In this study, we identify and estimate a subset of uncorrelated parameters given a model that predicts heart rate regulation during head-up tilt. This model uses measured blood pressure data as an input to predict heart rate dynamics. The model is formulated as a system of 5 differential equations (described later) coupled through a number of model parameters. Thus, the objective is to determine how many, and which, model parameters can be estimated given the model output (heart rate).

We compare three methods that can easily be applied to practical problems. The first method is based on structured analysis of correlations computed from the covariance matrix (this method is similar to the one described in section 4.2 of the review by Miao et al. [45]); the second method (discussed in [53]) uses singular value decomposition of the sensitivity matrix $S$ followed by QR factorization; and the third method is based on minimizing distances between parameter subspaces and the subspace spanned by eigenvectors of the model Hessian (this method is a variant of the orthogonal method described in section 5.3 of [45]). Below we describe each of these methods in detail.

2.2.1 Structured correlation analysis

The structured correlation subset selection method is adapted from methods described by Daun et al. [14], Jacquez [32], and Miao et al. [45]. As a point of departure, this method uses the model Hessian [64] (a symmetric positive semidefinite matrix, positive definite when $S$ has full column rank, sometimes referred to as the Fisher information matrix [12]), which for problems with constant variance $\sigma^2$ can be defined as $\mathcal{H} = \sigma^{-2} S^T S$.

The basic structure to be analyzed is the correlation matrix $c$, which can be computed from the model covariance matrix $C = \mathcal{H}^{-1}$ as

$$c_{i,j} = \frac{C_{i,j}}{\sqrt{C_{i,i}\, C_{j,j}}}, \quad i, j = 1, \ldots, q. \tag{11}$$

Note, the matrix C only exists if det(ℋ) ≠ 0.

Analysis of the correlation matrix $c$ reveals parameter pairs that are correlated. In general, a parameter pair $(i, j)$ is considered correlated if $|c_{i,j}| > \gamma$ for a threshold $\gamma$ close to 1. However, no theoretical result defines an actual value for $\gamma$. In the analysis conducted here we have investigated results for $\gamma \in [0.85, 0.95]$.
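Equation (11) and the threshold test translate directly into a few lines of linear algebra. The sketch below assumes $S$ has full column rank (so that $\mathcal{H}$ is invertible) and uses a hypothetical sensitivity matrix whose first two columns are nearly collinear, so that the corresponding parameters come out strongly correlated. The factor $\sigma^{-2}$ cancels in $c$ and is therefore omitted.

```python
import numpy as np

def correlation_matrix(S):
    """c_ij = C_ij / sqrt(C_ii C_jj) with C = H^{-1}, H = S^T S, cf. (11).
    The factor sigma^{-2} in H cancels in c and is therefore omitted."""
    C = np.linalg.inv(S.T @ S)        # raises LinAlgError if H is singular
    d = np.sqrt(np.diag(C))
    return C / np.outer(d, d)

def correlated_pairs(c, gamma=0.9):
    """Index pairs (i, j), i < j, with |c_ij| > gamma."""
    q = c.shape[0]
    return [(i, j) for i in range(q) for j in range(i + 1, q)
            if abs(c[i, j]) > gamma]

# Hypothetical S: column 1 is almost twice column 0; column 2 is constant.
t = np.linspace(0.0, 1.0, 100)
S = np.column_stack([t, 2.0 * t + 0.01 * np.cos(7.0 * t), np.ones_like(t)])
c = correlation_matrix(S)
pairs = correlated_pairs(c, gamma=0.9)
print(np.round(c, 3), pairs)
```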

In addition to providing information about pairwise correlations, the covariance matrix $C$ can be used to compute the standard error [12] of each estimated parameter $\hat{\theta}_k$, defined by

$$\mathrm{SE}_k = \sqrt{C_{k,k}}, \quad k = 1, \ldots, q,$$

and the confidence interval, which at the $100(1 - \alpha)\%$ level can be computed as

$$\left[\hat{\theta}_k - t_{1-\alpha/2}\, \mathrm{SE}_k,\; \hat{\theta}_k + t_{1-\alpha/2}\, \mathrm{SE}_k\right],$$

where $\alpha \in [0, 1]$ and $t_{1-\alpha/2} \in \mathbb{R}^+$. Given a small value of $\alpha$ (e.g., $\alpha = 0.05$ for 95% confidence intervals), the critical value $t_{1-\alpha/2}$ is computed from Student's t-distribution $t_{K-q}$ with $K - q$ degrees of freedom. The value of $t_{1-\alpha/2}$ is determined by the probability $P\{T \ge t_{1-\alpha/2}\} = \alpha/2$, where $T \sim t_{K-q}$. For problems where $K$ is large ($K \gg 40$), the number of degrees of freedom may be approximated by $\infty$, and for $\alpha = 0.05$ the critical value is $t_{1-\alpha/2} \approx 1.96$. If a confidence interval were constructed for each possible realization of data of size $K$ that could have been collected, $100(1 - \alpha)\%$ of these intervals would contain the true parameter value $\theta_k$. Thus, confidence intervals provide further information on the extent of uncertainty involved in estimating $\theta$ using the given estimator and sample size $K$. However, it should be noted that the confidence intervals are statistically optimistic due to the use of a linear approximation of the nonlinear model in the neighborhood of the best parameter estimates [15,61]. Similarly, the standard error can be used to test whether model parameters are significantly different from zero. This can be done by testing if

$$\frac{|\hat{\theta}_k|}{\mathrm{SE}_k} > t_{1-\alpha/2}.$$
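The standard errors, confidence intervals, and significance test can be sketched with NumPy and SciPy's Student's t-distribution (`scipy.stats.t.ppf`). The sketch assumes $C = J\,(S^T S)^{-1}$, i.e., $\mathcal{H} = \sigma^{-2} S^T S$ with $\sigma^2$ replaced by its estimate $J$ from (6); the example is a made-up one-parameter problem whose answer is easy to check by hand.

```python
import numpy as np
from scipy import stats

def standard_errors(S, J):
    """SE_k = sqrt(C_kk), with C = (S^T S / J)^{-1} and J estimating sigma^2."""
    C = np.linalg.inv(S.T @ S / J)
    return np.sqrt(np.diag(C))

def confidence_intervals(theta_hat, se, K, alpha=0.05):
    """100(1 - alpha)% intervals theta_hat -/+ t_{1-alpha/2} * SE."""
    q = len(theta_hat)
    t_crit = stats.t.ppf(1.0 - alpha / 2.0, df=K - q)   # Student's t, K - q dof
    return theta_hat - t_crit * se, theta_hat + t_crit * se, t_crit

# Made-up example: K = 1000 observations, one parameter with S = 1 everywhere.
K = 1000
S = np.ones((K, 1))
theta_hat = np.array([0.5])
se = standard_errors(S, J=4.0)                    # sqrt(4 / 1000)
lower, upper, t_crit = confidence_intervals(theta_hat, se, K)
significant = np.abs(theta_hat) / se > t_crit     # test against zero
print(se, t_crit, lower, upper, significant)
```

With $K - q = 999$ degrees of freedom the critical value is already within 0.01 of the large-sample value 1.96.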

A number of subsets can be generated from analysis of the correlation matrix. In this study we used a structured approach to identify correlated parameters. The result of this analysis is a tree that maps parameter correlations. Correlations found among the original set of parameters are listed at the root of the tree, and at each subsequent generation one (correlated) parameter is eliminated from the parameter set. This parameter is considered a constant that is kept fixed at its a priori value. The specific structure of the tree depends on the problem, the set of parameters, and the value of $\gamma$ used to decide whether a given pair of parameters is correlated.

Branching continues until there are no more correlated parameters in the parameter set. We have chosen to sort correlated parameters from the least to the most sensitive; consequently, the left-most vertex in the tree contains the most sensitive uncorrelated parameters (i.e., the least sensitive of the correlated parameters have been eliminated), while the right-most vertex is obtained by eliminating the most sensitive parameters. An example of such a tree is illustrated in Fig. 5 for the heart rate model analyzed here. The process of generating the tree is outlined in the following algorithm:

Fig. 5.

Subsets computed using the structured correlation method. Parameters noted in the tree are those appearing in pairwise correlations. Brackets below the tree show the actual parameters in each subset.

Structured covariance analysis

1. Compute $c$ using (11) and identify all correlated parameters, i.e., parameter pairs for which $|c_{i,j}| > \gamma$.
2. Calculate $\bar{S}_k$, $k = 1, \ldots, q$, using (10) and sort parameters according to their sensitivity. List the correlated parameters, ordered from the least to the most sensitive, at the next available level and position in the tree. The first set of correlated parameters is placed at the root of the tree.
3. Remove the least sensitive correlated parameter from $\theta$ and recompute $c_{i,j}$ with the reduced parameter set (this set can easily be created by deleting the corresponding column of $S$, see (8)). The parameter removed from $\theta$ is kept fixed at its a priori value.
4. Return to step 1 until $|c_{i,j}| < \gamma$ for all $i \ne j$.
5. When no more correlations are present, record the subset and go back one level in the tree. If there are more parameters to analyze at that level, choose the next parameter and continue from step 1 along this sub-branch.
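The tree construction can be sketched as a short recursive function. This is a minimal illustration under stated assumptions rather than the implementation used for Fig. 5: `S` has full column rank, the ranked sensitivities are supplied as a dictionary `S_bar` (hypothetical values in the example), branches are visited from the least to the most sensitive correlated parameter so that the left-most leaf is returned first, and leaves reached along different branches are reported once.

```python
import numpy as np

def corr_from_S(S):
    """Correlation matrix (11) computed from C = (S^T S)^{-1}."""
    C = np.linalg.inv(S.T @ S)
    d = np.sqrt(np.diag(C))
    return C / np.outer(d, d)

def correlation_tree(S, params, S_bar, gamma=0.9):
    """Return the leaf subsets of the correlation tree, left-most first.

    S: sensitivity matrix, one column per name in `params`.
    S_bar: dict mapping parameter name -> ranked sensitivity.
    """
    c = corr_from_S(S)
    q = len(params)
    involved = {params[i] for i in range(q) for j in range(q)
                if i != j and abs(c[i, j]) > gamma}
    if not involved:                        # no correlations left: record subset
        return [tuple(params)]
    leaves = []
    # Branch on each correlated parameter, least sensitive first; the removed
    # parameter is treated as fixed at its a priori value.
    for name in sorted(involved, key=lambda n: S_bar[n]):
        keep = [k for k, p in enumerate(params) if p != name]
        for leaf in correlation_tree(S[:, keep], [params[k] for k in keep],
                                     S_bar, gamma):
            if leaf not in leaves:
                leaves.append(leaf)
    return leaves

# Hypothetical example: b is nearly parallel to a, c is independent of both.
E = np.eye(10)
S = np.column_stack([E[:, 0], E[:, 0] + 0.05 * E[:, 1], E[:, 2]])
S_bar = {"a": 0.5, "b": 0.8, "c": 0.3}
print(correlation_tree(S, ["a", "b", "c"], S_bar))   # [('b', 'c'), ('a', 'c')]
```

The number of branches can grow quickly with the number of correlated parameters, which is consistent with this method being the most computationally intensive of the three.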

With this method a correlation tree is formed. Rather than showing a general tree we refer to the tree shown in Fig. 5, generated using the heart rate model discussed in section 3.

2.2.2 Singular value decomposition followed by QR factorization

Subset selection via singular value decomposition followed by QR factorization (SVD-QR) follows ideas put forward by Golub and van Loan [23,24] and has been used in various applications, see Velez-Reyes [67], Burth et al. [10], and Pope et al. [53,54]. The last three studies used the method in models predicting blood pressure and flow. Ideas used here are also related to the eigenvalue method described in section 5.4 of the paper by Miao et al. [45].

The SVD-QR method is based on analysis of singular values obtained by decomposing the sensitivity matrix $S$ as $S = U \Sigma V^T$, where $U$ and $V$ contain the left and right singular vectors, and $\Sigma$ is a diagonal matrix of singular values. The singular values are used to identify the number of parameters $\rho$ that can be included in the subset. This is done by computing the numerical rank of $\Sigma$. Subsequently, QR decomposition with column pivoting (at each step choosing the remaining column of maximal norm) is used to determine which $\rho$ parameters can be identified. Similar to the sensitivity analysis, the numerical rank $\rho$ can be found by analyzing the computational accuracy of the singular values. The SVD-QR subset selection method can be summarized in the following algorithm:

Subset selection using SVD-QR

1. Given a set of parameters $\theta$, compute the sensitivity matrix $S$ using (8) and use singular value decomposition to write $S = U \Sigma V^T$, where $\Sigma$ is a diagonal matrix containing the singular values of $S$ in decreasing order, and $U$ and $V$ are orthogonal matrices containing the left and right singular vectors.
2. Determine $\rho$, the numerical rank of the sensitivity matrix $S$.
3. Partition the matrix $V$ of right singular vectors as $V = [V_\rho \; V_{q-\rho}]$, where $V_\rho$ contains the first $\rho$ columns.
4. Determine a permutation matrix $P$ by constructing a QR decomposition with column pivoting of $V_\rho^T$, see [23]. That is, determine $P$ such that $V_\rho^T P = QR$, where $Q$ is an orthogonal matrix and the first $\rho$ columns of $R$ form an upper triangular matrix with diagonal elements decreasing in magnitude.
5. Use $P$ to re-order the parameter vector $\theta$ according to $\hat{\theta} = P^T \theta$.
6. Make the partition $\hat{\theta} = [\hat{\theta}_\rho \; \hat{\theta}_{q-\rho}]$. The subset of identifiable parameters is $\hat{\theta}_\rho$, the first $\rho$ elements of $\hat{\theta}$.
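The SVD-QR steps map onto `numpy.linalg.svd` and SciPy's pivoted QR (`scipy.linalg.qr` with `pivoting=True`, which returns a permutation index vector rather than the matrix $P$). In this sketch the numerical rank defaults to the count of singular values above a tolerance borrowed, as an assumption, from the NumPy `matrix_rank` convention; in the text $\rho$ is instead chosen by analyzing computational accuracy, so it can also be passed in explicitly. The example uses a hypothetical sensitivity matrix in which parameter b is nearly parallel to a.

```python
import numpy as np
from scipy.linalg import qr

def svd_qr_subset(S, rho=None, tol=None):
    """Subset selection by SVD followed by QR with column pivoting.

    Returns (indices of the rho selected parameters in pivot order, rho).
    """
    _, sv, Vt = np.linalg.svd(S, full_matrices=False)   # S = U diag(sv) V^T
    if rho is None:
        if tol is None:                                  # assumed default tolerance
            tol = max(S.shape) * np.finfo(float).eps * sv[0]
        rho = int(np.sum(sv > tol))                      # numerical rank
    V_rho_T = Vt[:rho, :]                   # V_rho^T: leading right singular vectors
    _, _, piv = qr(V_rho_T, pivoting=True)  # V_rho^T[:, piv] = Q R
    return [int(i) for i in piv[:rho]], rho

E = np.eye(10)
S = np.column_stack([E[:, 0], E[:, 0] + 0.05 * E[:, 1], E[:, 2]])  # a, b, c
selected, rho = svd_qr_subset(S, rho=2)
print(selected)      # indices into (a, b, c)
```

Here the pivoting keeps c and only one of the nearly parallel pair a, b.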

2.2.3 Subspace selection

The subspace selection method is based on an analysis similar to the SVD-QR method. However, instead of using singular values to identify the number of identifiable parameters, this method is based on computing eigenvalues of the model Hessian $\mathcal{H} = S^T S$ (the factor $\sigma^{-2}$ used in section 2.2.1 is omitted, as it does not change the eigenvectors), which can be decomposed as $\mathcal{H} = W \Lambda W^T$, where $\Lambda$ is a diagonal $q \times q$ matrix with the eigenvalues on the diagonal and the columns of $W$ contain the associated eigenvectors. Similar to the SVD-QR method, we sort the eigenvalues from highest to lowest and partition them and their associated eigenvectors into two groups: eigenvalues $\{\lambda_1, \ldots, \lambda_\rho\}$ and $\{\lambda_{\rho+1}, \ldots, \lambda_q\}$, and the corresponding eigenvectors $\{w_1, \ldots, w_\rho\}$ and $\{w_{\rho+1}, \ldots, w_q\}$. These two groups are associated with parameters that can be identified (the first group) and those that cannot. We denote the subspace spanned by the first $\rho$ eigenvectors $\mathcal{W}_a = \mathrm{span}\{w_1, \ldots, w_\rho\}$. The corresponding matrix $W_a = [w_1, \ldots, w_\rho]$, which has orthonormal columns, spans the identifiable subspace, and its dimension $\rho$ gives the number of identifiable parameters. Hence, $W_a W_a^T$ is the orthogonal projection matrix onto the subspace $\mathcal{W}_a$.

Among all $\rho$-dimensional subspaces spanned by canonical basis vectors, the one closest to $\mathcal{W}_a$ defines the subset of identifiable parameters. To find it, we consider the subspaces spanned by $\rho$ canonical basis vectors, $\mathcal{E} = \{\mathrm{span}\{e_{i_1}, \ldots, e_{i_\rho}\} : 1 \le i_1 < \ldots < i_\rho \le q\}$. The number of subspaces in $\mathcal{E}$ is $\binom{q}{\rho} = \frac{q!}{\rho!\,(q - \rho)!}$.

Next, we define a metric on the set of all subspaces of dimension $\rho$. Let $X$ and $Y$ be arbitrary subspaces of $\mathbb{R}^q$ such that $\dim(X) = \dim(Y) = \rho$, and let $P_X$ and $P_Y$ be the orthogonal projections onto $X$ and $Y$, respectively. The distance between $X$ and $Y$ is defined as $\mathrm{dist}(X, Y) = \|P_X - P_Y\|_2$, where the norm is the operator two-norm, see [23]. Note that dist defines a metric on the set of subspaces of the same dimension. If $X = \mathrm{image}(W_X)$ and $Y = \mathrm{image}(W_Y)$ for matrices $W_X$ and $W_Y$ with orthonormal columns, then $P_X = W_X W_X^T$ and $P_Y = W_Y W_Y^T$, while $I - W_X W_X^T$ is an orthogonal projection matrix onto $X^\perp$ and $I - W_Y W_Y^T$ is an orthogonal projection matrix onto $Y^\perp$. In particular, $\mathrm{dist}(X, Y) = \|(I - W_Y W_Y^T) W_X\|_2$.
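The distance $\mathrm{dist}(X, Y)$ can be checked numerically. The sketch below assumes $W_X$ and $W_Y$ have orthonormal columns, computes the distance both from the definition $\|P_X - P_Y\|_2$ and from the complement form $\|(I - W_Y W_Y^T) W_X\|_2$, and uses two lines in $\mathbb{R}^2$ at an angle $\varphi$, for which the distance equals $\sin\varphi$.

```python
import numpy as np

def subspace_distance(WX, WY):
    """dist(X, Y) = ||P_X - P_Y||_2 for X = range(WX), Y = range(WY).

    WX, WY: q x rho matrices with orthonormal columns (same rho).
    """
    return np.linalg.norm(WX @ WX.T - WY @ WY.T, 2)

def subspace_distance_complement(WX, WY):
    """Equivalent form ||(I - WY WY^T) WX||_2 for equal-dimension subspaces."""
    q = WX.shape[0]
    return np.linalg.norm((np.eye(q) - WY @ WY.T) @ WX, 2)

phi = np.pi / 6
WX = np.array([[1.0], [0.0]])                    # span{e1}
WY = np.array([[np.cos(phi)], [np.sin(phi)]])    # line at angle phi
d1 = subspace_distance(WX, WY)
d2 = subspace_distance_complement(WX, WY)
print(d1, d2, np.sin(phi))
```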

The subset of identifiable parameters is then given by the subspace spanned by the canonical basis vectors that is closest to $\mathcal{W}_a$. This subspace is found by minimizing $\mathrm{dist}(\mathcal{W}_a, E_{(i)_\rho})$ over $E_{(i)_\rho} \in \mathcal{E}$. Here the subscript $(i)_\rho$ represents an ordered $\rho$-dimensional multi-index $(i)_\rho = (i_1, \ldots, i_\rho)$ with $1 \le i_1 < \ldots < i_\rho \le q$. The minimization may be done by exhaustive search over all $\binom{q}{\rho}$ candidate subspaces.

In summary, the subspace selection algorithm can be written as:

Subspace selection

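1. Given a set of parameters $\theta$, compute the sensitivity matrix $S$ using (8) and the model Hessian $\mathcal{H} = S^T S$. Then use eigenvalue decomposition to write $\mathcal{H} = W \Lambda W^T$, with eigenvalues sorted from highest to lowest.
2. Determine $\rho$, the numerical rank of the eigenvalue matrix $\Lambda$.
3. Partition the matrix of eigenvectors as $W = [W_\rho \; W_{q-\rho}]$.
4. Compute $\mathcal{W}_a = \mathrm{span}\{w_1, \ldots, w_\rho\}$, the space spanned by the columns of $W_\rho$.
5. Collect all subspaces of dimension $\rho$ spanned by canonical basis vectors, $E_{(i)_\rho} = \mathrm{span}\{e_{i_1}, \ldots, e_{i_\rho}\}$.
6. Compute the distances $d_{(i)_\rho} = \mathrm{dist}(\mathcal{W}_a, E_{(i)_\rho})$.
7. Find the smallest distance $d = \min_{(i)_\rho} d_{(i)_\rho}$; the identifiable parameters are $\theta_{i_1}, \ldots, \theta_{i_\rho}$, i.e., those associated with the canonical basis vectors spanning the minimizing subspace.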
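The subspace selection algorithm can be sketched as an exhaustive search over all $\binom{q}{\rho}$ coordinate subspaces, which is feasible for moderate $q$. The sketch assumes $\rho$ is given (for example from the numerical rank of $\Lambda$) and uses a hypothetical sensitivity matrix in which parameter b is nearly parallel to a; `numpy.linalg.eigh` returns eigenvalues in ascending order, so they are re-sorted from highest to lowest.

```python
import itertools
import numpy as np

def subspace_selection(S, rho):
    """Return (indices of the selected parameters, minimal distance)."""
    H = S.T @ S                                   # model Hessian (up to sigma^-2)
    lam, W = np.linalg.eigh(H)                    # ascending eigenvalues
    Wa = W[:, np.argsort(lam)[::-1][:rho]]        # eigenvectors of the rho largest
    Pa = Wa @ Wa.T                                # projection onto W_a
    q = S.shape[1]
    best, best_d = None, np.inf
    for idx in itertools.combinations(range(q), rho):   # all E_(i)rho
        E = np.eye(q)[:, list(idx)]
        d = np.linalg.norm(Pa - E @ E.T, 2)       # dist(W_a, E_(i)rho)
        if d < best_d:
            best, best_d = list(idx), d
    return best, best_d

E10 = np.eye(10)
S = np.column_stack([E10[:, 0], E10[:, 0] + 0.05 * E10[:, 1], E10[:, 2]])  # a, b, c
selected, d = subspace_selection(S, rho=2)
print(selected, round(d, 4))
```

The minimal distance stays large (about 0.71) because the dominant eigenvector mixes a and b almost equally, a numerical sign that the chosen subset still contains a direction shared with an excluded, correlated parameter.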

2.2.4 Concluding remarks

When estimating a subset of model parameters, the question arises of what to do with the remaining parameters not included in the subset. We have chosen to keep both insensitive parameters (which have little impact on the model output) and correlated parameters fixed at their a priori values. While this approach may not impact the predicted system dynamics, keeping these parameters fixed reduces the order of the model but may bias the values obtained for the remaining estimated parameters [9,57]. Estimating more parameters will decrease the bias, but if too many parameters are estimated the variance will increase; as discussed in the studies by Thompson et al. [63] and Wu et al. [70], the goal is to balance the bias and the variance, allowing estimation with a small prediction error.

Two steps were taken to reduce the bias. The first involves investigation of the a priori knowledge for a given parameter: if two parameters are correlated, and an initial value can be estimated with high fidelity (from data) for one of these, then it may be reasonable to keep this parameter at its a priori value while estimating the more uncertain parameter. The second applies if two correlated sensitive parameters are both uncertain: the bias can then be reduced by fixing the less sensitive parameter. Alternatively, these two parameters could be estimated using a Monte Carlo based approach.

Moreover, it is essential to develop a strategy for analysis of parameters over multiple datasets. The objective could either be to estimate the best set of parameters that minimize the least squares error over all data, or to estimate a parameter subset for each dataset. If the objective is to use statistical analysis to understand how parameters change within or between datasets, it is essential that the same parameters are estimated for all datasets. As discussed in the study by Pope et al. [53], a common subset can be obtained by including parameters appearing with the highest frequency.

Finally, the methods may be combined. To do so, we propose to first use either the SVD-QR or the subspace method to estimate a subset of parameters. If the subset contains correlated parameters, then the least sensitive among them may be kept fixed at its a priori value, i.e., the original parameter set is reduced. Subsequently, the SVD-QR or subspace method is repeated on the reduced parameter set. These steps are continued iteratively until no more correlations can be detected.

2.3 Parameter estimation

All three methods described above lead to identification of a subset of parameters that can be estimated given the model and available data. We used a trust-region variant of the gradient-based Gauss-Newton optimization method to estimate parameters that minimize the least squares error $J$ defined in (6). Gauss-Newton is an iterative method [33] that at each iteration uses the solution of a local linear approximation of the model to compute the next iterate. When a subset of uncorrelated parameters is identified, convergence can be predicted theoretically [35], even if the initial parameter estimates are far from the solution, and for parameters near the solution, theory predicts fast convergence. In general, this method provides more accurate estimates if parameters are of the same order of magnitude. One way to ensure this is to scale parameters, e.g., using logarithmic scaling: instead of estimating $\theta$ one estimates $\tilde{\theta} = \log(\theta)$. This approach is used for the example discussed below. Finally, it should be noted that if gradients cannot be computed from the model, one could use the Nelder-Mead simplex method, implicit filtering, or genetic algorithms to estimate the model parameters. Advantages and disadvantages of these methods are discussed in the books by Kelley [33,34].
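A minimal sketch of log-scaled estimation follows. It does not reproduce the authors' optimizer: as a stand-in, it uses SciPy's `scipy.optimize.least_squares` with `method="trf"`, a trust-region method whose steps are built from the Jacobian of the residuals (a Gauss-Newton-type model). The optimizer works on $\tilde{\theta} = \log(\theta)$, and the toy model $y(t) = a e^{-bt}$ with synthetic data is hypothetical.

```python
import numpy as np
from scipy.optimize import least_squares

# Synthetic data from a hypothetical toy model y(t) = a * exp(-b t).
t = np.linspace(0.0, 5.0, 200)
theta_true = np.array([2.0, 0.7])
rng = np.random.default_rng(1)
Y = theta_true[0] * np.exp(-theta_true[1] * t) + 0.01 * rng.standard_normal(t.size)

def residuals_log(log_theta):
    """Residuals Y - y(theta) as a function of log-scaled parameters."""
    a, b = np.exp(log_theta)
    return Y - a * np.exp(-b * t)

fit = least_squares(residuals_log, x0=np.log([1.0, 0.2]), method="trf")
theta_hat = np.exp(fit.x)                             # back to original scale
J = np.sum(fit.fun ** 2) / (t.size - theta_hat.size)  # cost (6)
print(theta_hat, J)
```

Optimizing in log space also keeps the parameters positive without explicit bounds.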

3 Example: Heart rate regulation

Heart rate is one of the most important quantities controlled by the body to maintain homeostasis. The control of heart rate is mainly achieved by the autonomic nervous system, involving a number of subsystems that operate on several time and length scales. One of the major contributors to autonomic regulation of heart rate is the baroreflex system, which operates on a fairly fast time scale (seconds). Baroreflex control consists of three parts: an afferent part, a control center, and an efferent part, see Fig. 1. The afferent baroreceptor neurons respond to changes in stretch of the arterial wall, modulated through changes in arterial pressure. The afferent neurons terminate in the nucleus of the solitary tract (NTS) within the medulla, where sympathetic and parasympathetic outflows are generated. Parasympathetic outflow travels along the vagus nerve, whereas sympathetic outflow travels via a vast network of interconnected neurons. The main neurotransmitters involved in modulating heart rate are acetylcholine, released by the vagal nerves, and noradrenaline, released from the postganglionic sympathetic nerves.

Fig. 1.

Afferent baroreceptor nerves in the carotid sinus and the aorta are stimulated in response to changes in stretch of the arterial wall, and consequently by changes in arterial pressure. Afferent signals are integrated in the NTS, where sympathetic and parasympathetic efferent signals are generated. These travel to the heart (among other organs), where heart rate is either stimulated or inhibited.

Clinically, baroreflex regulation of heart rate is assessed by imposing postural challenges such as head-up tilt or sit-to-stand. In this study we adapt a model (outlined in Fig. 2), developed in previous studies [47,48,50], to study heart rate dynamics during head-up tilt. The objective is to use the three methods discussed above to identify and estimate a subset of model parameters given our model, input blood pressure data, and a set of simulated heart rate data. Simulated data are used to ensure that the parameter estimation method converges with a least squares error close to zero.

Fig. 2.

Model components. Mean blood pressure, age, and resting heart rate are used as inputs to the model. The model consists of 5 components: a module predicting averaged arterial blood pressure, a module predicting the baroreflex firing rate, modules predicting sympathetic and parasympathetic outflow, and a heart rate module. Dependencies between modules are marked by arrows.

For this study we assume that changes in arterial wall stretch are proportional to changes in the filtered blood pressure p̅, computed as

$$\frac{d\bar{p}}{dt} = \alpha\,(p - \bar{p}) \tag{12}$$

where p is obtained from pressure data. Using the averaged blood pressure, we predict the afferent firing rate n as

$$n = N + n_1 + n_2,$$

where N denotes a baseline firing rate and ni denotes the firing rate of fibers of type i, computed as

| (13) |

Here M denotes the maximum firing rate, κi the gain, dp̅/dt denotes the time derivative of the average pressure and τi, i = 1,2 are time scales [49,50].

The afferent firing rate n is integrated in the NTS, where sympathetic fsym and parasympathetic fpar outflows are generated. To account for complexity of the sympathetic pathway, we predict the sympathetic outflow as a function of n(t − τd), where τd is a constant time delay. The delay along the parasympathetic pathway is negligible, and consequently the parasympathetic outflow is modeled directly as a function of n. The resulting equations predicting sympathetic and parasympathetic outflow are given by [49,50]

The next step involves prediction of the concentration of neurotransmitters acetylcholine Cach and noradrenaline Cnor, which can be obtained from

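$$\frac{dC_{ach}}{dt} = \frac{f_{par} - C_{ach}}{\tau_{ach}} \tag{14}$$

$$\frac{dC_{nor}}{dt} = \frac{f_{sym} - C_{nor}}{\tau_{nor}} \tag{15}$$

where τach and τnor are time scales [49,50]. Finally, we compute heart rate as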

$$h = h_0\,\left(1 + m_{nor}\,C_{nor} - m_{ach}\,C_{ach}\right) \tag{16}$$

where h0 denotes the intrinsic heart rate and mnor and mach are factors attenuating/accentuating impact of neurotransmitters on the heart rate.
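To make the heart rate module concrete, the sketch below integrates the concentration equations with forward Euler for constant outflows fpar and fsym. It assumes that (14) and (15) are first-order relaxations dC/dt = (f − C)/τ, consistent with the steady-state initial conditions Cach(0) = fpar(0) and Cnor(0) = fsym(0) stated below, and that (16) has the form h = h0(1 + mnor Cnor − mach Cach). Default values are the nominal values of Table 1; the outflow values and function names are hypothetical.

```python
import numpy as np


def heart_rate_module(f_par, f_sym, tau_ach=0.5, tau_nor=0.5, h0=1.67,
                      m_nor=0.96, m_ach=0.70, dt=1e-3, t_end=10.0):
    """Forward-Euler integration of the assumed forms of (14)-(16) for constant outflows."""
    c_ach, c_nor = 0.0, 0.0                      # hypothetical initial concentrations
    n_steps = int(round(t_end / dt))
    h = np.empty(n_steps + 1)                    # heart rate in beats/sec
    h[0] = h0 * (1.0 + m_nor * c_nor - m_ach * c_ach)
    for k in range(n_steps):
        c_ach += dt * (f_par - c_ach) / tau_ach  # dC_ach/dt = (f_par - C_ach)/tau_ach
        c_nor += dt * (f_sym - c_nor) / tau_nor  # dC_nor/dt = (f_sym - C_nor)/tau_nor
        h[k + 1] = h0 * (1.0 + m_nor * c_nor - m_ach * c_ach)
    return c_ach, c_nor, h


def extreme_heart_rates(h0=1.67, m_nor=0.96, m_ach=0.70):
    """Heart rates (beats/min) for C_nor = 1, C_ach = 0 and for C_ach = 1, C_nor = 0."""
    return 60.0 * h0 * (1.0 + m_nor), 60.0 * h0 * (1.0 - m_ach)


c_ach, c_nor, h = heart_rate_module(f_par=0.6, f_sym=0.1)
print(c_ach, c_nor, 60.0 * h[-1])
print(extreme_heart_rates())
```

After 20 time constants the concentrations have relaxed to the outflows. With the nominal values, the extreme heart rates are about 196 beats/min (full sympathetic drive) and about 30 beats/min (full parasympathetic drive).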

In terms of the general theory outlined in section 2, n = 5 corresponds to the five model states x = (p̅, n1, n2, Cach, Cnor) (here n denotes the number of states, not the firing rate), m = 1 corresponds to the single computed output (heart rate) y = h, and the data vector is y = (y1(t1), y1(t2), …, y1(tK))ᵀ. The model has 14 parameters (q = 14) given by

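$$\theta = (\alpha, N, M, \kappa_1, \kappa_2, \tau_1, \tau_2, \tau_{ach}, \tau_{nor}, \beta, h_0, m_{nor}, m_{ach}, \tau_d)^T \tag{17}$$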

It should be noted that this model is formulated as a system of delay differential equations, whereas the method presented earlier is formulated for a system of ordinary differential equations. We solved this system with RADAR5, a Fortran code developed by Guglielmi and Hairer [27], using the parameters and initial conditions described below.

Initial conditions. The initial condition for the mean pressure p̅(0) defined in (12) is set to the mean resting pressure obtained from data. For the firing rates defined by (13), we assume that n1(0) = n2(0) = 0, i.e., the total firing rate is n(0) = N. We also assume that this firing rate has been maintained over the preceding delay interval, i.e., ni(t) = 0 for i = 1,2 and −τd ≤ t ≤ 0. Consequently,

On average, the concentrations of noradrenaline Cnor and acetylcholine Cach defined in (14–15) are not changing initially, implying that Cach(0) = fpar(0) and Cnor(0) = fsym(0). Finally, we assume that the heart rate at rest h(0), used to predict the heart rate h defined in (16), can be obtained from data.

Model parameters. The model parameters given in Table 1 are obtained partly from the literature and partly from patient specific calculations. We assume that the maximum heart rate (beats/min), hM = 217 − 0.85 · age, is obtained when n = 0, i.e., for fpar = 0 and fsym = 1. Hence, with hM converted to beats/sec to match the units of h0,

$$m_{nor} = \frac{h_M}{h_0} - 1.$$

Table 1.

Nominal and estimated model parameters. The first two rows list all 14 parameters and their units; parameters marked n.d. are non-dimensional. The following rows show the initial (nominal) parameter values, the values estimated using simulated data (estimated parameters marked in bold), and the values estimated using real data. The estimated parameters are θ = {N, κ1, τ1, τ2, τach, τnor, h0, mnor, mach}.

| | α | N | M | κ1 | κ2 | τ1 | τ2 | τach | τnor | β | h0 | mnor | mach | τd |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Unit | Hz | Hz | Hz | mmHg⁻¹ | mmHg⁻¹ | sec | sec | sec | sec | n.d. | beats/sec | n.d. | n.d. | sec |
| Initial | 1.5 | 75 | 120 | 1.5 | 1.5 | 0.5 | 250 | 0.5 | 0.5 | 6 | 1.67 | 0.96 | 0.70 | 7 |
| Simulated data | 1.5 | **74.9** | 120 | **1.504** | 1.5 | **0.511** | **248** | **0.509** | **0.507** | 6 | **1.67** | **0.959** | **0.699** | 7 |
| Real data | 1.5 | 115 | 120 | 0.945 | 1.5 | 0.896 | 229 | 0.634 | 0.558 | 6 | 2.17 | 0.963 | 0.555 | 7 |

Similarly, the minimum heart rate hm is obtained when n = M, i.e., fpar = 1 and fsym = 0. Consequently,

$$m_{ach} = 1 - \frac{h_m}{h_0}.$$

The parameter N is obtained such that at rest, the heart rate h defined in (16) equals that found at t = 0. Using the above definitions for mnor and mach we get

3.1 Experimental and simulated data

To compare the three subset selection methods, we used simultaneous measurements of heart rate and blood pressure obtained by Dr. Mehlsen, Frederiksberg Hospital, Denmark. Data are sampled at 100 Hz during head-up tilt in a healthy young subject. Heart rate values are derived from a standard 3-lead ECG, while blood pressure measurements are obtained with a Finapres device.

Blood pressure is measured in a finger at the level of the carotid arteries. In the supine position, the baroreceptors are at the level of the heart, so the blood pressure measured in the finger can be used directly as a stimulus for the baroreceptors. As the subject is tilted, the carotid sinus baroreceptors are lifted above the heart, while the aortic baroreceptors remain at the level of the heart. This creates a hydrostatic pressure difference that exposes the carotid baroreceptors to a larger drop in blood pressure than the aortic baroreceptors. The relative roles of these receptors are much discussed in the literature; in humans, the carotid baroreceptors are believed to play the more prominent role in short-term blood pressure regulation, see e.g., [19,20]. Fig. 3 shows carotid blood pressure and heart rate during head-up tilt: gravity imposes a drop in pressure at the level of the baroreceptors, and heart rate increases in response to this drop.

Fig. 3.

The figure shows blood pressure (blue), heart rate data (red), and simulated heart rate (cyan) as a function of time.

The model analyzed in this study does not integrate all mechanisms involved in heart rate regulation. In particular, it does not incorporate stimulation from the muscle sympathetic system, the vestibular system, or the mental system. Neither does it include respiratory contributions to the parasympathetic response; this part of the response gives rise to the fast oscillations observed in the blood pressure data input to the model. These contributions were included in previous studies [47–49] via an impulse input stimulating parasympathetic withdrawal. Another important factor not modeled is hormonal regulation, which operates on longer time scales (minutes to hours).

The aim of this study is to develop methods that allow identification of a subset of parameters given a model and data. Because of the model limitations, we first estimated model parameters using simulated data before employing the real data. Both simulated and measured heart rate data are shown in Fig. 3. We used the simulated data to compare how well model parameters can be estimated from each subset. Once a “best” subset was obtained, we estimated the parameters minimizing the least squares error between the model and the measured heart rate data.

3.2 Sensitivity analysis

In our simulations we computed the K × 14 sensitivity matrix S = ∂h/∂θ̃ using (8), where h denotes the heart rate computed using (16), and θ̃ = log(θ) denotes the log-scaled parameters. For each cardiac cycle k, k = 1, …, K = 479, S is evaluated at the time tk at which the heart beats.

The differential equations are solved numerically with an absolute and relative tolerance of tol = 10⁻⁸; since S is computed using finite differences, its accuracy is 𝒪(√tol) = 𝒪(10⁻⁴) [33]. For each parameter θ̃i, ranked sensitivities were computed as the two-norm of the corresponding column of S, S̅i = ‖Si‖2, i = 1, …, q, defined in (10). The ranked sensitivities were normalized with respect to the largest sensitivity. For the heart rate model studied here, all sensitivities are larger than the predicted error bound, i.e., S̅i ≥ 10⁻³ for all i = 1, …, q.
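The sensitivity computation can be sketched with forward differences in the log-scaled parameters. This is a sketch assuming NumPy and a model function that returns the output at the K sample times; the step size of 10⁻⁴ reflects the √tol accuracy argument above, and the toy exponential model and function names are hypothetical.

```python
import numpy as np


def log_sensitivities(model, theta, step=1e-4):
    """Forward-difference sensitivity matrix S = dy/dlog(theta), shape (K, q)."""
    log_theta = np.log(np.asarray(theta, dtype=float))
    y0 = model(np.exp(log_theta))
    S = np.empty((y0.size, log_theta.size))
    for i in range(log_theta.size):
        shifted = log_theta.copy()
        shifted[i] += step                      # perturb one log-parameter at a time
        S[:, i] = (model(np.exp(shifted)) - y0) / step
    return S


def ranked_sensitivities(S):
    """Column two-norms normalized by the largest, plus indices from most to least sensitive."""
    norms = np.linalg.norm(S, axis=0)
    norms = norms / norms.max()
    return norms, np.argsort(norms)[::-1]


# Hypothetical two-parameter output sampled at K = 21 times.
t = np.linspace(0.0, 2.0, 21)


def toy(theta):
    return theta[0] * np.exp(-t / theta[1])


S = log_sensitivities(toy, [2.0, 0.5])
norms, order = ranked_sensitivities(S)
print(norms, order, bool(np.all(norms >= 1e-3)))  # last value checks the 1e-3 bound
```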

3.3 Biological considerations

Sensitivity analysis is a tool used to assess whether model parameters are identifiable given a model and a set of data. For this study all model parameters are sensitive, at least when measured against the error bound. In addition, biological arguments may rule out estimation of certain parameters. The heart rate model discussed here contains three parameters of this type:

- The parameter α, used to smooth the pressure data. A large value of α provides almost no smoothing, while a small α smooths the pressure data; however, α ≪ 1 introduces a phase shift in p̅. To achieve some smoothing with only a small phase shift, we kept this parameter constant.
- The maximum firing rate M. This parameter serves as a scale for the afferent firing rate, since n always appears normalized as n/M in the equations. Thus, this parameter could be eliminated by rescaling the system equations. In this study, we work with the original equations but keep this parameter constant.
- The delay parameter τd. Calculating the heart rate sensitivity with respect to this parameter is difficult, since it involves analysis of both the instantaneous and delayed states, resulting in an infinite-dimensional problem [4]. So, to use the theory for ordinary differential equations developed here, we keep this parameter constant.
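The trade-off between smoothing and phase shift can be illustrated with a short sketch. It assumes that the filter in (12) is the first-order low-pass filter dp̅/dt = α(p − p̅), integrates it exactly between samples taken at 100 Hz, and uses a hypothetical unit pressure step; for a sinusoid of angular frequency ω, such a filter lags by arctan(ω/α), so a smaller α gives a larger phase shift. Function names are hypothetical.

```python
import numpy as np


def filter_pressure(p, alpha, dt):
    """Low-pass filter assuming dpbar/dt = alpha * (p - pbar).

    Uses the exact update for an input held constant between samples.
    """
    p = np.asarray(p, dtype=float)
    pbar = np.empty_like(p)
    pbar[0] = p[0]                            # start from the first (resting) sample
    decay = np.exp(-alpha * dt)
    for k in range(p.size - 1):
        pbar[k + 1] = p[k] + (pbar[k] - p[k]) * decay
    return pbar


def phase_lag(alpha, omega):
    """Phase lag (radians) of the first-order filter for a sinusoid of angular frequency omega."""
    return np.arctan(omega / alpha)


dt = 0.01                                     # 100 Hz sampling
step = np.r_[0.0, np.ones(300)]               # hypothetical unit pressure step
pbar = filter_pressure(step, alpha=1.5, dt=dt)
print(pbar[201], 1.0 - np.exp(-3.0))          # 2 s after the step: 1 - exp(-alpha * t)
print(phase_lag(1.5, 2 * np.pi * 0.25), phase_lag(15.0, 2 * np.pi * 0.25))
```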

Keeping these three parameters fixed at their a priori values leaves an 11-dimensional parameter vector given by

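$$\theta = (N, \kappa_1, \kappa_2, \tau_1, \tau_2, \tau_{ach}, \tau_{nor}, \beta, h_0, m_{nor}, m_{ach})^T \tag{18}$$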

3.4 Subset selection

We applied the three subset selection methods outlined in sections 2.2.1, 2.2.2, and 2.2.3 to the heart rate model. The reduced parameter set listed in (18) has 11 parameters (q = 11). While this is a reduction compared to the original 14 parameters, it is still not feasible to estimate all 11 parameters. The results in Table 2 show that even though estimating all 11 parameters gives a small least squares error, both the average and the maximum parameter errors are large, i.e., it is not possible to retrieve accurate values for all 11 parameters.

Table 2.

Subsets obtained with the three methods. The first column lists the parameters in the subset, and the second shows the subset number. The 3rd and 4th columns list the value of the final gradient from the Levenberg-Marquardt optimization method and the least squares error calculated using (6). The 5th column lists the subspace distance, the 6th column lists the mean and maximum error in percent, and column 7 lists the parameter associated with the largest error. Column 8 lists correlated parameters in the subsets (NA denotes Not Applicable), and the final column shows the rank of the given subsets within each method, found by sorting the least squares errors from smallest to largest.

| Subset | # | grad (×10⁻⁵) | l.s.e. | dist | error (%) mean/max | parm | corr | rank |
|---|---|---|---|---|---|---|---|---|
| **Correlation analysis** | | | | | | | | |
| N κ1 τ1 τ2 τach τnor h0 mnor mach | 1 | 8.98 | 3.31·10⁻⁸ | 1.15 | 1.36/3.39 | mnor | NA | 1 |
| N κ1 τ1 τ2 τach τnor β h0 mach | 2 | 2.46 | 6.63·10⁻⁸ | 1.20 | 1.64/4.65 | τnor | NA | 2 |
| N κ1 κ2 τ1 τ2 τach τnor h0 mnor | 3 | 5.37 | 1.07·10⁻⁷ | 1.18 | 3.69/14.63 | mnor | NA | 3 |
| N κ1 κ2 τ1 τ2 τach τnor β h0 | 4 | 5.91 | 1.43·10⁻⁷ | 1.23 | 2.45/6.68 | τnor | NA | 6 |
| N κ1 τ1 τ2 τach τnor mnor mach | 5 | 9.49 | 1.26·10⁻⁷ | 1.42 | 3.38/9.12 | mnor | NA | 4 |
| N κ1 τ1 τ2 τach τnor β mach | 6 | 1.39 | 1.29·10⁻⁷ | 1.49 | 2.16/5.96 | τnor | NA | 5 |
| κ1 κ2 τ1 τ2 τach τnor mach | 7 | 8.77 | 1.13·10⁻⁶ | 1.69 | 4.02/8.27 | τnor | NA | 14 |
| N κ1 κ2 τ1 τ2 τach τnor | 8 | 7.64 | 1.67·10⁻⁷ | 1.64 | 2.17/8.15 | τnor | NA | 7 |
| N κ1 κ2 τ1 τach τnor mnor | 9 | 5.10 | 6.32·10⁻⁷ | 1.58 | 5.85/11.9 | mnor | NA | 10 |
| N κ1 κ2 τ1 τach τnor β | 10 | 8.25 | 7.68·10⁻⁷ | 1.64 | 3.77/9.93 | τnor | NA | 12 |
| κ1 κ2 τ1 τach τnor mach | 11 | 5.45 | 7.83·10⁻⁷ | 1.74 | 4.17/9.56 | τnor | NA | 15 |
| κ1 κ2 τ1 τ2 τach τnor | 12 | 4.46 | 2.89·10⁻⁷ | 1.91 | 1.74/7.15 | τnor | NA | 8 |
| κ1 κ2 τ1 τach τnor mnor | 13 | 6.15 | 8.41·10⁻⁷ | 1.81 | 7.61/19.3 | mnor | NA | 13 |
| N κ1 κ2 τ1 τach τnor | 14 | 5.14 | 7.61·10⁻⁷ | 1.69 | 3.80/9.63 | τnor | NA | 11 |
| κ1 κ2 τ1 τach τnor β | 15 | 4.14 | 4.96·10⁻⁷ | 1.87 | 5.39/9.73 | τnor | NA | 9 |
| **SVD-QR** | | | | | | | | |
| N κ1 κ2 τ1 τ2 τach τnor β h0 mnor mach | S11 | 7.88 | 2.30·10⁻⁷ | 0 | 7.63/17.3 | κ2 | (κ2, β, mnor, mach) | 1 |
| N κ1 κ2 τ1 τach τnor h0 mnor mach | S9 | 8.76 | 8.89·10⁻⁷ | 0.927 | 5.63/16.9 | mnor | (κ2, N, mach, h0) | 3 |
| N κ1 τ1 β h0 mnor mach | S7 | 6.80 | 6.73·10⁻⁷ | 1.31 | 3.44/9.3 | mnor | (mnor, h0) | 2 |
| **Subspace analysis** | | | | | | | | |
| N κ1 κ2 τ1 τach τnor h0 mnor mach | S9 | 8.76 | 8.89·10⁻⁷ | 0.927 | 5.63/16.9 | mnor | (κ2, N, mach, h0) | 2 |
| N κ1 κ2 τ1 h0 mnor mach | S7 | 2.37 | 5.46·10⁻⁸ | 1.25 | 3.49/12.9 | mnor | (κ2, N, mach) | 1 |

The quantities important for subset selection are the sensitivity matrix, which for the reduced problem has dimension 479 × 11 (the data include 479 cardiac cycles, i.e., K = 479), and the model Hessian, approximated by ℋ = SᵀS, a symmetric positive definite matrix of dimension 11 × 11. Subsets obtained with each of the three methods are listed in column 1 of Table 2. In addition, the table lists the gradient, the least squares error, the subspace distance, the mean and maximum percent error, the parameter with the largest error, any correlated parameters, and the rank within each method.

It should be noted that the largest error was always in either mnor or τnor, except for the full 11-parameter set S11, where it was in κ2. As shown in Fig. 4, τnor is the least sensitive parameter, so it is expected to be the hardest to estimate. The difficulty of estimating mnor may be related to the relative sensitivities (9): according to this ranking (results not shown), mnor is the second least sensitive parameter.

3.4.1 Structured correlation subsets

The subsets obtained using the structured correlation method are shown in Fig. 5. Starting at the root of the tree, analysis of all 11 parameters reveals that the parameters {κ2, β, mnor, mach, h0} are involved in correlations. The correlations are all pairwise: κ2 is correlated with mach, h0 and mnor are correlated with β, and mach is correlated with h0. At each sub-branch, the correlation matrix was recomputed after eliminating the least sensitive correlated parameter (i.e., keeping it fixed at its a priori value). In the first step, the least sensitive parameter κ2 was eliminated, and the correlation matrix was recomputed. The new correlation matrix revealed that the parameters β and mnor are correlated. Eliminating either of these parameters gave rise to a reduced matrix without pairwise correlations. The final subsets, labeled “[1]” and “[2]”, are listed below the tree and in Table 2.

During the structured correlation analysis, some pairwise correlations appear more than once. For each such set, the edge associated with the leftmost occurrence of the subset(s) has been marked with a given color, and subsequent occurrences of the same subset(s) are marked with ovals of the same color. Results shown here were computed using γ = 0.9, i.e., parameters were marked as correlated if |ci,j| > 0.9. We also investigated other values of γ. For γ = 0.95, the root would contain only one correlated parameter pair (κ2, mach), and the sub-branches stemming from it would remain the same. For γ = 0.85, the root of the tree would remain the same, but some of the sub-branches would be longer. For either value of γ, the “best” subset (subset 1) is included in the tree.
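The tree search can be sketched as a recursive function. This is a minimal sketch under stated assumptions: correlations are computed from (SᵀS)⁻¹, parameters count as correlated when |ci,j| > γ, each correlated parameter spawns one branch, ordered from least to most sensitive (so the leftmost branch fixes the least sensitive one), and repeated nodes are visited only once. The function names and the synthetic S are hypothetical, and each leaf would still require its own optimization.

```python
import numpy as np


def correlation_matrix(S):
    """Correlation matrix derived from the approximate covariance (S^T S)^{-1}."""
    C = np.linalg.inv(S.T @ S)
    d = np.sqrt(np.diag(C))
    return C / np.outer(d, d)


def correlated_params(S, subset, gamma):
    """Parameters (original indices) in `subset` that belong to a pair with |c_ij| > gamma."""
    c = correlation_matrix(S[:, subset])
    np.fill_diagonal(c, 0.0)
    rows, _ = np.nonzero(np.abs(c) > gamma)
    return {subset[i] for i in rows}


def structured_subsets(S, gamma=0.9):
    """Leaves of the correlation tree, listed from left to right."""
    sens = np.linalg.norm(S, axis=0)          # column two-norms rank the sensitivities
    leaves, visited = [], set()

    def branch(subset):
        if tuple(subset) in visited:          # the same node can be reached twice
            return
        visited.add(tuple(subset))
        correlated = correlated_params(S, subset, gamma)
        if not correlated:
            leaves.append(subset)             # no pairwise correlations left
            return
        # One branch per correlated parameter, least sensitive first (leftmost).
        for p in sorted(correlated, key=lambda j: sens[j]):
            branch([j for j in subset if j != p])

    branch(list(range(S.shape[1])))
    return leaves


rng = np.random.default_rng(0)
S = rng.normal(size=(200, 4))
S[:, 3] = 0.5 * S[:, 0] + 0.01 * rng.normal(size=200)   # parameters 0 and 3 are correlated
print(structured_subsets(S))                            # prints [[0, 1, 2], [1, 2, 3]]
```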

3.4.2 SVD-QR subsets

The SVD-QR method requires the number of elements ρ to be included in the subset to be specified. We chose ρ based on computational accuracy. The model is solved using absolute and relative error tolerances of order 𝒪(10⁻⁸). Since sensitivities are computed using finite differences, the sensitivity matrix is accurate to order 𝒪(10⁻⁴). Consequently, the smallest accepted singular value (singular values are shown in Fig. 6) is 10⁻³. This condition is equivalent to choosing columns of S that form a matrix with a condition number no greater than 10³. For our system, this bound allows inclusion of all 11 parameters in the subset. As shown in Table 2, estimating all 11 parameters gives rise to large parameter errors, and several parameters are correlated: with this bound the model fits the simulated data well (the least squares error is small), but the parameter values cannot be recovered accurately. We therefore investigated the impact of increasing this bound to 5·10⁻³ and to 10⁻². The first bound allowed inclusion of 9 parameters, the latter 7. Table 2 shows the results of estimating all 11, 9, and 7 parameters picked by the SVD-QR method. Estimating fewer parameters (9 and 7 vs. 11) has only a small impact on the least squares error (it is still of order 10⁻⁷), and both smaller subsets have smaller parameter errors, but some parameters still appear correlated.

Fig. 6.

Singular values associated with the sensitivity matrix.
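The SVD-QR selection, including the choice of ρ from a singular value threshold, might look as follows. The sketch assumes NumPy and SciPy (`scipy.linalg.qr` with `pivoting=True`), scales the singular values by the largest one so that a threshold of 10⁻³ acts as a condition number bound of 10³, and uses a synthetic S in which two columns are nearly identical. Function names are hypothetical.

```python
import numpy as np
from scipy.linalg import qr


def numerical_rank(S, threshold=1e-3):
    """Number of singular values of S at or above `threshold` times the largest one."""
    s = np.linalg.svd(S, compute_uv=False)          # returned in descending order
    return int(np.sum(s / s[0] >= threshold))


def svd_qr_subset(S, rho):
    """Indices of the rho parameters chosen by QR with column pivoting on V_rho^T."""
    _, _, Vt = np.linalg.svd(S, full_matrices=False)
    _, _, piv = qr(Vt[:rho, :], pivoting=True)      # piv lists column indices in pivot order
    return sorted(piv[:rho].tolist())


rng = np.random.default_rng(1)
S = rng.normal(size=(100, 4))
S[:, 3] = S[:, 0] + 1e-4 * rng.normal(size=100)     # parameters 0 and 3 nearly indistinguishable
rho = numerical_rank(S, threshold=1e-3)
print(rho, svd_qr_subset(S, rho))
```

Only one of the two nearly identical parameters is kept, which is the behavior that makes a single pass useful; the heart rate results in Table 2 show, however, that weaker correlations can survive the selection.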

3.4.3 Subspace selection subsets

Similar to the SVD-QR method, the subspace selection method requires the number of parameters to be identified to be chosen. This first step is the same as for the SVD-QR method, except that ρ is predicted from the eigenvalues of ℋ rather than from the singular values of S. Since the eigenvalues of ℋ = SᵀS are the squares of the singular values of S, the two analyses are equivalent, and we used the same numbers of parameters as for the SVD-QR method, i.e., we calculated subspace distances using ρ = 7, 9, and 11. The complete parameter set includes 11 parameters, so the subspace distance for this parameter set is 0; results including all 11 parameters are listed under the SVD-QR method. Results for ρ = 7 and ρ = 9 are listed in Table 2. The total number of subspaces examined is $\binom{q}{\rho} = \frac{q!}{\rho!\,(q-\rho)!}$, where ρ denotes the number of parameters in the subspace: for ρ = 9 there are 55 subspaces, while for ρ = 7 there are 330. The subspace distances computed with ρ = 9 parameters are shown in Fig. 7; note the gaps between the distances. Similar to the SVD-QR results, the minimum distance subspaces all contain correlated parameters. Note also that the subset with ρ = 9 parameters (the one with the smallest subspace distance) is the same subset obtained with the SVD-QR method.

Fig. 7.

Subspace distance for subsets including 9 parameters. Note the gaps in the distances.
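The exhaustive subspace search can be sketched as below. It assumes that the reference subspace is spanned by the eigenvectors of ℋ = SᵀS belonging to the ρ largest eigenvalues, and it measures distance with the Frobenius norm of the difference between orthogonal projectors; this metric is an assumption and may differ from the definition in Section 2.2.3. The loop visits all C(q, ρ) subsets. Function names and the synthetic S are hypothetical.

```python
import itertools
import math

import numpy as np


def subspace_selection(S, rho):
    """Return the rho-parameter subset whose coordinate subspace is closest to the
    span of the eigenvectors of H = S^T S with the rho largest eigenvalues."""
    H = S.T @ S
    w, V = np.linalg.eigh(H)                      # eigenvalues in ascending order
    V_rho = V[:, np.argsort(w)[::-1][:rho]]
    P_eig = V_rho @ V_rho.T                       # orthogonal projector onto that span
    q = H.shape[0]
    best, best_dist = None, np.inf
    for subset in itertools.combinations(range(q), rho):
        P_sub = np.zeros((q, q))
        P_sub[list(subset), list(subset)] = 1.0   # projector onto the chosen coordinates
        dist = np.linalg.norm(P_eig - P_sub, "fro")   # assumed distance metric
        if dist < best_dist:
            best, best_dist = list(subset), dist
    return best, best_dist


print(math.comb(11, 9), math.comb(11, 7))        # 55 330, the counts quoted above
rng = np.random.default_rng(2)
S = rng.normal(size=(100, 4))
S[:, 3] = 1e-6 * rng.normal(size=100)            # parameter 3 is practically insensitive
print(subspace_selection(S, 3))
```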

3.4.4 Combining methods

Since subsets obtained with the SVD-QR and subspace selection methods contain correlated parameters, we investigated whether combining either of these methods with structured correlation analysis would lead to an optimal subset. The combined approach was applied to subsets with ρ = 9. As a point of departure, we ordered the correlated parameters (κ2, N, mach, h0) from least to most sensitive. We kept the least sensitive parameter, κ2, fixed at its a priori value and eliminated it from the original parameter vector. Then, we reapplied the SVD-QR or the subspace selection method to the 10-dimensional parameter vector

$$\theta = (N, \kappa_1, \tau_1, \tau_2, \tau_{ach}, \tau_{nor}, \beta, h_0, m_{nor}, m_{ach})^T.$$

The resulting subset was the same as the one found using the structured correlation analysis (subset 1).

3.5 Optimization of parameters

For the heart rate example discussed in this study, we used the Levenberg-Marquardt method to estimate model parameters. During the optimization, parameters not in the subset were kept fixed at their a priori values listed in Table 1. For each subset, model predictions were compared with the simulated data. For these simulations, the optimization algorithm converges, since the residual approaches zero at the optimal parameter values. Initial values for the parameters to be estimated were obtained by scaling the initial parameter values randomly by a factor 0.9. For a more complete investigation, in particular for problems that do not rely on simulated data, one should draw a number of initial parameter sets from random distributions and run the optimization with each method for each parameter set.

For each set of optimized model parameters, we calculated the value of the gradient, the least squares error, the subspace distance, and the mean and maximum percent deviation of the optimized parameters from the true parameters, and identified the parameter estimated with the largest error. These results are reported in Table 2.
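The error summaries reported in Table 2 reduce to a few lines. This is a sketch assuming NumPy, with a hypothetical three-parameter subset and illustrative estimates; the function name is hypothetical as well.

```python
import numpy as np


def percent_errors(estimated, true, names):
    """Mean and maximum percent deviation from the true values, and the worst parameter."""
    est = np.asarray(estimated, dtype=float)
    ref = np.asarray(true, dtype=float)
    err = 100.0 * np.abs(est - ref) / np.abs(ref)
    return err.mean(), err.max(), names[int(np.argmax(err))]


names = ["N", "kappa1", "tau_nor"]           # hypothetical three-parameter subset
mean_err, max_err, worst = percent_errors([74.0, 1.5, 0.55], [75.0, 1.5, 0.5], names)
print(f"{mean_err:.2f}/{max_err:.2f}", worst)  # prints 3.78/10.00 tau_nor
```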

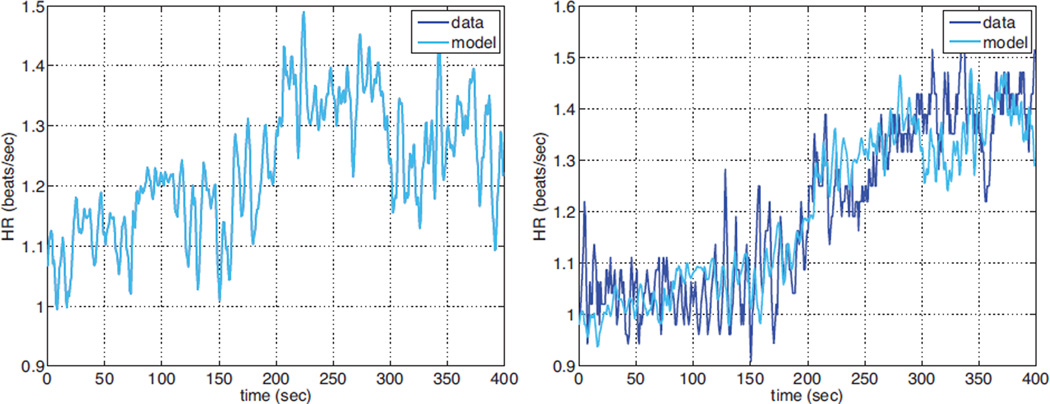

Finally, Fig. 8 shows heart rate predictions using the “best” parameter subset (subset 1), compared against both the simulated and the real data. Initial and optimized parameter values are given in Table 1.

Fig. 8.

The left panel shows simulated data and the model result (an almost perfect match), and the right panel shows model results compared to the real data. Both results are obtained with subset 1, identified using the structured correlation method.

4 Discussion

This study has used three methods for identifying subsets of parameters that can be estimated given a model, a set of parameters, and a set of experimental data. The methods are denoted 'the structured correlation method', 'the SVD-QR method', and 'the subspace selection method'. We compared the three methods and showed that the “best” subset, i.e., the subset with the smallest least squares error, is found using the structured correlation method; this subset is placed furthest to the left in the tree. We also compared optimization results among all three methods. Subsets found using the structured correlation method gave more robust optimization results, in the sense that the estimated parameters had smaller errors. This conclusion is based on optimizations started from 10 random variations of the initial parameter values. We believe that these subsets provide more reliable parameter estimates because they contain no correlated parameters. It should be noted that the “best” subset found with the correlation method does not necessarily have the minimum distance as defined by the subspace method. Furthermore, the “best” subset remains the same when the parameter γ is varied from 0.85 to 0.95. Results obtained using subspace analysis and the SVD-QR method did not differ significantly. An advantage of these two methods is that they are computationally faster than the structured correlation method. The largest computational effort is the parameter estimation, which for these methods is done only once for the subset in question, whereas the structured correlation method requires an optimization for each potential subset. However, the subsets found using the SVD-QR and subspace selection methods contained correlated parameters, which gave rise to larger errors in the estimated parameters.

As a consequence, we investigated the impact of combining methods. This was done by keeping the least sensitive correlated parameter (κ2), appearing in the 9-parameter subset, fixed at its a priori value and then re-applying the SVD-QR or the subspace selection method on the 10 remaining parameters. This combination of methods resulted in subset ”1”, the ”best” subset found using the structured correlation method.

While it is evident that sensitivity analysis is important, it is less clear how to distinguish sensitive from insensitive parameters. In this study, we included all parameters in the set of sensitive parameters. As with the subset selection, enforcing a stricter bound (and hence a smaller ρ) may be advantageous, i.e., the estimated parameters may have smaller errors. For example, increasing the bound from 10⁻³ to 10⁻² corresponds to marking τnor as insensitive; note that τnor was the parameter that most often had the largest error.

One potential problem with the structured correlation method is that it is computationally intensive, since it requires an optimization for each potential subset. Consequently, for bigger models it may be better to use one of the other two methods, e.g., combined with correlation analysis as discussed above. Another disadvantage is that no theoretical results exist to determine the level at which two parameters should be considered correlated. In this study we denoted parameters with |c| > γ, for γ close to 1, as correlated, and results were shown for γ = 0.9, which is a somewhat arbitrary choice. It should be noted, though, that the “best” subset remains the same for γ ∈ [0.85, 0.95]. Moreover, this method only reveals pairwise correlations. Another option would be to develop a method based on partial correlations, which may offer more insight into the system; for more details see [45] and references therein.

While the SVD-QR and subspace selection methods are fast and easy to implement (only one optimization is required), they also have potential problems. Both methods require computation of a numerical rank ρ, which for most problems can only be estimated, not predicted theoretically. In the analysis presented here we computed the rank from the numerical accuracy, but this bound was too permissive, since it resulted in a subset including all model parameters. A further disadvantage of the SVD-QR method is that, from a parameter estimation point of view, no theoretical results support column pivoting on the maximal elements, as is commonly done by most QR factorization algorithms. This disadvantage does not exist for the subspace selection method, in which the parameter combinations are found directly from the eigenvectors, as the subset with the smallest subspace distance. One way to remedy these problems is to combine the methods as discussed earlier.

Finally, in addition to identifying a subset, the parameters must be estimated. In this study, parameters were estimated using both simulated and real data. Simulated data were used to identify a subset of parameters that can be estimated given the model output, and these parameters were then estimated using the real heart rate data. When computations are done with real data, the true parameter values are not known, so the initial set of parameter values used for the identifiability analysis provides only one estimate. Moreover, since the models are nonlinear, the results may not reveal that the chosen subset of parameters is identifiable only within a limited region of parameter space. If this region is too small for practical use, a global analysis may be necessary. Again, the problem with this approach is that the computations may be excessive, and therefore difficult to carry out in practice.

In summary, we conclude that it is essential to identify a subset of parameters that can be estimated reliably given a model and a set of data, and that simulated data can be used to determine which method is most appropriate for finding such subsets. Results for the example analyzed in this study showed that structured correlation analysis, or a combination of SVD-QR or subspace selection with correlation analysis, allows identification of a parameter subset that can be estimated given data. These subset selection methods should be preceded or combined with a sensitivity analysis, which has the potential to provide further insight into the parameter dynamics. We recommend that both the sensitivity and identifiability analyses be repeated for a number of initial parameter values, in particular for highly nonlinear models. Finally, this analysis should be combined with knowledge about the model, which is often essential for a priori reduction of the parameter set.

Acknowledgements

We would like to thank Prof. Jesper Mehlsen, Department of Clinical Physiology & Nuclear Medicine, Frederiksberg Hospital, Denmark for providing data from his head-up tilt studies.

Contributor Information

Mette S. Olufsen, Department of Science, Systems, and Models, Roskilde University, Universitetsvej 1, 4000 Roskilde, Denmark & Department of Mathematics, North Carolina State University, Campus Box 8205, Raleigh, NC 27502, Tel.: +1-919-515-2678, Fax: +1-919-513-7336, msolufse@ncsu.edu

Johnny T. Ottesen, Department of Science, Systems, and Models, Roskilde University, Universitetsvej 1, 4000 Roskilde, Denmark, Tel.: +45 4674 2298, Fax.:+45 4674 3020, johnny@ruc.dk

References

- 1.Anderson DA. Structural properties of compartmental models. Math Biosci. 1982;58:61–81. [Google Scholar]

- 2.Astrom KJ, Cykhoff P. System identification-a survey. Automatica. 1971;7:123–162. [Google Scholar]

- 3.Banks HT, Davidian M, Samuels JR, Sutton KL. In: An inverse problem statistical methodology summary, in Mathematical and statistical estimation approaches in Epidemiology. Chowell G, Hayman JM, Bettencourt LMA, Castillo-Chavez C, editors. New York, NY: Springer; 2009. [Google Scholar]

- 4.Baker CTH, Paul CAH. Pitfalls in parameter estimation for delay differential equations. SIAM J Sci Comput. 1997;18:305–314. [Google Scholar]

- 5.Batzel J, Baselli G, Mukkamala R, Chon KH KH. Modeling and disentangling physiological mechanisms: linear and nonlinear identification techniques for analysis of cardiovascular regulation. Philos Transact A Math Phys Eng Sci. 2009;367:1377–1391. doi: 10.1098/rsta.2008.0266. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Bellman R, Astrom KJ. On structural identifiability. Math Biosci. 1970;7:329–339.

- 7. Berman M, Schoenfeld R. Invariants in experimental data on linear kinetics and the formulation of models. J Appl Phys. 1956;27:1361–1370.

- 8. Borella B, Lucchi E, Nicosia S, Valigi P. A Kalman filtering approach to estimation of maximum ventricle elastance. Conf Proc IEEE Eng Med Biol Soc. 2004;5:3642–3645. doi: 10.1109/IEMBS.2004.1404024.

- 9. Burnham KP, Anderson DR. Model selection and multimodel inference: a practical information-theoretic approach. 2nd edition. New York, NY: Springer; 2002.

- 10. Burth M, Verghese GC, Velez-Reyes MV. Subset selection for improved parameter estimation in on-line identification of a synchronous generator. IEEE Trans Power Systems. 1999;14:218–225.

- 11. Carson ER, Finkelstein L, Cobelli C. Mathematical modeling of metabolic and endocrine systems: model formulation, identification, and validation. New York, NY: John Wiley & Sons; 1985.

- 12. Cintron-Arias A, Banks HT, Capaldi A, Lloyd AL. A sensitivity matrix based methodology for inverse problem formulation. J Inv Ill-Posed Problems. 2009;17:545–564.

- 13. Cobelli C, DiStefano JJ. Parameter and structural identifiability concepts and ambiguities: a critical review and analysis. Am J Physiol. 1980;239:R7–R24. doi: 10.1152/ajpregu.1980.239.1.R7.

- 14. Daun S, Rubin J, Vodovotz Y, Roy A, Parker R, Clermont G. An ensemble of models of the acute inflammatory response to bacterial lipopolysaccharide in rats: Results from parameter space reduction. J Theor Biol. 2008;253:843–853. doi: 10.1016/j.jtbi.2008.04.033.

- 15. Dochain D, Vanrolleghem PA. Dynamical modelling and estimation in wastewater treatment processes. London, UK: IWA Publishing; 2001.

- 16. Ellwein LM, Tran HT, Zapata C, Novak V, Olufsen MS. Sensitivity analysis and model assessment: Mathematical models for arterial blood flow and blood pressure. J Cardiovasc Eng. 2008;8:94–108. doi: 10.1007/s10558-007-9047-3.

- 17. Ellwein LM, Otake H, Gundert TJ, Koo B-K, Shinke T, Honda Y, Shite J, LaDisa JF. Optical coherence tomography for patient-specific 3D artery reconstruction and evaluation of wall shear stress in a left circumflex coronary artery. Cardiovasc Eng Technol. 2011.

- 18. Eslami M. Theory of sensitivity in dynamic systems: an introduction. Berlin, Germany: Springer-Verlag; 1994.

- 19. Fadel PJ, Stromstad M, Wray DW, Smith SA, Raven PB, Secher NH. New insights into differential baroreflex control of heart rate in humans. Am J Physiol. 2003;284:H735–H743. doi: 10.1152/ajpheart.00246.2002.

- 20. Ferguson DW, Abboud FM, Mark AL. Relative contribution of aortic and carotid baroreflexes to heart rate control in man during steady state and dynamic increases in arterial pressure. J Clin Invest. 1985;76:2265–2274. doi: 10.1172/JCI112236.

- 21. Fisher FM. Generalization of the rank and order conditions for identifiability. Econometrica. 1959;27:431–447.

- 22. Frank P. Introduction to sensitivity theory. New York, NY: Academic Press; 1978.

- 23. Golub GH, van Loan CF. Matrix computations. 3rd edition. Johns Hopkins Studies in the Mathematical Sciences. Baltimore, MD: Johns Hopkins University Press; 1996.

- 24. Golub GH, Klema VC, Stewart GW. Rank degeneracy and least squares problems. Technical Report. Stanford, CA: Department of Computer Science, Stanford University; 1976.

- 25. Godfrey K. Compartmental models and their application. New York, NY: Academic Press; 1983.

- 26. Guyton AC, Coleman TG, Granger HJ. Overall regulation. Ann Rev Physiol. 1972;34:13–44. doi: 10.1146/annurev.ph.34.030172.000305.

- 27. Guglielmi N, Hairer E. RADAR5: a Fortran-90 code for the numerical integration of stiff and implicit systems of delay differential equations. 2010. http://www.unige.ch/math/folks/hairer.

- 28. Gustafson P. What are the limits of posterior distributions arising from nonidentified models, and why should we care? J Am Stat Assoc, Theor Method. 2009;104:1682–1695.

- 29. Gutenkunst RN, Waterfall JJ, Casey FP, Brown KS, Myers CR, Sethna JP. Universally sloppy parameter sensitivities in systems biology models. PLoS Comp Biol. 2007;3:1871–1878. doi: 10.1371/journal.pcbi.0030189.

- 30. Gutenkunst RN, Casey FP, Waterfall JJ, Myers CR, Sethna JP. Extracting falsifiable predictions from sloppy models. Ann NY Acad Sci. 2007;1115:203–211. doi: 10.1196/annals.1407.003.

- 31. Hu X, Nenov V, Bergsneider M, Glenn TC, Vespa P, Martin N. Estimation of hidden state variables of the intracranial system using constrained nonlinear Kalman filters. IEEE Trans Biomed Eng. 2007;54:597–610. doi: 10.1109/TBME.2006.890130.

- 32. Jacquez JA. Compartmental analysis in biology and medicine. 2nd edition. Ann Arbor, MI: The Univ of Michigan Press; 1985.

- 33. Kelley CT. Iterative methods for optimization. Frontiers in Applied Mathematics, Vol 18. Philadelphia, PA: SIAM; 1999.

- 34. Kelley CT. Implicit filtering. Software, Environments, and Tools, Vol 23. Philadelphia, PA: SIAM; 2011.

- 35. Ipsen ICF, Kelley CT, Pope SR. Nonlinear least squares problems and subset selection. SIAM J Numer Anal. 2011;49:1244–1266.

- 36. Khoo MC. Modeling of autonomic control in sleep-disordered breathing. Cardiovasc Eng. 2008;8:30–41. doi: 10.1007/s10558-007-9041-9.

- 37. Kim HJ, Vignon-Clementel IE, Coogan JS, Figueroa CA, Jansen KE, Taylor CA. Patient-specific modeling of blood flow and pressure in human coronary arteries. Ann Biomed Eng. 2010;38:3195–3209. doi: 10.1007/s10439-010-0083-6.

- 38. Kiparissides A, Kucherenko SS, Mantalaris A, Pistikopoulos EN. Global sensitivity analysis challenges in biological systems modeling. Ind Eng Chem Res. 2009;48:7168–7180.

- 39. Koopmans TC, Reiersol O. Identification of structural characteristics. Ann Math Statist. 1950;21:165–181.

- 40. Liang H, Miao H, Wu H. Estimation of constant and time-varying dynamic parameters of HIV infection in a nonlinear differential equation model. Ann Appl Stat. 2010;4:460–483. doi: 10.1214/09-AOAS290.

- 41. Lo M-T, Novak V, Peng C-K, Liu Y, Hu K. Nonlinear phase interaction between nonstationary signals: A comparison study of methods based on Hilbert-Huang and Fourier transforms. Phys Rev E Stat Nonlin Soft Matter Phys. 2009;79(6 Pt 1):061924. doi: 10.1103/PhysRevE.79.061924.

- 42. Ljung L. System identification: theory for the user. 2nd edition. Upper Saddle River, NJ: Prentice Hall Inc; 1999.

- 43. Mehra RK. Topics in stochastic control theory-identification in control and econometrics: similarities and differences. Ann Econ Soc Meas. 2008;3:24–47.

- 44. Miao H, Dykes C, Demeter LM, Cavenaugh J, Park SY, Perelson AS, Wu H. Modeling and estimation of kinetic parameters and replicative fitness of HIV-1 from flow-cytometry-based growth competition experiments. Bull Math Biol. 2008;70:1749–1771. doi: 10.1007/s11538-008-9323-4.

- 45. Miao H, Xia X, Perelson AS, Wu H. On identifiability of nonlinear ODE models and applications in viral dynamics. SIAM Review. 2011;53:3–39. doi: 10.1137/090757009.

- 46. Olufsen MS, Ottesen JT, Tran HT, Ellwein LM, Lipsitz LA, Novak V. Blood pressure and flow variation during postural change from sitting to standing: model development and validation. J Appl Physiol. 2005;99:1523–1537. doi: 10.1152/japplphysiol.00177.2005.

- 47. Olufsen MS, Tran HT, Ottesen JT, Lipsitz LA, Novak V. Modeling baroreflex regulation of heart rate during orthostatic stress. Am J Physiol. 2006;291:R1355–R1368. doi: 10.1152/ajpregu.00205.2006.

- 48. Olufsen MS, Alston AV, Tran HT, Ottesen JT, Novak V. Modeling heart rate regulation, Part I: Sit-to-stand versus head-up tilt. J Cardiovasc Eng. 2008;8:73–87. doi: 10.1007/s10558-007-9050-8.

- 49. Ottesen JT, Olufsen MS. Functionality of the baroreceptor nerves in heart rate regulation. Comp Meth Prog Biomed. 2011;101:208–219. doi: 10.1016/j.cmpb.2010.10.012.

- 50. Ottesen JT. Nonlinearity of baroreceptor nerves. Surv Math Ind. 1997;7:187–201.

- 51. Olgac U, Poulikakos D, Saur SC, Alkadhi H, Kurtcuoglu V. Patient-specific three-dimensional simulation of LDL accumulation in a human left coronary artery in its healthy and atherosclerotic states. Am J Physiol. 2010;296:H1969–H1982. doi: 10.1152/ajpheart.01182.2008.

- 52. Pennati G, Socci L, Rigano S, Boito S, Ferrazzi E. Computational patient-specific models based on 3-D ultrasound data to quantify uterine arterial flow during pregnancy. IEEE Trans Med Imaging. 2008;27:1715–1722. doi: 10.1109/TMI.2008.924642.

- 53. Pope SR, Ellwein LM, Zapata CL, Novak V, Kelley CT, Olufsen MS. Estimation and identification of parameters in a lumped cerebrovascular model. Math Biosci Eng. 2009;6:93–115. doi: 10.3934/mbe.2009.6.93.

- 54. Pope SR. Parameter identification in lumped compartment cardiorespiratory models. PhD Thesis. Raleigh, NC: North Carolina State University; 2009.

- 55. Poyton AA, Varziri MS, McAuley KB, McLellan PJ, Ramsay JO. Parameter estimation in continuous-time dynamic models using principal differential analysis. Comp Chem Eng. 2005;30:698–708.

- 56. Ramsay JO, Hooker G, Campbell D, Cao J. Parameter estimation for differential equations: A generalized smoothing approach. J Royal Stat Soc: Series B (Stat Methodol). 2007;69:741–796.

- 57. Rao CR. Unified Theory of Linear Estimation. Sankhya: The Indian J Stat, Series A. 1971;33:371–394.

- 58. Raue A, Kreutz C, Maiwald T, Bachmann J, Schilling M, Klingmuller U, Timmer J. Structural and practical identifiability analysis of partially observed dynamical models by exploiting the profile likelihood. Bioinformatics. 2009;25:1923–1929. doi: 10.1093/bioinformatics/btp358.

- 59. Rech G, Terasvirta T, Tschernig R. A simple variable selection technique for nonlinear models. Comm Stat Theor Meth. 2001;30:1227–1241.

- 60. Reymond P, Bohraus Y, Perren F, Lazeyras F, Stergiopulos N. Validation of a patient specific 1D model of the systemic arterial tree. Am J Physiol. 2010.

- 61. Rodriguez-Fernandez M, Egea JA, Banga JR. Novel metaheuristic for parameter estimation in nonlinear dynamic biological systems. BMC Bioinformatics. 2006;7:483. doi: 10.1186/1471-2105-7-483.

- 62. Taylor CA, Figueroa CA. Patient-specific modeling of cardiovascular mechanics. Ann Rev Biomed Eng. 2009;11:109–134. doi: 10.1146/annurev.bioeng.10.061807.160521.

- 63. Thompson DE, McAuley KB, McLellan PJ. Parameter estimation in a simplified MWD model for HDPE produced by a Ziegler-Natta catalyst. Macromol React Eng. 2009;3:160–177.

- 64. Yue H, Brown M, Knowles J, Wang H, Broomhead DS, Kell DB. Insights into the behaviour of systems biology models from dynamic sensitivity and identifiability analysis: a case study of an NF-kB signalling pathway. Mol BioSyst. 2006;2:640–649. doi: 10.1039/b609442b.

- 65. Valdez-Jasso D, Haider MA, Banks HT, Bia D, Zocalo Y, Armentano R, Olufsen MS. Viscoelastic mapping of the arterial ovine system using a Kelvin model. IEEE Trans Biomed Eng. 2008;56:210–219.

- 66. Valdez-Jasso D, Banks HT, Haider MA, Bia D, Zocalo Y, Armentano RL, Olufsen MS. Viscoelastic models for passive arterial wall dynamics. Adv Appl Math Mech. 2009;1:151–165.

- 67. Velez-Reyes MV. Decomposed algorithms for parameter estimation. PhD Thesis. Cambridge, MA: Massachusetts Institute of Technology; 1992.

- 68. Vilela M, Vinga S, Grivet Mattoso Maia MA, Voit EO, Almeida JS. Identification of neutral biochemical network models from time series data. BMC Systems Biology. 2009;3:47. doi: 10.1186/1752-0509-3-47.

- 69. Wan J, Steele BN, Spicer SA, Strohband S, Feijoo GR, Hughes TJR, Taylor CA. A one-dimensional finite element method for simulation-based medical planning for cardiovascular disease. Comp Meth Biomech Biomed Eng. 2002;5:195–206. doi: 10.1080/10255840290010670.

- 70. Wu H, Zhu H, Miao H, Perelson AS. Parameter identifiability and estimation of HIV/AIDS dynamic models. Bull Math Biol. 2008;70:785–799. doi: 10.1007/s11538-007-9279-9.

- 71. Zenker S, Rubin J, Clermont G. From inverse problems in mathematical physiology to quantitative differential diagnoses. PLoS Comp Biol. 2009;3:2072–2086. doi: 10.1371/journal.pcbi.0030204.