Abstract

Objective. To determine whether longitudinal design and delivery of evidence-based decision making (EBDM) content was effective in increasing students’ knowledge, skills, and confidence as they progressed through a doctor of pharmacy (PharmD) curriculum.

Design. Three student cohorts were followed from 2005 to 2009 (n=367), as they learned about EBDM through lectures, actively researching case-based questions, and researching and writing answers to therapy-based questions generated in practice settings.

Assessment. Longitudinal evaluations included repeated multiple-choice examinations, confidence surveys, and written answers to practice-based questions (clinical inquiries). Students’ knowledge and perception of EBDM principles increased over each of the 3 years. Students’ self-efficacy (10 items, p<0.0001) and perceived skills (7 items, p<0.0001) in applying EBDM skills to answer practice-based questions also increased. Graded clinical inquiries verified that students performed satisfactorily in the final 2 years of the program.

Conclusions. This study demonstrated a successful integration of EBDM throughout the curriculum. EBDM can effectively be taught by repetition, use of real examples, and provision of feedback.

Keywords: evidence-based decision making, longitudinal evaluation, curriculum, evidence-based medicine

INTRODUCTION

With the rapid dissemination of new information, the ability to effectively search for, critically appraise, interpret, and integrate information into clinical decision-making is an elemental skill for health care professionals. Pharmacy students, many of whom will practice as generalist pharmacists, require a broader skill set for framing questions, efficiently searching for and appraising the highest quality evidence, and formulating recommendations and evaluating their impact. The Accreditation Council for Pharmacy Education standards for PharmD students state that pharmacy students must have skills in evidence-based decision making (EBDM).1 The Center for Advancement of Pharmacy Education educational outcomes also emphasize the importance of EBDM abilities, specifying that students must be able to “retrieve, analyze, and interpret the professional, lay, and scientific literature to make informed, rational, and evidence-based decisions.”2

Many different strategies have been used for teaching EBDM including lectures, discussion groups, journal clubs, and responding to questions generated during clinical practice experiences.3-9 Different outcome measures (knowledge, attitudes and skills) have been used to assess EBDM competence.10 Some of these measures include: self-report of skills,11 use of tools to evaluate critical appraisal skills,4,5 use of validated tools (eg, Fresno and Berlin assessment tool) to assess EBDM skills and knowledge,5,6 and structured evaluation of answers to actual practice-based clinical questions.5-9

The University of Wisconsin-Madison School of Pharmacy teaches a semester-long course in drug literature evaluation that focuses on reading and critical appraisal of primary research literature. To expand students’ ability in EBDM, a longitudinal education program that includes training regarding searching for, interpreting, and applying information from secondary sources of literature (systematic reviews/meta-analyses) was designed.11 The challenge of expanding EBDM education at the school was the lack of ability to add another course to an already full curriculum. After identifying the content and skills to add, opportunities were created within the existing curriculum to teach content and skills along with strategies to evaluate students’ knowledge, skills, and attitudes (and indirectly, this pedagogical approach). This curricular assessment study was designed to determine whether the longitudinal design and delivery of EBDM content over 3 years of a 4-year PharmD program was effective in increasing students’ knowledge, skills, and confidence as they progressed through the PharmD curriculum.

DESIGN

A quasi-experimental, pretest/posttest design was used. The primary research questions were constructed to study whether a longitudinal strategy for teaching EBDM resulted in students: gaining knowledge of EBDM principles, acquiring appropriate skills to perform evidence-based research of clinical questions, and improving their level of confidence to provide recommendations based upon using these principles.

As the PharmD curriculum had no room for another required course, several methods (lectures, laboratory exercises and written responses to authentic questions asked during clinical practice experiences) were used to thread EBDM instruction throughout the majority (final 3 years) of the 4-year curriculum. Courses and faculty members were targeted who were willing to assist in teaching at various points in the curriculum. Pharmacy faculty members agreed to permit addition of a few lectures or laboratory exercises in existing courses. We were fortunate to have 2 librarians from the university’s health science library with expertise in teaching EBDM12 involved in the program.

The framework used to sequence the material incorporated the basic tenets of EBDM, including effectively searching for secondary and primary sources of literature, critically appraising the information, interpreting and integrating information into clinical decision-making, formulating a written response, and, whenever possible, evaluating the impact of the answer.10 Health science librarians were already involved in teaching basic literature search skills to first-year students. During the second year of the PharmD curriculum, the most formative year for teaching EBDM principles, 2 lectures were added focusing on use of secondary literature sources and search strategies, as well as a 3-hour “hands-on” laboratory session conducted by health science librarians. Students were taught to frame their clinical inquiry question using the PICO (population/problem, intervention, comparison, outcomes) format. The laboratory exercises featured the assignment of 5 carefully constructed therapy questions prepared by the laboratory faculty members and librarians to give students practice in framing questions and searching the Cochrane library, evidence-based and expert opinion practice guidelines, and PubMed for secondary and primary sources of information. Critical appraisal of secondary sources of literature was also taught. Students had to formulate a written answer to the question in a standard format (evidence-based answer, evidence summary, and references). See Appendix 1 for an example question and PICO format. These skills were reemphasized in the drug literature review course in the spring semester of the second year, which focused more on primary literature evaluation.

With this foundation of basic EBDM skills, the next curriculum sequence focused on students developing skills for answering real-world clinical questions they received during 2 required practice experiences in the third year. Additionally, a health science librarian provided a 1-hour lecture on EBDM as a refresher. Students answered each of the clinical questions in a structured written format and the responses were evaluated by 1 coordinator using a standardized assessment tool.

In the fourth year of the program, pharmacy students completed five or six 2-month advanced practice experiences. For each of these practice experiences, students were also assigned a therapy question by their clinical instructors, using the same process to research and prepare a written response to the question. These written responses were evaluated with the same standardized assessment tool by the course coordinator or clinical instructors (practicing pharmacists) who had completed a training program for evaluating answers to clinical questions. The standardized assessment tool contained the criteria for clinical inquiry evaluation, which divided students’ grades into 75% for problem analysis and 25% for presentation format. The problem analysis component covered demonstration of the search strategy and appropriate literature sources, an evidence-based answer, and the depth and insight of the supporting information. The standardized assessment tool is available upon request.
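The 75%/25% weighting described above can be illustrated with a short sketch. Only the two weights come from the text; the function name, the 0 to 100 scale for each component, and the example scores are assumptions made for illustration, since the actual assessment tool is not reproduced here.

```python
# Illustrative sketch only: the weights are from the text, everything else
# (names, 0-100 component scale, example values) is assumed.
PROBLEM_ANALYSIS_WEIGHT = 0.75
PRESENTATION_WEIGHT = 0.25


def clinical_inquiry_score(problem_analysis_pct: float, presentation_pct: float) -> float:
    """Combine two component scores (each 0-100) into one overall percentage."""
    for value in (problem_analysis_pct, presentation_pct):
        if not 0 <= value <= 100:
            raise ValueError("component scores must be between 0 and 100")
    return (PROBLEM_ANALYSIS_WEIGHT * problem_analysis_pct
            + PRESENTATION_WEIGHT * presentation_pct)


# Hypothetical student: strong analysis, weaker presentation.
example = clinical_inquiry_score(problem_analysis_pct=88.0, presentation_pct=80.0)
print(example)
```

Under this weighting, a weak presentation lowers the overall grade far less than a weak problem analysis of the same magnitude.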

EVALUATION AND ASSESSMENT

This study was awarded an exemption by the Institutional Review Board of the UW-Madison Health Sciences. Because of the nature of the IRB approval for this research project, student grades were not linkable to the research survey data.

Three cohorts of student pharmacists (367 students were eligible) from 2005 to 2009 were followed, beginning with their second year for the classes of 2007, 2008, and 2009 in a 4-year PharmD program.

Survey Instruments and Written Clinical Inquiries

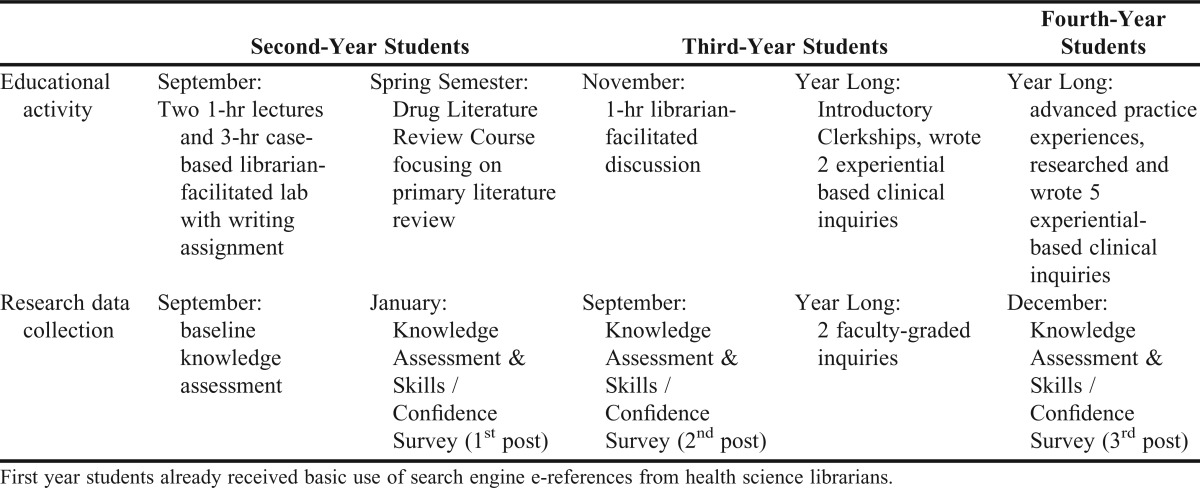

At the time of this study, the only validated instruments available were the Fresno and Berlin tools, which were validated for measuring EBDM competency in medical students, so evaluation instruments specific for this study were created.10 Second-year PharmD students were asked to complete an EBDM questionnaire at the beginning of the fall semester before beginning formal instruction to establish their baseline knowledge. To assess knowledge gained over time, this same questionnaire was administered at the end of the fall and spring semesters of the second year of the program, at the end of the fall and spring semesters of the third year of the program, and after the fourth-year objective structured clinical examination (OSCE). Additionally, students completed a self-assessment of their skills and confidence to apply EBDM principles at the end of each semester during their second and third years and on the day of the fourth-year OSCE. Table 1 summarizes the educational interventions and survey administration timeline.

Table 1.

Doctor of Pharmacy Evidence-Based Decision Making Educational Intervention Timeline

Second- and third-year students were offered extra credit points for completing the questionnaires and surveys. No inducements were used for fourth-year students completing survey instruments. Students coded their survey instruments (day of the month they were born, first 2 letters of the high school from which they graduated, and the last 3 digits of their social security number) so that their responses could be linked to survey instruments completed in the second, third, and fourth years of the program.
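As a rough illustration of how such self-generated codes allow anonymous linkage across survey waves, the sketch below builds a code from the three components named above and matches respondents between two waves. The exact formatting students used is not described, so the zero-padding, upper-casing, function name, and all example values are assumptions.

```python
# Illustrative sketch only: code layout and example data are assumed.
def linkage_code(birth_day: int, high_school: str, ssn_last3: str) -> str:
    """Build an anonymous code: birth day + 2 school letters + 3 SSN digits."""
    if not 1 <= birth_day <= 31:
        raise ValueError("birth_day must be between 1 and 31")
    letters = "".join(ch for ch in high_school if ch.isalpha())[:2].upper()
    if len(letters) < 2:
        raise ValueError("high_school must contain at least 2 letters")
    if len(ssn_last3) != 3 or not ssn_last3.isdigit():
        raise ValueError("ssn_last3 must be exactly 3 digits")
    return f"{birth_day:02d}{letters}{ssn_last3}"


# Hypothetical responses keyed by code: mean skill rating in two survey waves.
first_wave = {linkage_code(7, "Madison West", "042"): 3.1,
              linkage_code(15, "La Follette", "118"): 2.8}
final_wave = {linkage_code(7, "madison west", "042"): 3.9,
              linkage_code(22, "East", "560"): 4.0}

# Only respondents present in both waves can be analyzed as matched pairs.
matched = sorted(first_wave.keys() & final_wave.keys())
pairs = [(first_wave[code], final_wave[code]) for code in matched]
```

Normalizing case and padding before comparison reduces mismatches caused by students entering the same code slightly differently on different occasions.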

The questionnaire and survey instruments were pilot tested with small groups of third- and fourth-year students during summer 2005 to ensure adequate interpretation of questions. The knowledge questionnaire was originally composed of 17 multiple-choice questions. Based on the question analysis postadministration, 3 poorly written (ie, lacked one clear answer) questions were omitted. The final questionnaire included items that came primarily from the lecture content.

The survey instruments assessed students’ self-reported skills including: current level of skills in using EBDM principles (7 items), self-efficacy (ie, confidence) for using skills to answer clinical questions (10 items), and usefulness of various learning activities (7 items). Survey items were answered using a 5-point scale for current skills and usefulness (1=poor; 2=fair; 3=good; 4=very good; 5=excellent), and self-efficacy (1=not at all confident; 2=not very confident; 3=moderately confident; 4=very confident; 5=extremely confident). Using baseline student responses, the current skills scale exhibited a Cronbach alpha estimate of internal consistency of 0.87, and the confidence scale exhibited an alpha of 0.92. Using the final post responses, the calculated Cronbach alpha values for the current skills and confidence scales were 0.84 and 0.57, respectively.
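For readers unfamiliar with the internal consistency estimate reported above, the following sketch computes Cronbach alpha from a respondents-by-items matrix using the standard formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores). The study's analyses were run in SPSS; this function, its name, and the toy ratings are illustrative assumptions, not the authors' code or data.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach alpha for rows of respondents, each with k item scores."""
    if len(responses) < 2:
        raise ValueError("need at least 2 respondents")
    k = len(responses[0])
    if k < 2:
        raise ValueError("need at least 2 items")
    if any(len(row) != k for row in responses):
        raise ValueError("every respondent must answer all items")
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's summed scale score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical 5-point ratings from 5 respondents on a 3-item scale.
toy_ratings = [
    [3, 3, 4],
    [2, 3, 2],
    [4, 5, 4],
    [5, 4, 5],
    [3, 2, 3],
]
alpha = cronbach_alpha(toy_ratings)
```

Values closer to 1 indicate that the items vary together across respondents, which is the sense in which the 0.87 and 0.92 baseline estimates indicate consistent scales.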

Students were also asked if they felt that understanding evidence-based medicine principles would be necessary for their future pharmacy practice using a 5-point scale (1=definitely not; 2=probably not; 3= not sure; 4=probably yes, 5=definitely yes).

As evidence of objective data for evaluation of student EBDM skills, overall student performance on 2 written clinical inquiries during their third year were collected and are presented in aggregate. Students in their fourth year completed clinical inquiries on every practice experience. For research purposes, students’ aggregate scores were examined from their final 2 spring semester advanced clinical practice experiences. The 2 faculty members who graded the clinical inquiries used the same format requirements and standardized grading tool.

Data Analysis and Management

SPSS 19 software was used to analyze the survey data. For all statistical tests, a significance level of 0.05 was used. Descriptive statistics were used to describe the student population, survey responses, and knowledge scores. The significance of change scores was assessed using paired t tests. Trend analysis was carried out to assess whether the average student scores on the knowledge assessment over 4 measurement points significantly increased or decreased. Specifically, linear trends were tested across all individual items. Correlation analysis was also performed to evaluate the relationship between the Current Skills scale and the Self-Efficacy scale.
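The paired t test applied to the change scores can be sketched as follows. The analyses in this study were performed in SPSS, so this is only a minimal illustration of the statistic itself; the function name and the example ratings are hypothetical. Obtaining a p value additionally requires the t distribution, for example from a statistics package such as SciPy (`scipy.stats.ttest_rel`).

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre: list[float], post: list[float]) -> tuple[float, int]:
    """Return the paired t statistic and degrees of freedom for matched scores."""
    if len(pre) != len(post):
        raise ValueError("pre and post must contain the same students")
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least 2 matched pairs")
    sd = stdev(diffs)  # sample standard deviation of the change scores
    if sd == 0:
        raise ValueError("change scores have zero variance")
    t_statistic = mean(diffs) / (sd / sqrt(n))
    return t_statistic, n - 1


# Hypothetical matched ratings for 4 students at two survey administrations.
first_survey = [1, 2, 3, 4]
final_survey = [2, 4, 3, 6]
t_statistic, degrees_of_freedom = paired_t(first_survey, final_survey)
```

Because the test operates on within-student differences, only students whose coded survey instruments could be linked across administrations contribute to it.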

EBDM Knowledge Questionnaire

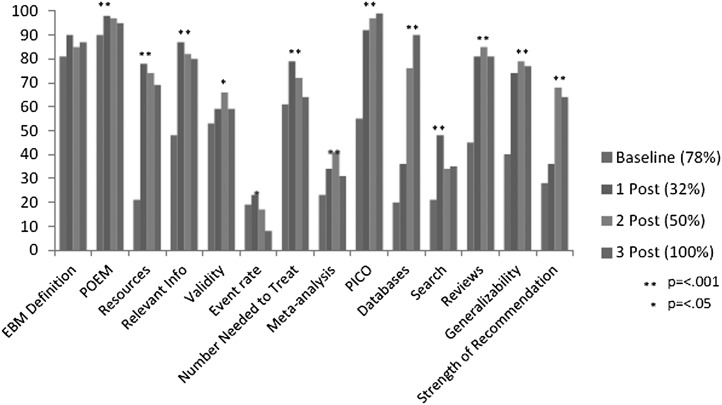

Knowledge questionnaire results are presented in Figure 1. A trend analysis was used to identify when students performed significantly better in the sequence of learning activities and whether knowledge gains were maintained. Of the 14 items, significant gains were seen for 11 items on student knowledge (p<0.001). Students performed well on the definition of EBDM and poorly on the item that required them to calculate event rate. Students performed well on the patient-oriented evidence that matters (POEM) question, recognizing that an evidence-based approach honors outcome measures that matter most to patients (eg, morbidity, mortality, cost). The highest overall scores were received on the PICO question. PICO was also the required format for all clinical inquiries submitted for required courses in the EBDM sequence. The question related to choosing specific databases also showed significant gains in the percent of students answering the question correctly throughout the time points. Students regressed in 3 areas.

Figure 1.

Trend analysis of EBDM 14-item knowledge assessment using percent of student pharmacists (N=367) answering the question correctly at 4 different time points (% response rate).

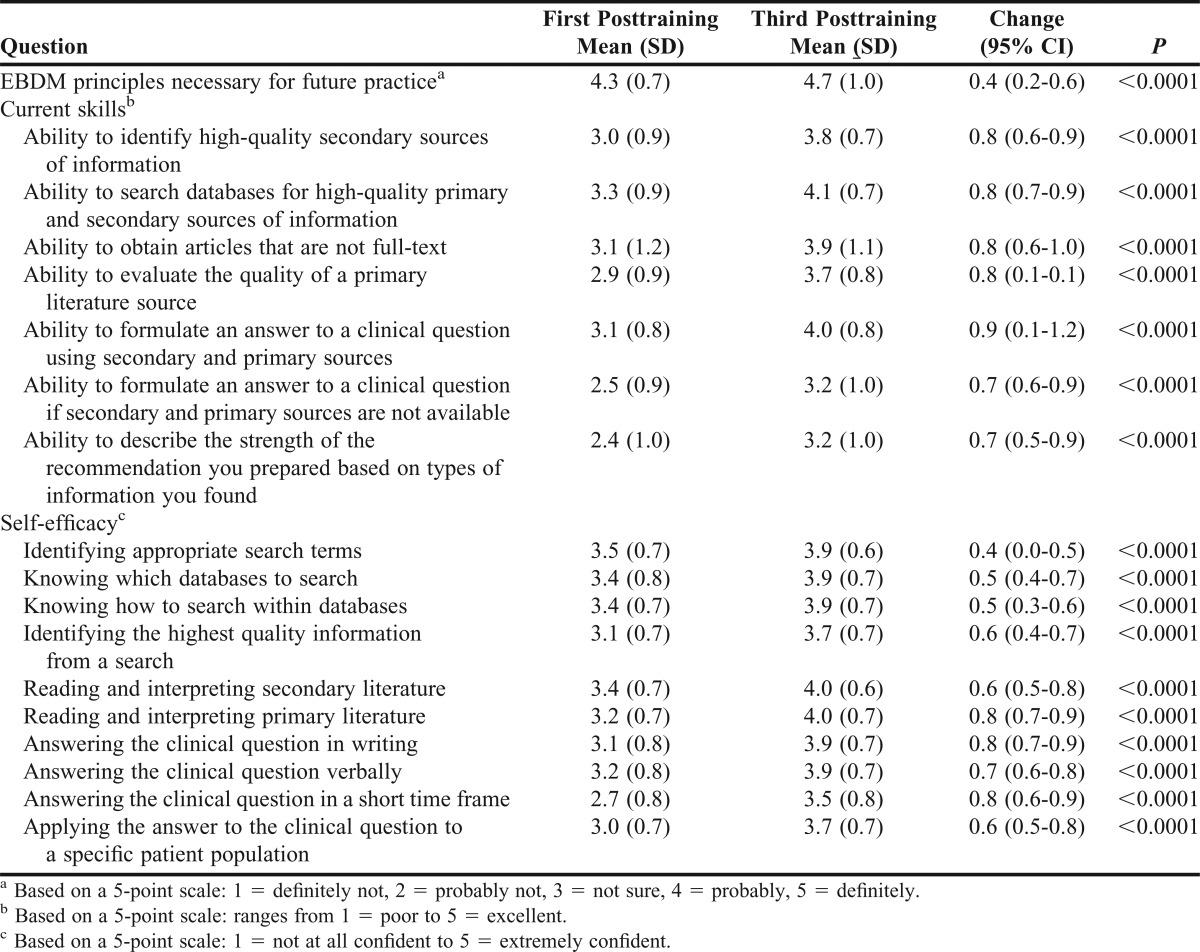

EBDM Self-Reported Skills and Confidence

Although response rates varied for each of the survey administration times and response rates for the second post-training survey instrument were poor, 43% (158) of the entire student cohort completed survey instruments at the first and third post-training data collection points. Results for the linkable survey instrument administered at the first and third time points after the initial training are presented in Table 2. Table 2 lists students’ mean scores for the first post-training survey instrument and the third (final) post-training survey instrument to analyze the matched longitudinal changes for students. A significant increase was noted in the 1-item rating of whether EBDM skills were necessary for their future as pharmacists (p<0.0001) as well as the 7-item current EBDM skill scores (p<0.0001). For self-efficacy assessments, significant increases (p<0.0001) for all items were observed.

Table 2.

Evidence-Based Medicine Application, Skills and Confidence Measures of Pharmacy Students (N=158)

The lowest mean scores were for students’ ability to formulate an answer if secondary and primary sources were not available (3.2 ± 1.0), and for students’ ability to describe the strength of a recommendation (3.2 ± 1.0). The greatest improvements in scores occurred in students’ perception of their ability to formulate an answer to a clinical question using secondary and primary sources, search databases, and answer a clinical question in writing. The lowest self-efficacy score was for students’ confidence in answering the clinical question in a short timeframe.

The relationship between students’ self-assessed current skills and their self-efficacy was investigated using the Pearson product-moment correlation coefficient. A positive correlation between current skills and confidence was found at both postintervention time points (significant at the 0.001 level), with coefficients ranging from moderate (0.401) to strong (0.909). This finding supports earlier research and contributes to construct validity.13
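The Pearson product-moment correlation coefficient used for this comparison can be computed as in the sketch below. The function name and the example scale scores are hypothetical and are shown only to make the calculation concrete; they are not study data.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length of at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant data")
    return sxy / sqrt(sxx * syy)


# Hypothetical mean scale scores for 6 students (current skills vs confidence).
skills = [2.9, 3.4, 3.0, 4.1, 3.7, 4.4]
confidence = [3.1, 3.3, 2.8, 4.0, 3.9, 4.6]
r = pearson_r(skills, confidence)
```

The coefficient ranges from -1 to 1, with values near 1 indicating that students who rate their skills higher also tend to report higher confidence.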

Written Clinical Inquiries

Third-year students completed 2 inquiries. Written clinical inquiry scores were available for the first 2 study cohorts (N=220); data for the third study cohort were not available due to a change in the course management system. The first inquiry was graded consistently by the same faculty member and based on a passing score of 70% or better, and 91.4% of students performed satisfactorily, with an average score of 85.0% ± 10.3%. The average score on the second written clinical inquiry improved (88.2% ± 8.5%), with 3% of students not showing improvement. One hundred five clinical inquiries by fourth-year students were examined for all 3 cohorts. Not all students completed their final 2 practice experiences in this coordinator’s clerkship region; therefore, the sample number is smaller. The average scores were 88.4% ± 6.4% and 89.4% ± 7.6% for the final 2 practice experiences of the academic year. All students received a grade greater than 70% on either clinical inquiry. These results suggest that students progressed in the critical EBDM skills and are consistent with their self-reported improvement in skills and confidence.

DISCUSSION

This study provided strong evidence for the effectiveness of teaching EBDM using a longitudinal curriculum design and evaluation process. Students were taught to follow the classical EBDM framework of effectively searching the literature, critically appraising the information, interpreting and integrating information into clinical decision-making, formulating a written response, and, when feasible, evaluating the impact of the answer.

In reviewing the knowledge questionnaire results, we found that the percent of students answering the question related to choosing specific databases correctly increased significantly over the 3 time points, which can probably be attributed to students’ increased familiarity with and use of databases to answer clinical inquiries. Student regression occurred in 3 areas, which included meta-analysis. Although meta-analysis was covered in 1 lecture, students may not have had subsequent experiences using meta-analysis to reinforce the information they learned in the lecture. Some students may have been given questions by their preceptor where meta-analysis or even primary literature was available. Thus, those students who had to read and think about meta-analysis may have retained the information better.

Repetition is a valuable teaching tool. Students’ performance using secondary and primary sources, searching databases, and answering a clinical question in writing improved because they were given multiple opportunities to practice and hone these skills. The majority of students’ ability to write clinical inquiries improved. There were times when the questions students received from practice sites did not have secondary and primary literature sources available to formulate the answer. This challenge often required students to reevaluate whether they framed the questions appropriately, performed adequate searches, and applied analytical skills, sometimes prompting them to use faculty office hours to address questions.

As Ilic concluded, demonstrating EBDM competency is complex and one method of teaching and evaluation may not sufficiently accomplish this.10 When reflecting upon this curriculum, 4 of the 5 framework elements were covered quite well. The final element of the framework, the continuous quality improvement measure of evaluating the impact of the answer, was informally assessed in some cases. Some clinical inquiries were assigned to evaluate new medications considered for institutional formularies. In many of these cases, students were able to see the impact of their work. Additionally, more than 30 inquiries written by third- and fourth-year students have been peer- and editorially reviewed and published as Help Desk Answers in Evidence Based Practice, a publication of the Family Physicians Inquiries Network.12

An additional strength of this study is that it included 3 large student cohorts (130-140 students per year) and followed them over 3 years of the pharmacy program. This allowed for extrapolation of the results to verify and improve the curriculum. The teaching methods were sequenced and included repetition of written assignments to answer therapy questions generated in practice sites, sometimes providing students with opportunities to see how their answers were used. Students received written and sometimes verbal feedback from the assessor. Based upon consistent survey results, curriculum revisions were made, adding training about the strength of the recommendation and use of tertiary sources for background information.

The findings suggest that a combination of instructional strategies enhanced students’ perceived self-efficacy. These findings are consistent with Bandura’s Self-Efficacy Theory, which posits that higher self-efficacy can motivate learners to master the skills necessary for proficient performance and suggests that a combination of instructional strategies can enhance the learner’s self-efficacy and the likelihood that the learner will transfer these skills to practice.14 Although this study did not determine which strategies within the longitudinal training were most effective, the interactive learning activities support the skill development necessary for pharmacy practitioners to be confident in a skill on which other members of the health care team rely.

Faculty members play a critical role when students need individualized attention. Modeling and explaining problem-solving approaches to EBDM at the time the student is struggling with the question is a powerful, “just-in-time” teaching mechanism for students to gain critical skills. The experiential program that oversees students’ pharmacy practice experiences in Wisconsin has developed and implemented a training program for clinical preceptors to provide “just-in-time” guidance and feedback to students as they formulate answers to clinical questions. This has also decreased faculty workload because many of the clinical preceptors also assist in grading the fourth-year clinical inquiries using the standardized online tool.

Despite positive outcomes, the study had several limitations. Because the knowledge assessment and survey instruments were not mandatory and did not contribute to student grades, some longitudinal data points were limited and excluded a portion of students from the analysis. This was a cohort study design and therefore not robust enough to evaluate best practice. Because the EBDM principles and skills are embedded in various courses and activities, this curriculum was dependent on other faculty members to embrace the importance of teaching and assessing this skill. Considerable staff resources (about 15 to 20 minutes for each clinical inquiry) are required for grading and providing feedback to students about their performance.

The 2 lectures that were provided by faculty members in the fall of the second year and the refresher course provided by the librarian in the third year have been digitized for students. Digital technology will continue to be incorporated; these tutorials provide real-time, on-demand learning for students. Future research will explore whether similar results (ie, EBDM learning and skills) can be achieved with less curricular repetition. The current overarching curriculum uses continuing professional development principles that have required students to be more self-directed and reflective on their abilities. With the school’s newly implemented pre-advanced pharmacy practice experiences assessment criteria, the robust components of the standardized grading tool help identify students whose EBDM skills are not satisfactory and who need remediation.

SUMMARY

This study demonstrated a successful integration of EBDM throughout the PharmD curriculum, suggesting that a dedicated course on EBDM is not only unnecessary but perhaps undesirable, as evidence-based decision making, like other skills, can effectively be taught using repetition, real examples, and feedback. Future directions will be to determine if a more parsimonious longitudinal approach could be equally effective.

ACKNOWLEDGEMENTS

Funding was received through the University of Wisconsin–Madison School of Pharmacy Practice Division Research Fund to support Web-based survey administration and statistical analyses. We thank the librarians, Gerri Wanserski, Christopher Hooper-Lane, and Rhonda Sager, and fellow faculty partner, Denise Walbrandt Pigarelli, for their contributions to the EBDM curriculum.

Appendix 1. Example Question and PICO Format

You are a 27-year-old pregnant woman in the third trimester. You have been diagnosed with gestational diabetes and have tried to use diet and exercise for glycemic control. Unfortunately, diet and exercise alone are not sufficient and your physician has recommended starting medication therapy.

Your question for the pharmacist: Are oral agents as safe and effective for the treatment of gestational diabetes as insulin?

P - pregnant women with gestational diabetes requiring medical management

I - insulin

C - oral hypoglycemic agents (metformin, glyburide, etc)

O - glycemic control, side effect, fetal effects

Searchable terms: gestational diabetes, medication, insulin, metformin, sulfonylureas, thiazolidinedione.

REFERENCES

1. Accreditation Council for Pharmacy Education. Chicago: 2011. Accreditation standards and guidelines. http://www.acpe-accredit.org/standards/default.asp. Accessed September 13, 2012.

2. American Association of Colleges of Pharmacy. Center for the advancement of pharmaceutical education. doi: 10.5688/aj700479. http://www.aacp.org/resources/education/Pages/CAPEEducationalOutcomes.aspx. Accessed September 13, 2012.

3. Liabsuetrakul T, Suntharasaj T, Tangtrakulwanich B, Uakritdathikarn T, Pornsawat P. Longitudinal analysis of integrating evidence-based medicine into a medical student curriculum. Fam Med. 2009;41(8):585–588.

4. Bradley P, Oterholt C, Herrin J, Nordheim L, Bjorndal R. Comparison of directed and self-directed learning in evidence-based medicine: a randomized controlled trial. Med Educ. 2005;39(10):1027–1035. doi: 10.1111/j.1365-2929.2005.02268.x.

5. Lai NM, Teng CL. Competence in evidence-based medicine of senior medical students following a clinically integrated training programme. Hong Kong Med J. 2009;15(5):332–338.

6. West CP, McDonald FS. Evaluation of a longitudinal medical school evidence-based medicine curriculum: a pilot study. J Gen Intern Med. 2008;23(7):1057–1059. doi: 10.1007/s11606-008-0625-x.

7. Thomas PA, Cofrancesco J Jr. Introduction of evidence-based medicine into an ambulatory clinical clerkship. J Gen Intern Med. 2001;16(4):244–249. doi: 10.1046/j.1525-1497.2001.016004244.x.

8. Dorsch JL, Aiyer MK, Meyer LE. Impact of an evidence-based medicine curriculum on medical students’ attitudes and skills. J Med Libr Assoc. 2004;92(4):397–406.

9. Aronoff SC, Evans B, Fleece D, Lyons P, Kaplan L, Rojas R. Integrating evidence based medicine into undergraduate medical education: combining online instruction with clinical clerkships. Teach Learn Med. 2010;22(3):219–223. doi: 10.1080/10401334.2010.488460.

10. Ilic D. Assessing competency in evidence based practice: strengths and limitations of current tools in practice. BMC Med Educ. 2009;9:53. doi: 10.1186/1472-6920-9-53.

11. Ebell M. Information at the point of care: answering clinical questions. J Am Board Fam Pract. 1999;12(3):225–235. doi: 10.3122/jabfm.12.3.225.

12. Family Physicians Inquiries Network. http://www.fpin.org/mc/page.do;jsessionid=AC402BD86325AD5D12C9C5D60AD3B36E.mc0?sitePageId=99292. Accessed September 13, 2012.

13. Martin BA, Bruskiewitz RH, Chewning BA. The effect of a tobacco cessation continuing professional education program on pharmacists’ confidence, skills, and practice behaviors. J Am Pharm Assoc. 2010;50(3):9–16. doi: 10.1331/JAPhA.2010.09034.

14. Bandura A. Self-efficacy: the Exercise of Control. New York: WH Freeman; 1997.