Abstract

What is the relationship between variability in ongoing brain activity preceding a sensory stimulus and subsequent perception of that stimulus? A challenge in the study of this key topic in systems neuroscience is the relative rarity of certain brain ‘states’—left to chance, they may seldom align with sensory presentation. We developed a novel method for studying the influence of targeted brain states on subsequent perceptual performance by online identification of spatiotemporal brain activity patterns of interest, and brain-state triggered presentation of subsequent stimuli. This general method was applied to an electroencephalography study of human auditory selective listening. We obtained online, time-varying estimates of the instantaneous direction of neural bias (towards processing left or right ear sounds). Detection of target sounds was influenced by pre-target fluctuations in neural bias, within and across trials. We propose that brain state-triggered stimulus delivery will enable efficient, statistically tractable studies of rare patterns of ongoing activity in single neurons and distributed neural circuits, and their influence on subsequent behavioral and neural responses.

Keywords: spontaneous activity, prestimulus, online, brain-computer interface, auditory

Introduction

Our interpretation of sensory stimuli can depend heavily on instantaneous levels of attention to specific locations or stimulus features [1], presumably reflected in ongoing patterns of endogenous brain activity, or ‘brain states’, at multiple temporal and spatial scales [2–6]. Much progress has been made in understanding the perceptual effects of trial-to-trial variability in time-locked, post-target sensory brain responses (e.g. [7–10]). Interest in the influence of pre-target, ongoing brain activity on perception is growing rapidly [3, 11–31]. However, systematic study of rare, endogenous states remains elusive because these states rarely coincide with target stimulus presentation, and are not under the control of the experimenter.

Here, we propose a general approach for studying the influence of targeted aspects of ongoing activity on sensory perception with improved efficiency. Inspired by brain-computer interface (BCI) algorithms for motor control [32], we employed techniques for online analysis of spatio-temporal brain activity patterns, and triggered subsequent target stimuli contingent on the presence of ongoing brain states of interest. In this way, the behavioral influence of rare pre-target brain states can be assessed repeatedly in a time-locked fashion (Figure 1A). Our proposed method, which triggers stimuli online following arbitrary ongoing spatio-temporal activity patterns of interest, generalizes methods previously described in the limited context of online triggering of sensory stimuli at a specific phase of an ongoing brain oscillation [18, 19, 33, 34].

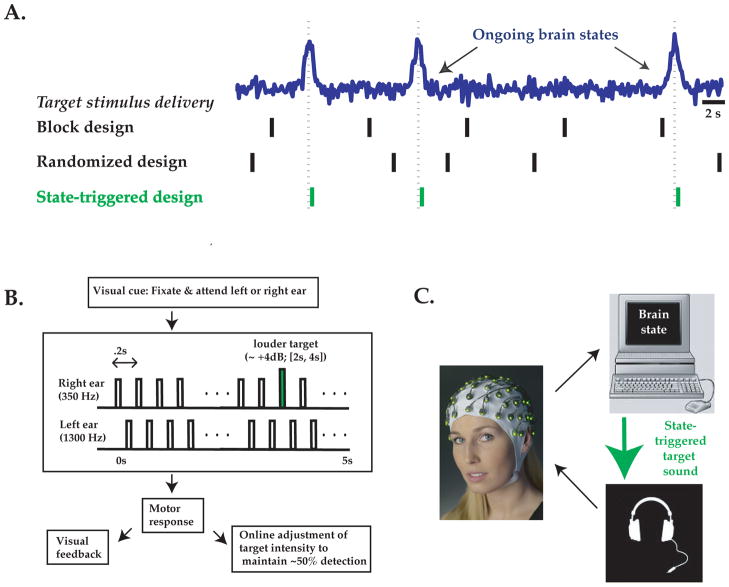

Figure 1. Brain state-triggered target presentation: General approach and application.

A: Neurophysiology experiments often involve target stimuli presented at constant or random intervals (‘block’ or randomized design, black vertical lines), and address stimulus interactions with ongoing brain states (blue schematic timecourse) using offline analyses. To more efficiently assess the influence of ongoing brain activity on behaviorally relevant stimuli, we propose presentation of stimuli triggered online by particular brain states of interest (green lines). B: We tested this approach using an auditory detection task. Subjects were presented with 5 Hz trains of tones (black rectangles) to the right ear (at 350 Hz) and left ear (at 1300 Hz, 100 ms delayed onset). Subjects were cued to attend to the right or left ear and to detect slight increases in intensity of a deviant tone (green rectangle) at the cued ear. Detected deviant tones were reported after the stimulus train ended, visual feedback was provided, and deviant tone intensity threshold was updated to maintain 50% detection performance for both attended ears. C: Electroencephalographic voltage potentials (EEG) were recorded from 29 electrodes on a whole-head EEG cap (Brainproducts.com) and sent to a computer for online analysis of responses to sounds occurring in the previous 1.25 s (i.e. sliding window of six left/right tone pairs). Emergence of a ‘brain state’ corresponding to strong directed neural bias towards stimuli at the cued or non-cued ear triggered immediate presentation of a deviant tone at the cued ear.

As a proof of principle, we have applied this general method to the study of ongoing brain dynamics in humans during a selective listening task using electroencephalography (EEG). Successful implementation of brain state-triggered target presentation requires high-quality estimates of instantaneous brain states of interest within single trials. As described below, the spatial detection task we employed generates robust, selective biasing of average evoked responses to sounds presented at the attended vs. non-attended ear [35], making it well suited to studying the perceptual effects of neural bias within and across single trials. The largest auditory attentional modulation (and largest signal-to-noise ratio) is obtained in paradigms involving difficult target stimuli and fast sound repetition rates [36–38]. One such auditory EEG paradigm involves presentation of two rapid, independent streams of standard tones of differing pitch at the left and right ear, with randomized inter-tone intervals (mean: ~200 ms) [36]. In that study, subjects were cued to attend to a particular ear and detect rare ‘deviant’ target sounds of slightly different intensity. When subjects attended to a particular ear, the average neural response to a sound presented at that ear doubled in amplitude (response latency of ~80–150 ms post-sound onset, likely localized to auditory cortex [39, 40]). However, because of the randomized inter-tone intervals, studies of this kind could not assess drifts in ear-specific neural response bias towards or away from the cued ear within or across single trials. More generally, such studies typically lack the statistical power to examine the effects of target presentation during instants of largest neural bias towards processing of inputs from a given ear, because such instants are rare and unpredictable.

Here, we modified the above paradigm by presenting alternating sounds to the right and left ears with a fixed inter-tone interval (Figure 1B, C). By separating in time the contributions of left- and right-ear tones to ongoing brain responses, we could obtain a running estimate of the laterality of neural bias towards processing sounds from a given ear. We then asked: Do fluctuations in neural bias within and across identically cued trials influence behavioral detection of subsequent stimuli? We devised a simple, robust algorithm for online triggering of ‘deviant’ target stimuli of slightly differing intensity upon detection of an instantaneous ‘state’ of strongly lateralized bias towards or away from the cued ear (Figure 1B). We found that, for identical cue conditions, triggering target stimulus presentation immediately following a strong, transient state of correctly directed bias influenced behavioral performance, increasing both detection rates for target stimuli and false-alarm rates. Simulations showed that brain state-triggered stimulus presentation can increase, by up to an order of magnitude, the incidence of a stimulus co-occurring with a brief, rare brain state. This general method should facilitate the study of influences of ongoing patterns of cellular and circuit activity on behavioral and neural responses, as well as the development of cortically-guided sensory prostheses.

Materials and Methodology

We conducted a single session of simultaneous psychophysics and EEG recordings in 21 healthy adult volunteers (17 males) following prior informed consent. All procedures were in accordance with the Coordinating Ethics Committee of the Hospital District of Helsinki and Uusimaa.

Auditory Stimuli and Psychophysics

Stimuli were presented in a sound-attenuated room using high-quality headphones (Sennheiser HD590) at a sampling rate of 48 kHz. We presented two perceptually distinct 5 Hz trains of ‘standard’ tone pips to the left and right ear for 5 s (left-ear tones: 1350 Hz; right-ear tones: 350 Hz; Presentation software, Neurobehavioral Systems; Figure 1B), designed to allow concerted focus on a given ear. We staggered the left- and right-ear trains by 100 ms in order to maximally separate in time the evoked responses driven by left- and right-ear stimuli. Tones were 12 ms long, including 5 ms half-sinusoid tapers at either end.
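The stimulus parameters above can be illustrated with a short synthesis sketch. It is not the Presentation script used in the study; the function names are hypothetical, and the ‘half-sinusoid’ taper is assumed to be a quarter-period sine ramp rising from 0 to 1 (a raised-cosine ramp would be an equally plausible reading). Tone frequency is left as a parameter.

```python
import numpy as np

FS_AUDIO = 48_000  # audio sampling rate (Hz), as stated in the text


def tone_pip(freq_hz, dur_s=0.012, taper_s=0.005, fs=FS_AUDIO):
    """Return a dur_s-long sine tone with taper_s on/off ramps.

    ASSUMPTION: the 'half-sinusoid' taper is read as a quarter-period sine
    ramp (0 -> 1); a raised-cosine ramp is an equally plausible reading.
    """
    n = int(round(dur_s * fs))                 # 576 samples at 48 kHz
    n_taper = int(round(taper_s * fs))         # 240 samples
    t = np.arange(n) / fs
    ramp = np.sin(0.5 * np.pi * np.arange(n_taper) / n_taper)
    envelope = np.ones(n)
    envelope[:n_taper] = ramp                  # fade in
    envelope[-n_taper:] = ramp[::-1]           # fade out (mirror image)
    return envelope * np.sin(2 * np.pi * freq_hz * t)


def onset_schedule(train_dur_s=5.0, rate_hz=5.0, stagger_s=0.100):
    """Onset times (s) of the right-ear train and the 100 ms-delayed left-ear train."""
    n_tones = int(round(train_dur_s * rate_hz))   # 25 tones per ear in 5 s
    right = np.arange(n_tones) / rate_hz
    left = right + stagger_s
    return right, left
```

For example, `right, left = onset_schedule()` yields right-ear onsets at 0, 0.2, ..., 4.8 s and left-ear onsets 100 ms later, so that each ear's tones fall midway between the other ear's tones.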

One major difference between previous ‘dichotic’ listening tasks and the task employed in the current study is our use of fixed 5 Hz trains of standard tones to each ear, shifted by 100 ms. Constant timing of left/right-ear tone pairs was necessary to obtain an ongoing estimate of ear-specific neural bias, which would otherwise be confounded by variable inter-tone intervals, known to affect response magnitude [41]. This modification also enabled us to assess dynamics of neural bias within an individual trial [42]. Note that, because stimuli were presented in trains, the presumed response to an auditory stimulus at one ear (e.g. at 120 ms latency, Figure 2A) may also contain a smaller response component evoked by the preceding stimulus at the other ear (at 220 ms latency).

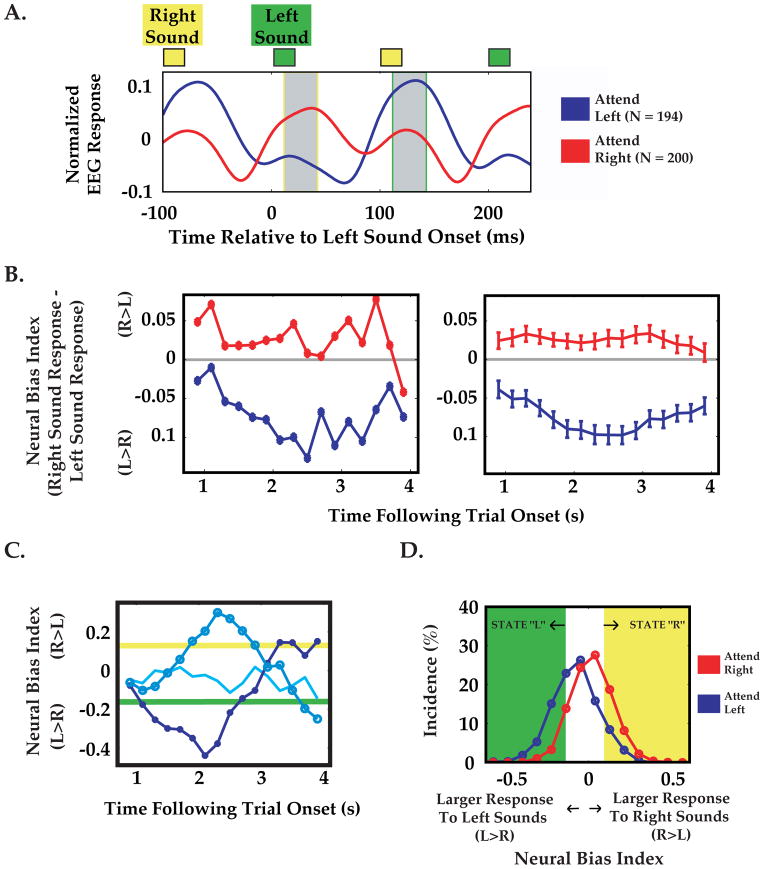

Figure 2. Dynamic measurement of neural bias ‘states’: Analysis stream for a typical subject.

A: Average EEG response to identical left-ear tones (green square, top), for attend-left (blue trace) and attend-right (red trace) cue conditions. Briefly, responses were generated by deriving a single time series (weighted average of the 29 EEG channels) for each trial, and then averaging across the last twenty tones in each trial ([1 s, 5 s] post-onset) and across all trials of a given cue condition. Note that the average response to a left-ear sound (at ~120 ms latency, gray with green border) is much larger when attention is cued to the left ear. Similarly, following right-ear sounds (yellow square), responses (gray with yellow border) were greater for the attend-right cue condition. The neural bias index in subsequent panels was calculated as the difference between responses to a pair of right/left tones. B: Left, average neural bias index for individual pairs of tones at various times following trial onset (from data in A). Right, same data smoothed with a boxcar filter (1.25 s duration). Error bars indicate standard error. Note the small increase in response modulation during the interval in which targets were expected ([2 s, 4 s]). C: Using this boxcar filter, fluctuations in neural bias could be observed within single trials. Despite identical cue conditions (attend-left), three different single trials indicate strong moments of leftward bias (darkest blue, filled circles), rightward bias (lighter blue, larger circles), or no strong selective bias (lightest blue, no circles). D: Distribution of neural bias indices for various moments in single trials, for attend-left (blue) and attend-right (red) cue trials. We labeled moments of strong left-ear and right-ear selective bias as “L” and “R” states, respectively, as illustrated by green and yellow areas in D and corresponding thresholds in C (see Materials and Methods).

We adjusted the auditory intensity of standard tones by first determining ear- and tone-specific hearing thresholds using a staircase procedure. Subsequently, tone intensity in either ear was set to 60 dB above hearing threshold. Due to differential perceptual sensitivity to high- and low-frequency tones, tone intensity was further adjusted (< 4 dB) until subjects reported equal perceived intensity in either ear, minimizing potential for general perceptual bias to a given ear.

Subjects were presented with a visual cue (large white arrow, persisting for the duration of the trial) indicating the ear to which the subject should attend. After 400 ms, separate 5 Hz trains of standard tones were presented for 5 s to the left and right ear, staggered by 100 ms so that ear-specific response components could be identified. In a subset of trials (~80%), one of the standard tones between 2 s and 4 s post-train-onset was replaced by a deviant target tone of identical frequency but slightly higher intensity (Figure 1B). After the stimulus train ended, subjects had 1200 ms to raise their right index finger to report detection of the deviant tone. The delay of 1–3 s between target tone and motor response was important to ensure that pre-stimulus activity was not influenced by motor preparation. The possible trial outcomes were hit (correct detection), miss, false alarm (F.A.: finger lift when no deviant was present) and correct reject (C.R.: no finger lift when no deviant was present). Subjects then received visual feedback (cue arrow turns red for miss/false alarm, green for hit/correct reject; 200 ms duration).

A typical experiment lasted 1.5 hours and consisted of 1–2 training runs, followed by 6–8 test runs. Each run lasted approximately seven minutes and consisted of 48 target trials (24 trials cued to each ear), and 4–15 no-target ‘catch’ trials (see below). The sequence of trials consisted of alternating blocks of six triggered trials cued to the same ear, in order to facilitate sustained focused attention in a given direction, and to decrease spurious shifts in focus related to novel cue information. Breaks between runs (2–5 minutes) ensured sustained concentration throughout the experiment.

The task was designed to yield large numbers of both ‘hit’ and ‘miss’ responses for statistical purposes; a difficult task also ensured large cue-dependent modulation of neural responses. To this end, we adjusted the intensity of deviant tones online, separately for left- and right-ear tones, to maintain a ~50% success rate ((hits + correct rejects)/(all trials)). At the onset of the first training run, a clearly audible (> 8 dB louder) deviant target tone replaced a standard tone at a random time during the target period (2–4 s post-train-onset). For each subsequent trial, if three hits and/or C.R.s occurred in a row, we increased task difficulty by reducing deviant intensity by 1 dB (0.25 dB during test runs). Likewise, three misses and/or F.A.s in a row resulted in a 1 dB (0.25 dB during test runs) increase in intensity. This procedure prevented long stretches of only hits or only misses, which could not contribute to assessments of the influence of local fluctuations in brain state on local differences in behavioral response. Training runs were therefore crucial for subjects to reach a fairly stationary performance ‘plateau’, at which point only small intensity adjustments were made due to residual effects of learning or fatigue.
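The adaptive rule above is a symmetric staircase (three consecutive correct outcomes make the deviant quieter, three consecutive errors make it louder), which tends toward the ~50% success rate. A minimal sketch of one per-ear staircase follows; the class name is hypothetical, and resetting the run counters after each step, as well as the absence of any floor or ceiling on the level, are assumptions not stated in the text.

```python
from dataclasses import dataclass, field


@dataclass
class Staircase:
    """Per-ear 3-up/3-down staircase on deviant intensity (dB above standard).

    ASSUMPTIONS: run counters reset after every step; no floor or ceiling on level.
    """
    level_db: float                 # e.g. > 8 dB at the start of the first training run
    step_db: float = 1.0            # 1 dB in training runs, 0.25 dB in test runs
    run_length: int = 3             # three consecutive outcomes of one kind trigger a step
    _n_correct: int = field(default=0, init=False, repr=False)
    _n_error: int = field(default=0, init=False, repr=False)

    def update(self, correct: bool) -> float:
        """correct=True for a hit or correct reject, False for a miss or false alarm."""
        if correct:
            self._n_correct, self._n_error = self._n_correct + 1, 0
            if self._n_correct == self.run_length:
                self.level_db -= self.step_db   # quieter deviant: harder
                self._n_correct = 0
        else:
            self._n_error, self._n_correct = self._n_error + 1, 0
            if self._n_error == self.run_length:
                self.level_db += self.step_db   # louder deviant: easier
                self._n_error = 0
        return self.level_db
```

Because intensity was tracked separately for each ear, one instance per ear would be kept, e.g. `{"left": Staircase(8.0), "right": Staircase(8.0)}`, with `step_db` set to 0.25 for test runs.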

EEG Brain-Computer Interface

We modified a low-noise, 32-channel EEG brain-computer interface system previously used for online brain imagery-guided cursor control in healthy subjects and tetraplegic patients [43, 44]. The EEG cap was positioned on the subject’s head with a 20 cm separation between the nasion and Cz electrode, and electrode impedances were decreased using conductive paste. Placement of the cap was accelerated by the presence of multi-colored LED lights for each electrode (ActiCap) providing rapid feedback of whether the impedance was below the required 5 kOhm threshold, resulting in setup times of less than 15 minutes. EEG acquisition involved ‘active shielding’ for automatic reduction of estimated line noise and other external artifacts (ActiCap), followed by digitization at 500 Hz (BrainAmp amplifier and BrainVision Recorder software, BrainProducts). These technological advances were critical in facilitating the use of EEG in brain-triggered stimulus presentation.

To enable online estimation of brain states, the Recorder software passed EEG data from the last 2 s to MATLAB (Mathworks) once every 20 ms via a custom-written C/C++ control program and a TCP/IP connection. A MATLAB program then determined whether a brain state of interest had recently occurred; if so, the C/C++ program sent a TTL trigger back to the stimulus computer, causing the next standard tone to be replaced by a deviant-intensity tone with identical timing (see below). The main C/C++ control program for the sensory brain-computer interface consisted of three threads: one for program execution, one for data acquisition from BrainVision Recorder through TCP/IP, and one for signal processing and classification in MATLAB through a MATLAB Engine connection [44]. As described in Figure 1, the BCI received triggers for each stimulus tone presented (Presentation software), along with the most recent 2 s block of amplified EEG data, which was then band-pass filtered (2–20 Hz, 4th-order Butterworth filter). To decrease artifacts, we instructed subjects to relax their facial muscles and to blink between trials. We identified eye blink, saccade and muscle artifacts as epochs in which the range (maximum minus minimum, in [−1.5 s, 0 s]) of the potential difference between contacts above and below the left eye exceeded a threshold. The threshold was set at two standard deviations above the mean, computed over 2–4 s post-stimulus onset in the training run. Rare epochs containing artifacts were excluded from further analysis.
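The filtering and artifact-rejection steps can be sketched in Python with SciPy (the study used MATLAB). The function names are hypothetical; zero-phase filtering of the 2 s buffer is an assumption, since the text does not say whether the online filter was causal. The threshold helper assumes the mean and SD are taken over EOG range values measured in the training run.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS_EEG = 500  # EEG sampling rate (Hz)


def bandpass_2_20(eeg, fs=FS_EEG, order=4):
    """Band-pass (2-20 Hz) Butterworth filter along the last (time) axis.

    ASSUMPTION: zero-phase filtering of the 2 s buffer; the text does not
    say whether the online filter was causal or zero-phase.
    """
    sos = butter(order, [2.0, 20.0], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)


def eog_range(veog, fs=FS_EEG, window_s=1.5):
    """Max minus min of the above-minus-below-eye signal over the last window_s."""
    n = int(round(window_s * fs))
    segment = np.asarray(veog)[-n:]
    return float(segment.max() - segment.min())


def artifact_threshold(training_ranges):
    """Mean + 2 SD of EOG ranges measured 2-4 s post-onset in the training run."""
    r = np.asarray(training_ranges, dtype=float)
    return float(r.mean() + 2.0 * r.std())
```

An incoming 2 s buffer would then be flagged as an artifact when `eog_range(veog) > artifact_threshold(training_ranges)`.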

We calculated a measure of the overall neural bias to left vs. right ear stimuli, termed the neural bias index, as follows. First, during the training run(s), we estimated the peak amplitude of the evoked response (110–190 ms post-tone) for all 29 electrodes, averaged across 20 left- and right-ear tones (in [1 s, 5 s] post-stimulus onset) within each trial and across all 48 trials in the training run(s). We then generated a steady-state evoked response vector from the mean of each channel in the ±10 ms range surrounding the peak response. This vector served as a set of spatial weights that was subsequently applied to the incoming single-trial data (i.e. a weighted sum across channels at each time point) to generate a single time series on each trial. We note that the spatial distribution of the EEG responses was qualitatively similar following left- and right-ear tones (data not shown), so to simplify our metric we averaged the left- and right-ear spatial response profiles together. Future studies could potentially derive more information on selective bias by using different weights for left- and right-ear responses (see Discussion).
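The spatial weighting step can be sketched as follows. The function names are hypothetical, and one detail is an assumption: the text does not say how a single peak latency was chosen across channels, so this sketch takes it from the root-mean-square across channels (global field power) within the 110–190 ms search window.

```python
import numpy as np

FS_EEG = 500  # EEG sampling rate (Hz)


def spatial_weights(erp, fs=FS_EEG, search_s=(0.110, 0.190), half_width_s=0.010):
    """erp: (n_channels, n_samples) tone-locked average, sample 0 = tone onset.

    Returns one weight per channel: each channel's mean amplitude within
    +/-10 ms of the response peak. ASSUMPTION: the peak latency is taken
    from the root-mean-square across channels (global field power).
    """
    erp = np.asarray(erp, dtype=float)
    i0, i1 = (int(round(s * fs)) for s in search_s)
    gfp = np.sqrt((erp ** 2).mean(axis=0))
    i_peak = i0 + int(np.argmax(gfp[i0:i1 + 1]))
    h = int(round(half_width_s * fs))
    return erp[:, i_peak - h:i_peak + h + 1].mean(axis=1)


def project(eeg, weights):
    """Collapse (n_channels, n_samples) EEG into one time series (weighted sum over channels)."""
    return weights @ eeg
```

The overall scale of the weights does not matter for the subsequent analysis, because state thresholds are later derived from the same projected time series.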

One additional step used to focus the online analysis on relevant EEG channels was to exclude channels that did not, on average, demonstrate clear cue-dependent response modulation during the training runs (Figure 2A, B). Specifically, we defined the measure Rbias = (right-ear response − left-ear response) for each channel and single trial, and only used channels for which (mean(Rbias | attend right) − mean(Rbias | attend left))/std(Rbias) was greater than 0.15. This resulted in 83 ± 13% of channels being used (mean ± std. dev., N = 21 subjects; 100% for the subject in Figure 2). The final weighting vector was then used for all test runs without modification. We then averaged the resulting single time series across right- and left-ear cued trials, and found the 30 ms time interval (interval center between 100 and 150 ms post-tone) that generated the largest response modulation (i.e. (Rbias | attend right) − (Rbias | attend left)). The neural bias index at any given instant was defined as the right-ear response (averaged over this optimal 30 ms interval following right-ear tones) minus the left-ear response (averaged over the same interval following left-ear tones; see Figure 2A). To increase the signal-to-noise ratio, this difference was further averaged across the ~6 pairs of successive tones presented from −1.5 s to −0.25 s relative to the current instant.
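The channel-selection criterion and the search for the optimal 30 ms interval can be sketched as below. The function names are hypothetical; taking std(Rbias) over all training trials pooled across both cues is an assumption, and excluded channels would presumably receive zero weight in the spatial weighting vector.

```python
import numpy as np


def select_channels(rbias, attend_right, criterion=0.15):
    """rbias: (n_trials, n_channels) right-ear minus left-ear response per trial.
    attend_right: (n_trials,) booleans, True for attend-right cues.

    Keeps channels where (mean | attend right - mean | attend left) / SD > criterion.
    ASSUMPTION: the SD is taken over all training trials, pooled across cues.
    """
    rbias = np.asarray(rbias, dtype=float)
    cue_right = np.asarray(attend_right, dtype=bool)
    modulation = rbias[cue_right].mean(axis=0) - rbias[~cue_right].mean(axis=0)
    return modulation / rbias.std(axis=0) > criterion


def best_interval(modulation, fs=500, win_s=0.030, centre_s=(0.100, 0.150)):
    """modulation: 1-D array over post-tone latency (sample 0 = tone onset) of
    mean Rbias(attend right) - mean Rbias(attend left) for the projected series.

    Returns (start, stop) sample indices of the 30 ms window, centred between
    100 and 150 ms, with the largest mean modulation.
    """
    n_win = int(round(win_s * fs))              # 15 samples at 500 Hz
    half = n_win // 2
    lo, hi = (int(round(s * fs)) for s in centre_s)
    centres = np.arange(lo, hi + 1)
    scores = [modulation[c - half:c - half + n_win].mean() for c in centres]
    c_best = int(centres[int(np.argmax(scores))])
    return c_best - half, c_best - half + n_win
```

The returned `(start, stop)` pair is a half-open slice of post-tone samples; at 500 Hz, `(55, 70)` corresponds to 110–140 ms after tone onset.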

The neural bias index should be positive when attention is directed to the right ear, negative for attention to the left ear, and near zero for ‘split attention’ or low levels of attention or focus. As discussed above, while it was not possible to uniquely ascribe the response at any moment to right- or left-ear stimuli, the response ~130 ms following left-ear sounds (Figure 2A, gray box, green outline) is far greater for the attend-left than for the attend-right condition, whereas the converse is true in the same interval following right-ear sounds (Figure 2A, gray box, yellow outline; recall that the 5 Hz trains of left- and right-ear tones were staggered by 100 ms).
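The running neural bias index described above (right-ear minus left-ear response, averaged over tones from −1.5 s to −0.25 s relative to the current instant) can be sketched as follows. The function name is hypothetical, and the default `interval=(55, 70)` (110–140 ms at 500 Hz) is only a placeholder for the subject-specific optimal 30 ms interval.

```python
import numpy as np


def neural_bias_index(y, right_onsets, left_onsets, t_now, interval=(55, 70),
                      fs=500, lookback_s=(1.5, 0.25)):
    """y: projected single-trial time series (sample 0 = train onset).
    right_onsets, left_onsets: tone onset times (s).
    interval: (start, stop) post-tone samples of the optimal 30 ms window
    (HYPOTHETICAL default; the real value is chosen per subject).

    Returns mean right-ear response minus mean left-ear response for tones
    with onsets in [t_now - 1.5 s, t_now - 0.25 s]; nan if no tones qualify.
    Positive values indicate bias towards right-ear sounds.
    """
    t_lo, t_hi = t_now - lookback_s[0], t_now - lookback_s[1]

    def mean_response(onsets):
        vals = []
        for t in onsets:
            if t_lo <= t <= t_hi:
                i = int(round(t * fs))
                vals.append(y[i + interval[0]:i + interval[1]].mean())
        return float(np.mean(vals)) if vals else np.nan

    return mean_response(right_onsets) - mean_response(left_onsets)
```

The 0.25 s gap before the current instant ensures that the response window of the most recent tone included in the average has already been recorded.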

We determined thresholds for states of correctly or incorrectly directed neural bias (towards or away from the cued ear, respectively) as follows. Using neural bias index values obtained during the training run(s), we simulated the estimated percentage of non-artifact trials containing correctly and incorrectly directed neural bias ‘states’ exceeding a threshold, for different choices of upper and lower thresholds (Figure 2C, D). We chose threshold values such that correct and incorrect neural bias states (in [2 s, 4 s] post-stimulus onset) would each trigger deviant stimulus presentation on ~45% of all trials. The choice of a 45% incidence for each state was a trade-off between obtaining a sufficient number of triggered trials for statistical purposes and restricting triggers to strongly lateralized states (see Discussion). The actual percentage of trials in a test run containing each state was calculated after the run, and thresholds were adjusted slightly to ensure equal incidence of left-ear and right-ear neural bias state-triggered stimuli (on average across cue conditions; see Figure 3A). In addition to applying these thresholds, we avoided triggering on extremely rare and unusually large ‘outlier’ neural bias index values deviating by more than ±3 standard deviations from the overall mean. Upon offline inspection, these rare cases often appeared contaminated by undetected artifacts.
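One way to realize the threshold choice above is through quantiles of the per-trial extremes: if about 45% of training trials should contain a rightward state, the upper threshold is the 55th percentile of each trial's maximum bias, and symmetrically for the lower threshold. This is a sketch under stated assumptions (hypothetical function name; thresholds pooled over both cue conditions; outlier samples ignored before taking extremes); the post-run adjustment for equal L/R incidence is not modeled.

```python
import numpy as np


def choose_thresholds(bias, target_frac=0.45, outlier_sd=3.0):
    """bias: (n_trials, n_samples) neural bias index sampled over [2 s, 4 s]
    post-onset in the training run(s), pooled over both cue conditions.

    Returns (lower, upper, (lo_out, hi_out)) such that about target_frac of
    trials dip below `lower` (state L) and about target_frac exceed `upper`
    (state R). Values beyond +/-outlier_sd SD of the overall mean are ignored.
    """
    bias = np.asarray(bias, dtype=float)
    mu, sd = bias.mean(), bias.std()
    lo_out, hi_out = mu - outlier_sd * sd, mu + outlier_sd * sd
    clean = np.where((bias >= lo_out) & (bias <= hi_out), bias, np.nan)
    trial_max = np.nanmax(clean, axis=1)    # most rightward bias in each trial
    trial_min = np.nanmin(clean, axis=1)    # most leftward bias in each trial
    upper = float(np.nanquantile(trial_max, 1.0 - target_frac))
    lower = float(np.nanquantile(trial_min, target_frac))
    return lower, upper, (lo_out, hi_out)
```

Working with per-trial extremes, rather than with the pooled distribution of all samples, directly targets the fraction of trials that contain at least one state, which is the quantity that determines how many trials can be triggered.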

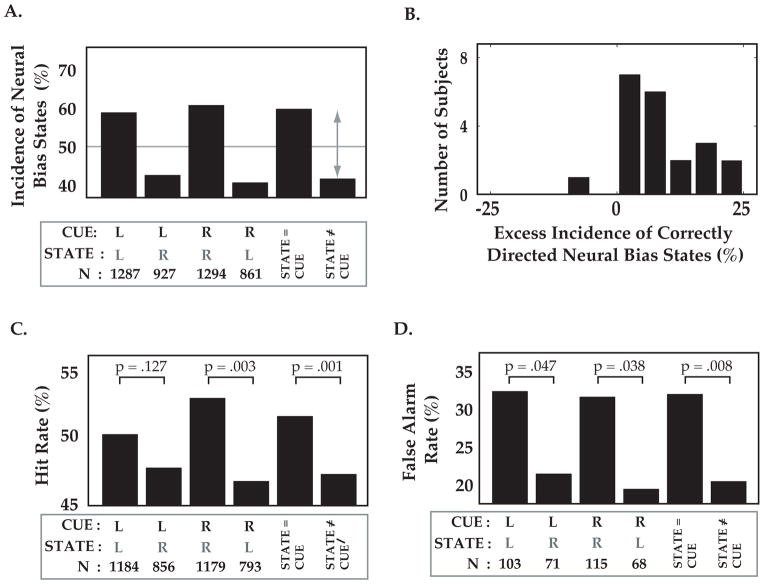

Figure 3. Triggering target stimuli on specific brain states influences behavior.

A: Incidence of neural bias states co-varies with attentional cue, confirming the robustness of the online state selection algorithm during test runs. Despite fixed thresholds and parameters for selecting brain states for each subject, leftward neural bias (“state L”) occurred more often than rightward neural bias (“state R”) during the attend-left condition (“cue L”, 1st and 2nd bars from left). Similar results were obtained for the attend-right condition (middle bars), and when combined across cue conditions. Thus, moments of correctly directed neural bias (“STATE=CUE”) occurred more frequently than moments of incorrectly directed neural bias (“STATE≠CUE”). Data combined from 21 subjects. B: Excess incidence (above chance) of correctly directed bias states (gray arrow in A), across subjects. C: Despite identical cue conditions, detection of a target at the cued ear (‘hit rate’) was higher when the target was triggered by a correctly vs. incorrectly directed neural bias state. D: Reported detection of targets on catch trials lacking deviant sounds (‘false alarm rate’) was also increased following strong neural bias towards the cued ear. Panels B and C have the same nomenclature as in A. Note that the same neural bias state had opposite effects on behavior for attend-left and attend-right cue conditions.

We employed several criteria for excluding non-relevant or uninterpretable trials from the analyses described in Figures 3 and 4. First, all non-triggered catch trials (~10% of all trials), which could result from detected EEG artifacts, inattention, or unbiased attention (no threshold crossing), were excluded. These trials were, however, useful in encouraging subjects not to guess. In addition, we excluded trials in which behavioral decisions were strongly predicted by performance on recent trials (of the same cue type), as the behavioral outcome in these trials was not likely to reflect local, within-trial fluctuations in perceptual bias. Specifically, we observed a marked increase in miss trials following false alarms, and so discarded the two trials following a false alarm from further analysis. We also omitted hit/C.R. trials that were both preceded and followed by another hit or C.R., and omitted miss/F.A. trials preceded and followed by a miss or F.A., because these trials reflected epochs in which target stimuli were far from the 50% detection threshold. These criteria resulted in the exclusion, across subjects, of ~31% of all trials (min 23%, max 38%). The smaller final number of ‘usable’, artifact-free trials of comparable task difficulty and behavioral state further emphasizes the importance of state-triggered stimulus presentation for efficient study of ongoing activity.
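The exclusion rules above can be expressed as a mask over a sequence of trial outcomes. In this sketch (hypothetical function name), applying the rules within the ordered trials of one cue condition is an assumption based on the phrase "recent trials (of the same cue type)"; outcomes are coded as `"hit"`, `"miss"`, `"fa"` and `"cr"`.

```python
from typing import List, Sequence

CORRECT = {"hit", "cr"}   # hit or correct reject
ERROR = {"miss", "fa"}    # miss or false alarm


def keep_mask(outcomes: Sequence[str], triggered: Sequence[bool]) -> List[bool]:
    """outcomes: 'hit', 'miss', 'fa' or 'cr' for successive trials of ONE cue
    condition (ASSUMPTION: the rules are applied within each cue type).
    triggered: whether a brain-state trigger occurred on each trial.
    """
    n = len(outcomes)
    keep = [bool(t) for t in triggered]          # drop non-triggered catch trials
    for i, outcome in enumerate(outcomes):
        if outcome == "fa":                       # drop the two trials after a false alarm
            for j in (i + 1, i + 2):
                if j < n:
                    keep[j] = False
        if 0 < i < n - 1:                         # drop trials embedded in a run of the same kind
            flank = {outcomes[i - 1], outcomes[i + 1], outcome}
            if flank <= CORRECT or flank <= ERROR:
                keep[i] = False
    return keep
```

Set inclusion (`flank <= CORRECT`) treats hits and correct rejects as interchangeable, matching the rule that a hit flanked by a C.R. and a hit is also omitted.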

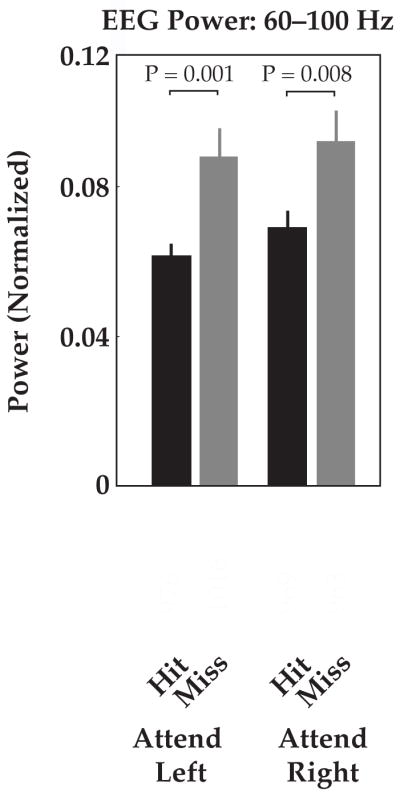

Figure 4. Endogenous EEG oscillations can improve prediction of hit rate irrespective of cue.

Offline analysis of average EEG power across subjects revealed significant increases in power in the 1 s interval prior to ‘hit’ trials vs. ‘miss’ trials, for both attend-left and attend-right conditions (60–100 Hz band; less consistent results were observed in other bands, data not shown). This oscillatory activity may reflect generalized states of concentration or arousal, and could be used in combination with ear-specific neural bias states in future studies. Data were normalized by mean power across conditions for each subject prior to group averaging. White numbers indicate the total number of trials per condition, summed across twenty-one subjects. Error bars indicate standard error.

Simulating Gain in Efficiency Using State-Triggered Presentation

State-triggered stimulus presentation decreases the total number of experimental trials required to observe a target stimulus co-occurring with a given ongoing brain state, thereby increasing experimental efficiency over random presentation (Figure 5). We simulated this gain in efficiency across states of various durations, ‘D’, and likelihoods of occurrence, ‘L’, as follows. We generated a random sequence in which brain states of fixed duration ‘D’ (200, 400, 800 or 1000 ms) could occur once every ‘D’ milliseconds with a fixed likelihood ‘L’ (x-axis, Figure 5). For comparison with the task employed in this study (Figure 1B), we considered the situation in which a target stimulus can be presented at one of 10 moments in time (200 ms apart) during a 2 s trial, and simulated 1000 such trials. For state-triggered presentation, trials containing at least one moment in which a state is present are counted as successful state-stimulus coincidence trials ($N_{\text{state-triggered}}$ trials). For random target stimulus presentation, trials in which an ongoing state at any of the 10 moments coincides with the (random) time of target stimulus presentation are counted as successful ($N_{\text{random}}$ trials). The gain in efficiency using online triggering was thus defined as:

$$\text{gain} = \frac{N_{\text{state-triggered}}}{N_{\text{random}}}$$
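The simulation described above can be sketched as follows, computing the ratio of coincidence counts (state-triggered over random). The function name is hypothetical, and two details are assumptions: state ‘slots’ of length D are aligned to trial onset, and random presentation draws one of the 10 moments uniformly. For 200 ms states the expected gain is (1 − (1 − L)^10)/L, about 8 for L = 0.05, consistent with the eight-fold gain reported in Figure 5.

```python
import numpy as np


def efficiency_gain(duration_ms, likelihood, n_trials=1000, n_moments=10,
                    step_ms=200, rng=None):
    """Ratio of state-stimulus coincidences: state-triggered vs. random timing.

    ASSUMPTIONS: state 'slots' of length duration_ms are aligned to trial
    onset, each slot is independently 'on' with probability `likelihood`, and
    random presentation picks one of the n_moments uniformly.
    """
    if duration_ms % step_ms:
        raise ValueError("duration_ms must be a multiple of step_ms")
    rng = np.random.default_rng() if rng is None else rng
    per_slot = duration_ms // step_ms                  # moments covered by one state
    n_slots = -(-n_moments // per_slot)                # ceiling division
    on = rng.random((n_trials, n_slots)) < likelihood
    state = np.repeat(on, per_slot, axis=1)[:, :n_moments]   # (n_trials, n_moments)
    n_triggered = int(state.any(axis=1).sum())         # any state moment can trigger
    target_idx = rng.integers(0, n_moments, size=n_trials)
    n_random = int(state[np.arange(n_trials), target_idx].sum())
    return n_triggered / n_random if n_random else float("inf")
```

With only 1000 simulated trials, as in the text, the estimate for rare states is noisy (few random coincidences), so repeating the simulation or using more trials gives a more stable curve.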

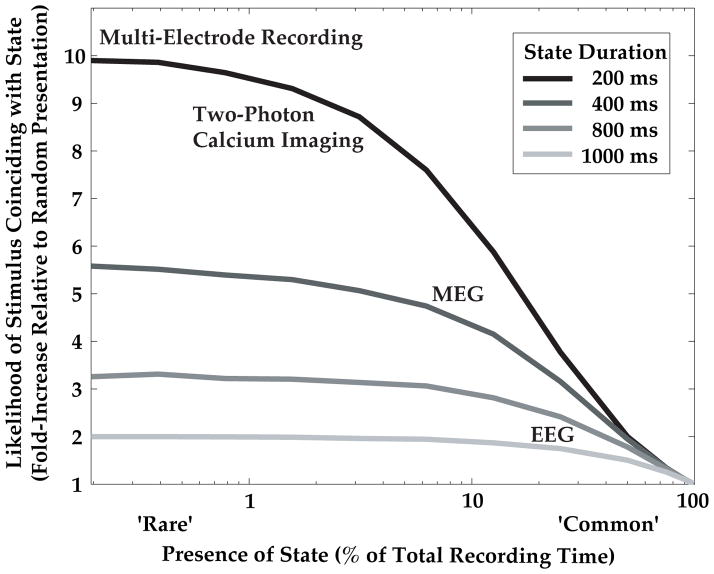

Figure 5. Simulation of gain in efficiency using brain state-triggered stimulus delivery.

The advantages of state-triggered stimulus delivery for efficient study of the neural and behavioral effects of state-stimulus interaction increase for rare or brief ongoing states (for simulation details, see Materials and Methods). For example, for 200 ms duration states that are present 5% of the time (dark blue), state-triggered stimulus presentation leads to eight-fold more trials with a state-stimulus coincidence than random stimulus presentation. Neurophysiological techniques with higher single-trial signal-to-noise ratio and dimensionality can robustly identify such brief states online, and thus can benefit greatly from the proposed method.

Statistical Metrics and Tests

Unless otherwise stated, statistical tests were unpaired t-tests. For comparison of response rates (Figure 3B, C), we employed a non-parametric shuffle test. For example, a hit rate was considered significantly greater in condition A (N trials) than in condition B (M trials) if the actual difference in hit rate exceeded the shuffled difference in at least 95% of shuffles. A distribution of 1000 shuffled hit-rate differences was obtained by pooling all trials in A and B, shuffling, reassigning N trials to A′ and M trials to B′, and re-computing hit rates in A′ and B′. Following Green and Swets [45], we defined the discriminability index d′, a corrected estimate of the discriminability of deviant from standard stimuli given a non-zero likelihood of false alarms, as d′ = z(hit rate) − z(false alarm rate), where z() is the inverse of the standard normal cumulative distribution function.
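The shuffle test and d′ defined above can be sketched as follows (hypothetical function names). Outcomes are coded 1 for a hit and 0 otherwise; `statistics.NormalDist().inv_cdf` plays the role of z(). The text does not specify how rates of exactly 0 or 1 were handled, so this sketch requires rates strictly between 0 and 1.

```python
import numpy as np
from statistics import NormalDist


def shuffle_test(a, b, n_shuffles=1000, rng=None):
    """a, b: 0/1 outcomes (1 = hit) for conditions A (N trials) and B (M trials).

    Returns the fraction of shuffles in which the observed rate difference
    (A minus B) exceeds the shuffled difference; >= 0.95 counts as significant.
    """
    rng = np.random.default_rng() if rng is None else rng
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    observed = a.mean() - b.mean()
    pooled = np.concatenate([a, b])
    n = len(a)
    null = np.empty(n_shuffles)
    for k in range(n_shuffles):
        perm = rng.permutation(pooled)           # reassign trials to A' and B'
        null[k] = perm[:n].mean() - perm[n:].mean()
    return float(np.mean(observed > null))


def d_prime(hit_rate, fa_rate):
    """d' = z(hit rate) - z(false-alarm rate); rates must lie strictly in (0, 1)."""
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)
```

For example, equal hit and false-alarm rates give d′ = 0, reflecting no ability to discriminate deviant from standard tones.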

Results and Observations

Methodological Results

Proof of Principle: Auditory Spatial Detection Task

We employed a GO/NOGO auditory deviant detection task modified from previous studies [36], with concurrent EEG recordings, in twenty-one volunteers (Figure 1B; see Materials and Methods). Subjects were cued to attend to the left or right ear. Two spectrally separable streams of auditory tones were presented to the left and right ear at 5 Hz, staggered by 100 ms so that ear-specific neural response components could be identified (Figure 1B). In ~80% of trials, one of the standard tones at the cued ear was replaced by a deviant target tone of identical frequency but slightly higher intensity (Figure 1B). To avoid confounds in interpreting neural signals due to motor preparation, subjects were instructed to wait until the stimulus train ended (5 s) and then raise their right index finger to report detection of the deviant tone, after which brief visual feedback was given. The possible outcomes were hit (correct detection), miss, false alarm (finger lift when no deviant tone was present), and correct reject (no finger lift when no deviant was present).

Auditory Sensory Brain-Computer Interface

To obtain a rapid, online neural estimator of the instantaneous neural bias towards stimuli at either ear, we modified a low-noise, 32-channel EEG brain-computer interface system [43, 44]. Additional EOG electrodes were used for online rejection of eyeblinks and muscle artifacts (see Materials and Methods), an important consideration in any brain-computer interface. We used a simple and robust neural bias index at each instant within a trial, defined as the ‘brain response’ to recent right-ear tones minus the response to recent left-ear tones (the difference in average response to the ~6 right- vs. left-ear tones in the previous 1.25 s; see Figure 2 and Materials and Methods). We computed the brain response to a tone as the weighted average of ‘steady-state’ evoked responses across EEG channels, at peak latency, ~100–190 ms post-tone-onset (Figure 2A and Materials and Methods). The selected weights and time intervals were optimized during initial training runs to ensure maximal difference in the neural bias index between right- and left-ear attentional cue conditions, and did not change during subsequent test runs (see Materials and Methods).

The above procedure is illustrated for a single subject in Figure 2. Following training runs, average brain responses to left- and right-ear standard tones were calculated during the attend-left and attend-right cue conditions (weighted average timeseries across channels, filtered and further averaged across 20 tone pips presented 1–5 s post-train-onset; see Materials and Methods). For initial assessment of cue-specific task modulation of neural activity, these within-trial peri-tone timeseries were further averaged across all artifact-free trials for each cue condition (Figure 2A). As shown for this subject, tones at a given ear evoked substantially larger responses when attention was cued to that ear (N = 21/21 subjects, data not shown), suggesting that cue-dependent changes in the neural bias index may reflect, in part, attentional modulation of neural responses, consistent with previous studies of attention that employed similar tasks [36].

We assessed the fluctuations in neural bias within and across trials by calculating the bias in response magnitude for each successive pair of right- and left-ear tones (see Figure 2A and Materials and Methods). Interestingly, the cue-specific modulation of the average neural bias index (Figure 2A) is also apparent for this subject in each individual 200 ms epoch during the trial (Figure 2B, left). Because of the physiological and hardware noise inherent in EEG recordings, we increased the signal-to-noise ratio with a running average across multiple tone pairs (1.25 s boxcar smoothing of ~6 successive tone pairs; see Figure 2B, right, and Materials and Methods). This limits our investigation to fluctuations in neural bias on timescales of ~1 s or slower.
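
With one tone pair every 200 ms, a 1.25 s window spans roughly 1.25 / 0.2 ≈ 6 pairs. The sketch below assumes a causal (trailing) boxcar, since online triggering can only use past data; the function name `smooth_bias` is hypothetical.

```python
import numpy as np

def smooth_bias(pair_bias, n_pairs=6):
    """Causal boxcar (running mean) over the last n_pairs tone-pair bias values.

    pair_bias : 1-D sequence, one right-minus-left value per 200 ms tone-pair epoch.
    Returns an array of the same length; the first n_pairs - 1 entries are NaN
    because a full window of past tone pairs is not yet available.
    """
    pair_bias = np.asarray(pair_bias, dtype=float)
    out = np.full(pair_bias.shape, np.nan)
    if pair_bias.size >= n_pairs:
        kernel = np.ones(n_pairs) / n_pairs
        # 'valid' output k averages pairs k..k+n_pairs-1, so it belongs at the window's last pair
        out[n_pairs - 1:] = np.convolve(pair_bias, kernel, mode="valid")
    return out
```

The trade-off is explicit in `n_pairs`: a longer window averages out more noise but blurs fluctuations shorter than the window.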

Fluctuations in Neural Bias Within and Across Single Trials

In contrast to the temporal stability in neural bias throughout a trial on average (Figure 2B, right), neural bias index timeseries within and across identically cued single trials were highly variable (Figure 2C). While many trials did indeed demonstrate strong response bias towards the cued ear (e.g., Figure 2C, dark blue trace), other trials showed no bias or strong bias towards the non-cued ear (Figure 2C, lighter blue traces). In addition, these traces demonstrated rapid, endogenous fluctuations even within single trials, which were hypothesized to reflect rapid, implicitly driven shifts in attention within a trial, akin to neural correlates of explicitly cued shifts in attention observed in previous studies (see Discussion). The dispersion in instantaneous neural bias within and between identically cued trials can also be observed in the broad distributions of neural bias index values during attend-left (blue) and attend-right (red) cue conditions (Figure 2D).

We sought to efficiently examine the behavioral influence of the extremes within these broad distributions of neural bias index values, as these instants could reflect extreme momentary biases in the subjects’ attentional focus towards one or the other ear. For online triggering, we discretized these (unimodal) distributions, identifying two “states” in which neural bias index values exceeded upper or lower thresholds (Figure 2C, D). Thus, we classified the neural bias index at each moment during a trial as corresponding to a state of high neural bias to left-ear or right-ear sounds (state “L” or state “R”; green and yellow areas in Figure 2D, respectively), or to a state of low neural bias (Materials and Methods). The thresholds for L and R states were selected such that, combined across both cue conditions, each state would occur in approximately 45% of all trials (between 2–4 s post-train-onset). The choice of threshold levels involves an important compromise between targeting only the most pronounced neural bias states exceeding a high threshold (likely the most perceptually influential states) and keeping the threshold low enough to ensure an adequate number of trials containing at least one state of interest (see Discussion).
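
One way to meet the ~45% target is to place each threshold at a quantile of the per-trial extremes, pooled across cue conditions. The sketch below assumes this quantile rule and a smoothed bias index sampled within the 2–4 s window; `state_thresholds` and `classify` are hypothetical names, and the study's exact procedure is given in Materials and Methods.

```python
import numpy as np

def state_thresholds(trial_bias, target_fraction=0.45):
    """Lower/upper thresholds so each state occurs on ~target_fraction of trials.

    trial_bias : (n_trials, n_samples) smoothed bias index within the 2-4 s window,
                 pooled across both cue conditions (positive = rightward bias).
    """
    trial_max = np.nanmax(trial_bias, axis=1)
    trial_min = np.nanmin(trial_bias, axis=1)
    # A trial contains an R state if its maximum exceeds the upper threshold,
    # so put that threshold at the (1 - target_fraction) quantile of trial maxima.
    upper = np.quantile(trial_max, 1.0 - target_fraction)
    # Symmetrically, an L state needs the trial minimum to fall below the lower threshold.
    lower = np.quantile(trial_min, target_fraction)
    return lower, upper

def classify(bias_value, lower, upper):
    """Map one bias-index value to 'R', 'L', or 'low' (no strong bias)."""
    if bias_value > upper:
        return "R"
    if bias_value < lower:
        return "L"
    return "low"
```

Raising `target_fraction` lowers the thresholds (more trials with a state, weaker states); lowering it does the opposite.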

Evaluating Algorithm Performance: Cue-Dependent Modulation of State Selection

Before addressing the effect of ongoing states on target detection, we assessed the robustness of the state-triggering algorithm. Figure 3A shows the incidence of triggered states as a function of cue direction (towards the right or left ear) and state direction (R or L), across all 21 subjects. As expected, correctly directed neural bias states outnumbered incorrectly directed ones in both the attend-left and attend-right cue conditions, as well as in data combined across conditions (correctly directed states: 60.0% on attend-right trials, 58.1% on attend-left trials, 59.1% combined; Figure 3A). In other words, R states were more frequent than L states when subjects were cued to attend to the right ear, and vice versa. This excess incidence of correctly directed states across the population (gray arrow, Figure 3A) was observed in 20 of 21 subjects individually (Figure 3B). Thus, the incidence of extreme states was modulated as expected by attentional cue condition during test runs, even though the parameters for calculating the neural bias index were frozen after the initial training runs (see Materials and Methods).

Experimental Results

Behavioral Influence of Correctly/Incorrectly Directed Neural Bias States

Brain state-triggering of deviant tone presentation occurred upon detection by the brain-computer interface of either an L or R state (within 2–4 s post-trial onset), at which point a deviant tone of identical tone frequency and timing but slightly higher intensity replaced the next standard tone presented at the cued ear (Figure 1B). Below, we consider the behavioral consequences of deviant stimulus presentation triggered by instants of strong, correctly/incorrectly directed neural bias.
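
The triggering rule can be sketched as a small per-trial controller. Everything here is hypothetical: `StateTrigger`, its fields, and the assumption that the online classifier reports a state label with a time stamp in seconds after train onset. The `catch_trial` flag mirrors the catch trials analyzed in this section, in which a state triggers a standard rather than a deviant.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class StateTrigger:
    """Hypothetical per-trial controller for brain state-triggered deviant delivery."""
    cued_ear: str                             # "L" or "R"
    catch_trial: bool = False                 # True: the triggered tone stays a standard
    window: Tuple[float, float] = (2.0, 4.0)  # trigger-eligible period, s after train onset
    armed_state: Optional[str] = None         # "L"/"R" state that fired the trigger, if any
    delivered: bool = False                   # has the triggered tone been played?

    def on_state(self, t, state):
        """Called whenever the online classifier updates ('L', 'R', or 'low')."""
        lo, hi = self.window
        if self.armed_state is None and lo <= t <= hi and state in ("L", "R"):
            self.armed_state = state          # remembered for later correct/incorrect analysis

    def next_tone(self, ear):
        """Tone type for the next tone at `ear`: replace the next cued-ear standard once armed."""
        if self.armed_state is not None and not self.delivered and ear == self.cued_ear:
            self.delivered = True
            return "standard" if self.catch_trial else "deviant"
        return "standard"
```

Recording `armed_state` lets each hit, miss, or false alarm be labeled afterwards as following a correctly or incorrectly directed state.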

Across cue conditions, the detection rate (hits/(hits+misses)) was significantly greater when the neural bias state was directed towards the cued ear (4.4% greater, P = .001, non-parametric shuffle test, N = 21 subjects, Figure 3C, see Materials and Methods). When considering individual cue conditions, we observed a significant increase in hit rate in the attend-right cue condition for R vs. L states (6.3% increase, P = .003), and a similar but non-significant trend for the attend-left cue condition for L vs. R states (2.5% increase, P = .127). Thus, instants of strong neural bias towards the correct vs. incorrect ear do influence subsequent detection performance on identically cued trials. These data further show that pre-target, state-related effects on performance are spatially specific and likely not caused by global effects, such as arousal. In other words, the presence of the same state (e.g., a state of strong pre-target neural bias towards the right ear) had opposite effects on cued detection of targets at the right or left ear.
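
A shuffle test of this kind can be sketched as below. The details are assumptions rather than the study's procedure (see Materials and Methods): detection rate is computed per subject as hits/(hits+misses), correct- vs. incorrect-state labels are permuted within each subject, and the p-value is one-sided. The function names are hypothetical.

```python
import numpy as np

def hit_rate_diff(hits, correct, subject):
    """Mean over subjects of hit rate after correct-state minus incorrect-state triggers."""
    diffs = []
    for s in np.unique(subject):
        m = subject == s
        h, c = hits[m], correct[m]
        if c.any() and (~c).any():            # need both trial types for this subject
            diffs.append(h[c].mean() - h[~c].mean())
    return float(np.mean(diffs))

def shuffle_test(hits, correct, subject, n_shuffles=5000, seed=0):
    """One-sided permutation test that hit rate is higher after correctly directed states.

    hits    : bool per target trial, True for a hit, False for a miss
    correct : bool per trial, True if the triggering state pointed to the cued ear
    subject : subject ID per trial; labels are shuffled within each subject
    """
    rng = np.random.default_rng(seed)
    hits = np.asarray(hits, dtype=bool)
    correct = np.asarray(correct, dtype=bool)
    subject = np.asarray(subject)
    observed = hit_rate_diff(hits, correct, subject)
    groups = [np.flatnonzero(subject == s) for s in np.unique(subject)]
    shuffled = correct.copy()
    n_extreme = 0
    for _ in range(n_shuffles):
        for idx in groups:
            shuffled[idx] = rng.permutation(correct[idx])
        if hit_rate_diff(hits, shuffled, subject) >= observed:
            n_extreme += 1
    return observed, (n_extreme + 1) / (n_shuffles + 1)
```

Shuffling within subjects preserves each subject's overall hit rate and state counts, so only the pairing between state direction and outcome is randomized.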

We also analyzed catch trials in which a pre-target neural bias state occurred but triggered a standard tone rather than a deviant. We observed significant differences in false alarm rates (false alarms/(false alarms + correct rejections)) depending on which state occurred during that trial (Figure 3D). Specifically, subjects mistakenly reported hearing deviant target sounds more often on catch trials containing correctly vs. incorrectly directed states (attend-right cue condition: 12.1% higher false alarm rate, P = .038; attend-left: 10.9%, P = .047; combined: 11.5%, P = .008; N = 21 subjects, non-parametric shuffle test). These data suggest that ear-specific ‘hallucinations’ may be driven in part by instants of strong neural bias directed towards the cued ear (see Discussion).

The state-specific increases in both detection and false-alarm rates ultimately carry opposite consequences for target discriminability. We calculated an estimate of discriminability (d′) [45] for each cue/state combination, pooled across all trials and subjects. Surprisingly, targets were more discriminable in both cue conditions when the subjects’ prior brain state was incorrectly directed away from the target ear (attend-right cue: d′ for incorrect vs. correct state: .79 vs. .56; attend-left: .74 vs. .47; combined: .76 vs. .51). This finding may be explained in part by the larger increase in false-alarm rates than in hit rates following moments of correctly directed neural bias (Figure 3C, D).
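
The standard signal-detection index is d′ = z(hit rate) - z(false-alarm rate), where z is the inverse of the standard normal CDF. The sketch uses Python's `statistics.NormalDist`; the optional `correction` (adding 0.5 to each count is a common log-linear fix for rates of exactly 0 or 1) is an assumption, not necessarily what was done in the study, and `d_prime` is a hypothetical name.

```python
from statistics import NormalDist

def d_prime(hits, misses, false_alarms, correct_rejections, correction=0.0):
    """d' = z(hit rate) - z(false-alarm rate) from raw trial counts.

    correction > 0 adds that amount to every count (0.5 is a common log-linear
    choice, assumed here) so that rates of exactly 0 or 1 stay finite.
    """
    z = NormalDist().inv_cdf                  # inverse standard-normal CDF
    hit_rate = (hits + correction) / (hits + misses + 2 * correction)
    fa_rate = (false_alarms + correction) / (false_alarms + correct_rejections + 2 * correction)
    return z(hit_rate) - z(fa_rate)
```

Because d′ subtracts the z-transformed false-alarm rate, a state that raises false alarms more than hits lowers d′ even as detection improves, which is the pattern reported above.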

Additional Neural Factors Influencing Perception

The above experiment represents a proof-of-principle demonstration that differences in ongoing brain activity detected in real time can be efficiently correlated with behavioral responses to subsequently presented stimuli. We deliberately used a method where the ‘pre-target state’ was assessed by changes in the relative brain response magnitude following right vs. left ear tones. This ensured that the dynamic estimate of neural bias was highly similar from subject to subject, stable throughout the course of each experiment, and interpretable as the result of selective processing of inputs at a specific ear. While the states examined here helped explain some of the trial-to-trial variability in behavioral performance, considerable behavioral variability remained (Figure 3). Some of this variability could potentially be explained by other overlapping pre-target brain states, such as non-ear-specific brain oscillations reflecting global or modality-specific vigilance or arousal [13–15, 46, 47]. Therefore, we performed a post-hoc analysis of the influence of pre-target oscillatory activity on perception.

We found that EEG power at frontotemporal electrode sites F7 and F8 in the high gamma range (60–100 Hz, multitaper spectral analysis) was significantly lower in the 1 s interval preceding ‘hit’ trials than preceding ‘miss’ trials, in both the attend-left and attend-right cue conditions (attend-left: P = .001; attend-right: P = .008; Figure 4; trials pooled across 21 subjects; similar but somewhat weaker effects were observed on the majority of electrodes, data not shown). Thus, in contrast to ear-specific bias states, which carried opposite behavioral consequences depending on which ear was cued (Figure 3C), lower pre-target gamma power was associated with higher detection rates irrespective of cue condition (Figure 4). Other frequency bands showed less consistent effects across subjects that were not statistically significant (data not shown).
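
Multitaper band power can be sketched with NumPy alone by computing Slepian (DPSS) tapers from their tridiagonal eigenproblem; `scipy.signal.windows.dpss` provides equivalent tapers if SciPy is available. The time-bandwidth product `nw`, number of tapers `k`, sampling rate, and PSD scaling (correct only up to a constant factor, which cancels in hit-vs-miss comparisons) are assumptions, since the study's settings are given in Materials and Methods. The function names are hypothetical.

```python
import numpy as np

def dpss_tapers(n, nw=4.0, k=7):
    """First k Slepian (DPSS) tapers of length n via the tridiagonal eigenproblem."""
    i = np.arange(n)
    diag = ((n - 1 - 2 * i) / 2.0) ** 2 * np.cos(2 * np.pi * nw / n)
    off = i[1:] * (n - i[1:]) / 2.0
    mat = np.diag(diag) + np.diag(off, 1) + np.diag(off, -1)
    _, vecs = np.linalg.eigh(mat)             # eigenvalues come back in ascending order
    return vecs[:, ::-1][:, :k].T             # largest-eigenvalue vectors first, unit norm

def multitaper_band_power(x, fs, band=(60.0, 100.0), nw=4.0, k=7):
    """Mean multitaper PSD (arbitrary scale) of one channel's pre-target segment in `band` Hz."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                          # remove DC offset
    tapers = dpss_tapers(x.size, nw, k)
    spectra = np.abs(np.fft.rfft(tapers * x, axis=1)) ** 2   # one periodogram per taper
    psd = spectra.mean(axis=0) / fs           # average across tapers
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return psd[in_band].mean()
```

Applied to the 1 s before each target at F7 and F8, the per-trial values could then be compared between hit and miss trials.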

Gain in Efficiency Using Brain State-Triggered Stimulus Delivery

To study the interaction of ongoing brain states with target stimuli, these stimuli must be presented coincident with or immediately following a state of interest (Figure 1A). We used a simple simulation to estimate the increase in likelihood of capturing state-stimulus coincidences when using state-triggered stimulus presentation compared to random stimulus presentation (Figure 5; Materials and Methods). We found that the utility of our proposed technique increases dramatically 1) for brain states of shorter duration, and 2) for brain states that occur more rarely (i.e., states that are present for a small fraction of the experiment). Thus, for our EEG study of states with ~1 s duration that each occurred ~25% of the time (Figure 2D), state-triggered presentation led to a twofold increase in the likelihood of capturing state-stimulus coincidences, a major advance considering the limited duration of human psychophysiology experiments (see Discussion). Recording techniques with higher signal-to-noise ratios and information rates, such as magnetoencephalography, large-scale invasive multi-electrode recordings, or two-photon calcium imaging, should make it possible to study states that are rarer (present 1–5% of the time) and briefer (e.g., 200 ms). For states of this nature, state-triggered target presentation should afford a 5–10-fold increase in efficiency (Figure 5), with potentially transformative implications.
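
The trend can be checked with a toy simulation. The model is an assumption and not the simulation behind Figure 5: a state switches on and off as a two-state Markov chain with a given mean duration and occupancy; random presentation samples one time in a 2 s window, while triggered presentation succeeds whenever the state appears at any point in that window (detection latency is ignored). `coincidence_gain` is a hypothetical name.

```python
import numpy as np

def coincidence_gain(duration_s, occupancy, window_s=2.0, dt=0.01, n_trials=4000, seed=0):
    """Ratio of state-stimulus coincidence probability, triggered vs. random timing.

    States follow a two-state Markov chain with mean dwell time `duration_s`
    and long-run fraction of time in the state `occupancy`.
    """
    rng = np.random.default_rng(seed)
    n_steps = int(round(window_s / dt))
    p_exit = dt / duration_s                                  # chance of leaving the state per step
    p_enter = p_exit * occupancy / (1.0 - occupancy)          # keeps stationary occupancy fixed
    in_state = np.empty((n_trials, n_steps), dtype=bool)
    current = rng.random(n_trials) < occupancy                # start each trial at stationarity
    for step in range(n_steps):
        in_state[:, step] = current
        u = rng.random(n_trials)
        current = np.where(current, u >= p_exit, u < p_enter)
    # Random presentation: one target at a uniformly drawn time in the window.
    t_random = rng.integers(0, n_steps, size=n_trials)
    p_random = in_state[np.arange(n_trials), t_random].mean()
    # Triggered presentation: target delivered as soon as the state appears, if it does.
    p_triggered = in_state.any(axis=1).mean()
    return p_triggered / p_random
```

For example, comparing `coincidence_gain(1.0, 0.25)` (~1 s states present 25% of the time) with `coincidence_gain(0.2, 0.05)` (brief, rare states) shows a considerably larger gain for the second case, in line with the trend described above; the exact values depend on the window length and are not meant to reproduce Figure 5.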

Discussion

We have proposed a general method to efficiently study the influence of rare ongoing patterns of pre-target brain activity on perception, using online identification of brain states and state-triggered target presentation. We applied this method to the study of auditory selective listening, and found significant effects of ear-specific and non-specific pre-target brain activity on both detection and false-alarm rates. Below, we discuss our results and methodology in the context of previous auditory studies, and consider potential improvements. We then review previous work regarding brain state-triggered sensory stimulation, and discuss the potential for applying the proposed method to a broad range of neurophysiological recording modalities in humans and animal models.

Behavioral Influence of State-Triggered Target Presentation

Our data demonstrate considerable variability in neural activity (Figure 2C, D), within and across trials with identical cue conditions. The behavioral relevance of the moment-to-moment fluctuations in the neural bias index is supported by the observed correlation of these neural fluctuations with detection rate and false alarm rate. Specifically, for identically cued trials, we observed a ~10% increase in detection rate and a ~20% increase in false alarm rate for trials where target stimuli were triggered following strong neural bias towards the cued ear, compared with targets following moments of strong neural bias towards the non-cued ear.

State-Dependent Illusory Percepts

The observed increase in hit rate following moments of correctly directed neural bias was expected given previous behavioral [48] and neurophysiological [8, 9] studies linking neural measures of attention with performance. However, we were surprised to observe increased false-alarm rates (i.e., increased incidence of misperceiving ‘deviant’ sounds when only ‘standard’ tones were presented) following moments of strong, correctly directed neural bias. This suggests that pre-stimulus activity may contribute to subjects’ percepts, perhaps reflecting internally generated expectation [49]. Consistent with our findings, recent fMRI studies have reported correlates of detection in early auditory [50] and visual [51] cortex, in which neural responses during ‘hit’ and ‘false alarm’ trials were of similar magnitude, while responses to ‘miss’ and ‘correct reject’ trials were significantly smaller. Our findings suggest that certain components of the slower, fMRI-based neural predictors of perception in previous studies may arise not only from post-target differences in brain activity, but also from pre-target differences. Several auditory fMRI studies have also demonstrated auditory cortical activity correlating with auditory hallucinations in patients with schizophrenia [52], as well as in healthy subjects during illusory percepts [53] and silent auditory imagery [54].

With brain state-triggered stimulus presentation, an additional factor that might drive correlations between neural activity and behavior is the subjects’ potential ability to monitor their own mental state, leading to increased internal expectation of an upcoming target. One subject who had frequently volunteered for brain-computer interface studies prior to the present study reported that, in some trials, she could predict whether and when a target would arrive based on self-assessment of ear-specific attention. However, this sensation was not reported by any of the other twenty subjects. In the future, such effects could be examined explicitly by comparing behavioral responses following similar patterns of pre-target activity between blocks of trials involving state-triggered vs. random target presentation.

Optimal Targeting of Brain States: Timescales, Efficiency and Information Rates

An exciting promise of the proposed technique is the ability to assess the influence of ongoing fluctuations in neural activity at multiple timescales, by triggering on brain states lasting hundreds of milliseconds to seconds. In this first proof of principle, we decided to focus on states of longer duration (~1 second), because consideration of left- and right-ear signals from the previous 1.5 s of data permitted signal averaging and a higher signal-to-noise ratio. However, potential improvements in measurement and analysis techniques could allow robust detection of neural states using single left/right tone pairs, enabling assessment of the influence of transient, non-cued fluctuations in neural bias at the 200 ms timescale (Figure 5). Cued attentional shifts are already known to cause rapid changes in neural activity at this timescale in humans [55, 56] and non-human primates [57].

Several software and hardware methods could be employed in future studies to increase signal-to-noise ratios sufficiently to obtain robust estimates of neural states at the timescale of 200 ms. For example, online adaptive algorithms such as independent components analysis could improve artifact rejection [58, 59]. The application of online stimulus-triggering to magnetoencephalographic signals would offer both higher signal-to-noise ratio and spatial resolution, and online retrieval of these signals is now possible [7, 60–62]. Invasive brain-computer interfaces involving large-scale neuronal recordings can provide even richer and more robust readout of patterns of activity at short timescales [63].

It will be interesting, in future studies, to trigger target stimuli conditional on EEG activity from different spatial locations, though such spatial targeting may be best reserved for online triggering using real-time magnetoencephalography [60–62] or real-time fMRI [64]. Indeed, the brain state-triggered stimulus delivery method presented here is quite general, and could be used to efficiently probe the interaction between evoked neural and/or behavioral responses and transient patterns of sparse ongoing brain activity recorded from ensembles of individual neurons using multi-electrodes [63, 65, 66] or two-photon calcium imaging in vivo [67] and in vitro [68].

The current study demonstrates an estimated doubling in the efficiency of recording trials in which ongoing states coincide with the target stimulus. Thus, our method allows a given number of state-stimulus coincidences to be collected in roughly half the time required with random stimulus timing, a major improvement given the limited duration of non-invasive recordings in humans. However, far greater gains in efficiency should be obtained by applying state-triggered stimulus presentation in studies using the higher-resolution recording techniques outlined above. In this context, triggering stimuli during states of short duration and sparse occurrence (e.g., 200 ms states present 5% of the time) should lead to an order-of-magnitude increase in efficiency compared with traditional stimulus presentation schedules (Figure 5).

Another important consideration when studying the neural correlates of behavior (e.g., lateralized detection, in our proof-of-principle experiment) is the choice of neural metric and task. The neural metric we used (bias index) was easy to calculate and to tailor to each subject during training runs, and was modulated strongly by attentional cue condition in a similar manner across subjects, simplifying online extraction of states after a relatively brief training time (15–25 minutes). To increase cue-dependent modulation of lateralized neural bias, we employed a difficult detection task [36] using near-threshold target stimuli and high rates of sound presentation (5 Hz). Note that correct detection rates (Figure 3C) were roughly twofold greater than false alarm rates (Figure 3D), suggesting that subjects performed well above chance on this deliberately difficult task.

The degree to which distinct neural ‘states’ are selectively correlated with perception will generally depend on how these states are defined. To study extremes of neural bias to a given ear, we discretized the (unimodal) distribution of neural bias values into different categories, or states, using upper and lower thresholds (Figure 2C, D). As discussed above, we determined state thresholds such that states ‘L’ (leftward neural bias) and ‘R’ (rightward neural bias) each occurred on approximately 45% of trials. As with motor brain-computer interfaces [32], the state-triggered stimulus presentation approach faces the challenge of maximizing information rate, a measure that combines how often the defined states occur during an experiment and how distinct they are. For example, increasing thresholds would lead to larger differences in neural bias index between L and R states, potentially improving prediction of subsequent behavioral responses. However, these larger effects would come at the cost of more ‘wasted’ trials that lack any state occurrence on which to trigger target presentation. Conversely, very low thresholds would ‘water down’ the differences between states and would also bias the distribution of target times towards earlier time points within a trial, with the unwanted consequence of making target timing more predictable.

Attention-Guided Motor and Sensory BCIs: Previous Studies

Our proposed technique was inspired by recent human brain-computer interface (BCI) studies demonstrating robotic control using invasive single- or multi-unit activity [66] and non-invasive EEG activity [32]. The ability to observe modulation of neural response bias on single trials using two concurrent streams of rapidly presented stimulus trains has been reported for EEG-guided motor BCIs using auditory [69, 70] and visual [71, 72] attention. The current study is unique in that ongoing fluctuations in neural bias, likely reflecting, in part, fluctuations in selective listening (Figure 3), were used to trigger presentation of sensory stimuli rather than to guide motor output.

The concept of online stimulus triggering following ongoing brain activity has been employed in a limited number of previous neuropsychological studies. The only studies that have employed this concept to study sensory perception focused on online triggering on the phase of ongoing theta or alpha oscillations, and correlated phase-specific stimulus presentation with subjects’ reaction time [18, 19]; similar versions of this protocol are used in [33] and [34]. Guttmann and Bauer focused on brain state-triggered presentation of complex stimuli during associative learning rather than sensory perceptual tasks, and were limited by technical and design considerations to slow EEG timescales ([22]; DC potentials, averages of ten seconds). Rahn, Basar and colleagues demonstrated enhanced auditory and visual evoked responses when stimuli were selectively presented following states of low oscillatory activity (alpha and theta bands) on a single EEG electrode, though no behavioral task was used [47]. In post-hoc analyses, we observed that decreased pre-target oscillatory EEG activity (albeit in a high frequency range) correlated with increased detection rates, independent of cue condition (Figure 4). The correlation between oscillatory EEG power and detection performance suggests that global fluctuations in pre-target oscillatory amplitude due to arousal or concentration effects may also contribute to trial-to-trial behavioral variability, consistent with previous reports [3, 13–16, 20, 21, 23, 24, 28, 31]. In the future, experiments employing state-triggered presentation could benefit from using conjunctions of concurrent, predictive ongoing brain states for triggering target stimuli, similar to methods used for visual attention-driven motor BCIs [72].

The potential application of our proposed method to global brain state-triggered stimulus presentation shares similarities with efforts at NASA employing arousal-related EEG information for adaptive automation of flight-control programs for jet pilots. However, the timescale for neural feedback and sensorimotor task changes was quite slow (tens to hundreds of seconds [73]). Several other promising efforts towards vigilance-based adaptive automation using EEG are currently underway [74, 75]. Our method differs from previous ‘neurofeedback’ studies that aim to treat disorders of attention or cognition by asking subjects to directly regulate their brain activity in various frequency bands towards ‘normal’ levels [76, 77]. In these neurofeedback studies, subjects’ strategies for modulating their brain activity are typically unrelated to the behavioral task, making it difficult to gauge the origin and task relevance of induced fluctuations in brain activity.

Clinical Applications: Sensory Prostheses?

Brain state-guided stimulus presentation may augment the utility of currently available sensory prostheses. For example, individuals who use hearing aids still have trouble hearing in noisy environments that require increased attention [78], likely due in part to persistent deficits in spatially specific cortical regulation of gain control in the inner ear [79]. In addition, peripheral auditory impairment may cause a subsequent degradation in the capacity for central selective attention [80]. The selective listening paradigm used in the current study could be adapted to track biases in cortical attention to speech streams at a given ear. In this way, EEG-triggered synthetic filtering of incoming sound intensity and other sound features could underlie a cortically guided hearing aid. Other sensory modalities and remote-sensing applications (e.g., tactile feedback during robotic surgery) would also likely benefit from state-triggered stimulus presentation.

Acknowledgments

We thank M. Cohen, M. Histed, L. Kauhanen, A. Kepecs, J. Maunsell, N. Price, D. Ruff, and B. Shinn-Cunningham for helpful comments and discussions. We also thank D. Kislyuk for assistance with data collection. This work was supported by the Academy of Finland National Centers of Excellence Programme, 2006–2011, NIH-R01HD040712, and a Marie Curie Incoming International Postdoctoral Fellowship (M.A.).

References

- 1. Wertheimer M. Experimentelle Studien über das Sehen von Bewegung. Zeitschrift für Psychologie. 1912;61.

- 2. Gilbert CD, Sigman M. Brain states: top-down influences in sensory processing. Neuron. 2007;54:677–696. doi: 10.1016/j.neuron.2007.05.019.

- 3. Giesbrecht B, Weissman DH, Woldorff MG, Mangun GR. Pre-target activity in visual cortex predicts behavioral performance on spatial and feature attention tasks. Brain Res. 2006;1080:63–72. doi: 10.1016/j.brainres.2005.09.068.

- 4. Schroeder CE, Lakatos P. Low-frequency neuronal oscillations as instruments of sensory selection. Trends Neurosci. 2009;32:9–18. doi: 10.1016/j.tins.2008.09.012.

- 5. Engel AK, Fries P, Singer W. Dynamic predictions: oscillations and synchrony in top-down processing. Nat Rev Neurosci. 2001;2:704–716. doi: 10.1038/35094565.

- 6. Brunia CH. Neural aspects of anticipatory behavior. Acta Psychol. 1999;101:213–242. doi: 10.1016/s0001-6918(99)00006-2.

- 7. Poghosyan V, Ioannides AA. Attention modulates earliest responses in the primary auditory and visual cortices. Neuron. 2008;58:802–813. doi: 10.1016/j.neuron.2008.04.013.

- 8. Maunsell JH, Cook EP. The role of attention in visual processing. Philos Trans R Soc Lond B Biol Sci. 2002;357:1063–1072. doi: 10.1098/rstb.2002.1107.

- 9. Spitzer H, Desimone R, Moran J. Increased attention enhances both behavioral and neuronal performance. Science. 1988;240:338–340. doi: 10.1126/science.3353728.

- 10. Kauramäki J, Jääskeläinen IP, Sams M. Selective attention increases both gain and feature selectivity of the human auditory cortex. PLoS ONE. 2007;2:e909. doi: 10.1371/journal.pone.0000909.

- 11. Bishop GH, Clare MH. Relations between specifically evoked and spontaneous activity of optic cortex. Electroencephalogr Clin Neurophysiol. 1952;4:321–330. doi: 10.1016/0013-4694(52)90058-8.

- 12. Lindsley DB. Psychological phenomena and the electroencephalogram. Electroencephalogr Clin Neurophysiol. 1952;4:443–456. doi: 10.1016/0013-4694(52)90075-8.

- 13. Makeig S, Jung TP. Tonic, phasic, and transient EEG correlates of auditory awareness in drowsiness. Brain Res Cogn Brain Res. 1996;4:15–25. doi: 10.1016/0926-6410(95)00042-9.

- 14. Linkenkaer-Hansen K, Nikulin VV, Palva S, Ilmoniemi RJ, Palva JM. Prestimulus oscillations enhance psychophysical performance in humans. J Neurosci. 2004;24:10186–10190. doi: 10.1523/JNEUROSCI.2584-04.2004.

- 15. Volberg G, Kliegl K, Hanslmayr S, Greenlee MW. EEG alpha oscillations in the preparation for global and local processing predict behavioral performance. Hum Brain Mapp. 2009;7:2173–2183. doi: 10.1002/hbm.20659.

- 16. Bollimunta A, Chen Y, Schroeder CE, Ding M. Neuronal mechanisms of cortical alpha oscillations in awake-behaving macaques. J Neurosci. 2008;28:9976–9988. doi: 10.1523/JNEUROSCI.2699-08.2008.

- 17. Busch NA, Dubois J, VanRullen R. The phase of ongoing EEG oscillations predicts visual perception. J Neurosci. 2009;29:7869–7876. doi: 10.1523/JNEUROSCI.0113-09.2009.

- 18. Callaway E, Yeager CL. Relationship between reaction time and electroencephalographic alpha phase. Science. 1960;132:1765–1766. doi: 10.1126/science.132.3441.1765.

- 19. Dustman RE, Beck EC. Phase of alpha brain waves, reaction time and visually evoked potentials. Electroencephalogr Clin Neurophysiol. 1965;18:433–440. doi: 10.1016/0013-4694(65)90123-9.

- 20. Ergenoglu T, Demiralp T, Bayraktaroglu Z, Ergen M, Beydagi H, Uresin Y. Alpha rhythm of the EEG modulates visual detection performance in humans. Brain Res Cogn Brain Res. 2004;20:376–383. doi: 10.1016/j.cogbrainres.2004.03.009.

- 21. Gonzalez-Andino SL, Michel CM, Thut G, Landis T, Grave de Peralta R. Prediction of response speed by anticipatory high-frequency (gamma band) oscillations in the human brain. Hum Brain Mapp. 2005;24:50–58. doi: 10.1002/hbm.20056.

- 22. Guttmann G, Bauer H. The brain-trigger design. A powerful tool to investigate brain-behavior relations. Ann N Y Acad Sci. 1984;425:671–675. doi: 10.1111/j.1749-6632.1984.tb23593.x.

- 23. Hanslmayr S, Aslan A, Staudigl T, Klimesch W, Herrmann CS, Bäuml KH. Prestimulus oscillations predict visual perception performance between and within subjects. Neuroimage. 2007;37:1465–1473. doi: 10.1016/j.neuroimage.2007.07.011.

- 24. Lakatos P, Karmos G, Mehta AD, Ulbert I, Schroeder CE. Entrainment of neuronal oscillations as a mechanism of attentional selection. Science. 2008;320:110–113. doi: 10.1126/science.1154735.

- 25. Liang H, Bressler SL, Ding M, Truccolo WA, Nakamura R. Synchronized activity in prefrontal cortex during anticipation of visuomotor processing. Neuroreport. 2002;13:2011–2015. doi: 10.1097/00001756-200211150-00004.

- 26.Mathewson KE, Gratton G, Fabiani M, Beck DM, Ro T. To see or not to see: prestimulus alpha phase predicts visual awareness. J Neurosci. 2009;29:2725–2732. doi: 10.1523/JNEUROSCI.3963-08.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Silberstein RB, Song J, Nunez PL, Park W. Dynamic sculpting of brain functional connectivity is correlated with performance. Brain Topogr. 2004;16:249–254. doi: 10.1023/b:brat.0000032860.04812.b1. [DOI] [PubMed] [Google Scholar]

- 28.Weissman DH, Warner LM, Woldorff MG. The neural mechanisms for minimizing cross-modal distraction. J Neurosci. 2004;24:10941–10949. doi: 10.1523/JNEUROSCI.3669-04.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Womelsdorf T, Fries P, Mitra PP, Desimone R. Gamma-band synchronization in visual cortex predicts speed of change detection. Nature. 2006;439:733–736. doi: 10.1038/nature04258. [DOI] [PubMed] [Google Scholar]

- 30.Wyart V, Sergent C. The phase of ongoing EEG oscillations uncovers the fine temporal structure of conscious perception. J Neurosci. 2009;29:12839–12841. doi: 10.1523/JNEUROSCI.3410-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31. Zhang Y, Ding M. Detection of a weak somatosensory stimulus: role of the prestimulus mu rhythm and its top-down modulation. J Cogn Neurosci. 2010;22:307–322. doi: 10.1162/jocn.2009.21247.

- 32. Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clin Neurophysiol. 2002;113:767–791. doi: 10.1016/s1388-2457(02)00057-3.

- 33. Huerta PT, Lisman JE. Low-frequency stimulation at the troughs of theta-oscillation induces long-term depression of previously potentiated CA1 synapses. J Neurophysiol. 1996;75:877–884. doi: 10.1152/jn.1996.75.2.877.

- 34. Kruglikov SY, Schiff SJ. Interplay of electroencephalogram phase and auditory-evoked neural activity. J Neurosci. 2003;23:10122–10127. doi: 10.1523/JNEUROSCI.23-31-10122.2003.

- 35. Giard MH, Fort A, Mouchetant-Rostaing Y, Pernier J. Neurophysiological mechanisms of auditory selective attention in humans. Front Biosci. 2000;5:D84–94. doi: 10.2741/giard.

- 36. Woldorff MG, Hillyard SA. Modulation of early auditory processing during selective listening to rapidly presented tones. Electroencephalogr Clin Neurophysiol. 1991;79:170–191. doi: 10.1016/0013-4694(91)90136-r.

- 37. Rinne T, Pekkola J, Degerman A, Autti T, Jääskeläinen IP, Sams M, Alho K. Modulation of auditory cortex activation by sound presentation rate and attention. Hum Brain Mapp. 2005;26:94–99. doi: 10.1002/hbm.20123.

- 38. Neelon MF, Williams J, Garell PC. The effects of attentional load on auditory ERPs recorded from human cortex. Brain Res. 2006;1118:94–105. doi: 10.1016/j.brainres.2006.08.006.

- 39. Näätänen R, Picton T. The N1 wave of the human electric and magnetic response to sound: a review and an analysis of the component structure. Psychophysiology. 1987;24:375–425. doi: 10.1111/j.1469-8986.1987.tb00311.x.

- 40. Hari R, Aittoniemi K, Jarvinen ML, Katila T, Varpula T. Auditory evoked transient and sustained magnetic fields of the human brain. Localization of neural generators. Exp Brain Res. 1980;40:237–240. doi: 10.1007/BF00237543.

- 41. Woldorff MG. Distortion of ERP averages due to overlap from temporally adjacent ERPs: analysis and correction. Psychophysiology. 1993;30:98–119. doi: 10.1111/j.1469-8986.1993.tb03209.x.

- 42. Donald MW, Young MJ. A time-course analysis of attentional tuning of the auditory evoked response. Exp Brain Res. 1982;46:357–367. doi: 10.1007/BF00238630.

- 43. Kauhanen L, Nykopp T, Lehtonen J, Jylänki P, Heikkonen J, Rantanen P, Alaranta H, Sams M. EEG and MEG brain-computer interface for tetraplegic patients. IEEE Trans Neural Syst Rehabil Eng. 2006;14:190–193. doi: 10.1109/TNSRE.2006.875546.

- 44. Palomäki T. Master’s Thesis. Helsinki University of Technology; 2007.

- 45. Green D, Swets J. Signal Detection Theory and Psychophysics. J. Wiley; New York: 1966.

- 46. Niedermeyer E, Lopes da Silva F, editors. Electroencephalography: Basic Principles, Clinical Applications, and Related Fields. Lippincott, Williams and Wilkins; 2004.

- 47. Rahn E, Basar E. Prestimulus EEG-activity strongly influences the auditory evoked vertex response: a new method for selective averaging. Int J Neurosci. 1993;69:207–220. doi: 10.3109/00207459309003331.

- 48. Hiscock M, Inch R, Kinsbourne M. Allocation of attention in dichotic listening: differential effects on the detection and localization of signals. Neuropsychology. 1999;13:404–414. doi: 10.1037//0894-4105.13.3.404.

- 49. Frith C, Dolan RJ. Brain mechanisms associated with top-down processes in perception. Philos Trans R Soc Lond B Biol Sci. 1997;352:1221–1230. doi: 10.1098/rstb.1997.0104.

- 50. Pollmann S, Maertens M. Perception modulates auditory cortex activation. Neuroreport. 2006;17:1779–1782. doi: 10.1097/WNR.0b013e3280107a98.

- 51. Ress D, Heeger DJ. Neuronal correlates of perception in early visual cortex. Nat Neurosci. 2003;6:414–420. doi: 10.1038/nn1024.

- 52. Dierks T, Linden DE, Jandl M, Formisano E, Goebel R, Lanfermann H, Singer W. Activation of Heschl’s gyrus during auditory hallucinations. Neuron. 1999;22:615–621. doi: 10.1016/s0896-6273(00)80715-1.

- 53. Petkov CI, O’Connor KN, Sutter ML. Encoding of illusory continuity in primary auditory cortex. Neuron. 2007;54:153–165. doi: 10.1016/j.neuron.2007.02.031.

- 54. Voisin J, Bidet-Caulet A, Bertrand O, Fonlupt P. Listening in silence activates auditory areas: a functional magnetic resonance imaging study. J Neurosci. 2006;26:273–278. doi: 10.1523/JNEUROSCI.2967-05.2006.

- 55. Muller MM, Teder-Salejarvi W, Hillyard SA. The time course of cortical facilitation during cued shifts of spatial attention. Nat Neurosci. 1998;1:631–634. doi: 10.1038/2865.

- 56. Woodman GF, Luck SJ. Electrophysiological measurement of rapid shifts of attention during visual search. Nature. 1999;400:867–869. doi: 10.1038/23698.

- 57. Khayat PS, Spekreijse H, Roelfsema PR. Attention lights up new object representations before the old ones fade away. J Neurosci. 2006;26:138–142. doi: 10.1523/JNEUROSCI.2784-05.2006.

- 58. Valpola H, Särelä J. Accurate, fast and stable denoising source separation algorithms. In: Independent Component Analysis and Blind Signal Separation. Vol. 3195. Springer; Berlin/Heidelberg: 2004.

- 59. Makeig S, Jung TP, Bell AJ, Ghahremani D, Sejnowski TJ. Blind separation of auditory event-related brain responses into independent components. Proc Natl Acad Sci USA. 1997;94:10979–10984. doi: 10.1073/pnas.94.20.10979.

- 60. Kauhanen L, Nykopp T, Sams M. Classification of single MEG trials related to left and right index finger movements. Clin Neurophysiol. 2006;117:430–439. doi: 10.1016/j.clinph.2005.10.024.

- 61. Buch E, Weber C, Cohen LG, Braun C, Dimyan MA, Ard T, Mellinger J, Caria A, Soekadar S, Fourkas A, Birbaumer N. Think to move: a neuromagnetic brain-computer interface (BCI) system for chronic stroke. Stroke. 2008;39:910–917. doi: 10.1161/STROKEAHA.107.505313.

- 62. Mellinger J, Schalk G, Braun C, Preissl H, Rosenstiel W, Birbaumer N, Kübler A. An MEG-based brain-computer interface (BCI). Neuroimage. 2007;36:581–593. doi: 10.1016/j.neuroimage.2007.03.019.

- 63. Shenoy KV, Santhanam G, Ryu SI, Afshar A, Yu BM, Gilja V, Linderman MD, Kalmar RS, Cunningham JP, Kemere CT, Batista AP, Churchland MM, Meng TH. Increasing the performance of cortically-controlled prostheses. Conf Proc IEEE Eng Med Biol Soc. 2006;Suppl:6652–6656.

- 64. deCharms RC. Reading and controlling human brain activation using real-time functional magnetic resonance imaging. Trends Cogn Sci. 2007;11:473–481. doi: 10.1016/j.tics.2007.08.014.

- 65. Luczak A, Bartho P, Marguet SL, Buzsaki G, Harris KD. Sequential structure of neocortical spontaneous activity in vivo. Proc Natl Acad Sci USA. 2007;104:347–352. doi: 10.1073/pnas.0605643104.

- 66. Donoghue JP. Bridging the brain to the world: a perspective on neural interface systems. Neuron. 2008;60:511–521. doi: 10.1016/j.neuron.2008.10.037.

- 67. Stosiek C, Garaschuk O, Holthoff K, Konnerth A. In vivo two-photon calcium imaging of neuronal networks. Proc Natl Acad Sci USA. 2003;100:7319–7324. doi: 10.1073/pnas.1232232100.

- 68. MacLean JN, Watson BO, Aaron GB, Yuste R. Internal dynamics determine the cortical response to thalamic stimulation. Neuron. 2005;48:811–823. doi: 10.1016/j.neuron.2005.09.035.

- 69. Hill NJ, Lal TN, Bierig K, Birbaumer N, Scholkopf B. An Auditory Paradigm for Brain-Computer Interfaces. NIPS. Vol. 17. Vancouver, Canada: 2004.

- 70. Kallenberg M. Thesis. RU Nijmegen; 2006.

- 71. Allison BZ, McFarland DJ, Schalk G, Zheng SD, Jackson MM, Wolpaw JR. Towards an independent brain-computer interface using steady state visual evoked potentials. Clin Neurophysiol. 2008;119:399–408. doi: 10.1016/j.clinph.2007.09.121.

- 72. Kelly SP, Lalor EC, Finucane C, McDarby G, Reilly RB. Visual spatial attention control in an independent brain-computer interface. IEEE Trans Biomed Eng. 2005;52:1588–1596. doi: 10.1109/TBME.2005.851510.

- 73. Prinzel LJ, Freeman FG, Scerbo MW, Mikulka PJ, Pope AT. Effects of a psychophysiological system for adaptive automation on performance, workload, and the event-related potential P300 component. Hum Factors. 2003;45:601–613. doi: 10.1518/hfes.45.4.601.27092.

- 74. Freeman FG, Mikulka PJ, Scerbo MW, Scott L. An evaluation of an adaptive automation system using a cognitive vigilance task. Biol Psychol. 2004;67:283–297. doi: 10.1016/j.biopsycho.2004.01.002.

- 75. Jung TP, Makeig S, Stensmo M, Sejnowski TJ. Estimating alertness from the EEG power spectrum. IEEE Trans Biomed Eng. 1997;44:60–69. doi: 10.1109/10.553713.

- 76. Angelakis E, Stathopoulou S, Frymiare JL, Green DL, Lubar JF, Kounios J. EEG neurofeedback: a brief overview and an example of peak alpha frequency training for cognitive enhancement in the elderly. Clin Neuropsychol. 2007;21:110–129. doi: 10.1080/13854040600744839.

- 77. Egner T, Gruzelier JH. EEG biofeedback of low beta band components: frequency-specific effects on variables of attention and event-related brain potentials. Clin Neurophysiol. 2004;115:131–139. doi: 10.1016/s1388-2457(03)00353-5.

- 78. Noble W, Gatehouse S. Effects of bilateral versus unilateral hearing aid fitting on abilities measured by the Speech, Spatial, and Qualities of Hearing Scale (SSQ). Int J Audiol. 2006;45:172–181. doi: 10.1080/14992020500376933.

- 79. Maison S, Micheyl C, Collet L. Influence of focused auditory attention on cochlear activity in humans. Psychophysiology. 2001;38:35–40.

- 80. Shinn-Cunningham BG, Best V. Selective Attention in Normal and Impaired Hearing. Trends Amplif. 2008;12:283–299. doi: 10.1177/1084713808325306.