Abstract

Background

It is well known that the deterministic dynamics of biochemical reaction networks can be more easily studied if timescale separation conditions are invoked (the quasi-steady-state assumption). In this case the deterministic dynamics of a large network of elementary reactions are well described by the dynamics of a smaller network of effective reactions. Each of the latter represents a group of elementary reactions in the large network and has associated with it an effective macroscopic rate law. A popular method to achieve model reduction in the presence of intrinsic noise consists of using the effective macroscopic rate laws to heuristically deduce effective probabilities for the effective reactions which then enables simulation via the stochastic simulation algorithm (SSA). The validity of this heuristic SSA method is a priori doubtful because the reaction probabilities for the SSA have only been rigorously derived from microscopic physics arguments for elementary reactions.

Results

We here obtain, by rigorous means and in closed-form, a reduced linear Langevin equation description of the stochastic dynamics of monostable biochemical networks in conditions characterized by small intrinsic noise and timescale separation. The slow-scale linear noise approximation (ssLNA), as the new method is called, is used to calculate the intrinsic noise statistics of enzyme and gene networks. The results agree very well with SSA simulations of the non-reduced network of elementary reactions. In contrast the conventional heuristic SSA is shown to overestimate the size of noise for Michaelis-Menten kinetics, considerably under-estimate the size of noise for Hill-type kinetics and in some cases even miss the prediction of noise-induced oscillations.

Conclusions

A new general method, the ssLNA, is derived and shown to correctly describe the statistics of intrinsic noise about the macroscopic concentrations under timescale separation conditions. The ssLNA provides a simple and accurate means of performing stochastic model reduction and hence it is expected to be of widespread utility in studying the dynamics of large noisy reaction networks, as is common in computational and systems biology.

Background

Biochemical pathways or networks are typically very large. A well-characterized example is the protein-protein interaction network of the yeast Saccharomyces cerevisiae with approximately a thousand putative interactions involving an approximate equal number of proteins [1]. It is also a fact that a significant number of species are found in low copy numbers in both prokaryotic and eukaryotic cells [2,3]. Recent mass spectrometry-based studies have, for example, shown that 75% of the proteins in the cytosol of the bacterium Escherichia coli appear in copy numbers below 250 and the median copy number of all identified proteins is approximately 500 [3]. This means that simulation methods intended to realistically capture the inner workings of a cell have to (i) be stochastic to take into account the significant intrinsic noise associated with low copy number conditions; (ii) be able to simulate fairly large networks in a reasonable amount of time. The stochastic simulation algorithm (SSA) [4] has been and still is the algorithm of choice for a large number of studies exploring the role of noise in biology. The advantage of the algorithm is that it is exact, i.e., it exactly samples the trajectories of the stochastic process described by the chemical master equation (CME), the accepted mesoscopic description of chemical kinetics. Its disadvantage is that it simulates every reaction event and hence is not particularly suited for the study of large networks [5]. This problem is an outstanding challenge in the fields of computational and systems biology.

A common way of circumventing the problem is to simulate a network of species which is much smaller than the size of the full network but which nevertheless captures the essential dynamics. For example, the three elementary (unimolecular or bimolecular) reactions which describe the enzyme-assisted catalysis of substrate S into product P via the Michaelis-Menten reaction, , can be replaced by a single effective reaction . Note that here E and C denote the free enzyme species and the enzyme-substrate complex species, respectively. The latter first-order reaction is non-elementary, i.e., it can be broken down into a set of fundamental elementary reactions. The implicit assumption in this lumping or coarse-graining method is that the transients in the average concentrations of some species decay over much longer timescales than those of the rest of the species. Hence, one can argue that the relevant network to be simulated is that involving the slowly varying species only. In the Michaelis-Menten example, the fast species were the enzyme and the complex and the slow species are the substrate and product. The dynamics of this reduced network are of course only a faithful approximation of those of the full network, the Michaelis-Menten reaction, whenever the rate constants guarantee reasonable timescale separation.

On the macroscopic level, where molecule numbers are so large that intrinsic noise can be ignored, there is a well-known practical recipe for obtaining this reduced or coarse-grained network from the full network of elementary reactions. One writes down the rate equations (REs) for each species, decides which species are fast and slow, sets the time derivative of the concentration of the fast species to zero, solves for the steady-state concentrations of the fast species and finally substitutes these concentrations into the equations for the slow species. This procedure is the deterministic quasi-steady-state assumption (QSSA). The result is a set of new REs for the slow species only; corresponding to these reduced equations is the coarse-grained network, i.e., the network of reactions between slow species whose macroscopic rate laws are dictated by the new REs. Generally, all coarse-grained networks will have at least one reaction which is non-elementary; however those reactions involving the interaction of only slow species in the full network will naturally also remain elementary in the coarse-grained network. The deterministic QSSA presents a rigorous method of achieving a coarse-grained macroscopic description based on the deterministic REs [6]. Its major shortcoming is that it ignores the inherent stochasticity of the system.

On the mesoscopic level, or, in other words, whenever the size of intrinsic noise becomes comparable with the average molecule numbers, the description of chemical kinetics is given by the CME. One would hope that under conditions of timescale separation, just as one can write effective REs for a coarse-grained network starting from the REs of the full network, in a similar manner one can obtain an effective (or reduced) CME for the coarse-grained network starting from the CME of the full network. The effective REs have information about the macroscopic concentrations of the slow species only, while the effective CME has information about the fluctuations of the slow species only. This line of reasoning has led to a stochastic formulation of the QSSA which is in widespread use. In what follows we concisely review the CME formulation of stochastic kinetics and point out compelling reasons which cast doubt on the validity of the popular stochastic QSSA.

Suppose the network (full or coarse-grained) under consideration consists of a number N of distinct chemical species interacting via R elementary or non-elementary chemical reactions of the type

| (1) |

Here, j is an index running from 1 to R, Xidenotes chemical species i, sijand rij are the stoichiometric coefficients and kjis the macroscopic rate coefficient of the reaction. If reaction scheme (1) describes the full network with Ns number of slow species and Nf=N−Ns number of fast species, then we adopt the convention that X1to denote the slow species, while to XN label the fast species. Let nidenote the absolute number of molecules of the ith species; then, at any point in time, the system is described by the state vector . When the jth reaction occurs, the system jumps from state to a new state , where . Furthermore, one defines a propensity function aj for the jth reaction such that is the probability that the jth reaction occurs in the next infinitesimal time interval . Using these definitions and the laws of probability, one can then deduce that the general form of the CME is [5]

| (2) |

where is the probability that the system is in a particular mesoscopic state . The recipe becomes complete once we specify the form of the propensity functions for each chemical reaction. Figure 1 lists the microscopic rate function, , i.e., the propensity functions divided by the volume Ω, for 4 elementary reactions and 3 common non-elementary reactions. The macroscopic rate function fj, i.e., the rate of reaction according to the deterministic REs, is also shown alongside the microscopic rate functions. Note that in the macroscopic rate functions denotes the macroscopic concentration of species i.

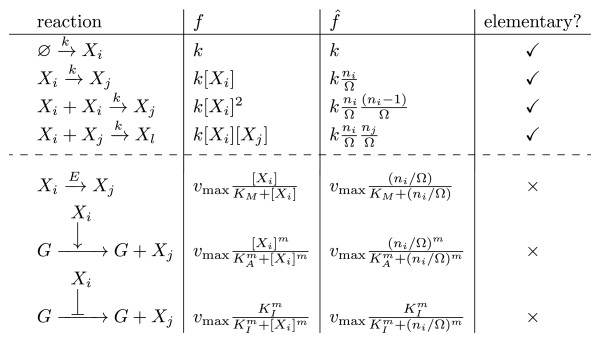

Figure 1.

Microscopic and macroscopic rate functions. The macroscopic rate function f and the microscopic rate function for various common types of chemical reaction steps. The former define the REs while the latter define the CME. The first four reactions are elementary, i.e., they are unimolecular or bimolecular reactions. The last three reactions are non-elementary, i.e., they can be decomposed into a number of simpler elementary reactions. These reactions represent (from top to bottom) the catalysis of a substrate by enzyme, up-regulation of a gene (G) by an activator and down-regulation of a gene by a repressor

If we are modeling the full network, then the constituent reactions have to be all elementary. For such reactions, the propensity and microscopic rate functions have been derived from molecular physics [7] and hence the CME for the full network is fundamentally correct. Now say that we are modeling a coarse-grained network in which case some reactions are non-elementary. Microscopic considerations do not tell us anything about the form of the propensity functions for such reactions. Rather the propensities and the microscopic rate functions are a heuristic extrapolation of the macroscopic reaction rates at the heart of the effective REs for the non-elementary reactions: is obtained by performing the substitution on f .

Hence it follows that the CME for the coarse-grained network is not rigorously derived from that of the full network under conditions of timescale separation but rather is heuristic and hence its validity is a priori doubtful. Janssen was the first to investigate this question by means of an analytical approach applied to a simple chemical example, the dissociation of N2O5; he showed that “the master equation for a complex chemical reaction cannot always be reduced to a simpler master equation, even if there are fast and slow individual reaction steps” [8]. This suggests that even if the molecules numbers are quite large, the conditions for timescale separation required for the validity of the deterministic QSSA are not generally enough to guarantee the validity of the heuristic CME, a hypothesis which has been recently verified in the context of the Michaelis-Menten reaction with substrate input [9]. In other words, the heuristic CME is not the legitimate stochastic equivalent of the deterministic QSSA, in the sense that it does not correctly describe the statistics of the intrinsic noise about the macroscopic concentrations as given by the reduced REs of the coarse-grained network.

Notwithstanding the fundamental objections of Janssen, and frequently in the name of pragmatism, many studies [10-13] have employed the heuristic CME to obtain a coarse-grained stochastic description of various complex networks. A number of studies [14-17] have reported good agreement between the results of stochastic simulations of the full and coarse-grained networks for enzyme reactions and circadian oscillators which has enhanced faith in the heuristic approach of stochastic modeling of networks with non-elementary reactions and given it the status of a mainstream methodology.

In this article we seek to derive a rigorous alternative to the heuristic approach. Given the CME of the full network of elementary reactions, we derive a reduced linear Fokker-Planck equation (FPE) which describes the noise statistics of the same network when the molecule numbers are not too small and under the same conditions of timescale separation imposed by the deterministic QSSA. This new FPE is the legitimate mesoscopic description of intrinsic noise about the macroscopic concentrations of the coarse-grained network as obtained by the deterministic QSSA. The noise statistics from this approach are compared with stochastic simulations of the full network and with simulations of the coarse-grained network using the conventional heuristic approach. In all cases our approach agrees very well with the full network results. In contrast, we show how the size of intrinsic noise as predicted by the conventional approach can be different by more than an order of magnitude than the actual value and how in some instances this approach even misses the existence of noise-induced oscillations. We also show using our method how one can obtain the regions of parameter space where the conventional approach qualitatively fails and where it fares well.

The article is organized as follows. In the Results section, we discuss in general terms the procedure of obtaining a rigorous mesoscopic description under conditions of timescale separation akin to those of the deterministic QSSA. We then apply this novel method to two different examples: an enzyme mechanism capable of displaying both Michaelis-Menten and Hill-type kinetics and a gene network with a single negative feedback loop. The results from our method are contrasted and compared with stochastic simulations of the full network and with those of the coarse-grained network using the conventional heuristic method. We finish by a discussion. Detailed derivations concerning results and applications can be found in the Methods section.

Results

The optimal method to determine the validity of the heuristic CME would be to obtain its analytical solution and compare it with that of the CME for the full network and for rate constants chosen such that the deterministic QSSA is valid. Note that the latter constraint on rate constants is necessary because the propensities of the heuristic CME are based on the macroscopic rate laws as given by the reduced REs and hence the heuristic CME can only give meaningful results if the deterministic QSSA is valid. Unfortunately, CMEs are generally analytically intractable, with exact solutions only known for a handful of simple elementary reactions [18-20]. To circumvent this problem we take recourse to a systematic approximation method, the system-size expansion of van Kampen [21]. The starting point of this method is to write the absolute number of molecules of species i in the CME, equation (2), as

| (3) |

where is the macroscopic concentration of species i and εiis proportional to the noise about this concentration. This substitution leads to an infinite expansion of the master equation. The first term, that proportional to Ω 1/2, leads to the deterministic equations for the mean concentrations as predicted by the CME in the macroscopic limit of large volumes (or equivalently large molecule numbers). The rest of the terms give a time-evolution equation for the probability density function of the fluctuations, . This partial differential equation is an infinite series in powers of the inverse square root of the volume (see [22] for the general form of this equation). Truncating this series to include only the first term, i.e., that which is proportional to Ω 0, leads to a second-order partial differential equation, also called the linear Fokker-Planck equation or the linear noise approximation (LNA) [21,23,24]. The solution of the latter equation is a multivariate Gaussian probability distribution and hence expressions for the statistics of intrinsic noise about the macroscopic concentrations, e.g., the variance of fluctuations, can be obtained straightforwardly from this formalism, a distinctive advantage over the CME. The restrictions which must be kept in mind are that this method only provides a reliable approximation to the CME if the molecule numbers are sufficiently large (small intrinsic noise) and the chemical network is monostable (see also the Discussion and conclusion section).

Hence we can now formulate two questions to precisely determine the validity of the heuristic CME in timescale separation conditions: (i) in the macroscopic limit, are the mean concentrations of the heuristic CME exactly given by the reduced REs obtained from the deterministic QSSA? (ii) are the noise statistics about these mean concentrations, as given by the LNA applied on the heuristic CME, equal to the noise statistics obtained from applying the LNA on the CME of the full network? If the heuristic CME is correct then the answer to both these questions should be yes.

The first question can be answered straightforwardly. The deterministic equations for the mean concentrations of the heuristic CME, in the macroscopic limit of infinite volumes, necessarily only depend on the macroscopic limit of the heuristic microscopic rate functions in the heuristic CME. More specifically, consideration of the first term of the system-size expansion leads to a deterministic set of equations of the form , where S is the stoichiometric matrix with elements Sij=rij−sij[23]. As discussed in the Introduction, the vector of heuristic microscopic rate functions for the heuristic CME, , is constructed from the macroscopic rate function vector, , of the reduced REs by performing the substitution on . Given the ansatz, equation (3), we can see that the heuristic method guarantees, by construction, that . This implies that the first term of the system-size expansion applied to the heuristic CME leads to a deterministic set of equations of the form , which indeed are the reduced REs obtained from the deterministic QSSA. Hence, we can conclusively state that in the macroscopic limit, the heuristic CME does reproduce the correct mean concentrations for timescale separation conditions.

The second question, regarding agreement in noise statistics not simply in the means, has not been considered before and presents a considerably more difficult challenge. In what follows we briefly review the LNA applied to the heuristic CME of the coarse-grained network which we shall call the hLNA and we derive the LNA applied to the full network under conditions of timescale separation, a novel method which we refer to as the slow-scale LNA (ssLNA).

The LNA applied to the heuristic CME

The application of the LNA to the heuristic CME has been the subject of a number of studies [23,25-30]. For a step-by-step guide to implementing the LNA we refer the reader to the supplementary material of Ref. [9]. Here we simply state the well known results. We shall use the underline notation to denote a matrix throughout the rest of the article.

Given the coarse-grained network, reaction scheme (1), one can construct the stoichiometric matrix S with elements Sij=rij−sij and the macroscopic rate vector with entries . Note that the latter, as discussed in the Introduction, encapsulates the macroscopic rate law for each individual reaction composing the network. Note also that the k’s for a coarse-grained network are generally functions of the macroscopic concentrations and not constants as for a full network (of elementary reactions). The reduced REs are then given by and consequently the Jacobian matrix J has elements , where ∂j denotes the partial derivative with respect to , the concentration of species j.

It then follows by the LNA that the noise statistics given by the heuristic CME, i.e., equation (2) with heuristic propensities, in the limit of large molecule numbers, are approximately described by the following linear FPE

| (4) |

where is the vector of concentration fluctuations about the macroscopic concentrations of the slow species, i.e., . Note that in the traditional approach due to van Kampen [21] one writes a linear FPE for the noise vector because of the form of the ansatz, equation (3) (the subscript s denotes slow species). Here we have instead chosen to write the FPE for since is the true measure of fluctuations about the mean concentrations. The operator ∇sdenotes the vector of derivatives with respect to components of the vector . The matrix Dh is the diffusion matrix which is given by the following formula

| (5) |

where F is a diagonal matrix whose non-zero diagonal entries are the elements of the macroscopic rate function vector , i.e., .

The solution of the linear FPE, equation (4), is a multivariate Gaussian and hence noise statistics can be straightforwardly computed. The covariance matrix H of the concentration fluctuations about the steady-state concentrations, as described by the linear FPE, can be obtained by solving the Lyapunov equation [24,31]

| (6) |

where Hij=〈ηs,iηs,j〉. The variance of the fluctuations of species j is hence given by the jth diagonal element of H. The power spectrum of the concentration fluctuations of the jth species is given by

| (7) |

where I is the identity matrix, i is the imaginary unit number and ω is the frequency.

Note that we have chosen to compute the variance and power spectrum as our noise statistics for the following reasons. The variance can be used to calculate the Fano factor (variance of fluctuations divided by the mean concentration) and the coefficient of variation (standard deviation of fluctuations divided by the mean concentration) [32]. The coefficient of variation provides a non-dimensional measure of the size of intrinsic noise, and is a particularly natural measure when the probability distribution solution of the CME is approximately Gaussian. The Fano factor multiplied by the volume provides another non-dimensional measure of the noise level: it gives the size of the fluctuations in the molecule numbers relative to that of a Poissonian distribution with the same mean number of molecules. Generally these measures provide different but complementary information and both have been reported in recent experiments [33,34]. Hence in this article we calculate both measures. We also calculate the power spectrum which gives the intensity of fluctuations at a given frequency; a peak in the spectrum indicates noise-induced oscillations [35], a phenomenon which is of importance in biochemical networks responsible for biological rhythms such as circadian clocks [36].

The LNA applied to the full network under conditions of timescale separation

The LNA approach mentioned in the previous subsection works equally well if applied to the CME of the full network. This leads to a linear FPE of the form

| (8) |

where and and are, respectively, the vectors of concentration fluctuations about the macroscopic concentrations of the slow and fast species. The operator ∇ denotes the vector of derivatives with respect to components of . The matrix JF is the Jacobian of the REs of the full network, while the diffusion matrix DF is given by equation (5) with S and F now equal to the stoichiometric matrix and the diagonal matrix of the macroscopic rate function vector for the full network, respectively.

Note that while equation (4) is based on the heuristic CME and therefore inherits all its problems, equation (8) has no such problems: it is derived from the CME of the full network of elementary reactions, which is fundamentally correct. Hence, ideally, we would obtain the multivariate Gaussian solution of the two linear FPEs, compare and then decide upon the validity of the heuristic CME. Unfortunately, this direct comparison is impossible because equation (4) gives a joint probability distribution function for the slow species only, whereas equation (8) leads to a joint probability density function for both slow and fast species.

In the Methods section we devise an adiabatic elimination method by which, starting from equation (8), we obtain a closed-form solution for a linear FPE that describes the time evolution of the joint probability density function of slow variables only. We call the reduced linear FPE obtained from this method, the slow-scale LNA. Our result can be stated as follows. Under conditions of timescale separation consistent with the deterministic QSSA, the noise statistics of the slow species according to the CME of the full network can be approximately described by the following linear FPE

| (9) |

Note that the matrix J is the Jacobian of the reduced REs of the coarse-grained network, indeed the same as in equation (4). However, the diffusion matrix Dss is generally different than Dh, which indeed proves the non-validity of the heuristic CME as a stochastic description of the coarse-grained network. We show that the new diffusion matrix is given by

| (10) |

where and . We have also used the following convenient definitions

|

(11) |

where S and JF are the stoichiometric and Jacobian matrices of the full network and F is the diagonal matrix of the macroscopic rate function vector of the full network with the macroscopic concentrations of the fast species expressed in terms of the macroscopic concentrations of the slow ones. The matrices S and JF are partitioned into sub-matrices of the following sizes: Ss is an Ns×R matrix, Sf is an Nf×R matrix, Js is an Ns×Ns matrix, Jsf is an Ns×Nf matrix, Jfs is an Nf×Ns matrix and Jf is an Nf×Nf matrix. Note that Nsand Nf are the number of slow and fast species respectively. Note also that the fact that the new diffusion matrix can be written in the form of equation (10) immediately implies that it is symmetric and positive semi-definite, two crucial properties of the diffusion matrices for all FPEs [21]. It also follows that the variance and power spectrum of the slow species according to the ssLNA can be calculated from equations (6) and (7) with Dh replaced by Dss.

The derivation of the ssLNA leads us to a fundamental conclusion: although the existence of an effective CME for a coarse-grained network under conditions of timescale separation cannot be generally guaranteed (as proved by Janssen), it is always possible to write down a effective linear FPE for the coarse-grained network. We now also have a viable strategy to compare the heuristic and full CMEs under conditions of timescale separation: one obtains the noise statistics from the hLNA and the ssLNA and compares them for rate constants such that the deterministic QSSA is a valid approximation (for an illustration of the comparison method, see Figure 2). Furthermore, the ssLNA provides us not only with a new method to analytically obtain the noise statistics of a coarse-grained network but also with a new simulation tool which replaces conventional SSA simulations with heuristic propensities. The new simulation method consists in numerically solving the set of stochastic differential equations (Langevin equations) which exactly correspond to equation (9) [37]

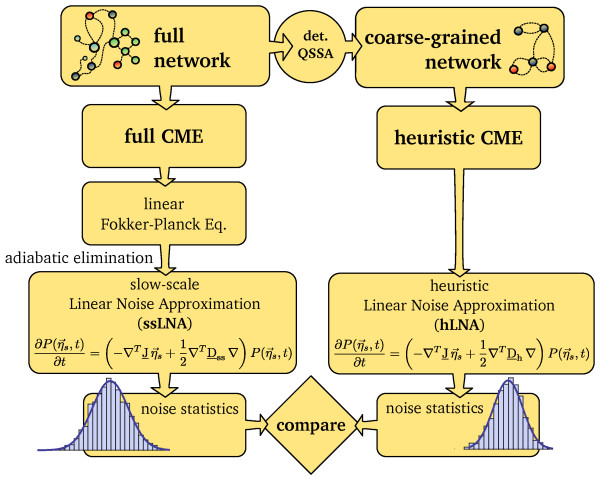

Figure 2.

Determining the validity of the heuristic CME. Scheme illustrating the analytical approach to determine the validity of the heuristic CME which is used in this article. Parameters are chosen such that the deterministic QSSA is valid and such that molecule numbers are not too small. The LNA is applied to the heuristic CME leading to a linear FPE, describing the noise of the slow species. A different reduced linear FPE describing the noise in the slow species is obtained by applying a rigorous adiabatic elimination method on the linear FPE which approximates the CME of the full network. The noise statistics from the two linear FPEs are compared

| (12) |

where the R dimensional vector is white Gaussian noise defined by 〈 Γi(t)〉=0and .

One may ask whether there is an effective CME which in the large volume limit can be approximated by the ssLNA, Eq. (12). The form of the noise coefficient in Eq. (12) implies that the ssLNA corresponds to the master equation of an effective reaction scheme with a stoichiometric matrix

| (13) |

Such a reaction scheme is compatible with the reduced REs: defining as the macroscopic concentration vector of the slow species, we have since as required by the deterministic QSSA. Note that while S’ is not the only stoichiometric matrix which is compatible with the reduced REs (any matrix of the form where A is some general matrix will do), it is the matrix which is uniquely selected by adiabatic elimination of the concentration fluctuations of the fast species. Of course this reduced reaction scheme characterized by S’ is only physically meaningful if its entries are time-independent and integer-valued. Under timescale separation, this condition is not generally fulfilled. Rather this constitutes an additional, stronger condition. Hence it follows that generally a reduced CME description does not exist under timescale separation conditions, though a reduced Langevin equation description, i.e., the ssLNA, always exists.

In the rest of this article, we apply the systematic comparison method developed in the Results section to two examples of biological importance: enzyme-facilitated catalysis of substrate into product by cooperative and non-cooperative mechanisms and a genetic network with a negative feedback loop. For each of these, we shall obtain the noise properties of the coarse-grained versions of the circuits in the limit of large molecule numbers using the ssLNA and the hLNA. Because the expressions for the noise statistics from these two are quite simple, we shall be able to readily identify the regions of parameter space where the hLNA, and hence the heuristic CME, is correct and where it gives misleading results. The theoretical results are confirmed by stochastic simulations based on the CME of the full network and on the heuristic CME of the coarse-grained network.

Application I: Cooperative and non-cooperative catalytic mechanisms

Many regulatory mechanisms in intracellular biochemistry involve multisubunit enzymes with multiple binding sites [38]. We consider a simple network involving the catalysis of substrate into product by a two- subunit enzyme

| (14) |

Substrate S is input into the compartment where the reaction is occurring, it reversibly binds with an enzyme EE which has two free binding sites to form the first complex EES with one binding site occupied by a substrate molecule. This complex either decays into the original enzyme EE and a product molecule or else it can reversibly bind to another substrate molecule leading to a second complex SEES with both binding sites occupied by substrate molecules. Finally, this last complex decays into the first complex and product P. Note that reaction scheme (14) is the considered full network, since only elementary reactions are involved.

Deterministic analysis and network coarse-graining

The full network (14) (without the input reaction) has been previously studied using REs by Tyson [39]. The coarse-grained network is obtained by implementing the deterministic QSSA: transients in the enzyme and complex concentrations are assumed to decay much faster than transients in the substrate concentrations. Hence, the time derivatives of the REs for the concentrations of the two complexes are set to zero, the steady-state concentrations of the two complexes are found and substituted in the RE for substrate concentration, leading to a single effective rate equation [39] given by

| (15) |

| (16) |

where S is the instantaneous substrate concentration and k′is an effective first-order rate coefficient. The Michaelis-Menten constants are and and the total enzyme concentration is denoted as , which is a constant at all times and equal to , the sum of the concentrations of free enzyme and of the two complexed forms. Hence, the coarse-grained version of the full network (14) is simply

| (17) |

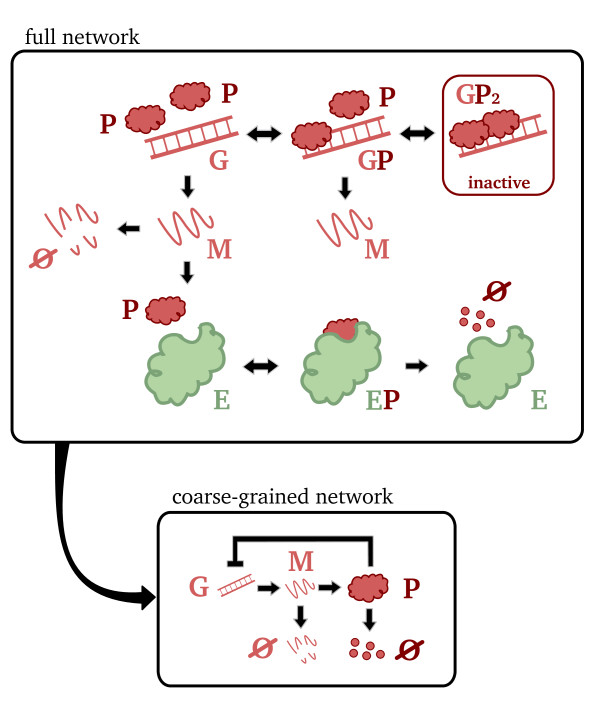

Note that the deterministic QSSA has reduced our network from one with 5 species interacting via 7 elementary reactions, reaction scheme (14), to one with 2 species interacting via 2 reactions, one elementary and one non-elementary, reaction scheme (17). A cartoon representation of the two networks can be found in Figure 3. The dynamics of the coarse-grained network are a good approximation of those of the full network provided the timescales for the decay of the transients in the concentrations of the two complexes are much shorter than those of the substrate.

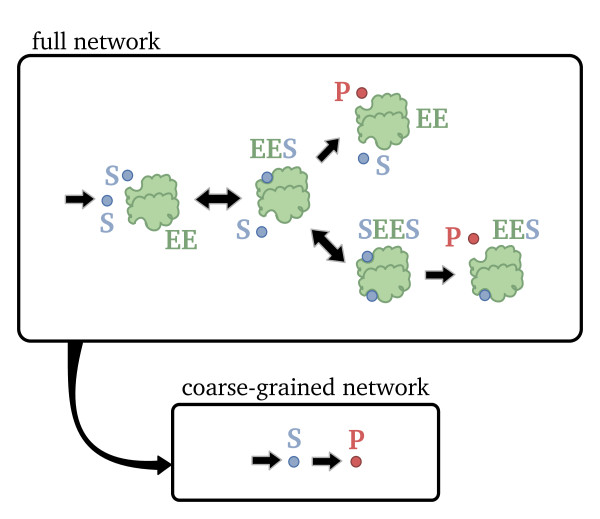

Figure 3.

Full and coarse-grained mechanisms of a two-subunit enzyme network. Cartoon illustrating the full and coarse-grained networks for the two-subunit enzyme network. The reduced, coarse-grained network is obtained from the full network under conditions of timescale separation, i.e., transients in the concentrations of all enzyme and complex species decay much faster than transients in the concentrations of the substrate and product species

Note that throughout the rest of this article, the notation [X] will generally denote the steady-state concentration of species X, unless it appears in the context of a differential equation as in equation (15) where then it necessarily refers to the instantaneous concentration.

Stochastic analysis of the coarse-grained network: ssLNA and hLNA methods

We use the ssLNA (see the Results section) to obtain the Langevin equation for the intrinsic noise ηs(t) about the steady-state macroscopic substrate concentration of the coarse-grained network, i.e., the steady-state solution [S] of equation (15). The derivation leading to the Langevin equation can be found in the Methods section. The result is

| (18) |

where J is the Jacobian of the reduced RE, equation (15), and the functions q1 and q2 are defined as

| (19) |

| (20) |

Note that Γi(t)denotes the contribution to the intrinsic noise in the steady-state substrate concentration due to the ith elementary reaction of the full network of which there are 7 in total. It is clear that Γ1(t) is the noise from the input reaction since it has a pre-factor of kin, Γ2(t)is the noise from the binding of substrate and free enzyme since it has a pre-factor of k1 and so on for the rest of the noise terms. Hence, we see that according to the ssLNA, under conditions of timescale separation, all elementary reactions in the full network contribute to the intrinsic noise in the substrate concentration. The variance of the intrinsic noise described by the Langevin equation, equation (18), can be calculated according to the recipe described in the Results section (see also the Methods section) and is found to be given by

| (21) |

Next we obtain the variance of the substrate fluctuations by applying the LNA to the heuristic CME of the coarse-grained network (hLNA). The heuristic microscopic rate functions, i.e., the propensities divided by the volume, are in this case

| (22) |

| (23) |

Using the prescription for the hLNA (see the Results and Methods sections), one obtains the variance of the fluctuations to be

| (24) |

A comparison of equations (21) and (24) leads one to the observation that the latter can be obtained from the former by setting q1=q2=0. Substituting these conditions in the Langevin equation, equation (18), we obtain physical insight into the shortcomings of the conventional heuristic method. This method rests on the incorrect implicit assumption that under conditions of timescale separation, the reversible elementary reactions involving the fast species do not contribute to the intrinsic noise in the substrate concentration.

Stochastic Michaelis-Menten and Hill-type kinetics

We now consider two subcases which are of special interest in biochemical kinetics: (i) k2→0, Km2→∞; (ii) k2→∞, Km2→0 at constant . These limits applied to the reduced RE, equations (15), lead to the two simplified REs, respectively,

| (25) |

| (26) |

Hence, the first case leads to Michaelis-Menten (MM) kinetics (non-cooperative kinetics) and the second to Hill-type kinetics with a Hill coefficient of two (cooperative kinetics).

Applying limit (i) to equations (21) and (24), we obtain the variance of the fluctuations for Michaelis-Menten kinetics as predicted by the ssLNA and the hLNA

| (27) |

| (28) |

Similarly applying limit (ii) to equations (21) and (24), we obtain the variance of the fluctuations for Hill-type kinetics as predicted by the ssLNA and the hLNA

| (29) |

| (30) |

Comparison of equations (27) and (28) shows that the heuristic CME description of the coarse-grained network overestimates the size of intrinsic noise whenever the deterministic kinetics are Michaelis-Menten. Interestingly, comparison of equations (29) and (30) shows the opposite for Hill-type kinetics: the size of noise predicted by the heuristic CME underestimates the true value. Note also that for both types of kinetics, the heuristic CME predicts the correct noise statistics in the limit of very small and very large substrate concentrations (which correspond to very large and very small free enzyme concentrations in steady-state conditions, respectively). The predictions for the Michaelis-Menten case agree with those reported by a recent simulation-based study [17] and a study using the LNA applied to the full network [9]. Indeed, this agreement is an important benchmark for the ssLNA. To our knowledge, the results for the Hill-type kinetics have not been obtained before.

The results for Hill-type kinetics are shown in Figure 4. In Figures 4a and 4b we plot the coefficient of variation CVS and the Fano factor FFS of the substrate concentration fluctuations (as predicted by equations (29) and (30)) versus the non-dimensional fraction . From equation (26) it can be deduced that ; hence, the physical meaning of Θis that it is a measure of enzyme saturation since as it increases, the substrate concentration increases as well, and consequently the free enzyme concentration decreases. The values of rate constants are chosen such that timescale separation is guaranteed, i.e., there is very good agreement between the concentration of the slow species as predicted by the REs of the full network and the reduced REs obtained using the deterministic QSSA (see Figure 5).

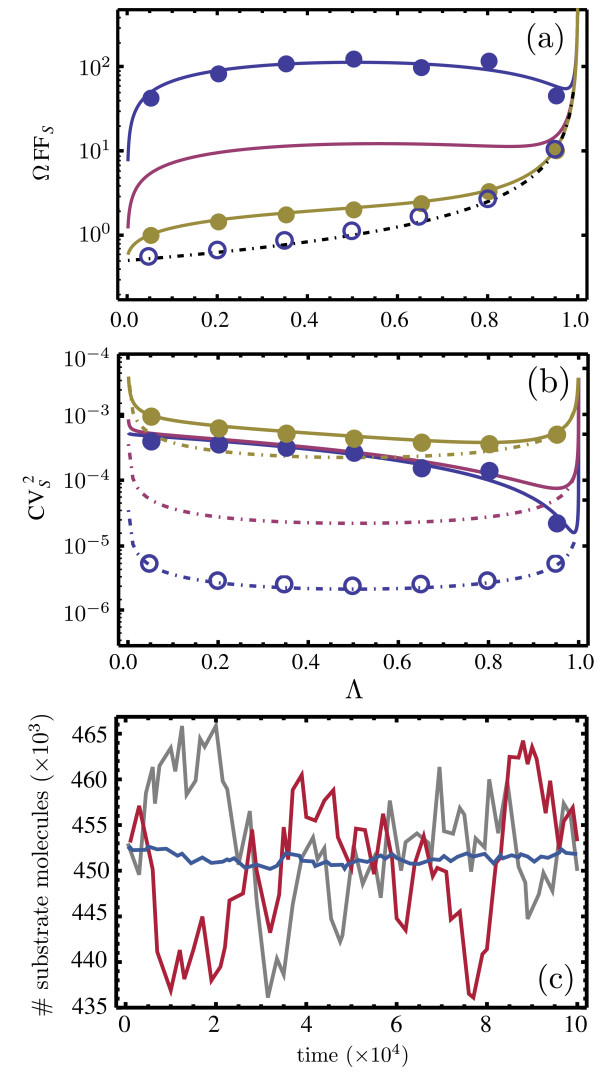

Figure 4.

Noise statistics for cooperative two-subunit enzyme network. Plots showing the Fano factor multiplied by the volume, ΩFFS, (a) and the coefficient of variation squared, , (b) for the substrate fluctuations as a function of the non-dimensional fraction Θ, in steady-state conditions. The latter is a measure of enzyme saturation. The solid lines are the ssLNA predictions, the dashed lines are the hLNA predictions, the solid circles are obtained from stochastic simulations of the full network and the open circles are obtained from stochastic simulations of the coarse-grained network using the SSA with heuristic propensities. The color coding indicates different values of the bimolecular rate constant k1: 5×10−3(yellow), 5×10−5(purple), and 5×10−7(blue). The remaining parameters are given by , k2=1000, k−1=k−2=100, k3=k4=1. Note that in (a) the black dashed line indicates the hLNA prediction for all three different values of k1, which are indistinguishable in this figure. The stochastic simulations were carried out for a volume Ω=100. In (c) sample paths of the SSA for the full network (gray), the slow scale Langevin equation (red) as given by equation (18) and the SSA with heuristic propensities (blue) are compared for Θ=0.5. The slow scale Langevin equation is numerically solved using the Euler-Maruyama method with timestep δt=0.1. Note that in all cases, the chosen parameters guarantee timescale separation (validity of the deterministic QSSA) (see Figure 5)

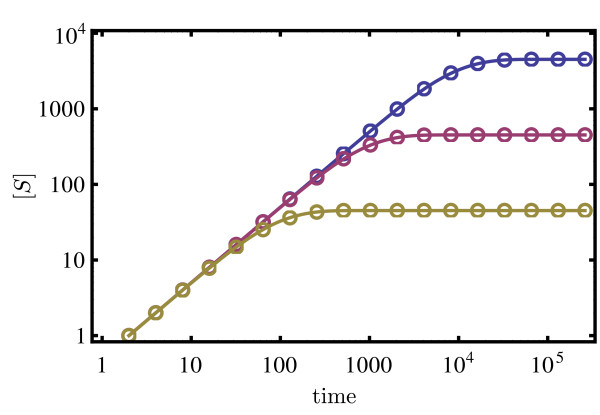

Figure 5.

Validity of the deterministic QSSA for the cooperative two-subunit enzyme network. Plot of the macroscopic substrate concentration [S] versus time, as obtained by numerically solving the deterministic REs of the full network (solid lines) and the reduced REs obtained using the deterministic QSSA (open circles). The color coding and rate constant values are as in Figure 4 (a) and (b); the value of Θis 0.5. The excellent agreement between the two RE solutions, implies timescale separation conditions

The following observations can be made from Figures 4a and 4b. The ssLNA quantitatively agrees with the results of stochastic simulations of the full network for a large enough volume Ω. In contrast, the heuristic approach, hLNA, and stochastic simulations based on the corresponding heuristic CME, are generally in quantitative disagreement with the results of the ssLNA and of stochastic simulations of the full network, even if the volume is very large. For example, for the case k1=5×10−7 and Θ=1/2, the CVS and FFS from the hLNA are approximately 11 and 112 times smaller, respectively, than the prediction of the ssLNA. In Figure 4c we also illustrate the large differences which these statistics imply, by showing sample paths (trajectories) of the CME of the full network, of the heuristic CME and of the Langevin equation given by the ssLNA, equation (18). This confirms that: (i) the hLNA and, hence, the heuristic CME on which it is based, predicts the correct mean concentrations but incorrect noise statistics even if the molecule numbers are considerably large; (ii) the Langevin equation obtained from the ssLNA is a viable accurate simulation alternative to SSA simulations based on the heuristic CME.

Besides quantitative disagreement we also note that the qualitative dependence of the FFS and the CVS with Θ as predicted by the heuristic approach is also very different than the predictions of the ssLNA and stochastic simulations with the full network. For example, for the case k1=5×10−7, according to stochastic simulations of the full network and the ssLNA, the FFS reaches a maximum at Θ=1/2, whereas the heuristic approach predicts a monotonic increase of the FFSwith Θ. The case Θ<0.5is particularly interesting because the ssLNA and stochastic simulations of the full network lead to ΩFFSwhich is much greater than 1, whereas the heuristic approach predicts ΩFFS which is below 1. Hence, for Θ<0.5, the ssLNA correctly predicts the fluctuations to be super-Poissonian, i.e., the size of the fluctuations is larger than those of a Poissonian with the same mean number of substrate molecules, whereas the hLNA incorrectly predicts the opposite case of sub-Poissonian fluctuations.

The power spectrum for the substrate fluctuations has also been calculated (see the Methods section). Although there is some quantitative disagreement between the predictions of the ssLNA and hLNA both are in qualitative agreement: the spectrum is monotonic in the frequency and hence no noise-induced oscillations are possible by this mechanism. More generally, it can be shown that the spectra of the hLNA and ssLNA are in qualitative agreement for all full networks with at most one slow species because as can be deduced from equation (7), for such networks, the spectrum for a single species chemical system is invariably monotonic in the frequency.

Application II: A gene network with negative feedback

Finally, we study an example of a gene network with autoregulatory negative feedback. Such a feedback mechanism is ubiquitous in biology appearing in such diverse contexts as metabolism [40], signaling [41], somitogenesis [42] and circadian clocks [43]. Two reasons hypothesized for its widespread occurance are that (i) it supresses the size of intrinsic noise [44,45] thereby providing enhanced stability and (ii) it can lead to concentration oscillations or rhythms which are crucial to the control of several aspects of cell physiology [36].

We consider the following prototypical gene network. For convenience, we divide the network into two parts: (i) the set of reactions which describe transcription, translation and degradation, and (ii) the set of reactions which constitute the negative feedback loop. The first part is described by the reactions

| (31) |

The mRNA, M, is produced by transcription from a single gene G, and it is translated into protein P which subsequently is degraded via an enzymatic reaction catalyzed by enzyme E. The mRNA can furthermore decay into an inactive form spontaneously. In addition, we have a negative feedback loop described by the reactions

| (32) |

Note that the gene with two bound proteins is inactive, in the sense that it does not lead to mRNA production. This implies that sudden increases in protein concentration lead to a decrease in mRNA transcription which eventually results in a lowered protein concentration; this is the negative feedback or auto-inhibitory mechanism. The reaction network as given by reaction schemes (31) and (32) is our full network for this example. Note that the first two reactions in reaction scheme (31) are not in reality elementary chemical reactions but they are the simplest accepted forms of modeling the complex processes of transcription and translation and hence it is in this spirit that we include them in our full network description.

Deterministic analysis and coarse-grained network

Model reduction on the macroscopic level proceeds by applying the deterministic QSSA to the REs of the full network (see the Methods section for details). The fast species are the enzyme, E, the enzyme complex, EP, and the gene species in its various non-complexed and complexed forms G, GP and GP2. The slow species are the mRNA, M, and the protein, P. Furthermore, we also impose the limit k2→∞, k1→0 at constant k2k1; this enforces cooperative behavior since the binding of P to G is quite slow but once it occurs the next binding of P to the complex GP is very quick. The resultant reduced REs are given by

| (33) |

where K2=k−1k−2/k1k2, , is the total enzyme concentration and is the total gene concentration. The model reduction process just described is illustrated in Figure 6.

Figure 6.

Full and coarse-grained mechanisms of a gene network. Cartoon illustrating the full and coarse-grained networks for the gene network with a single negative feedback loop. The reduced, coarse-grained network is obtained from the full network under conditions of timescale separation, i.e., transients in the concentrations of all enzyme, enzyme complex, gene and gene complex species decay much faster than transients in the concentrations of mRNA and protein

Stochastic analysis of the coarse-grained network: ssLNA and hLNA methods

We denote ηs,1and ηs,2 as the fluctuations about the concentrations of mRNA and of protein, respectively. The ssLNA leads to reduced Langevin equations of the form

| (34) |

where Γi(t) is the noise contributed by reaction number i and the reactions are numbered according to the order: G→G + M, GP→GP + M, P + G→GP, GP→P + G, P + GP→GP2, GP2→P + GP, M→∅, M→M + P, P + E→EP, EP→P + E, and EP→E. The element Jij denotes the i-jentry of the Jacobian J of the reduced REs (33). Furthermore, the parameters p1 and q are given by

| (35) |

| (36) |

where K3=k−3/k3. Note that the coupled Langevin equations (34) imply that the fluctuations in the mRNA and protein concentrations are affected by noise from all of the 11 constituent reactions of the full network (reaction schemes (31) and (32)) except from those of the reversible reaction P + GP⇌GP2. As shown in the Methods section, the noise from this reaction becomes zero due to the imposition of cooperative behavior in the feedback loop.

The covariance matrix for the fluctuations of the Langevin equations (34) is given by the Lyapunov equation (6) with Jacobian being equal to that of the reduced REs (33) and diffusion matrix Dh replaced by Dss, which is given by

| (37) |

It is also possible to calculate the covariance matrix of the fluctuations of the slow variables using the hLNA (see the Methods section). This is given by a Lyapunov equation (6) with Jacobian being equal to that of the reduced REs (33) and diffusion matrix Dh given by

| (38) |

A comparison of equation (37) and equation (38) shows that the ssLNA and hLNA are generally different except in the limits of p1→0 and q→1. From the Langevin equations (34) we see that setting p1=0 implies ignoring the noise due to the reversible reaction P + G⇌GP, while setting q=1 is equivalent to ignoring the noise from the reversible reaction P + E⇌EP. Hence, as for the previous example of enzyme kinetics, we can state that the hLNA and the heuristic CME upon which it rests, implicitly (and incorrectly) assume that under conditions of timescale separation, the reversible reactions involving the fast species do not contribute to the intrinsic noise in the slow species.

Furthermore, by the comparison of equations (37) and (38), one can also deduce that the heuristic CME provides a statistically correct description when the protein concentration [P] is either very small or very large, in other words the case of very weak or very strong transcriptional repression (and corresponding non-saturated and saturated degrading enzyme conditions).

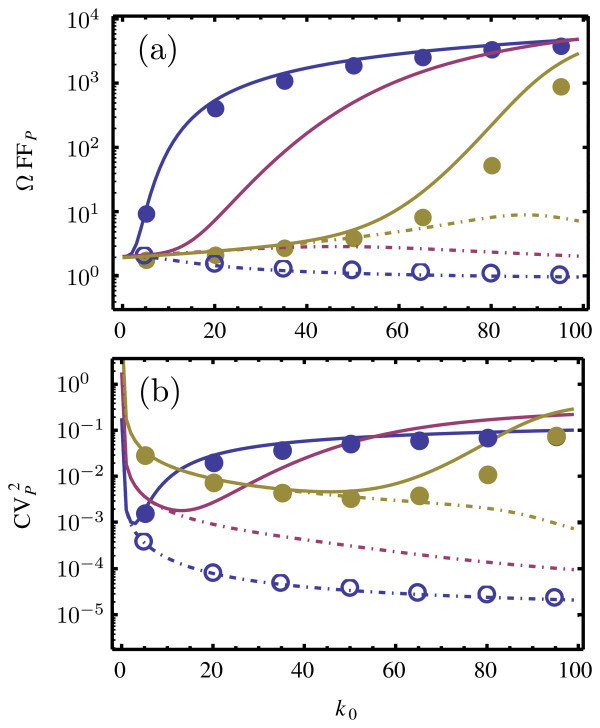

Detailed comparison of the noise statistics from the ssLNA and hLNA

Figure 7 shows the ssLNA and hLNA predictions for the coefficient of variation squared and the Fano factor of the protein fluctuations as a function of the transcription rate k0. These are obtained by solving the two Lyapunov equations mentioned in the previous subsection for the covariance matrix; the variances are then the diagonal elements of this matrix, from which one finally calculates the Fano factors and the coefficients of variation. The values of rate constants are chosen such that we have timescale separation conditions (see Figure 8). From Figure 7 we can see that under such conditions, the ssLNA predictions agree very well with the stochastic simulations of the full network but the hLNA exhibits considerable quantitative and qualitative differences compared to the latter simulations. In particular, note that for k3=1 and k0>50, the predictions of the ssLNA are approximately 3 orders of magnitude larger than those of the hLNA (and of stochastic simulations using the heuristic CME).

Figure 7.

Noise statistics of the gene network. Dependence of the Fano factor (a) and of the coefficient of variation squared (b) of the protein fluctuations on the rate of transcription k0, according to the ssLNA (solid lines) and the hLNA (dashed lines). The noise measures are calculated for three values of the bimolecular constant k3=1(yellow), k3=0.1(purple), k3=0.01(blue). All other parameters are given by , , k1=10−5, k2=100, k−1=k−2=k−3=10, k4=ks=kdM=1. Stochastic simulations of the full networks (solid circles) and of the coarse-grained network (open circles) using the CME and the heuristic CME, respectively, were performed for a volume of Ω=100. Note that at this volume there is one gene and 100 enzyme molecules. Note also that the chosen parameters guarantee timescale separation (validity of the deterministic QSSA) and cooperative behavior in the feedback loop (see Figure 8)

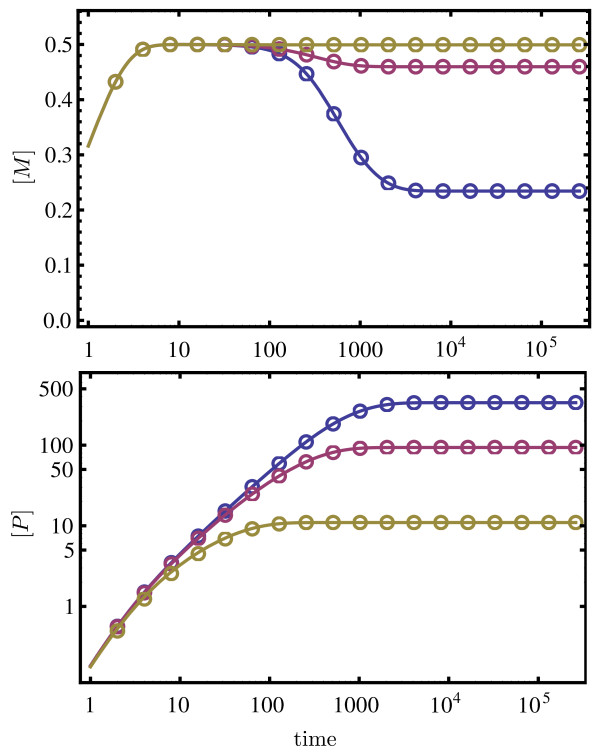

Figure 8.

Validity of the deterministic QSSA for the gene network. Plot of the macroscopic substrate concentrations of mRNA, [M], and protein, [P], versus time, as obtained by numerically solving the deterministic REs of the full network (solid lines) and the reduced REs obtained using the deterministic QSSA (open circles). The color coding and rate constant values are as in Figure 7; the value of k0is 50. The excellent agreement between the two RE solutions, implies timescale separation conditions

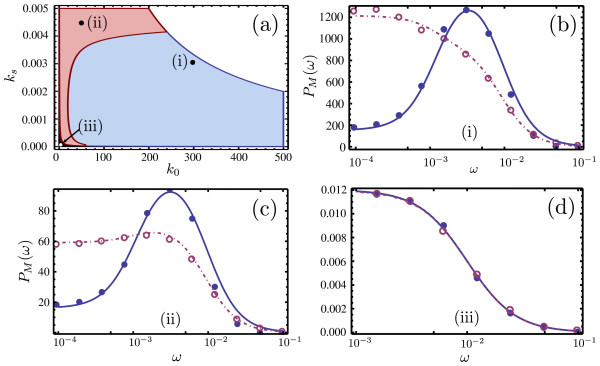

Finally, we investigate the differences between the predictions of the ssLNA and hLNA for noise-induced oscillations in the mRNA concentrations. These are oscillations which are predicted by CME based approaches but not captured by RE approaches. In particular, these noise-induced oscillations occur in regions of parameter space where the REs predict a stable steady-state [46]. Calculation of the power spectra is key to the detection of these noise-induced oscillations: a peak in the spectrum indicates a noise-induced oscillation. For the hLNA this is given by equation (7) with Jacobian being equal to that of the reduced REs, equation (33), and diffusion matrix Dh given by equation (38). For the ssLNA this is given by equation (7) with Jacobian being equal to that of the reduced REs, equation (33), and diffusion matrix Dh replaced by Dss, which is given by equation (37). Since the two diffusion matrices Dh and Dss are not generally equal to each other we expect the spectra calculated according to the ssLNA and hLNA to differ. Indeed we find 3 possible scenarios: both spectra do not have a peak in frequency (no noise-induced oscillations), both spectra have a peak in frequency (noise-induced oscillations) and the most interesting case where the ssLNA spectrum exhibits a peak but the hLNA does not predict one. The results are summarized in Figure 9, where we show the regions of parameter space in which each of these scenarios occur and a comparison of the power spectra as predicted by the hLNA and ssLNA in these regions. Note that in all cases the hLNA (purple dashed lines) agrees with stochastic simulations of the coarse-grained network using the heuristic CME (purple open circles), while the ssLNA (solid blue lines) agrees with stochastic simulations of the full network (blue solid circles) under conditions of timescale separation. The hLNA and ssLNA spectra are only in good quantitative agreement in a very small region of parameter space (shown in black in Figure 9a), where both do predict noise-induced oscillations.

Figure 9.

Noise-induced oscillations in the gene network. Comparison of the predictions of noise-induced oscillations in the mRNA concentrations by ssLNA and hLNA methods. Panel (a) shows a stochastic bifurcation diagram depicting the regions in the translation rate (ks) versus transcription rate (k0) parameter space where both methods predict no oscillations (black), both predict oscillations (red) and only the ssLNA correctly predicts an oscillation (blue). There is no steady-state in the white region. Panels (b), (c) and (d) show spectra at 3 points in the blue, red and black regions of the bifurcation plot in (a) (these points are marked by roman numbers). The solid and dashed lines show the predictions of the ssLNA and the hLNA respectively, while the dots and circles show the results of stochastic simulations of the full and coarse-grained network using the CME and the heuristic CME, respectively. The parameters are given by Ω=1000, , and kdM=0.01, k1=0.001, k−1=100, k2=1000, k−2=1, k−3=10, k3=0.1, k4=10. These parameters guarantee timescale separation (validity of the deterministic QSSA) and cooperative behavior in the feedback loop. Note that the hLNA spectrum in (b) and (c) is scaled up 5000 and 1000 times, respectively

We emphasize that the main message brought by our analysis is that there are significantly large regions of parameter space (blue region in Figure 9a), where the hLNA (and the heuristic CME) does not predict noise-induced oscillations but such oscillations are obtained from stochastic simulations of the full network as well as being captured by the ssLNA.

Qualitative discrepancies in the prediction of noise-induced oscillations arise because the hLNA does not correctly take into account the fluctuations stemming from the rate limiting step of the cooperative binding mechanism. The latter involves the slow binding reaction between a protein molecule P and a gene G leading to a complex GP. The reason for this is the hLNA’s tacit assumption that the fast species are not involved in slow reactions. This rate limiting reaction is at the heart of the negative feedback loop that is responsible for concentration oscillations in many biological networks such as circadian clocks [36] and hence why we speculate that the hLNA misses the occurrence of noise-induced oscillations in certain regions of parameter space.

Discussion and conclusion

Concluding, in this article we have rigorously derived in closed-form, linear Langevin equations which describe the noise statistics of the fluctuations about the deterministic concentrations as predicted by the reduced REs obtained from the deterministic QSSA. Equivalently, the ssLNA, as the method was called, is the statistically correct description of biochemical networks under conditions of timescale separation and sufficiently large molecule numbers. We note that our method provides an accurate means of performing stochastic simulation in such conditions. This is particularly relevant since it has been proven that there is generally no reduced CME description in such cases [8]. Another advantage of the ssLNA is that it enables quick computation of noise statistics through the solution of a set of simultaneous, deterministic linear algebraic equations. By applying the ssLNA to two biologically relevant networks, we showed that this procedure can lead to particularly simple and compact expressions for the noise statistics, which are in very good numerical agreement with stochastic simulations of the CME of the full network under the above conditions. This is in contrast to the heuristic CME, which generally performed with poor accuracy and in some instances even missed the prediction of noise-induced oscillations.

The limitations of the ssLNA are precisely those of the conventional LNA on which it is based. Namely, if the system is composed of at least one bimolecular reaction, then it is valid for large enough molecule numbers (or, equivalently, large volumes) and provided the biochemical network is monostable. If the system is purely composed of first-order reactions and if one is only interested in variance and power spectra, then the only requirement is that of monostability. This is since in such a case it is well known that the first and second moments are exactly given by the LNA. For monostable systems with bimolecular reactions, the finite-volume corrections to the LNA can be considerable when the network has implicit conservation laws, when bursty phenomena are at play and when steady-states are characterized by few tens or hundreds of molecules [24,47-49]. These problems probably become exacerbated when the network is bistable or possesses absorbing states [50]. Hence, it is clear that although the ssLNA presented in this article is valid for a considerable number of biologically interesting cases, it cannot be homogeneously applied to all intracellular reaction networks of interest. These require the development of methods beyond those presented in this article and hence present an interesting research challenge for the future.

A necessary and sufficient condition for timescale separation is that the timescales governing the decay of the transients in the average concentrations are well separated. Fast species are those whose transients decay on fast timescales while the slow species are those whose transients decay on slow timescales. At the microscopic level, there are several different scenarios which can lead to timescale separation. Grouping chemical reactions as fast or slow according to the relative size of their associated timescales, Pahlajani et al.[51] obtain timescale separation by defining fast species as those which are involved in fast reactions only and slow species as those involved in slow reactions only. Zeron and Santillan [52] use a similar but less restrictive approach whereby the fast species are involved in fast reactions only and the slow species can participate in both fast and slow reactions. Another method is that due to Cao et al.[53] who define slow species as those involved in slow reactions only and fast species as those participating in at least one fast reaction and any number of slow reactions. While the three aforementioned scenarios will lead to timescale separation, it must be emphasized that they only constitute a subset of the possible scenarios leading to such conditions. The derivation behind the ssLNA is not based on any particular microscopic scenario, rather it simply requires that the timescales of the transients in the average macroscopic concentrations are well separated. Hence it follows that in the limit of large molecule numbers, the methods developed in [51-53] cover only a sub-space of the parameter space over which timescale separation is valid. In the Methods section we indeed show that Pahlajani’s approach [51] leads to a reduced linear Langevin equation which is a special case of the ssLNA, the case where the matrix B in equation (10) can be neglected. The approaches of Zeron and Santillan [52] and of Cao et al.[53] lead to a reduced CME description. As we have shown in the Results section, under conditions of timescale separation and for small intrinsic noise, there always exists a reduced linear Langevin description of monostable stochastic reaction networks (the ssLNA) but there is generally not a physically meaningful reduced master equation description. The latter is only obtained if one imposes stronger conditions.

These results are in line with those of Mastny et al.[54] which show that for the Michaelis-Menten reaction without substrate input, the sQSPA method, a rigorous singular-perturbation approach, leads to a reduced master equation whenever the free enzyme or complex concentrations are very small (see Table II of Ref. [54]). This equation has the same form as the heuristic CME. This implies that for such conditions the error in the predictions of the heuristic CME should be zero, a result which is reproduced by the ssLNA (see Application I in the Results section). However, note that though these concentration conditions can be compatible with the deterministic QSSA they are not synonymous with it. Generally, the sQSPA methods do not lead to a reduced stochastic description consistent with the deterministic QSSA over the whole parameter space, whereas the ssLNA does, albeit within the constraints that molecule numbers should not be too small and that the network is monostable.

Finally we consider the approach of Shahrezaei and Swain [55], who derived the probability distribution for a linear two-stage model of gene expression under conditions of timescale separation. Their method rests on an exact solution of the generating function equation corresponding to the CME in the limit that the protein lifetime is much greater than that of the mRNA. In the Methods section we show that the ssLNA applied to their example leads to the same variance as obtained from their reduced probability distribution. The upside of their method over the ssLNA is that they obtain the full probability distribution valid for all molecule numbers. The downside of their method is that the generating function equation can only be solved exactly for networks composed of first-order processes (as in the gene example presented by Shahrezaei and Swain) or very simple bimolecular reactions [19] and hence the method has a restricted range of applicability compared to the ssLNA.

While the stochastic simulation algorithm explicitly simulates every individual reaction event, the Langevin approach yields approximate stochastic differential equations for the molecular populations. This is computationally advantageous whenever the reactant populations are quite large [5]. This reasoning can be deduced from the relationship between the propensities and the microscopic rate functions as given by . It is well known that in the large population number limit, the vector is approximately equal to the vector of macroscopic concentrations and hence the magnitude of the propensities increases with the reaction volume or equivalently with molecule numbers. In particular, this implies that the time between consecutive reaction events, given by where r∈(0,1) is a uniform random number, decreases with increasing reaction volume. This means that the time spent by the stochastic simulation algorithm increases with increasing volume because more reaction events have to be resolved within the same time window. Given this reasoning we can compare the discussed methods in terms of speed and accuracy. The computation time of the Langevin methods, hLNA and ssLNA, is independent of the volume and hence if the molecule numbers are not too small, both methods are much quicker than simulating any reduced CME of the coarse-grained network or the CME of the full network. However the ssLNA enjoys the additional advantage that under conditions of timescale separation, it is as accurate as the CME of the full network. The same argument does not generally hold for the hLNA.

We emphasize that besides deriving the ssLNA method, in this paper we have used it to determine the range of validity of the conventional heuristic CME approach and the size of errors in its predictions. To our knowledge, this is the first study which attempts to answer these important and timely questions via a rigorous, systematic theoretical approach.

Our main message is that, the “conventional wisdom” that the heuristic CME is generally a good approximation to the CME of the full network under conditions of timescale separation is incorrect, if one is interested in intrinsic noise statistics and the prediction of noise-induced oscillations.

Methods

Derivation of the ssLNA

The linear FPE describing the full network is given by equation (8). It is well known that with every FPE one can associate a set of Langevin equations (stochastic differential equations) [37]. Note that the Langevin and FPE formalisms are exactly equivalent but as we show now, the Langevin description is ideal for deriving a reduced description in timescale separation conditions.

The set of coupled Langevin equations equivalent to equation (8) are

| (39) |

| (40) |

Note that the time-dependence of the matrices in the above equations comes from that of the macroscopic concentrations of fast and slow species. Now say that we impose timescale separation conditions, i.e., the correlation time of fast fluctuations, τf, is much smaller than the correlation time of slow fluctuations, τs. We wish to obtain a reduced description for the fast fluctuations, i.e., for equation (39), on timescales larger than τf but much smaller than τs. On such timescales, transients in the macroscopic concentrations of fast species have decayed, a quasi-steady-state is achieved and by the deterministic QSSA, we know that the fast-species concentrations can be expressed in terms of those of the slow-species concentrations. Now the latter concentrations vary very slowly over timescales much smaller than τsimplying that for all intents and purposes they can be considered constant. Hence the matrices in equation (39) can be considered time-independent. It then follows that the solution to the latter equation is approximately given by

| (41) |

where we have put . In the case when the correlation time is very short the first term can be neglected and the lower limit of the integration in the other terms can be extended to t0→−∞. To make further analytical progress, we switch to Fourier space. First we derive the following result which will prove very useful. Given a vector , we have

| (42) |

| (43) |

| (44) |

where denotes the Fourier transform of and I is the identity matrix. It then follows that the Fourier transform of equation (41) is given by

| (45) |

Since we are interested in a description on timescales larger than τf, i.e., for fluctuations of frequency , then the above equation further reduces to

| (46) |

Taking the inverse Fourier transform of the above equation and substituting in equation (40) we obtain

| (47) |

This Langevin equation is the ssLNA: it is an effective stochastic description of the intrinsic noise in the slow variables in timescale separation conditions. Using standard methods [37] it can be shown that the FPE which is equivalent to this effective Langevin equation is equation (9). The ssLNA can also be derived more rigorously using the projection operator formalism as shown in [57].

A note on the reduced Jacobian of the ssLNA

Here we show that the reduced Jacobian in the ssLNA equation (47) is exactly the Jacobian of the reduced REs which arise from applying the deterministic QSSA on the REs of the full network. One starts by considering a small deviation from the deterministic steady state on the REs of the full network. Using the partitioned Jacobian of the form as in equation (11), we can then write

| (48) |

with slow and fast perturbations and , respectively. Applying the deterministic QSSA, i.e., setting the time derivative of fast perturbations to zero, one finds

| (49) |

Hence the Jacobian in the ssLNA equations (9) and (12) is the same as the Jacobian of the reduced REs.

Note that equations (48) are formally the same as obtained by taking the average of the LNA equations (39) and (40) (this general agreement between the LNA and linear stability analysis is discussed in [21]). This implies that the timescales of the fast and slow variables in the ssLNA (and hence of the CME under timescale separation conditions and in the macroscopic limit) is the same as the timescales obtained from the REs.

Details of the derivations for the two-subunit enzyme network

The ssLNA recipe: Langevin equation and noise statistics

We here show the details of the ssLNA method as applied to the network discussed in Application I in the Results section. The first step of the recipe is to cast the reaction scheme of the full network (14) into the form of the general reaction scheme (1). This is done by setting X1=S, X2=EE, X3=EES and X4=SEES and by labeling the input reaction as reaction 1, the binding of S to EE as reaction 2, the decay of EES to S and EE as reaction 3, the decay of EES to EE and P as reaction 4, the binding of S to EES as reaction 5, the decay of SEES into EES and S as reaction 6 and finally the decay of SEES into EES and P as reaction 7. Note that the reaction number labeling is arbitrary but the labeling of the species is not: according to the convention set out in the Introduction, we have to choose the substrate as the first species because it is the slow variable, while the rest of the species are the fast ones. Given the chosen order of the species and the reactions, the stoichiometric matrix and the macroscopic rate function vector (see definitions in the Background section and the description of the ssLNA in the Results section) are given by

|

(50) |

| (51) |

Note that the row number of the stoichiometric matrix reflects the species number, while the column number reflects the reaction number. The order of the entries in the macroscopic rate function vector reflects the reaction number.

The enzyme can only be in one of three forms, EE, EES and SEES and hence we have the conservation law, , where [EET] is the total enzyme concentration, which is a time-independent constant. Hence, we are free to remove information from the stoichiometric matrix about one of the enzyme forms; we choose to remove information about EE, and therefore, we eliminate the second row from the stoichiometric matrix, leading to

|

(52) |

Note that we have also partitioned the stoichiometric matrix into two sub-matrices as required by our method (see prescription for ssLNA in the Results section). Now we can use this matrix together with the macroscopic rate function vector to obtain the elements of the Jacobian matrix of the REs of the full network

|

(53) |

where we also partitioned the matrix into 4 sub-matrices as required by our formulation of the ssLNA in the Results section. Now we can use the two sub-matrices of the stoichiometric matrix and the four sub-matrices of the Jacobian to calculate the matrix A - B (as given by the two equations for A and B after equation (10)), which yields

| (54) |

where q1and q2 are as defined in the main text by equations (19) and (20). Furthermore, the Jacobian of the reduced RE, equation (15) in the main text, is given by

| (55) |

Finally, the Langevin equation, equation (18), is obtained by substituting equations (55) and (54) in equation (12). The equation for the variance of the substrate fluctuations, equation (21), is obtained by substituting equation (54) in equation (10) to obtain the new diffusion scalar Dss and then substituting the latter together with the new Jacobian equation (55) in the Lyapunov equation, equation (6), with Dh replaced by Dss. Note that in this example because we have only one slow species, the Lyapunov equation is not a matrix equation but simply a single linear algebraic equation for the variance. For the same reason we have a diffusion scalar rather than a diffusion matrix. The power spectrum can be obtained by substituting the new Jacobian and diffusion scalar in equation (7) (with Dh replaced by Dss), leading to

| (56) |

The power decays monotonically with frequency, which implies no noise-induced oscillation; this statement is generally true for all networks (full or coarse-grained) which have just one slow species.

The hLNA recipe: Langevin equation and noise statistics

Here we apply the LNA to the heuristic CME according to the method described in the Results section. The coarse-grained network is given by reaction scheme (17); an elementary reaction for the substrate input process and a non-elementary first-order reaction for substrate catalysis. The stoichiometric matrix and macroscopic rate function vector are given by

| (57) |

| (58) |

where k′ is defined in the main text, equation (16). The diffusion scalar Dhof the linear FPE approximating the heuristic master equation for this process can be constructed from the stoichiometric and macroscopic rate function matrices using equation (5), which leads to . Note that here we have a diffusion scalar rather than a matrix because we have only one slow variable. Finally, from equations (6) and (7), the variance and the power spectrum are obtained from the diffusion scalar and the Jacobian, J, of the effective rate equation, equation (15), leading to

| (59) |

| (60) |

In the Results section, it was shown that a reduced CME description becomes possible whenever the effective stoichiometric matrix S’ as given by equation (13) evaluates to integer values. For the reaction scheme under consideration, it can be shown that , where q1and q2 are given by equations (19) and (20). The latter two quantities are generally real values and time-dependent and hence a reduced CME description is not generally possible. A simple choice which makes S’ integer-valued is the null choice, q1=q2=0, and indeed it is for these values that in the main text we show that the hLNA (and hence the heuristic CME) is a valid description of stochastic kinetics under timescale separation conditions.

Details of the derivations for the gene network example

Reduced rate equations

The fast species of the genetic network with negative feedback given in the main text are given by the gene species G, GP, GP2 and the enzyme species E and EP. There are two conservation laws, for the gene species and for the enzyme species and hence we need to apply the deterministic QSSA only to two of the gene species and to one of the enzyme species. The QSSA applied to the latter is the standard Briggs-Haldane approximation, which is well known [38], and hence here we restrict our presentation to the QSSA on the negative feedback loop. The macroscopic rate equations for the gene species GP and GP2 read

| (61) |

Substituting the gene conservation law, setting the time derivatives to zero and solving these two equations simultaneously, we obtain the quasi-steady-state concentrations of the three gene species

| (62) |

where K1=k−1/k1, K2=k−2/k2 and K2=K1K2. Since only the ternary complex (one with 3 molecules, i.e., GP2) does not lead to mRNA production, the active gene fraction is given by

| (63) |

where in the last step we have drawn the limit of cooperative binding K2→0 at constant K (or equivalently k2→∞, k1→0 at constant k1k2). It follows that the REs for the slow variables of mRNA and protein concentrations are then given by

| (64) |

where is the Michaelis-Menten constant of the enzyme which degrades the protein species.

Derivation of the ssLNA results

We cast the species in the full network (as given by reaction schemes (31) and (32)) into the form required by the convention set in the Introduction. We denote the slow species by X1=M and X2=P and the fast species by X3=GP, X4=GP2 and X5=EP. Note that the form of the gene with no bound protein (G) as well as the free enzyme species (E) do not appear explicitly in our description due to the two inherent conservation laws (same as shown in the previous section for the enzyme example except that here we immediately remove the extra species). The eleven constituent reactions are numbered in the following order: G→G + M, GP→GP + M, P + G→GP, GP→P + G, P + GP→GP2, GP2→P + GP, M→∅, M→M + P, P + E→EP, EP→P + E, and EP→E.

The stoichiometric matrix and the macroscopic rate function vector are constructed as

|

(65) |

| (66) |

Note that the columns of S reflect the reaction number, while the rows reflect the species number. Similarly, the reaction number is reflected in the entries of the macroscopic rate function vector .

From S and we can obtain the Jacobian matrix, , of the REs of the full network

|

(67) |

where the individual submatrices read explicitly

| (68) |

| (69) |

| (70) |

| (71) |

Using these Jacobian submatrices, the stoichiometric submatrices given in equation (65) and the diagonal matrix F whose elements are those of the macroscopic rate function vector , as given in equation (66), we obtain (using the two equations for A and B after equation (10)) the matrix

| (72) |

where

| (73) |

| (74) |

| (75) |

Note that K3=k−3/k3. The Jacobian can be obtained from the reduced REs, equation (64), and is given by

| (76) |

Note that we have drawn the limit of cooperative binding on p1, p2, q and J.

Finally the Langevin equation is obtained by substituting equations (76) and (72) in equation (12). To obtain the equation for the variance of the mRNA and protein fluctuations, one must first determine the diffusion matrix Dss. Using equation (72) and the definition (10), it can be readily shown that the diffusion matrix takes the diagonal form