Abstract

Body-machine interfaces establish a way to interact with a variety of devices, allowing their users to extend the limits of their performance. Recent advances in this field, ranging from computer-interfaces to bionic limbs, have had important consequences for people with movement disorders. In this article, we provide an overview of the basic concepts underlying the body-machine interface with special emphasis on their use for rehabilitation and for operating assistive devices. We outline the steps involved in building such an interface and we highlight the critical role of body-machine interfaces in addressing theoretical issues in motor control as well as their utility in movement rehabilitation.

Keywords: Brain-machine interface, Dimensionality reduction, Rehabilitation, Wheelchair, Robotics, Redundancy

Introduction

From the concept of cyborg that was introduced in the late 1950s (Clynes & Kline, 1960; Wiener, 1950), the idea of integrating humans and machines has recurred in science fiction and popular culture. In the past two decades, there has been rapid progress in the development of “body-machine interfaces”1 that provide a link between the human and an external machine. These interfaces are capable both of extending human capabilities (e.g., controlling computers) and of replacing them (e.g., bionic limbs). The general purpose of a body-machine interface is to enable the user to retain a complete or shared control over the device through signals derived from the user’s body. This makes it distinct from systems with autonomous control, where the machine attempts to retain full command, except for occasional external interventions from the user.

In this review, we provide an overview of body-machine interfaces that are mainly based on movements, with specific applications to assistive devices and rehabilitation. Our novel approach to the task of interfacing the human body with external devices is based on two key aspects:

(i) The possibility to take advantage of new programmable maps between body motions and their functional and sensory consequences for investigating the process of motor learning, and

(ii) The development of a new clinical approach to disability that combines the facilitation of the access to assistive devices, such as powered wheelchairs, with the development of functional exercises for the recovery of motor functions.

Regarding the first aspect, a body-machine interface offers the opportunity to present subjects with tasks that they have never experienced before, thus allowing us to explore learning from a condition that is relatively free from biases due to prior experience. While the interface establishes a map from body-derived signals to a task space, the learner must effectively form some kind of inverse (or generalized inverse) of this map in order to translate a goal in task space into body signals that are appropriate for reaching that goal. The transformation from neural signals to device commands is at the core of research on brain-machine interfaces. However, while brain-machine interfaces effectively bypass the motor system, the body-machine interface takes advantage of the motor skills that are still available to the user and has the potential to enhance these skills through their consistent use.

This utility of the body-machine interface in exploiting and enhancing motor skills plays an important role in the second aspect of body-machine interfaces, which relates to rehabilitation. For example, even subjects with severe spinal cord injury (C3 or C4 level of injury) often retain a significant level of mobility of their shoulder and neck. This residual ability can be used for exploring the external world and interacting with it. Therefore, in this situation, a body-machine interface not only aims at providing the control over an external device, but could also serve as a tool for rehabilitation by inducing the user to frequently perform body movements that acquire a new functional meaning through the interface.

A brief taxonomy of body-machine interfaces

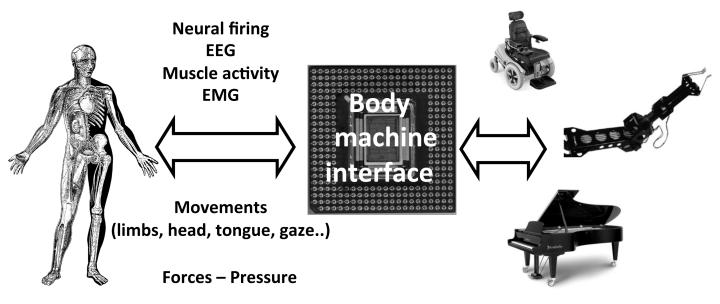

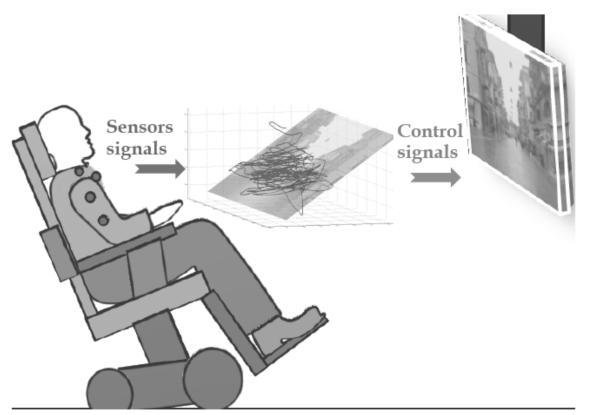

The scheme of a typical body-machine interface is shown in Figure 1. The first element is the body, which in this context refers to the human or the animal from which signals are obtained to operate an external device. These signals can be derived from a variety of sources. They may be extracted from body motions (through goniometers, accelerometers or pressure switches), or they may measure some underlying neurophysiological activity (muscle activity, EEG or neuronal firing). The second element of a body-machine interface system, the machine, refers to the device or instrument to be controlled. This may be a device of common use, such as an automobile or a musical instrument, or a tool for people with movement disorders, such as a bionic limb or a powered wheelchair. The third and key element is the interface that links the body and the machine. The interface transforms the body signals into commands for controlling the device. In principle, the link may also operate in the reverse direction, by encoding the state of the device (or the environment) into stimuli to be delivered to the user (e.g., cochlear implants). However, the inclusion of sensory interfaces in body-machine interfaces appears to be a greater challenge than the decoding of body signals into commands to the machine. Nevertheless, the possibility to augment the channels for feedback information is of critical importance and the area of sensory substitution is an active field of research (Amedi, et al., 2007; Bach-y-Rita, 2004; Bach-y-Rita, Collins, Saunders, White, & Scadden, 1969; Kaczmarek, Webster, Bach-y-Rita, & Tompkins, 1991; Tyler, Danilov, & Bach, 2003).

Figure 1.

Schematic of a typical body-machine interface.

Many of the existing interfaces that humans interact with on a daily basis (like computer keyboards, joysticks, video game controllers or musical instruments) can be termed body-machine interfaces. However, a characteristic feature of the most recent body-machine interface advances has been the development of “learning interfaces”, where human and machine adapt to each other. This is a dual learning paradigm, in which there is constant evolution of both systems, although the learning of the two systems (human and machine) typically operates at different timescales (more details are in the section on “Additional Design Choices in Building a Map”).

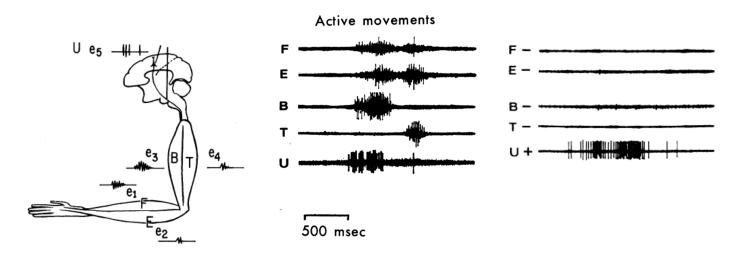

Early body-machine interfaces were used as a means for understanding the basic mechanisms through which the nervous system controls movement. Litvintsev et al. (1968) used electromyography (EMG) signals from rats as arguments to a non-linear function, whose value was then fed back as a pain stimulus to the rat. The authors found that the rats could learn to control the activity of two muscles by employing search strategies that minimized the pain experienced. Shortly afterwards, Fetz and Finocchio (1971) published the first experiment in which monkeys were trained by operant conditioning to control the activity of individual cortical neurons (Figure 2). Similar studies were performed in humans using goniometers and visual feedback (Krinskii & Shik, 1964; McDonald, Oliver, & Newell, 1995), showing how body-machine interfaces could provide a useful tool to study important problems in motor control such as the problem of motor redundancy, i.e., how the nervous system controls a system with multiple degrees of freedom.

Figure 2.

Operant conditioning of a motor cortical neuron. Left: Schematic diagram of the monkey, showing the location of arm muscles (B: biceps, T: Triceps, F: Flexor Carpi Radialis, E: Extensor Carpi Radialis) and the recorded motor cortical cell (U). Center: before the operant conditioning, the neural activity in U precedes the activation of the muscles during an active flexion of the elbow. Right: after training the monkey learns to activate the cortical cell without any muscle activation. (From Fetz and Finocchio, 1971)

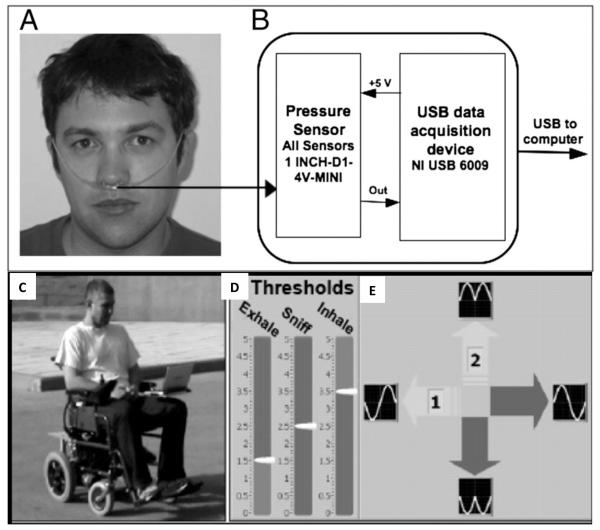

From a more practical perspective, most recent work on body-machine interfaces involves learning to control a cursor on a computer display. This approach typically offers great flexibility: once one learns to move a cursor on a screen, the same control skill can be used to control other devices (e.g., typing on a keyboard or driving a wheelchair) by simply altering the underlying software interface over which the cursor is moving. Several approaches to cursor control have been developed that are based on various body movements. These include EMG control (Barreto, Scargle, & Adjouadi, 2000), eye movements, including electro-oculography (EOG) as well as gaze tracking (Jacob, 1991), head control devices (see the Headmouse™, Origin Instruments, Grand Prairie, TX), and tongue pointing devices (Salem & Zhai, 1997). Apart from cursor control, there have also been approaches that attempt to directly interface with assistive devices. These include multiple-DOF robot arms (Ferreira, et al., 2008; Kuiken, et al., 2009; Serruya, Hatsopoulos, Paninski, Fellows, & Donoghue, 2002), ankle/knee orthoses (Horn, 1972; Popovic & Schwirtlich, 1988) and wheelchair control (Barea, Boquete, Mazo, & Lopez, 2002; Craig & Nguyen, 2005; Plotkin, et al., 2010) (Figure 3).

Figure 3.

Driving a wheelchair with sniffs. (A) The nasal cannula used to carry nasal pressure to the sensor. (B) The sniff controller. (C) A healthy participant driving the sniff-controlled wheelchair. The user interface: (D) the threshold settings for sniff-in and sniff-out activation levels; (E) the current direction. (Plotkin, et al., 2010)

A particular type of body-machine interface that has received a lot of attention is the brain-machine interface, in which signals from the brain, obtained either invasively through electrode recordings or non-invasively by electroencephalography (EEG), are directly interfaced to the machine, thereby completely bypassing movements of the body (for detailed reviews on this topic, see Hatsopoulos & Donoghue, 2009; Lebedev & Nicolelis, 2006; Mak & Wolpaw, 2009; Mussa-Ivaldi & Miller, 2003; Wolpaw, 2004, 2007; Wolpaw, et al., 2000; Wolpaw, Birbaumer, McFarland, Pfurtscheller, & Vaughan, 2002). Brain-machine interfaces are especially relevant to movement disorders such as severe paralysis, ALS or locked-in syndrome, where there is little or no residual movement control ability. Aside from such extreme cases, it might be preferable to develop and use body-machine interfaces that rely on movements for individuals with movement disorders because (i) they do not carry the increased risk of surgical complications that is currently present in invasive brain-machine interfaces, and (ii) the bandwidth of body motions is currently at least an order of magnitude higher (~5 bits/s) (Felton, Radwin, Wilson, & Williams, 2009) than the bandwidth of decoded neural signals (~0.05 to 0.5 bits/s) (Townsend, et al., 2010; Wolpaw, et al., 2000). Since brain-machine interfaces have been extensively reviewed elsewhere (Mak & Wolpaw, 2009; Wolpaw, et al., 2002), here we focus on interfaces based on the reorganization of body motions.

Exploiting Features of the Motor Control System

The body-machine interface typically exploits two important and related features of the motor control system: redundancy and plasticity. The term redundancy refers to the fact that the human body (even after severe injury) generally has many more degrees of freedom than the few command signals needed to control a device such as a wheelchair or a cursor on a computer monitor. Plasticity, or reorganization, refers to the fact that the body has to assign new functions to the movement abilities that remain available.

a) Redundancy and flexibility

Redundancy is a key resource of the motor control system. It refers to the possibility to perform an action in a variety of “equivalent” ways. For example, when we reach for an object with our hand, we may use multiple configurations of the arm and multiple patterns of muscle activities. Several hypotheses have been formulated to explain how the Central Nervous System (CNS) is capable of finding the consistent and stable movement patterns that are characteristic of skilled performance. Although the abundance of such patterns for the same task presents a computational challenge for the CNS to solve, redundancy offers the flexibility that is needed to achieve a given goal when some of the options become unfeasible. This is critical after traumatic events such as stroke where, despite the loss of certain body movements, the survivor is still able to find alternative ways of carrying out daily activities through compensatory strategies (Cirstea & Levin, 2000).

Redundancy offers two important resources for body-machine interfaces: (i) the possibility to explore an overabundant number of signals for extracting the best sub-set of combinations to be used for interfacing with the external world, and (ii) the possibility to find new natural subsets of solutions, either when the user’s ability decreases with the progression of a disorder, or when it increases through the benefits of a treatment or as a positive consequence of practice and motor learning.

b) Reorganization and plasticity

The exploitation of redundancy also requires a reorganization (or “remapping”) of the residual ability to control body motions. In a body-machine interface, the signals collected from the body are used for achieving new functional goals. When subjects use movements of the eye (Barea, et al., 2002; Philips, Catellier, Barrett, & Wright, 2007), head (Craig & Nguyen, 2005), shoulders (Casadio, et al., 2011; Casadio, et al., 2010) or tongue (Huo, Wang, & Ghovanloo, 2008) for driving a wheelchair or piloting a robotic arm, they associate these parts of the body with functions that before the injury were performed by other parts. One remarkable feature in this reorganization process is that the assistive devices are no longer treated as external objects appended to the body, but rather they almost become an integrated, essential part of the body (Seymour, 1998). Understanding the extent of such plasticity in the brain, especially after injury, is an important consideration when designing body-machine interfaces for rehabilitation and assistive purposes.

Steps in Building a Body-Machine Interface

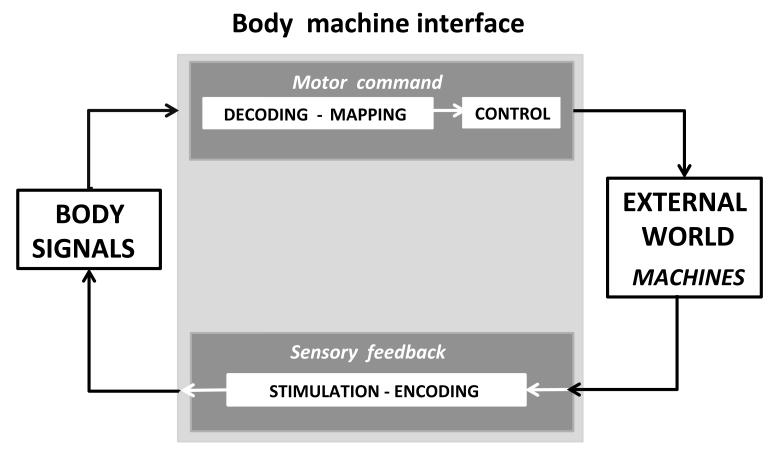

Figure 4 illustrates schematically the steps involved in building a body-machine interface. To illustrate these steps, we make reference to a body-machine interface that we have developed recently. The interface consists of a virtual environment, in which various actions (such as driving a virtual wheelchair or playing a video game) are performed by controlled motions of the shoulder complex. Subjects with high-level spinal cord injury used this interface to control a virtual wheelchair and to perform other operations, such as playing a computer game (Casadio, et al., 2011; Casadio, et al., 2010).

Figure 4.

A schematic of the steps involved in building a body-machine interface.

1. Acquisition of data signals

The first step in building a body-machine interface is to acquire signals from the body. As mentioned in the introduction, a wide array of signals are available for this purpose, including those that are directly involved with the production of movement and forces (such as kinematics, dynamics, EMG, and pressure data), and those that are precursors to movement (such as EEG and neuronal activity).

In our interface to control a virtual wheelchair, we derived the control signals from shoulder motions. We placed active markers with an infrared light source on the subjects’ upper body (two on each arm). Each marker was monitored by an infrared camera. The motion capture software extracted the two coordinates of the marker’s centroid in image space. Alternatively, it is possible to capture the upper body motions with accelerometers and gyroscopes.

2. Transforming body signals into the control space

The second step in the development of the body-machine interface is to map the acquired body signals onto the control space, i.e., the space defined by the commands to the external device. We consider two alternative approaches:

Pattern Recognition

In pattern recognition, the acquired body signals are represented as a multi-dimensional “feature vector”. Depending on the values of the different features, the vector is classified into one of several patterns according to the method used. For example, consider the case of two body signals (e.g., EMGs from two muscles) where the feature vector consists of the amplitude of the signals (Fetz & Finocchio, 1971). This feature vector spans a 2-D space, which may then be divided into four quadrants using a threshold on each of the two signals. Depending on the quadrant in which the feature vector falls, a different operation of the machine is triggered, e.g., making the cursor move left, right, forward or back. In practice, more sophisticated classification techniques (e.g., Linear Discriminant Analysis, Bayesian classifiers or neural networks) (Duda & Hart, 1973; Gish, 1990; Wan, 1990) are used to classify multidimensional signals into several distinct patterns. This approach has been successfully applied for controlling bionic limbs (Kuiken, et al., 2009; Saridis & Gootee, 1982; Zecca, Micera, Carrozza, & Dario, 2002). For example, EMG signals from multiple muscles are first classified as belonging to one of several distinct grasp patterns. Subsequently, the interface drives the bionic arm into the appropriate grasp configurations, enabling the user to interact with objects in the environment.
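The two-signal quadrant example above can be sketched in a few lines. This is only an illustration: the threshold values, the command names and the assignment of quadrants to commands are assumptions, not taken from any of the cited studies.

```python
# Hypothetical assignment of the four quadrants to cursor commands.
# Keys are (signal 1 above threshold, signal 2 above threshold).
COMMANDS = {
    (False, False): "back",
    (False, True): "left",
    (True, False): "right",
    (True, True): "forward",
}


def classify_quadrant(amp1: float, amp2: float,
                      thr1: float = 0.5, thr2: float = 0.5) -> str:
    """Map a 2-D feature vector of signal amplitudes to one of four commands.

    thr1 and thr2 are illustrative thresholds on normalized amplitudes.
    """
    return COMMANDS[(amp1 > thr1, amp2 > thr2)]


# Both signals strongly active: the feature vector falls in the
# upper-right quadrant.
command = classify_quadrant(0.9, 0.8)
```

A classifier of this kind produces discrete commands only; the continuous maps discussed next produce graded control signals instead.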

Building a continuous map

A second approach to transforming body movements into control signals is through the definition of a continuous map. Several different methods share the same basic mechanism: a map, W, from the N-dimensional vector of body signal coordinates, $x$, to the M-dimensional control vector, $u$, with M≤N:

$$u = W(x)$$

The function W may be linear or non-linear (Table 1). The full dimensional representation of the embedded signals can be recovered via an inverse transformation if M=N (and W is not singular) or via a right inverse transformation if M<N:

$$\hat{x} = W^{-1}(u) \quad (M = N), \qquad \hat{x} = W^{+}(u) \quad (M < N)$$

where $W^{+}$ denotes a right inverse of W, i.e., $W(W^{+}(u)) = u$.

In the virtual wheelchair example, we take the latter approach and use a linear map to transform the acquired signals. The four cameras together provide an 8-dimensional body-signal vector (each camera obtaining the two-dimensional position of a marker in its image). The commands to the wheelchair are two-dimensional controls for the linear speed (v) and the angular rotational speed (ω) of the wheelchair. Therefore the 8-dimensional body-signal space is effectively mapped to a 2-dimensional control space. This implies that there are redundant solutions: multiple body signals producing the same device-control signal. The “map” between the subject’s actions and the behavior of the machine is established by a calibration procedure.
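The redundancy implied by an 8-to-2 linear map can be made concrete with a small numerical sketch. The matrix below is random and purely illustrative (a real map would come from calibration), and the Moore-Penrose pseudoinverse is used here as one possible choice of right inverse.

```python
import numpy as np

rng = np.random.default_rng(0)

N, M = 8, 2                      # body-signal and control dimensions
W = rng.standard_normal((M, N))  # hypothetical map; random for illustration

x = rng.standard_normal(N)       # one 8-D body-signal sample
u = W @ x                        # 2-D command: (v, omega)

# For a full-row-rank W, the pseudoinverse is a right inverse:
# W @ W_pinv equals the M x M identity.
W_pinv = np.linalg.pinv(W)
x_min = W_pinv @ u               # minimum-norm body signal that yields u

# Adding any null-space component changes the body signal but not the
# command, which is the redundancy described in the text.
null_projector = np.eye(N) - W_pinv @ W
x_alt = x_min + null_projector @ rng.standard_normal(N)
```

Here `x_min` and `x_alt` are different body configurations that drive the wheelchair with exactly the same linear and angular speed.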

Table 1.

Classification of Maps used in Body-machine interfaces based on different characteristics.

| Type of Map | Properties |
|---|---|
| Fixed | The map parameters do not change during operation |
| Time-varying | The map parameters change with time, either in a pre-determined way or based on the user’s performance |
| | |
| Static | The instantaneous position of the control point is set based on the instantaneous value of the body-derived signals. This map is set by an algebraic equation. |
| Dynamic | The state of the control point (position, velocity and higher order derivatives, or a time history of position values) is a function of the state or time-history of body signals. This map is set by a differential equation. |
| | |
| Linear | The control point is derived by multiplication of the body signals with a matrix of coefficients. Superposition of inputs gives superposition of outputs. |
| Non-Linear | The control point is obtained by passing the body signals through a non-linear function. Superposition does not apply. |

We used two different approaches for building the map. In the first approach, we asked subjects to maintain specified postures for pre-specified control commands (e.g., move forward, move backward, turn left, turn right, stop) (Pressman, Casadio, Acosta, Fishbach, & Mussa-Ivaldi, 2010). The map, W, from body signals to controls was derived by least-squares regression over the acquired x, u pairs. In a second, alternative approach, we used principal component analysis in the space of possible movements (Casadio, et al., 2011; Casadio, et al., 2010). This method is a form of unsupervised learning (Sejnowski & Hinton, 1999). Subjects were asked to move their arms and upper body and were encouraged to freely explore the available and comfortable space of shoulder motions. Then, we extracted the first two principal components corresponding to the greatest movement variation. This approach is based on the assumption that, in general, the axes along which there is greatest variance correspond to movements that can be easily performed with a large range of motion (although this is not always the case; see the section on Dimensionality Reduction below). After deriving the two principal components with highest variance, the corresponding eigenvectors were laid over the monitor x, y axes (Figure 5). Simple scaling and rotations were applied to the map W, so as to obtain the most intuitive map between the motions of the body and the geometrical properties of the device commands (move forward, backward, left or right).
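The first, regression-based calibration can be sketched as an ordinary least-squares fit. The function name, the synthetic data and the absence of an offset term are assumptions made for illustration; the recorded posture data and any preprocessing used in the cited studies are not reproduced here.

```python
import numpy as np


def calibrate_least_squares(X: np.ndarray, U: np.ndarray) -> np.ndarray:
    """Fit a linear map W (M x N) such that U is approximately X @ W.T.

    X: (samples, N) body signals recorded during calibration.
    U: (samples, M) control commands associated with each sample.
    """
    W_T, *_ = np.linalg.lstsq(X, U, rcond=None)
    return W_T.T


# Synthetic stand-in for calibration data: 8-D body signals, 2-D commands.
rng = np.random.default_rng(1)
W_true = rng.standard_normal((2, 8))
X = rng.standard_normal((200, 8))
U = X @ W_true.T + 0.01 * rng.standard_normal((200, 2))

W_hat = calibrate_least_squares(X, U)
```

With clean synthetic data the fitted `W_hat` is close to `W_true`; with real posture recordings the quality of the fit depends on how distinct and repeatable the postures are.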

Figure 5.

Interface concept. The subject’s movements are detected by sensors placed on the upper body. As the subject engages in spontaneous movements, the sensor signals define a point moving in a high dimensional space (here represented as 3D). The calibration procedure establishes a correspondence between the plane that captures the highest amount of signal variance and the plane of the display, where the sensor signals are represented as a moving cursor (see Casadio, et al., 2011).

The calibrations based on both approaches were tested on impaired and unimpaired volunteers. Some control subjects preferred the least-squares regression method because using a limited number of selected postures allowed them to establish the mapping in a more straightforward way. However, this method proved unsuitable for severely impaired SCI subjects, who encountered difficulties in choosing or maintaining four distinct postures. We found that the unsupervised PCA method, based on their exploratory movements, provided a customized map that took into account the subjects’ preferences and residual abilities and was easy to learn during practice (Casadio, et al., 2010).

We need to stress that the issues involved in the calibration procedure depend critically on the application. For example, to drive a wheelchair, it is important to consider design issues that not only include the ease of performing movements, but also take into account stimulus-response compatibility: moving the shoulders forward should result in the wheelchair moving forward rather than moving backward or turning to one side. However, in cases where the body-machine interface is an experimental tool to investigate motor learning (Liu, Mosier, Mussa-Ivaldi, Casadio, & Scheidt, 2011; Mosier, Scheidt, Acosta, & Mussa-Ivaldi, 2005), such design constraints may not be relevant. In general, several other design choices are available for building a map. Here, we briefly discuss some of the most critical ones.

Additional Design Choices in Building a Map

Fixed and Time-varying Maps

At present, the disabled users of assistive devices must learn to perform pre-defined control actions. However, new and future generations of body-machine interfaces may succeed in overturning this constraint and have the devices “learn their users” by adaptively modifying the interface so as to match their evolving motor skills. This dual learning or “coadaptive” paradigm becomes especially critical in the design of assistive devices for movement disorders, like spinal cord injury, where depending on the site and severity of the injury, there is wide variation in residual movement abilities. Then, an adaptive body-machine interface may be customized to the user’s residual mobility, thus making it possible to strike a balance between two competing aims: convenience, in terms of ease of device control, and rehabilitation, through the focused exercise of underutilized muscles.

The body-machine interface can be based on two different kinds of maps: a fixed map, where the transformation matrix W is initially set with a calibration procedure and remains fixed throughout interface use; or a time-varying map, where W(t) changes based on the data acquired during interface use (Table 1). In the latter case, as subjects use the interface, movement information is collected to “reshape” the mapping matrix based on a continuous data stream. The co-adaptation of the interface may provide the means for updating the performance of the system online, without the need to repeat the initial calibration. The online adaptation of the interface map is likely to result in a significant enhancement of learning rate and also in a higher level of final performance, in terms of control accuracy, timing, and motor coordination. However, in some cases, the online modification of the control map may not result in appreciable performance gains. Instead, it is also possible to observe adverse effects of map changes, because these changes may confuse the interface user and impair the learning process. In such cases, the time interval between changes must be increased and a stronger smoothness constraint can be imposed by raising the relative influence of data collected in the preceding epoch, and by placing an explicit threshold on the maximum change of W. The advantages or disadvantages of a fixed or a time-varying mapping may vary depending on the particular application and on individual preferences or ability.
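One possible way to implement the two safeguards just mentioned (weighting the preceding epoch more heavily and capping the change of W) is sketched below. The text does not specify an update rule, so the blending scheme, the use of the Frobenius norm, and the parameter names `memory` and `max_step` are all assumptions.

```python
import numpy as np


def update_map(W_old: np.ndarray, W_estimate: np.ndarray,
               memory: float = 0.8, max_step: float = 0.1) -> np.ndarray:
    """Blend the previous map with a new estimate and cap the change.

    memory:   weight given to the map from the preceding epoch
              (hypothetical parameter; 1.0 means the map never changes).
    max_step: upper bound on the Frobenius norm of the change in W
              (hypothetical parameter).
    """
    W_blend = memory * W_old + (1.0 - memory) * W_estimate
    delta = W_blend - W_old
    size = np.linalg.norm(delta)
    if size > max_step:
        delta = delta * (max_step / size)  # shrink the step onto the cap
    return W_old + delta


# Illustrative 2 x 8 maps: the new estimate differs strongly from the old
# map, so the update is limited by max_step.
W_old = np.zeros((2, 8))
W_estimate = np.ones((2, 8))
W_next = update_map(W_old, W_estimate, memory=0.8, max_step=0.1)
```

Raising `memory` or lowering `max_step` makes the map change more slowly, which trades adaptation speed for stability from the user's point of view.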

Dimensionality Reduction

Even with significant levels of disability, people can still generate a number of physiological or motion signals, which exceed the number of control variables needed for operating assistive devices and interacting with the external world. These signals define a high-dimensional space, where one can choose among a spectrum of possibilities to “settle” into low-dimensional control subspaces, or manifolds. The map between the body and the control signals has a critical role for establishing these subspaces by finding the patterns that are most natural or easiest to produce, and for using these patterns to generate the control signals without imposing hard constraints. In the case of signals derived from body motion, the map can be based on signal combinations, which are consistent with natural patterns of control, as established by biomechanical couplings and muscle synergies. Dimensionality reduction techniques can lead to the construction of the most appropriate map by identifying candidate “motor primitives”. Although there is significant debate over the use of terms such as synergies and motor primitives (Giszter, Patil, & Hart, 2007; Latash, 2008; Ting & Macpherson, 2005; Ting & McKay, 2007), here by “motor primitive” we merely refer to a group of body signals that the device operator controls as a single unit. Further, we assume that combinations of motor primitives generate a broad repertoire of control signals.

There are several approaches to dimensionality reduction (see van der Maaten, Postma, & van den Herik, 2009), and some of these techniques have been used to identify motor synergies in EMG and movement data (d’Avella, Saltiel, & Bizzi, 2003; Flanders, 1991; Kargo & Nitz, 2003; Santello, Flanders, & Soechting, 1998; Soechting & Lacquaniti, 1989; Tresch, Saltiel, & Bizzi, 1999). A common feature of these techniques is their effectiveness in reducing the dimension of a large signal set by identifying relationships between subgroups of signals. Here, we will briefly consider three different and well established methods, each one with specific merits and drawbacks: principal component analysis (PCA), independent component analysis (ICA) and the Isomap method. The first two methods assume a linear combination of sources, whereas the last is a nonlinear method. All these methods can be applied to a large data set of body signals.

Principal Component Analysis (PCA)

This is the simplest approach from an algorithmic point of view (Jolliffe, 2002). It is based on the decorrelation of signals by diagonalization of their covariance matrix. Dimensionality is reduced by ranking the eigenvalues and keeping only the eigenvectors that, combined, can account for the desired amount of variance. The PCA algorithm derives a set of orthonormal basis vectors (the principal components) that span a subspace containing the desired amount of data variance. PCA has been widely used for identifying components of movements and electrophysiological data (Flanders, 1991; Holdefer & Miller, 2002; Santello, et al., 1998). A significant limitation of the method arises from its being linear and based on orthonormal eigenvectors. Both properties are often perceived to limit the biological relevance of PCA. The mechanics of force generation by the muscles is characterized by significant non-linearity. Furthermore, the skeletal structure of the limbs is such that forces produced by independently activated muscles are generally acting along lines that are not mutually orthogonal. Here however, one is relatively free from such considerations as one is only seeking a decomposition that is appropriate for the control of a device. PCA was successfully used for building maps between the residual upper body movements of high level spinal cord injury subjects and a two-dimensional control for an external device (Casadio, et al., 2011; Casadio, et al., 2010).
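The steps described above (covariance diagonalization, eigenvalue ranking, and retention of the leading eigenvectors) can be sketched as follows. The 90% variance target and the synthetic 8-D data are assumptions for illustration; they are not the settings or recordings of the cited studies.

```python
import numpy as np


def pca_map(X: np.ndarray, var_fraction: float = 0.9):
    """Return (W, explained): rows of W are the leading principal components.

    X: (samples, N) body signals from free exploratory movement.
    var_fraction: desired fraction of variance (illustrative default).
    """
    Xc = X - X.mean(axis=0)                    # remove the mean posture
    C = np.cov(Xc, rowvar=False)               # N x N covariance matrix
    eigvals, eigvecs = np.linalg.eigh(C)       # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]          # rank from largest to smallest
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    cumulative = np.cumsum(eigvals) / eigvals.sum()
    k = min(int(np.searchsorted(cumulative, var_fraction)) + 1, len(eigvals))
    return eigvecs[:, :k].T, cumulative[k - 1]


# Synthetic 8-D signals dominated by two orthogonal latent movement directions.
rng = np.random.default_rng(2)
directions, _ = np.linalg.qr(rng.standard_normal((8, 2)))   # orthonormal columns
latent = rng.standard_normal((500, 2)) * np.array([3.0, 2.0])
X = latent @ directions.T + 0.05 * rng.standard_normal((500, 8))

W, explained = pca_map(X)
cursor = (X - X.mean(axis=0)) @ W.T            # 2-D control signal per sample
```

The rows of `W` are orthonormal by construction, which is the property the text identifies as both a computational convenience and a possible limit on biological relevance.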

Independent Component Analysis (ICA)

This is a more recent method (Hyvärinen, Karhunen, & Oja, 2001) leading to components that are maximally independent in a statistical sense. ICA minimizes the mutual information between these components and not their correlations as PCA does. Unlike PCA, ICA does not impose orthogonality. However, like PCA, ICA assumes that the observed signals come from a linear mixing of sources. ICA can be considered a refinement of PCA that minimizes both second and higher order statistical dependencies in the input. ICA involves a pre-processing or “whitening” stage in which the inputs are decorrelated and are set to have unit variance. If the underlying sources are Gaussian and the transformation from signals to sources is orthogonal, then ICA and PCA coincide. ICA has been applied successfully to the analysis of motor coordination (see, for example, Kargo & Nitz, 2003). A drawback of ICA compared with PCA is its greater computational complexity, which may lead to a higher degree of arbitrariness in the outcome. In summary, the two methods mentioned above offer different advantages. PCA is not arbitrary in the sense that a data set has only one particular group of eigenvectors. ICA, on the other hand, has more “control knobs”, which are useful when attempting to extract more biologically plausible components. However, this leads to greater arbitrariness because, depending upon how these control knobs are set, different ICs may be extracted from the same data. Given these tradeoffs, if one faces the task of engineering a control map for a body-machine interface, the greater simplicity of PCA may be preferable to the higher versatility of ICA because, in most cases, the motor system can learn (albeit at a slower rate) non-intuitive control of external devices (Mosier, et al., 2005).
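The whitening stage mentioned above can be illustrated on its own. The sketch below covers only this pre-processing step, not the subsequent search for independent components, and the mixing matrix and data are made up for the example.

```python
import numpy as np


def whiten(X: np.ndarray):
    """Decorrelate the columns of X and scale them to unit variance.

    Returns (Z, K) with Z = (X - mean) @ K. Assumes the covariance of X
    is full rank, so that all eigenvalues are strictly positive.
    """
    Xc = X - X.mean(axis=0)
    C = np.cov(Xc, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(C)
    K = eigvecs / np.sqrt(eigvals)      # scale each eigenvector column
    return Xc @ K, K


# Three correlated signals produced by a made-up linear mixing.
rng = np.random.default_rng(3)
mixing = np.array([[1.0, 0.5, 0.0],
                   [0.0, 1.0, 0.5],
                   [0.3, 0.0, 1.0]])
X = rng.standard_normal((1000, 3)) @ mixing

Z, K = whiten(X)
```

After whitening, the sample covariance of `Z` is the identity matrix, so any remaining structure that ICA exploits lies in statistics of higher than second order.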

Isomap

Nonlinear forms of dimensionality reduction are intuitively appealing. The motor primitives underlying the natural control of movement are likely to span not a linear vector space but a curved surface, or manifold. Isomap is based on the simple idea of constructing a map of local Euclidean distances between the data points. These local distances allow one to estimate geodesic lines on the embedded hypersurface by selecting paths of minimum length that are compatible with the distribution of data points. The Isomap algorithm has been proven to be effective for nonlinear pattern recognition and for the classification of behaviors (Tenenbaum, de Silva, & Langford, 2000).
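A compact sketch of the Isomap idea follows: a neighbourhood graph of local Euclidean distances, shortest paths through the graph as geodesic estimates, and classical multidimensional scaling on those estimates. The neighbourhood size and the test data (points along a circular arc) are illustrative assumptions, and the code assumes the neighbourhood graph is connected.

```python
import numpy as np


def isomap(X: np.ndarray, n_neighbors: int = 4, n_components: int = 1) -> np.ndarray:
    """Embed the rows of X using estimated geodesic distances."""
    n = X.shape[0]
    # Pairwise Euclidean distances between all data points.
    diff = X[:, None, :] - X[None, :, :]
    D = np.sqrt((diff ** 2).sum(axis=-1))
    # Neighbourhood graph: keep only distances to the nearest neighbours.
    G = np.full((n, n), np.inf)
    np.fill_diagonal(G, 0.0)
    nearest = np.argsort(D, axis=1)[:, 1:n_neighbors + 1]
    rows = np.repeat(np.arange(n), n_neighbors)
    G[rows, nearest.ravel()] = D[rows, nearest.ravel()]
    G = np.minimum(G, G.T)                       # make the graph symmetric
    # Geodesic estimates: shortest paths (Floyd-Warshall).
    for k in range(n):
        G = np.minimum(G, G[:, [k]] + G[[k], :])
    # Classical multidimensional scaling on the geodesic distances.
    J = np.eye(n) - np.ones((n, n)) / n
    B = -0.5 * J @ (G ** 2) @ J
    eigvals, eigvecs = np.linalg.eigh(B)
    top = np.argsort(eigvals)[::-1][:n_components]
    return eigvecs[:, top] * np.sqrt(np.maximum(eigvals[top], 0.0))


# Points along three quarters of a circle: a curved 1-D manifold in 2-D.
t = np.linspace(0.0, 1.5 * np.pi, 80)
X = np.column_stack([np.cos(t), np.sin(t)])
Y = isomap(X, n_neighbors=4, n_components=1)
```

In this toy case the single recovered coordinate varies almost linearly with the arc parameter `t`, which a linear method such as PCA could not achieve with one component.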

In summary, the dimensionality reduction methods discussed above differ in a) the dimension of the reduced space and b) the structure of the motor primitives that they assume or generate. Further, in real-time applications, computation time may also play an important role in the choice of method, which favors linear methods such as PCA and ICA. In the analysis of natural behavior, by contrast, nonlinear methods like Isomap may be better suited to capturing the nonlinear properties that are associated with the geometrical and mechanical structure of the musculoskeletal system. Here, we have chosen to focus on linear maps for considerations of simplicity. However, researchers may consider that a body-machine interface would be more effective and easier to learn if the interface requires control that is similar to neurobiological control.

Decoding and Prediction

The maps discussed in the previous section are of the static (or algebraic) type (see Table 1). They transform a set of body signals at a particular instant into the coordinates of a control point by means of a linear operator (PCA and ICA) or a non-linear function. As such, these maps do not have a predictive nature. In contrast, a crucial issue in brain-machine interfaces is to decode motor intention from a segment of neural recordings (Serruya, et al., 2002). This is a task that has a dynamical rather than a static nature. Wu and collaborators, in John Donoghue’s lab, have approached this task as a state estimation problem (Wu, et al., 2003). In the framework of Kalman theory (Kalman, 1960), they considered the neural activity recorded from the motor cortex at each time interval $k$, $z_k$, as the observation of the state of motion of the hand, $x_k$:

$$z_k = H x_k + q_k$$

Here, $H$ is the observation matrix and $q_k$ is the observation noise, which is assumed to be Gaussian, with zero mean and covariance $Q$. The neural activity is typically a vector of recorded firing rates over a period of time ending at $t_k$. The state of the arm is a vector of position, velocity and acceleration. In this sense, it is not the state in the classical sense, i.e. position and velocity. By adding the acceleration, it is possible to represent the hand dynamics as a linear equation

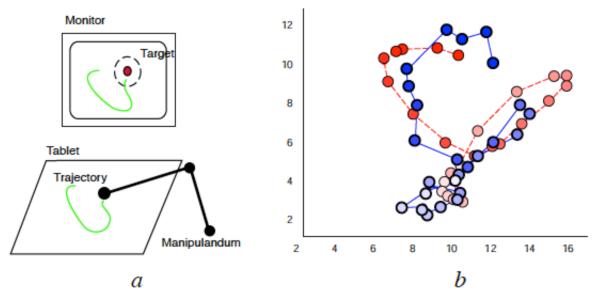

The matrix $A$ describes the transition to the next state and the noise $w_k$ is, again, assumed to be Gaussian, with covariance $W$. Without going further into the computational details, the approach of Wu and colleagues is based on two steps. First, the parameters of the models, i.e. the matrices $H$, $A$, $Q$ and $W$, are estimated based on least squares procedures over neural and kinematic data. Then, the standard Kalman procedure is used to predict the next state, i.e. the “intended state” $x_{k+1}$, from the current state $x_k$ and the current neural activity $z_k$. Figure 6 shows a comparison of estimated versus actual trajectories, over periods of 3.5 seconds. The Kalman filter is only one of many possible approaches to state estimation and is limited to linear models corrupted by Gaussian, zero mean noise. Other methods include the Wiener filter (Wiener, 1949) and various forms of non-linear estimators, including modified Kalman models (Wu, et al., 2004). The plausibility of assuming Gaussian noise in the modeling of neural processes has been called into question by the observation that these processes are often characterized by noise whose amplitude is not fixed but depends upon the amplitude of the control signals (Harris & Wolpert, 1998). Despite these limitations, the linear Kalman filter has proven remarkably effective as a method to map neural commands into control signals in brain-machine interfaces.
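The two steps described above (least-squares estimation of the model matrices, followed by the standard Kalman recursion) can be sketched as follows. This is a minimal illustration under stated assumptions, not a reproduction of the procedure of Wu and colleagues: it assumes NumPy, uses synthetic data in place of neural and kinematic recordings, and the function names, dimensions, and noise levels are all hypothetical.

```python
import numpy as np

def fit_linear_models(X, Z):
    """Least-squares estimates of A, W (state model) and H, Q (observation model).

    X: (T, n) kinematic states; Z: (T, m) observations (e.g. firing rates).
    """
    X0, X1 = X[:-1], X[1:]
    A = np.linalg.lstsq(X0, X1, rcond=None)[0].T   # x_{k+1} ~ A x_k
    H = np.linalg.lstsq(X, Z, rcond=None)[0].T     # z_k ~ H x_k
    W = np.cov(X1 - X0 @ A.T, rowvar=False)        # state-noise covariance
    Q = np.cov(Z - X @ H.T, rowvar=False)          # observation-noise covariance
    return A, W, H, Q

def kalman_decode(Z, A, W, H, Q, x0, P0):
    """Standard Kalman recursion: one state estimate per observation in Z."""
    x, P = x0, P0
    estimates = []
    for z in Z:
        # Predict: propagate the previous estimate through the state model
        x_pred = A @ x
        P_pred = A @ P @ A.T + W
        # Update: correct the prediction using the new observation
        S = H @ P_pred @ H.T + Q
        K = P_pred @ H.T @ np.linalg.inv(S)        # Kalman gain
        x = x_pred + K @ (z - H @ x_pred)
        P = (np.eye(len(x)) - K @ H) @ P_pred
        estimates.append(x)
    return np.array(estimates)

# Synthetic stand-in for recorded data: state = (position, velocity, acceleration)
rng = np.random.default_rng(0)
dt = 0.1
A_true = np.array([[1.0, dt, 0.0], [0.0, 0.95, dt], [0.0, 0.0, 0.9]])
H_true = rng.normal(size=(10, 3))                  # 10 hypothetical recording channels
T = 1000
X = np.zeros((T, 3))
for k in range(T - 1):
    X[k + 1] = A_true @ X[k] + rng.normal(scale=0.5, size=3)
Z = X @ H_true.T + rng.normal(scale=0.5, size=(T, 10))

# Step 1: estimate the model on the first half; step 2: decode the second half
A, W, H, Q = fit_linear_models(X[:500], Z[:500])
X_hat = kalman_decode(Z[500:], A, W, H, Q, x0=X[499], P0=W)
rmse = np.sqrt(np.mean((X_hat[:, 0] - X[500:, 0]) ** 2))   # position error
```

Fitting on one segment and decoding a different one mirrors the separation between training and decoding. The choice of initial state and covariance (`x0`, `P0`) is an arbitrary one made for this sketch.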

Figure 6.

Reconstructing 2D hand motion using a Kalman filter. (a) Training: neural spiking activity is recorded while the monkey moves a jointed manipulandum on a 2D plane to control a cursor so that it hits randomly placed targets. (b) Decoding: true target trajectory (dashed (red): dark to light) and reconstruction using the Kalman filter (solid (blue): dark to light). (From Wu et al., 2003)

While the concept of decoding motor intention from neural activity has been applied to brain-machine interfaces, it is possible to use a similar paradigm in a body-machine interface. In this case, the observation variable could be a collection of body signals recorded over a time interval and used to predict the intended state of motion of the control point. In a way this approach corresponds to predicting a future motion from past movement history. As for the brain-machine interface, the most critical issue is to estimate the optimal delay and extent of time over which the observation variable must be collected in order to predict the desired motion of the control point.

3. Control

The next step in the development of a body-machine interface is to build a control policy that serves as an interface between body-signals and the signals for the control of the external device. The aim of the control policy is to define an easy and intuitive mechanism to provide the users with naturalistic control, i.e., with the ability to control the device without excessive cognitive or motoric difficulty.

From the perspective of rehabilitation, the goal of attaining naturalistic control raises an interesting question. Are assistive devices, such as wheelchairs, to be considered as elements of the external world or as new “body parts”? A control engineer is likely to take the former viewpoint and to consider the assistive device as an external element. However, psychologists have highlighted how, for disabled individuals, these devices become part of the body schema through practice (Seymour, 1998); that is, they are considered as essential parts or extensions of the body. This fundamental observation has to be taken into account for designing appropriate controllers. For example, a wheelchair for a high-level SCI user becomes analogous to the legs of an able-bodied individual because it is the indispensable means for regaining mobility (Papadimitriou, 2008). Therefore, it is important that the controller establishes, whenever possible, a form of continuous mobility analogous to the nervous system's natural mode of controlling limb motion.

Discrete/Continuous

There are two broad classes of control: continuous and discrete. A continuous control system is driven by variables defined over continuous intervals of real numbers. In contrast, discrete control is based on control variables that can only take a finite set of values. Electrically powered wheelchairs are controlled both in continuous and discrete modes. An example of continuous control is the joystick (Dicianno, Cooper, & Coltellaro, 2010), which provides a means to gradually regulate the speed and direction of movement. In contrast, for individuals with severe paralysis who cannot use a joystick controller, the available approaches are based on discrete control methods. For example, the sip-and-puff system offers a limited vocabulary of commands that the wheelchair driver issues by sucking and blowing on a tube. This is true also for head and chin switches (Nisbet, 1996) and a number of experimental interfaces such as controllers based on EMG signals (Ferreira, et al., 2008; Simpson, 2005), EEG signals (Mak & Wolpaw, 2009), eye movements (Barea, et al., 2002; Philips, et al., 2007), sniffing (Figure 3), head movements (Craig & Nguyen, 2005), and tongue movements (Huo, et al., 2008).

Integrating Discrete and Continuous Control

In general, the interactions with computers and the operation of an assistive device require the ability to combine discrete and continuous control actions. For example, in a computer one uses the mouse to move a pointer (continuous) across the display and the keyboard (discrete) to input text. Similarly, when controlling powered wheelchairs, the forward speed and the direction may be regulated continuously, whereas discrete commands are issued to power on or off the device, to generate an emergency stop, and to set important control parameters such as maximum speed.

In body-machine interfaces, the discrete and continuous control actions may not share the same control space. In particular, they have separate control spaces when the control variables are different and depend on different signals from the body. Since the dimensionality of the body signals usually exceeds the number of control variables, it is possible to design the interface so that it has two separate sets of body signals for continuous and discrete control and, consequently, two distinct control subspaces.

Instead, if the two controllers share the same control space, the discrete control elements, such as a set of switches, can be implemented by recognizing a number of gestures in the continuous control space, or by partitioning the space of the control point into contiguous regions, or “keys”. To select a key, the subject moves the control point inside the key’s region and keeps it there, at rest, for a short time. In contrast, continuous control is obtained by associating the controlled variable, e.g. the position of a cursor, with the instantaneous body-configuration signals. The transitions between continuous and discrete control could be established by an additional signal, a “switching gesture” that toggles between the continuous map and the keyboard map. This hybrid discrete/continuous control of body-machine interfaces raises some issues of motor learning. For example, one should consider whether there may be interference between learning a continuous map and learning a discrete map. Can motor redundancy prevent or mitigate such interference? Because of redundancy, it is possible to associate different body configurations (i.e. different combinations of the same overabundant set of body signals) with the same coordinates of the control point, thus assigning to the control point different functions depending on the configuration that is used. In principle, this may allow the formation of separate and independent maps. Studies of motor adaptation to force fields have shown that, indeed, by associating different arm configurations with the same hand position, subjects could learn multiple force fields without interference (Gandolfo, Mussa-Ivaldi, & Bizzi, 1996).
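The partition of the control space into “keys” selected by dwelling can be sketched as follows. This is a minimal illustration using only the Python standard library. The `Key` class, the rectangular key regions, and the `dwell_samples` threshold are hypothetical, and the sketch checks only that the control point stays inside a key region for a number of consecutive samples; it does not test that the point is at rest.

```python
from dataclasses import dataclass

@dataclass
class Key:
    """A rectangular region of the control space acting as a discrete key."""
    label: str
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, x, y):
        return self.x_min <= x < self.x_max and self.y_min <= y < self.y_max

def select_keys(trajectory, keys, dwell_samples):
    """Return the labels of keys triggered by dwelling inside their region.

    trajectory: sequence of (x, y) control-point samples taken at a fixed rate.
    dwell_samples: consecutive samples required inside one key to trigger it.
    """
    selected = []
    current, count = None, 0
    for x, y in trajectory:
        hit = next((k.label for k in keys if k.contains(x, y)), None)
        if hit is not None and hit == current:
            count += 1
        else:
            current, count = hit, (1 if hit is not None else 0)
        if hit is not None and count == dwell_samples:
            selected.append(hit)               # trigger once per dwell
    return selected

keys = [Key("A", 0.0, 1.0, 0.0, 1.0), Key("B", 1.0, 2.0, 0.0, 1.0)]
trajectory = ([(0.5, 0.5)] * 5      # long dwell in A: triggers A once
              + [(1.5, 0.5)] * 2    # brief pass through B: too short to trigger
              + [(0.2, 0.2)] * 3    # back in A: triggers A again
              + [(1.5, 0.5)] * 4)   # dwell in B: triggers B
selected = select_keys(trajectory, keys, dwell_samples=3)
```

The dwell threshold trades speed against accidental selections: a short dwell makes typing faster but also makes it easier to trigger a key while merely passing through its region during continuous control.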

Feedback and Sensory Substitution

The final step in building the body-machine interface is to complete the loop through some sort of feedback. This is a crucial point because, as Arthur Prochazka pointed out (Prochazka, 1996), “you can control only what you sense”. Sensory feedback may be delivered either by augmenting existing sensory modalities or by replacing lost sensory modalities. For closing the control loop of many body-machine interfaces, the simplest and most used feedback modality is vision, followed by auditory feedback. These sensory channels can be manipulated by using different virtual reality (VR) and/or augmented reality (AR) tools. A more advanced approach would involve the integration of other feedback modalities, such as those used for augmenting telepresence: sensing pressure, force, and vibration (Alm, Gutierrez, Hultling, & Saraste, 2003; Caldwell, Wardle, Kocak, & Goodwin, 1996; Chouvardas, Miliou, & Hatalis, 2008; Okamura, Cutkosky, & Dennerlein, 2001; Shimoga, 1993). Feedback modalities that are fundamental for control of body posture and movements (e.g. tactile feedback and proprioception) are also likely to improve body-machine interface performance because they require less attention when compared to visual feedback. In this framework, the concepts of redundancy and remapping, described above for the forward flow of motor commands, are important also for the creation of new pathways for sensory feedback.

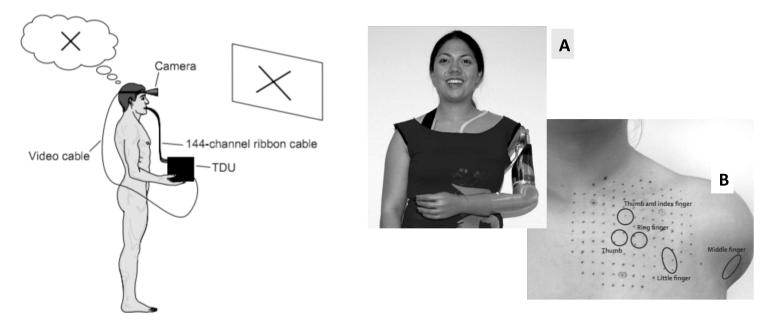

Some of the first attempts at sensory remapping and substitution were proposed by Bach-y-Rita and colleagues (Bach-y-Rita, 1999, 2004; Bach-y-Rita, et al., 1969; Kaczmarek, et al., 1991). These investigators transformed visual images into tactile feedback for allowing blind people to “see”. They converted images from a video camera into tactile stimuli using an electrode array placed on the subject’s back (Bach-y-Rita, et al., 1969). Based on a similar idea, they also built a device to allow subjects with damaged vestibular nuclei to regain their postural stability by means of an electrical stimulator placed on the tongue (Figure 7, left panel) that reacted to a sensor that measured the subject’s motion (Tyler, et al., 2003). In addition to transmitting stimuli as electrode impulses, another innovative approach that has recently gained attention is “targeted reinnervation” (Kuiken, 2006; Kuiken, et al., 2009; Kuiken, Miller, Lipschutz, Stubblefield, & Dumanian, 2005; Kuiken, et al., 2007), a technique that allows amputees not only to control prosthetic limbs, but also to sense the missing limb (Figure 7, right panel). In the targeted muscle reinnervation procedure, residual nerves that used to supply the amputated limb are transferred to a target muscle which is denervated. This target muscle is then reinnervated by the residual nerves and the signals at the muscle can be used to control prosthetic limbs. In addition to this motor component, there has also been a “targeted sensory reinnervation” procedure where the skin of the target muscle is denervated and is later reinnervated by the afferents from the residual nerves. In this case, touching the reinnervated skin provides a sensation of the missing limb, thereby closing a sensory feedback loop.

Figure 7.

Left panel: Schematic of a tactile-vision sensory substitution (TVSS) system. Electro-tactile stimuli are delivered to the dorsum of the tongue via flexible electrode arrays placed in the mouth, with connection to the tongue display unit (TDU) via a ribbon cable passing out of the mouth. An image is captured by a head-mounted CCD camera. The video data are transmitted to the TDU via a video cable. The TDU converts the video into a pattern of 144 low-voltage pulse trains each corresponding to a pixel. The pulse trains are carried to the electrode array via the ribbon cable, and the electrodes stimulate touch sensors on the dorsum of the tongue. The subject experiences the resulting stream of sensation as an image (from Bach-y-Rita & Kercel, 2003). Right panel: A. Experimental TMR-controlled prosthesis consisting of a motorized elbow, wrist, and hand, with passive shoulder components. B. Map of areas that the subject perceived as distinctly different fingers in response to touch (from Kuiken, et al., 2007).

Understanding Motor Learning with Body-Machine Interfaces

As mentioned in the introduction, body-machine interfaces also provide a new and non-invasive framework for answering some fundamental questions in motor control and learning. For example, a question relevant to motor learning concerns the change in movement variability with learning (Darling & Cooke, 1987; Ranganathan & Newell, 2010). In particular, in redundant tasks, certain combinations of motions in different degrees of freedom do not alter task performance. The existence of this “null space” can be visualized by moving the arm while keeping the index finger at a fixed point on a table. The presence of this null space allows variability in execution while still maintaining task performance. This is consistent with a number of empirical observations, including an early one by Nikolai Bernstein (Bernstein, 1967), who investigated the hammering actions of expert blacksmiths and described the contrast between the high variability in arm motions and the accuracy of the impacts between the hammer and the head of the nail. Therefore, given that null space variability does not directly contribute to task performance, how does the CNS regulate variability in the null space with motor learning?
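For a linear map from body signals to a control point, the distinction between task space and null space can be made concrete with a short sketch. It assumes NumPy, a randomly generated 2 x 8 map standing in for an interface that projects eight body signals onto a 2D cursor, and random data in place of recorded movements; the function name `variance_split` and all dimensions are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.normal(size=(2, 8))        # hypothetical map: 8 body signals -> 2D cursor

# The SVD gives orthonormal bases: the first two right singular vectors span the
# task space (directions that move the cursor); the remaining six span the null space
_, _, Vt = np.linalg.svd(A)
task_basis, null_basis = Vt[:2], Vt[2:]

def variance_split(B):
    """Split the total variance of body signals B (samples x 8) into task and null parts."""
    Bc = B - B.mean(axis=0)
    task_var = np.sum(np.var(Bc @ task_basis.T, axis=0))
    null_var = np.sum(np.var(Bc @ null_basis.T, axis=0))
    return task_var, null_var

B = rng.normal(size=(500, 8))      # stand-in for recorded body signals
task_var, null_var = variance_split(B)
total_var = np.sum(np.var(B, axis=0))

# A change of body configuration confined to the null space leaves the cursor in place
h = rng.normal(size=8)
h_shifted = h + null_basis.T @ rng.normal(size=6)
cursor, cursor_shifted = A @ h, A @ h_shifted
```

Because the two bases together form an orthonormal basis of the body-signal space, the task and null components add up to the total variance. Tracking `null_var` across practice sessions is one way to quantify how variability in the null space changes with learning.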

One hypothesis that addresses this question is the “uncontrolled manifold” (UCM) hypothesis2 (Latash, Scholz, & Schoner, 2007; Scholz & Schoner, 1999). According to the UCM hypothesis, and also to some forms of optimal feedback control (Todorov & Jordan, 2002), the brain takes advantage of the null space, either for increasing flexibility or for increasing the accuracy in the task space at the expense of a larger variance in the null space. Although several studies have shown support for this hypothesis (Yang & Scholz, 2005), there are other studies showing results that are counter to the prediction made from the UCM hypothesis (Domkin, Laczko, Jaric, Johansson, & Latash, 2002; Mosier, et al., 2005; Ranganathan & Newell, 2009). One suggestion for the disparate results is that the motor tasks used in these studies may lie on different ends of the continuum in terms of complexity and experience, with some tasks being very complicated and novel while others are simple and well-practiced (Latash, et al., 2007).

An inherent problem in testing this hypothesis is that it is difficult to design a task where the CNS has to “start from scratch” and may actually discover the redundancy in the system. Even in systems with a large number of degrees of freedom like the hand, the CNS is likely to have adequate prior knowledge of the redundancy in these systems through daily experience. Here, body-machine interfaces offer a way of addressing this problem because one can introduce real or virtual objects that are controlled by different body signals.

For example, one can define a novel map between movements of the finger joints and a cursor on the screen (Liu & Scheidt, 2008; Mosier, et al., 2005), where moving the cursor to different targets on the screen requires the participant to adopt different hand postures. Presumably, learning to control the cursor in this task would have to proceed by first identifying which degrees of freedom in the fingers contribute (or do not contribute) to cursor movement, making this analogous to what an infant would have to do when learning to control her arm during development.

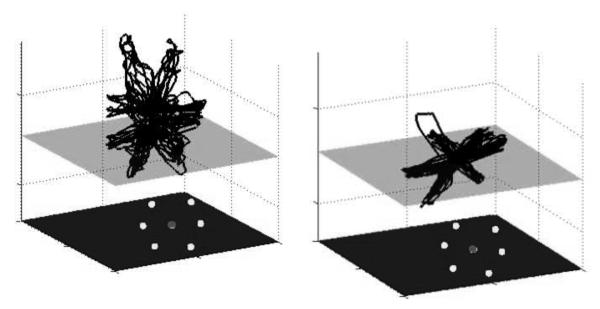

The relative distribution of variance between task space and null space appears to be different during learning of body-machine interfaces. Observations of movements while subjects learn to operate a body-machine interface (Casadio, et al., 2010; Danziger, Fishbach, & Mussa-Ivaldi, 2009; Mosier, et al., 2005) show that the variance associated with the null space variables decreases with training (Figure 8), a result that appears to be inconsistent with the predictions from the UCM hypothesis and optimal feedback control. However, the body-machine interface results and the observations supporting the UCM hypothesis are easily reconciled if one considers that the initial decrease of variance in the null space is characteristic of a system that is “learning to resolve redundancy” – i.e., trying to learn what degrees of freedom contribute (or do not contribute) to task performance. Once the CNS “knows” this distinction between null space and task space, it may take advantage of the null-space to optimize performance, for example by minimizing control effort (Soechting, Buneo, Herrmann, & Flanders, 1995). This hypothesis also leads to the prediction that there might be an increase in null-space variance with extensive practice. Therefore, body-machine interfaces offer an exciting way to test new hypotheses in motor learning that might otherwise be difficult with standard motor tasks such as reaching or grasping.

Figure 8.

Body motions of a subject performing the reaching task, during the initial (left) and final (right) target set. The motions are represented in the space of the top three principal components. The horizontal plane corresponds to the plane of the computer monitor. With practice, the motions become oriented along the image of the monitor space. (From Casadio, et al., 2010)

Relevance to Neurorehabilitation

As mentioned earlier, one of the important advancements in body-machine interfaces is based on the system being adaptive, as the system must learn its user. In this sense, many rehabilitation robotics devices may be considered as adaptive body-machine interfaces. Commercial devices for robot-assisted therapy, such as the MIT-Manus (Interactive Motion Technologies, Inc., Cambridge, MA, USA), the HapticMaster (FCS Control Systems, Fokkerweg, The Netherlands) and others, measure signals from the body (such as hand movements and forces) to produce assistive forces based on these signals. Moreover, in the last decade, the controllers of these robots have become adaptive, based on subjects’ error (Patton, Stoykov, Kovic, & Mussa-Ivaldi, 2006), performance (Vergaro, et al., 2010) or models of the user behavior (Emken, Benitez, & Reinkensmeyer, 2007).

On the other hand, the approach described in our example for the body-wheelchair interface can also be viewed from a rehabilitation perspective. This view allows combining the assistive and rehabilitative potentials of the new body-machine interfaces and opens a new frontier that is beneficial for a large population of impaired individuals, such as SCI and stroke survivors. After an injury, subjects face several related complications, some being immediate neurological consequences of the injury, others being side-effects of immobility or reduced mobility. The limited possibility for functional use of the upper body contributes to weakness and poor posture and, with time, causes pain and bone loss and attenuates voluntary control of residual movements (Ballinger, Rintala, & Hart, 2000; Salisbury, Choy, & Nitz, 2003). By controlling the interface with their residual body motions, paralyzed subjects will not only operate wheelchairs and computers; they will also engage in sustained physical exercise while performing functional and entertaining activities. In preliminary observations (Casadio, et al., 2011), we noticed that these activities appear to have relevant collateral benefits in terms of motor control, strength, engagement and mood. By mapping all the residual movement capacity into specific operational functions, we expect that paralyzed users of assistive devices will find a natural balance between ease of device control and exercise of under-utilized muscles. Moreover, the proposed body-machine interface is suited to exercise all of the available degrees of freedom in their upper body through targeted practice of control actions in VR environments. It is also possible to create a transformation from body motions to a “command” space emphasizing degrees of freedom that are more difficult to control, as determined, in our example, by principal component analysis of recorded movements.

We would like to conclude with an observation on a contrast between the brain-machine and the body-machine interface. The brain-machine interface has excited the fancy of the media, which are often impressed with the idea of “controlling machines by thought”, and this is indeed an intriguing concept. But we normally do not operate by mere thought. Think of the simple act of opening a door. We may carry out this action in an automatic way. But we may also choose to pay attention to each motion of the fingers as our hand grasps the handle. We have a body and we often like to act and feel through it, rather than operating in a subconscious way. In this sense, interfacing with machines, be it a car or a wheelchair, may be seen not only as a way to reach some practical goal, but also as a means to extend and enhance the skills of our bodies rather than to replace and bypass them.

Acknowledgment

This work was supported by grants 1R21HD053608 and 1R01NS05358 from the National Institutes of Health.

Footnotes

The body-machine interface also shares the abbreviation – BMI - with the better known “brain-machine interface”. This is not merely a coincidence or an accident of language, since there are significant commonalities between the two concepts. In both cases, the technological challenge is to decode information about motor intent, and to encode information about the state of a controlled device into some kind of sensory stimulus. If one rejects the separation between mind and body one may agree that the brain-machine interface is a particular instantiation – perhaps the most striking - of the body-machine interface.

The term manifold was used instead of space, because the theory refers to the nonlinear geometry of the configuration space of humans and animals. So, for example, the map from the arm configuration, expressed in terms of joint angles, to the position of the index finger, expressed in Cartesian coordinates, defines regions of angular coordinates that correspond to the same position of the finger tip. These regions are generally curved surfaces rather than flat linear spaces.

References

- Alm M, Gutierrez E, Hultling C, Saraste H. Clinical evaluation of seating in persons with complete thoracic spinal cord injury. Spinal Cord. 2003;41:563–571. doi: 10.1038/sj.sc.3101507.

- Amedi A, Stern WM, Camprodon JA, Bermpohl F, Merabet L, Rotman S, Hemond C, Meijer P, Pascual-Leone A. Shape conveyed by visual-to-auditory sensory substitution activates the lateral occipital complex. Nature Neuroscience. 2007;10:687–689. doi: 10.1038/nn1912.

- Bach-y-Rita P. Theoretical aspects of sensory substitution and of neurotransmission-related reorganization in spinal cord injury. Spinal Cord. 1999;37:465–474. doi: 10.1038/sj.sc.3100873.

- Bach-y-Rita P. Tactile sensory substitution studies. Annals of the New York Academy of Sciences. 2004;1013:83–91. doi: 10.1196/annals.1305.006.

- Bach-y-Rita P, Collins CC, Saunders FA, White B, Scadden L. Vision substitution by tactile image projection. Nature. 1969;221:963–964. doi: 10.1038/221963a0.

- Bach-y-Rita P, Kercel SW. Sensory substitution and the human-machine interface. Trends in Cognitive Sciences. 2003;7:541–546. doi: 10.1016/j.tics.2003.10.013.

- Ballinger DA, Rintala DH, Hart KA. The relation of shoulder pain and range-of-motion problems to functional limitations, disability, and perceived health of men with spinal cord injury: a multifaceted longitudinal study. Archives of Physical Medicine and Rehabilitation. 2000;81:1575–1581. doi: 10.1053/apmr.2000.18216.

- Barea R, Boquete L, Mazo M, Lopez E. System for assisted mobility using eye movements based on electrooculography. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2002;10:209–218. doi: 10.1109/TNSRE.2002.806829.

- Barreto AB, Scargle SD, Adjouadi M. A practical EMG-based human-computer interface for users with motor disabilities. Journal of Rehabilitation Research and Development. 2000;37:53–63.

- Bernstein N. The coordination and regulation of movement. Pergamon Press; Oxford: 1967.

- Caldwell DG, Wardle A, Kocak O, Goodwin M. Telepresence feedback and input systems for a twin armed mobile robot. IEEE Robotics & Automation Magazine. 1996;3:29–38.

- Casadio M, Pressman A, Acosta S, Danziger Z, Fishbach A, Muir K, Tseng HY, Chen D, Mussa-Ivaldi FA. Body machine interface: remapping motor skills after spinal cord injury. IEEE International Conference on Rehabilitation Robotics (ICORR); Zurich. 2011. pp. 1–6.

- Casadio M, Pressman A, Fishbach A, Danziger Z, Acosta S, Chen D, Tseng HY, Mussa-Ivaldi FA. Functional reorganization of upper-body movement after spinal cord injury. Experimental Brain Research. 2010;207:233–247. doi: 10.1007/s00221-010-2427-8.

- Chouvardas VG, Miliou AN, Hatalis MK. Tactile displays: Overview and recent advances. Displays. 2008;29:185–194.

- Cirstea MC, Levin MF. Compensatory strategies for reaching in stroke. Brain. 2000;123(Pt 5):940–953. doi: 10.1093/brain/123.5.940.

- Clynes ME, Kline NS. Cyborgs and Space. Astronautics. 1960:26–27, 74–75.

- Craig DA, Nguyen HT. Wireless real-time head movement system using a personal digital assistant (PDA) for control of a power wheelchair. Conference Proceedings - IEEE Engineering in Medicine and Biology Society; 2005. pp. 6235–6238.

- d’Avella A, Saltiel P, Bizzi E. Combinations of muscle synergies in the construction of a natural motor behavior. Nature Neuroscience. 2003;6:300–308. doi: 10.1038/nn1010.

- Danziger ZC, Fishbach A, Mussa-Ivaldi FA. Learning Algorithms for Human-Machine Interfaces. IEEE Transactions on Biomedical Engineering. 2009;56:1502–1511. doi: 10.1109/TBME.2009.2013822.

- Darling WG, Cooke JD. Changes in the variability of movement trajectories with practice. Journal of Motor Behavior. 1987;19:291–309. doi: 10.1080/00222895.1987.10735414.

- Dicianno BE, Cooper RA, Coltellaro J. Joystick control for powered mobility: current state of technology and future directions. Physical Medicine and Rehabilitation Clinics of North America. 2010;21:79–86. doi: 10.1016/j.pmr.2009.07.013.

- Domkin D, Laczko J, Jaric S, Johansson H, Latash ML. Structure of joint variability in bimanual pointing tasks. Experimental Brain Research. 2002;143:11–23. doi: 10.1007/s00221-001-0944-1.

- Duda RO, Hart PE. Pattern classification and scene analysis. Wiley-Interscience; New York; London: 1973.

- Emken JL, Benitez R, Reinkensmeyer DJ. Human-robot cooperative movement training: learning a novel sensory motor transformation during walking with robotic assistance-as-needed. Journal of NeuroEngineering and Rehabilitation. 2007;4:8. doi: 10.1186/1743-0003-4-8.

- Felton E, Radwin R, Wilson J, Williams J. Evaluation of a modified Fitts law brain computer interface target acquisition task in able and motor disabled individuals. Journal of Neural Engineering. 2009;6:056002. doi: 10.1088/1741-2560/6/5/056002.

- Ferreira A, Celeste WC, Cheein FA, Bastos-Filho TF, Sarcinelli-Filho M, Carelli R. Human-machine interfaces based on EMG and EEG applied to robotic systems. Journal of NeuroEngineering and Rehabilitation. 2008;5:10. doi: 10.1186/1743-0003-5-10.

- Fetz EE, Finocchio DV. Operant conditioning of specific patterns of neural and muscular activity. Science. 1971;174:431–435. doi: 10.1126/science.174.4007.431.

- Flanders M. Temporal patterns of muscle activation for arm movements in three-dimensional space. Journal of Neuroscience. 1991;11:2680–2693. doi: 10.1523/JNEUROSCI.11-09-02680.1991.

- Gandolfo F, Mussa-Ivaldi F, Bizzi E. Motor learning by field approximation. Proceedings of the National Academy of Sciences USA. 1996;93:3843–3846. doi: 10.1073/pnas.93.9.3843.

- Gish H. A probabilistic approach to the understanding and training of neural network classifiers. Acoustics, Speech, and Signal Processing, 1990. ICASSP-90., 1990 International Conference on.1990. pp. 1361–1364. [Google Scholar]

- Giszter S, Patil V, Hart C. Primitives, premotor drives, and pattern generation: a combined computational and neuroethological perspective. Progress in Brain Research. 2007;165:323–346. doi: 10.1016/S0079-6123(06)65020-6.

- Harris CM, Wolpert DM. Signal-dependent noise determines motor planning. Nature. 1998;394:780–784. doi: 10.1038/29528.

- Hatsopoulos NG, Donoghue JP. The science of neural interface systems. Annual Review of Neuroscience. 2009;32:249–266. doi: 10.1146/annurev.neuro.051508.135241.

- Holdefer RN, Miller LE. Primary motor cortical neurons encode functional muscle synergies. Experimental Brain Research. 2002;146:233–243. doi: 10.1007/s00221-002-1166-x.

- Horn G. Electro-control: An EMG-controlled A/K prosthesis. Medical and Biological Engineering and Computing. 1972;10:61–73. doi: 10.1007/BF02474569.

- Huo X, Wang J, Ghovanloo M. Introduction and preliminary evaluation of the Tongue Drive System: wireless tongue-operated assistive technology for people with little or no upper-limb function. Journal of Rehabilitation Research and Development. 2008;45:921–930. doi: 10.1682/jrrd.2007.06.0096.

- Hyvärinen A, Karhunen J, Oja E. Independent Component Analysis. John Wiley & Sons; New York, NY: 2001.

- Jacob RJK. The use of eye movements in human-computer interaction techniques: what you look at is what you get. ACM Transactions on Information Systems. 1991;9:152–169.

- Jolliffe IT. Principal Component Analysis. Springer; New York, NY: 2002.

- Kaczmarek KA, Webster JG, Bach-y-Rita P, Tompkins WJ. Electrotactile and vibrotactile displays for sensory substitution systems. IEEE Transactions on Biomedical Engineering. 1991;38:1–16. doi: 10.1109/10.68204.

- Kalman RE. A New Approach to Linear Filtering and Prediction Problems. Journal of Basic Engineering (ASME). 1960;82:35–45.

- Kargo WJ, Nitz DA. Early skill learning is expressed through selection and tuning of cortically represented muscle synergies. Journal of Neuroscience. 2003;23:11255–11269. doi: 10.1523/JNEUROSCI.23-35-11255.2003.

- Krinskii VI, Shik ML. A simple motor task. Biophysics. 1964;9:661–666.

- Kuiken TA. Targeted reinnervation for improved prosthetic function. Physical Medicine and Rehabilitation Clinics of North America. 2006;17:1–13. doi: 10.1016/j.pmr.2005.10.001.

- Kuiken TA, Li G, Lock BA, Lipschutz RD, Miller LA, Stubblefield KA, Englehart KB. Targeted Muscle Reinnervation for Real-time Myoelectric Control of Multifunction Artificial Arms. JAMA. 2009;301:619–628. doi: 10.1001/jama.2009.116.

- Kuiken TA, Miller L, Lipschutz R, Stubblefield K, Dumanian G. Prosthetic command signals following targeted hyper-reinnervation nerve transfer surgery. Conference Proceedings - IEEE Engineering in Medicine and Biology Society; 2005. pp. 7652–7655.

- Kuiken TA, Miller LA, Lipschutz RD, Lock BA, Stubblefield K, Marasco PD, Zhou P, Dumanian GA. Targeted reinnervation for enhanced prosthetic arm function in a woman with a proximal amputation: a case study. Lancet. 2007;369:371–380. doi: 10.1016/S0140-6736(07)60193-7.

- Latash ML. Synergy. Oxford University Press; New York; Oxford: 2008.

- Latash ML, Scholz JP, Schoner G. Toward a new theory of motor synergies. Motor Control. 2007;11:276–308. doi: 10.1123/mcj.11.3.276.

- Lebedev MA, Nicolelis MA. Brain-machine interfaces: past, present and future. Trends in Neurosciences. 2006;29:536–546. doi: 10.1016/j.tins.2006.07.004.

- Liu X, Mosier KM, Mussa-Ivaldi FA, Casadio M, Scheidt RA. Reorganization of finger coordination patterns during adaptation to rotation and scaling of a newly learned sensorimotor transformation. Journal of Neurophysiology. 2011;105:454–473. doi: 10.1152/jn.00247.2010.

- Liu X, Scheidt RA. Contributions of online visual feedback to the learning and generalization of novel finger coordination patterns. Journal of Neurophysiology. 2008;99:2546–2557. doi: 10.1152/jn.01044.2007.

- Mak JN, Wolpaw JR. Clinical Applications of Brain-Computer Interfaces: Current State and Future Prospects. IEEE Rev Biomed Eng. 2009;2:187–199. doi: 10.1109/RBME.2009.2035356.

- McDonald PV, Oliver SK, Newell KM. Perceptual-motor exploration as a function of biomechanical and task constraints. Acta Psychologica. 1995;88:127–165. doi: 10.1016/0001-6918(93)e0056-8.

- Mosier KM, Scheidt RA, Acosta S, Mussa-Ivaldi FA. Remapping hand movements in a novel geometrical environment. Journal of Neurophysiology. 2005;94:4362–4372. doi: 10.1152/jn.00380.2005.

- Mussa-Ivaldi FA, Miller LE. Brain-machine interfaces: computational demands and clinical needs meet basic neuroscience. Trends in Neurosciences. 2003;26:329–334. doi: 10.1016/S0166-2236(03)00121-8.

- Nisbet P. Integrating assistive technologies: current practices and future possibilities. Medical Engineering & Physics. 1996;18:193–202. doi: 10.1016/1350-4533(95)00068-2.

- Okamura AM, Cutkosky MR, Dennerlein JT. Reality-based models for vibration feedback in virtual environments. Mechatronics, IEEE/ASME Transactions on. 2001;6:245–252.

- Papadimitriou C. Becoming en-wheeled: The situated accomplishment of reembodiment as a wheelchair user after spinal cord injury. Disability & Society. 2008;23:691–704.

- Patton JL, Stoykov ME, Kovic M, Mussa-Ivaldi FA. Evaluation of robotic training forces that either enhance or reduce error in chronic hemiparetic stroke survivors. Experimental Brain Research. 2006;168:368–383. doi: 10.1007/s00221-005-0097-8.

- Philips GR, Catellier AA, Barrett SF, Wright CH. Electrooculogram wheelchair control. Biomedical Sciences Instrumentation. 2007;43:164–169.

- Plotkin A, Sela L, Weissbrod A, Kahana R, Haviv L, Yeshurun Y, Soroker N, Sobel N. Sniffing enables communication and environmental control for the severely disabled. Proceedings of the National Academy of Sciences of the United States of America. 2010;107:14413–14418. doi: 10.1073/pnas.1006746107.

- Popovic D, Schwirtlich L. Belgrade active A/K prosthesis. In: Medica E, de Vries J, editors. Electrophysiological Kinesiology, Intern. Amsterdam: 1988. pp. 337–343. (Congress Ser. No).

- Pressman A, Casadio M, Acosta S, Fishbach A, Mussa-Ivaldi F. A First Step in Automatic Ongoing Recalibration for Body-Machine Interface Controller. Sixth Computational Motor Control Workshop. Be’er Sheva, Israel: 2010.

- Prochazka A. Proprioceptive feedback and movement regulation. In: Rowell LB, Shepherd JT, editors. Handbook of Physiology. Section 12. Exercise: Regulation and Integration of Multiple Systems. American Physiological Society; New York: 1996. pp. 89–127.

- Ranganathan R, Newell KM. Influence of augmented feedback on coordination strategies. Journal of Motor Behavior. 2009;41:317–330. doi: 10.3200/JMBR.41.4.317-330.

- Ranganathan R, Newell KM. Influence of motor learning on utilizing path redundancy. Neuroscience Letters. 2010;469:416–420. doi: 10.1016/j.neulet.2009.12.041.

- Salem C, Zhai S. An isometric tongue pointing device. ACM CHI 97 Conference on Human Factors in Computing Systems, volume 1 of TECHNICAL NOTES: Input & Output in the Future. 1997. pp. 538–539.

- Salisbury SK, Choy NL, Nitz J. Shoulder pain, range of motion, and functional motor skills after acute tetraplegia. Archives of Physical Medicine and Rehabilitation. 2003;84:1480–1485. doi: 10.1016/s0003-9993(03)00371-x.

- Santello M, Flanders M, Soechting JF. Postural Hand Synergies for Tool Use. Journal of Neuroscience. 1998;18:10105–10115. doi: 10.1523/JNEUROSCI.18-23-10105.1998.

- Saridis GN, Gootee TP. EMG pattern analysis and classification for a prosthetic arm. IEEE Transactions on Biomedical Engineering. 1982;29:403–412. doi: 10.1109/TBME.1982.324954.

- Scholz JP, Schoner G. The uncontrolled manifold concept: identifying control variables for a functional task. Experimental Brain Research. 1999;126:289–306. doi: 10.1007/s002210050738.

- Sejnowski TJ, Hinton GE. Unsupervised learning: foundations of neural computation. MIT Press; Cambridge, Mass.; London: 1999.

- Serruya MD, Hatsopoulos NG, Paninski L, Fellows MR, Donoghue JP. Instant neural control of a movement signal. Nature. 2002;416:141–142. doi: 10.1038/416141a.

- Seymour W. Remaking the body: Rehabilitation and change. Routledge; London, UK: 1998.

- Shimoga KB. A survey of perceptual feedback issues in dexterous telemanipulation. II. Finger touch feedback. Virtual Reality Annual International Symposium, 1993., 1993 IEEE. 1993:271–279.

- Simpson RC. Smart wheelchairs: A literature review. Journal of Rehabilitation Research and Development. 2005;42:423–436. doi: 10.1682/jrrd.2004.08.0101.

- Soechting JF, Buneo CA, Herrmann U, Flanders M. Moving effortlessly in three dimensions: does Donders’ law apply to arm movement? Journal of Neuroscience. 1995;15:6271–6280. doi: 10.1523/JNEUROSCI.15-09-06271.1995.

- Soechting JF, Lacquaniti F. An assessment of the existence of muscle synergies during load perturbations and intentional movements of the human arm. Experimental Brain Research. 1989;74:535–548. doi: 10.1007/BF00247355.

- Tenenbaum JB, de Silva V, Langford JC. A global geometric framework for nonlinear dimensionality reduction. Science. 2000;290:2319–2323. doi: 10.1126/science.290.5500.2319.

- Ting LH, Macpherson JM. A limited set of muscle synergies for force control during a postural task. Journal of Neurophysiology. 2005;93:609–613. doi: 10.1152/jn.00681.2004.

- Ting LH, McKay JL. Neuromechanics of muscle synergies for posture and movement. Current Opinion in Neurobiology. 2007;17:622–628. doi: 10.1016/j.conb.2008.01.002.

- Todorov E, Jordan MI. Optimal feedback control as a theory of motor coordination. Nature Neuroscience. 2002;5:1226–1235. doi: 10.1038/nn963.

- Townsend G, LaPallo B, Boulay C, Krusienski D, Frye G, Hauser C, Schwartz N, Vaughan T, Wolpaw J, Sellers E. A novel P300-based brain-computer interface stimulus presentation paradigm: Moving beyond rows and columns. Clinical Neurophysiology. 2010;121:1109–1120. doi: 10.1016/j.clinph.2010.01.030.

- Tresch MC, Saltiel P, Bizzi E. The construction of movement by the spinal cord. Nature Neuroscience. 1999;2:162–167. doi: 10.1038/5721.

- Tyler M, Danilov Y, Bach-y-Rita P. Closing an open-loop control system: vestibular substitution through the tongue. J Integr Neurosci. 2003;2:159–164. doi: 10.1142/s0219635203000263.

- van der Maaten LJP, Postma EO, van den Herik HJ. Dimensionality Reduction: A Comparative Review. Tilburg University Technical Report TiCC-TR 2009-005; 2009.

- Vergaro E, Casadio M, Squeri V, Giannoni P, Morasso P, Sanguineti V. Self-adaptive robot training of stroke survivors for continuous tracking movements. Journal of NeuroEngineering and Rehabilitation. 2010;7:13. doi: 10.1186/1743-0003-7-13.

- Wan EA. Neural network classification: a Bayesian interpretation. Neural Networks, IEEE Transactions on. 1990;1:303–305. doi: 10.1109/72.80269.

- Wiener N. Extrapolation, interpolation, and smoothing of stationary time series, with engineering applications. Technology Press of the Massachusetts Institute of Technology; Cambridge: 1949.

- Wiener N. The Human Use of Human Beings: Cybernetics and society. Eyre & Spottiswoode; London: 1950. p. 241. printed in U.S.A.

- Wolpaw JR. Brain-computer interfaces (BCIs) for communication and control: a mini-review. Suppl Clin Neurophysiol. 2004;57:607–613. doi: 10.1016/s1567-424x(09)70400-3.

- Wolpaw JR. Brain-computer interfaces as new brain output pathways. J Physiol. 2007;579:613–619. doi: 10.1113/jphysiol.2006.125948.

- Wolpaw JR, Birbaumer N, Heetderks WJ, McFarland DJ, Peckham PH, Schalk G, Donchin E, Quatrano LA, Robinson CJ, Vaughan TM. Brain-computer interface technology: a review of the first international meeting. IEEE Transactions on Rehabilitation Engineering. 2000;8:164–173. doi: 10.1109/tre.2000.847807.

- Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clinical Neurophysiology. 2002;113:767–791. doi: 10.1016/s1388-2457(02)00057-3.

- Wu W, Black MJ, Gao Y, Bienenstock E, Serruya M, Shaikhouni A, Donoghue JP. Neural decoding of cursor motion using a Kalman filter. Advances in Neural Information Processing Systems. 2003;15:133–140.

- Wu W, Black MJ, Mumford D, Gao Y, Bienenstock E, Donoghue JP. Modeling and decoding motor cortical activity using a switching Kalman filter. IEEE Transactions on Biomedical Engineering. 2004;51:933–942. doi: 10.1109/TBME.2004.826666.

- Yang JF, Scholz JP. Learning a throwing task is associated with differential changes in the use of motor abundance. Experimental Brain Research. 2005;163:137–158. doi: 10.1007/s00221-004-2149-x.