Abstract

Background

The Centers for Medicare and Medicaid Services is considering developing a 30-day ischemic stroke hospital-level mortality model using administrative data. We examined whether inclusion of the NIH Stroke Scale (NIHSS), a measure of stroke severity not included in administrative data, would alter hospital-level 30-day risk-standardized mortality rates in the Veterans Health Administration (VHA).

Methods

A total of 2562 veterans admitted with ischemic stroke to 64 VHA hospitals in fiscal year 2007 were included. First, we examined the distribution of unadjusted mortality rates across the VHA. Second, we estimated 30-day all-cause risk-standardized mortality rates (RSMRs) for each hospital by adjusting for age, gender and comorbid conditions using hierarchical models with and without inclusion of the NIHSS. Finally, we examined whether adjustment for the NIHSS significantly changed each hospital's RSMR relative to other hospitals.

Results

The median unadjusted mortality rate was 3.6%. Without the NIHSS, the RSMR inter-quartile range was 5.1% to 5.6%; adjustment for the NIHSS did not change it (5.1% to 5.6%). Among veterans ≥ 65 years, the RSMR inter-quartile range was 9.2% to 10.3% without the NIHSS and 9.4% to 10.0% with it. In a plot of 30-day RSMRs estimated with and without the NIHSS, the 95% confidence intervals overlapped across all hospitals, and no hospital was significantly below or above the mean unadjusted 30-day mortality rate. The 30-day mortality measure did not discriminate well among hospitals.

Conclusions

The impact of the NIHSS on RSMRs was limited. The small number of stroke admissions and the narrow range of 30-day stroke mortality rates at the facility level in the VHA cast doubt on the value of using 30-day RSMRs as a means of identifying outlier hospitals based on their stroke care quality.

Keywords: stroke, outcome assessment (health care), hospital mortality

Introduction

To evaluate hospital quality, the Centers for Medicare and Medicaid Services (CMS) have developed standardized methods that use administrative data to report hospital condition-specific 30-day mortality rates.1-3 Currently, hospital data on 30-day mortality for patients with pneumonia, congestive heart failure and acute myocardial infarction are available on the CMS Hospital Compare website.4 One measure under consideration by CMS is a 30-day hospital-level acute ischemic stroke mortality measure based on administrative data. However, serious concerns about this proposal were raised during the public comment period arranged by CMS.5 Both the American Academy of Neurology and the American Heart Association opposed the development of a 30-day acute ischemic stroke mortality measure using only administrative data.5 These organizations, along with many physicians and hospital administrators, doubted whether administrative data could adequately adjust for differences in case-mix between hospitals. Chief among these concerns was the absence of any validated measure of stroke severity in the administrative data or in the chart review data used during measure development. Many hospital administrators were concerned that, because of regional specialization in stroke care, patients with severe strokes would be more likely to be diverted to their hospitals, and that a 30-day mortality model based on administrative data alone would make those hospitals appear to have higher standardized 30-day mortality rates than other hospitals.
The National Institutes of Health Stroke Scale (NIHSS) is a widely used, validated measure of stroke severity and predicts both post-stroke functional outcomes6-8 and mortality.9-10 A 30-day risk-adjusted mortality model developed without the NIHSS or another validated measure of stroke severity could constitute a systematically flawed measurement system, leading policymakers and the public to erroneous conclusions.

The development of 30-day mortality measures to compare hospital performance has also been of interest to the Veterans Health Administration (VHA). In 2007, the VHA Office of Quality and Performance (OQP), in partnership with the VHA Stroke Quality Enhancement Research Initiative (QUERI), undertook a comprehensive review of the quality of VHA ischemic stroke care. We used these national VHA data to assess the importance of including the NIHSS in a 30-day mortality model that generates adjusted mortality rates for comparing VA hospital performance, and to examine whether 30-day mortality could be used to rank hospitals by their stroke care quality.

Methods

Sample

A sample of 5000 veterans admitted to a VA hospital in fiscal year 2007 (FY07)11 with a primary discharge diagnosis of ischemic stroke was identified from VHA administrative data using a modified high-specificity algorithm based on International Classification of Diseases, 9th Revision codes.12 The sample included all veterans at small-volume centers (≤55 patients in FY07) and an 80% random sample of veterans at high-volume centers (>55 patients in FY07). Data were collected through retrospective review of medical records by abstractors from the West Virginia Medical Institute who were specially trained for this study. A total of 1013 patients were excluded because they had a carotid endarterectomy during the stroke hospitalization, the stroke occurred after admission, they had a transient ischemic attack rather than a stroke, and/or they were admitted only for post-stroke rehabilitation. To be consistent with current CMS measure specifications for 30-day acute ischemic stroke mortality,13 we further restricted the sample by excluding hospitals with fewer than 25 stroke patients and patients transferred from other hospitals, leaving a final sample of 2562 patients from 64 hospitals. In a secondary analysis, we excluded veterans younger than 65 years to obtain a sample more relevant to CMS policymakers; this analysis included 543 veterans across 17 hospitals.
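The two-stage selection described above (full sampling at small-volume centers, an 80% random sample at high-volume centers, then CMS-style restrictions) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the study's actual extraction code: the record keys (`hospital`, `transferred`, `clinical_exclusion`) and function names are hypothetical, and we assume the 25-patient hospital threshold is applied after patient-level exclusions.

```python
import random
from collections import defaultdict

SMALL_VOLUME_MAX = 55        # centers with <=55 FY07 stroke patients are sampled in full
LARGE_SAMPLE_FRACTION = 0.8  # 80% random sample at high-volume centers
MIN_HOSPITAL_N = 25          # CMS-style minimum number of patients per hospital


def draw_sample(patients, seed=2007):
    """Stratified selection by hospital volume.

    `patients` is a list of dicts with a hypothetical 'hospital' key.
    """
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    by_hospital = defaultdict(list)
    for p in patients:
        by_hospital[p["hospital"]].append(p)

    sampled = []
    for group in by_hospital.values():
        if len(group) <= SMALL_VOLUME_MAX:
            sampled.extend(group)  # small-volume center: keep everyone
        else:
            k = round(LARGE_SAMPLE_FRACTION * len(group))
            sampled.extend(rng.sample(group, k))  # high-volume center: 80% sample
    return sampled


def apply_restrictions(sampled):
    """Drop excluded patients, then hospitals left with fewer than 25 patients.

    Assumes hypothetical boolean keys 'clinical_exclusion' (e.g., in-hospital
    stroke, TIA, rehabilitation-only admission) and 'transferred'.
    """
    kept = [p for p in sampled
            if not p["clinical_exclusion"] and not p["transferred"]]
    counts = defaultdict(int)
    for p in kept:
        counts[p["hospital"]] += 1
    return [p for p in kept if counts[p["hospital"]] >= MIN_HOSPITAL_N]
```

Because hospital counts are recomputed after patient-level exclusions, a hospital near the 25-patient threshold can drop out once transfers are removed; applying the threshold before exclusions would be a different (also defensible) design choice.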

Variables

Our main dependent variable was 30-day stroke mortality, defined as death from any cause within 30 days after the index admission date. Mortality was assessed from the VA Vital Status Files (VSF), which identify VA beneficiary deaths from a variety of VA and non-VA sources (e.g., CMS). Previous reports indicate that the VA VSF is relatively complete and accurate compared with the National Death Index (NDI), the typical “gold standard” for death ascertainment: more than 98.3% of deaths in the VA VSF were confirmed in the NDI.14 Our main independent variable was the NIHSS, which trained abstractors retrospectively constructed from physician notes written within 24 hours of admission. Consistent with prior work, we categorized the NIHSS using the following cutoffs: 0-2, 3-5, 6-10, 11-15, 16-20, 21-25 and >25.9 Covariates included age, gender, and the comorbid conditions available for each veteran. Comorbid conditions abstracted from the medical record included a past history of hypertension, hyperlipidemia, diabetes mellitus, coronary artery disease, acute myocardial infarction, coronary artery bypass graft, percutaneous transluminal coronary angiography, transient ischemic attack or ischemic stroke, intracranial hemorrhage or prior hemorrhagic stroke, any intracranial surgery or carotid intervention, congestive heart failure, atrial fibrillation, chronic obstructive pulmonary disease, peripheral vascular disease, kidney disease, dialysis, dementia, depression, deep venous thrombosis, HIV, cancer, liver disease, any rheumatologic disorder, and a history of gastrointestinal or genitourinary hemorrhage.
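As an illustration of how the outcome and the main independent variable could be coded, the following Python sketch maps an NIHSS total to the categories listed above and flags all-cause death within 30 days of the index admission. The function names are hypothetical, and we assume that a death on day 30 counts as a 30-day death; the text does not specify how that boundary was handled.

```python
from datetime import date, timedelta
from typing import Optional

# (low, high, label) cut points as listed in the text; scores above 25 fall in ">25"
NIHSS_BINS = [(0, 2, "0-2"), (3, 5, "3-5"), (6, 10, "6-10"), (11, 15, "11-15"),
              (16, 20, "16-20"), (21, 25, "21-25")]


def nihss_category(score: int) -> str:
    """Map an NIHSS total (0-42) to the categorical coding used in the models."""
    if not 0 <= score <= 42:
        raise ValueError("NIHSS total must be between 0 and 42")
    for low, high, label in NIHSS_BINS:
        if low <= score <= high:
            return label
    return ">25"


def died_within_30_days(admission: date, death: Optional[date]) -> bool:
    """All-cause death within 30 days after the index admission date.

    Assumption: a death on day 30 is counted; death=None means no recorded death.
    """
    if death is None:
        return False
    return timedelta(0) <= death - admission <= timedelta(days=30)
```

Entering the NIHSS as categories rather than as a linear term lets the model capture the steep, non-linear rise in risk at the highest scores.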

Analyses

Patient-level-analysis

Although the focus of this study was the comparison of 30-day hospital-level mortality rates, we first verified, using logistic regression adjusted for age, gender and comorbid conditions, that the NIHSS was an important independent patient-level predictor of 30-day mortality in our sample. We also determined the incremental effect of adding the NIHSS on the C-statistic of the model that included only age, gender and comorbid conditions.
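The C-statistic is the probability that a randomly selected patient who died had a higher predicted risk than a randomly selected survivor, with ties counted as one half. A minimal Python sketch of that pairwise definition follows, assuming predicted probabilities from the two fitted logistic models are already available (model fitting is not shown, and `c_statistic` is a hypothetical helper name).

```python
from itertools import product


def c_statistic(y_true, y_score):
    """Concordance between 0/1 outcomes and predicted risks.

    Counts every (death, survivor) pair: 1 if the death has the higher
    predicted risk, 0.5 for a tie, 0 otherwise.
    """
    events = [s for y, s in zip(y_true, y_score) if y == 1]
    nonevents = [s for y, s in zip(y_true, y_score) if y == 0]
    if not events or not nonevents:
        raise ValueError("need at least one death and one survivor")
    concordant = 0.0
    for e, n in product(events, nonevents):
        if e > n:
            concordant += 1.0
        elif e == n:
            concordant += 0.5
    return concordant / (len(events) * len(nonevents))
```

Comparing `c_statistic(deaths, p_base)` with `c_statistic(deaths, p_nihss)` for the two models' predictions gives the incremental change in discrimination. The pairwise loop is quadratic in sample size but adequate for a cohort of a few thousand patients.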

Hospital-level-analysis

We examined the distribution of patient demographic and clinical characteristics at the hospital level, reporting the median and range across hospitals. We calculated the mean, median and inter-quartile range of the observed unadjusted mortality rates across the 64 hospitals. Using the approach developed by CMS and endorsed by the National Quality Forum,4 we calculated a risk-standardized mortality rate (RSMR) for each hospital. The CMS method estimates hospital-level 30-day all-cause risk-standardized rates using a hierarchical logistic regression model that accounts for the clustering of patients within hospitals and for variation in sample size among hospitals. The RSMR is the ratio of the number of “predicted” deaths (based on the hospital's own estimated effect) to the number of “expected” deaths (based on the average hospital effect for the same patients), multiplied by the national unadjusted mortality rate.1, 4 Hospitals are then classified as better than, worse than, or no different from the national average according to whether the 95% confidence interval of the RSMR lies entirely below, lies entirely above, or overlaps the national unadjusted mortality rate. Using this method,4 we calculated RSMRs for each hospital and examined their mean, median and inter-quartile range across the VHA. We developed two hierarchical models, one including age, gender and comorbid conditions and one including these variables plus the NIHSS, and used each model to calculate the RSMR for each hospital.
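The predicted-to-expected calculation can be sketched as follows, assuming the hierarchical model has already been fitted. Here `intercept` stands for the average hospital intercept, `hospital_effect` for a hospital's estimated random intercept, and `linear_predictors` for each patient's covariate contribution; these names are hypothetical. The 95% interval passed to `classify` is assumed to come from a separate resampling step that is not shown.

```python
import math


def expit(z: float) -> float:
    """Inverse logit: converts a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def rsmr(linear_predictors, intercept, hospital_effect, national_rate):
    """Predicted / expected deaths for one hospital, times the national rate.

    linear_predictors: covariate part (x_i * beta) for each of the hospital's patients.
    """
    # "Predicted": this hospital's own random intercept applied to its patients.
    predicted = sum(expit(intercept + hospital_effect + xb) for xb in linear_predictors)
    # "Expected": the same patients treated at an average hospital (effect = 0).
    expected = sum(expit(intercept + xb) for xb in linear_predictors)
    return predicted / expected * national_rate


def classify(ci_low, ci_high, national_rate):
    """Compare a hospital's RSMR interval with the national rate (lower is better)."""
    if ci_high < national_rate:
        return "better than national rate"
    if ci_low > national_rate:
        return "worse than national rate"
    return "no different from national rate"
```

A hospital whose estimated random intercept is zero receives an RSMR equal to the national rate regardless of its case-mix, which is why shrinkage of small hospitals' effects toward zero pulls their RSMRs toward the national rate.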

To examine the impact of the NIHSS as an additional adjuster, we performed several analyses comparing the RSMRs estimated with and without the NIHSS. First, for all patients, we plotted each hospital's RSMR and associated 95% confidence interval with and without the NIHSS to examine whether mortality rates changed significantly for each hospital and relative to other hospitals. We did not examine the impact of the NIHSS on hospital rankings in patients aged 65 and older because the small volume prohibited any meaningful comparisons. Second, we calculated Pearson's r and Spearman's rho to assess the correlation between the RSMRs estimated from the two models. Third, we examined the correlations of facility mean NIHSS scores with facility observed mortality rates, with RSMRs from the model excluding the NIHSS, and with RSMRs from the model including the NIHSS as an adjuster.
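Pearson's r and Spearman's rho between the two sets of hospital RSMRs can be computed without external libraries, as in the following sketch. Spearman's rho is computed here as Pearson's r on ranks, with tied values given their average rank; the p-values reported in the Results are not reproduced, and the function names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of the same length (n >= 2)")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average 1-based rank of the tied block
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman rank correlation: Pearson's r applied to average ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))
```

Reporting both statistics separates agreement in the RSMR values themselves (Pearson) from agreement in hospital ordering (Spearman), which is what a ranking-based comparison depends on.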

Sensitivity Analysis in estimating 30-day RSMRs

The RSMR methodology is intended to address statistical estimation error as well as case-mix differences between hospitals.1 Given the relatively small patient volume at each hospital, statistical estimation error is a potential concern. We conducted a sensitivity analysis to assess the incremental impact of the adjuster variables (age, gender, and comorbid conditions) by comparing the RSMRs from the covariate-adjusted hierarchical models with alternative 30-day hospital mortality rates estimated with the RSMR methodology but without any adjuster variables. All analyses were conducted using SAS Software, version 9.2 (SAS Institute, Inc., Cary, NC).
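The statistical estimation error addressed by the RSMR methodology arises largely because small hospitals produce noisy observed rates, which a hierarchical model pulls toward the overall mean. The sketch below illustrates that shrinkage with a simpler beta-binomial posterior mean rather than the CMS hierarchical logistic model; `prior_strength` (the number of pseudo-patients the prior is worth) is a hypothetical tuning value, not a quantity estimated in this study.

```python
def shrunken_rate(deaths: int, patients: int, overall_rate: float,
                  prior_strength: float) -> float:
    """Beta-binomial posterior mean of one hospital's mortality rate.

    Illustrative stand-in for hierarchical shrinkage: the prior is a Beta
    distribution centred on overall_rate and worth `prior_strength`
    pseudo-patients (a hypothetical tuning value).
    """
    if patients + prior_strength <= 0:
        raise ValueError("need patients or a positive prior strength")
    a = prior_strength * overall_rate        # pseudo-deaths
    b = prior_strength * (1 - overall_rate)  # pseudo-survivors
    return (deaths + a) / (patients + a + b)
```

For example, a hospital with 0 deaths among 25 patients and one with 0 deaths among 97 patients both have an observed rate of 0%, but with `overall_rate=0.051` and `prior_strength=100` the smaller hospital is pulled further toward 5.1% (about 4.1% versus 2.6%).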

Results

Patient-level Characteristics and Model

A total of 2562 patients met the inclusion criteria. The median age for the entire sample of veterans was 66 years (patient-level data not included in tables). About half the population (50.5%) had an NIHSS of ≤2, suggesting low stroke severity for most patients. Only 8.2% of the population had an NIHSS of ≥11. The mean NIHSS in the sample was 2, with an inter-quartile range of 1 to 5. Among veterans ≥ 65, the mean NIHSS was 3, with an inter-quartile range of 2 to 6.

We first confirmed that, at the patient level in our sample, the NIHSS was an important independent predictor of 30-day mortality. Patients older than 65 had more than twice the odds of 30-day mortality of patients under 65 [odds ratio 2.4, 95% CI (1.5-3.8)], and patients with an NIHSS greater than 25 had 59-fold higher odds of mortality than patients with an NIHSS of 0-2 [odds ratio 59.2, 95% CI (26.2-133.5)]. Two other patient factors were associated with 30-day mortality: a history of coronary artery disease and/or acute myocardial infarction [odds ratio 1.7, 95% CI (1.1-2.5)] and a history of cancer [odds ratio 2.2, 95% CI (1.3-3.6)]. None of the remaining comorbid conditions was significantly associated with 30-day stroke mortality. Adding the NIHSS to the model containing age, gender and comorbid conditions increased the C-statistic from 0.75 to 0.83 (patient-level data not shown).
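The odds ratios above are exponentiated logistic regression coefficients, and a Wald 95% confidence interval is symmetric on the log scale. The following sketch recovers the approximate coefficient and standard error from a reported odds ratio and interval; because the published values are rounded, the recovered quantities are approximations, and the helper names are hypothetical.

```python
import math


def log_or_and_se(odds_ratio, ci_low, ci_high, z=1.96):
    """Log-odds coefficient and approximate SE from an OR and its Wald 95% CI.

    The CI spans 2 * z standard errors on the log scale.
    """
    beta = math.log(odds_ratio)
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z)
    return beta, se


def wald_ci(beta, se, z=1.96):
    """Back-transform a log-scale Wald interval to the odds-ratio scale."""
    return math.exp(beta - z * se), math.exp(beta + z * se)
```

For the age effect (odds ratio 2.4, 95% CI 1.5-3.8), `log_or_and_se(2.4, 1.5, 3.8)` gives a coefficient of about 0.88 and a standard error of about 0.24. The very wide interval for NIHSS >25 (26.2-133.5) reflects the small number of patients in that category.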

Population Characteristics across Different Hospitals

A total of 2562 patients across 64 hospitals met the inclusion criteria outlined by CMS. The hospital-level median age for the entire sample of veterans was 67.6 years, with a range of 61.5 to 75.4 years (Table 1). The sample was predominantly male, which is typical of the veteran population. The prevalence of individual comorbidities varied considerably across hospitals (Table 1). Hypertension was the most prevalent condition, with a median prevalence of 79%. Hyperlipidemia, diabetes and coronary artery disease were also common across hospitals. The hospital-level median NIHSS was 3.8, ranging from 0.8 to 6.8 across hospitals. The median number of patients per hospital was 37 (range 25 to 97), with 15 hospitals admitting more than 80 stroke patients in FY07 (Table 2). The mean unadjusted mortality rate was 5.1% [95% CI (4.0%-6.2%)]. The median unadjusted mortality rate was 3.6% [inter-quartile range (2.4%-7.5%)], with a range of 0 to 17.8% (Table 2).

Table 1. Population Characteristics across Hospitals.

| Characteristic | Hospital Sample Includes All Veterans | Hospital Sample Includes Veterans ≥ 65 |
|---|---|---|
| No. Hospitals | 64 | 17 |
| No. Patients | 2562 | 543 |
| Hospital median age, yrs (range) | 67.6 (61.5-75.4) | 76.5 (73.4-80.1) |
| Male, median % (range) | 98 (88-100) | 100 (90-100) |
| Comorbid conditions, median % (range) | | |
| Hypertension | 79 (52-98) | 50 (20-78.8) |
| Hyperlipidemia | 50 (11-72) | 36.6 (16-62.9) |
| Diabetes mellitus | 41 (9-66) | 0 (0-5.9) |
| Coronary artery disease, acute myocardial infarction, coronary artery bypass graft or percutaneous transluminal coronary angiography | 33 (8-62) | 37.0 (22.9-66.7) |
| Prior ischemic stroke or transient ischemic attack | 28 (0-53) | 34.6 (0-44) |
| Depression | 16 (0-39) | 0 (0-9.1) |
| Atrial fibrillation | 15 (4-29) | 87.9 (69.7-96) |
| Chronic obstructive pulmonary disease or asthma | 13 (0-46) | 15.9 (3.2-29.6) |
| Congestive heart failure | 11 (0-29) | 14.3 (4-27.3) |
| Peripheral vascular disease | 8 (0-24) | 10.3 (0-14.7) |
| Cancer | 7 (0-19) | 0 (0-3.8) |
| Dementia | 7 (0-24) | 12.1 (7.3-25) |
| Any intracranial surgery or carotid intervention | 4 (0-24) | 6.5 (0-33.3) |
| Intracranial hemorrhage or prior hemorrhagic stroke | 0 (0-18) | 0 (0-6.5) |
| Kidney disease requiring dialysis or dialysis in past 7 days* | 0 (0-7) | 14.8 (4-25.7) |
| HIV | 0 (0-11) | 9.7 (0-22.2) |
| Deep venous thrombosis or pulmonary embolism | 0 (0-9) | 0 (0-0) |
| Liver disease | 0 (0-13) | 0 (0-4) |
| Rheumatologic disorder (systemic lupus erythematosus or vasculitis) | 0 (0-5) | 20 (8-39.4) |
| Active internal bleeding: gastrointestinal or genitourinary bleeding within past 21 days* | 0 (0-17) | 0 (0-20.7) |
| Serious head injury in past 3 months* | 0 (0-4) | 0 (0-3.4) |
| NIH Stroke Scale, hospital-level median (range) | 3.8 (0.8-6.8) | 4.9 (2.5-7.7) |

*Three of the variables (marked with an asterisk) were collected as part of the OQP stroke special project to assess the appropriateness of thrombolytic therapy, which explains the time windows attached to them.

Table 2. Distribution of Unadjusted and Risk Standardized Mortality across Hospitals.

| Hospital Characteristics | Hospital Sample Includes All Veterans | Hospital Sample Includes Veterans ≥ 65 |
|---|---|---|
| No. Patients | 2562 | 543 |
| No. Hospitals | 64 | 17 |
| Median patients per hospital (range) | 37 (25-97) | 29 (25-52) |
| Observed (unadjusted) mortality rate | | |
| Mean mortality rate (95% CI) | 5.1% (4.0%-6.2%) | 8.8% (5.4%-12.1%) |
| Median (IQR, 25%, 75%) | 3.6% (2.4%, 7.5%) | 8% (3.8%, 11.1%) |
| Range (min-max) | 0-17.8% | 0-21.2% |
| RSMR without inclusion of NIHSS in model | | |
| Mean RSMR (95% CI) | 5.40% (2.65%-9.60%) | 9.84% (3.09%-21.9%) |
| Median (IQR, 25%, 75%) | 5.35% (5.1%-5.6%) | 10.04% (9.24%-10.27%) |
| Range (min-max) | 4.79%-6.82% | 6.86%-12.09% |
| RSMR with inclusion of NIHSS in model | | |
| Mean RSMR (95% CI) | 5.42% (2.52%-9.92%) | 9.75% (3.16%-21.38%) |
| Median (IQR, 25%, 75%) | 5.24% (5.1%-5.6%) | 9.90% (9.40%-10.04%) |
| Range (min-max) | 4.72%-6.81% | 7.53%-11.58% |

The cohort included all veterans at small-volume centers (≤55 patients in fiscal year 2007) and an 80% random sample of veterans at high-volume centers (>55 patients in fiscal year 2007).

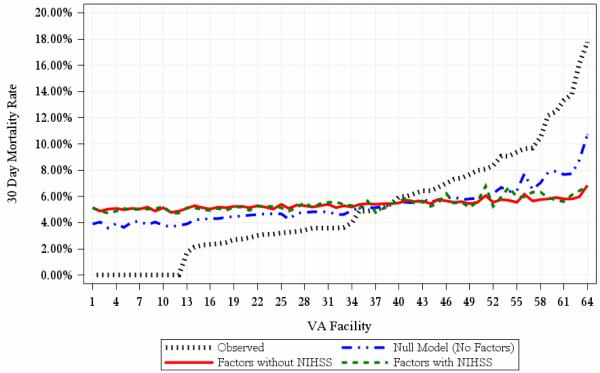

30-day RSMRs across Hospitals

After adjustment for age, gender and comorbid conditions, the mean RSMR across the VHA was 5.40% [95% CI (2.65%-9.60%)], compared with a mean unadjusted rate of 5.1%. Adding the NIHSS (categorized as 0-2, 3-5, 6-10, 11-15, 16-20, 21-25, >25) to the model including age, gender and comorbid conditions did not appreciably change the mean adjusted mortality rate [5.42%, 95% CI (2.52%-9.92%)]. The RSMR inter-quartile range was 5.1% to 5.6% both without and with the NIHSS. Figure 1 displays the very small difference between adjusted rates with and without the NIHSS.

Figure 1.

Risk Standardized 30-day mortality rates with and without inclusion of the NIH stroke scale compared to the raw unadjusted mortality rates and the null model (model without any covariates).

Among veterans ≥ 65 years, the mean unadjusted mortality rate was higher than in the entire VA sample [8.8%, 95% CI (5.4%-12.1%)] (Table 2). After adjustment for age, gender and comorbid conditions, the mean RSMR increased to 9.8% [95% CI (3.1%-21.9%)]. Again, including the NIHSS in the 30-day mortality model did not appreciably change the mean RSMR [9.8%, 95% CI (3.2%-21.4%)] in this older population.

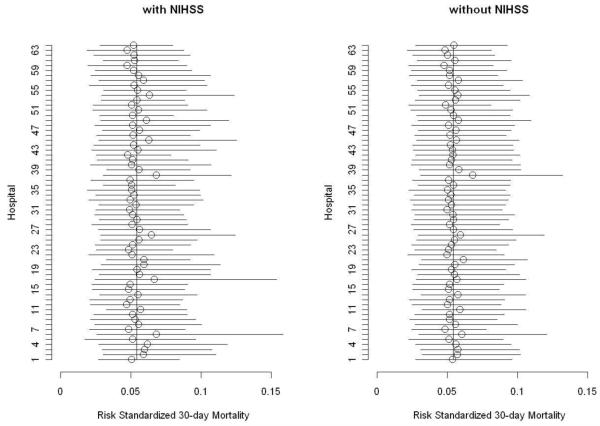

Impact of the NIHSS on Comparisons of 30-day RSMRs among All Hospitals

To examine whether 30-day RSMRs differed across hospitals and whether inclusion of the NIHSS affected these differences, we plotted the 30-day RSMRs estimated with and without the NIHSS in the model. Figure 2 shows overlapping 95% confidence intervals across all hospitals, with no hospital significantly below or above the mean unadjusted 30-day mortality rate; the 30-day mortality measure did not discriminate well among hospitals. The RSMRs from the two models were highly correlated (Pearson's r = .940, p < .001; Spearman's rho = .933, p < .001). Finally, we found a modest correlation between facility mean NIHSS scores and their observed mortality rates (r = .369, p < .01). Before the RSMRs were adjusted for the NIHSS, the correlation between facility RSMRs and facility mean NIHSS scores remained modest (r = .371, p < .01); after adjustment for the NIHSS, the correlation became non-significant. Thus, patients' stroke severity accounted for some additional variation in facility mortality rates, but the effect was not strong enough to make a substantive difference in the RSMRs.

Figure 2.

Risk Standardized 30-day mortality rates and 95% confidence intervals with and without inclusion of the NIH stroke scale in the hierarchical model (all veterans).

Sensitivity Analysis

We assessed the incremental impact of the adjuster variables (age, gender, and comorbid conditions) by comparing the 30-day hospital-level RSMRs with alternative 30-day hospital mortality rates. These alternative rates were estimated with the RSMR methodology but without the adjuster variables (null model, Figure 1). Their confidence intervals and inter-quartile ranges differed from those of the observed rates but were very close to those of the RSMRs. The alternative 30-day hospital-level RSMR for the total sample had a mean of 5.3% and an inter-quartile range of 4.3% to 5.9%.

Discussion

We confirmed that the NIHSS is an important patient-level characteristic associated with 30-day mortality.9-10 Adding the NIHSS to the CMS-like adjustment models increased model discrimination. However, adding the NIHSS to a model including age, gender and comorbid conditions did not alter hospital-level 30-day mortality rates in the VHA. The small number of stroke admissions and the narrow range of 30-day stroke mortality rates at the facility level in the VHA cast doubt on the value of using 30-day RSMRs to identify outlier hospitals in terms of stroke care quality. If the VA were to follow the methodology used by CMS Hospital Compare to report 30-day ischemic stroke mortality, all 64 VA hospitals would receive the same rating, “no different from national rate,” whether or not the NIHSS was included. However, data from the national Office of Quality and Performance study of stroke care in the VA demonstrate significant differences across VA hospitals in the quality of stroke care processes.11 The power to detect differences in mortality in this study may be inadequate regardless of the risk adjustment method used.
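To illustrate why hospital volume limits power, the sketch below uses a simplified, per-hospital one-sided exact binomial test against a fixed national rate; this is a stand-in for illustration and not the CMS hierarchical classification. `min_deaths_to_flag` finds the smallest death count that would be significant at the chosen alpha, and `power_to_flag` gives the probability of reaching it under a hypothetical true rate. Both function names are hypothetical.

```python
from math import comb


def upper_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k, n + 1))


def min_deaths_to_flag(n, p0, alpha=0.025):
    """Smallest death count whose one-sided exact tail under p0 is <= alpha.

    alpha=0.025 one-sided mirrors the upper half of a two-sided 95% test.
    Returns None if even n deaths would not be significant.
    """
    for k in range(n + 1):
        if upper_tail(k, n, p0) <= alpha:
            return k
    return None


def power_to_flag(n, p0, p_true, alpha=0.025):
    """Probability that a hospital with true rate p_true reaches the threshold."""
    k = min_deaths_to_flag(n, p0, alpha)
    return 0.0 if k is None else upper_tail(k, n, p_true)
```

With 37 patients (the median hospital volume in Table 2) and a national rate of 5.1%, `min_deaths_to_flag(37, 0.051)` returns 6, so a hospital would need an observed 30-day mortality of about 16% before even this simple test flagged it as worse than the national rate.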

Our limited sample of patients 65 and older prevents us from providing insight into the relative importance of including the NIHSS in the CMS 30-day acute ischemic stroke mortality model. We did observe a modest improvement in model discrimination, which could suggest that the NIHSS may be important for hospital-level comparisons. However, our findings may not apply to clinical settings outside the VHA. There is less variation in 30-day stroke mortality among veterans in the VHA, even among those 65 and older, than in the Medicare population. The mean 30-day mortality rate reported for Medicare beneficiaries in hospitals participating in the Get With the Guidelines (GWTG) Stroke Program was 13.9%, higher than the mean 30-day mortality rate among veterans ≥ 65 (8.8%).15 Among the 1036 hospitals that participated in the GWTG Stroke Program, the mean NIHSS among stroke patients was also higher than in our sample of patients ≥ 65 (mean NIHSS of 5 vs. 3). However, hospitals that participate in the GWTG Stroke Program are particularly interested in stroke care and may not serve a nationally representative population either. The NIHSS may play a greater role in a system of care with greater variation in stroke severity, such as the Medicare program. A larger study of a cross-section of different types of hospitals across the US should be undertaken to conclusively evaluate the impact of the NIHSS when comparing facility performance in the Medicare program.

Our study has several important limitations. First, we used medical record data rather than administrative data to analyze the impact of the NIHSS on hospital RSMRs, so any extrapolation of our results to models that use administrative data must be made cautiously. Second, our analysis is based on a predominantly male cohort, which may limit the generalizability of our findings. Third, our main independent variable, the NIHSS, was constructed retrospectively from physician notes. However, previous studies have shown that the summed score of the retrospective NIHSS is very highly correlated with the prospective NIHSS.16 Finally, our small sample size and limited facility-specific volume, although in line with CMS guidelines restricting inclusion to facilities with at least 25 patients,13 may have diminished our ability to examine the impact of the NIHSS.

Summary

The limited influence of the NIHSS on hospital-level 30-day mortality rates that we observed may reflect the sample size and the narrow range of variability in both 30-day mortality and stroke severity across the VHA. The NIHSS may have a greater role in adjusting facility-level mortality rates in a system of care with greater variation in stroke severity and/or mortality (such as the Medicare program), in a system with more stroke admissions per hospital, or in one in which mortality is reported over a longer time frame. A larger study of a cross-section of different types of hospitals across the US is needed to conclusively evaluate the impact of the NIHSS when comparing facility performance in the Medicare program.

Footnotes

Disclosures

We have no conflicts of interest to report.

References

1. Risk adjustment models for AMI and HF 30-day mortality. Report prepared for the Centers for Medicare and Medicaid Services. http://www.qualitynet.org. Accessed March 2, 2011.

2. Krumholz HM, Wang Y, Mattera JA, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with an acute myocardial infarction. Circulation. 2006;113:1683–92. doi: 10.1161/CIRCULATIONAHA.105.611186.

3. Krumholz HM, Wang Y, Mattera JA, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with heart failure. Circulation. 2006;113:1693–701. doi: 10.1161/CIRCULATIONAHA.105.611194.

4. Hospital Compare. US Department of Health and Human Services; 2011. http://www.hospitalcompare.hhs.gov/. Accessed March 2, 2011.

5. Verbatim Public Comments for the Stroke Mortality and Readmission Measure. Centers for Medicare and Medicaid Services; Baltimore, MD: 2010.

6. Bruno A, Saha C, Williams LS. Percent change on the National Institutes of Health Stroke Scale: a useful acute stroke outcome measure. J Stroke Cerebrovasc Dis. 2009;18:56–9. doi: 10.1016/j.jstrokecerebrovasdis.2008.09.002.

7. Schmid AA, Wells CK, Concato J, et al. Prevalence, predictors, and outcomes of poststroke falls in acute hospital setting. J Rehabil Res Dev. 2010;47:553–62. doi: 10.1682/jrrd.2009.08.0133.

8. Schmid AA, Kapoor JR, Dallas M, Bravata DM. Association between stroke severity and fall risk among stroke patients. Neuroepidemiology. 2010;34:158–62. doi: 10.1159/000279332.

9. Smith EE, Shobha N, Dai D, et al. Risk score for in-hospital ischemic stroke mortality derived and validated within the Get With the Guidelines-Stroke Program. Circulation. 2010;122:1496–504. doi: 10.1161/CIRCULATIONAHA.109.932822.

10. Adams HP Jr, Davis PH, Leira EC, et al. Baseline NIH Stroke Scale score strongly predicts outcome after stroke: a report of the Trial of Org 10172 in Acute Stroke Treatment (TOAST). Neurology. 1999;53:126–31. doi: 10.1212/wnl.53.1.126.

11. Arling G, Reeves M, Ross J, et al. Estimating and reporting on the quality of inpatient stroke care by Veterans Health Administration Medical Centers. Circ Cardiovasc Qual Outcomes. 2012;5:44–51. doi: 10.1161/CIRCOUTCOMES.111.961474.

12. Reker DM, Reid K, Duncan PW, et al. Development of an integrated stroke outcomes database within Veterans Health Administration. J Rehabil Res Dev. 2005;42:77–91. doi: 10.1682/jrrd.2003.11.0164.

13. Krumholz H. Summary of Technical Expert Panel Recommendations for: 30-Day Risk Standardized All Cause Readmission and Mortality in Acute Ischemic Stroke. Report prepared by Yale New Haven Health Services Corporation/Center for Outcomes Research and Evaluation under contract for the Centers for Medicare and Medicaid Services. New Haven, CT: 2010.

14. Sohn MW, Arnold N, Maynard C, Hynes DM. Accuracy and completeness of mortality data in the Department of Veterans Affairs. Popul Health Metr. 2006;4:2. doi: 10.1186/1478-7954-4-2.

15. Fonarow GC, Smith EE, Reeves MJ, et al. Hospital-level variation in mortality and rehospitalization for Medicare beneficiaries with acute ischemic stroke. Stroke. 2011;42:159–66. doi: 10.1161/STROKEAHA.110.601831.

16. Williams LS, Yilmaz EY, Lopez-Yunez AM. Retrospective assessment of initial stroke severity with the NIH Stroke Scale. Stroke. 2000;31:858–62. doi: 10.1161/01.str.31.4.858.