Abstract

Recent technological advances provide researchers with a way of gathering real-time information on an individual's movement through the use of wearable devices that record acceleration. In this paper, we propose a method for identifying activity types, like walking, standing, and resting, from acceleration data. Our approach decomposes movements into short components called “movelets”, and builds a reference for each activity type. Unknown activities are predicted by matching new movelets to the reference. We apply our method to data collected from a single, three-axis accelerometer and focus on activities of interest in studying physical function in elderly populations. An important technical advantage of our methods is that they allow identification of short activities, such as taking two or three steps and then stopping, as well as activities that occur rarely relative to the length of the whole time series, such as sitting on a chair. Based on our results we provide simple and actionable recommendations for the design and implementation of large epidemiological studies that could collect accelerometry data for the purpose of predicting the time series of activities and connecting it to health outcomes.

Keywords: Accelerometer, matching, time series, physical activity

1. Introduction

Accurate measurement of physical activity is necessary for understanding the complex relationship between an individual's health outcomes and his or her behavior profile. Unfortunately, standard measures of activity such as questionnaires and diaries are based on self-reporting and are subject to known shortcomings. Moreover, these measures typically offer snapshots of activity and do not reflect the dynamic nature of movement in the real world. Recently, progress in sensor technologies and wearable computing devices has allowed researchers to collect real-time information on movement through the use of accelerometers. In this paper, we propose a method for predicting activity types, such as walking, standing and sitting, from a multichannel accelerometer designed with widespread deployment in observational studies in mind.

Historically, physical function has been assessed using measures of activities of daily living that depend on retrospective self-report, despite the well-documented and substantial measurement error associated with these instruments [7, 18]. The results of these studies were substantially weakened by problems associated with self-reported activity data. Wearable sensors have since been deployed in studies because they allow for unbiased measurement in older populations with cognitive or physical impairment. Moreover, the accuracy of sensors is not affected by differences in sex, race/ethnicity or language, all well-known sources of bias in self-reports. This is particularly important in the study of aging populations, both because issues with recall are more severe and because understanding physical activity accurately is central to the study of elderly populations in public health [21]. The use of wearable sensors to collect activity information in large-scale observational studies took a major step forward with the addition of the ActiGraph to the National Health and Nutrition Examination Survey (NHANES) in 2003 [27]. Many published studies have demonstrated the ability of these devices to monitor human activity [28, 5, 1, 25, 4, 20, 10, 6, 9, 15]. Some of them focused on the quantification of total energy expenditure [28] or “activity counts” [15]. However, these devices (often combined with more sophisticated sensors) offer the potential to address more complex questions regarding real-world function and to provide more refined measures of specific activity types. Accelerometers, which are the basis of these wearable sensors, have been widely discussed in the literature because they are capable of accurately collecting adequate data for physical activity monitoring [11, 5, 25]. Because minimizing the burden on subjects is crucial in large-scale observational studies, developing methods to predict physical activity from accelerometry data is one of our major interests.

We base activity prediction on the idea that movements can be understood in terms of smaller components, which we dub “movelets”. Briefly, given accelerometer time series data, we decompose movements into short overlapping segments; these movelets are the elements which make up motions and activities. Using data with known activity labels, movelets are organized by activity type into “chapters”, or collections of movelets with the same activity label. Predictions of unknown activity labels are made by finding the closest match, defined in terms of squared error for all acceleration channels, of an unlabeled movelet to those in chapters. Thus we build our method on the intuition that movements with elements that look similar are likely to have the same labels.

Our data are generated using a single accelerometer positioned on the subject's hip at the apex of the left iliac crest. The accelerometer is built on core chip MMA7260Q by Freescale™, and records acceleration in three mutually orthogonal directions for a wide range of sampling frequencies (time points per second) and sensitivities (acceleration per unit of scale). Data were collected during in-laboratory sessions in which subjects performed a collection of activities, including resting, walking, and lying, repeated chair stands, lifting an object from the floor, up-and-go, and standing to reclining on a couch. We observe data for two subjects with two laboratory visits each. Sessions lasted roughly 15 to 20 minutes, and in that time each activity was replicated up to three times. Both the data collection device and activities performed are compatible with the needs of observational studies, especially of elderly populations: the single accelerometer worn at the hip is unobtrusive and wearable in real time, and the activities provide a useful understanding of physical movement. During the data collection, an observer recorded activity start and stop times to provide a time series of movement labels that accompanies the accelerometer signal.

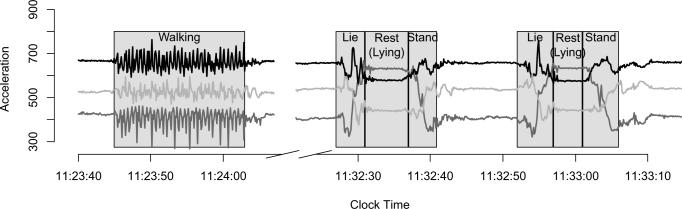

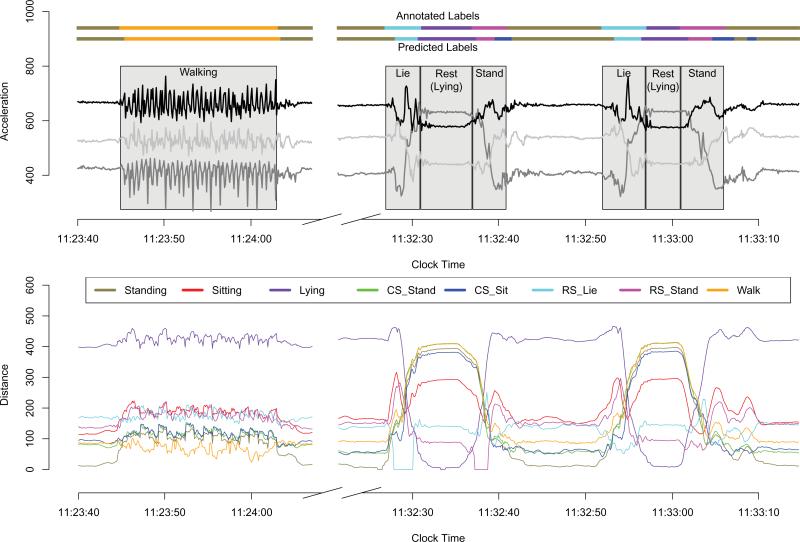

The accelerometer output consists of 3 voltage time series, which are proxy measures of acceleration. The time series vary by amplitude, frequency and correlation along the time course of the corresponding activities. For example, Figure 1 displays two segments of accelerometer data. In the first segment, the subject stands, walks twenty meters, and stands. In the second segment, the subject performs two replicates of lying down and standing up; during each replicate, the subject lies from a standing position, rests for several seconds in the lying position, and rises to a standing position. Three acceleration channels or axes are shown, and activity labels are provided. From this figure, we see that active periods, in which the subject is walking, rising or lying down, have higher variability than inactive periods, in which the subject is resting in either the standing or lying position. Walking is characterized by periodic acceleration patterns for each axis, although there are differences in amplitude between axes. Replicates of the “Chair Stand” activity display similar patterns, bolstering the intuition that movements that share a label also appear similar visually. Although there are two types of inactivity (standing and lying), the acceleration time series corresponding to these two periods are characterized by low variation around stable constants; however, the ordering and relative position of the axes are different, due to a change in the orientation of the accelerometer with respect to Earth's gravity.

Fig 1.

Two segments of accelerometer data. First, a subject walks for approximately 20 seconds; then, a subject performs two replicates of “Lie down / Rest / Stand up”. Acceleration in three mutually orthogonal directions is shown, and activity labels are included.

The goal of this work is to demonstrate the conceptual framework for the movelet approach, rather than to describe the details of its application to a large data set. The movelet prediction algorithm described in the paper is an important first step in developing accelerometer-based biomarkers of activity in large observational studies. Several strengths of this approach are illustrated by the analysis of a few subjects at a few visits; at the same time, improvements and refinements both in the statistical analysis and in the data collection are suggested by our results. For instance, matching unlabeled movelets to reference chapters provides a fast and easily understood method for predicting labels. However, results can be sensitive to the definition of the gold standard of activity type: very often, the observer annotations disagree with the raw accelerometer output. Additionally, the use of gyroscopic information, which is included in many accelerometer devices, can give accelerometer output that is robust to rotations of the device itself. These are important considerations in designing a data collection method that will give useful information regarding activity in observational studies. More importantly, our findings have already led to changes in the proposed design of ongoing and future observational studies. Indeed, an investigator will now go to the home of study participants, help install the device correctly, provide simple hands-on instructions, and ask the participants to perform a few well-defined tasks. This process will be videotaped to improve and assist human annotation. The investigator will then leave, and study participants will later be called on the phone and asked to perform a few simple tasks for re-calibration. None of these features was part of the original data collection protocol.
We conclude that understanding the inherent pitfalls and variability associated with even the most advanced measuring technology can lead to dramatic improvements in the design of experiments, data quality, and analysis. This paper, as a “proof-of-concept” work, provides the first part of the story for accelerometry data.

Prediction of physical activity intensity and type has seen intense methodological development in electronic engineering and computer science, but to a lesser extent in statistics. Preece et al. [24] provided a review of the current methods of activity prediction. Many prediction methods using either raw or transformed accelerometer data exist, including “cut-point” or linear regression [8, 11], quadratic discriminant analysis [23], artificial neural networks [13, 14, 29, 30, 26], Markov models [16, 23], unsupervised learning [19] and combined methods [25, 2]. Previous work has often focused on activity types that are not of interest in public health studies [23], such as using a computer or brushing teeth, or has included multiple accelerometers placed at several locations on a subject's body [13, 14, 17]. A comparison of recent approaches was also applied to data generated using five biaxial accelerometers by Bao and Intille [3]. However, these approaches are unsuitable for application to accelerometer data in public health studies, either because they require more sensors on subjects or because they are not designed to detect short-term activities like standing from a lying position. Moreover, prediction results from black-box machine learning methods are usually difficult to examine and improve. This motivates us to seek a method that can detect not only long-term activities like walking and vacuuming, but also short-term activities like sitting down or lying down.

Our approach and taxonomy are inspired by the speech recognition literature [12], where words or parts of words are matched to known speech patterns. However, the parallel with speech recognition should not be overstated given the large differences between the two activities and measurement instruments. First, speech is often recorded at much higher frequencies (between 8 and 16kHz) than acceleration (10Hz in our dataset), providing density and detail to voice recognition data [22]. Second, audio data are inherently single-channel while acceleration is understood in three orthogonal directions, increasing the dimension of the activity prediction problem. Third, in natural speech most sounds and many full words are repeated often, providing an ample training set on which to build a prediction algorithm. In activity prediction, movements can be rarely performed and infrequently observed, making the definition of a training set challenging. Moreover, high-fidelity audio recordings can be treated as though they were lossless reproductions of the original signal. In contrast, accelerometers are weak proxies for activities that are complex and could be ambiguous.

The remainder of the paper is organized as follows. In Section 2 we describe the movelet-based approach to predicting activity based on accelerometer data. Section 3 details the application of our proposed method to the real data described above. We close with a discussion in Section 4.

2. Methods

To predict activities based on accelerometer data, we first define a movelet as a basic element of 3-axis time series data. Collections of movelets paired with known labels (annotations) form chapters, which are in turn organized into reference dictionaries of known movelets and their associated activities. Classification of accelerometer data with unknown activity annotations is based on decomposing the unlabeled data into component movelets, and then matching each unlabeled movelet to these chapters. The label of the best matched chapter is used as a preliminary prediction of the activity of the unlabeled movelet.

2.1. Definitions

We observe data that are a collection of three time series representing the acceleration along three mutually orthogonal axes. Although we have two subjects, each with two visits, we treat the data as 4 independent visits. Thus denote the data by Xi(t) = {Xi1(t), Xi2(t), Xi3(t)}, t = 1, 2, . . . , Ti, where Ti is the length of the accelerometer time series for visit i. Define an activity label time series Li(t) such that Li(t) is a function mapping t to {Act1, Act2, . . . , ActA}, t = 1, 2, . . . , Ti, where Acta denotes activity type a. Let the observation times for visit i be partitioned into a training set and a validation set. Thus if t is in the training set, then Xi(t) belongs to the training dataset and has a known activity label Li(t); otherwise Li(t) is unknown and is to be estimated. Training sets contain continuous segments or blocks of time so as to include full examples of each movement type.

Next we define movelets as elements of time series that characterize movement in temporal windows of length H. More specifically, let

$$M_i(t) = \{X_i(t), X_i(t+1), \ldots, X_i(t+H-1)\}$$

define the movelet of subject/visit i at time t ∈ {1, 2, . . . , (Ti – H + 1)}. Note that a movelet is made up of time series for all axes of the accelerometer output, and summarizes the pattern of acceleration recorded from time t to t + H – 1. The dimension of the movelet Mi(t) is 3H, because there are 3 concatenated time series, and it contains all the accelerometry information for a window of movement lasting H/10 seconds, because data are sampled at 10 Hz in our case. H is chosen so that a movelet Mi(t) captures enough information to identify a movement but is not so long that it contains more than one type of activity. Movelets Mi(t) with t in the training set are paired with their known activity labels and collected into activity-specific “chapters”. Thus, we define a chapter as a collection of movelets {Mi(t) : Li(t) = Acta} that share a common label. An important characteristic of movelets is that they are overlapping moving windows; in fact Mi(t) and Mi(t + 1) overlap everywhere, except at time t and t + H. This overlap is useful when there is uncertainty about where an activity actually starts, because transitions between two activities can be unclear, particularly for elderly subjects. This is a serious problem even with the best in-lab human annotation, and allowing such ambiguous periods within movelets may help to address it. One chapter is constructed for each activity type; chapters are then combined to form a subject-visit specific “dictionary” of movelets and their labels. Dictionaries are distinct for subjects and visits to control for differences between the movement patterns of different subjects and to account for changes in the orientation of the accelerometer at different visits. This dictionary is used as a reference for movelets Mi(t) with t in the validation set. Table 1 displays an example of a subject-specific dictionary consisting of A chapters in total.

Each chapter is constructed using the training set and is made up of movelets, the short components of three-axis accelerometer data. For activities with well-defined beginnings and endings (standing up from a chair, etc.), one full replicate is usually used to construct a chapter. For continuous activities (walking, sitting, etc.) we use a two-to-three-second segment to build the chapter.
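The construction of movelets and chapters can be illustrated with a minimal Python sketch. The function names `extract_movelets` and `build_dictionary` are hypothetical and are not part of the original work. The sketch also assumes that a training movelet is kept only when its whole window lies in the training set, and that it is filed under the label at its start time; the text does not spell out either detail.

```python
from collections import defaultdict


def extract_movelets(x, H):
    """Return all overlapping movelets of length H.

    x is a list of (x1, x2, x3) samples; the movelet at time t is x[t:t+H].
    """
    return [x[t:t + H] for t in range(len(x) - H + 1)]


def build_dictionary(x, labels, train_times, H):
    """Group training movelets into chapters keyed by activity label.

    Assumption (not stated in the text): a movelet is used only if every
    time point of its window is in the training set, and it is filed under
    the label observed at its start time.
    """
    train = set(train_times)
    chapters = defaultdict(list)
    for t in range(len(x) - H + 1):
        if all(s in train for s in range(t, t + H)):
            chapters[labels[t]].append(x[t:t + H])
    return dict(chapters)


# Toy data: 8 samples, the first 5 annotated as walking and used for training.
x = [(float(t), 0.0, 1.0) for t in range(8)]
labels = ["Walk"] * 5 + ["Standing"] * 3
dictionary = build_dictionary(x, labels, range(5), H=3)
print({label: len(chapter) for label, chapter in dictionary.items()})
```

With H = 3, a series of 8 samples yields 6 overlapping movelets, of which only the three that lie entirely inside the training block enter the “Walk” chapter.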

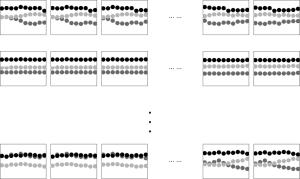

Table 1.

A subject-specific dictionary with A chapters, one for each activity type. Each chapter consists of movelets, short overlapping segments of three-axis accelerometer data, which are illustrated in the far-right column of the table.

The definitions of movelets, chapters, and dictionaries given above provide a useful analogy for our proposed classification method. Given unlabeled accelerometer data that has been decomposed into movelets, we use the dictionary as a reference by “looking up” an unlabeled movelet and finding its best match among known movelets. The label associated with the best match, which is the chapter title, is used to predict the unknown label. Matching, which is described below, quantifies the intuition that movelets with similar visual appearances are likely to be components of the same larger movement.

2.2. Matching and labeling

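Given an unlabeled movelet Mi(t0), we predict the label Li(t0) first by matching Mi(t0) to a chapter in the dictionary described above. To be more specific, the closest match for movelet Mi(t0) in the dictionary is Mi(t′), where

$$t' = \underset{t \,\in\, \text{training set}}{\arg\min}\; D\{M_i(t_0), M_i(t)\}.$$

The distance function D(·, ·) is

$$D\{M_i(t), M_i(s)\} = \frac{1}{3}\sum_{k=1}^{3}\left[\sum_{h=0}^{H-1}\{X_{ik}(t+h) - X_{ik}(s+h)\}^{2}\right]^{1/2}. \tag{1}$$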

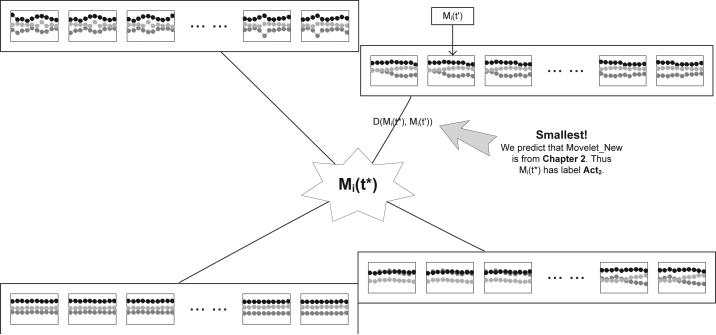

Thus, the distance between movelets averages the difference taken over all acceleration axes. Based on this match, an estimate for the unknown label Li(t0) is Li(t′); that is, we take the label associated with the best dictionary match and use it to estimate the unknown label. Figure 2 gives a schematic of the matching process, in which an unlabeled movelet Mi(t*) is compared to a dictionary with 4 chapters. The distance between Mi(t*) and all reference movelets is calculated using the distance function (1). After Mi(t*) is compared to all reference movelets in the dictionary it is matched to Chapter 2, because the movelet Mi(t′) in Chapter 2 has the smallest distance to Mi(t*).

Fig 2.

A display of matching an unlabeled movelet Mi(t*) to 4 chapters in the dictionary. Points in each chapter represent labeled movelets corresponding to the activity associated with this chapter. The distance between the unlabeled Mi(t*) and each chapter is given by the minimum distance between Mi(t*) and the movelets in each chapter. After Mi(t*) is compared to all reference movelets in the dictionary, it is matched to Chapter 2 which provides the smallest distance among all the 4 chapters.
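The matching step can be sketched in a few lines of Python. The names `movelet_distance` and `best_match` are hypothetical. The exact form of the distance used here, a per-axis Euclidean distance averaged over the axes, is an assumption chosen to agree with the description of a squared-error distance averaged over acceleration channels.

```python
import math


def movelet_distance(m1, m2):
    """Average over axes of the Euclidean distance between two movelets.

    Each movelet is a list of H samples, each sample a tuple of axis values.
    The specific form of the distance is an assumption of this sketch.
    """
    n_axes = len(m1[0])
    total = 0.0
    for k in range(n_axes):
        squared_error = sum((a[k] - b[k]) ** 2 for a, b in zip(m1, m2))
        total += math.sqrt(squared_error)
    return total / n_axes


def best_match(movelet, dictionary):
    """Return (label, distance) of the closest reference movelet.

    dictionary maps an activity label to its chapter, a list of movelets.
    """
    best_label, best_dist = None, math.inf
    for label, chapter in dictionary.items():
        for reference in chapter:
            d = movelet_distance(movelet, reference)
            if d < best_dist:
                best_label, best_dist = label, d
    return best_label, best_dist


# Toy dictionary with two single-movelet chapters (H = 2).
dictionary = {
    "Standing": [[(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]],
    "Walk": [[(3.0, 0.0, 0.0), (4.0, 0.0, 0.0)]],
}
unlabeled = [(3.0, 0.0, 0.0), (4.0, 0.0, 1.0)]
print(best_match(unlabeled, dictionary))
```

The search is exhaustive over all reference movelets, which is affordable because chapters are kept small; the distance to a chapter is the minimum over its movelets, as in Figure 2.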

After preliminary labels are generated for all unlabeled movelets using the matching step, a majority voting procedure is used to select final estimated labels L̂i(t). Each preliminary label in a set of movelets neighboring time t is considered a single vote, and the activity with the most votes in this set is the estimate L̂i(t). An advantage of this procedure is that it smooths the predicted labels L̂i(t) by taking into account the fact that movements are continuous, meaning that neighboring movelets contain information about the current activity. Additionally, because movelets decompose movements into their constituent parts, the matching applies even when the duration of movements is variable. For instance, two replicates of sitting from the standing position may take different amounts of time, but will have similar movelet signatures.
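A minimal sketch of the voting step follows. The function name `majority_vote` and the use of a centered window of `half_width` neighbors on each side are assumptions made for illustration; the voting set used in the paper may be defined differently.

```python
from collections import Counter


def majority_vote(preliminary, half_width):
    """Smooth preliminary labels with a centered majority vote.

    The final label at t is the most common preliminary label among
    positions t - half_width .. t + half_width, truncated at the ends.
    Ties go to the label that appears first in the window.
    """
    n = len(preliminary)
    final = []
    for t in range(n):
        window = preliminary[max(0, t - half_width):min(n, t + half_width + 1)]
        final.append(Counter(window).most_common(1)[0][0])
    return final


# An isolated misclassification inside a walking period is voted away.
preliminary = ["Walk", "Walk", "Standing", "Walk", "Walk"]
print(majority_vote(preliminary, half_width=1))
```

A wider window gives smoother predictions but risks erasing genuinely short activities, so the window length should be chosen with the shortest activity of interest in mind.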

2.3. Movement fingerprints and lazy movelets

To increase the accuracy of our dictionary-based classification method and decrease the computational burden of the looking-up process, each chapter must be carefully constructed to include useful information while excluding redundant or less useful movelets. With this in mind, chapters that were built in the manner described above can be fine-tuned using the identification of what we will label “fingerprint” and “lazy” movelets.

First, each chapter must include the signature movelets of the corresponding activity. We refer to these defining movelets as “fingerprints” because they provide excellent prediction of a specific activity related to the chapter. Fingerprints are thus the characteristic acceleration time series associated with a movement, and are most often used when matching new movelets of the same activity. Second, unnecessary or redundant information should be removed from the chapter. For example, a chapter built on several seconds of walking will include many near-identical movelets due to the periodic nature of the activity. Further, there often exist “lazy” movelets which, contrary to fingerprints, are not commonly matched to and do not usefully identify the activity; rather than aiding prediction, these can be falsely matched to by movelets of other activities. Both redundant and lazy movelets can be excluded from a chapter to increase computational performance and reduce the number of errors. Finally, some movements share very similar movelets. These “ambiguous” movelets can lead to misclassification due to very close matches in multiple chapters. In this situation, an ambiguous movelet can be removed from one chapter so that matches will be made to the remaining movelet; the choice of which movelet to retain will depend on the relative importance of correctly classifying the two movements. The selection of fingerprint and lazy movelets was done independently of performance on the test set.
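The removal of redundant movelets can be illustrated with a simple greedy filter. This is a sketch under stated assumptions and not the procedure used in the paper, which does not describe an automated rule: the function names, the tolerance `tol`, and the distance (a per-axis Euclidean distance averaged over axes) are all hypothetical choices.

```python
import math


def movelet_distance(m1, m2):
    """Average over axes of the Euclidean distance between two movelets."""
    n_axes = len(m1[0])
    return sum(
        math.sqrt(sum((a[k] - b[k]) ** 2 for a, b in zip(m1, m2)))
        for k in range(n_axes)
    ) / n_axes


def prune_redundant(chapter, tol):
    """Greedily keep a movelet only if it is farther than tol from all kept ones."""
    kept = []
    for movelet in chapter:
        if all(movelet_distance(movelet, other) > tol for other in kept):
            kept.append(movelet)
    return kept


# Two near-identical movelets and one distinct movelet (H = 2).
chapter = [
    [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)],
    [(0.0, 0.0, 0.0), (1.0, 1.0, 1.01)],
    [(5.0, 5.0, 5.0), (6.0, 6.0, 6.0)],
]
print(len(prune_redundant(chapter, tol=0.1)))
```

Because the filter is greedy, the result depends on the order of the movelets; for a periodic activity such as walking it retains roughly one representative per distinct phase of the cycle.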

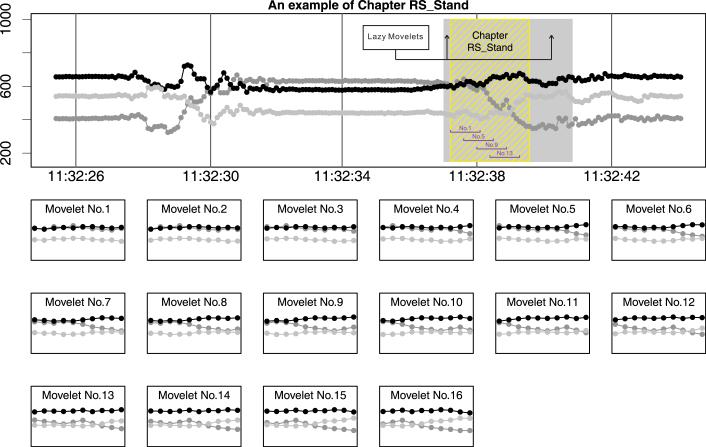

As an example of both fingerprints and lazy movelets, Figure 3 displays the chapter for “Standing from Lying” from a movelet dictionary. We used only the yellow-line-shaded region to construct the chapter, despite the fact that the areas shown in light gray are also labeled by a human observer as “Standing from Lying”. The fingerprint of this activity is the pattern that the mid gray time series goes down while the green one goes up. The movelets in the light gray bands (not shaded by yellow lines) are lazy movelets, and do not distinguish this activity from others. We removed the lazy movelets from the annotated time period and built the library conservatively to make the chapter a more useful reference for future unlabeled activities.

Fig 3.

The chapter “Standing from Lying”, which consists of 16 movelets. In dark grey is the section of the acceleration data used to construct the chapter; in light grey are time points with the same activity label, but that are excluded from the chapter as “lazy” movelets.

2.4. Summary

Movelet-based analysis of accelerometer data is built on the intuition that movements with similar acceleration patterns at the elemental level are likely to be generated by the same activity. Using this idea, we decompose movements into overlapping segments and construct reference chapters and dictionaries; given unlabeled time series, we match to the reference and use the best match to predict the unknown activity type. Movement fingerprints are identified to strengthen the construction of chapters and to aid in the basic understanding of movements, while lazy movelets are eliminated to reduce classification error and computation time. The result is a conceptually clear method for activity prediction that is computationally feasible and scalable to large datasets.

3. Application to LIFEmeter data

We now apply our methods to data from two subjects, each with two visits. Data were collected in the development of the LIFEmeter multi-sensor device, intended to assess physical function in large-scale observational studies. The subjects were community-dwelling older adult participants in the LIFEmeter study, aged 65 and older, who had no history of cognitive dysfunction, lived in the Baltimore area, and were capable of walking across a small room unassisted. They were observed in a clinical setting, and performed physical activities that are common in daily living. The following activities were selected as important in understanding physical function in real-world settings: walking, standing from sitting, standing from lying, sitting from standing, and lying from standing. Three sedentary states (standing, sitting, and lying) were also annotated. Table 2 lists all activities observed and provides abbreviations that will be used through the remainder of this section.

Table 2.

A list of activities of interest, with abbreviations used in the remaining figures and text

| Activity | Alias |
|---|---|
| Rest (Stand) | Standing |
| Rest (Sit) | Sitting |
| Rest (Lie) | Lying |
| Standing from Chair | CS_Stand |
| Sitting Down from Standing | CS_Sit |
| Lying Down from Standing | RS_Lie |
| Standing from Lying | RS_Stand |
| Walking | Walk |

An observer annotated the time points at which an activity was started and completed, providing activity labels. Annotations were imperfect due to early or late start and stop points, to rounding times to the nearest second, and to misalignment. Obvious errors in the observed labels were detected and corrected through comparison with the accelerometer output to create the labels used to construct movelet dictionaries and assess the predictive performance of our algorithm.

3.1. Constructing the dictionary

Following the method described in Section 2, we build a dictionary with 8 chapters of activities for each subject and visit. First, we partition the accelerometer data into training and validation sets. Using the training set, we decompose movements into movelets and organize them by activity type. Our choice of H is 10, based on the 10Hz sample rate of the device used in our data collection. This is because each 1-second movelet contains just enough information to identify a movement, and is not so long that it restricts the matching of an unknown activity. We also tried other choices of H between 10 and 15, which did not give substantially different results. We therefore conclude that, in general, the method is robust to the choice of H within a reasonable range (in our case around 10). For activities with well-defined beginnings and endings, such as “CS_Stand” and “CS_Sit”, we use the first replicate as training data and reserve the remaining replicates as testing data. Chapters for these activities contain between 5 and 30 movelets each, depending on the duration of the activity. For continuous movements that lack well-defined beginnings and endings, such as “Walk” or “Standing”, we extract segments lasting 2 to 3 seconds that are clearly labeled with a particular activity to build the corresponding chapter. This is done to prevent chapters from becoming too large, and, since these activities are periodic, to prevent redundant information from being included in the reference.
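Selecting the first replicate of an activity as training data amounts to finding the first contiguous run of its label in the annotation series. The sketch below shows one way to do this; `label_runs` and `first_replicate_times` are hypothetical helper names, and the toy label sequence is invented for illustration.

```python
from itertools import groupby


def label_runs(labels):
    """Return (label, start, end) for each maximal run of equal labels, end exclusive."""
    runs, start = [], 0
    for label, group in groupby(labels):
        length = sum(1 for _ in group)
        runs.append((label, start, start + length))
        start += length
    return runs


def first_replicate_times(labels, activity):
    """Time indices of the first contiguous run annotated as the given activity."""
    for label, start, end in label_runs(labels):
        if label == activity:
            return list(range(start, end))
    return []


# Toy annotation series containing two replicates of sitting down.
labels = (["Standing"] * 3 + ["CS_Sit"] * 2 + ["Sitting"] * 2
          + ["CS_Stand"] * 2 + ["Standing"] * 1 + ["CS_Sit"] * 2)
print(first_replicate_times(labels, "CS_Sit"))
```

The later runs of the same label are left untouched and can serve as validation data, matching the split described above for activities with well-defined beginnings and endings.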

3.2. Initial results

After constructing dictionaries for each subject and each visit using the training data, we predict activity labels L̂i(t) for t in the validation set by matching movelets to the reference and implementing the majority voting step. Figure 4 details this analysis. For the accelerometer data displayed in Figure 1 (one segment of walking and two replicates of lie-rest-stand), the lower panel of Figure 4 shows the minimum distance between each unlabeled movelet and all movelets contained in the reference chapters as a collection of distance curves. The preliminary labels are taken to be the chapter title with the smallest distance. Next, the prediction L̂i(t) is determined via a majority vote in which each preliminary label in a set of neighboring movelets is considered a single vote. At the top of Figure 4 are the observer-annotated (top colored bar) and predicted labels (bottom colored bar) that accompany the accelerometer data. A comparison of the annotations and predictions indicates generally high agreement between these time series. In particular, there is broad overlap between the prediction and annotation of walking and resting periods as well as the location of the shorter activities lying and standing. Moreover, there is generally reasonable separation between the distance curve corresponding to the correct chapter and the remaining chapters, indicating the ability of the movelet-based analysis to distinguish between activity types. In two regions, the distance curves are zero; these depict the first replicate of the “Lie from Stand” and “Stand from Lie” activities, which were used to construct their respective activity chapters. Isolated misclassifications in the preliminary labels, such as those that take place in the middle of the walking period, are in effect smoothed by the majority-voting step, which prevents single activity labels from disagreeing with their neighbors.

Fig 4.

Observer-defined annotations and predictions for two segments of accelerometer data with several activity types. Curves giving the smallest distance between movelets and each chapter are displayed.

On the other hand, as shown in the right segment of Figure 4, the annotated labels for the shorter activities have much longer time durations than the predicted intervals. This is most likely due to a combination of early and late stop points in the annotations and time spent transitioning between activities. For example, when a subject is asked to sit from a standing position, there is a brief pause as the new movement is begun; similarly, when rising to a standing position, there is a short period of stabilization as the movement is completed. The extent to which these transitions will appear in real-world data, rather than in a controlled setting, is unclear. In these periods, the “true activity” is not clearly defined but the annotations are seen to be conservative in starting and stopping short activities, whereas the predictions extend neighboring (well-predicted) resting periods. This contrast can negatively affect the apparent prediction accuracy, although many of the activities are correctly identified.

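For each subject and visit, Table 3 compares the amount of time spent performing each activity, as measured by the annotated labels Li(t), with the predicted amount of time spent performing each activity, as measured by L̂i(t). Specifically, for all activities a and a′ we report the proportion of the time annotated as activity a that is predicted as activity a′, so that each row of the table sums to 100%.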
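The row-normalized comparison of annotated and predicted labels can be computed as in the following sketch. The function name `confusion_proportions` and the toy label sequences are hypothetical; the numbers are not taken from the study.

```python
from collections import Counter


def confusion_proportions(truth, predicted):
    """Proportion of the time annotated as a that is predicted as a_prime.

    Returns a dict keyed by (a, a_prime); for each annotated activity a the
    proportions over a_prime sum to 1. Pairs that never occur are omitted.
    """
    pair_counts = Counter(zip(truth, predicted))
    truth_counts = Counter(truth)
    return {
        (a, a_prime): count / truth_counts[a]
        for (a, a_prime), count in pair_counts.items()
    }


# Toy example: one of four walking time points is predicted as standing.
truth = ["Walk"] * 4 + ["Standing"] * 2
predicted = ["Walk", "Walk", "Walk", "Standing", "Standing", "Standing"]
table = confusion_proportions(truth, predicted)
print(table[("Walk", "Walk")], table[("Walk", "Standing")])
```

Normalizing by the annotated time in each row means that short activities, which contribute few time points, can show large percentage errors from only a handful of misclassified samples.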

Table 3.

Comparison of the observer-annotated labels Li(s) and the predicted labels L̂i(s), expressed as the proportion of the time annotated as each activity (rows, “Truth”) that is predicted as each activity (columns, “Prediction”)

| Subject 1 Visit 1 | ||||||||

|---|---|---|---|---|---|---|---|---|

| Prediction |

||||||||

| Truth | Standing | Sitting | Lying | CS_Stand | CS_Sit | RS_Lie | RS_Stand | Walk |

| Standing | 78.3% | 0 | 0 | 21.7% | 0 | 0 | 0 | 0 |

| Sitting | 0 | 100% | 0 | 0 | 0 | 0 | 0 | 0 |

| Lying | 0 | 0 | 100% | 0 | 0 | 0 | 0 | 0 |

| CS_Stand | 4.3% | 30.5% | 0 | 55.3% | 0 | 0 | 6.4% | 3.5% |

| CS_Sit | 11.9% | 37.3% | 0 | 23.1% | 27.6% | 0 | 0 | 0 |

| RS_Lie | 0 | 0 | 22.9% | 49.4% | 0 | 27.7% | 0 | 0 |

| RS_Stand | 0 | 0 | 21.3% | 59.8% | 0 | 0 | 18.9% | 0 |

| Walk | 9.6% | 0 | 0 | 10.4% | 0.5% | 0 | 0 | 79.5% |

Subject 1 Visit 2 (rows: truth; columns: prediction)

| Truth | Standing | Sitting | Lying | CS_Stand | CS_Sit | RS_Lie | RS_Stand | Walk |
|---|---|---|---|---|---|---|---|---|
| Standing | 96.8% | 0 | 0 | 0 | 2.7% | 0 | 0 | 0.4% |
| Sitting | 0 | 99.9% | 0 | 0 | 0.1% | 0 | 0 | 0 |
| Lying | 0 | 0 | 100% | 0 | 0 | 0 | 0 | 0 |
| CS_Stand | 25.6% | 25.6% | 0 | 40.2% | 8.5% | 0 | 0 | 0 |
| CS_Sit | 25.7% | 12.8% | 0 | 0 | 57.8% | 3.7% | 0 | 0 |
| RS_Lie | 40.3% | 0 | 14.9% | 0 | 0 | 44.8% | 0 | 0 |
| RS_Stand | 0 | 0 | 21.1% | 2.8% | 39.4% | 0 | 36.6% | 0 |
| Walk | 18.8% | 0 | 0 | 0.2% | 0.6% | 1.1% | 0 | 79.3% |

Subject 2 Visit 1 (rows: truth; columns: prediction)

| Truth | Standing | Sitting | Lying | CS_Stand | CS_Sit | RS_Lie | RS_Stand | Walk |
|---|---|---|---|---|---|---|---|---|
| Standing | 99.9% | 0 | 0 | 0 | 0.1% | 0 | 0 | 0 |
| Sitting | 0.7% | 99.2% | 0 | 0.1% | 0.1% | 0 | 0 | 0 |
| Lying | 0 | 0 | 100% | 0 | 0 | 0 | 0 | 0 |
| CS_Stand | 10.9% | 16.4% | 0 | 57.3% | 0 | 0 | 10.0% | 5.5% |
| CS_Sit | 11.1% | 44.4% | 0 | 4.6% | 34.6% | 5.2% | 0 | 0 |
| RS_Lie | 10.6% | 0 | 50.6% | 0 | 0 | 27.1% | 11.8% | 0 |
| RS_Stand | 36.8% | 0 | 24.6% | 0 | 0 | 0 | 38.6% | 0 |
| Walk | 22.1% | 0 | 0 | 0.3% | 0 | 0.2% | 1.0% | 76.4% |

Subject 2 Visit 2 (rows: truth; columns: prediction)

| Truth | Standing | Sitting | Lying | CS_Stand | CS_Sit | RS_Lie | RS_Stand | Walk |
|---|---|---|---|---|---|---|---|---|
| Standing | 100% | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Sitting | 0 | 100% | 0 | 0 | 0 | 0 | 0 | 0 |
| Lying | 0 | 0 | 100% | 0 | 0 | 0 | 0 | 0 |
| CS_Stand | 7.9% | 40.4% | 0 | 46.1% | 0 | 0 | 0 | 5.6% |
| CS_Sit | 33.3% | 20.6% | 0 | 9.8% | 35.3% | 0 | 0 | 1.0% |
| RS_Lie | 42.6% | 0 | 31.5% | 0 | 0 | 25.9% | 0 | 0 |
| RS_Stand | 34.4% | 0 | 34.4% | 21.3% | 0 | 0 | 9.8% | 0 |
| Walk | 31.3% | 0 | 0 | 0.1% | 0 | 0 | 0 | 68.6% |

Table 3 reinforces the observation from Figure 4 that long continuous activities, like resting and walking, are better predicted than short activities, like standing from a chair. In fact, with the exception of subject 1 at visit 1, all resting states are accurately predicted at least 96% of the time (and in most cases more than 99% of the time), and walking is accurately predicted between 68% and 80% of the time. However, short activities are poorly predicted and are often mistaken for one of the resting states. Again, this apparent shortcoming stems from two major factors: (i) these activities are undertaken for very short periods, so even minor misclassification can greatly impact results; and, more importantly, (ii) the observer-provided annotations for these short activities are inaccurate.

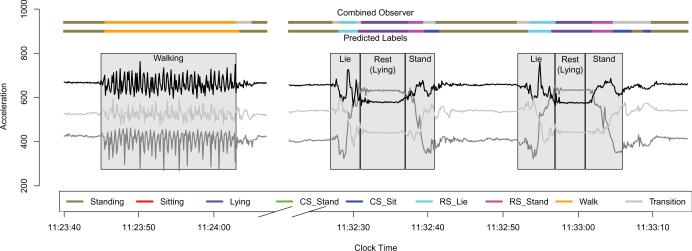

3.3. Refined results

A comparison of our initial predictions, the observer-defined annotations and the raw accelerometer data indicates that the observer's annotations do not provide a gold standard for Li(t), the true activity labels associated with the acceleration data. Thus, we next create a “combined observer” to define activity labels by synthesizing information from the observer annotations and the raw accelerometer output. Primarily, this resulted in designating times between two distinct activities as “transition times”, rather than misleadingly assigning these periods to one or the other activity. The new activity labels are shown in Figure 5, and a comparison of these labels with the predictions L̂i(t) is given in Table 4. For all subjects and visits, the tables demonstrate the large improvements in prediction accuracy that arise from improvements in the standard used to define true activity labels. We contend that these findings indicate that: 1) accurate labeling is crucial to prediction algorithm training; 2) a large source of prediction inaccuracies can reliably be traced to human labeling; and 3) prediction accuracy results reported in the literature are hard to compare because studies use different labeling protocols.
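The relabeling idea can be illustrated with a deliberately crude stand-in. The combined observer described above was built by inspecting annotations together with the raw signal; the sketch below instead assumes a purely mechanical rule, marking a fixed number of samples on either side of every label change as a transition. The function name `mark_transitions`, the `"Transition"` label string and the `half_width` parameter are all hypothetical.

```python
def mark_transitions(labels, half_width):
    """Relabel samples within `half_width` samples of any change in the
    annotated label as "Transition" (an assumed, mechanical rule)."""
    out = list(labels)
    n = len(labels)
    for i in range(1, n):
        if labels[i] != labels[i - 1]:
            lo = max(0, i - half_width)
            hi = min(n, i + half_width)
            for j in range(lo, hi):
                out[j] = "Transition"
    return out


# Toy annotation (hypothetical): five samples standing, five sitting.
original = ["Standing"] * 5 + ["Sitting"] * 5
relabeled = mark_transitions(original, half_width=2)
```

Samples labeled as transitions can then be set aside when agreement is computed, so that ambiguous periods are not counted for or against either neighboring activity.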

Fig 5.

Comparison of “combined observer” annotations, based on observer-defined annotations and an inspection of the raw accelerometer data, and predicted labels.

Table 4.

Table of prediction agreement for both subjects and both visits, using the combined observer

Subject 1 Visit 1 (rows: truth; columns: prediction)

| Truth | Standing | Sitting | Lying | CS_Stand | CS_Sit | RS_Lie | RS_Stand | Walk |
|---|---|---|---|---|---|---|---|---|
| Standing | 85.8% | 0 | 0 | 14.2% | 0 | 0 | 0 | 0 |
| Sitting | 0 | 100% | 0 | 0 | 0 | 0 | 0 | 0 |
| Lying | 0 | 0 | 100% | 0 | 0 | 0 | 0 | 0 |
| CS_Stand | 0 | 1.4% | 0 | 93.0% | 0 | 0 | 5.6% | 0 |
| CS_Sit | 4.2% | 0 | 0 | 41.7% | 54.2% | 0 | 0 | 0 |
| RS_Lie | 0 | 0 | 0 | 0 | 0 | 100% | 0 | 0 |
| RS_Stand | 0 | 0 | 22.6% | 0 | 0 | 77.4% | 0 | |
| Walk | 0 | 0 | 0 | 3.7% | 0 | 0 | 0 | 96.3% |

Subject 1 Visit 2 (rows: truth; columns: prediction)

| Truth | Standing | Sitting | Lying | CS_Stand | CS_Sit | RS_Lie | RS_Stand | Walk |
|---|---|---|---|---|---|---|---|---|
| Standing | 98.8% | 0 | 0 | 0 | 1.2% | 0 | 0 | 0 |
| Sitting | 0 | 100% | 0 | 0 | 0 | 0 | 0 | 0 |
| Lying | 0 | 0 | 100% | 0 | 0 | 0 | 0 | 0 |
| CS_Stand | 7.5% | 0 | 0 | 75.0% | 17.5% | 0 | 0 | 0 |
| CS_Sit | 4.2% | 1.4% | 0 | 0 | 88.8% | 5.6% | 0 | 0 |
| RS_Lie | 0 | 0 | 16.7% | 0 | 0 | 83.3% | 0 | 0 |
| RS_Stand | 0 | 0 | 0 | 8.0% | 0 | 0 | 92.0% | 0 |
| Walk | 13.2% | 0 | 0 | 0 | 0.4% | 1.1% | 0 | 85.3% |

Subject 2 Visit 1 (rows: truth; columns: prediction)

| Truth | Standing | Sitting | Lying | CS_Stand | CS_Sit | RS_Lie | RS_Stand | Walk |
|---|---|---|---|---|---|---|---|---|
| Standing | 99.8% | 0 | 0 | 0 | 0 | 0 | 0.2% | 0 |
| Sitting | 0 | 100% | 0 | 0 | 0 | 0 | 0 | 0 |
| Lying | 0 | 0 | 100% | 0 | 0 | 0 | 0 | 0 |
| CS_Stand | 0 | 0 | 0 | 96.9% | 0 | 0 | 3.1% | 0 |
| CS_Sit | 0 | 20.0% | 0 | 7.8% | 60.0% | 12.2% | 0 | 0 |
| RS_Lie | 0 | 0 | 0 | 0 | 0 | 100% | 0 | 0 |
| RS_Stand | 0 | 0 | 0 | 0 | 0 | 0 | 100% | 0 |
| Walk | 9.0% | 0 | 0 | 0 | 0 | 0 | 1.1% | 89.9% |

Subject 2 Visit 2 (rows: truth; columns: prediction)

| Truth | Standing | Sitting | Lying | CS_Stand | CS_Sit | RS_Lie | RS_Stand | Walk |
|---|---|---|---|---|---|---|---|---|
| Standing | 100% | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Sitting | 0 | 100% | 0 | 0 | 0 | 0 | 0 | 0 |
| Lying | 0 | 0 | 100% | 0 | 0 | 0 | 0 | 0 |
| CS_Stand | 0 | 0 | 0 | 100% | 0 | 0 | 0 | 0 |
| CS_Sit | 6.1% | 0 | 0 | 20.4% | 73.5% | 0 | 0 | 0 |
| RS_Lie | 0 | 0 | 0 | 0 | 0 | 100% | 0 | 0 |
| RS_Stand | 0 | 0 | 0 | 68.4% | 0 | 0 | 31.6% | 0 |
| Walk | 13.7% | 0 | 0 | 0 | 0 | 0 | 0 | 86.3% |

The construction of the combined observer also illustrates the feedback from the movelet-based prediction algorithm to the annotations. Periods that were largely misclassified when the original observer annotations were used as the reference, and that were labeled as “transitions” by the combined observer, are periods where the distance between an unlabeled movelet and those in the reference dictionary is large. Thus, movelets that do not match well to any known reference can be quickly identified. In observational studies, this facilitates the recognition of movements that are not included in any dictionary or are otherwise abnormal.
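A minimal sketch of how poorly matched movelets might be flagged is given below. It assumes that each movelet has been flattened into a single numeric vector, that Euclidean distance is used, and that a fixed cutoff is appropriate; the names `min_distance` and `flag_unmatched`, the threshold value and the toy vectors are hypothetical and chosen only for illustration.

```python
import math


def min_distance(movelet, dictionary):
    """Smallest Euclidean distance from `movelet` to any reference movelet.
    `dictionary` is a list of (reference_vector, label) pairs."""
    return min(math.dist(movelet, ref) for ref, _label in dictionary)


def flag_unmatched(movelets, dictionary, threshold):
    """Indices of movelets whose best match is farther than `threshold`
    (the cutoff is an assumption, not a value from the study)."""
    return [
        i for i, m in enumerate(movelets)
        if min_distance(m, dictionary) > threshold
    ]


# Toy reference dictionary and unlabeled movelets (hypothetical values).
dictionary = [((0.0, 0.0, 1.0), "Standing"), ((1.0, 0.0, 0.0), "Lying")]
unlabeled = [(0.0, 0.1, 1.0), (0.6, 0.6, 0.6)]
flagged = flag_unmatched(unlabeled, dictionary, threshold=0.5)
```

Here the first movelet lies close to the standing reference, while the second is far from both references and is therefore flagged as a candidate transition or unknown movement.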

4. Discussion

Understanding physical activity is a key component in public health studies of subject function. However, standard measures of physical function such as activities of daily living questionnaires are subject to substantial measurement error. Emerging accelerometer technologies allow the collection of real-time, real-world activity data and may alleviate many of the issues with retrospective self-report data collection.

In this paper we propose a method for activity classification built around the “movelet” as a basic element of movements. Using movelets with known activities, we construct reference chapters and dictionaries; given an unlabeled movelet, we find its closest match in the reference and use the match's label as a basis for prediction. Thus, our method is built on the intuition that movements with similar component acceleration patterns are likely to be generated by the same activity. This allows the method, and the matches it provides, to be quickly evaluated based on visual inspection of the accelerometer time series. Moreover, the extension to large data sets in which subjects are observed for hours or days is direct, because activity prediction is local in time. Finally, our method accurately predicts short activities, such as taking a few steps, as well as relatively rare and low-frequency movements such as rising from a chair.
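The matching idea summarized above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the implementation used in the study: each sample is an (x, y, z) tuple, movelets are overlapping windows of a fixed number of samples, the distance is Euclidean over all samples and axes, and each window simply receives the label of its single nearest reference. All function names and the toy data are hypothetical.

```python
import math


def movelets(series, length):
    """All overlapping windows of `length` consecutive (x, y, z) samples."""
    return [series[t:t + length] for t in range(len(series) - length + 1)]


def distance(m1, m2):
    """Euclidean distance over all samples and axes (an assumed metric)."""
    return math.sqrt(sum(
        (a - b) ** 2
        for s1, s2 in zip(m1, m2)
        for a, b in zip(s1, s2)
    ))


def build_dictionary(series, labels, length):
    """Reference movelets: keep only windows covered by a single label."""
    refs = []
    for t, m in enumerate(movelets(series, length)):
        window_labels = set(labels[t:t + length])
        if len(window_labels) == 1:
            refs.append((m, window_labels.pop()))
    return refs


def predict(series, dictionary, length):
    """Label each movelet in `series` with the label of its closest reference."""
    return [
        min(dictionary, key=lambda ref: distance(m, ref[0]))[1]
        for m in movelets(series, length)
    ]


# Toy training data (hypothetical): four standing samples, four lying samples.
train = [(0.0, 0.0, 1.0)] * 4 + [(1.0, 0.0, 0.0)] * 4
train_labels = ["Standing"] * 4 + ["Lying"] * 4
reference = build_dictionary(train, train_labels, length=2)

# Unlabeled toy series to classify.
new_series = [(0.1, 0.0, 0.9), (0.0, 0.0, 1.0), (0.9, 0.1, 0.0), (1.0, 0.0, 0.0)]
predicted = predict(new_series, reference, length=2)
```

Because each prediction depends only on a short window and the dictionary, the same loop applies unchanged to recordings lasting hours or days, which is the sense in which prediction is local in time.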

Several directions exist for improving the movelet-based method. Focusing on the predictions for a single subject, transition models could naturally encode information about the order of movements and the likelihood of switching between them. Similarly, smoothing the distance functions (shown in Figures 4 and 5) would allow neighboring time points to influence the prediction at the current time. In our analysis, the movelet length was chosen to be 1 second; the sensitivity of predictions to this choice should be examined. Augmenting dictionaries to include objects other than movelets, for instance by adding measures of mean and variation, or to include sources of data other than the accelerometer, such as recorded speech or location information from a GPS device, could improve predictions. Our current method relies on models trained separately on each individual, and ways of relaxing this requirement are under exploration. In particular, a statistical technique is being developed to normalize the orientation of the devices across subjects, so that predictions can be made using models trained on other subjects. This will also increase our understanding of heterogeneity in acceleration patterns between and within subjects. For instance, constructing a multi-subject dictionary would necessitate an understanding of movement fingerprints across several subjects.
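To make the smoothing suggestion concrete, the sketch below applies a centered moving average to each activity's distance curve before choosing the closest activity at every time point. The moving-average smoother, the window half-width and the toy distance values are assumptions made for illustration; other smoothers could equally be used, and the names `moving_average` and `smoothed_prediction` are hypothetical.

```python
def moving_average(values, half_width):
    """Centered moving average, truncated at the ends of the series."""
    n = len(values)
    out = []
    for t in range(n):
        lo, hi = max(0, t - half_width), min(n, t + half_width + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out


def smoothed_prediction(distances, half_width):
    """`distances` maps each activity to its minimum movelet distance at
    every time point; predict the activity with the smallest smoothed value."""
    smooth = {a: moving_average(d, half_width) for a, d in distances.items()}
    n = len(next(iter(smooth.values())))
    return [min(smooth, key=lambda a: smooth[a][t]) for t in range(n)]


# Toy distance curves (hypothetical): one noisy time point during walking.
distances = {
    "Walk": [0.1, 0.1, 0.9, 0.1, 0.1],
    "Standing": [0.5, 0.5, 0.5, 0.5, 0.5],
}
raw = smoothed_prediction(distances, half_width=0)       # no smoothing
smoothed = smoothed_prediction(distances, half_width=1)  # 3-point average
```

Without smoothing, the isolated spike produces a single spurious standing prediction in the middle of a walking bout; with a three-point average the neighboring time points outweigh it. The trade-off is that heavier smoothing may also blur genuinely short activities.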

Our results and methods suggest three improvements that could help the deployment of this technology to large epidemiological studies. First, there is an increasing need to minimize the effect of changes in accelerometer orientation that can occur during normal movements; this can perhaps be addressed by taking advantage of the gyroscopic capabilities of the SHIMMER™ device. This would facilitate interpretation of the accelerometry data, especially in realistic scenarios where people wear these devices for extended periods of time, and might also allow the construction of dictionaries for use in populations. Second, the study could be more accurate if a human observer goes to the home of each participant, explains the setup, carefully supervises the placement of the device, and conducts a short testing period using a known sequence of common activities whose duration and type are carefully annotated. This would also address the need for subject-specific training of prediction algorithms, which was mentioned previously, and would place a smaller burden on the participants. Finally, replication and calibration pre-studies should be conducted to ensure that prediction algorithms perform well on new subject or visit data.

The ability of technological solutions to improve the prediction of activity from accelerometer output is currently being evaluated. In the next phase of data collection, gyroscopic information will be used to normalize data to a constant vertical orientation. This may reduce the sensitivity of the movelet approach to rotations of the device that naturally occur as it is worn, and could also increase the comparability of movelets across subjects. Complementary improvements in the data collection via updated technology and in the activity prediction through refinements of the movelet approach will be needed to construct useful biomarkers of activity in large observational studies. Implementing new technologies in observational studies is difficult and filled with potential pitfalls. However, we find this challenge to be well worth undertaking by statisticians, beginning in the design phase before the study starts.

Contributor Information

Jiawei Bai, Department of Biostatistics, Johns Hopkins University 615 N. Wolfe St. Baltimore, MD 21205. USA jbai@jhsph.edu.

Jeff Goldsmith, Department of Biostatistics, Johns Hopkins University 615 N. Wolfe St. Baltimore, MD 21205. USA jgoldsmi@jhsph.edu.

Brian Caffo, Department of Biostatistics, Johns Hopkins University 615 N. Wolfe St. Baltimore, MD 21205. USA bcaffo@jhsph.edu.

Thomas A. Glass, Department of Epidemiology, Johns Hopkins University 615 N. Wolfe St. Baltimore, MD 21205. USA tglass@jhsph.edu

Ciprian M. Crainiceanu, Department of Biostatistics, Johns Hopkins University 615 N. Wolfe St. Baltimore, MD 21205. USA ccrainic@jhsph.edu
