Abstract

How do external environmental and internal movement-related information combine to tell us where we are? We examined the neural representation of environmental location provided by hippocampal place cells while mice navigated a virtual reality environment in which both types of information could be manipulated. Extracellular recordings were made from region CA1 of head-fixed mice navigating a virtual linear track and running in a similar real environment. Despite the absence of vestibular motion signals, normal place cell firing and theta rhythmicity were found. Visual information alone was sufficient for localized firing in 25% of place cells and to maintain a local field potential theta rhythm (but with significantly reduced power). Additional movement-related information was required for normally localized firing by the remaining 75% of place cells. Trials in which movement and visual information were put into conflict showed that they combined nonlinearly to control firing location, and that the relative influence of movement versus visual information varied widely across place cells. However, within this heterogeneity, the behavior of fully half of the place cells conformed to a model of path integration in which the presence of visual cues at the start of each run together with subsequent movement-related updating of position was sufficient to maintain normal fields.

Hippocampal place cells fire when the animal visits a specific area in a familiar environment (1), providing a population representation of self-location (2–4). However, it is still unclear what information determines their firing location (“place field”). Existing models suggest that movement-related information updates the representation of self-location from moment-to-moment (i.e., performing “path integration”), whereas environmental information provides initial localization and allows the accumulating error inherent in path integration to be corrected sporadically (5–13). Previous experimental work addressing this question has found it difficult to dissociate the different types of information available in the real world. Both external sensory cues (3, 14–16) and internal self-motion information (17–19) can influence place cell firing, but these have usually been tightly coupled in previous experiments.

To date, a range of computational models predicting place fields has been proposed based on the assumption that either environmental sensory information (20–22) or a self-motion metric is fundamental (7, 23). However, there is no agreement on which is more important and how these signals combine to generate spatially localized place cell firing and its temporal organization with respect to the theta rhythm (24). Recent studies showed that mice could navigate in a virtual environment (VE) and a small sample of place cells has been recorded in mice running on a virtual linear track (25–27). VE affords the opportunity to isolate the visual environment and internal movement-related information from other sensory information, and to study their contributions to place cell firing. Here we use manipulations of these inputs in a VE to dissociate the relative contributions to place cell firing and theta rhythmicity of external sensory information relating to the (virtual) visual environment and internal movement-related (motoric and proprioceptive) information.

Results

Place Cell Firing and Theta in the Virtual Environment.

Six C57BL/6J mice were trained to run on an air-cushioned ball with a fixed head position surrounded by liquid crystal display screens showing a first-person perspective view of a virtual linear track in which the movement of viewpoint corresponds to the movement of the ball. The mice were given 3 d of training during which they learned to run along the track to receive a soy milk reward at either end (Fig. S1 and Behavioral Procedure) before formal testing began.

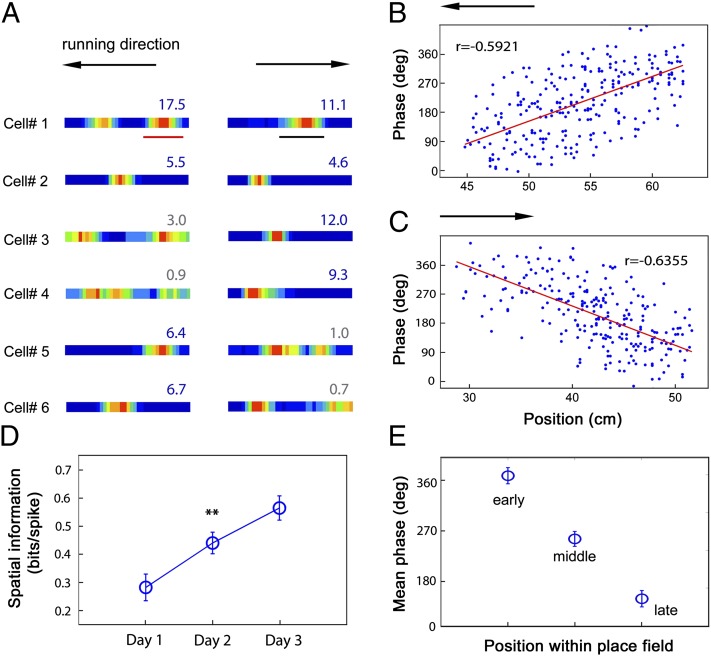

We recorded 119 CA1 complex spike cells using tetrodes (Fig. S2). Spatially localized firing fields (“place fields”) normally appeared on the first training day in the VE (Fig. S3). The spatial information content of place fields increased significantly over the first 3 training days of experience, and reached 0.56 ± 0.04 (mean ± SEM) bits/spike on the third day (Fig. 1 A and D). Seventy-nine percent of the CA1 complex spike cells were identified as place cells on the test day (i.e., having one or two localized patches of firing and spatial information above the P = 0.05 level in spatially shuffled data) (28). The majority (69%) of virtual place cells also had place fields on a similar looking linear track in the real world, although only a small percentage of cells (19%) had fields in comparable locations (Table 1, Fig. S4). In addition, the local field potential (LFP) in CA1 showed the characteristic “movement-related” theta rhythm in the VE, although with reduced frequency compared with the real environment (Table 1), which might be due to the lower running speed in the VE (9.57 ± 0.20 cm/s, compared with 16.80 ± 0.59 cm/s in the real world). Virtual place cells also showed normal theta phase precession (24), firing at successively earlier phases of the LFP theta rhythm (Fig. 1 B, C, and E and Fig. S5).

Fig. 1.

Place cells firing on a virtual linear track. (A) Six representative examples of place cells firing on a virtual linear track. Color maps of spike rates are created from multiple runs in the eastward direction (Right) and westward direction (Left). Peak firing rates (Hz) are indicated above each plot. Theta cycle phases of spikes from the place field of cell 1 on the westward runs (B) and on the eastward runs (C) are plotted against position (the underlined segments in A). (D) Average spatial information from complex spike cells (n = 56) on the first three training days (**increase from day 1 to day 2, P < 0.01). (E) Spike phase precesses within each firing field, being highest in the early third, lower in the middle third, and lowest in the late third (n = 57). Vertical bars represent ± SEM.

Table 1.

Comparison of place cells between virtual reality and real world

| Measure | Virtual reality | Real world | P value |
| --- | --- | --- | --- |
| Firing rate (Hz) | 1.65 ± 0.17 | 2.15 ± 0.21 | 0.0702 |
| In-field peak firing rate (Hz) | 7.23 ± 0.74 | 8.96 ± 0.83 | 0.1231 |
| Spatial information (bits/spike) | 0.76 ± 0.06 | 0.91 ± 0.08 | 0.1348 |
| Field size (%) | 36.89 ± 2.44 | 37.33 ± 2.44 | 0.8971 |
| Theta index of LFP | 4.03 ± 1.01 | 3.45 ± 0.79 | 0.2589 |
| Peak frequency of LFP (Hz) | 7.39 ± 0.08 | 8.24 ± 0.08 | 0.0106 |

Influence of Visual Information.

We tested the influence of visual cues on place cell firing by removing some or all of these from the VE on probe trials interspersed with normal trials (Fig. S6). The majority of place cells (81%) changed their firing patterns when all salient environmental visual cues were removed from the VE (probe-baseline spatial correlation more than 2 SDs below the mean baseline–baseline correlation). In subsequent probe trials, the spatial firing of most place cells was shown to be dependent on subsets of visual cues (Fig. 2). The largest percentage (61.6%) depended predominantly on the side cues, whereas only 4.1% relied predominantly on the end cues, and the place fields of a further 12.3% of cells could be supported by either side or end cues. Only two cells (2.7%), both firing at the end of the virtual track, required both the end and side cues to maintain their firing fields. (See SI Materials and Methods for further details.)

Fig. 2.

Visual control of place fields in the virtual environment. (A) Five representative cells showing different types of responses to visual cue manipulations (left to right: spatial firing that requires only side cues, only end cues, either side or end cues, both side and end cues, or some other stimuli). (B) Percentage of response types corresponding to A (see color key above each type, and SI Materials and Methods for details). Peak firing rate (C), spatial information (D), and spatial correlation (E) decreased as more visual cues were removed. n = 73. *P < 0.05; **P < 0.01; ***P < 0.001 in the comparison with baseline trials.

To support the categorical analysis described here, we analyzed the spatial correlations between the firing rate maps for baseline and probe trials. Higher correlations were seen between the baseline trials before and after the probe series (r = 0.75 ± 0.03; mean ± SEM, n = 73 place cells) than between baseline and probe trials with subsets of visual cues absent (side-cues only versus baseline: r = 0.62 ± 0.04, P < 0.05; end-cues only versus baseline: r = 0.30 ± 0.05, P < 0.005; no cues versus baseline: r = 0.10 ± 0.05, P < 0.001). Across the population, the spatial correlations between baseline and probe trials, as well as peak firing rates and spatial information, increased with the amount of visual information remaining in the probe trial (Fig. 2 C–E). Thus, the visual cues, and in particular those on the side walls (which have the largest spatial extent), are crucial to place cell firing in visual virtual reality.
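The 2-SD criterion used above to decide whether a probe trial changed a cell's firing pattern can be sketched as follows. This is a minimal illustration rather than the authors' analysis code: the function names are hypothetical, both rate maps are assumed to use the same position bins, and the use of the sample standard deviation is an assumption.

```python
from math import sqrt
from statistics import mean, stdev


def pearson_r(map_a, map_b):
    """Pearson correlation between two rate maps sampled at the same position bins."""
    if len(map_a) != len(map_b):
        raise ValueError("rate maps must have the same number of bins")
    mean_a, mean_b = mean(map_a), mean(map_b)
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(map_a, map_b))
    var_a = sum((a - mean_a) ** 2 for a in map_a)
    var_b = sum((b - mean_b) ** 2 for b in map_b)
    if var_a == 0 or var_b == 0:
        return 0.0  # assumption: a flat map is treated as uncorrelated
    return cov / sqrt(var_a * var_b)


def correlation_threshold(baseline_rs, n_sd=2.0):
    """Mean baseline-baseline correlation minus n_sd sample standard deviations."""
    return mean(baseline_rs) - n_sd * stdev(baseline_rs)


def field_changed(probe_r, baseline_rs, n_sd=2.0):
    """True if the probe-baseline correlation falls below the threshold."""
    return probe_r < correlation_threshold(baseline_rs, n_sd)
```

Here `baseline_rs` would hold one baseline–baseline correlation per cell, and `probe_r` the probe–baseline correlation of the cell being classified.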

Influence of Movement Information.

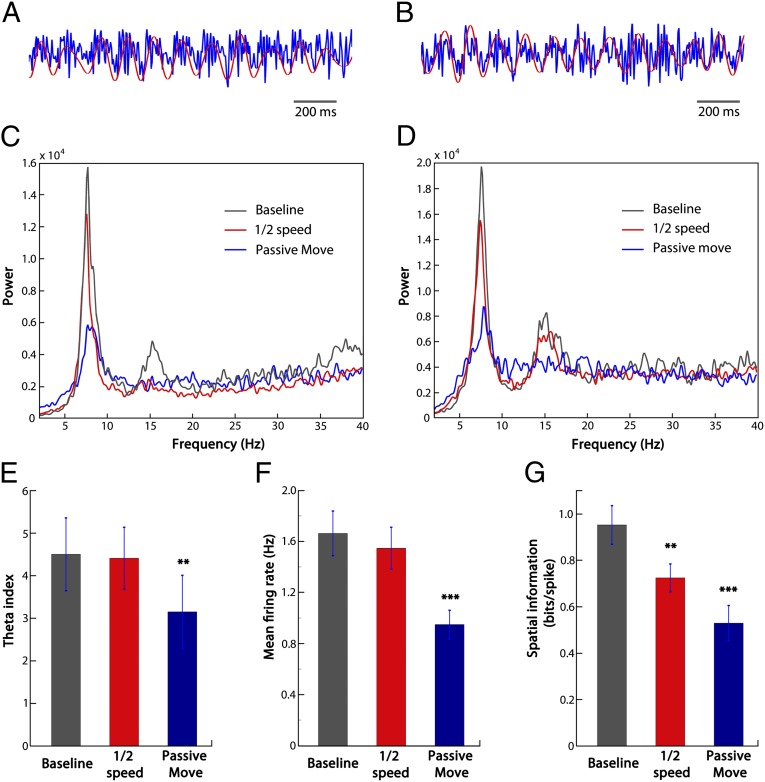

Having established the importance of the visual cues for localization on the track, we next minimized the proprioceptive and motoric inputs related to physical movement in a passive-movement probe trial. We turned off the air cushion underneath the ball so that the mouse sat passively on the ball while its viewpoint moved along the virtual track at 10 cm/s (mean speed was 9.57 ± 0.20 cm/s in self-propelled trials). In this condition, place cells overall showed significantly reduced firing rates and spatial information. Seventy-five percent of the cells showed a significant change in firing pattern (probe–baseline spatial correlation more than 2 SDs below the mean baseline–baseline correlation), with 25% maintaining their firing fields without significant change (Fig. 3 A and B). These remaining fields were distributed fairly evenly across the whole track (Fig. S7). Thus, the majority of place fields required movement-related information in addition to visual environmental information, whereas visual information alone was sufficient for a minority. We then considered whether the variation in dependence on movement-related information reflects differences between animals or within animals (Table 2). There was substantial variation across animals: two animals contained a substantial proportion of cells (i.e., ∼50%) for which visual cues alone were sufficient, whereas in the other three animals the majority of cells (i.e., >85%) relied on both visual and movement-related information. Theta rhythm was also clearly present in the LFP in passive-movement trials, but at significantly reduced power (Fig. 4 C–E).

Fig. 3.

Sensory and motor control of place fields in the virtual environment. (A) Two representative cells showing responses to the manipulation of passive movement: the firing field on the left was maintained, whereas that on the right scattered. (B) Sixteen of 63 cells (25%) show similar firing in the baseline and passive movement trials (spatial correlation within 2 SDs of the mean baseline–baseline correlation, i.e., r > 0.51, red line). (C) Four representative cells showing responses to the manipulation of half speed: the firing fields of cells 1 and 2 moved backward against the direction of movement, whereas that of cell 3 did not move and that of cell 4 scattered. (D) Thirty-two of 73 cells show a significant backward centroid shift (shifting by more than 2 SDs from the mean baseline–baseline shift, 2.93 cm). (E) The cells from the backward-spatial-shift group (n = 32) have a significantly larger centroid shift in the half speed trial than between baseline trials. (F) Classification of place cell responses to the half-speed manipulation.

Table 2.

Cell response types in the passive movement trial for each animal

| Animal ID | No. of place cells | Visual sufficient | Motion required |
| --- | --- | --- | --- |
| 1 | 10 | 6 | 4 |
| 2 | 7 | 0 | 7 |
| 3 | 17 | 7 | 10 |
| 4 | 19 | 2 | 17 |
| 5 | 10 | 1 | 9 |

Fig. 4.

Comparison of theta power and mean firing rates between half speed, passive move trials, and baseline trials. (A and B) Two examples of the raw LFP traces in the baseline condition (in blue). Red traces are the filtered theta (4–12 Hz). (C and D) Two examples of LFP power spectra (A and C are from the same mouse; B and D are from the same mouse). (E) Comparison of theta index (the ratio of mean power within 1 Hz of the theta peak and mean power over 2–40 Hz; n = 5) across conditions. (F) Comparison of mean firing rates (n = 63, from the five animals). (G) Comparison of spatial information (n = 63, from the five animals). **P < 0.01, ***P < 0.001.
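The theta index defined in the caption (mean power within 1 Hz of the theta peak divided by mean power over 2–40 Hz) can be computed from a precomputed power spectrum roughly as below. This is a sketch under stated assumptions: the function name is hypothetical, the spectrum is supplied as parallel lists of frequencies and powers, and the theta peak is searched for within 4–12 Hz (the band used for filtering in the caption, not necessarily the band the authors used for peak detection).

```python
def theta_index(freqs, power, theta_band=(4.0, 12.0), half_width=1.0,
                broad_band=(2.0, 40.0)):
    """Return (theta index, peak frequency) from a power spectrum.

    freqs and power are parallel sequences (Hz, arbitrary power units).
    """
    # Locate the largest spectral value inside the assumed theta search band.
    in_theta = [(p, f) for f, p in zip(freqs, power)
                if theta_band[0] <= f <= theta_band[1]]
    if not in_theta:
        raise ValueError("no spectrum samples fall inside the theta band")
    _peak_power, peak_freq = max(in_theta)

    # Mean power within half_width Hz of the peak.
    near_peak = [p for f, p in zip(freqs, power)
                 if abs(f - peak_freq) <= half_width]
    # Mean power over the broad reference band.
    broad = [p for f, p in zip(freqs, power)
             if broad_band[0] <= f <= broad_band[1]]
    if not broad:
        raise ValueError("no spectrum samples fall inside the broad band")

    index = (sum(near_peak) / len(near_peak)) / (sum(broad) / len(broad))
    return index, peak_freq
```

Because the index is a ratio of mean powers, it does not depend on the absolute scaling of the spectrum, which makes it comparable across recording sessions and animals.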

Effect of Conflicting Visual and Movement Information.

To further examine the role of physical movement-related inputs in controlling place cell firing, we manipulated the relationship between the movement of the ball and the movement of the viewpoint in the VE to induce a conflict between visual and movement information. The gain of ball-to-virtual movement was halved, so that animals had to run twice the distance on the ball in the presence of the visual cues to achieve the same virtual translation as in baseline trials. If place-field location was calculated on the basis of self-motion (motoric or proprioceptive) information such as the number of steps since the start of the run, then place fields would move backward against the direction of movement in this condition. Consistent with this prediction, average place field locations shifted toward the start of the track (shift in firing centroid: 2.64 ± 0.39 cm, P < 0.001).

Similarly to the passive movement manipulation, the half-speed manipulation had different effects on different cells. Across the population, 32/73 place fields (44%) showed a significant effect of the manipulation: shifting closer to the start of the virtual track by more than 2 SDs from the mean of the full-speed population (Fig. 3 C, D, and F). However, the amount of place field movement even in these cells (firing centroid shift of 5.53 ± 0.48 cm compared with the shift between baseline trials of −0.23 ± 0.20 cm, Fig. 3E) was less than would be predicted if they were located solely by path integration (18.03 ± 1.07 cm: half the mean distance of the place field centroids from the start of the track in baseline trials). Thus, even in these cells, movement-related information did not determine firing location alone, but must be combining with visual information. The two types of information conflicted in these trials, and their combination was nonlinear, because the backward-shift of the place fields did not increase parametrically with their distance from the start of the track (Fig. S8, r = 0.20, P = 0.11).
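The pure path-integration prediction quoted above follows from simple arithmetic: if a field fires after a fixed distance run on the ball, then under a ball-to-virtual gain g it appears at g times its baseline virtual distance, that is, shifted backward by (1 − g) times that distance. The sketch below works this through; the function names are hypothetical, and the baseline distance of 36.06 cm is not reported in the text but derived here as twice the stated 18.03 cm prediction.

```python
def predicted_backward_shift(baseline_distance_cm, gain):
    """Backward shift (cm, virtual coordinates) expected if a field is
    located purely by distance run on the ball since the start of the run."""
    if not 0 < gain <= 1:
        raise ValueError("gain is assumed to lie in (0, 1]")
    return (1.0 - gain) * baseline_distance_cm


def fraction_of_prediction(observed_shift_cm, predicted_shift_cm):
    """Observed shift as a fraction of the pure path-integration prediction."""
    return observed_shift_cm / predicted_shift_cm


# Half-speed condition: gain = 0.5, so the predicted shift is half the
# baseline distance of the field centroid from the start of the track.
predicted = predicted_backward_shift(36.06, 0.5)    # 36.06 cm is derived, see lead-in
fraction = fraction_of_prediction(5.53, predicted)  # 5.53 cm is the reported mean shift
```

The resulting fraction (roughly 0.3) is only a descriptive summary of how far the observed shift falls short of the prediction. Since the text reports that the two sources combined nonlinearly, it should not be read as a fixed weighting of movement against visual information.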

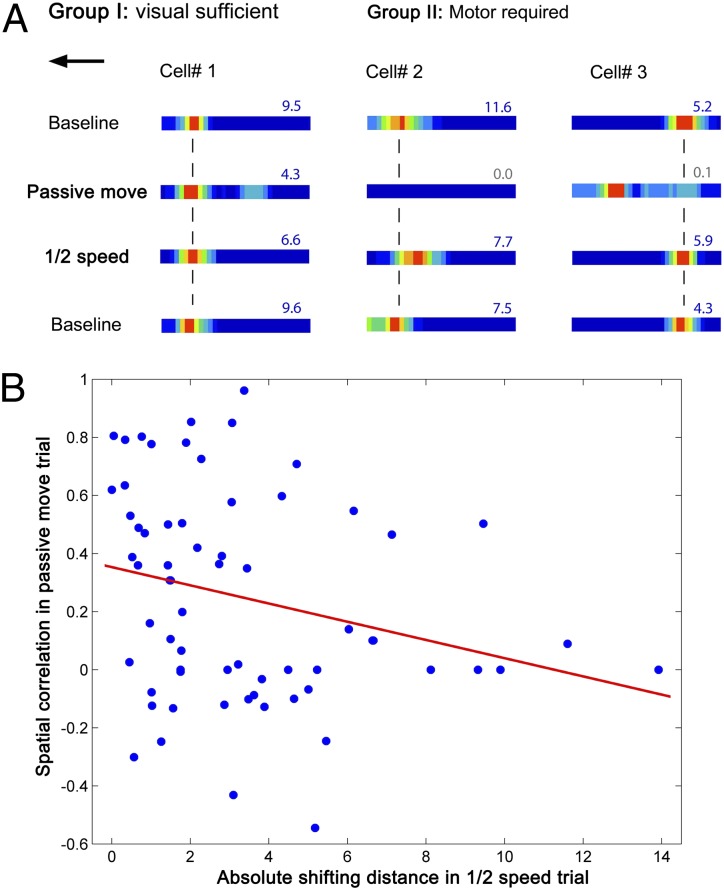

Visual and Movement Information Can Cooperate to Specify Place Field Location.

The passive-movement and half-speed trials reveal a variable dependence on movement information. At one extreme are cells for which visual information is sufficient (25%, showing no significant effect of passive movement). A second group requires movement to fire normally (failing to do so in the passive-movement trial) but shows no significant field shift in the half-speed trial (40%). In the remaining cells, movement information is required and combines with visual information in determining the firing location (35%, Fig. 5A). The effects on firing of the passive-movement condition and the half-speed condition showed a significant trend such that cells showing larger changes in the passive-movement condition (i.e., reduced spatial correlation with baseline) also tended to show larger field shifts in the half-speed condition (Fig. 5B, r = −0.26, P = 0.04).

Fig. 5.

Place cells are driven by heterogeneous combinations of visual and movement-related inputs. (A) A total of 25% of place cells can be driven solely by visual inputs. Cell 1 is an example showing that a place field remained in position in the passive move and half-speed trials. The majority (75%) of place cells also required movement-related inputs. Two representative cells showing dependence on movement-related inputs; their fields disappeared in the passive movement trial, but either moved against the direction of movement in the half-speed trial (cell 2) or maintained their position (cell 3). (B) Relationship of spatial correlation between the passive-movement and baseline conditions to the amount the field shifts in the half-speed condition (r = −0.26, P = 0.04).

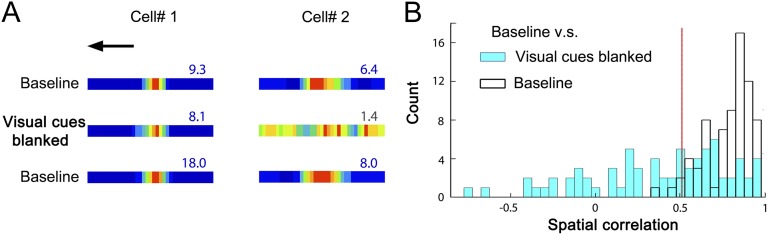

Given that the firing of the majority of place cells reflects both visual- and movement-related information, and that the firing location of a subset of place cells is influenced by both types of information (i.e., the cells which shifted in the half-speed trial), we wondered how they combine in situations in which they are not put into conflict. One possibility is that self-location is initially set (or reset) by environmental information and is updated by movement-related information (or “path integration”). To test this idea, we ran a probe trial in which visual cues were present at the beginning of each run, allowing the mouse to locate itself, but were then blanked when the mouse started to run, leaving only movement-related information to indicate how far the mouse had moved down the track (Fig. S6). Interestingly, a substantial proportion of fields (49%) were not disrupted by this manipulation (Fig. 6), suggesting that movement-related information could indeed sustain their firing during a run following initial sensory localization.

Fig. 6.

Movement-related control of place fields in the virtual environment. (A) Two representative cells showing responses to the manipulation of light-off after run start: the firing field on the left was maintained, whereas that on the right scattered. (B) Thirty-six of 73 cells (49%) show similar firing patterns in baseline and light-off trials (spatial correlations within 2 SDs of the mean baseline–baseline correlation, r > 0.51, red line).

Discussion

Our results from extracellular recordings confirm the intracellular finding of Harvey et al. (25) that CA1 place cells can be activated in a head-fixed VE. The place fields in VR are similar to those in a real environment, in terms of numbers, sizes, and in-field peak rates, with a slight reduction in firing rates (not reaching significance). There is also normal movement-related theta rhythm in the LFP and normal theta-phase precession during active movement through the environment (29). As would be expected, the majority of place cells (81%) required some visual input to show spatially localized firing within the (visual) virtual environment, and the remaining cells mostly fired at the ends of the track, possibly responding to the delivery of reward and its behavioral effects. Further studies will be required to dissociate the role of visual input in providing environmental information (i.e., the location of static landmarks) from its potential role in providing external sensory information relating to motion [i.e., optic flow, which can contribute to path integration in humans, see refs. 30–32, possibly mediated by the hippocampus (33)].

The presence of normal place cell activity in the virtual environment enabled us to dissociate the influences of environmental sensory (visual) and internal movement-related (motoric and proprioceptive) information on place cell firing and LFP theta rhythmicity. The majority of place cells required visual cues, particularly on the side walls, for localized firing in the virtual environment. There was a more heterogeneous dependence on movement-related information. Earlier work suggested that place cells reduced their firing dramatically if animals were restrained tightly (34, 35), but some cells could maintain their firing when animals were moved passively through the environment (36). Our results showed that visual information alone was sufficient for localized firing by 25% of place cells and to maintain an LFP theta rhythm with significantly reduced power. We have previously suggested that place cells receive their environmental sensory inputs from boundary vector cells found in the subiculum and entorhinal cortex (37, 38). These cells signal the distance of the animal’s head from landmarks in an allocentric direction; in the present case, from the visual cues along the edge of the virtual environment. Removal of all salient virtual visual cues affects the majority of place fields, sparing only those adjacent to the reward locations. These findings suggest that, without visual/sensory anchoring, a movement-related path integration system alone is not sufficient for generating place fields.

However, in agreement with previous work stressing movement-related inputs to place cells (17, 18), our results demonstrated that the majority (75%) of place cells were also strongly dependent on movement-related information. The dependence of CA1 place cell firing on movement-related information was surprisingly heterogeneous. Diverse cell responses to manipulations suggest that individual cells are independently driven by different strengths of visual and motor-related path integration inputs; this is consistent with previous work (39, 40). Previous studies have demonstrated that the ability of place cells to follow a visual stimulus depended on the degree of mismatch between that stimulus and the remaining environment cues together with path integration cues (34, 41). It is also possible to devalue the control by the visual cue by making it unstable (42).

For half of the place cells (49%), the presence of visual cues at the start of each run was sufficient to allow normal firing to be supported by movement-related information after removal of visual cues. This is consistent with observations in the running wheel where normal dynamics of place cell firing can be driven by internal self-motion information when sensory input is constant (19, 43, 44). This path integration input to place cells is likely mediated by entorhinal cortical grid cells. Similar manipulations of the gain between visual and physical movement show behavioral evidence for a combined visual/movement-related representation in humans (45).

In conclusion, the firing of CA1 place cells in head-fixed mice navigating a virtual linear track closely resembles their firing in a similar real environment and allows dissociation of the influences of the VE and physical movement. The majority of place cells require both visual- and movement-related information, but the extent of dependence on the latter is heterogeneous across cells. Within this heterogeneity, about half of the cells conform to a model of path integration in which environmental information sets initial self-location that is then updated by movement-related information (5–14, 17).

Materials and Methods

Animal Procedures.

Six male C57BL/6J mice, aged 4–6 mo and weighing 28–32 g at time of surgery, were used as subjects. Mice were singly housed in cages on a 12:12 h light:dark schedule (with lights on at 11:00 AM). All experiments were carried out in accordance with the UK Animals (Scientific Procedures) Act 1986 and received approval from the University College London Ethics Review Panel.

Mice were anesthetized with 1–2% isoflurane in O2/N2O, given buprenorphine (0.5 µg/10 g body weight), and chronically implanted with microdrives loaded with four tetrodes. After surgery, mice were placed in a heated chamber until fully recovered from the anesthetic (normally about 1 h) and then returned to their home cages. After 1 wk of postoperative recovery, the electrodes were advanced ventrally by 60 µm/d until CA1 complex spike cells were found. See SI Materials and Methods for further details.

Virtual Reality Set-up.

The virtual reality (VR) system was designed for head-restrained mice on an air-cushioned spherical treadmill using adaptations to the CaveUT modification (http://publicvr.org/) of the game engine Unreal Tournament 2004 (Epic Software). Two 24-in Samsung monitors were placed at a 90° angle arranged as in Fig. S1 to display a 3D scene generated by the VR software. The view frustum of each monitor was adjusted according to its physical arrangement relative to the head, providing a single viewing location (where animals were located) from which the virtual geometry appeared undistorted. For this physical arrangement, the horizontal field of view of each monitor was 83.8°, and the view was rotated by 45° in opposing directions for each monitor (note that there was a “dead” space of 4° horizontal directly ahead of the animal due to the monitor bezels). Rotation of the Styrofoam ball was detected by an optical computer mouse (Razer Imperator) chosen for high resolution and sampling frequency (5,600 dots per inch at 1 kHz), which was positioned behind the mouse at the intersection between the equator of the ball and the medial plane of the animal. The signal at the vertical axis was interpreted by the VR software as a control signal for the forward and backward movement of the virtual location of the animal, which was logged with a resolution of 50 Hz. Reward was delivered by a syringe pump (Harvard Apparatus) attached to silicone tubing (Sani-Tech STHT-C-040-0) positioned directly in front of the mouse’s mouth. The delivery of the reward was controlled by events in VR via UDP network packets to the local host, captured by software written in Python 2.7 and relayed via USB to a Labjack U3 data acquisition device to produce 5 V TTL signals to trigger the pump. Neural activity was recorded using a separate computer running the Axona DACQ system. Synchronization events were logged by the VR system and sent to the DACQ recordings via UDP network packets, allowing virtual location to be time-locked to electrophysiological recordings.
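The reward relay described above (VR event, then UDP packet to the local host, then a trigger to the pump) might look roughly like the following sketch. It is written in Python 3 rather than the Python 2.7 the authors used, and the packet payload, the port number, and all function names are hypothetical because the text does not give them. The call that would drive the Labjack U3 TTL output is deliberately left as a user-supplied callback rather than guessed.

```python
import socket

REWARD_MESSAGE = b"REWARD"  # hypothetical payload; the real packet format is not given


def handle_packet(payload, trigger_pump):
    """Call trigger_pump() if the payload is a reward event.

    Returns True if the pump was triggered, False otherwise.
    """
    if payload.strip() == REWARD_MESSAGE:
        trigger_pump()
        return True
    return False


def listen(trigger_pump, host="127.0.0.1", port=50007, max_packets=None):
    """Receive UDP packets on the local host and relay reward events.

    host and port are placeholders; max_packets=None means run until interrupted.
    """
    handled = 0
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        while max_packets is None or handled < max_packets:
            payload, _addr = sock.recvfrom(1024)
            handle_packet(payload, trigger_pump)
            handled += 1
```

In use, `trigger_pump` would wrap whatever data-acquisition call produces the TTL pulse. Keeping it as a callback lets the packet handling be exercised without the hardware attached.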

Behavioral Procedure.

Mice underwent 3 d of training sessions in the VR apparatus, one session per day consisting of four trials. Trials were 10-min long; intertrial intervals were 15 min. During a trial, the mice were trained on a linear track with textural cues on the side and end walls (Fig. S1). The mice were trained to run back and forth on the virtual track for milk reward, which was triggered by arrival over white disks on the floor at either end of the track. Training continued while tetrodes were lowered in search of CA1 complex spike cells. After complex spike cells were stably recorded, probe-testing sessions began. These consisted of baseline trials in the training environment, interleaved with six probe trials as described in Fig. S2. See SI Materials and Methods for further details on behavioral procedure and signal recording.

Data Analysis.

Complex spike cells were first identified based on: (i) spike width (peak-trough) >300 µs and (ii) a prominent peak in the spike time autocorrelogram at 3–8 ms. Spatial cell detection (28) was performed to select cells with good spatial selectivity from recorded complex spike cells. For a given cell, spike times were shuffled relative to the mouse’s virtual location 1,000 times to generate shuffled rate maps. The threshold values were defined as the 95th percentiles of the shuffled populations of spatial information. Cells with spatial information higher than the threshold were included. The calculation yielded 95 spatial cells, out of 119 recorded complex spike cells. For comparisons between manipulations, cells that did not fire in all baseline trials were excluded as unreliable; as a result, 73 reliable spatial cells in total were included for further comparisons. Passive movement trials were conducted on five of the six mice, so that 63 cells were included. Further details on data analysis are described in SI Materials and Methods.
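The shuffling test described above can be sketched as follows. Several details are assumptions rather than statements of the authors' method: spatial information is computed with the standard Skaggs bits/spike formula, shuffling is implemented as a circular shift of the spike train relative to position, the threshold uses a nearest-rank percentile, and the function names and input layout (one position bin index and one spike count per 50 Hz position sample) are hypothetical.

```python
import math
import random


def spatial_information(pos_bins, spike_counts, n_bins):
    """Skaggs spatial information (bits/spike).

    pos_bins[i] is the position bin of sample i; spike_counts[i] is the
    number of spikes emitted during that sample.
    """
    occupancy = [0] * n_bins
    spikes = [0] * n_bins
    for b, s in zip(pos_bins, spike_counts):
        occupancy[b] += 1
        spikes[b] += s
    total_samples = sum(occupancy)
    total_spikes = sum(spikes)
    if total_spikes == 0:
        return 0.0
    mean_rate = total_spikes / total_samples  # spikes per sample
    info = 0.0
    for occ, spk in zip(occupancy, spikes):
        if occ == 0 or spk == 0:
            continue  # unvisited or silent bins contribute nothing
        p = occ / total_samples
        ratio = (spk / occ) / mean_rate
        info += p * ratio * math.log2(ratio)
    return info


def shuffle_threshold(pos_bins, spike_counts, n_bins, n_shuffles=1000,
                      percentile=95.0, seed=0):
    """Percentile of spatial information over circularly shifted spike trains."""
    rng = random.Random(seed)
    counts = list(spike_counts)
    n = len(counts)
    shuffled = []
    for _ in range(n_shuffles):
        k = rng.randrange(1, n)  # random non-zero circular shift
        rolled = counts[k:] + counts[:k]
        shuffled.append(spatial_information(pos_bins, rolled, n_bins))
    shuffled.sort()
    # Nearest-rank percentile.
    idx = max(0, math.ceil(percentile / 100.0 * len(shuffled)) - 1)
    return shuffled[idx]


def is_spatial_cell(pos_bins, spike_counts, n_bins, **kwargs):
    """True if observed information exceeds the shuffled threshold."""
    observed = spatial_information(pos_bins, spike_counts, n_bins)
    return observed > shuffle_threshold(pos_bins, spike_counts, n_bins, **kwargs)
```

A circular shift keeps the temporal structure of the spike train while breaking its relationship to position, which is one common way to build the null distribution. Other shuffling schemes would give somewhat different thresholds.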

Acknowledgments

We thank S. Burton for technical assistance. This work was supported by the Wellcome Trust, the Gatsby Charitable Foundation, the European Union SpaceBrain Grant, and the Medical Research Council (United Kingdom).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1215834110/-/DCSupplemental.

References

- 1.O’Keefe J, Dostrovsky J. The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Res. 1971;34(1):171–175. doi: 10.1016/0006-8993(71)90358-1. [DOI] [PubMed] [Google Scholar]

- 2.O'Keefe J, Nadel L. The Hippocampus as a Cognitive Map. Oxford: Oxford Univ Press; 1978. [Google Scholar]

- 3.Muller RU, Kubie JL. The effects of changes in the environment on the spatial firing of hippocampal complex-spike cells. J Neurosci. 1987;7(7):1951–1968. doi: 10.1523/JNEUROSCI.07-07-01951.1987. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Wilson MA, McNaughton BL. Dynamics of the hippocampal ensemble code for space. Science. 1993;261(5124):1055–1058. doi: 10.1126/science.8351520. [DOI] [PubMed] [Google Scholar]

- 5.Mittelstaedt H, Mittelstaedt ML. Mechanismen der orientierung ohne richtende aussenreize. Fortschr Zool. 1973;21:46–58. [Google Scholar]

- 6.Gallistel CR. The Organization of Learning. Cambridge, MA: MIT Press; 1990. [Google Scholar]

- 7.McNaughton BL, et al. Deciphering the hippocampal polyglot: The hippocampus as a path integration system. J Exp Biol. 1996;199(Pt 1):173–185. doi: 10.1242/jeb.199.1.173. [DOI] [PubMed] [Google Scholar]

- 8.Etienne AS, Maurer R, Séguinot V. Path integration in mammals and its interaction with visual landmarks. J Exp Biol. 1996;199(Pt 1):201–209. doi: 10.1242/jeb.199.1.201. [DOI] [PubMed] [Google Scholar]

- 9.Redish AD. Beyond the Cognitive Map: From Place Cells to Episodic Memory. Cambridge, MA: MIT Press; 1999. [Google Scholar]

- 10.Save E, Nerad L, Poucet B. Contribution of multiple sensory information to place field stability in hippocampal place cells. Hippocampus. 2000;10(1):64–76. doi: 10.1002/(SICI)1098-1063(2000)10:1<64::AID-HIPO7>3.0.CO;2-Y. [DOI] [PubMed] [Google Scholar]

- 11.Loomis JM, et al. Nonvisual navigation by blind and sighted: Assessment of path integration ability. J Exp Psychol Gen. 1993;122(1):73–91. doi: 10.1037//0096-3445.122.1.73. [DOI] [PubMed] [Google Scholar]

- 12.Srinivasan M, Zhang S, Bidwell N. Visually mediated odometry in honeybees. J Exp Biol. 1997;200(Pt 19):2513–2522. doi: 10.1242/jeb.200.19.2513. [DOI] [PubMed] [Google Scholar]

- 13.Collett TS, Fry SN, Wehner R. Sequence learning by honeybees. J Comp Physiol. 1993;172:693–706. [Google Scholar]

- 14.O’Keefe J. Place units in the hippocampus of the freely moving rat. Exp Neurol. 1976;51(1):78–109. doi: 10.1016/0014-4886(76)90055-8. [DOI] [PubMed] [Google Scholar]

- 15.O’Keefe J, Conway DH. Hippocampal place units in the freely moving rat: Why they fire where they fire. Exp Brain Res. 1978;31(4):573–590. doi: 10.1007/BF00239813. [DOI] [PubMed] [Google Scholar]

- 16.O’Keefe J, Burgess N. Geometric determinants of the place fields of hippocampal neurons. Nature. 1996;381(6581):425–428. doi: 10.1038/381425a0. [DOI] [PubMed] [Google Scholar]

- 17.Gothard KM, Skaggs WE, McNaughton BL. Dynamics of mismatch correction in the hippocampal ensemble code for space: Interaction between path integration and environmental cues. J Neurosci. 1996;16(24):8027–8040. doi: 10.1523/JNEUROSCI.16-24-08027.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Terrazas A, et al. Self-motion and the hippocampal spatial metric. J Neurosci. 2005;25(35):8085–8096. doi: 10.1523/JNEUROSCI.0693-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Pastalkova E, Itskov V, Amarasingham A, Buzsáki G. Internally generated cell assembly sequences in the rat hippocampus. Science. 2008;321(5894):1322–1327. doi: 10.1126/science.1159775. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Burgess N, Jackson A, Hartley T, O’Keefe J. Predictions derived from modelling the hippocampal role in navigation. Biol Cybern. 2000;83(3):301–312. doi: 10.1007/s004220000172.

- 21. Zipser D. A computational model of hippocampal place fields. Behav Neurosci. 1985;99(5):1006–1018. doi: 10.1037//0735-7044.99.5.1006.

- 22. Sharp PE, Blair HT, Brown M. Neural network modeling of the hippocampal formation spatial signals and their possible role in navigation: A modular approach. Hippocampus. 1996;6(6):720–734. doi: 10.1002/(SICI)1098-1063(1996)6:6<720::AID-HIPO14>3.0.CO;2-2.

- 23. Samsonovich A, McNaughton BL. Path integration and cognitive mapping in a continuous attractor neural network model. J Neurosci. 1997;17(15):5900–5920. doi: 10.1523/JNEUROSCI.17-15-05900.1997.

- 24. O’Keefe J, Recce ML. Phase relationship between hippocampal place units and the EEG theta rhythm. Hippocampus. 1993;3(3):317–330. doi: 10.1002/hipo.450030307.

- 25. Harvey CD, Collman F, Dombeck DA, Tank DW. Intracellular dynamics of hippocampal place cells during virtual navigation. Nature. 2009;461(7266):941–946. doi: 10.1038/nature08499.

- 26. Youngstrom IA, Strowbridge BW. Visual landmarks facilitate rodent spatial navigation in virtual reality environments. Learn Mem. 2012;19(3):84–90. doi: 10.1101/lm.023523.111.

- 27. Hölscher C, Schnee A, Dahmen H, Setia L, Mallot HA. Rats are able to navigate in virtual environments. J Exp Biol. 2005;208(Pt 3):561–569. doi: 10.1242/jeb.01371.

- 28. Wills TJ, Cacucci F, Burgess N, O’Keefe J. Development of the hippocampal cognitive map in preweanling rats. Science. 2010;328(5985):1573–1576. doi: 10.1126/science.1188224.

- 29. Royer S, et al. Control of timing, rate and bursts of hippocampal place cells by dendritic and somatic inhibition. Nat Neurosci. 2012;15(5):769–775. doi: 10.1038/nn.3077.

- 30. Riecke BE, Cunningham DW, Bülthoff HH. Spatial updating in virtual reality: The sufficiency of visual information. Psychol Res. 2007;71(3):298–313. doi: 10.1007/s00426-006-0085-z.

- 31. Klatzky RL, Loomis JM, Beall AC, Chance SS, Golledge RG. Spatial updating of self-position and orientation during real, imagined, and virtual locomotion. Psychol Sci. 1998;9:293–298.

- 32. Kearns MJ, Warren WH, Duchon AP, Tarr MJ. Path integration from optic flow and body senses in a homing task. Perception. 2002;31(3):349–374. doi: 10.1068/p3311.

- 33. Wolbers T, Wiener JM, Mallot HA, Büchel C. Differential recruitment of the hippocampus, medial prefrontal cortex, and the human motion complex during path integration in humans. J Neurosci. 2007;27(35):9408–9416. doi: 10.1523/JNEUROSCI.2146-07.2007.

- 34. Knierim JJ, Kudrimoti HS, McNaughton BL. Place cells, head direction cells, and the learning of landmark stability. J Neurosci. 1995;15(3 Pt 1):1648–1659. doi: 10.1523/JNEUROSCI.15-03-01648.1995.

- 35. Foster TC, Castro CA, McNaughton BL. Spatial selectivity of rat hippocampal neurons: Dependence on preparedness for movement. Science. 1989;244(4912):1580–1582. doi: 10.1126/science.2740902.

- 36. Song EY, Kim YB, Kim YH, Jung MW. Role of active movement in place-specific firing of hippocampal neurons. Hippocampus. 2005;15(1):8–17. doi: 10.1002/hipo.20023.

- 37. Hartley T, Burgess N, Lever C, Cacucci F, O’Keefe J. Modeling place fields in terms of the cortical inputs to the hippocampus. Hippocampus. 2000;10(4):369–379. doi: 10.1002/1098-1063(2000)10:4<369::AID-HIPO3>3.0.CO;2-0.

- 38. Lever C, Burton S, Jeewajee A, O’Keefe J, Burgess N. Boundary vector cells in the subiculum of the hippocampal formation. J Neurosci. 2009;29(31):9771–9777. doi: 10.1523/JNEUROSCI.1319-09.2009.

- 39. Bures J, Fenton AA, Kaminsky Y, Wesierska M, Zahalka A. Rodent navigation after dissociation of the allocentric and idiothetic representations of space. Neuropharmacology. 1998;37(4-5):689–699. doi: 10.1016/s0028-3908(98)00031-8.

- 40. Skaggs WE, McNaughton BL. Spatial firing properties of hippocampal CA1 populations in an environment containing two visually identical regions. J Neurosci. 1998;18(20):8455–8466. doi: 10.1523/JNEUROSCI.18-20-08455.1998.

- 41. Rotenberg A, Muller RU. Variable place-cell coupling to a continuously viewed stimulus: Evidence that the hippocampus acts as a perceptual system. Philos Trans R Soc Lond B Biol Sci. 1997;352(1360):1505–1513. doi: 10.1098/rstb.1997.0137.

- 42. Jeffery KJ, Donnett JG, Burgess N, O’Keefe JM. Directional control of hippocampal place fields. Exp Brain Res. 1997;117(1):131–142. doi: 10.1007/s002210050206.

- 43. Czurkó A, Hirase H, Csicsvari J, Buzsáki G. Sustained activation of hippocampal pyramidal cells by ‘space clamping’ in a running wheel. Eur J Neurosci. 1999;11(1):344–352. doi: 10.1046/j.1460-9568.1999.00446.x.

- 44. Hirase H, Czurkó A, Csicsvari J, Buzsáki G. Firing rate and theta-phase coding by hippocampal pyramidal neurons during ‘space clamping’. Eur J Neurosci. 1999;11(12):4373–4380. doi: 10.1046/j.1460-9568.1999.00853.x.

- 45. Tcheang L, Bülthoff HH, Burgess N. Visual influence on path integration in darkness indicates a multimodal representation of large-scale space. Proc Natl Acad Sci USA. 2011;108(3):1152–1157. doi: 10.1073/pnas.1011843108.
