Abstract

Objectives

To describe the development and implementation process and assess the effect on self-reported clinical practice changes of a multidisciplinary, collaborative, interactive continuing medical education (CME)/continuing education (CE) program on chronic obstructive pulmonary disease (COPD).

Methods

Multidisciplinary subject matter experts and education specialists used a systematic instructional design approach and collaborated with the American College of Chest Physicians and American Academy of Nurse Practitioners to develop, deliver, and reproduce a 1-day interactive COPD CME/CE program for 351 primary care clinicians in 20 US cities from September 23, 2009, through November 13, 2010.

Results

We recorded responses to demographic, self-confidence, and knowledge/comprehension questions by using an audience response system. Before the program, 173 of 320 participants (54.1%) had never used the Global Initiative for Chronic Obstructive Lung Disease recommendations for COPD. After the program, clinician self-confidence improved in all areas measured. In addition, participant knowledge and comprehension significantly improved (mean score increased from 77.1% to 94.7%; P<.001). We implemented the commitment-to-change strategy in courses 6 through 20. A total of 271 of 313 participants (86.6%) completed 971 commitment-to-change statements, and 132 of 271 (48.7%) completed the follow-up survey. Of the follow-up survey respondents, 92 of 132 (69.7%) reported completely implementing at least one clinical practice change, and only 8 of 132 (6.1%) reported inability to make any clinical practice change after the program.

Conclusion

A carefully designed, interactive, flexible, dynamic, and reproducible COPD CME/CE program that is tailored to clinicians' needs and uses diverse instructional strategies and media can yield short-term and long-term improvements in clinician self-confidence, knowledge/comprehension, and clinical practice.

Abbreviations and Acronyms: AANP, American Academy of Nurse Practitioners; ACCP, American College of Chest Physicians; ADDIE, Analysis, Design, Development, Implementation, and Evaluation; APN, advanced practice nurse; CE, continuing education; CI, confidence interval; CME, continuing medical education; COPD, chronic obstructive pulmonary disease; DO, doctor of osteopathy; GOLD, Global Initiative for Chronic Obstructive Lung Disease; MD, medical doctor; PA, physician assistant; SME, subject matter expert

Limitations of current continuing medical education (CME)/continuing education (CE) programs are well documented.1-3 Most CME/CE activities that evaluate only attendance and satisfaction cannot document improved clinician performance. Several factors may explain this: most programs rely on content experts who lack expertise in instructional design principles, work with limited resources, and often lack guidance from educational experts. Research results indicate that activities planned using specific adult learning principles can improve clinician confidence and performance.4 The Institute of Medicine has highlighted 8 areas of concern within the current model of continuing education for health care professionals; the primary concerns are research gaps, regulation, financing, and team-based educational activities.3 This recent Institute of Medicine report calls on the medical community to design CME/CE offerings tailored to clinician learners' educational needs by using diverse instructional models, strategies, and media.1

Through a collaborative effort, we created a CME/CE program for primary care clinicians, including physicians (medical doctors [MDs]/doctors of osteopathy [DOs]), advanced practice nurses (APNs), and physician assistants (PAs), that aims to improve their knowledge/comprehension, self-confidence, and, ultimately, clinical practice. A multidisciplinary team of subject matter experts (SMEs), including pulmonologists (S.G.A., E.J.D., N.A.H.), a family physician (B.P.Y.), an APN (J.W.), and educational specialists (J.P., E.D.), designed and implemented this program based on the Institute of Medicine recommendations.3 Our first priorities were establishing group trust and mutually agreed-on goals. We ensured that the program included key chronic obstructive pulmonary disease (COPD) content relevant to the interdisciplinary target audience. Our objectives in this article are to describe the development and implementation process and assess the effect on self-reported clinical practice changes of a multidisciplinary, collaborative, interactive CME/CE program on COPD. We report the process of developing this interactive CME/CE program and measuring its effectiveness on self-confidence, knowledge/comprehension, and changes in clinical practice. For the first time, we introduce the 6 learning categories developed by one of the authors (E.D.) and show how these categories can be applied to develop an effective CME/CE program. We describe our experience of translating concepts into practical application by applying a systematic approach based on sound educational principles. We also describe how to facilitate, collaborate, plan, design, and deliver a high-quality CME/CE program on COPD best practices in multiple US cities.

Methods

Analysis, Design, Development, Implementation, and Evaluation: Analysis Phase and Study Population

We used Analysis, Design, Development, Implementation, and Evaluation (ADDIE), a systematic approach to instructional development, to design the program. Step 1 (analysis phase) was to perform a needs assessment and identify clinical and educational practice gaps. Because physician self-assessment is unreliable,2,5 we used literature reviews, interviews, survey data, and expert opinion to assess COPD care gaps.6 The needs assessment highlighted gaps between current practice and recommended practice. One major practice gap is late diagnosis of COPD, which results in suboptimal management.7-9 Primary care clinicians provide most COPD care.7

The American Academy of Nurse Practitioners (AANP) conducted a survey in 2008 to assess interest in educational topics, and COPD ranked 15th of more than 240 topics. Within COPD, respondents identified the following topics of interest: management of acute COPD exacerbations, spirometry, and pharmacotherapy. Results from another survey, administered to US primary care physicians, found that 41% to 48% were unaware of COPD guidelines.8 Barriers to optimal COPD recognition and care included patient-related and practice-related factors.8 Patients often defer medical attention for early COPD, and many primary care physicians rely on overt respiratory symptoms to recognize COPD.8,10 Few physicians surveyed reported high confidence in detecting (30%-36%) or treating (27%-34%) COPD.8 On the basis of these needs and practice gaps, our group of multidisciplinary SMEs (S.G.A., E.J.D., N.A.H., J.W., B.P.Y.) determined that the program had to (1) be based on the Global Initiative for Chronic Obstructive Lung Disease (GOLD) recommendations, (2) focus on recognizing clinical COPD risk factors, (3) provide practical information and tools for translating guideline recommendations into clinical practice, and (4) target primary care clinicians.

ADDIE: Design Phase and Outcome Measures

On the basis of the analysis phase, we designed the course entitled “COPD: What Really Works? A Best Practices Workshop for Primary Care” with these overall learning goals: (1) recognize clinical factors that identify patients at risk for COPD, (2) translate best practice recommendations for COPD into clinical practice, and (3) apply best practice recommendations for COPD in clinical scenarios.

We developed formative assessment tools consisting of 7 self-assessment confidence questions and 10 pretest and 10 posttest questions. We linked each pretest and posttest question to instructional objectives, and all SMEs and an independent reviewer with expertise in item writing and COPD peer reviewed each question. We tested and modified the questions only during the first 5 courses. To reduce testing bias, we wrote pretest and posttest questions that evaluated the same concepts but used different content. The faculty did not reveal the answers until after the posttest was completed. Five pretest and posttest questions measured Bloom's taxonomy level 1 (knowledge), and 5 questions measured levels 2 and 3 (comprehension/application).11 During courses 6 through 20, we implemented a commitment-to-change form and encouraged participants to write as many as 4 concrete, measurable goals that they were willing to implement in their practices as a result of program participation (Bloom's taxonomy level 3: application).12 Willing participants returned their completed commitment-to-change form, which we mailed approximately 3 months after the program, in the self-addressed, stamped envelope we provided. Instead of a name, each form carried a unique participant number linked to an administrative database, which allowed us to identify participants who had not returned a survey. We e-mailed up to 2 reminders to nonresponders, 5 and 6 months after the course, with a link to an online survey. We did not require participation to receive CME/CE credit and did not offer financial incentives.

American College of Chest Physicians 6 Learning Categories

On the basis of our target audience and learning objectives, we created a collaborative approach to develop and implement this program. We selected educational methods and media based on the educational model of 6 learning categories developed by one of the authors (E.D.) (Table 1). These learning categories are based on Bloom's taxonomy, which classifies learning objectives into levels of increasing cognitive complexity.11 In this article, we describe and define these learning categories for the first time in the literature and explain their application to an educational program. Clinician learners typically reinforce existing knowledge or acquire new knowledge through lectures (learning category 1). As learning category levels increase, learners receive knowledge in more complex and integrated formats that allow them to apply information in sophisticated ways, such as in case-based or simulated environments. Ultimately, the goal of the educational program is to allow participants to process information on the basis of various experiences.

TABLE 1.

Educational and Media Methods, Instructional Objectives, and ACCP 6 Learning Categories

| Educational and media method | Instructional objectives | ACCP learning category |
|---|---|---|
| Online precourse suggested reading | Discuss articles related to the care and treatment of patients with COPD | Category II: self-directed learning |
| Video of patient-clinician interaction (faculty-facilitated video: entire group for 25 minutes) | Identify the signs and symptoms that suggest a diagnosis of COPD during a routine clinic visit | Category IV: case and problem-based learning |
| | List 5 screening questions that can be used to determine whether further testing is needed to confirm a diagnosis of COPD | |
| | Identify 4 treatment interventions appropriate for a patient diagnosed as having moderate COPD | |
| Presentation: Identifying Patients With COPD (entire group for 35 minutes) | Define COPD | Category I: lecture-based learning |
| | Describe the burden of COPD | |
| | Identify populations at greatest risk | |
| | Recognize case-finding methods | |
| Presentation: Confirming the Diagnosis (entire group for 45 minutes) | Select individual patients appropriate for screening with spirometry | Category I: lecture-based learning |
| | Identify the recommended GOLD standard(s) to confirm and assess a COPD diagnosis | |
| | Differentiate normal, obstructive, and restrictive spirogram patterns | |
| Presentation: Treatment Strategies (entire group for 60 minutes) | Recognize the appropriate daily treatment found to improve outcomes in patients with moderate to severe COPD according to the GOLD recommendations | Category III: evidence-based learning |
| | Recognize nonpharmacologic factors key in the prevention and management of COPD | |
| | Recognize optimal treatment for improving outcomes of moderate to severe acute exacerbations of COPD | |
| Interactive demonstration: Inhaler devices (groups of up to 9 for 20 minutes) | List different types of inhaler devices used in delivering COPD medications | Category V: simulation |
| | Identify appropriate techniques and common errors in using these devices | |
| | Recognize patient and practitioner factors that may influence choice of inhaler device | |
| Small-group, case-based workshop: coding and spirometry interpretation (small groups with up to 12 participants per group for 60 minutes) | Discuss the elements necessary to code pulmonary function tests | Category IV: case and problem-based learning |
| | Select case-based examples of pulmonary function tests | |
| Small-group, spirometry skills workshop (small groups of up to 12 participants within the workshop but no more than 4 participants per spirometer for 60 minutes) | Reproduce a spirometry test | Category V: simulation |
| | Coach a colleague through a spirometry test | |
| Small-group, simulated patient cases workshop (small groups of up to 6 participants per faculty, maximum of 12 clinicians within the workshop) | Demonstrate interviewing techniques to assess possible COPD symptoms | Category V: simulation |
| | Demonstrate understanding of the symptoms and functional limitation that patients commonly experience at different levels of COPD severity | |
| | Demonstrate knowledge of the application of the GOLD COPD care management recommendations | |
| | Demonstrate ability to consider multiple morbidities and COPD comorbidities when selecting therapy | |
| Commitment-to-change statements with follow-up survey | Demonstrate a commitment to change by identifying measurable goals that learners would like to implement into practice as a result of this course | Category VI: quality improvement |

ACCP = American College of Chest Physicians; COPD = chronic obstructive pulmonary disease; GOLD = Global Initiative for Chronic Obstructive Lung Disease.

In a CME/CE environment, information may not appear chronologically. The education model deploys a learning continuum in which knowledge generates appropriate actions and allows participants to judge whether particular actions will yield intended results. By including multiple learning strategies, the model helps learners realize their full intellectual potential. The model uses formative assessment tools to allow learners to practice applying information before incorporating it into clinical practice. Miller et al13 described modular educational activities based on learner capabilities and goals. Many educators believe that such personalized approaches to educational delivery can meet the future demands of advanced learners. Using these 6 learning categories as a foundation, we incorporated multiple learning strategies into our program. A multispecialty team of 5 faculty members (COPD experts and respiratory therapists) collaborated to teach the program.

ADDIE: Development Phase

The SMEs worked with educational staff to develop the course content. As highlighted in evidence-based education guidelines and other resources, multiple educational activities that engage clinicians and use various instructional strategies are substantially more effective than single, passive activities.1,2,4,14-16 We therefore developed the course according to these principles.

ADDIE: Implementation Phase (Details of the Program)

The American College of Chest Physicians (ACCP) and AANP partnered to implement and evaluate this COPD CME/CE program for clinicians in 20 US cities from September 23, 2009, through November 13, 2010 (Table 2). We chose many of these cities on the basis of a high prevalence of smoking and COPD and others on the basis of limited opportunities for CME/CE activities. Participants remained in a single group of up to 36 for the morning sessions and divided into small groups of 4 to 12 clinicians in the afternoon (Table 1). We limited enrollment to 36 participants per course to optimize the faculty-to-participant ratio in the small-group sessions. The New England Institutional Review Board approved the study. All faculty members attended a 3-hour face-to-face training session. We offered up to 6.75 hours of AMA PRA Category 1 credit, AANP CE credit, and American Academy of Family Physicians Prescribed credit.

TABLE 2.

Participant Characteristics

| Characteristic | No. (%) of participants (N=351) |
|---|---|
| Highest training | |
| MD/DO | 74 (21.1) |
| PA | 55 (15.7) |
| APN | 180 (51.3) |
| Other (PharmD, RN, RT) | 42 (12.0) |
| Number of years in practice (N=324) | |
| <6 | 129 (39.8) |
| 6-15 | 88 (27.2) |
| 16-25 | 44 (13.6) |
| >25 | 63 (19.4) |
| Practice categories (N=322) | |
| Community, hospital-owned practice | 30 (9.3) |
| Hospital-based academic setting | 38 (11.8) |
| Large multidisciplinary primary care practice | 39 (12.1) |
| Large physician-only primary care practice | 3 (0.9) |
| Small multidisciplinary primary care practice | 36 (11.2) |
| Small physician-only primary care practice | 41 (12.7) |
| NP-owned practice | 6 (1.9) |
| Specialty practice | 61 (19.0) |
| Other | 68 (21.1) |
| Age group (y) (N=326) | |
| 20-34 | 47 (14.4) |
| 35-49 | 105 (32.2) |
| 50-64 | 148 (45.4) |
| ≥65 | 26 (8.0) |
| Female (N=322) | 237 (73.6) |
| Region | |
| Ann Arbor, MI | 23 (6.6) |
| Atlanta, GA | 21 (6.0) |
| Denver, CO | 26 (7.4) |
| Hartford, CT | 7 (2.0) |
| Houston, TX | 29 (8.3) |
| Kansas City, KS | 14 (4.0) |
| Las Vegas, NV | 10 (2.8) |
| Louisville, KY | 23 (6.6) |
| Memphis, TN | 21 (6.0) |
| Miami, FL | 9 (2.6) |
| Mobile, AL | 21 (6.0) |
| New Orleans, LA | 18 (5.1) |
| Phoenix, AZ | 20 (5.7) |
| Pittsburgh, PA | 17 (4.8) |
| Portland, OR | 30 (8.5) |
| Raleigh, NC | 32 (9.1) |
| Richmond, VA | 17 (4.8) |
| Sacramento, CA | 13 (3.7) |

APN = advanced practice nurse; DO = doctor of osteopathy; MD = medical doctor; NP = nurse practitioner; PA = physician assistant; PharmD = doctor of pharmacy; RN = registered nurse; RT = respiratory therapist.

We started the day with an introduction, an icebreaker, and the pretest. We then showed a 17-minute, faculty-facilitated video that used the predisposing-enabling-reinforcing instructional framework developed by Green and Kreuter.17 The video highlighted skillful clinician questioning in a routine clinician-patient interaction (Table 1). This method creates and reinforces a teachable moment (predisposing) by allowing learners to compare themselves with an ideal standard.17 We based the 3 morning lectures on the evidence-based GOLD recommendations7 and used case presentations and the audience response system to encourage learner participation (Table 1). Because clinicians learn best by doing,2 participants spent the afternoon in 3 small-group workshops (12 participants maximum per workshop): simulated role-play exercises, hands-on spirometry, and case-based spirometry billing/coding and interpretation. We developed educational scenarios (on identifying and managing an initial COPD diagnosis, medication nonadherence, comorbidities, end-of-life care, and other issues) to allow participants to practice interview techniques for assessing COPD symptoms and to apply GOLD recommendations. The third (reinforcing) component of the instructional framework consists of materials that support later recall; therefore, we provided a precourse suggested reading list, syllabus, pocket guidelines, tobacco-dependence treatment toolkit, and key articles. Finally, on the basis of concepts contributing to clinician behavior change,18 we addressed barriers to implementing changes in clinical practice.

ADDIE: Evaluation Phase

As part of the pilot program, SMEs attended each session and reviewed participants' course evaluations. After the program, the SMEs and staff met to determine areas for improvement. On the basis of the observations and evaluations, we made adjustments to the program during the first 5 courses.

Faculty Training and Development

Creating a 3-hour faculty-training program was crucial to making the program interactive and flexible yet easily reproducible. The training focused on time management, adult learning principles, and group facilitation skills. To ensure reproducibility, we provided faculty members with detailed lesson plans and links to recordings of previous programs.

Statistical Analyses

We summarized participant demographic and practice characteristics with frequencies and percentages for discrete variables and mean and SD for continuous variables. We used a McNemar test to compare matched pretest-posttest question pairs for clinician self-confidence and knowledge. We also examined the score change from pretest to posttest for all 10 questions and for the knowledge (5 questions) and comprehension/application (5 questions) subscores by using a paired t test. We compared awareness of the GOLD recommendations among practice types (ie, APN, PA, and MD/DO) by using a Pearson χ2 test. We used a κ statistic to evaluate agreement in pretest-to-posttest change (improved or worsened) on each question among practice types, number of years in practice, and age groups. P<.05 was considered significant for all analyses. We performed all analyses with SAS statistical software, version 9.1 (SAS Institute Inc, Cary, NC).
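For readers unfamiliar with the McNemar test, it compares paired yes/no outcomes (for example, correct on the pretest vs correct on the posttest) using only the discordant pairs. The following is a minimal Python sketch of the chi-square form with 1 degree of freedom; the function name `mcnemar` and the counts in the example are hypothetical and are not drawn from this study, and the text does not state whether a continuity correction or an exact binomial version was used.

```python
import math


def mcnemar(b: int, c: int, continuity: bool = False) -> tuple[float, float]:
    """McNemar test for paired binary outcomes (hypothetical helper).

    b: pairs correct on the pretest but incorrect on the posttest
    c: pairs incorrect on the pretest but correct on the posttest
    Concordant pairs (correct both times or incorrect both times) do not enter the statistic.
    Returns (chi-square statistic with 1 df, two-sided P value).
    """
    if b + c == 0:
        raise ValueError("no discordant pairs; the test is undefined")
    diff = abs(b - c)
    if continuity:
        # Continuity-corrected version, clamped at zero
        diff = max(diff - 1, 0)
    stat = diff ** 2 / (b + c)
    # For 1 df, P(chi2 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2))
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Hypothetical counts for a single question (not study data):
# 5 participants were correct only on the pretest, 40 only on the posttest.
stat, p = mcnemar(5, 40)
print(f"chi2 = {stat:.2f}, P = {p:.2g}")
```

Because only discordant pairs contribute, a question that nearly everyone answered correctly on both tests yields little evidence of change regardless of sample size, which is why the test suits matched pretest-posttest designs.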

Results

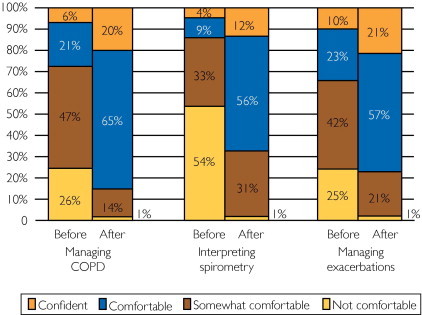

Three hundred fifty-one participants attended one of the interactive CME/CE programs. We recorded responses to demographic, self-confidence, and knowledge/comprehension questions by using an audience response system. Of the respondents to the audience response system, 237 of 322 (73.6%) were women and 180 of 351 (51.3%) were APNs (Table 2). Before attending the program, 173 of 320 participants (54.1%) had never used the GOLD recommendations. Clinician self-confidence improved after the course in all areas measured (Figure 1). In addition, clinician knowledge/comprehension significantly improved (mean ± SD pretest percentage correct, 77.1%±16.4; 95% confidence interval [CI], 76.2%-78.9%; and mean ± SD posttest percentage correct, 94.7%±8.7; 95% CI, 94.2%-95.2%; P<.001), with a mean absolute improvement of 17.6±13.2 percentage points. Of the 5 knowledge (recall) questions, the mean ± SD improvement in pretest vs posttest scores was 14%±5.0% (95% CI, 9.6%-18.4%), from 83.1% to 97.1% (P=.001). The mean improvement in the 5 comprehension/application questions was 22.7%±17.5% (95% CI, 7.4%-38.0%), from 68.8% to 91.5% (P<.001).
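The subscore intervals can be checked arithmetically. The text does not state how they were computed; assuming a normal-approximation interval, mean ± 1.96 × SD/√n, taken across the 5 questions in each subscore, the sketch below reproduces the reported bounds. The helper name `normal_ci` is hypothetical, and this is a consistency check rather than a statement of the authors' method.

```python
import math


def normal_ci(mean: float, sd: float, n: int, z: float = 1.96) -> tuple[float, float]:
    """Normal-approximation CI for a mean: mean +/- z * SD / sqrt(n)."""
    half_width = z * sd / math.sqrt(n)
    return mean - half_width, mean + half_width


# Reported mean improvement and SD (percentage points), n = 5 questions per subscore
knowledge = normal_ci(14.0, 5.0, 5)
comprehension = normal_ci(22.7, 17.5, 5)
print([round(x, 1) for x in knowledge])      # [9.6, 18.4]
print([round(x, 1) for x in comprehension])  # [7.4, 38.0]
```

With only 5 questions per subscore, a t-based interval (4 degrees of freedom) would use a larger multiplier than 1.96 and would therefore be wider than the intervals reported here.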

FIGURE 1.

Percentage of participants feeling not comfortable, somewhat comfortable, comfortable, or confident with managing chronic obstructive pulmonary disease (COPD), interpreting spirometry, and managing acute exacerbations of COPD before and after the program.

We noted participant differences in demographic characteristics, self-confidence, and knowledge/comprehension according to discipline. In general, APNs had been in practice fewer years than had MDs/DOs or PAs (P<.001). A total of 97 of 180 NPs/APNs (53.9%), 37 of 55 PAs (67.3%), and 40 of 74 MDs/DOs (54.1%) were unaware of the GOLD recommendations before the course (P=.002). The APNs and PAs generally reported significantly less comfort with managing COPD and interpreting/coding spirometry than did MDs/DOs (P<.001). These trends continued after the course for interpreting spirometry (P<.001), managing acute exacerbations (P=.002), and billing/coding (P<.001). However, the groups no longer differed in comfort with GOLD recommendations (P=.90) or managing COPD (P=.19). The disciplines also did not differ on 9 of 10 knowledge/comprehension questions. Significantly more MDs/DOs than APNs and PAs correctly answered a spirometry flow-volume loop interpretation/application question on the pretest (P=.048), but no differences were found after the course (P=.20). Despite substantial differences in confidence and knowledge before the program, many gaps between disciplines closed after this educational intervention.

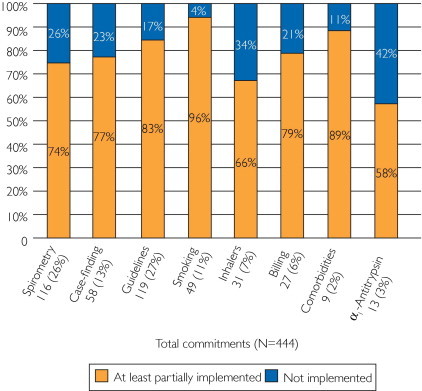

Three hundred thirteen clinicians participated in the last 15 courses, in which we implemented the commitment-to-change statement. A total of 271 of 313 participants (86.6%) took part in the commitment-to-change process and wrote 971 commitments (mean ± SD, 3.58±0.72 commitments per person). Participants cited commitments related to spirometry (ordering/interpreting tests and/or acquiring a spirometer), identification of new COPD patients (incorporating screening questionnaires into practice), implementing guidelines, smoking cessation, and others (Figure 2). A total of 132 of 271 participants (48.7%) responded to the 3- to 6-month follow-up survey and reported the status of 444 commitments (46% of original commitments; mean, 3.36 per respondent).
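The percentages in this paragraph rest on nested denominators: 313 attendees, 271 who made commitments, and 132 who responded; 971 commitments, of which 444 had follow-up status. A short Python check, using a hypothetical helper `pct`, recomputes them from the reported counts. Note that 444 of 971 is 45.7%, which the text rounds to 46%.

```python
def pct(k: int, n: int) -> float:
    """Percentage k/n rounded to one decimal, matching the style used in the text."""
    return round(100 * k / n, 1)


# Counts reported for courses 6 through 20
participants, committed, commitments = 313, 271, 971
respondents, followed_up = 132, 444

print(pct(committed, participants))         # 86.6
print(round(commitments / committed, 2))    # 3.58 commitments per participant
print(pct(respondents, committed))          # 48.7
print(pct(followed_up, commitments))        # 45.7 (reported as 46%)
print(round(followed_up / respondents, 2))  # 3.36 commitments per respondent
```

Keeping each numerator paired with its own denominator makes clear that the follow-up response rate (48.7%) refers to people, whereas the 46% refers to commitments.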

FIGURE 2.

Percentage of commitments addressing a variety of topics grouped in categories that were not implemented vs those that were at least partially implemented by study participants. Topics included the following: spirometry—increase spirometry use, personally interpret more spirometry tests, or acquire a spirometer for the office; case finding—implement a chronic obstructive pulmonary disease (COPD) screener questionnaire and ask more questions about subtle respiratory symptoms in smokers older than 40 years; guidelines—implement the Global Initiative for Chronic Obstructive Lung Disease recommendations in daily practice, use long-acting bronchodilators as first-line therapy in COPD, send patients with COPD to pulmonary rehabilitation, reduce risk factors, and improve management of acute exacerbations; smoking—ask every patient about smoking status, advise every smoker to quit, and increase smoking cessation counseling; inhalers—instruct patients in proper inhaler use and ask patients to perform return demonstration to ensure proper technique; billing—start coding and billing for spirometry; comorbidities—search for and address comorbidities, such as depression, anxiety, osteoporosis, and cardiovascular disease, in patients with COPD; α1-antitrypsin—start testing patients with COPD for α1-antitrypsin deficiency; and other (not shown in figure).

Notably, 92 of 132 respondents (69.7%) completely implemented at least one commitment. Only 8 of 132 (6.1%) were unable to implement any changes, 7 of whom were no longer employed or in practice. Overall, clinicians reported at least partially implementing most commitments (Figure 2). No differences were found in practice type (MD/DO vs APN vs PA), number of practice years, or age among those who were or were not able to at least partially implement their commitments. On the basis of lessons learned from this interactive program, 125 of 132 respondents (94.7%) self-reported making substantial practice changes.

Participants were asked about barriers encountered when implementing these changes. Respondents reported 223 barriers while trying to implement 444 commitments. The most common barriers included time limitations (42 of 223 [18.8%]), limited resources (27 of 223 [12.1%]), lack of fiscal support (25 of 223 [11.2%]), difficulty remembering to incorporate changes into the daily routine (20 of 223 [9.0%]), organizational issues (20 of 223 [9.0%]), and inadequate knowledge. Although respondents identified substantial barriers, most still reported practice changes.

Discussion

We describe our experience designing and implementing a successful interactive, multimedia, multidisciplinary, collaborative CME/CE COPD program for primary care clinicians in 20 cities across the United States. We based this program on adult learning and instructional design principles and the ACCP 6 learning categories. Our data suggest that this program is associated with clinical practice changes and significant improvements in clinician self-confidence and knowledge/comprehension. Consistent with the findings of our initial needs assessment in the analysis phase, 173 of 320 clinician attendees (54.1%) had never used the GOLD recommendations and admitted to substantial discomfort with managing COPD and with spirometry interpretation and billing. A total of 125 of 132 respondents (94.7%) to the follow-up commitment-to-change survey reported substantial clinical practice changes after the program.

Our results suggest that this COPD CME/CE program helped close the practice gaps identified in the needs assessment, as reflected in the practice changes participants reported after the program. Adults seek information that they can apply pragmatically to their immediate circumstances; the more relevant and practical the information, the more likely they are to change behavior. The high response rates and participants' continued engagement in the learning and practice improvement process during and after the course, despite the lack of financial incentives, suggest that COPD is an important topic for these clinicians.

Our study is unique in that we evaluated self-confidence, knowledge/comprehension, and commitments to change in a large, multidisciplinary group of clinicians. Other investigators have implemented the commitment-to-change strategy to evaluate CME/CE programs.19-29 Most of these studies included a small number of participants (N=26-84),19-21,23-25,27 and only 3 included nonphysician clinicians.24,25,27 Of the 971 commitments written by clinicians attending our CME/CE program, 952 (98.0%) were directly related to learning objectives, and every learning objective had at least one commitment statement. In contrast, Dolcourt and Zuckerman25 reported that 32% of commitment-to-change statements were unmatched to their objectives (classified as unanticipated learning) and that 34% of their objectives had no commitment statements.

Our study has 2 main limitations. First, we did not design the study to independently verify adherence to self-reported commitments. Adams et al29 performed a systematic review describing the limitations inherent in data reported by clinicians and not corroborated by objective data; 8 of 10 selected studies found considerable bias in self-reported practice guideline adherence compared with objective measures.29 However, investigators in 2 studies that were not included in the review, and that specifically involved the commitment-to-change process, evaluated the association of self-reported commitments with actual behavioral changes.21,22 They found that physicians who committed to changing their prescribing practices were indeed likely to change their prescribing habits,21,22 suggesting that self-reported changes can serve as a proxy for actual change. Second, our results are subject to potential nonresponse bias.24 Nonresponders may have been uncomfortable reporting an inability to implement changes. Because of the limitations of our study design, we cannot accurately estimate the magnitude of this potential bias. However, even in the worst-case scenario, in which none of the 139 nonresponders implemented a single change, nearly half of all participants who made commitments (125 of 271 [46.1%]) still reported substantial practice changes.
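The worst-case argument is a simple bounding calculation for missing follow-up data. A minimal Python sketch, assuming the outcome of interest is the self-report of substantial practice change (125 of the 132 respondents) among the 271 participants who made commitments, and assigning all 139 nonresponders either no change (lower bound) or change (upper bound); the function name `nonresponse_bounds` is hypothetical.

```python
def nonresponse_bounds(successes: int, respondents: int, eligible: int) -> tuple[float, float]:
    """Worst- and best-case success proportions when nonresponders' outcomes are unknown."""
    nonresponders = eligible - respondents
    worst = successes / eligible                   # every nonresponder made no change
    best = (successes + nonresponders) / eligible  # every nonresponder made a change
    return worst, best


# 125 of 132 respondents reported substantial practice changes; 271 participants made commitments
worst, best = nonresponse_bounds(successes=125, respondents=132, eligible=271)
print(f"{worst:.1%} to {best:.1%}")  # 46.1% to 97.4%
```

The true proportion must lie between these bounds; the width of the interval shows how much the conclusion depends on what nonresponders actually did.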

Our data support using similar strategies to design and deliver future CME/CE programs and to measure long-term outcomes. Although the commitment-to-change assessment is imperfect and may be subject to reporting bias, it may shed light on actual clinical practice changes. However, our strategy did not measure health outcomes in patients with COPD because we did not directly assess new clinical diagnosis rates or specific management changes. Investigators should evaluate these outcomes in future programs.

Conclusions

Using diverse instructional models, teaching techniques, and media, we created an interactive, dynamic, collaborative, multidisciplinary, and reproducible CME/CE program for primary care clinicians throughout the United States. This program addresses practice gaps in COPD recognition and management and is associated with substantial self-reported changes in clinical practice. We developed this unique educational program in 4 ways: (1) linking instructional methods to outcome strategy, (2) creating teachable moments, (3) using formative assessment throughout the process, and (4) fostering true collaboration among the various disciplines. Clinicians who attended this program reported considerable changes in clinical practice. They also improved their self-confidence and significantly improved their knowledge/comprehension of COPD. This program may serve as a template for creating CME/CE programs on other medical topics.

Footnotes

Potential Competing Interests: Dr Adams discloses the following: investigator/grant research: National Institutes of Health, Veterans Affairs Cooperative Studies Program, Bayer Pharmaceuticals Corp, Boehringer Ingelheim Pharmaceuticals Inc, Centocor Inc, GlaxoSmithKline, Novartis Pharmaceuticals AG, Pfizer Inc, and Schering-Plough Corp; honoraria for speaking at CE programs (unrestricted grants for CE): AstraZeneca Pharmaceuticals LP, Bayer Pharmaceuticals Corp, Boehringer Ingelheim Pharmaceuticals Inc, GlaxoSmithKline, Novartis Pharmaceuticals AG, Pfizer Inc, and Schering-Plough Corp. Ms Pitts and Mr Dellert are both employees of the ACCP. Ms Wynn is employed by the AANP. Dr Yawn discloses the following: research support from Aerocrine, Boehringer Ingelheim, Forest, GlaxoSmithKline, and Novartis. Dr Hanania discloses the following: investigator/research support from AstraZeneca, Boehringer Ingelheim, GlaxoSmithKline, MedImmune, Novartis, Pfizer, and Sunovion; speaker bureau of Boehringer Ingelheim, GlaxoSmithKline, and Pfizer; advisory board/consultancy for Dey Inc, GlaxoSmithKline, Novartis, Pearl, and Pfizer. The programs described were conducted and sponsored by the ACCP and the AANP, which received unrestricted grants from AstraZeneca Pharmaceuticals LP, Wilmington, DE; Boehringer Ingelheim Pharmaceuticals Inc, Ridgefield, CT; and GlaxoSmithKline Pharmaceuticals Ltd, Philadelphia, PA. The funders of the unrestricted grants were not involved in the development or implementation of these programs, in the interpretation of the data, or in the preparation, review, or any other part of the manuscript.

Supplemental Online Material

Author Interview Video

References

1. Moores L.K., Dellert E., Baumann M.H., Rosen M.J.; American College of Chest Physicians Health and Science Policy Committee. Executive summary: effectiveness of continuing medical education: American College of Chest Physicians Evidence-Based Educational Guidelines. Chest. 2009;135(3, suppl):1S–4S. doi: 10.1378/chest.08-2511.

2. Moore D.E., Jr, Green J.S., Gallis H.A. Achieving desired results and improved outcomes: integrating planning and assessment throughout learning activities. J Contin Educ Health Prof. 2009;29(1):1–15. doi: 10.1002/chp.20001.

3. Institute of Medicine of the National Academies. Redesigning Continuing Education in the Health Professions: Summary. The National Academies Press; Washington, DC: 2010.

4. Marinopoulos S.S., Dorman T., Ratanawongsa N. Effectiveness of Continuing Medical Education. Agency for Healthcare Research and Quality; Rockville, MD: 2007.

5. Davis D.A., Mazmanian P.E., Fordis M., Van Harrison R., Thorpe K.E., Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296(9):1094–1102. doi: 10.1001/jama.296.9.1094.

6. Grant J. Learning needs assessment: assessing the need. BMJ. 2002;324(7330):156–159. doi: 10.1136/bmj.324.7330.156.

7. Global Initiative for Chronic Obstructive Lung Disease (GOLD). Global strategy for diagnosis, management, and prevention of COPD. http://www.goldcopd.org/. Accessed December 19, 2010.

8. Foster J.A., Yawn B.P., Maziar A., Jenkins T., Rennard S.I., Casebeer L. Enhancing COPD management in primary care settings. MedGenMed. 2007;9(3):24.

9. Confronting COPD in America: executive summary. http://www.aarc.org/resources/confronting_copd/exesum.pdf. Accessed December 19, 2010.

10. van Schayck C.P., Chavannes N.H. Detection of asthma and chronic obstructive pulmonary disease in primary care. Eur Respir J Suppl. 2003;39:16s–22s. doi: 10.1183/09031936.03.00040403.

11. Bloom B.S. Taxonomy of Educational Objectives, Handbook 1: The Cognitive Domain. David McKay; New York, NY: 1956.

12. Mazmanian P.E., Waugh J.L., Mazmanian P.M. Commitment to change: ideational roots, empirical evidence, and ethical implications. J Contin Educ Health Prof. 1997;17(3):133–140.

13. Miller B.M., Moore D.E., Jr, Stead W.W., Balser J.R. Beyond Flexner: a new model for continuous learning in the health professions. Acad Med. 2010;85(2):266–272. doi: 10.1097/ACM.0b013e3181c859fb.

14. Bordage G., Carlin B., Mazmanian P.E.; American College of Chest Physicians Health and Science Policy Committee. Continuing medical education effect on physician knowledge: effectiveness of continuing medical education: American College of Chest Physicians Evidence-Based Educational Guidelines. Chest. 2009;135(3, suppl):29S–36S. doi: 10.1378/chest.08-2515.

15. Mazmanian P.E., Davis D.A., Galbraith R.; American College of Chest Physicians Health and Science Policy Committee. Continuing medical education effect on clinical outcomes: effectiveness of continuing medical education: American College of Chest Physicians Evidence-Based Educational Guidelines. Chest. 2009;135(3, suppl):49S–55S. doi: 10.1378/chest.08-2518.

16. Davis D., Galbraith R.; American College of Chest Physicians Health and Science Policy Committee. Continuing medical education effect on practice performance: effectiveness of continuing medical education: American College of Chest Physicians Evidence-Based Educational Guidelines. Chest. 2009;135(3, suppl):42S–48S. doi: 10.1378/chest.08-2517.

17. Green L.W., Kreuter M.W. Health Promotion Planning: An Educational and Environmental Approach. 2nd ed. Mayfield Publishing; Mountain View, CA: 1991. pp. 151–177.

18. Albanese M., Mejicano G., Xakellis G., Kokotailo P. Physician practice change II: implications of the Integrated Systems Model (ISM) for the future of continuing medical education. Acad Med. 2009;84(8):1056–1065. doi: 10.1097/ACM.0b013e3181ade83c.

19. Purkis I.E. Commitment for changes: an instrument for evaluating CME courses. J Med Educ. 1982;57(1):61–63.

20. Jones D.L. Viability of the commitment-for-change evaluation strategy in continuing medical education. Acad Med. 1990;65(9, suppl):S37–S38. doi: 10.1097/00001888-199009000-00033.

21. Curry L., Purkis I.E. Validity of self-reports of behavior changes by participants after a CME course. J Med Educ. 1986;61(7):579–584. doi: 10.1097/00001888-198607000-00005.

22. Wakefield J., Herbert C.P., Maclure M. Commitment to change statements can predict actual change in practice. J Contin Educ Health Prof. 2003;23(2):81–93. doi: 10.1002/chp.1340230205.

23. Pereles L., Lockyer J., Hogan D., Gondocz T., Parboosingh J. Effectiveness of commitment contracts in facilitating change in continuing medical education intervention. J Contin Educ Health Prof. 1997;17(1):27–31.

24. Dolcourt J.L. Commitment to change: a strategy for promoting educational effectiveness. J Contin Educ Health Prof. 2000;20(3):156–163. doi: 10.1002/chp.1340200304.

25. Dolcourt J.L., Zuckerman G. Unanticipated learning outcomes associated with commitment to change in continuing medical education. J Contin Educ Health Prof. 2003;23(3):173–181. doi: 10.1002/chp.1340230309.

26. Mazmanian P.E., Daffron S.R., Johnson R.E., Davis D.A., Kantrowitz M.P. Information about barriers to planned change: a randomized controlled trial involving continuing medical education lectures and commitment to change. Acad Med. 1998;73(8):882–886. doi: 10.1097/00001888-199808000-00013.

27. Fjortoft N. The effectiveness of commitment to change statements on improving practice behaviors following continuing pharmacy education. Am J Pharm Educ. 2007;71(6):112. doi: 10.5688/aj7106112.

28. Lockyer J.M., Fidler H., Ward R., Basson R.J., Elliott S., Toews J. Commitment to change statements: a way of understanding how participants use information and skills taught in an educational session. J Contin Educ Health Prof. 2001;21(2):82–89. doi: 10.1002/chp.1340210204.

29. Adams A.S., Soumerai S.B., Lomas J., Ross-Degnan D. Evidence of self-report bias in assessing adherence to guidelines. Int J Qual Health Care. 1999;11(3):187–192. doi: 10.1093/intqhc/11.3.187.
