Abstract

The ability to recognize familiar faces across different viewing conditions contrasts with the inherent difficulty in the perception of unfamiliar faces across similar image manipulations. It is widely believed that this difference in perception and recognition is based on the neural representation for familiar faces being less sensitive to changes in the image than it is for unfamiliar faces. Here, we used a functional magnetic resonance-adaptation paradigm to investigate image invariance in face-selective regions of the human brain. We found clear evidence for a degree of image-invariant adaptation to facial identity in face-selective regions, such as the fusiform face area. However, contrary to the predictions of models of face processing, comparable levels of image invariance were evident for both familiar and unfamiliar faces. This suggests that the marked differences in the perception of familiar and unfamiliar faces may not depend on differences in the way multiple images are represented in core face-selective regions of the human brain.

Keywords: face recognition, FFA, FRU, image invariance

Introduction

The ability to recognize familiar faces across a variety of changes in illumination, expression, viewing angle, and appearance contrasts with the inherent difficulty found in the perception and matching of unfamiliar faces across similar image manipulations (Bruce et al. 1987; Hancock et al. 2000). This difference in perception has been incorporated into cognitive models of face processing, which propose that familiar and unfamiliar faces are represented differently in the human visual system (Bruce and Young 1986; Burton et al. 1999). These models propose that faces are initially encoded in a pictorial or image-dependent representation. This image-dependent representation is used for the perception and matching of unfamiliar faces. In contrast, the identification of a familiar face involves the formation of image-invariant representations (“face recognition units”) that are used for the perception of identity.

Our aim was to draw on the predictions from these models to elucidate how different images with the same identity are represented in face-selective regions of the human brain. Functional imaging studies have consistently found regions in the occipital and temporal lobes that respond selectively to faces, which form a core system that is involved in the visual analysis of faces (Kanwisher et al. 1997). Models of face processing suggest that one region, the fusiform face area (FFA), is important for the representation of invariant facial characteristics that are necessary for recognition (Haxby et al. 2000; Fairhall and Ishai 2007). Evidence that the FFA is important for face recognition comes from studies using functional magnetic resonance (fMR)-adaptation, which have shown a reduced response (adaptation) to repeated images of the same face (Grill-Spector et al. 1999; Andrews and Ewbank 2004; Loffler et al. 2005; Rotshtein et al. 2005; Yovel and Kanwisher 2005). Adaptation to faces has been reported to be invariant to changes in the size (Grill-Spector et al. 1999; Andrews and Ewbank 2004) and position (Grill-Spector et al. 1999) of the face image. However, these studies used the same image across size and position changes and therefore do not test for invariant representations of identity. Other studies that used different images of an identity have shown mixed results. Some studies have shown that changes in the appearance, illumination, or viewpoint of the face result in a complete release from adaptation in the FFA (Grill-Spector et al. 1999; Andrews and Ewbank 2004; Eger et al. 2005; Pourtois et al. 2005a, 2005b; Davies-Thompson et al. 2009; Xu et al. 2009), whereas other studies have shown continued adaptation across similar manipulations (Winston et al. 2004; Loffler et al. 2005; Rotshtein et al. 2005; Ewbank and Andrews 2008). There are 2 main problems with the interpretation of these studies.
The first is that many of these studies fail to provide a direct comparison of familiar and unfamiliar faces; models only predict an invariant representation for familiar faces (Bruce and Young 1986; Burton et al. 1999). The second is that many studies do not control for physical changes in the images across conditions. For example, low-level changes caused by lighting or viewpoint of the same identity are often greater than the low-level changes that occur with different identities when the viewing conditions are similar (Xu et al. 2009). In an attempt to circumvent these issues, we directly compared image invariance with familiar and unfamiliar faces in 2 experiments in which we systematically controlled the amount of image variance. Our aim was to determine whether differences in image invariance can explain the marked differences in the recognition of familiar and unfamiliar faces.

Materials and Methods

Participants

All participants were right-handed and had normal or corrected-to-normal vision. Written consent was obtained from all participants, and the study was approved by the York Neuroimaging Centre (YNiC) Ethics Committee. Visual stimuli (ca. 8° × 8°) were presented 57 cm from the participants’ eyes. Familiarity with the faces was tested prior to the scan session using images that were not used in the functional magnetic resonance imaging (fMRI) experiments. Only participants who were able to recognize all the familiar faces participated in the study. Experiment 1 determined the behavioral ability of participants to identify whether images of faces were from the same or different identity. Experiment 2 systematically varied the number of different face images with the same identity to determine image invariance in face-selective regions. Experiment 3 used an identical design to Experiment 2 but used different identities to determine whether neural responses in Experiment 2 can be explained by identity repetition or by image repetition. Experiment 1 was run after Experiments 2 and 3. Five subjects participated in both the behavioral and the fMRI experiments.

Experiment 1

A behavioral experiment was used to determine the ability of participants to identify familiar and unfamiliar faces. Twenty participants took part in Experiment 1 (10 females; mean age, 27). Pairs of images were presented in succession, and participants were asked to indicate by a button press whether the 2 face images were from the same person or from 2 different people (Fig. 1). Each face was presented for 700 ms and separated by an interval of 300 ms. There were 3 possible conditions: same image (identical face images), different images (different images of the same person), and different identity (different images of different people). Each participant viewed a total of 256 trials.

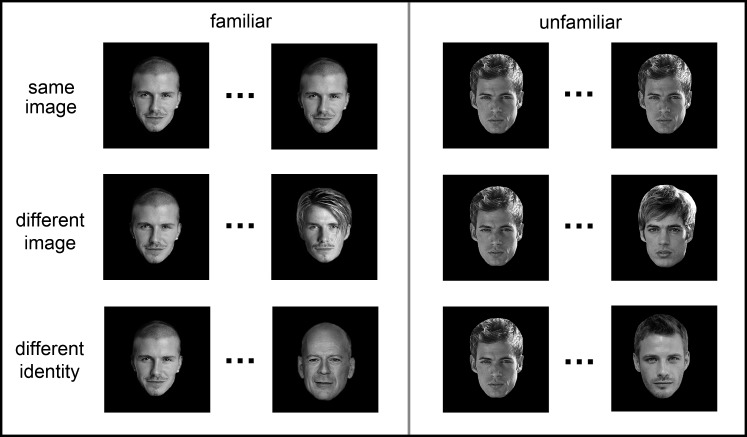

Figure 1.

Design and images used in Experiment 1. Successive images were either the same, different images of the same person (different image) or images of different identities. Pairs of images were either familiar or unfamiliar faces.

Experiment 2

To determine image invariance in face-selective regions, the images from Experiment 1 were incorporated into a block design fMR-adaptation paradigm. Twenty participants took part in Experiment 2 (12 females; mean age, 22). There were 5 image conditions: (1) 1-image of the same identity; (2) 2-images of the same identity; (4) 4-images of the same identity; (8) 8-images of the same identity; and (D) 8-images with different identities. Examples of the stimuli are shown in Figure 2. The faces were either familiar or unfamiliar faces of males and females. Unfamiliar faces were unknown to the participants and were chosen to match familiar faces for their variation in age and appearance. In the same-identity conditions, 8 different familiar identities (4 male, 4 female) and 8 different unfamiliar identities (4 male, 4 female) were used. The different images of the same identity varied in lighting and hairstyle. Images were presented in gray scale and were adjusted to an average brightness level. The mean change in image intensity across images was calculated by taking the average of the absolute differences in gray value at each pixel for successive pairs of images within a block. Table 1 shows that there was a similar mean intensity change in the corresponding familiar and unfamiliar conditions.
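The intensity-change measure described above can be illustrated with a minimal sketch. It assumes each image is a nested list of gray values with identical dimensions; the function name `mean_intensity_change` and the toy images are hypothetical and are not taken from the study's analysis code.

```python
from statistics import mean


def mean_intensity_change(block):
    """Mean absolute gray-value difference between successive images.

    `block` is a list of images in presentation order; each image is a
    list of rows, and each row is a list of gray values.
    """
    pair_means = []
    for first, second in zip(block, block[1:]):
        # Absolute difference at every pixel for this successive pair.
        diffs = [
            abs(a - b)
            for row_a, row_b in zip(first, second)
            for a, b in zip(row_a, row_b)
        ]
        pair_means.append(mean(diffs))
    # A block with a single image has no successive pairs.
    return mean(pair_means) if pair_means else 0.0


# Toy 2 x 2 "images" (hypothetical values, for illustration only).
img_a = [[0, 10], [20, 30]]
img_b = [[10, 10], [20, 50]]

repeated = mean_intensity_change([img_a] * 8)             # one image repeated
alternating = mean_intensity_change([img_a, img_b, img_a])
```

Repeating a single image gives a change of zero, which matches the 1-image column of Table 1.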

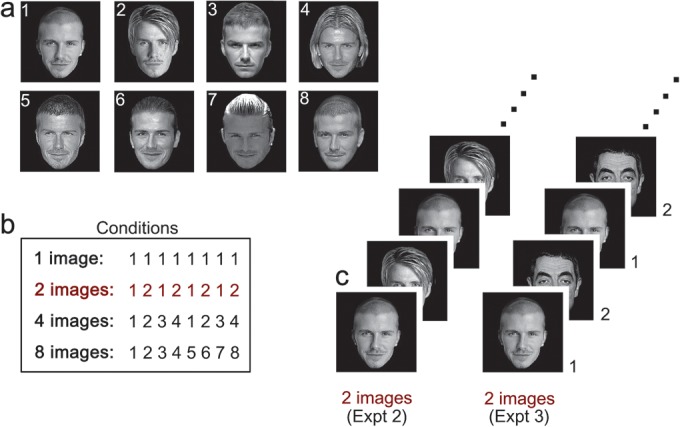

Figure 2.

Design and images used in Experiments 2 and 3. (a) Examples of familiar faces used in Experiment 2. (b) Each experiment had 4 conditions in which 1 image, 2 images, 4 images, or 8 images were presented in each stimulus block. In Experiment 2, the images in each block were from the same identity, whereas, in Experiment 3, the images were from different identities. (c) Examples of the 2-image condition in Experiment 2 (left) and Experiment 3 (right).

Table 1.

Mean change in intensity (standard error) between successive images for each condition in Experiments 2 and 3

| | 1 image | 2 images | 4 images | 8 images | Different |
| --- | --- | --- | --- | --- | --- |
| **Experiment 2** | | | | | |
| Familiar | 0 (0.0) | 16.1 (0.3) | 16.2 (0.6) | 16.1 (0.5) | 16.2 (0.9) |
| Unfamiliar | 0 (0.0) | 17.0 (0.4) | 16.4 (0.4) | 16.8 (0.5) | 17.7 (0.5) |
| **Experiment 3** | | | | | |
| Familiar | 0 (0.0) | 14.9 (0.0) | 15.2 (1.0) | 15.1 (1.6) | n/a |
| Unfamiliar | 0 (0.0) | 12.1 (0.0) | 12.3 (0.6) | 12.2 (0.9) | n/a |

A blocked design was used to present the stimuli. Each stimulus block consisted of 8 images. In each block, images were shown for 1 s followed by a 125 ms fixation cross, resulting in 9 s stimulus blocks, which were separated by a 9 s fixation gray screen. Male and female faces were shown in separate blocks. Eight images of each female and male identity were used. Each condition was repeated 8 times in a counterbalanced order giving a total of 40 blocks per scan, with each face image being presented a total of 15 times across the experiment. An additional 64 faces (32 male, 32 female) were presented in the “different-identities” condition. Each scan was repeated for each participant with familiar and unfamiliar faces in separate runs. The task during the scan was to press a button to indicate the presence of a target familiar face (Hugh Grant or Marilyn Monroe).

Experiment 3

To address whether the pattern of response in Experiment 2 was due to repetition of identity rather than repetition of image, we used a similar design but instead used images with different identities. Twenty participants took part in Experiment 3 (13 females; mean age, 22). There were 4 image conditions: (1) 1-image with the same identity; (2) 2-images with different identities; (4) 4-images with different identities; (8) 8-images with different identities. Each condition was repeated 8 times in a counterbalanced order giving a total of 32 blocks per scan. Eight face images (a subset of those presented in Experiment 2) were used in this experiment, with each image being presented a total of 32 times across the experiment. Each scan was repeated for each participant with familiar and unfamiliar faces in separate runs. The block length, image timings, and task were identical to Experiment 2.
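The block arithmetic for Experiments 2 and 3 can be checked with a short sketch. The timing and repetition values come from the text; the helper `block_design` is hypothetical, and the approximate scan duration assumes that every stimulus block is followed by exactly one 9 s fixation period, which the text does not state.

```python
def block_design(n_conditions, repeats, images_per_block=8,
                 image_s=1.0, fixation_s=0.125, rest_s=9.0):
    """Summarize a blocked design using the timings given in the text."""
    block_s = images_per_block * (image_s + fixation_s)
    n_blocks = n_conditions * repeats
    return {
        "block_s": block_s,                          # stimulus block length
        "n_blocks": n_blocks,                        # blocks per scan
        "presentations": n_blocks * images_per_block,
        # Assumption: one rest period after every block.
        "approx_scan_s": n_blocks * (block_s + rest_s),
    }


exp2 = block_design(n_conditions=5, repeats=8)   # 40 blocks of 9 s
exp3 = block_design(n_conditions=4, repeats=8)   # 32 blocks of 9 s

# Experiment 3 used 8 face images, so each appears 256 / 8 = 32 times.
per_image_exp3 = exp3["presentations"] / 8
```

With these values, 8 images of 1.125 s each give the stated 9 s block, and the Experiment 3 count of 32 presentations per image follows from 32 blocks of 8 images spread over 8 face images.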

fMRI Analysis

fMRI data were collected with a GE 3-T HD Excite MRI scanner at the YNiC at the University of York. An 8-channel phased-array head coil (GE, Milwaukee) tuned to 127.4 MHz was used to acquire MRI data. A gradient-echo EPI sequence was used to collect data from 38 contiguous axial slices (repetition time [TR] = 3 s, echo time [TE] = 25 ms, field of view = 28 × 28 cm, matrix size = 128 × 128, slice thickness = 3 mm). Statistical analysis of the fMRI data was carried out using FEAT (http://www.fmrib.ox.ac.uk/fsl). The initial 9 s of data from each scan were removed to minimize the effects of magnetic saturation. Motion correction was followed by spatial smoothing (Gaussian, full-width at half-maximum 6 mm) and temporal high-pass filtering (cut off, 0.01 Hz).

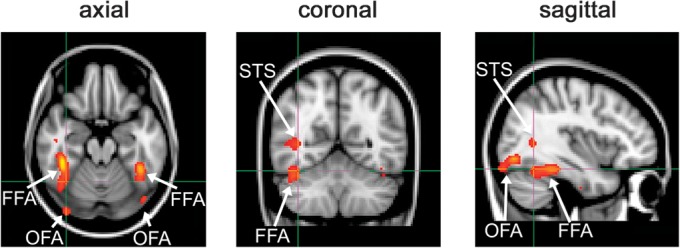

To identify regions responding selectively to faces in the visual cortex, a localizer scan was carried out for each participant. There were 5 conditions: faces, bodies, objects, places, and Fourier-scrambled images from each category. All images were presented in gray scale. Participants viewed images from each category in stimulus blocks that contained 10 images. Each image was presented for 700 ms followed by a 200 ms fixation cross. Stimulus blocks were separated by a 9 s fixation gray screen. Each condition was repeated 4 times, in a counterbalanced block design, giving 20 stimulus blocks. For the localizer scan, face-selective regions of interest (ROIs) were determined by the averaged contrasts of “faces > bodies, faces > objects, faces > places, and faces > scrambled,” thresholded at P < 0.001 (uncorrected). This analysis revealed 3 face-selective regions, the FFA, the occipital face area (OFA), and the posterior superior temporal sulcus (pSTS), which were identified for each individual (Fig. 3). Data from the left and right hemisphere were combined for each participant for each ROI. The time series of each voxel within a region was converted from units of image intensity to percentage signal change. All voxels in a given ROI were then averaged to give a single time series in each ROI for each participant. The peak response was calculated as an average of the response at 9 and 12 s after the onset of a block. Repeated-measures analyses of variance (ANOVA) were used to determine differences in response to each stimulus condition.
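The ROI time-series steps described above can be sketched as follows. This is an illustration under stated assumptions rather than the FEAT-based pipeline used in the study: the baseline is taken as the mean of each voxel's whole time series (the text does not specify the baseline), and the function names and toy values are hypothetical.

```python
def percent_signal_change(series):
    """Convert raw image intensity to percentage signal change.

    Assumption: the baseline is the mean of the whole time series.
    """
    baseline = sum(series) / len(series)
    return [100.0 * (value - baseline) / baseline for value in series]


def roi_time_series(voxel_series):
    """Average the percentage-signal-change series of all voxels in an ROI."""
    converted = [percent_signal_change(s) for s in voxel_series]
    n_voxels = len(converted)
    return [sum(values) / n_voxels for values in zip(*converted)]


def peak_response(roi_series, onset_volume, tr_s=3.0,
                  peak_times_s=(9.0, 12.0)):
    """Average the response at 9 and 12 s after block onset (TR = 3 s)."""
    indices = [onset_volume + round(t / tr_s) for t in peak_times_s]
    return sum(roi_series[i] for i in indices) / len(indices)


# Two toy voxels (hypothetical intensities), block onset at volume 0.
voxels = [
    [98, 99, 100, 101, 102, 100],
    [196, 198, 200, 202, 204, 200],
]
roi = roi_time_series(voxels)
peak = peak_response(roi, onset_volume=0)
```

With a TR of 3 s, the 9 and 12 s time points correspond to the third and fourth volumes after block onset.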

Figure 3.

Location of face-selective regions (FFA, OFA, pSTS).

Results

Experiment 1

To determine the degree to which the identity of the familiar and unfamiliar faces used in this study could be discriminated across different images, we used a behavioral paradigm in which participants were presented with pairs of images (see Fig. 1). There were 3 conditions: same image (identical face images), different images (of the same person), or different identities (different images of different people). Participants were asked to indicate by a button press whether the 2 faces were of the same person or 2 different people. The accuracy and reaction time (RT) for correct responses to familiar and unfamiliar faces are shown in Figure 4.

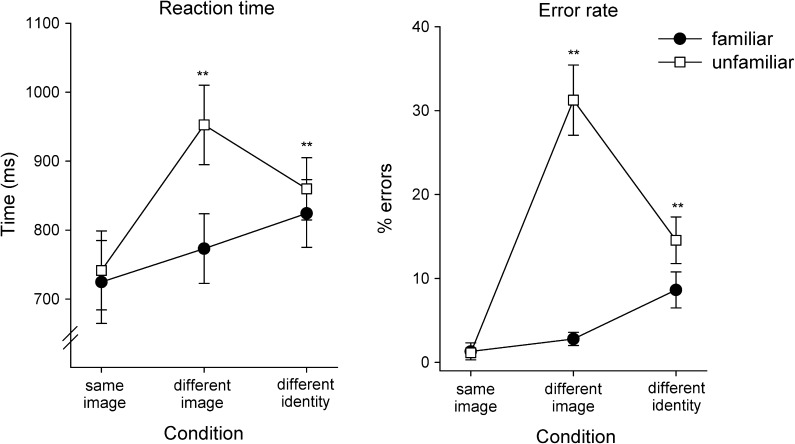

Figure 4.

Experiment 1: RTs and Errors to images of familiar and unfamiliar faces. Participants were asked to indicate whether a pair of successively presented images was from the same or a different identity. The images were either identical (same image), different images of the same person (different image), or images of different people (different identity). The largest difference between familiar and unfamiliar faces occurred when participants responded to different images of the same person. Error bars represent ±standard error across participants, *P < 0.05, **P < 0.01.

A 2 × 3 ANOVA (familiarity, condition) was carried out to examine the effect of familiarity on accuracy and RT. For RT, there was a significant effect of familiarity (F1,19 = 75.55, P < 0.001) and condition (F2,38 = 29.10, P < 0.001). A significant interaction between familiarity × condition was also found for RT (F2,38 = 22.92, P < 0.001). A similar pattern was observed for the error rates (ERs), with significant effects of familiarity (F1,19 = 114.27, P < 0.001), condition (F2,38 = 20.11, P < 0.001), and an interaction between familiarity and condition (F2,38 = 33.77, P < 0.001).

To examine the difference between familiar and unfamiliar faces, we compared the response times and ERs for each condition. The shortest RT and lowest ERs occurred when the same face image was repeated. In this condition, there was no difference between familiar and unfamiliar faces (RT: t19 = −1.23, r = 0.03, P = 0.24; ER: t19 = 0.18, r = 0.02, P = 0.86). However, when different images of the same person were shown (different image), participants were significantly slower (t19 = −7.10, r = 0.35, P < 0.001) and made more errors (t19 = −7.56, r = 0.74, P < 0.001) with unfamiliar faces compared with familiar faces. Indeed, the ER for judging whether 2 images of an unfamiliar person were of the same or a different identity was 31 ± 4% (chance = 50% errors). In the different identities condition, participants responded slower and made more errors for unfamiliar faces as compared with familiar faces (RT: t19 = 3.92, r = 0.09, P < 0.001; ER: t19 = −3.90, r = 0.26, P < 0.001), but the differences were less marked compared with the different image condition. Together, these results are consistent with previous findings of a behavioral advantage for the recognition of familiar faces compared with unfamiliar faces across changes in appearance (Hancock et al. 2000; Davies-Thompson et al. 2009).
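The pairwise comparisons above are within-participant contrasts, for which a paired t-test is the standard choice. The sketch below shows how such a statistic and its degrees of freedom are computed. The data are hypothetical, the function name `paired_t` is illustrative, and the effect size r reported in the text is not reproduced because its formula is not given.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom.

    `x` and `y` hold one value per participant, in the same order.
    """
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    standard_error = stdev(diffs) / sqrt(n)
    return mean(diffs) / standard_error, n - 1


# Hypothetical scores for 4 participants (not data from the study).
familiar = [1.0, 2.0, 3.0, 4.0]
unfamiliar = [0.0, 0.0, 1.0, 1.0]
t_value, df = paired_t(familiar, unfamiliar)
```

With the 20 participants of Experiment 1, the same calculation gives 19 degrees of freedom, which matches the t19 values reported above.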

Experiment 2

To determine image invariance in face-selective regions, the images from Experiment 1 were incorporated into a block design fMR-adaptation paradigm. The number of different images in each block was varied systematically across conditions. The response to the different image blocks was compared with the response when either one image was repeated or when different images of different identities were shown. If a region is invariant to changes in the image, there should be no significant difference between the responses to one repeated image and the multiple images of the same person. In addition, the response to stimulus blocks with the same identity should be lower than the response to blocks in which different identities are presented.

There was no difference in the neural responses of face-selective regions to the different conditions in the right and left hemispheres (OFA: F4,60 = 0.40, P = 0.81; FFA: F4,52 = 0.50, P = 0.74). Consequently, we combined the data across hemispheres. The peak responses of face-selective regions were analyzed using a 3-way ANOVA (condition, familiarity, region). There was a significant effect of image condition (F4,48 = 33.30, P < 0.001) and region (F2,24 = 34.63, P < 0.001), but no effect of familiarity (F1,12 = 0.03, P = 0.87). There was no interaction between familiarity × image condition (F4,48 = 1.15, P = 0.34), suggesting a similar pattern of response to familiar and unfamiliar faces. However, there was a significant interaction between region × image condition (F8,96 = 5.63, P < 0.001), suggesting that different regions responded differently to the image conditions.
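The degrees of freedom reported for the 3-way ANOVA can be checked against the standard formulas for a fully within-subject design. In the sketch below, the figure of 13 participants is an inference from the reported degrees of freedom (it matches the t12 values given for the pSTS) and is not stated explicitly in the text; the helper `rm_anova_df` is hypothetical.

```python
def rm_anova_df(levels, n_subjects):
    """Degrees of freedom for a within-subject effect.

    `levels` lists the number of levels of each factor in the effect,
    for example (5,) for condition or (3, 5) for region x condition.
    """
    effect_df = 1
    for k in levels:
        effect_df *= k - 1
    # In a fully within-subject design, the error term is the
    # effect x subject interaction.
    error_df = effect_df * (n_subjects - 1)
    return effect_df, error_df


N_SUBJECTS = 13  # inferred from the reported df, not stated in the text

condition_df = rm_anova_df((5,), N_SUBJECTS)       # compare F4,48
region_df = rm_anova_df((3,), N_SUBJECTS)          # compare F2,24
familiarity_df = rm_anova_df((2,), N_SUBJECTS)     # compare F1,12
interaction_df = rm_anova_df((3, 5), N_SUBJECTS)   # compare F8,96
```

The same formulas give the degrees of freedom reported for the hemisphere comparisons if 16 participants contributed OFA data and 14 contributed FFA data in both hemispheres, which is again an inference.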

Fusiform Face Area

Figure 5 (top) shows the response in the FFA to familiar and unfamiliar faces across all image conditions in Experiment 2. To determine invariance to different images of the same identity, we compared the response of each condition with the corresponding 1-image and different-identity conditions. We found no difference in the response to the 1-image condition compared with the 2-images condition for familiar (t19 = 0.84, r = 0.07, P = 0.41) or unfamiliar (t19 = 0.13, r = 0.01, P = 0.90) faces. There was a small but significant increased response to the 4-images condition compared with the 1-image condition for familiar (t19 = 2.85, r = 0.26, P < 0.05), but not unfamiliar (t19 = 0.12, r = 0.01, P = 0.90) faces. The response to the 8-images condition was larger than the 1-image condition for both familiar (t19 = 4.30, r = 0.38, P < 0.001) and unfamiliar (t19 = 4.49, r = 0.24, P < 0.001) faces.

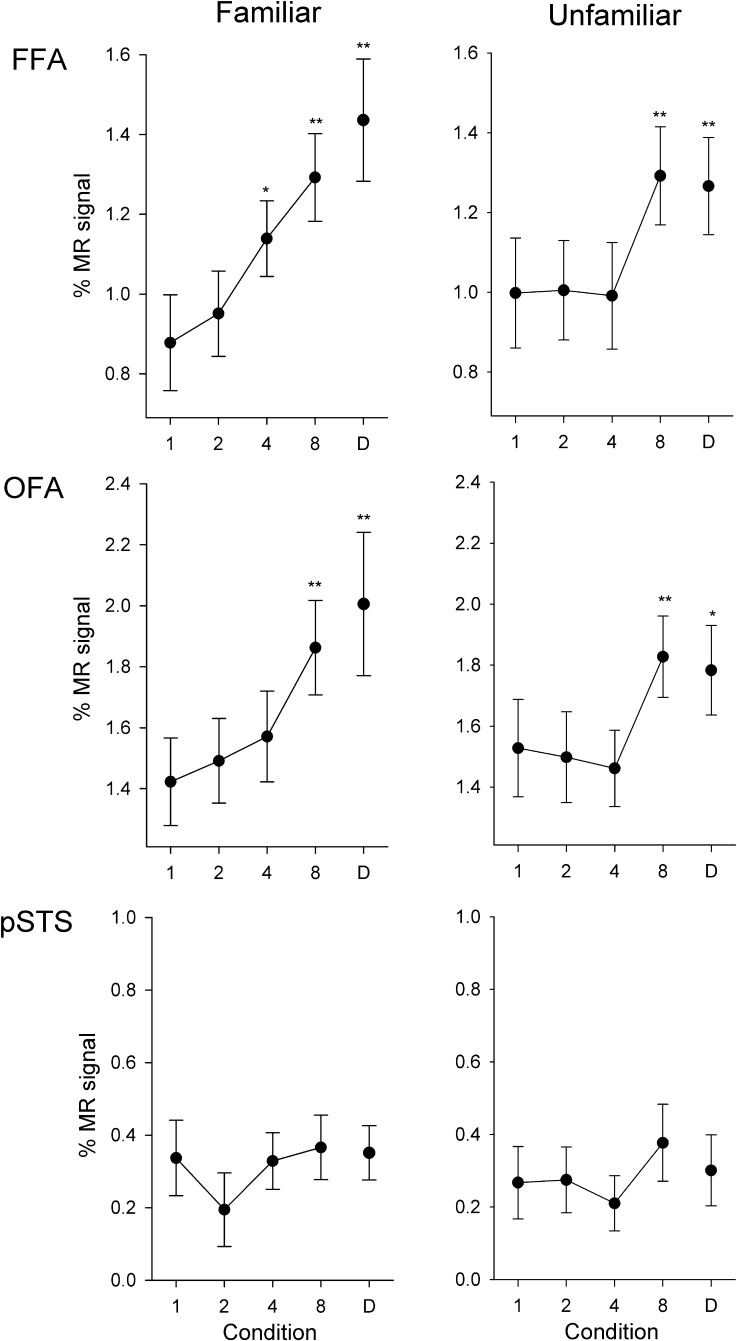

Figure 5.

Experiment 2: Responses of face-selective regions to different images of the same identity. Peak responses to the different conditions are shown in the FFA, OFA, and pSTS, for familiar and unfamiliar faces. There was a gradual increase in response in the FFA and OFA with increases in the number of different images shown in a stimulus block for both familiar and unfamiliar faces. However, there was no difference between any of the conditions in the pSTS. Error bars represent ±standard error across all participants. *P < 0.05, **P < 0.01, indicates an increased response relative to the 1-image condition.

Compared with the different-identities condition, the response was lower in the 1-image (familiar: t19 = 5.02, r = 0.41, P < 0.001; unfamiliar: t19 = 4.37, r = 0.23, P < 0.001), 2-images (familiar: t19 = 6.02, r = 0.38, P < 0.001; unfamiliar: t19 = 3.63, r = 0.23, P < 0.005), and 4-images (familiar: t19 = 2.91, r = 0.25, P < 0.05; unfamiliar: t19 = 4.93, r = 0.23, P < 0.001) conditions. However, the response to the 8-images condition was not significantly different to the different-identities condition (familiar: t19 = 1.13, r = 0.12, P = 0.28; unfamiliar: t19 = 0.46, r = 0.02, P = 0.65).

Occipital Face Area

There was a similar pattern of response to the FFA in the OFA (Fig. 5, middle row). We found no difference in response between 1-image and 2-images (familiar: t17 = 0.73, r = 0.06, P = 0.47; unfamiliar: t17 = 0.40, r = 0.02, P = 0.70) or between 1-image and 4-images (familiar: t17 = 1.36, r = 0.12, P = 0.19; unfamiliar: t17 = 0.98, r = 0.05, P = 0.34) conditions. However, there was an increased response in the 8-images condition for both familiar (t17 = 3.56, r = 0.33, P < 0.005) and unfamiliar (t17 = 3.29, r = 0.23, P < 0.01) faces relative to the 1-image condition. Compared with the different-identities condition, the response was lower in the 1-image (familiar: t17 = 4.38, r = 0.33, P < 0.001; unfamiliar: t17 = 2.60, r = 0.19, P < 0.05), 2-images (familiar: t17 = 3.48, r = 0.30, P < 0.01; unfamiliar: t17 = 4.16, r = 0.22, P < 0.005), and 4-images (familiar: t17 = 2.68, r = 0.25, P < 0.05; unfamiliar: t17 = 5.53, r = 0.27, P < 0.001) conditions. However, the response to the 8-images condition was not significantly different to the different-identities condition (familiar: t17 = 0.76, r = 0.08, P = 0.46; unfamiliar: t17 = 0.92, r = 0.04, P = 0.37).

Posterior Superior Temporal Sulcus

The response to familiar and unfamiliar faces in the pSTS can be seen in Figure 5 (bottom row). There was no difference in the response between 1-image and the 2-images (familiar: t12 = 1.80, r = 0.19, P = 0.10; unfamiliar: t12 = 0.11, r = 0.01, P = 0.92), 4-images (familiar: t12 = 0.11, r = 0.01, P = 0.91; unfamiliar: t12 = 0.89, r = 0.09, P = 0.39), or 8-images (familiar: t12 = 0.30, r = 0.04, P = 0.77; unfamiliar: t12 = 1.33, r = 0.15, P = 0.21) conditions for familiar or unfamiliar faces. However, there was also no difference between the 1-image condition and the different-identities condition for either familiar (t12 = 0.21, r = 0.02, P = 0.88) or unfamiliar (t12 = 0.41, r = 0.05, P = 0.69) faces, suggesting that the pSTS is not sensitive to changes in facial identity.

Experiment 3

To address whether the pattern of response in Experiment 2 was due to repetition of image rather than repetition of identity, we used a similar design but instead used images with different identities (see Fig. 2). If the response in face-selective regions was dependent on image repetition, we would expect a similar pattern of results to that obtained in Experiment 2. However, if the response was sensitive to changes in identity, we would expect a complete release from adaptation when different identities are presented within a block.

There was no difference in the neural responses of face-selective regions to the different conditions in the right and left hemispheres (OFA: F3,48 = 0.25, P = 0.86; FFA: F3,54 = 0.32, P = 0.81). Consequently, we combined the data across hemispheres. The peak responses to the different conditions were analyzed using a 3-way ANOVA (condition, familiarity, region). There was a significant effect of image condition (F3,42 = 21.22, P < 0.001) and region (F2,28 = 114.13, P < 0.001), but no effect of familiarity (F1,14 = 0.02, P = 0.88). There was no interaction between familiarity × image condition (F3,42 = 0.14, P = 0.93), suggesting similar patterns of response for familiar and unfamiliar faces. However, there was a significant interaction between region × image condition (F6,84 = 13.66, P < 0.001), suggesting that different regions responded differently to the image conditions.

Fusiform Face Area

Figure 6 (top) shows the response in the FFA to familiar and unfamiliar faces across all image conditions in Experiment 3. For familiar faces, the effect of image condition is explained by a larger response (i.e., a release from adaptation) to all conditions compared with the 1-image condition (2-images: t19 = 5.80, r = 0.49, P < 0.001; 4-images: t19 = 7.70, r = 0.55, P < 0.001; 8-images: t19 = 5.88, r = 0.47, P < 0.001). The 8-images condition (equivalent to the different-identities condition in Experiment 2) was not significantly different from the 2-images (t19 = 0.19, r = 0.01, P = 0.85) and 4-images conditions (t19 = 0.58, r = 0.01, P = 0.57). The same pattern was found for unfamiliar faces, with a reduced response to the 1-image condition compared with all other conditions (2-images: t19 = 5.24, r = 0.47, P < 0.001; 4-images: t19 = 8.01, r = 0.57, P < 0.001; 8-images: t19 = 6.10, r = 0.52, P < 0.001). There was also no difference in the response between the 8-images condition and the 2-images (t19 = 0.57, r = 0.03, P = 0.58) and 4-images (t19 = 0.24, r = 0.01, P = 0.82) conditions.

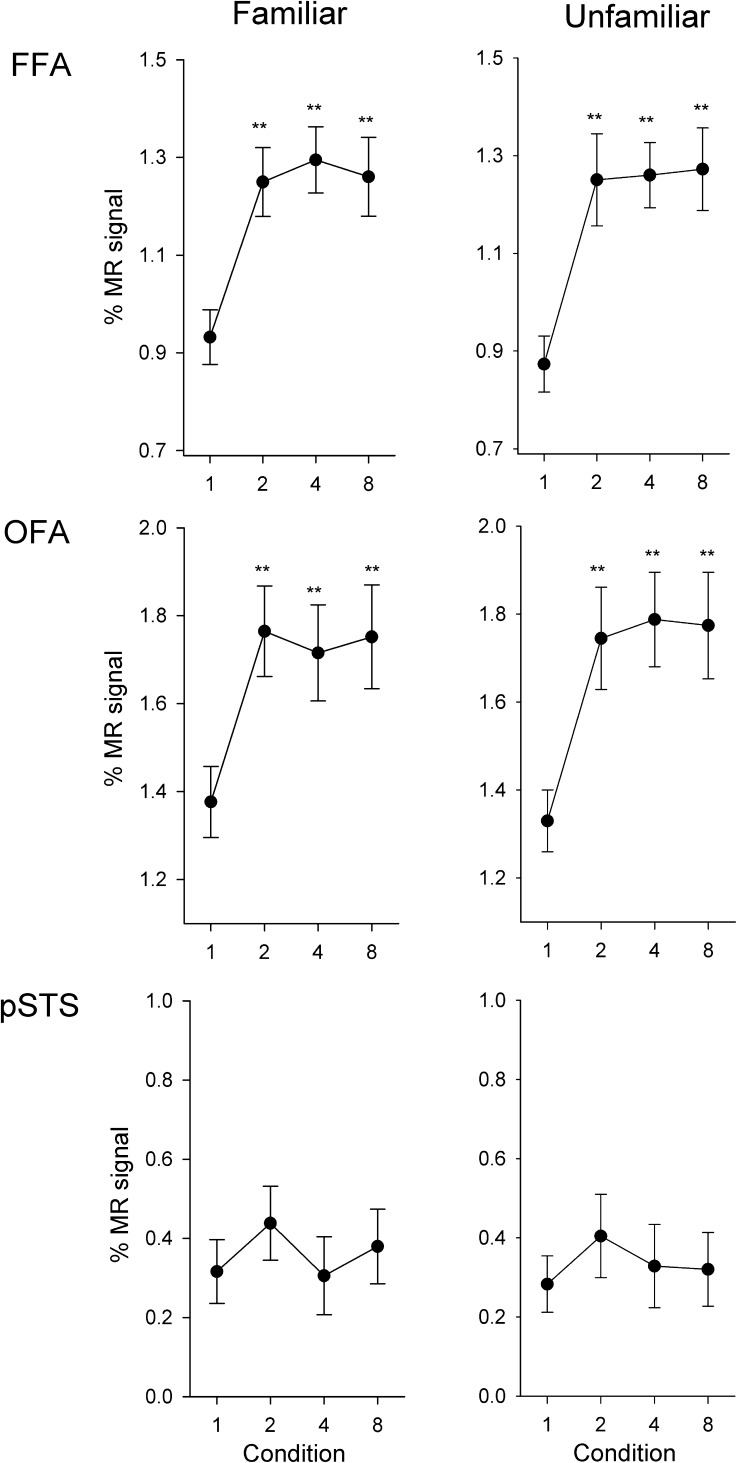

Figure 6.

Experiment 3: Responses of face-selective regions to different images of different identities. Peak responses to the different conditions are shown in the FFA, OFA, and pSTS. In contrast to Experiment 2, there was an immediate increase in response in the FFA and OFA when different images were shown in a block for both familiar and unfamiliar faces. However, there was no difference between any of the conditions in the pSTS. Error bars represent ±standard error across all participants. *P < 0.05, **P < 0.01, indicates an increased response relative to the 1-image condition.

Occipital Face Area

A similar pattern of response was found in the OFA and FFA (Fig. 6, middle row). For familiar faces, there was a reduced response (adaptation) to the 1-image condition compared with all other conditions (2-images: t19 = 6.62, r = 0.42, P < 0.001; 4-images: t19 = 4.81, r = 0.37, P < 0.001; 8-images: t19 = 5.66, r = 0.38, P < 0.001). There was no difference in response between the 8-images condition and the 2-images (t19 = 0.19, r = 0.01, P = 0.85) and 4-images (t19 = 0.46, r = 0.04, P = 0.65) conditions. The same pattern was found for unfamiliar faces, with a reduced response to the 1-image condition compared with all other conditions (2-images: t19 = 4.29, r = 0.43, P < 0.001; 4-images: t19 = 5.04, r = 0.49, P < 0.001; 8-images: t19 = 3.90, r = 0.45, P < 0.001). There was no difference in response between the 8-images condition and the 2-images (t19 = 0.39, r = 0.03, P = 0.70) and 4-images (t19 = 0.21, r = 0.01, P = 0.84) conditions.

Posterior Superior Temporal Sulcus

The response to familiar and unfamiliar faces in the pSTS can be seen in Figure 6 (bottom row). For familiar faces, there was no difference in response between the 1-image condition and the 2-images (t14 = 1.76, r = 0.18, P = 0.10), 4-images (t14 = 0.12, r = 0.01, P = 0.91), and 8-images (t14 = 1.04, r = 0.09, P = 0.32) conditions. Similarly, for unfamiliar faces, there was no difference in response between the 1-image condition and the 2-images (t14 = 1.33, r = 0.17, P = 0.21), 4-images (t14 = 0.56, r = 0.06, P = 0.59), and 8-images (t14 = 0.50, r = 0.06, P = 1.49) conditions.

Occipital Pole

To determine the selectivity of the responses we observed in face-selective regions, we measured the peak response in an early visual region for each condition in Experiments 2 and 3. An occipital pole mask (http://www.cma.mgh.harvard.edu/fsl_atlas.html) was transformed into each participant's EPI coordinates. A 2 × 2 × 4 ANOVA showed no effect of Experiment (F1,19 = 0.78, P = 0.39), Condition (F3,57 = 0.36, P = 0.78), or Familiarity (F1,19 = 3.84, P = 0.07). There were also no interactions (Experiment × Familiarity [F1,19 = 0.95, P = 0.34]; Experiment × Condition [F3,57 = 1.27, P = 0.30]; Familiarity × Condition [F3,57 = 1.11, P = 0.35]; Experiment × Familiarity × Condition [F3,57 = 0.80, P = 0.50]). These results show that the significant effects found in some face-selective regions do not appear to be inherited from responses at early stages of the visual system.

Discussion

This study used behavioral and fMR-adaptation paradigms to evaluate differences in the neural representation underlying familiar and unfamiliar faces. In line with previous studies (Bruce et al. 1987, 1999; Hancock et al. 2000; Megreya and Burton 2006; Davies-Thompson et al. 2009), our results show a clear behavioral advantage for matching familiar faces as compared with unfamiliar faces across similar image manipulations. We also found clear evidence for some degree of image-invariant representations of facial identity in face-selective regions. However, there was no evidence for more image invariance in the neural response to familiar faces. These findings suggest that marked differences in the perception of familiar and unfamiliar faces may not be due to different levels of image invariance at this level of the face processing network.

Models of face processing predict a more image-invariant neural representation for familiar faces compared with unfamiliar faces. However, previous neuroimaging studies have reported mixed results about whether face-selective regions have an image-invariant representation to identity. Some studies have reported image invariance (Winston et al. 2004; Loffler et al. 2005; Rotshtein et al. 2005; Ewbank and Andrews 2008), whereas others have reported image dependence (Grill-Spector et al. 1999; Andrews and Ewbank 2004; Eger et al. 2005; Pourtois et al. 2005a, 2005b; Davies-Thompson et al. 2009; Xu et al. 2009). By systematically varying the amount of image variation within a block, our results are able to show the level of image invariance in these face-selective regions. In Experiment 2, we found a gradual release from adaptation. For example, there was no significant difference between the same repeated image and repetitions of 2-images of the same identity. Although this could be explained by image invariance in face-selective regions, it could also be explained by image repetition. To differentiate between these explanations, Experiment 3 used the same design but with images of different identities. In contrast to Experiment 2, there was an immediate release from adaptation to repetitions of 2 images. Together, these experiments provide clear evidence for image-invariant responses in the core face-selective regions of the human brain.

Although our results show some degree of image invariance in these face-selective regions, the response is not completely invariant to changes in the image: a completely invariant representation would predict sustained adaptation across multiple images of the same identity, whereas we observed a release from adaptation to 8-images. This suggests that the neural response to different images of the same person may involve overlapping rather than identical populations of neurons. In a recent study, we varied the viewing angle of successive face images in a similar fMR-adaptation paradigm (Ewbank and Andrews 2008). Adaptation in the FFA was found across all changes in viewing angle for familiar faces, but there was a release from adaptation with increasing viewing angle for unfamiliar faces. Although this could suggest a complete image-invariant response to familiar faces, the changes in viewing angle were small. In a subsequent study, we investigated adaptation to identity across much larger changes in the image and found a complete release from adaptation for both familiar and unfamiliar faces (Davies-Thompson et al. 2009). Together, these findings suggest that there are limits to image invariance in these regions (Natu and O’Toole 2011). This conclusion is consistent with priming studies that show that the repetition advantage is maximal for the same image and decreases with changes in the image (Bruce and Valentine 1985; Ellis et al. 1987).

The key finding from this study is that there were similar levels of response to both familiar and unfamiliar faces in the OFA and FFA. Indeed, if anything, the response to unfamiliar faces was more image invariant in Experiment 2. The lack of a more invariant response to familiar compared with unfamiliar faces contrasts with the marked differences in the behavioral responses. In Experiment 1, we found that participants were less accurate at identifying unfamiliar faces across changes in appearance than at identifying familiar faces across similar manipulations. For example, different images of the same unfamiliar identity were reported as different faces on over 30% of trials (chance performance is 50%). There was also a significant increase in RT to different images of the same unfamiliar identity, showing that participants were taking longer to respond. This contrasts with the pattern of neural response in Experiments 2 and 3, which shows that face-selective regions such as the FFA are able to discriminate that different images belong to the same facial identity for both familiar and unfamiliar faces. It is equally clear, however, that participants are not able to use this information to make correct behavioral judgments about the identity of unfamiliar faces. Therefore, it would appear that the computations that occur in the core face-selective regions are not sufficient to explain the difference in perception of familiar and unfamiliar faces.

Neural models of face processing propose that different face-selective regions represent different aspects of facial information. The OFA is suggested to be an early processing region that has an image-dependent representation. In contrast, the FFA is thought to have more invariant representations critical for the perception of identity. In this study, we observed similar patterns of responses in the OFA and FFA. This fits with other studies using fMR adaptation (Andrews and Ewbank 2004; Davies-Thompson et al. 2009; Andrews et al. 2010) and suggests that both these regions are highly interconnected and may represent early stages of processing in face perception. In contrast to the OFA and FFA, the response of the pSTS was not sensitive to changes in facial identity. This fits with previous studies that have shown a distinction between inferior temporal processes involved in facial recognition and superior temporal processes involved in understanding dynamic aspects of faces (Haxby et al. 2000; Hoffman and Haxby 2000; Andrews and Ewbank 2004).

In conclusion, we provide evidence for some degree of image invariance to facial identity for familiar and unfamiliar faces in face-selective regions of the human brain. However, the similarity in response to familiar and unfamiliar faces contrasts with the marked differences in the way these visual stimuli are perceived. Taken together, these results suggest that the clear behavioral difference in the ability to perceive and recognize familiar and unfamiliar faces may not be due to differences in the way multiple images of the same facial identity are represented in the core face-selective regions. They therefore pose a significant challenge for understanding the neural basis of face recognition.

Funding

J.D.-T. was supported by an ESRC studentship. This work was supported by a grant from the Wellcome Trust (WT087720MA).

Acknowledgments

We would like to thank Andy Young for helpful discussion and comments on the manuscript and Laura Binns, Fiona Spiers, Helen Warwick, and Alicia Wooding for their help with Experiment 2.

Conflict of Interest: None declared.

References

- Andrews TJ, Davies-Thompson J, Kingstone A, Young AW. Internal and external features of the face are represented holistically in face-selective regions of visual cortex. J Neurosci. 2010;30:3544–3552. doi: 10.1523/JNEUROSCI.4863-09.2010.

- Andrews TJ, Ewbank MP. Distinct representations for facial identity and changeable aspects of faces in the human temporal lobe. Neuroimage. 2004;23:905–913. doi: 10.1016/j.neuroimage.2004.07.060.

- Bruce V, Henderson Z, Greenwood K, Hancock PJB, Burton AM, Miller P. Verification of face identities from images captured on video. J Exp Psychol Appl. 1999;5(4):339–360.

- Bruce V, Valentine T. Identity priming in the recognition of familiar faces. Br J Psychol. 1985;76(3):373–383. doi: 10.1111/j.2044-8295.1985.tb01960.x.

- Bruce V, Valentine T, Baddeley A. The basis of the 3/4 view advantage in face recognition. Appl Cogn Psychol. 1987;1(2):109–120.

- Bruce V, Young A. Understanding face recognition. Br J Psychol. 1986;77:305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x.

- Burton AM, Bruce V, Hancock PJB. From pixels to people: a model of familiar face recognition. Cogn Sci. 1999;23(1):1–31.

- Davies-Thompson J, Gouws A, Andrews TJ. An image-dependent representation of familiar and unfamiliar faces in the human ventral stream. Neuropsychologia. 2009;47:1627–1635. doi: 10.1016/j.neuropsychologia.2009.01.017.

- Eger E, Schweinberger SR, Dolan RJ, Henson RN. Familiarity enhances invariance of face representations in human ventral visual cortex: fMRI evidence. Neuroimage. 2005;26:1128–1139. doi: 10.1016/j.neuroimage.2005.03.010.

- Ellis AW, Young AW, Flude BM, Hay DC. Repetition priming of face recognition. Q J Exp Psychol. 1987;39a:193–210. doi: 10.1080/14640748708401784.

- Ewbank MP, Andrews TJ. Differential sensitivity for viewpoint between familiar and unfamiliar faces in human visual cortex. Neuroimage. 2008;40:1857–1870. doi: 10.1016/j.neuroimage.2008.01.049.

- Fairhall SI, Ishai A. Effective connectivity within the distributed cortical network for face perception. Cereb Cortex. 2007;17:2400–2406. doi: 10.1093/cercor/bhl148.

- Grill-Spector K, Kushnir T, Edelman S, Avidan G, Itzchak Y, Malach R. Differential processing of objects under various viewing conditions in human lateral occipital complex. Neuron. 1999;24:187–203. doi: 10.1016/s0896-6273(00)80832-6.

- Hancock PJB, Bruce V, Burton AM. Recognition of unfamiliar faces. Trends Cogn Sci. 2000;4(9):330–337. doi: 10.1016/s1364-6613(00)01519-9.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends Cogn Sci. 2000;4:223–233. doi: 10.1016/s1364-6613(00)01482-0.

- Hoffman EA, Haxby JV. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat Neurosci. 2000;3(1):80–84. doi: 10.1038/71152.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in extrastriate cortex specialised for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Loffler G, Yourganov G, Wilkinson F, Wilson HR. fMRI evidence for the neural representation of faces. Nat Neurosci. 2005;8(10):1386–1391. doi: 10.1038/nn1538.

- Megreya AM, Burton AM. Unfamiliar faces are not faces: evidence from a matching task. Mem Cogn. 2006;34(4):865–876. doi: 10.3758/bf03193433.

- Natu V, O’Toole AJ. The neural processing of familiar and unfamiliar faces: a review and synopsis. Br J Psychol. 2011;102:726–747. doi: 10.1111/j.2044-8295.2011.02053.x.

- Pourtois G, Schwartz S, Seghier ML, Lazeyras F, Vuilleumier P. Portraits or people? Distinct representations of face identity in the human visual cortex. J Cogn Neurosci. 2005a;17:1043–1057. doi: 10.1162/0898929054475181.

- Pourtois G, Schwartz S, Seghier ML, Lazeyras F, Vuilleumier P. View-independent coding of face identity in frontal and temporal cortices is modulated by familiarity: an event-related fMRI study. Neuroimage. 2005b;24:1214–1224. doi: 10.1016/j.neuroimage.2004.10.038.

- Rotshtein P, Henson RNA, Treves A, Driver J, Dolan RJ. Morphing Marilyn into Maggie dissociates physical and identity face representations in the brain. Nat Neurosci. 2005;8:107–113. doi: 10.1038/nn1370.

- Winston JS, Henson RNA, Fine-Goulden MR, Dolan RJ. fMRI adaptation reveals dissociable neural representations of identity and expression in face perception. J Neurophysiol. 2004;92:1830–1839. doi: 10.1152/jn.00155.2004.

- Xu X, Yue X, Lescroart MD, Biederman I, Kim JG. Adaptation in the fusiform face area (FFA): image or person? Vis Res. 2009;49:2800–2807. doi: 10.1016/j.visres.2009.08.021.

- Yovel G, Kanwisher N. The neural basis of the behavioral face-inversion effect. Curr Biol. 2005;15:2256–2262. doi: 10.1016/j.cub.2005.10.072.