Abstract

Objective:

This study explored Internet log files from emergency department workstations to determine search patterns and compared them to discharge diagnoses and the emergency medicine curriculum as a way to quantify physician search behaviors.

Methods:

Log files from the ED workstations covering January 2006 to March 2010 were mapped to the EM curriculum and compared to discharge diagnoses to explore search terms and website usage by physicians and students.

Results:

Physicians in the ED averaged 1.35 searches per patient encounter using Google.com and UpToDate.com 83.9% of the time. The most common searches were for drug information (23.1%) by all provider types. The majority of the websites utilized were in the third tier evidence level for evidence-based medicine (EBM).

Conclusion:

We have shown a need for a readily accessible drug knowledge base within the EMR for decision support as well as easier access to first and second tier EBM evidence.

Physicians are increasingly pressured to deliver rapid, efficient, and precise care in a world with exponential growth of medical knowledge, complex disease patterns, litigation risk, and cost management. The explosion of medical knowledge, procedures, and new drugs makes it nearly impossible for clinicians to maintain a working knowledge of the current literature. Thirty years ago, Dr. Clem McDonald spoke of the “non-perfectibility” of man when he reported one of the first prospective studies of computerized physician reminders and we know today that this is still true.1 Human memory has a limited capacity for storage and retrieval requiring the use of electronic resources to obtain relevant clinical information during patient encounters.

Previous work investigating physician information needs and search behaviors by Andrews et al. in 2005 found that 58% of family practice providers sought clinical information several times each week but only 68% sought information while the patient waited.2 Primary care physicians generate an average of 0.07 to 1.85 questions per patient encounter and seek answers to 30–57% of them.3 The urgency of the problem and the expectation of finding an answer are independent predictors of whether or not clinicians seek answers to their questions.3 Obstacles to seeking answers identified in 2002 include excessive time required to search, difficulty formulating the question, selection of an appropriate search strategy, failure of the selected resource to answer the question, uncertainty about when the ‘correct’ answer is found, and inadequate synthesis of multiple sources in a usable format.4 Electronic references have evolved in the past 10 years and now include websites with topic summaries or systematic reviews like Clinical Evidence (clinicalevidence.com), UpToDate (uptodate.com), Best Evidence Topics (bestbets.org), and Cochrane Reviews (cochrane.org).5 These sites allow the busy clinician to access relevant evidence-based information without formulating a search strategy and provide a concise summary of the topic. A 2006 study observing physician Internet searching behaviors found a mean of 13.3 minutes and 1.8 resources accessed per question searched,6 while a similar 2008 study found an average of 5 minutes per search with 53% yielding a complete answer to the question.7 While the time to access information is decreasing, the expectation of finding a complete answer to a clinical question remains abysmal.

Several studies have evaluated search behaviors using a clinical decision pre-test followed by the use of electronic references to correct any perceived incorrect answers.6,8,9 The use of electronic references improved diagnostic ability by 21%,9 but 7–10% of correct answers in two studies changed from correct to incorrect as a result of the search.6,9 As of 2003, approximately 56% of residency training programs had access to electronic reference materials at the facilities in which they trained.10 Despite the availability of electronic information resources, the majority of physicians in 2005 used paper references to find clinical information.2 The majority of the previous studies in this area have been laboratory studies of physician searches for investigator-derived questions rather than search behavior during actual clinical encounters.

There has been little published in this area since 2005 even though there has been a rapid expansion of online resources and increasingly sophisticated Internet search engines specific to scientific research. The purpose of this study was to review just over four years of Internet log files (January 2006 to March 2010) from the physician workstations in an urban public hospital emergency department to determine which search engines and what topics are searched by medical providers in order to quantify the information-seeking behaviors of emergency medicine (EM) physicians and physicians in training. We compared the topics searched with the EM curriculum and the ED discharge diagnoses. Our working hypotheses were that there is a difference between faculty and in-training physicians in the choice and utilization of online resources for clinical information in the emergency department, and that the topics searched will closely match the discharge diagnoses and cover the entire EM curriculum. We conducted an analysis of the reliability of information sources and the ability to find up-to-date, evidence-based, and relevant clinical information with a goal of developing tools to provide timely, reliable, and easy access to clinical information.

Study Setting

EM physicians must have a working knowledge of all medical specialties in order to diagnose and treat the more than 100,000 patients seen in the Wishard Hospital Emergency Department each year. The Indiana University School of Medicine (IUSOM) Emergency Medicine Residency trains over 60 EM and EM-Peds residents each year with a faculty of 62 at three downtown hospitals (including Wishard). This is an ideal laboratory to study information retrieval practices of physicians. The Wishard ED is the only one of the three hospitals that keeps Internet log files at this time.

Methods

This project was reviewed and approved by the Indiana University IRB and Wishard Hospital. The Regenstrief Institute data managers accessed and compiled the log files from all 45 of the Internet-accessible workstation computers in the Wishard ED for the period from January 2006 to March 2010. These files include provider ID, date, time, and web address. The provider ID was cross-referenced with a list of provider types (resident, staff, medical student, nurse, nurse practitioner, pharmacist, paramedic, or auxiliary staff) obtained from the Regenstrief data managers in 2010. It is possible that providers changed roles from resident to staff or from medical student to resident during the course of the study. We used the role assigned in 2010, when the cross-referencing was done. Once the provider type was identified, the provider ID was replaced by a study ID code that identifies the training level to allow for monitoring individual trends without compromising data security and the identifiable provider IDs. The de-identified data were used for the remainder of the data cleaning and analysis. We initially wanted to classify providers as EM or non-EM, but the Wishard system allows residents and students to change their department affiliation depending on where they are assigned, making this impossible to code accurately with the information available.
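The replacement of provider IDs with role-bearing study IDs can be sketched as follows. This is a minimal illustration, not the procedure the Regenstrief data managers used: the prefix scheme (`RES`, `STF`, `MS`, `OTH`, `UNK`), the zero-padded counter, and the function name `assign_study_ids` are all assumptions introduced here, since the paper does not describe the study ID format.

```python
from collections import defaultdict

# Hypothetical prefixes; the paper only says the study ID identifies training level.
ROLE_PREFIX = {
    "resident": "RES",
    "staff": "STF",
    "medical student": "MS",
    "nurse": "OTH",
    "nurse practitioner": "OTH",
    "pharmacist": "OTH",
    "paramedic": "OTH",
    "auxiliary staff": "OTH",
}


def assign_study_ids(provider_ids, roles):
    """Map each provider ID to a study ID that keeps only the role.

    provider_ids: iterable of raw IDs in the order they appear in the logs.
    roles: dict of raw ID -> role string; IDs missing from it become UNK.
    """
    counters = defaultdict(int)
    study_ids = {}
    for pid in provider_ids:
        if pid in study_ids:
            continue  # same provider seen again: reuse the existing study ID
        prefix = ROLE_PREFIX.get(roles.get(pid, ""), "UNK")
        counters[prefix] += 1
        study_ids[pid] = f"{prefix}-{counters[prefix]:04d}"
    return study_ids
```

Because the same provider always maps to the same study ID, individual trends remain visible after the raw ID is discarded. IDs absent from the role list fall into an unknown group, which mirrors the unmapped provider type reported in the Results.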

We obtained a copy of the 2009 EM curriculum from the Society for Academic Emergency Medicine website in order to code the search terms and identify possible curriculum deficiencies in the clinical setting. We also obtained a list of Wishard ED discharge diagnoses to compare Internet searching to the patient population in the ED. This list was manually coded to reflect the EM curriculum for comparison with the coded searches. There is no way to know from this dataset which diagnoses were made by residents, staff, or medical students, so comparisons are made to the whole group.

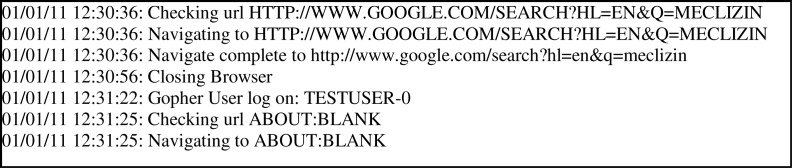

The de-identified log files were parsed using BBEdit (Bare Bones Software version 9.6.3) to remove all lines containing the words navigating, checking, blank, browser, javascript, ad.doubleclick, googleads, couldn’t, sites, blocking, mail, exchange, regenstrief, RMRS, svapps, and clarian (figure 1). This initial cleaning was done to remove obvious duplicate searches (from the “checking” and “navigating to” lines), non-clinical sites, advertising, local EMR connections, mail, and browser files. The data files were then opened in Excel (Microsoft Corporation Mac version 14.1.4) to continue data cleaning using macros to separate the web address from the search terms and delete non-clinical sites. We did a final manual review of each individual line to eliminate obvious non-clinical sites. The final dataset was manually coded to match the emergency medicine curriculum (2009 version) with additions for anatomy, drug information, education, laboratory, procedure, and test searches.
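The keyword pass described above was done in BBEdit; the sketch below shows an equivalent filter in Python for readers who want to reproduce that step. It is an illustration under stated assumptions: matching is done case-insensitively on whole lines (the paper does not say whether the BBEdit search was case-sensitive), and the function name `clean_log_lines` and the sample lines in the usage note are hypothetical.

```python
# Keywords taken from the Methods text; any line containing one is dropped.
EXCLUDE_KEYWORDS = (
    "navigating", "checking", "blank", "browser", "javascript",
    "ad.doubleclick", "googleads", "couldn’t", "sites", "blocking",
    "mail", "exchange", "regenstrief", "rmrs", "svapps", "clarian",
)


def clean_log_lines(lines, keywords=EXCLUDE_KEYWORDS):
    """Return only the lines that contain none of the exclusion keywords.

    Assumption: substring matching, case-insensitive.
    """
    lowered_keywords = [k.lower() for k in keywords]
    kept = []
    for line in lines:
        text = line.lower()
        if not any(k in text for k in lowered_keywords):
            kept.append(line)
    return kept
```

Plain substring matching is broad: a keyword such as "mail" or "sites" also removes any line that merely contains it inside a longer word, which is one reason a manual review pass is still needed after the automated step.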

Figure 1.

Sample Raw Log File Data

The 1,837 providers who accessed the ED workstations include EM staff, EM residents, and medical students both on EM service and off service, rotating residents, consulting residents, and consulting staff. The ED is a level 1 trauma center, is open 24 hours a day, 7 days a week, and provides care for over 100,000 patients per year. It is one of three primary training sites for the IUSOM EM residency training program with approximately 60 trainees in 3- or 5-year programs. EM clinical staff physicians work approximately 16 shifts per month while teaching staff may work as few as two shifts a month at this site. EM residents spend approximately 3 months a year in the Wishard ED working an average of 15 shifts per month.

Statistics

Statistical analysis was done in SPSS (IBM version 19 for Mac). We performed frequency analysis of search engines and search terms, stratified by level of training, for each log entry meeting the criteria for clinical information searching. We used an independent-samples t-test to compare search percentages to discharge diagnosis percentages, and qualitative analysis by group for choice of search engine, search terms used, curriculum coverage, and an evidence-based medicine (EBM) reliability analysis of the sites searched.
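The frequency analysis was run in SPSS; as a rough illustration of what a stratified term count involves, the sketch below tallies search terms per provider type and reports each term's share of that group's searches. The function name `top_terms_by_group` and the `(provider_type, term)` record layout are assumptions made for this example. No spelling or abbreviation normalization is applied, matching the choice described in the Limitations.

```python
from collections import Counter, defaultdict


def top_terms_by_group(records, n=5):
    """Return the n most frequent terms for each provider type.

    records: iterable of (provider_type, search_term) pairs.
    Each result row is (term, count, percent of that group's searches).
    """
    counts = defaultdict(Counter)
    for provider_type, term in records:
        counts[provider_type][term] += 1

    result = {}
    for provider_type, counter in counts.items():
        total = sum(counter.values())
        result[provider_type] = [
            (term, count, round(100 * count / total, 2))
            for term, count in counter.most_common(n)
        ]
    return result
```

Expressing each count as a percentage of the group's own total keeps provider types with very different search volumes comparable.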

Results

The initial data pull contained 995.2 MB of data in 200 files from 45 workstations in the Wishard ED. The automated data cleaning eliminated 184.7 MB of data containing the keywords exchange, mail, RMRS, regenstrief, svapps, and clarian. The manual data cleaning further decreased the file to 51,538 records with readable search terms and 1,837 unique provider IDs. The results are from this final dataset. Personnel accessing the workstations were classified as medical student (MS), resident, staff, and other (nurses, pharmacists, paramedics, and ancillary staff) for purposes of this analysis. Staff accounted for 30.9% of the searches, residents 29.9%, unknown provider type 22.4%, other 10.4%, and medical students 6.4%. Individual providers searched an average of 28 times during the study period with a high of 919 searches by a single staff member (see table 2).

Table 1.

Top 5 Searches by Provider Type

| Rank | Provider Type | Search Term | Number (% of provider type's searches) |
|---|---|---|---|
| 1 | MS | Blood in urine | 38 (0.94) |
| 2 | MS | Chest pain | 35 (0.86) |
| 3 | MS | Rash with wheels | 30 (0.74) |
| 4 | MS | Vertigo | 22 (0.54) |
| 5 | MS | COPD | 16 (0.39) |
| 1 | Resident | Dizziness | 93 (0.49) |
| 2 | Resident | DKA | 63 (0.33) |
| 3 | Resident | Hypertensive Emergency | 51 (0.27) |
| 4 | Resident | Bactrim | 48 (0.25) |
| 5 | Resident | Hyponatremia | 42 (0.22) |
| 1 | Staff | Tramadol | 35 (0.29) |
| 2 | Staff | Hyponatremia | 27 (0.23) |
| 3 | Staff | Trazadone | 27 (0.23) |
| 4 | Staff | Keppra | 23 (0.19) |
| 5 | Staff | Abdominal Pain | 21 (0.18) |

There were 226 websites accessed with human-readable search terms in the log files. The top 10 websites accessed were Google (43.3%), UpToDate (40.6%), thomsonhc (4.7%), Wikipedia (3.9%), WebMD (2.4%), ebscohost (1.6%), accessmedicine (0.42%), tripdatabase (0.39%), NEJM (0.3%), and Medscape (0.1%). The Google total combines generic Google searches, Google Scholar, and Google Images.

There were 18,237 search terms identified from the Internet log files, of which 59% were determined to be clinically related searches. The most commonly searched terms by provider type were PEG (102 times by providers of unknown type), dizziness (93 times by residents), blood in urine (38 times by medical students), tramadol (35 times by staff), and black widow brown recluse pictures (30 times by other). The search term total count includes multiple entries if a term was misspelled or abbreviated.

Across all physician and medical student groups, the most common search category was drug information (23.1%) followed by cardiovascular (9.4%). The EM curriculum was well covered by searches, with the fewest searches in environmental (0.9%) and psychiatric (0.7%). Comparing the percentages of search terms by curriculum category to the percentages of discharge diagnoses by curriculum category using an independent-samples t-test, there was a significantly higher percentage of searches than percentage of discharge diagnoses in abdominal and GI, cardiovascular, endocrine, immune, neurological, hematologic, and toxicological categories.

Discussion

There were 51,613 searches identified from 98,491 valid web addresses over the 1550 days of data collected for an average of 33 searches per day and 635 web sites visited per day. Residents and staff conducted the majority (60.8%) of the searches during the study period. Identified searches do not include those conducted from within websites like UpToDate, MDCalc, Access Medicine, PubMed, or others. These sites identify their internal searches in non-human-readable code. Extrapolating from the percentage of search terms for non-clinical topics (41%), we can assume that an additional 580,820 actual clinical searches were conducted during the study period for an average of 374 searches per day or 1.36 searches per patient.
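The per-day and per-patient averages above follow from simple division, sketched below with the totals reported in this section. One input is an assumption: the ED census is taken as exactly 100,000 patients per year spread evenly over 365 days, whereas the text says only "over 100,000". Under that round figure the ratio comes out at roughly 1.37; a slightly larger census gives a slightly lower value, in line with the figures reported here.

```python
STUDY_DAYS = 1550
IDENTIFIED_SEARCHES = 51_613             # searches with readable terms
EXTRAPOLATED_CLINICAL_SEARCHES = 580_820  # extrapolated total reported in the text
PATIENTS_PER_YEAR = 100_000              # assumption: text says "over 100,000"


def per_day(total, days=STUDY_DAYS):
    """Average count per study day."""
    return total / days


identified_per_day = per_day(IDENTIFIED_SEARCHES)
clinical_per_day = per_day(EXTRAPOLATED_CLINICAL_SEARCHES)

# Assumes patients arrive evenly across a 365-day year.
patients_per_day = PATIENTS_PER_YEAR / 365
searches_per_patient = clinical_per_day / patients_per_day

if __name__ == "__main__":
    print(f"identified searches per day: {identified_per_day:.1f}")
    print(f"extrapolated clinical searches per day: {clinical_per_day:.1f}")
    print(f"searches per patient: {searches_per_patient:.2f}")
```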

Andrews et al. (2005) found that physicians searched for answers to clinical questions 68% of the time while patients were still present in clinic,2 and Coumou et al. (2006) found that primary care physicians generate 0.07 to 1.85 questions per patient and seek answers to clinical questions a maximum of 57% of the time.3 From the Internet log data, we can speculate that EM physicians search for answers to the majority of their questions, and the search likely occurs during the patient visit.

Analysis of Information Sources

There were 226 web sites associated with clinically relevant search terms. Google and UpToDate accounted for 83.9% of all searches with identified search terms. All of the EM residents and medical students from IUSOM receive training in evidence-based medicine (EBM), including appropriate resources for finding evidence-based answers to clinical questions. The best evidence is described as filtered in the form of critically appraised individual articles, critically appraised topics, and systematic reviews, followed by randomized clinical trials, cohort studies and case-control studies, case series, and reports. The lowest form of evidence is background information and expert opinion.11 The Dartmouth Biomedical Website identifies the Turning Research into Practice (TRIP) database, Cochrane Database, Database of Abstracts of Reviews of Effects (DARE), Clinical Evidence, ACP Journal Club, Bandolier, Evidence Updates, Dartmouth EBM Database and “Evidence-Based” journals as examples of filtered top tier resources.12 They go on to identify how to search PubMed and Ovid for the highest level of evidence.12 Dartmouth further identifies UpToDate, eMedicine, eBooks, and the National Guideline Clearinghouse as examples of expert opinion evidence or the lowest level of evidence in EBM.11,12 The top 10 websites (table 4) used by providers include only one filtered source (TRIP), 3 unfiltered sites (ebscohost, thomsonhc, and NEJM), 1 expert opinion site (UpToDate), and 4 sites that provide information that could be considered reliable or unreliable (Google, Wikipedia, WebMD, and Medscape). This finding suggests that more training is required for evaluating and accessing higher tier evidence-based sources when searching for clinical information in the ED.

Table 4.

Top 10 Websites Searched

| Website | Total | % Total |
|---|---|---|
| Google.com | 22319 | 43.3 |
| Uptodate.com | 20923 | 40.6 |
| Thomsonhc.com | 2444 | 4.7 |
| Wikipedia.com | 2027 | 3.9 |
| Webmd.com | 1243 | 2.4 |
| Ebscohost.com | 837 | 1.6 |
| Accessmedicine.com | 209 | 0.4 |
| Tripdatabase.com | 202 | 0.4 |
| NEJM.org | 160 | 0.3 |
| Medscape.com | 41 | 0.1 |
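To make the tier breakdown concrete, the sketch below groups the Table 4 counts by the evidence categories named in the text. The tier assignments come from the preceding paragraph; the label "unclassified" for Accessmedicine.com is an assumption of this example, because the text does not assign that site to a category, and the function name `tally_by_tier` is likewise hypothetical.

```python
from collections import defaultdict

# Search counts from Table 4.
SITE_COUNTS = {
    "google.com": 22319, "uptodate.com": 20923, "thomsonhc.com": 2444,
    "wikipedia.com": 2027, "webmd.com": 1243, "ebscohost.com": 837,
    "accessmedicine.com": 209, "tripdatabase.com": 202,
    "nejm.org": 160, "medscape.com": 41,
}

# Tier labels as described in the text; accessmedicine.com is not listed there.
SITE_TIER = {
    "tripdatabase.com": "filtered",
    "ebscohost.com": "unfiltered",
    "thomsonhc.com": "unfiltered",
    "nejm.org": "unfiltered",
    "uptodate.com": "expert opinion",
    "google.com": "mixed reliability",
    "wikipedia.com": "mixed reliability",
    "webmd.com": "mixed reliability",
    "medscape.com": "mixed reliability",
}


def tally_by_tier(counts, tiers):
    """Sum search counts per evidence tier; unknown sites go to 'unclassified'."""
    totals = defaultdict(int)
    for site, n in counts.items():
        totals[tiers.get(site, "unclassified")] += n
    return dict(totals)


if __name__ == "__main__":
    for tier, n in sorted(tally_by_tier(SITE_COUNTS, SITE_TIER).items()):
        print(f"{tier}: {n}")
```

Grouped this way, the single filtered source accounts for 202 of the searches in the top 10 sites, which is the pattern the discussion above points to.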

EM Curriculum Mapping

There were 18,237 search terms identified from the Internet logs. These include misspellings and abbreviations as separate terms. All of the terms were mapped and coded to the EM curriculum major subject areas for analysis to determine the breadth of curriculum coverage by search and to determine if there are any apparent gaps in knowledge that could be corrected during didactic sessions. The largest share of searches (more than twice the next most frequent category) was for drug information (table 3). These searches were conducted primarily in Google and UpToDate with a few accessing WebMD for drug information. This suggests a need for an easily accessible, comprehensive drug database built into the EMR to facilitate rapid searches in a reliable database. Identified search terms covered the curriculum major topics with the lowest number of searches in environmental and psychiatric emergencies and the highest number after drug information in cardiovascular. This pattern could represent knowledge confidence level, patient complexity, fear of missing something important, faculty teaching interests, or patient population. Additional observational studies are needed to determine the underlying reasoning for search behaviors.

Table 3.

Curriculum Mapping

| Curriculum Section | Search Total % | Discharge Dx % | Significant difference Search Total - Discharge Dx | Resident % | Staff % | MS % |
|---|---|---|---|---|---|---|
| General | 4.3 | 21.6 | P=0.005 | 4.3 | 3.7 | 6 |
| Abd & GI | 4.6 | 5.4 | P=0.02 | 4.3 | 4.9 | 4.6 |
| Cardiovascular | 9.4 | 8.1 | **P<0.005** | 8.6 | 10 | 10.6 |
| Skin | 3.0 | 5.4 | P=0.046 | 2.7 | 2.1 | 6.2 |
| Endo Metab Nutrition | 4.9 | 2.3 | **P<0.0001** | 5.4 | 5.2 | 4.3 |
| Environmental | 0.9 | 0.8 | NS | 0.7 | 1.1 | 1.3 |
| HEENT | 4.7 | 8.7 | P=0.013 | 5.3 | 2.8 | 4.8 |
| Hematologic | 1.9 | 0.5 | **P<0.0001** | 2.0 | 2.5 | 1.5 |
| Immune | 1.5 | 0.8 | NS | 1.4 | 1.9 | 0.8 |
| Infectious | 3.5 | 1.7 | NS | 3.6 | 2.6 | 2.7 |
| Musculoskeletal | 2.8 | 5.3 | NS | 2.8 | 2.3 | 2.4 |
| Neurological | 5.1 | 5.5 | P=0.025 | 4.7 | 5.8 | 6.6 |
| OB Gyn | 1.6 | 2.3 | NS | 1.7 | 1.0 | 1.7 |
| Psych | 0.7 | 3.6 | NS | 0.7 | 0.6 | 1.1 |
| Renal | 2.9 | 4.4 | NS | 2.8 | 2.9 | 4.4 |
| Pulmonary | 4.4 | 7.0 | P=0.02 | 4.3 | 3.9 | 4.5 |
| Tox | 5.4 | 3.4 | **P=0.049** | 5.4 | 7.6 | 4.1 |
| Trauma | 3.1 | 11.4 | P=0.021 | 2.6 | 3.2 | 3.3 |
| Anatomy | 1.2 | | | 1.0 | 0.7 | 2.1 |
| Drug | 23.1 | 0.3 | | 24.6 | 24.7 | 16.8 |
| Educ | 3.8 | | | 3.6 | 4.4 | 2.3 |
| Lab | 3.4 | 0.8 | | 3.2 | 3.2 | 4.2 |
| Proc | 2.0 | | | 2.6 | 1.7 | 1.7 |
| Test | 1.7 | | | 1.4 | 1.1 | 2.2 |

Bold indicates statistically significant difference for number of total searches greater than discharge diagnoses in each curriculum category.

EM Curriculum and Discharge Diagnoses

We identified 4 of 18 curricular topics that had a higher percentage of searches conducted than percentage of discharge diagnoses (table 3). These areas were cardiovascular, endocrine, hematologic, and toxicological emergencies. There are a number of explanations for this finding that require further exploration. Patients presenting with complaints in these categories tend to be complex with multiple comorbidities, which would increase the information need and thus the number of searches conducted. Because the Wishard ED is a teaching facility, staff members may prompt increased searching to help residents and students formulate differential diagnoses for these complex patients. Hematology and immunology patients represent the lowest percentage of total patients seen in the ED (table 3), which could account for the necessity of more searches per patient. Further observational studies are required to establish the explanation for these discrepancies.

Top 5 Search Terms

The top five searches for residents were dizziness, DKA, hypertensive emergency, Bactrim, and hyponatremia (table 1). These five searches do not correlate with the number of patients discharged with related diagnoses. These areas could be indicative of curriculum gaps or insecurity in patient management among residents. The top five searches for staff were tramadol, hyponatremia, trazadone, keppra, and abdominal pain (table 1). Three of the five are drug information searches, one overlaps with the residents (hyponatremia), and one is a unique search for abdominal pain. The top five for medical students were blood in urine, chest pain, rash, vertigo, and COPD, which reflect a variety of curricular areas, likely appropriate for the level of training and acuity of patients seen in the ED (table 1). These results once again support the need for readily accessible drug information for ED physicians. Further observational studies are required to help understand the specific target of searches like COPD or abdominal pain. We have no way of knowing from the log files if the provider was searching for diagnostic criteria, treatment options, or something else entirely.

Table 2.

Searches by Provider Type

| Provider Type | Total Searches | Avg. per provider | High per provider |
|---|---|---|---|
| MS | 4061 | 16 | 180 |
| Other | 2487 | 10 | 111 |
| Resident | 18871 | 35 | 265 |
| Staff | 11872 | 31 | 919 |
| Unk | 14247 | 34 | 737 |

Limitations

One of the major limitations of this study is that many websites encode search terms in a non-human-readable form, so it is impossible to know what was searched after entering PubMed, UpToDate, MDCalc, or Access Medicine. Data cleaning and search identification required several passes with text editing tools and Excel macros as well as manual review. Spelling errors, abbreviations, and multiple terms for the same concept further complicate the process. We did not attempt to correct the log files for spelling, multiple terms, or abbreviations. These were accounted for when the search terms were coded to the EM curriculum, but the total number of terms searched and the most common concepts may be affected.
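The difference between a readable and an unreadable log entry can be illustrated with a short sketch that pulls a search term out of a URL query string. The study used Excel macros for this step, so the code below is only an analogue. The parameter names in `SEARCH_PARAMS` are assumptions (Google uses `q`; the others are hypothetical guesses for other sites), and the example URLs in the usage note are made up.

```python
from urllib.parse import urlsplit, parse_qs

# Assumed query parameter names that may carry a readable search term.
SEARCH_PARAMS = ("q", "search", "query")


def extract_search_term(url):
    """Return (hostname, search term), or (hostname, None) when no
    readable term is found in the query string."""
    parts = urlsplit(url)
    params = parse_qs(parts.query)  # decodes '+' and percent-escapes
    for name in SEARCH_PARAMS:
        values = params.get(name)
        if values:
            return parts.hostname, values[0]
    return parts.hostname, None
```

For example, `extract_search_term("http://www.google.com/search?hl=en&q=blood+in+urine")` yields the host and the term "blood in urine", while a URL such as `http://www.example.org/content.do?id=A93F2` yields no term because the query carries only an opaque identifier. Entries of the second kind are the ones that could not be analyzed in this study.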

We were unable to map provider ID to provider type for approximately 22% of the Internet log files. This is likely due to the turnover of medical students, residents, staff, and other personnel during the study period. We were also unable to accurately determine whether personnel were emergency medicine or other specialties as the Wishard system allows providers to change their department affiliation when they change rotations in order to provide the most appropriate EMR abstract for the clinical environment in which they are working. The methodology of this study did not allow us to observe whether the patient was still present in the ED when the search took place, nor how long it took to complete the search, nor whether an answer was found. Combining log files with direct observation and interviews would improve our understanding of the outcome of the search and the length of time necessary to complete a search.

Teaching faculty often ask residents and students to search topics that they normally would not choose to search and may suggest a particular site, which contaminates our data when we are looking at website choice by training level. Faculty search for information to provide evidence-based decision support to residents and medical students as well as to answer their own questions. There are direct links to some drug information from within the EMR. Personal handheld devices, computers, and tablets are often used to conduct searches, which would not be captured by this process, and paper references also exist in the clinical environment. Some of these weaknesses could be addressed by using a web portal for all searches to capture all websites and search terms, or by direct observation and recording of the search behaviors of individual providers in the course of patient care in the ED.

Conclusion

Physicians using the ED workstations consulted electronic resources an average of 1.35 times per patient encounter, indicating a definite shift from paper resources to electronic databases. The searches performed by the providers in the ED cover the entire EM curriculum and match closely with the discharge diagnoses. It is clear that a comprehensive drug information reference needs to be accessible from within the EMR. The websites selected by staff, residents, and medical students to obtain clinical information are not the highest quality EBM sites. Obtaining the highest quality of information is an important component in accurately diagnosing and treating ED patients. This study illustrates some potential weaknesses in the resident knowledge base, the training program, or the patient population that deserve special attention during didactic and simulation sessions. The results of our analysis will help guide the development of specialty-specific tools to aid rapid, accurate, and up-to-date acquisition of clinical information for decision support in the Emergency Department.

References

- 1. McDonald CJ. Protocol-based computer reminders, the quality of care and the non-perfectability of man. N Engl J Med. 1976;295:4. doi: 10.1056/NEJM197612092952405.

- 2. Andrews JE, Pearce KA, Ireson C, Love MM. Information-seeking behaviors of practitioners in a primary care practice-based research network. J Med Libr Assoc. 2005;93(2):6.

- 3. Coumou CH, Meijman FJ. How do primary care physicians seek answers to clinical questions? A literature review. J Med Libr Assoc. 2006;94(1):5.

- 4. Ely JW, Osheroff JA, Ebell MH, et al. Obstacles to answering doctors’ questions about patient care with evidence: qualitative study. British Medical Journal. 2002 Mar 23;324. doi: 10.1136/bmj.324.7339.710.

- 5. Wyer PC, Rowe BH. Evidence-based reviews and databases: are they worth the effort? Developing evidence summaries for emergency medicine. Acad Emerg Med. 2007 Nov;14(11):960–964. doi: 10.1197/j.aem.2007.06.011.

- 6. McKibbon KA, Fridsma DB. Effectiveness of clinician-selected electronic information resources for answering primary care physicians’ information needs. Journal of the American Medical Informatics Association. 2006 Nov-Dec;13(6):653–659. doi: 10.1197/jamia.M2087.

- 7. Hoogendam A, Stalenhoef AF, de Vries Robbe PF, Overbeke AJ. Answers to questions posed during daily patient care are more likely to be answered by UpToDate than PubMed. J Med Internet Res. 2008;10(4):e29. doi: 10.2196/jmir.1012.

- 8. Falagas ME, Ntziora F, Makris GC, Malietzis GA, Rafailidis PI. Do PubMed and Google searches help medical students and young doctors reach the correct diagnosis? A pilot study. 2009;8:788–790. doi: 10.1016/j.ejim.2009.07.014. Available at: http://ovidsp.ovid.com/ovidweb.cgi?T=JS&PAGE=reference&D=medl&NEWS=N&AN=19892310.

- 9. Westbrook J, Coiera EW, Gosling AS. Do online information retrieval systems help experienced clinicians answer clinical questions? JAMIA. 2005;12(3):7. doi: 10.1197/jamia.M1717.

- 10. Pallin D, Lahman M, Baumlin K. Information technology in emergency medicine residency-affiliated emergency departments. Academic Emergency Medicine. 2003 Aug;10(8):848–852. doi: 10.1197/aemj.10.8.848.

- 11. Sackett DL, Straus SE, Richardson WS. Evidence-based medicine: how to practice and teach EBM. 2 ed. Edinburgh: Churchill Livingstone; 2000.

- 12. Dartmouth Biomedical Libraries Evidence-Based Medicine (EBM) Resources. 2008. http://www.dartmouth.edu/~biomed/resources.htmld/guides/ebm_resources.shtml. Accessed 12 March 2012.