Abstract

The primary purpose of this study was to develop a clinical tool capable of identifying discriminatory characteristics that can predict patients who will return within 72 hours to the pediatric emergency department (PED). We studied 66,861 patients who were discharged from the EDs during the period from May 1 2009 to December 31 2009. We used a classification model to predict return visits based on factors extracted from patient demographic information, chief complaint, diagnosis, treatment, and real-time hospital ED census statistics. We began with a large pool of potentially important factors, and used particle swarm optimization techniques for feature selection coupled with an optimization-based discriminant analysis model (DAMIP) to identify a classification rule with relatively small subsets of discriminatory factors that can be used to predict, with 80% accuracy or greater, return within 72 hours. The analysis involves using a subset of the patient cohort for training and establishment of the predictive rule, and blind predicting the return of the remaining patients.

Good candidate factors for revisit prediction are obtained where the accuracies of cross validation and blind prediction are both over 80%. Among the predictive rules, the most frequent discriminatory factors identified include diagnosis (>97%), patient complaint (>97%), and provider type (>57%). There are significant differences in the readmission characteristics among different acuity levels. For Level 1 patients, critical readmission factors include patient complaint (>57%), time from patient arrival until he/she got an ED bed (>64%), and type/number of providers (>50%). For Level 4/5 patients, physician diagnosis (100%), patient complaint (99%), disposition type when the patient arrives and leaves the ED (>30%), and whether the patient has a lab test (>33%) appear to be significant. The model was demonstrated to be consistent and predictive across multiple PED sites.

The resulting tool could enable ED staff and administrators to enter patient-specific values for each of a small number of discriminatory factors, and in return receive a prediction as to whether the patient will return to the ED within 72 hours. Prediction accuracy can exceed 85%. This provides an opportunity for improving care and offering additional care or guidance to reduce ED readmission.

Introduction

Among patients who are discharged from the ED, 3%–4% return within 72 hours. Revisits can be related to the nature of the disease, medical errors, and/or care during their initial treatment1–3.

Early returns to the ED may involve patients who are in a high-risk population, but other factors, such as an overcrowded ED, which decreases efficiency, can also contribute to the problem2,4–6. Alessandrini et al. analyzed unscheduled revisits and the similarity of return visit rates between pediatric EDs and general EDs7. Previous studies have identified risk factors for early return to the ED, including diagnosis, complaints, and patient demographic factors8,9. Gordon et al. indicated that initial diagnosis may be a useful predictor of early ED return10. McCusker et al. developed a screening tool called the Identification of Seniors at Risk (ISAR) to identify elderly patients at high risk of return to the ED11. Other efforts have focused on predictors of return for pediatric mental health care12, acute pulmonary embolism13, and chronic obstructive pulmonary disease (COPD) exacerbations14.

Although these studies have identified factors that appear to be linked to return visits, little is known about actually predicting return visits. Studies have applied prediction / classification methods to a variety of types of healthcare data15–17. In 1997, Gallagher et al. presented a mixed integer programming model (DAMIP) for constrained discriminant analysis, an approach to classification with constraints to control the likelihood of misclassification18. Lee et al. subsequently demonstrated the capability of DAMIP on a wide variety of medical problems compared to other classification methods19–22. In this study, we leverage DAMIP along with swarm optimization to develop a clinical tool capable of identifying discriminatory characteristics that can predict patients who will return to the ED within 72 hours. We contrast the DAMIP results against other classification approaches.

Methods

This study was conducted in the EDs of two sites of Children’s Healthcare of Atlanta (CHOA): CHOA at Hospital 1 and CHOA at Hospital 2. Included in this study are 66,861 patients who were discharged from the EDs during the period from May 1 2009 to December 31 2009. Patients were identified from the ED information system, including 2519 patients (3.77%) who returned within 72 hours. The patients were classified into two groups as the input of the classification model: the patients who revisit within 72 hours, and other discharged patients.

The data included 96 factors for each of the patients, including chief and secondary complaint, physician diagnosis, 5 factors related to demographic information, 8 factors related to patient arrivals, 44 factors related to the treatment and procedures received, and 35 factors related to the hospital environment.

Factors of patient information, diagnosis, and treatment have been used in previous studies to analyze the early return patients7–11. In this study, the demographic factors include age, sex, race, and weight; the hospital environment factors include day of week, time of arrival, method of arrival, payor status, triage category (acuity level), number of patients in the ED, number of patients waiting for triage, number of available physicians, and number of available beds when the patient arrives; and the treatment factors include length of service, waiting time before a physician arrives, number of orders, number of requested resources, and whether they have taken CT scan, lab tests, radiology test, or IV therapy.

The hospital environment data were extracted from the ED electronic medical record and tracking system (Picis ED PulseCheck) into the hospital’s enterprise Oracle database. For ED descriptors and available patient-level details, this occurred on an hourly basis. During extraction, variables were recorded and calculations for aggregate indicators were written to an Oracle datamart. Final patient data determined after the visit (final ICD-9 codes) were written to the datamart when they became available.

Early return of patients is considered a measure of quality of health care23. Many studies have indicated that errors in medical care or patient education may increase the risk of early return. However, studies have not adequately analyzed the effect of the hospital environment on the patient’s decision to revisit. Previous studies have used logistic linear regression models to find patients at increased risk of return. However, these models fail to accurately predict a return visit, since the association between the Boolean return outcome and the risk factors is more complicated than a linear association. To predict the revisit patients among the discharged patients, we used a classification model as the predictive model. The implemented classifier is discriminant analysis via mixed integer program (DAMIP), which realizes the optimal parameters of Anderson’s classification model18,19,24. DAMIP aims to maximize the overall prediction accuracy using a set of factors, subject to an upper bound on the misclassification rate. In the next section, we describe the DAMIP-based machine learning framework.

Machine Learning Framework for Establishing Predictive Rules

The computational design of our machine learning framework focuses on the ‘wrapper approach’, where a feature selection algorithm is coupled to the DAMIP learning/classification module. The feature selection, classification and cross validation procedures are coupled such that the feature selection algorithm searches through the space of attribute subsets using the cross-validation accuracy from the classification module as a measure of goodness. The attributes selected can be viewed as critical clinical/hospital variables that drive certain diagnosis or early detection. This allows for feedback to clinical decision makers for prioritization/intervention of patients and tasks.

Optimization-Based Classifier: Discriminant Analysis via Mixed Integer Program

Suppose we have n entities from K groups with m features. Let 𝒢 = {1,2, … ,K} be the group index set, 𝒪 = {1,2, … ,n} be the entity index set, and ℱ = {1,2, …, m} be the feature index set. Also, let 𝒪k ⊆ 𝒪, k ∈ 𝒢, be the set of entities that belong to group k. Moreover, let ℱj, j ∈ ℱ, be the domain of feature j, which could be the space of real, integer, or binary values. The ith entity, i ∈ 𝒪, is represented as (yi,xi) = (yi, xi1, … ,xim) ∈ 𝒢 × ℱ1 × ⋯ × ℱm, where yi is the group to which entity i belongs, and (xi1, … ,xim) is the feature vector of entity i. The classification model finds a function f: (ℱ1 × ⋯ × ℱm) → 𝒢 to classify entities into groups based on a selected set of features.

Let πk be the prior probability of group k and fk(x) be the conditional probability density function for the entity x ∈ ℝm of group k, k ∈ 𝒢. Also let αhk ∈ (0,1), h, k ∈ 𝒢, h ≠ k, be the upper bound on the fraction of group h entities that are misclassified into group k. DAMIP seeks a partition {P0, P1, … ,PK} of ℝm, where Pk, k ∈ 𝒢, is the region for group k, and P0 is the reserved-judgement region containing entities for which group assignment is reserved (for potential further exploration).

Let uki be the binary variable to denote if entity i is classified to group k or not. Mathematically, DAMIP can be formulated as

| (1) |

| (2) |

| (3) |

| (4) |

| (5) |
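The bodies of equations (1)–(5) are not reproduced above. For orientation only, the DAMIP model is usually stated in the cited literature18–22 roughly as follows. This is a hedged sketch, not a verbatim copy of the original display: the symbols L_{ki} (a discriminant score for entity i and group k), λ_{hk} (non-negative parameters to be determined), and n_h (the number of training entities in group h) are notation assumed here because they are not defined in the surrounding text.

```latex
\begin{align}
\max \quad & \sum_{i \in \mathcal{O}} u_{y_i i} \notag \\
\text{s.t.} \quad
& L_{ki} = \pi_k f_k(x_i) - \sum_{h \in \mathcal{G},\, h \neq k} f_h(x_i)\,\lambda_{hk},
  && \forall\, i \in \mathcal{O},\ k \in \mathcal{G} \tag{1} \\
& u_{ki} =
  \begin{cases}
    1 & \text{if } k = \arg\max\{0,\ L_{hi} : h \in \mathcal{G}\} \\
    0 & \text{otherwise}
  \end{cases}
  && \forall\, i \in \mathcal{O},\ k \in \{0\} \cup \mathcal{G} \tag{2} \\
& \sum_{k \in \{0\} \cup \mathcal{G}} u_{ki} = 1,
  && \forall\, i \in \mathcal{O} \tag{3} \\
& \sum_{i \in \mathcal{O}_h} u_{ki} \le \lfloor \alpha_{hk}\, n_h \rfloor,
  && \forall\, h, k \in \mathcal{G},\ h \neq k \tag{4} \\
& u_{ki} \in \{0,1\}, \quad L_{ki} \text{ unrestricted in sign}, \quad \lambda_{hk} \ge 0 \tag{5}
\end{align}
```

Read this way, the objective counts correctly classified entities, constraint (4) enforces the misclassification bounds αhk, and the index k = 0 corresponds to the reserved-judgement region P0.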

DAMIP has many appealing characteristics including: 1) the resulting classification rule is strongly universally consistent, given that the Bayes optimal rule for classification is known22; 2) the misclassification rates using the DAMIP method are consistently lower than other classification approaches in both simulated data and real-world data; 3) the classification rules from DAMIP appear to be insensitive to the specification of prior probabilities, yet capable of reducing misclassification rates when the number of training entities from each group is different; 4) the DAMIP model generates stable classification rules regardless of the proportions of training entities from each group.18–22

In the ED readmission classification experiments and analysis, there are two groups of patients: Group 1, non-returning; and Group 2, return within 72 hours. Each entity is a patient, and each feature is a factor. Patient data from the period May 1 2009 to December 31 2009 were randomly divided into two sets: a training set and an independent set for blind prediction. The DAMIP classifier is first applied to the training set to establish the classification rule. The accuracy of the rule is first gauged by performing 10-fold cross validation, and can be further gauged by applying the rule to the independent set of patient data for blind prediction. Blind prediction is performed only when the 10-fold cross validation for the training set satisfies a pre-set minimum accuracy criterion. To gauge the performance of our classifier, we compare the results with linear discriminant analysis, Naive Bayesian classifier, support vector machine, logistic regression, decision tree, random forest, and nearest shrunken centroid approaches as implemented in the R© language/environment.
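The evaluation protocol described above (10-fold cross validation on the training set, then blind prediction only if a minimum accuracy is met) can be sketched as follows. This is a minimal illustration, not the authors' C++ implementation: `train_nearest_mean` is a toy placeholder standing in for DAMIP, the function names and the 0.70 threshold default are assumptions, and the data are made up.

```python
import random


def per_group_accuracy(pairs):
    """Return the accuracy within each group plus the overall accuracy."""
    hits, totals = {}, {}
    for predicted, actual in pairs:
        totals[actual] = totals.get(actual, 0) + 1
        hits[actual] = hits.get(actual, 0) + int(predicted == actual)
    scores = {group: hits[group] / totals[group] for group in totals}
    scores["overall"] = sum(hits.values()) / sum(totals.values())
    return scores


def cross_validate(train, rows, k=10, seed=0):
    """k-fold cross validation: pool the held-out predictions, then score them."""
    order = list(range(len(rows)))
    random.Random(seed).shuffle(order)
    pairs = []
    for fold in range(k):
        held_out = set(order[fold::k])
        rule = train([rows[i] for i in order if i not in held_out])
        pairs += [(rule(rows[i][0]), rows[i][1]) for i in held_out]
    return per_group_accuracy(pairs)


def evaluate(train, training_rows, blind_rows, min_accuracy=0.70):
    """Blind-predict only if overall and per-group CV accuracy exceed the threshold."""
    cv = cross_validate(train, training_rows)
    if min(cv.values()) <= min_accuracy:
        return cv, None  # rule rejected, no blind prediction
    rule = train(training_rows)
    blind = per_group_accuracy([(rule(x), y) for x, y in blind_rows])
    return cv, blind


def train_nearest_mean(rows):
    """Toy one-feature nearest-mean rule; a placeholder, NOT DAMIP."""
    sums, counts = {}, {}
    for x, y in rows:
        sums[y] = sums.get(y, 0.0) + x[0]
        counts[y] = counts.get(y, 0) + 1
    means = {g: sums[g] / counts[g] for g in sums}
    return lambda x: min(means, key=lambda g: abs(x[0] - means[g]))


# Made-up data: label 1 = non-returning, label 2 = return within 72 hours.
rows = [((float(v),), 1) for v in range(40)] + [((100.0 + v,), 2) for v in range(10)]
training_rows, blind_rows = rows[::2], rows[1::2]
cv, blind = evaluate(train_nearest_mean, training_rows, blind_rows)
```

Scoring each group separately, rather than only the overall accuracy, is what prevents a rule that ignores the small return group from passing the gate.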

By design, the machine learning process strives to identify the smallest set of discriminatory features that offers reliable prediction. In our application, a data stream can be fed automatically into our machine learning framework. In other applications (e.g., using a hand-held device for early diagnostics), it is desirable that the final prediction rule depend on relatively few factors so that entering the information is not a burden on the healthcare staff. Regardless of how the data are input, these discriminatory features affect decisions on hospital policy, and thus should contain only the critical factors.

Incorporating the Feature Selection Algorithm

We developed a heuristic algorithm using particle swarm optimization (PSO) to iteratively search among subsets of factors. PSO, originally developed by Kennedy and Eberhart25,26, is an evolutionary computation technique for solving optimization problems. Below, we describe the DAMIP/PSO machine learning framework.

Let n be the desired number of factors to be selected. Let m be the size of the particle population. Let xi and pi be binary vectors representing sets of chosen factors. Let vi be a real-valued vector representing the velocity of particle i; vi is randomly assigned during initialization.

Associated with each particle is a current set of factors, xi, and a record of the best classification accuracy with its corresponding factor set, pi, reached thus far by this particle. We use a Von Neumann topology with 36 particles (6 × 6 block). Each particle’s neighborhood is defined by its top, bottom, left and right.
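As an illustration of the neighborhood structure, the sketch below indexes the 36 particles row by row on a 6 × 6 grid and returns each particle's top, bottom, left, and right neighbors. The wrap-around at the grid edges (a toroidal grid) is an assumption commonly made for Von Neumann topologies; the text does not state how edge particles are handled, and the function name is hypothetical.

```python
def von_neumann_neighbors(i, rows=6, cols=6):
    """Top, bottom, left, right neighbors of particle i on a wrap-around grid."""
    r, c = divmod(i, cols)
    return [
        ((r - 1) % rows) * cols + c,  # top
        ((r + 1) % rows) * cols + c,  # bottom
        r * cols + (c - 1) % cols,    # left
        r * cols + (c + 1) % cols,    # right
    ]


corner = von_neumann_neighbors(0)     # wraps to the last row and last column
interior = von_neumann_neighbors(14)  # row 2, column 2
```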

At each iteration, the factor set for a particle is updated by the following algorithm:

○ Step 1 Perform DAMIP classifier for cross validation on the training data using the selected set of factors xi;

○ Step 2 If the overall accuracy and the accuracy of each group in the 10-fold cross validation are over a pre-set value (e.g., > 70%), perform blind prediction using this rule on the independent set and output results. Otherwise go to Step 3.

○ Step 3 Update the velocity of the particle: The new velocity vi is obtained from the current velocity, the current factor set, and the best accuracy of this particle and its neighborhood and their corresponding factor set:

where ω, c1 and c2 are fixed positive coefficients, r1 and r2 are randomly generated in the range (0,1), N(i) is the neighborhood of particle i.

○ Step 4 The highest n velocity entries of this new vi form the associated new factor set of this particle.

The algorithm updates the m particles sequentially in each iteration, and terminates when it reaches a pre-determined maximum number of iterations.
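Steps 3 and 4 can be sketched as below. Because the display equation for Step 3 is not reproduced here, the sketch assumes the standard PSO form, v_i ← ω v_i + c1 r1 (p_i − x_i) + c2 r2 (p_g − x_i), where p_g is the best factor set found in the neighborhood N(i). The coefficient values, function names, and example vectors are illustrative assumptions, not the values used in the study.

```python
import random


def update_velocity(v, x, p_own, p_nbr, omega=0.7, c1=1.5, c2=1.5, rng=random):
    """Step 3 (assumed standard PSO form): inertia + pull toward own best + neighborhood best."""
    r1, r2 = rng.random(), rng.random()  # one random scalar each per update
    return [
        omega * vj + c1 * r1 * (pj - xj) + c2 * r2 * (gj - xj)
        for vj, xj, pj, gj in zip(v, x, p_own, p_nbr)
    ]


def select_factors(v, n):
    """Step 4: binary vector marking the n largest velocity entries."""
    top = set(sorted(range(len(v)), key=lambda j: v[j], reverse=True)[:n])
    return [1 if j in top else 0 for j in range(len(v))]


# Illustrative particle with five candidate factors and n = 2.
rng = random.Random(0)
x = [1, 1, 0, 0, 0]      # current factor set
p_own = [1, 0, 1, 0, 0]  # best factor set found by this particle
p_nbr = [0, 0, 1, 1, 0]  # best factor set found in its neighborhood
v_new = update_velocity([0.0] * 5, x, p_own, p_nbr, rng=rng)
x_new = select_factors(v_new, n=2)
```

In this example the particle moves to the two factors favored by its own and its neighbors' best sets, because those entries receive the largest velocity.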

We implemented the DAMIP classifier and PSO feature selection algorithm in C++. In this study, the particle population is 36, and the machine learning process consists of 1000 DAMIP/PSO iterations (= one complete learning cycle). Each cycle requires an average of 1,080 CPU seconds. The experiment is repeated 100 times with randomly selected starting subsets of factors to broaden coverage of the search space and to avoid local optima.

The output of the algorithm is a collection of discriminatory subsets of factors that are good candidates for the prediction of return visits within 72 hours. While users can set the desired number of discriminatory factors, the number of factors reported herein (≤ 10) reflects our experimental findings (see Figure 1).

Figure 1.

The highest prediction accuracy obtained via DAMIP/PSO for the two hospital sites. The solid lines represent the accuracy of cross validation, and the dashed lines represent the blind prediction accuracy. H1: Hospital 1, H2: Hospital 2, CV: 10-fold cross validation, BT: blind prediction.

Results

Due to the diversity of patients in the two hospital sites, we ran the classification model separately for each site. There were 27,534 ED patients at Hospital 1, 996 (3.62%) of whom returned within 72 hours; and there were 39,327 at Hospital 2, 1523 (3.87%) of whom returned within 72 hours. All patients went home after the first ED visit. In our analysis, the training set is 15,000 and 20,000 respectively, and the blind prediction set consists of the rest of the patients.

The patient factors were acquired from the patient records and the ED information system. Table 1 shows the selected patient information for the two sites. We categorized free text factors including method of arrival, patient complaint, physician diagnosis, race, payor code, financial class, and disposition type via natural language processing, and then ranked the categories for each factor based on the corresponding revisit rate.
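The ranking step for categorized factors might look like the sketch below, which computes the revisit rate of each category and replaces the category with its rank. The ascending direction of the ranking, the alphabetical tie-break, the function name, and the sample data are all assumptions made for illustration; the text does not specify them.

```python
from collections import defaultdict


def rank_by_revisit_rate(categories, returned):
    """Map each category to its rank (1 = lowest revisit rate)."""
    totals, revisits = defaultdict(int), defaultdict(int)
    for category, did_return in zip(categories, returned):
        totals[category] += 1
        revisits[category] += int(did_return)
    rate = {c: revisits[c] / totals[c] for c in totals}
    ordered = sorted(rate, key=lambda c: (rate[c], c))  # ties broken alphabetically
    return {c: rank for rank, c in enumerate(ordered, start=1)}


# Made-up complaint categories and 72-hour return flags.
complaints = ["fever", "fever", "fracture", "fracture", "cough", "cough", "cough", "cough"]
returned = [1, 0, 0, 0, 1, 0, 0, 0]
ranks = rank_by_revisit_rate(complaints, returned)
```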

Table 1.

Selected characteristics of patient information

| Characteristic | CHOA at Hospital 1: total number | CHOA at Hospital 1: percent (%) | CHOA at Hospital 1: return in 72 hours (%) | CHOA at Hospital 2: total number | CHOA at Hospital 2: percent (%) | CHOA at Hospital 2: return in 72 hours (%) |
|---|---|---|---|---|---|---|
| Total | 27534 | 100 | 3.62 | 39327 | 100 | 3.87 |
| Day of week | | | | | | |
| Monday | 4036 | 14.66 | 3.20 | 5835 | 14.84 | 3.75 |
| Tuesday | 3910 | 14.20 | 3.12 | 5514 | 14.02 | 3.46 |
| Wednesday | 3782 | 13.74 | 3.64 | 5481 | 13.94 | 3.45 |
| Thursday | 3794 | 13.78 | 3.61 | 5334 | 13.56 | 3.86 |
| Friday | 3683 | 13.38 | 4.13 | 5323 | 13.54 | 4.30 |
| Saturday | 4061 | 14.80 | 4.14 | 5660 | 14.39 | 4.47 |
| Sunday | 4268 | 15.50 | 3.51 | 6180 | 15.71 | 3.82 |
| Time of arrival | | | | | | |
| 20:00–08:00 | 10681 | 38.79 | 3.95 | 16013 | 40.72 | 4.05 |
| 08:00–12:00 | 4110 | 14.93 | 3.58 | 5854 | 14.89 | 3.79 |
| 12:00–16:00 | 5772 | 20.96 | 3.50 | 8139 | 20.70 | 3.70 |
| 16:00–20:00 | 6971 | 25.32 | 3.23 | 9321 | 23.70 | 3.78 |
| Acuity Level | | | | | | |
| 1 | 1333 | 4.84 | 2.32 | 637 | 1.62 | 2.67 |
| 2 | 8514 | 30.92 | 2.65 | 8382 | 21.31 | 3.30 |
| 3 | 12060 | 43.80 | 3.91 | 18781 | 47.76 | 3.89 |
| 4 | 5583 | 20.28 | 4.76 | 11372 | 28.92 | 4.36 |
| 5 | 44 | 0.16 | 4.55 | 155 | 0.39 | 1.29 |

Good candidates for revisit prediction are obtained by filtering the results of the 100 complete learning cycles, keeping those for which the accuracy of cross validation and blind prediction for both groups is over 70%. Based on these filtering criteria, we found 7 sets of discriminatory factors for Hospital 1 and 70 sets of discriminatory factors for Hospital 2 with set size less than 10. The most frequent factors appearing among these discriminatory sets are listed in Table 2. The factors patient chief complaint, patient diagnosis, and provider type appear in the lists for both sites.

Table 2.

Factors most frequently occurring among the 7 sets of discriminatory factors for predicting <72-hour returns at Hospital 1, and those most frequently occurring among the 70 sets of discriminatory factors for predicting <72-hour returns at Hospital 2.

| Hospital 1: Factor Name | Hospital 1: Frequency (%) | Hospital 2: Factor Name | Hospital 2: Frequency (%) |
|---|---|---|---|
| Patient diagnosis | 7 (100%) | Patient diagnosis | 68 (97.14%) |
| Patient chief complaint | 7 (100%) | Patient chief complaint | 68 (97.14%) |
| Training physician: resident or fellow | 4 (57.14%) | Physician extender (i.e., nurse practitioners or others) | 51 (72.86%) |
| If IV antibiotics were ordered | 4 (57.14%) | If the patient received a radiological test | 27 (38.57%) |
| Attending provider ratio (the provider ratio (PR) determines the volume of patients that can be evaluated and treated by the physician providers; see http://www.ncbi.nlm.nih.gov/pubmed/11691670 for reference) | 3 (42.86%) | Expectant patient: a patient on the way to the ED who was called in by a care provider | 21 (30%) |
| Patient has been in ED in last 72 hours | 3 (42.86%) | Time from when the first medical doctor arrived until the attending arrived | 19 (27.14%) |
| Primary nurse involved | 2 (28.57%) | Patient arrived by ambulance | 14 (20%) |
| Time from when the patient got an ED bed until the first medical doctor arrived | 2 (28.57%) | Number of triaged patients at time | 13 (18.57%) |
| Number of nursing resources requested | 2 (28.57%) | | |

Figure 1 depicts the highest accuracy values achieved in DAMIP/PSO cross validation and blind prediction. The classification accuracy increases as the number of factors selected in the classification rule increases, and the highest accuracy was achieved when 4 to 10 factors were used. Figure 1 also shows that performance levels off as the number of factors increases. We include both the cross-validation and the blind prediction results to reflect the consistency of predictive power of the developed classification rules.

Table 3 contrasts DAMIP/PSO results with other classification methods. Uniformly, the other classification methods suffer from group imbalance, and the classifiers tend to place all entities into the Non-return group. In particular, linear discriminant analysis, support vector machine, logistic regression, classification trees, and random forest placed almost all patients (> 99%) into the “Non-return” group, sacrificing the very small percentage of “Return” patients. This table also showcases the importance of reporting the classification accuracy for each group, in addition to the overall accuracy.
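The effect of group imbalance on overall accuracy can be checked with simple arithmetic on the cohort sizes reported above. The sketch below computes the accuracy of a hypothetical rule that labels every patient "Non-return"; the function name is an assumption, and the result is a whole-site baseline rather than a reproduction of any row of Table 3.

```python
def accuracy_if_all_non_return(n_patients, n_returns):
    """Accuracy of a trivial rule that predicts 'Non-return' for everyone."""
    non_returns = n_patients - n_returns
    return {
        "overall": non_returns / n_patients,
        "non_return": 1.0,  # every non-returning patient is labeled correctly
        "return": 0.0,      # every returning patient is missed
    }


# Site totals from the Results section: patients and 72-hour returns.
h1 = accuracy_if_all_non_return(27_534, 996)
h2 = accuracy_if_all_non_return(39_327, 1_523)
h1_overall_pct = round(h1["overall"] * 100, 2)
h2_overall_pct = round(h2["overall"] * 100, 2)
```

An overall accuracy above 96% is therefore attainable without identifying a single returning patient, which is why the per-group columns are the informative ones.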

Table 3.

Comparison of DAMIP/PSO results against other classification methods.

| Classification Method | 10-fold Cross Validation: Overall | 10-fold Cross Validation: Non-return | 10-fold Cross Validation: Return | Blind Prediction: Overall | Blind Prediction: Non-return | Blind Prediction: Return |
|---|---|---|---|---|---|---|
| Hospital 1 (Training Set: 15,000; Blind Prediction Set: 12,534) | | | | | | |
| Linear Discriminant Analysis | 96.3% | 99.6% | 5.5% | 96.1% | 99.6% | 5.3% |
| Naïve Bayesian | 51.6% | 50.3% | 87.0% | 51.7% | 50.2% | 89.2% |
| Support Vector Machine | 96.5% | 100.0% | 0.0% | 96.2% | 100.0% | 0.0% |
| Logistic Regression | 96.5% | 99.8% | 5.9% | 96.3% | 99.8% | 8.3% |
| Classification Tree | 96.6% | 99.9% | 4.4% | 96.3% | 100.0% | 3.0% |
| Random Forest | 96.6% | 100.0% | 1.5% | 96.3% | 100.0% | 1.9% |
| Nearest Shrunken Centroid | 62.7% | 62.9% | 50.0% | 48.7% | 48.2% | 64.7% |
| DAMIP/PSO | 83.1% | 83.9% | 70.1% | 82.2% | 83.1% | 70.5% |
| Hospital 2 (Training Set: 20,000; Blind Prediction Set: 19,327) | | | | | | |
| Linear Discriminant Analysis | 96.2% | 100.0% | 0.1% | 96.0% | 100.0% | 0.3% |
| Naïve Bayesian | 53.4% | 52.2% | 83.9% | 54.4% | 53.2% | 84.2% |
| Support Vector Machine | 96.3% | 100.0% | 0.0% | 96.0% | 100.0% | 0.0% |
| Logistic Regression | 96.3% | 100.0% | 0.0% | 96.1% | 99.9% | 3.3% |
| Classification Tree | 96.2% | 100.0% | 0.0% | 96.0% | 100.0% | 0.0% |
| Random Forest | 96.2% | 100.0% | 0.5% | 96.1% | 100.0% | 0.5% |
| Nearest Shrunken Centroid | 60.5% | 60.6% | 50.1% | 45.8% | 45.1% | 61.2% |
| DAMIP/PSO | 80.1% | 81.1% | 70.1% | 80.5% | 81.5% | 70.0% |

Acuity level is a crucial indicator of ED patient treatment resource and service needs. To better understand the 72-hour readmission characteristics of patients across different acuity levels, we perform DAMIP/PSO classification on patients with acuity level 1, 2, 3, and 4/5 – level 1 being the highest acuity. We combine Levels 4 and 5 patients in this analysis since there are only 44 and 155 Level 5 patients in each hospital respectively. The classification results, reported in Table 4, show higher classification and predictive accuracy for Level 1 and Level 4/5 patients. This may be explained by the fact that these patients have less diagnosis uncertainty than those in Levels 2 and 3.

Table 4.

DAMIP/PSO classification results for patients in each of the acuity levels.

| Acuity | Training set size | 10-fold Cross Validation: Overall | 10-fold Cross Validation: Non-return | 10-fold Cross Validation: Return | Blind Prediction set size | Blind Prediction: Overall | Blind Prediction: Non-return | Blind Prediction: Return |
|---|---|---|---|---|---|---|---|---|
| Hospital 1 | | | | | | | | |
| 1 | 700 | 87.9% | 82.9% | 92.8% | 633 | 85.1% | 85.3% | 76.4% |
| 2 | 5000 | 76.0% | 76.4% | 71.4% | 3514 | 73.2% | 73.6% | 71.6% |
| 3 | 6000 | 80.2% | 81.0% | 70.2% | 6060 | 80.3% | 81.1% | 70.3% |
| 4 and 5 | 3000 | 85.2% | 81.1% | 89.2% | 2627 | 81.0% | 81.0% | 81.3% |
| Hospital 2 | | | | | | | | |
| 1 | 350 | 77.2% | 75.6% | 78.8% | 287 | 76.4% | 76.4% | 75.0% |
| 2 | 4500 | 74.8% | 75.3% | 70.0% | 3882 | 74.2% | 74.7% | 70.5% |
| 3 | 10000 | 77.5% | 78.2% | 70.1% | 8781 | 77.5% | 78.2% | 70.0% |
| 4 and 5 | 6000 | 80.1% | 83.7% | 76.5% | 5527 | 78.3% | 78.4% | 76.2% |

Specifically, patients with Level 1 acuity have the lowest re-admission percentage (Table 1). These patients have the highest acuity, and thus require the most urgent service. The prediction accuracy for these patients can be as high as 88%. Patients at Levels 4 and 5 have the highest re-admission percentage (Table 1). These patients have less pain severity, and are more concerned with quality of service. The most frequent factors in the discriminatory sets at the two hospitals are listed in Tables 5a and 5b. We observe similarities between the two hospital sites in the critical readmission factors for Level 1 patients, and likewise for Level 4/5 patients. For Level 1 patients, among the acquired discriminatory sets with good predictive results, time from patient arrival until he/she got an ED bed, patient complaint, type/number of providers, and whether the patient received radiologic/CT scans are the common and most frequent factors at both sites. For Level 4/5 patients, patient diagnosis, patient complaint, disposition type when the patient arrives and leaves the ED, whether the patient has a lab test, and whether an IV was ordered are among the most critical readmission factors in both hospitals.

Table 5a.

Factors most frequently occurring among the 236 sets of discriminatory factors for predicting <72-hour returns for acuity-level 1 patients at Hospital 1, and those most frequently occurring among the 42 sets of discriminatory factors for predicting <72-hour returns for acuity-level 1 patients at Hospital 2.

| Hospital 1: Factor Name | Hospital 1: Frequency (%) | Hospital 2: Factor Name | Hospital 2: Frequency (%) |
|---|---|---|---|
| Patient diagnosis | 230 (97.46%) | Time when the patient arrived until he/she got a bed | 27 (64.29%) |
| Time when the patient arrived until he/she got a bed | 228 (96.61%) | Number of beds reserved at time | 24 (57.14%) |
| Payor type | 180 (76.27%) | Patient chief complaint | 24 (57.14%) |
| Patient chief complaint | 178 (75.42%) | Physician extender (i.e., nurse practitioners or others) | 22 (52.38%) |
| Number of residents in ED | 129 (54.66%) | Month | 16 (38.1%) |
| Number of medical students in ED | 106 (44.92%) | If the patient received a radiologic test | 12 (28.57%) |
| If the patient received a CT scan for his/her head | 80 (33.9%) | Patient’s weight | 11 (26.19%) |
| If the patient had a rapid strep test | 77 (32.63%) | If the patient received a chest X-ray | 11 (26.19%) |
| Number of waiting patients divided by the number of available beds29 | 66 (27.97%) | | |

Discussion and Conclusion

In this study, we developed a machine-learning framework combining a PSO feature selection algorithm and a DAMIP classifier to predict patients who will return to the ED within 72 hours. We used this model to select sets of discriminatory factors to establish classification rules, and to develop prediction criteria based on these rules that differentiate the revisit patients from the rest of the patients with predictive accuracy over 80%.

The input factor pool included patient information, patient complaint, physician diagnosis, operations and treatment, and hospital real-time utilization records. For Level 1 patients, among the acquired discriminatory sets with good predictive results, time from patient arrival until he/she got an ED bed, patient complaint, type/number of providers, and whether the patient received a radiologic/CT scan are the common and most frequent factors at both sites. For Level 4/5 patients, physician diagnosis, patient complaint, disposition type when the patient arrives and leaves, whether the patient has a lab test, and whether an IV was ordered are among the most common factors across the two hospitals. We also note that some key hospital environment factors (e.g., time from patient arrival until he/she got an ED bed, type/number of providers) appear among the most frequently chosen factors. Besides the common factors, the predictive factors for the two sites differ because of the diversity of the patients and the hospital characteristics. This supports the point made by Joynt et al. that hospital location may affect readmission of patients25.

Our classification model was demonstrated to be consistent when the hospital environment varies, and its objective can be extended from short-term revisit to any class of revisit. The DAMIP/PSO machine learning framework is generalizable for predictive analytics across different hospital sites. It can adapt to different feature input and identify the appropriate set of discriminatory features for consistent prediction.

Among the ED patients, about 3–4% are return patients. Their returns may be related to their first visit experience. Being able to anticipate and predict return patterns may facilitate quality of ED service and quality of patient care and allow ED providers to intervene appropriately. The DAMIP/PSO classifier is able to blind predict with over 80% accuracy, and outperforms other classifiers.

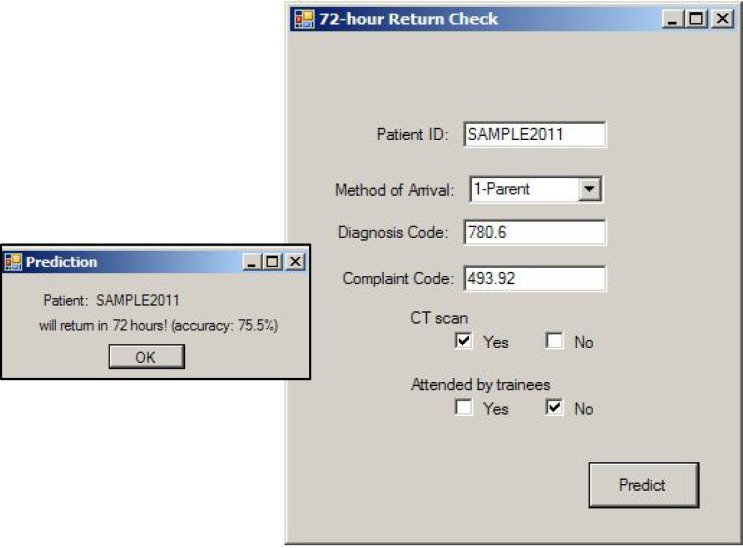

Based on the set of discriminatory factors with high accuracy, we developed a decision support tool for predicting patients returning within 72 hours. When implemented in clinical settings, the tool can potentially acquire data in real-time from the ED database and acquire the current hospital resource status. As the relevant factors for a patient are entered by the ED staff or through automated data-streaming, the system will return readmission prediction status of the patient. Since each discriminatory set of factors corresponds to a delivery or policy change, and requires action from ED staff, we would expect the set of discriminatory factors to be rather small, as discovered in our study.

Figure 2 shows a simple user interface based on a set of factors that predicted return visits with accuracy over 80%. After the required patient data is entered, and the employee clicks the “predict” button, the tool will retrieve the hospital related factors from the hospital database system, and present the revisit prediction result based on the implemented criteria. Such a computerized system allows real-time decision making, and ongoing learning and retraining of the predictive rule (and thus the discriminatory factors) as the ED data evolves over time.

Figure 2.

A sample user interface of a prediction tool for 72-hour return. Key features are typed in or selected.

We caution that these are only preliminary results based on a subset of patients in predicting readmission cases. Currently, we are conducting more detailed analysis where different patient cases will be drawn for training, and consistency among the discriminant features will be analyzed. Although we obtain better predictive accuracy (≥ 85%) when more discriminatory factors are selected, it is important to keep in mind that using too many factors is impractical.

Table 5b.

Factors most frequently occurring among the 246 sets of discriminatory factors for predicting <72-hour returns for acuity-level 4/5 patients at Hospital 1, and those most frequently occurring among the 491 sets of discriminatory factors for predicting <72-hour returns for acuity-level 4/5 patients at Hospital 2.

| Hospital 1: factor name | Hospital 1: frequency, n (%) | Hospital 2: factor name | Hospital 2: frequency, n (%) |
|---|---|---|---|
| Patient diagnosis | 246 (100%) | Patient diagnosis | 491 (100%) |
| Patient chief complaint | 246 (100%) | Patient chief complaint | 487 (99.19%) |
| Disposition type when patient first arrives | 206 (83.74%) | Disposition type when patient leaves | 399 (81.26%) |
| If the patient had a comprehensive metabolic panel | 122 (49.59%) | Called in, patient is on way | 210 (42.77%) |
| Disposition type when patient leaves | 72 (29.27%) | If the patient had any lab tests done | 166 (33.81%) |
| Number of patients in bed waiting to be discharged | 69 (28.05%) | Disposition type when patient first arrives | 163 (33.20%) |
| Time when patient arrived until a first medical doctor arrived on scene | 44 (17.89%) | Month | 145 (29.53%) |
| Arrival method | 43 (17.48%) | Acuity level when the patient leaves | 138 (28.11%) |
| If an IV of ondansetron was ordered | 42 (17.07%) | If an IV of fluids was ordered | 117 (23.83%) |
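Each frequency in the table is the number of discriminatory factor sets that contain a given factor, divided by the total number of sets for that hospital (for example, 206 / 246 = 83.74%). A minimal sketch of that tally follows. The function name `factor_frequencies` and the four toy factor sets are assumptions for illustration and are not the study's data.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple


def factor_frequencies(factor_sets: Iterable[Set[str]]) -> List[Tuple[str, int, float]]:
    """Return (factor, number of sets containing it, percent of sets), most frequent first."""
    sets = [set(s) for s in factor_sets]  # each factor counts once per set
    counts = Counter()
    for s in sets:
        counts.update(s)
    total = len(sets)
    return [(name, n, round(100.0 * n / total, 2)) for name, n in counts.most_common()]


# Toy factor sets for illustration only.
example_sets = [
    {"Patient diagnosis", "Patient chief complaint", "Arrival method"},
    {"Patient diagnosis", "Patient chief complaint"},
    {"Patient diagnosis", "Month"},
    {"Patient diagnosis", "Patient chief complaint", "Month"},
]
frequencies = factor_frequencies(example_sets)
for name, n, pct in frequencies:
    print(f"{name}: {n} ({pct}%)")
```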

Acknowledgments

The authors acknowledge the AMIA reviewers for providing useful comments to improve the paper. This research is partially supported by a grant from the National Science Foundation (0832390), and from the National Institutes of Health.
