Abstract

Time Motion Studies (TMS) have proved to be the gold standard method to measure and quantify clinical workflow, and have been widely used to assess the impact of health information systems implementation. Although there are tools available to conduct TMS, they provide different approaches for multitasking, interruptions, inter-observer reliability assessment and task taxonomy, making results across studies not comparable. We postulate that a significant contributing factor towards the standardization and spread of TMS would be the availability and spread of an accessible, scalable and dynamic tool. We present the development of a comprehensive Time Capture Tool (TimeCaT): a web application developed to support data capture for TMS. Ongoing and continuous development of TimeCaT includes the development and validation of a realistic inter-observer reliability scoring algorithm, the creation of an online clinical tasks ontology, and a novel quantitative workflow comparison method.

Background and Significance

The ability to understand and measure clinical workflow provides critical information for hospital management and clinical research. Time Motion Studies (TMS) have been introduced as a successful technique to gather this information and have been conducted in a broad spectrum of settings, ranging from evaluating the effect of system implementations1 and assessing costs,2 to describing general workflow3 and time usage by clinicians.4 Also known as “time-motion studies” or “time and motion studies,” they are defined in the National Library of Medicine controlled vocabulary thesaurus (MeSH) as “the observation and analysis of movements in a task with emphasis on the amount of time required to perform the task.” TMS is a business efficiency technique that merged the contributions of Frank and Lillian Gilbreth (Motion Studies) with those of Frederick Taylor (Time Studies), and it has been widely adopted as a method to gather quantitative information in clinical workflow studies, specifically through continuous direct observation, work sampling and self-reporting techniques. Of these three approaches, continuous direct observation has proven to be more accurate than work sampling5 and self-reporting,6,7 and is accepted as the gold standard to measure and quantify clinical workflow.8,9

In earlier direct observation TMS, an independent observer shadowed a predefined subject for a period of time, recording detailed workflow information and timing tasks as they occurred, usually with a paper form and a stopwatch.10 Since the adoption of the technique, however, electronic time capture tools have been created to support data capture and have proven to be more efficient and accurate than the earlier paper-based methods.11,12 From early PDA-based tools to full-fledged tablet PCs, a small set of tools has been cited in the TMS literature (Westbrook’s PDA data collection tool13, Mache’s Ultramobile PC instrument14 and Overhage’s Palm program15), but none has been widely adopted. As a result, researchers either continue to use paper and stopwatches16,17,18 or repeatedly develop simple tools intended for one specific study, using software such as TimerPro,19 HanDBase 3,20 Handibase 4,21 Workstudy Plus,22 XNote Stopwatch 1.423 and Microsoft Access,24 among others.

More recently, special attention has been directed towards the evaluation of health information systems implementations and their impact on clinical workflow, costs, efficiency, health care delivery, patient safety and outcomes. TMS have been used to locally assess the impact of such implementations. However, since each of the previously described tools provides a different approach to multitasking, interruptions, inter-observer reliability assessment and task taxonomy, results across these tools are not comparable. This, together with the lack of methodological standardization in TMS, has frustrated efforts to review and aggregate findings across studies,25 and has made study comparisons and conclusion synthesis impossible.26

Objective

We postulate that a significant contributing factor towards the standardization and spread of TMS would be the availability of a comprehensive, accessible, scalable and dynamic time capture tool. In this paper we describe the development of a fully customizable, web-based, freely available, time capture tool (TimeCaT) designed for workflow studies that includes novel methods to assess multitasking, interruptions, inter-observer reliability and taxonomy selection.

Methods

During the second half of 2010, while planning a clinical workflow study in an ambulatory clinic,27 we conducted a systematic review of clinical workflow TMS focused on identifying an existing TMS tool suitable for our study. None met our requirements: a focus on communication workflow (or flexible taxonomy input), multitasking capability, and the ability to run on a tablet device (e.g., iPad). Instead of creating a simple tool aimed at working just for our study, we recognized the need for a flexible tool suitable for clinical TMS and saw a potential contribution to TMS standardization. We therefore decided to develop a comprehensive data capture tool for TMS, first learning from and incorporating knowledge of existing tools, and then improving on them and developing new features. We previously presented the concept of this tool (TimeCaT) at the 2011 AMIA Summit on Clinical Research Informatics.28

We began the design process by identifying and tracking down existing tools, and evaluated their features and limitations. We incorporated this knowledge into the development of TimeCaT. We prototyped an initial minimalistic BETA version and piloted it in an Emergency Medicine Workflow Study. Updates were incorporated through an iterative and incremental user centered design process using Agile29 Methods: constant improvements and new features were implemented in a tight feedback loop of prototype, evaluation, and redesign. User-based usability testing methods (e.g., performance and satisfaction assessments, log-file review) were performed throughout the development process. TimeCaT Beta was also piloted in two TMS studies aimed at evaluating the impact of a new Health Information System in Medication Administration and ICU workflow respectively, which provided feedback and data that assisted in further improvement of the tool. The software developers participated in the projects’ regular meetings, interacting with principal investigators (PIs) and observers to assess usability issues and potential improvements. We focused the redesign efforts on compatibility, ease of administration, usability, interruptions, multitasking, inter-observer reliability and taxonomy selection. The resulting software was created using freely available languages and libraries (HTML5, CSS3, PHP, MySQL, Google Charts API and jQuery).

Results

a). Existing Tools Assessment.

Through our systematic review of clinical workflow TMS using existing electronic means, we were able to identify four tools and their inventors (fully detailed methods and results of the review are being prepared for a separate journal publication). We excluded all simple electronic data collection forms utilized just once for a specific purpose.

J. Marc Overhage, Lisa Pizziferri, Yi Zhang. Widely considered pioneers of TMS in clinical workflow, Overhage et al. published a time motion study in 2001 on the effect of Computerized Physician Order Entry (CPOE) implementation on physicians’ time utilization in ambulatory primary care practices.15 They created a program for a Palm PDA. They developed activity classification categories with which the observers could classify visible physical activities (e.g., talking-phone), and then developed analysis categories that regrouped category-subcategory pairs into categories meaningful to the PI (e.g., direct patient care). The tool recorded the time when observers clicked on a main task category, and observers could then identify a subcategory at their leisure. The user could see a list of the 6 most recently recorded tasks. Tasks were recorded sequentially, and data were synced after each observation to a personal computer for storage and analysis. Pizziferri et al.30 adapted Overhage’s categorization scheme, adding new tasks and categories, and created a Microsoft Access database installed on a touchscreen PC. Tasks were also recorded sequentially, with observers identifying a primary task whenever multitasking occurred. Observers selected a “Now” button at the start of each activity to log the time, and then selected the activity; they could choose and correct the selection while the task was occurring. The activity was not saved until the observer selected “Add Record.” Additionally, Zhang et al.31 adapted Pizziferri’s tool, loading a nursing activities taxonomy and modifying the data capture process to require additional attributes (location, whom the activity served, standing/sitting/walking, admission or discharge, and clinical purpose of activities). They also modified the tool so it could capture communication multitasking (main task and communication simultaneously). Finally, they manually mapped the task list to the Omaha System, an interface terminology designed to describe health care activities through interventions consisting of combined terms [problem + category + target + care description].

Philip Asaro. Asaro presented his Palm computer application for TMS in the emergency department at the 2003 AMIA symposium. Timing began when one of the 8 main category buttons was pressed, allowing some deliberation before picking the subcategory from a dropdown list. The tool allowed simultaneous timing of two activities: the user tapped a multitasking button before selecting the second activity, and stopped each task independently. The tool also captured a patient ID for each task. In 2004 he conducted a pilot study32 implementing a synchronized data capture method: multiple data collectors observed different providers using a synchronized timestamp, allowing reconstruction of the tasks and events in the ED care of individual patients. Then, in 2008, he used the tool to evaluate the impact of CPOE on Nursing Documentation Workflow.33

Johanna Westbrook. In 2007, Westbrook et al. presented at MEDINFO the impact of Information Technologies (IT) on clinicians’ communication, developing and using a new electronic data collection tool.13 They developed a PocketPC application with the intention of capturing a greater level of work complexity than previous paper methods. The interface allowed observers to select among ten broad work task categories, additional participants involved in the task, and the tools/equipment used to perform the task. Each task was automatically time-stamped. An “Add” button allowed the observer to create a new task whenever interruption or multitasking occurred. For multitasking, observers had to select an “End Multi” button when multitasking concluded, while for interruptions, they clicked an “interruption” button and a new tab was created displaying the interrupted task, allowing them to resume tasks placed on hold. An “ignore” button was used to delete data recorded in error. At the end of the observation, the PDA had to be synced with a local database. For inter-observer reliability assessment, they used agreement in the overall percentage of time in tasks (more than 85%). The tool was then used by the same research team for an observational study to quantify how and with whom doctors spend their time.34 In 2008 they published the final results of the pilot study, naming their method WOMBAT (Work Observation Method By Activity Timing), and they repeated the method in a new setting in 2011.35 Simultaneously, they developed a similar PDA-based tool designed specifically for Medication Administration,36,37 presenting a more detailed yet more complex data capture screen. While events were also time-stamped, the focus of this tool was medication administration errors and their association with interruptions.38

Stephanie Mache. In 2008 Mache et al. developed and evaluated a “computer-based medical work assessment program.”14 They generated a list of tasks physicians performed in different settings, and created database-linked, object-oriented software. It was implemented on an Ultra Mobile PC (a small handheld laptop with a pressure-sensitive screen). They assessed inter-observer reliability by a hit/miss approach: a ‘miss’ resulted if the two observers being compared recorded the same task but with a time delay of more than five seconds, or if they recorded a completely different task. They reported the percentage of hits out of the total number of hits and misses during the observation period (>80%). The tool allowed tasks to be recorded either sequentially or in a multitasking mode. In multitasking mode, observers were required to identify a primary and a secondary task, which were then stopped independently. Observers could record interruptions by identifying an interruptive event leading to cessation of the active task. After the observation, data had to be synchronized with a PC, from which reports could be written and printed. By creating and piloting new taxonomies for specific scenarios, the developer team has used this tool repeatedly in German workflow studies of surgeons,39 junior OB/GYNs,3 junior Gastroenterology physicians,40 pediatricians,41 oncology residents,42 anesthesiologists,43 and emergency physicians.44

b). Features incorporated/developed.

Assessing the existing tools gave us insight into common issues such as approaches to multitasking and interruptions which, together with novel features developed incrementally during the pilot studies, were incorporated into the current version of TimeCaT. We describe them below, grouped by functionality.

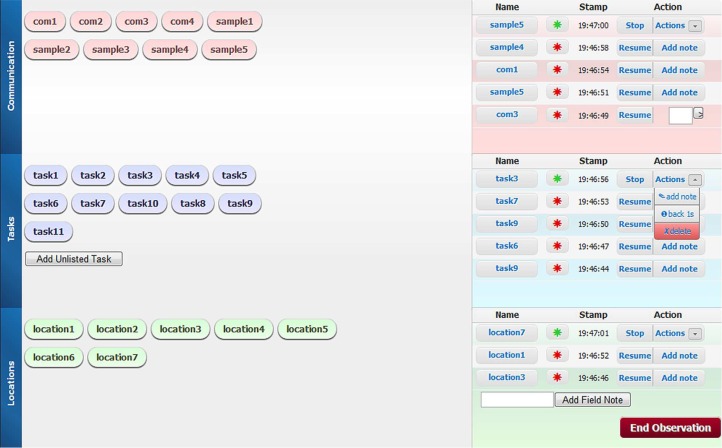

Data Capture: Operation. TMS require observers to move constantly and to maintain their attention on the environment. Thus, we focused our development on mobile devices and touch capable screens. The pilot studies used the iPad to collect data. We studied our observers’ device orientation preference, concluding that they were more comfortable holding the tablet sized devices in landscape orientation, so we optimized the resolution for landscape mode. To allow observers to keep their attention on the subjects, we designed a very simple data input screen adopting the one-click timestamp method: observers click on the task name and the task is automatically stored in the database. Observers can see a list of both active and completed tasks, time stamps, and add notes to any recorded task while conducting the observation (Figure 1). The tool also allows them to record field notes during the observation, upon completion and/or while reviewing their stored observation.

Figure 1:

Screenshot of the data capture module for multitasking studies. Three-dimensional records of communication, task and location (for example: administering a drug while explaining the procedure to the patient while standing in the patient room). On the left, a list of tasks per category is displayed with big touch-friendly buttons. On the right panel, real-time feedback of the latest tasks recorded is displayed, allowing the observer to add notes, rename, stop, resume or delete tasks, and correct time stamps for each task. In training mode and in taxonomy validation mode, an “Add unlisted task” button is available in case the observer cannot classify the task in the existing list.

Data Capture: Multitasking. TMS are usually conducted as simple “linear time” studies, meaning that whenever a new task is recorded, the previous task is automatically stopped.45 This method provides structured, analyzable data, but in some scenarios it might underrepresent the complexity of a real workflow, leading to non-representative results and misleading conclusions. To address this issue, some tools require the observer to invoke a “multi” mode when parallel tasks need to be recorded.33 Other TMS tools handle multitasking by allowing observers to record more than one task simultaneously and requiring them to manually stop running tasks.34 Although an earlier version of TimeCaT allowed observers to record more than one task at a time, we soon realized that the results were chaotic, with data that were impossible to replicate among observers or to compare against other studies. In addition, complex multitasking data capture schemes, such as requiring observers to classify concurrent tasks as Main or Secondary,3 led to large discrepancies when assessing inter-observer reliability. We therefore created a simplified method that allows multitasking scenarios to be recorded while keeping data capture standard and easy to use. After assessing the taxonomies used in most tools, we were able to classify tasks into three mutually exclusive groups: Communications, Tasks, and Locations. Tasks within a group cannot be performed simultaneously, while tasks from different groups can be executed at the same time (talking to a patient, while administering a drug, while standing in the patient’s room). This allowed the creation of a user-friendly data capture screen with 3 orthogonal dimensions to focus on. Whenever a new task is recorded, it stops the previous task from its own group without affecting the other 2 dimensions (Figure 1). We believe this method strikes a good balance between detailed data and accurate observations. Recording additional details, such as the intention of a task, may provide a better understanding during data analysis, but such details are extremely hard for an external observer to collect and produce unreliable data.
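
The stopping rule described above can be sketched in a few lines of Python. This is an illustration only, not TimeCaT's actual implementation; the `Record` and `Observation` classes, the dimension labels, and the use of plain integer seconds are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical labels for the three orthogonal dimensions.
DIMENSIONS = ("communication", "task", "location")


@dataclass
class Record:
    dimension: str
    name: str
    start: int                 # seconds (e.g., a Unix timestamp)
    end: Optional[int] = None  # None while the task is still running


@dataclass
class Observation:
    records: List[Record] = field(default_factory=list)

    def running(self, dimension: str) -> Optional[Record]:
        """Return the open record in a dimension, or None if nothing is running."""
        for rec in reversed(self.records):
            if rec.dimension == dimension and rec.end is None:
                return rec
        return None

    def record(self, dimension: str, name: str, now: int) -> Record:
        """Start a task and stop only the previous task of the same dimension."""
        if dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {dimension}")
        previous = self.running(dimension)
        if previous is not None:
            previous.end = now
        rec = Record(dimension, name, now)
        self.records.append(rec)
        return rec


obs = Observation()
obs.record("location", "patient room", 0)
obs.record("task", "administering drug", 5)
obs.record("communication", "talking to patient", 7)
obs.record("task", "documenting", 30)  # closes "administering drug" only
```

In this sketch, recording "documenting" ends "administering drug" at second 30, while the location and communication records stay open, which mirrors the behavior described for the three dimensions.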

Data Capture: Interruptions. We propose a global approach for interruption studies. As with other tools, TimeCaT allows observers to record the interruption as a task itself,46 or to register whenever a new task is disruptive.47,44 Some tools handled interruptions with a tabs feature, allowing observers to resume disrupted tasks.13 However, our observers often could not tell that a task had been put on hold (rather than completely stopped) until it was resumed. We therefore provided the observers with two methods for recording interruptions: a light bulb system (green=running task, yellow=disrupted task, red=stopped task) and a “resume” button. If observers realize that a task was disrupted, they can click the red light bulb of the stopped task, turning it yellow. If they did not realize that a task was disrupted but detect that a previously stopped task is being resumed, they can click the “resume” button, resuming the task. Using this method, we are able to detect interruptions and identify interrupted tasks being resumed, thereby improving the accuracy of task counts and task durations by not counting resumed events as different tasks.
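
One way to picture the light bulb system is as a small state machine in which a resumed task gains a new time segment instead of becoming a new task. The following Python sketch is hypothetical: the `Light` and `Task` names and the segment representation are assumptions for illustration and are not taken from TimeCaT's code.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Light(Enum):
    GREEN = "running"
    YELLOW = "disrupted"
    RED = "stopped"


@dataclass
class Task:
    name: str
    # Each segment is [start, end]; end is None while the segment is running.
    segments: List[list] = field(default_factory=list)
    light: Light = Light.RED

    def start(self, now: int) -> None:
        self.segments.append([now, None])
        self.light = Light.GREEN

    def stop(self, now: int) -> None:
        self.segments[-1][1] = now
        self.light = Light.RED

    def mark_disrupted(self) -> None:
        """Observer clicks the red bulb of a stopped task, turning it yellow."""
        if self.light is not Light.RED:
            raise ValueError("only a stopped task can be marked as disrupted")
        self.light = Light.YELLOW

    def resume(self, now: int) -> None:
        """Resume a stopped or disrupted task as a new segment of the same task."""
        if self.light is Light.GREEN:
            raise ValueError("task is already running")
        self.segments.append([now, None])
        self.light = Light.GREEN

    @property
    def interruptions(self) -> int:
        return max(len(self.segments) - 1, 0)

    def duration(self) -> int:
        return sum(end - start for start, end in self.segments if end is not None)


charting = Task("charting")
charting.start(0)
charting.stop(40)          # a phone call interrupts charting
charting.mark_disrupted()  # red -> yellow
charting.resume(100)       # yellow -> green, same task
charting.stop(130)
```

Here "charting" is counted once, with one interruption and 70 seconds of total duration, instead of being counted as two separate 40-second and 30-second tasks.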

Data Capture: Clean Data. Data from the pilot studies showed that even with a simple data capture interface, errors still occurred: clicking a task twice, hitting the wrong button, or recording a task late as observers’ attention decreased and their response time increased. Notes like “delete this task”, “this task happened 5 seconds ago” or “this is actually task B, not A” were among the most common. Therefore, we adopted a “delete” button13 and created a “−1 second” button, allowing users to delete records or correct timestamps in real time, respectively. We also included the ability to rename recorded tasks, allowing users to correct errors in task identification without losing the time stamp by simply clicking the task name and re-selecting the correct one from a drop-down list (Figure 1). We perceived that assessing inter-observer reliability prior to and during real data capture might not be enough to ensure data quality, so we added a data quality report at the end of each observation. Observers can thus report whenever they feel the data captured were not representative, or that an event complicated accurate data collection, by rating their observation on a scale from 1 to 5. This permits later analysis of the data by self-report score in case outlier observations are detected.
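
The three corrections map naturally onto small operations over the list of recorded tasks. The sketch below is a hypothetical Python illustration: the dictionary layout, the function names, and the assumption that each “−1 second” click moves a start time one second earlier are ours and are not documented TimeCaT behavior.

```python
def delete_record(records, index):
    """Drop a record captured in error (e.g., an accidental double click)."""
    del records[index]


def shift_start(record, seconds=-1):
    """Shift a start timestamp; the default moves it one second earlier."""
    record["start"] += seconds


def rename_record(record, new_name, taxonomy):
    """Re-select the task name from the taxonomy while keeping the timestamp."""
    if new_name not in taxonomy:
        raise ValueError(f"{new_name!r} is not in the taxonomy")
    record["name"] = new_name


taxonomy = {"Task A", "Task B", "Task C"}
records = [
    {"name": "Task A", "start": 1329497834},
    {"name": "Task A", "start": 1329497835},  # accidental double click
    {"name": "Task C", "start": 1329497900},  # clicked 5 seconds late
]

delete_record(records, 1)                      # "delete this task"
for _ in range(5):                             # "this task happened 5 seconds ago"
    shift_start(records[1])
rename_record(records[0], "Task B", taxonomy)  # "this is actually task B, not A"
```

Because renaming changes only the name, the original start time of the first record is preserved, which is the property the rename feature is meant to guarantee.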

Data Analysis: Time Calculations. Storing time in a human-readable format (hh:mm:ss) is useful for display purposes but not for calculations. Authors sometimes require decimal conversion for analysis,48 and need to store date data separately. We adopted the Unix timestamp, a standard method of tracking time as a running total of seconds elapsed since the Unix epoch [00:00:00 UTC on 1 January 1970]. This is very useful for computer systems that need to track and sort date information in applications both online and on the client side. The stored timestamp can be translated to a full ISO 8601 international date format, or to the user’s local time zone. For example, 1329497834 can be translated to 2012-02-17T16:57:14Z in ISO 8601, or to a user-friendly representation in a local time zone (Friday, 17th February 2012 11:57am GMT-5). Thus, TimeCaT can present a human-readable format for display while also supplying a computer-friendly format for analysis. Another benefit of using a centralized synced time49 format is the ability to combine these data with other complementary data sources that also use synced time (Electronic Health Records, database time stamps, etc.), or to conduct multi-observer studies.32
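
The conversion in the example above can be reproduced with Python's standard library. This is only a sketch of the idea; the fixed GMT-5 offset and the second timestamp used for the duration are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone

ts = 1329497834  # timestamp from the example in the text

# Machine-friendly value -> ISO 8601 in UTC.
utc = datetime.fromtimestamp(ts, tz=timezone.utc)
iso_utc = utc.strftime("%Y-%m-%dT%H:%M:%SZ")

# Same instant shown in a local zone (a fixed GMT-5 offset is assumed here).
local = utc.astimezone(timezone(timedelta(hours=-5)))
iso_local = local.isoformat()
weekday = local.strftime("%A")

# Durations reduce to integer subtraction, with no hh:mm:ss parsing.
task_end = ts + 66  # hypothetical end of the task
duration_seconds = task_end - ts

print(iso_utc, iso_local, weekday, duration_seconds)
```

Storing the integer and formatting only at display time keeps sorting, subtraction and joins against other timestamped sources simple.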

Data Analysis: Real-Time Reports. TimeCaT provides role-based access control that permits super-users to configure the tool, capture data, access the dashboard with real-time analytics, and create new observers for the study, while observers may only capture data and evaluate their own observations. Several tools (mostly PDA-based) require data to be exported/synced to a local machine for analysis, constantly creating updated copies of the database and relying on asynchronous statistics of the observers’ performance. TimeCaT addresses these issues by providing online reports that include basic descriptive statistics to help the PI make administrative decisions. Reports query the database in real time to calculate the total number of observations and total time observed, to stratify data by observer, location, hour of the day, day of the week, date and self-report score, and to provide descriptive statistics of the data (Figure 2).
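
As a rough illustration of the stratified totals such a report computes, the following Python sketch groups a few made-up observations by observer and by day of the week. The record layout, observer labels and the `summarize` helper are hypothetical; TimeCaT itself queries its database directly.

```python
from collections import defaultdict
from datetime import datetime, timezone

# Made-up observations with Unix start/end timestamps.
observations = [
    {"observer": "obs1", "start": 1329497834, "end": 1329501434},  # 3600 s
    {"observer": "obs2", "start": 1329497834, "end": 1329499634},  # 1800 s
    {"observer": "obs1", "start": 1329584234, "end": 1329586034},  # 1800 s, next day
]


def summarize(observations, key):
    """Count observations and total observed seconds per stratum."""
    totals = defaultdict(lambda: {"count": 0, "seconds": 0})
    for obs in observations:
        bucket = totals[key(obs)]
        bucket["count"] += 1
        bucket["seconds"] += obs["end"] - obs["start"]
    return dict(totals)


def weekday_utc(obs):
    """Day of the week of the observation start, in UTC."""
    return datetime.fromtimestamp(obs["start"], tz=timezone.utc).strftime("%A")


by_observer = summarize(observations, key=lambda obs: obs["observer"])
by_weekday = summarize(observations, key=weekday_utc)
total_seconds = sum(row["seconds"] for row in by_observer.values())
```

The same helper can stratify by any other attribute (location, hour of the day, self-report score) by passing a different `key` function.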

Figure 2:

Dashboard and Real Time Reports screenshots.

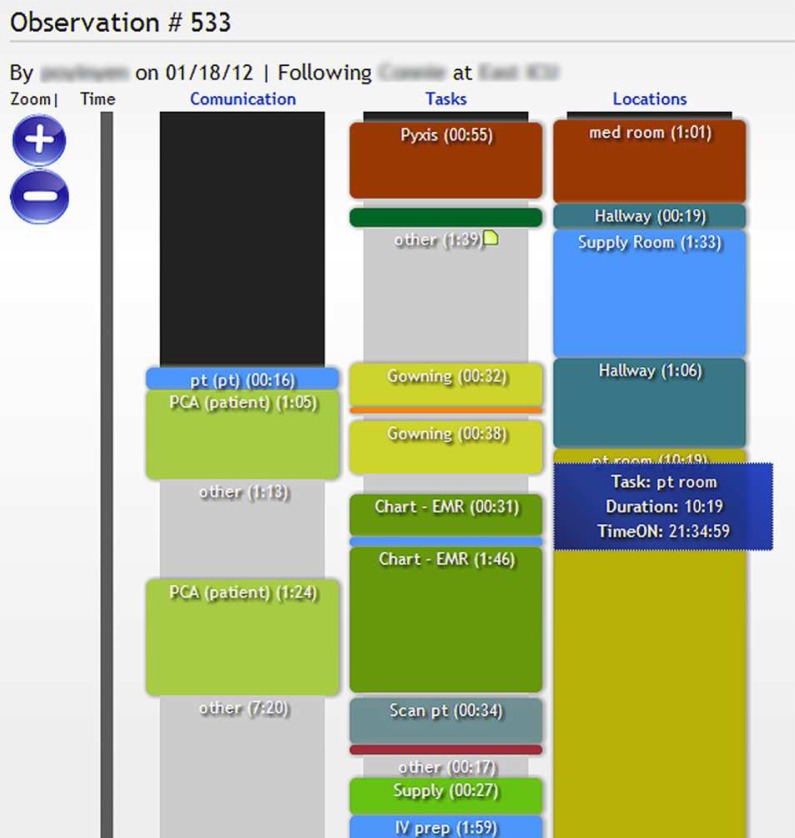

Data Analysis: Visualization. Information visualization is complementary to data mining for hypothesis generation in large data sets50. By transforming large quantities of numeric and textual data into graphics, this technique enables a researcher to easily discover patterns in the data. Based on the structured multitasking feature previously described, TimeCaT includes a workflow visualization package, transforming tables of observations into colored columns, representing task names (colors) and duration (length). The researcher then is able to visualize, side by side, three columns that represent each dimension (communication, tasks and locations) and describe precisely the process recorded by the observer (Figure 3).

Figure 3:

Workflow visualization in multitasking mode. Each column represents one dimension of the data collected (communication, tasks and locations), displaying colored blocks representing tasks from top to bottom in chronological order. The vertical length of the block is proportional to the duration of the task. Variable zoom is possible for shorter/longer tasks. Mouseover provides detailed info about each task, and notes if available.

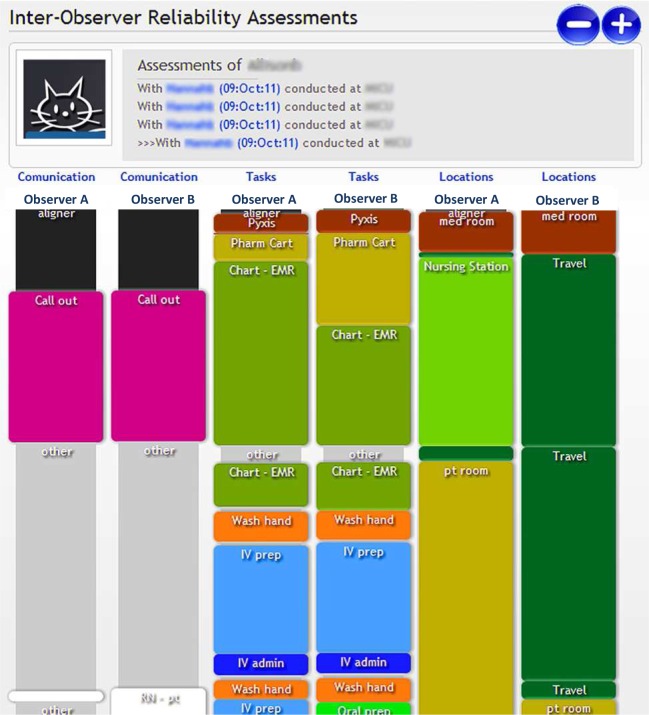

Standardization Efforts: Inter-observer Reliability. Assessing inter-observer reliability has not yet been standardized for TMS, and is not a common practice. Of the studies that describe some level of inter-observer reliability assessment, some provide a percentage of “hit vs miss”,14 or an overall percentage of time in tasks,13 while others provide a Kappa coefficient of inter-rater agreement.51 The use of categorical data agreement measures52 for TMS data, however, does not take into account the granularity and multidimensional nature of the information (e.g., task name, start time, end time, number of tasks), and therefore yields a simplified and non-representative agreement percentage.
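
To make the contrast between measures concrete, the Python sketch below computes two of them on the same pair of made-up observations. Both functions are our own simplified readings, not the published algorithms: `share_agreement` interprets "overall percentage of time in tasks" as the overlap between the two observers' time distributions, and `hit_rate` pairs records in order and applies the five-second rule described for Mache's tool.

```python
from collections import defaultdict


def time_shares(records):
    """Map each task name to its fraction of the total observed time."""
    totals = defaultdict(float)
    for name, start, end in records:
        totals[name] += end - start
    grand_total = sum(totals.values())
    return {name: seconds / grand_total for name, seconds in totals.items()}


def share_agreement(a, b):
    """Overlap of the two observers' proportions of time per task (0 to 1)."""
    shares_a, shares_b = time_shares(a), time_shares(b)
    names = set(shares_a) | set(shares_b)
    return sum(min(shares_a.get(n, 0.0), shares_b.get(n, 0.0)) for n in names)


def hit_rate(a, b, tolerance=5):
    """Fraction of paired records with the same name and start within tolerance."""
    hits = sum(
        1
        for (name_a, start_a, _), (name_b, start_b, _) in zip(a, b)
        if name_a == name_b and abs(start_a - start_b) <= tolerance
    )
    return hits / max(len(a), len(b))


# (task name, start second, end second) for two observers shadowing one subject.
observer_a = [("talk", 0, 60), ("chart", 60, 120), ("walk", 120, 180), ("talk", 180, 240)]
observer_b = [("talk", 0, 50), ("chart", 50, 130), ("walk", 130, 180), ("talk", 180, 240)]

print(round(share_agreement(observer_a, observer_b), 3))  # about 0.917
print(hit_rate(observer_a, observer_b))                   # 0.5
```

In this toy case the same two observations score roughly 92% on one measure and 50% on the other, which illustrates why results assessed with different measures are hard to compare.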

As a reliable and accurate inter-observer reliability scoring system has not yet been developed, we provide a visual analysis package to qualitatively assess it. Similar to the clinical workflow analysis tool (CWAt)53 and the sample report of Mache’s tool,14 we developed a side by side comparison of a graphical representation of the workflow captured by two observers following the same process (Figure 4).

Figure 4:

Inter-observer Reliability Assessment of a training session. This visualization mode enables synchronization of 2 observations, side by side, performed at the same time and observing the same process, allowing the comparison of how tasks were named and timed. Each pair of columns represents one of the three dimensions of data captured by each of the 2 observers.

Standardization Efforts: Taxonomy. One of the cornerstones of standardizing TMS is taxonomy selection. So far, researchers have developed and internally validated new taxonomies for each study. This has led to different definitions for the same type of task, and to categorization into nonstandard categories, making workflow comparison virtually impossible. Zhang et al.31 addressed this issue by mapping their taxonomy to an existing interface terminology in nursing TMS. TimeCaT partially addresses this issue by allowing researchers to upload the taxonomy they used in their study to our online repository, and to share it with other researchers repeating a similar study, facilitating comparison of results among the studies.

To validate the taxonomy within a study, we provide the observers with an “Add unlisted task” button during the training sessions. Thus, whenever observers find themselves unable to classify a process, they can create a temporary task name and use it. However, the observation is not considered validated until the PI has assessed that session’s data, resulting either in the reclassification of the event under an existing task name or in the addition of a new task.

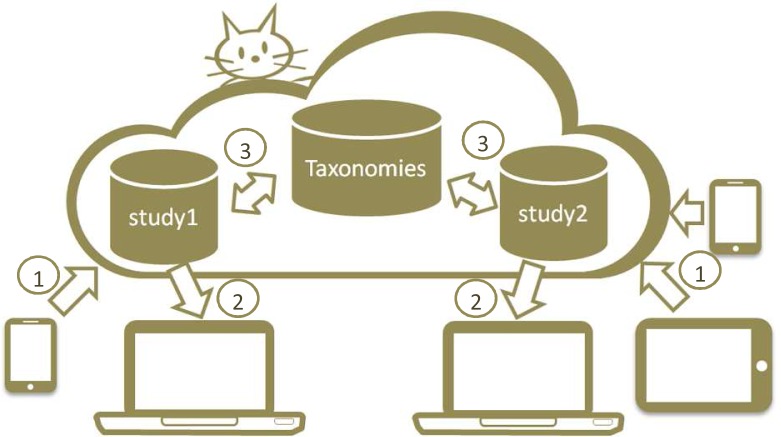

Others: Compatibility. Available electronic tools are typically designed for a specific operating system (Palm OS®, Windows Mobile®), specific software (Microsoft Access®, Microsoft Excel®) or specific hardware (Tablet PCs). To provide a more flexible and scalable solution, we developed TimeCaT as a web application that can run on any Internet-capable device. Observers can capture data from mobile devices and store it on the online server, while the PI can follow the progress from a workstation in real time (Figure 5). The application was designed for most major desktop and mobile web browsers, with the exception of Internet Explorer, because of its weak support of HTML5.

Figure 5:

TimeCaT global architecture. (1) Data Capture, (2) Data analysis, (3) Taxonomy Normalization.

Others: TMS Network. Researchers using TimeCaT have access to a private network where they can interact with other researchers using the tool in order to exchange experiences, provide feedback, submit requests to the developers, report bugs, and to maintain an archive of TMS around the world. Users are encouraged to complete a form based on the STAMP checklist:54 a set of 29 data elements suggested by Zheng et al. aimed at improving the consistency of the TMS methodology and results reporting.

Discussion

Interestingly, we found that most tools used for more than one TMS were used only by the team who created them and were not adopted by others. Availability did not seem to be the issue, since most of the tools are available on request from the authors, and some are even available from the Agency for Healthcare Research and Quality website.55 These tools are reported to be flexible regarding task selection. So one may ask: what is impairing the widespread adoption of the tools? Since there continues to be considerable variability in the methods used to conduct TMS, we postulate that the available tools are in fact not flexible enough to allow researchers to investigate their hypotheses, or are not easy for non-informaticians to use and adopt. We addressed both ease of use and flexibility in TimeCaT in order to facilitate adoption, while proposing standard methods for data capture.

Although our initial tool concept took into account multitasking and interruptions, the first BETA version of TimeCaT was developed for a study focusing on milestones of the patient care process in the emergency department (admission, first encounter, turn-around time, discharge, etc.). Hence, we decided to develop a sequential time capture tool for that phase, and focused on validating basic data capture features. When multitasking was later incorporated into the tool, resulting in the development of the novel approach based on 3 orthogonal dimensions (communications, tasks, and locations), we decided to keep the sequential tasks mode available for future simple studies such as our first pilot, aiming to reach more researchers and satisfy different use case scenarios.

Some limitations of the tool include the requirement of an Internet connection and low accuracy for very short tasks (1–2 seconds). Unstable Wi-Fi connections have also been identified as a potential issue. Accuracy in timing short tasks might be impaired by high network latency, unreliable network connections, or poor server response times during busy server hours. Also, Unix Time does not take into account leap seconds56. This might affect studies recording data on June 30 or December 31 whenever a leap second is declared by the International Earth Rotation and Reference Systems Service.57 However, we believe that one-second differences in results are unlikely to have clinical significance in most clinical workflow scenarios. To address these limitations, we are preparing the next upgrade of the software, which will include offline support, enabling users to collect data faster, without relying on an Internet connection, and to sync to the server passively whenever the device obtains access to the network.
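
The size of the leap second effect can be seen with the leap second inserted at the end of 30 June 2012. The short Python sketch below uses only the standard library; the variable names are illustrative.

```python
import calendar

# Unix timestamps on either side of the leap second inserted at
# 2012-06-30 23:59:60 UTC.
before = calendar.timegm((2012, 6, 30, 23, 59, 59))
after = calendar.timegm((2012, 7, 1, 0, 0, 0))

unix_elapsed = after - before    # what a Unix-time subtraction reports
actual_elapsed = unix_elapsed + 1  # the inserted second is not counted

print(before, after, unix_elapsed, actual_elapsed)
```

A task spanning that instant would therefore be recorded as one second shorter than it really was, which is the worst-case discrepancy discussed above.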

Conclusion

We introduce our Time Capture Tool (TimeCaT): a comprehensive, flexible and user-centered web application developed to support data capture for TMS. This tool is aimed at the widespread adoption and creation of a collaborative network of TMS researchers, willing to contribute to the further development and standard formulations regarding multitasking, inter-observer reliability assessment and taxonomy selection. The end goal of the project is to create standardized TMS methods and thus produce studies from which we might aggregate results and facilitate knowledge discovery. Ongoing and continuous development of TimeCaT towards the definitive Time Capture Tool includes the development and validation of a realistic Inter-observer reliability scoring algorithm, the creation of an online clinical tasks ontology, and a quantitative workflow comparison method.

TimeCaT is accessible at http://www.timecat.org and available at no cost to non-profit researchers.

Acknowledgments

We recognize the important contribution of the observers of the 3 mentioned studies who gave valuable input to the development and perfection of TimeCaT (Araya, Arenas, Bey, Bulgrin, Chipps, Izquierdo, Jiang, Kuschel, Lara, Li, Marunowski, Patel, Quiroga, Raue, Salas, Scheel, Schwarts, Varas, Villaroel, Vivent, Zadvinskis). Continuous input was received from the Clinical Research Informatics Group (Department of Biomedical Informatics, The Ohio State University), and the “Unidad de Informatica en Biomedicina y Salud UC” (Escuela de Medicina, Pontificia Universidad Catolica de Chile). This project was funded by the Department of Biomedical Informatics at The Ohio State University.

References

- 1. Amusan AA, Tongen S, Speedie SM, Mellin A. A time-motion study to evaluate the impact of EMR and CPOE implementation on physician efficiency. Journal of healthcare information management : JHIM. 2008;22(4):31–7.

- 2. Schiller B, Doss S, De Cock E, Del Aguila MA, Nissenson AR. Costs of managing anemia with erythropoiesis-stimulating agents during hemodialysis: a time and motion study. Hemodialysis international. International Symposium on Home Hemodialysis. 2008;12(4):441–9. doi: 10.1111/j.1542-4758.2008.00308.x.

- 3. Kloss L, Musial-Bright L, Klapp BF, Groneberg DA, Mache S. Observation and analysis of junior OB/GYNs’ workflow in German hospitals. Archives of gynecology and obstetrics. 2010;281(5):871–8. doi: 10.1007/s00404-009-1194-x.

- 4. Kim CS, Lovejoy W, Paulsen M, Chang R, Flanders SA. Hospitalist time usage and cyclicality: opportunities to improve efficiency. Journal of hospital medicine : an official publication of the Society of Hospital Medicine. 5(6):329–34. doi: 10.1002/jhm.613.

- 5. Wirth P, Kahn L, Perkoff GT. Comparability of two methods of time and motion study used in a clinical setting: work sampling and continuous observation. Medical care. 1977;15(11):953–60. doi: 10.1097/00005650-197711000-00009.

- 6. Gordon BD, Flottemesch TJ, Asplin BR. Accuracy of staff-initiated emergency department tracking system timestamps in identifying actual event times. Annals of emergency medicine. 2008;52(5):504–11. doi: 10.1016/j.annemergmed.2007.11.036.

- 7. Ampt A, Westbrook J, Creswick N, Mallock N. A comparison of self-reported and observational work sampling techniques for measuring time in nursing tasks. Journal of health services research & policy. 2007;12(1):18–24. doi: 10.1258/135581907779497576.

- 8. Burke TA, McKee JR, Wilson HC, et al. A comparison of time-and-motion and self-reporting methods of work measurement. The Journal of nursing administration. 2000;30(3):118–25. doi: 10.1097/00005110-200003000-00003.

- 9. Bratt JH, Foreit J, Chen PL, et al. A comparison of four approaches for measuring clinician time use. Health policy and planning. 1999;14(4):374–81. doi: 10.1093/heapol/14.4.374.

- 10. Weigl M, Müller A, Zupanc A, Angerer P. Participant observation of time allocation, direct patient contact and simultaneous activities in hospital physicians. BMC health services research. 2009;9:110. doi: 10.1186/1472-6963-9-110.

- 11. Tapp J, Ticha R, Kryzer E, et al. Comparing observational software with paper and pencil for time-sampled data: a field test of Interval Manager (INTMAN). Behavior research methods. 2006;38(1):165–9. doi: 10.3758/bf03192763.

- 12. Rivera ML, Donnelly J, Parry BA, et al. Prospective, randomized evaluation of a personal digital assistant-based research tool in the emergency department. BMC medical informatics and decision making. 2008;8:3. doi: 10.1186/1472-6947-8-3.

- 13. Westbrook JI, Ampt A, Williamson M, Nguyen K, Kearney L. Methods for measuring the impact of health information technologies on clinicians’ patterns of work and communication. Studies in health technology and informatics. 2007;129(Pt 2):1083–7.

- 14. Mache S, Scutaru C, Vitzthum K, et al. Development and evaluation of a computer-based medical work assessment programme. Journal of occupational medicine and toxicology (London, England). 2008;3:35. doi: 10.1186/1745-6673-3-35.

- 15. Overhage JM, Perkins S, Tierney WM, McDonald CJ. Controlled trial of direct physician order entry: effects on physicians’ time utilization in ambulatory primary care internal medicine practices. Journal of the American Medical Informatics Association : JAMIA. 2001;8(4):361–71. doi: 10.1136/jamia.2001.0080361.

- 16. Kuzmiak CM, Cole E, Zeng D, et al. Comparison of image acquisition and radiologist interpretation times in a diagnostic mammography center. Academic radiology. 2010;17(9):1168–74. doi: 10.1016/j.acra.2010.04.018.

- 17. Biron AD, Lavoie-Tremblay M, Loiselle CG. Characteristics of work interruptions during medication administration. Journal of nursing scholarship : an official publication of Sigma Theta Tau International Honor Society of Nursing / Sigma Theta Tau. 2009;41(4):330–6. doi: 10.1111/j.1547-5069.2009.01300.x.

- 18. Cady R, Finkelstein S, Lindgren B, et al. Exploring the translational impact of a home telemonitoring intervention using time-motion study. Telemedicine journal and e-health : the official journal of the American Telemedicine Association. 2010;16(5):576–84. doi: 10.1089/tmj.2009.0148.

- 19. Chu ES, Hakkarinen D, Evig C, et al. Underutilized time for health education of hospitalized patients. Journal of hospital medicine : an official publication of the Society of Hospital Medicine. 2008;3(3):238–46. doi: 10.1002/jhm.295.

- 20. Were MC, Sutherland JM, Bwana M, et al. Patterns of care in two HIV continuity clinics in Uganda, Africa: a time-motion study. AIDS care. 2008;20(6):677–82. doi: 10.1080/09540120701687067.

- 21. Victores A, Roberts J, Sturm-O’Brien A, et al. Otolaryngology resident workflow: a time-motion and efficiency study. Otolaryngology--head and neck surgery : official journal of American Academy of Otolaryngology-Head and Neck Surgery. 2011;144(5):708–13. doi: 10.1177/0194599810396789.

- 22. Tipping MD, Forth VE, O’Leary KJ, et al. Where did the day go?--a time-motion study of hospitalists. Journal of hospital medicine : an official publication of the Society of Hospital Medicine. 5(6):323–8. doi: 10.1002/jhm.790.

- 23. Hartmann B, Rill LN, Arreola M. Workflow efficiency comparison of a new CR system with traditional CR and DR systems in an orthopedic setting. Journal of digital imaging : the official journal of the Society for Computer Applications in Radiology. 2010;23(6):666–73. doi: 10.1007/s10278-009-9213-9.

- 24. Keohane CA, Bane AD, Featherstone E, et al. Quantifying nursing workflow in medication administration. The Journal of nursing administration. 2008;38(1):19–26. doi: 10.1097/01.NNA.0000295628.87968.bc.

- 25. Tipping MD, Forth VE, Magill DB, Englert K, Williams MV. Systematic review of time studies evaluating physicians in the hospital setting. Journal of hospital medicine : an official publication of the Society of Hospital Medicine. 5(6):353–9. doi: 10.1002/jhm.647.

- 26. Zheng K, Haftel HM, Hirschl RB, O’Reilly M, Hanauer DA. Quantifying the impact of health IT implementations on clinical workflow: a new methodological perspective. Journal of the American Medical Informatics Association : JAMIA. 2010;17(4):454–61. doi: 10.1136/jamia.2010.004440.

- 27. Lopetegui M, Pressler T, Payne P. Method Design to Evaluate the Effect of EMR-Based Communication Tools and Model Healthcare Provider Patterns [Abstract]. AMIA Annual Symposium Proc. 2010.

- 28. Lopetegui MA, Pressler T, Payne PRO. Time CaT: Development of A Novel Application to Support Data Capture for Time and Motion Studies [Abstract]. AMIA Clinical Research Informatics Summit Proc. San Francisco, CA: 2011.

- 29. Beck K. Manifesto for Agile Software Development. Agile Alliance. 2001. Available at: http://agilemanifesto.org/

- 30. Pizziferri L, Kittler AF, Volk LA, et al. Primary care physician time utilization before and after implementation of an electronic health record: a time-motion study. Journal of biomedical informatics. 2005;38(3):176–88. doi: 10.1016/j.jbi.2004.11.009.

- 31. Zhang Y, Monsen KA, Adam TJ, et al. Systematic refinement of a health information technology time and motion workflow instrument for inpatient nursing care using a standardized interface terminology. AMIA ... Annual Symposium proceedings / AMIA Symposium. AMIA Symposium. 2011;2011:1621–9.

- 32. Asaro PV. Synchronized time-motion study in the emergency department using a handheld computer application. Studies in health technology and informatics. 2004;107(Pt 1):701–5.

- 33. Asaro PV, Boxerman SB. Effects of computerized provider order entry and nursing documentation on workflow. Academic emergency medicine : official journal of the Society for Academic Emergency Medicine. 2008;15(10):908–15. doi: 10.1111/j.1553-2712.2008.00235.x.

- 34. Westbrook JI, Ampt A, Kearney L, Rob MI. All in a day’s work: an observational study to quantify how and with whom doctors on hospital wards spend their time. The Medical journal of Australia. 2008;188(9):506–9. doi: 10.5694/j.1326-5377.2008.tb01762.x.

- 35. Ballermann MA, Shaw NT, Mayes DC, Gibney RTN, Westbrook JI. Validation of the Work Observation Method By Activity Timing (WOMBAT) method of conducting time-motion observations in critical care settings: an observational study. BMC medical informatics and decision making. 2011;11:32. doi: 10.1186/1472-6947-11-32.

- 36. Westbrook JI, Braithwaite J, Georgiou A, et al. Multimethod evaluation of information and communication technologies in health in the context of wicked problems and sociotechnical theory. Journal of the American Medical Informatics Association : JAMIA. 2007;14(6):746–55. doi: 10.1197/jamia.M2462.

- 37. Westbrook JI, Woods A. Development and testing of an observational method for detecting medication administration errors using information technology. Studies in health technology and informatics. 2009;146:429–33.

- 38. Westbrook JI, Woods A, Rob MI, Dunsmuir WTM, Day RO. Association of interruptions with an increased risk and severity of medication administration errors. Archives of internal medicine. 2010;170(8):683–90. doi: 10.1001/archinternmed.2010.65.

- 39. Mache S, Kelm R, Bauer H, et al. General and visceral surgery practice in German hospitals: a real-time work analysis on surgeons’ work flow. Langenbeck’s archives of surgery / Deutsche Gesellschaft für Chirurgie. 2010;395(1):81–7. doi: 10.1007/s00423-009-0541-5.

- 40. Mache S, Bernburg M, Scutaru C, et al. An observational real-time study to analyze junior physicians’ working hours in the field of gastroenterology. Zeitschrift für Gastroenterologie. 2009;47(9):814–8. doi: 10.1055/s-0028-1109175.

- 41. Mache S, Vitzthum K, Kusma B, et al. Pediatricians’ working conditions in German hospitals: a real-time task analysis. European journal of pediatrics. 2010;169(5):551–5. doi: 10.1007/s00431-009-1065-2.

- 42. Mache S, Schöffel N, Kusma B, et al. Cancer care and residents’ working hours in oncology and hematology departments: an observational real-time study in German hospitals. Japanese journal of clinical oncology. 2011;41(1):81–6. doi: 10.1093/jjco/hyq152.

- 43. Hauschild I, Vitzthum K, Klapp BF, Groneberg DA, Mache S. Time and motion study of anesthesiologists’ workflow in German hospitals. Wiener medizinische Wochenschrift (1946). 2011;161(17–18):433–40. doi: 10.1007/s10354-011-0028-1.

- 44. Mache S, Vitzthum K, Klapp BF, Groneberg DA. Doctors’ working conditions in emergency care units in Germany: a real-time assessment. Emergency medicine journal : EMJ. 2011. doi: 10.1136/emermed-2011-200599.

- 45. Cornell P, Herrin-Griffith D, Keim C, et al. Transforming nursing workflow, part 1: the chaotic nature of nurse activities. The Journal of nursing administration. 2010;40(9):366–73. doi: 10.1097/NNA.0b013e3181ee4261.

- 46. Chisholm CD, Weaver CS, Whenmouth L, Giles B. A task analysis of emergency physician activities in academic and community settings. Annals of emergency medicine. 2011;58(2):117–22. doi: 10.1016/j.annemergmed.2010.11.026.

- 47. Edwards A, Fitzpatrick L-A, Augustine S, et al. Synchronous communication facilitates interruptive workflow for attending physicians and nurses in clinical settings. International journal of medical informatics. 2009;78(9):629–37. doi: 10.1016/j.ijmedinf.2009.04.006.

- 48. Abbey M, Chaboyer W, Mitchell M. Understanding the work of intensive care nurses: A time and motion study. Australian critical care : official journal of the Confederation of Australian Critical Care Nurses. 2011. doi: 10.1016/j.aucc.2011.08.002.

- 49. Haygood TM, Wang J, Atkinson EN, et al. Timed efficiency of interpretation of digital and film-screen screening mammograms. AJR. American journal of roentgenology. 2009;192(1):216–20. doi: 10.2214/AJR.07.3608.

- 50. Shneiderman B. Inventing Discovery Tools: Combining Information Visualization with Data Mining. In: Jantke K, Shinohara A, editors. Vol. 2226. Springer Berlin / Heidelberg; 2001. pp. 17–28.

- 51. Weigl M, Müller A, Zupanc A, Angerer P. Participant observation of time allocation, direct patient contact and simultaneous activities in hospital physicians. BMC health services research. 2009;9:110. doi: 10.1186/1472-6963-9-110.

- 52. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159–74.

- 53. Hanauer DA, Zheng K. Detecting workflow changes after a CPOE implementation: a sequential pattern analysis approach. AMIA Annual Symposium proceedings / AMIA Symposium. AMIA Symposium. 2008. p. 963.

- 54. Zheng K, Guo MH, Hanauer DA. Using the time and motion method to study clinical work processes and workflow: methodological inconsistencies and a call for standardized research. Medical Informatics. 2011. doi: 10.1136/amiajnl-2011-000083.

- 55. Anon. Agency for Healthcare Research and Quality. Available at: http://healthit.ahrq.gov/portal/server.pt/community/health_it_tools_and_resources/919/time_and_motion_studies_database/27878.

- 56. Nelson R, McCarthy D, Malys S, et al. The leap second: its history and possible future. Metrologia. 2001;38(209):529.

- 57. Anon. International Earth Rotation and Reference Systems Service. Available at: http://www.iers.org.