Abstract

Patient navigation programs are increasing throughout the USA, yet some evaluation measures are too vague to determine what navigation does and how it functions. Through collaborative efforts, an online evaluation program was developed. The goal of this evaluation program is to make data entry accurate, simple, and efficient. This comprehensive program includes major components on staff, mentoring, committees, partnerships, grants/studies, products, dissemination, patient navigation, and reports. Pull-down menus, radio buttons, and check boxes are incorporated whenever possible. Although the program has limitations, the benefits of having access to current program data 24/7 are worth overcoming the challenges. Of major benefit is the ability of the staff to tailor summary reports to provide anonymous feedback in a timely manner to community partners and participants. The tailored data are useful for the partners to generate summaries for inclusion in new grant applications.

Keywords: American Indian, Native American, Navigation, Evaluation, Cancer, Benefits, Limitations

Introduction

Patient navigation (PN) programs have increased significantly since Dr. Harold Freeman’s initial Harlem Hospital program was initiated during the late 1980s. The first community-based Native organization to implement a navigation program was the “Waíanae Coast Cancer Control Project” (1989–1993), which focused on breast health among Native Hawaiians [1–4]. The next was the “Native American Women’s Wellness through Awareness” (NAWWA) study in 1994, conducted by current staff of Native American Cancer Research Corporation (NACR). NAWWA focused on early detection and originated through a collaboration of Denver-based American Indian community organizations and a research institute [5, 6]. Both the Waíanae and NAWWA studies integrated navigation programs using community-based participatory research (CBPR) processes [7].

NAWWA evolved into another NCI-supported, NACR community-driven breast cancer screening navigation study among under-screened urban American Indian women [8]. This study led to the NCI CBPR NACR Navigator breast health study among under-screened white, African-American, Latina, and American Indian women [9]. Eventually, these studies led to two more NIH-supported NACR studies in the 2000s that document Navigators’ work with patients throughout the continuum of cancer care (prevention through end-of-life) [10, 11].

Overall, PN programs appear to increase the inclusion of medically under-screened populations into cancer services. However, some measures are too vague to determine which aspects of navigation were responsible for these increases, and how (“did you like having a Navigator?”), and others may be very specific to a clinical setting (“how did the Navigator work with the patient who received BRCA1 testing?”). The limitations of the measures may inadvertently under-report the roles and activities of Navigators (and other staff). Ideally, the measures include both process and outcome evaluation measures that document the extent of the Navigators’ functions.

NACR implements and evaluates many cancer education and Navigator interventions with American Indian and Alaska Native communities. As these respective studies evolved over time, so did the rigor of the evaluation measures. Process measures may include items such as those summarized in Table 1.

Table 1.

Excerpt of process evaluation measures to document the duration and tasks completed during Navigator’s interactions with patients


Outcome measures for PN programs may include intermediate measures such as screening rates, adherence rates to follow-up for abnormal screenings, timeliness of follow-up, and quality of care. Measures may include: (a) the date screening was completed; (b) the date results were sent to the patient; (c) the results (normal, suspicious, insufficient or incomplete sample/specimen, or abnormal specimen); and (d) the date when the patient is to return for the next screening. Patient satisfaction covers both the experience of working with the PN and satisfaction with the navigation services.

NACR expanded PN CBPR studies and collaborated with other Native American organizations: Intertribal Council of Michigan, Incorporated (ITCMI), Rapid City Regional Hospital (RCRH), Great Plains Tribal Chairmen’s Health Board (GPTCHB), and the Comanche Nation. All of the partners felt that PN evaluation measures needed to be consistent among settings and quantifiable as well as qualitative in nature.

Beginning in 2005 and in collaboration with Mr. Eduard Gamito (deceased), the NACR staff created an outline for an online evaluation program. The outline was organized in Excel and then programmed using Filemaker Pro®. Initially, partners of the Mayo Clinic’s Spirit of Eagles Community Network Programs [PI: Kaur; NCI U01 CA 114609] used the program, although infrequently. Beginning in 2009, partners for the “Native Navigators and the Cancer Continuum” (NNACC) [PI: Burhansstipanov; R24MD002811] began consistent use of the program and more recently “Native Navigation across the Cancer Continuum in Comanche Nation” [PI: Eschiti; R15 NR012195] began using the program.

Online Evaluation Program Components and Features

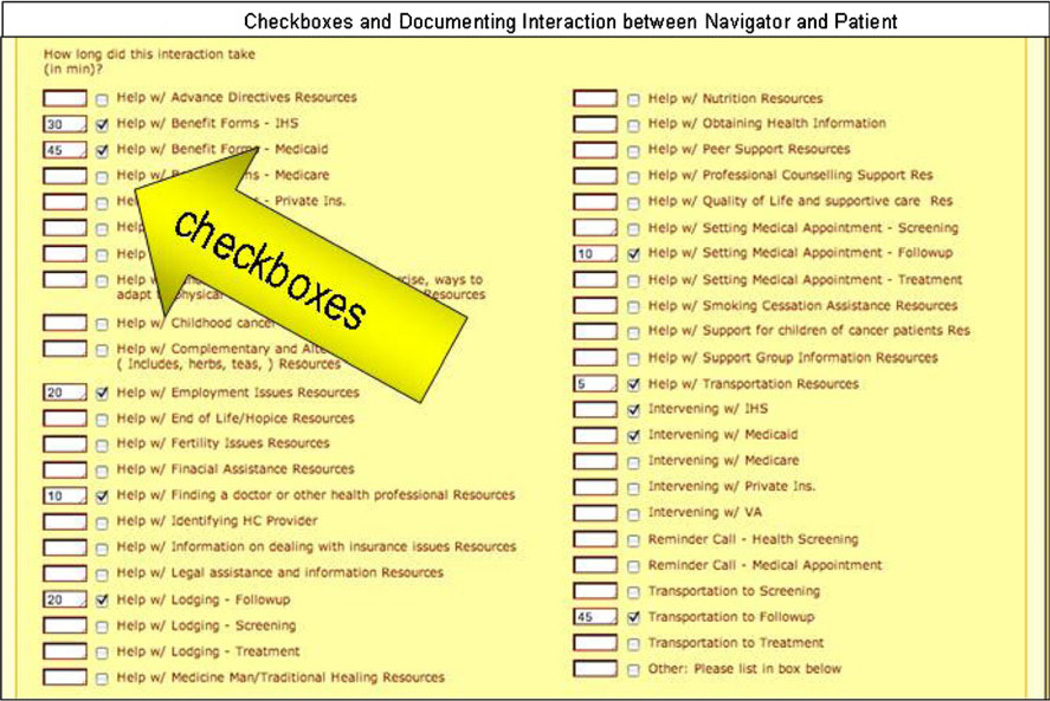

The goal of the online evaluation program is to make data entry accurate, simple, and efficient. Designed to reduce data entry burdens for staff and subcontractors, this system encourages timely uploading of the data, immediate reports for checking entries and, ultimately, meeting standards of data quality. This comprehensive program includes major components (called tabs) on staff, mentoring, committees, partnerships, grants/studies, products, dissemination, patient navigation, and reports (see Table 2) and incorporates pull-down menus, radio buttons, and check boxes whenever possible (see Fig. 1).

Table 2.

Online evaluation tab names and functions

| Tab name | Function |
|---|---|
| Staff | Records information on current staff members’ work related to specific grant activities |
| Mentoring | Summarizes information on any mentoring and technical assistance staff members provide to others but does not list the individuals’ names or private information |
| Committees | Tracks the committees and advisory boards that the staff take part in and specifies their activities |
| Resources | Keeps track of new equipment, software, and hardware or existing resources such as office space, clinical space, electronic evaluation systems, and equipment that are available for the organization and research study |
| Partnerships | Lists organizations with whom the study has partnerships and documents the type(s) of relationships (memorandum of agreement/understanding, subcontracts) |
| Grants/studies | Summarizes information for any studies, grants, or contracts that the organization submitted or has been awarded |
| Products | Provides information on any products (videos, brochures, posters, curricula, journal articles, newsletters, and slide presentations) developed and that may be available to others. Products not specifically developed by the organization but used as part of ongoing grant activities are also entered here. The “Products” tab also serves as an “inventory” of the items used by the program |
| Dissemination | Documents when items listed in the “Products” tab were disseminated (the product information is cross-listed so that it does not need to be entered twice). It also documents all presentations, focus groups, workshops, conferences, and trainings the staff conducted. There are ways to enter both the dissemination of the organization’s own products and the dissemination of others’ products |
| Navigation | Documents and tracks all staff interactions with an individual, such as helping an individual access cancer screening services or referring a person to a clinical trial |
| Reports | Allows reports of all records or tailored records based on the user’s priorities |

Fig. 1.

Checkboxes and documenting interaction between Navigator and patient

Tabs Assist in Inputting Data

Some tabs, such as staff, resources, and partnerships, are very simple. Others, such as navigation, include subsets of tabs, such as patient code numbers and de-identified information. Referrals to cancer screening and/or clinical trials, as well as how the Navigator worked with the patients, are all documented (see Fig. 1). Staff record how they interacted with a patient (phone, face-to-face, Internet) and how long they spent with each patient. This allows the program to document what the Navigators are doing, including how much time is spent helping the same patient. Thus, patient “NACR003” (code number) may have three Navigators who spent a combined total of 12 h helping her into timely quality cancer care.
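The combined-time example above can be sketched in code. The following is a minimal illustration only, not the program's actual implementation (which runs in Filemaker Pro®): the `Interaction` record, its field names, the navigator labels, and the sample minutes are all assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Interaction:
    patient_code: str  # de-identified code, e.g. "NACR003"
    navigator: str     # hypothetical navigator label
    mode: str          # "phone", "face-to-face", or "Internet"
    minutes: int       # time spent on this interaction


def summarize_by_patient(interactions):
    """Return {patient_code: (number_of_navigators, total_hours)}."""
    minutes = defaultdict(int)
    navigators = defaultdict(set)
    for item in interactions:
        minutes[item.patient_code] += item.minutes
        navigators[item.patient_code].add(item.navigator)
    return {
        code: (len(navigators[code]), minutes[code] / 60)
        for code in minutes
    }


# Illustrative records only; these are not data from the study.
records = [
    Interaction("NACR003", "Navigator A", "phone", 240),
    Interaction("NACR003", "Navigator B", "face-to-face", 300),
    Interaction("NACR003", "Navigator C", "Internet", 180),
    Interaction("NACR007", "Navigator A", "phone", 45),
]
summary = summarize_by_patient(records)
print(summary["NACR003"])  # (3, 12.0)
```

Keying the totals on the patient code rather than on the Navigator is what lets time from several Navigators roll up into a single figure per patient.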

The program has many layers of security, including required logins and passwords and a short time-out window. No identifying or confidential information is uploaded to the website. Each program uses a secure computer (not connected to the Internet) or hard copies to record private patient information and to assign each patient a code number that contains no personal identifiers. Users must log out twice to complete the data entry process.

Online Evaluation—Limitations

In an ideal evaluation system, users make it a habit to upload data in a timely manner, preferably immediately after data collection. The user needs Internet access fast enough to upload data before the program times out, which is not feasible in some areas (rural settings or Indian reservations) where programs are limited by Internet and computer capacity. If immediate uploading is not feasible, the PN collecting data must remember to upload data when back in an area that has sufficient computer and Internet access.

Another limitation of many online data entry systems is that only one staff member can update the database at a time. This requires that project staff communicate clearly about when the database is being used so that others may avoid that time. Also, the program requires submitting data at least every 5 min to prevent the program from timing out (i.e., an internal protection to reduce the risk of hackers accessing the data). While this feature reduces the likelihood of hacking into the database, staff may have difficulty completing entry within this time window. If the submit data button is not clicked about every 5 min, the data record may be incomplete when the screen blanks out. Those data are lost and must be reentered. In addition, the online system is not intuitive to operate, especially for those unfamiliar with data entry and online systems. Thus, an initial investment of time is required to learn the system and become familiar with where data are to be stored. However, once the system is learned, research and project staff can save a lot of time in comparison to paper documentation.
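To illustrate why unsubmitted entries are lost, the sketch below models a 5-min submission window. It is a hypothetical simplification and does not describe how the Filemaker Pro® program is actually built: the `EntrySession` class, its methods, and the use of plain second counts for time are assumptions made for this example.

```python
TIMEOUT_SECONDS = 5 * 60  # the 5-min window described above


class EntrySession:
    """Toy model of a data-entry session with an inactivity timeout."""

    def __init__(self, now):
        self.last_submit = now  # time of login or last successful submit
        self.unsaved = []       # typed but not yet submitted
        self.saved = []         # stored in the database

    def type_entry(self, value):
        self.unsaved.append(value)

    def submit(self, now):
        """Save pending entries; return False if the session timed out."""
        if now - self.last_submit > TIMEOUT_SECONDS:
            self.unsaved.clear()  # screen blanks out; entries must be reentered
            return False
        self.saved.extend(self.unsaved)
        self.unsaved.clear()
        self.last_submit = now
        return True


session = EntrySession(now=0)
session.type_entry("workshop attendance")
first_ok = session.submit(now=240)   # 4 min after login: saved
session.type_entry("pre-test scores")
second_ok = session.submit(now=600)  # 6 min after the last submit: lost
print(first_ok, second_ok, session.saved)
```

In this model only the entry submitted inside the window reaches `saved`; the second entry has to be typed again, which mirrors the reentry burden described above.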

A final limitation is the report generation feature. The most common types of reports can be programmed to automatically create summaries of entered data such as demographics, pre-post knowledge scores, and overall evaluation scores; however, these reports frequently need to be refined based on the type of information and the purpose of the report. To obtain a specific report that cannot be automatically generated, the individual must contact the database monitor, Mr. Richard Clark, who can tailor the reports to the individual user’s needs. This process introduces a delay and depends on staff availability.

A further complication is that when data set fields are changed by adding new fields or data, SPSS, Excel, or other databases also must be modified to match the Filemaker Pro database. Hence, there must be good communication between the data analysts and the database monitors to ensure that data are interpreted correctly and that valuable staff time is not wasted re-creating datasets for analysis.
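One way to catch such mismatches early is to compare the field names in a database export against those the analysis dataset expects. The sketch below is an illustration under stated assumptions, not part of the NACR program: the function `compare_fields` and all field names are hypothetical.

```python
def compare_fields(database_fields, analysis_fields):
    """Report field names present in one list but not in the other."""
    database = set(database_fields)
    analysis = set(analysis_fields)
    return {
        "missing_from_analysis": sorted(database - analysis),
        "missing_from_database": sorted(analysis - database),
    }


# Hypothetical field names; "contact_mode" stands in for a newly added field.
exported = ["patient_code", "session_date", "pre_score", "post_score", "contact_mode"]
codebook = ["patient_code", "session_date", "pre_score", "post_score"]
report = compare_fields(exported, codebook)
print(report)
```

Here the report would flag `contact_mode` as present in the export but absent from the analysis dataset, prompting the analyst to update the statistical file before running analyses.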

Online Evaluation—Benefits

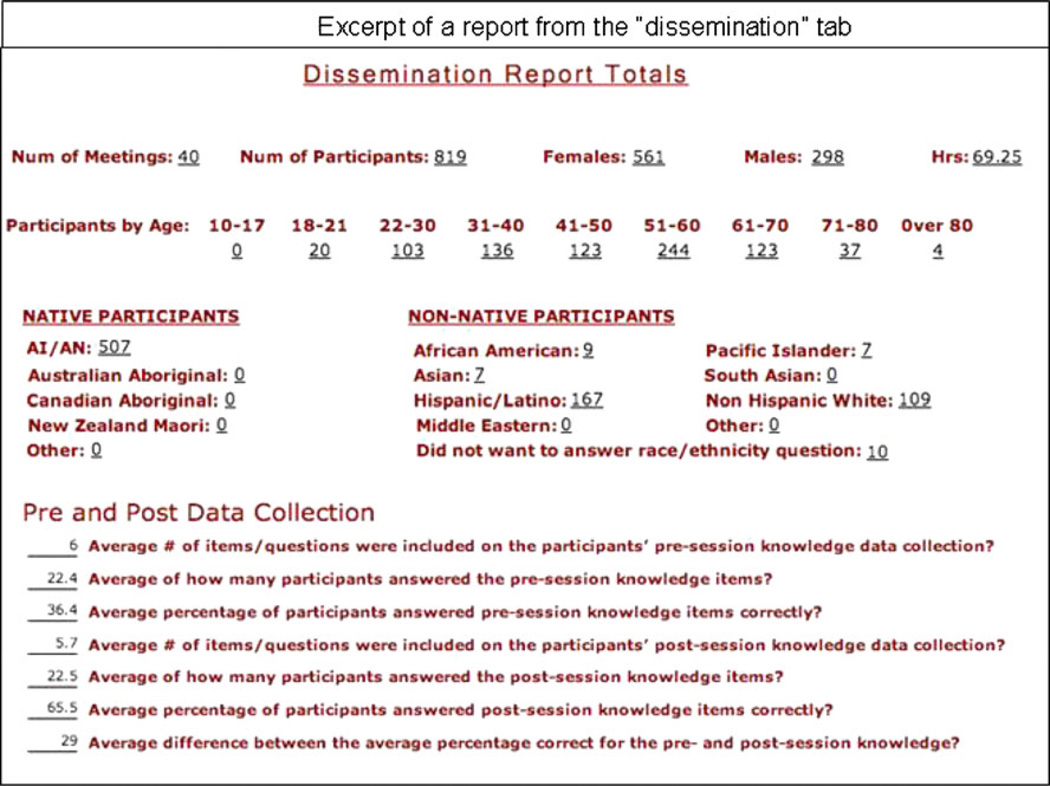

All of the benefits rely on the staff uploading and updating their respective data on at least a monthly basis. In general, NACR encourages the staff to upload information once an event is completed or the appropriate bodies have approved a product for dissemination. The greatest benefit is that current data summaries are immediately available to the principal investigator (PI) and each of the partner program directors 24 h a day, 7 days a week (see Fig. 2). The partners do not have to gather data to respond to an agency request, which limits workday interruptions. For example, the PI for the navigation study needed current data to generate the annual NIH Progress Report. She was able to access each of the NNACC partners’ databases to gather almost all of the information necessary. This saved each of the NNACC partners time and effort.

Fig. 2.

Excerpt of a report from the “dissemination” tab

Information uploaded once should not be lost and errors can be corrected in one master database and linked to the respective reports rather than needing to be updated in multiple datasets. An additional benefit is that if an omission or mistake has been made in an online entry, it can easily be edited; it is not necessary to create an entirely new entry. If a new variable is needed, NACR staff can create pop-up boxes with relevant options (usually within a day of the request) to add to the overall program.

The recorded data can also be exported into Excel and then into SPSS, SUDAAN, SAS, or other statistical analysis programs. Each partner can use their own organization’s data for new grant applications, publications, and reports.

Of major importance is that the online evaluation program provides information that can be tailored to each of the partners’ settings. For example, the “Native Navigators and the Cancer Continuum” (NNACC) [R24MD002811] has a baseline and a follow-up Family Fun Event (FFE). The latter occurs 3 months after the Navigators implement and evaluate 24 h of community education workshops in partnership with another local AI organization. During the follow-up FFE, the Navigators produce a tailored summary sheet to provide preliminary results specific to workshops completed in partnership with the specific AI organization (see Fig. 2 in the article “Preliminary Lessons Learned from the ‘Native Navigators and the Cancer Continuum (NNACC)’,” also published in this supplement [12]).

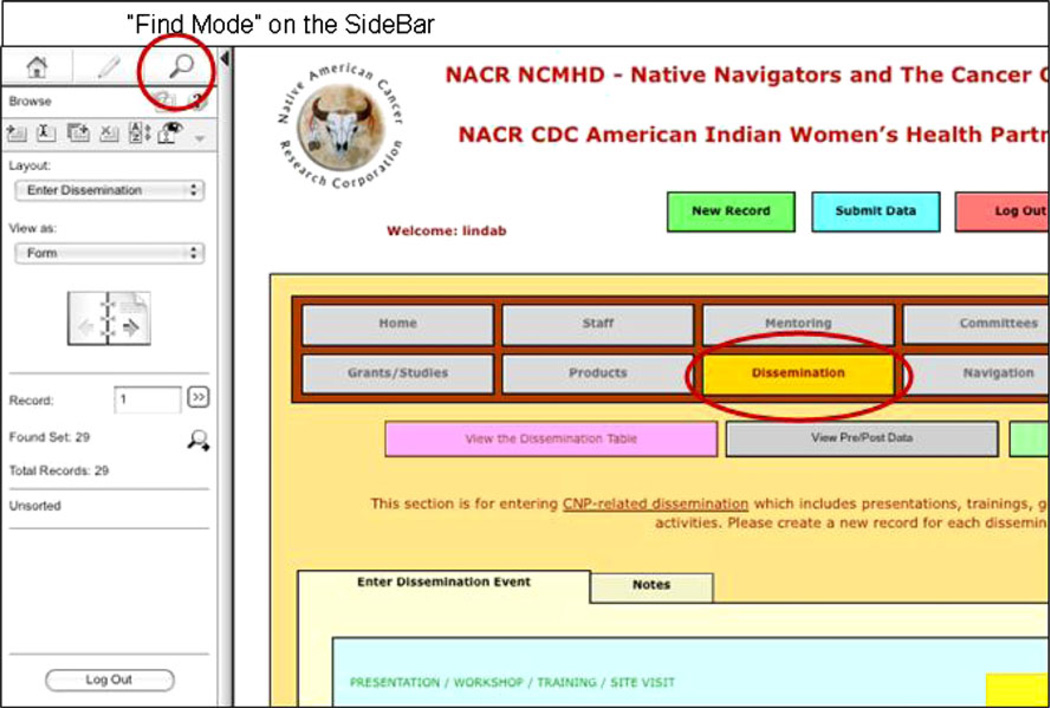

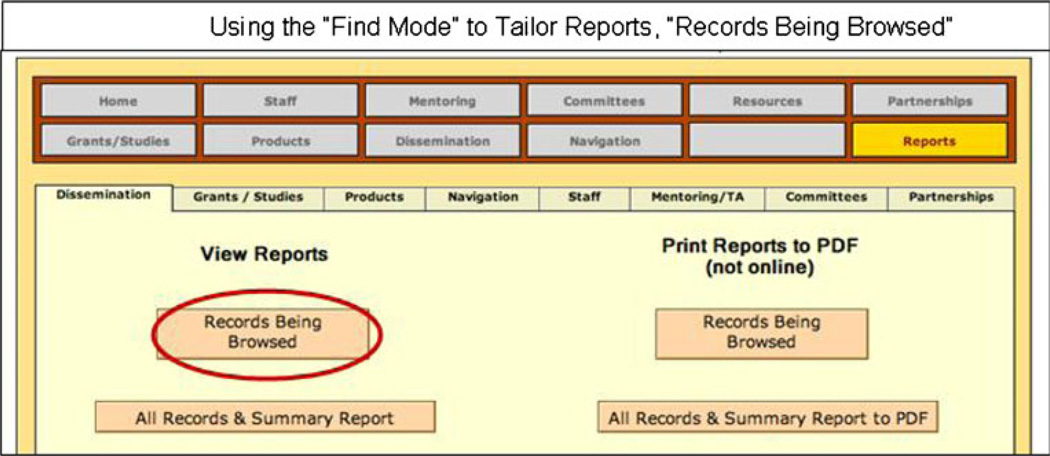

To tailor a report, the user logs in to the respective program’s database. For example, to produce a tailored report within the “dissemination” tab, the user selects the “dissemination” tab and opens the left-hand side menu within Filemaker Pro® (see Fig. 3). The user clicks on the “find” icon. The user then clicks on the “Session/Meeting date” field, types in the first date of the workshop series, followed by three periods, and then enters the last date of the workshop series, such as 01-10-2010…04-20-2010. The user clicks on “perform find” on the Filemaker Pro® side bar. Next, the user clicks on the “report” tab and then clicks on the “records being browsed” button (see Fig. 4). An excerpt of the summary report is in Fig. 2 of the article “Preliminary Lessons Learned from the ‘Native Navigators and the Cancer Continuum (NNACC)’,” also published in this supplement [12]. These data greatly assist in providing timely summaries of findings to the communities with whom the education intervention was implemented.
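The logic of the date-range find can be sketched outside of Filemaker Pro® as a simple filter. This is an illustration only: the functions `parse_range` and `find_sessions`, the `session_date` field name, and the sample records are hypothetical, and the sketch assumes that both end dates are included in the range.

```python
from datetime import date, datetime


def parse_range(expression):
    """Split 'MM-DD-YYYY...MM-DD-YYYY' into (start, end) dates."""
    # Accept three periods or the single ellipsis character.
    normalized = expression.replace("\u2026", "...")
    start_text, end_text = normalized.split("...")
    fmt = "%m-%d-%Y"
    start = datetime.strptime(start_text.strip(), fmt).date()
    end = datetime.strptime(end_text.strip(), fmt).date()
    if start > end:
        raise ValueError("start date must not be after end date")
    return start, end


def find_sessions(records, expression, field="session_date"):
    """Return records whose date falls within the range (assumed inclusive)."""
    start, end = parse_range(expression)
    return [record for record in records if start <= record[field] <= end]


# Illustrative records only; these are not data from the study.
sessions = [
    {"title": "Workshop 1", "session_date": date(2010, 1, 10)},
    {"title": "Workshop 2", "session_date": date(2010, 3, 5)},
    {"title": "Other event", "session_date": date(2010, 6, 1)},
]
found = find_sessions(sessions, "01-10-2010...04-20-2010")
print([record["title"] for record in found])  # ['Workshop 1', 'Workshop 2']
```

The filtered list plays the role of the “records being browsed”: a summary report built from it covers only the selected workshop series.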

Fig. 3.

“Find mode” on the sidebar

Fig. 4.

Using the “find mode” to tailor reports, “records being browsed”

Discussion

The evolution of this online evaluation program resulted from experience implementing PN programs and from extensive guidance from all of the study partners. The program required a great deal of refinement, but the report-tailoring function was among its most valuable features for each of the partners. The program assists the study staff in different ways:

ITCMI Project Navigator, Amanda Leonard states, “I find the online system extremely helpful. I can easily review the outcomes of my sessions, and see which ones my participants scored best, or worse on. I can then review the modules that had lower gains in knowledge for content and any necessary updates or review, as I prepare for the next round of sessions.”

RCRH Kymberly Crawford, Native Navigator, says, “Working with the online system, helps me to evaluate the results of our sessions, and with the ability to generate reports from the data, provides excellent feedback to our community members.”

ITCMI Project Manager, Noel Pingatore also finds the system helpful. “I can pull pre and post knowledge scores for various modules and prepare easy to understand reports for the staff and community members.”

GPTCHB Project Manager, Dr. Shinobu Watanabe-Galloway says, “From the way the system is set up, it is clear to us what kind of information is needed from each site. At the same time, it allows flexibility for sites to collect additional data as needed.”

Small, community-based organizations (CBOs) face challenges documenting program outcomes and they can best meet these challenges by taking advantage of state-of-the-art data collection, entry, and reporting computer systems. In order to maximize CBO participation in multi-site projects, online systems are required that are easy to use and can be accessed by a mixture of computer infrastructures. The NACR online system creates a balance between meeting the needs of data collection required for participation in research projects and the ability of staff and organizations to successfully enter, check, and report collected data. Such online systems are important for fulfilling community-based participatory research goals of equal participation across different sites. Although it is always challenging to receive evaluation data from diverse geographic settings in a timely manner (and granting agencies frequently make urgent requests for specific data related to grant activities), collecting data from all NNACC partners was both feasible and efficient through the use of this online program.

This online system is an important mechanism to involve underserved populations in research projects that promise to benefit their communities. In addition to any benefits from participating in such research, communities benefit from increased computer literacy and infrastructure, enhanced organizational capacity to conduct their own research, and a track record of meeting or exceeding required standards for conducting research. These longer-term benefits are important if health disparities are to be addressed at the community level and with communities as lead agencies and partners. The databases can produce tailored summaries from multiple ongoing studies for inclusion within new grant applications.

Government agencies and other funders need to recognize and support CBPR projects that enhance community capacity to participate in research including basic research activities such as data entry and report generation. Community participation in research has often been limited to review of data reports generated and prepared by academic centers in a dependency relationship. Many communities remain skeptical of such prepared data because they do not have experience with databases and they often find the interpretation of the data to be incorrect and misleading. Involving communities in every step of the research process is critical because community members can make corrections in a timely manner and can provide interpretations of data early in the analysis phase, often explaining critical issues such as missing data. Such data systems create the opportunity for reciprocal communication between sites and investigators furthering understanding of the outcomes of research.

The NACR online system is a “living” project. Each year improvements are made that increase the ease of data entry, analysis, and reporting. As more sites join the online reporting process, a wide range of feedback and input shape the data fields and better capture project activities. Not only does this enhanced system better meet the needs of partners, but it provides information that can help meet funder reporting requirements as well as make such data available to groups like tribal councils that may feel more in control of their data. As underserved communities see and hear about cases of abuses of data, creating mechanisms like the NACR online data system is an important step toward re-establishing trust between researchers and communities. Creating a more equitable role for communities in processes such as protecting patient confidentiality and data entry may help communities participate in research addressing health disparities.

To view the program, go to http://www.NatAmCancer.org/nacreval/nacreval.html and click on the character at the bottom of the circle labeled “training”. The username is “train” and the password is “choochoo”.

During 2011, Mr. Clark of NACR added more help boxes. These assist “new” users, who require several hours of training, and provide refreshers for previous users. They will also make generating reports simpler for the users.

Acknowledgments

This work would not have been possible without the excellent dedication and efforts of the NNACC Navigators: Kim Crawford, Tinka Duran, Lisa Harjo, Rose Lee, Amanda Leonard, Denise Lindstrom, Audrey Marshall, Mark Ojeda-Vasquez, Stacey Weryackwe-Sanford, and Leslie Weryackwe. This work was supported by Mayo Clinic’s Spirit of Eagles Community Network Programs [PI: Kaur; NCI U01 CA 114609]; Native Navigators and the Cancer Continuum (NNACC) [PI: Burhansstipanov, R24MD002811]; and Native Navigation across the Cancer Continuum in Comanche Nation (NNACC-CN) [PI: Eschiti, R15 NR 012195].

Each publication, press release or other document that cites results from NIH grant-supported research must include an acknowledgment of NIH grant support and disclaimer such as “The project described was supported by Award Number R15NR012195 from the National Institute of Nursing Research.”

Abbreviations

- CBPR: Community-based participatory research
- GPTCHB: Great Plains Tribal Chairmen’s Health Board
- ITCMI: Intertribal Council of Michigan, Incorporated
- NNACC: Native Navigators and the Cancer Continuum
- NACR: Native American Cancer Research Corporation
- NAWWA: Native American Women’s Wellness through Awareness
- PN: Patient navigation
- RCRH: Rapid City Regional Hospital

Footnotes

Publisher's Disclaimer: The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute of Nursing Research or the National Institutes of Health.

Conflict of Interest: The authors declare that they do not have a conflict of interest.

Contributor Information

Linda Burhansstipanov, Native American Cancer Research Corporation (NACR), 3022 South Nova Road, Pine, CO 80470-7830, USA, LindaB@NatAmCancer.net.

Richard E. Clark, Native American Cancer Research Corporation (NACR), 3022 South Nova Road, Pine, CO 80470-7830, USA, dontdoam@aol.com

Shinobu Watanabe-Galloway, Epidemiology Department, College of Public Health, 984395 University of Nebraska Medical Center, Omaha, NE 68198-4395, USA, swatanabe@unmc.edu.

Daniel G. Petereit, Department of Radiology Oncology, Rapid City Regional Hospital, John T. Vucurevich Cancer, 353 Fairmont Blvd, Rapid City, SD 57701, USA, DPetereit@rcrh.org

Valerie Eschiti, OUHSC College of Nursing, 1100 North Stonewall Ave, CNB 453, Oklahoma City, OK 73117, USA, valerie-eschiti@ouhsc.edu.

Linda U. Krebs, College of Nursing, Anschutz Medical Campus, University of Colorado at Denver, Box C288-18, ED2N Room 4209, 13120 East 19th Avenue, Aurora, CO 80045, USA, Linda.krebs@ucdenver.edu

Noel L. Pingatore, Inter-Tribal Council of Michigan, Inc, 2956 Ashmun St., Sault Ste. Marie, MI 49783, USA, noelp@itcmi.org

References

1. Segal-Matsunaga D, Enos R, Gotay CC, Banner RO, DeCambra H, Hammond OW, Hedlund N, Ilaban EK, Issell BF, Tsark JU. Participatory research in a native Hawaiian community: the Wai’anae Cancer Research Project. Cancer. 1996;78(7):1582–1586. Supplement.
2. Burhansstipanov L. Documentation of the cancer research needs of American Indians and Alaska natives (SuDoc HE 20.3162/4:1). Rockville, MD: Cancer Control Science Program, Division of Cancer Prevention and Control, National Cancer Institute; 1994.
3. Banner RO, DeCambra H, Enos R, Gotay CC, Hammond OW, Hedlund N, et al. A breast and cervical cancer project in a Native Hawaiian community: Waíanae Cancer Research Project. Prev Med. 1995;22:447–453. doi: 10.1006/pmed.1995.1072.
4. U.S. Department of Health and Human Services. Native outreach: a report to American Indian, Alaska native and native Hawaiian communities (SuDoc HE 20.3152:N 21/4). Bethesda, MD: National Institutes of Health National Cancer Institute; 1999.
5. Burhansstipanov L, Bad Wound D, Capelouto N, Goldfarb F, Harjo L, Hatathlie L, Vigil G, White M. Culturally relevant “navigator” patient support: the native sisters. Cancer Pract. 1998;6(3):191–194. doi: 10.1046/j.1523-5394.1998.006003191.x.
6. Burhansstipanov L, Dignan MB, Bad Wound D, Tenney M, Vigil G. Native American recruitment into breast cancer screening: the NAWWA project. J Cancer Educ. 2000;15:29–33. doi: 10.1080/08858190009528649.
7. Burhansstipanov L. Native American community-based cancer projects: theory versus reality. Cancer Control. 1999;6(6):620–626. doi: 10.1177/107327489900600618.
8. Dignan MB, Burhansstipanov L, Hariton J, Harjo L, Rattler T, Lee R, Mason M. A comparison of two Native American Navigator formats: face-to-face and telephone. Cancer Control. 2005;12(Suppl 2):28–33. doi: 10.1177/1073274805012004S05.
9. Burhansstipanov L, Dignan MB, Schumacher A, Krebs LU, Alfonsi G, Apodaca C. Breast screening navigator programs within three settings that assist underserved women. J Cancer Ed. 2010;25(2):247–252. doi: 10.1007/s13187-010-0071-4.
10. Burhansstipanov L, Krebs LU, Seals BF, Watanabe-Galloway S, Pingatore N, Petereit D. “Lessons Learned from the Native Navigators and the Cancer Continuum” (NNACC) [PI: Burhansstipanov, R24MD002811] submitted JCE winter. 2011 doi: 10.1007/s13187-012-0316-5.
11. Burhansstipanov L, Krebs LU, Seals BF, Bradley AA, Kaur JS, Iron P, Dignan MB, Thiel C, Gamito E. Native American breast cancer survivors’ physical conditions and quality of life. Cancer. 2010;116(6):1560–1571. doi: 10.1002/cncr.24924.
12. Burhansstipanov L, Krebs LU, Seals BF, Watanabe-Galloway S, Petereit DG, Pingatore NL, Eschiti V. Preliminary lessons learned from the “Native Navigators and the Cancer Continuum” (NNACC). J Cancer Ed. 2012. doi: 10.1007/s13187-012-0316-5.