Abstract

Item-specific spatial information is essential for interacting with objects and for binding multiple features of an object together. Spatial relational information is necessary for implicit tasks such as recognizing objects or scenes from different views but also for explicit reasoning about space such as planning a route with a map and for other distinctively human traits such as tool construction. To better understand how the brain supports these two different kinds of information, we used functional MRI to directly contrast the neural encoding and maintenance of spatial relations with that for item locations in equivalent visual scenes. We found a double dissociation between the two: whereas item-specific processing implicates a frontoparietal attention network, including the superior frontal sulcus and intraparietal sulcus, relational processing preferentially recruits a cognitive control network, particularly lateral prefrontal cortex (PFC) and inferior parietal lobule. Moreover, pattern classification revealed that the actual meaning of the relation can be decoded within these same regions, most clearly in rostrolateral PFC, supporting a hierarchical, representational account of prefrontal organization.

Keywords: fMRI, representation, hierarchical organization, reasoning

Whereas most research into the neural basis of spatial processing has focused on the representation of object location in eye-, head-, or hand-centered coordinates, in much of daily life and language use, it is the relative positions of objects that are critical. For instance, view-independent object recognition depends on knowledge of the spatial relations between object parts. This could be accomplished implicitly, but explicit representation of the spatial relations between entities in the environment is necessary for communication about space beyond mere pointing, for creating tools and symbols composed of multiple independent elements, and for map-based navigation.

The prefrontal cortex (PFC) would be expected to be involved in tasks requiring explicit encoding and maintenance of object locations and spatial relations in working memory. Several theories of PFC organization suggest that more abstract or integrated information is represented more anteriorly than more concrete, sensorimotor information [see Badre (2008) for review; Courtney 2004; Christoff et al. 2001; Petrides 2005]. As relations are abstracted away from object-bound features and require integration among multiple objects, such theories would predict that in a direct comparison with object location, spatial relations between objects would be represented in more rostral regions.

The neural representation of individual object locations has been well studied in monkeys and humans. In addition to early-to-mid-level visual areas having retinotopically organized receptive fields, in monkeys, spatially selective neurons have been reported in frontal and supplementary eye fields (FEF and SEF, respectively), in several regions within posterior parietal cortex (Colby and Goldberg 1999), and during working memory tasks in dorsolateral PFC (Funahashi et al. 1989). In humans, the superior frontal sulcus (SFS) has been implicated in spatial working memory (Courtney et al. 1998; Mohr et al. 2006; Munk et al. 2002; Sala and Courtney 2007). The superior parietal lobule (SPL) has been implicated in shifts of spatial attention (Yantis et al. 2002). Topographic maps have also been reported in the intraparietal sulcus (IPS) and FEF [see Silver and Kastner (2009) for review] during delayed saccade tasks.

The neural representation of spatial relational information has been less well studied. In monkeys, single-unit recordings have shown that neurons in ventral visual areas V4 and posterior inferotemporal cortex are tuned for object-relative position of preferred contour fragments during a passive fixation task (Brincat and Connor 2004; Pasupathy and Connor 2001). A study of monkey parietal area 7a in the dorsal visual pathway found neurons that were tuned to the object-relative categorical location (left or right) of a subpart of a reference object during a delayed-response saccade task (Chafee et al. 2007). A series of monkey electrophysiological studies [starting with Olson and Gettner (1995)] in frontal lobe visuomotor area SEF has shown neuronal selectivity for categorical object-relative position of targets in a delayed-response saccade task. However, whereas these studies support the existence of object-relative coding schemes in the brain, they do not demonstrate the sort of explicit, object- and response-independent relational representations that models of relational reasoning anticipate (Hummel and Holyoak 2005).

Humans are likely to be an even more promising species in which to investigate spatial relational processing, because the most prominent benefit of relational/structural encoding—flexibility—is also the hallmark of human cognition. Indeed, it has been argued that the ability to represent structural relations explicitly marks a fundamental discontinuity between humans and other animals (Penn et al. 2008). In humans, lesions to the posterior parietal lobe have been implicated in constructional apraxia or the inability to reproduce a drawing or read a map—tasks that depend on the awareness of spatial relations between objects—and in the ability to represent left and right [Gerstmann's syndrome; see Rusconi et al. (2010) for review]. Studies of relational reasoning more broadly defined have implicated lateral and anterior prefrontal regions in humans. Several human-imaging (Bor et al. 2003; Christoff et al. 2001; Kroger et al. 2002; Wendelken et al. 2008) and lesion (Waltz et al. 1999) studies point to dorsolateral and frontopolar cortex activity during tasks that require cognitive manipulation of visual image structure, including explicitly analogical tasks such as Raven's Progressive Matrices. Similar areas seem to be involved in nonvisual analogical tasks (Geake and Hanson 2005).

However, these areas are implicated in many other processes as well, including manipulating object featural and spatial information, multitasking, sequence ordering, planning, and self-monitoring. Thus several important questions remain unanswered. Is spatial relational information maintained as a type of information distinct from absolute positional information? Does relational information—more abstract and integrated in nature than item-specific positional information—depend on higher levels of a putative PFC rostrocaudal hierarchy? Where in the brain are the actual spatial relations and locations necessary for behavior represented while humans are performing a spatial task? To address these questions, we designed a delayed-recognition working memory functional MRI (fMRI) study to directly compare the encoding and maintenance of object-specific and relational spatial information using identical visual stimuli. Moreover, our goal was not only to identify regions preferentially involved in processing relational or item-specific information but also to discover which regions actually carry the representations needed to do the processing.

MATERIALS AND METHODS

Overall experimental design.

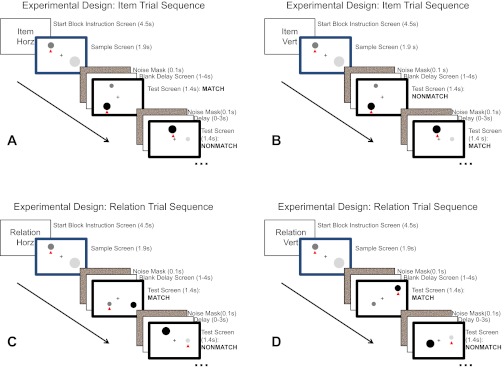

We conducted two fMRI experiments using the same basic delayed-recognition paradigm. The first was designed to identify regions that were commonly or differentially activated for item vs. relation processing while controlling for potential differences between Item and Relation trials regarding attention, memory load, and verbalization using a univariate general linear model (GLM) analysis. The second experiment used multivoxel pattern classification to distinguish potentially overlapping neural subpopulations responsive to item and relational processing and to identify regions whose neural activity patterns actually represented item-specific or relational information. In the second experiment, we eliminated the control conditions to be able to collect many more repetitions of the item and relation conditions of interest, which was necessary to have sufficient power for the multivariate pattern analysis; an ability to decode item or relation representations in a brain region's multivoxel activity patterns is prima facie evidence that that region is specifically involved in the task and is not simply reflecting generic attentional or memory-load demands. For both experiments, we used a mixed event-related and blocked design. Blocks of trials of the same type (Item or Relation, Low or High load, featural dimension: Horizontal or Vertical position/relation) allowed for the comparison of neural population representations of different information types. The blocks themselves consisted of trial components (sample, delay, test), randomly sequenced to allow isolation of the encoding, maintenance, and retrieval processes for each type of information. The order of block conditions was counterbalanced across runs and across subjects. The basic trial sequence and match logic are illustrated in Fig. 1.

Fig. 1.

Example trial sequences with correct responses shown for illustration. A: Item Horizontal trial; B: Item Vertical trial; C: Relation Horizontal trial; D: Relation Vertical trial. See materials and methods for details.

Participants.

Participants were 26 adults (experiment 1: n = 15, eight males, 18–35 years; experiment 2: n = 11, three males, 18–29 years) with normal or corrected-to-normal vision who were nonsmokers in good health and had no history of head injury, neurological or mental disorders, or drug or alcohol abuse and no current use of medications that target central nervous system or cardiovascular function. The protocol was approved by the Institutional Review Boards of the Johns Hopkins University and the Johns Hopkins Medical Institutions. All subjects gave written, informed consent. Participants were compensated with $50 for the scanner session and $10 for the practice session.

Working memory tasks and stimuli.

Spatial working memory tasks were modified delayed-recognition tasks, in which subjects remembered either the specific horizontal or vertical location of one or two individual circles or the spatial relationship between the circles along the horizontal or vertical dimensions. Trials of a given type were grouped into blocks. A block consisted of 12 trials and lasted for 76.5 s. Each block began with a verbal instruction screen indicating whether an absolute object feature value or a relative value (“Item” or “Relation”) was to be remembered. (“Word” instruction screens also appeared in experiment 1.) The instruction screen also indicated the relevant feature dimension(s): horizontal position/relation or vertical position/relation (as well as high or low load in experiment 1).

A trial began with a sample screen consisting of a fixation cross and two gray-scale circles defined by their size, luminance, and position. This screen was distinguished from test screens by the presence of a colored border. Subjects encoded either the feature value of an individual object(s) along the relevant dimension or the relative value between the two objects along the relevant dimension(s). A small red triangle appeared below one of the objects. In low-load Item trials, this cue indicated which object's feature value should be encoded; in Relation trials, it served to match roles between sample and test screens (i.e., the cued circle was required to have the same relationship to the uncued circle on the test screen as the cued circle had to the uncued circle on the sample screen for it to be a match). The sample screen was presented for 1.9 s and was followed by a 0.1-s visual noise mask and a 1-s blank screen.

To equalize the perceptual aspects of the task, all sample and test screens in all conditions used similar stimuli. Circles could appear in any one of 12 x-axis and y-axis positions on the screen, and cued and uncued circles could appear in any one of 12 horizontal and vertical positions relative to each other; the distribution of locations was identical for Item and Relation trials and for match and nonmatch trials. The test screens were identical in format to the sample screens, except that they lacked the colored border and were presented for 1.4 s, followed by a 0.1-s mask. Subjects were instructed to indicate via a button press whether the test screen matched the most recent sample screen along the relevant dimension(s). Irrelevant dimensions were free to vary, and at least one of them always differed between sample and test. Relevant dimensions varied so that one-half of the test screens matched their sample and one-half did not.

Each sample screen, delay, or test screen was equally likely to be followed by a sample, delay, or test event with a maximum of two events of the same type in a row. Having zero, one, or two sample, delay, and test events allowed for a pseudorandom order of events, enabling statistical modeling of activation magnitude related to each event independently. We used both jittering (variable delay period) and randomization to reduce collinearity among event regressors, because jittering alone does not always achieve sufficient separation for maximal statistical power when there are temporal dependencies among event types, such as in a typical sample–delay–test sequence in a delayed-recognition working memory task. This situation is particularly problematic when the range of interstimulus interval lengths is limited by the need to maximize the number of events of each type during a time-limited scanning session. The use of a randomized event order within trials, as well as jittering with zero, one, or two blank screens between trials, maximizes the independence among regressors and allows the greatest statistical power to detect differences among event types (Serences 2004). As soon as subjects encountered a new sample display, they were to discard their memory of the previous sample and encode the new one. This amounted to an implementation of the “partial trials” paradigm for isolating separate blood oxygenation level-dependent (BOLD) components of a rapid event-related delayed recognition task (Ollinger et al. 2001a, b).
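The sequencing constraint described above can be illustrated with a minimal sketch. It draws events uniformly at random and rejects any event type that would produce a third consecutive repeat. The function names, the `max_run` parameter, and the seed are hypothetical, and the sketch does not balance the number of events of each type within a block, which the actual design may have done.

```python
import random

EVENT_TYPES = ("sample", "delay", "test")

def make_event_sequence(n_events, max_run=2, seed=None):
    """Draw events uniformly at random, never allowing more than
    `max_run` consecutive events of the same type (assumed constraint)."""
    rng = random.Random(seed)
    sequence = []
    for _ in range(n_events):
        candidates = list(EVENT_TYPES)
        # If the last `max_run` events all share one type, exclude that type.
        if len(sequence) >= max_run and len(set(sequence[-max_run:])) == 1:
            candidates.remove(sequence[-1])
        sequence.append(rng.choice(candidates))
    return sequence

def longest_run(sequence):
    """Length of the longest stretch of identical consecutive events."""
    best = current = 0
    previous = None
    for event in sequence:
        current = current + 1 if event == previous else 1
        best = max(best, current)
        previous = event
    return best

sequence = make_event_sequence(36, seed=0)
```

Calling `longest_run(sequence)` on any generated sequence should return at most 2, which is the property that keeps the sample, delay, and test regressors from becoming strongly collinear.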

In Item trials, a test screen “matched” a sample screen when the cued circle on the test screen had the same value along the relevant dimension (position along the x-axis for Horizontal or y-axis for Vertical), as did the cued circle on the sample screen. In Relation trials, a test screen matched a sample screen when the two circles were in the same position relative to each other along the relevant dimension [e.g., if “Horizontal” were the relevant dimension, and the cued object was a distance x to the left of the uncued object on the sample screen, the cued object on the test screen would need to be a distance x to the left of the uncued object on the test screen for the correct response to be a “match”, although the absolute x-axis (and y-axis) positions could vary]. Note that Relation trials combined directional (i.e., “left of”, “right of”, “above”, “below”) and magnitude (distance between objects) components. A relation test stimulus was a match only if both the direction and magnitude of the relation were the same as the sample.
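The two match rules can be stated compactly in code. The following is a minimal sketch, assuming circle positions are given as integer grid coordinates; the `Screen` class and the function names are hypothetical and are used only for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Screen:
    """Positions of the cued and uncued circles, in arbitrary grid units."""
    cued_x: int
    cued_y: int
    uncued_x: int
    uncued_y: int

def _coord(screen, which, dimension):
    """Coordinate of the 'cued' or 'uncued' circle on the relevant axis."""
    if dimension not in ("horizontal", "vertical"):
        raise ValueError("dimension must be 'horizontal' or 'vertical'")
    axis = "x" if dimension == "horizontal" else "y"
    return getattr(screen, f"{which}_{axis}")

def item_match(sample, test, dimension):
    """Item trials: the cued circle keeps its absolute position on the relevant axis."""
    return _coord(sample, "cued", dimension) == _coord(test, "cued", dimension)

def relation_match(sample, test, dimension):
    """Relation trials: the signed cued-minus-uncued offset is preserved,
    so both direction (sign) and magnitude (size) must agree."""
    def offset(screen):
        return _coord(screen, "cued", dimension) - _coord(screen, "uncued", dimension)
    return offset(sample) == offset(test)

sample = Screen(cued_x=2, cued_y=5, uncued_x=6, uncued_y=1)
test = Screen(cued_x=4, cued_y=9, uncued_x=8, uncued_y=3)
```

In this example the cued circle is four units to the left of the uncued circle on both screens, so `relation_match(sample, test, "horizontal")` is true, whereas `item_match(sample, test, "horizontal")` is false because the absolute x position of the cued circle changed.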

Prior to scanning, all subjects were given 1 h of training on the tasks in our lab.

Control tasks and stimuli.

In experiment 1, we included two types of trials that served as controls for our main contrast of interest—that between Item and Relation trials. One type was a verbalization control. We wanted to ensure that any difference that we saw between conditions was not due to differences in use of a verbal encoding and maintenance strategy. Subjects saw a sample screen with one or two pseudowords (Rastle et al. 2002) and after a variable delay, saw a test screen with one or two pseudowords; they then had to indicate whether one of the words had changed. The test screen presented the words in a different font to encourage subjects to rely on phonological rehearsal rather than visual memory.

The other control was a load control intended to exclude brain areas that were sensitive to task difficulty or attentional demands rather than the type of processing or type of information per se. In the Item task, the “High-Load” condition required subjects to encode the (horizontal or vertical) position of both circles. A test screen was considered a match if either the cued circle's relevant feature value matched the cued circle's value on the sample screen or the uncued circle's value matched the uncued circle's value on the sample screen. Thus as in the Low-Load Relation trials, the High-Load Item task required subjects to attend to both circles. In the Relation task, the High-Load condition required subjects to encode both the horizontal and vertical relations between circles. A relational match along either of the instructed dimensions between the test screen and the sample screen constituted a match. We used the “or” logic in both the Item and Relation High-Load trials to encourage subjects to maintain the information in an unintegrated format so that there was indeed a clear difference in memory load (Luck and Vogel 1997). The design of these blocks was otherwise the same as that described above.
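The "or" logic of the High-Load conditions can be sketched as follows. Screens are represented here as dictionaries mapping "cued" and "uncued" to (x, y) tuples; this representation and the function names are assumptions made for illustration.

```python
def high_load_item_match(sample, test, dimension):
    """High-Load Item: match if EITHER circle keeps its position on the relevant axis."""
    axis = 0 if dimension == "horizontal" else 1
    return (sample["cued"][axis] == test["cued"][axis]
            or sample["uncued"][axis] == test["uncued"][axis])

def high_load_relation_match(sample, test):
    """High-Load Relation: match if EITHER the horizontal or the vertical
    cued-minus-uncued offset is preserved."""
    def offset(screen, axis):
        return screen["cued"][axis] - screen["uncued"][axis]
    return any(offset(sample, axis) == offset(test, axis) for axis in (0, 1))

sample = {"cued": (2, 5), "uncued": (6, 1)}
test_a = {"cued": (3, 7), "uncued": (6, 4)}   # uncued circle keeps x = 6
test_b = {"cued": (9, 8), "uncued": (1, 4)}   # vertical offset stays at 4
```

Because a match on either element suffices, a subject cannot answer reliably from a single integrated representation and has to hold both elements separately, which is the intended load manipulation.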

In experiment 1, we also tested item and relation working memory for two nonspatial feature dimensions (size and luminance), in addition to the two spatial ones. There were 16 blocks of one-feature Item trials (four for each feature, with each block consisting of 12 sample events, 12 delay events, and 12 test events); 16 blocks of one-feature Relation trials (four for each feature); eight blocks of two-object, one-feature Item trials; eight blocks of two-feature Relation trials; and eight control pseudoword recognition blocks (four single words; four double words). There were seven blocks/run and eight runs. The nonspatial dimensions, which were modeled as separate regressors in the GLM for experiment 1 but otherwise had no influence on the spatial data, are the subject of follow-up work to be described in a future report.
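As a quick consistency check, the block counts listed above sum to the number of blocks implied by seven blocks per run over eight runs. The sketch below also multiplies by the 76.5-s block duration given earlier; whether that duration includes the instruction screen is not stated, so the resulting task time is only approximate.

```python
# Block counts for experiment 1, as listed in the text.
blocks = {
    "one-feature Item": 16,
    "one-feature Relation": 16,
    "two-object, one-feature Item": 8,
    "two-feature Relation": 8,
    "pseudoword control": 8,
}
blocks_per_run, n_runs = 7, 8

total_blocks = sum(blocks.values())
assert total_blocks == blocks_per_run * n_runs  # 56 blocks either way

block_duration_s = 76.5  # from the task description
task_time_min = total_blocks * block_duration_s / 60  # about 71.4 min of task blocks
```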

Experiment 2 used exclusively Low-Load spatial tasks with 11 runs, each consisting of one block of Item Horizontal trials, one block of Item Vertical trials, one block of Relation Horizontal trials, and one block of Relation Vertical trials. Trial structure and stimuli for these trial types were identical to those in experiment 1.

General neuroimaging data collection methods.

Scanning was carried out using a 3.0T Philips Achieva MRI system located in the F. M. Kirby Research Center for Functional Brain Imaging in the Kennedy Krieger Institute (Baltimore, MD). BOLD changes in the MRI signal were collected using a SENSE head coil (MRI Devices, Waukesha, WI). Stimuli were presented and behavioral data collected on a personal computer running MATLAB and Psychtoolbox (Brainard 1997) software. A liquid crystal display projector located outside of the scanning room back-projected the stimuli onto a screen located inside the bore of the scanner. Subjects viewed the stimuli via a mirror mounted to the top of the head coil. Responses were made with left- or right-thumb presses of hand-held button boxes connected via fiber-optic cable to a Cedrus RB-610 response pad (Cedrus, San Pedro, CA). Structural scans were taken before or after acquisition of functional data and consisted of a T1-weighted, magnetization-prepared rapid acquisition gradient echo anatomical sequence [200 coronal slices, 1 mm thickness, repetition time (TR) = 8.1 ms, echo time (TE) = 3.7 ms, flip angle = 8°, 256 × 256 matrix, field of view (FOV) = 256 mm]. The functional T2*-weighted MR scans were interleaved gradient echo, echo planar images (27 axial slices, 3 mm thickness, 1 mm gap, TR = 1,500 ms, TE = 30 ms, flip angle = 65°, 80 × 80 matrix, FOV = 240 mm). All functional scans were acquired in the axial plane and aligned parallel to the line from the anterior commissure to the posterior commissure.

Univariate analysis (voxelwise GLM).

Analysis of Functional NeuroImages (AFNI) software (Cox 1996) was used for data analysis. Functional echo planar imaging (EPI) data were phase shifted using Fourier transformation to correct for slice acquisition time and were motion corrected using three-dimensional volume registration. High-resolution anatomical images were registered to the EPI data and then transformed into the Talairach coordinate system. Individual participant data were spatially smoothed using a smoothing kernel of 4 mm full width at half maximum (FWHM) and then normalized as percent change from the run mean. Multiple regression analysis was performed on the time-series data at each voxel for all voxels in the brain volume. There were separate event-related regressors for sample, delay, and test events at each load (in experiment 1) for Item, Relation, and Pseudoword (in experiment 1) trials and for errors. Regressors were convolved with a γ function model of the BOLD response. The individual subject GLM data were then warped to Talairach space for group analysis. Mixed-effects analyses, with subjects as a random factor, were performed on the imaging data.
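Two of the steps above, normalization to percent change from the run mean and convolution of event onsets with a γ function, can be sketched in a few lines. This is an illustration, not the AFNI implementation. The γ-variate form h(t) = t^b·exp(−t/c) with b = 8.6 and c = 0.547 is an assumption (these are commonly cited defaults for AFNI's gamma model; the text does not state the parameters used), and the 15-s kernel length and function names are likewise hypothetical.

```python
import math

def percent_signal_change(run):
    """Express each volume as percent change from the mean of its run."""
    mean = sum(run) / len(run)
    return [100.0 * (value - mean) / mean for value in run]

def gamma_hrf(tr=1.5, duration=15.0, b=8.6, c=0.547):
    """Gamma-variate h(t) = t**b * exp(-t / c), sampled every `tr` seconds and
    scaled so the continuous function peaks at 1 (b and c are assumed values)."""
    peak = (b * c) ** b * math.exp(-b)  # value at the peak time t = b * c
    n_samples = int(duration / tr) + 1
    return [((i * tr) ** b) * math.exp(-(i * tr) / c) / peak
            for i in range(n_samples)]

def convolve_events(onset_volumes, n_volumes, hrf):
    """Build a regressor by adding one copy of the HRF at each event onset."""
    regressor = [0.0] * n_volumes
    for onset in onset_volumes:
        for lag, weight in enumerate(hrf):
            if onset + lag < n_volumes:
                regressor[onset + lag] += weight
    return regressor

hrf = gamma_hrf()
sample_regressor = convolve_events([0, 6, 14], n_volumes=30, hrf=hrf)
```

With TR = 1.5 s, the sampled kernel peaks near the third volume after onset (the continuous peak is at b·c ≈ 4.7 s), which is why events separated by only one or two volumes yield overlapping, partially collinear regressors.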

Experiment 1.

For the results shown in Fig. 2, general linear contrasts were performed on the regression coefficients to assess a main effect of condition (Relation or Item) and of load for Relation, Item, and Pseudoword separately (two items vs. one item, two relations vs. one relation, two pseudowords vs. one pseudoword) and for Relations vs. Items (Low-Load) for each event type (sample, delay, test). All contrasts were performed within brain areas defined as task-related by showing elevated activity for Relations + Items compared with baseline. Tests of voxelwise significance were held to P < 0.01 and corrected for multiple comparisons via spatial extent of activation, holding each cluster of voxels to an experiment-wise P < 0.05, based on a Monte Carlo simulation with 1,000 iterations run via the AFNI software package, using a smoothing kernel of 4 mm on the task-related mask.

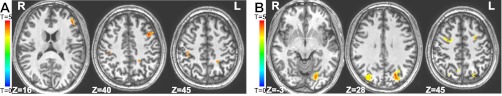

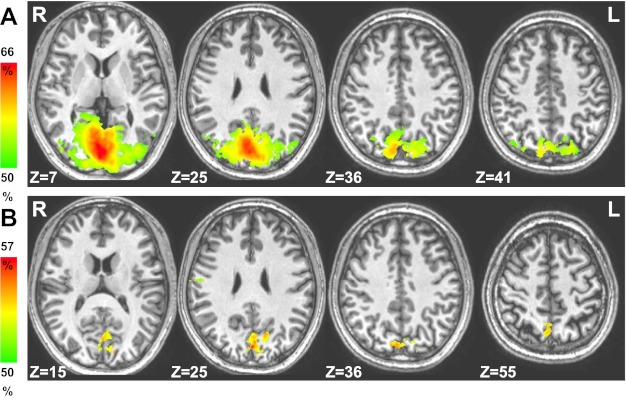

Fig. 2.

Univariate contrasts showing double dissociation between Items and Relations. A: Relations > Items, sample period. B: Items > Relations, delay period. R, right; L, left.

To isolate brain areas showing specificity for Relations, rather than potential confounds of attention, memory load, or verbalization, we then excluded from the Low-Load Relations > Items contrast (Fig. 2A) all areas showing a significant Item-load or Pseudoword-load effect as defined above. Analogously, to isolate areas preferentially responding to Items, we excluded from the low-load Items > Relations contrast (Fig. 2B) all areas showing a significant Relation-load or Pseudoword-load effect. Only correct trials were used.
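The exclusion step amounts to a set difference over voxels. In the sketch below, each contrast is represented as a set of voxel indices that survived thresholding; the function name and the toy indices are hypothetical.

```python
def exclusive_mask(primary, *confounds):
    """Keep voxels significant for the primary contrast and remove any voxel
    that is significant for at least one confound contrast."""
    excluded = set().union(*confounds)
    return set(primary) - excluded

# Toy voxel indices, for illustration only.
relations_gt_items = {1, 2, 3, 4, 5}
item_load_effect = {4, 9}
pseudoword_load_effect = {5, 10}

relation_specific = exclusive_mask(
    relations_gt_items, item_load_effect, pseudoword_load_effect
)
```

The same function applies to the Items > Relations contrast, with the Relation-load and Pseudoword-load effects passed as the confounds.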

Experiment 2: classification analysis.

A GLM was run on each subject's data with separate regressors for each trial period (sample, delay, test) as described above but with two differences: there was no spatial smoothing, and a separate GLM was applied to each run. Only correct trials were used. Depending on the classification performed, there were separate regressors for Relation and Item trials (see Figs. 3 and 4), Item feature value (left side of screen vs. right; top vs. bottom; see Fig. 5), Relation direction (“cued circle left of uncued” vs. right of; above vs. below; see Fig. 6A), and Relation magnitude (“large vs. small horizontal distance between circles”; “large vs. small vertical distance between circles”; see Fig. 6, B and C) for each time period. Classification was performed on the results of the GLM, with the T values for the regressor β weights of each run given as input to a linear support vector machine (SVM) classifier implemented in LIBSVM (Chang and Lin 2001). We used leave-one-run-out cross-validation and report the average classification accuracy over the 11 held-out runs.
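The cross-validation scheme can be illustrated independently of the classifier. The sketch below holds out one run at a time, trains on the remaining runs, and averages accuracy over the held-out runs. To stay dependency-free it substitutes a nearest-mean classifier for the linear SVM used in the study; that substitution, the function names, and the toy patterns are assumptions for illustration only.

```python
def nearest_mean_predict(train, pattern):
    """Stand-in classifier (NOT the study's SVM): assign the label whose mean
    training pattern is closest to `pattern` in squared Euclidean distance."""
    sums, counts = {}, {}
    for label, vector in train:
        accumulator = sums.setdefault(label, [0.0] * len(vector))
        for i, value in enumerate(vector):
            accumulator[i] += value
        counts[label] = counts.get(label, 0) + 1

    def distance(label):
        return sum((total / counts[label] - p) ** 2
                   for total, p in zip(sums[label], pattern))

    return min(sums, key=distance)

def leave_one_run_out_accuracy(runs, predict=nearest_mean_predict):
    """runs: list of runs, each a list of (label, pattern) pairs.
    Returns the mean accuracy over held-out runs."""
    fold_accuracies = []
    for held_out in range(len(runs)):
        train = [example
                 for index, run in enumerate(runs) if index != held_out
                 for example in run]
        test = runs[held_out]
        hits = sum(predict(train, pattern) == label for label, pattern in test)
        fold_accuracies.append(hits / len(test))
    return sum(fold_accuracies) / len(fold_accuracies)

# Toy data: 11 runs, one two-voxel pattern per condition per run.
toy_runs = [[("Item", [1.0 + 0.01 * r, 0.0]), ("Relation", [0.0, 1.0 + 0.01 * r])]
            for r in range(11)]
accuracy = leave_one_run_out_accuracy(toy_runs)
```

Holding out whole runs, rather than individual patterns, keeps training and test data from sharing run-specific noise, because each run's patterns come from a separate GLM.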

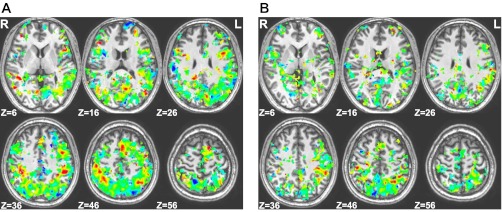

Fig. 3.

Searchlight weight maps for areas showing success in classification of Relation [voxels are hotter colors (red) to the extent that they “prefer” (i.e., were given positive weights by the support vector machine classifier) Relation trials] vs. Item [cooler colors (blue) to the extent that they prefer Items; negative weights] trials during sample (A) and delay (B) periods. Areas of intermediate shades (e.g., green) were those that either had a mix of large positive and negative weights, which were canceled out by smoothing, or had low weights for both conditions.

Fig. 4.

A and B: example weight maps generated by classifier for posterior parietal regions of interest (ROIs), showing an interdigitated pattern of voxel preferences for Relation (hotter colors) or Item (cooler colors) trials at the representative single-subject (A) and group (B) levels. C: classification results of Items vs. Relations within ROIs defined by significantly elevated activity in both Item and Relation trials and no trend toward differential activity between Item and Relation trials, showing that even within areas of common activation, dissociable neural subpopulations can be distinguished by their response patterns. Chance is 50%. BOCC, bilateral occipital cortex; LIPS, left intraparietal sulcus; BDOCC/PP, bilateral dorsal occipital/posterior parietal cortex; BFEF, bilateral frontal eye fields. *P < 0.05; †P < 0.005.

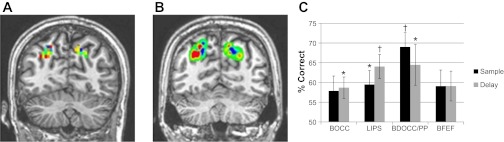

Fig. 5.

Areas in which information about item location could be decoded successfully via searchlight classification during sample (A) and delay (B) periods.

Fig. 6.

Successful searchlight classification of relations. A: classification of relational direction during delay. B and C: classification of relational magnitude in sample (B) and delay (C) periods.

Experiment 2: whole-brain searchlight.

Following the method of Kriegeskorte et al. (2006), as modified by Haynes et al. (2007), we performed a searchlight classification analysis on each individual subject's brain and then combined classification maps across subjects. Classification was performed at every voxel in the brain, within an approximately spherical 891-mm³ (33 voxels) “searchlight” centered on each voxel in turn. Each voxel in the resulting classification accuracy map was assigned the percentage correct of the searchlight centered on that voxel. After obtaining the classification accuracy map for each subject, we applied a Talairach transformation to combine the resulting statistical maps across the 11 subjects. The group mean classification accuracy map was then tested against chance (50% accuracy) with a two-tailed t-test and corrected for multiple comparisons with a whole-brain cluster threshold correction as described above.
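The reported searchlight size is consistent with a sphere of radius two voxels on a 3 × 3 × 3 mm grid. The sketch below enumerates the voxel offsets within that radius. Whether the neighborhood was actually defined this way is an assumption; the voxel dimensions follow from the 240-mm FOV, 80 × 80 matrix, and 3-mm slice thickness given in the acquisition parameters.

```python
from itertools import product

def searchlight_offsets(radius_vox=2):
    """All integer (dx, dy, dz) offsets within `radius_vox` voxels of the center."""
    r = radius_vox
    return [(dx, dy, dz)
            for dx, dy, dz in product(range(-r, r + 1), repeat=3)
            if dx * dx + dy * dy + dz * dz <= r * r]

offsets = searchlight_offsets(2)
n_voxels = len(offsets)                # 33 voxels
voxel_volume_mm3 = 3 * 3 * 3           # 240 mm / 80 voxels in plane, 3-mm slices
searchlight_volume_mm3 = n_voxels * voxel_volume_mm3   # 891 mm^3
```

Adding each offset to a center voxel's coordinates gives the voxels whose values form the feature vector for that searchlight; offsets that fall outside the brain mask would need to be dropped near the edges.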

To create the weight maps in Fig. 3, for each subject, we assigned to each voxel the average training weight given to the voxel by the SVM classifier in every searchlight neighborhood in which it was included. The resulting maps were then blurred using a Gaussian filter of FWHM of 4 mm and then Talairach transformed and combined across subjects. Filtering these maps through the above-chance brain regions in the Relation vs. Item classification accuracy maps for sample and delay periods yielded the maps shown.

Experiment 2: definition of regions of interest for Item vs. Relation classification.

The results of the searchlight classification analyses could be driven by either activation magnitude or pattern differences between conditions. The purpose of this additional classification analysis was to test whether items and relations were represented with different distributed neural patterns of activity within regions that had no overall group-level difference in activation magnitude. A GLM was run on each subject's data with separate regressors for each trial period (sample, delay, test) and condition (Relation, Item), and subject data were then combined for mixed-effects analysis as described above, combining data across all runs as for experiment 1. We separately contrasted Item trials with baseline, Relation trials with baseline, and Item trials with Relation trials, separately for sample and delay periods. For each trial period, we required that both Item and Relation trials had significantly elevated activity compared with baseline and that there was not even a trend toward a difference (voxelwise P > 0.25) between activity in Item and Relation trials. These contrasts each yielded several clusters in occipital, posterior parietal, and posterior PFC. Regions of interest (ROIs) were created from these clusters, and classification analysis was conducted within them, using all voxels in each ROI.
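The voxel-selection rule for these ROIs can be sketched as a conjunction of three tests. The inputs are hypothetical per-voxel statistics: a (t, P) pair for each condition vs. baseline and a P value for the Item vs. Relation contrast. The significance level `alpha=0.01` follows the voxelwise threshold described for experiment 1 and is an assumption here; the sketch omits the cluster-extent correction.

```python
def common_activation_voxels(item, relation, difference_p, alpha=0.01, trend_p=0.25):
    """Select voxels that are significantly elevated above baseline in BOTH
    conditions and show no trend toward a difference between them.

    item, relation: dict voxel -> (t, p) for the condition vs. baseline
    difference_p:   dict voxel -> p for the Item vs. Relation contrast
    """
    selected = []
    for voxel in sorted(item):
        t_item, p_item = item[voxel]
        t_relation, p_relation = relation[voxel]
        elevated = (t_item > 0 and p_item < alpha
                    and t_relation > 0 and p_relation < alpha)
        no_trend = difference_p[voxel] > trend_p
        if elevated and no_trend:
            selected.append(voxel)
    return selected

# Toy statistics for three voxels, for illustration only.
item_stats = {0: (4.1, 0.001), 1: (3.9, 0.002), 2: (0.5, 0.60)}
relation_stats = {0: (4.3, 0.001), 1: (5.2, 0.0005), 2: (3.8, 0.003)}
difference = {0: 0.80, 1: 0.10, 2: 0.02}

roi_voxels = common_activation_voxels(item_stats, relation_stats, difference)
```

Here only voxel 0 qualifies: voxel 1 shows a trend toward a difference (P = 0.10), and voxel 2 is not elevated in Item trials. Requiring P > 0.25 for the difference is deliberately conservative, so that any later classification success within the ROI is unlikely to reflect a difference in mean activation.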

RESULTS

Experiment 1: identifying brain regions selectively involved in Item or Relation processing through univariate analysis, controlling for attention, memory load, and verbalization—behavioral results.

Load effects for Item trials were demonstrated by significantly lower accuracy and longer response times in two-object trials compared with one-object trials (71.9% vs. 91.8%, one-tailed P < 1 × 10⁻⁴; 1.0 s vs. 0.8 s, one-tailed P < 1 × 10⁻¹²). Load effects for Relation trials were demonstrated by significantly lower accuracy and longer response times in two-relation trials compared with one-relation trials (62.8% vs. 90.2%, one-tailed P < 1 × 10⁻⁶; 1.02 s vs. 0.87 s, one-tailed P < 1 × 10⁻⁸). Load effects for Pseudoword trials were demonstrated by significantly lower accuracy and longer response times in two-word trials compared with one-word trials (90.0% vs. 95.7%, one-tailed P < 0.05; 0.85 s vs. 0.71 s, one-tailed P < 1 × 10⁻⁵). These results demonstrate that the experimental manipulation effectively increased working memory load. Relation and Item low-load trials were of similar difficulty: they showed no difference in accuracy (90.2% vs. 91.8%, two-tailed P = 0.34), although Relation trials did show slightly longer response times (0.87 s vs. 0.80 s, two-tailed P < 1 × 10⁻³), presumably reflecting the need to attend to both objects. However, in low-load Relation trials, subjects were significantly more accurate (90.2% vs. 71.9%, P < 1 × 10⁻⁴) and faster (0.87 s vs. 1.0 s, P < 1 × 10⁻⁵) than in the high-load Item trials. These results indicate that the masks used in the fMRI analysis, which were created from the High-Load vs. Low-Load Item contrast, were a good control for difficulty or attentional factors.

Experiment 1: univariate fMRI analysis.

To find cortical areas that were more sensitive to spatial relations than to absolute locations, we subtracted activity for the latter from the former, separately for sample, delay, and test events, for the low-load trials; an analogous procedure was used to find areas more sensitive to item locations than to spatial relations. The results are shown in Fig. 2. These slices show only areas that are specifically sensitive to trial type, because we masked out brain areas showing nonspecific load or verbalization effects (see materials and methods for details). These load effects were strongest in posterior parietal cortex (not shown); the overall results for items vs. relations analyses were qualitatively similar without this masking.

Figure 2A shows two regions in left lateral PFC that were significantly more sensitive to relational than to item-specific information during the sample period: a region just dorsal to the inferior frontal junction (IFJ) and an anterior mid-dorsolateral region. (These areas also showed greater or trending greater activation for low-load Relations compared with the much more challenging high-load Item condition, again suggesting that the difference is not being driven by encoding difficulty.) Bilateral inferior parietal lobule (IPL) also showed sample-period selectivity for relations. No areas showed significantly greater overall activity for Relations than for Items during the delay. There was a significant interaction between trial type and task period in the areas selective for Relations during the sample period, with a greater difference between Relations and Items during the sample period than the delay.

During the delay period, bilateral IPS, bilateral posterior SFS, and the left lingual gyrus showed significantly greater activity for the maintenance of item-specific information, as shown in Fig. 2B. Similar SFS and early visual regions, as well as a medial SPL region, were more active for Items than Relations during the sample period, but this did not quite reach significance. There was a trend in the bilateral IPS region toward greater activity for low-load Items even compared with high-load Relations, providing further evidence that the difference is not driven by maintenance difficulty. There was no significant trial-type by task-period interaction within the Item-selective areas.

Item vs. Relation β weights and relevant control effects for the regions shown in Fig. 2, A and B, are given in Table 1.

Table 1.

Item vs. Relation and control contrast β weights

| Relation Sample | Relation Minus Item | Item Load | Pseudoword Load |
|---|---|---|---|
| IFJ | 0.226 ± 0.05* | 0.067 ± 0.04 ns | 0.014 ± 0.09 ns |
| antPFC | 0.201 ± 0.03* | 0.020 ± 0.05 ns | 0.014 ± 0.13 ns |
| IPL | 0.060 ± 0.01* | 0.045 ± 0.02 ns | 0.028 ± 0.03 ns |

| Item Delay | Item Minus Relation | Relation Load | Pseudoword Load |
|---|---|---|---|
| FEF | 0.045 ± 0.01* | 0.043 ± 0.03 ns | 0.027 ± 0.02 ns |
| IPS | 0.065 ± 0.03* | 0.014 ± 0.03 ns | 0.058 ± 0.05 ns |
| BA18 | 0.079 ± 0.03* | 0.045 ± 0.03 ns | 0.057 ± 0.05 ns |

β weights (±SE), representing percent signal change, for Relation vs. Item contrast in regions shown in Fig. 2, along with those for relevant control contrasts. IFJ, inferior frontal junction; antPFC, anterior prefrontal cortex; IPL, inferior parietal lobule; FEF, frontal eye fields; IPS, intraparietal sulcus; BA18, Brodmann area 18.

*P < 0.05; ns, not significant.

In addition to regions that exhibited an activity difference between relational and item-specific spatial information, Relation and Item trials both showed activation significantly greater than our verbal control task in a very similar set of cortical areas: posterior SFS, SPL, IPL, IPS, and lateral occipital cortex, regions previously identified in our lab as well as others as being part of a broad visual attention and spatial working memory network (Awh et al. 1995; Beauchamp et al. 2001; Corbetta et al. 2002; Courtney et al. 1998; Sala and Courtney 2007); these areas were one focus of our subsequent multivoxel analysis in experiment 2.

Experiment 2: distinguishing overlapping population codes and decoding Item and Relation representations using multivoxel classification.

Multivoxel pattern analysis affords the opportunity to extend these univariate findings in two ways: 1) by identifying regions that are involved in both Item and Relation processing but respond to each with different neural population codes and 2) by identifying which brain regions actually represent the information necessary to perform the Item and Relation tasks. In pursuit of these ends, we conducted multivoxel classification on trials from a second set of subjects who had been given more repetitions of each type of Relation and Item (low-load) trial.

Experiment 2: Item vs. Relation classification. I. searchlight.

Figure 3 shows areas of above-chance classification accuracy for Items vs. Relations as found by whole-brain searchlight. The figure is a “weight map” (see materials and methods). The overall character of the map comports very well with the findings of the univariate analysis of experiment 1 on a separate set of subjects—a preference (higher weights) for Items in SPL/precuneus, IPS, SFS, and posterior cingulate cortex and for Relations in IPL, lateral and anterior PFC, and anterior cingulate cortex. In addition, this classification success spans across a significantly greater extent of cortex than those regions identified by the experiment 1 GLM analysis, but since the attention, load, and verbalization controls were not available in experiment 2, further analysis is required to demonstrate task selectivity.
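As a rough illustration of how a searchlight defines its local voxel sets, the sketch below enumerates the voxels within a fixed radius of a center voxel, clipped to the volume bounds. The radius, the volume shape, and the function names are assumptions made for illustration only; the parameters actually used are those given in materials and methods. A classifier would then be trained and tested on the voxels of each neighborhood in turn.

```python
from itertools import product


def searchlight_offsets(radius):
    """Integer (dx, dy, dz) offsets lying within `radius` voxels of the origin."""
    r = int(radius)
    return [
        (dx, dy, dz)
        for dx, dy, dz in product(range(-r, r + 1), repeat=3)
        if dx * dx + dy * dy + dz * dz <= radius * radius
    ]


def searchlight_neighborhood(center, shape, radius):
    """Voxel coordinates in the sphere around `center` that fall inside `shape`."""
    voxels = []
    for dx, dy, dz in searchlight_offsets(radius):
        x, y, z = center[0] + dx, center[1] + dy, center[2] + dz
        if 0 <= x < shape[0] and 0 <= y < shape[1] and 0 <= z < shape[2]:
            voxels.append((x, y, z))
    return voxels


# Hypothetical 5 x 5 x 5 volume with a 2-voxel radius.
interior = searchlight_neighborhood((2, 2, 2), (5, 5, 5), 2)
corner = searchlight_neighborhood((0, 0, 0), (5, 5, 5), 2)
```

Neighborhoods near the edge of the volume contain fewer voxels than interior ones, which is one reason searchlight maps are usually restricted to a brain mask.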

Experiment 2: Item vs. Relation classification. II. ROIs.

To investigate whether there are overlapping but dissociable neural populations engaged by Item vs. Relation trials, in addition to the regions showing overall different magnitudes of activation (identified in experiment 1, shown in Fig. 2, and confirmed as Item vs. Relation selective by the searchlight analysis), we conducted ROI-based classification analysis within areas that, in the GLM analysis, showed significantly elevated activity for both Item and Relation trials compared with baseline but no suggestion of overall differential activity magnitude between Item and Relation trials (see materials and methods for details). Consistent with experiment 1, this yielded several areas in occipital, posterior parietal, and posterior prefrontal cortex (similar to the mixed-preference areas in the weight map in Fig. 3). Before classification, we also normalized the activation patterns to remove mean activation differences. As Fig. 4 shows, even with overall regional activation differences removed, Item trials could be distinguished from Relation trials by their characteristic voxel activation patterns in occipital and posterior parietal cortex during encoding and maintenance.
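To make the normalization step concrete, here is a minimal sketch that subtracts each trial pattern's mean across voxels before classification, so that only the spatial profile of activity within the ROI can drive the result. The leave-one-out nearest-centroid classifier is a simple stand-in chosen for brevity and is not claimed to be the classifier used in the study; all function names and toy values are hypothetical.

```python
from statistics import mean


def remove_pattern_mean(pattern):
    """Subtract the across-voxel mean so overall ROI magnitude is discarded."""
    m = mean(pattern)
    return [v - m for v in pattern]


def centroid(patterns):
    """Voxelwise average of a list of equal-length patterns."""
    return [mean(column) for column in zip(*patterns)]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def loo_accuracy(patterns, labels):
    """Leave-one-out nearest-centroid accuracy on mean-centered patterns."""
    centered = [remove_pattern_mean(p) for p in patterns]
    correct = 0
    for i, (held_out, true_label) in enumerate(zip(centered, labels)):
        # Training set: every trial except the held-out one.
        train = [(p, l) for j, (p, l) in enumerate(zip(centered, labels)) if j != i]
        classes = sorted({l for _, l in train})
        centroids = {c: centroid([p for p, l in train if l == c]) for c in classes}
        predicted = min(classes, key=lambda c: squared_distance(held_out, centroids[c]))
        if predicted == true_label:
            correct += 1
    return correct / len(labels)


# Toy trials: overall level varies within each class, so only the
# spatial profile distinguishes Item from Relation (illustrative values).
patterns = [
    [1.0, 0.0, 1.0, 0.0],
    [3.0, 2.0, 3.0, 2.0],
    [6.0, 5.0, 6.0, 5.0],
    [5.0, 6.0, 5.0, 6.0],
    [0.0, 1.0, 0.0, 1.0],
    [2.0, 3.0, 2.0, 3.0],
]
labels = ["item", "item", "item", "relation", "relation", "relation"]
accuracy = loo_accuracy(patterns, labels)
```

Because each pattern is centered on its own mean, a region that simply responds more strongly to one trial type would not be separable by this procedure; above-chance accuracy has to come from differences in the voxel-by-voxel profile.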

Experiment 2: information classification for Items and Relations.

A strong test of the selectivity of a region for involvement in a task is whether it is possible to decode task-related representations in that area. To decode where in the brain specific item locations are being encoded and maintained, we next tested searchlight classification accuracy for item position. Figure 5 shows results averaged over accuracy for “left” vs. “right” and “top” vs. “bottom” (of screen) classification. During the sample period, as might be expected because of the retinotopic difference in the visual stimuli, high classification accuracy was seen in visual cortex and IPS. During the delay, when no stimuli were present, information regarding location could be decoded in precuneus and medial SPL.

To detect representations of spatial relational information, we conducted two sets of classifications: relational direction (cued circle left of vs. right of or above vs. below uncued circle) and relational magnitude (“small distance between circles” vs. “large distance between circles”). Figure 6 shows searchlight results for this analysis. For classification of relational direction during the delay period (no regions reached significance during the sample), there was above-chance accuracy in middle and anterior regions of left lateral PFC and in left IPL. Classification for relational magnitude looked like a combination of relational direction information and object-specific information, with significant accuracy seen in anterior lateral prefrontal and precuneus/posterior cingulate regions. Note that the direction classification and the magnitude classification were independent analyses, but both implicated anterior left lateral PFC in the representation of relational information.

DISCUSSION

Our univariate results show that distinct brain regions are differentially involved in item vs. relational spatial processing. Several regions of lateral PFC—notably the left IFJ and anterior PFC—are more involved in processing relational information, whereas more posterior areas in the SFS and precentral sulcus are more involved in the encoding and maintenance of item-specific information. Within the parietal lobe, anterior IPL regions are more involved in relational processing, whereas SPL/precuneus and posterior IPS are more involved with item processing. Multivoxel pattern classification analysis supports these findings and extends them. As Fig. 3 shows, a similar pattern of voxel selectivity emerged in experiment 2 with a new set of subjects. More remarkably, this analysis also demonstrates that information regarding the particular spatial relation held in working memory can be decoded in anterior PFC and the IPL, whereas information regarding the particular object location held in working memory can be decoded in occipital cortex and SPL/precuneus, indicating that these areas are not merely involved in nonspecific processing but actually carry the representations needed to do the different tasks.

It was possible a priori that subjects could have used alternative, nonrelational strategies, such as visual or verbal encoding, to perform the relational trials, but these results suggest that subjects did in fact encode abstract, relational information. If subjects had adopted the strategy of visually encoding and maintaining both circles in memory in the relation condition, then the neural response would have resembled that of the two-item condition, in which case, we would have masked out all low-load relation > low-load item areas when we masked out high-load item > low-load item areas in Fig. 2. That we still see Relation trial-specific activity suggests that subjects were not primarily using this strategy. If the subjects had adopted a verbal or mixed visual-verbal strategy, then in a contrast with the low-load item conditions, only verbal areas would appear. We attempted to address verbalization concerns by masking areas showing a verbal (pseudoword) load effect. Although no such masking can be perfect, the fact that we do see areas beyond typical language areas activated for relations suggests that this strategy was not a major factor in our results. More generally, the fact that items do activate some regions more than relations suggests that relations are not simply “items plus something”. Finally, our success in classifying the informational content of relations again argues that subjects were in fact encoding the relations as instructed. Our item load control is meant to remove the effects of encoding difficulty due to attentional shifting, memory limits, or other nonspecific factors, and the behavioral data showing significantly better performance in terms of accuracy and reaction times in the low-load relation compared with high-load item condition suggest that the generic encoding demands of the two-item condition are even greater than for the relation condition, and thus areas sensitive to those would be masked out. 
At the least, there must be something specific to the encoding of relational information (e.g., assigning roles or calculating an object-centered reference frame) that is activating these regions. The fact that in some of these regions, we find success in classifying the representational content of relations further suggests that they are not simply involved in nonspecific encoding difficulty but that actual relation-specific information is being encoded.

The results of the current experiment, therefore, clearly demonstrate that there are cortical regions containing patterns of neural activity representing absolute retinotopic locations of individual objects that are distinct from those containing patterns of neural activity representing relative locations between objects. The question remains, however, whether these results provide evidence that absolute and relative spatial locations are truly different “types” of information in the same sense that auditory information is different from visual information, or spatial information is fundamentally different from verbal information. Individual object locations are also relative in the sense of being referenced to the fixation stimulus, the frame of the projection screen, or the retinal FOV. Are object-relative positions the same type of information but simply in a different reference frame relative to the cued object instead of to the fixation stimulus? The distinction may lie in whether the type of information being held in working memory can be maintained as a sensory or motor representation. There is evidence in both monkeys and humans that working memory for retinotopic locations of individual objects is maintained via feedback from the FEF, SEF, and dorsolateral PFC to parietal cortex (Reinhart et al. 2012). When task-appropriate, working memory for sensory information appears to bias selective attention in early visual areas [e.g., Sala and Courtney 2009; for review, see Gazzaley and Nobre (2012)]. The results of the current study for the Item trials are highly consistent with such a mechanism. In contrast, there is no relevant sensory representation that can be used to successfully complete the Relation trials. Correspondingly, the mechanism for maintenance of relational spatial information suggested by the current results appears to be more intra-prefrontal. 
This difference in working memory mechanism implies that the brain treats these as fundamentally different types of information, although further research will be needed on this point.

The involvement of the left IFJ during the sample period of the Relation trials is intriguing. An earlier study from our lab found a very similar area selectively activated for the updating of rule (compared with item) information in working memory [Montojo and Courtney 2008; see also Brass and von Cramon (2004) for more evidence that this region is implicated in updating of task rules]. Whereas we could not decode the representational content of relations in this region in the current study, the close concordance between the two studies suggests at least that there may be commonalities between rule and relational processing that recruit this region. Perhaps the relational trials required updating of procedural rules for determining a match (e.g., “find the cued circle then look left”), or perhaps rules can be thought of as a sort of relation, expressed as a conjunction among a stimulus, a context, and an action.

The sensitivity of rostrolateral PFC to both relational magnitude and relational direction information may reflect the integration of these two types of relational information required by the task. Previous research has suggested that this region is preferentially involved in integration (Bunge et al. 2009; Kroger et al. 2002), and a series of studies by Christoff and colleagues (Smith et al. 2007) has focused on frontopolar cortex as a region specialized for relational processing. However, it is not simply generic processing requirements that activate this area, because we were able to decode the actual representational content of the information that was being maintained. This is certainly an abstract form of information, not tied to any stimulus properties, and therefore, this result supports the notion that there may be something of a hierarchy, or at least a gradient, of representational abstractness within lateral PFC (Badre and D'Esposito 2009). In this vein, it is noteworthy that explicit relational representations only appear during the delay period, in the absence of visual stimuli, whereas explicit item-specific information is available during the sample period. This may be because converting relational information from a stimulus-bound format into an abstract format suitable for reasoning takes time or because the presence of visual anchors during the sample period provides an alternative to this conversion.

Our analysis also revealed a superior/inferior distinction within parietal lobe. The preferential involvement of SPL in item processing is consistent with earlier work in our lab (Montojo and Courtney 2008) and with its known role in directing spatial attention (Yantis et al. 2002). [It is likely that the SPL is involved in relational (and rule) encoding as well as item but to a lesser extent, because relational information can be converted into an abstract format.] The preferential involvement of IPL for relational processing makes sense in the context of the lesion data [e.g., construction apraxia and Gerstmann's syndrome; see Rusconi et al. (2010) for review], which reveal deficits with tasks requiring the sort of explicit knowledge of spatial relations probed in our study when IPL is damaged. It is also consistent with the suggestion that SPL is more involved with online visuomotor control, whereas inferior parietal cortex is part of a separate system involved in the working memory coding of spatial relationships (Milner and Goodale 1995; Milner et al. 1999). Brodmann's areas 39 and 40 are relatively new evolutionarily, and their involvement in this task, which requires coding of abstract, structural information in a format suitable for reasoning, suggests that these representations may underlie this distinctively human cognitive ability.

Univariate analysis identified visual, working memory, and attentional areas that were commonly activated in these tasks, but our multivoxel pattern classification analysis reveals that even within occipital and parietal regions that are activated by both the Item and Relation tasks without exhibiting a clear item vs. relation preference, the two trial types can be discriminated (Fig. 4), suggesting that there may be distinct neural subpopulations representing the different information types. This is consistent with single-unit studies that show coding of spatial relations within areas that have also been implicated in coding of object-specific locations: V4 (Pasupathy and Connor 2001), posterior inferotemporal cortex (Brincat and Connor 2004), superior parietal area 7a (Chafee et al. 2007), and SEF (Olson and Gettner 1995). It may be that these regions are involved in implicit coding of spatial relations for movement or recognition purposes.

There is a considerable overlap between the posterior frontal and parietal areas that we find implicated in item-specific processing (Figs. 2B and 3) and the “dorsal attention system” (DAS) network that has been found via resting-state functional connectivity analysis (Fox et al. 2005). In addition, the lateral prefrontal and anterior IPL areas that we find to be preferentially involved in representing relational information (Figs. 2A and 3) are remarkably similar to the “frontoparietal control system” (FPCS) that has been identified recently by functional connectivity (Vincent et al. 2008). These two systems include regions that are frequently activated together in tasks that involve exogenous attention (DAS) or “cognitive control” (FPCS). The DAS is involved in selecting and maintaining more concrete, stimulus-related information (Corbetta and Shulman 2002), whereas the FPCS may contribute to higher cognition by representing more conceptual, abstract information not tied to any specific stimulus or motor response (Fuster 2001). It seems plausible that the areas that show an intermixing of relation and item sensitivities (Fig. 3), which include portions of the DAS in addition to visual cortex, reflect relational representations useful for implicit processes as suggested above, whereas those that are within the FPCS reflect explicit representations useful for spatial reasoning. Of note, recent work comparing tractography and resting-state functional connectivity in macaques and humans suggests that humans have projections from inferior parietal cortex to anterior PFC that other primates do not (Mars et al. 2011).

Whereas it is common to describe lateral PFC in terms of processing requirements, such as “control” or “monitoring”, it is notable that these functions require access to relational information, for instance, knowledge of sequential order, relative preferences, or explicit rule mappings. Here, we demonstrate that explicit information about the spatial relations required in this task is indeed represented in anterior-lateral PFC. We also show that even without any task requirement for manipulation, spatial object-specific and abstract, relational information dissociates. These findings support the view that increasing demand for relational representations is an important component of the caudal-to-rostral gradient along lateral PFC.

GRANTS

Support for this work was provided by grants from the National Institute of Mental Health (1R01MH082957) and the National Science Foundation (0745448).

DISCLOSURES

The authors declare no potential conflicts of interest, financial or otherwise.

AUTHOR CONTRIBUTIONS

Author contributions: C.M.A. and S.M.C. conception and design of research; C.M.A. performed experiments; C.M.A. analyzed data; C.M.A. and S.M.C. interpreted results of experiments; C.M.A. prepared figures; C.M.A. drafted manuscript; C.M.A. and S.M.C. edited and revised manuscript; S.M.C. approved final version of manuscript.

REFERENCES

- Awh E, Smith EE, Jonides J. Human rehearsal processes and the frontal lobes: PET evidence. Ann NY Acad Sci 769: 97–118, 1995.

- Badre D. Cognitive control, hierarchy, and the rostro-caudal organization of the frontal lobes. Trends Cogn Sci 12: 193–200, 2008.

- Badre D, D'Esposito M. Is the rostro-caudal axis of the frontal lobe hierarchical? Nat Rev Neurosci 10: 659–669, 2009.

- Beauchamp MS, Petit L, Ellmore TM, Ingleholm J, Haxby JV. A parametric fMRI study of overt and covert shifts of visuospatial attention. Neuroimage 14: 310–321, 2001.

- Bor D, Duncan J, Wiseman RJ, Owen AM. Encoding strategies dissociate prefrontal activity from working memory demand. Neuron 37: 361–367, 2003.

- Brainard DH. The Psychophysics Toolbox. Spat Vis 10: 433–436, 1997.

- Brass M, von Cramon DY. Decomposing components of task preparation with functional magnetic resonance imaging. J Cogn Neurosci 16: 609–620, 2004.

- Brincat SL, Connor CE. Underlying principles of visual shape selectivity in posterior inferotemporal cortex. Nat Neurosci 7: 880–886, 2004.

- Bunge SA, Helskog EH, Wendelken C. Left, but not right, rostrolateral prefrontal cortex meets a stringent test of the relational integration hypothesis. Neuroimage 46: 338–342, 2009.

- Chafee MV, Averbeck BB, Crowe DA. Representing spatial relationships in posterior parietal cortex: single neurons code object-referenced position. Cereb Cortex 17: 2914–2942, 2007.

- Chang CC, Lin CJ. LIBSVM: A Library for Support Vector Machines (Online). http://www.csie.ntu.edu.tw/~cjlin/libsvm [2001].

- Christoff K, Prabhakaran V, Dorfman J, Zhao Z, Kroger JK, Holyoak KJ, Gabrieli JDE. Rostrolateral prefrontal cortex involvement in relational integration during reasoning. Neuroimage 14: 1136–1149, 2001.

- Colby CL, Goldberg ME. Space and attention in parietal cortex. Annu Rev Neurosci 22: 319–349, 1999.

- Corbetta M, Kincade JM, Shulman GL. Neural systems for visual orienting and their relationships to spatial working memory. J Cogn Neurosci 14: 508–523, 2002.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci 3: 201–215, 2002.

- Courtney SM. Attention and cognitive control as emergent properties of information representation in working memory. Cogn Affect Behav Neurosci 4: 501–516, 2004.

- Courtney SM, Petit L, Maisog JM, Ungerleider L, Haxby JV. An area specialized for spatial working memory in human frontal cortex. Science 279: 1347–1351, 1998.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res 29: 162–173, 1996.

- Fox MD, Snyder AZ, Vincent JL, Corbetta M, Van Essen DC, Raichle ME. The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proc Natl Acad Sci USA 102: 9673–9678, 2005.

- Funahashi S, Bruce CJ, Goldman-Rakic PS. Mnemonic coding of visual space in the monkey's dorsolateral prefrontal cortex. J Neurophysiol 61: 331–349, 1989.

- Fuster JM. The prefrontal cortex—an update: time is of the essence. Neuron 30: 319–333, 2001.

- Gazzaley A, Nobre AC. Top-down modulation: bridging selective attention and working memory. Trends Cogn Sci 16: 129–135, 2012.

- Geake JG, Hansen PC. Neural correlates of intelligence as revealed by fMRI of fluid analogies. Neuroimage 26: 555–564, 2005.

- Haynes JD, Sakai K, Rees G, Gilbert S, Frith C, Passingham RE. Reading hidden intentions in the human brain. Curr Biol 17: 323–328, 2007.

- Hummel JE, Holyoak KJ. Relational reasoning in a neurally plausible cognitive architecture. Curr Dir Psychol Sci 14: 153–157, 2005.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci USA 103: 3863–3868, 2006.

- Kroger JK, Sabb FW, Fales CI, Bookheimer SY, Cohen MS, Holyoak KJ. Recruitment of anterior dorsolateral prefrontal cortex in human reasoning: a parametric study of relational complexity. Cereb Cortex 12: 477–485, 2002.

- Luck SJ, Vogel EK. The capacity of visual working memory for features and conjunctions. Nature 390: 279–281, 1997.

- Mars RB, Jbabdi S, Sallet J, O'Reilly JX, Croxson PL, Olivier E, Noonan MP, Bergmann C, Mitchell AS, Baxter MG, Behrens TEJ, Johansen-Berg H, Tomassini V, Miller KL, Rushworth MFS. Diffusion-weighted imaging tractography-based parcellation of the human parietal cortex and comparison with human and macaque resting-state functional connectivity. J Neurosci 31: 4087–4100, 2011.

- Milner AD, Goodale MA. The Visual Brain in Action. New York: Oxford University Press, 1995.

- Milner AD, Paulignan Y, Dijkerman HC, Michel F, Jeannerod M. A paradoxical improvement of misreaching in optic ataxia: new evidence for two separate neural systems for visual localization. Proc Biol Sci 266: 2225–2229, 1999.

- Mohr HM, Goebel R, Linden DEJ. Content- and task-specific dissociations of frontal activity during maintenance and manipulation in visual working memory. J Neurosci 26: 4465–4471, 2006.

- Montojo CA, Courtney SM. Differential neural activation for updating rule versus stimulus information in working memory. Neuron 59: 173–182, 2008.

- Munk MHJ, Linden DEJ, Muckli L, Lanfermann H, Zanella FE, Singer W, Goebel R. Distributed cortical systems in visual short-term memory revealed by event-related functional magnetic resonance imaging. Cereb Cortex 12: 866–876, 2002.

- Ollinger JM, Shulman GL, Corbetta M. Separating processes within a trial in event-related functional MRI. I. The method. Neuroimage 13: 210–217, 2001a.

- Ollinger JM, Shulman GL, Corbetta M. Separating processes within a trial in event-related functional MRI. II. Analysis. Neuroimage 13: 218–229, 2001b.

- Olson CR, Gettner SN. Object-centered direction selectivity in the supplementary eye field of the macaque monkey. Science 269: 985–988, 1995.

- Pasupathy A, Connor CE. Shape representation in area V4: position-specific tuning for boundary conformation. J Neurophysiol 86: 2505–2519, 2001.

- Penn DC, Holyoak KJ, Povinelli DJ. Darwin's mistake: explaining the discontinuity between human and nonhuman minds. Behav Brain Sci 31: 109–178, 2008.

- Petrides M. Lateral prefrontal cortex: architectonic and functional organization. Philos Trans R Soc Lond B Biol Sci 360: 781–795, 2005.

- Rastle K, Harrington J, Coltheart M. 358,534 nonwords: the ARC Nonword Database. Q J Exp Psychol A 55: 1339–1362, 2002.

- Reinhart RMG, Heitz RP, Purcell BA, Weigand PK, Schall JD, Woodman GF. Homologous mechanisms of visuospatial working memory maintenance in macaque and human: properties and sources. J Neurosci 32: 7711–7722, 2012.

- Rusconi E, Pinel P, Dehaene S, Kleinschmidt A. The enigma of Gerstmann's syndrome revisited: a telling tale of the vicissitudes of neuropsychology. Brain 133: 320–332, 2010.

- Sala JB, Courtney SM. Binding of what and where during working memory maintenance. Cortex 43: 5–21, 2007.

- Sala JB, Courtney SM. Flexible working memory representation of the relationship between an object and its location as revealed by interactions with attention. Atten Percept Psychophys 71: 1525–1533, 2009.

- Serences JT. A comparison of methods for estimating the event-related BOLD signal in rapid fMRI. Neuroimage 21: 1690–1700, 2004.

- Silver M, Kastner S. Topographic maps in human frontal and parietal cortex. Trends Cogn Sci 13: 488–495, 2009.

- Smith R, Keramatian K, Christoff K. Localizing the rostrolateral prefrontal cortex at the individual level. Neuroimage 36: 1387–1396, 2007.

- Vincent JL, Kahn I, Snyder AZ, Raichle ME, Buckner RL. Evidence for a frontoparietal control system revealed by intrinsic functional connectivity. J Neurophysiol 100: 3328–3342, 2008.

- Waltz JA, Knowlton BJ, Holyoak KJ, Boone KB, Mishkin FS, de Menezes Santos M, Thomas CR, Miller BL. A system for relational reasoning in human prefrontal cortex. Psychol Sci 10: 119–125, 1999.

- Wendelken C, Bunge SA, Carter CS. Maintaining structured information: an investigation into functions of parietal and lateral prefrontal cortices. Neuropsychologia 46: 665–678, 2008.

- Yantis S, Schwarzbach J, Serences JT, Carlson RL, Steinmetz MA, Pekar JJ, Courtney SM. Transient neural activity in human parietal cortex during spatial attention shifts. Nat Neurosci 5: 995–1002, 2002.