Abstract

This study examined the effectiveness of using qualitatively different reinforcers to teach self-control to an adolescent boy who had been diagnosed with an intellectual disability. First, he was instructed to engage in an activity without programmed reinforcement. Next, he was instructed to engage in the activity under a two-choice fixed-duration schedule of reinforcement. Finally, he was exposed to self-control training, during which the delay to a more preferred reinforcer was initially short and then increased incrementally relative to the delay to a less preferred reinforcer. Self-control training effectively increased time on task to earn the delayed reinforcer.

Key words: delay discounting, self-control training, intellectual disability

Self-control is conceptualized as behavior that results in access to a delayed, more preferred consequence rather than to an immediate, less preferred consequence. Conversely, delay discounting occurs when an organism discounts the value of the delayed, more preferred consequence by selecting an immediate, less preferred consequence (Ainslie, 1974; Schweitzer & Sulzer-Azaroff, 1988). Results of studies with persons with disabilities show that a combination of two procedures—gradually increasing the delay to the more preferred reinforcer and presenting a distracter during the delay interval—teaches self-control more effectively than either procedure implemented alone (e.g., Dixon & Falcomata, 2004; Dixon & Tibbetts, 2009).

These earlier studies demonstrated that self-control could be taught when different amounts of the same reinforcer are used (e.g., access to one crossword puzzle immediately vs. access to three crossword puzzles after a delay). In these cases, as with much of the choice literature, the reinforcers were economically substitutable and differed only in amount. However, in daily life, individuals often choose between reinforcers that differ not only in amount but in kind (i.e., qualitatively different reinforcers). The effectiveness of teaching self-control using qualitatively different reinforcers is unknown. Evidence from research on the differential outcome effect in discrimination learning and multibehavior repertoires suggests that using qualitatively different reinforcers may enhance learning (Davison & Nevin, 1999; Goeters, Blakely, & Poling, 1992). However, these basic findings have not yet been extended to an applied setting.

The present study examined the effectiveness of using qualitatively different reinforcers to teach self-control to an adolescent boy with intellectual disability. First, we measured the duration of responding on a task in the absence of programmed reinforcement. Next, he was offered two choices: a more preferred reinforcer after responding for a specified duration on a task or a less preferred reinforcer immediately. Finally, he was exposed to the self-control training, which consisted of responding on a two-choice fixed-duration progressive schedule of reinforcement.

METHOD

Participant

Stevie was a 16-year-old boy who had been diagnosed with mild mental retardation, spastic cerebral palsy, and cortical blindness. He was enrolled in a residential educational program for students with intellectual disabilities but did not live at the facility. He was recruited for this study because he was reported by staff to have difficulty engaging in tasks for extended periods to earn delayed rewards.

Stimulus Preference Assessment

Prior to the study, the first author interviewed the participant and staff members to generate a list of seven preferred stimuli available in the classroom, including edible items (e.g., potato chips), toys (e.g., computer, Walkman, Jenga), and activities (e.g., taking a walk, visiting a friend). Identified stimuli and activities were used in a pictorial multiple-stimulus preference assessment without replacement (MSWO; DeLeon & Iwata, 1996), conducted prior to each session during choice baseline and self-control training conditions, to identify less preferred and more preferred reinforcers. The first- and fourth-ranked stimuli identified in each MSWO were classified as more and less preferred, respectively. Potato chips were the more preferred reinforcer chosen most often (45% of opportunities), and visiting a friend and walking were the less preferred reinforcers chosen most often (26% of opportunities each). Stevie accessed a stimulus or activity for 2 to 5 min during choice baseline, self-control training, and generalization sessions.
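The classification rule described above (first-ranked stimulus as more preferred, fourth-ranked as less preferred) can be sketched in a few lines. This is only an illustration: the function name `classify_reinforcers` and the selection order in the example are hypothetical and are not data from the study.

```python
def classify_reinforcers(selection_order):
    """Pick the more and less preferred reinforcers from one MSWO.

    selection_order: stimuli listed in the order the participant selected
    them (rank 1 first). Following the rule stated in the text, rank 1 is
    treated as more preferred and rank 4 as less preferred.
    """
    if len(selection_order) < 4:
        raise ValueError("at least four ranked stimuli are needed")
    return {
        "more_preferred": selection_order[0],  # first-ranked stimulus
        "less_preferred": selection_order[3],  # fourth-ranked stimulus
    }


# Hypothetical selection order for one session (illustrative only).
example_order = [
    "potato chips",
    "computer",
    "Jenga",
    "taking a walk",
    "Walkman",
    "visiting a friend",
]
example_result = classify_reinforcers(example_order)
```

Because the MSWO was repeated before each session, the pair of reinforcers returned by such a rule could change from one session to the next.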

Setting and Materials

All sessions were conducted in the classroom. Prior to the study, the experimenter interviewed staff to identify age-appropriate distracting tasks that Stevie could complete independently. For the primary task, matching-to-sample workbook sheets and a writing utensil were used. A bin, markers, and containers were used to assess generalization during a sorting task.

Dependent Variables and Data Collection

The experimenter observed and collected data on two aspects of the participant's behavior: the frequency with which he chose the immediate, less preferred reinforcer or the delayed, more preferred reinforcer, and the length of time he engaged in the task. Reinforcer choice was defined as the participant pointing to a card with a picture of the reinforcer. Engagement in the primary task (matching-to-sample workbook sheets) was defined as drawing a line to match one picture to the same picture in a field of dissimilar pictures. Engagement in the generalization task (sorting markers) was defined as grasping a marker and placing it in one of four corresponding containers based on its color.

Procedure

An average of 5.4 trials were conducted each session during the natural baseline, choice baseline, self-control training, and generalization conditions.

Natural baseline

The purpose of this condition was to establish a baseline level of task performance in the absence of reinforcement contingencies. At the start of the session, the experimenter placed the workbook sheets and writing utensils on the desk in front of the participant, modeled the task (e.g., drawing a line from the picture on the left to an identical or identical but silhouetted image on the right), and then provided an instruction to begin. Sessions were terminated when the participant failed to initiate the task within 30 s or stopped engaging in the task for 5 s. The next trial began immediately after the previous trial (approximately 5 s).

Choice baseline

Procedures were identical to those in the natural baseline, except a two-choice fixed-duration schedule of reinforcement was in effect. Prior to giving the instruction to begin, the experimenter held up two picture icons associated with the more and less preferred reinforcers and stated, “If you would like to receive the [less preferred reinforcer] for doing nothing, point to it. If you would like to [do the task] until I say stop to receive the [more preferred reinforcer], point to it.”

The experimenter did not give any additional instructions or prompts. Trials ended when one of the following conditions was met: (a) the participant did not initiate the task within 30 s of the instruction to begin, (b) he stopped engaging in the task for 5 s, (c) he said “I'm done,” (d) he chose the immediate, less preferred reinforcer, or (e) he chose the delayed, more preferred reinforcer and performed at criterion levels. Programmed reinforcement was delivered for the last two conditions. The next trial began immediately after either the reinforcing event or the previous trial.

The targeted duration of time on task during the choice baseline was originally determined by multiplying the mean value of time on task during the natural baseline (42 s) by 4 (168 s). Stevie engaged in the activity for 168 s and gained access to the delayed, more preferred reinforcer; therefore, the criterion was altered so that the required time on task was equal to 10 times the mean length of time on task during the natural baseline (420 s).

Self-control training

A two-choice fixed-duration progressive-duration schedule of reinforcement was in effect. Except for the required time on task to gain access to the more preferred reinforcer, conditions were the same as during the choice baseline. The criterion for determining the amount of time required to engage in the task to obtain the more preferred reinforcer was similar to that in previous self-control studies (Dixon & Falcomata, 2004). The initial requirement to obtain the delayed reinforcer was set at the mean duration of on-task behavior during the natural baseline (42 s). After the participant chose and gained access to the delayed, more preferred reinforcer in two of three trials, the criterion for access to the delayed, more preferred reinforcer was increased by 42 s until his time on task equaled 10 times the mean duration of on-task behavior during the natural baseline (420 s).
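The progression of the criterion can be illustrated with a short sketch. The 42-s starting value, 42-s increment, and 420-s terminal value come from the text; the function names and the choice to count "two of three trials" over a window that restarts after each increase are assumptions made for illustration, not details reported in the study.

```python
BASELINE_MEAN_S = 42                # mean time on task in natural baseline (from the text)
STEP_S = 42                         # size of each criterion increase (from the text)
TERMINAL_S = 10 * BASELINE_MEAN_S   # terminal criterion, 420 s (from the text)


def criterion_steps(start_s=BASELINE_MEAN_S, step_s=STEP_S, terminal_s=TERMINAL_S):
    """List every criterion value from the first step to the terminal value."""
    return list(range(start_s, terminal_s + 1, step_s))


def run_schedule(outcomes, start_s=BASELINE_MEAN_S, step_s=STEP_S,
                 terminal_s=TERMINAL_S):
    """Return the criterion (in seconds) in effect on each trial.

    outcomes: one boolean per trial; True means the participant chose the
    delayed, more preferred reinforcer and met the criterion.
    Assumption: the two-of-three rule is evaluated over the most recent
    trials at the current criterion, and the window restarts after an increase.
    """
    criterion = start_s
    window = []
    per_trial = []
    for met in outcomes:
        per_trial.append(criterion)
        window = (window + [bool(met)])[-3:]   # keep at most the last three trials
        if sum(window) >= 2 and criterion < terminal_s:
            criterion = min(criterion + step_s, terminal_s)
            window = []                        # restart the count at the new criterion
    return per_trial
```

Under these assumptions, `criterion_steps()` yields ten values (42 s through 420 s), so at least two successful trials at each step are needed before the terminal criterion is in effect.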

Generalization

Generalization probes were conducted during the natural baseline (A) and self-control training (C) conditions. Procedures were the same, except the participant was instructed to engage in a sorting task rather than the matching-to-sample task. During self-control training, reinforcement was provided after he engaged in the task for 420 s.

Interobserver Agreement and Procedural Fidelity

During the stimulus preference assessment, a second observer independently collected data on the student's choices during 31% of all trials. Agreement was 100%. A second observer independently collected data on choice selection during 36% of all trials, including generalization probes, and agreement was 100%. Interobserver agreement data on the duration of engagement in the task were collected during 30% of all trials across all conditions. Agreement, which averaged 99% (range, 97% to 100%), was calculated by dividing the smaller duration observed by the larger duration observed and converting the ratio to a percentage. An observer collected procedural fidelity data during 29% of all trials by completing a checklist composed of the steps of the procedure. Procedural fidelity was 100%.
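The duration agreement calculation described above (smaller duration divided by larger duration, expressed as a percentage) can be written as a small function. The function name and the handling of two zero durations are assumptions for illustration; the durations in the usage note are hypothetical and are not data from the study.

```python
def duration_agreement(observer_a_s, observer_b_s):
    """Percentage agreement between two observers' durations for one trial.

    Computed as the smaller duration divided by the larger duration,
    multiplied by 100.
    """
    if observer_a_s < 0 or observer_b_s < 0:
        raise ValueError("durations must be non-negative")
    smaller = min(observer_a_s, observer_b_s)
    larger = max(observer_a_s, observer_b_s)
    if larger == 0:
        # Assumption: if both observers record no engagement, treat it as full agreement.
        return 100.0
    return 100.0 * smaller / larger


def mean_agreement(paired_durations):
    """Mean percentage agreement across (observer_a_s, observer_b_s) pairs."""
    scores = [duration_agreement(a, b) for a, b in paired_durations]
    return sum(scores) / len(scores)
```

For example, hypothetical records of 97 s and 100 s for the same trial would give `duration_agreement(97, 100)`, an agreement of 97%.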

Design

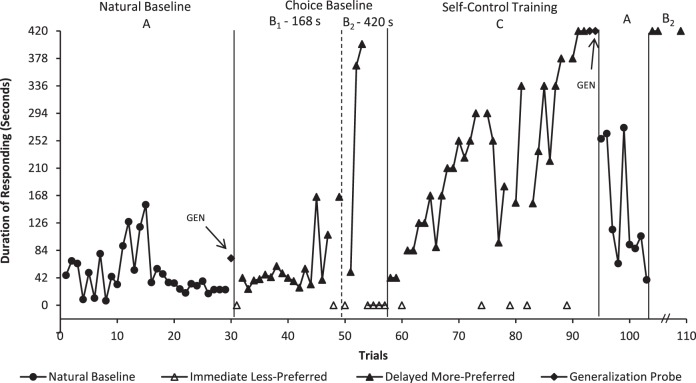

A multicomponent ABCAB reversal design was used. First, a naturalistic baseline was conducted to evaluate the participant's duration of responding in the absence of programmed reinforcement (A). Next, a choice baseline was conducted as a control condition (B1 and B2), by which changes in response preference following treatment could be evaluated. In B1, the criterion to earn the more preferred reinforcer was 168 s. In B2, the criterion to earn the more preferred reinforcer was 420 s. After the participant chose the immediate, less preferred reinforcer during three consecutive trials in B2, self-control training was implemented (C). After Stevie met the criterion in training (C), the natural baseline (A) was reintroduced and was followed by the choice baseline (B2) to determine whether the participant switched his preferences after experiencing self-control training.

RESULTS AND DISCUSSION

Figure 1 shows the duration of Stevie's on-task behavior during all phases of the study, as well as his choices for the immediate or delayed reinforcer during the choice baseline and self-control training conditions. During the natural baseline, Stevie engaged in relatively low levels of on-task behavior (M = 42 s) on the matching-to-sample workbook task. His time on task increased when he accessed the more preferred reinforcer after engaging in the task for 168 s. When the criterion to access the more preferred reinforcer was set at 420 s, Stevie initially increased his time on task, but then chose the immediate, less preferred reinforcer during three subsequent trials. During self-control training (C), he demonstrated criterion performance (420 s) after 35 trials. He also demonstrated criterion performance during the response generalization probe, suggesting that self-control generalized to a task for which no training occurred. When the natural baseline was reimplemented, the mean duration of responding decreased to 154 s. He chose the more preferred reinforcer and demonstrated criterion performance during the final choice baseline condition, which included a maintenance probe that was conducted 41 days later.

This study examined the effectiveness of teaching self-control to an adolescent boy with mild intellectual disability using qualitatively different reinforcers. Previous research has shown that individuals with intellectual disabilities can be taught to tolerate delays for quantitatively larger amounts of a reinforcer (Dixon & Falcomata, 2004; Dixon et al., 1998; Dixon & Tibbetts, 2009; Schweitzer & Sulzer-Azaroff, 1988), but individuals in applied settings are often asked to choose between qualitatively different reinforcers for which they show varying preferences. Demonstration of self-control with qualitatively different reinforcers mirrors more naturalistic choice and may make reinforcement interventions more effective. Preferences are rarely stable and vary for any given individual, both for this population (Zhou, Iwata, Goff, & Shore, 2001) and for typically developed individuals (Wine, Gilroy, & Hantula, 2012).

These findings suggest that individuals with intellectual disabilities can be taught self-control by progressively increasing the duration of task-related behavior required to produce a more preferred reinforcer. Furthermore, self-control may generalize to tasks for which no self-control training occurred. Further exploration of qualitatively different reinforcers and their effects on acquisition and generalization of self-control skills is warranted. During self-control training, our participant's duration of responding progressively increased to meet requirements to earn the delayed, more preferred reinforcer. However, the design did not permit replication of this effect within or between subjects. Future studies could utilize a multiple baseline design across participants, which would permit replication of our results.

Footnotes

Action Editor, Chris Ninness

Figure 1. Duration of Stevie's task engagement during natural baseline (A), choice baseline (B1 and B2), and self-control training (C) conditions. The solid triangles indicate that the participant chose the delayed, more preferred reinforcer; the open triangles indicate that the participant chose the immediate, less preferred reinforcer; GEN = generalization probe.

REFERENCES

- Ainslie G. W. Impulse control in pigeons. Journal of the Experimental Analysis of Behavior. (1974);21:485–489. doi:10.1901/jeab.1974.21-485.

- Davison M, Nevin J. A. Stimuli, reinforcers, and behavior: An integration. Journal of the Experimental Analysis of Behavior. (1999);71:439–482. doi:10.1901/jeab.1999.71-439.

- DeLeon I. G, Iwata B. A. Evaluation of a multiple-stimulus presentation format for assessing reinforcer preferences. Journal of Applied Behavior Analysis. (1996);29:519–533. doi:10.1901/jaba.1996.29-519.

- Dixon M. R, Falcomata T. S. Preference for progressive delays and concurrent physical therapy exercise in an adult with acquired brain injury. Journal of Applied Behavior Analysis. (2004);37:101–105. doi:10.1901/jaba.2004.37-101.

- Dixon M. R, Hayes L. J, Binder L. M, Manthley S, Sigman C, Zdanowski D. M. Using a self-control training procedure to increase appropriate behavior. Journal of Applied Behavior Analysis. (1998);31:203–210. doi:10.1901/jaba.1998.31-203.

- Dixon M. R, Tibbetts P. A. The effects of choice on self-control. Journal of Applied Behavior Analysis. (2009);42:243–252. doi:10.1901/jaba.2009.42-243.

- Goeters S, Blakely E, Poling A. The differential outcomes effect. The Psychological Record. (1992);42:389–411.

- Schweitzer J. B, Sulzer-Azaroff B. Self-control: Teaching tolerance for delay in impulsive children. Journal of the Experimental Analysis of Behavior. (1988);50:173–186. doi:10.1901/jeab.1988.50-173.

- Wine B, Gilroy S, Hantula D. A. Temporal (in)stability of employee preference for rewards. Journal of Organizational Behavior Management. (2012);32:58–64. doi:10.1080/01608061.2012.646854.

- Zhou L, Iwata B. A, Goff G. A, Shore B. A. Longitudinal analysis of leisure-item preferences. Journal of Applied Behavior Analysis. (2001);34:179–184. doi:10.1901/jaba.2001.34-179.