Abstract

Collaboration has become a dominant mode of scientific inquiry, and good collaborative processes are important for ensuring scientific quality and productivity. Often, the participants in these collaborations are not collocated, yet distance introduces challenges. There remains a need for evaluative tools that can identify potential collaboration problems early and provide strategies for managing and addressing collaboration issues. This paper introduces a new research and diagnostic tool, the Collaboration Success Wizard (CSW), and provides two case studies of its use in evaluating ongoing collaborative projects in the health sciences. The CSW is designed both to validate and refine existing theory about the factors that encourage successful collaboration and to promote good collaborative practices in geographically distributed team-based scientific projects. These cases demonstrate that the CSW can promote reflection and positive change in collaborative science.

Keywords: Collaboration, Success, Evaluation, Common ground, Collaboration readiness, Technology readiness

INTRODUCTION

Collaboration is becoming a dominant mode of scientific inquiry, especially in the health sciences where there is increasing interest in moving more quickly from fundamental research to generalizable interventions in medical practice and policy. As teamwork becomes more central, good collaborative processes become even more important for ensuring scientific quality and productivity. This is especially true with interdisciplinary and geographically dispersed teams. While fields like Organizational Science, Team Science, and Computer-Supported Cooperative Work have developed a significant body of theoretical research about collaborative practices, there remains a need for evaluative tools that can identify potential collaboration problems early and provide strategies for managing and correcting collaboration issues.

In this paper, we introduce the Collaboration Success Wizard (CSW), an online evaluation and reflection tool for collaborative scientific projects. Following the efforts toward translational science in medical fields, we are working to develop useful tools that translate our developing theoretical knowledge about successful collaborative work into practical interventions to provide better collaborative outcomes. The goal of the Wizard is to provide a snapshot of a project in order to uncover strong collaborative practices that should be encouraged, as well as challenging aspects of the collaboration that should be monitored or improved. Rather than focusing on providing post-mortem explanations of collaboration successes and failures, this theory-based tool is designed to evaluate projects in vivo, or even during their planning stages. Evaluating teams early in their collaborations makes it possible to provide immediate feedback about collaboration strengths and weaknesses and suggest interventions that could improve the possibility of achieving project goals. At the same time, our tool also provides valuable research data aimed at validating, refining, and extending our understanding of collaborative processes in science and healthcare. To date, we have used the CSW to evaluate 12 projects, with 149 individuals completing the survey.

After providing a review of relevant background literature, we briefly summarize the theory behind the Collaboration Success Wizard. We then describe how the CSW was developed and implemented. We provide examples of two specific projects in the medical domain that have used the CSW instrument to inform their collaborations. Finally, we discuss the ongoing process of validating and refining the underlying theory, the benefits and limits of this approach, and our expectations for future work in this project.

BACKGROUND

Evaluating collaboration

Teams are the core of today’s organizations in all sectors [1, 2] and, increasingly, such teams are not collocated [3, 4]. There are numerous reasons for the increasing prevalence of distributed teams. Perhaps the most important is that problems are larger, requiring both more people and people with diverse backgrounds and approaches. Page [5] has described in great detail the potential advantages of having diverse conceptual perspectives and problem-solving strategies in coming up with insights and solutions to difficult problems. Often, the right people are not all located at the same institution. Modern communication and computational technologies have given us a broad range of tools with which to try to overcome the challenges of distance.

However, despite the availability of increasingly sophisticated cyberinfrastructure to support all of this activity, working at a distance is still difficult [3, 6, 7]. Cummings and Kiesler [8] studied a series of projects funded by the National Science Foundation and found that, on average, those projects involving distributed teams had less success than those that were collocated. Olson and Olson [3] reviewed data from studies of both scientific projects and corporate teams, documenting many of the challenges and setting the stage for the Wizard’s theoretical foundations by identifying four of the five categories of collaboration practice described in the next section.

Various approaches have been taken to evaluating collaborative projects, and the Theory of Remote Scientific Collaboration (TORSC) overlaps with many of them. Larson and LaFasto’s model of effective teamwork draws on an extensive study of existing teams, and outlines a number of key factors including (among others) clear goals, unified commitment, a collaborative climate, and principled leadership [9]. Mattessich et al., drawing on a broad review of the literature, identify similar factors, but whereas Larson and LaFasto focus on individuals and practices within the team, Mattessich et al. understand collaboration as a “well-defined relationship entered into by two or more organizations” [10]. Like Larson and LaFasto, we focus on individual collaborative practices, but we are also motivated by the “Wilder Collaboration Factors Inventory,” a diagnostic tool provided by Mattessich et al. We also extend these frameworks by including a novel focus on factors related to geographic dispersion and technology use, as described in the next section.

TORSC

The TORSC brings together findings from a broad literature on collaboration with findings from a large study of scientific collaborations. This study produced qualitative summaries of over 200 collaborative projects and in-depth analyses of 15 in order to derive a set of factors that relate to success in distributed, collaborative, scientific teams [11].

A unique aspect of the TORSC model is that it is specifically directed at geographically distributed collaborations that rely on electronic information and communication technologies. Achieving successful collaborations can be especially problematic when collaborators are separated by distance. Distance threatens collaborators’ ability to share context and develop common ground [12]. Trust can be more difficult to establish and maintain when the collaborators are separated from each other [13, 14]. While various information and communication technologies can alleviate some of the effects of distance, they are not a panacea, and the technologies can lead to new collaborative problems themselves [3]. For example, while it is now possible to have real-time electronic meetings with many participants from around the world, this requires scheduling meetings across time zones, frequently with some participants having to adjust their schedules to participate outside of normal work times. Unlike many frameworks for evaluating collaboration, TORSC deals directly with the ways that geographic distribution and technology use affect collaborative practices.

Other evaluation frameworks often use strict definitions of collaboration that insist on a strong shared goal or well-defined relationships [9], or create a hierarchy of types of relationships that distinguish collaboration from cooperation and coordination [10, 15]. Rather than enforce a strict definition of collaboration, TORSC embraces the diversity of scientific projects that involve multiple people working together from different locations. Collaborations in the scientific domain sometimes look very similar to the small, single-purpose, highly interdependent teams that populate much of the team literature, but they need not. They often involve groups with multiple and open-ended goals (e.g., “make transformative contributions”) [11], shifting organizational structures and fuzzy membership [16], and relatively long time horizons [17]. TORSC is less about defining what a collaboration should be and more about recognizing the interactions among task requirements, organizational processes, and technology use. For example, TORSC suggests that collaboration will be easier when participants have worked together and have common ground. Consequently, when it is important to have a diverse set of collaborators, the chances of success can be improved by providing opportunities for participants to get to know each other or by explicitly defining jargon and documenting terminology differences to help develop a common language. Likewise, making processes more formal or improving technology capability can be a strategy to help manage larger or more distributed projects.

TORSC also takes a broad view of success for collaborative projects. Scientific collaborations can have many different kinds of success, including effects on the science itself (e.g., new discoveries, publications, decreasing unnecessary duplication, etc.), improvements in scientific careers (e.g., greater diversity of scientists), educational improvements, improved public perception, or new tools or infrastructure for future scientific work. The basis of TORSC rests not on predicting specific kinds of success, but instead on the finding that those projects that had stronger collaborative practices tended to be considered more successful by their stakeholders (participants, funders, etc.).

TORSC provides a detailed model of many variables that contribute to collaborative success. TORSC has five major classes of factors: the nature of the work, common ground, collaboration readiness, management and decision making, and technology readiness (see Table 1). Factors that deal with the nature of the work have to do with the task requirements, interdependencies, and ambiguities of the work itself. Common ground deals with the need to have mutual knowledge, beliefs, and/or assumptions [18]. Collaboration readiness deals with individuals’ motivations to collaborate, alignment of goals, trust, and collective empowerment. Management, planning, and decision making deal with the leadership of the collaboration, the effectiveness and timeliness of communication, clarity of roles and responsibilities, and decision making. Finally, technology readiness deals with the available technological infrastructure, availability of support and training for technology use, and the fit of the technologies to the project’s communication needs. TORSC provides 44 specific items under these five major classes, asking about detailed aspects of motivation, planning, technical tools and support, etc. Both the high-level categories and specific items are detailed in [11].

Table 1. The components of TORSC

| Team challenges | Organizational strategies |
|---|---|
| Nature of the work | Structure tasks so that interdependence is reduced |
| Common ground | Develop and maintain a common base of shared knowledge and vocabulary for geographically distributed teams |
| Collaboration readiness | Implement incentive structures that support a mix of competition and cooperation |
| Management and decision making | Provide good leadership. Team members should feel that decisions are made fairly and clearly |
| Technology readiness | Provide sufficient technical support to enable remote workers to conduct their work |

TRANSLATING THEORY TO PRACTICE: THE COLLABORATION SUCCESS WIZARD

Our aim with this project was to develop a generalizable and reliable tool that can be used to evaluate the strengths and potential weaknesses of a collaborative project and to provide constructive strategic interventions to address any collaboration problems. As such, the tool has two primary goals. First, the tool is intended to provide useful diagnostics and strategic recommendations that participants in project teams can use to improve their collaborative processes. Second, the tool is intended to provide data to test the validity and generalizability of the TORSC framework.

The survey instrument

The CSW is an online web-based survey. Each of the collaborative factors identified in TORSC was operationalized into survey questions. The unit of analysis is a collaborative project. For each project, we invite all of the individual project members to complete the Wizard, across all roles and including both central and peripheral members. This research is conducted in accordance with the appropriate ethical standards for human subjects behavioral research and has been approved and is overseen by the appropriate university institutional review board. The CSW went through an iterative development process that focused on assuring the clarity of the question phrasing as well as the usability of the interface itself.

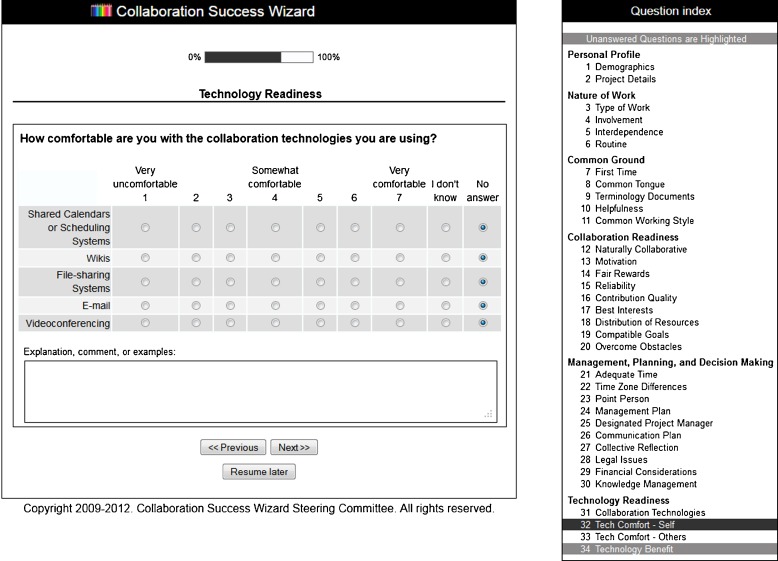

We develop a project membership list with the assistance of a project coordinator or central member. Each project member is sent an e-mail with instructions and individualized login credentials. After respondents log in to the survey, they are presented with study information and a consent declaration detailing their participation and the data we collect. If respondents consent to participate, they click through to begin the survey. Each question is presented on a single page. A navigation tree along the side of the screen shows one's progress through the survey and enables respondents to revisit prior questions if they wish to reconsider prior responses. We have found that a typical respondent takes about 25 minutes to complete the Wizard. Participants have the option of signing off and returning later if they cannot complete it in one sitting. Each question is accompanied by a free-text field in which respondents can explain a response (if their situation does not match the question well), provide examples, and comment on either the substance of the question or its comprehensibility. Several questions employ conditional branching, such that the next question asked depends on the answer given (Fig. 1).

Fig. 1. The Collaboration Success Wizard interface
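The conditional branching described above can be pictured as a lookup from the current question and its answer to the next question. The following is a minimal sketch under stated assumptions, not the Wizard's actual implementation: the question identifiers, the question wording, and the helpers `next_question` and `walk` are all hypothetical and chosen only for illustration.

```python
# Hypothetical question graph: every ID and text below is invented for illustration.
# "next" maps an answer to the ID of the following question; "*" is a default branch.
QUESTIONS = {
    "shares_data": {
        "text": "Will collaborators share data across sites?",
        "next": {"yes": "data_plan", "no": "meeting_frequency"},
    },
    "data_plan": {
        "text": "Is there a data management plan?",
        "next": {"*": "meeting_frequency"},
    },
    "meeting_frequency": {
        "text": "How often does the whole team meet?",
        "next": {},  # last question: no successor
    },
}


def next_question(current_id, answer):
    """Return the ID of the next question, or None when the survey ends."""
    branches = QUESTIONS[current_id]["next"]
    return branches.get(answer, branches.get("*"))


def walk(start_id, answers):
    """Follow the branches from start_id, given a dict of answers keyed by question ID."""
    path = []
    current = start_id
    while current is not None:
        path.append(current)
        current = next_question(current, answers.get(current))
    return path
```

In this sketch, a respondent who answers "no" to the first question skips the follow-up about a data management plan, while a respondent who answers "yes" sees it before moving on.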

The CSW comes in three versions: past, present, and future. The questions in turn are phrased appropriately for these different circumstances. The past version is useful for reflection on a completed project, as a “lessons learned” exercise. The present version can help with mid-course corrections or adjustments to a project. And the future version can be helpful for planning a collaboration and even in writing a proposal for funding.

A distinguishing feature of the CSW is that when a respondent finishes the survey, they can view a report that provides feedback about their survey responses. We inform participants about the areas in which their collaboration, according to the TORSC framework, appears to be strong and which areas might require some attention. For the latter, we offer some suggestions for actions they could take to mitigate the risk. This report is generated automatically. Each potential response in the survey is assigned a value that can be used to generate a score for each of the five high-level categories in TORSC. These scores are used to generate automatic summary feedback at the beginning of the report. Most of the individual questions also have specific “praise” or “remedy” scripts that may be generated depending on the responses. For example, if data sharing will be an important part of the project, we recommend developing a data management plan that identifies appropriate formats for data sharing, policies about data access, and plans for long-term archiving of data. Considerable effort went into developing the feedback so it would be a smooth, as well as informative, narrative.
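The scoring step described above can be sketched in a few lines. This is an illustration only: the paper does not give the actual response values, scoring rule, or cut-offs, so the 0-to-1 value scale, the use of a simple mean, the 0.5 threshold, and the function names below are all assumptions.

```python
from statistics import mean

# The five high-level TORSC categories named in the paper.
CATEGORIES = [
    "nature of the work",
    "common ground",
    "collaboration readiness",
    "management and decision making",
    "technology readiness",
]


def category_scores(responses):
    """Average the values of a respondent's answers within each category.

    responses: list of (category, value) pairs; the 0..1 value scale is an assumption.
    """
    by_category = {}
    for category, value in responses:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        by_category.setdefault(category, []).append(value)
    return {category: mean(values) for category, values in by_category.items()}


def summary_feedback(scores, threshold=0.5):
    """Split categories into apparent strengths and areas needing attention.

    The threshold is a hypothetical cut-off, not one taken from the CSW.
    """
    strengths = sorted(c for c, s in scores.items() if s >= threshold)
    concerns = sorted(c for c, s in scores.items() if s < threshold)
    return strengths, concerns
```

A per-question "praise" or "remedy" script could be attached in the same way, by looking up a text keyed on the question and the chosen response.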

Depending on the time of year, the size of the project, and other factors, we set the survey period for each project to two to four weeks. After closing the survey, we aggregate the data collected across the project, manually analyze the aggregate data, and write a summary report for the project leadership (only aggregate measures are provided and no individuals are identified). In many ways, this report is similar to the individual report, but with the aggregated data, we can also report where there is considerable variation among respondents. Just as important, we can also analyze the free-text comments provided by respondents, which tend to add a great deal of richness to the numeric data.
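One way to surface "considerable variation among respondents" in the aggregate data is to report a spread measure alongside each item's mean. The sketch below is an assumption about how this could be done, not a description of the authors' manual analysis; the function name, the 0-to-1 value scale, and the 0.3 spread threshold are hypothetical.

```python
from statistics import mean, pstdev


def aggregate_item(values, spread_threshold=0.3):
    """Summarize one survey item across all respondents in a project.

    values: per-respondent numeric values for the item (scale is an assumption).
    spread_threshold: hypothetical cut-off above which responses count as divergent.
    """
    if not values:
        raise ValueError("no responses for this item")
    spread = pstdev(values)  # population standard deviation across respondents
    return {
        "n": len(values),
        "mean": mean(values),
        "spread": spread,
        "high_variation": spread > spread_threshold,
    }
```

An item with a middling mean but a high spread suggests that respondents disagree, which is a different finding from everyone giving a middling answer. Only aggregate values appear in the result, in keeping with the rule that no individuals are identified.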

Both the individual and aggregate reports acknowledge strengths and suggest strategies for mitigating vulnerabilities, where possible. The goal of the reports is not to provide a summary judgment or score. Rather, the goal is to facilitate reflection by the respondents and to motivate discussion among collaborators towards agreement on collective action where warranted. Such consequent discussions are the means by which TORSC can be translated into practice and, we believe, by which collaborations can be improved.

Generating a report that can motivate discussion is challenging. There is a limit to the number of questions the CSW can ask and this limits the specificity and detail of the report's feedback. To be most effective, collaborators need to integrate the feedback with their own, more detailed knowledge to understand what it means in their context and to determine what actions they may undertake to improve their collaboration. In the next section, we detail two projects’ experiences with the CSW and how they have integrated the results into their discussions and practices.

EXPERIENCES WITH THE WIZARD

We have administered the CSW to 12 projects at the time of writing. To illustrate the kinds of collaborations that have successfully used the Wizard as part of their reflective practice, we describe two cases from the medical arena, their use of the CSW, and its consequent impact on their collaborations.

Clinical and translational science project in pediatrics

One group we studied was associated with the University of California Irvine Medical Center and was in the process of applying to the National Institutes of Health (NIH) for a Clinical and Translational Science Award (CTSA). Since this was a proposal to create such a center, we administered a version of the Wizard that phrased the questions to focus on planning a future collaboration. We were given a list of 69 names to invite to participate, 43 of whom completed the Wizard survey for a 62 % response rate. We presented both an oral and a written summary of the results for the CTSA steering committee.

In this initial report, we identified specific concerns across all of the TORSC categories. For example, more than half of the respondents were concerned that the participants lacked a common vocabulary and working style—a common issue in interdisciplinary collaborations. As this was a new collaboration, participants had not yet had a chance to develop trusting relationships with each other, creating issues of collaboration readiness. Survey participants reported concerns about both intellectual property and financial issues, linked in part to budget uncertainty in the proposal process. However, we did find that the participants were, overall, optimistic about the success of the proposal and confident in the project leadership.

Key results from our report, and descriptions of how they planned to address the vulnerabilities we identified, were included in their subsequent proposal to the NIH. The project was consequently successful in obtaining an award and, in the fall of 2010, launched the Institute for Clinical and Translational Science (ICTS) at UC Irvine. A follow-up interview with the ICTS Director revealed that the report helped to stimulate internal discussion of collaborative issues and raised awareness of issues that need to be monitored, noting that “We love to show that we learned from this experience. I’m eager to keep this going … to include this in our renewal (proposal), to say that we started out with the Wizard, we kept working with you, and we’ll continue to do so.”

Some of the issues in our report have essentially worked themselves out. For example, once the project was funded and the budget was settled, budget uncertainty was less of a concern. However, even though participants are getting to know each other and building trust over time, developing common ground remains a challenge, especially when there is turnover within the project. In order to continually monitor these areas, they engage in frequent internal evaluations and have a staff person dedicated to tracking issues that arise in the collaborations. They have developed new documentation to formalize various collaborative processes and have also established a new Clinical Trials Office that provides collaboration support.

We are working with the ICTS to plan another evaluation with the CSW to gauge the efficacy of these interventions, understand how the project has changed, and identify any new issues that may have arisen.

Study of frontline nurse engagement in detecting operation failures

We studied another translational healthcare initiative with a landmark network study entitled “Small Troubles, Adaptive Responses (STAR-2): Frontline Nurse Engagement in Quality Improvement,” which engaged 14 hospitals in an investigation of operational problems that frontline nurses work around on a daily basis [19]. The study was conducted by the Improvement Science Research Network (ISRN), which coordinated principal investigators (PIs), coordinators, and research associates at each hospital to record data about work disruptions onto cards at the bedside and to enter the data into a web-based repository.

We asked 70 participants, across ten different hospitals involved in the study, to complete the CSW survey. Seventeen did so, for a response rate of 24.3 %. Although we did not have enough responses from each site to make comparisons between sites, the aggregated data yielded some useful insights.
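The response rates reported for the two cases follow directly from the counts given in the text. As a quick check, here is a small sketch; the function name is an assumption introduced for illustration.

```python
def response_rate(completed, invited):
    """Percentage of invited members who completed the survey, to one decimal place."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return round(100 * completed / invited, 1)


# Counts taken from the two cases described in this paper.
ctsa_rate = response_rate(43, 69)   # pediatrics CTSA proposal
star2_rate = response_rate(17, 70)  # STAR-2 nursing study
```

With these counts, the CTSA case comes to 62.3 (reported as 62 %) and the STAR-2 case to 24.3, matching the figures in the text.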

Overall, the responses suggest that the project is well-positioned to be a successful collaboration, with many of the factors that we have found to be related to success already in place. Participants are collaborative and helpful; they have a lot of common ground both in how they talk and in their work practices; the project manager is strong and the practices of managing a project like this are primarily in place; and the technology being used and people’s comfort level with it are sufficient to suggest there are no vulnerabilities on that front. A key strength identified by respondents was a “protocol implementation kit,” provided by the coordinating center at the outset of the study, which integrated several success factors in TORSC. This kit documented study goals and procedures, specified what to do when problems arose, provided a common language, and served as a communication plan and a knowledge management plan. One tension identified by the CSW was that respondents did not agree about the extent of interaction that was expected between STAR-2 collaborators at different sites. The PIs and the coordinating center sought to minimize interactions between sites in the service of methodological and analytic rigor, yet the nurses wanted to learn from other sites to improve their own healthcare practices. For future studies, the PIs will look for ways to manage expectations from the outset of a study and to facilitate learning across sites without sacrificing rigor.

In a follow-up interview, the leadership of this nursing research study agreed that they benefitted from their CSW experience: “This is the first national collaborative that we’ve ever conducted within the ISRN … so this has given us confirmation that the policies and procedures we have created to manage a national, multi-site, quality improvement (research) project are indeed useful to our team of researchers … it is a beneficial report back to us, definitely. It really does help us gather information to improve the success of our future collaborations, particularly about managing expectations.”

Summary across the two cases in health care

In both of these cases, the leadership was well respected and the participants predicted a high degree of potential success. Where issues were raised, the projects are addressing them. In the case of the ICTS, over time, the leadership was able to make the participants more aware of and more comfortable with the financials and to lessen concern about the disposition of intellectual property rights. Participants grew to be more comfortable with different working styles and vocabularies. In the case of STAR-2, most of the factors leading to success were in place, the leadership was well respected, and most of the participants thought the project would be successful. Through this survey, however, they were able to identify a desire by study volunteers to interact with and to learn from peers at other sites and can consider ways to facilitate that in future studies. Comments about the value of the CSW in both cases were very positive.

DISCUSSION

Validation and extension

As we discussed above, one of the goals of this project is to provide validation of the survey itself and of the TORSC framework. Our validation efforts are continuing on several fronts. First, we are conducting statistical analyses of the data from the first 135 participants across all projects. We have completed a question-by-question review of all of the items in the CSW. For each question we analyzed the overall distribution of answers and the differences in means and distributions across the different projects. We also analyzed the respondents’ free-text answers to determine whether any of the questions caused confusion. As a result, we have edited a number of items to provide new phrasing, additional explanatory information, or a modified set of responses.

We are also beginning to analyze the validity of TORSC itself. We are asking whether the items correlate with success measures as predicted. Our only success metric at present is respondents’ ratings of predicted success. However, we intend to follow up with a number of the projects in the future to provide richer metrics of success and better test the theory’s predictions.
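To make "correlate with success measures" concrete, the sketch below computes a Pearson correlation between one item's values and respondents' predicted-success ratings. The paper does not state which statistic is used, so Pearson's r is an assumption here; because survey responses are often ordinal, a rank-based measure might be preferred in practice. The function name is hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences of numbers."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of cross-products and squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return cov / sqrt(var_x * var_y)
```

Here `xs` would hold one item's values across respondents and `ys` the same respondents' predicted-success ratings; a positive coefficient for an item that TORSC expects to support success would be consistent with the theory.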

This work will also help us extend TORSC beyond its current instantiation. For example, TORSC currently provides a simple set of factors that predict successful collaborations. Once we have success metrics across a number of projects, we will be able to generate relative weights for the TORSC factors and discuss the conditions that may moderate the relationships.

Although the Wizard is based on TORSC, which emerged from studies of scientific collaborations, over 90 % of the survey questions are not specific to scientific collaborations, but address issues common to most dispersed collaborations. As a result, we are working to extend the CSW framework to include a much wider range of kinds of collaborations.

Practical intervention

Given the feedback we have received from projects that have used the CSW (including the two discussed in detail above), we are confident that the CSW is proving useful as a way to generate reflection and discussion around collaborative practices. The CSW, however, not only highlights strengths and potential weaknesses, but also suggests specific strategies for addressing these concerns. While we have generally received positive feedback in this area, some participants expressed concern that the suggested remedies were too general or did not take into consideration other local contingencies. In order to evaluate the efficacy of these strategies, we are conducting follow-up studies with several of the projects that have used the CSW. We will administer the survey to several projects at multiple points in their collaboration to gauge how their practices have changed over time. We will also conduct qualitative investigations (including interviews, observations, and document analysis) for focal projects to better understand how the CSW results were received, interpreted, and used within the collaborations. We are working to increase the breadth of collaborations using the Wizard, but an enduring challenge is identifying and enrolling those less successful collaborations that would improve our validation work and could potentially benefit most from the Wizard.

CONCLUSION

The Collaboration Success Wizard demonstrates the utility of creating theory-based evaluative instruments both to help people create successful collaborations and to provide data for theoretical validation. The CSW is an operationalization of the Theory of Remote Scientific Collaboration by Olson et al. [11] that allows us to both (a) validate and extend TORSC, and (b) provide a diagnostic tool that allows collaborative projects to investigate, reflect on, and improve their collaborations. To date, the CSW has been administered to 177 users from 12 projects, two of which were discussed in detail in this paper. Validation efforts are continuing as additional data are generated, and follow-up qualitative investigations will allow us to further determine the success of the suggested strategies on collaborative practices.

The CSW and TORSC take a slightly different approach than many other frameworks for evaluating collaboration in that they embrace the breadth of definitions of collaboration and versions of success. The CSW recognizes that collaboration takes many forms, but that each of those forms creates trade-offs for managing the collaboration. For example, TORSC says that projects that have high task interdependency across sites tend to be less successful. The CSW uses this finding to create an opportunity for reflection: if we find that there are high task interdependencies, the CSW would encourage the project to recognize the risk factor, and would suggest that the project consider whether the work can be rearranged to reduce this risk factor, or alternatively, to provide additional attention and resources to mitigate the risk (perhaps by having regular face-to-face all-hands meetings).

This project fits into a larger translational science agenda, modeled on the advances that have been made in health and medical fields. Behavioral scientists in Organizational Behavior, Computer-Supported Cooperative Work, Science of Team Science, and related fields have made great strides in understanding what makes collaborative work successful. However, it has remained difficult to translate these theoretical contributions into concrete practical outcomes, and widely useful and usable diagnostic tools remain rare. The CSW provides another instrument in the collaboration toolbox.

Acknowledgments

The primary programmers for the Wizard have been Sameer Halai, Rahim Sonawalla, Coby Bassett, and Jingwen Zhang. Useful technical assistance was provided by Mark Madrilejo, Sajeev Cherian, Nina McDonald, and Melinda Choudhary. Financial support for the development of the Wizard has come from the Army Research Institute grant W91WAW-07-C-0060, the National Science Foundation grants OCI 1025769 and IIS 0085951, Google, and the Donald Bren Foundation. The Institute for Clinical and Translational Science at UC Irvine is supported by NIH grant UL1 TR000153. STAR-2 and the ISRN at the University of Texas Health Science Center San Antonio were supported by NIH/NINR grant 1RC2NR011946-01.

Conflict of interest

The authors assert they have no financial relationships with the organizations that sponsored this research. We have full control of all primary data, which the journal may review if requested.

Ethical standards

This research was conducted in compliance with appropriate ethical standards and with the approval of the University of California, Irvine Institutional Review Board.

Implications

Practice: The Collaboration Success Wizard provides a tool to evaluate and encourage reflection about distributed teams’ collaborative practices.

Policy: While team science promises greater scientific discoveries and translation of research into practice, research shows that achieving these outcomes is difficult and additional attention must be paid to ensuring good collaborative processes.

Research: Further research is needed to develop and validate appropriate collaboration strategies for different models of scientific teamwork.
