Abstract

Cochlear implant (CI) users can achieve remarkable speech understanding, but there is great variability in outcomes that is only partially accounted for by age, residual hearing, and duration of deafness. Results might be improved with the use of psychophysical tests to predict which sound processing strategies offer the best potential outcomes. In particular, the spectral-ripple discrimination test offers a time-efficient, nonlinguistic measure that is correlated with perception of both speech and music by CI users. Features that make this “one-point” test time-efficient, and thus potentially clinically useful, are also connected to controversy within the CI field about what the test measures. The current work examined the relationship between thresholds in the one-point spectral-ripple test, in which stimuli are presented acoustically, and interaction indices measured under the controlled conditions afforded by direct stimulation with a research processor. Results of these studies include the following: (1) within individual subjects there were large variations in the interaction index along the electrode array, (2) interaction indices generally decreased with increasing electrode separation, and (3) spectral-ripple discrimination improved with decreasing mean interaction index at electrode separations of one, three, and five electrodes. These results indicate that spectral-ripple discrimination thresholds can provide a useful metric of the spectral resolution of CI users.

INTRODUCTION

The use of cochlear implants (CIs) has led to remarkable successes, such as mean open-set sentence recognition scores of around 80% in quiet and without visual cues, where 70% is considered sufficient to support a telephone conversation (Wilson and Dorman, 2008; Zeng et al., 2008; Rubinstein, 2012). However, there is great variability in outcomes that is only partially accounted for by age, duration of deafness, and degree of residual hearing. Poor understanding of the factors that contribute to individual performance is a critical limitation affecting CI development. The spectral-ripple discrimination test offers a time-efficient, nonlinguistic measure that may be useful for predicting performance of CI users on speech perception (Henry and Turner, 2003; Won et al., 2007) and for comparing CI sound encoding strategies (Berenstein et al., 2008; Drennan et al., 2010). Performance in the task is correlated with vowel and consonant recognition by CI users in quiet (Henry and Turner, 2003; Henry et al., 2005), speech perception in noise (Won et al., 2007), and music perception (Won et al., 2010). These results have been interpreted as indicating the usefulness of spectral-ripple discrimination thresholds as an approximate metric of the spectral resolution of CI users, much as the ripple phase-inversion technique has been used to characterize the frequency resolving power of listeners with normal hearing (Supin et al., 1994, 1997, 1999).

However, there is controversy about the use of the spectral-ripple discrimination test and the interpretation of spectral-ripple discrimination thresholds when the listeners are CI users (Goupell et al., 2008; Azadpour and McKay, 2012). A summary of frequently raised concerns about the spectral-ripple discrimination test was provided by Azadpour and McKay (2012), who argued that it is not clear what underlying psychophysical abilities give rise to the correlation between spectral-ripple discrimination and speech understanding of CI users. First, Azadpour and McKay identified simple cues that they believe could be used by a CI user, such as overall loudness cues, spectral edge cues, or shifts in the spectral center of gravity; they designated these putative cues as “contaminating factors” in the spectral-ripple discrimination test. Goupell et al. (2008) suggested that changes in the intensity of a single channel might be the cue used by CI users in the spectral-ripple discrimination test. A second line of criticism concerns the method of stimulus presentation, in particular, the use of an acoustic stimulus. The spectral-ripple discrimination test is, by design, a fairly brief, “one-point” measure in which only the ripple density parameter is varied and a clinical CI speech processor is used. This criticism illustrates how the purposes of the spectral-ripple discrimination test differ from those of direct-stimulation testing paradigms, which are typically time intensive but allow the experimenter to specify all parameters of the electrical stimulus directly. Third, there is no general agreement about whether there is value in testing the spectral resolution of CI users. For example, there are questions about whether CI users are sensitive to spectral profiles (e.g., Goupell et al., 2008) and about whether spectral cues other than global spectral changes contribute to the speech understanding of CI users (Azadpour and McKay, 2012).
Given the wide range of practical applications of the spectral-ripple discrimination test, it is crucial to address concerns about its usefulness for assessing the spectral resolution of CI users. This article presents the results of experiments that were conducted to investigate the relationship, if any, between extensive, multi-point measures of channel interactions and one-point spectral-ripple discrimination thresholds.

“Spectral ripple” refers to modulation of the amplitude spectrum of a stimulus. In the spectral-ripple discrimination test, the listener's sensitivity to inversions of ripple phase (exchanging the positions of spectral peaks and troughs) is measured at various ripple densities, expressed in ripples/octave. Higher thresholds (more ripples/octave) indicate better performance. Tests of discrimination or detection of spectrally modulated noise were first used to test listeners with normal hearing (Summers and Leek, 1994; Supin et al., 1994, 1999; Macpherson and Middlebrooks, 2003) and were adapted for tests in CI users (Henry and Turner, 2003; Henry et al., 2005; Litvak et al., 2007; Won et al., 2007; Saoji et al., 2009). Possible factors that could influence spectral-ripple discrimination performance in CI listeners include the number of electrodes available to the subjects, amount of intracochlear current spread, integrity or health of the auditory nerve, or sound processing strategies. Previous studies have shown that spectral-ripple discrimination improved as the number of electrodes increased (Henry and Turner, 2003), suggesting that spectral-ripple discrimination ability benefits from having multi-channel information. In addition, Won et al. (2011b) found that spectral-ripple discrimination ability increased with increasing electrode separation; this suggests that performance in the test depends on the extent of overlap in the excitation patterns of the stimulated electrodes.
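The phase-inversion manipulation can be made concrete with a short sketch. The code below computes the spectral envelope (in dB) of a sinusoidal ripple on a log-frequency axis together with its phase-inverted counterpart. The 30-dB peak-to-trough depth follows the acoustic test described later in this article; the 100–5000 Hz band, the function name `ripple_envelope_db`, and expressing the envelope in dB re: the mean level are assumptions for illustration, not the stimulus specification of the cited studies.

```python
import numpy as np

def ripple_envelope_db(freqs_hz, ripples_per_octave, phase_rad=0.0,
                       peak_to_trough_db=30.0, f_low_hz=100.0):
    """Spectral envelope in dB (re: the mean level) of a rippled spectrum.

    The ripple is sinusoidal on a log2-frequency axis, so there are
    `ripples_per_octave` peaks per doubling of frequency. The band edge
    f_low_hz is an assumed value, not one taken from the study.
    """
    octaves = np.log2(np.asarray(freqs_hz, dtype=float) / f_low_hz)
    half_depth_db = peak_to_trough_db / 2.0
    return half_depth_db * np.sin(
        2.0 * np.pi * ripples_per_octave * octaves + phase_rad)

# Hypothetical analysis band; log spacing gives uniform steps in octaves.
freqs = np.geomspace(100.0, 5000.0, 512)
standard = ripple_envelope_db(freqs, ripples_per_octave=2.0)
# Phase inversion (adding pi) exchanges the positions of peaks and troughs.
inverted = ripple_envelope_db(freqs, ripples_per_octave=2.0, phase_rad=np.pi)
```

Imposing such an envelope on a broadband carrier would yield a rippled-noise token; that synthesis step is omitted here.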

Despite evidence from several studies suggesting a useful role in predicting speech outcomes for tests of spectral-ripple discrimination (e.g., Henry and Turner, 2003; Henry et al., 2005; Won et al., 2007) and spectral modulation detection (e.g., Litvak et al., 2007; Saoji et al., 2009), critics question the validity of measuring psychophysical ability with a test in which the ultimate parameters of the electrical stimulus are determined by the CI user's speech processing program. Azadpour and McKay (2012) suggested that the results reported in the literature arose due to the influence of “contaminating” factors, but the evidence for this claim is far from conclusive. In fact, sensitivity to some of the potential cues described by Azadpour and McKay (2012), such as spectral shifts and spectral edges, does require some degree of spectral resolution. Moreover, multiple lines of evidence suggest that these and other cues labeled as “contaminating” by Azadpour and McKay might not contribute significantly to performance on the task (Anderson et al., 2011; Won et al., 2011b). Finally, the potential presence of such cues in the acoustic stimulus would have little relevance for its practical application if there were compelling evidence that the test does provide a useful metric of the overall spectral resolution of CI users.

A far broader issue is the conclusion of Azadpour and McKay (2012) that spectral resolution might have very limited relevance to speech understanding in CI users. This has profound implications for speech encoding by CIs and for a large body of research concerning interactions between CI channels (e.g., Nelson et al., 1995; Chatterjee and Shannon, 1998; Throckmorton and Collins, 1999). This claim also has important consequences for the present experiment; namely, it raises the question of whether there is any value in assessing the spectral resolution of CI users. Azadpour and McKay support this claim with the finding that spatial resolution about electrode number 14 in eight users of the Nucleus Freedom™ implant was not correlated with speech scores. However, their result may simply indicate that it is difficult to predict speech outcomes from spatial resolution about any one electrode. The latter interpretation is consistent with the observation that the weight given to any one frequency band for speech recognition is highly variable in CI users (Mehr et al., 2001). Previous studies have found a relationship between speech understanding and a multi-electrode average of place-pitch sensitivity (Donaldson and Nelson, 2000) or electrode discrimination (Henry et al., 2000). As regards interactions involving a particular electrode, Stickney et al. (2006) calculated channel interactions due to a single perturbation electrode in the middle of the electrode array; they did not find significant correlations with speech recognition for continuous interleaved sampling (CIS), variations of which dominate the CI field today. Henry et al. (2000) did report significant correlations within narrow frequency bands, but they used a band-specific measure of information transmitted, not raw speech scores.
In summary, the observation of Azadpour and McKay that raw speech scores could not be predicted from spatial resolution about a single electrode appears to be consistent with several previously published studies. In particular, their result can be explained without the need to exclude a role for spectral resolution in the speech understanding of CI users. Thus experiments to examine the relationship, if any, between spectral-ripple discrimination thresholds and the spectral resolution of CI users could have important implications for predicting speech outcomes in CI users.

There is evidence that performance on the spectral-ripple discrimination test might also not be predictable from spectral resolution at any one location along the electrode array. Anderson et al. (2011) found no significant correlation between the width of a single spatial tuning curve and broadband spectral-ripple discrimination thresholds. However, tuning curve bandwidths were correlated with spectral-ripple discrimination thresholds in an octave-wide band in the same frequency region. Thus the data of Anderson et al. suggest that multi-point measures of spectral resolution across the whole electrode array may be required to adequately characterize the relationship of broadband spectral-ripple discrimination thresholds with other measures of frequency selectivity.

One obvious limitation on the spectral resolution of CI users is the number of available channels. Spectral resolution is further limited when the channels are not independent. In multi-electrode CIs, there are psychophysically and physiologically measurable effects on sensitivity to a single-electrode stimulus when a second electrode on the same cochlear array is also stimulated. These “channel interactions” can result from overlapping excitation of peripheral auditory nerve fibers or from more central factors. Peripheral channel interactions would be expected to affect a CI user's ability to analyze and integrate information from multiple channels. For example, patients whose peripheral channel interactions are high receive inputs to the auditory nerve with a high degree of spectral smearing; such patients would be expected to have poorer spectral resolution.

The primary aim of this study was to evaluate the relationship of channel interactions and spectral-ripple discrimination across a range of CI users that includes both low- and high-performing listeners. It was hypothesized that higher channel interactions in CI users are associated with poorer spectral-ripple discrimination and vice versa. This hypothesis was tested by comparing spectral-ripple discrimination thresholds, measured with acoustically presented stimuli, with psychophysical measures of channel interactions obtained under the controlled conditions afforded by direct stimulation with a research processor. Channel interactions were measured at dozens of electrode pairs spanning the entire electrode array and at several different electrode separations. Measured interactions were reported in the form of a normalized metric, the interaction index, as described in Sec. 2. Results supported the hypothesis with a significant negative correlation of spectral-ripple discrimination performance with mean interaction indices at multiple electrode separations.

CHANNEL INTERACTIONS MEASURED BY DIRECT STIMULATION

Methods

General approach

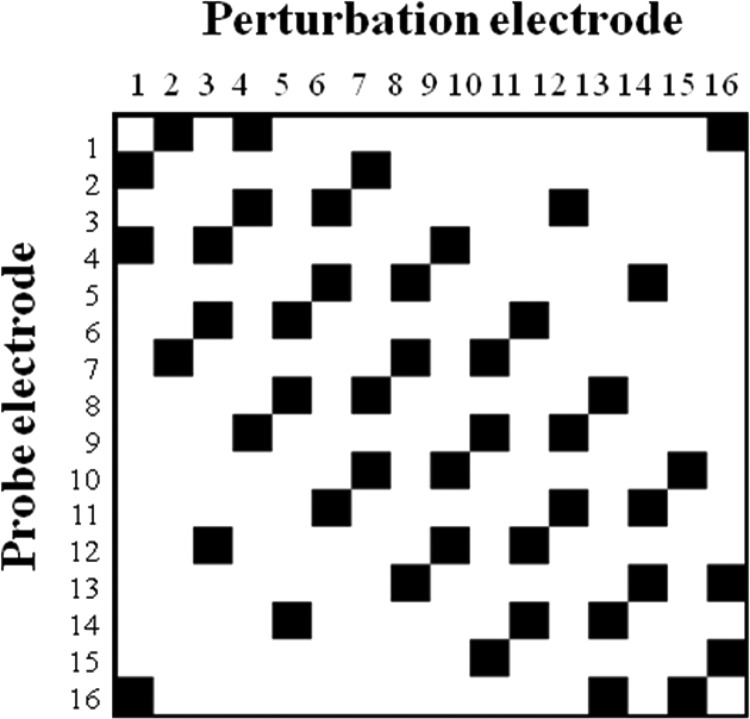

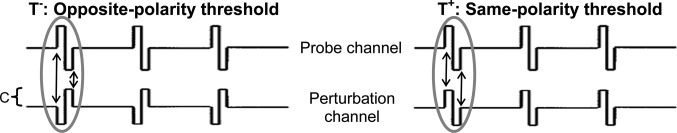

The interaction index (Eddington and Whearty, 2001; Boëx et al., 2003; Stickney et al., 2006) offers a normalized measure of how sensitivity to a probe electrode is affected by polarity inversions of simultaneous pulses on another electrode, as shown in Fig. 1. The interaction index is calculated from detection thresholds for a probe pulse train in the presence of a subthreshold “perturbation” pulse train on another electrode with either the opposite polarity of the probe (the “T−” threshold) or the same polarity as the probe (“T+”) (Boëx et al., 2003; Stickney et al., 2006). If there is zero overlap between the channels, then the effect of the pulse train in the perturbation channel on probe detection is the same for both polarities, i.e., T− − T+ = 0. If there is 100% overlap between the probe and perturbation channels, then for a perturbation current of C (see Fig. 1), C additional units of current are needed to reach the opposite-polarity threshold relative to the probe-alone threshold (T− = T_alone + C), and C fewer units of current are needed to reach the same-polarity threshold (T+ = T_alone − C). Subtracting these two expressions yields T− − T+ = 2C in the case of 100% overlap. To summarize, the phase-inversion technique maps a 0% interaction to a difference of 0 and a 100% interaction to a difference of 2C. Thus the formula in Eq. 1 expresses channel interactions on a normalized scale from 0 to 1:

$$\text{Interaction index} = \frac{T_{-} - T_{+}}{2C} \qquad (1)$$
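Eq. 1 is simple enough to state as code. The following is a minimal sketch, assuming that T−, T+, and C are expressed in the same linear current units (the derivation above counts "units of current", so thresholds recorded in dB would first need converting); the function name and the optional clipping flag are illustrative, with the clipping mirroring how out-of-range values are reported in the Results.

```python
def interaction_index(t_minus, t_plus, perturbation_current, clip=True):
    """Interaction index (T- - T+) / (2C).

    t_minus: probe threshold with an opposite-polarity perturbation (T-)
    t_plus:  probe threshold with a same-polarity perturbation (T+)
    perturbation_current: subthreshold perturbation amplitude C
    All three are assumed to be in the same linear current units
    (e.g., microamperes), not in dB.
    """
    if perturbation_current <= 0:
        raise ValueError("perturbation current C must be positive")
    index = (t_minus - t_plus) / (2.0 * perturbation_current)
    if clip:
        # Values below 0 or above 1 are reported as 0 or 1, respectively.
        index = min(max(index, 0.0), 1.0)
    return index
```

For example, with T_alone = 100 and C = 50 (arbitrary units), complete overlap gives T− = 150 and T+ = 50, and `interaction_index(150, 50, 50)` returns 1.0; equal thresholds give 0.0.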

Figure 1.

Pulse train stimuli used for measuring the interaction index are illustrated above. The testing paradigm measures how detection of a pulse train on a probe electrode is affected by polarity inversions of simultaneous “perturbation” pulses on another electrode.

Subjects

Six users of Advanced Bionics HiRes90K implants participated. These implants were chosen because they have independent current sources for each electrode that allow simultaneous pulse presentation on different electrodes via a BEDCS™ research interface. One bilaterally implanted patient was tested with each CI; thus data are reported for seven ears. Table I shows basic information about the CI users who participated in this experiment. All experimental procedures followed the regulations set by the National Institutes of Health and were approved by the University of Washington's Human Subject Institutional Review Board. All subjects had at least 6 months of experience with their cochlear implant.

TABLE I.

Subject characteristics. Duration of severe to profound hearing loss is based on patients' self-report of the number of years they were unable to understand people on the telephone prior to implantation.

| Subject | Age (yr) | Duration of hearing loss (yr) | Duration of implant use (yr) | Implant device | Sound processor strategy |
|---|---|---|---|---|---|
| S48 | 70 | 10 | 3 | HiRes90K | HiResolution |
| S52 | 79 | 0 | 3 | HiRes90K | Fidelity 120 |
| S71 | 71 | 15 | 1.5 | HiRes90K | Fidelity 120 |
| S80 | 61 | 2 | 1.5 | HiRes90K | HiResolution |
| S84 | 46 | 26 | 0.5 | HiRes90K | HiResolution |
| S110 (L) | 47 | 17 | 8 | HiRes90K | Fidelity 120 |
| S110 (R) | 47 | 7 | 17 | HiRes90K | Fidelity 120 |

Stimuli

Pairs of 813-Hz biphasic pulse trains, a cathodic-first “probe” pulse train presented amid a subthreshold cathodic- or anodic-first perturbation pulse train with a temporal fringe, were used as described by Boëx et al. (2003). Each pulse of the brief (30-ms) probe train was simultaneous with a pulse of the longer (300-ms) perturbation train that began 135 ms before the probe and ended 135 ms after the probe. The simultaneity of probe and perturbation pulses was verified visually on an oscilloscope and was also confirmed indirectly by measuring interaction indices as large as 1, which are not observed with nonsimultaneous pulses (de Balthasar et al., 2003). Pulse width was 21.8 μs, which was found in initial testing to be the smallest pulse width at which T− and T+ thresholds could always be collected for all electrode pairings. Patients had pulse widths of 10.9 μs in their standard maps, but for adjacent electrode pairs at a 10.9-μs pulse width, the probe was often inaudible up to the highest available stimulation levels when the perturbation signal was polarity-inverted (the T− condition).
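The timing constraint on the two trains can be checked with a short sketch. It computes nominal pulse onset times for the 300-ms perturbation train and the 30-ms probe at 813 pulses/s and tests that each probe pulse coincides with a perturbation pulse. Because 135 ms is not a whole number of 1/813-s periods, the sketch assumes the probe onset is snapped to the nearest perturbation pulse; that choice, and the helper name `pulse_onsets`, are illustrative assumptions, since the text states only that the pulses were simultaneous.

```python
import numpy as np

RATE_PPS = 813.0        # pulse rate (pulses/s), from the Stimuli description
PERTURB_DUR_S = 0.300   # perturbation train duration
PROBE_DUR_S = 0.030     # probe train duration
PROBE_DELAY_S = 0.135   # nominal probe onset re: perturbation onset

def pulse_onsets(duration_s, rate_pps, start_s=0.0):
    """Onsets of every pulse starting within [start_s, start_s + duration_s)."""
    n_pulses = int(np.ceil(duration_s * rate_pps - 1e-9))
    return start_s + np.arange(n_pulses) / rate_pps

period_s = 1.0 / RATE_PPS
perturbation = pulse_onsets(PERTURB_DUR_S, RATE_PPS)
# Assumption: snap the probe onset to the perturbation pulse grid, since
# 135 ms is not a whole number of 1/813-s periods.
probe_start_s = np.round(PROBE_DELAY_S / period_s) * period_s
probe = pulse_onsets(PROBE_DUR_S, RATE_PPS, start_s=probe_start_s)

# Each probe pulse should coincide (within 1 ns) with a perturbation pulse.
all_simultaneous = all(np.min(np.abs(perturbation - t)) < 1e-9 for t in probe)
```

With this snapping, the probe starts about 0.3 ms later than the nominal 135 ms, and the 244-pulse perturbation train fully brackets the 25-pulse probe.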

Procedures

These experiments used a PC with a sound card, SoundWave™ software for CI patient maps, a PSP™ research processor, and the BEDCS™ programming interface for direct stimulation of CII and HiRes90K implants from Advanced Bionics Corporation, all controlled through custom-written MATLAB- and Python-based programs. First, thresholds for 300-ms pulse trains were collected at each active electrode (N ≤ 16). Second, at each probe/perturbation electrode pair, maximum comfortable levels were determined for a 30-ms probe pulse train at each polarity of an inaudible (2 dB below threshold) 300-ms perturbation train on the perturbation electrode. Subsequently, detection thresholds for the 30-ms probe pulse train at each polarity of the inaudible perturbation train were measured adaptively in a two-down/one-up paradigm with at least six reversals per track, using an approach similar to that of Boëx et al. (2003). Each pair of opposite-polarity (T−) and same-polarity (T+) thresholds was collected in a single run using two randomly interleaved adaptive tracks. On each trial there were three stimulus intervals, only one of which contained the probe. The timing of the three intervals was indicated by lights displayed on a computer screen. The initial amplitude of the probe pulses in each track was set to a level that had been determined in initial testing to be below the maximum comfortable level but easily audible. The computer program that controlled the experiment prevented the probe level in each adaptive track from exceeding the previously determined maximum comfortable level for that specific combination of perturbation electrode, probe electrode, and pulse polarity. The step size (in dB of current) was 1.4 dB until the first reversal, then 0.7 dB until the second reversal, and 0.35 dB thereafter. The run was stopped as soon as both adaptive tracks had completed at least six reversals.
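The T−/T+ procedure can be summarized as two randomly interleaved two-down/one-up tracks. The sketch below is a simplified illustration, not the custom software used in the study: levels are in dB re: an arbitrary reference, the `respond` callback is a hypothetical stand-in for the listener's three-interval judgment, and the class and function names are likewise hypothetical. It implements the step-size schedule (1.4, 0.7, then 0.35 dB), the per-track ceiling at the maximum comfortable level, random interleaving, and the stopping rule of at least six reversals per track.

```python
import random

def step_size_db(n_reversals):
    """1.4 dB until the first reversal, 0.7 dB until the second, then 0.35 dB."""
    if n_reversals < 1:
        return 1.4
    if n_reversals < 2:
        return 0.7
    return 0.35

class Track:
    """One two-down/one-up adaptive track on probe level (dB)."""

    def __init__(self, start_db, max_comfortable_db):
        self.level_db = start_db
        self.max_db = max_comfortable_db  # ceiling: never exceed this level
        self.n_correct_in_row = 0
        self.last_direction = 0           # +1 up, -1 down, 0 no move yet
        self.reversal_levels = []
        self.history = []                 # (level, correct) for every trial

    def update(self, correct):
        self.history.append((self.level_db, correct))
        direction = 0
        if correct:
            self.n_correct_in_row += 1
            if self.n_correct_in_row == 2:  # two correct in a row -> lower level
                direction = -1
                self.n_correct_in_row = 0
        else:                               # one incorrect -> raise level
            direction = +1
            self.n_correct_in_row = 0
        if direction == 0:
            return
        if self.last_direction and direction != self.last_direction:
            self.reversal_levels.append(self.level_db)
        self.last_direction = direction
        step = step_size_db(len(self.reversal_levels))
        self.level_db = min(self.level_db + direction * step, self.max_db)

def run_interleaved(tracks, respond, min_reversals=6, rng=random):
    """Pick a track at random on each trial until every track has
    at least min_reversals reversals; respond(i, level) -> bool."""
    while any(len(t.reversal_levels) < min_reversals for t in tracks):
        i = rng.randrange(len(tracks))
        tracks[i].update(respond(i, tracks[i].level_db))
    return tracks
```

In practice, each `respond` call would correspond to one three-interval trial, and each track's trial history would then feed a threshold estimate.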
For each adaptive track, the threshold and standard error were calculated using the Spearman–Karber method (Miller and Ulrich, 2001, 2004). This method estimates the mid-point between chance performance (33.3% for the task in these experiments) and the maximum performance of 100% correct; i.e., thresholds correspond to 66.7% correct performance. The interaction index was calculated using Eq. 1.
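The following sketch shows one common form of the Spearman–Karber estimate applied to the trial-by-trial data from a single track. It is a simplified illustration that assumes (i) proportions correct are corrected for the 1/3 guessing rate, (ii) non-monotonic proportions are smoothed by pooling adjacent violators, and (iii) the corrected function is padded with 0 one step below and 1 one step above the tested levels. It is not the Miller and Ulrich (2001, 2004) implementation and omits their standard-error calculation; the function names are hypothetical.

```python
import numpy as np

def pool_adjacent_violators(values, weights):
    """Weighted monotone (non-decreasing) regression."""
    blocks = []  # each block: [mean, total weight, number of levels]
    for v, w in zip(values, weights):
        blocks.append([float(v), float(w), 1])
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            m2, w2, c2 = blocks.pop()
            m1, w1, c1 = blocks.pop()
            blocks.append([(m1 * w1 + m2 * w2) / (w1 + w2), w1 + w2, c1 + c2])
    return np.concatenate([[m] * c for m, _, c in blocks])

def spearman_karber_threshold(levels_db, correct, chance=1.0 / 3.0, decimals=3):
    """Nonparametric threshold from trial-by-trial (level, correct) data.

    Returns the level at which the guessing-corrected psychometric function
    reaches 0.5, i.e., 66.7% correct when chance is 1/3.
    """
    x_all = np.round(np.asarray(levels_db, dtype=float), decimals)
    x, idx = np.unique(x_all, return_inverse=True)   # sorted unique levels
    n = np.bincount(idx).astype(float)               # trials per level
    k = np.bincount(idx, weights=np.asarray(correct, dtype=float))
    p = (k / n - chance) / (1.0 - chance)            # correct for guessing
    p = pool_adjacent_violators(np.clip(p, 0.0, 1.0), n)
    # Simplification: pad one step below (p = 0) and above (p = 1).
    step = np.min(np.diff(x)) if x.size > 1 else 1.0
    x = np.concatenate(([x[0] - step], x, [x[-1] + step]))
    p = np.concatenate(([0.0], p, [1.0]))
    midpoints = 0.5 * (x[:-1] + x[1:])
    return float(np.sum(np.diff(p) * midpoints))
```

Because the guessing-corrected function is centered at 0.5, the returned level corresponds to 1/3 + (2/3)(0.5) = 66.7% correct, matching the definition in the text.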

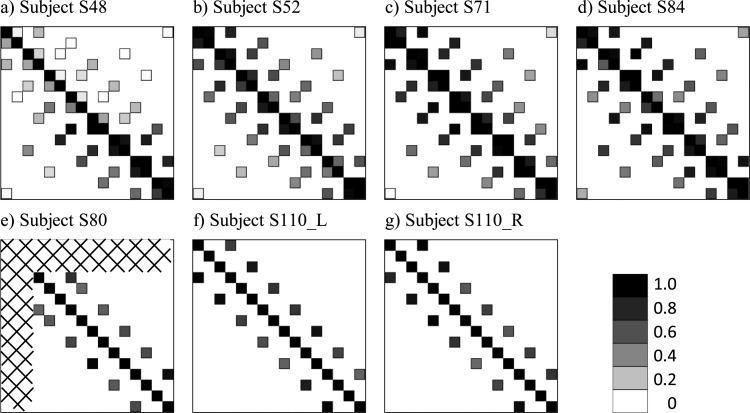

Channel interactions were quantified using the interaction index, which was calculated according to Eq. 1 from measurements at up to 46 electrode pairings per ear. Four listeners completed the full testing protocol at all 46 electrode pairings. Two listeners (three ears) were evaluated with a reduced protocol in which only electrode pairings with an electrode separation of three electrodes were tested. The 46 probe-perturbation electrode pairs included in the full protocol are illustrated in Fig. 2. They consisted of 40 electrode pairs distributed approximately uniformly across the electrode array at electrode separations of one, three, and five electrodes; four electrode pairs at an electrode separation of nine electrodes; and two electrode pairs consisting of opposite ends of the array. In addition, the tested electrode pairings were slightly modified for one listener, who had three disabled electrodes [see also Fig. 4e]. Total testing time for collecting the 46 interaction indices was about 20 h per subject.
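To put the 46 tested pairings in context, the sketch below counts the probe-perturbation pairs that exist at each tested separation. It assumes, as a reading of Fig. 2 rather than a statement in the text, that pairings are ordered (probe, perturbation) pairs on a 16-electrode array numbered 1 to 16; `ordered_pairs` is a hypothetical helper.

```python
N_ELECTRODES = 16  # assumed numbering 1..16

def ordered_pairs(separation, n=N_ELECTRODES):
    """All (probe, perturbation) pairs whose electrode numbers differ by `separation`."""
    return [(a, b) for a in range(1, n + 1) for b in range(1, n + 1)
            if abs(a - b) == separation]

available = {d: len(ordered_pairs(d)) for d in (1, 3, 5, 9, 15)}
# -> {1: 30, 3: 26, 5: 22, 9: 14, 15: 2}
```

Under that assumption, 40 of the 78 pairs available at separations of one, three, and five electrodes were tested, and the two pairs at a separation of 15 electrodes are the end-to-end pairing with the probe and perturbation roles swapped.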

Figure 2.

Electrode pairings for which interaction indices were measured in these experiments are shown by the dark squares. Interaction indices were measured for electrode separations ranging from one electrode (nearest-neighbor pairs) to 15 electrodes (opposite ends of the electrode array).

Figure 4.

The greyscale matrices show interaction indices measured at electrode pairs across the electrode array at various electrode separations in six CI users (seven ears). The scale ranges from white at a 0% interaction to black at a 100% interaction. The measured data points are shown in black outline to distinguish them from the white background. Data for subject S80 and for two CIs in subject S110 (lower row) were collected for electrode separations of three electrodes only. The hatched pattern in (e) indicates that subject S80 had three disabled electrodes at the apex of the electrode array.

Results and discussion

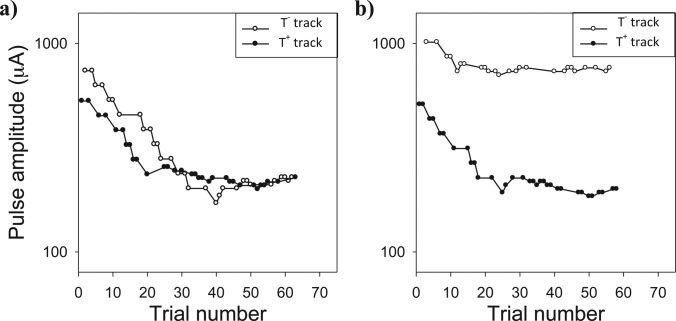

Interaction indices in these experiments spanned the full range from 0 (no interaction) to 1 (100% interaction). In some cases, the calculated interaction index was slightly negative (2% of adaptive tracks) or slightly greater than 1 (8% of adaptive tracks); calculated indices less than 0 or greater than 1 are reported as 0 or 1, respectively. The standard errors of the individual interaction indices were calculated using the Spearman–Karber method (see Sec. 2A4) and were fairly small: 95% of the standard errors were between 0.01 and 0.09. Two examples of pairs of interleaved adaptive tracks are shown for one subject in Fig. 3. The left panel of this figure shows the interleaved T− and T+ tracks at one perturbation-probe electrode pair with a separation of nine electrodes. For this large electrode separation, the T− and T+ adaptive tracks converged to similar levels; thus the interaction index was near 0, as one would expect when the numerator of Eq. 1 is small. The right panel shows the adaptive tracks for an electrode pair separated by one electrode. For this pair of adjacent electrodes, the T− and T+ tracks had very different points of convergence, and the calculated interaction index was near 1. The adaptive tracks in these plots exhibit a pattern that was typical of the data in these experiments, in which the adaptive tracking procedure converged toward threshold rather quickly.

Figure 3.

Examples of adaptive tracks used to quantify channel interaction index at two electrode pairs. In the example in the left panel, the difference between the thresholds of the two adaptive tracks is very low, yielding a low interaction index. In the right panel, the two adaptive tracks have very different points of convergence, and the interaction index is higher.

The interaction indices measured across the electrode array for all seven tested CIs are shown in Fig. 4. For subjects in the upper row, the 46 measured interaction indices are plotted on a scale in which white indicates a 0% interaction and black indicates a 100% interaction. For subjects in the lower row, interaction indices for electrode pairs with a separation of three electrodes are plotted. Note that in both the upper and lower rows, the values for the interaction of each electrode with itself, by definition a 100% interaction, are also plotted; these values appear on the main diagonal, which extends from the upper left corner to the lower right corner of each matrix. The measured data points are shown in black outline to distinguish them from the white background. Visual inspection of the interaction matrices reveals several noteworthy patterns in the data. First, in each matrix, interaction indices generally decrease with increasing distance from the main diagonal. In other words, channel interaction decreased with increasing electrode separation. Second, there is considerable variability in channel interactions in different regions of the electrode array, as can be seen by scanning parallel to the main diagonal of each matrix. Moreover, channel interactions varied across subjects: when comparing results of subjects whose data are shown in the upper row (i.e., subjects who completed the full testing protocol), interactions were generally lowest in the subject at the left and highest in the two subjects at the right.

PSYCHOPHYSICAL PERFORMANCE WITH ACOUSTICALLY PRESENTED STIMULI

Methods

Subjects

Participants were the same as in the experiment described in Sec. 2. Subjects who did not typically use a HiResolution (HiRes) processing strategy were mapped for a HiRes strategy on a PSP™ research processor, which they used during testing. The bilateral CI listener was tested in each ear separately.

Procedures

Spectral-ripple discrimination thresholds were collected previously in these subjects using established techniques (Won et al., 2007). Briefly, three rippled noise tokens with a 30-dB peak-to-trough ratio, two with standard ripple phase and one with inverted ripple phase, were selected for each trial. The order of presentation of the three tokens was randomized, and the task was to select the “oddball.” Stimuli were controlled by a desktop computer (Apple PowerMac G5, analog sound I/O) and presented in sound field in a double-walled sound-treated IAC booth at 65 dBA from a loudspeaker located directly in front of the listener at a distance of 1 m. A level attenuation of 1–8 dB (in 1-dB increments) was randomly selected for each interval in the three-interval task. Ripple density was varied within the range 0.125–11.314 ripples per octave in equal-ratio steps of 1.414 in an adaptive two-up/one-down procedure with 13 reversals that converges to the 70.7% correct point (Levitt, 1971).¹ Thus the number of ripples per octave was increased by a factor of 1.414 after two consecutive correct responses and decreased by a factor of 1.414 after a single incorrect response. The threshold for each adaptive run was calculated as the mean of the last eight reversals. The spectral-ripple discrimination threshold for each subject is the mean of six adaptive runs. Total testing time to complete six runs was about 30 min.
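The ripple-density track can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the software of Won et al. (2007): densities are the 14 equal-ratio values from 0.125 to about 11.314 ripples/octave (the ratio 1.414 is taken as √2), the track starts at the lowest density, `respond` is a hypothetical stand-in for the listener's three-interval oddball judgment, and the run threshold is the arithmetic mean of the densities at the last eight of 13 reversals.

```python
import numpy as np

# Equal-ratio ripple densities: 0.125 * sqrt(2)**k, k = 0..13 (0.125 to ~11.314).
DENSITIES = 0.125 * np.sqrt(2.0) ** np.arange(14)

def next_state(index, streak, correct):
    """Two correct in a row -> next denser ripple (harder); one error ->
    next sparser ripple (easier). Returns (new_index, new_streak)."""
    if not correct:
        return max(index - 1, 0), 0
    if streak + 1 == 2:
        return min(index + 1, len(DENSITIES) - 1), 0
    return index, streak + 1

def run_track(respond, start_index=0, n_reversals=13, n_last=8, max_trials=2000):
    """One adaptive run; respond(density) -> True if the oddball was chosen.
    Returns the mean density at the last n_last reversals."""
    index, streak, last_dir, reversals = start_index, 0, 0, []
    for _ in range(max_trials):
        if len(reversals) >= n_reversals:
            break
        new_index, streak = next_state(index, streak, respond(DENSITIES[index]))
        direction = int(np.sign(new_index - index))
        if direction != 0:
            if last_dir != 0 and direction != last_dir:
                reversals.append(DENSITIES[index])  # density at the turning point
            last_dir = direction
        index = new_index
    return float(np.mean(reversals[-n_last:]))
```

A subject's threshold would then be the mean of six such runs, e.g., `np.mean([run_track(respond) for _ in range(6)])`.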

Participants were also tested on speech recognition and temporal modulation detection. There is evidence that in addition to significant correlations with spectral-ripple discrimination thresholds (Henry and Turner, 2003; Won et al., 2007), speech results are also correlated with modulation detection thresholds (MDTs), and the variance in speech recognition explained by the combination of spectral-ripple discrimination thresholds and MDTs is greater than the variance explained by either threshold alone (Won et al., 2011a). Consonant–Nucleus–Consonant (CNC) word scores had previously been collected in these listeners with a HiRes strategy. Two sets of 50 CNC monosyllabic words (Peterson and Lehiste, 1962) were presented in a quiet background at 62 dBA from a single loudspeaker positioned 1 m in front of the subject. Two CNC word lists were randomly chosen out of 10 lists for each subject. The subjects were instructed to repeat the word that they heard. A total percent correct score was calculated after 100 presentations as the percent of words correctly repeated. Temporal MDTs had been collected previously in these listeners with a HiRes strategy. MDTs in dB relative to 100% modulation [20log10(mi)] were obtained. The basic approach follows that of Bacon and Viemeister (1985), and the details of the method are the same as Won et al. (2011a) except that only a single modulation frequency of 50 Hz was tested. A two-interval, two-alternative forced-choice (AFC) procedure was used to measure MDTs. Stimuli were presented at 65 dBA. During one of the two 1-s observation intervals, the carrier was sinusoidally amplitude modulated. The subjects were instructed to choose the interval that contained the modulated noise. Visual feedback of the correct answer was given after each presentation. 
A two-down, one-up adaptive procedure was used to measure the modulation depth (mi) threshold, converging on 70.7% correct performance (Levitt, 1971), starting with a modulation depth of 100% and changing in steps of 4 dB from the first to the fourth reversal, and 2 dB for the next 10 reversals. For each tracking history, the final 10 reversals were averaged to obtain the MDT for that tracking history. The threshold for each subject was calculated as the mean of three tracking histories.
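The modulation-depth conventions used for the MDTs can be summarized in a few helper functions. This is a minimal sketch, assuming a Gaussian-noise carrier, a 44.1-kHz sampling rate, and no level calibration (the 65-dBA presentation level is not modeled); the function names are hypothetical. It shows the conversion between modulation depth m_i and 20 log10(m_i), the 4-dB/2-dB step schedule, a 50-Hz sinusoidally amplitude-modulated (SAM) noise, and the average over the final 10 reversals.

```python
import numpy as np

def depth_to_db(m):
    """Modulation depth m (0 < m <= 1) as 20*log10(m); 100% modulation -> 0 dB."""
    return 20.0 * np.log10(m)

def db_to_depth(db):
    """Inverse of depth_to_db."""
    return 10.0 ** (db / 20.0)

def mdt_step_db(n_reversals):
    """4-dB steps until the fourth reversal, then 2-dB steps."""
    return 4.0 if n_reversals < 4 else 2.0

def sam_noise(m, fm_hz=50.0, dur_s=1.0, fs_hz=44100, rng=None):
    """SAM noise [1 + m*sin(2*pi*fm*t)] * n(t) with a Gaussian carrier n(t).
    Carrier type and sampling rate are assumptions."""
    rng = np.random.default_rng() if rng is None else rng
    t = np.arange(int(round(dur_s * fs_hz))) / fs_hz
    carrier = rng.standard_normal(t.size)
    return (1.0 + m * np.sin(2.0 * np.pi * fm_hz * t)) * carrier

def mdt_from_reversals(reversals_db, n_last=10):
    """MDT for one tracking history: mean of the final 10 reversal levels (dB)."""
    return float(np.mean(reversals_db[-n_last:]))
```

Because depths are tracked in dB, a track starting at 100% modulation (0 dB) moves to −4 dB (m ≈ 0.63) after its first downward step, and a threshold of −20 dB corresponds to m = 0.1.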

Results and discussion

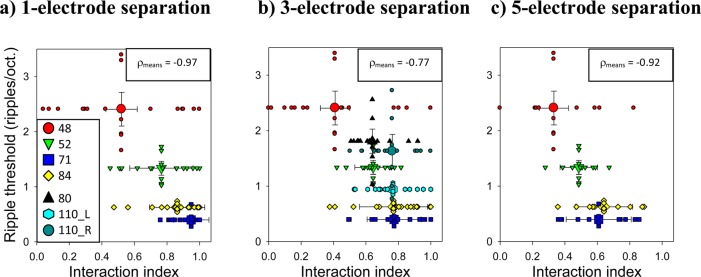

A comparison of mean interaction indices for the tested CI users with their spectral-ripple discrimination thresholds is shown at electrode separations of one, three, and five electrodes in Figs. 5a through 5c. Four CI users were tested at electrode separations of one and five electrodes [Figs. 5a and 5c]. Six CI users (seven ears) were tested at an electrode separation of three electrodes [Fig. 5b]. The individual measurements of spectral-ripple discrimination thresholds and interaction indices at each electrode separation are shown by small symbols, and the means for each subject are shown by large symbols. Spectral-ripple discrimination thresholds of CI users decrease with increasing mean interaction index.

Figure 5.

(Color online) Comparison of spectral-ripple discrimination thresholds with channel interactions at three different electrode separations: (a) one electrode, (b) three electrodes, and (c) five electrodes. A different symbol shape and fill is used for each listener (see legend). Small symbols show individual measurements and large symbols show the means for each listener.

There is support in the literature for the view that performance in the spectral-ripple discrimination task can be predicted from an average across the electrode array rather than from any one location along the electrode array (see Sec. 1). Nonetheless, the variability across the electrode array within individual subjects could still play a role in performance. To allow for this possibility, the tests for a relationship between spectral-ripple discrimination and channel interactions were performed using a bootstrapping approach, which resamples the interaction indices within each subject. Specifically, interaction indices for each listener were randomly selected with replacement, in a bootstrapping procedure with 10 000 repetitions. In each repetition, the correlation coefficient was calculated between spectral-ripple discrimination scores and the subject means of the randomly selected interaction indices. There was a significant, negative correlation between spectral-ripple discrimination performance and mean interaction index at electrode separations of one electrode (r = −0.97, P < 0.001), three electrodes (r = −0.77, P < 0.001), and five electrodes (r = −0.92, P < 0.001). Note that the number of subjects is higher for tests at an electrode separation of three electrodes than at separations of one and five electrodes. For this correlational analysis at three different electrode separations, the criterion for statistical significance was divided by 3 (i.e., α* = 0.0167) to correct for the number of comparisons. The negative sign of the correlation coefficients indicates that performance on the spectral-ripple discrimination test is impaired with increasing channel interactions; this is consistent with the hypothesis.
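The bootstrap test can be sketched as follows. The resampling step follows the description above (interaction indices resampled with replacement within each listener, 10 000 repetitions, Pearson r computed across listeners). How the reported P values were derived from the bootstrap distribution is not stated, so the one-sided proportion used in `summarize` is an assumption, as are the function names.

```python
import numpy as np

def bootstrap_correlation(indices_by_listener, ripple_thresholds,
                          n_boot=10_000, seed=0):
    """Bootstrap distribution of Pearson r between ripple thresholds and
    per-listener mean interaction indices.

    indices_by_listener: one sequence per listener (or ear) holding that
        listener's interaction indices at a single electrode separation.
    ripple_thresholds: one spectral-ripple threshold per listener.
    """
    rng = np.random.default_rng(seed)
    ripple = np.asarray(ripple_thresholds, dtype=float)
    samples = [np.asarray(x, dtype=float) for x in indices_by_listener]
    r_values = np.empty(n_boot)
    for b in range(n_boot):
        # Resample each listener's indices with replacement, then average.
        means = np.array([rng.choice(x, size=len(x), replace=True).mean()
                          for x in samples])
        r_values[b] = np.corrcoef(ripple, means)[0, 1]
    return r_values

def summarize(r_values):
    """Median r and the fraction of bootstrap r >= 0 (an assumed one-sided
    bootstrap P value for a predicted negative correlation)."""
    return float(np.median(r_values)), float(np.mean(r_values >= 0))

ALPHA_CORRECTED = 0.05 / 3  # criterion divided by 3 comparisons (~0.0167)
```

Applied separately at each electrode separation, the resulting P values would be compared against `ALPHA_CORRECTED`, the corrected criterion given in the text.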

The key finding is that spectral-ripple discrimination thresholds were significantly and strongly correlated with the mean interaction index at multiple electrode separations. It was expected that this correlation might be constrained by a ceiling effect at the smallest electrode separation (one electrode) and by floor effects at moderate to large electrode separations. However, the correlation was significant at these electrode separations as well. There was also some indication of a relationship between the mean interaction index and ripple thresholds at the two largest electrode separations of nine and 15 electrodes (data not shown), but statistical comparisons were not planned at these separations because of the small number of data points and to limit the correction for multiple comparisons. The significant negative correlations observed at electrode separations of one, three, and five electrodes indicate that the spectral-ripple discrimination test does assess the spectral resolution of CI users.

The mean interaction indices at an electrode separation of three electrodes were also compared to CNC word scores and MDTs in the same listeners, using the bootstrapping approach described in the preceding text. Mean interaction indices in seven ears were significantly correlated with CNC word scores (r = 0.43; P < 0.005), and the correlation was of moderate magnitude. This was expected because speech understanding is thought to depend on other cues (e.g., temporal cues) in addition to spectral cues. For the comparison between mean interaction indices and MDTs, the correlation was small (r = 0.14) and not statistically significant. The finding that the mean interaction index across the electrode array was significantly correlated with spectral-ripple discrimination thresholds but not with MDTs is consistent with the view that spectral-ripple discrimination thresholds provide information about the spectral resolution of CI users.

GENERAL DISCUSSION

The spectral-ripple discrimination test has several properties that are desirable for use in the evaluation and development of speech processing algorithms or in the selection of processing strategies for individual patients. These include its nonlinguistic nature, a significant correlation with multiple clinically pertinent outcomes, and reliability of thresholds between initial and subsequent testing (Henry and Turner, 2003; Henry et al., 2005; Won et al., 2007; Berenstein et al., 2008; Drennan et al., 2010; Won et al., 2010). However, other key attributes of this “one-point” test, namely its ease of use and short duration, are connected to concerns that have been raised regarding the interpretation of spectral-ripple discrimination thresholds as a useful metric of spectral resolution. As was discussed in Sec. 1, the spectral resolution of CI users has typically been assessed with time-intensive testing paradigms in which electrical stimuli are specified by the experimenter. In the spectral-ripple discrimination test, by contrast, the experimenter varies a parameter of an acoustic stimulus, while the electrical stimulus depends on the patient's clinical speech processor. The present study addressed these concerns by comparing performance in the spectral-ripple discrimination test to extensive psychophysical measurements of the interaction index under the controlled conditions afforded by direct stimulation with a research processor.

Results of these measurements suggest that spectral-ripple discrimination thresholds depend on spread of current along the cochlea to surviving neural afferents. This relationship was surprisingly robust. Contrary to the authors' expectation that the correlation between the mean interaction index and spectral-ripple discrimination thresholds might be reduced by ceiling effects at the smallest electrode separation and floor effects at moderate to large electrode separations, the mean interaction index was significantly correlated with spectral-ripple discrimination thresholds not only at a separation of three electrodes but also at separations of one and five electrodes. This correspondence with spread of current along the cochlea indicates that spectral-ripple discrimination thresholds do reflect the spectral resolution of CI users. Further support for this interpretation comes from the observation that the mean interaction index was not significantly correlated with temporal modulation detection thresholds (MDTs) at a modulation frequency of 50 Hz. The latter result parallels the finding by Won et al. (2011a) that spectral-ripple discrimination thresholds were not correlated with MDTs, and it is consistent with their observation that a significantly greater share of the variance in the CNC word scores of 24 CI users could be accounted for by a combination of spectral-ripple discrimination thresholds and MDTs than by the (primarily temporal) MDT measure alone. Taken together, these results strongly suggest that the information contributed by spectral-ripple discrimination thresholds is spectral.

This result is consistent with the conclusions of previous studies that found significant correlations of spectral-ripple discrimination thresholds with speech understanding (e.g., Henry and Turner, 2003; Henry et al., 2005; Won et al., 2007). Nonetheless, there is a view among some CI researchers that the prediction of speech outcomes should be based on testing with speech materials (e.g., McKay et al., 2009). However, there would be numerous advantages to identifying a set of psychophysical tests that, collectively, could account for a large share of the variance in speech outcomes. The drawbacks of relying on a speech corpus include the need to develop test materials for tens or even hundreds of languages, the resulting difficulty of standardizing and comparing across language versions of the test, and the long period required from when a CI user is fitted with a new speech processing strategy until the listener's improvement in speech understanding stabilizes. For example, Donaldson and Nelson (2000) found that place-pitch sensitivity was significantly correlated with long-term speech outcomes but not with performance after 1 month's use of a new speech processing strategy. Psychophysical tests, on the other hand, are “portable” across languages, and there is evidence that performance on such tests is relatively stable over time compared with the improvements in speech perception that occur over the same period (Brown et al., 1995; Fu et al., 2002; Drennan et al., 2011). Moreover, psychophysical tests can potentially be tailored so that individual tests target different types of cues. Finally, psychophysical tests could also be useful for predicting outcomes in other areas such as music perception (e.g., Drennan and Rubinstein, 2008; Won et al., 2010).

Azadpour and McKay (2012) concluded that spectral resolution might have very limited relevance to speech understanding in CI users, although it must be emphasized that they compared speech understanding to spatial resolution about a single electrode. Their result contrasts with the current study, in which CNC word scores were significantly correlated with mean interaction indices. The current results are consistent with numerous published studies that have suggested a relationship between speech understanding and spectral resolution (e.g., Dorman et al., 1990; Busby et al., 1993; Nelson et al., 1995; Donaldson and Nelson, 2000; Throckmorton and Collins, 1999; Henry et al., 2000; Litvak et al., 2007). Although there is not complete agreement in the published data (e.g., Zwolan et al., 1997), overall there is considerable support in the literature for the view that spectral resolution is relevant to speech understanding in CI users.

Azadpour and McKay (2012) discussed performance in tests with spectrally modulated noise in terms of potential unknown contributions of contaminating cues. However, the “contaminating” label does not appear to yield meaningful distinctions among tests, and it may overshadow crucial concerns such as time efficiency and potential clinical applicability. In light of the evidence that the spectral-ripple discrimination test provides a useful metric of the overall spectral resolution of CI users, concerns about contaminating cues appear to have little relevance for practical applications of the test.

Given that the time required to measure a large set of interaction indices renders this approach impractical for most uses, the question of whether the channel interactions of individual CI users can be adequately characterized with more time-efficient measures, such as spectral-ripple discrimination thresholds, is of considerable practical importance. Results of the current study suggest that channel interactions can be adequately characterized by measuring the interaction index at just one electrode separation (e.g., at a separation of one, three, or five electrodes), provided that multiple probe and perturbation electrodes along the electrode array are tested. Nonetheless, that would still require many hours of testing. The published literature on the interaction index includes far smaller data sets than the current study (e.g., Boëx et al., 2003; Stickney et al., 2006), and the interpretation of such results, particularly negative results, may not always be clear when the matrix of all possible electrode pairings is sampled sparsely. In other words, quite large data sets might be necessary to adequately characterize channel interactions using the interaction index. Thus, approaches such as the spectral-ripple discrimination test could offer a far more efficient way to evaluate average channel interactions and spectral resolution across the electrode array.
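Computing a mean interaction index at one electrode separation amounts to grouping the measured electrode pairs by separation and averaging across the array. The sketch below assumes, hypothetically, that separation is the absolute difference between probe and perturbation electrode numbers and that results are stored in a dictionary keyed by (probe, perturber); the function name and example values are illustrative only. Returning the number of pairs behind each mean makes sparse sampling of the pairing matrix easy to spot.

```python
from collections import defaultdict


def mean_index_by_separation(indices):
    """Average interaction indices over all measured pairs at each separation.

    indices: dict mapping (probe_electrode, perturber_electrode) -> interaction index
        (hypothetical layout).
    Returns {separation: (mean_index, n_pairs)}, sorted by separation.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for (probe, perturber), value in indices.items():
        sep = abs(probe - perturber)   # assumed definition of electrode separation
        sums[sep] += value
        counts[sep] += 1
    return {sep: (sums[sep] / counts[sep], counts[sep]) for sep in sorted(sums)}


# Hypothetical measurements at three places along the array, separations 1 and 3.
measured = {(4, 5): 0.8, (8, 9): 0.6, (12, 13): 0.7,
            (4, 7): 0.5, (8, 11): 0.3, (12, 15): 0.4}
for sep, (mean, n) in mean_index_by_separation(measured).items():
    print(f"separation {sep}: mean index {mean:.2f} from {n} pairs")
```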

ACKNOWLEDGMENTS

We are grateful for the extraordinarily dedicated efforts of our subjects. This research was supported by NIH-NIDCD Grants F32 DC011431, T32-DC000033, R01 DC007525, P30 DC04661, and L30 DC008490 and an educational fellowship from the Advanced Bionics Corporation.

Portions of this work were presented at the 161st meeting of the Acoustical Society of America, Seattle, WA, May 23–27, 2011.

Footnotes

For most stimulus parameters in psychoacoustics, the task becomes more difficult as the tested parameter (e.g., stimulus level) decreases; in the spectral-ripple discrimination task, by contrast, the task becomes more difficult as the ripple density increases. Thus Won et al. (2007) designated the procedure that converges to 70.7% correct as “two-up/one-down” (ripple density increases after two consecutive correct responses and decreases after one incorrect response), and higher spectral-ripple discrimination thresholds indicate better performance.
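The naming convention in this footnote can be illustrated with a small simulated adaptive track. This is a minimal sketch, not the procedure of Won et al. (2007): the starting density, step factor, number of reversals, averaging rule, and the hypothetical listener's psychometric function (including its chance rate of 1/3) are all assumed values. The 70.7% convergence point follows from transformed up-down logic (Levitt, 1971): the track is stationary where the probability of two correct responses in a row is 0.5, i.e., p² = 0.5.

```python
import math
import random


def two_up_one_down(p_correct, start=0.5, step=1.4, n_reversals=12, n_avg=6,
                    max_trials=10_000, seed=0):
    """Adaptive track on ripple density, where a HIGHER density is HARDER.

    Two consecutive correct responses -> density * step (harder);
    one incorrect response -> density / step (easier).
    Returns the geometric mean of the last n_avg reversal densities.
    """
    rng = random.Random(seed)
    density, run_correct, last_dir = start, 0, 0
    reversals = []
    for _ in range(max_trials):
        if rng.random() < p_correct(density):
            run_correct += 1
            if run_correct < 2:
                continue                   # wait for a second correct response
            run_correct, direction = 0, +1
        else:
            run_correct, direction = 0, -1
        if last_dir and direction != last_dir:
            reversals.append(density)      # the track changed direction here
            if len(reversals) == n_reversals:
                break
        last_dir = direction
        density = density * step if direction > 0 else density / step
    if len(reversals) < n_avg:
        raise RuntimeError("track did not produce enough reversals")
    tail = reversals[-n_avg:]
    return math.exp(sum(math.log(d) for d in tail) / n_avg)


def listener(density, chance=1 / 3, d0=2.0, slope=3.0):
    """Hypothetical psychometric function: p(correct) falls toward chance as density grows."""
    return chance + (1 - chance) / (1 + (density / d0) ** slope)


print(f"target p(correct) = {math.sqrt(0.5):.3f}")
estimates = [two_up_one_down(listener, seed=s) for s in range(200)]
print(f"mean threshold = {sum(estimates) / len(estimates):.2f} ripples/octave")
```

Because the track moves toward higher densities only after successes, a listener who sustains 70.7% correct at a higher density ends with a higher threshold, which is why higher thresholds indicate better performance.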

References

- Anderson, E. S., Oxenham, A. J., Kreft, H., Nelson, P. B., and Nelson, D. A. (2011). “Comparing spectral tuning curves, spectral ripple resolution, and speech perception in cochlear implant users,” J. Acoust. Soc. Am. 130, 364–375. 10.1121/1.3589255

- Azadpour, M., and McKay, C. M. (2012). “A psychophysical method for measuring spatial resolution in cochlear implants,” J. Assoc. Res. Otolaryngol. 13, 145–157. 10.1007/s10162-011-0294-z

- Bacon, S. P., and Viemeister, N. F. (1985). “Temporal modulation transfer functions in normal-hearing and hearing-impaired listeners,” Audiology 24, 117–134. 10.3109/00206098509081545

- Berenstein, C. K., Mens, L. H., Mulder, J. J., and Vanpoucke, F. J. (2008). “Current steering and current focusing in cochlear implants: Comparison of monopolar, tripolar, and virtual channel electrode configurations,” Ear Hear. 29(2), 250–260. 10.1097/AUD.0b013e3181645336

- Boëx, C., de Balthasar, C., Kós, M. I., and Pelizzone, M. (2003). “Electrical field interactions in different cochlear implant systems,” J. Acoust. Soc. Am. 114, 2049–2057. 10.1121/1.1610451

- Brown, C. J., Abbas, P. J., Bertschy, M., Tyler, R. S., Lowder, M., Takahashi, G., Purdy, S., and Gantz, B. J. (1995). “Longitudinal assessment of physiological and psychophysical measures in cochlear implant users,” Ear Hear. 16, 439–449. 10.1097/00003446-199510000-00001

- Busby, P. A., Tong, Y. C., and Clark, G. M. (1993). “Electrode position, repetition rate, and speech perception by early- and late-deafened cochlear implant patients,” J. Acoust. Soc. Am. 93, 1058–1067. 10.1121/1.405554

- Chatterjee, M., and Shannon, R. V. (1998). “Forward masked excitation patterns in multielectrode electrical stimulation,” J. Acoust. Soc. Am. 103, 2565–2572. 10.1121/1.422777

- de Balthasar, C., Boëx, C., Cosendai, G., Valentini, G., Sigrist, A., and Pelizzone, M. (2003). “Channel interactions with high-rate biphasic electrical stimulation in cochlear implant subjects,” Hear. Res. 182, 77–87. 10.1016/S0378-5955(03)00174-6

- Donaldson, G. S., and Nelson, D. A. (2000). “Place-pitch sensitivity and its relation to consonant recognition by cochlear implant listeners using the MPEAK and SPEAK speech processing strategies,” J. Acoust. Soc. Am. 107, 1645–1658. 10.1121/1.428449

- Dorman, M. F., Smith, L., McCandless, G., Dunnavant, G., Parkin, J., and Dankowski, K. (1990). “Pitch scaling and speech understanding by patients who use the Ineraid cochlear implant,” Ear Hear. 11, 310–315. 10.1097/00003446-199008000-00010

- Drennan, W. R., and Rubinstein, J. T. (2008). “Music perception in cochlear implant users and its relationship with psychophysical capabilities,” J. Rehabil. Res. Dev. 45, 779–790. 10.1682/JRRD.2007.08.0118

- Drennan, W. R., Won, J. H., Jameyson, M. E., and Rubinstein, J. T. (2011). “Stability of clinically meaningful, non-linguistic measures of hearing performance with a cochlear implant,” in the 2011 Conference on Implantable Auditory Prostheses, Pacific Grove, CA.

- Drennan, W. R., Won, J. H., Nie, K., Jameyson, M. E., and Rubinstein, J. T. (2010). “Sensitivity of psychophysical measures to signal processor modifications in cochlear implant users,” Hear. Res. 262, 1–8. 10.1016/j.heares.2010.02.003

- Eddington, D. K., and Whearty, M. (2001). “Electrode interaction and speech reception using lateral-wall and medial-wall electrode systems,” in the 2001 Conference on Implantable Auditory Prostheses, Pacific Grove, CA.

- Fu, Q.-J., Shannon, R. V., and Galvin, J. J., III (2002). “Perceptual learning following changes in the frequency-to-electrode assignment with the Nucleus-22 cochlear implant,” J. Acoust. Soc. Am. 112, 1664–1674. 10.1121/1.1502901

- Goupell, M. J., Laback, B., Majdak, P., and Baumgartner, W. D. (2008). “Current-level discrimination and spectral profile analysis in multi-channel electrical stimulation,” J. Acoust. Soc. Am. 124, 3142–3157. 10.1121/1.2981638

- Henry, B. A., McKay, C. M., McDermott, H. J., and Clark, G. M. (2000). “The relationship between speech perception and electrode discrimination in cochlear implantees,” J. Acoust. Soc. Am. 108, 1269–1280. 10.1121/1.1287711

- Henry, B. A., and Turner, C. W. (2003). “The resolution of complex spectral patterns by cochlear implant and normal-hearing listeners,” J. Acoust. Soc. Am. 113, 2861–2873. 10.1121/1.1561900

- Henry, B. A., Turner, C. W., and Behrens, A. (2005). “Spectral peak resolution and speech recognition in quiet: Normal hearing, hearing impaired, and cochlear implant listeners,” J. Acoust. Soc. Am. 118, 1111–1121. 10.1121/1.1944567

- Levitt, H. (1971). “Transformed up-down methods in psychoacoustics,” J. Acoust. Soc. Am. 49, 467–477. 10.1121/1.1912375

- Litvak, L. M., Spahr, A. J., Saoji, A. A., and Fridman, G. Y. (2007). “Relationship between perception of spectral ripple and speech recognition in cochlear implant and vocoder listeners,” J. Acoust. Soc. Am. 122, 982–991. 10.1121/1.2749413

- Macpherson, E. A., and Middlebrooks, J. C. (2003). “Vertical-plane sound localization probed with ripple-spectrum noise,” J. Acoust. Soc. Am. 114, 430–445. 10.1121/1.1582174

- McKay, C. M., Azadpour, M., and Akhoun, I. (2009). “In search of frequency resolution,” in the 2009 Conference on Implantable Auditory Prostheses, Tahoe City, CA, p. 54.

- Mehr, M. A., Turner, C. W., and Parkinson, A. (2001). “Channel weights for speech recognition in cochlear implant users,” J. Acoust. Soc. Am. 109, 359–366. 10.1121/1.1322021

- Miller, J., and Ulrich, R. (2001). “On the analysis of psychometric functions: The Spearman-Kärber method,” Percept. Psychophys. 63, 1399–1420. 10.3758/BF03194551

- Miller, J., and Ulrich, R. (2004). “A computer program for Spearman-Kärber and probit analysis of psychometric function data,” Behav. Res. Methods Instrum. Comput. 36, 11–16. 10.3758/BF03195545

- Nelson, D. A., Van Tasell, D. J., Schroder, A. C., Soli, S., and Levine, S. (1995). “Electrode ranking of place pitch and speech recognition in electrical hearing,” J. Acoust. Soc. Am. 98, 1987–1999. 10.1121/1.413317

- Peterson, G., and Lehiste, I. (1962). “Revised CNC lists for auditory tests,” J. Speech Hear. Disord. 27, 62–70.

- Rubinstein, J. T. (2012). “Cochlear implants: The hazards of unexpected success,” Can. Med. Assoc. J. 184, 1343–1344. 10.1503/cmaj.111743

- Saoji, A. A., Litvak, L. M., Spahr, A. J., and Eddins, D. A. (2009). “Spectral modulation detection and vowel and consonant identifications in cochlear implant listeners,” J. Acoust. Soc. Am. 126, 955–958. 10.1121/1.3179670

- Stickney, G. S., Loizou, P. C., Mishra, L. N., Assmann, P. F., Shannon, R. V., and Opie, J. M. (2006). “Effects of electrode design and configuration on channel interactions,” Hear. Res. 211, 33–45. 10.1016/j.heares.2005.08.008

- Summers, V., and Leek, M. R. (1994). “The internal representation of spectral contrast in hearing-impaired listeners,” J. Acoust. Soc. Am. 95, 3518–3528. 10.1121/1.409969

- Supin, A. Ya., Popov, V. V., Milekhina, O. N., and Tarakanov, M. B. (1994). “Frequency resolving power measured by rippled noise,” Hear. Res. 78, 31–40. 10.1016/0378-5955(94)90041-8

- Supin, A. Ya., Popov, V. V., Milekhina, O. N., and Tarakanov, M. B. (1997). “Frequency-temporal resolution of hearing measured by rippled noise,” Hear. Res. 108, 17–27. 10.1016/S0378-5955(97)00035-X

- Supin, A. Ya., Popov, V. V., Milekhina, O. N., and Tarakanov, M. B. (1999). “Ripple depth and density resolution of rippled noise,” J. Acoust. Soc. Am. 106, 2800–2804. 10.1121/1.428105

- Throckmorton, C. S., and Collins, L. M. (1999). “Investigation of the effects of temporal and spatial interactions on speech-recognition skills in cochlear-implant subjects,” J. Acoust. Soc. Am. 105, 861–873. 10.1121/1.426275

- Wilson, B. S., and Dorman, M. F. (2008). “Cochlear implants: A remarkable past and a brilliant future,” Hear. Res. 242, 3–21. 10.1016/j.heares.2008.06.005

- Won, J. H., Drennan, W. R., Kang, R. S., and Rubinstein, J. T. (2010). “Psychoacoustic abilities associated with music perception in cochlear implant users,” Ear Hear. 31, 796–805. 10.1097/AUD.0b013e3181e8b7bd

- Won, J. H., Drennan, W. R., Nie, K., Jameyson, E. M., and Rubinstein, J. T. (2011a). “Acoustic temporal modulation detection and speech perception in cochlear implant listeners,” J. Acoust. Soc. Am. 130, 376–388. 10.1121/1.3592521

- Won, J. H., Drennan, W. R., and Rubinstein, J. T. (2007). “Spectral-ripple resolution correlates with speech reception in noise in cochlear implant users,” J. Assoc. Res. Otolaryngol. 8, 384–392. 10.1007/s10162-007-0085-8

- Won, J. H., Jones, G. L., Drennan, W. R., Jameyson, E. M., and Rubinstein, J. T. (2011b). “Evidence of across-channel processing for spectral-ripple discrimination in cochlear implant listeners,” J. Acoust. Soc. Am. 130, 2088–2097. 10.1121/1.3624820

- Zeng, F.-G., Rebscher, S., Harrison, W., Sun, X., and Feng, H. (2008). “Cochlear implants: System design, integration, and evaluation,” IEEE Rev. Biomed. Eng. 1, 115–142. 10.1109/RBME.2008.2008250

- Zwolan, T. A., Collins, L. M., and Wakefield, G. H. (1997). “Electrode discrimination and speech recognition in postlingually deafened adult cochlear implant subjects,” J. Acoust. Soc. Am. 102, 3673–3685. 10.1121/1.420401