Abstract

Context

A participant death is a serious event in a clinical trial and needs to be unambiguously and publicly reported.

Objective

To examine (1) how often and how numbers of deaths are reported in ClinicalTrials.gov records; (2) how often total deaths can be determined per arm within a ClinicalTrials.gov results record and its corresponding publication and (3) whether counts may be discordant.

Design

Registry-based study of clinical trial results reporting.

Setting

ClinicalTrials.gov results database searched in July 2011 and matched PubMed publications.

Selection criteria

A random sample of ClinicalTrials.gov results records. Detailed review of records with a single corresponding publication.

Main outcome measure

ClinicalTrials.gov records reporting number of deaths under participant flow, primary or secondary outcome or serious adverse events. Consistency in reporting of number of deaths between ClinicalTrials.gov records and corresponding publications.

Results

In 500 randomly selected ClinicalTrials.gov records, only 123 records (25%) reported a number for deaths. Reporting of deaths across data modules for participant flow, primary or secondary outcomes and serious adverse events was variable. In a sample of 27 pairs of ClinicalTrials.gov records with number of deaths and corresponding publications, total deaths per arm could only be determined in 56% (15/27 pairs) but were discordant in 19% (5/27). In 27 pairs of ClinicalTrials.gov records without any information on number of deaths, 48% (13/27) were discordant since the publications reported absence of deaths in 33% (9/27) and positive death numbers in 15% (4/27).

Conclusions

Deaths are variably reported in ClinicalTrials.gov records. A reliable total number of deaths per arm cannot always be determined with certainty or can be discordant with the number reported in corresponding trial publications. This highlights a need for unambiguous and complete reporting of the number of deaths in trial registries and publications.

Keywords: Epidemiology, Public Health, Statistics & Research Methods, Qualitative Research

Article summary

Article focus

We hypothesised that the lack of clear expectations for reporting all deaths in clinical trials gives rise to discrepancies in the number of deaths reported across reports of a trial.

Key message

There is a lack of clarity, consistency and agreement in reporting of deaths in clinical trials which highlights the need for unambiguous templates to standardise reporting of total number of deaths per arm in ClinicalTrials.gov records and more explicit reporting guidelines for peer-reviewed publications.

Strengths and limitations of this study

Our findings indicate a need for explicit expectations for reporting of all deaths.

We suggest amendments to reporting formats such as: number of participants who started per arm, total number of deaths from any cause per arm and the time point of last ascertainment to prompt study investigators to sum up all deaths across participant loss, primary or secondary outcomes and serious adverse events.

We examined only a small number of matched cases which may not be generalisable. Nevertheless, even these small samples illustrate ambiguity within records and inconsistencies across reports of the same trial.

We used only data from publicly available reports and counted only actual numbers of deaths, not alternate information on death such as percentages or survival analyses, as exact back calculations are not always possible.

We followed operational rules to determine total deaths per arm within a report. These operational rules were not overly stringent and more rigid expectations would have resulted in fewer reports with the data amenable for detailed analysis.

Introduction

The death of a clinical trial subject is a serious event that needs to be publicly disclosed. Incomplete reporting of deaths may overemphasise health benefits when benefits and harms of medical interventions are summarised.1 2 For unambiguous reporting, all deaths have to be reported for each trial arm and the absence of deaths must be explicitly stated if none were known to have occurred.

Formal reporting expectations for public disclosure of deaths in clinical trials are complex. During a trial, the USA Food and Drug Administration (FDA) expects a sponsor of an investigational new drug to submit annual reports that include a list of subjects who died during participation in the investigation, with the cause of death for each subject.3 This means all deaths must be reported to the FDA, regardless of cause.

Sponsors of investigational new drugs also need to promptly report to the FDA and trial investigators serious unexpected events if they are suspected adverse reactions, meaning that there is a ‘reasonable possibility’ that the drug caused it.4 5 Further, the FDA regulations specify that the sponsor report ‘an aggregate analysis of specific events observed in a clinical trial … that indicates those events occur more frequently in the drug treatment group than in a concurrent or historical control group’6 suggesting that the events may be caused by the drug.5

After trial completion, trial registries such as ClinicalTrials.gov provide web-based public records of trial results of federally and privately funded trials.7 Results reporting in ClinicalTrials.gov is mandated by the USA FDA Amendments Act which requires the reporting of summary results for certain studies within 1 year of completing data collection for the prespecified primary outcome.7–9 These are phase II-IV interventional studies of FDA approved drugs, biological products and devices with at least one US site ongoing after 2007.7–9 Based on this Act, the results data bank of the ClinicalTrials.gov registry shall include ‘a table of anticipated and unanticipated serious adverse events grouped by organ system with number and frequency in each arms of the trial’.10 The ClinicalTrials.gov data element definitions define adverse events as ‘unfavorable changes in health …, that occur in trial participants during the clinical trial or within a specified period following the trial’ and under serious adverse events include ‘adverse events that result in death’.11 This reporting of deaths as a serious adverse event is currently the only requirement for reporting of deaths in ClinicalTrials.gov and requires a judgment about the possibility of a causal association. However, causality assessment for a non-specific event such as death may be a challenge.12

The peer-reviewed publication of clinical trials is guided by CONSORT.13 The main reporting CONSORT guideline does not specify a need to report all deaths; however, the extension for reporting of adverse events states that ‘Authors should always report deaths in each study group during a trial, regardless of whether death is an end point and regardless of whether attribution to a specific cause is possible’.14

We hypothesised that the complex reporting expectations for death give rise to discordance in deaths documented across reports of a trial. We first examined how number of deaths from any cause was reported in ClinicalTrials.gov records. We then attempted to determine the total deaths per arm in a ClinicalTrials.gov results record and in the corresponding publication. Finally, we conducted a detailed review of cases with discrepancies in death numbers to identify possible explanations.

Methods

The ClinicalTrials.gov team provided us with a database of results records indexed in ClinicalTrials.gov (search date 12 July 2011). The database contained all records of phase II, III or IV interventional trials with results entered between 9 September 2009 and 14 June 2011. In 500 randomly selected records, we examined the record for reporting of any number of deaths. This entailed review of three of the four scientific data modules, that is, participant flow, primary and secondary outcomes and serious adverse events, but not baseline characteristics. Online supplementary appendix 2 shows screenshots for the three pertinent modules from a sample ClinicalTrials.gov record. We considered deaths only when a zero or a positive number for death was reported in any module, that is, we did not derive death numbers from information on deaths reported as percentages, rates, risks or survival curves. In the 123 records that reported some number of deaths, we examined in which module deaths were reported. Deaths from serious adverse events would presumably be a reason for not completing a trial and qualify to be listed as such in the participant flow module. We examined how many records reported number of deaths only in the serious adverse events module without reporting any deaths as a reason for discontinuation.
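The module check described above can be illustrated with a short sketch. It is not the procedure used in the study, where records were reviewed by hand; the dictionary layout, the module keys and the function names are assumptions introduced here for illustration only.

```python
from typing import Dict, List, Optional

# Hypothetical keys for the three scientific data modules that were reviewed.
MODULES = ("participant_flow", "outcomes", "serious_adverse_events")


def modules_with_death_counts(record: Dict[str, Optional[int]]) -> List[str]:
    """Return the modules in which a crude death count (zero or positive) appears.

    `record` maps a module key to an integer count, or to None when the module
    gives no crude number (for example only a percentage or a survival curve).
    """
    return [m for m in MODULES if record.get(m) is not None]


def reported_only_as_sae(record: Dict[str, Optional[int]]) -> bool:
    """True when deaths are counted under serious adverse events and nowhere else."""
    return modules_with_death_counts(record) == ["serious_adverse_events"]


# Hypothetical record: two deaths listed as serious adverse events only.
example = {"participant_flow": None, "outcomes": None, "serious_adverse_events": 2}
print(modules_with_death_counts(example))
print(reported_only_as_sae(example))
```

A reported zero is treated as a count, in line with the rule that a zero or a positive number qualifies.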

Among the 500 records, we also identified studies with an outcome measure description that implied ascertainment of death, including overall survival, time to mortality, all cause deaths, disease-specific deaths, composite outcomes including death and serious adverse events including deaths. In this subset, we examined how often actual numbers of deaths were reported as part of the primary or secondary outcome module when the outcome suggested that deaths were collected.

We then compared death reporting between ClinicalTrials.gov results records and corresponding publications. To select a sample of pairs, we used two criteria: (1) ClinicalTrials.gov records had to provide only a single PubMed Identifier matching a publication describing trial results, to avoid the need for reconciliation across several publications, and (2) publications had to be electronically accessible through our library. Based on these two criteria, we retrieved 27 publications matching the ClinicalTrials.gov records that reported death numbers. We sampled another 27 pairs of publications and ClinicalTrials.gov records where the record did not report death numbers.

For each record or publication, we attempted to determine the total deaths per arm and the numbers randomised or analysed per arm based on the data available in the record and publication, without contacting authors. This required assumptions when reconciling number of deaths across the three pertinent modules in the ClinicalTrials.gov record. For the publications, we searched the sections of the article corresponding to the modules. We used the following operational rules for decision-making:

- If a report did not provide any direct information on number of deaths, no counts were implied.

- If a number of deaths was reported in only one module in the ClinicalTrials.gov record or the corresponding sections in the publication, that is, either in participant flow, primary or secondary outcome or adverse events, this was determined to be the total number of deaths.

- Otherwise, as a default, the highest unambiguous number of deaths in one module was taken as the total number of deaths.
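The three operational rules can be written down as a small function. This is a minimal sketch under stated assumptions: the function name and the dictionary layout are hypothetical, and every number passed in is assumed to be unambiguous, which the manual review had to judge case by case.

```python
from typing import Dict, Optional


def total_deaths_per_arm(counts: Dict[str, Optional[int]]) -> Optional[int]:
    """Apply the three operational rules to one arm of one report.

    `counts` maps a module (or the matching publication section) to the crude
    number of deaths reported there, or None when no number is given.
    """
    reported = [n for n in counts.values() if n is not None]
    if not reported:
        return None          # rule 1: no direct information, no count implied
    if len(reported) == 1:
        return reported[0]   # rule 2: a single module gives the total
    return max(reported)     # rule 3: default to the highest reported number


# Arm 1 of Case 2 (table 1): the publication reports 1 death in the flow
# diagram and 4 under serious adverse events; the record reports 1 in flow only.
print(total_deaths_per_arm({"flow": 1, "outcome": None, "sae": 4}))
print(total_deaths_per_arm({"flow": 1, "outcome": None, "sae": None}))
```

Taking the maximum rather than the sum reflects the uncertainty about whether deaths listed in different modules are the same individuals.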

Online supplementary appendix 3 shows an example of a record where the total number of deaths could not be determined with certainty based on these rules. When the number of deaths could be determined for both the ClinicalTrials.gov record and the corresponding publication following the rules, we compared the numbers between the record and the publication. A pair was discordant either when the total number of deaths was not the same, or when the ClinicalTrials.gov record did not include any information on death numbers, yet the publication mentioned a presence or absence of deaths. Discordant cases were reviewed in more detail. We extracted the denominators for number of deaths from information on number started, randomised or analysed. We further captured information on duration of follow-up and looked for possible reasons for differences in the number of deaths.
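The comparison of a record with its publication can be sketched in the same way. The function and type names are hypothetical. Two branches go beyond the definition given here: treating two reports without death numbers as concordant follows the way such pairs are tabulated in the Results, and the label for a record with numbers whose publication has none is purely an assumption of this sketch.

```python
from typing import Optional, Tuple

# Total deaths per arm, or None when a report gives no death numbers at all.
Totals = Optional[Tuple[int, ...]]


def classify_pair(record: Totals, publication: Totals) -> str:
    """Compare per-arm death totals between a registry record and its publication."""
    if record is None and publication is None:
        return "concordant"      # neither report gives death numbers
    if record is None:
        return "discordant"      # publication states deaths (or none), record is silent
    if publication is None:
        return "not assessable"  # assumption: this situation is not defined in the text
    return "concordant" if record == publication else "discordant"


print(classify_pair((1, 1), (4, 1)))  # totals as in Case 2 of table 1
print(classify_pair(None, (0, 0)))    # publication affirms no deaths, record is silent
```

The sketch does not model pairs in which a total could not be determined unambiguously; those were set aside before any comparison.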

Results

Reporting of crude number of deaths in ClinicalTrials.gov results records

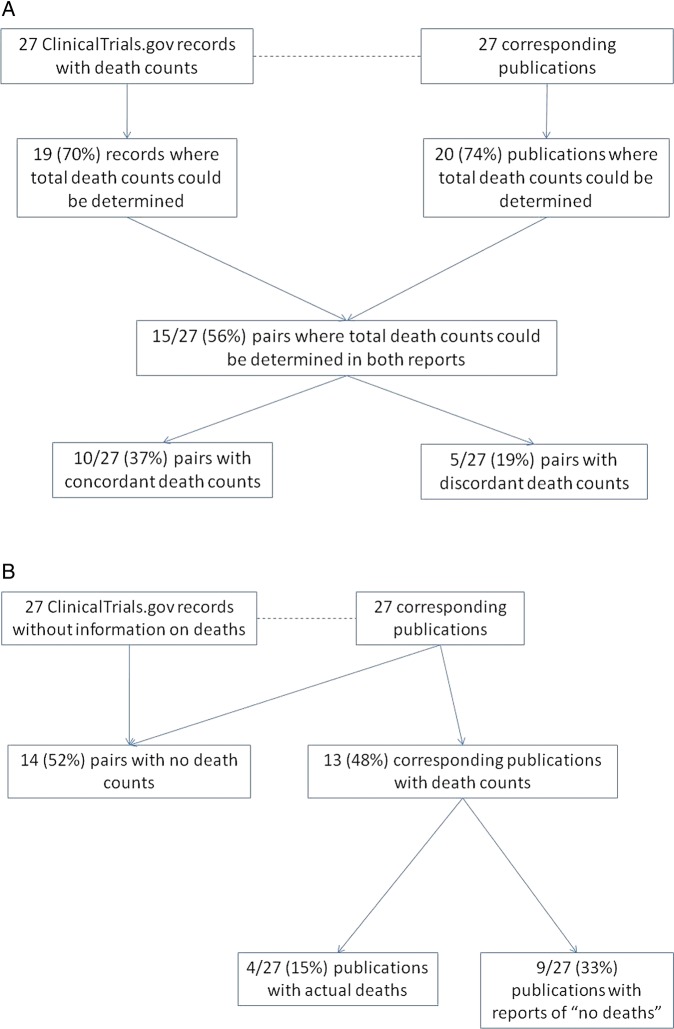

In July 2011, there were 1981 records with results in ClinicalTrials.gov and 500 records were randomly chosen for further analysis (see online supplementary appendix figure 1). These included 123 records (25%) which reported a number of deaths in at least one module. Deaths were variably reported across the three modules of participant flow, primary or secondary outcome and serious adverse events (figure 1). Sixty-four per cent of the records reported death numbers only in one of the modules, 32% in two modules and 4% in all of them. Approximately one-fifth (27/123) of the records reported number of deaths only in the module for the serious adverse events, that is, there were no deaths reported in the participant flow as a reason for not completing the trial. One-fifth (24/123) reported deaths in both of these modules.

Figure 1.

Reporting of number of deaths by data module in 123 ClinicalTrials.gov records.

Of the 500 records, we identified 97 with a primary or secondary outcome measure definition that implied ascertainment of deaths. Of these 97, 32 (33%) reported a crude number of deaths in the primary or secondary outcome module, with or without a result for death in another metric, such as a percentage, rate or risk estimate. The 65 records that did not report a crude number of deaths in the primary or secondary outcome module nonetheless reported a number of deaths under participant flow or serious adverse events.
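The proportions quoted in this subsection can be recomputed directly from the counts. The helper name `pct` is hypothetical; the counts are those given in the text.

```python
def pct(numerator: int, denominator: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


print(pct(123, 500))  # records reporting any number of deaths -> 25
print(pct(27, 123))   # deaths reported only under serious adverse events -> 22
print(pct(24, 123))   # deaths under serious adverse events and participant flow -> 20
print(pct(32, 97))    # crude deaths in the outcome module when death was implied -> 33
```

The two fractions described as about one-fifth correspond to 22% and 20%.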

Reporting of information on death, determination of total number of deaths per arm and congruency in matched pairs

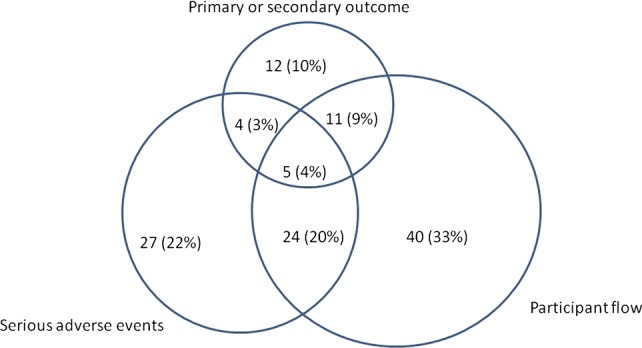

We examined congruence of reporting of number of deaths across pairs of ClinicalTrials.gov records and corresponding trial publications. Figure 2 tabulates whether there was any information on number of deaths in a trial report, and if so, whether total number of deaths could be determined per arm following simple rules, and finally whether the total numbers per arm were concordant or discordant across pairs. We examined 27 pairs where the ClinicalTrials.gov record contained some information on number of deaths and 27 pairs where the ClinicalTrials.gov record did not contain any information on death numbers.

Figure 2.

Consistency of death in matched pairs in (A) those with number of deaths in ClinicalTrials.gov and (B) those without any information on death numbers in ClinicalTrials.gov.

Of the 27 pairs with number of deaths reported in the ClinicalTrials.gov record, there were 15 (56%) in which the total number of deaths per arm could be determined in both reports (figure 2A). The number of deaths was concordant between the ClinicalTrials.gov record and the publication in 10 pairs (37%), but discordant in five (19%). In the remaining 12 (44%), concordance could not be assessed because the total number of deaths per arm could not be determined unambiguously for the record and the publication. The five discordant pairs are shown in detail in table 1.

Table 1.

Cases with number of deaths in ClinicalTrials.gov record that are discordant with the corresponding publication

| Population | Was death a specified outcome* (define) | Reporting module or location | ClinicalTrials.gov record, Arm 1 (deaths/randomised) | ClinicalTrials.gov record, Arm 2 (deaths/randomised) | Publication, Arm 1 (deaths/randomised) | Publication, Arm 2 (deaths/randomised) |
|---|---|---|---|---|---|---|
| Case 1: Lung cancer | Yes; survival is a secondary outcome | Follow up | While on study drug+30 d after last dose (estimated 4 mo) | | From random assignment until first day of progression or until death | |
| | | Flow | -/52 | -/51 | 4/52 | 2/51 |
| | | Outcome | -/52 | -/51 | – | – |
| | | SAE | 1/52 | 0/51 | 1/52 | 2/51 |
| | | Total | >1/52 | >0/51 | >4/52 | >2/51 |
| | | Summary | Both CT.gov record [NCT00085839] and the publication [PMID18281658] reported hazard ratios for survival and mean survival in months, but not the number of deaths for the outcome. Both reported deaths under serious adverse events, but counts differed between record and report. In addition the publication reported deaths in the flow diagram, while the record did not. The total number of deaths is discrepant between record and publication; however, neither is likely to represent the total number of deaths that occurred during the study. | | | |
| Case 2: Multiple myeloma | No | Follow up | Up to 18 mo | | Enrolled 2/06-12/06, analysis through 8/2007 | |
| | | Flow | 1/53 | 1/43 | 1/53 | 1/43 |
| | | Outcome | -/53 | -/41 | – | – |
| | | SAE | -/53 | -/42 | 4/53 | 1/42 |
| | | Total | 1/53 | 1/43 | 4/53 | 1/43 |
| | | Summary | Both CT.gov record [NCT00259740] and publication [PMID19714603] reported 1 death per arm in the participant flow. The total number of deaths is discrepant between record and publication, however, since the publication also reported 5 deaths under SAE. | | | |
| Case 3: Refractory prostate cancer | Yes; survival is the primary outcome | Follow up | Analyzed through 9/2009 | | Analyzed through 9/2009 | |
| | | Flow | -/377 | -/378 | -/377 | -/378 |
| | | Outcome | -/377 | -/378 | 279/377 | 234/378 |
| | | SAE | 0/371 sudden death | 1/371 sudden death | 275/371 | 227/371 |
| | | Total | >0/377 | >1/371 | 279/377 | 234/378 |
| | | Summary | The CT.gov record [NCT00417079] reported hazard ratios for survival as well as survival in months, but not the total number of deaths per arm for this outcome. The publication [PMID20888992] reported a large number of deaths per arm for the outcome of survival (as) and also a large number of deaths under SAE. The numerators and denominators differed slightly based on intention to treat analyses or per protocol analyses. The CT.gov record reported only one death under SAE; although based on the survival analysis, it appeared likely that the total number of deaths in the study was higher. The total number of deaths is discrepant between record and report. | | | |
| Case 4: Chronic Obstructive Pulmonary Disease | Yes; death is a secondary outcome | Follow up | 52 wk | | 52 wk | |
| | | Flow | -/772 | -/796 | -/772 | -/796 |
| | | Outcome | -/25 | -/25 | 25/772 | 25/796 |
| | | SAE | 1/778 sudden death; 0/778 death | 3/790 sudden death; 2/790 death | -/778 | -/790 |
| | | Total | 25/772 | 25/796 | 25/772 | 25/796 |
| | | Summary | The CT.gov record [NCT00297115] reported 25 per arm as number analyzed in the outcome module and defined the number analyzed as the number died. Further, the CT.gov record reports deaths under SAE using two different death definitions (‘sudden death’ and ‘death’), while the publication [PMID19716960] does not report any. Assuming that the deaths reported under SAE in the record are included in those reported for the outcome of death, the total number of deaths is consistent across record and publication. The publication describes 2 trials of similar design with two separate NCT numbers, but only the results corresponding to the trial in the index CT.gov record were compared. | | | |
| Case 5: Prostate cancer | Yes; death is a secondary outcome | Follow up | From start of therapy up to 30 d after last dose | | Duration of therapy+30 d | |
| | | Flow | -/48 | -/47 | – | -/47 |
| | | Outcome | 2/48 | 2/47 | – | -/47 |
| | | SAE | -/95 | | | 2/47 |
| | | Total | 2/48 | 2/47 | – | 2/47 |
| | | Summary | The CT.gov record [NCT00385580] reported results for 2 arms. The publication presents only results for Arm 2. The CT.gov report shows 2 deaths in the outcome module, but none under SAE. The publication [PMID19920114] shows 2 deaths under SAE. The number of deaths reported for this arm was consistent between record and publication. | | | |

*In the ClinicalTrials.gov record.

Data collection in ClinicalTrials.gov on Feb 14 2012.

Abbreviations: CT.gov, ClinicalTrials.gov; D/C, discontinuation; NCT, National Clinical Trial (number); SAE, serious adverse events; – (dash), not reported.

In the 27 pairs where the ClinicalTrials.gov record did not contain any information on death numbers, 14 (52%) pairs were concordant regarding the absence of information on deaths, that is, the trial publications also did not contain any death numbers (figure 2B). However, 13 (48%) publications contained information on number of deaths. In nine studies (33%), the published study affirmatively reported ‘no deaths’ and in four studies (15%), the published report mentioned a positive number of deaths (figure 2B). These four cases are shown in table 2. For example, in Case 8, the ClinicalTrials.gov record did not contain any information on number of deaths, but the publication reported one death under serious adverse events (table 2).
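As a consistency check, the breakdown of the two groups of 27 matched pairs can be tallied from the counts given in this section. The dictionary labels and names below are hypothetical shorthand for the categories in figure 2; only the counts come from the text.

```python
from typing import Dict

PAIRS_PER_GROUP = 27

# Group A: record reports death numbers. Group B: record reports none.
group_a = {"concordant": 10, "discordant": 5, "total not determinable": 12}
group_b = {
    "no death numbers in publication": 14,
    "publication reports no deaths": 9,
    "publication reports deaths": 4,
}


def percentages(counts: Dict[str, int], total: int = PAIRS_PER_GROUP) -> Dict[str, int]:
    """Whole-number percentage of pairs in each category."""
    return {label: round(100 * n / total) for label, n in counts.items()}


# Each breakdown must account for every pair in its group.
for counts in (group_a, group_b):
    assert sum(counts.values()) == PAIRS_PER_GROUP

print(percentages(group_a))
print(percentages(group_b))
```

The 15 pairs with determinable totals in group A are the 10 concordant plus the 5 discordant pairs, and the 13 discordant pairs in group B are the 9 plus 4 publications that gave death information.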

Table 2.

Cases without any information on death numbers in ClinicalTrials.gov record but reports of number of deaths in the corresponding publication

| Population | Was death a specified outcome* (define) | Reporting module or location | ClinicalTrials.gov record, Arm 1 (deaths/randomised) | ClinicalTrials.gov record, Arm 2 (deaths/randomised) | Publication, Arm 1 (deaths/randomised) | Publication, Arm 2 (deaths/randomised) |
|---|---|---|---|---|---|---|
| Case 6: Influenza vaccine in elderly | No | Follow up | 6 mo | | 6 mo | |
| | | Flow | -/857; -/848; -/870 | -/1262 | -/2575 | -/1262 |
| | | Outcome | –; –; – | – | – | – |
| | | SAE | -/855; -/848; -/870 | -/1260 | 16/2573 | 7/1260 |
| | | Total | -/2575 | -/1262 | 16/2575 | 7/1262 |
| | | Summary | The CT.gov record [NCT00391053] did not report death counts across 4 arms. The publication [PMID19508159] described 23 deaths under SAE for 2 arms, collapsing arms 1-3 into one. | | | |
| Case 7: Amyotrophic lateral sclerosis | No | Follow up | 9 mo | | 10 mo | |
| | | Flow | -/75 | -/75 | 3/75 | 5/75 |
| | | Outcome | -/75 | -/75 | – | – |
| | | SAE | -/75 | -/75 | 3/75 | 5/75 |
| | | Total | -/75 | -/75 | 3/75 | 5/75 |
| | | Summary | The CT.gov record [NCT00243932] did not report death counts. The publication [PMID19743457] describes 8 deaths under participant flow as well as under SAE, which are presumably the same. | | | |
| Case 8: Diabetes Mellitus Type 2 | No | Follow up | 26 wk | | 26 wk | |
| | | Flow | -/239 | -/241 | -/239 | -/241 |
| | | Outcome | – | – | – | – |
| | | SAE | -/231 | -/238 | 0/231 | 1/238 |
| | | Total | -/239 | -/241 | 0/239 | 1/241 |
| | | Summary | The CT.gov record [NCT00469092] did not report death counts. The publication [PMID19821654] describes one death under SAE as a ‘treatment emergent death’. It also reported 2 deaths during the run-in period that were not included in the participant flow. | | | |
| Case 9: Metastatic penile cancer | No (in record); Yes (in publication); overall survival was a reported outcome, unclear whether primary or secondary | Follow up | ‘Timeframe 9 y and 6 mo’ | | Duration of enrollment 4/2000 through 9/2008 (max FU up to 7 y 5 mo) | |
| | | Flow | -/30 | | -/30 | |
| | | Outcome | -/30 | | 20/30 | |
| | | SAE | -/30 | | – | |
| | | Total | -/30 | | 20/30 | |
| | | Summary | The CT.gov record [NCT00512096] did not include death counts even though “overall survival” was a pre-specified outcome. The publication [PMID20625118] reported 20 deaths for this outcome. | | | |

*In the ClinicalTrials.gov record.

Data collection in ClinicalTrials.gov on Feb 14 2012.

CT.gov, ClinicalTrials.gov; D/C, discontinuation; FU, follow up; NCT, National Clinical Trial (number); SAE, serious adverse events; – (dash), not reported.

Review of cases with discordant counts

Tables 1 and 2 show the detailed review of the cases with discordant counts. For each case, the crude number of deaths for each module or reporting location for the ClinicalTrials.gov record and the corresponding publication are shown, as well as the total number of deaths per arm that was determined following our operational rules. The summary contains comments and interpretation of the discrepancies.

In several cases, information on duration of follow-up or the time point of last assessment was not exact or varied across the reports. Comparison of the number of deaths required reconciliation across reports with discordant numbers of arms (Cases 5 and 6) or discordant number of studies (Case 4). For example, in Case 5, the ClinicalTrials.gov record included two arms treated with different drug doses, while the publication reported results only for one of the arms. The number of deaths for this single arm was consistent across the ClinicalTrials.gov record and the publication. In the other cases with the same number of arms, the inference or certainty about the number of deaths within each arm differed. In addition to discordant counts, problems included a lack of crude death numbers even when death was an outcome of interest (Cases 1 and 3), imprecision in data entry (Case 4) and reporting of deaths under serious adverse events without specifying whether they were also counted as part of the death outcome (Case 4) or the participant flow (Case 7). In most cases, the publication included a slightly higher crude number of deaths. Large discrepancies were noted in cases where the record did not report counts for an outcome that included death, while the publication did (Cases 3 and 9).

Discussion

Our study highlights a failure of consistent and clear reporting of number of deaths in clinical trials. Only 25% of ClinicalTrials.gov results records provided some number of deaths, with great variation and overlap in the reporting across the three data modules for participant flow, primary or secondary outcomes or serious adverse events. While we expected records reporting death as a serious adverse event to also list death as a reason for discontinuation from the trial in participant flow, a fifth of the records with death numbers reported deaths only under serious adverse events. Among ClinicalTrials.gov records with a definition for a primary or secondary outcome that implies ascertainment of death, only a third provided crude number of deaths in the data module for the primary or secondary outcome. This heterogeneous reporting, and the uncertainty of whether deaths are reported in a redundant or exclusive manner across data modules, pose problems for reconciling deaths within a trial report.

Total number of deaths per arm could not always be determined unambiguously in the ClinicalTrials.gov results records or the corresponding publication. In the small sample where total deaths could be determined in both reports for the same trial, we identified examples where the number of deaths was discordant, highlighting lack of coherence and completeness. There were no clear patterns to explain the discrepancies. Finding a slightly higher crude number of deaths in publications than in ClinicalTrials.gov records suggests that the numbers of deaths in the ClinicalTrials.gov records are not complete.

Our findings of haphazard reporting of deaths in clinical trials indicate a need for explicit expectations in reporting of all deaths regardless of whether they are considered to be a serious adverse event or not. We suggest that reporting formats for aggregate clinical trial results need to be amended to provide the following information: number of individuals who started per arm, number of deaths from any cause per arm and the time point of last ascertainment. This should prompt study investigators to sum up all deaths across participant flow, primary or secondary outcomes and serious adverse events. Information on mean duration of follow-up is also needed to allow calculation of rates. Given their prominent role supported by the legal regulations, clinical trials registries can spearhead uniform and consistent reporting of important trial outcomes, such as deaths. Similarly, editors and sponsors must educate trialists to better meet the need for uniform reporting of all deaths.13 15
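One way to picture the suggested reporting fields is as a small per-arm record. This is only a sketch of the idea, not a proposed registry schema: the class name, field names and example values are hypothetical, and person-time is approximated as the number started multiplied by mean follow-up, which is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class ArmDeathSummary:
    arm: str
    participants_started: int
    deaths_any_cause: int
    last_ascertainment: str      # time point of last ascertainment, free text
    mean_follow_up_years: float  # needed to turn a count into a rate

    def __post_init__(self) -> None:
        # A zero is a valid, explicit statement that no deaths occurred.
        if not 0 <= self.deaths_any_cause <= self.participants_started:
            raise ValueError("deaths must lie between 0 and the number started")

    def crude_proportion(self) -> float:
        """Deaths from any cause divided by the number who started."""
        return self.deaths_any_cause / self.participants_started

    def deaths_per_100_person_years(self) -> float:
        """Approximate rate, assuming person-years = started x mean follow-up."""
        person_years = self.participants_started * self.mean_follow_up_years
        return 100 * self.deaths_any_cause / person_years


# Hypothetical arm, not taken from any trial in this study.
arm = ArmDeathSummary("Arm 1", 200, 10, "24 months after randomisation", 2.0)
print(arm.crude_proportion())
print(arm.deaths_per_100_person_years())
```

Keeping a single all-cause count per arm, separate from the module in which individual deaths were first recorded, is what removes the need for readers to reconcile modules themselves.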

Our study has several limitations. We examined only a small number of matched cases which may not be generalisable. Nevertheless, even these small samples illustrate ambiguity within records and inconsistencies across reports of the same trial. Also, we used only data available in these reports to determine the total number of deaths per arm. It is possible that individual patient data available to the trial investigators would allow more studies to provide unambiguous number of deaths. However, this information is not publicly available and clinicians and policy makers rely on publicly accessible trial results reported in ClinicalTrials.gov records and in journal publications. Further, we only gave credit to number of deaths and not to alternate information on death, such as per cents or survival analyses, as exact back calculations are not always possible. Finally, we followed operational rules to determine total deaths per arm within a report. These operational rules were not overly stringent and more rigid expectations would have resulted in fewer reports with the data amenable for detailed analysis.

Our findings have to be viewed in context. Only 22% of studies report their results in ClinicalTrials.gov within 1 year of completion16 and fewer than half of studies funded by the National Institutes of Health publish their results in a Medline indexed journal within 30 months of trial completion.17 Thus, our matched pairs are drawn from a minority of trials that have been compliant with both expectations: publication of results in ClinicalTrials.gov and publication in a peer-reviewed journal.

Full reporting of all deaths enables more accurate assessment of risks and benefits associated with treatments. Assessment of patient safety relies on capturing signals, even when they are non-specific.18 19 Small differences in numbers of deaths may bias results and distort estimates across studies. From an ethical perspective, it is desirable that trials ascertain and report all deaths regardless of whether they appear to be related to study conduct or intervention, are unforeseen or non-specific. Even with clear instructions and prompts for trials to report deaths, however, there may be remaining uncertainty depending on the rigour of ascertainment or surveillance and the selection of trial outcomes. Further, crude numbers are not the only format for reporting deaths in a trial. Time to event reporting may be more meaningful, but may introduce uncertainty about how censoring and deaths are handled. While both approaches to presenting information on deaths may be necessary and complementary, our study suggests that some improvement could be made with simple means of standardised reporting formats.

In summary, our study shows lack of clarity, consistency and agreement in reporting of deaths in clinical trials. This highlights the need for unambiguous templates to standardise reporting of total number of deaths per arm in ClinicalTrials.gov records and more stringent reporting guidelines for peer-reviewed publications.

Footnotes

Contributors: KU, AE and JL conceived the idea of the study and were responsible for the design of the study. AE and KU were responsible for the acquisition of the data, for undertaking the data analysis and produced the tables and graphs. JL provided critical input into the data analysis and the interpretation of the results. The initial draft of the manuscript was prepared by KU and AE and then circulated repeatedly among all authors for critical revision.

Funding: This project was funded under Contract No. HHSA 290 2007-10055-I, entitled ‘Evidence-based Protocol for Reviewing ClinicalTrials.gov Results Database,’ from the Agency for Healthcare Research and Quality, U.S. Department of Health and Human Services. The authors of this report are responsible for its content. Statements in the report should not be construed as endorsement by the Agency for Healthcare Research and Quality or the U.S. Department of Health and Human Services.

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: There are no additional data available.

References

- 1. Chou R, Helfand M. Challenges in systematic reviews that assess treatment harms. Ann Intern Med 2005;142(12 Pt 2):1090–9.

- 2. Ioannidis JP, Lau J. Completeness of safety reporting in randomized trials: an evaluation of 7 medical areas. JAMA 2001;285:437–43.

- 3. Food and Drug Administration. Code of Federal Regulations Title 21. 21 CFR 312.33(b)(3)—Annual Reports. 2012.

- 4. Food and Drug Administration. Investigational new drug safety reporting requirements for human drug and biological products and safety reporting requirements for bioavailability and bioequivalence studies in humans. Final rule. Fed Regist 2010;75:59935–63.

- 5. Sherman RB, Woodcock J, Norden J, et al. New FDA regulation to improve safety reporting in clinical trials. N Engl J Med 2011;365:3–5.

- 6. Food and Drug Administration. Code of Federal Regulations Title 21. 21 CFR 312.32(c)(1)(i)(C)-IND safety reporting. 2012.

- 7. Zarin DA, Tse T, Williams RJ, et al. The ClinicalTrials.gov results database—update and key issues. N Engl J Med 2011;364:852–60.

- 8. Food and Drug Administration Amendments Act. U.S. Public Law 110–85. 2012.

- 9. Tse T, Williams RJ, Zarin DA. Reporting ‘basic results’ in ClinicalTrials.gov. Chest 2009;136:295–303.

- 10. Food and Drug Administration Amendments Act. U.S. Public Law 110–85. 2007.

- 11. ClinicalTrials.gov. ClinicalTrials.gov ‘Basic Results’ Data Element Definitions. 2012. http://prsinfo.clinicaltrials.gov/results_definitions.html#AdverseEvents (accessed 26 Jun 2012).

- 12. Cato A. Premarketing adverse drug experiences: data management procedures. Unexpected death occurring early in clinical trials. Drug Inf J 1987;21:3–7.

- 13. Schulz KF, Altman DG, Moher D. CONSORT 2010 statement: updated guidelines for reporting parallel group randomized trials. Ann Intern Med 2010;152:726–32.

- 14. Ioannidis JP, Evans SJ, Gotzsche PC, et al. Better reporting of harms in randomized trials: an extension of the CONSORT statement. Ann Intern Med 2004;141:781–8.

- 15. Mansi BA, Clark J, David FS, et al. Ten recommendations for closing the credibility gap in reporting industry-sponsored clinical research: a joint journal and pharmaceutical industry perspective. Mayo Clin Proc 2012;87:424–9.

- 16. Prayle AP, Hurley MN, Smyth AR. Compliance with mandatory reporting of clinical trial results on ClinicalTrials.gov: cross sectional study. BMJ 2012;344:d7373.

- 17. Ross JS, Tse T, Zarin DA, et al. Publication of NIH funded trials registered in ClinicalTrials.gov: cross sectional analysis. BMJ 2012;344:d7292.

- 18. Drazen JM. COX-2 inhibitors—a lesson in unexpected problems. N Engl J Med 2005;352:1131–2.

- 19. Nissen SE, Wolski K. Rosiglitazone revisited: an updated meta-analysis of risk for myocardial infarction and cardiovascular mortality. Arch Intern Med 2010;170:1191–201.
