Abstract

We investigate the dynamics of a deterministic finite-sized network of synaptically coupled spiking neurons and present a formalism for computing the network statistics in a perturbative expansion. The small parameter for the expansion is the inverse number of neurons in the network. The network dynamics are fully characterized by a neuron population density that obeys a conservation law analogous to the Klimontovich equation in the kinetic theory of plasmas. The Klimontovich equation does not possess well-behaved solutions, but it can be recast in terms of a coupled system of well-behaved moment equations, known as a moment hierarchy. The moment hierarchy cannot be solved exactly, but in the mean field limit of an infinite number of neurons it reduces to a single well-behaved conservation law for the mean neuron density. For a large but finite system, the moment hierarchy can be truncated perturbatively with the inverse system size as a small parameter, but the resulting reduced moment equations are still very difficult to solve. However, the entire moment hierarchy can also be re-expressed in terms of a functional probability distribution of the neuron density. The moments can then be computed perturbatively using methods from statistical field theory. Here we derive the complete mean field theory and the lowest order second moment corrections for physiologically relevant quantities. Although we focus on finite-size corrections, our method can be used to compute perturbative expansions in any parameter.

Author Summary

One avenue towards understanding how the brain functions is to create computational and mathematical models. However, a human brain has on the order of a hundred billion neurons with a quadrillion synaptic connections. Each neuron is a complex cell composed of multiple compartments hosting a myriad of ions, proteins and other molecules. Even if computing power continues to increase exponentially, directly simulating all the processes in the brain on a computer is not feasible in the foreseeable future, and even if this could be achieved, the resulting simulation may be no simpler to understand than the brain itself. Hence, there is a need for more tractable models. Historically, systems with many interacting bodies have been easier to understand in the two opposite limits of a small number or an infinite number of elements, and most of the theoretical efforts in understanding neural networks have been devoted to these two limits. There has been relatively little effort directed to the very relevant but difficult regime of large but finite networks. In this paper, we introduce a new formalism that borrows from the methods of many-body statistical physics to analyze finite size effects in spiking neural networks.

Introduction

Realistic models of neural networks in the central nervous system are analytically and computationally intractable, presenting a challenge to our understanding of the highly complex spiking dynamics of neurons. Consequently, some degree of simplification is necessary for theoretical progress and there is a corresponding spectrum of models with a range of complexity. “Mean Field” models represent the highest degree of simplification and classically consider the evolution of an “activity” variable which is some average of the output of a population of neurons. Early examples of mean field models are those of Wilson-Cowan [1], [2], Cohen and Grossberg [3], and Amari [4]. These models have proven to be useful in studies of neural dynamics such as in pattern formation and visual hallucinations [5]–[7]. However, because of the nature of the activity variables as averages, they necessarily neglect individual neuron dynamics as well as population level effects of phase information and synchrony. Additionally, it is not clear how the time scales in the equations of mean field models are related to the response properties of the constituent neurons [8].

The next level of model complexity requires relating population level activity to single neuron dynamics. This is a question explored by Knight [9], [10], who noted in particular that although the population firing rate may track an external stimulus, the single neuron firing rate need not and generally does not. The important conceptual feature introduced was that a population of neurons, each of which has some potential variable $v$, can be replaced with a density $\rho(v, t)$, which counts the fraction of neurons whose potential lies within the infinitesimal range $(v, v + dv)$. The firing rate of the population is then the current density of the population at the threshold potential. In the limit of an infinite population of neurons, one can introduce a continuity equation derived from the single neuron dynamics, producing what can be called density mean field theory. The density mean field approach to analyzing coupled networks has been pursued by Desai and Zwanzig [11], Strogatz and Mirollo [12]–[14], Treves [15], Abbott and van Vreeswijk [16] and others [17]–[26]. The spike response formalism considers an integral formulation of the continuity equations [27]. These density mean field approaches have recently been put on a mathematically rigorous footing using results from probability theory [28]–[31].

Neuronal firing is inherently variable, and the source of this variability has been the subject of much study and debate [32]–[34]. Incorporating neuronal variability into theories is another level of complexity. Activity mean field models have been shown to exhibit complex dynamics with high variability when coupled with highly variable connectivity [35]–[38], but this is independent of single neuron dynamics. It is not clear in the context of the density mean field approach how to quantify the fluctuations arising from the interactions of discrete neurons in a finite-sized network, where the fluctuations are not suppressed by averaging over an infinite pool of neurons. Ad hoc attempts at quantifying finite-size effects include driving the system with external noise [13], introducing a self-consistent noise from neural firing [39], or assuming Poisson firing rates of the neurons within the population [17], [22], [40]. However, a systematic means of handling fluctuations due to the finite size of a population of neurons remains lacking.

Here, we present a systematic expansion around the density mean field behavior that quantifies the finite-size fluctuations and correlations of a population of neurons in terms of the interactions in the network. The expansion utilizes a kinetic theory approach adapted from plasma physics [41]–[46]. Because we are interested specifically in intrinsic fluctuations that arise across a population evolving via deterministic dynamics, we do not include any external “noise” or internal stochasticity. The network variability is thus entirely due to the fact that many possible neuron initial conditions and parameters are consistent with a given network, which implies that a given network is selected from an ensemble of networks. One should think of this ensemble as the ensemble of networks consistent with an initial experimental setup, or of those networks that are consistent with the experimentally accessible quantities in the network. In particular, we show that fluctuations and correlations and their effect on population behavior can be quantified in a fully deterministic dynamical system by considering the ensemble of system histories given a distribution of initial conditions and network parameters. In the finite size case, the density $\rho(v, t)$ will not represent the fraction of neurons in the network with potential in the interval $(v, v + dv)$ (as it does in the infinite neuron case), but will represent the fraction of networks in the ensemble for which there is a neuron within the interval $(v, v + dv)$. In the cases we consider, there is a “typical” system in the large neuron limit, so that the two are nearly identical. To a given order in $1/N$, where $N$ is the network size, one can derive a moment hierarchy of integro-differential equations for the statistical moments of the density. The calculations are facilitated by transforming the moment hierarchy into a functional or path integral expression of the moment generating functional, from which a perturbative expansion can be derived. We show this for two synaptically coupled neural networks in the Results and provide some guidance on generalization to other models in the Discussion.

Our approach is thus in the spirit of Gibbs' view of statistical mechanics [47]. Like Gibbs, we do not rely on ergodicity or make any claims about time averages of the dynamics. The systems we study do not obey detailed balance, and thus there will not necessarily be a correspondence between time averages and the ensemble averages we study. Nonetheless, we obtain useful results for characterizing the fluctuations and correlations in a network. We consider a specific example with global coupling where these correlations have well-defined expansions in the inverse system size $1/N$, and we refer to them as “finite-size” effects. However, we wish to stress that our approach is not restricted to a finite-size expansion in $1/N$ per se. Our main result is to provide a systematic framework to “average” over unknown or inessential degrees of freedom.

Results

The density description of neural networks

We present a formalism to analyze finite-size effects in a network of $N$ synaptically coupled spiking neurons. Under fairly generic conditions, such a system can be reduced to a set of phase variables with a set of ancillary variables (such as those representing synaptic input) [48]–[51]. We consider the phase dynamics of a set of $N$ phase neurons obeying

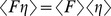

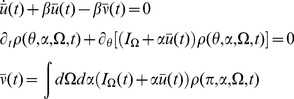

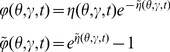

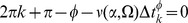

| (1) |

| (2) |

| (3) |

where each neuron has a phase $\theta_i$ that is indexed by $i$, $u$ is a global synaptic drive with decay rate $\beta$, $\nu(t)$ is the population firing rate of the network, $t_i^l$ is the $l$th firing time of neuron $i$, and a neuron fires when its phase crosses $\pi$. The frequency function $F$ depends on the phases $\theta$ and a set of $m$ parameters $\gamma_i$ that can be distinct for each neuron $i$. The neuron can be in an oscillatory or excitable regime.

We will develop our theory for a general frequency function $F$ and apply it to the specific cases of a simple phase oscillator where $F = \omega + u$ [10] and the theta model where $F = 1 - \cos\theta + (1 + \cos\theta)(I + u)$, where $I = I_0 + \gamma$, $I_0$ is an external input and $\gamma$ is a parameter that can be neuron dependent. The theta model is the normal form of a Type I neuron near the bifurcation to firing and is equivalent to a quadratic integrate-and-fire neuron [52]. For some neural networks, a phase reduction of this sort results in a phase coupled model, such as the Kuramoto model (e.g. Hansel and Golomb [53]), which we have previously analyzed [41], [42]. In the present paper, we consider all-to-all or global coupling through a synaptic drive variable $u$. However, our basic approach is not restricted to global coupling.
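To make the ensemble picture concrete, here is a minimal forward-Euler sketch of one finite network of theta neurons with global synaptic coupling. It assumes $F = 1 - \cos\theta + (1 + \cos\theta)(I_0 + \gamma_i + u)$, a drive obeying $du/dt = -\beta u + \beta\nu$, Gaussian-distributed $\gamma_i$ and uniformly distributed initial phases. The function name `simulate_theta_network` and all parameter values are hypothetical illustrative choices, not the simulation protocol used in this paper. Because the firing rate (3) is a sum of delta functions, each spike in a time step of length `dt` increments $u$ by $\beta/N$.

```python
import numpy as np

def simulate_theta_network(N, T=20.0, dt=1e-3, I0=0.2, sigma_gamma=0.05,
                           beta=1.0, seed=0):
    """Forward-Euler sketch of a globally coupled theta-neuron network.

    Hypothetical model choices: F = 1 - cos(theta) + (1 + cos(theta)) * (I0 + gamma_i + u),
    du/dt = -beta*u + beta*nu, gamma_i ~ Normal(0, sigma_gamma), theta_i(0) ~ Uniform(-pi, pi).
    Returns the synaptic drive u(t) and the number of spikes in each time step.
    """
    rng = np.random.default_rng(seed)
    theta = rng.uniform(-np.pi, np.pi, N)        # initial phases: one network from the ensemble
    gamma = rng.normal(0.0, sigma_gamma, N)      # quenched neuron parameters
    u = 0.0
    n_steps = int(round(T / dt))
    u_trace = np.empty(n_steps)
    spike_counts = np.zeros(n_steps, dtype=int)
    for k in range(n_steps):
        c = np.cos(theta)
        F = 1.0 - c + (1.0 + c) * (I0 + gamma + u)
        theta = theta + dt * F
        fired = theta >= np.pi                   # threshold crossing at theta = pi
        theta[fired] -= 2.0 * np.pi              # wrap to the other side of the circle
        n_fired = int(fired.sum())
        nu = n_fired / (N * dt)                  # eq. (3) averaged over one time bin
        u += dt * (-beta * u + beta * nu)        # each spike adds beta / N to u
        u_trace[k] = u
        spike_counts[k] = n_fired
    return u_trace, spike_counts
```

Calling `simulate_theta_network(100, seed=s)` for several values of `s` samples different members of the ensemble of networks. The spread of the resulting `u` traces across seeds is the kind of finite-size variability the theory aims to quantify.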

Our goal is to derive the fluctuation and correlation effects beyond mean field theory for the system. For global coupling, these effects arise from the finite number of neurons $N$ in the network. We calculate the effects of finite $N$ on the dynamics of the system as a perturbation expansion in $1/N$ around the mean field limit of $N \to \infty$. In particular, we will compute the fluctuations and correlations of the synaptic drive $u$ and network firing rate $\nu$, defined as the variability over instances of the network drawn with different initial conditions and neuron and network parameters. We will do this through a probability density functional description of the neuron firing histories. Before we introduce our density functional approach, we describe the Klimontovich description of many-body systems. This description allows us to introduce the fundamental degrees of freedom in a straightforward manner without recourse to the statistical field theory formalism used in the density functional approach. While we focus on finite size effects in this paper, our method could also be used to generate perturbation expansions in other parameters.

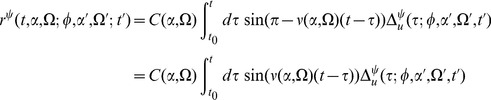

Klimontovich description

We adapt the methods of kinetic theory, as applied to gas and plasma dynamics, to create a probabilistic description of the network dynamics [45], [46]. The approach will allow us to calculate the corrections to mean field theory due to correlations in the firing times of neurons. In particular, we employ a Klimontovich description, which considers the probability density of the phases of a population of neurons (i.e. the density of the empirical measure)

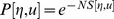

| (4) |

where $\delta(\cdot)$ is the Dirac delta functional, and $\theta_i(t)$ and $u(t)$ are the solutions to system (1)–(3). The neuron density gives the fraction of neurons with phase $\theta$ and parameters $\gamma$ at time $t$. We have included the parameter vector $\gamma$ in the neuron density. Hence, neurons are characterized by their phase and parameter values. For systems that obey exchange symmetry or exchangeability (i.e. the system remains statistically unchanged after a relabeling of the neurons), the neuron density in (4) gives a complete description of the system. In systems without exchangeability, the neuron density will still capture the complete dynamics of the system if it includes labels for the information attached to individual neurons. Using the fact that the Dirac delta functional in (3) can be expressed as $\sum_l \delta(t - t_i^l) = \dot{\theta}_i(t)\,\delta\big(\theta_i(t) - \pi\big)$, the population firing rate can be rewritten as

$$\nu(t) = \int d\gamma\, F(\pi, \gamma, u)\,\eta(\pi, \gamma, t) \tag{5}$$
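The step from (3) to (5) rests on the identity $\sum_l \delta(t - t_i^l) = \dot\theta_i\,\delta(\theta_i - \pi)$. The sketch below checks it numerically for a single uncoupled theta neuron, assuming $F = 1 - \cos\theta + (1 + \cos\theta)I$ and using a narrow Gaussian of hypothetical width `eps` in place of the delta function. Integrating $F(\theta(t))\,\delta_\varepsilon(\theta(t) - \pi)$ over time should recover the number of threshold crossings, because $F\,dt = d\theta$ turns the time integral into a phase integral over each passage through $\pi$. The function name and parameter values are illustrative assumptions.

```python
import math

def delta_identity_check(I=0.5, T=10.0, dt=1e-4, eps=0.01, phi0=0.0):
    """Return (spike count, time integral of F * delta_eps(theta - pi)) for one theta neuron.

    The phase phi is kept unwrapped, so crossings of pi + 2*pi*k are easy to count at the end.
    """
    phi = phi0
    integral = 0.0
    norm = 1.0 / (math.sqrt(2.0 * math.pi) * eps)   # normalization of the Gaussian stand-in
    for _ in range(int(round(T / dt))):
        F = 1.0 - math.cos(phi) + (1.0 + math.cos(phi)) * I
        # signed distance of the phase from the threshold pi, wrapped into [-pi, pi)
        d = (phi % (2.0 * math.pi)) - math.pi
        integral += F * norm * math.exp(-0.5 * (d / eps) ** 2) * dt
        phi += F * dt                                # forward Euler step of d(theta)/dt = F
    # number of times the unwrapped phase passed pi + 2*pi*k
    spikes = (math.floor((phi - math.pi) / (2.0 * math.pi))
              - math.floor((phi0 - math.pi) / (2.0 * math.pi)))
    return spikes, integral
```

With the defaults, the neuron (period $\pi/\sqrt{I}$) crosses threshold twice, and the smoothed-delta integral comes out very close to 2.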

The neuron density formally obeys the conservation equation

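$$\frac{\partial \eta}{\partial t} + \frac{\partial}{\partial \theta}\Big[F(\theta, \gamma, u)\,\eta(\theta, \gamma, t)\Big] = 0 \tag{6}$$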

which is known as the Klimontovich equation in kinetic theory and is only valid in the weak or distributional sense, since $\eta$ is not differentiable. The Klimontovich equation, the equation for the synaptic drive (2), and the firing rate expressed in terms of the neuron density (5) fully define the system. For the systems defined above, we expect that in the limit of a large number of neurons the ensemble of networks will converge to a “typical” network. In the infinite neuron limit, this will give the density equations of mean field theory, whereas for finite but large $N$ there will be some variation in systems around the mean field solution. For this reason, we take expectations of the Klimontovich equation (6) over initial conditions and neuron parameters, which produces smooth moment functions for the density. Because the interacting dynamics have a non-trivial effect on the distribution functions, computing this average is not always simple. In the next section, we will formally derive an expression for the measure or density functional $P[\eta, u]$ over which these averages are taken.

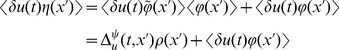

Denoting averages over initial conditions and neuron parameters (i.e. those over $\theta_i(0)$ and $\gamma_i$) by $\langle \cdot \rangle$, the average of (6) yields the equation

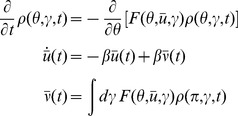

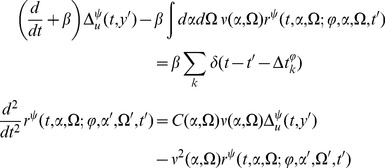

| (7) |

where $\rho(\theta, \gamma, t) = \langle \eta(\theta, \gamma, t) \rangle$ is the first moment of $\eta$, called the one-neuron distribution function. Its evolution depends on higher order moments because $u$ is a function of $\eta$, and hence $\langle F(\theta, \gamma, u)\,\eta \rangle$ involves correlations between $u$ and $\eta$. Equations for the higher order moments can be constructed from (6) by multiplying by factors of $\eta$. However, each moment will depend on yet higher moments, resulting in a system of coupled moment equations called the BBGKY hierarchy. Solving the entire BBGKY hierarchy is equivalent to solving the original system and thus provides no computational advantage. However, perturbative solutions in a small parameter such as $1/N$ can be obtained by truncating the hierarchy and solving the truncated system. This has been the traditional approach in kinetic theory, but it is generally difficult to carry out. In the next section, we present a computational formalism where moments for the firing rate and synaptic drive are computed directly from a probability density functional of the neuron density.

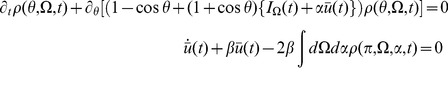

Mean field theory is obtained by neglecting all correlations and higher order cumulants. Thus, setting $\langle F(\theta, \gamma, u)\,\eta(\theta, \gamma, t)\rangle = F(\theta, \gamma, \langle u \rangle)\,\rho(\theta, \gamma, t)$ gives the self-consistent mean field system

|

(8) |

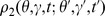

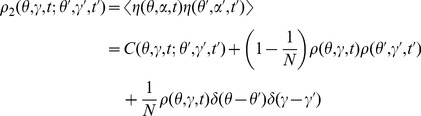

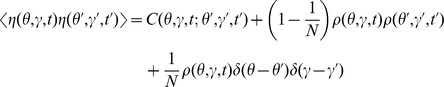

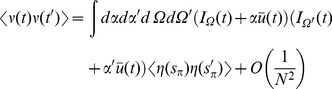

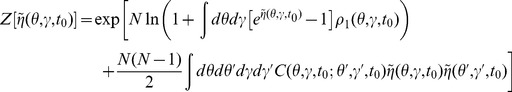
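The mean field system (8) can be integrated numerically. The sketch below assumes the simple phase oscillator $F = \omega + u$ with a single, homogeneous parameter value, so the $\gamma$ integral is trivial. It discretizes the continuity equation with a first-order upwind finite-volume scheme on a periodic phase grid. The scheme, the grid and the function name are our illustrative choices. The firing rate is read off as the flux through the cell face at the threshold $\theta = \pi$, which mirrors $\nu = F(\pi)\rho(\pi)$.

```python
import numpy as np

def mean_field_phase_oscillator(rho0, omega=1.0, beta=1.0, T=20.0, cfl=0.5):
    """Upwind sketch of (8) for the assumed form F = omega + u.

    rho0: initial density on M equal cells covering [-pi, pi), with the last cell ending at pi.
    Returns the final density and the final mean synaptic drive u.
    """
    M = rho0.size
    dth = 2.0 * np.pi / M
    rho = rho0.astype(float).copy()
    u = 0.0
    t = 0.0
    while t < T - 1e-12:
        F = omega + u                            # phase velocity (theta-independent here), F > 0
        dt = min(cfl * dth / F, T - t)           # CFL-limited time step keeps the scheme monotone
        flux = F * rho                           # upwind flux through the right face of each cell
        nu = flux[-1]                            # flux through the threshold face at theta = pi
        rho = rho - dt / dth * (flux - np.roll(flux, 1))  # periodic: cell 0 receives flux[-1]
        u = u + dt * (-beta * u + beta * nu)     # mean field synaptic drive
        t += dt
    return rho, u
```

The upwind scheme conserves total probability exactly and keeps the density non-negative for `cfl <= 1`. Its numerical diffusion smears sharp features, so a higher-order scheme would be preferable for quantitative work. A simple consistency check follows from (8): a uniform density $\rho = 1/(2\pi)$ stays uniform, so $\nu = (\omega + u)/(2\pi)$, and the drive relaxes to $u^* = \omega/(2\pi - 1)$.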

Higher order moments (distribution functions) $\rho_n$ are likewise defined. The second moment (two-neuron distribution function), $\rho_2(\theta, \gamma, \theta', \gamma', t)$, is the fraction of networks in the ensemble for which there is a neuron of type $\gamma$ at $\theta$ and another of type $\gamma'$ at $\theta'$. It is given by

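$$\rho_2(\theta, \gamma, \theta', \gamma', t) = \big\langle \eta(\theta, \gamma, t)\,\eta(\theta', \gamma', t) \big\rangle = \Big(1 - \frac{1}{N}\Big)\rho(\theta, \gamma, t)\,\rho(\theta', \gamma', t) + \frac{1}{N}\,\delta(\theta - \theta')\,\delta(\gamma - \gamma')\,\rho(\theta, \gamma, t) + C(\theta, \gamma, \theta', \gamma', t) \tag{9}$$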

We have implicitly defined the function $C$ using the fact that if the neurons are prepared identically and independently, then $C = 0$ at the initial time. We call $C$ the connected contribution and the product of $\rho$'s the disconnected contribution. These labels indicate whether or not the contribution can be factored into products of lower moments. The two-neuron density function has connected, disconnected and finite-size (those with factors of $1/N$) contributions. The finite-size contributions arise from the deviations in the ensemble average due to finite sample size. There are two types of finite size correction. There is a “sampling” correction arising from the “diagonal” contribution, where the indices $i$ from the two factors of the neuron density $\eta$ (4) coincide. Since $\rho_2$ represents the joint probability density function of two neurons drawn from the population, there is a finite-size correction due to the fact that once a neuron has been drawn from the population, that neuron's phase $\theta_i$ is fixed, and the probability density for that neuron is a point mass at that phase. Thus, the sampling finite size term consists of removing $1/N$th of the joint probability mass from $\rho\rho$ and adding it back as the one-neuron density multiplied by the Dirac delta functional. In the infinite $N$ case, the probability of drawing a strictly identical neuron twice is zero.
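The sampling term can be checked by brute force in the simplest setting, where neurons are prepared independently and identically so that $C = 0$. The illustrative Monte Carlo below uses hypothetical parameters and a hypothetical function name. It draws many networks of $N$ neurons with von Mises distributed phases, coarse-grains $\eta$ into a few phase bins, and compares the ensemble average of $\eta_a \eta_b$ with $(1 - 1/N)\rho_a\rho_b + \delta_{ab}\rho_a/N$. It also compares against the naive product $\rho_a\rho_b$, which omits the finite-size sampling term.

```python
import numpy as np

def binned_second_moment(N=10, n_bins=4, n_networks=100_000, kappa=1.0, seed=1):
    """Monte Carlo estimate of <eta_a eta_b> for i.i.d. neurons, with eta binned in phase."""
    rng = np.random.default_rng(seed)
    # each row is one network: N independent phases in [-pi, pi]
    theta = rng.vonmises(0.0, kappa, size=(n_networks, N))
    idx = np.minimum(((theta + np.pi) / (2 * np.pi) * n_bins).astype(int), n_bins - 1)
    # eta[m, a] = fraction of neurons of network m in phase bin a (bin mass of eq. (4))
    eta = np.stack([(idx == a).mean(axis=1) for a in range(n_bins)], axis=1)
    rho = eta.mean(axis=0)                       # one-neuron distribution (bin masses)
    second = eta.T @ eta / n_networks            # ensemble average of eta_a * eta_b
    predicted = (1 - 1 / N) * np.outer(rho, rho) + np.diag(rho) / N
    naive = np.outer(rho, rho)                   # ignores the sampling correction
    return second, predicted, naive
```

For $N = 10$ the naive product misses the diagonal by about $\rho_a(1 - \rho_a)/N$, while the sampling-corrected expression agrees with the Monte Carlo estimate to within sampling error.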

The second type of finite size effect is due to the coupling and is contained in $C$ (it will be proportional to $1/N$). For uncoupled neurons prepared such that $C = 0$, no such correlations will be generated by the dynamics. Note that integration of $C$ over $\theta$ and $\gamma$ (or over $\theta'$ and $\gamma'$) gives zero. One can derive similar expressions for the higher moments, i.e. for the $n$-neuron densities. There will be connected terms, which cannot be factored into products of lower moments; disconnected terms, which can be so factored; and finite size corrections given by the combinatorics of drawing $n$ neurons from a population of size $N$.

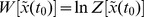

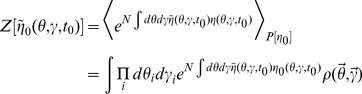

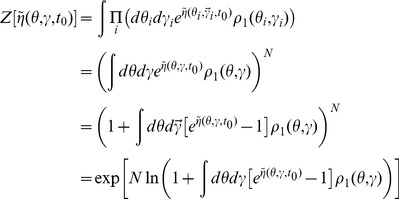

Density functional description

As discussed above, one approach for incorporating fluctuations and correlations is to truncate the BBGKY hierarchy. However, solving such truncated systems for any model of reasonable complexity quickly becomes unwieldy. For this reason, we adapt the density functional formalism developed for statistical field theory to obtain a formal expression for the probability density functional of the neuron density and synaptic drive, $P[\eta, u]$. The fundamental degrees of freedom in this approach reflect the moments of $\eta$, albeit in a more compact and manageable form. The measure $P[\eta, u]$ is a distribution over the possible network realizations. The “variance” of this distribution (represented by the two-neuron distribution function) indicates the extent to which different realizations of the network will differ from each other. For the systems we consider, the estimates of the $n$-neuron distribution functions behave as a power of $1/N$. This has the side benefit of demonstrating that there is a limit in which the ensemble converges to a “typical” system described by the one-neuron distribution function, $\rho$, i.e. the mean field theory. For the same reason, at large $N$, we can use the $n$-neuron distribution functions as estimates of the fluctuations in the density for a single system. Because these fluctuations vanish in the limit of large $N$, we term them “finite-size” effects. In the examples below, we concentrate on computing the two-neuron distribution function to lowest order in $1/N$, which gives estimates of fluctuations of the network coupling variables and the firing rate.
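The claim that ensemble fluctuations shrink with $N$ can be probed directly by simulating many networks. The sketch below is an illustration under stated assumptions rather than a computation from this paper. It uses theta neurons with $F = 1 - \cos\theta + (1 + \cos\theta)(I_0 + \gamma_i + g u)$, where the coupling strength `g` is a hypothetical parameter kept small so that neurons remain weakly correlated. It simulates `M` independent networks in parallel with NumPy and returns the final synaptic drive of each. Quadrupling $N$ should then reduce the ensemble variance of $u$ by roughly a factor of four, consistent with a leading $1/N$ scaling.

```python
import numpy as np

def final_drive_ensemble(N, M=300, T=10.0, dt=1e-2, I0=0.2, sigma_gamma=0.05,
                         g=0.1, beta=1.0, seed=0):
    """Simulate M independent networks of N theta neurons; return u(T) for each network.

    Hypothetical setup: F = 1 - cos(theta) + (1 + cos(theta)) * (I0 + gamma + g*u),
    du/dt = -beta*u + beta*nu; the weak coupling g is an illustrative choice.
    """
    rng = np.random.default_rng(seed)
    theta = rng.uniform(-np.pi, np.pi, size=(M, N))   # one row per network in the ensemble
    gamma = rng.normal(0.0, sigma_gamma, size=(M, N))
    u = np.zeros((M, 1))                              # one synaptic drive per network
    for _ in range(int(round(T / dt))):
        c = np.cos(theta)
        F = 1.0 - c + (1.0 + c) * (I0 + gamma + g * u)
        theta = theta + dt * F
        fired = theta >= np.pi
        theta = np.where(fired, theta - 2.0 * np.pi, theta)
        nu = fired.sum(axis=1, keepdims=True) / (N * dt)   # per-network firing rate in this step
        u = u + dt * (-beta * u + beta * nu)
    return u.ravel()
```

Comparing `final_drive_ensemble(50).var()` with `final_drive_ensemble(200).var()` gives a ratio near four, up to sampling error from the finite number `M` of networks.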

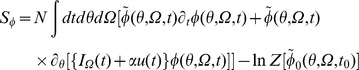

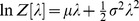

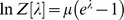

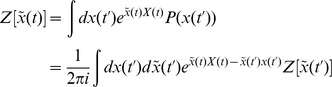

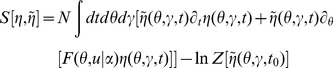

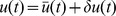

In this section we present only the final results; the complete derivation and a description of the computational method can be found in the Methods. The essential element of the field theoretic method is that the density functional be expressed as an exponential of a functional called the action. Given this density functional, moments can be obtained by integrating over this density. For example, the second moment of  is given by a functional or “path” integral, where the measure in the integral is over functions of  and  in some appropriate functional space. A generating functional for all the moments or cumulants can be similarly defined (see Methods). The strategy of field theory is to exploit the fact that Gaussian integrals have closed form expressions in an arbitrary (including infinite) number of dimensions. Hence, the path integrals can be performed using Laplace's method or the method of steepest descents to obtain an asymptotic series expression for the integrals in terms of a small parameter, which in this case is the inverse of the number of neurons in the network.
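The role of the small parameter can be seen in a one-dimensional toy integral. This is our own illustration and is not the network action. Take ∫ exp(−n f(x)) dx with the hypothetical choice f(x) = x²/2 + x⁴/4. Laplace's method keeps only the Gaussian term √(2π/n) around the minimum x = 0. The relative error of that leading term shrinks like 1/n, and here it is approximately −3/(4n). The expansion in the inverse network size is organized in the same way.

```python
import math


def laplace_relative_error(n, steps=4000):
    """Relative error of the leading (Gaussian) Laplace approximation to
    I(n) = integral over x of exp(-n * f(x)), with f(x) = x**2/2 + x**4/4.

    f has its minimum at x0 = 0 with f(x0) = 0 and f''(x0) = 1, so the
    leading-order approximation is sqrt(2*pi/n). The "exact" value is
    obtained with the trapezoid rule on a grid much finer than the
    Gaussian width 1/sqrt(n).
    """
    half_width = 12.0 / math.sqrt(n)  # about 12 Gaussian standard deviations
    h = 2.0 * half_width / steps
    total = 0.0
    for k in range(steps + 1):
        x = -half_width + k * h
        weight = 0.5 if k in (0, steps) else 1.0  # trapezoid end-point weights
        total += weight * math.exp(-n * (x**2 / 2.0 + x**4 / 4.0))
    numeric = total * h
    gaussian = math.sqrt(2.0 * math.pi / n)
    return (numeric - gaussian) / gaussian


for n in (10, 100, 1000):
    err = laplace_relative_error(n)
    print(f"n = {n:5d}   relative error = {err:+.3e}   n * error = {n * err:+.4f}")
```

Quadrature on a fine grid stands in for the exact integral. The product n·error tends to −0.75, which is the first correction term of the asymptotic series.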

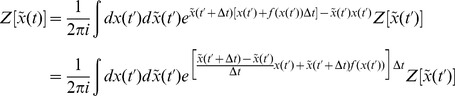

In general, the action  is not expressible in simple form. This is overcome by augmenting the system with an auxiliary set of imaginary response functions

is not expressible in simple form. This is overcome by augmenting the system with an auxiliary set of imaginary response functions

and

and  and defining an expanded action

and defining an expanded action  . The action can then be Taylor expanded around a critical or saddle point where

. The action can then be Taylor expanded around a critical or saddle point where  (where

(where  ), which produces an expansion of moments of a “Gaussian” distribution, in this case arising from the terms bilinear in the auxiliary variables

), which produces an expansion of moments of a “Gaussian” distribution, in this case arising from the terms bilinear in the auxiliary variables  and the configuration variables

and the configuration variables  . A perturbation expansion can then be constructed by exploiting the fact that complex Gaussian integrals of the form

. A perturbation expansion can then be constructed by exploiting the fact that complex Gaussian integrals of the form

|

(for some variables  ) have closed form expressions in terms of linear response functions or propagators

) have closed form expressions in terms of linear response functions or propagators  and are nonzero only if

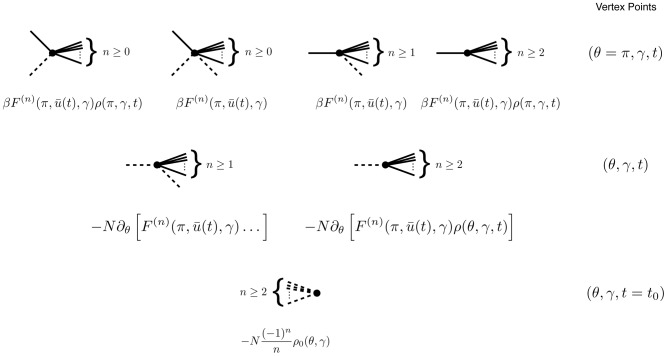

and are nonzero only if  . This path integral identity can be used to formulate an explicit set of rules to obtain expressions for each term of the perturbation expansion. The computation is simplified by encapsulating the rules for constructing the terms in the expansion into diagrams (i.e. Feynman diagrams, see Methods).

. This path integral identity can be used to formulate an explicit set of rules to obtain expressions for each term of the perturbation expansion. The computation is simplified by encapsulating the rules for constructing the terms in the expansion into diagrams (i.e. Feynman diagrams, see Methods).
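A finite-dimensional analogue of this identity may help fix ideas. The notation below is ours and not that of the Methods. The x_k are configuration variables, the x̃_k are response variables integrated along the imaginary axis, and K is an invertible matrix standing in for the integral kernel of the bilinear term. With the normalized measure, one has:

```latex
\langle x_i\,\tilde{x}_j \rangle
  = \frac{\int \prod_k dx_k\, d\tilde{x}_k \; x_i\,\tilde{x}_j\, e^{-\tilde{x}^{\mathsf T} K x}}
         {\int \prod_k dx_k\, d\tilde{x}_k \; e^{-\tilde{x}^{\mathsf T} K x}}
  = \left(K^{-1}\right)_{ij},
\qquad
\langle x_i\,x_j \rangle = \langle \tilde{x}_i\,\tilde{x}_j \rangle = 0 .
```

The propagator is therefore the inverse of the kernel, and only pairings of a configuration variable with a response variable survive. Consider a kernel such as K = ∂_t + a with a hypothetical decay rate a > 0. Its inverse is the causal response Θ(t − t′) e^{−a(t − t′)}, which is nonzero only for t > t′.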

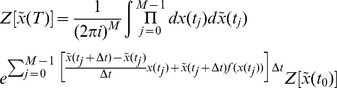

The variables in the action can be compared to those in a stochastic differential equation. The original variables (without a tilde, e.g.  ) denote the configuration variables, while the auxiliary variables (with a tilde, e.g.

) denote the configuration variables, while the auxiliary variables (with a tilde, e.g.  ), denote stochastic or noise forcing terms although in our case the noise is imposed by the uncertainty in the initial conditions and heterogeneity in a fully deterministic network. Finally, the method does not compute the action directly in terms of the neuron density

), denote stochastic or noise forcing terms although in our case the noise is imposed by the uncertainty in the initial conditions and heterogeneity in a fully deterministic network. Finally, the method does not compute the action directly in terms of the neuron density  but rather transforms it to a new set of neuron density variables

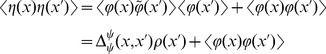

but rather transforms it to a new set of neuron density variables  and

and  through the transformation

through the transformation  and

and  . This transformation renders the action to be more amenable to analysis in a way that is similar in spirit to how the Cole-Hopf transformation reduces the nonlinear Burger's equation into the linear heat equation [54]. Specifically it removes the Poisson-like counting noise from the definitions of the moments. As an example, whereas

. This transformation renders the action to be more amenable to analysis in a way that is similar in spirit to how the Cole-Hopf transformation reduces the nonlinear Burger's equation into the linear heat equation [54]. Specifically it removes the Poisson-like counting noise from the definitions of the moments. As an example, whereas

|

the transformed variables have

As discussed in Methods, the population level coupling implies that the desired quantities will have an expansion in powers of the inverse network size. We describe the basic results of this approach for two example networks: the phase model and the quadratic integrate-and-fire model. For each model, we describe mean field theory, the linear response of the population, and all the correlation functions involving the population and the synaptic drive. Each quantity is calculated to lowest non-trivial order.

Phase model

We first apply the formalism to the simple phase model defined by

| (10) |

where  is the magnitude of the coupling of a given neuron to the global activity  and  indexes the input. (In analytical terms,  is an element of the sample space indexing the realizations of the inputs  , for example an instance of Brownian motion input.)

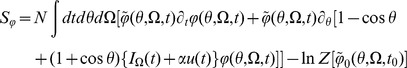

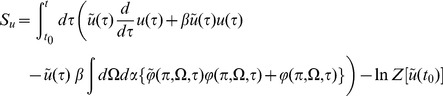

The action for the phase model as derived in the Methods has the form

| (11) |

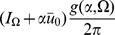

where

|

represents the contribution of the transformed neuron density to the action and

|

represents the global synaptic drive. The action (11) contains all the information about the statistics of the network. Given the action, mean field theory and a perturbative expansion around mean field theory can be derived using standard methods developed in field theory.

The mean field equations, which are given by a critical point of the action, are equations (8). For the parameters  and  , they are rewritten as

|

(12) |

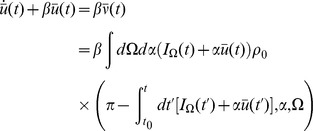

For the phase model, we can solve (12) directly for  to obtain

|

where  is the initial distribution. In this case, the functional form given above is also the general (non-mean field) solution, upon replacing  with  . Recall that  is the population distribution averaged over the ensemble of prepared networks. If the neurons are distributed uniformly in phase, then  . In this case, the global activity does not affect the phase distribution. On the other hand, if the neurons are always prepared at the same phase, then  , where  is the prepared phase. In this case the neurons will remain in phase.

Solving for  allows us to write a closed integro-differential equation for the synaptic drive

|

Note that as long as  is known, this mean field equation reduces the system from a partial differential equation to a two-dimensional system of ordinary differential equations, namely:

|

The population behavior is reduced to the synaptic drive dynamics along with the dynamics of a fictitious oscillator  . This is because the only important dynamical quantity is the overall phase shift of each neuron from its initial phase, and this shift is the same for every neuron. Knowing the initial distribution of states is therefore enough to reduce the dimensionality of the system.
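The reduction to a single fictitious oscillator can be sketched numerically. We assume a homogeneous phase model whose velocity does not depend on phase, of the form dθ_i/dt = ω + g·s(t). This is our assumption, since equation (10) is not reproduced here. The drive s(t) is replaced by an arbitrary positive function, and the function names are hypothetical. Every neuron then advances by the same amount as an oscillator started at phase zero, so the density is simply translated.

```python
import math
import random


def simulate_common_drive(phases0, omega, g, drive, t_end, dt=1e-3):
    """Euler-integrate d(theta_i)/dt = omega + g * drive(t) for every neuron.

    Hypothetical homogeneous phase model: all neurons share omega and g,
    and drive(t) stands in for the synaptic drive. Phases are left
    unwrapped (no modulo 2*pi) so that shifts are easy to compare.
    """
    phases = list(phases0)
    shift = 0.0  # phase advance of a fictitious oscillator started at 0
    n_steps = int(round(t_end / dt))
    for step in range(n_steps):
        rate = omega + g * drive(step * dt)  # the same rate for every neuron
        phases = [p + rate * dt for p in phases]
        shift += rate * dt
    return phases, shift


def example_drive(t):
    """Arbitrary positive time-dependent drive used only for illustration."""
    return 0.5 * (1.0 + math.sin(t))


random.seed(1)
theta0 = [random.uniform(0.0, 2.0 * math.pi) for _ in range(5)]
theta_t, shift = simulate_common_drive(theta0, omega=1.0, g=0.8,
                                       drive=example_drive, t_end=10.0)

# Each neuron has moved by the same amount: theta_i(t) = theta_i(0) + shift.
for before, after in zip(theta0, theta_t):
    print(f"{before:.4f} -> {after:.4f}   shift {after - before:.6f}")
print(f"fictitious oscillator shift: {shift:.6f}")
```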

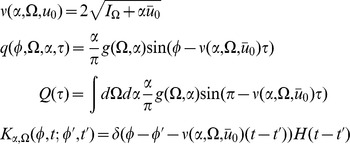

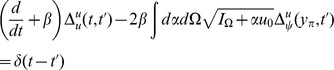

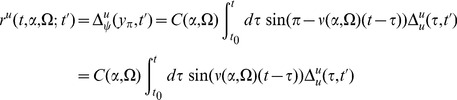

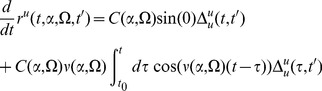

The steepest descent expansions of the path integrals will be expressed in terms of the propagators or linear response functions  , which appear as the inverses of the integral kernels of the bilinear terms in the actions. The linear response can be derived to lowest order by linearizing about the solutions of the mean field equation. Because there are two fields in the action (synaptic drive and density), there are four separate propagators:

|

(13) |

where  describes the response in the quantity  to a perturbation in the quantity  ,  denotes perturbations around the mean field solution,  and  . The equations for  reflect a perturbation that consists of adding a single neuron to the population with the specified initial condition and parameters.

If we assume a constant input  , then in order to have a steady state the mean field must satisfy

|

(14) |

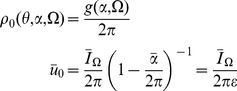

for a fixed parameter probability density  , where  , and  and  are the means of  and  under the distribution  . The linear response around this solution is

|

which we can immediately solve in closed form to obtain

|

(15) |

where  are the firing times of the fictitious oscillator  with initial condition  , whose dynamics are determined by  . The propagator  is the expected form of the linear response upon perturbing the synaptic drive  , i.e. exponential decay. The response of  to the population density  ,  , is a series of exponential pulses at the firing times of the additional neuron, which is what we would expect if we added a single neuron at a given phase. The other propagators govern the response of the population. Since the distribution is uniform and the firing rate does not depend upon phase, perturbing the synaptic drive only makes the entire population fire faster and does not change the relative phases; thus  . On the other hand, adding a single neuron adjusts the population density by a single delta function at the location of the new neuron, hence the form of  . Single oscillator perturbations are not damped away by the linear response, which indicates that the stationary state is marginally stable. We expect that finite size effects at the next order will stabilize these marginal modes, provided there is some degree of heterogeneity, similar to what happens in the Kuramoto model [41], [42].
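The pulse-train form of the response of the drive to an added neuron can be sketched as follows. We assume that a single added neuron drives ds/dt = −β s + β Σ_k δ(t − t_k). The 1/N normalization and the paper's notation are omitted, and β, ω, θ0 and the function names are hypothetical. We also assume that the neuron fires at the crossings of an oscillator with constant frequency ω, as in the uniform steady state.

```python
import math


def firing_times(theta0, omega, t_end):
    """Firing times in (0, t_end] of an oscillator with constant frequency omega
    that starts at phase theta0 in [0, 2*pi) and fires each time its phase
    reaches 2*pi."""
    period = 2.0 * math.pi / omega
    times = []
    t = (2.0 * math.pi - theta0) / omega  # first crossing of the firing phase
    while t <= t_end:
        times.append(t)
        t += period
    return times


def pulse_train_response(t, spike_times, beta):
    """Solution at time t of ds/dt = -beta*s + beta*sum_k delta(t - t_k), s(0) = 0:
    each spike at t_k <= t contributes beta*exp(-beta*(t - t_k))."""
    return sum(beta * math.exp(-beta * (t - tk)) for tk in spike_times if tk <= t)


spikes = firing_times(theta0=math.pi, omega=1.0, t_end=20.0)
print([round(tk, 3) for tk in spikes])
for t in (3.0, 3.2, 5.0, 9.5):
    print(t, round(pulse_train_response(t, spikes, beta=2.0), 4))
```

Each firing event adds a jump of size β followed by exponential decay at rate β. This is the qualitative shape described above for the response of the drive to the density.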

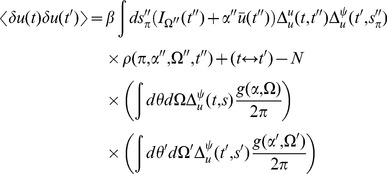

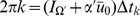

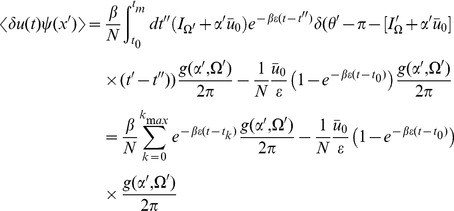

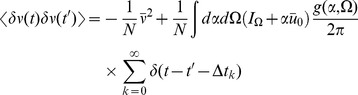

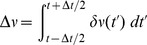

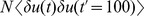

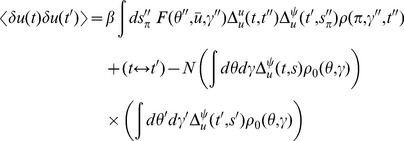

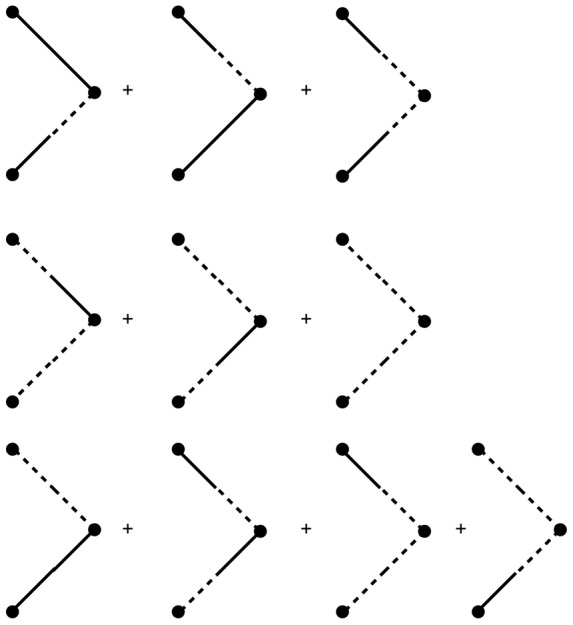

As described in Methods, the expansion of any  -neuron correlation function in powers of

-neuron correlation function in powers of  can be computed from the linear response and the “vertices” derived from the action

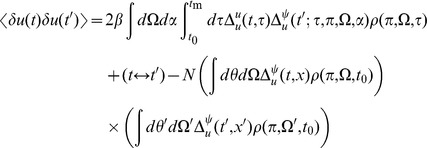

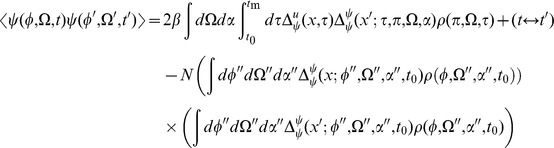

can be computed from the linear response and the “vertices” derived from the action  . Here we give the lowest order contribution to the 2-neuron correlation functions. In addition, this will give us the firing rate fluctuations. For the fluctuations in the synaptic drive

. Here we give the lowest order contribution to the 2-neuron correlation functions. In addition, this will give us the firing rate fluctuations. For the fluctuations in the synaptic drive  about an arbitrary mean field state

about an arbitrary mean field state  , the diagrams at tree level (

, the diagrams at tree level ( ) give

) give

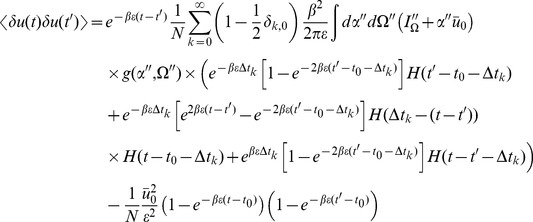

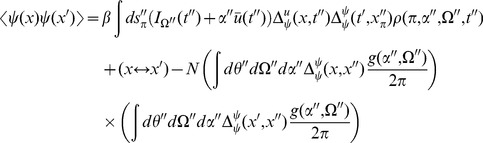

|

(16) |

where  . Inserting the expressions for the linear response in the stationary state (15), we obtain:

|

(17) |

for  , where the  are determined by  and  is the Kronecker delta. The Kronecker delta term accounts for the limiting process that defines the interaction vertex. Essentially, only half of the neurons in the vicinity of firing contribute to the first cycle of firing, because about half are above threshold and half are below. On subsequent cycles, all neurons contribute. This issue arises because of an ambiguity in the continuum representation we are using. The vertex only measures those neurons which have passed threshold, whereas the linear response from (15) considers the limiting behavior of neurons initially configured in the neighborhood of some phase  (consider the last equation in (15)). If the distribution  is smooth, it is more convenient to compute the term  convolved with the function  .

Performing the time integration gives

|

(18) |

The equal time correlation function has a simpler form:

|

(19) |

This correlation function quantifies the fluctuations in the global coupling variable  as a function of time. Recall that we defined an initial state in which each neuron is statistically independent in phase and parameters. The time  is the interval elapsed since the network was in that initial state, and  is a measure of the expected variance of the synaptic drive about the mean  at time  . As mentioned above, higher moments of  are suppressed by higher powers of the inverse network size. This is therefore also an estimate of the variance of the global coupling as a function of time  from a known mean field configuration. Because the spectrum of the linear response includes the spectrum of the single neuron activity, we expect behavior characteristic of the time scales of single neuron dynamics to appear.

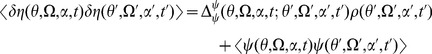

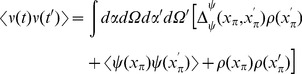

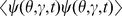

We now turn to the correlations in the density variable  . As discussed in the Methods section, these are given by (with  and  )

|

(20) |

The first term is given by expressions derived above. The second term is of the same form as the correlation of the synaptic drive variable.

|

(21) |

The above is the general expression. For fluctuations about the steady state,  from (15), giving the simple relation

| (22) |

which is just the negative of the product of the mean field steady state solutions at each argument  , times a factor of  . This term is due to the factor  from the sampling correction in the two-neuron distribution function (see equation (9) and below).

The 2-neuron distribution function is given by

|

(23) |

At equal times we have

|

(24) |

which shows that (22) is the correction term for the normalization of the two-neuron distribution function. So for the simple phase model, the fluctuations in the density about steady state are given by the sampling fluctuations of the steady state distribution. Note that for large  this means that the variance of the number of neurons at firing ( ) is equal to the mean times a factor of  . This is equivalent to the Poisson counting assumption of Brunel and Hakim [17]. As we will show in the next section, this does not hold in general. Note the form of the linear response (15) for the term  . The fact that the linear response  vanishes eliminates the first term in (21), which is the contribution to the fluctuations from the coupling. Comparing with the general form of the linear response (13), we see that the equation for  has a source term proportional to  . Because the phase model has a uniform steady state, this source term is zero. For a model with a non-uniform steady state, such as the quadratic integrate-and-fire model examined in the next section, this will not be the case, and there will be further corrections to the fluctuations in  . The cancellation occurs in the phase model because perturbations in the synaptic drive do not perturb the density in steady state. Thus the only fluctuations of the density in steady state are the sampling fluctuations.
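The sampling correction described above, a negative product of mean densities at order 1/N, has the same structure as the covariance of multinomial sampling. The sketch below is our own illustration, and the bin sizes and function name are hypothetical. It draws independent, uniformly distributed phases for a small network. It then compares Monte Carlo estimates of the variance and covariance of the fractions occupying two disjoint bins with the exact multinomial results p_A(1 − p_A)/N and −p_A p_B/N. The first contains the Poisson-like diagonal term p_A/N, and the second is a pure negative product term.

```python
import random


def bin_fraction_moments(n_neurons, p_a, p_b, n_samples, seed=0):
    """Monte Carlo estimates of Var(f_A) and Cov(f_A, f_B), where f_A and f_B
    are the fractions of n_neurons independent phases, uniform on [0, 1),
    that fall in the disjoint bins A = [0, p_a) and B = [p_a, p_a + p_b)."""
    rng = random.Random(seed)
    sum_a = sum_b = sum_aa = sum_ab = 0.0
    for _ in range(n_samples):
        n_a = n_b = 0
        for _ in range(n_neurons):
            x = rng.random()
            if x < p_a:
                n_a += 1
            elif x < p_a + p_b:
                n_b += 1
        f_a, f_b = n_a / n_neurons, n_b / n_neurons
        sum_a += f_a
        sum_b += f_b
        sum_aa += f_a * f_a
        sum_ab += f_a * f_b
    mean_a, mean_b = sum_a / n_samples, sum_b / n_samples
    var_a = sum_aa / n_samples - mean_a**2
    cov_ab = sum_ab / n_samples - mean_a * mean_b
    return var_a, cov_ab


n, p_a, p_b = 20, 0.25, 0.25
var_a, cov_ab = bin_fraction_moments(n, p_a, p_b, n_samples=20000)
# Multinomial theory: Poisson-like diagonal term p_a/n minus the product term p_a**2/n,
# and a purely negative product term for two different bins.
print(f"Var(f_A):     MC {var_a:.5f}   theory {(p_a - p_a**2) / n:.5f}")
print(f"Cov(f_A,f_B): MC {cov_ab:+.5f}   theory {-p_a * p_b / n:+.5f}")
```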

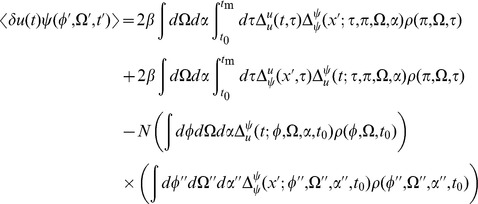

The correlation function between the global coupling and the density is given by (with  ).

| (25) |

Again, the first term is composed of factors derived above. The remaining unique term is given by

|

(26) |

In steady state, this term is

|

(27) |

where  , the  are defined such that  , and  is the largest  such that  .

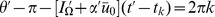

The firing rate of the population is given by

| (28) |

The mean field solution for this is

| (29) |

and in steady state we have

|

(30) |

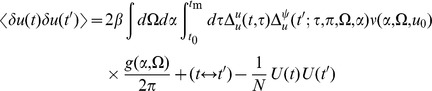

The second moment of the firing rate is given by

|

(31) |

Using our expression for the variance of  in steady state, we have

|

(32) |

where  and the  are such that  . At equal times we have the simple form

| (33) |

which is equivalent to the Poisson finite size ansatz. The delta function evaluated at zero is a singularity that arises from attempting to isolate a counting process at a single point on the real line. This can be regularized by considering an estimate of this quantity in a time interval  . The variance in the counts will vary as

where  . This indicates that the population firing rate will appear as that of a population of independent Poisson neurons, even though the individual neurons are regular. For intuition as to why this is the case, consider dividing up the interval  into bins of equal size and distributing the neurons into these bins. This is the initial state of the network when initialized in steady state. The neuron count in each bin follows a binomial distribution, and jointly the bin counts follow a multinomial distribution. In the limit of small bin size and a large network, the number of neurons in each bin approximates a Poisson distribution. The factor of  arises from normalizing the coupling by the number of neurons. Recall that the absence of any other correction is an artifact of the uniformity of the steady state of the phase model. This will not be the case for the quadratic integrate-and-fire model.
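The claim that regular neurons produce Poisson-like population counts can be checked in a toy setting. Our assumptions are uncoupled, perfectly regular neurons with a common period and independent, uniformly distributed initial phases, as in a uniform steady state. The function name is hypothetical. For a window shorter than one period, each neuron fires in the window with probability window/period. The count is therefore binomial, and its Fano factor (variance divided by mean) is 1 − window/period, which approaches the Poisson value of one as the window shrinks.

```python
import math
import random
import statistics


def window_count_fano(n_neurons, period, window, n_samples, seed=0):
    """Fano factor (variance / mean) of the total spike count in [0, window)
    for n_neurons perfectly regular neurons with a common period and
    independent, uniformly distributed initial phases."""
    rng = random.Random(seed)
    counts = []
    for _ in range(n_samples):
        total = 0
        for _ in range(n_neurons):
            first_spike = rng.random() * period  # random phase -> first spike time
            # spikes at first_spike + k*period that fall inside [0, window)
            total += max(0, math.ceil((window - first_spike) / period))
        counts.append(total)
    return statistics.pvariance(counts) / statistics.fmean(counts)


for window in (0.05, 0.2, 0.5):
    fano = window_count_fano(20, period=1.0, window=window, n_samples=8000)
    print(f"window/period = {window:.2f}: Fano = {fano:.3f} (binomial: {1 - window:.3f})")
```

If the window equals a full period, every neuron fires exactly once and the count variance vanishes. The Poisson description therefore applies to short windows, consistent with the small-bin limit invoked above.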

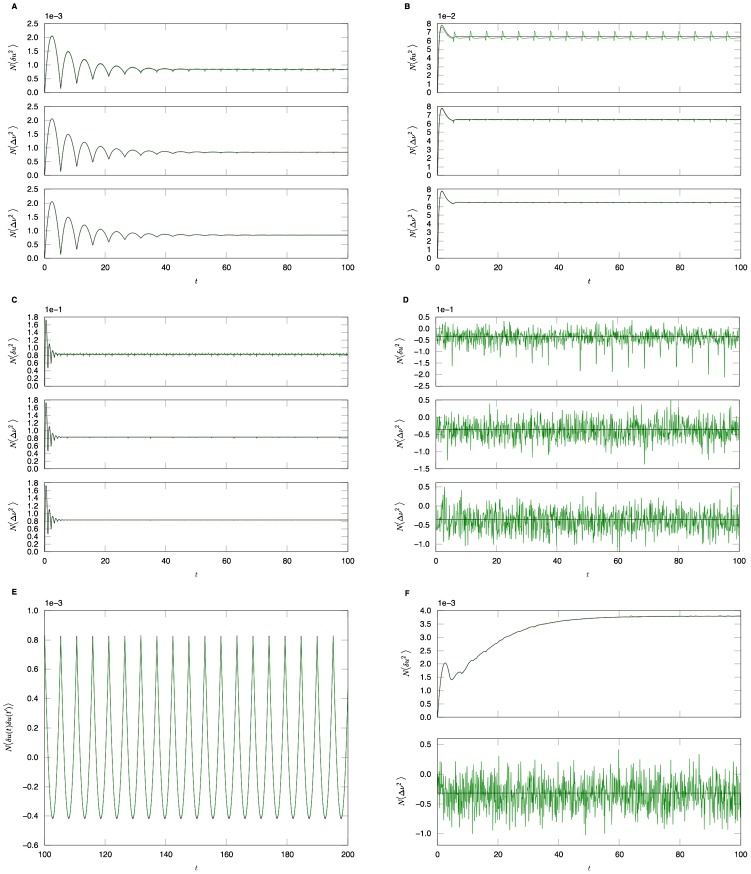

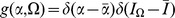

Figure 1 shows comparisons between our analytical predictions and numerical simulations. In (a) through (d), the network parameters  and  are constant and homogeneous (i.e.  ). Figure 1 (a)–(c) shows examples of the variance of the synaptic drive as a function of time. As seen in the figures, the correlation function has contributions that appear at the firing times of the fictitious oscillator  (recall that  is a function which parameterizes the linear response). Each such “firing event” produces a new positive transient response in the correlation function. As time increases, each firing event produces ever smaller perturbations as the correlation approaches steady state. Note also that the analytic computation, which is first order in the inverse network size, becomes more accurate as the number of neurons grows, and that the overall magnitude scales inversely with the number of neurons. Deviations are observable for small networks, particularly for the case  . Note also that the firing rate of the fictitious oscillator increases as the population input increases. The comparison of numerical and analytic results for  is shown in Figure 1 (d). We measured this quantity by binning the firing counts in a time window  and subtracting the “Poisson” contribution. The analytic result is the first term of equation (33). Figure 1 (e) shows the two-time correlation function  , where we have fixed  . As predicted by equation (18), the oscillations are much more pronounced. Figure 1 (f) shows the effects of heterogeneity on the synaptic drive. The drive distribution was chosen to be uniform, with the input to each neuron drawn from the interval  . The oscillations in the synaptic drive are damped by the heterogeneity, and there is an effective increase in the mean drive fluctuations, as expected from the theory. In this case the heterogeneity clearly dominates as a contribution to the fluctuations. This can be seen by comparing Figures 1 (a) and 1 (f), which differ by close to a factor of four in steady state.
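Figures 1 (a) to (c) report ensemble variances of the synaptic drive over independently prepared networks. To illustrate how such an ensemble estimate behaves, the sketch below switches the coupling off. This is an assumption that departs from the simulated network. It uses a drive obeying ds/dt = −β s + (β/N) Σ δ(t − t_ik) with s(0) = 0, where β, ω and the function names are hypothetical. Without coupling, s(t) is an average of N independent contributions, so N·Var[s(t)] does not depend on N up to sampling error. In the coupled network, this 1/N scaling holds only as the leading-order prediction of the theory.

```python
import math
import random
import statistics


def drive_at(t, phases, omega=1.0, beta=1.0):
    """Synaptic drive s(t) = (beta/N) * sum_i sum_k exp(-beta*(t - t_ik)), s(0) = 0,
    for UNCOUPLED neurons of constant frequency omega; neuron i starts at phase
    phases[i] in [0, 2*pi) and fires whenever its phase reaches 2*pi."""
    period = 2.0 * math.pi / omega
    total = 0.0
    for phase in phases:
        tk = (2.0 * math.pi - phase) / omega  # first firing time of this neuron
        while tk <= t:
            total += beta * math.exp(-beta * (t - tk))
            tk += period
    return total / len(phases)


def scaled_drive_variance(n_neurons, t, n_networks=2000, seed=0):
    """N * Var[s(t)] across an ensemble of networks prepared with independent,
    uniformly distributed initial phases."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_networks):
        phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_neurons)]
        samples.append(drive_at(t, phases))
    return n_neurons * statistics.pvariance(samples)


for n in (25, 100):
    print(n, round(scaled_drive_variance(n, t=10.0), 4))
```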

Figure 1. Phase model.

A. Numerical computations (green line) and analytical predictions (black line) for  (top),  (middle),  (bottom) of  for  ,  ,  . B. Numerical computations (green line) and analytical predictions (black line) for  (top),  (middle),  (bottom) of  for  ,  ,  . C. Numerical computations (green line) and analytical predictions (black line) for  (top),  (middle),  (bottom) of  for  ,  ,  . D. Numerical computations (green line) and analytical predictions (black line) for  (top),  (middle),  (bottom) of  for  ,  ,  , where the “Poisson” contribution has been subtracted. E. Two-time correlator  for  ,  ,  , and  . F. Equal time correlators in a heterogeneous network;  and  for  ,  ,  and  .  is taken from the interval  for each neuron. Ensemble averages for all simulations are taken over  samples.

The quadratic integrate-and-fire model

The second model we analyze is the quadratic integrate-and-fire model, whose single neuron dynamics are given by

| (34) |

This model exhibits a finite-time blow-up that is considered to be “firing” at which point the neuron's membrane potential  is reset to

is reset to  . We couple the neurons in the same manner as in the phase model with the synaptic drive

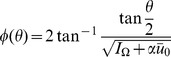

. We couple the neurons in the same manner as in the phase model with the synaptic drive  . Ermentrout and Kopell mapped this model to an oscillator using the transformation

. Ermentrout and Kopell mapped this model to an oscillator using the transformation  [55] to obtain

[55] to obtain

| (35) |

This form of the model is often called the theta model [55]. Hence, the function  is given by:

| (36) |

A convenient feature of this model is that neurons cross the firing phase  at a constant rate  .
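For orientation, the constant crossing rate can be checked under a common normalization that we assume here; equations (34) to (36) are not reproduced. Take dV/dt = V² + J with a total constant input J, and use the Ermentrout and Kopell change of variables V = tan(θ/2), so that firing (V → ∞) corresponds to θ = π:

```latex
\dot V = \tfrac{1}{2}\sec^{2}\!\left(\tfrac{\theta}{2}\right)\dot\theta
\;\Longrightarrow\;
\dot\theta = 2\cos^{2}\!\left(\tfrac{\theta}{2}\right)\left[\tan^{2}\!\left(\tfrac{\theta}{2}\right) + J\right]
           = (1-\cos\theta) + (1+\cos\theta)\,J ,
\qquad
\dot\theta\,\big|_{\theta=\pi} = 2 \quad \text{for every } J .
```

Under this normalization, the firing phase is π and the crossing rate is 2, independent of the input. The actual constants should be read off from equations (35) and (36).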

Defining the neuron density in the same way as before

| (37) |

the continuity equation is

| (38) |

The action, constructed according to the procedure outlined in the Methods section, is

| (39) |

where the population part of the action is

|

and the part representing the synaptic drive is

|

(40) |

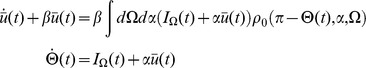

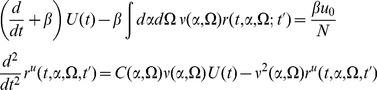

Mean field theory is given by

|

(41) |

Note that because neurons cross the firing phase at the constant rate  , the input to the synaptic drive depends only on  and not directly on the synaptic drive itself.

It is useful to examine the steady state of this model in some detail. For a constant drive  , the steady state obeys

| (42) |

For  , this solution is a unimodal distribution peaked at  whose width narrows in proportion to the size of the input. Conversely, for  , the peak is at  . The higher the input, the more likely it is that any given neuron will be found near the firing phase,  . The synaptic drive variable must satisfy a consistency condition:

. The synaptic drive variable must satisfy a consistency condition:

| (43) |

This equation can be viewed as the steady state solution to a Wilson-Cowan type rate equation. The firing rate for the quadratic integrate-and-fire model is given, in the mean field approximation, by

| (44) |

In the steady state we have

| (45) |

so that we can identify  as the “gain” function for the neurons of type  .
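The steady-state statements above can be checked with a short sketch. Equations (42) to (45) are not recoverable from this copy, so the code assumes the theta-model phase velocity $F(\theta) = 1 - \cos\theta + (1+\cos\theta)J$ with a constant total input $J > 0$. Under that assumption, a stationary density with constant flux is $\rho_0(\theta) = \sqrt{J}/(\pi F(\theta))$ on one period. The firing rate is the flux through $\theta = \pi$, namely $2\rho_0(\pi) = \sqrt{J}/\pi$, and this plays the role of the gain function.

The consistency step introduces a hypothetical coupling weight `g`, so that the steady synaptic drive solves $u = g\sqrt{I+u}/\pi$. This is the fixed point of a Wilson-Cowan type rate equation. The symbols `rho`, `g` and all function names are illustrative, not the paper's notation.

```python
import numpy as np


def theta_velocity(theta, J):
    """Assumed theta-model phase velocity for constant total input J."""
    return 1.0 - np.cos(theta) + (1.0 + np.cos(theta)) * J


def steady_density(theta, J):
    """Stationary density with constant flux sqrt(J)/pi, normalized on one period (valid for J > 0)."""
    return np.sqrt(J) / (np.pi * theta_velocity(theta, J))


def gain(J):
    """Firing rate = flux through theta = pi = 2 * steady_density(pi, J); zero for J <= 0."""
    return np.sqrt(np.maximum(J, 0.0)) / np.pi


def self_consistent_drive(I, g, tol=1e-12, max_iter=10_000):
    """Fixed point of u = g * gain(I + u) for a hypothetical coupling weight g, by simple iteration."""
    u = 0.0
    for _ in range(max_iter):
        u_new = g * gain(I + u)
        if abs(u_new - u) < tol:
            return u_new
        u = u_new
    raise RuntimeError("fixed-point iteration did not converge")


# Periodic grid on [-pi, pi); the rectangle rule is very accurate for smooth periodic integrands.
M = 4096
theta = np.linspace(-np.pi, np.pi, M, endpoint=False)
dtheta = 2.0 * np.pi / M

for J in (0.25, 4.0):
    rho = steady_density(theta, J)
    # Total mass should be 1; the peak sits near 0 for J < 1 and at -pi (== pi) for J > 1.
    print(J, rho.sum() * dtheta, theta[np.argmax(rho)])

print(self_consistent_drive(I=1.0, g=1.0))
```

Simple fixed-point iteration is used because the map $u \mapsto g\sqrt{I+u}/\pi$ is a contraction for these parameter values. A root finder would be the safer choice for stronger coupling.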

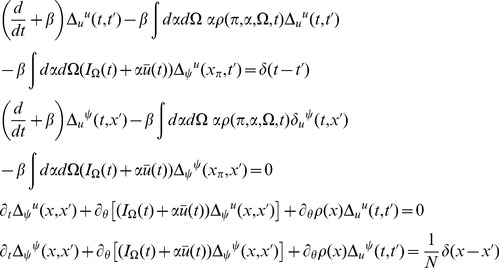

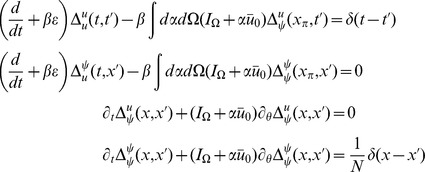

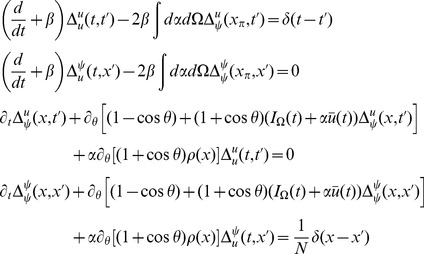

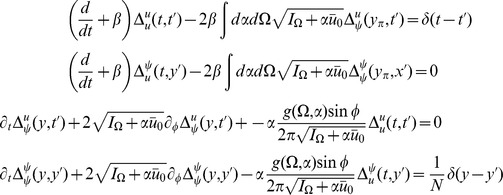

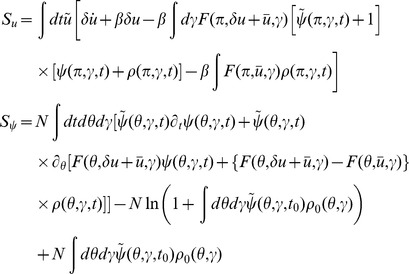

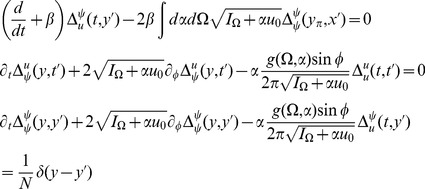

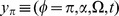

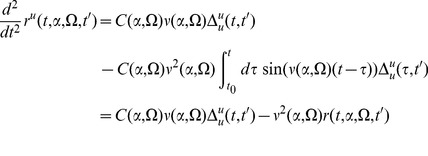

The linear response for the coupled theta model is given by the equations:

|

where again  and  .

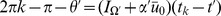

Consider the steady state and transform the angle variable for each  with

| (46) |

Then we have

| (47) |

This change of variables makes the steady state uniform in  for each  . The equations for the linear response in steady state in terms of  are

|

where  and  (note that  ).
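The exact form of (46) is not recoverable from this copy. As an illustration of a change of variables with the stated property, the sketch assumes the theta-model velocity $F(\theta) = 1-\cos\theta+(1+\cos\theta)J$ with constant $J > 0$. It then uses the substitution $\tan(\phi/2) = \tan(\theta/2)/\sqrt{J}$, which has two useful properties:

- Every neuron advances at the constant speed $\dot\phi = 2\sqrt{J} = 2\pi\nu$, where $\nu = \sqrt{J}/\pi$.
- The steady-state density $\nu/F(\theta)$ maps to the uniform density $1/(2\pi)$.

The code checks both facts numerically. The symbol $\phi$ and all function names are illustrative.

```python
import numpy as np


def theta_velocity(theta, J):
    """Assumed theta-model phase velocity for constant total input J > 0."""
    return 1.0 - np.cos(theta) + (1.0 + np.cos(theta)) * J


def to_uniform_phase(theta, J):
    """Map theta in (-pi, pi) to phi in (-pi, pi) via tan(phi/2) = tan(theta/2) / sqrt(J)."""
    return 2.0 * np.arctan(np.tan(theta / 2.0) / np.sqrt(J))


def dphi_dtheta(theta, J):
    """Analytic derivative of to_uniform_phase with respect to theta."""
    t = np.tan(theta / 2.0)
    return np.sqrt(J) / (np.cos(theta / 2.0) ** 2 * (J + t**2))


J = 0.3
theta = np.linspace(-3.0, 3.0, 2001)         # avoid the singular points theta = +/-pi
nu = np.sqrt(J) / np.pi                      # steady-state firing rate
rho0 = nu / theta_velocity(theta, J)         # steady-state density in theta (constant flux nu)

# 1) Along trajectories, dphi/dt = (dphi/dtheta) * dtheta/dt should equal 2*sqrt(J) = 2*pi*nu.
speed = dphi_dtheta(theta, J) * theta_velocity(theta, J)
# 2) Densities transform as rho_phi = rho0 / (dphi/dtheta), which should be uniform: 1/(2*pi).
rho_phi = rho0 / dphi_dtheta(theta, J)

print(speed.min(), speed.max(), 2 * np.pi * nu)
print(rho_phi.min(), rho_phi.max(), 1 / (2 * np.pi))
```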

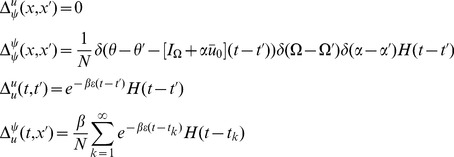

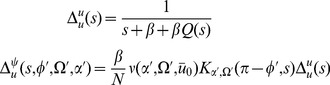

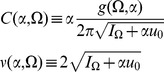

The linear response for the theta model is most easily expressed in terms of the Laplace variable  and is given by

| (48) |

where

|

is similar to the linear response of the synaptic drive in the phase model, with the addition of the feedback response of the population through the filter  . With this transformation,  is the same as in the phase model: it is a series of pulses, with the pulse shape given by the linear response and the pulse times determined by the firing times of a fictitious oscillator driven at rate  .
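The pulse structure just described can be illustrated with a toy construction. A fictitious oscillator with phase in $[0, 1)$ advances at rate `nu` and emits a pulse each time its phase wraps, and each pulse contributes one copy of a response kernel.

The exponential kernel $\beta e^{-\beta s}$ is a hypothetical stand-in for the actual linear response, and `phase0`, `nu` and `beta` are illustrative parameters. Only the timing structure is meant to carry over; the kernel is not the paper's response function.

```python
import numpy as np


def fictitious_firing_times(phase0, nu, t_max):
    """Firing times of an oscillator with phase in [0, 1) advancing at rate nu (fires when phase reaches 1)."""
    first = (1.0 - phase0) / nu
    return np.arange(first, t_max, 1.0 / nu)


def exponential_kernel(s, beta=2.0):
    """Hypothetical causal pulse shape: beta * exp(-beta * s) for s >= 0, zero otherwise."""
    return np.where(s >= 0.0, beta * np.exp(-beta * np.clip(s, 0.0, None)), 0.0)


def pulse_train(t, phase0, nu, kernel=exponential_kernel):
    """Superpose one kernel per firing time of the fictitious oscillator."""
    out = np.zeros_like(t, dtype=float)
    for tk in fictitious_firing_times(phase0, nu, t.max()):
        out += kernel(t - tk)
    return out


t = np.linspace(0.0, 30.0, 3001)
signal = pulse_train(t, phase0=0.5, nu=0.5)
print(fictitious_firing_times(0.5, 0.5, 10.0))  # pulses every 1/nu = 2 time units, at t = 1, 3, 5, 7, 9
```

Swapping in a different causal `kernel` changes the pulse shape but not the pulse times, which are fixed by `phase0` and `nu` alone.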

We also have

| (49) |

These results produce the primary qualitative difference between the phase and the theta models. The first term in  is analogous to the phase model calculation: it represents the perturbation caused by adding a single oscillator with initial coordinate  evolving at rate  . The second term and the non-zero value of  arise from the non-uniform steady-state distribution, which in turn results from the functional dependence of the neural input function on  . This term produces deviations from the “Poisson” behavior of the firing rate fluctuations.

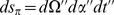

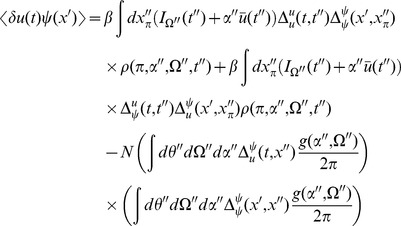

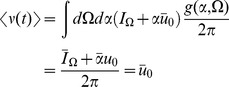

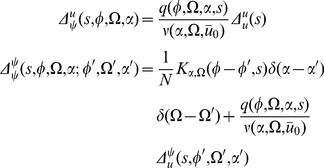

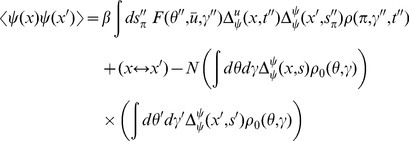

We can use these expressions to compute the tree-level correlations:

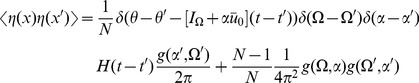

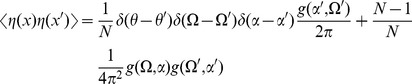

|

with  . The other correlation functions are given by

|

and

|

These are difficult to put in closed form except in terms of the response function for the synaptic drive, so instead we show numerical results.

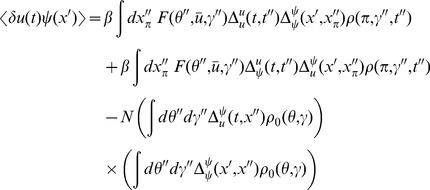

We can use the linear response formulas above to compute analytic formulas for the steady state. Changing coordinates and using the steady-state mean field values, we have

|

where the Laplace transform of  is given by  and  is the firing rate of the population in steady state. The correlations in the synaptic drive variable have the same basic form as those of the phase model. Because of the structure of  , they also show the same pulse behavior, at an interval defined by a fictitious oscillator evolving according to the population activity. The primary difference is that the response function for the synaptic drive is replaced by the response for the theta coupling, and the firing rate is replaced by the theta model firing rate.

The two-neuron density function, by contrast, differs because the steady state is non-uniform. In this case,  , so there is a contribution to the density fluctuations at first order in the perturbation expansion (i.e. at tree level). Similarly, there is an extra term in the correlation function  . Each of these correlation functions can be written in closed form only in terms of the response functions, which we compute numerically.

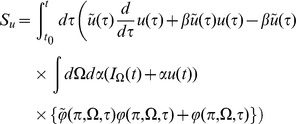

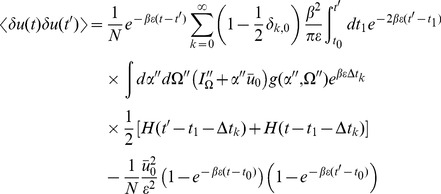

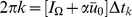

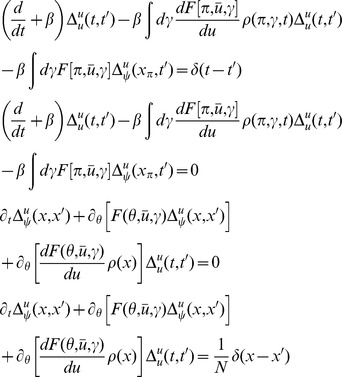

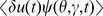

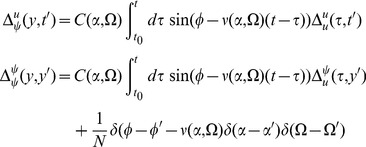

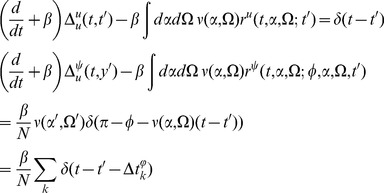

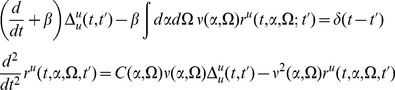

The firing rate fluctuations for the theta model are simpler than those of the phase model because the phase velocity of each neuron at $\theta = \pi$ is the constant 2. For the firing rate obeying

| (50) |

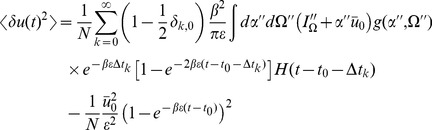

the second moment of the firing rate is

| (51) |

for  . The equal-time second moment is given by

| (52) |

where the  term has the same meaning as in the phase model. In the phase model, the analogous expression to the first term on the right-hand side was zero, and the population firing rate appeared to be the firing rate of the average of  Poisson firing neurons. In the theta model, however, there is a correction of order  . From (52), it is simple to show that the firing rate fluctuations in a bin of size  obey

| (53) |
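For reference, the “Poisson” baseline that is subtracted in the figures can be reproduced directly. If $N$ independent neurons each fire as a Poisson process with rate $\nu$, the population rate estimated in a bin of width $\Delta t$ has variance $\nu/(N\Delta t)$.

The sketch checks this by simulation. It covers only the Poisson term, not the theta-model correction in (53), and the parameter values are arbitrary.

```python
import numpy as np


def binned_population_rate(N, nu, dt_bin, n_bins, rng):
    """Population rate (spikes per neuron per unit time) in each bin for N independent Poisson neurons."""
    counts = rng.poisson(nu * dt_bin, size=(n_bins, N)).sum(axis=1)
    return counts / (N * dt_bin)


rng = np.random.default_rng(0)
N, nu, dt_bin = 100, 0.3, 0.5
rates = binned_population_rate(N, nu, dt_bin, n_bins=20_000, rng=rng)

print(rates.mean(), nu)                    # the binned rate is an unbiased estimate of nu
print(rates.var(), nu / (N * dt_bin))      # Poisson prediction: variance = nu / (N * dt_bin)
```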

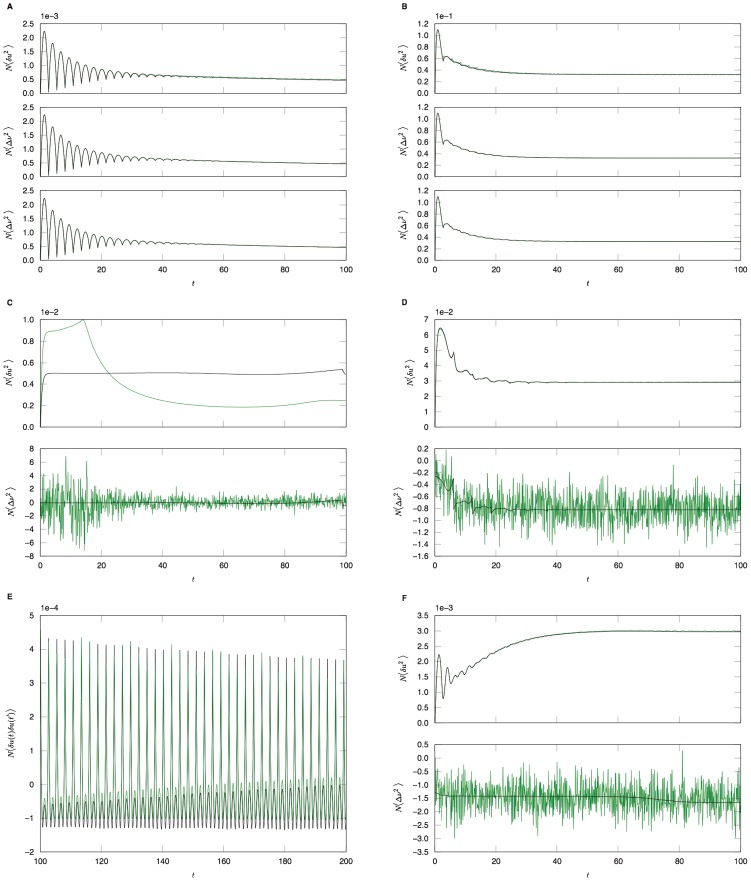

Comparisons between analytic and numerical results for the quadratic integrate-and-fire model are given in Figure 2. In (a) through (e), the parameters  and

and  are constant and homogeneous. One can see the qualitative similarity between the phase and quadratic integrate-and-fire models in the behavior of the activity correlations,

are constant and homogeneous. One can see the qualitative similarity between the phase and quadratic integrate-and-fire models in the behavior of the activity correlations,  . Both share the same pulsatile behavior driven by the fictitious oscillator, i.e. both show the spectral characteristics inherited from the single neuron dynamics. The density fluctuations, however, have an effect on the fluctuations in the firing rate. These effects can be seen in Figures 2 (c), (d). In addition to the nontrivial firing rate fluctuation dynamics, the quadratic integrate-and-fire model also shows near-critical behavior, owing to the phase transition between the “asynchronous state” and synchronous firing. For a population with no external drive, this transition occurs at

. Both share the same pulsatile behavior driven by the fictitious oscillator, i.e. both show the spectral characteristics inherited from the single neuron dynamics. The density fluctuations, however, have an effect on the fluctuations in the firing rate. These effects can be seen in Figures 2 (c), (d). In addition to the nontrivial firing rate fluctuation dynamics, the quadratic integrate-and-fire model also shows near-critical behavior, owing to the phase transition between the “asynchronous state” and synchronous firing. For a population with no external drive, this transition occurs at  . With

. With  , as in Figure 2 (c,) this represents a configuration in which the system is usually not firing, but with the occasional neuron moving across threshold. The reader is encouraged to draw an analogy with “avalanche” dynamics, in which the population will briefly fire in bursts and then go silent. While there is a small but fixed average firing rate, the fluctuations are large owing to this transient behavior. Even a small drive will regularize the system, as in Figure 2 (d). The finite size expansion is expected to break down near a phase transition, accordingly here it is expected to breakdown at the onset of synchrony. The breakdown of the expansion is evident in Figure 2 (c), where one can see enormous discrepancy between the analytic and numerical computations. Figure 2 (e) shows the two-time correlation function

, as in Figure 2 (c,) this represents a configuration in which the system is usually not firing, but with the occasional neuron moving across threshold. The reader is encouraged to draw an analogy with “avalanche” dynamics, in which the population will briefly fire in bursts and then go silent. While there is a small but fixed average firing rate, the fluctuations are large owing to this transient behavior. Even a small drive will regularize the system, as in Figure 2 (d). The finite size expansion is expected to break down near a phase transition, accordingly here it is expected to breakdown at the onset of synchrony. The breakdown of the expansion is evident in Figure 2 (c), where one can see enormous discrepancy between the analytic and numerical computations. Figure 2 (e) shows the two-time correlation function  where

where  . Figure 2 (f) shows the effects of heterogeneity on the synaptic drive, where the drive distribution was chosen to be uniform, with inputs to each neuron chosen from the interval

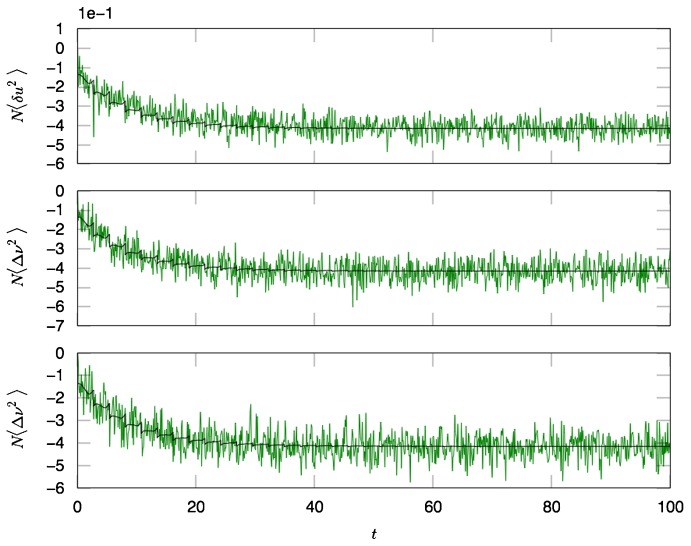

. Figure 2 (f) shows the effects of heterogeneity on the synaptic drive, where the drive distribution was chosen to be uniform, with inputs to each neuron chosen from the interval  . The oscillations in the synaptic drive are damped and there is an effective increase in the mean drive fluctuations as expected from the theory. Again the heterogeneity is the dominant contribution to the fluctuations, as can be seen by comparing figures 2 (a) and 2 (f), which differ by close to a factor of six in steady state. Figure 3 shows a comparison of the firing rate fluctuations. In contrast to the Phase Model, there is non-trivial temporal behavior owing to the phase dependence of the neuron dynamics.

. The oscillations in the synaptic drive are damped and there is an effective increase in the mean drive fluctuations as expected from the theory. Again the heterogeneity is the dominant contribution to the fluctuations, as can be seen by comparing figures 2 (a) and 2 (f), which differ by close to a factor of six in steady state. Figure 3 shows a comparison of the firing rate fluctuations. In contrast to the Phase Model, there is non-trivial temporal behavior owing to the phase dependence of the neuron dynamics.
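The numerical curves in Figures 2 and 3 come from direct simulation of the network. The following is a minimal sketch of such a simulation, not the authors' code. It rests on these assumptions:

- Forward-Euler integration of $\dot\theta_i = 1-\cos\theta_i+(1+\cos\theta_i)(I_i+u)$.
- A spike whenever $\theta_i$ crosses $\pi$.
- An exponentially decaying synaptic drive $\dot u = -\beta u + (g\beta/N)\sum_k \delta(t-t_k)$.

The coupling weight `g`, the step size and all parameter values are hypothetical. Passing an array for `I` gives a heterogeneous network like the one in Figure 2 (f). Ensemble quantities such as $N\,\mathrm{Var}[u(t)]$ are estimated by repeating runs with different random initial phases.

```python
import numpy as np


def simulate_theta_network(N, I, g, beta, T, dt, rng):
    """Euler simulation of N globally coupled theta neurons with an exponential synaptic drive u.

    I may be a scalar (homogeneous) or an array of length N (heterogeneous inputs).
    Returns the drive trace u(t) and the spike count of each neuron.
    """
    theta = rng.uniform(-np.pi, np.pi, size=N)   # random initial phases
    u = 0.0
    n_steps = int(round(T / dt))
    u_trace = np.empty(n_steps)
    spike_counts = np.zeros(N, dtype=int)
    for step in range(n_steps):
        drive = I + u
        theta = theta + dt * (1.0 - np.cos(theta) + (1.0 + np.cos(theta)) * drive)
        fired = theta >= np.pi                   # crossing of the firing phase
        theta[fired] -= 2.0 * np.pi              # wrap back to [-pi, pi)
        spike_counts += fired
        # Exponential synapse: u decays at rate beta and jumps by g*beta/N per spike.
        u += -dt * beta * u + g * beta * fired.sum() / N
        u_trace[step] = u
    return u_trace, spike_counts


def ensemble_drive_variance(n_samples, seed=0, **params):
    """N * Var[u(t)] across independent runs that differ only in their random initial phases."""
    rng = np.random.default_rng(seed)
    traces = np.array([simulate_theta_network(rng=rng, **params)[0] for _ in range(n_samples)])
    return params["N"] * traces.var(axis=0)


rng = np.random.default_rng(1)
u_trace, counts = simulate_theta_network(N=100, I=0.5, g=1.0, beta=1.0, T=200.0, dt=0.01, rng=rng)
print(u_trace.mean(), counts.mean() / 200.0)   # time-averaged drive vs. mean firing rate
```

With `g = 0` each neuron fires at the single-neuron rate $\sqrt{I}/\pi$. With coupling, the time-averaged drive tracks `g` times the measured population rate, because $u$ simply filters the spike train.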

Figure 2. Quadratic integrate-and-fire model.

A. Numerical computations (green line) and analytical predictions (black line) for  for  ,  ,  for  (top),  (middle),  (bottom) neurons. B. Numerical computations (green line) and analytical predictions (black line) for  for  ,  ,  for  (top),  (middle),  (bottom) neurons. C. Numerical computations (green line) and analytical predictions (black line) for  (top) and  (bottom) for  ,  ,  ,  . D.  (top) and  (bottom) for  ,  ,  ,  , where the Poisson contribution has been subtracted. E. Two-time correlator  for  ,  ,  , and  . F. Equal-time correlators in a heterogeneous network;  and  for  ,  ,  and  .  is taken from the interval  for each neuron. Ensemble averages for all simulations are taken over  samples.

Figure 3. Numerical computations (green line) and analytical predictions (black line) of the firing rate fluctuations  for the quadratic integrate-and-fire model for  ,  ,  for  (top),  (middle),  (bottom) neurons, with the Poisson contribution subtracted.

Ensemble average is taken over  samples.

Discussion

We have constructed a system size expansion for the density formulation of spiking neural networks and computed the fluctuations and correlations of network variables to lowest order. In particular, we explicitly calculate two-neuron and higher order moments in the network. We have demonstrated our method in globally coupled networks with two different neuron types. We note that all the fluctuations and correlations are “finite-size” effects, i.e. they do not exist in mean field theory. There will also be finite-size effects on the mean firing rate and synaptic drive, which could also be calculated using our methods. However, in the systems we studied, the finite-size corrections to the mean field density in the steady state are necessarily zero by neuron conservation. The steady state is uniform and the fluctuation effects will not (for these models) break the symmetry.