Summary

Humans can see and name thousands of distinct object and action categories, so it is unlikely that each category is represented in a distinct brain area. A more efficient scheme would be to represent categories as locations in a continuous semantic space mapped smoothly across the cortical surface. To search for such a space, we used functional magnetic resonance imaging (fMRI) to measure human brain activity evoked by natural movies. We then used voxel-wise models to examine the cortical representation of 1705 object and action categories. The first few dimensions of the underlying semantic space were recovered from the fit models by principal components analysis. Projection of the recovered semantic space onto cortical flat maps shows that semantic selectivity is organized into smooth gradients that cover much of visual and non-visual cortex. Furthermore, both the recovered semantic space and the cortical organization of the space are shared across different individuals.

Keywords: fMRI, vision, category, semantic, regression, natural stimuli

Introduction

Previous functional magnetic resonance imaging (fMRI) studies have suggested that some categories of objects and actions are represented in specific cortical areas. Categories that have been functionally localized include faces (Avidan et al., 2005; Clark et al., 1996; Halgren et al., 1999; Kanwisher et al., 1997; McCarthy et al., 1997; Rajimehr et al., 2009; Tsao et al., 2008), body parts (Downing et al., 2001; Peelen & Downing, 2005; Schwarzlose et al., 2005), outdoor scenes (Aguirre et al., 1998; Epstein & Kanwisher, 1998), and human body movements (Peelen et al., 2006; Pelphrey et al., 2005). However, humans can recognize thousands of different categories of objects and actions. Given the limited size of the human brain, it is unreasonable to expect that every one of these categories is represented in a distinct brain area. Indeed, fMRI studies have failed to identify dedicated functional areas for many common object categories, including household objects (Haxby et al., 2001), animals and tools (Chao et al., 1999), food, clothes, and so on (Downing et al., 2006).

An efficient way for the brain to represent object and action categories would be to organize them into a continuous space that reflects the semantic similarity between categories. A continuous semantic space could be mapped smoothly onto the cortical sheet so that nearby points in cortex would represent semantically similar categories. No previous study has found a general semantic space that organizes the representation of all visual categories in the human brain. However, several studies have suggested that single locations on the cortical surface might represent many semantically related categories (Connolly et al., 2012; Downing et al., 2006; Edelman et al., 1998; Just et al., 2010; Konkle & Oliva, 2012; Kriegeskorte et al., 2008; Naselaris et al., 2009; Op de Beeck et al., 2008; O’Toole et al., 2005). Some studies have also proposed likely dimensions that organize these representations, such as animals versus non-animals (Connolly et al., 2012; Downing et al., 2006; Kriegeskorte et al., 2008; Naselaris et al., 2009), manipulation versus shelter versus eating (Just et al., 2010), large versus small (Konkle & Oliva, 2012), or hand- versus mouth- versus foot-related actions (Hauk et al., 2004).

To determine whether a continuous semantic space underlies category representation in the human brain, we collected blood-oxygen-level-dependent (BOLD) fMRI responses from five subjects while they watched several hours of natural movies. Natural movies were used because they contain many of the object and action categories that occur in daily life, and they evoke robust BOLD responses (Bartels & Zeki, 2004; Hasson et al., 2004; Hasson et al., 2008; Nishimoto et al., 2011). After data collection, we used terms from the WordNet lexicon (Miller, 1995) to label 1364 common objects (i.e., nouns) and actions (i.e., verbs) in the movies (see Experimental Procedures for details of the labeling procedure and Fig. S1 for examples of typical labeled clips). WordNet is a set of directed graphs that represent the hierarchical is-a relationships between object or action categories. The hierarchical relationships in WordNet were then used to infer the presence of an additional 341 higher-order categories (e.g., a scene containing a dog must also contain a canine). Finally, we used regularized linear regression (see Experimental Procedures for details; Kay et al., 2008; Mitchell et al., 2008; Naselaris et al., 2009; Nishimoto et al., 2011) to characterize the response of each voxel to each of the 1705 object and action categories (Fig. 1). The linear regression procedure produced a set of 1705 model weights for each individual voxel, reflecting how each object and action category influences BOLD responses in that voxel.
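Inferring higher-order categories from WordNet amounts to taking the transitive closure of the is-a links above each labeled category. The sketch below illustrates this under stated assumptions: the small `IS_A` dictionary is a hypothetical stand-in for the WordNet hierarchy (it is not WordNet data), and `expand_labels` is an illustrative helper, not the authors' labeling code.

```python
# Hypothetical toy is-a graph: each category maps to its direct parents (hypernyms).
# This is NOT the real WordNet hierarchy; it only stands in for illustration.
IS_A = {
    "dog": ["canine"],
    "canine": ["carnivore"],
    "carnivore": ["animal"],
    "animal": ["organism"],
    "athlete": ["person"],
    "person": ["organism"],
    "organism": [],
}


def expand_labels(labels):
    """Return the given labels plus every category reachable by following is-a links."""
    expanded = set()
    stack = list(labels)
    while stack:
        category = stack.pop()
        if category in expanded:
            continue  # already visited (categories can share ancestors)
        expanded.add(category)
        # Queue the direct hypernyms; traversal continues up to the root(s).
        stack.extend(IS_A.get(category, []))
    return expanded


# A clip labeled only "dog" is also inferred to contain a canine, a carnivore, and so on.
print(sorted(expand_labels({"dog"})))
# ['animal', 'canine', 'carnivore', 'dog', 'organism']
```

Each labeled time point would then contribute a 1 to every category in its expanded set, which is how a small set of hand labels grows into indicators for the full category list.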

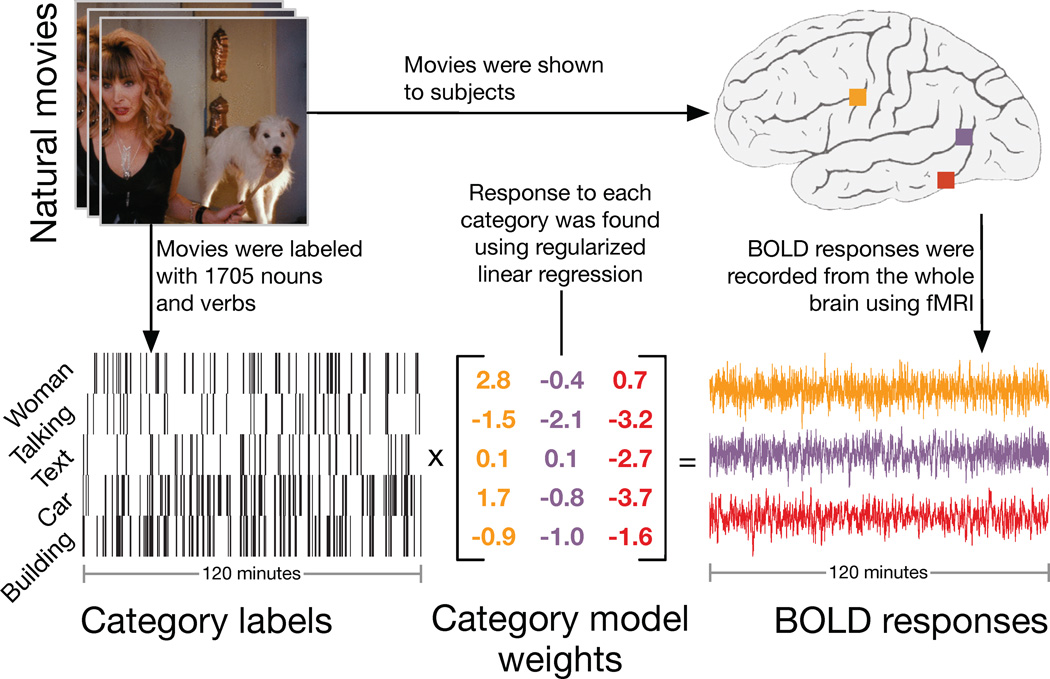

Figure 1.

Schematic of the experiment and model. Subjects viewed two hours of natural movies while BOLD responses were measured using fMRI. Objects and actions in the movies were labeled using 1364 terms from the WordNet lexicon (Miller, 1995). The hierarchical is-a relationships defined by WordNet were used to infer the presence of 341 higher-order categories, providing a total of 1705 distinct category labels. A regularized, linearized finite impulse response regression model was then estimated for each cortical voxel recorded in each subject's brain (Kay et al., 2008; Mitchell et al., 2008; Naselaris et al., 2009; Nishimoto et al., 2011). The resulting category model weights describe how various object and action categories influence BOLD signals recorded in each voxel. Categories with positive weights tend to increase BOLD, while those with negative weights tend to decrease BOLD. The response of a voxel to a particular scene is predicted as the sum of the weights for all categories in that scene.
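A minimal sketch of a voxel-wise, linearized finite impulse response (FIR) model fit by ridge regression. It assumes a binary time-by-category stimulus matrix, a hypothetical set of delays (in samples), and a single regularization parameter `alpha` shared by all voxels; synthetic data stands in for real BOLD responses. The delay values, the closed-form solver, and the averaging of weights across delays are illustrative assumptions, not the procedure given in Experimental Procedures.

```python
import numpy as np


def make_delayed(stim, delays):
    """Stack time-shifted copies of a (time x categories) stimulus matrix.

    Each copy is shifted later by d samples (zero-padded at the start), so the
    weight on that copy models the response d samples after a category appears.
    """
    n_t, n_c = stim.shape
    out = np.zeros((n_t, n_c * len(delays)))
    for i, d in enumerate(delays):
        shifted = np.zeros_like(stim)
        shifted[d:] = stim[: n_t - d]
        out[:, i * n_c:(i + 1) * n_c] = shifted
    return out


def ridge(X, Y, alpha):
    """Closed-form ridge solution W = (X^T X + alpha I)^-1 X^T Y, all voxels at once."""
    n_f = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_f), X.T @ Y)


rng = np.random.default_rng(0)
n_t, n_cat, n_vox = 600, 20, 5
stim = (rng.random((n_t, n_cat)) < 0.1).astype(float)  # binary category labels per sample
delays = [2, 3, 4]                                      # hypothetical delays, in samples
X = make_delayed(stim, delays)
true_W = rng.standard_normal((X.shape[1], n_vox))       # synthetic "ground truth"
Y = X @ true_W + 0.1 * rng.standard_normal((n_t, n_vox))
W = ridge(X, Y, alpha=1.0)
# Assumption: collapse the FIR delays into one weight per category by averaging.
cat_weights = W.reshape(len(delays), n_cat, n_vox).mean(axis=0)
print(cat_weights.shape)  # (20, 5)
```

Because the prediction is `X @ W`, the predicted response at each delay is the sum of the weights of the categories present in the scene, which matches the description in the caption.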

Results

Category selectivity for individual voxels

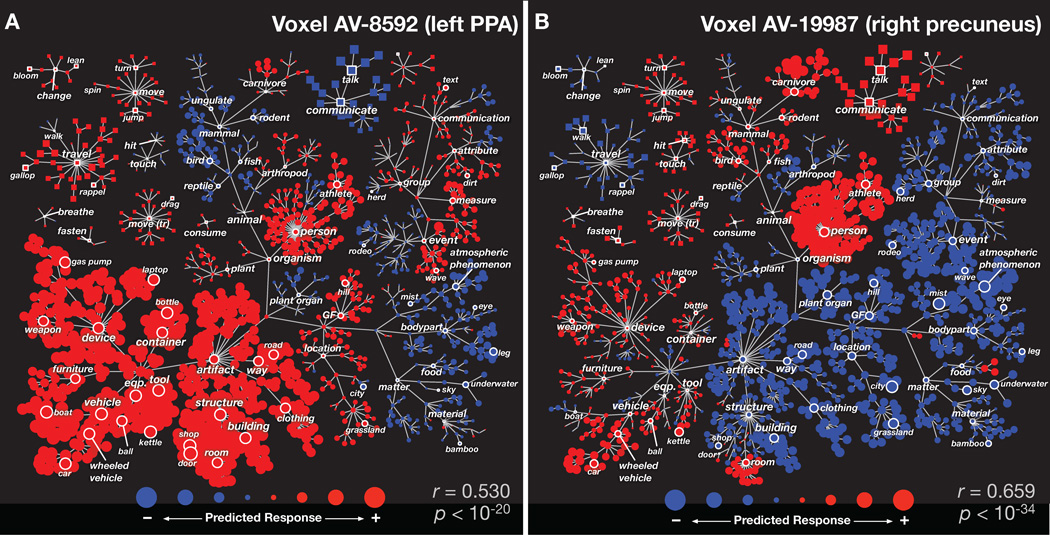

Our modeling procedure produces detailed information about the representation of categories in each individual voxel in the brain. Figure 2A shows the category selectivity for one voxel located in the left parahippocampal place area (PPA) of subject AV. The model for this voxel shows that BOLD responses are strongly enhanced by categories associated with man-made objects and structures (e.g. building, road, vehicle, and furniture), weakly enhanced by categories associated with outdoor scenes (e.g. hill, grassland, geological formation) and humans (e.g. person, athlete), and weakly suppressed by non-human biological categories (e.g. body parts, birds). This result is consistent with previous reports that PPA most strongly represents information about outdoor scenes and buildings (Epstein & Kanwisher, 1998).

Figure 2.

Category selectivity for two individual voxels. Each panel shows the predicted response of one voxel to each of the 1705 categories, organized according to the graphical structure of WordNet. Links indicate is-a relationships (e.g. an athlete is a person); some relationships used in the model are omitted for clarity. Each marker represents a single noun (circle) or verb (square). Red markers indicate positive predicted responses and blue markers indicate negative predicted responses. The area of each marker indicates predicted response magnitude. The prediction accuracy of each voxel model, computed as the correlation coefficient (r) between predicted and actual responses, is shown in the bottom right of each panel along with model significance (see Results for details). (A) Category selectivity for one voxel located in the left-hemisphere parahippocampal place area (PPA). The category model predicts that movies will evoke positive responses when structures, buildings, roads, containers, devices, and vehicles are present. Thus, this voxel appears to be selective for scenes that contain man-made objects and structures (Epstein & Kanwisher, 1998). (B) Category selectivity for one voxel located in the right-hemisphere precuneus (PrCu). The category model predicts that movies will evoke positive responses from this voxel when people, carnivores, communication verbs, rooms, or vehicles are present, and negative responses when movies contain atmospheric phenomena, locations, buildings, or roads. Thus, this voxel appears to be selective for scenes that contain people or animals interacting socially (Iacoboni et al., 2004).

Figure 2B shows category selectivity for a second voxel located in the right precuneus (PrCu) of subject AV. The model shows that BOLD responses are strongly enhanced by categories associated with social settings (e.g. people, communication verbs, and rooms), and suppressed by many other categories (e.g. building, city, geological formation, atmospheric phenomenon). This result is consistent with an earlier finding that PrCu is involved in processing social scenes (Iacoboni et al., 2004).

A semantic space for representation of object and action categories

We used principal components analysis (PCA) to recover a semantic space from the category model weights in each subject. PCA ensures that categories that are represented by similar sets of cortical voxels will project to nearby points in the estimated semantic space, while categories that are represented very differently will project to different points in the space. To maximize the quality of the estimated space, only voxels that were significantly predicted (p < 0.05, uncorrected) by the category model were included (see Experimental Procedures for details).
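The PCA step can be sketched as a singular value decomposition of the voxel-by-category weight matrix, restricted to significantly predicted voxels: each right singular vector is one PC with a coefficient for every category. This is a sketch under assumptions; in particular, whether the weights are mean-centered before PCA is an assumption here (controlled by the hypothetical `center` flag), as is the function name `semantic_pcs`.

```python
import numpy as np


def semantic_pcs(weights, pvals, alpha=0.05, center=True):
    """PCA of category model weights across significantly predicted voxels.

    weights: (n_voxels, n_categories) array, one row of category weights per voxel.
    pvals:   (n_voxels,) array of uncorrected model-significance p-values.
    Returns (pcs, explained): pcs is (n_components, n_categories), each row a unit
    vector over categories; explained is the fraction of variance per component.
    """
    W = weights[pvals < alpha]          # keep only significantly predicted voxels
    if center:
        W = W - W.mean(axis=0)          # assumption: center each category column
    # Right singular vectors of the voxel-by-category matrix are PCs over categories.
    _, s, vt = np.linalg.svd(W, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    return vt, explained
```

Categories with similar coefficients across the leading rows of `pcs` are, by construction, weighted similarly by the same voxels, which is why they land near each other in the recovered space.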

Because humans can perceive thousands of categories of objects and actions, the true semantic space underlying category representation in the brain likely has many dimensions. However, given the limitations of fMRI and a finite stimulus set, we expect to recover only the first few dimensions of the semantic space for each individual brain, and even fewer dimensions that are shared across individuals. Thus, of the 1705 semantic PCs produced by PCA on the voxel weights, only the first few will resemble the true underlying semantic space, while the remainder will be determined mostly by the statistics of the stimulus set and noise in the fMRI data.

To determine which PCs are significantly different from chance, we compared the semantic PCs to the PCs of the category stimulus matrix (see Experimental Procedures for details of why the stimulus PCs are an appropriate null hypothesis). First, we tested the significance of each subject's own category model weight PCs. If there is a semantic space underlying category representation in the subject's brain, then we should find that some of the subject's model weight PCs explain more of the variance in the subject's category model weights than is explained by the stimulus PCs. However, if there is no semantic space underlying category representation in the subject's brain, then the stimulus PCs should explain the same amount of variance in the category model weights as do the subject's PCs. The results of this analysis are shown in Figure 3. Six to eight PCs from individual subjects explain significantly more variance in category model weights than do the stimulus PCs (p < 0.001, bootstrap test). These individual subject PCs explain a total of 30–35% of the variance in category model weights. Thus, our fMRI data are sufficient to recover semantic spaces for individual subjects that consist of six to eight dimensions.
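The comparison against the stimulus-PC null can be sketched as follows: compute the fraction of variance in the weight matrix captured by each PC from a given basis, then bootstrap over voxels to estimate how often the k-th stimulus PC does at least as well as the k-th subject PC. Resampling voxels, the helper names, and the one-sided comparison are assumptions for illustration; the test described in Experimental Procedures may resample differently.

```python
import numpy as np


def pcs_of(M):
    """Principal directions (rows, unit norm) over the columns of M, via SVD of centered M."""
    _, _, vt = np.linalg.svd(M - M.mean(axis=0), full_matrices=False)
    return vt


def variance_explained(W, basis):
    """Fraction of total (centered) variance in W captured by each unit vector in basis."""
    Wc = W - W.mean(axis=0)
    return np.sum((Wc @ basis.T) ** 2, axis=0) / np.sum(Wc ** 2)


def bootstrap_compare(W, subj_pcs, stim_pcs, k, n_boot=1000, seed=0):
    """Fraction of voxel bootstrap samples in which stimulus PC k explains at least as
    much variance in W as subject PC k; small values favor the subject PC."""
    rng = np.random.default_rng(seed)
    n = W.shape[0]
    count = 0
    for _ in range(n_boot):
        Wb = W[rng.integers(0, n, n)]  # resample voxels with replacement
        ve_subj = variance_explained(Wb, subj_pcs[k:k + 1])[0]
        ve_stim = variance_explained(Wb, stim_pcs[k:k + 1])[0]
        count += ve_stim >= ve_subj
    return count / n_boot
```

Here `W` is a voxel-by-category weight matrix and the stimulus PCs would come from `pcs_of(stimulus_matrix)`, where the stimulus matrix is time by category.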

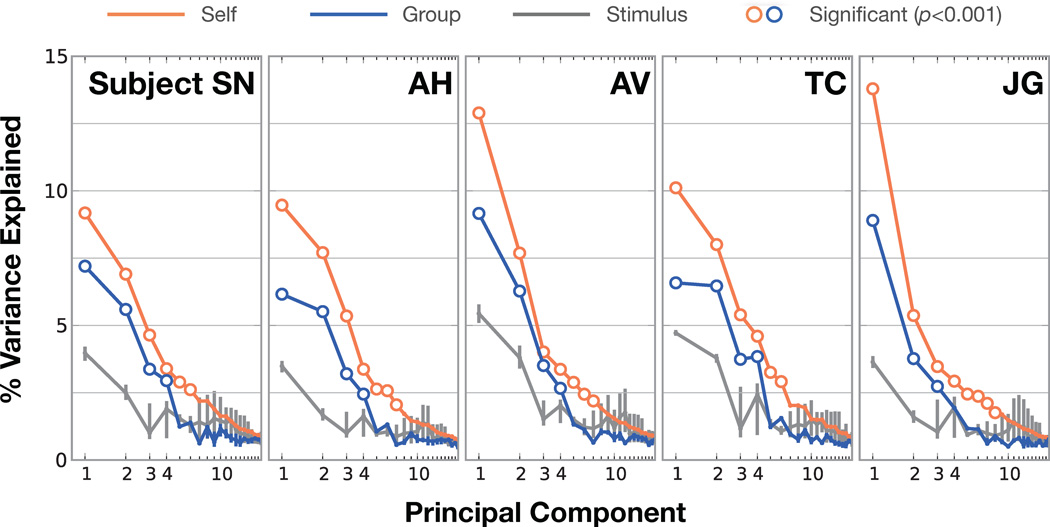

Figure 3.

Amount of model variance explained by individual subject and group semantic spaces. Principal components analysis (PCA) was used to recover a semantic space from category model weights in each subject. Here we show the variance explained in the category model weights by each of the 20 most important PCs. Orange lines show the amount of variance explained in category model weights by each subject's own PCs and blue lines show the variance explained by PCs of combined data from other subjects. Gray lines show the variance explained by the stimulus PCs, which serve as an appropriate null hypothesis (see text and Experimental Procedures for details). Error bars indicate 99% confidence intervals (the confidence intervals for the subjects' own PCs and group PCs are very small). Hollow markers indicate subject or group PCs that explain significantly more variance (p < 0.001, bootstrap test) than the stimulus PCs. The first four group PCs explain significantly more variance than the stimulus PCs for four subjects. Thus, the first four group PCs appear to comprise a semantic space that is common across most individuals, and which cannot be explained by stimulus statistics. Furthermore, the first six to nine individual subject PCs explain significantly more variance than the stimulus PCs (p < 0.001, bootstrap test). This suggests that while the subjects share broad aspects of semantic representation, finer-scale semantic representations are subject-specific.

Next, we used the same procedure to test the significance of group PCs constructed using data combined across subjects. To avoid overfitting, we constructed a separate group semantic space for each subject using combined data from the other four subjects. If the subjects share a common semantic space, then some of the group PCs should explain more of the variance in the selected subject's category model weights than do the stimulus PCs. However, if the subjects do not share a common semantic space, then the stimulus PCs should explain the same amount of variance in the category model weights as do the group PCs. The results of this analysis are also shown in Figure 3. The first four group PCs explain significantly more variance (p < 0.001, bootstrap test) than do the stimulus PCs in four out of five subjects. These four group PCs explain on average 19% of the total variance, 72% as much as do the first four individual subject PCs. In contrast, the first four stimulus PCs explain only 10% of the total variance, 38% as much variance as the individual subject PCs. This result suggests that the first four group PCs describe a semantic space that is shared across individuals.
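The leave-one-subject-out construction can be sketched as pooling the voxels of every subject except the held-out one and taking the leading PCs of the pooled weights. Whether voxels are pooled by simple stacking, and the helper names used here, are assumptions for illustration.

```python
import numpy as np


def pcs_of(M):
    """Principal directions (rows, unit norm) over the columns of M, via SVD of centered M."""
    _, _, vt = np.linalg.svd(M - M.mean(axis=0), full_matrices=False)
    return vt


def leave_one_out_group_pcs(subject_weights, held_out, n_pcs=4):
    """Group PCs for subject `held_out`, estimated only from the other subjects' voxels.

    subject_weights: list of (n_voxels_i, n_categories) arrays, one per subject.
    Excluding the held-out subject keeps its own data from shaping the space it is
    tested against, which is the overfitting concern raised in the text.
    """
    pooled = np.vstack([W for i, W in enumerate(subject_weights) if i != held_out])
    return pcs_of(pooled)[:n_pcs]


def variance_explained(W, basis):
    """Fraction of total (centered) variance in W captured by each unit vector in basis."""
    Wc = W - W.mean(axis=0)
    return np.sum((Wc @ basis.T) ** 2, axis=0) / np.sum(Wc ** 2)
```

As an arithmetic check on the figures reported above: if the four group PCs explain 19% of the variance and this is 72% of what the first four individual subject PCs explain, the individual PCs explain about 19 / 0.72 ≈ 26.4%, and the stimulus PCs' 10% is then 10 / 26.4 ≈ 0.38 of that, consistent with the reported 38%.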

Finally, we determined how much stimulus-related information is captured by the group PCs and full category model. For each model we quantified stimulus-related information by testing whether the model could distinguish among BOLD responses to different movie segments (Kay et al., 2008; Nishimoto et al., 2011; see Experimental Procedures for details). Models using 4–512 group PCs were tested by projecting the category model weights for 2000 voxels (selected using the training dataset) onto the group PCs. Then the projected model weights were used to predict responses to the validation stimuli. We then tried to match the validation stimuli to observed BOLD responses by comparing the observed and predicted responses. The same identification procedure was repeated for the full category model.
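The projection and identification steps can be sketched as follows. Projecting weights onto the group PCs keeps only the part of each voxel's weight vector that lies in the PC subspace; identification then correlates each observed validation segment with the predicted response of every segment and counts a hit when the best match is the segment's own prediction. The fixed segment length, the use of Pearson correlation over all voxels and time points in a segment, and the helper names are assumptions, not the exact procedure in Experimental Procedures.

```python
import numpy as np


def project_weights(W, group_pcs):
    """Reduced-rank weights: keep only the part of each voxel's weights in the PC subspace.

    W: (n_voxels, n_categories); group_pcs: (n_pcs, n_categories) with orthonormal rows.
    """
    return (W @ group_pcs.T) @ group_pcs


def identify(Y_obs, Y_pred, seg_len):
    """Fraction of validation segments whose observed response correlates best with
    their own predicted response, among the predictions for all segments."""
    n_seg = Y_obs.shape[0] // seg_len
    # Each row becomes one segment: seg_len time points x all voxels, flattened.
    obs = Y_obs[: n_seg * seg_len].reshape(n_seg, -1)
    pred = Y_pred[: n_seg * seg_len].reshape(n_seg, -1)
    obs_z = (obs - obs.mean(1, keepdims=True)) / obs.std(1, keepdims=True)
    pred_z = (pred - pred.mean(1, keepdims=True)) / pred.std(1, keepdims=True)
    corr = obs_z @ pred_z.T / obs.shape[1]  # Pearson r, observed (rows) vs predicted (cols)
    return np.mean(np.argmax(corr, axis=1) == np.arange(n_seg))
```

With validation stimuli `X_val` (time by category), the predictions for a reduced model would be `X_val @ project_weights(W, group_pcs).T`; chance performance is one over the number of segments.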

The results of this analysis are shown in Fig. S2. The full category model correctly identifies an average of 76% of stimuli across subjects (chance is 1.9%). Models based on 64 or more group PCs correctly identify an average of 74% of the stimuli, but incorporate information that we know cannot be distinguished from the stimulus PCs. A model based on the 4 significant group PCs correctly identifies 49% of the stimuli, roughly two thirds as many as the full model. These results show that the 4-PC group space does not capture all of the stimulus-related information present in the full category model, indicating that the true semantic space is likely to have more than four dimensions. Further experiments will be required to determine these other semantic dimensions.

To visualize the group semantic space, we formed a robust estimate by pooling data from all five subjects (for a total of 49,685 voxels) and then applying PCA to the combined data.

Visualization of the semantic space

The previous results demonstrate that object and action categories are represented in a semantic space consisting of at least four dimensions, and that this space is shared across individuals. To understand the structure of the group semantic space we visualized it in two different ways. First, we projected the 1705 coefficients of each group PC onto the graph defined by WordNet (Fig. 4). The first PC (shown in Fig. 4A) appears to distinguish between categories that have high stimulus energy (e.g. moving objects like person, vehicle, and animal) and those that have low stimulus energy (e.g. stationary objects like sky, city, building, and plant). This is not surprising, as the first PC should reflect the stimulus dimension with the greatest influence on brain activity, and stimulus energy is already known to have a large effect on BOLD signals (Fox et al., 2009; Nishimoto et al., 2011; Smith et al., 1998).

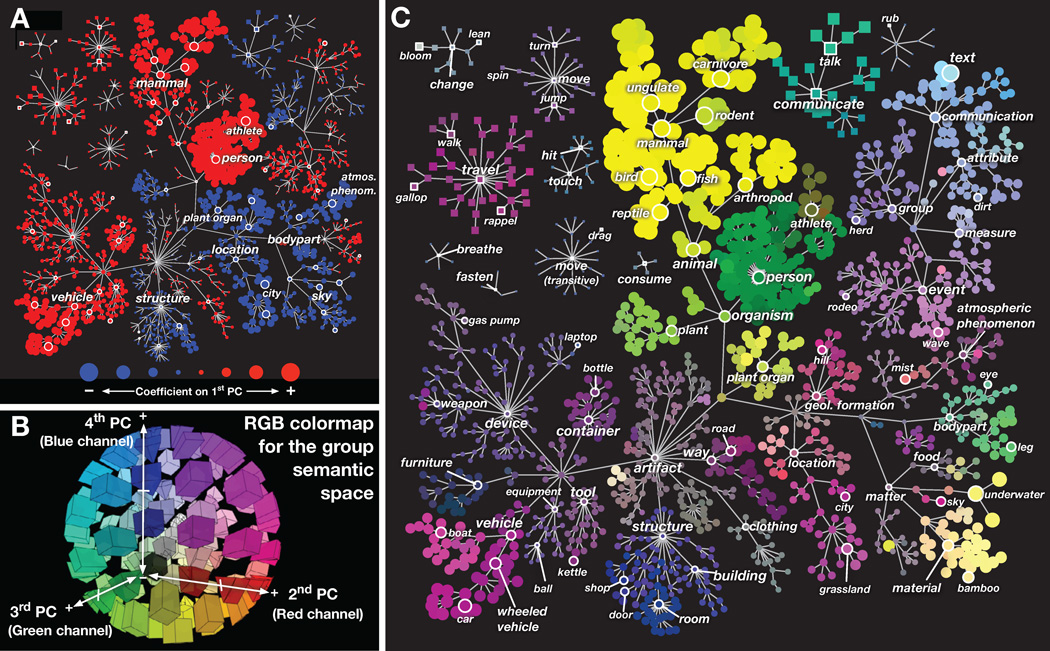

Figure 4.

Graphical visualization of the group semantic space. (A) Coefficients of all 1705 categories in the first group PC, organized according to the graphical structure of WordNet. Links indicate is-a relationships (e.g. an athlete is a person); some relationships used in the model have been omitted for clarity. Each marker represents a single noun (circle) or verb (square). Red markers indicate positive coefficients and blue negative. The area of each marker indicates the magnitude of the coefficient. This PC distinguishes between categories with high stimulus energy (e.g. moving objects like person and vehicle) and those with low stimulus energy (e.g. stationary objects like sky and city). (B) The three-dimensional RGB colormap used to visualize PCs 2–4. The category coefficient in the second PC determined the value of the red channel, the third PC determined the green channel and the fourth PC determined the blue channel. Under this scheme categories that are represented similarly in the brain are assigned similar colors. Categories with zero coefficients appear neutral gray. (C) Coefficients of all 1705 categories in group PCs 2–4, organized according to the WordNet graph. The color of each marker is determined by the RGB colormap in panel B. Marker sizes reflect the magnitude of the three-dimensional coefficient vector for each category. This graph shows that categories thought to be semantically related (e.g. athletes and walking) are represented similarly in the brain.

We then visualized the second, third, and fourth group PCs simultaneously using a three-dimensional color map projected onto the WordNet graph. A color was assigned to each of the 1705 categories according to the following scheme: the category coefficient in the second PC determined the value of the red channel, the third PC determined the green channel and the fourth PC determined the blue channel (see legend Fig. 4B; see Fig. S3 for individual PCs). This scheme assigns similar colors to categories that are represented similarly in the brain. Figure 4C shows the second, third and fourth PCs projected onto the WordNet graph. Here humans, human body parts, and communication verbs (e.g., gesticulate and talk) appear in shades of green. Other animals appear yellow and green-yellow. Nonliving objects such as vehicles appear pink and purple, as do movement verbs (e.g., run), outdoor categories (e.g., hill, city, grassland) and paths (e.g. road). Indoor categories (e.g. room, door, and furniture) appear in blue and indigo. This figure suggests that semantically related categories (e.g. person and talking) are represented more similarly than unrelated categories (e.g. talking and kettle).
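The color scheme can be sketched as a linear map from each category's coefficients on PCs 2–4 to the red, green, and blue channels, with zero coefficients landing on neutral gray. Sharing one scale factor across all three channels is an assumption made here to preserve relative magnitudes; the normalization used for the published figures may differ.

```python
import numpy as np


def pcs_to_rgb(coefs):
    """Map (n_items, 3) coefficients on PCs 2-4 to RGB colors in [0, 1].

    Column 0 (PC 2) drives red, column 1 (PC 3) green, column 2 (PC 4) blue.
    One scale factor (the largest absolute coefficient) is shared by all channels,
    and a coefficient of zero maps to 0.5, so an all-zero row is neutral gray.
    """
    scale = np.max(np.abs(coefs))
    if scale == 0:
        return np.full(coefs.shape, 0.5)
    return 0.5 + 0.5 * coefs / scale
```

Because color is a continuous function of the coefficients, categories with nearby coefficient vectors necessarily receive similar colors, which is the property the figure relies on.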

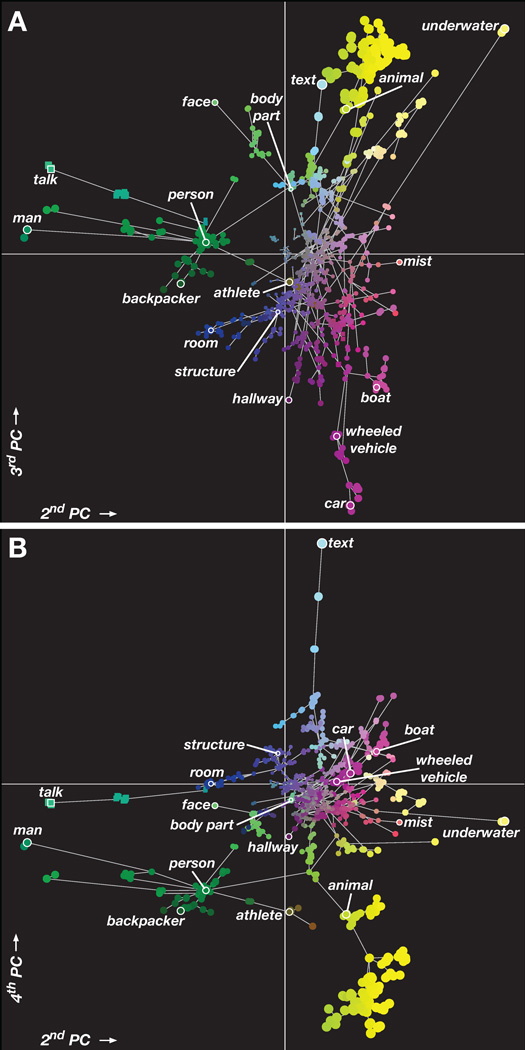

To better understand the overall structure of the semantic space we created an analogous figure in which category position is determined by the PCs instead of the WordNet graph. Figure 5 shows the location of all 1705 categories in the space formed by the second, third, and fourth group PCs (a movie showing the categories in 3D is included in supplemental materials). Here, categories that are represented similarly in the brain are plotted at nearby positions. Categories that appear near the origin have small PC coefficients, and thus are generally weakly represented or are represented similarly across voxels (e.g. laptop and clothing). In contrast, categories that appear far from the origin have large PC coefficients, and thus are represented strongly in some voxels and weakly in others (e.g. text, talk, man, car, animal, and underwater). These results support earlier findings that categories such as faces (Avidan et al., 2005; Clark et al., 1996; Halgren et al., 1999; Kanwisher et al., 1997; McCarthy et al., 1997; Rajimehr et al., 2009; Tsao et al., 2008) and text (Cohen et al., 2000) are represented strongly and distinctly in the human brain.
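Distance from the origin in this space is simply the Euclidean norm of a category's coefficient vector on PCs 2–4, so ranking categories by that norm picks out the strongly and distinctly represented ones. The names and coefficients below are made up for illustration; they are not values read from Figure 5.

```python
import numpy as np


def rank_by_magnitude(names, coefs, top=5):
    """Rank categories by distance from the origin in the PC 2-4 space.

    coefs: (n_categories, 3) coefficients on PCs 2-4, in the same order as names.
    """
    mags = np.linalg.norm(coefs, axis=1)          # distance from the origin
    order = np.argsort(mags)[::-1][:top]          # largest distances first
    return [(names[i], float(mags[i])) for i in order]


# Made-up coefficients for illustration only (not values from Figure 5).
names = ["laptop", "talk", "car"]
coefs = np.array([[0.01, 0.0, 0.0], [0.3, 0.4, 0.0], [0.0, 0.0, 0.2]])
print(rank_by_magnitude(names, coefs))
```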

Figure 5.

Spatial visualization of the group semantic space. (A) All 1705 categories, organized by their coefficients on the second and third PCs. Links indicate is-a relationships (e.g. an athlete is a person) from the WordNet graph; some relationships used in the model have been omitted for clarity. Each marker represents a single noun (circle) or verb (square). The color of each marker is determined by an RGB colormap based on the category coefficients in PCs 2–4 (see Fig. 4B for details). The position of each marker is also determined by the PC coefficients: position on the x-axis is determined by the coefficient on the second PC and position on the y-axis is determined by the coefficient on the third PC. This ensures that categories that are represented similarly in the brain appear near each other. The area of each marker indicates the magnitude of the PC coefficients for that category; more important or strongly represented categories have larger coefficients. The categories man, talk, text, underwater, and car have the largest coefficients on these PCs. (B) All 1705 categories, organized by their coefficients on the second and fourth PCs. Format same as panel A. The large group of animal categories has large PC coefficients, and is mainly distinguished by the fourth PC. Human categories appear to span a continuum. The category person is very close to indoor categories such as room on the second and third PCs, but different on the fourth. The category athlete is close to vehicle categories on the second and third PCs, but is also close to animal on the fourth PC. These semantically related categories are represented similarly in the brain, supporting the hypothesis of a smooth semantic space. However, these results also show that some categories (e.g. talk, man, text, and car) appear to be more important than others. A movie showing this semantic space in 3D is included in Supplemental Materials.

Interpretation of the semantic space

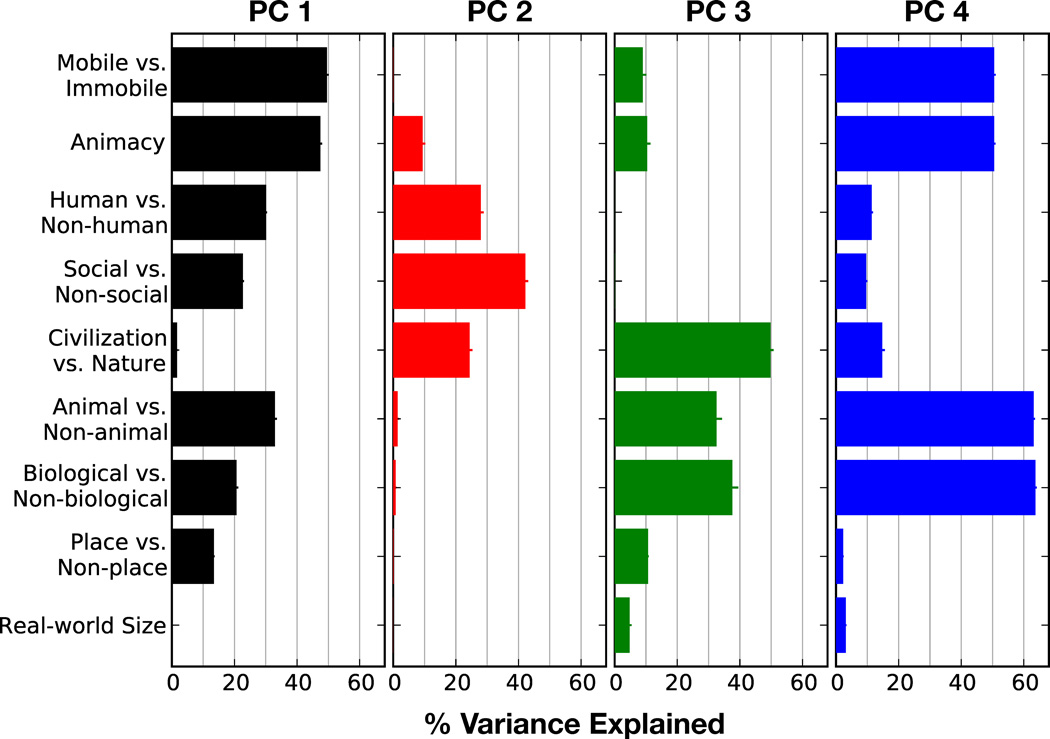

Earlier studies have suggested that animal categories (including people) are represented distinctly from non-animal categories (Connolly et al., 2012; Downing et al., 2006; Kriegeskorte et al., 2008; Naselaris et al., 2009). To determine whether hypothesized semantic dimensions such as animal versus non-animal are captured by the group semantic space, we compared each of the group semantic PCs to nine hypothesized semantic dimensions. For each hypothesized dimension we first assigned a value to each of the 1705 categories. For example, for the dimension animal versus non-animal we assigned the value +1 to all animal categories and the value 0 to all non-animal categories. Then we computed how much variance each hypothesized dimension explained in each of the group PCs. If a hypothesized dimension provides a good description of one of the group PCs, then that dimension will explain a large fraction of the variance in that PC. If a hypothesized dimension is captured by the group semantic space but does not line up exactly with one of the PCs, then that dimension will explain variance in multiple PCs.
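The comparison can be sketched as a one-regressor linear fit: each hypothesized dimension assigns a value to every category, and the variance it explains in a PC is the R² of a least-squares fit (slope plus intercept) of that PC's category coefficients on those values, which for a single regressor equals the squared Pearson correlation. The category names, assignment values, and PC coefficients in the example are hypothetical.

```python
import numpy as np


def dimension_values(categories, assignments, default=0.0):
    """Assign each category a value on a hypothesized dimension.

    assignments maps category name -> value (e.g. +1 for animals); all other
    categories get `default` (e.g. 0 for non-animals).
    """
    return np.array([assignments.get(c, default) for c in categories], dtype=float)


def variance_explained_by_dimension(pc, dim):
    """Fraction of variance in one PC's category coefficients explained by a linear
    fit (slope plus intercept) on the hypothesized dimension; for one regressor this
    equals the squared Pearson correlation."""
    r = np.corrcoef(pc, dim)[0, 1]
    return r ** 2


# Hypothetical example: an animal vs. non-animal dimension over six categories.
categories = ["person", "dog", "bird", "car", "sky", "room"]
animal = dimension_values(categories, {"person": 1, "dog": 1, "bird": 1})
pc4 = np.array([0.8, 0.6, 0.5, -0.2, -0.3, -0.1])  # made-up PC coefficients
print(variance_explained_by_dimension(pc4, animal))
```

Graded dimensions such as the animacy continuum fit the same scheme by assigning decreasing values instead of a single +1.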

The comparison between the group PCs and hypothesized semantic dimensions is shown in Figure 6. The first PC is best explained by a dimension that contrasts mobile categories (people, non-human animals, and vehicles) with non-mobile categories. The first PC is also well explained by a dimension that is an extension of a previously reported animacy continuum (Connolly et al., 2012). Our animacy dimension assigns the highest weight to people, decreasing weights to other mammals, birds, reptiles, fish, and invertebrates, and zero weight to all non-animal categories. The second PC is best explained by a dimension that contrasts categories associated with social interaction (people and communication verbs) with all other categories.

Figure 6.

Comparison between the group semantic space and nine hypothesized semantic dimensions. For each hypothesized semantic dimension we assigned a value to each of the 1705 categories (see Experimental Procedures for details) and we computed the fraction of variance that each dimension explains in each PC. Each panel shows the variance explained by all hypothesized dimensions in one of the four group PCs. Error bars indicate bootstrap standard error. The first PC is best explained by a dimension that contrasts mobile categories (people, non-human animals, and vehicles) with non-mobile categories, and an animacy dimension (Connolly et al., 2012) that assigns high weight to humans, decreasing weights to other mammals, birds, reptiles, fish, and invertebrates and zero weight to other categories. The second PC is best explained by a dimension that contrasts social categories (people and communication verbs) with all other categories. The third PC is best explained by a dimension that contrasts categories associated with civilization (people, man-made objects, and vehicles) with categories associated with nature (non-human animals). The fourth PC is best explained by a dimension that contrasts biological categories (people, animals, plants, body parts, plant parts) with non-biological categories, and a dimension that contrasts animals (people and non-human animals) with non-animals.

The third PC is best explained by a dimension that contrasts categories associated with civilization (people, man-made objects, and vehicles) with categories associated with nature (non-human animals). The fourth PC is best explained by a dimension that contrasts biological categories (animals, plants, people, and body parts) with non-biological categories, as well as a similar dimension that contrasts animal categories (including people) with non-animal categories. These results provide quantitative interpretations for the group PCs and show that many hypothesized semantic dimensions are captured by the group semantic space.

The results shown in Figure 6 also suggest that some hypothesized semantic dimensions are not captured by the group semantic space. The contrast between place categories (buildings, roads, outdoor locations, and geological features) and non-place categories is not captured by any group PC. This is surprising because the representation of place categories is thought to be of primary importance to many brain areas, including the parahippocampal place area (PPA; Epstein & Kanwisher, 1998), retrosplenial cortex (RSC; Aguirre et al., 1992), and temporo-occipital sulcus (TOS; Nakamura et al., 2000; Hasson et al., 2003). One possible explanation for this discrepancy is that those earlier studies of place representation used static images rather than movies.

Another hypothesized semantic dimension that is not captured by our group semantic space is real-world object size (Konkle & Oliva, 2012). The object size dimension assigns a high weight to large objects (e.g. boat), medium weight to human-scale objects (e.g. person), a small weight to small objects (e.g. glasses), and zero weight to objects that have no size (e.g. talking) or can be many sizes (e.g. animal). This object size dimension was not well captured by any of the four group PCs. However, based upon earlier results (Konkle & Oliva, 2012) it appears that object size is represented in the brain. Thus it is likely that object size is captured by lower-variance group PCs that could not be significantly discerned in this experiment.

Cortical maps of semantic representation

The results of the PC analysis show that the brains of different individuals represent object and action categories in a common semantic space. Here we examine how this semantic space is represented across the cortical surface. To do this we first constructed a separate cortical flat map for each subject using standard techniques (Van Essen et al., 2001). Then we used the scheme described above (see Fig. 4) to assign a color to each voxel according to the projection of its category model weights into the PC space (for separate PC maps see Fig. S4). The results are shown in Figs. 7A and C for two subjects (corresponding maps for other subjects are shown in Fig. S5). (Readers who wish to explore these maps in detail, and examine the category selectivity of each voxel, may do so by going to http://gallantlab.org/semanticmovies.)
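Coloring a voxel starts with projecting its 1705 category weights onto the group PCs, giving one number per PC. The sketch below shows that projection and a diverging color ramp for the first-PC maps (positive red, negative blue, near zero gray, as in Figs. 7B and D). The specific ramp, the shared normalization by the largest absolute projection, and the helper names are assumptions for illustration.

```python
import numpy as np


def voxel_projections(W, group_pcs):
    """Project each voxel's category weights (rows of W) onto the group PCs (rows).

    W: (n_voxels, n_categories); group_pcs: (n_pcs, n_categories).
    Returns (n_voxels, n_pcs).
    """
    return W @ group_pcs.T


def first_pc_colors(p):
    """Diverging colors for first-PC projections: positive red, negative blue, zero gray."""
    scale = np.max(np.abs(p))
    t = p / scale if scale > 0 else np.zeros_like(p)
    pos = np.clip(t, 0, None)   # strength of positive projections
    neg = np.clip(-t, 0, None)  # strength of negative projections
    rgb = np.full((len(p), 3), 0.5)
    rgb[:, 0] += 0.5 * (pos - neg)  # red rises for positive, falls for negative
    rgb[:, 1] -= 0.5 * (pos + neg)  # green falls for both, so colors saturate
    rgb[:, 2] += 0.5 * (neg - pos)  # blue rises for negative, falls for positive
    return rgb
```

For the maps of PCs 2–4, the three corresponding projection columns would instead be passed through the RGB scheme of Fig. 4B, so that voxel colors and category colors share one legend.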

Figure 7.

Semantic space represented across the cortical surface. (A) The category model weights for each cortical voxel in subject AV are projected onto PCs 2–4 of the group semantic space, and then assigned a color according to the scheme described in Figure 4B. These colors are projected onto a cortical flat map constructed for subject AV. Each location on the flat map shown here represents a single voxel in the brain of subject AV. Locations with similar colors have similar semantic selectivity. This map reveals that the semantic space is represented in broad gradients distributed across much of anterior visual cortex. Semantic selectivity is also apparent in medial and lateral parietal cortex, auditory cortex, and lateral prefrontal cortex. Brain areas identified using conventional functional localizers are outlined in white and labeled (see Table S1 for abbreviations). Boundaries that have been inferred from anatomy or which are otherwise uncertain are denoted by dashed white lines. Major sulci are denoted by dark blue lines and labeled (see Table S2 for abbreviations). Some anatomical regions are labeled in light blue (Abbreviations: PrCu=precuneus; TPJ=temporoparietal junction). Cuts made to the cortical surface during the flattening procedure are indicated by dashed red lines and a red border. The apex of each cut is indicated by a star. Blue borders show the edge of the corpus callosum and sub-cortical structures. Regions of fMRI signal dropout due to field inhomogeneity are shaded with black hatched lines. (B) Projection of voxel model weights onto the first PC for subject AV. Voxels with positive projections on the first PC appear red, while those with negative projections appear blue and those orthogonal to the first PC appear gray. (C) Projection of voxel weights onto PCs 2–4 of the group semantic space for subject TC. (D) Projection of voxel model weights onto the first PC for subject TC. See Figure S5 for maps of semantic representation in other subjects. 

These maps reveal that the semantic space is represented in broad gradients that are distributed across much of anterior visual cortex (some of these gradients are shown schematically in Fig. S6). In inferior temporal cortex, regions of animal (yellow) and human representation (green and blue-green) run along the inferior temporal sulcus (ITS). Both the fusiform face area (FFA) and occipital face area (OFA) lie within the region of human representation, but the surrounding region of animal representation was previously unknown. In a gradient that runs from the ITS toward the middle temporal sulcus (MTS), human representation gives way to animal representation, which then gives way to representation of human action, athletes, and outdoor spaces (red and red-green). The dorsal part of the gradient contains the extrastriate body area (EBA) and area MT+/V5, and also responds strongly to motion (positive on the first PC, see Figs. 7B and D).

In medial occipitotemporal cortex, a region of vehicle (pink) and landscape (purple) representation sits astride the collateral sulcus (CoS). This region, which contains the parahippocampal place area (PPA), lies at one end of a long gradient that runs across medial parietal cortex. Toward retrosplenial cortex (RSC) and along the posterior precuneus (PrCu) the representational gradient shifts toward buildings (blue-indigo) and landscapes (purple). This gradient continues forward along the superior bank of the intraparietal sulcus (IPS) as far as the posterior end of the cingulate sulcus (CiS) while shifting representation toward geography (purple-red) and human action (red). This long gradient encompasses both the dorsal and ventral visual pathways (Ungerleider & Mishkin, 1982) in one unbroken band of cortex that represents a continuum of semantic categories related to vehicles, buildings, landscapes, geography, and human actions.

This map also reveals that visual semantic categories are well represented outside of occipital cortex. In parietal cortex, an anterior-posterior gradient from animal (yellow) to landscape (purple) representation is located in the posterior bank of the postcentral sulcus (PoCeS). This is consistent with earlier reports that movies of hand movements evoke responses in the PoCeS (Buccino et al., 2001; Hasson et al., 2004), and may reflect learned associations between visual and somatosensory stimuli.

In frontal cortex, a region of human action and athlete representation (red) is located at the posterior end of the superior frontal sulcus (SFS). This region, which includes the frontal eye fields (FEF), lies at one end of a gradient that shifts toward landscape (purple) representation while extending along the SFS. Another region of human action, athlete, and animal representation (red-yellow) is located at the posterior inferior frontal sulcus (IFS) and contains the frontal operculum (FO). Both FO and FEF have been associated with visual attention (Buchel et al., 1998), so we suspect that human action categories might be correlated with salient visual movements that attract covert visual attention in our subjects.

In inferior frontal cortex, a region of indoor structure (blue), human (green), communication verb (also blue-green), and text (cyan) representation runs along the IFS anterior to FO. This region coincides with the inferior frontal sulcus face patch (IFSFP) (Avidan et al., 2005; Tsao et al., 2008) and has also been implicated in processing of visual speech (Calvert & Campbell, 2003) and text (Poldrack et al., 1999). Our results suggest that visual speech, text and faces are represented in a contiguous region of cortex.

Smoothness of cortical semantic maps

We have shown that the brain represents hundreds of categories within a continuous 4-dimensional semantic space that is shared among different subjects. Furthermore, the results shown in Figure 7 suggest that this space is mapped smoothly onto the cortical sheet. However, the results presented thus far are not sufficient to determine whether the apparent smoothness of the cortical map reflects the specific properties of the group semantic space, or whether a smooth map might result from any arbitrary 4-dimensional projection of our voxel weights onto the cortical sheet. To address this issue we tested whether cortical maps under the 4-PC group semantic space are smoother than expected by chance.

In order to quantify the smoothness of a cortical map, we first projected the category model weights for every voxel into the 4-dimensional semantic space. Then we computed the correlation between the projections for each pair of voxels. Finally we aggregated and averaged these pairwise correlations based on the distance between each pair of voxels along the cortical sheet. To estimate the null distribution of smoothness values and to establish statistical significance, this procedure was repeated using 1000 random 4-dimensional semantic spaces (see Experimental Procedures for details).

Figure 8 shows the average correlation between voxel projections into the semantic space as a function of the distance between voxels along the cortical sheet. In all five subjects, the group semantic space projections have significantly (p<0.001) higher average correlation than the random projections, for both adjacent voxels (distance 1) and voxels separated by one intermediate voxel (distance 2). These results suggest that smoothness of the cortical map is specific to the group semantic space estimated here. Because the group semantic space was constructed without using any spatial information, this finding independently confirms the significance of the group semantic space.

Figure 8.

Smoothness of cortical maps under the group semantic space. To quantify smoothness of cortical representation under a semantic space, we first projected voxel category model weights into the semantic space. Then we computed the mean correlation between voxel semantic projections as a function of the distance between voxels along the cortical sheet. To determine whether cortical semantic maps under the group semantic model are significantly smoother than chance, smoothness was computed using the same analysis for 1000 random 4-dimensional spaces. Mean correlations for the group semantic space are plotted in blue, and mean correlations for the 1000 random spaces are plotted in gray. Gray error bars show 99% confidence intervals for the random space results. Group semantic space correlations that are significantly different from the random space results (p<0.001) are shown as hollow symbols. For adjacent voxels (distance 1) and voxels separated by one intermediate voxel (distance 2), correlations of group semantic space projections are significantly greater than chance in all subjects. This shows that cortical semantic maps under the group semantic space are much smoother than would be expected by chance.

Importance of category representation across cortex

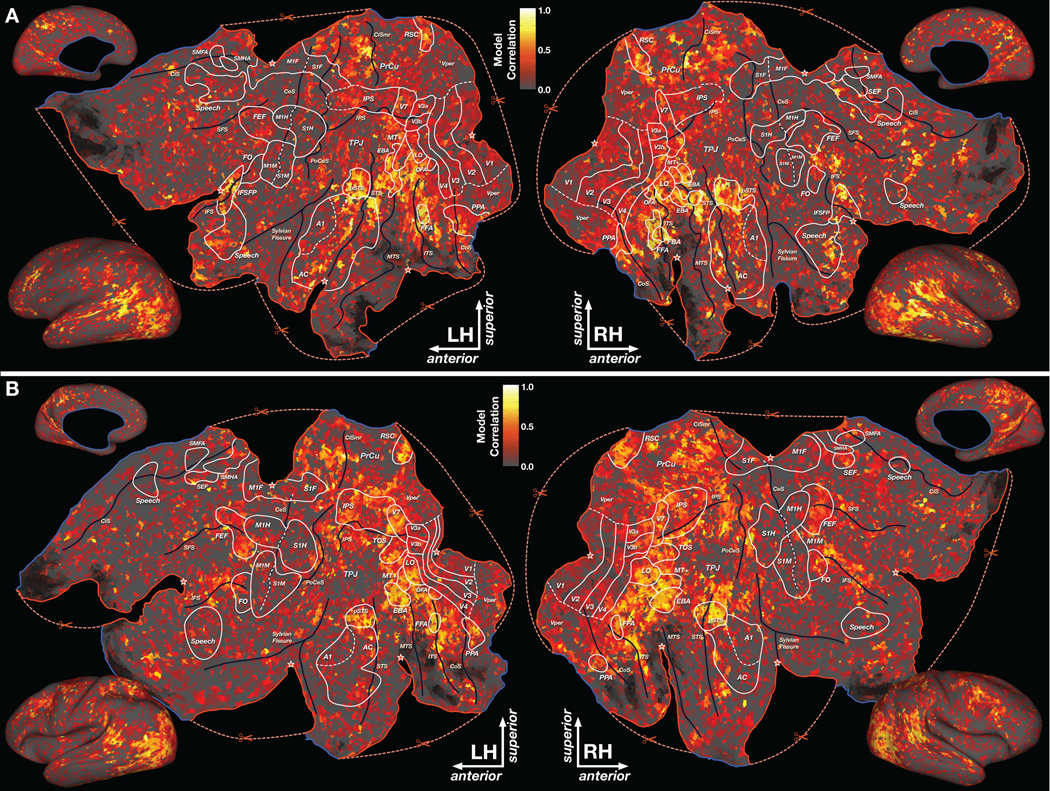

The cortical maps shown in Figure 7 demonstrate that much of neocortex is semantically selective. However, this does not necessarily imply that semantic selectivity is the primary function of any specific cortical site. To assess the importance of semantic selectivity across the cortical surface we evaluated predictions of the category model, using a separate data set reserved for this purpose (Kay et al., 2008; Naselaris et al., 2009; Nishimoto et al., 2011). Prediction performance was quantified as the correlation between predicted and observed BOLD responses, corrected to account for noise in the validation data (see Supplementary Experimental Procedures and Hsu et al., 2004).

Figure 9 shows prediction performance projected onto cortical flat maps for two subjects (corresponding maps for other subjects are shown in Fig. S7). The category model accurately predicts BOLD responses in occipitotemporal cortex, medial parietal cortex, and lateral prefrontal cortex. On average, 22% of cortical voxels are predicted significantly (p<0.01 uncorrected; 19% in subject SN, 20% in AH, 26% in AV, 26% in TC, and 21% in JG). The category model explains at least 20% of the explainable variance (correlation > 0.44) in an average of 8% of cortical voxels (5% in subject SN, 7% in AH, 10% in AV, 12% in TC, and 7% in JG). These results show that category representation is broadly distributed across the cortex. This result is inconsistent with the results of previous fMRI studies that reported only a few category-selective regions (Schwarzlose et al., 2005; Spiridon et al., 2006). (Note, however, that the category selectivity of individual brain areas reported in these previous studies is consistent with our results.) We suspect that previous studies have underestimated the extent of category representation in the cortex because they used static images and tested only a handful of categories.
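The percentages above rest on per-voxel prediction correlations and on a threshold linking correlation to explained variance. The sketch below shows that arithmetic under simplifying assumptions: it computes raw Pearson correlations between predicted and observed validation responses and omits the noise correction (Hsu et al., 2004) described in the Supplementary Experimental Procedures; the function names are hypothetical. Because explained variance is the squared correlation, 20% corresponds to r = √0.2 ≈ 0.447, so the 0.44 threshold quoted above is a slightly truncated value (0.44² ≈ 0.194).

```python
import numpy as np

def voxelwise_correlation(predicted, observed):
    """Pearson correlation between predicted and observed responses, per voxel.

    predicted, observed: arrays of shape (n_timepoints, n_voxels).
    Returns an array of shape (n_voxels,). No noise correction is applied.
    """
    p = (predicted - predicted.mean(axis=0)) / predicted.std(axis=0)
    o = (observed - observed.mean(axis=0)) / observed.std(axis=0)
    return (p * o).mean(axis=0)

# Correlation at which a model explains 20% of the variance (r**2 = 0.2).
R_20PCT = np.sqrt(0.2)  # about 0.447

def fraction_above(corrs, threshold):
    """Fraction of voxels whose prediction correlation exceeds the threshold."""
    return float(np.mean(corrs > threshold))
```

The per-subject figures reported above average as stated: (19 + 20 + 26 + 26 + 21) / 5 = 22.4% and (5 + 7 + 10 + 12 + 7) / 5 = 8.2%.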

Figure 9.

Model prediction performance across the cortical surface. To determine how much of the response variance of each voxel is explained by the category model, prediction performance was assessed using separate validation data reserved for this purpose. (A) Each location on the flat map represents a single voxel in the brain of subject AV. Colors reflect prediction performance on the validation data. Well predicted voxels appear yellow or white, and poorly predicted voxels appear gray. The best predictions are found in occipitotemporal cortex, the posterior superior temporal sulcus, medial parietal cortex, and inferior frontal cortex. (B) Model performance for subject TC. See Figure S7 for model prediction performance in other subjects. See Table S3 for model prediction performance within known functional areas.

Figure 9 also shows that some regions of cortex that appeared semantically selective in Figure 7 are predicted poorly. This suggests that the semantic selectivity of some brain regions is inconsistent or non-stationary. These inconsistent regions include the middle precuneus, temporoparietal junction, and dorsolateral prefrontal cortex. All of these regions are thought to be components of the default mode network (DMN) (Raichle et al., 2001) and are known to be strongly modulated by attention (Downar et al., 2002). Because we did not control or manipulate attention in this experiment, the inconsistent semantic selectivity of these regions may reflect uncontrolled attentional effects. Future studies that control attention explicitly could improve category model predictions in these regions.

Discussion

We used brain activity evoked by natural movies to study how 1705 object and action categories are represented in the human brain. The results show that the brain represents categories in a continuous semantic space that reflects category similarity. These results are consistent with the hypothesis that the brain efficiently represents the diversity of categories in a compact space, and they contradict the common hypothesis that each category is represented in a distinct brain area. Assuming that semantically related categories share visual or conceptual features, this organization likely minimizes the number of neurons or neural wiring required to represent these features.

Across the cortex, semantic representation is organized along smooth gradients that seem to be distributed systematically. Functional areas defined using classical contrast methods are merely peaks or nodal points within these broad semantic gradients. Furthermore, cortical maps based on the group semantic space are significantly smoother than expected by chance. These results suggest that semantic representation is analogous to retinotopic representation, in which many smooth gradients of visual eccentricity and angle selectivity tile the cortex (Engel et al., 1997; Hansen et al., 2007). Unlike retinotopy, however, the relevant dimensions of the space underlying semantic representation are not known a priori, and so must be derived empirically.

Previous studies have shown that natural movies evoke widespread, robust BOLD activity across much of the cortex (Bartels & Zeki, 2004; Hasson et al., 2004; Hasson et al., 2008; Haxby et al., 2011; Nishimoto et al., 2011). However, those studies did not attempt to systematically map semantic representation or discover the underlying semantic space. Our results help explain why natural movies evoke widely consistent activity across different individuals: object and action categories are represented in terms of a common semantic space that maps consistently onto cortical anatomy.

One potential criticism of this study is that the WordNet features used to construct the category model might have biased the recovered semantic space. For example, the category surgeon only appears four times in these stimuli, but because it is a descendant of person in WordNet, surgeon appears near person in the semantic space. It is possible (though unlikely) that surgeons are represented very differently from other people, but that we are unable to recover that information from these data. On the other hand, categories that appeared frequently in these stimuli are largely immune to this bias. For example, among the descendants of person there is a large difference between the representations of athlete (which appears 282 times in these stimuli) and man (which appears 1482 times). Thus it appears that bias due to WordNet only affects rare categories. We do not believe that these considerations have a significant effect on the results of this study.

Another potential criticism of the regression-based approach used in this study is that some results could be biased by stimulus correlations. For example, we might conclude that a voxel responds to talking when in fact it responds to the presence of a mouth. In theory, such correlations are modeled and removed by the regression procedure as long as sufficient data are collected, but our data are limited and so some residual correlations may remain. However, we believe that the alternative, bias due to pre-selecting a small number of stimulus categories, is a more pernicious source of error and misinterpretation in conventional fMRI experiments. Errors due to stimulus correlation can be seen, measured and tested. Errors due to stimulus preselection are implicit and largely invisible.

The group semantic space found here captures large semantic distinctions such as mobile vs. stationary categories, but misses finer distinctions such as old faces vs. young faces (Op de Beeck, 2010) and small objects vs. large objects (Konkle & Oliva, 2012). These fine distinctions would likely be captured by lower-variance dimensions of the shared semantic space that could not be recovered in this experiment. The dimensionality and resolution of the recovered semantic space are limited by the quality of BOLD fMRI, and by the size and semantic breadth of the stimulus set. Future studies that use more sensitive measures of brain activity or broader stimulus sets will likely reveal additional dimensions of the common semantic space. Further studies using more subjects will also be necessary to understand differences in semantic representation between individuals.

Some previous studies have reported that animal and non-animal categories are represented distinctly in the human brain (Downing et al., 2006; Kriegeskorte et al., 2008; Naselaris et al., 2009). Another study proposed an alternative: that animal categories are represented using an animacy continuum (Connolly et al., 2012), in which animals that are more similar to humans have higher animacy. Our results show that animacy is well represented on the first, and most important, PC in the group semantic space. The binary distinction between animals and non-animals is also well represented, but only on the fourth PC. Moreover, the fourth PC is better explained by the distinction between biological categories (including plants) and non-biological categories. These results suggest that the animacy continuum is more important for category representation in the brain than is the binary distinction between animal and non-animal categories.

A final important question about the group semantic space is whether it reflects visual or conceptual features of the categories. For example, people and non-human animals might be represented similarly because they share visual features such as hair, or because they share conceptual features such as agency or self-locomotion. The answer to this question likely depends upon which voxels are used to construct the semantic space. Voxels from occipital and inferior temporal cortex have been shown to have similar semantic representation in humans and monkeys (Kriegeskorte et al., 2008). Therefore, these voxels likely represent visual features of the categories, and not conceptual features. In contrast, voxels from medial parietal cortex and frontal cortex likely represent conceptual features of the categories. Because the group semantic space reported here was constructed using voxels from across the entire brain, it probably reflects a mixture of visual and conceptual features. Future studies using both visual and nonvisual stimuli will be required to disentangle the contributions of visual versus conceptual features to semantic representation. Furthermore, a model that represents stimuli in terms of visual and conceptual features might produce more accurate and parsimonious predictions than the category model used here.

Experimental Procedures

MRI data collection

MRI data were collected on a 3T Siemens TIM Trio scanner at the UC Berkeley Brain Imaging Center using a 32-channel Siemens volume coil. Functional scans were collected using a gradient echo-EPI sequence with repetition time (TR) = 2.0045 s, echo time (TE) = 31 ms, flip angle = 70 degrees, voxel size = 2.24×2.24×4.1 mm, matrix size = 100×100, and field of view = 224×224 mm. Thirty-two axial slices were prescribed to cover the entire cortex. A custom-modified bipolar water excitation radiofrequency (RF) pulse was used to avoid signal from fat.

Anatomical data for subjects AH, TC, and JG were collected using a T1-weighted MP-RAGE sequence on the same 3T scanner. Anatomical data for subjects SN and AV were collected on a 1.5T Philips Eclipse scanner as described in an earlier publication (Nishimoto et al., 2011).

Subjects

Functional data were collected from five male human subjects, SN (author SN, age 32), AH (author AGH, age 25), AV (author ATV, age 25), TC (age 29), and JG (age 25). All subjects were healthy and had normal or corrected-to-normal vision.

Natural Movie Stimuli

Model estimation data were collected in twelve separate 10-minute scans. Validation data were collected in nine separate 10-minute scans, each consisting of ten 1-minute validation blocks. Each 1-minute validation block was presented 10 times within the 90 minutes of validation data. The stimuli and experimental design were identical to those used by Nishimoto et al. (2011), except that here the movies were shown on a projection screen at 24×24 degrees of visual angle.

fMRI data pre-processing

Each functional run was motion-corrected using the FMRIB Linear Image Registration Tool (FLIRT) from FSL 4.2 (Jenkinson & Smith, 2001). All volumes in the run were then averaged to obtain a high quality template volume. FLIRT was also used to automatically align the template volume for each run to the overall template, which was chosen to be the template for the first functional movie run for each subject. These automatic alignments were manually checked and adjusted for accuracy. The cross-run transformation matrix was then concatenated to the motion-correction transformation matrices obtained using MCFLIRT, and the concatenated transformation was used to resample the original data directly into the overall template space.
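The single-resampling step can be illustrated with homogeneous 4×4 affine matrices. This is a minimal sketch, assuming both transforms are expressed in the same coordinate convention (FSL's own matrix conventions are not reproduced here) and using hypothetical function names: the per-volume motion-correction transform is applied first and the cross-run alignment second, so the combined matrix is their product in that order. Resampling once with the combined matrix avoids interpolating the data twice.

```python
import numpy as np

def compose_affines(cross_run, motion_correction):
    """Return one 4x4 affine that applies the motion-correction transform
    (volume -> run template) followed by the cross-run transform
    (run template -> overall template)."""
    return cross_run @ motion_correction

def apply_affine(affine, points):
    """Map an (n, 3) array of coordinates through a 4x4 affine."""
    homog = np.c_[points, np.ones(len(points))]  # append 1 for translation
    return (homog @ affine.T)[:, :3]
```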

Low-frequency voxel response drift was identified using a median filter with a 120-second window and this was subtracted from the signal. The mean response for each voxel was then subtracted and the remaining response was scaled to have unit variance.
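A minimal sketch of this preprocessing with NumPy and SciPy follows. Converting the 120-second window to an odd number of samples (61 at TR = 2.0045 s) and using `mode="nearest"` at the edges of the run are assumptions, not details given in the text; the function name is hypothetical.

```python
import numpy as np
from scipy.ndimage import median_filter

TR = 2.0045  # seconds, from the scan parameters above

def detrend_and_zscore(data, tr=TR, window_s=120.0):
    """data: (n_timepoints, n_voxels). Returns drift-removed, z-scored data."""
    # Window length in samples, forced to be odd so it is centered on each sample.
    size = int(round(window_s / tr))
    if size % 2 == 0:
        size += 1
    # Median-filter along time only; size 1 on the voxel axis keeps voxels separate.
    drift = median_filter(data, size=(size, 1), mode="nearest")
    resid = data - drift
    # Remove each voxel's mean and scale to unit variance.
    resid = resid - resid.mean(axis=0)
    return resid / resid.std(axis=0)
```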

Flatmap construction

Cortical surface meshes were generated from the T1-weighted anatomical scans using Caret5 software (Van Essen et al., 2001). Five relaxation cuts were made into the surface of each hemisphere and the surface crossing the corpus callosum was removed. The calcarine sulcus cut was made at the horizontal meridian in V1 using retinotopic mapping data as a guide. Surfaces were then flattened using Caret5.

Functional data were aligned to the anatomical data for surface projection using custom software written in MATLAB (MathWorks, Natick, MA).

Stimulus labeling and preprocessing

One observer manually tagged each second of the movies with WordNet labels describing the salient objects and actions in the scene. The number of labels per second varied between 1 and 14, with an average of 4.2. Categories were tagged if they appeared in at least half of the one-second clip. When possible, specific labels (e.g. priest) were used instead of generic labels (e.g. person). Label assignments were spot-checked for accuracy by two additional observers. For example labeled clips see Fig. S1.

The labels were then used to build a category indicator matrix, in which each second of movie occupies a row and each category occupies a column. A value of 1 was assigned to each entry where that category appeared in that second of movie and all other entries were set to zero. Next, the WordNet hierarchy (Miller, 1995) was used to add all the superordinate categories entailed by each labeled category. For example, if a clip was labeled with wolf, we would automatically add the categories canine, carnivore, placental mammal, mammal, vertebrate, chordate, organism, and whole. According to this scheme the predicted BOLD response to a category is not just the weight on that category, but the sum of weights for all entailed categories.
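The indicator matrix and its superordinate expansion can be sketched with a toy hierarchy. The `PARENT` dictionary below is hypothetical and gives each category a single parent; real WordNet synsets can have several hypernyms, so a full implementation would follow every hypernym path (for example through a WordNet interface such as NLTK's), which is not shown here.

```python
import numpy as np

# Hypothetical toy hierarchy standing in for WordNet hypernym links (child -> parent).
PARENT = {
    "wolf": "canine", "dog": "canine", "canine": "carnivore",
    "carnivore": "mammal", "mammal": "organism",
    "priest": "person", "person": "organism",
}

def with_superordinates(label):
    """Return the label followed by every category it entails, walking upward."""
    cats = [label]
    while cats[-1] in PARENT:
        cats.append(PARENT[cats[-1]])
    return cats

def indicator_matrix(labels_per_second, categories):
    """Rows are seconds of movie, columns are categories. An entry is 1 if the
    category, or a category that entails it, was labeled in that second."""
    col = {c: j for j, c in enumerate(categories)}
    X = np.zeros((len(labels_per_second), len(categories)))
    for i, labels in enumerate(labels_per_second):
        for label in labels:
            for c in with_superordinates(label):
                X[i, col[c]] = 1.0
    return X
```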

The addition of superordinate categories should improve model predictions by allowing poorly sampled categories to share information with their WordNet neighbors. To test this hypothesis we compared prediction performance of the model with superordinate categories to a model that used only the labeled categories. The number of significantly predicted voxels is 10–20% higher with the superordinate category model than with the labeled category model. To ensure that the principal components analysis (PCA) results presented here are not an artifact of the added superordinate categories, we performed the same analysis using the labeled categories model. The results obtained using the labeled categories model were qualitatively similar to those obtained using the full model (data not shown).

The regression procedure also included one additional feature that described the total motion energy during each second of the movie. This regressor was added in order to explain away spurious correlation between responses in early visual cortex and some categories. Total motion energy was computed as the mean output of a set of 2139 motion energy filters (Nishimoto et al., 2011) where each filter consisted of a quadrature pair of space-time Gabor filters (Adelson & Bergen, 1985; Watson & Ahumada, 1985). The motion energy filters tile the image space with a variety of preferred spatial frequencies, orientations, and temporal frequencies. The total motion energy regressor explained much of the response variance in early visual cortex (mainly V1 and V2). This had the desired effect of explaining away correlations between responses in early visual cortex and categories that feature full-field motion (e.g. fire, snow). The total motion energy regressor was used to fit the category model, but was not included in the model predictions.
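The motion energy computation can be illustrated with a toy filter bank. This sketch assumes grayscale frames for one second of movie stored as a (time, y, x) array of square frames, places every filter at the frame center instead of tiling positions, and omits any further nonlinearity or normalization used by Nishimoto et al. (2011); `gabor_pair` and `total_motion_energy` are hypothetical names. Each filter's energy is the sum of the squared outputs of its even and odd (quadrature) components, which makes the response insensitive to stimulus phase, so a grating drifting in a filter's preferred direction yields far more energy than the same grating drifting the opposite way.

```python
import numpy as np

def gabor_pair(shape, sf, theta, tf):
    """Quadrature pair of space-time Gabor filters on a centered (t, y, x) grid.

    sf: spatial frequency (cycles/pixel), theta: orientation (radians),
    tf: temporal frequency (cycles/frame). Assumes square frames.
    """
    nt, ny, nx = shape
    t, y, x = np.meshgrid(np.arange(nt) - nt / 2, np.arange(ny) - ny / 2,
                          np.arange(nx) - nx / 2, indexing="ij")
    envelope = np.exp(-(x**2 + y**2) / (2 * (nx / 4) ** 2)
                      - t**2 / (2 * (nt / 4) ** 2))
    phase = 2 * np.pi * (sf * (x * np.cos(theta) + y * np.sin(theta)) + tf * t)
    return envelope * np.cos(phase), envelope * np.sin(phase)

def total_motion_energy(clip, filters):
    """clip: (t, y, x) frames for one second of movie.
    Returns the mean quadrature energy across the filter bank."""
    energies = []
    for even, odd in filters:
        e = np.sum(clip * even)
        o = np.sum(clip * odd)
        energies.append(e**2 + o**2)  # phase-invariant energy of the pair
    return float(np.mean(energies))
```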

Voxel-wise model fitting and testing

The category model was fit to each voxel individually. A set of linear temporal filters was used to model the slow hemodynamic response inherent in the BOLD signal (Nishimoto et al., 2011). To capture the hemodynamic delay we used concatenated stimulus vectors that had been delayed by 2, 3, and 4 samples (4, 6, and 8 seconds). For example, one stimulus vector indicates the presence of wolf 4 seconds earlier, another the presence of wolf 6 seconds earlier, and a third the presence of wolf 8 seconds earlier. Taking the dot product of this delayed stimulus with a set of linear weights is functionally equivalent to convolution of the original stimulus vector with a linear temporal kernel that has non-zero entries for 4-, 6-, and 8-second delays.
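The delayed design matrix and its equivalence to convolution can be sketched as follows. The function name `make_delayed` is hypothetical; delays are given in samples (2, 3 and 4 TRs, about 4, 6 and 8 s), and rows before each delay takes effect are zero-padded, which is an assumption about edge handling. Features are stacked delay by delay, so the weight vector is ordered the same way.

```python
import numpy as np

def make_delayed(stim, delays=(2, 3, 4)):
    """stim: (n_timepoints, n_features). Returns copies of stim shifted later in
    time by each delay (in samples), concatenated along the feature axis."""
    n_t = stim.shape[0]
    out = []
    for d in delays:
        shifted = np.zeros_like(stim)
        shifted[d:] = stim[:n_t - d]  # value at time t is the stimulus at t - d
        out.append(shifted)
    return np.hstack(out)

# For one feature, weighting the delayed copies equals convolving the stimulus
# with a kernel that is non-zero only at lags 2, 3 and 4.
stim = np.array([[1.0], [0.0], [0.0], [0.0], [0.0], [0.0]])
weights = np.array([0.5, -1.0, 2.0])
kernel = np.zeros(5)
kernel[[2, 3, 4]] = weights
assert np.allclose(make_delayed(stim) @ weights,
                   np.convolve(stim[:, 0], kernel)[:len(stim)])
```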

For details about the regularized regression procedure, model testing, and correction for noise in the validation set, please see the Supplementary Experimental Procedures.

All model fitting and analysis was performed using custom software written in Python, which made heavy use of the NumPy (Oliphant, 2006) and SciPy (Jones et al., 2001) libraries.

Estimating predicted category response

In the semantic category model used here each category entails the presence of its superordinate categories in the WordNet hierarchy. For example, wolf entails the presence of canine, carnivore, etc. Because these categories must be present in the stimulus if wolf is present, the model weight for wolf alone does not accurately reflect the model's predicted response to a stimulus containing only a wolf. Instead, the predicted response to wolf is the sum of the weights for wolf, canine, carnivore, etc. Thus to determine the predicted response of a voxel to a given category we added together the weights for that category and all categories that it entails. This procedure is equivalent to simulating the response of a voxel to a stimulus labeled only with wolf.
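Because the entailments are fixed, this summation can be written as one matrix product. A minimal sketch, assuming the model weights have already been collapsed to one value per category (for example by averaging over the three delays, an assumption here) and using a hypothetical toy hierarchy: row i of the entailment matrix is exactly the expanded indicator vector for a stimulus labeled only with category i, so multiplying it by the weights simulates that stimulus.

```python
import numpy as np

def entailment_matrix(categories, parent):
    """E[i, j] = 1 if category i entails category j (including i itself).
    parent: hypothetical child -> parent mapping standing in for WordNet."""
    idx = {c: k for k, c in enumerate(categories)}
    E = np.zeros((len(categories), len(categories)))
    for c in categories:
        node = c
        while node is not None:
            E[idx[c], idx[node]] = 1.0
            node = parent.get(node)  # None once the top of the chain is reached
    return E

def predicted_category_response(weights, E):
    """weights: (n_categories, n_voxels). Returns each voxel's simulated response
    to a stimulus labeled with only each category."""
    return E @ weights
```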

We used this procedure to estimate the predicted category responses shown in Figure 2, to assign colors and positions to the category nodes shown in Figures 4 and 5, and to correct PC coefficients before comparing them to hypothetical semantic dimensions as shown in Figure 6.

Principal components analysis

For each subject we used principal components analysis (PCA) to recover a low-dimensional semantic space from category model weights. We first selected all voxels that the model predicted significantly, using a liberal significance threshold (p<0.05 uncorrected for multiple comparisons). This yielded 8269 voxels in subject SN, 8626 voxels in AH, 11697 voxels in AV, 11187 voxels in TC, and 9906 voxels in JG. We then applied PCA to the category model weights of the selected voxels, yielding 1705 PCs for each subject. (In additional tests we found that varying the voxel selection threshold does not strongly affect the PCA results.) Partial scree plots showing the amount of variance accounted for by each PC are shown in Figure 3. The first four PCs account for 24.1% of variance in subject SN, 25.9% of variance in AH, 28.0% of variance in AV, 25.8% of variance in TC, and 25.6% of variance in JG.
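The PCA step can be sketched with a singular value decomposition. This assumes the selected voxels' weights are arranged as a voxels × categories matrix and that each category column is mean-centered before decomposition (conventional for PCA, but not stated in the text); `semantic_pca` is a hypothetical name. With more voxels than categories, this yields one PC per category, matching the 1705 PCs reported above.

```python
import numpy as np

def semantic_pca(weights):
    """weights: (n_voxels, n_categories) category model weights of selected voxels.
    Returns PCs as rows (n_components, n_categories) and the fraction of
    variance accounted for by each PC, in decreasing order."""
    centered = weights - weights.mean(axis=0)
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    var = s**2 / np.sum(s**2)
    return vt, var
```

For example, `var[:4].sum()` gives the share of variance captured by the first four PCs, the quantity reported for each subject above.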

Next we tested whether the recovered PCs were different from what we would expect by chance. For details of this procedure, please see the Supplementary Experimental Procedures.

In this paper we present semantic analyses using PCA, but PCA is only one of many dimensionality reduction methods. Sparse methods such as independent components analysis (ICA) and non-negative matrix factorization (NNMF) can also be used to recover the underlying semantic space. We found that these methods produced qualitatively similar results to PCA on the data presented here. In this paper we present only PCA results because PCA is commonly used and easy to understand, and its results are highly interpretable.

Stimulus identification using category model and models based on group PCs

To quantify the relative amount of information that can be represented by the full category model and the models based on group PCs, we used the validation data to perform an identification analysis (Kay et al., 2008; Nishimoto et al., 2011). For the full category model we calculated log-likelihoods of the observed responses given the predicted responses to the validation stimuli under the fitted category model (Nishimoto et al., 2011). Responses were aggregated into 18-second (9-TR) chunks, and identification was counted as correct if the stimulus chunk with the highest likelihood was within ±1 volume (TR) of the correct timing for the matched stimulus chunk. To minimize potential confounds due to non-semantic stimulus features, the prediction of the total motion energy regressor was subtracted from the responses before the analysis.
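The identification criterion can be sketched as below. This is not the authors' likelihood model: it assumes independent Gaussian noise with a known per-voxel variance, compares each observed 9-TR chunk with predicted chunks starting at every TR, and counts a hit when the best match starts within ±1 TR of the true start. The motion-energy subtraction is assumed to have been applied already, and all names are hypothetical.

```python
import numpy as np

def chunk_log_likelihood(observed, predicted, noise_var):
    """Gaussian log-likelihood (up to a constant) of an observed chunk given a
    predicted chunk, assuming independent noise with per-voxel variance."""
    resid = observed - predicted
    return -0.5 * np.sum(resid**2 / noise_var)

def identification_accuracy(observed, predicted, noise_var, chunk=9, tolerance=1):
    """observed, predicted: (n_timepoints, n_voxels) validation responses.
    Each observed chunk of `chunk` TRs is scored against every predicted chunk;
    identification is correct if the best-scoring chunk starts within
    `tolerance` TRs of the true start."""
    n_t = observed.shape[0]
    starts = np.arange(0, n_t - chunk + 1)
    correct = 0
    for s in starts:
        obs = observed[s:s + chunk]
        scores = [chunk_log_likelihood(obs, predicted[c:c + chunk], noise_var)
                  for c in starts]
        best = starts[int(np.argmax(scores))]
        correct += int(abs(best - s) <= tolerance)
    return correct / len(starts)
```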

To perform the identification analysis for models based on the group PCs we repeated the same procedures as above, but using group PC models. We obtained these models by voxel-wise regression using the category stimuli projected into the group PC space (see "Voxel-wise model fitting and testing" and "Principal components analysis" in Experimental Procedures). In order to assess variability in the performance measurements we performed the identification analysis 10 times, based on group PCs obtained using bootstrap voxel samples.

To reduce noise, the identification analyses used only the 2,000 most predictable voxels. Prediction performance was assessed using 10% of the training data that we reserved from the regression for this purpose. Voxel selection was performed separately for each model and subject.

Comparison between group semantic space and hypothesized semantic dimensions

To compare the dimensions of the group semantic space to hypothesized semantic dimensions, we first defined each hypothesized dimension as a vector with a value for each of the 1705 categories. We then computed the variance that each hypothesized dimension explains in each group PC as the squared correlation between the PC vector and hypothesized dimension vector. To find confidence intervals on the variance explained in each PC we bootstrapped the group PCA by sampling with replacement 100 times from the pooled voxel population.
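The comparison and its bootstrap can be sketched as follows. Squared correlation ignores the arbitrary sign of a PC, which suits this comparison. The sketch assumes a voxels × categories weight matrix, a mean-centered PCA, and a 95% percentile interval; the centering, the interval level, and the function names are assumptions, not details from the text. Because the PCA is redone on each resample, PCs can swap order across resamples, which a full implementation might handle by matching PCs.

```python
import numpy as np

def variance_explained(pc, dimension):
    """Squared Pearson correlation between a PC vector and a hypothesized
    dimension vector, both of length n_categories."""
    return float(np.corrcoef(pc, dimension)[0, 1] ** 2)

def bootstrap_variance_explained(weights, dimension, pc_index=0, n_boot=100, seed=0):
    """Resample voxels with replacement, redo PCA, and return a 95% percentile
    interval for the variance in PC `pc_index` explained by `dimension`.
    weights: (n_voxels, n_categories)."""
    rng = np.random.default_rng(seed)
    values = []
    for _ in range(n_boot):
        sample = weights[rng.integers(0, len(weights), len(weights))]
        centered = sample - sample.mean(axis=0)
        _, _, vt = np.linalg.svd(centered, full_matrices=False)
        values.append(variance_explained(vt[pc_index], dimension))
    return np.percentile(values, [2.5, 97.5])
```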

We defined nine semantic dimensions based on previous publications and our own hypotheses. These dimensions included mobile vs. immobile, animacy, humans vs. non-humans, social vs. non-social, civilization vs. nature, animal vs. non-animal, biological vs. non-biological, place vs. non-place, and object size. For the mobile vs. immobile dimension we assigned positive weights to mobile categories such as animals, people, and vehicles, and zero weight to all other categories. For the animacy dimension based on (Connolly et al., 2012) we assigned high weights to people, and intermediate and low weights to other animals based on their phylogenetic distance from humans: more distant animals were assigned lower weights. For the human vs. non-human dimension we assigned positive weights to people and zero weights to all other categories. For the social vs. non-social dimension we assigned positive weights to people and communication verbs and zero weights to all other categories. For the civilization vs. nature dimension we assigned positive weights to people, man-made objects (e.g. buildings, vehicles, tools), and communication verbs, and negative weights to non-human animals. For the animal vs. non-animal dimension we assigned positive weights to non-human animals, people, and body parts, and zero weight to all other categories. For the biological vs. non-biological dimension we assigned positive weights to all organisms (e.g. people, non-human animals, plants), plant organs (e.g. flower, leaf), body parts, and body coverings (e.g. hair). For the place vs. non-place dimension we assigned positive weights to outdoor categories (e.g. geological formations, geographical locations, roads, bridges, buildings) and zero weight to all other categories. For the real-world size dimension based on (Konkle & Oliva, 2012) we assigned a high weight to large objects (e.g. boat), medium weight to human-scale objects (e.g. person), a small weight to small objects (e.g. glasses), and zero weight to objects that have no size (e.g. talking) and those that can be many sizes (e.g. animal).

Smoothness of cortical maps under group semantic space

Projecting voxel category model weights onto the group semantic space produces semantic maps that appear spatially smooth (see Fig. 7). However, these maps alone are insufficient to determine whether the apparent smoothness of the cortical map is a specific property of the 4-PC group semantic space. If the category model weights are themselves smoothly mapped onto the cortical sheet, then any 4-dimensional projection of these weights might appear as smooth as the projection onto the group semantic space. To address this issue we tested whether cortical maps under the 4-PC group semantic space are smoother than expected by chance.

First, we constructed a voxel adjacency matrix based on the fiducial cortical surfaces. The cortical surface for each hemisphere in each subject was represented as a triangular mesh with roughly 60,000 vertices and 120,000 edges. Two voxels were considered adjacent if there was an edge that connects a vertex inside one voxel to a vertex inside the other. Next, we computed the distance between each pair of voxels in the cortex as the length of the shortest path between the voxels in the adjacency graph. This distance metric does not directly translate to physical distance, because the voxels in our scan are not isotropic. However, this affects all models that we test and thus will not bias the results of this analysis.
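The voxel graph and its shortest-path distances can be sketched with SciPy's sparse graph routines. This assumes the mesh is given as vertex-index edge pairs and that each vertex has already been assigned to the voxel containing it (−1 for vertices outside any voxel); those inputs and the function name are hypothetical. The dense output has one entry per voxel pair, so for whole-cortex voxel counts it is large, and a practical version might compute distances from a subset of source voxels at a time (the `indices` argument of `shortest_path`).

```python
import numpy as np
from scipy.sparse import coo_matrix
from scipy.sparse.csgraph import shortest_path

def voxel_distances(edges, vertex_to_voxel, n_voxels):
    """edges: (n_edges, 2) vertex index pairs from the surface mesh.
    vertex_to_voxel: (n_vertices,) voxel index containing each vertex, or -1.
    Returns an (n_voxels, n_voxels) matrix of graph distances in voxel steps
    (inf for voxels that are not connected)."""
    a = vertex_to_voxel[edges[:, 0]]
    b = vertex_to_voxel[edges[:, 1]]
    # Two voxels are adjacent if some mesh edge joins a vertex in each.
    keep = (a >= 0) & (b >= 0) & (a != b)
    rows = np.r_[a[keep], b[keep]]
    cols = np.r_[b[keep], a[keep]]
    adj = coo_matrix((np.ones(len(rows)), (rows, cols)),
                     shape=(n_voxels, n_voxels)).tocsr()
    # Unweighted: every adjacency counts as one step regardless of duplicates.
    return shortest_path(adj, method="D", unweighted=True)
```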

Next, we projected the voxel category weights onto the 4-dimensional group semantic space, which reduced each voxel to a length-4 vector. We then computed the correlation between the projected weights for each pair of cortical voxels. Finally, for each distance up to 10 voxels, we computed the mean correlation across all pairs of voxels separated by that distance. This procedure produces a spatial autocorrelation function for each subject; the results are shown as blue lines in Fig. 8.
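A minimal NumPy sketch of the projection and spatial autocorrelation step follows. It assumes the category weights form an `(n_voxels, 1705)` array, the group semantic space is a `(1705, 4)` matrix whose columns are the PC axes, and the distances come from the adjacency graph described above, with -1 marking unknown or out-of-range pairs; the function name `spatial_autocorrelation` is hypothetical. The correlation between two voxels is a Pearson correlation taken across their four projected coordinates, and each unordered pair is counted once.

```python
import numpy as np

def spatial_autocorrelation(weights, basis, dist, max_dist=10):
    """Mean correlation of projected voxel weights as a function of graph distance.

    weights: (n_voxels, n_categories) category model weights.
    basis:   (n_categories, k) semantic-space axes, e.g. the 4 group PCs.
    dist:    (n_voxels, n_voxels) shortest-path distances, -1 where unknown.
    Returns a length-max_dist array; entry d-1 is the mean at distance d
    (NaN if no pair of voxels lies at that distance).
    """
    dist = np.asarray(dist)
    projected = weights @ basis        # each voxel becomes a length-k vector
    corr = np.corrcoef(projected)      # rows are voxels, so this is voxel-by-voxel
    upper = np.triu(np.ones(dist.shape, dtype=bool), k=1)  # each pair counted once
    result = np.full(max_dist, np.nan)
    for d in range(1, max_dist + 1):
        mask = upper & (dist == d)
        if mask.any():
            result[d - 1] = corr[mask].mean()
    return result
```

The full voxel-by-voxel correlation matrix is only practical for small examples; for a whole cortex one would compute correlations only for the pairs that fall within `max_dist`.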

To determine whether cortical map smoothness is specific to the group semantic space, we repeated this analysis 1000 times using random semantic spaces with the same dimensionality as the group semantic space. Random orthonormal 4-dimensional projections from the 1705-dimensional category space were constructed by applying singular value decomposition to randomly generated 4×1705 matrices. These spaces can be thought of as uniform random rotations of the group semantic space within the 1705-dimensional category space.
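The random-space construction can be sketched with NumPy's SVD. The text says only that the 4×1705 matrices were "randomly generated"; this sketch assumes i.i.d. standard-normal entries, the choice under which the spanned subspace is rotation-invariant and therefore uniformly distributed. The function name `random_semantic_space` is hypothetical.

```python
import numpy as np

def random_semantic_space(n_categories=1705, n_dims=4, rng=None):
    """Random orthonormal n_dims-dimensional basis in category space.

    Assumes i.i.d. standard-normal entries for the random matrix.
    Returns an (n_categories, n_dims) matrix with orthonormal columns.
    """
    rng = np.random.default_rng(rng)
    m = rng.standard_normal((n_dims, n_categories))
    # The rows of vt are orthonormal and span the same subspace as the rows of m.
    _, _, vt = np.linalg.svd(m, full_matrices=False)
    return vt.T
```

Each returned basis would take the place of the group PCs when the spatial autocorrelation function is recomputed, once per random sample, to build the null distribution.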

We considered the observed mean pairwise correlation under the group semantic space significant if it exceeded all 1000 random samples, corresponding to p < 0.001.
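The decision rule can be written as a small per-distance test. This sketch assumes the observed statistic is an array over distances and the null samples are stacked row-wise; the function name is hypothetical. It also reports an empirical p-value using the common (1 + b)/(N + 1) permutation convention, which is an assumption not stated in the text; under it, exceeding all 1000 samples gives p = 1/1001 < 0.001.

```python
import numpy as np

def exceeds_all_null(observed, null):
    """Per-distance test: is the observed value larger than every null sample?

    observed: (n_distances,) mean correlations under the group semantic space.
    null:     (n_samples, n_distances) the same statistic under random spaces.
    Returns (significant, p) with p = (1 + #{null >= observed}) / (n_samples + 1).
    """
    observed = np.asarray(observed)
    null = np.asarray(null)
    n_ge = (null >= observed).sum(axis=0)   # null samples at least as large
    p = (1 + n_ge) / (null.shape[0] + 1)
    return n_ge == 0, p
```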

Supplementary Material

Highlights.

The brain represents object and action categories within a continuous semantic space

This semantic space is organized into broad gradients across the cortical surface

This semantic space is shared across different individuals

Acknowledgments

The work was supported by grants from the National Eye Institute (EY019684) and from the Center for Science of Information (CSoI), an NSF Science and Technology Center, under grant agreement CCF-0939370. AGH was also supported by the William Orr Dingwall Neurolinguistics Fellowship. We thank Natalia Bilenko and Tolga Çukur for helping with fMRI data collection, Neil Thompson for assistance with the WordNet analysis, and Tom Griffiths and Sonia Bishop for discussions regarding the manuscript. AGH, SN, and JLG conceived and designed the experiment. AGH, SN, and ATV collected the fMRI data. ATV and Tolga Çukur customized and optimized the fMRI pulse sequence. ATV performed the brain flattening and localizer analysis. AGH tagged the movies. SN and AGH analyzed the data. AGH and JLG wrote the paper.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

The authors declare no conflict of interest.

References

- Adelson EH, Bergen JR. Spatiotemporal energy models for the perception of motion. J Opt Soc Am A. 1985;2(2):284–299. doi: 10.1364/josaa.2.000284.

- Aguirre GK, Zarahn E, D’Esposito M. An Area within Human Ventral Cortex Sensitive to Building Stimuli: Evidence and Implications. Neuron. 1998;21(2):373–383. doi: 10.1016/s0896-6273(00)80546-2.

- Avidan G, Hasson U, Malach R, Behrmann M. Detailed exploration of face-related processing in congenital prosopagnosia: 2. Functional neuroimaging findings. J Cogn Neurosci. 2005;17(7):1150–1167. doi: 10.1162/0898929054475145.

- Bartels A, Zeki S. Functional brain mapping during free viewing of natural scenes. Hum Brain Mapp. 2004;21(2):75–85. doi: 10.1002/hbm.10153.

- Buccino G, Binkofski F, Fink GR, Fadiga L, Fogassi L, Gallese V, Seitz RJ, et al. Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. Eur J Neurosci. 2001;13(2):400.

- Buchel C, Josephs O, Rees G, Turner R, Frith CD, Friston KJ. The functional anatomy of attention to visual motion. Brain. 1998;121:1281–1294. doi: 10.1093/brain/121.7.1281.

- Calvert GA, Campbell R. Reading speech from still and moving faces: the neural substrates of visible speech. J Cogn Neurosci. 2003;15(1):57–70. doi: 10.1162/089892903321107828.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 1999;2:913–919. doi: 10.1038/13217.

- Clark VP, Keil K, Maisog JM, Courtney S, Ungerleider LG, Haxby JV. Functional magnetic resonance imaging of human visual cortex during face matching: a comparison with positron emission tomography. Neuroimage. 1996;4(1):1–15. doi: 10.1006/nimg.1996.0025.

- Cohen L, Dehaene S, Naccache L, Lehericy S, Dehaene-Lambertz G, Henaff MA, Michel F. The visual word form area: spatial and temporal characterization of an initial stage of reading in normal subjects and posterior split-brain patients. Brain. 2000;123(2):291. doi: 10.1093/brain/123.2.291.

- Connolly AC, Guntupalli JS, Gors J, Hanke M, Halchenko YO, Wu Y-C, Abdi H, Haxby JV. The representation of biological classes in the human brain. J Neurosci. 2012;32:2608–2618. doi: 10.1523/JNEUROSCI.5547-11.2012.

- Downar J, Crawley AP, Mikulis DJ, Davis KD. A cortical network sensitive to stimulus salience in a neutral behavioral context across multiple sensory modalities. J Neurophysiol. 2002;87(1):615. doi: 10.1152/jn.00636.2001.

- Downing PE, Chan AWY, Peelen MV, Dodds CM, Kanwisher N. Domain specificity in visual cortex. Cereb Cortex. 2006;16(10):1453. doi: 10.1093/cercor/bhj086.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293(5539):2470. doi: 10.1126/science.1063414.

- Edelman S, Grill-Spector K, Kushnir T, Malach R. Toward direct visualization of the internal shape representation space by fMRI. Psychobiology. 1998;26(4):309–321.

- Engel SA, Glover GH, Wandell BA. Retinotopic Organization in Human Visual Cortex and the Spatial Precision of Functional MRI. Cereb Cortex. 1997;7(2):181–192. doi: 10.1093/cercor/7.2.181.

- Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392(6676):598–601. doi: 10.1038/33402.

- Fox CJ, Iaria G, Barton JJS. Defining the face processing network: Optimization of the functional localizer in fMRI. Hum Brain Mapp. 2009;30(5):1637–1651. doi: 10.1002/hbm.20630.

- Halgren E, Dale AM, Sereno MI, Tootell RBH, Marinkovic K, Rosen BR. Location of human face-selective cortex with respect to retinotopic areas. Hum Brain Mapp. 1999;7(1):29–37. doi: 10.1002/(SICI)1097-0193(1999)7:1<29::AID-HBM3>3.0.CO;2-R.

- Hansen KA, Kay KN, Gallant JL. Topographic organization in and near human visual area V4. J Neurosci. 2007;27(44):11896–911. doi: 10.1523/JNEUROSCI.2991-07.2007.

- Hasson U, Nir Y, Levy I, Fuhrmann G, Malach R. Intersubject synchronization of cortical activity during natural vision. Science. 2004;303(5664):1634. doi: 10.1126/science.1089506.

- Hasson U, Yang E, Vallines I, Heeger DJ, Rubin N. A hierarchy of temporal receptive windows in human cortex. J Neurosci. 2008;28(10):2539. doi: 10.1523/JNEUROSCI.5487-07.2008.

- Hauk O, Johnsrude I, Pulvermüller F. Somatotopic Representation of Action Words in Human Motor and Premotor Cortex. Neuron. 2004:1–7. doi: 10.1016/s0896-6273(03)00838-9.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293(5539):2425–2430. doi: 10.1126/science.1063736.

- Haxby JV, Guntupalli JS, Connolly AC, Halchenko YO, Conroy BR, Gobbini MI, Hanke M, et al. A Common, High-Dimensional Model of the Representational Space in Human Ventral Temporal Cortex. Neuron. 2011;72(2):404–416. doi: 10.1016/j.neuron.2011.08.026.

- Hsu A, Borst A, Theunissen F. Quantifying variability in neural responses and its application for the validation of model predictions. Network: Computation in Neural Systems. 2004;15(2):91–109.

- Iacoboni M, Lieberman MD, Knowlton BJ, Molnar-Szakacs I, Moritz M, Throop CJ, Fiske AP. Watching social interactions produces dorsomedial prefrontal and medial parietal BOLD fMRI signal increases compared to a resting baseline. Neuroimage. 2004;21(3):1167–1173. doi: 10.1016/j.neuroimage.2003.11.013.

- Jenkinson M, Smith S. A global optimisation method for robust affine registration of brain images. Med Image Anal. 2001;5(2):143–156. doi: 10.1016/s1361-8415(01)00036-6.

- Jones E, Oliphant TE, Peterson P. SciPy: Open source scientific tools for Python. 2001.

- Just MA, Cherkassky VL, Aryal S, Mitchell TM. A Neurosemantic Theory of Concrete Noun Representation Based on the Underlying Brain Codes. PLoS ONE. 2010;5(1):e8622. doi: 10.1371/journal.pone.0008622.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17(11):4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kay KN, Naselaris T, Prenger RJ, Gallant JL. Identifying natural images from human brain activity. Nature. 2008;452(7185):352–355. doi: 10.1038/nature06713.

- Konkle T, Oliva A. A real-world size organization of object responses in occipitotemporal cortex. Neuron. 2012;74:1114–1124. doi: 10.1016/j.neuron.2012.04.036.

- Kriegeskorte N, Mur M, Ruff DA, Kiani R, Bodurka J, Esteky H, Tanaka K, et al. Matching Categorical Object Representations in Inferior Temporal Cortex of Man and Monkey. Neuron. 2008;60(6):1126–1141. doi: 10.1016/j.neuron.2008.10.043.

- McCarthy G, Puce A, Gore JC, Allison T. Face-Specific Processing in the Human Fusiform Gyrus. J Cogn Neurosci. 1997;9(5):605–610. doi: 10.1162/jocn.1997.9.5.605.

- Miller GA. WordNet: a lexical database for English. Commun ACM. 1995;38(11):39–41.

- Mitchell TM, Shinkareva SV, Carlson A, Chang K-M, Malave VL, Mason RA, Just MA. Predicting Human Brain Activity Associated with the Meanings of Nouns. Science. 2008;320(5880):1191–1195. doi: 10.1126/science.1152876.

- Naselaris T, Prenger RJ, Kay KN, Oliver M, Gallant JL. Bayesian Reconstruction of Natural Images from Human Brain Activity. Neuron. 2009;63(6):902–915. doi: 10.1016/j.neuron.2009.09.006.

- Nishimoto S, Vu AT, Naselaris T, Benjamini Y, Yu B, Gallant JL. Reconstructing Visual Experiences from Brain Activity Evoked by Natural Movies. Curr Biol. 2011;21(19):1641–1646. doi: 10.1016/j.cub.2011.08.031.

- Oliphant TE. Guide to NumPy. Provo, UT: Brigham Young University; 2006.

- Op de Beeck HP, Haushofer J, Kanwisher NG. Interpreting fMRI data: maps, modules and dimensions. Nat Rev Neurosci. 2008;9(2):123–135. doi: 10.1038/nrn2314.

- Op de Beeck HP, Brants M, Baeck A, Wagemans J. Distributed subordinate specificity for bodies, faces, and buildings in human ventral visual cortex. Neuroimage. 2010;49:3414–3425. doi: 10.1016/j.neuroimage.2009.11.022.

- O’Toole AJ, Jiang F, Haxby JV. Partially Distributed Representations of Objects and Faces in Ventral Temporal Cortex. J Cogn Neurosci. 2005:580–590. doi: 10.1162/0898929053467550.

- Peelen MV, Downing PE. Selectivity for the human body in the fusiform gyrus. J Neurophysiol. 2005;93(1):603. doi: 10.1152/jn.00513.2004.

- Peelen MV, Wiggett AJ, Downing PE. Patterns of fMRI activity dissociate overlapping functional brain areas that respond to biological motion. Neuron. 2006;49(6):815–822. doi: 10.1016/j.neuron.2006.02.004.

- Pelphrey KA, Morris JP, Michelich CR, Allison T, McCarthy G. Functional Anatomy of Biological Motion Perception in Posterior Temporal Cortex: An fMRI Study of Eye, Mouth and Hand Movements. Cereb Cortex. 2005;15(12):1866–1876. doi: 10.1093/cercor/bhi064.

- Poldrack RA, Wagner AD, Prull MW, Desmond JE, Glover GH, Gabrieli JDE. Functional Specialization for Semantic and Phonological Processing in the Left Inferior Prefrontal Cortex. Neuroimage. 1999;10(1):15–35. doi: 10.1006/nimg.1999.0441.

- Raichle ME, MacLeod AM, Snyder AZ, Powers WJ, Gusnard DA, Shulman GL. A default mode of brain function. Proc Natl Acad Sci U S A. 2001;98(2):676. doi: 10.1073/pnas.98.2.676.

- Rajimehr R, Young JC, Tootell RBH. An anterior temporal face patch in human cortex, predicted by macaque maps. Proc Natl Acad Sci U S A. 2009;106(6):1995. doi: 10.1073/pnas.0807304106.

- Schwarzlose R, Baker C, Kanwisher NG. Separate face and body selectivity on the fusiform gyrus. J Neurosci. 2005;25(47):11055–11059. doi: 10.1523/JNEUROSCI.2621-05.2005.

- Smith AT, Greenlee MW, Singh KD, Kraemer FM, Hennig J. The processing of first- and second-order motion in human visual cortex assessed by functional magnetic resonance imaging (fMRI). J Neurosci. 1998;18(10):3816. doi: 10.1523/JNEUROSCI.18-10-03816.1998.