Abstract

Good localization accuracy depends on an auditory spatial map that provides consistent binaural information across frequency and level. This study investigated whether mapping each of a listener's two cochlear implants (CIs) independently contributes to distorted perceptual spatial maps. In a meta-analysis, the interaural level differences necessary to perceptually center sound images were calculated for 127 pitch-matched pairs of electrodes; many pairs needed large current adjustments to be perceptually centered. In a separate experiment, lateralization was also found to be inconsistent across stimulation levels. These findings suggest that auditory spatial maps become distorted in the mapping process, which likely reduces localization accuracy and target-noise separation in bilateral CI users.

Introduction

Cochlear implants (CIs) were originally designed to be implanted in a single ear of deaf or profoundly hearing-impaired individuals. Over the last decade, bilateral implantation has become more prevalent. Using clinical processors, bilateral CI users can show improved speech understanding in quiet (e.g., Dunn et al., 2008), speech understanding in noise (e.g., Litovsky et al., 2006; Loizou et al., 2009), and sound localization (Litovsky et al., 2012) compared to unilateral CI users. With direct electrical stimulation, bilateral CI users can be sensitive to interaural time differences (ITDs) and interaural level differences (ILDs), especially post-lingually deafened implantees, in whom some typical development of the binaural auditory pathways has occurred (Litovsky et al., 2010). Although bilateral implantation is the standard of care in many clinics, there appears to be no uniform bilateral mapping protocol on which clinicians can rely. Mapping protocols, in which audiologists determine the levels delivered to each electrode, affect speech perception; here we argue that they also affect listeners' ability to use binaural cues.

Bilateral CI mapping typically involves determining threshold (T) and/or comfort (C) or maximum comfortable (M) levels at individual electrodes, checking levels across electrodes in a single ear, making adjustments for multi-electrode stimulation, examining sound-field thresholds and speech recognition scores, and adjusting map parameters in response to the patient's subjective comments and speech production. This study was motivated by a common finding in bilateral CI experiments: current levels (in current level units, CUs) chosen to elicit equal loudness at pitch-matched pairs of electrodes do not necessarily produce a centered auditory image. This phenomenon has received little attention and is usually described only briefly. For instance, Litovsky et al. (2010, p. 403) reported that “When pairs of electrodes were subjectively reported to be loudness balanced… the perceived location was typically displaced intra-cranially toward the right or left.”

For diotic presentation to normal-hearing (NH) listeners, equal loudness implies zero ILD and a centered auditory image because the acoustic inputs to the two ears, and their neural representations, are assumed to be exact copies. Thus, when the same stimulus is presented sequentially to the left and right ears over headphones, NH listeners perceive it as equally loud in each ear. Likewise, when the same stimulus is presented to both ears simultaneously over headphones, NH listeners hear it centered in the head because the ILD is zero.

In contrast, for attempted diotic presentation to bilateral CI users, several factors keep the neural representations of simultaneous bilateral electrical stimulation from being exact copies, even if the stimuli presented to the right and left ears are perceived as equally loud. A simple acoustic analogy is that a 200-Hz-wide narrowband noise centered at 1000 Hz may have the same loudness as a 400-Hz-wide narrowband noise centered at 1500 Hz, yet the two may not produce a centered auditory image when presented simultaneously to opposite ears. Factors that may cause interaural differences in the effective bandwidth, center frequency, and underlying neural representation of electrical stimuli include, but are not necessarily limited to, unequal degeneration of spiral ganglion cells in the two ears and differences in implantation depth and placement across the ears (e.g., Kawano et al., 1998). Given these differences, it may be unreasonable to assume that equal loudness in each ear will produce a centered auditory image, because it is very difficult to present a truly diotic stimulus to a bilateral CI user.

This study had two goals. The first was to determine how often off-center auditory images occur when bilateral CI users are mapped, and how large those displacements are. To do so, we performed a meta-analysis of individual maps generated in a number of previous experiments. The second was to investigate how perceived lateralization varies as a function of overall level; we measured the perceived lateralization of simultaneous electrical stimulation at pitch-matched electrodes in post-lingually deafened bilateral CI users. We hypothesized that the auditory images would be highly inconsistent across level because of asymmetries in loudness growth functions or neural representation between the ears. Because neither clinical nor experimental mapping procedures determine the underlying loudness growth functions, it is important to understand how such asymmetries may affect CI users, both in the laboratory during psychoacoustic experiments and outside the laboratory when using their clinical processors.
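To make the hypothesis concrete, the sketch below uses a deliberately simplified toy model that is not from this study: each ear's loudness is assumed to grow as a power function of the fraction of that ear's dynamic range, with both ears normalized to equal loudness at C level. The exponents (1.5 and 2.5) and the normalized loudness difference used as a lateralization proxy are hypothetical choices for illustration only.

```python
def loudness(frac_dr: float, exponent: float) -> float:
    """Toy loudness growth (assumption): a power function of the fraction of an
    ear's dynamic range (0 = T, 1 = C), so loudness equals 1 at C in every ear."""
    return frac_dr ** exponent


def lateral_bias(frac_dr: float, exp_left: float, exp_right: float) -> float:
    """Hypothetical lateralization proxy: normalized interaural loudness
    difference, from -1 (fully left) to +1 (fully right). Requires frac_dr > 0."""
    left = loudness(frac_dr, exp_left)
    right = loudness(frac_dr, exp_right)
    return (right - left) / (right + left)


# Same fraction of each ear's dynamic range, but asymmetric (made-up) growth.
for frac in (0.25, 0.5, 0.75, 1.0):
    print(f"{frac:.0%} DR -> bias {lateral_bias(frac, 1.5, 2.5):+.3f}")
```

With these made-up exponents the proxy is zero at C level, where the two ears were balanced, but becomes increasingly negative (leftward) as the level decreases. This is the kind of level-dependent shift that asymmetric loudness growth would predict, even though the map was loudness balanced at C.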

Archival data meta-analysis

Listeners, equipment, and stimuli

Data were analyzed from ten post-lingually deafened bilateral CI users (127 electrode pairs in total: 7 listeners had 15 pairs, 2 listeners had 10 pairs, and 1 listener had 2 pairs) who had participated in other bilateral CI experiments at the University of Wisconsin–Madison between 2009 and 2011 (e.g., Litovsky et al., 2012). All had Nucleus-type implants with 24 electrodes (Nucleus24, Freedom, or N5, Cochlear Ltd., Sydney, Australia).

Electrical pulses were presented via the Nucleus Implant Communicator (NIC, Cochlear Ltd., Sydney, Australia), which was attached to a personal computer and controlled by custom software run in MATLAB (Mathworks, Natick, MA). The pulses were bilaterally synchronized so that there was zero ITD. The stimuli were trains of biphasic, monopolar electrical pulses with a 25-μs phase duration, a stimulation rate of 100 or 1000 pulses per second (pps), and a duration of 300 or 500 ms. The stimuli had constant amplitude, with no temporal windowing.
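The stimulus parameters above can be summarized in a short data structure. This is a hypothetical sketch, not the custom MATLAB software used in the study: the class and function names are invented, the level in each ear is given in CUs, and any interphase gap is ignored because the text does not specify one. Zero ITD is represented by giving both ears identical pulse onset times.

```python
from dataclasses import dataclass

PHASE_DURATION_S = 25e-6  # 25-us phase duration from the text


@dataclass
class PulseTrain:
    """Constant-amplitude biphasic pulse train for one ear (hypothetical)."""
    rate_pps: float
    duration_s: float
    level_cu: int  # constant amplitude in current level units

    def onsets(self) -> list[float]:
        """Pulse onset times in seconds; no temporal windowing is applied."""
        n_pulses = round(self.rate_pps * self.duration_s)
        return [i / self.rate_pps for i in range(n_pulses)]


def bilateral_pair(rate_pps: float, duration_s: float,
                   left_cu: int, right_cu: int) -> tuple[PulseTrain, PulseTrain]:
    """Left/right trains with identical timing (zero ITD); levels may differ."""
    # Each biphasic pulse (two phases, interphase gap ignored) must fit
    # within one inter-pulse period.
    if 2 * PHASE_DURATION_S >= 1.0 / rate_pps:
        raise ValueError("pulse rate too high for the phase duration")
    return (PulseTrain(rate_pps, duration_s, left_cu),
            PulseTrain(rate_pps, duration_s, right_cu))


left, right = bilateral_pair(100, 0.3, left_cu=180, right_cu=175)
print(len(left.onsets()), left.onsets() == right.onsets())
```

At 100 pps a 300-ms train contains 30 pulses, and at 1000 pps a 500-ms train contains 500; in both cases the 50-μs biphasic pulse is far shorter than the inter-pulse period.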

Procedure

T, C, and M levels were determined separately for the electrodes in each ear by having listeners report the perceived loudness of current levels chosen by an experimenter. The resulting set of levels is called a loudness map. C levels were compared across electrodes by activating them sequentially with an inter-stimulus interval of 100 ms. Any electrode perceived as softer or louder than the others was adjusted so that all electrodes had equally loud C levels. Some, but not all, listeners were also allowed to compare loudness sequentially across ears.¹ No extensive changes (e.g., remapping an entire side) were made to the maps after such a bilateral comparison, because listeners reported that the perceived loudness was equal or very nearly equal between the two ears.

Pitch matching of electrode pairs was performed as outlined in Litovsky et al. (2012). Briefly, a place-pitch magnitude estimation was performed using a method of constant stimuli to estimate pitch-matched electrode pairs. Then a direct left-right electrode pitch comparison was performed using the estimated pairs. If this method did not yield a definitive pitch-matched electrode pair, the pair closest in electrode number was chosen. Pitch matching was performed at up to five different places on each electrode array corresponding to these groups: apical, mid-apical, middle, mid-basal, and basal (roughly electrodes 20, 16, 12, 8, and 4).
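The first, magnitude-estimation stage can be illustrated with a toy selection rule. This sketch assumes that each electrode's place-pitch ratings have already been averaged, picks for each left electrode the right electrode with the nearest mean rating, and breaks ties by electrode-number proximity. The function name, the rating scale, and the tie-break are hypothetical, and the subsequent direct left-right comparison is not modeled.

```python
def candidate_pairs(left_ratings: dict[int, float],
                    right_ratings: dict[int, float]) -> dict[int, int]:
    """Map each left electrode to the right electrode whose mean place-pitch
    rating is closest; ties go to the closer electrode number (assumption)."""
    pairs = {}
    for left_el, left_rating in left_ratings.items():
        pairs[left_el] = min(
            right_ratings,
            key=lambda right_el: (abs(right_ratings[right_el] - left_rating),
                                  abs(right_el - left_el)),
        )
    return pairs


# Made-up mean ratings (0 = lowest pitch, 100 = highest) for illustration.
left = {20: 10.0, 12: 50.0, 4: 90.0}
right = {20: 15.0, 19: 9.0, 12: 55.0, 11: 48.0, 4: 88.0}
print(candidate_pairs(left, right))
```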

Finally, centering was performed by presenting the pulse train simultaneously to both electrodes of the pitch-matched pair. Listeners reported to an experimenter whether or not the stimulus was intracranially centered. Starting from the C levels, the experimenter adjusted the level in one ear, thereby imposing an ILD, until the image was intracranially centered. The difference between the C level from mapping and the level that produced a centered image was recorded as the offset.
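The offset bookkeeping can be sketched as follows. The function name is hypothetical, the sign convention (centering level minus mapped C level, in the adjusted ear) is an assumption, and the %DR conversion uses the dynamic range of the ear that was adjusted, as described in the footnote on %DR.

```python
def centering_offset(mapped_c: float, centered_level: float,
                     t_level: float) -> tuple[float, float]:
    """Return the centering offset in CUs and as %DR of the adjusted ear.

    Offset = level that centered the image - C level from mapping (assumed sign).
    %DR uses that ear's dynamic range, C - T.
    """
    dynamic_range = mapped_c - t_level
    if dynamic_range <= 0:
        raise ValueError("C level must exceed T level")
    offset_cu = centered_level - mapped_c
    return offset_cu, 100.0 * offset_cu / dynamic_range


print(centering_offset(mapped_c=200, centered_level=190, t_level=100))
```

Expressing the same offset in %DR normalizes for the very different dynamic ranges across ears and electrodes, which is why Fig. 1 shows both units.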

Results and discussion

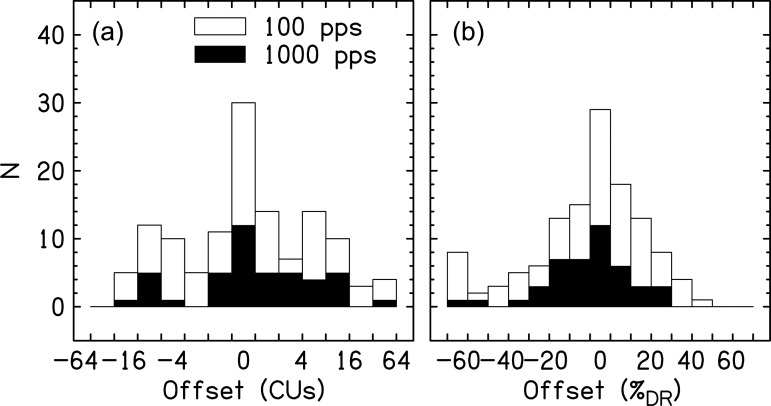

Figure 1 shows the offsets necessary to center the auditory image, in CUs and as a percentage of the dynamic range (%DR, where the dynamic range is defined as C − T).² Of the 127 instances in which pitch-matched electrodes were used to measure intracranial position, only a small fraction produced subjectively centered auditory images. Two subjects had a majority of pairs that produced centered images; the other centered pairs were distributed across listeners. In contrast, the majority of conditions across subjects required an ILD offset. In a few extreme cases, offsets exceeded 60%DR. Some listeners systematically needed offsets in only one direction (right or left), but most listeners had offsets in both directions. Thus, for an individual listener, the direction of the offset was not necessarily consistent across locations on the electrode array. In-depth analysis of factors such as pulse rate, electrode placement, duration, and hearing history did not reveal strong patterns in the direction or magnitude of the offset. Possible explanations for the inconsistency across locations in the array include unequal neural degeneration along the cochlea and electrode placement that leaves different electrodes at different distances from the modiolus (e.g., Kawano et al., 1998). Because the mapping and centering procedures were performed on many different subjects, on several different days (sometimes with long gaps between mappings), and by different experimenters, the measurement error was likely large. The data should therefore be taken as evidence that large offsets occur; more rigorous methods would be necessary to quantify the magnitude of the effect precisely.

Figure 1.

Histograms of the offset necessary to center an auditory image elicited by a 100-pps (open bars) or 1000-pps (closed bars) pulse train, relative to the C levels in both ears, expressed either in CUs or as a percentage of the dynamic range (%DR). Zero offsets are shown in the center. (a) uses bins whose widths change by a factor of 2: offsets of −64 to −32 CUs are shown in the leftmost bar, −32 to −16 CUs in the next bar, …, 0 in the center bar, 1 to 2 CUs in the next bar to the right, 2 to 4 CUs in the bar after that, and so on. (b) uses linear bins of 10%DR.
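The factor-of-2 binning in panel (a) can be reproduced for integer CU offsets with Python's `int.bit_length`. The caption leaves the bin boundaries ambiguous (2 CUs appears in two ranges), so this sketch assumes half-open magnitude bins [1, 2), [2, 4), [4, 8), and so on, mirrored for negative offsets; the function names and example offsets are hypothetical.

```python
from collections import Counter


def log2_bin(offset_cu: int) -> int:
    """Signed bin index: 0 for no offset, +/-k for |offset| in [2**(k-1), 2**k)."""
    if offset_cu == 0:
        return 0
    k = abs(offset_cu).bit_length()  # equals floor(log2|offset|) + 1
    return k if offset_cu > 0 else -k


def bin_range(index: int) -> tuple[int, int]:
    """Offset range (in CUs) covered by a bin; smaller magnitude bound inclusive."""
    if index == 0:
        return (0, 0)
    lo, hi = 2 ** (abs(index) - 1), 2 ** abs(index)
    return (lo, hi) if index > 0 else (-hi, -lo)


def histogram(offsets_cu: list[int]) -> Counter:
    """Count offsets per factor-of-2 bin."""
    return Counter(log2_bin(o) for o in offsets_cu)


counts = histogram([0, 0, 1, 3, -5, -40])  # made-up offsets
for idx in sorted(counts):
    print(bin_range(idx), counts[idx])
```

Logarithmic bins keep the many small offsets resolvable while still showing the rare offsets of several tens of CUs on the same axis.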

Investigating the magnitude of off-centered images as a function of level

Realistic stimuli consist of complex sounds that have ongoing fluctuations in level known as modulations. To understand how the location of a modulated signal might be perceived by a bilateral CI user, we measured the perceived intracranial location of sounds as a function of level and ILD.

Methods

Eight post-lingually deafened bilateral CI listeners participated in this experiment. The equipment was the same as that used in Sec. 2. Stimuli were as described in Sec. 2, except that all were 300 ms long at 100 pps and the overall stimulation level was varied. The stimuli had one of five levels: 25, 50, 75, 100, and 150%DR. With %DR expressed as a proportion, target level = [%DR × (C − T) + T] for levels ≤100%DR and target level = [(%DR − 100%) × (M − C) + C] for levels >100%DR (i.e., 150%DR lies halfway, in CUs, between C and M).³ C levels were intentionally not adjusted to center the image, as would be the case in the clinic. To impose ILDs, the level of stimulation in CUs was reduced in one ear: negative ILDs reduced the level in the right ear and positive ILDs reduced the level in the left ear. If the ILD reduced stimulation in one ear to T level, the remaining CUs were added to the other ear. Typical ILDs were 0, ±2, ±5, and ±10 CUs, though these were adjusted depending on each subject's dynamic range and sensitivity to ILDs. All listeners were tested with at least seven different ILDs.
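The two-region level conversion and the ILD rule above can be written as two small functions. This is a sketch under stated assumptions: %DR is passed in percent and divided by 100, levels are kept as real numbers rather than rounded to whole CUs, and "the remaining CUs" is interpreted as the part of the ILD that could not be removed from the softer ear without going below its T level. The function names are hypothetical.

```python
def target_level(pct_dr: float, t: float, c: float, m: float) -> float:
    """Convert %DR to CUs: T..C spans 0-100%DR, C..M spans 100-200%DR."""
    if pct_dr <= 100:
        return t + (pct_dr / 100.0) * (c - t)
    return c + ((pct_dr - 100) / 100.0) * (m - c)


def apply_ild(left_cu: float, right_cu: float, ild_cu: float,
              t_left: float, t_right: float) -> tuple[float, float]:
    """Impose an ILD by lowering one ear: negative ILD lowers the right ear,
    positive ILD lowers the left ear. A reduction that would fall below T is
    stopped at T and the shortfall is added to the other ear (assumption)."""
    if ild_cu < 0:
        new_right = right_cu + ild_cu  # ild_cu is negative here
        if new_right < t_right:
            left_cu += t_right - new_right
            new_right = t_right
        return left_cu, new_right
    if ild_cu > 0:
        new_left = left_cu - ild_cu
        if new_left < t_left:
            right_cu += t_left - new_left
            new_left = t_left
        return new_left, right_cu
    return left_cu, right_cu


# Hypothetical ear with T = 100, C = 200, M = 220 CUs (both ears alike here).
base = target_level(50, t=100, c=200, m=220)
print(base, target_level(150, t=100, c=200, m=220))
print(apply_ild(base, base, -10, t_left=100, t_right=100))
```

In practice each ear would be converted with its own T, C, and M, so the same %DR generally corresponds to different CUs in the two ears.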

Listeners initiated each trial by pressing a button. They performed a lateralization task: after hearing the stimulus, they indicated the location of the intracranial image on a continuous slider bar superimposed on an image of a head. If multiple images were heard, listeners were instructed to report the position of the most salient, strongest, or loudest source. Responses were translated to a numerical scale from −10 (left ear) through 0 (center) to +10 (right ear). Listeners were allowed to repeat the stimulus presentation, and they ended each trial with a button press. Each condition was presented 20 times in random order. A cumulative Gaussian function was fit to the data at each overall stimulation level. The function had the form

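$$y = A\,\mathrm{erf}\!\left(\frac{x-\mu}{\sqrt{2}\,\sigma}\right) + B \qquad (1)$$

where x is the ILD, y is the lateralization response, and A, σ, μ, and B are the variables optimized to fit the data (A sets the response range, σ the spread, and μ and B the x- and y-offsets).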
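As an illustration of fitting Eq. 1, the sketch below assumes the erf form y = A·erf[(x − μ)/(√2σ)] + B, in which A sets the response range, σ the spread (inverse slope), and μ and B the x- and y-offsets. Because A and B enter linearly, it grid-searches μ and σ and solves A and B by ordinary least squares at each grid point. This is a dependency-free stand-in chosen for transparency, not the authors' fitting routine; a nonlinear least-squares optimizer would normally be used, and the grids and synthetic data are hypothetical.

```python
import math
from itertools import product


def eq1(x, A, sigma, mu, B):
    """Scaled, shifted cumulative Gaussian written with erf (assumed form of Eq. 1)."""
    return A * math.erf((x - mu) / (math.sqrt(2.0) * sigma)) + B


def fit_eq1(ild, resp, mus, sigmas):
    """Grid search over (mu, sigma); closed-form least squares for A and B."""
    n = len(ild)
    y_mean = sum(resp) / n
    best = None
    for mu, sigma in product(mus, sigmas):
        g = [math.erf((x - mu) / (math.sqrt(2.0) * sigma)) for x in ild]
        g_mean = sum(g) / n
        sxx = sum((gi - g_mean) ** 2 for gi in g)
        if sxx == 0.0:
            continue  # degenerate: erf term is constant over the ILDs tested
        A = sum((gi - g_mean) * (yi - y_mean) for gi, yi in zip(g, resp)) / sxx
        B = y_mean - A * g_mean
        sse = sum((yi - (A * gi + B)) ** 2 for gi, yi in zip(g, resp))
        if best is None or sse < best[0]:
            best = (sse, A, sigma, mu, B)
    if best is None:
        raise ValueError("no valid (mu, sigma) on the grid")
    _, A, sigma, mu, B = best
    return A, sigma, mu, B


ilds = [-10, -5, -2, 0, 2, 5, 10]  # CUs
responses = [eq1(x, 8.0, 4.0, 1.0, -0.5) for x in ilds]  # noise-free synthetic data
mus = [0.5 * i for i in range(-10, 11)]
sigmas = [0.5 * i for i in range(1, 21)]
print(fit_eq1(ilds, responses, mus, sigmas))
```

On noise-free synthetic data the grid point matching the generating parameters gives an essentially zero residual, so the fit recovers them; with real responses the grid resolution limits the precision of μ and σ.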

Results and discussion

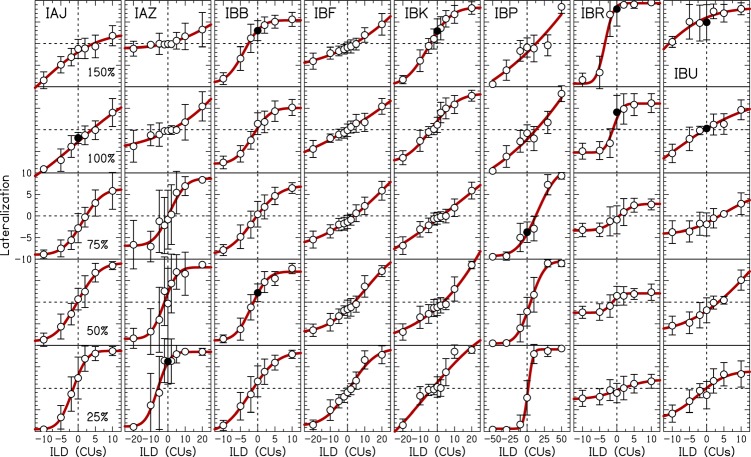

Figure 2 shows the lateralization data for each listener as a function of overall level, with fits shown by the curved lines. At all levels, all listeners showed a shift in lateral position from left to right as the ILD was changed from negative to positive. Because the overall level was randomized from trial to trial, the level in a single ear was not a reliable cue; these data therefore demonstrate that the listeners were relying on binaural level differences when performing this task.

Figure 2.

(Color online) Lateralization data as a function of ILD at different stimulation levels. Individual listeners are shown in different panels. Symbols represent the average response and error bars show ±1 standard deviation. Filled symbols show where the error bars at 0-CU ILD do not overlap zero lateralization. Solid curved lines are cumulative Gaussian fits to the average data.

Lateralization data were inconsistent within individual listeners. For example, listener IAJ was biased to the right at low levels and to the left at high levels. Seven of the eight listeners had at least one level at which the 0-CU ILD was perceived as off-center, as judged by whether the error bar at 0-CU ILD excluded zero lateralization (filled symbols in Fig. 2). The fits of Eq. 1 show highly variable x- and y-offsets, response ranges, and slopes across levels within individual listeners. Therefore, for a modulated stimulus like speech, different portions of the signal might be perceived at different locations. Such uncontrolled changes in perceived lateralization may partially explain the relatively poor sound localization (Majdak et al., 2011) and spatial release from masking (Loizou et al., 2009) of CI users compared to NH listeners.
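The error-bar criterion used for the filled symbols can be expressed directly: a response distribution at 0-CU ILD counts as off-center when its mean ± 1 standard deviation excludes zero. This sketch assumes the sample standard deviation and uses made-up responses on the −10 to +10 scale; the function names are hypothetical, and the criterion is descriptive rather than a formal significance test.

```python
from statistics import mean, stdev


def is_off_center(responses: list[float]) -> bool:
    """True if mean +/- 1 sample SD at 0-CU ILD does not overlap 0 (center)."""
    return abs(mean(responses)) > stdev(responses)


def off_center_levels(by_level: dict[int, list[float]]) -> list[int]:
    """Stimulation levels (%DR) whose 0-CU ILD responses are off-center."""
    return sorted(level for level, resp in by_level.items() if is_off_center(resp))


# Made-up 0-CU ILD responses for one listener at two levels.
example = {25: [3.0, 4.0, 5.0, 4.0], 150: [-2.0, 1.0, 2.0, -1.0]}
print(off_center_levels(example))
```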

Lateralization data were also inconsistent across listeners. For example, the 150%DR condition at the top of each panel shows that some listeners were biased to the left and others to the right. In Fig. 2, the differences in perceived lateralization across levels that are present at 0-CU ILD become larger as the magnitude of the ILD increases. Listener IAZ used the full range of responses at 75%DR but only a small range at 150%DR; listener IBR showed the opposite pattern. Like IAZ, most listeners used the largest range of responses at low levels, because the ILDs reduced the level in one ear to T.

Summary

To localize a sound source effectively, the auditory system must relate ITDs and ILDs to spatial locations in a systematic way without a priori information about the level of the source. Current CI technology does not reliably encode ITDs, and research has shown that bilateral CI users rely on ILDs for sound source localization (Grantham et al., 2008; Aronoff et al., 2010). The simplest spatial location to encode would be directly in front of the listener, where the ITD and ILD are both zero. However, the data from this study demonstrate that spatial maps become distorted as the level of a sound source changes, even when the stimuli are precisely controlled using bilaterally synchronized direct stimulation (see Fig. 2). These level-dependent spatial-map distortions, within and across CI listeners, appear larger than those that occur in NH listeners (Yost, 1981), although a true quantitative comparison between the two groups is difficult. The distortions arise from a lateralization percept that changes with level for different ILDs. Because realistic stimuli are modulated, it is reasonable to expect that basic CI signal processing introduces time-varying ILDs. This may provide insight into the known gap between the binaural capabilities of NH and CI listeners (e.g., Loizou et al., 2009; Majdak et al., 2011), particularly since bilateral CI users rely mostly on ILDs for sound localization (Grantham et al., 2008; Aronoff et al., 2010). The introduction of spurious ILDs has also been examined in NH and hearing-impaired listeners. Musa-Shufani et al. (2006) showed that dynamic compression applied independently at the two ears increases ILD just-noticeable differences and sound localization errors at high frequencies, but has little effect on ITD just-noticeable differences and sound localization errors at low frequencies.
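A worked example shows why compression applied independently at each ear makes ILDs level dependent. The sketch assumes a simple static compressor (unity gain below a 50-dB threshold, 3:1 ratio above it); these parameter values and the function names are hypothetical and do not describe any particular CI processor or the compression studied by Musa-Shufani et al. (2006).

```python
def compress_db(level_db: float, threshold_db: float = 50.0,
                ratio: float = 3.0) -> float:
    """Static compressor: output = input below threshold, slope 1/ratio above."""
    if level_db <= threshold_db:
        return level_db
    return threshold_db + (level_db - threshold_db) / ratio


def output_ild(left_db: float, right_db: float) -> float:
    """ILD (right minus left, dB) after compressing each ear independently."""
    return compress_db(right_db) - compress_db(left_db)


# The same 10-dB input ILD at two overall levels.
print(round(output_ild(60.0, 70.0), 2))  # both ears above threshold
print(round(output_ild(45.0, 55.0), 2))  # left ear below threshold
```

Under these assumptions a constant 10-dB input ILD becomes about 3.3 dB when both ears are above threshold but about 6.7 dB when only one ear is, so a change in overall level alone changes the ILD delivered to the listener.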

Clinical mapping procedures for CIs use loudness as the primary percept for determining stimulation levels. Our archival meta-analysis showed that bilaterally equal loudness does not necessarily produce a centered auditory image in an experimental mapping procedure (i.e., a perceived lateralization was often introduced; Fig. 1). Because this experimental procedure was based on the general principles used in a clinical mapping session, such a result likely also occurs during clinical mapping. On the one hand, it seems reasonable that clinicians could remove some of the across-level inconsistency in lateralization by measuring more points on each loudness growth function. On the other hand, the data from this study suggest that loudness perception is fairly independent of intracranial sound source location, so loudness measurements alone may not be sufficient.

Because there is no standardized clinical bilateral CI mapping protocol, a bilateral mapping protocol might consider both location and loudness percepts. However, this would greatly increase the time needed to map a pair of CIs. A more practical solution would therefore be to incorporate better ITD encoding in bilateral CIs, because ITDs are monotonically related to azimuthal location and are relatively consistent across frequency. ILDs, in contrast, have frequency-dependent, non-monotonic relationships with azimuth (Macaulay et al., 2010). ILDs would also be more affected than ITDs by basic CI processing such as automatic gain control and front-end compression. Therefore, having CI users rely solely on ILDs for sound localization places them at a great disadvantage relative to people who can use both ITDs and ILDs. Last, this study used pitch-matched pairs of electrodes, but pitch matching is not performed in clinical mapping procedures. These results may therefore differ when the insertion depths of the two arrays differ substantially, which a bilateral clinical mapping protocol may need to take into account.

Acknowledgments

We would like to thank Cochlear Ltd. (Sydney, Australia) for providing the testing equipment and technical support. We would like to thank Melanie Buhr-Lawler for discussions on clinical mapping. We would like to thank the three anonymous reviewers for constructive comments on this work. This work was supported by NIH Grant Nos. K99/R00 DC010206 (M.J.G.), R01 DC003083 (R.Y.L.), and P30 HD03352 (Waisman Center core grant).

Footnotes

¹ It was not the original intent of these studies to investigate differences in mapping procedures. The mapping process occurred much as it does in the clinic, sometimes with different people performing the mapping, each with their own style and method of determining these levels. This sometimes, but not always, included a sequential loudness comparison across the ears. Given that only small changes were made after the across-ear comparison, we do not think that this makes the data uninterpretable.

² Because the DR differed between the ears, %DR was defined using the DR of the ear that was manipulated to center the image.

³ We defined DR over two regions (below and above C level) because these two regions show drastically different loudness growth. For levels well below C, loudness growth functions are relatively shallow; for levels at C and above, they are relatively steep.

References and links

- Aronoff, J. M., Yoon, Y. S., Freed, D. J., Vermiglio, A. J., Pal, I., and Soli, S. D. (2010). “The use of interaural time and level difference cues by bilateral cochlear implant users,” J. Acoust. Soc. Am. 127, EL87–EL92.

- Dunn, C. C., Tyler, R. S., Oakley, S., Gantz, B. J., and Noble, W. (2008). “Comparison of speech recognition and localization performance in bilateral and unilateral cochlear implant users matched on duration of deafness and age at implantation,” Ear Hear. 29, 352–359. 10.1097/AUD.0b013e318167b870

- Grantham, D. W., Ashmead, D. H., Ricketts, T. A., Haynes, D. S., and Labadie, R. F. (2008). “Interaural time and level difference thresholds for acoustically presented signals in post-lingually deafened adults fitted with bilateral cochlear implants using CIS+ processing,” Ear Hear. 29, 33–44.

- Kawano, A., Seldon, H. L., Clark, G. M., Ramsden, R. T., and Raine, C. H. (1998). “Intracochlear factors contributing to psychophysical percepts following cochlear implantation,” Acta Oto-Laryngol. 118, 313–326.

- Litovsky, R., Parkinson, A., Arcaroli, J., and Sammeth, C. (2006). “Simultaneous bilateral cochlear implantation in adults: A multicenter clinical study,” Ear Hear. 27, 714–731. 10.1097/01.aud.0000246816.50820.42

- Litovsky, R. Y., Jones, G. L., Agrawal, S., and van Hoesel, R. (2010). “Effect of age at onset of deafness on binaural sensitivity in electric hearing in humans,” J. Acoust. Soc. Am. 127, 400–414. 10.1121/1.3257546

- Litovsky, R. Y., Goupell, M. J., Godar, S., Grieco-Calub, T., Jones, G. L., Garadat, S. N., Agrawal, S., Kan, A., Todd, A., Hess, C., and Misurelli, S. (2012). “Studies on bilateral cochlear implants at the University of Wisconsin's Binaural Hearing and Speech Laboratory,” J. Am. Acad. Audiol. 23, 476–494.

- Loizou, P. C., Hu, Y., Litovsky, R., Yu, G., Peters, R., Lake, J., and Roland, P. (2009). “Speech recognition by bilateral cochlear implant users in a cocktail-party setting,” J. Acoust. Soc. Am. 125, 372–383. 10.1121/1.3036175

- Macaulay, E. J., Hartmann, W. M., and Rakerd, B. (2010). “The acoustical bright spot and mislocalization of tones by human listeners,” J. Acoust. Soc. Am. 127, 1440–1449. 10.1121/1.3294654

- Majdak, P., Goupell, M. J., and Laback, B. (2011). “Two-dimensional localization of virtual sound sources in cochlear-implant listeners,” Ear Hear. 32, 198–208. 10.1097/AUD.0b013e3181f4dfe9

- Musa-Shufani, S., Walger, M., von Wedel, H., and Meister, H. (2006). “Influence of dynamic compression on directional hearing in the horizontal plane,” Ear Hear. 27, 279–285. 10.1097/01.aud.0000215972.68797.5e

- Yost, W. A. (1981). “Lateral position of sinusoids presented with interaural intensive and temporal differences,” J. Acoust. Soc. Am. 70, 397–409. 10.1121/1.386775