Abstract

Psychophysical, clinical, and imaging evidence suggests that consonant and vowel sounds have distinct neural representations. This study tests the hypothesis that consonant and vowel sounds are represented on different timescales within the same population of neurons by comparing behavioral discrimination with neural discrimination based on activity recorded in rat inferior colliculus and primary auditory cortex. Performance on 9 vowel discrimination tasks was highly correlated with neural discrimination based on spike count and was not correlated when spike timing was preserved. In contrast, performance on 11 consonant discrimination tasks was highly correlated with neural discrimination when spike timing was preserved and not when spike timing was eliminated. These results suggest that in the early stages of auditory processing, spike count encodes vowel sounds and spike timing encodes consonant sounds. These distinct coding strategies likely contribute to the robust nature of speech sound representations and may help explain some aspects of developmental and acquired speech processing disorders.

Keywords: multiplexed temporal coding, processing streams, rate code, spatiotemporal activity pattern

Introduction

A diverse set of observations suggests that consonants and vowels are processed differently by the central auditory system. Compared with vowels, consonant perception 1) matures later (Polka and Werker 1994), 2) is more categorical (Fry et al. 1962; Pisoni 1973), 3) is less sensitive to spectral degradation (Shannon et al. 1995; Xu et al. 2005), 4) is more sensitive to temporal degradation (Shannon et al. 1995; Kasturi et al. 2002; Xu et al. 2005), and 5) is more useful for parsing the speech stream into words (Bonatti et al. 2005; Toro et al. 2008). Clinical studies, imaging, and stimulation experiments also suggest that consonants and vowels are differentially processed. Brain damage can impair consonant perception while sparing vowel perception and vice versa (Caramazza et al. 2000). Electrical stimulation of the temporal cortex that impaired consonant discrimination did not impair vowel discrimination (Boatman et al. 1994, 1995, 1997). Human brain imaging studies also suggest that consonant and vowel sounds are processed differently (Fiez et al. 1995; Seifritz et al. 2002; Poeppel 2003; Carreiras and Price 2008). Unfortunately, none of these studies provides sufficient resolution to document how the neural representations of consonant and vowel sounds differ. In this study, we recorded action potentials from inferior colliculus (IC) and primary auditory cortex (A1) neurons of rats to test the hypothesis that consonant and vowel sounds are represented by neural activity patterns occurring on different timescales (Poeppel 2003).

Previous neurophysiology studies in animals revealed that both the number of spikes generated by speech sounds and the relative timing of these spikes contain significant and complementary information about important speech features. From auditory nerve to A1, the spectral shape of vowel and fricative sounds can be identified in plots of evoked spike count as a function of characteristic frequency (Sachs and Young 1979; Delgutte and Kiang 1984a, 1984b; Ohl and Scheich 1997; Versnel and Shamma 1998). Information based on spike timing can be used to identify the formants (peaks of spectral energy) of steady state vowels (Young and Sachs 1979; Palmer 1990). Relative spike timing can also be used to identify the onset spectrum, formant transitions, and voice onset time of many consonants (Steinschneider et al. 1982, 1995, 1999, 2005; Miller and Sachs 1983; Carney and Geisler 1986; Deng and Geisler 1987; Engineer et al. 2008). Behavioral discrimination of consonant sounds is highly correlated with neural discrimination using spike timing information collected in A1 (Engineer et al. 2008). However, no study has directly compared potential coding strategies for consonants and vowels with behavior.

In this study, we trained 19 rats to discriminate consonant or vowel sounds and compared behavioral and neural discrimination using activity recorded from IC and A1. It is reasonable to expect that sounds that evoke similar neural activity patterns will be more difficult to discriminate than sounds that evoke more distinct patterns. We tested whether the neural code for speech sounds was best described as the average number of action potentials generated by a population of neurons, as the average spatiotemporal pattern across a population, or as both, depending on the particular stimulus discrimination. Our results suggest that the brain represents consonant and vowel sounds on distinctly different timescales. By comparing responses in IC and A1, we confirmed that speech sound processing, like sensory processing generally, occurs as a gradual transformation from acoustic information to behavioral category (Chechik et al. 2006; Hernández et al. 2010; Tsunada et al. 2011).

Materials and Methods

Speech Stimuli

Twenty-eight English consonant–vowel–consonant words were recorded in a double-walled soundproof booth. Twenty of the sounds ended in “ad” (/æd/ as in “sad”) and were identical to the sounds used in our earlier study (Engineer et al. 2008). The initial consonants of these sounds differ in voicing, place of articulation, or manner of articulation. The remaining 8 words began with either “s” or “d” and contained the vowels /ɛ/, /ʌ/, /i/, and /u/ (as in “said,” “sud,” “seed,” and “sood”; Fig. 1). To confirm that vowel discrimination was not based on coarticulation during the preceding “s” or “d” sounds (Soli 1981), we also tested vowel discrimination on a set of 5 sounds in which the “s” was replaced with a 10 ms burst of white noise (60 dB sound pressure level [SPL], 1–32 kHz). These noise burst–vowel–consonant syllables were tested only behaviorally. All sounds in this study ended in the terminal consonant “d.” As in our earlier study, the fundamental frequency and spectrum envelope of the recorded speech sounds were shifted up in frequency by one octave using the STRAIGHT vocoder to better match the rat hearing range (Kawahara 1997; Engineer et al. 2008). The vocoder does not alter the temporal envelope of the sounds. A subset of rats discriminated “dad” from a version of “dad” in which the pitch was shifted one octave lower. The intensity of all speech sounds was adjusted so that the intensity during the most intense 100 ms was 60 dB SPL.

Figure 1.

Spectrograms of the 5 vowel sounds with 2 initial consonants. The initial recordings were shifted one octave higher to match the rat hearing range using the STRAIGHT vocoder (Kawahara 1997).

Operant Training Procedure and Analysis

Nineteen rats were trained using an operant go/no-go procedure to discriminate words differing in their initial consonant sound or in their vowel sound. Each rat was trained for 2 sessions a day (1 h each), 5 days per week. Rats first underwent a shaping period during which they were taught to press the lever. Each time the rat was in close proximity to the lever, the rat heard the target stimulus (“dad” or “sad”) and received a food pellet. Eventually, the rat began to press the lever without assistance. After each lever press, the rat heard the target sound and received a pellet. The shaping period lasted until the rat reached the criterion of obtaining at least 100 pellets per session for 2 consecutive sessions. This stage lasted 3.5 days on average. Following the shaping period, rats began a detection task in which they learned to press the lever each time the target sound was presented. Silent periods were randomly interleaved with the target sounds during each training session. Sounds were initially presented every 10 s, and the rat was given an 8 s window to press the lever. The sound interval was gradually decreased to 6 s, and the lever press window was decreased to 3 s. Once rats reached the performance criterion of d′ ≥ 1.5 for 10 sessions, they advanced to the discrimination task. The quantity d′ is a measure of the discriminability of 2 sets of samples based on signal detection theory (Green and Swets 1966).
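In signal detection theory, d′ is commonly computed as z(H) − z(F), where H is the hit rate on target trials, F is the false alarm rate on catch or distracter trials, and z is the inverse of the standard normal cumulative distribution. The following is a minimal Python sketch of that calculation; the function name, its trial-count arguments, and the log-linear correction that keeps rates of exactly 0 or 1 finite are assumptions for illustration, not necessarily the procedure used in this study.

```python
from statistics import NormalDist


def d_prime(hits, n_target, false_alarms, n_catch):
    """Sensitivity index d' = z(hit rate) - z(false alarm rate).

    hits / n_target: lever presses on target trials / number of target trials.
    false_alarms / n_catch: presses on catch (or distracter) trials / their count.
    Adding 0.5 to each count and 1 to each total (a log-linear correction, assumed
    here) avoids infinite z scores when a rate would otherwise be 0 or 1.
    """
    z = NormalDist().inv_cdf  # inverse standard normal CDF
    hit_rate = (hits + 0.5) / (n_target + 1)
    fa_rate = (false_alarms + 0.5) / (n_catch + 1)
    return z(hit_rate) - z(fa_rate)


# Hypothetical session: 45 of 50 targets pressed, 10 of 50 catch trials pressed.
print(round(d_prime(45, 50, 10, 50), 2))
```

Equal hit and false alarm rates give d′ = 0, and the value grows as responses to the target become more selective.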

During discrimination training, rats learned to discriminate the target (“dad” or “sad”) from the distracter sounds, which differed in initial consonant or vowel. Training took place in a soundproof double-walled training booth that included a house light, video camera for monitoring, speaker, and a cage that included a lever, lever light, and pellet receptacle. Trials began every 6 s, and silent catch trials were randomly interleaved 20–33% of the time. Rats were only rewarded for lever presses to the target stimulus. Pressing the lever at any other time resulted in a timeout during which the house light was extinguished and the training program paused for a period of 6 s. Rats were food deprived to motivate behavior but were fed on days off to maintain between 80% and 90% ad lib body weight.

Each discrimination task lasted for 20 training sessions over 2 weeks. Eight rats were trained to discriminate between vowel sounds. During the first task, 4 rats were required to press to “sad” and not to “said,” “sud,” “seed,” or “sood.” The other 4 rats were required to press to “dad” and not to “dead,” “dud,” “deed,” or “dood.” After 2 weeks of training, the tasks were switched and the rats were trained to discriminate the vowels with the initial consonant switched (“d” or “s”). After 2 additional weeks of training, both groups of rats were required to press to either “sad” or “dad” and reject any of the 8 other sounds (Fig. 1). For 2 sessions during this final training stage, the stimuli were replaced with noise burst–vowel–consonant syllables (described above).

Eleven rats were trained to discriminate between consonant sounds. Six rats performed each of 4 different consonant discrimination tasks for 2 weeks each (“dad” vs. “sad,” “dad” vs. “tad,” “rad” vs. “lad,” and “dad” vs. “bad” and “gad”), and 5 rats performed each of 4 different discrimination tasks for 2 weeks each (“dad” vs. “dad” with a lower pitch, “mad” vs. “nad,” “shad” vs. “chad” and “jad,” and “shad” vs. “fad,” “sad,” and “had”). These 11 consonant-trained rats were the same rats as in our earlier study (Engineer et al. 2008).

Recording Procedure

Multiunit and single unit responses from the right inferior colliculus (IC, n = 187 recording sites) or primary auditory cortex (A1, n = 445 recording sites) of 23 experimentally naive female Sprague–Dawley rats were obtained in a soundproof recording booth. During acute recordings, rats were anesthetized with pentobarbital (50 mg/kg) and received supplemental dilute pentobarbital (8 mg/mL) every half hour to 1 h as needed to maintain areflexia. Heart rate and body temperature were monitored throughout the experiment. For most cortical recordings, 4 Parylene-coated tungsten microelectrodes (1–2 MΩ, FHC Inc., Bowdoin, ME) were simultaneously lowered to 600 μm below the surface of the right primary auditory cortex (layer 4/5). Electrode penetrations were marked using blood vessels as landmarks. The consonant responses from these 445 A1 recording sites were also used in our earlier study (Engineer et al. 2008).

For collicular recordings, 1 or 2 Parylene-coated tungsten microelectrodes (1–2 MΩ, FHC Inc.) were introduced through a hole located 9 mm posterior and 1.5 mm lateral to Bregma. Using a micromanipulator, electrodes were lowered at 200 μm intervals along the dorsal–ventral axis to a depth between 1100 and 5690 μm. Blood vessels were used as landmarks as the electrode placement was changed in the caudal–rostral direction. Based on latency analysis and frequency topography, the majority of IC recordings are likely to be from the central nucleus (Palombi and Caspary 1996).

At each recording site, 25 ms tones were played at 81 frequencies (1–32 kHz) at 16 intensities (0–75 dB) to determine the characteristic frequency of each site. All tones were separated by 560 ms and randomly interleaved. Sounds were presented approximately 10 cm from the left ear of the rat. Twenty-nine 60 dB speech stimuli were randomly interleaved and presented every 2000 ms. Each sound was repeated 20 times. Stimulus generation, data acquisition, and spike sorting were performed with Tucker-Davis hardware (RP2.1 and RX5) and software (Brainware). Protocols and recording procedures were approved by the University of Texas at Dallas IACUC.
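One way to derive a characteristic frequency (CF) from this tone grid is sketched below. It assumes that the 81 frequencies are spaced logarithmically (1/16 octave apart across the 5 octaves from 1 to 32 kHz) and that the 16 intensities fall in 5 dB steps, and it takes CF as the frequency that drives the site at the lowest intensity. The response criterion and the tie-breaking rule are hypothetical choices, not the study's documented procedure.

```python
import math


def tone_grid(n_freqs=81, f_lo_hz=1000.0, f_hi_hz=32000.0, n_levels=16, max_db=75.0):
    """Return (frequencies, levels); log spacing and 5 dB steps are assumptions."""
    octaves = math.log2(f_hi_hz / f_lo_hz)  # 5 octaves for 1-32 kHz
    freqs = [f_lo_hz * 2 ** (octaves * i / (n_freqs - 1)) for i in range(n_freqs)]
    levels = [max_db * j / (n_levels - 1) for j in range(n_levels)]  # 0, 5, ..., 75 dB
    return freqs, levels


def characteristic_frequency(response, freqs, levels, criterion):
    """CF = frequency that evokes a response at the lowest sound level.

    response[j][i] is the driven spike count at levels[j] and freqs[i].
    `criterion` is a hypothetical threshold (e.g., spontaneous mean + k SD).
    If several frequencies cross threshold at that level, the one with the
    largest response is chosen. Returns None if no tone reaches criterion.
    """
    for j in sorted(range(len(levels)), key=lambda k: levels[k]):
        above = [i for i in range(len(freqs)) if response[j][i] > criterion]
        if above:
            return freqs[max(above, key=lambda i: response[j][i])]
    return None
```

Under these assumptions, every 16th frequency is one octave above the previous one (1, 2, 4, 8, 16, and 32 kHz), which makes the grid convenient for expressing CF differences in octaves.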

Data Analysis

The distinctness of the spatial activity patterns generated by each pair of sounds (e.g., “dad” and “dud”) was quantified as the average absolute difference in the number of spikes evoked by each sound across the population of neurons recorded. As in our earlier study, the analysis window for consonant sounds was 40 ms long beginning at sound onset (Engineer et al. 2008). The analysis window for vowel sounds was 300 ms long and began immediately at vowel onset.
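The distinctness measure above can be written as a short Python sketch. It assumes that each sound's response has already been reduced to one trial-averaged value per recording site (spike count or rate within the relevant analysis window), listed in the same site order for both sounds; the function name is hypothetical.

```python
import math
from statistics import mean, stdev


def spatial_distinctness(rates_a, rates_b):
    """Mean absolute site-by-site difference between two sounds' responses.

    rates_a[k], rates_b[k]: trial-averaged response of site k to sounds a and b.
    Returns (mean absolute difference, standard error of the mean).
    """
    if len(rates_a) != len(rates_b):
        raise ValueError("both sounds must be measured at the same sites")
    diffs = [abs(a - b) for a, b in zip(rates_a, rates_b)]
    sem = stdev(diffs) / math.sqrt(len(diffs)) if len(diffs) > 1 else 0.0
    return mean(diffs), sem


# Hypothetical 3-site example: both sounds evoke the same population mean (20),
# yet the spatial patterns differ, so the distinctness is well above zero.
print(spatial_distinctness([10, 20, 30], [30, 20, 10]))
```

Taking the absolute value before averaging is what lets this measure separate two sounds that evoke the same total activity distributed differently across the frequency map.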

A nearest-neighbor classifier was used to quantify neural discrimination accuracy based on single-trial activity patterns (Foffani and Moxon 2004; Schnupp et al. 2006; Engineer et al. 2008; Malone et al. 2010). The classifier binned activity using bin widths of 1–400 ms and then compared the response on each single trial with the average activity pattern (poststimulus time histogram, PSTH) evoked by each of the speech stimuli presented. PSTH templates were constructed from 19 presentations because the trial being classified was excluded from the average activity pattern, so that it could not bias the comparison. This model assumes that the brain region reading out the information in the spike trains has previously heard each of the sounds 19 times and attempts to identify which of the possible sounds was most likely to have generated the trial under consideration. Euclidean distance was used to determine how similar each response was to the average activity evoked by each of the sounds. The classifier guesses that the single-trial pattern was generated by the sound whose average pattern it most closely resembles (i.e., minimum Euclidean distance). The response onset to each sound was defined as the time point at which neural activity exceeded the spontaneous firing rate by 3 standard deviations. Error bars indicate standard error of the mean. Pearson's correlation coefficient was used to examine the relationship between neural and behavioral discrimination on the 11 consonant tasks and 9 vowel tasks.
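The classifier described above can be sketched in Python for a single recording site. The data layout (a dictionary mapping each sound to its trials, each trial a list of spike times in ms from analysis-window onset), the function names, and the tie-breaking rule are assumptions; a multisite version could concatenate the binned responses of all sites before computing distances.

```python
import math


def bin_spikes(spike_times_ms, window_ms, bin_ms):
    """Count spikes (times in ms from window onset) in consecutive bins."""
    n_bins = max(1, math.ceil(window_ms / bin_ms))
    counts = [0] * n_bins
    for t in spike_times_ms:
        if 0 <= t < window_ms:
            counts[min(int(t // bin_ms), n_bins - 1)] += 1
    return counts


def classify_trials(trials, window_ms, bin_ms):
    """Leave-one-out nearest-neighbor classification of single trials.

    trials: {sound label: [trial, ...]}, each trial a list of spike times (ms).
    Each trial is compared with every sound's mean PSTH, leaving the trial out
    of its own sound's template, and assigned to the sound at the smallest
    Euclidean distance (ties go to the sound listed first, an arbitrary choice).
    Returns the fraction of trials classified correctly.
    """
    if any(len(trs) < 2 for trs in trials.values()):
        raise ValueError("each sound needs at least 2 trials")
    binned = {s: [bin_spikes(tr, window_ms, bin_ms) for tr in trs]
              for s, trs in trials.items()}
    # Summed PSTH per sound, so the current trial can be subtracted out cheaply.
    sums = {s: [sum(col) for col in zip(*vecs)] for s, vecs in binned.items()}
    n_correct = n_total = 0
    for true_sound, vecs in binned.items():
        for vec in vecs:
            best_sound, best_dist = None, math.inf
            for sound, summed in sums.items():
                n = len(binned[sound])
                if sound == true_sound:  # template from the other n - 1 trials
                    template = [(c - v) / (n - 1) for c, v in zip(summed, vec)]
                else:
                    template = [c / n for c in summed]
                dist = math.dist(vec, template)
                if dist < best_dist:
                    best_sound, best_dist = sound, dist
            n_correct += best_sound == true_sound
            n_total += 1
    return n_correct / n_total
```

With 20 presentations per sound, each own-sound template averages the remaining 19 trials, as described above. Setting `bin_ms` equal to the window length collapses every trial to a single spike count, which corresponds to the spike-count version of the classifier, whereas 1 ms bins preserve spike timing.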

Results

Rats were trained on a go/no-go task that required them to discriminate between English monosyllabic words with different vowels. The rats were rewarded for pressing a lever in response to the target word (“dad” or “sad”) and punished with a brief timeout for pressing the lever in response to any of the 4 distracter words (“dead,” “dud,” “deed,” and “dood” or “said,” “sud,” “seed,” and “sood”; Fig. 1). Performance was well above chance after only 3 days of discrimination training (P < 0.005, Fig. 2a). By the end of training, rats were correct on 85% of trials (Fig. 2c). The pattern of false alarms suggests that rats discriminate between vowels by identifying differences in the vowel power spectra (Fig. 3a,b). Vowels with similar spectral peaks (e.g., “dad” vs. “dead”; Peterson and Barney 1952) were difficult for rats to discriminate, and vowels with distinct spectral peaks (e.g., “dad” vs. “deed”) were easy to discriminate (Fig. 3c,d). For example, rats correctly discriminated “dad” from “dead” on 58 ± 2% of trials but correctly discriminated “dad” from “deed” on 78 ± 2% of trials (P < 0.02). Discrimination of vowel pairs was highly correlated with the distance between the sounds in the feature space formed by the peaks of the first and second formants (R² = 0.77, P < 0.002, Fig. 3a,b) and with the Euclidean distance between the complete power spectra of the vowels (R² = 0.79, P < 0.001). These results suggest that rats, like humans, discriminate between vowel sounds by comparing formant peaks (Delattre et al. 1952; Peterson and Barney 1952; Fry et al. 1962).
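The formant-space distance and its correlation with behavior can be sketched as follows. The sketch assumes that the distance is Euclidean in (log₂ F1, log₂ F2) coordinates, i.e., measured in octaves as in Figure 3b, and that R² is the squared Pearson correlation between distance and percent correct across vowel pairs; the function names are hypothetical, and computing a P value (e.g., with a t test on r) is not shown.

```python
import math


def formant_distance_octaves(f_a, f_b):
    """Euclidean distance between two vowels in (log2 F1, log2 F2) space.

    f_a, f_b: (F1, F2) peak frequencies in Hz. The distance depends only on
    frequency ratios, so shifting every formant up by one octave (as the
    vocoder did) leaves it unchanged.
    """
    return math.hypot(math.log2(f_a[0] / f_b[0]), math.log2(f_a[1] / f_b[1]))


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Usage: compute one distance per target-distracter pair, then correlate
# those distances with percent correct for the same pairs; R^2 = r ** 2.
```

Working in octaves rather than hertz means that a given formant ratio counts equally at low and high frequencies, which suits a comparison across vocoder-shifted stimuli.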

Figure 2.

Vowel sound discrimination by rats. Performance was evaluated using a go/no-go discrimination task requiring rats to lever press to the word “dad” or “sad” while ignoring words with other vowel sounds. (a) During the first stage of training, half of the rats learned to discriminate between words beginning with “d” (e.g., “dad” vs. “dead,” “dud,” “deed,” or “dood”) and the other half learned to discriminate vowel sounds of words beginning with “s.” (b) During the second stage of training, the stimuli presented to the 2 groups of rats were switched. Performance was significantly above chance on the first day with the new stimuli (P < 0.05). (c) During the third stage of training, both groups were required to lever press to either “dad” or “sad” while ignoring the 8 words with other vowel sounds. Open circles indicate performance on a modified set of stimuli in which the “s” sound was replaced with a noise burst to prevent discrimination based on coarticulation cues in the initial consonant sounds. Discrimination of these sounds was significantly above chance.

Figure 3.

Lever press rates for each of the 5 vowel sounds tested. (a) Peak frequency of the first and second formants of each sound tested, plotted in hertz. Note that the original speech sounds were shifted one octave higher to match the rat hearing range using the STRAIGHT vocoder (Kawahara 1997). (b) The Euclidean distance between vowels in F1–F2 space (in octaves) is well correlated with behavioral discrimination. (c and d) Rats pressed the lever in response to the target vowel /æ/ (as in “sad” and “dad”) significantly more than for any of the other vowel sounds. The solid line indicates how often rats pressed the lever during silent catch trials. The dashed lines and the error bars represent standard error of the mean. Behavioral responses were pooled from all 10 days of training.

We also tested whether rats could quickly generalize the task to a new set of stimuli with a different initial consonant to confirm that the rats were discriminating the speech sounds based on the vowel and not some other acoustic cue. Rats that had been trained for 10 days on words with the same initial consonant (either “d” or “s”) correctly generalized their performance when presented with a completely new set of stimuli with a different initial consonant (“s” or “d,” Fig. 2b). The average performance on the first day was well above chance (60 ± 2% correct performance, P < 0.005). Performance was above chance even during the first 4 trials of testing with each of the new stimuli (60 ± 4%, P < 0.05), which confirms that generalization of vowel discrimination occurred rapidly. The fact that rats can generalize their performance to target sounds with somewhat different formant structure (Fig. 3a) suggests the possibility that preceding consonants may alter the perception of vowel sounds, as in human listeners (Holt et al. 2000). However, additional studies with synthetic vowel stimuli would be needed to test this possibility.

During the final stage of training, rats were able to respond to the target vowel when presented in either of 2 contexts (“d” or “s”) and withhold responding to the 8 other distracters (85 ± 4% of trials correct on the last day, Fig. 2c). To confirm that rats were using spectral differences during the vowel portion of the speech sounds instead of cues present only in the consonant, we tested vowel discrimination for 2 sessions (marked by open circles in Fig. 2c) using a set of sounds derived from the stimuli beginning with “s,” but in which the “s” sound was replaced with a 30 ms white noise burst that was identical for each sound. Performance was well above chance (64 ± 3%, P = 0.016) and well correlated with formant differences even when the initial consonant was replaced by a noise burst (R² = 0.65, P < 0.008). These results demonstrate that rats are able to accurately discriminate English vowel sounds and suggest that rats are a suitable model for studying the neural mechanisms of speech sound discrimination (Engineer et al. 2008).

We recorded multiunit neural activity from 187 IC sites and 445 A1 sites to evaluate which of several possible neural codes best explains the behavioral discrimination of consonant and vowel sounds. A total of 29 speech sounds were presented to each site, including 20 consonants and 5 vowels. As observed in earlier studies using awake and anesthetized preparations (Watanabe 1978; Steinschneider et al. 1982; Chen et al. 1996; Sinex and Chen 2000; Cunningham et al. 2002; Engineer et al. 2008), speech sounds evoked a brief phasic response as well as a sustained response (Fig. 4 for IC responses and Fig. 5 for A1 responses). As expected, IC neurons responded to speech sounds earlier than A1 neurons (3.1 ± 1.2 ms earlier, P < 0.05). The sustained spike rate was more pronounced in IC but was also observed in A1 neurons (IC: 62 ± 4 Hz, Fig. 4; A1: 26 ± 0.9 Hz, Fig. 5). The pattern of neural firing was distinct for each of the vowels tested. For example, even though “dad” and “dood” evoked approximately the same average firing rate in IC (18 ± 1.3 and 19 ± 1.2 Hz) and A1 (8.3 ± 0.3 and 8.3 ± 0.2 Hz), their spatial patterns differed: the average absolute difference between the responses to “dad” and “dood” across all IC and A1 sites was 28.4 ± 2.7 and 6.7 ± 0.4 Hz, respectively. The absolute difference in firing rate between each pair of vowel sounds was well correlated with the distance between the vowels in the feature space formed by the first and second formant peaks (IC: R² = 0.88, P < 0.01; A1: R² = 0.69, P < 0.01, Fig. 6). The finding that different vowel sounds generate distinct spatial activity patterns in the central auditory system is consistent with earlier studies (Sachs and Young 1979; Delgutte and Kiang 1984a; Ohl and Scheich 1997; Versnel and Shamma 1998) and suggests that differences in the average neural firing rate across a population of neurons could be used to discriminate between vowel sounds.
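Onset latencies such as those compared here follow from the definition given in Materials and Methods: the first time point at which activity exceeds the spontaneous firing rate by 3 standard deviations. A minimal Python sketch under stated assumptions follows; it assumes the PSTH and a pre-stimulus baseline are sampled in bins of equal width (1 ms by default), and the function and argument names are hypothetical.

```python
from statistics import mean, stdev


def onset_latency(psth, spont, n_sd=3.0, bin_ms=1.0):
    """First post-stimulus time (ms) at which the PSTH exceeds baseline + n_sd SD.

    psth:  firing rate in each post-stimulus bin, starting at sound onset.
    spont: firing rate in each pre-stimulus bin, used to estimate the
           spontaneous mean and standard deviation.
    Returns None if the threshold is never crossed.
    """
    threshold = mean(spont) + n_sd * stdev(spont)
    for i, rate in enumerate(psth):
        if rate > threshold:
            return i * bin_ms
    return None
```

Applying this function to each site in IC and A1 gives per-site latencies, and the two sets can then be compared statistically.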

Figure 4.

Response of the entire population of IC neurons to each of the vowel sounds tested. Neurograms are constructed from the average PSTH of 187 IC recording sites ordered by the characteristic frequency of each recording site. The average PSTH for all the sites recorded is shown above each neurogram. The peak firing rate for the population PSTH was 550 Hz. To illustrate how the spatial activity patterns differed across each vowel, subplots to the right of each neurogram show the difference between the response to each sound and the mean response to all 5 sounds. A white line is provided at zero to make it clearer which responses are above or below zero. Compared with the average response, the words “seed” and “deed” evoked more activity among high frequency sites (>9 kHz) and less activity among low frequency sites (<6 kHz), as expected from their power spectra (Fig. 7).

Figure 5.

Response of the entire population of A1 neurons recorded to each of the vowel sounds tested. The conventions are the same as Figure 4, except that the height of the PSTH scale bar represents a firing rate of 250 Hz.

Figure 6.

The distinctness of the spatial patterns evoked in IC (a) and A1 (b) by each vowel pair was correlated with the distance between the vowels in the feature space formed by the first and second formant peaks. The distinctness of the spatial patterns evoked in IC (c) and A1 (d) was also correlated with behavioral discrimination of the vowel pairs. In this figure, neural distinctness was quantified as the absolute value of the difference in the average firing rate recorded in response to each pair of vowel sounds. A 300 ms long analysis window beginning at vowel onset was used to quantify the vowel response.

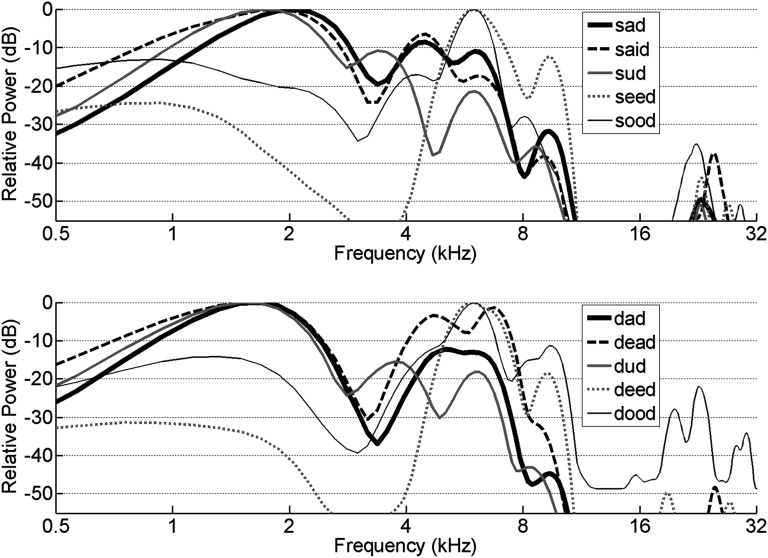

Although individual formants were not clearly visible in the spatial activity patterns of A1 or IC, the global characteristics of the spatial activity patterns (rate–place code) evoked by each vowel sound were clearly related to the stimulus acoustics. The difference plots in Figure 4 show that broad regions of the IC frequency map respond similarly to different vowel sounds. For example, the words “seed” and “deed” evoked more activity among high frequency IC sites (>9 kHz) compared with the other sounds (P < 0.05) and less activity among low frequency IC sites (<6 kHz) compared with the other sounds (P < 0.05). These differences in the spatial activity patterns likely result from the fact that the vowel sounds in “seed” and “deed” have less energy below 6 kHz and more energy above 9 kHz than most of the other sounds (Fig. 7). Although the global pattern of responses in A1 was similar to that in IC, A1 responses depended less on the characteristic frequency of each site than IC responses did (Fig. 5). Pairs of A1 sites tuned to similar tone frequencies responded more differently to vowel sounds than pairs of IC sites did. For pairs of IC sites with characteristic frequencies within half an octave, the average correlation between the responses to the 10 speech sounds was 0.41 ± 0.01. The average correlation was significantly lower for A1 sites (0.29 ± 0.01, P < 10⁻¹⁰). Only 10% of nearby A1 pairs exhibited a correlation coefficient above 0.8, compared with 24% of nearby IC pairs. The observation that A1 responses to vowels are less determined by characteristic frequency than IC responses is consistent with earlier reports that neurons in A1 are sensitive to interactions among many different acoustic features, which leads to a reduction in information redundancy compared with IC (Steinschneider et al. 1990; DeWeese et al. 2005; Chechik et al. 2006; Mesgarani et al. 2008).
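The nearby-pair comparison above can be sketched as follows: for every pair of sites whose characteristic frequencies lie within half an octave of each other, correlate the two sites' responses across the same set of speech sounds, then summarize the mean correlation and the fraction of pairs above 0.8. Treating "within half an octave" as an inclusive bound, and the function names, are assumptions made for this illustration.

```python
import math
from itertools import combinations


def _pearson(x, y):
    """Pearson correlation between two equal-length response profiles."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def nearby_pair_correlations(cfs_hz, responses, max_octaves=0.5):
    """Correlations for all site pairs whose CFs differ by <= max_octaves.

    cfs_hz[k]: characteristic frequency of site k (Hz).
    responses[k]: site k's mean response to each speech sound, same sound order.
    """
    rs = []
    for i, j in combinations(range(len(cfs_hz)), 2):
        if abs(math.log2(cfs_hz[i] / cfs_hz[j])) <= max_octaves:
            rs.append(_pearson(responses[i], responses[j]))
    return rs


def summarize(rs, high=0.8):
    """Mean correlation and fraction of pairs with r above `high`."""
    return sum(rs) / len(rs), sum(r > high for r in rs) / len(rs)
```

Running the same function on IC and on A1 sites yields two distributions of pairwise correlations that can be compared directly.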

Figure 7.

Power spectra of the 5 vowel sounds with 2 initial consonants. The initial recordings were shifted one octave higher using the STRAIGHT vocoder to better match the rat hearing range (Kawahara 1997).

Since some vowel sounds are more difficult for rats to distinguish than others (Fig. 3), we expected the difference in the neural responses to be relatively small for vowels that were difficult to distinguish and larger for vowels that were easier to distinguish. For example, the average absolute difference between the responses to “dad” and “dead” across all A1 and IC sites was 5.3 ± 0.3 and 15.4 ± 1.3 Hz, respectively, which is significantly less than the corresponding difference between “dad” and “deed” (P < 0.05). Consistent with this prediction, the absolute difference in firing rate between each pair of vowel sounds was correlated (R² = 0.58, P < 0.02) with behavioral discrimination ability (Fig. 6). These results support the hypothesis that differences in the average neural firing rate are used to discriminate between vowel sounds.

It is important to confirm that any potential neural code can duplicate the accuracy of sensory discrimination. Analysis using a nearest-neighbor classifier makes it possible to document neural discrimination based on single-trial data and allows neural and behavioral discrimination to be correlated directly in units of percent correct (Engineer et al. 2008). The classifier compares the PSTH evoked by each stimulus presentation with the average PSTH evoked by each stimulus and selects the most similar (see Materials and Methods). The number of bins used (and thus the temporal precision) is a free parameter. In the simplest form, the classifier compares the total number of spikes recorded on each trial with the average number of spikes evoked by each of 2 speech sounds and classifies each trial as the sound that evoked the closest number of spikes on average (Fig. 8b). Vowel discrimination using this simple method was remarkably similar to behavioral discrimination (Fig. 9a). For example, using this method, the number of spikes evoked at a single IC site on a single trial is sufficient to discriminate between “dad” and “deed” 78 ± 1% of the time. This performance is very similar to the behavioral discrimination of 78 ± 2% correct. The correlation between behavioral and neural discrimination using a single trial from a single IC site was high (R² = 0.59, P = 0.02) for the 9 vowel tasks tested (4 distracter vowels with 2 different initial consonants plus the pitch task). Although average accuracy was lower in A1 than in IC (57 ± 0.5% vs. 73 ± 2%, P < 0.05), neural discrimination based on individual A1 sites was also significantly correlated with vowel discrimination behavior (R² = 0.58, P = 0.02; Fig. 9c). The difference between A1 and IC neural discrimination accuracy may simply result from the lower firing rates of the multiunit activity in A1 compared with IC (26 ± 0.85 vs. 52 ± 3 Hz, P < 0.05). Single unit analysis suggests that the difference in multiunit activity is due at least in part to higher and more reliable firing rates of individual IC neurons compared with individual A1 neurons (6.1 ± 1.4 vs. 1.4 ± 0.4 Hz, P < 0.05), as seen in earlier studies (Chechik et al. 2006). These results suggest that vowel sounds are represented in the early central auditory system as the average firing rate over the entire duration of these sounds.

Figure 8.

Neural responses to speech sounds and classifier-based discrimination of each sound from “dad.” (a and c) Dot rasters show the timing of action potentials evoked in response to speech sounds differing in the vowel or initial consonant sound. Responses from 20 individual trials are shown. The acoustic waveform is shown in gray. The performance of a PSTH-based neural classifier is shown as percent correct discrimination from “dad.” The classifier compares the pattern of spike timing evoked on each trial with the average PSTHs generated by 2 sounds (e.g., “dead” and “dad”) and labels each trial as the sound whose average PSTH is closer to the single-trial pattern (smaller Euclidean distance). (b and d) Number of spikes evoked by 20 presentations of each word. The classifier compares the spike count evoked on each trial with the average spike counts generated by 2 sounds (e.g., “dead” and “dad”) and labels each trial as the sound whose average spike count differs least from the single-trial spike count.

Figure 9.

Neural discrimination was well correlated with behavioral discrimination of vowels when the average firing rate over a long analysis window was used but was not significantly correlated with behavior when spike timing was considered. Neural discrimination was based on single-trial multiunit activity recorded from individual sites in IC (a and b) and A1 (c and d). The nearest-neighbor classifier assigns each sweep to the stimulus that evokes the most similar response on average. In a and c, the classifier used the spike count within a 300 ms analysis window beginning at vowel onset. In b and d, the classifier compared the temporal pattern recorded over the same window, binned with 1 ms precision. These results suggest that vowel sounds are represented by the average spatial activity pattern and that spike timing information is not used.

Neural discrimination of consonant sounds was only correlated with behavioral discrimination when the classifier was able to use spike timing information (Engineer et al. 2008). To test the hypothesis that rats might also use spike timing information when discriminating vowel sounds, we compared vowel behavior with classifier performance using spike timing (Fig. 8a). The addition of spike timing information increased discrimination accuracy, such that neural discrimination greatly exceeded behavioral discrimination (Fig. 9b). As a result, there was no correlation between the difficulty of neural and behavioral discrimination of each vowel pair (Fig. 9b,d). This result suggests the possibility that spike timing is used for discrimination of consonant sounds but not for discrimination of the longer vowel sounds. Analysis based on 23 well-isolated IC single units confirmed that vowel discrimination is best correlated with neural discrimination based on spike count, while consonant discrimination was best correlated with neural discrimination that includes spike timing information.

Since our earlier study of consonant discrimination only evaluated neural discrimination by A1 neurons, we repeated the analyses (Fig. 8c,d) using IC responses (Fig. 10) to the set of consonant sounds tested in our earlier study (Engineer et al. 2008). Classifier performance was best correlated (R² = 0.73, P = 0.0008) with behavioral discrimination when spike timing information was provided (Fig. 11a). The observation that behavior was still weakly correlated with neural discrimination when spike timing was eliminated in IC (Fig. 11b), but not in A1 (Engineer et al. 2008), suggests that the coding strategies used to represent consonant and vowel sounds may become more distinct as the information ascends the central auditory system (Chechik et al. 2006).
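The R² values reported here summarize how closely neural and behavioral percent correct covary across discrimination tasks. A minimal sketch, assuming R² is the squared Pearson correlation computed over one (neural, behavioral) pair per task; the function name and scores are hypothetical. The accompanying P value would come from a t test with n − 2 degrees of freedom (for example via `scipy.stats.pearsonr`) and is not computed here.

```python
from typing import Sequence


def r_squared(neural: Sequence[float], behavior: Sequence[float]) -> float:
    """Squared Pearson correlation between neural and behavioral percent correct.

    Position i in each sequence holds the score for discrimination task i.
    """
    n = len(neural)
    if n != len(behavior) or n < 3:
        raise ValueError("need the same number (>= 3) of neural and behavioral scores")
    mean_x = sum(neural) / n
    mean_y = sum(behavior) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(neural, behavior))
    sxx = sum((x - mean_x) ** 2 for x in neural)
    syy = sum((y - mean_y) ** 2 for y in behavior)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when either score is constant")
    return sxy * sxy / (sxx * syy)


# Hypothetical scores for four tasks (percent correct).
print(round(r_squared([60, 70, 80, 90], [55, 65, 72, 85]), 3))  # 0.987
```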

Figure 10.

Responses of the entire population of recorded IC neurons to each of the consonant sounds tested. Neurograms are constructed from the average PSTHs of 187 IC recording sites, ordered by the preferred frequency of each site. The average PSTH across all recorded sites is shown above each neurogram. The height of the scale bar to the left of each average PSTH represents a firing rate of 600 Hz. Only the initial onset response to each consonant sound is shown so that relative differences in spike timing are visible. For example, high-frequency neurons respond earlier to “dad” than to “bad,” whereas low-frequency neurons show the opposite timing. These patterns are similar to A1 responses (Engineer et al. 2008).

Figure 11.

Neural discrimination was well correlated with behavioral discrimination of consonants when spike timing information was used but was not as well correlated with behavior when the average firing rate over a long analysis window was used. Neural discrimination was based on single trial multiunit activity recorded from individual sites in IC and A1. The nearest-neighbor classifier assigns each sweep to the stimulus that evokes the most similar response on average. In (a), the classifier compared the temporal pattern recorded within 40 ms of consonant onset binned with 1 ms precision. In (b), the classifier used the average firing rate over this same period (i.e., spike timing information was eliminated). These results suggest that spike timing plays an important role in the representation of consonant sounds.

As in our earlier report, the basic findings did not depend on the specific classifier distance metric or on the bin size (Fig. 12). Consonant discrimination was well correlated with classifier performance when spike timing information was binned with 1–20 ms precision. In contrast, vowel discrimination was well correlated with classifier performance when neural activity was grouped into bins of 100 ms or longer. These observations are consistent with the hypothesis that different stimulus classes may be represented with different levels of temporal precision (Poeppel 2003; Boemio et al. 2005; Panzeri et al. 2010).
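A toy illustration of why bin size matters, using hypothetical spike trains rather than recorded data: both sounds evoke three spikes per sweep, but responses to one sound arrive an exaggerated 20 ms earlier, loosely mimicking consonant onset-latency differences. The sketch bins each sweep within a 40-ms window and applies a leave-one-out nearest-centroid classifier with Euclidean distance; these choices and all names are assumptions of the sketch, not the analysis pipeline of this study. Fine bins separate the two sounds, while a single 40-ms bin, which keeps only the spike count, cannot.

```python
import math
from typing import Dict, List, Sequence


def bin_sweep(spikes_ms: Sequence[float], window_ms: float, bin_ms: float) -> List[int]:
    """Spike counts in consecutive bins covering [0, window_ms) of one sweep."""
    n_bins = math.ceil(window_ms / bin_ms)
    counts = [0] * n_bins
    for t in spikes_ms:
        if 0 <= t < n_bins * bin_ms:
            counts[int(t // bin_ms)] += 1
    return counts


def percent_correct(responses: Dict[str, List[List[int]]]) -> float:
    """Leave-one-out nearest-centroid classification with Euclidean distance.

    Ties go to the label listed first, so two sounds with identical
    responses and equal trial counts score 50%.
    """
    correct = total = 0
    for label, trials in responses.items():
        for i, trial in enumerate(trials):
            best_label, best_dist = None, math.inf
            for other, other_trials in responses.items():
                pool = trials[:i] + trials[i + 1:] if other == label else other_trials
                centroid = [sum(col) / len(pool) for col in zip(*pool)]
                dist = math.dist(trial, centroid)
                if dist < best_dist:
                    best_label, best_dist = other, dist
            correct += best_label == label
            total += 1
    return 100.0 * correct / total


# Hypothetical sweeps (spike times in ms from consonant onset): three spikes
# per sweep for both sounds, but "dad" responses arrive ~20 ms earlier.
DAD = [[5, 6, 7], [5, 6, 8], [6, 7, 8], [5, 7, 8]]
BAD = [[25, 26, 27], [25, 26, 28], [26, 27, 28], [25, 27, 28]]


def accuracy_by_bin(bin_sizes_ms: Sequence[float], window_ms: float = 40) -> Dict[float, float]:
    """Classifier accuracy (%) for each bin size within one analysis window."""
    return {
        b: percent_correct({
            "dad": [bin_sweep(s, window_ms, b) for s in DAD],
            "bad": [bin_sweep(s, window_ms, b) for s in BAD],
        })
        for b in bin_sizes_ms
    }


print(accuracy_by_bin([1, 5, 20, 40]))  # {1: 100.0, 5: 100.0, 20: 100.0, 40: 50.0}
```

With recorded responses, the same sweep over bin sizes would be repeated for every sound pair, and the resulting accuracies correlated with behavioral performance across tasks.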

Figure 12.

Consonant discrimination was well correlated with neural discrimination when spike timing precise to 1–20 ms was provided. Vowel discrimination was well correlated with neural discrimination when spike timing information was eliminated and bin size was increased to 100–300 ms. The default analysis window for consonant sounds was 40 ms long beginning at sound onset and was increased as needed when the bin size was greater than 40 ms. The default analysis window for vowel sounds was 300 ms long beginning at vowel onset and was only extended when the 400 ms bin was used. Vowel discrimination was not correlated with neural discrimination when a 40 ms analysis window was used beginning at vowel onset regardless of the bin size used (data not shown). Neural discrimination was based on single trial multiunit activity recorded from individual sites in IC. These results suggest that spike timing plays an important role in the representation of consonant sounds but not vowel sounds. Asterisks indicate statistically significant correlations (P < 0.05).

Discussion

Sensory discrimination requires the ability to detect differences in the patterns of neural activity generated by different stimuli. Stimuli that evoke different activity patterns are expected to be easier to discriminate than stimuli that evoke similar patterns. There are many possible methods to compare neural activity patterns. This is the first study to evaluate plausible neural coding strategies for consonant and vowel sounds by comparing behavioral discrimination with neural discrimination. We compared behavioral performance on 9 vowel discrimination tasks and 11 consonant discrimination tasks with neural discrimination using activity recorded in inferior colliculus and primary auditory cortex. Vowel discrimination was highly correlated with neural discrimination when spike timing was eliminated and was not correlated when spike timing was preserved. In contrast, consonant discrimination was highly correlated with neural discrimination when spike timing was preserved and was not well correlated when spike timing was eliminated. These results suggest that in the early stages of auditory processing, spike count encodes vowel sounds and spike timing encodes consonant sounds. Our observation that neurons in IC and A1 respond similarly, but not identically, to different speech sounds is consistent with the progressive processing of sensory information across multiple cortical and subcortical stations (Chechik et al. 2006; Hernández et al. 2010; Tsunada et al. 2011).

There has been a robust discussion over many years about the timescale of neural codes (Cariani 1995; Parker and Newsome 1998; Jacobs et al. 2009). Neural responses to speech sounds recorded in human and animal auditory cortex demonstrate that some features of speech sounds (e.g., voice onset time) can be extracted using spike timing (Young and Sachs 1979; Steinschneider et al. 1982, 1990, 1995, 1999, 2005; Palmer 1990; Eggermont 1995; Liégeois-Chauvel et al. 1999; Wong and Schreiner 2003). Information-theoretic analyses of neural activity patterns almost invariably find that neural responses carry more information about the sensory world when precise spike timing is included in the analysis (Middlebrooks et al. 1994; Schnupp et al. 2006; Foffani et al. 2009; Jacobs et al. 2009). However, the mere presence of information does not imply that the brain has access to, or uses, that additional information. Direct comparison of behavioral discrimination and neural discrimination with and without spike timing information can be used to evaluate whether spike timing is likely to be used in behavioral judgments. Such experiments are technically challenging, and, surprisingly, few studies have directly evaluated different coding strategies using a stimulus set large enough to allow a statistically valid correlation analysis.

Some of the most convincing research on neural correlates of sensory discrimination suggests that sensory information is encoded in the mean firing rate averaged over 50–500 ms (Britten et al. 1992; Shadlen and Newsome 1994; Pruett et al. 2001; Romo and Salinas 2003; Liu and Newsome 2005; Wang et al. 2005; Lemus et al. 2009). These studies found no evidence that spike timing information was used for sensory discrimination. However, a recent study of speech sound processing in auditory cortex came to the opposite conclusion (Engineer et al. 2008). In that study, behavioral discrimination of consonant sounds was correlated with neural discrimination only if spike timing information was used in decoding the speech sounds. These apparently contradictory studies seemed at first to complicate rather than resolve the long-standing debate about whether the brain uses spike timing information (Young 2010). Our new observation that vowel discrimination is best accounted for by decoding the mean firing rate and ignoring spike timing, while consonant discrimination is best accounted for by decoding that includes spike timing, supports the hypothesis that the brain can process sensory information within a single structure at multiple timescales (Cariani 1995; Wang et al. 1995, 2005; Parker and Newsome 1998; Victor 2000; Poeppel 2003; Boemio et al. 2005; Mesgarani et al. 2008; Buonomano and Maass 2009; Panzeri et al. 2010; Walker et al. 2011).

Earlier conclusions that the auditory, visual, and somatosensory systems use a simple rate code (and not spike timing) may have resulted from the choice of continuous or periodic stimuli (Britten et al. 1992; Shadlen and Newsome 1994; Pruett et al. 2001; Romo and Salinas 2003; Liu and Newsome 2005; Lemus et al. 2009). Additional behavior and neurophysiology studies are needed to determine whether complex transient stimuli are generally represented using spike timing information (Cariani 1995; Victor 2000). The long-standing debate about the appropriate timescale to analyze neural activity would be largely resolved if it were found that spike timing was required to represent transient stimuli but not steady state stimuli (Cariani 1995; Parker and Newsome 1998; Jacobs et al. 2009; Panzeri et al. 2010). Computational modeling will likely be useful in clarifying the potential biological mechanisms that could be used to represent sensory information on multiple timescales (Buonomano and Maass 2009; Panzeri et al. 2010).

Our observation that consonant and vowel sounds are encoded by neural activity patterns that may be read out at different timescales supports earlier psychophysical, clinical, and imaging evidence that consonant and vowel sounds might be represented differently in the brain (Pisoni 1973; Shannon et al. 1995; Boatman et al. 1997; Caramazza et al. 2000; Poeppel 2003; Bonatti et al. 2005; Carreiras and Price 2008; Wallace and Blumstein 2009). The findings that consonant discrimination is more categorical, more sensitive to temporal degradation, and less sensitive to spectral degradation (compared with vowel discrimination) are consistent with our hypothesis that spike timing plays a key role in the representation of consonant sounds (Pisoni 1973; Shannon et al. 1995; Kasturi et al. 2002; Xu et al. 2005; Engineer et al. 2008). Detailed studies of rat behavioral and neural discrimination of noise-vocoded speech could help explain the differential sensitivity of consonant and vowel sounds to spectral and temporal degradation, and might explain why consonant place of articulation information is so much more sensitive to spectral degradation than consonant manner of articulation and voicing (Shannon et al. 1995; Xu et al. 2005). The findings that consonant and vowel discrimination develop at different rates and that consonant and vowel discrimination can be independently impaired by lesions or electrical stimulation support the hypothesis that higher cortical fields decode auditory information on different timescales (Boatman et al. 1997; Caramazza et al. 2000; Kudoh et al. 2006; Porter et al. 2011). Regional differences in temporal integration could play an important role in generating sensory processing streams (like the “what” and “where” pathways) and hemispheric lateralization. Physiology and imaging results in animals and humans suggest that the distinct processing streams diverge soon after primary auditory cortex (Shankweiler and Studdert-Kennedy 1967; Schwartz and Tallal 1980; Liégeois-Chauvel et al. 1999; Binder et al. 2000; Rauschecker and Tian 2000; Zatorre et al. 2002; Poeppel 2003; Scott and Johnsrude 2003; Boemio et al. 2005; Obleser et al. 2006; Rauschecker and Scott 2009; Recanzone and Cohen 2010).

Our results are consistent with the asymmetric sampling in time hypothesis (AST, Poeppel 2003). AST postulates that 1) the central auditory system is bilaterally symmetric in terms of temporal integration up to the level of primary auditory cortex, 2) left nonprimary auditory cortex preferentially extracts information using short (20–50 ms) temporal integration windows, and 3) right nonprimary auditory cortex preferentially extracts information using long (150–250 ms) temporal integration windows. Neural recordings from secondary auditory fields and focal inactivation of those fields are needed to test whether asymmetric sampling in time also occurs in nonhuman animals (Lomber and Malhotra 2008). If confirmed, differential temporal integration by different cortical fields is likely to contribute to the natural robustness of sensory processing, including speech processing, to noise and many other forms of distortion.

Future studies are needed to evaluate the potential effects of anesthesia and attention on speech sound responses. Earlier studies have shown qualitatively similar response properties in awake and anesthetized preparations for auditory stations up to the level of A1 (Watanabe 1978; Steinschneider et al. 1982; Chen et al. 1996; Sinex and Chen 2000; Cunningham et al. 2002; Engineer et al. 2008). However, anesthesia has been shown to reduce the maximum click-train rate to which A1 neurons can respond, so it is likely that anesthesia reduces the A1 response to speech sounds, especially the sustained response to vowel sounds (Anderson et al. 2006; Rennaker et al. 2007). Although human imaging studies have shown little to no effect of attention on primary auditory cortex responses to speech sounds, single unit studies are needed to better understand how attention might shape the cortical representation of speech (Grady et al. 1997; Hugdahl et al. 2003; Christensen et al. 2008). Single unit studies would also clarify whether speech sound coding strategies differ across cortical layers. Human listeners can adapt to many forms of speech sound degradation (Miller and Nicely 1955; Strange 1989; Shannon et al. 1995). Our recent demonstration that the A1 coding strategy is modified in noisy environments suggests that other forms of stimulus degradation may also modify the analysis windows used to represent speech sounds (Shetake et al. 2011).

In summary, our results suggest that spike count encodes vowel sounds and spike timing encodes consonant sounds in the rat central auditory system. Our study illustrates how informative it can be to evaluate potential neural codes in any brain region by comparing neural and behavioral discrimination using a large stimulus set. As invasive and noninvasive recording technology advances, it may be possible to test our hypothesis that consonant and vowel sounds are represented on different timescales in human auditory cortex. If confirmed, this hypothesis could explain a wide range of psychological findings and may prove useful in understanding a variety of common speech processing disorders.

Funding

National Institute on Deafness and Other Communication Disorders (grant numbers R01DC010433, R15DC006624).

Acknowledgments

We would like to thank Kevin Chang, Gabriel Mettlach, Jarod Roland, Roshini Jain, and Dwayne Listhrop for assistance with microelectrode mappings. We would also like to thank Chris Heydrick, Alyssa McMenamy, Anushka Meepe, Chikara Dablain, Jeans Lee Choi, Vismay Badhiwala, Jonathan Riley, Nick Hatate, Pei-Lan Kan, Maria Luisa Lazo de la Vega, and Ashley Hudson for help with behavior training. We would also like to thank David Poeppel, Monty Escabi, Kamalini Ranasinghe, and Heather Read for their suggestions about earlier versions of the manuscript. Conflict of Interest: None declared.

References

- Anderson SE, Kilgard MP, Sloan AM, Rennaker RL. Response to broadband repetitive stimuli in auditory cortex of the unanesthetized rat. Hear Res. 2006;213:107–117. doi: 10.1016/j.heares.2005.12.011.

- Binder JR, Frost JA, Hammeke TA, Bellgowan PS, Springer JA, Kaufman JN, Possing ET. Human temporal lobe activation by speech and nonspeech sounds. Cereb Cortex. 2000;10:512–528. doi: 10.1093/cercor/10.5.512.

- Boatman D, Hall C, Goldstein MH, Lesser R, Gordon B. Neuroperceptual differences in consonant and vowel discrimination: as revealed by direct cortical electrical interference. Cortex. 1997;33:83–98. doi: 10.1016/s0010-9452(97)80006-8.

- Boatman D, Lesser R, Gordon B. Auditory speech processing in the left temporal lobe: an electrical interference study. Brain Lang. 1995;51:269–290. doi: 10.1006/brln.1995.1061.

- Boatman D, Lesser R, Hall C, Gordon B. Auditory perception of segmental features: a functional neuroanatomic study. J Neurolinguistics. 1994;8:225–234.

- Boemio A, Fromm S, Braun A, Poeppel D. Hierarchical and asymmetric temporal sensitivity in human auditory cortices. Nat Neurosci. 2005;8:389–395. doi: 10.1038/nn1409.

- Bonatti LL, Pena M, Nespor M, Mehler J. Linguistic constraints on statistical computations: the role of consonants and vowels in continuous speech processing. Psychol Sci. 2005;16:451–459. doi: 10.1111/j.0956-7976.2005.01556.x.

- Britten KH, Shadlen MN, Newsome WT, Movshon JA. The analysis of visual motion: a comparison of neuronal and psychophysical performance. J Neurosci. 1992;12:4745–4765. doi: 10.1523/JNEUROSCI.12-12-04745.1992.

- Buonomano DV, Maass W. State-dependent computations: spatiotemporal processing in cortical networks. Nat Rev Neurosci. 2009;10:113–125. doi: 10.1038/nrn2558.

- Caramazza A, Chialant D, Capasso R, Miceli G. Separable processing of consonants and vowels. Nature. 2000;403:428–430. doi: 10.1038/35000206.

- Cariani P. As if time really mattered: temporal strategies for neural coding of sensory information. In: Pribram K, editor. Origins: brain and self-organization. Hillsdale (NJ): Erlbaum; 1995. pp. 161–229.

- Carney LH, Geisler CD. A temporal analysis of auditory-nerve fiber responses to spoken stop consonant–vowel syllables. J Acoust Soc Am. 1986;79:1896. doi: 10.1121/1.393197.

- Carreiras M, Price CJ. Brain activation for consonants and vowels. Cereb Cortex. 2008;18:1727–1735. doi: 10.1093/cercor/bhm202.

- Chechik G, Anderson MJ, Bar-Yosef O, Young ED, Tishby N, Nelken I. Reduction of information redundancy in the ascending auditory pathway. Neuron. 2006;51:359–368. doi: 10.1016/j.neuron.2006.06.030.

- Chen GD, Nuding SC, Narayan SS, Sinex DG. Responses of single neurons in the chinchilla inferior colliculus to consonant–vowel syllables differing in voice-onset time. Audit Neurosci. 1996;3:179–198.

- Christensen TA, Antonucci SM, Lockwood JL, Kittleson M, Plante E. Cortical and subcortical contributions to the attentive processing of speech. Neuroreport. 2008;19:1101–1105. doi: 10.1097/WNR.0b013e3283060a9d.

- Cunningham J, Nicol T, King C, Zecker SG, Kraus N. Effects of noise and cue enhancement on neural responses to speech in auditory midbrain, thalamus and cortex. Hear Res. 2002;169:97–111. doi: 10.1016/s0378-5955(02)00344-1.

- Delattre P, Liberman AM, Cooper FS, Gerstman LJ. An experimental study of the acoustic determinants of vowel color; observations on one- and two-formant vowels synthesized from spectrographic patterns. Word. 1952;8:195–210.

- Delgutte B, Kiang NY. Speech coding in the auditory nerve: I. Vowel-like sounds. J Acoust Soc Am. 1984a;75:866–878. doi: 10.1121/1.390596.

- Delgutte B, Kiang NY. Speech coding in the auditory nerve: III. Voiceless fricative consonants. J Acoust Soc Am. 1984b;75:887–896. doi: 10.1121/1.390598.

- Deng L, Geisler CD. Responses of auditory-nerve fibers to nasal consonant–vowel syllables. J Acoust Soc Am. 1987;82:1977–1988. doi: 10.1121/1.395642.

- DeWeese MR, Hromádka T, Zador AM. Reliability and representational bandwidth in the auditory cortex. Neuron. 2005;48:479–488. doi: 10.1016/j.neuron.2005.10.016.

- Eggermont JJ. Representation of a voice onset time continuum in primary auditory cortex of the cat. J Acoust Soc Am. 1995;98:911–920. doi: 10.1121/1.413517.

- Engineer CT, Perez CA, Chen YH, Carraway RS, Reed AC, Shetake JA, Jakkamsetti V, Chang Q, Kilgard MP. Cortical activity patterns predict speech discrimination ability. Nat Neurosci. 2008;11:603–608. doi: 10.1038/nn.2109.

- Fiez JA, Raichle ME, Miezin FM, Petersen SE, Tallal P, Katz WF. PET studies of auditory and phonological processing: effects of stimulus characteristics and task demands. J Cogn Neurosci. 1995;7:357–375. doi: 10.1162/jocn.1995.7.3.357.

- Foffani G, Morales-Botello ML, Aguilar J. Spike timing, spike count, and temporal information for the discrimination of tactile stimuli in the rat ventrobasal complex. J Neurosci. 2009;29:5964–5973. doi: 10.1523/JNEUROSCI.4416-08.2009.

- Foffani G, Moxon KA. PSTH-based classification of sensory stimuli using ensembles of single neurons. J Neurosci Methods. 2004;135:107–120. doi: 10.1016/j.jneumeth.2003.12.011.

- Fry DB, Abramson AS, Eimas PD, Liberman AM. The identification and discrimination of synthetic vowels. Lang Speech. 1962;5:171–189.

- Gawne TJ, Kjaer TW, Richmond BJ. Latency: another potential code for feature binding in striate cortex. J Neurophysiol. 1996;76:1356–1360. doi: 10.1152/jn.1996.76.2.1356.

- Grady CL, Van Meter JW, Maisog JM, Pietrini P, Krasuski J, Rauschecker JP. Attention-related modulation of activity in primary and secondary auditory cortex. Neuroreport. 1997;8:2511–2516. doi: 10.1097/00001756-199707280-00019.

- Green DM, Swets JA. Signal detection theory and psychophysics. New York: Wiley; 1966.

- Hernández A, Nácher V, Luna R, Zainos A, Lemus L, Alvarez M, Vázquez Y, Camarillo L, Romo R. Decoding a perceptual decision process across cortex. Neuron. 2010;66:300–314. doi: 10.1016/j.neuron.2010.03.031.

- Holt LL, Lotto AJ, Kluender KR. Neighboring spectral content influences vowel identification. J Acoust Soc Am. 2000;108:710–722. doi: 10.1121/1.429604.

- Hugdahl K, Thomsen T, Ersland L, Rimol LM, Niemi J. The effects of attention on speech perception: an fMRI study. Brain Lang. 2003;85:37–48. doi: 10.1016/s0093-934x(02)00500-x.

- Jacobs AL, Fridman G, Douglas RM, Alam NM, Latham PE, Prusky GT, Nirenberg S. Ruling out and ruling in neural codes. Proc Natl Acad Sci U S A. 2009;106:5936–5941. doi: 10.1073/pnas.0900573106.

- Kasturi K, Loizou PC, Dorman M, Spahr T. The intelligibility of speech with “holes” in the spectrum. J Acoust Soc Am. 2002;112:1102–1111. doi: 10.1121/1.1498855.

- Kawahara H. Speech representation and transformation using adaptive interpolation of weighted spectrum: vocoder revisited. Proc 1997 IEEE Int Conf Acoust Speech Signal Process. 1997;2:1303–1306.

- Kudoh M, Nakayama Y, Hishida R, Shibuki K. Requirement of the auditory association cortex for discrimination of vowel-like sounds in rats. Neuroreport. 2006;17:1761–1766. doi: 10.1097/WNR.0b013e32800fef9d.

- Lemus L, Hernández A, Romo R. Neural codes for perceptual discrimination of acoustic flutter in the primate auditory cortex. Proc Natl Acad Sci U S A. 2009;106:9471–9476. doi: 10.1073/pnas.0904066106.

- Liégeois-Chauvel C, de Graaf JB, Laguitton V, Chauvel P. Specialization of left auditory cortex for speech perception in man depends on temporal coding. Cereb Cortex. 1999;9:484–496. doi: 10.1093/cercor/9.5.484.

- Liu J, Newsome WT. Correlation between speed perception and neural activity in the middle temporal visual area. J Neurosci. 2005;25:711–722. doi: 10.1523/JNEUROSCI.4034-04.2005.

- Lomber SG, Malhotra S. Double dissociation of ‘what’ and ‘where’ processing in auditory cortex. Nat Neurosci. 2008;11:609–616. doi: 10.1038/nn.2108.

- Malone BJ, Scott BH, Semple MN. Temporal codes for amplitude contrast in auditory cortex. J Neurosci. 2010;30:767–784. doi: 10.1523/JNEUROSCI.4170-09.2010.

- Mesgarani N, David SV, Fritz JB, Shamma SA. Phoneme representation and classification in primary auditory cortex. J Acoust Soc Am. 2008;123:899–909. doi: 10.1121/1.2816572.

- Middlebrooks JC, Clock AE, Xu L, Green DM. A panoramic code for sound location by cortical neurons. Science. 1994;264:842–844. doi: 10.1126/science.8171339.

- Miller GA, Nicely PE. An analysis of perceptual confusions among some English consonants. J Acoust Soc Am. 1955;27:338–352.

- Miller MI, Sachs MB. Representation of stop consonants in the discharge patterns of auditory-nerve fibers. J Acoust Soc Am. 1983;74:502–517. doi: 10.1121/1.389816.

- Obleser J, Boecker H, Drzezga A, Haslinger B, Hennenlotter A, Roettinger M, Eulitz C, Rauschecker JP. Vowel sound extraction in anterior superior temporal cortex. Hum Brain Mapp. 2006;27:562–571. doi: 10.1002/hbm.20201.

- Ohl FW, Scheich H. Orderly cortical representation of vowels based on formant interaction. Proc Natl Acad Sci U S A. 1997;94:9440–9444. doi: 10.1073/pnas.94.17.9440.

- Palmer AR. The representation of the spectra and fundamental frequencies of steady-state single- and double-vowel sounds in the temporal discharge patterns of guinea pig cochlear-nerve fibers. J Acoust Soc Am. 1990;88:1412–1426. doi: 10.1121/1.400329.

- Palombi PS, Caspary DM. Physiology of the young adult Fischer 344 rat inferior colliculus: responses to contralateral monaural stimuli. Hear Res. 1996;100:41–58. doi: 10.1016/0378-5955(96)00115-3.

- Panzeri S, Brunel N, Logothetis NK, Kayser C. Sensory neural codes using multiplexed temporal scales. Trends Neurosci. 2010;33:111–120. doi: 10.1016/j.tins.2009.12.001.

- Parker AJ, Newsome WT. Sense and the single neuron: probing the physiology of perception. Annu Rev Neurosci. 1998;21:227–277. doi: 10.1146/annurev.neuro.21.1.227.

- Peterson GE, Barney HL. Control methods used in a study of the vowels. J Acoust Soc Am. 1952;24:175–184.

- Pisoni DB. Auditory and phonetic memory codes in the discrimination of consonants and vowels. Percept Psychophys. 1973;13:253–260. doi: 10.3758/BF03214136.

- Poeppel D. The analysis of speech in different temporal integration windows: cerebral lateralization as ‘asymmetric sampling in time’. Speech Commun. 2003;41:245–255.

- Polka L, Werker JF. Developmental changes in perception of nonnative vowel contrasts. J Exp Psychol Hum Percept Perform. 1994;20:421–435. doi: 10.1037//0096-1523.20.2.421.

- Porter BA, Rosenthal TR, Ranasinghe KG, Kilgard MP. Discrimination of brief speech sounds is impaired in rats with auditory cortex lesions. Behav Brain Res. 2011;219:68–74. doi: 10.1016/j.bbr.2010.12.015.

- Pruett JR Jr, Sinclair RJ, Burton H. Neural correlates for roughness choice in monkey second somatosensory cortex (SII). J Neurophysiol. 2001;86:2069–2080. doi: 10.1152/jn.2001.86.4.2069.

- Rauschecker JP, Scott SK. Maps and streams in the auditory cortex: nonhuman primates illuminate human speech processing. Nat Neurosci. 2009;12:718–724. doi: 10.1038/nn.2331.

- Rauschecker JP, Tian B. Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc Natl Acad Sci U S A. 2000;97:11800–11806. doi: 10.1073/pnas.97.22.11800.

- Recanzone GH, Cohen YE. Serial and parallel processing in the primate auditory cortex revisited. Behav Brain Res. 2010;206:1–7. doi: 10.1016/j.bbr.2009.08.015.

- Rennaker RL, Carey HL, Anderson SE, Sloan AM, Kilgard MP. Anesthesia suppresses nonsynchronous responses to repetitive broadband stimuli. Neuroscience. 2007;145:357–369. doi: 10.1016/j.neuroscience.2006.11.043.

- Romo R, Salinas E. Flutter discrimination: neural codes, perception, memory and decision making. Nat Rev Neurosci. 2003;4:203–218. doi: 10.1038/nrn1058.

- Sachs MB, Young ED. Encoding of steady-state vowels in the auditory nerve: representation in terms of discharge rate. J Acoust Soc Am. 1979;66:470–479. doi: 10.1121/1.383098.

- Schnupp JW, Hall TM, Kokelaar RF, Ahmed B. Plasticity of temporal pattern codes for vocalization stimuli in primary auditory cortex. J Neurosci. 2006;26:4785–4795. doi: 10.1523/JNEUROSCI.4330-05.2006.

- Schwartz J, Tallal P. Rate of acoustic change may underlie hemispheric specialization for speech perception. Science. 1980;207:1380–1381. doi: 10.1126/science.7355297.

- Scott SK, Johnsrude IS. The neuroanatomical and functional organization of speech perception. Trends Neurosci. 2003;26:100–107. doi: 10.1016/S0166-2236(02)00037-1.

- Seifritz E, Esposito F, Hennel F, Mustovic H, Neuhoff JG, Bilecen D, Tedeschi G, Scheffler K, Di Salle F. Spatiotemporal pattern of neural processing in the human auditory cortex. Science. 2002;297:1706–1708. doi: 10.1126/science.1074355.

- Shadlen MN, Newsome WT. Noise, neural codes and cortical organization. Curr Opin Neurobiol. 1994;4:569–579. doi: 10.1016/0959-4388(94)90059-0.

- Shankweiler D, Studdert-Kennedy M. Identification of consonants and vowels presented to left and right ears. Q J Exp Psychol. 1967;19:59–63. doi: 10.1080/14640746708400069.

- Shannon RV, Zeng FG, Kamath V, Wygonski J, Ekelid M. Speech recognition with primarily temporal cues. Science. 1995;270:303–304. doi: 10.1126/science.270.5234.303.

- Shetake JA, Wolf JT, Cheung RJ, Engineer CT, Ram SK, Kilgard MP. Cortical activity patterns predict robust speech discrimination ability in noise. Eur J Neurosci. 2011;34:1823–1838. doi: 10.1111/j.1460-9568.2011.07887.x.

- Sinex DG, Chen GD. Neural responses to the onset of voicing are unrelated to other measures of temporal resolution. J Acoust Soc Am. 2000;107:486–495. doi: 10.1121/1.428316.

- Soli SD. Second formants in fricatives: acoustic consequences of fricative-vowel coarticulation. J Acoust Soc Am. 1981;70:976–984. doi: 10.1121/1.387031.

- Steinschneider M, Arezzo J, Vaughan HG., Jr Speech evoked activity in the auditory radiations and cortex of the awake monkey. Brain Res. 1982;252:353–365. doi: 10.1016/0006-8993(82)90403-6. [DOI] [PubMed] [Google Scholar]

- Steinschneider M, Arezzo JC, Vaughan HG Jr. Tonotopic features of speech-evoked activity in primate auditory cortex. Brain Res. 1990;519:158–168. doi: 10.1016/0006-8993(90)90074-l.

- Steinschneider M, Reser D, Schroeder CE, Arezzo JC. Tonotopic organization of responses reflecting stop consonant place of articulation in primary auditory cortex (A1) of the monkey. Brain Res. 1995;674:147–152. doi: 10.1016/0006-8993(95)00008-e.

- Steinschneider M, Volkov IO, Fishman YI, Oya H, Arezzo JC, Howard MA 3rd. Intracortical responses in human and monkey primary auditory cortex support a temporal processing mechanism for encoding of the voice onset time phonetic parameter. Cereb Cortex. 2005;15:170–186. doi: 10.1093/cercor/bhh120.

- Steinschneider M, Volkov IO, Noh MD, Garell PC, Howard MA 3rd. Temporal encoding of the voice onset time phonetic parameter by field potentials recorded directly from human auditory cortex. J Neurophysiol. 1999;82:2346–2357. doi: 10.1152/jn.1999.82.5.2346.

- Strange W. Dynamic specification of coarticulated vowels spoken in sentence context. J Acoust Soc Am. 1989;85:2135–2153. doi: 10.1121/1.397863.

- Toro JM, Nespor M, Mehler J, Bonatti LL. Finding words and rules in a speech stream: functional differences between vowels and consonants. Psychol Sci. 2008;19:137–144. doi: 10.1111/j.1467-9280.2008.02059.x.

- Tsunada J, Lee JH, Cohen YE. Representation of speech categories in the primate auditory cortex. J Neurophysiol. 2011;105:2634–2646. doi: 10.1152/jn.00037.2011.

- Versnel H, Shamma SA. Spectral-ripple representation of steady-state vowels in primary auditory cortex. J Acoust Soc Am. 1998;103:2502–2514. doi: 10.1121/1.422771.

- Victor JD. How the brain uses time to represent and process visual information. Brain Res. 2000;886:33–46. doi: 10.1016/s0006-8993(00)02751-7.

- Walker KM, Bizley JK, King AJ, Schnupp JW. Multiplexed and robust representations of sound features in auditory cortex. J Neurosci. 2011;31:14565–14576. doi: 10.1523/JNEUROSCI.2074-11.2011.

- Wallace AB, Blumstein SE. Temporal integration in vowel perception. J Acoust Soc Am. 2009;125:1704–1711. doi: 10.1121/1.3077219.

- Wang X, Lu T, Snider RK, Liang L. Sustained firing in auditory cortex evoked by preferred stimuli. Nature. 2005;435:341–346. doi: 10.1038/nature03565.

- Wang X, Merzenich MM, Beitel R, Schreiner CE. Representation of a species-specific vocalization in the primary auditory cortex of the common marmoset: temporal and spectral characteristics. J Neurophysiol. 1995;74:2685–2706. doi: 10.1152/jn.1995.74.6.2685.

- Watanabe T. Responses of the cat's collicular auditory neuron to human speech. J Acoust Soc Am. 1978;64:333–337. doi: 10.1121/1.381952.

- Wong SW, Schreiner CE. Representation of CV-sounds in cat primary auditory cortex: intensity dependence. Speech Commun. 2003;41:93–106.

- Xu L, Thompson CS, Pfingst BE. Relative contributions of spectral and temporal cues for phoneme recognition. J Acoust Soc Am. 2005;117:3255–3267. doi: 10.1121/1.1886405.

- Young ED. Level and spectrum. In: Rees A, Palmer A, editors. The Oxford handbook of auditory science: the auditory brain. Oxford: Oxford University Press; 2010. pp. 93–124.

- Young ED, Sachs MB. Representation of steady-state vowels in the temporal aspects of the discharge patterns of populations of auditory-nerve fibers. J Acoust Soc Am. 1979;66:1381–1403. doi: 10.1121/1.383532.

- Zatorre R, Belin P, Penhune V. Structure and function of auditory cortex: music and speech. Trends Cogn Sci. 2002;6:37–46. doi: 10.1016/s1364-6613(00)01816-7.