Abstract

In a previous study (Simmons-Stern, Budson, & Ally, 2010), we found that patients with Alzheimer’s disease (AD) better recognized visually presented lyrics when the lyrics were also sung rather than spoken at encoding. The present study sought to further investigate the effects of music on memory in patients with AD by making the content of the song lyrics relevant for the daily life of an older adult and by examining how musical encoding alters several different aspects of episodic memory. Patients with AD and healthy older adults studied visually presented novel song lyrics related to instrumental activities of daily living (IADL) that were accompanied by either a sung or a spoken recording. Overall, participants performed better on a memory test of general lyric content for lyrics that were studied sung as compared to spoken. However, on a memory test of specific lyric content, participants performed equally well for sung and spoken lyrics. We interpret these results in terms of a dual-process model of recognition memory such that the general content questions represent a familiarity-based representation that is preferentially sensitive to enhancement via music, while the specific content questions represent a recollection-based representation unaided by musical encoding. Additionally, in a test of basic recognition memory for the audio stimuli, patients with AD demonstrated equal discrimination for sung and spoken stimuli. We propose that the perceptual distinctiveness of musical stimuli enhanced metamemorial awareness in AD patients via a non-selective distinctiveness heuristic, thereby reducing false recognition while at the same time reducing true recognition and eliminating the mnemonic benefit of music. These results are discussed in the context of potential music-based memory enhancement interventions for the care of patients with AD.

Keywords: Alzheimer’s disease, recognition memory, music, mnemonics, familiarity, recollection

1. Introduction

Alzheimer’s disease (AD) affects approximately 5.2 million Americans age 65 and older. By 2030, this number is expected to reach 7.7 million (Alzheimer’s Association, 2011; Hebert, Scherr, Bienias, Bennett, & Evans, 2003). Globally, there may be as many as 80 million people living with AD by 2050 (Alzheimer’s Disease International, 2009). Episodic memory and instrumental activities of daily living (IADL; e.g., housework, medication adherence, use of transportation, management of money) are affected early in the course of AD and are major contributors to the functional disability associated with the disease (Gaugler, Yu, Krichbaum, & Wyman, 2009; Tomaszewski Farias et al., 2009; McKhann et al., 2011). To alleviate the significant reduction in quality of life for the affected patient (Schölzel-Dorenbos, van der Steen, Engels, & Olde Rikkert, 2007) and the burden of care for his or her caregivers (Razani et al., 2007), there is major interest in the development of interventions designed to reduce the impact of memory loss and IADL impairment in AD.

There are numerous studies of potential disease-modifying drugs currently underway, many in Phase 3 clinical trials (for reviews, see Budson & Kowall, 2011; Budson & Solomon, 2011). With the growing number of individuals with AD and the likelihood that new treatments will make the disease progress more slowly, there will be a growing need to improve the daily functioning and quality of life of AD patients in the years ahead. Music therapy, which in its traditional forms consists of basic active (e.g., instrument playing, singing) or passive (e.g., listening) engagement with music, represents a low-cost intervention with a wide range of benefits. These benefits include improvements on measures of anxiety and depression (Guétin et al., 2009), agitation (see Witzke, Rhone, Backhaus, & Shaver, 2008, for review), autobiographical memory recall (Foster & Valentine, 2001; Irish et al., 2006), and a variety of cognitive functions (e.g., Thompson, Moulin, Hayre, & Jones, 2005; Janata, 2012). However, to the best of our knowledge, no work to date has examined the potential benefit of music-based therapies specifically targeted to enhance new memory formation and improve IADL functioning in patients with AD. Furthermore, despite some anecdotal reports of the effective use of musical mnemonics in therapeutic settings, there are no accepted music-based memory enhancement therapies for the care of patients with AD.

In a previous study (Simmons-Stern, Budson, & Ally, 2010), we demonstrated that patients with AD remember song lyrics better on a recognition memory test when those lyrics are accompanied by a sung rather than a spoken recording at encoding. However, healthy older adults showed no benefit of musical encoding on subsequent recognition memory. This original experiment provided some of the first empirical evidence that musical mnemonics may serve as an effective therapeutic tool for the care of patients with AD, but its design was limited in scope. Because we tested only recognition for the lyrics of studied songs, we could make no claims about the practical benefits of musical mnemonics. We showed that music enhances basic recognition for a set of lyrics, but we did not test content recognition or comprehension, both of which are prerequisites for an ecologically valid music-based therapy designed to improve memory and IADL functioning in AD patients. In particular, recognition memory may be driven differentially by familiarity and by recollection (Eichenbaum, Yonelinas, & Ranganath, 2007; Yonelinas, 2002), and the latter is likely to be particularly important for the functional transferability of musical mnemonics. Nevertheless, a familiarity enhancement effect may in itself inform less specific but still highly impactful music-based memory enhancement therapies, as well as provide further support for the benefit of music interventions in general for AD patients.

An additional limitation of our original experiment was its inability to assess false alarms, or false recognition (the incorrect belief that a novel stimulus has been previously encountered), with respect to presentation condition. Because participants studied the lyric stimuli bimodally at encoding (i.e., the lyrics along with sung or spoken audio) but were then tested unimodally (i.e., the lyrics alone), we were able to generate only an overall false alarm rate. That is, we could not assess false alarm rate as a function of sung or spoken test condition. False recognition and memory distortions are common in patients with AD (Mitchell, Sullivan, Schacter, & Budson, 2006; Pierce, Sullivan, Schacter, & Budson, 2005). Minimizing false recognition and other memory distortions is essential to normal memory function (Schacter, 1999); thus, if musical mnemonics reduce false memory, they may have additional practical value in the life of a patient with AD.

The present study was designed to address three main goals arising from the results and limitations of our 2010 study. First, we sought to determine whether the benefit of musical mnemonics on basic lyric recognition in patients with AD also extends to content memory. That is, if an AD patient studies sung lyrics designed specifically to enhance new learning of relevant IADL-related material (what we will call functional musical mnemonics), can the content information encompassed by those lyrics be retrieved at a later time? Specifically, we tested both general and specific content memory in order to assess the utility of these functional musical mnemonics. Based on our previous finding and on evidence that at least some forms of musical memory may be preferentially spared by the degenerative effects of AD (see Baird & Samson, 2009, for review; Hsieh, Hornberger, Piguet, & Hodges, 2011; Vanstone & Cuddy, 2010), we hypothesized that both general and specific content information would be better remembered for lyrics studied with a sung recording than for lyrics studied with a spoken recording.

Second, we were interested in understanding music-based memory enhancement in the context of the familiarity/recollection dual-process model of recognition memory. As noted, the ability of music to enhance familiarity, recollection, or both will necessarily affect the scope of its therapeutic applications. Although our paradigm of general and specific content questions is novel, it shares characteristics with many dual-process models of recognition memory (see Yonelinas, 2002 for review). In a standard dual-process model, familiarity is often illustrated by the experience of recognizing a person’s face as generally familiar, whereas recollection encompasses the ability to remember specific qualitative information such as the name of that person and where he or she was first encountered. Analogously, we sought to isolate familiarity in the present experiment by reducing lyrics to their most general content form (i.e., as the main topic of a lyric, or its “face”). The retrieval of additional information about the context in which that main lyric topic was encountered – the specific content of the musical mnemonic – may involve familiarity as well, but relies predominantly on recollection. By testing general (i.e., familiarity-based) and specific (i.e., recollection-based) content memory separately, we hoped to examine the relative benefit of musical mnemonics across each of these components of recognition memory. Thus, if music affects the familiarity and recollection components of recognition memory independently, we predicted a dissociation of performance on the two content memory tasks.

Furthermore, while healthy older adults exhibit impaired recollection relative to familiarity (Craik & Jennings, 1992; Light, 1991), patients with even mild AD demonstrate nearly complete degradation of recollection (Ally, Gold, & Budson, 2009; Ally, McKeever, Waring, & Budson, 2009). As a result, we predicted an interaction between the benefit of music and group for recollection-based memory, such that any benefit of music on specific content memory would be more pronounced in healthy older adults than in AD patients. Correspondingly, we predicted that any benefit of music on familiarity-based general content memory would be of similar magnitude across subject groups.

Our third goal for the present study was to compare the rate of false recognition for sung and spoken stimuli. Unlike our previous experiment, in which the test was independent of encoding condition, we tested participants with an audio-based recognition task that included both sung and spoken stimuli. We predicted lower rates of false recognition for sung stimuli and thus expected the benefit of musical encoding to be more pronounced in the current paradigm for both patients with AD and healthy older adults. We assessed this benefit using Pr, a measure of discrimination that accounts for false recognition (Snodgrass & Corwin, 1988). Furthermore, we hypothesized that stimulus condition would affect participants’ response bias, that is, their overall tendency to rate items as studied or unstudied. Participants in tests of recognition memory who respond “yes, that item was studied” disproportionately often are considered to have a liberal response bias, whereas participants who respond “yes” less often are considered to have a conservative response bias.

2. Methods

2.1 Participants

Twelve patients with a clinical diagnosis of probable AD and 17 healthy older adults participated in this experiment. Patients with probable AD (MMSE: M = 24.67, SD = 3.45; MOCA: M = 14.50, SD = 5.83) met the criteria outlined by the National Institute of Neurological and Communicative Disorders and Stroke-Alzheimer’s Disease and Related Disorders Association (NINCDS-ADRDA; McKhann, Drachman, Folstein, & Katzman, 1984). Healthy older adults were defined as demonstrating no cognitive impairment on a standardized neuropsychological test battery (Table 1) and were excluded if they had first-degree relatives with a history of AD or other neurodegenerative disorders or dementias. Participants with AD were recruited from the clinical populations of the Boston University Alzheimer’s Disease Center and the VA Boston Healthcare System, both in Boston, MA, and The Memory Clinic in Bennington, VT. Healthy controls were recruited from community postings in the Greater Boston area or were the spouses of the AD patients who participated in the study. Any participant was excluded if he or she had a self-reported history of psychiatric illness, alcohol or drug abuse, cerebrovascular disease, traumatic brain injury, and/or uncorrected vision or hearing problems.

Table 1.

Demographic and standard neuropsychological test data by group.

| | OC | AD |
|---|---|---|
| Gender | 6M/6F | 6M/6F |
| Age | 78.63 (8.77) | 81.17 (4.02) |
| Years of Education | 15.50 (2.50) | 14.17 (3.33) |
| Years Formal Musical Experience | 1.83 (5.73) | 1.33 (2.71) |
| Years Informal Musical Experience | 1.00 (2.37) | 0.75 (1.76) |
| MMSE | 28.91 (1.14) | 24.67 (3.45)** |
| MOCA | 25.67 (2.53) | 14.50 (5.83)** |
| CERAD | | |
| Immediate | 18.45 (2.94) | 10.92 (3.90)** |
| Delayed | 5.09 (1.64) | 1.33 (1.56)** |
| Recognition | 9.55 (0.52) | 5.92 (2.57)** |
| Trails-B | 67.91 (26.38) | 247.67 (82.79)** |
| FAS | 53.27 (10.34) | 25.67 (10.18)** |
| CAT | 51.64 (9.73) | 20.75 (10.44)** |
| BNT-15 | | |
| No Cue | 14.27 (1.10) | 11.42 (3.15)* |
| Semantic Cue | - | 0.08 (0.29) |
| Phonemic Cue | 0.55 (1.04) | 2.42 (1.62) |

Notes: Values are means, with standard deviations in parentheses. OC = healthy older adults; AD = patients with Alzheimer’s disease; MMSE = Mini Mental State Examination (Folstein, Folstein, & McHugh, 1975); MOCA = The Montreal Cognitive Assessment (Nasreddine et al., 2005); CERAD = CERAD Word List Memory Test (J.C. Morris et al., 1989); Trails-B = Trail Making Test Part B (Adjutant General’s Office, 1944); FAS and CAT = Verbal Fluency (Monsch et al., 1992); BNT-15 = 15-item Boston Naming Test (Mack, Freed, Williams, & Henderson, 1992). Musical experience was defined as having any formal instrument or voice training and was self-reported by the participant. Significant between-group differences: * p < .05; ** p < .005.

Each participant completed a neuropsychological test battery in a 55-minute session either directly following the experimental session or on a different day; test data are presented in Table 1. Three control subjects were excluded because of overall impaired performance on these tests and two control subjects were excluded because of experimenter error, leaving 12 participants in each group for analysis.

Independent-samples t-tests revealed no significant differences between groups in age [t(22) = .838, p = .415], years of education [t(22) = 1.109, p = .280], or self-reported years of formal [t(22) = .491, p = .788] or informal [t(22) = .479, p = .773] musical experience. Formal musical experience was defined as any past or present musical training that required regular professional lessons (e.g., instrument lessons) and informal musical experience was defined as any other past or present regular musical engagement other than passive listening (e.g., singing in a church choir).

The human subjects committees of the VA Boston Healthcare System and the Boston University School of Medicine approved this study, and written informed consent was obtained from all participants or, where appropriate, their caregivers (i.e., legally authorized representatives). Participants were compensated $10/hr for their participation.

2.2 Stimuli

Stimuli were derived from the four-line excerpts of 80 unfamiliar children’s songs used in our 2010 study (Simmons-Stern et al., 2010) and originally gathered from the KIDiddles online children’s music database (http://www.kididdles.com). For the present experiment, novel lyrics related to common instrumental activities of daily living (IADLs) and other functions relevant for daily life were written to accompany each musical song excerpt. Content was generated by first selecting 40 IADL-related objects (e.g., “pills”), each along with two actions commonly associated with that object (e.g., “take the pills” and “fill the pillbox”). For each object-action content item, we selected one of the original children’s song excerpts and rewrote its lyrics based on the new content item. This resulted in two songs per object whose general content was the same (e.g., “pills”) but whose specific action content was distinct (e.g., Song 1: “Fill the pillbox with your pills/ but be sure they do not spill/ Monday’s pill in Monday’s spot/ if you don’t they may be lost”; Song 2: “When it is breakfast time/ take the pills take the pills/ be sure to take yours not mine/ so take your pills”). As in this example, lyrics were written to include a mandate related to their topic action so that they could serve as “functional” mnemonic devices with a specific testable message. The melody, rhyme scheme, meter, and general syntactic structure of the original lyrics were preserved to the best of our ability when creating these novel lyrics. The final stimulus set consisted of 40 pairs of songs (80 songs total).

A sung and a spoken version of each of the 80 novel lyrics were created in Apple’s Logic Pro 8 (Version 8.0.2; Apple Inc.). A 19-year-old female vocalist, the same vocalist as in the 2010 experiment, recorded all sung and spoken tracks. Spoken tracks were recorded in a monotone (on G5, at approximately 780 Hz, but without any pitch correction), with as little vocal inflection as possible, and at the same overall rate as the sung tracks, such that the sung and spoken versions of each set of lyrics had equal durations. The sung version was recorded first so that the vocalist could match her speech to the rate of the sung version. The vocalist added natural pauses to the spoken versions such that the total duration of the spoken version equaled the total duration of the sung version. In cases where the pauses in the spoken version became unnatural or obvious, the sung version was slowed down to accommodate the spoken version. Multi-track instrumental MIDI recordings for the songs were obtained with permission from KIDiddles, and the sung version of each set of lyrics consisted of the sung vocal track accompanied by these instrumentals. To maximize clarity and ease of listening, two versions of each sung track were created, one in the vocalist’s upper register and one in her lower register. These “high” and “low” sung versions were in the same key, either one or two octaves apart. We recruited a cohort of healthy younger and older adults to screen the two versions of each song and indicate which version they preferred. The results of this preference screening were used to guide final version selection for the experiment.

For counterbalancing purposes, the 80 songs were divided into four lists matched, in order of priority, for duration (M = 26.65 s, SD = 7.48 s), tempo (M = 95.8 bpm, SD = 27.5), number of words (M = 24.19, SD = 5.02), Flesch-Kincaid Grade Level (i.e., reading comprehension grade level; M = 8.87, SD = 2.51), and Flesch Reading Ease, a related measure of text readability (M = 72.68, SD = 10.67). Because the lyric content was organized into pairs of actions associated with each of 40 objects, songs were also divided into lists based on content pairs. Thus, two of the four matched lists contained one action content item for each of the 40 objects (e.g., the “Fill your pillbox” song), while the other two lists contained the paired action content item for each object (e.g., the “Take the pills” song). List presentation was arranged such that subjects studied only one of the two songs associated with each object, and presentation condition was counterbalanced across subjects so that each song appeared an equal number of times in each condition.
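The list and counterbalancing constraints above can be illustrated with a short Python sketch. It is a hypothetical reconstruction rather than the procedure actually used: the text does not say which objects fall in which list, so the sketch assumes that lists 1 and 2 hold one action (labeled "A") for the first and second halves of the objects, lists 3 and 4 hold the paired action ("B") for the same halves, and each study version pairs two complementary lists with one sung and one spoken. The function name `build_versions` and the labels "A" and "B" are illustrative only.

```python
def build_versions(n_objects=40):
    """Hypothetical counterbalancing sketch returning four study versions.

    Each version maps a song, written as (object_index, action_label), to its
    encoding condition ("sung" or "spoken"). Songs absent from a version are
    unstudied in that version.
    """
    half = n_objects // 2
    # Assumed list contents: two lists per action, each covering half the objects.
    lists = {
        1: [(obj, "A") for obj in range(half)],
        2: [(obj, "A") for obj in range(half, n_objects)],
        3: [(obj, "B") for obj in range(half)],
        4: [(obj, "B") for obj in range(half, n_objects)],
    }
    versions = []
    # Lists 1+2 (or 3+4) together cover every object exactly once,
    # so a participant studies only one song per object.
    for first, second in [(1, 2), (3, 4)]:
        # Swap which list is sung so every song appears in both conditions.
        for sung_list, spoken_list in [(first, second), (second, first)]:
            version = {song: "sung" for song in lists[sung_list]}
            version.update({song: "spoken" for song in lists[spoken_list]})
            versions.append(version)
    return versions


versions = build_versions()
for version in versions:
    studied_objects = {obj for obj, _ in version}
    n_sung = sum(cond == "sung" for cond in version.values())
    print(len(version), len(studied_objects), n_sung)  # prints 40 40 20 for each version
```

Under these assumptions, each of the 80 songs is studied once sung and once spoken across the four versions and is unstudied in the other two, which is one way to meet the requirement that each song appear an equal number of times in each condition.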

In addition to the 40 pairs of object-action content items for which lyrics were generated, we created a set of 40 similar object-action pairs that had no lyrics associated with them. These “dummy” content items were used in the content recognition tests outlined below.

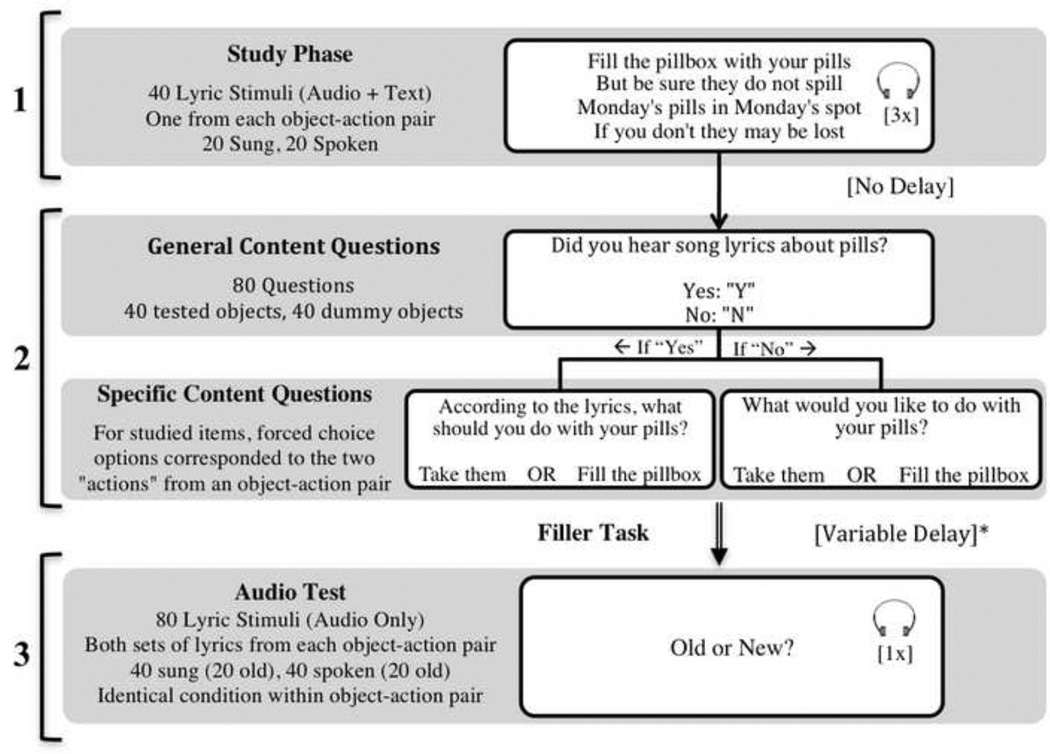

2.3 Design and Procedure

Participants were tested individually in a single session lasting approximately one and a half hours. Stimuli were presented on Dell laptop computers via E-Prime software, version 2.0 (Psychology Software Tools Inc.; http://www.pst-net.com/eprime). During the study phase, the lyrics to 40 songs were presented visually to all participants. As in Simmons-Stern et al. (2010), the visual presentation of the lyrics served to equalize intelligibility across the sung and spoken conditions. Only one set of lyrics (i.e., one action content item, for example, the “Fill the pillbox” song) was studied for each of the 40 objects, such that one action content item for each object remained unstudied (in this case, the “Take the pills” song). The visually presented lyrics contained no punctuation, were center justified, and remained on the screen for the duration of stimulus presentation. Twenty of the song lyrics were accompanied by their corresponding sung recording and 20 by their spoken recording, and stimuli were presented in random order with respect to presentation condition. Each recording was played three times consecutively while the lyrics remained on the screen. Audio recordings were presented via over-ear Audio Technica ATH-M30 headphones (http://www.audio-technica.com), and participants were allowed to adjust the volume to a comfortable and clearly audible level. The minimum selected headphone volume resulted in stimulus presentation at between 50 and 55 decibels, A-weighted (dBA), and the maximum selected volume resulted in presentation at between 70 and 75 dBA. Participants were informed that their memory for the lyrics would be tested and, after each stimulus presentation, were asked to rate how much they liked the song lyrics on a five-point Likert scale.

Immediately following the study phase of the experiment, participants answered two questions about each of 80 object items, 40 of which were studied and 40 of which were unstudied “dummy” object items, in order to assess both general and specific content memory. For each item, the participant was first asked whether they had heard a set of lyrics about the object (e.g., “Did you hear song lyrics about pills?”). This general content question triggered one of two subsequent specific content questions. If a participant responded “yes” to the general content question, they were then asked what the lyrics had instructed them to do (e.g., “According to the lyrics, what should you do with your pills?”). If a participant responded “no,” they were asked to make a hypothetical preference choice (e.g., “What would you like to do with your pills?”). In both cases, participants were given a choice of two actions, and these actions were always the same for a given object item regardless of the general content response or its accuracy. For example, if a participant studied the “Fill the pillbox” song lyrics yet responded that they had not heard a set of lyrics about pills, they were asked, “What would you like to do with your pills?” and given the choices, “Fill the pillbox OR Take them.” Similarly, if that participant responded that they had heard a set of lyrics about pills, they were asked, “According to the lyrics, what should you do with your pills?” and given the same choices, “Fill the pillbox OR Take them.” For studied object items, one of the action options corresponded to the set of studied lyrics and was the correct response, while the other corresponded to the unstudied set of lyrics for that object and was incorrect. For dummy object items, the forced-choice specific content questions were similar in form to those for studied object items but, of course, had no correct answer.
All general and specific content test questions were presented visually on the computer screen without any audio.
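The branching structure of the content memory test can be summarized in code. The sketch below is illustrative only: the function names and outcome labels are hypothetical, and it encodes just what the procedure states, namely that the general answer selects the wording of the follow-up question, that the two action choices are the same either way, and that the specific answer can be scored only for studied objects.

```python
def specific_prompt(obj, said_heard):
    """Return the follow-up question triggered by the general content answer."""
    if said_heard:
        return f"According to the lyrics, what should you do with your {obj}?"
    return f"What would you like to do with your {obj}?"


def score_item(studied_action, said_heard, chosen_action):
    """Score one object item (hypothetical scoring sketch).

    studied_action is the action from the studied lyrics, or None for a dummy
    (never-studied) object. Returns (general_outcome, specific_correct), where
    specific_correct is None for dummy items because they have no correct answer.
    """
    studied = studied_action is not None
    general_outcome = {
        (True, True): "hit",
        (True, False): "miss",
        (False, True): "false alarm",
        (False, False): "correct rejection",
    }[(studied, said_heard)]
    # The same two choices are offered regardless of the general answer,
    # so a studied item can be scored even after a general "no" (a miss).
    specific_correct = chosen_action == studied_action if studied else None
    return general_outcome, specific_correct


print(specific_prompt("pills", False))  # What would you like to do with your pills?
print(score_item("Fill the pillbox", False, "Fill the pillbox"))  # ('miss', True)
```

Keeping the general and specific outcomes separate in this way is what allows specific content accuracy to be tabulated both overall and split by whether the general question was a hit or a miss.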

Following the content memory test, participants took a short break whose duration was calculated separately for each participant, such that a total of 40 minutes elapsed between the end of the study phase and the beginning of the second test phase. During the break, participants completed either a brief, unrelated experiment involving pictures or a math filler task.

The last phase of the experiment consisted of a recognition test for sung and spoken audio clips. Participants were presented with the audio recordings for the 40 studied stimuli and their 40 unstudied paired items, randomly intermixed, and asked to make an old/new recognition judgment for each. Studied stimuli were presented in their original sung or spoken presentation condition, and each unstudied stimulus was presented in the same condition as its corresponding studied stimulus. For example, if a participant heard the sung version of the “Fill the pillbox” song during the study phase, they heard the sung versions of both the “Fill the pillbox” and “Take the pills” songs during the audio recognition test. All clips were presented in random order without any visual component, and each was played only once. Participants were required to listen to each entire audio clip before making an old/new judgment.

3. Results

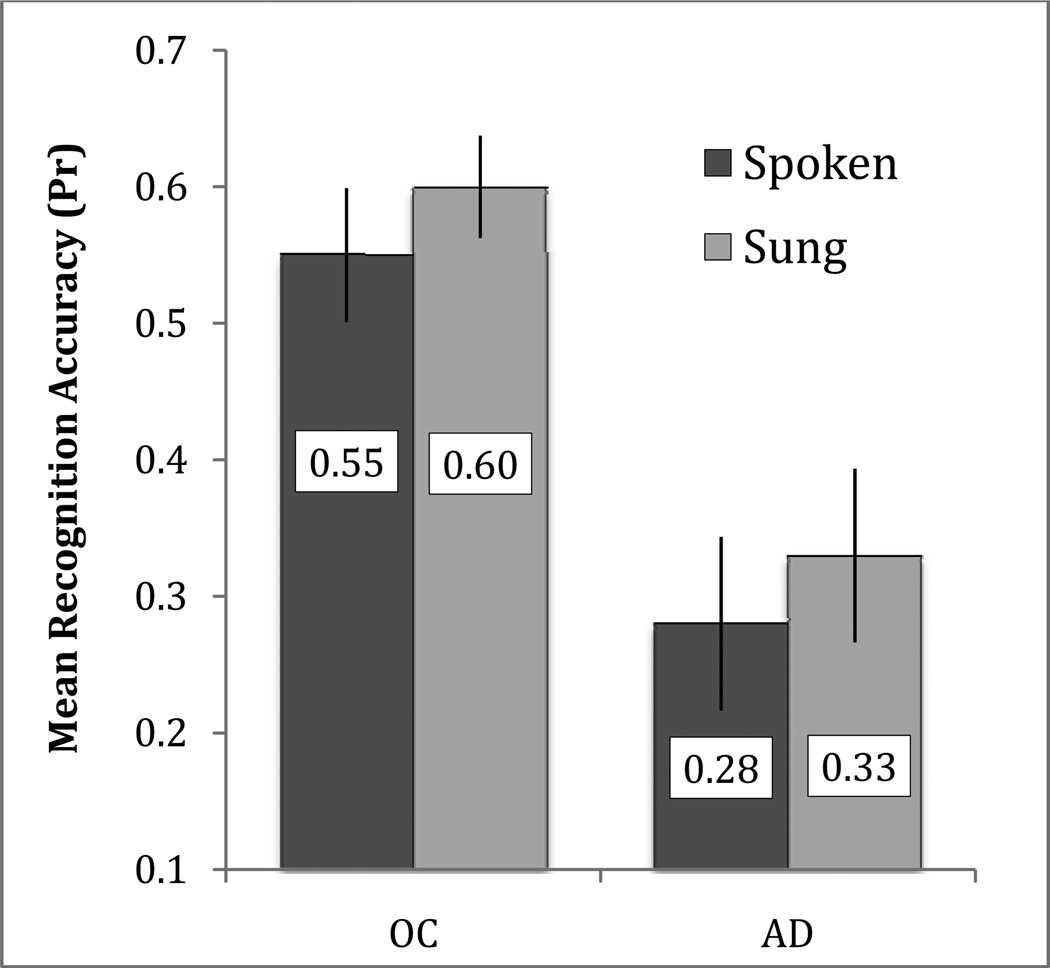

To investigate our first two hypotheses, that musical encoding would enhance memory for functional content information and that performance might dissociate between tests of general and specific content memory, we analyzed the effect of encoding condition on content memory test results. For the general content questions, we assessed performance using the recognition accuracy measure Pr (% hits − % false alarms; Snodgrass & Corwin, 1988). We performed a repeated-measures ANOVA with the factors of Group (AD, healthy older adults) and Condition (sung, spoken). The ANOVA revealed main effects of Group [F(1,22) = 13.49, p = .001, partial η2 = .380] and Condition [F(1,22) = 4.82, p = .039, partial η2 = .180]. The effect of Group was present because healthy older adults performed better on the recognition task than patients with AD, and the effect of Condition was present because Pr was higher for the sung condition (M = 0.46, SE = 0.037) than for the spoken condition (M = 0.41, SE = 0.040) across groups (Figure 2). There was no Group by Condition interaction [F(1,22) < 1].
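As a worked illustration of Pr, the sketch below computes it from raw response counts. The counts are invented, not data from this study, and the function name `pr` is hypothetical. One further assumption needs flagging: because dummy objects were never studied in either condition, the text does not state how a condition-specific false alarm rate was obtained for the general content question, so the sketch simply applies a single dummy-item false alarm rate to both conditions.

```python
def pr(n_hits, n_old, n_false_alarms, n_new):
    """Discrimination index Pr = hit rate - false alarm rate (Snodgrass & Corwin, 1988)."""
    hit_rate = n_hits / n_old
    false_alarm_rate = n_false_alarms / n_new
    return hit_rate - false_alarm_rate


# Hypothetical counts for one participant (not data from this study):
# "yes" to 16 of 20 sung objects and 14 of 20 spoken objects,
# and "yes" (a false alarm) to 10 of 40 dummy objects.
pr_sung = pr(16, 20, 10, 40)    # 0.80 - 0.25, approximately 0.55
pr_spoken = pr(14, 20, 10, 40)  # 0.70 - 0.25, approximately 0.45
```

Subtracting the false alarm rate is what distinguishes Pr from a raw hit rate: a participant who answers “yes” to every object would reach a hit rate of 1.0 but a Pr of 0.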

Figure 2.

Mean recognition accuracy (Pr; %hits - %false alarms) on the general content question for the sung and spoken conditions in healthy older adults (OC) and patients with Alzheimer's disease (AD). Error bars represent one standard error of the mean.

For the two-option forced-choice specific content questions, we first assessed performance as total percent correct collapsed across question type (i.e., we considered specific content question responses for all studied object items regardless of whether a participant had correctly identified an object as studied during the preceding general content question). A 2x2 ANOVA with the factors of Group and Condition revealed a main effect of Group [F(1,22) = 17.10, p < .001, partial η2 = .437], but no effect of Condition [F(1,22) = 0.09, p = .768] and no Group by Condition interaction [F(1,22) = 0.201, p = .658; Table 3, Combined]. The effect of Group was present because healthy older adults performed better than patients with AD. Additional analyses of the specific content questions were performed separately by question type (i.e., according to whether the preceding general content question had been answered correctly); however, these analyses yielded no effects of Condition and no interactions with Condition (see Table 3 for details).

Table 3.

Forced Choice Specific Content Question Percent Correct by Group

| | OC Spoken | OC Sung | AD Spoken | AD Sung |
|---|---|---|---|---|
| General Question Hit | 0.88 | 0.87 | 0.68 | 0.71 |
| General Question Miss | 0.78 | 0.68 | 0.67 | 0.69 |
| Combined | 0.86 (0.11) | 0.83 (0.06) | 0.68 (0.14) | 0.68 (0.15) |

Notes: Mean accuracy (proportion correct) on the two-alternative forced-choice specific content questions, where 0.50 is chance, for studied object content items only (dummy content items had no correct response). Data in the General Question Hit row represent response accuracy for specific content questions of the form “According to the lyrics, what should you do with [object]?” triggered by a correct recognition of the studied object in the preceding general content question. Data in the General Question Miss row represent response accuracy for specific content questions of the form “What would you like to do with [object]?” triggered by an incorrect general content question response to studied items. Because the number of items in the General Question Hit and General Question Miss bins varies by subject with individual general content question hit rate, these accuracy data are weighted means, calculated for each group as the total number of correct responses divided by the total number of items in that bin. Data in the Combined row represent accuracy collapsed across the General Question Hit and General Question Miss bins and thus did not require weighting. Standard deviations are presented in parentheses. OC = healthy older adults; AD = patients with Alzheimer’s disease.
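The weighting described in the table notes can be made concrete with a small sketch contrasting a pooled (weighted) mean with an unweighted mean of per-participant proportions. The data and function names are hypothetical; the point is that pooling lets participants with more items in a bin count for more, and a participant with no items in a bin simply contributes nothing instead of producing an undefined proportion.

```python
def pooled_accuracy(counts):
    """Weighted mean: total correct responses / total items across participants.

    counts is a list of (n_correct, n_items) pairs, one per participant, for a
    single bin (e.g., General Question Hit trials in the sung condition).
    """
    total_correct = sum(n_correct for n_correct, _ in counts)
    total_items = sum(n_items for _, n_items in counts)
    return total_correct / total_items


def mean_of_proportions(counts):
    """Unweighted alternative: average each participant's own proportion correct."""
    proportions = [n_correct / n_items for n_correct, n_items in counts if n_items > 0]
    return sum(proportions) / len(proportions)


# Hypothetical bin: one participant contributes 10 items, another only 2.
example = [(9, 10), (1, 2)]
pooled = pooled_accuracy(example)         # 10 / 12, approximately 0.83
unweighted = mean_of_proportions(example)  # (0.9 + 0.5) / 2, approximately 0.70
```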

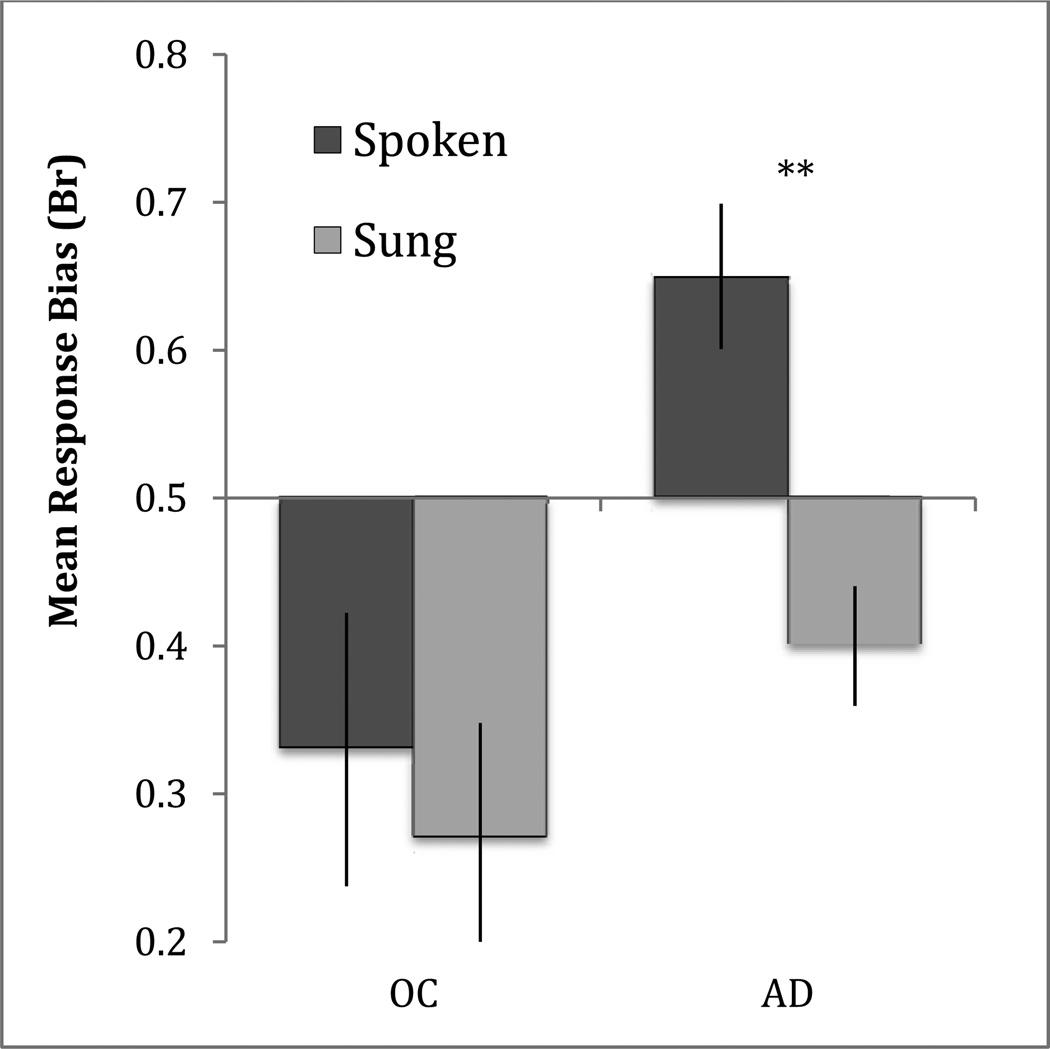

To investigate our third hypothesis, that encoding condition would reduce false recognition and affect discrimination and response bias, we assessed the results from the audio recognition test. These data were analyzed first via recognition accuracy, using Pr, and response bias, using the associated measure Br [false alarm rate/(1 − Pr); Snodgrass & Corwin, 1988]. Br values between 0.5 and 1.0 indicate a liberal response bias, with 1.0 being the most liberal bias (i.e., all items rated as studied) and 0.5 a neutral bias; values between 0.0 and 0.5 indicate a conservative response bias, with 0.0 being the most conservative bias (i.e., all items rated as novel). We performed separate repeated-measures ANOVAs with the factors of Group and Condition for each measure. The ANOVA for Pr revealed a main effect of Group [F(1,22) = 12.33, p = .002, partial η2 = .359], but no effect of Condition [F(1,22) = 2.160, p = .156] and no Group by Condition interaction [F(1,22) = 2.160, p = .156]. The effect of Group was present because healthy older adults performed better than patients with AD. The ANOVA for Br revealed main effects of Group [F(1,22) = 6.90, p = .015, partial η2 = .239] and Condition [F(1,22) = 12.56, p = .002, partial η2 = .363]. The effect of Group was present because patients were more liberal in their overall response bias than were healthy older adults, and the effect of Condition was present because response bias was more conservative for sung stimuli than for spoken stimuli across groups. The ANOVA also revealed a Group by Condition interaction [F(1,22) = 4.72, p = .041, partial η2 = .177]. To investigate the interaction, paired-sample t-tests were performed. These revealed that Br was significantly more conservative in the sung condition than in the spoken condition for AD patients [t(11) = 4.28, p = .001] but not for healthy older adults [t(11) = 0.92, p = .379].
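The relationship between Pr and Br can be checked against the boundary cases given above with a minimal sketch. It assumes raw hit and false alarm proportions as input; the text does not say whether a correction for extreme rates (such as the one discussed by Snodgrass and Corwin) was applied, so none is applied here. The function name `pr_br` is hypothetical.

```python
def pr_br(hit_rate, false_alarm_rate):
    """Two-high-threshold indices (Snodgrass & Corwin, 1988), uncorrected rates.

    Pr = H - FA (discrimination); Br = FA / (1 - Pr) (response bias), where
    Br > 0.5 is liberal, Br = 0.5 is neutral, and Br < 0.5 is conservative.
    """
    pr = hit_rate - false_alarm_rate
    if pr >= 1.0:
        # Perfect discrimination leaves no room for guessing, so Br is undefined.
        raise ValueError("Br is undefined when Pr = 1")
    br = false_alarm_rate / (1.0 - pr)
    return pr, br


print(pr_br(1.0, 1.0))  # (0.0, 1.0): every item called "studied", most liberal
print(pr_br(0.5, 0.5))  # (0.0, 0.5): neutral bias
print(pr_br(0.0, 0.0))  # (0.0, 0.0): every item called "novel", most conservative
```

Because Br divides the false alarm rate by the proportion of items not accounted for by discrimination, a lower false alarm rate at the same Pr yields a more conservative Br, which is the pattern the text reports for sung stimuli in the AD group.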

Finally, we assessed performance in the audio recognition test by examining hit and false alarm rates. We performed separate repeated-measures ANOVAs with the factors of Group and Condition for hit rates and false alarm rates. The ANOVA for hit rates revealed a main effect of Condition [F(1,22) = 24.67, p < .001, partial η2 = .529], but no effect of Group [F < 1] and no Group by Condition interaction [F < 1]. The effect of Condition was present because both groups had higher hit rates for spoken than for sung stimuli. The ANOVA for false alarm rates revealed main effects of Group [F(1,22) = 21.35, p < .001, partial η2 = .492] and Condition [F(1,22) = 5.48, p = .029, partial η2 = .199]. The effect of Group was present because AD patients had significantly more false alarms than healthy older adults, while the effect of Condition was present because false alarm rates were lower for the sung condition than for the spoken condition. The ANOVA also revealed a Group by Condition interaction [F(1,22) = 8.27, p = .009, partial η2 = .273]. To further investigate the interaction, paired-sample t-tests were performed. These revealed that false alarms were substantially reduced in the sung condition compared to the spoken condition for AD patients [t(11) = 2.65, p = .023], but there was no difference in false alarms between conditions for healthy older adults [t(11) = 1.48, p = .166].
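The follow-up paired-sample t-tests compare each participant’s scores in the two conditions, which is why 12 participants per group yield 11 degrees of freedom. The sketch below computes the statistic with the standard library only; the input values are invented for illustration and the function name `paired_t` is hypothetical. In practice, `scipy.stats.ttest_rel` returns the same statistic together with its two-sided p value.

```python
import math
import statistics


def paired_t(condition_a, condition_b):
    """Paired-samples t statistic and degrees of freedom.

    t = mean(d) / (sd(d) / sqrt(n)), where d are the within-participant
    differences and sd is the sample (n - 1) standard deviation.
    """
    if len(condition_a) != len(condition_b):
        raise ValueError("conditions must have one value per participant")
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical false alarm rates for three participants (not study data).
spoken = [0.40, 0.35, 0.50]
sung = [0.30, 0.30, 0.35]
t, df = paired_t(spoken, sung)  # differences 0.10, 0.05, 0.15 give t of about 3.46, df = 2
```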

4. Discussion

We have shown previously that for patients with AD, but not healthy older adults, music enhances memory for associated verbal information (i.e., lyrics) in a basic test of recognition memory (Simmons-Stern et al., 2010). A primary goal of the present experiment was to determine the extent to which this mnemonic benefit of musical encoding extends to memory for the content of the lyrics. The results support our hypothesis that general content information studied in sung lyrics would be better remembered than that studied in spoken lyrics. This benefit of musical encoding for general content memory was found for both AD patients and healthy older adults. Notably, however, musical encoding enhanced memory only for this general content information; memory for specific content information did not benefit from musical encoding. Furthermore, because musical encoding did not enhance memory for specific content information in either group, we found no support for our hypothesis of an interaction between mnemonic benefit and group.

These results may be explained by a dual-process model of recognition memory in which familiarity (the nonspecific sense of having previously experienced a stimulus) and recollection (the retrieval of qualitative, source-specific information associated with the experience of a stimulus) contribute differentially to recognition (see Eichenbaum et al., 2007, for a review). To answer the general content questions of the present experiment correctly, participants may have relied mainly on familiarity. Conversely, to answer the specific content questions correctly, participants had to use recollection to retrieve detailed information about the context in which they had previously encountered an object content item. For example, a participant may have experienced a vague feeling of familiarity with the “pill” item at test and as a result answered the general content question correctly, but may not have been able to recollect the specific action associated with the pill in the song they studied. Within this framework, it is possible to account for the preferential mnemonic benefit of music for general but not specific content questions.
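The account above can be made concrete with the independence assumption common in dual-process models (Yonelinas, 2002), under which an item is endorsed if it is recollected or, failing recollection, if it is familiar. The Python sketch below is illustrative only: the parameter values (recollection probability `R`, familiarity probability `f`, and a guessing rate `GUESS` for the specific question) are hypothetical rather than estimates from this study, and treating the specific content question as answerable only through recollection or guessing follows the interpretation given in the text.

```python
def general_correct(r: float, f: float) -> float:
    """General content question: correct if the item is recollected or,
    failing that, familiar (recollection and familiarity independent)."""
    return r + (1 - r) * f


def specific_correct(r: float, guess: float) -> float:
    """Specific content question: correct only through recollection,
    otherwise by guessing among the response options."""
    return r + (1 - r) * guess


# Hypothetical parameters: music raises familiarity but leaves recollection unchanged.
R, GUESS = 0.30, 0.25
for label, f in [("spoken", 0.50), ("sung", 0.60)]:
    print(f"{label}: general = {general_correct(R, f):.3f}, "
          f"specific = {specific_correct(R, GUESS):.3f}")
```

Raising only `f` increases general content accuracy, while specific content accuracy, which does not depend on `f` in this sketch, stays fixed, mirroring the pattern described above.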

Our results therefore suggest that music may affect familiarity to a greater extent than recollection. While it is unlikely that the distributed neural mechanisms associated with music perception interact differentially with the specific medial temporal lobe (MTL) structures involved in familiarity and recollection (Eichenbaum et al., 2007; Haskins, Yonelinas, Quamme, & Ranganath, 2008; Ranganath et al., 2004; Yonelinas et al., 2005), we propose that musical encoding may create a robust, albeit nonspecific, representation outside of the MTL (Koelsch, 2011; Limb, 2006; Peretz et al., 2009) that preferentially facilitates familiarity but not recollection. Further support for this suggestion comes from evidence that familiarity may be subserved by a distributed, multimodal neural network (Curran & Dien, 2003), and that familiarity for musical stimuli in particular is associated with activation across a number of extra-MTL brain regions, including the left superior and inferior frontal gyri, the precuneus, and the angular gyrus, in addition to MTL structures such as the hippocampus (Plailly, Tillmann, & Royet, 2007). Musical encoding may also benefit MTL-based recognition processes, for example by enhancing attention, as proposed in Simmons-Stern et al. (2010). However, this explanation does not account for the dissociation between familiarity and recollection found in the present study: an attention-enhancement effect at encoding should benefit both recollection, which is most dependent on MTL processes, and familiarity, which may also rely on extra-MTL processes (Yonelinas, 2002) of the sort activated by the perceptual components of musical encoding. Future imaging and behavioral work is needed to test these proposals and to elucidate the neural processes underlying the familiarity-enhancing effect of musical mnemonics.

The proposed dissociation between the effects of music on familiarity and recollection may also explain the group differences found in our previous experiment. For patients with AD, an enhanced familiarity component of recognition memory for sung but not spoken stimuli may have driven the observed benefit of musical encoding, whereas healthy older adults may have relied primarily on their relatively intact recollection during the recognition task and thus showed no benefit from the familiarity-enhancing effect. That is, if music is inherently ill-suited to enhancing recollection, musical mnemonics may not be an effective learning tool for information that can be retrieved through recollection (e.g., in lyric recognition). This account may help clarify the as-yet equivocal body of empirical work on musical mnemonics in healthy individuals (e.g., Racette & Peretz, 2007).

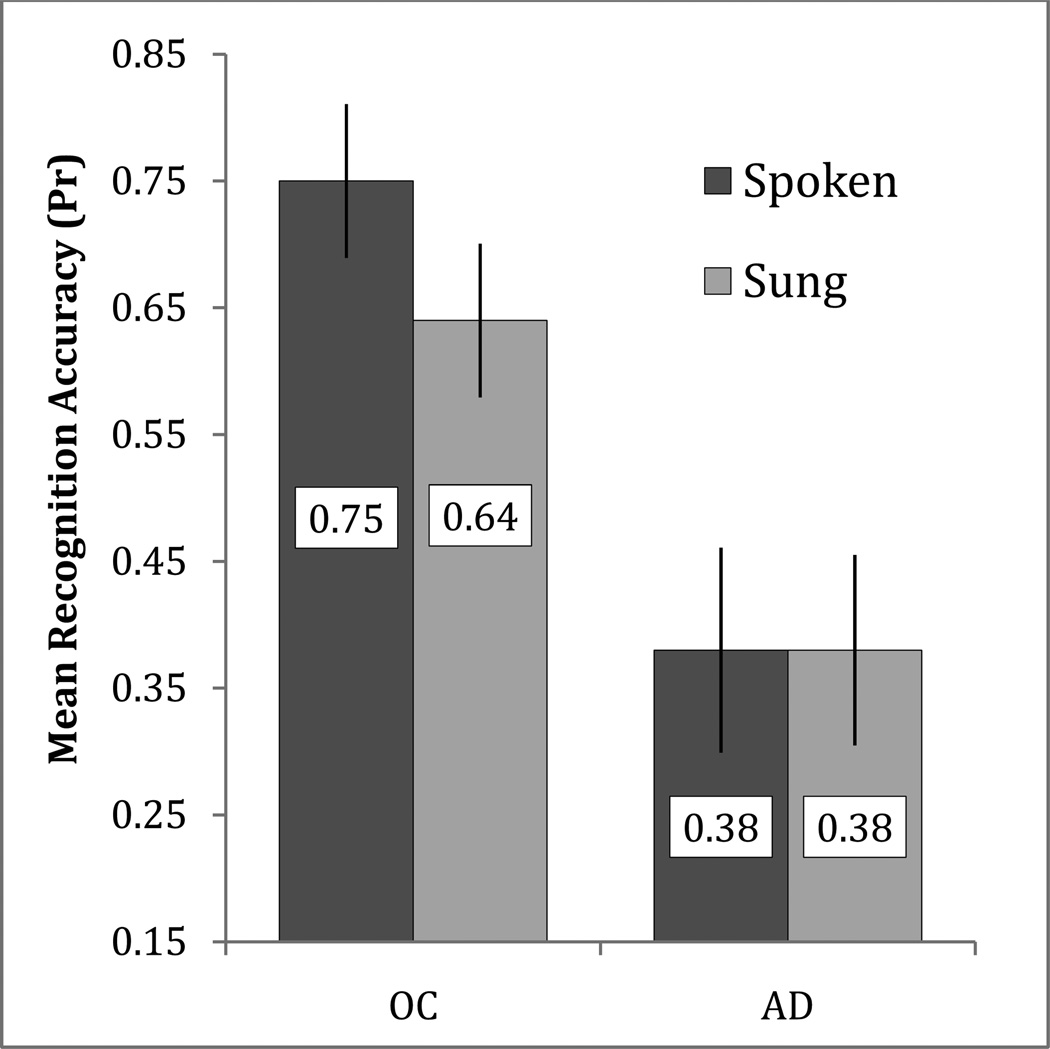

Our hypothesis for the audio recognition test was that patients with AD and healthy older adults would demonstrate lower rates of false recognition for sung as compared to spoken stimuli, and that this effect would improve discrimination (Pr) and affect response bias (Br). The results (Table 4) confirmed that patients with AD were less likely to false alarm (i.e., to incorrectly identify a novel stimulus as “old”) for sung stimuli than for spoken stimuli. Additionally, patients with AD demonstrated a more conservative response bias for sung stimuli than for spoken stimuli. However, contrary to our hypothesis, patients did not show improved discrimination for sung stimuli, because their hit rates were also lower in the sung condition than in the spoken condition. Thus, relative to the spoken condition, sung encoding lowered both hit and false alarm rates by roughly the same amount, producing nearly identical discrimination (Figure 3). Also contrary to our hypothesis, healthy older adults showed only a small, non-significant difference in response bias by condition.
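Under the two-high-threshold model on which Pr and Br are based (Snodgrass & Corwin, 1988), the false alarm rate equals (1 - Pr) × Br and the hit rate equals Pr plus that same quantity. Lowering Br while holding Pr fixed therefore lowers hits and false alarms by the same amount, (1 - Pr) times the change in Br. The Python sketch below (the function name is hypothetical) plugs in the AD group's mean Pr and Br from Table 4; because the table averages per-participant values, the predicted rates only approximate the observed means.

```python
from __future__ import annotations


def predicted_rates(pr: float, br: float) -> tuple[float, float]:
    """Hit and false alarm rates implied by the two-high-threshold model."""
    fa = (1 - pr) * br  # new item not detected as new, then guessed "old" with probability Br
    hit = pr + fa       # old item detected as old, or undetected and guessed "old"
    return hit, fa


# AD group means from Table 4: identical Pr, different Br.
for label, pr, br in [("spoken", 0.38, 0.65), ("sung", 0.38, 0.40)]:
    hit, fa = predicted_rates(pr, br)
    print(f"{label}: hits = {hit:.2f}, false alarms = {fa:.2f}")
```

This prints hit rates of 0.78 and 0.63 and false alarm rates of 0.40 and 0.25, close to the observed 0.79/0.64 and 0.42/0.25: a drop of about 0.155 in both rates, with discrimination unchanged.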

Table 4.

Audio Recognition Test Hits, False Alarms, and Bias by Group

| | OC Spoken | OC Sung | AD Spoken | AD Sung |
|---|---|---|---|---|
| Hits | 0.83 (0.19) | 0.70 (0.20) | 0.79 (0.16) | 0.64 (0.16) |
| False Alarms | 0.05 (0.10) | 0.07 (0.13) | 0.42 (0.25) | 0.25 (0.15) |
| Recognition Accuracy (Pr) | 0.75 (0.21) | 0.64 (0.21) | 0.38 (0.28) | 0.38 (0.26) |
| Response Bias (Br) | 0.33 (0.32) | 0.27 (0.27) | 0.65 (0.17) | 0.40 (0.14) |

Notes: Standard deviations are presented in parentheses. OC = healthy older adults.

Figure 3.

Mean recognition accuracy (Pr; %hits - %false alarms) on the audio recognition test for the sung and spoken conditions in healthy older adults (OC) and patients with Alzheimer's disease (AD). Error bars represent one standard error of the mean. Significant within-group differences: *(p<.05)

We were particularly interested in the Group by Condition interaction observed for response bias, and specifically in the finding that patients with AD were significantly more conservative in their response bias for sung stimuli than for spoken stimuli. We attribute this result to engagement of the distinctiveness heuristic, a decision strategy based on the metacognitive expectation that vivid details of a studied stimulus or stimulus type will be recalled, such that an item lacking those details is judged to be unstudied (Budson, Sitarski, Daffner, & Schacter, 2002; Schacter, Israel, & Racine, 1999). Sung lyrics are distinctive, particularly compared to spoken words. Importantly, most participants reported on the post-experiment questionnaire that they expected sung lyrics to be better remembered than spoken lyrics. We propose that AD patients in the present experiment engaged the distinctiveness heuristic. For our audio test paradigm, this heuristic involves two assumptions: first, that if a sung lyric had been studied, the patient would be sure to remember it at test, and second, that if the patient heard a sung lyric and did not recall it vividly, that lyric must be unstudied. This expectation would work well to reduce false alarms to unstudied sung lyrics, as was found in the present experiment. However, if an AD patient is unable to vividly recall studied lyrics as a result of his or her memory impairment, applying the distinctiveness heuristic would also lower the hit rate for the sung relative to the spoken condition. In the setting of impaired memory, the distinctiveness heuristic may thus have produced both fewer false alarms and fewer hits, making response bias more conservative without increasing discrimination.

Similar patterns of results were observed by Budson, Dodson, Daffner, and Schacter (2005) and Gallo, Chen, Wiseman, Schacter, and Budson (2007), in which AD patients showed a shift toward a more conservative response bias for all “distinctive” picture test items relative to word test items. Given impaired recollection in AD patients and our finding that musical encoding enhances familiarity but not recollection, it is not surprising that patients were unable to use the distinctiveness heuristic to improve discrimination.

Healthy older adults may also have used the distinctiveness heuristic, and by doing so may have impaired their performance in the sung condition. If healthy older adults, like AD patients, expected sung lyrics to produce a more vivid and memorable recollection than spoken lyrics, they may have applied the more stringent criteria associated with the distinctiveness heuristic to sung lyrics at test but not to spoken lyrics. As discussed above, however, the recollection shown by healthy older adults in the content test (the specific content questions) did not differ between sung and spoken lyrics. Our results may therefore reflect a situation in which healthy older adults expected better memory for sung than for spoken stimuli, despite equivalent recollection across conditions. The speculation that healthy older adults applied the distinctiveness heuristic to sung lyrics, through the use of more stringent criteria, is consistent with the observed trend toward a more conservative response bias for sung relative to spoken lyrics. Typically, if memory strength were the same across conditions and only bias changed, overall discrimination would remain the same, as in the results of the AD patients. However, because the baseline false alarm rate of the healthy older adults was so low (essentially at floor), the more stringent criteria associated with the distinctiveness heuristic may have lowered hits but not false alarms, producing a trend toward poorer discrimination for sung lyrics in this group. Future studies could test this speculation by including more detailed measures of participants’ expectations for their performance in the sung and spoken conditions, and by experimentally manipulating false alarms such that the baseline false alarm rate is higher.
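The floor argument can be illustrated with an equal-variance Gaussian signal detection model. This swaps in a different model from the two-high-threshold framework behind Pr and Br, and the memory strength (d' = 2.5) and criterion values are hypothetical, chosen only so that the baseline false alarm rate is near floor. Holding memory strength constant, the stricter criterion assumed for the sung condition removes about 0.09 from the hit rate but less than 0.03 from the false alarm rate, so Pr (hits minus false alarms) declines even though memory is unchanged. Unlike in the two-high-threshold model, Pr is not independent of response bias in this model.

```python
from statistics import NormalDist

PHI = NormalDist().cdf  # standard normal cumulative distribution function


def sdt_rates(d_prime: float, criterion: float) -> tuple:
    """Equal-variance Gaussian model: new items ~ N(0, 1), studied items ~ N(d', 1).

    The observer responds "old" whenever evidence exceeds `criterion`.
    Returns (hit rate, false alarm rate).
    """
    hit = 1 - PHI(criterion - d_prime)
    fa = 1 - PHI(criterion)
    return hit, fa


D_PRIME = 2.5  # hypothetical memory strength, identical for sung and spoken lyrics
for label, c in [("spoken (baseline criterion)", 1.6), ("sung (stricter criterion)", 1.9)]:
    h, f = sdt_rates(D_PRIME, c)
    print(f"{label}: hits = {h:.3f}, false alarms = {f:.3f}, Pr = {h - f:.3f}")
```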

Combined, these findings from the content memory test and the audio recognition test can inform discussion of the practical benefits of music-based memory enhancement and of music-based therapies more generally. While our approach falls short of testing direct functional improvement, it represents a critical step in assessing the utility of future musical mnemonic interventions. First, these results suggest that, at least with the methods investigated in this study, music cannot effectively enhance explicit memory for the kind of specific content information that interventions targeting particular episodic memories or item-specific IADL functioning would require. While future studies using different methods may find that music can enhance such memory, our findings suggest that music may be better suited to enhancing familiarity and metamemorial confidence. These effects of musical mnemonics may benefit more general memory-related functioning, as well as quality of life, depression, agitation, cognitive function, and other factors that are known to benefit from non-mnemonic music interventions.

As one example, consider the case of an AD patient in an assisted living facility who becomes regularly agitated by an inability to remember where he lives. A customized musical mnemonic designed to help encode facts about the facility, when presented on a regular basis, might bring about a number of positive changes for the patient, for example: 1) improved mood and decreased distress from frequent exposure to musical stimuli, 2) enhanced familiarity with the assisted living facility in which he lives, albeit without specific knowledge of details about the facility, and 3) enhanced metamemorial confidence in knowing where he lives, especially if prompted by the musical mnemonic.

Although our study found that under the present experimental conditions musical mnemonics were unable to enhance specific content information, these musical mnemonics were able to improve memory for more general content information in both healthy older adults and patients with AD. We are optimistic that future studies will be able to broaden these findings and translate them into practical methods that can improve the lives of patients with AD.

Figure 1.

1, Study Phase (approx. 30 minutes); 2, Content Test (approx. 20 minutes, self-paced); 3, Audio Test (approx. 15 minutes). Text appeared as depicted in the examples; only the lyrics, object item cue (e.g., “your pills”), and responses varied by trial and subject. Each general content question was followed immediately by a specific content question, as diagrammed. *Note: The time at the end of the Study Phase was recorded, and a filler task was provided following the Content Test such that there was a total delay of 40 minutes between the end of the Study Phase and the beginning of the Audio Test.

Figure 4.

Mean response bias [Br; %false alarms/(1 - Pr)] on the audio recognition test for the sung and spoken conditions in healthy older adult controls (OC) and patients with Alzheimer's disease (AD). Neutral response bias is 0.50; conservative bias is < 0.50; liberal bias is > 0.50. Error bars represent one standard error of the mean. Significant within-group differences: ** (p < .005)

Table 2.

General Content Question Hit and False Alarm Rates by Group

| | OC | AD |
|---|---|---|
| Spoken Hits | 0.74 (0.11) | 0.56 (0.27) |
| Sung Hits | 0.79 (0.09) | 0.61 (0.31) |
| Overall Hits | 0.77 (0.09) | 0.59 (0.28) |
| False Alarms | 0.20 (0.13) | 0.28 (0.24) |

Notes: Standard deviations are presented in parentheses. OC = healthy older adults. Overall hits are reported as the average hit rate (% hits) across stimuli studied sung (sung hits) and spoken (spoken hits). False alarms are the percentage of dummy object items incorrectly identified as studied by the participant.

Highlights.

We examined functional musical mnemonics in patients with AD and healthy older adults.

Patients’ memory for specific content of lyrics was the same whether sung or spoken.

All subjects had better memory for general content of lyrics when sung versus spoken.

Acknowledgements

This research was supported by National Institute on Aging grants R01 AG025815 (AEB), K23 AG031925 (BAA), P30 AG13846 (AEB), and a Department of Veterans Affairs, Veterans Health Administration, VISN 1 Early Career Development Award to RGD. This material is also the result of work supported with resources and the use of facilities at the VA Boston Healthcare System, Boston, MA. We would additionally like to thank our vocalist Anna Miller, and Levi Miller for his help with data processing.

Appendix: Sample Lyrics

1a. “Brush Your Teeth”

When you awake in the morning and go off to sleep

It’s very important to brush all your teeth

The front ones the back ones and even your tongue

Keep everything clean and you’ll never go wrong

1b. “Floss Your Teeth”

For really good hygiene

Try flossing your teeth

Morning and evening

Or after you eat

2a. “Button Your Shirt”

Button up that shirt today

And make sure that it stays

Such a pretty shirt of yours

Should always look that way

2b. “Fold Your Shirts”

At the end of every day

Before you put your shirts away

Don’t forget to fold them tight

It’s good for storing overnight

3a. “Take Your Keys”

Every time you’re leaving

You must take your keys

Doesn’t matter where they go

Just take them with you please

3b. “Put Your Keys on the Hook”

Your keys should not be on the table

Put them on the hook I’ve labeled

Makes them easier to find

When their place has slipped your mind

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

In the absence of an objective measure of auditory acuity, the lack of between-group differences in selected presentation volume [t(22) = 0.74, p = .467] may suggest that there were no significant differences in hearing ability between groups.

References

- Adjutant General’s Office, editor. Army individual test battery. Manual of directions and scoring. Washington, DC: War Department; 1944.

- Ally BA, Gold CA, Budson AE. An evaluation of recollection and familiarity in Alzheimer’s disease and mild cognitive impairment using receiver operating characteristics. Brain and cognition. 2009;69(3):504–513. doi: 10.1016/j.bandc.2008.11.003.

- Ally BA, McKeever JD, Waring JD, Budson AE. Preserved frontal memorial processing for pictures in patients with mild cognitive impairment. Neuropsychologia. 2009;47(10):2044–2055. doi: 10.1016/j.neuropsychologia.2009.03.015.

- Alzheimer’s Association. 2011 Alzheimer’s Disease Facts and Figures. 2011;7(2). doi: 10.1016/j.jalz.2011.02.004.

- Alzheimer’s Disease International. World Alzheimer Report. 2009.

- Baird A, Samson S. Memory for music in Alzheimer’s disease: unforgettable? Neuropsychology review. 2009;19(1):85–101. doi: 10.1007/s11065-009-9085-2.

- Budson AE, Dodson CS, Daffner KR, Schacter DL. Metacognition and false recognition in Alzheimer’s disease: further exploration of the distinctiveness heuristic. Neuropsychology. 2005;19(2):253–258. doi: 10.1037/0894-4105.19.2.253.

- Budson AE, Kowall NW, editors. The Handbook of Alzheimer’s Disease and Other Dementias. Oxford, UK: John Wiley & Sons; 2011.

- Budson AE, Sitarski J, Daffner KR, Schacter DL. False recognition of pictures versus words in Alzheimer's disease: The distinctiveness heuristic. Neuropsychology. 2002;16(2):163–173. doi: 10.1037//0894-4105.16.2.163.

- Budson AE, Solomon PR. Memory Loss: A Practical Guide for Clinicians. Philadelphia: Elsevier Inc; 2011.

- Craik FIM, Jennings JM. Human Memory. In: Craik FIM, Salthouse TA, editors. The handbook of aging and cognition. Hillsdale, NJ: Lawrence Erlbaum Associates Inc; 1992. pp. 51–110.

- Curran T, Dien J. Differentiating amodal familiarity from modality-specific memory processes: An ERP study. Psychophysiology. 2003;40(6):979–988. doi: 10.1111/1469-8986.00116.

- Eichenbaum H, Yonelinas AP, Ranganath C. The medial temporal lobe and recognition memory. Annual review of neuroscience. 2007;30:123–152. doi: 10.1146/annurev.neuro.30.051606.094328.

- Folstein MF, Folstein SE, McHugh PR. Mini-Mental State: A Practical Method for Grading the Cognitive State of Patients for the Clinician. J. Psychiat. Res. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- Foster NA, Valentine ER. The effect of auditory stimulation on autobiographical recall in dementia. Experimental aging research. 2001;27(3):215–228. doi: 10.1080/036107301300208664.

- Gallo DA, Chen JM, Wiseman AL, Schacter DL, Budson AE. Retrieval monitoring and anosognosia in Alzheimer’s disease. Neuropsychology. 2007;21(5):559–568. doi: 10.1037/0894-4105.21.5.559.

- Gaugler JE, Yu F, Krichbaum K, Wyman JF. Predictors of nursing home admission for persons with dementia. Medical care. 2009;47(2):191–198. doi: 10.1097/MLR.0b013e31818457ce.

- Guétin S, Portet F, Picot MC, Pommié C, Messaoudi M, Djabelkir L, Olsen AL, et al. Effect of music therapy on anxiety and depression in patients with Alzheimer’s type dementia: randomised, controlled study. Dementia and geriatric cognitive disorders. 2009;28(1):36–46. doi: 10.1159/000229024.

- Haskins AL, Yonelinas AP, Quamme JR, Ranganath C. Perirhinal cortex supports encoding and familiarity-based recognition of novel associations. Neuron. 2008;59(4):554–560. doi: 10.1016/j.neuron.2008.07.035.

- Hebert LE, Scherr PA, Bienias JL, Bennett DA, Evans DA. Alzheimer disease in the US population: prevalence estimates using the 2000 census. Archives of neurology. 2003;60(8):1119–1122. doi: 10.1001/archneur.60.8.1119.

- Hsieh S, Hornberger M, Piguet O, Hodges JR. Neural basis of music knowledge: evidence from the dementias. Brain. 2011;134(9):2523–2534. doi: 10.1093/brain/awr190.

- Irish M, Cunningham CJ, Walsh JB, Coakley D, Lawlor BA, Robertson IH, Coen RF. Investigating the enhancing effect of music on autobiographical memory in mild Alzheimer’s disease. Dementia and geriatric cognitive disorders. 2006;22(1):108–120. doi: 10.1159/000093487.

- Janata P. Effects of Widespread and Frequent Personalized Music Programming on Agitation and Depression in Assisted Living Facility Residents With Alzheimer-Type Dementia. Music and Medicine. 2012;4(1):8–15.

- Koelsch S. Toward a neural basis of music perception: a review and updated model. Frontiers in psychology. 2011 Jun;2:110. doi: 10.3389/fpsyg.2011.00110.

- Light LL. Memory and aging: Four hypotheses in search of data. Annual review of psychology. 1991;42(1):333–376. doi: 10.1146/annurev.ps.42.020191.002001.

- Limb CJ. Structural and functional neural correlates of music perception. The anatomical record. Part A. Discoveries in molecular, cellular, and evolutionary biology. 2006;288(4):435–446. doi: 10.1002/ar.a.20316.

- Mack WJ, Freed DM, Williams BW, Henderson VW. Boston Naming Test: shortened versions for use in Alzheimer's disease. Journal of Gerontology. 1992;47(3):154–158. doi: 10.1093/geronj/47.3.p154.

- McKhann GM, Knopman DS, Chertkow H, Hyman BT, Jack CR, Kawas CH, Klunk WE, et al. The diagnosis of dementia due to Alzheimer’s disease: Recommendations from the National Institute on Aging-Alzheimer's Association workgroups on diagnostic guidelines for Alzheimer's disease. Alzheimer’s & dementia: the journal of the Alzheimer's Association. 2011;7(3):263–269. doi: 10.1016/j.jalz.2011.03.005.

- McKhann G, Drachman D, Folstein M, Katzman R, Price D, Stadlan EM. Clinical diagnosis of Alzheimer’s disease: Report of the NINCDS-ADRDA Work Group under the auspices of Department of Health and Human Services Task Force on Alzheimer's Disease. Neurology. 1984 Jul;34:939–944. doi: 10.1212/wnl.34.7.939.

- Mitchell JP, Sullivan AL, Schacter DL, Budson AE. Misattribution errors in Alzheimer’s disease: the illusory truth effect. Neuropsychology. 2006;20(2):185–192. doi: 10.1037/0894-4105.20.2.185.

- Monsch AU, Bondi MW, Butters N, Salmon DP, Katzman R, Thal LJ. Comparisons of verbal fluency tasks in the detection of dementia of the Alzheimer type. Arch Neurol. 1992;49:1253–1258. doi: 10.1001/archneur.1992.00530360051017.

- Morris JC, Mohs R, Rogers H, Fillenbaum G, Heyman A. CERAD clinical and neuropsychological assessment of Alzheimer’s disease. Psychopharmacology Bulletin. 1989;198:641–651.

- Nasreddine ZS, Phillips NA, Bédirian V, Charbonneau S, Whitehead V, Collin I, Cummings JL, et al. The Montreal Cognitive Assessment, MoCA: A Brief Screening Tool For Mild Cognitive Impairment. Journal of the American Geriatrics Society. 2005;53:695–699. doi: 10.1111/j.1532-5415.2005.53221.x.

- Peretz I, Gosselin N, Belin P, Zatorre RJ, Plailly J, Tillmann B. Music lexical networks: the cortical organization of music recognition. Annals of the New York Academy of Sciences. 2009;1169:256–265. doi: 10.1111/j.1749-6632.2009.04557.x.

- Pierce BH, Sullivan AL, Schacter DL, Budson AE. Comparing source-based and gist-based false recognition in aging and Alzheimer’s disease. Neuropsychology. 2005;19(4):411–419. doi: 10.1037/0894-4105.19.4.411.

- Plailly J, Tillmann B, Royet J-P. The feeling of familiarity of music and odors: the same neural signature? Cerebral cortex (New York NY: 1991). 2007;17(11):2650–2658. doi: 10.1093/cercor/bhl173.

- Racette A, Peretz I. Learning lyrics: to sing or not to sing? Memory & cognition. 2007;35(2):242–253. doi: 10.3758/bf03193445.

- Ranganath C, Yonelinas AP, Cohen MX, Dy CJ, Tom SM, D’Esposito M. Dissociable correlates of recollection and familiarity within the medial temporal lobes. Neuropsychologia. 2004;42(1):2–13. doi: 10.1016/j.neuropsychologia.2003.07.006.

- Razani J, Kakos B, Orieta-Barbalace C, Wong JT, Casas R, Lu P, Alessi C, et al. Predicting caregiver burden from daily functional abilities of patients with mild dementia. Journal of the American Geriatrics Society. 2007;55(9):1415–1420. doi: 10.1111/j.1532-5415.2007.01307.x.

- Schacter DL. The Seven Sins of Memory: Insights From Psychology and Cognitive Neuroscience. American Psychologist. 1999;54(3):182–203. doi: 10.1037//0003-066x.54.3.182.

- Schacter DL, Israel L, Racine C. Suppressing false recognition in younger and older adults: The distinctiveness heuristic. Journal of Memory and Language. 1999;40:1–24.

- Schölzel-Dorenbos CJM, van der Steen MJMM, Engels LK, Olde Rikkert MGM. Assessment of quality of life as outcome in dementia and MCI intervention trials: a systematic review. Alzheimer disease and associated disorders. 2007;21(2):172–178. doi: 10.1097/WAD.0b013e318047df4c.

- Simmons-Stern NR, Budson AE, Ally BA. Music as a memory enhancer in patients with Alzheimer’s disease. Neuropsychologia. 2010;48(10):3164–3167. doi: 10.1016/j.neuropsychologia.2010.04.033.

- Snodgrass JG, Corwin J. Pragmatics of measuring recognition memory: applications to dementia and amnesia. Journal of experimental psychology. General. 1988;117(1):34–50. doi: 10.1037//0096-3445.117.1.34.

- Thompson RG, Moulin CJA, Hayre S, Jones RW. Music Enhances Category Fluency in Healthy Older Adults and Alzheimer’s Disease Patients. Experimental aging research. 2005;31:91–99. doi: 10.1080/03610730590882819.

- Tomaszewski Farias S, Cahn-Weiner DA, Harvey DJ, Reed BR, Mungas D, Kramer JH, Chui H. Longitudinal changes in memory and executive functioning are associated with longitudinal change in instrumental activities of daily living in older adults. The Clinical neuropsychologist. 2009;23(3):446–461. doi: 10.1080/13854040802360558.

- Vanstone AD, Cuddy LL. Musical memory in Alzheimer disease. Neuropsychology, development, and cognition. Section B, Aging, neuropsychology and cognition. 2010;17(1):108–128. doi: 10.1080/13825580903042676.

- Witzke J, Rhone RA, Backhaus D, Shaver NA. How sweet the sound: research evidence for the use of music in Alzheimer’s dementia. Journal of gerontological nursing. 2008;34(10):45–52. doi: 10.3928/00989134-20081001-08.

- Yonelinas AP. The Nature of Recollection and Familiarity: A Review of 30 Years of Research. Journal of Memory and Language. 2002;46(3):441–517.

- Yonelinas AP, Otten LJ, Shaw KN, Rugg MD. Separating the brain regions involved in recollection and familiarity in recognition memory. The Journal of neuroscience: the official journal of the Society for Neuroscience. 2005;25(11):3002–3008. doi: 10.1523/JNEUROSCI.5295-04.2005.