Abstract

One of the defining characteristics of autism spectrum disorder (ASD) is difficulty with language and communication.1 The onset of speaking in children with ASD is usually delayed, and many children with ASD consistently produce language less frequently and of lower lexical and grammatical complexity than their typically developing (TD) peers.6,8,12,23 However, children with ASD also exhibit a significant social deficit, and researchers and clinicians continue to debate the extent to which the deficits in social interaction account for or contribute to the deficits in language production.5,14,19,25

Standardized assessments of language in children with ASD usually do include a comprehension component; however, many such comprehension tasks assess just one aspect of language (e.g., vocabulary),5 include a significant motor component (e.g., pointing, act-out), and/or require children to deliberately choose between a number of alternatives. The last two behaviors are also known to be challenging for children with ASD.7,12,13,16

We present a method which can assess the language comprehension of young typically developing children (9-36 months) and children with autism.2,4,9,11,22 This method, Portable Intermodal Preferential Looking (P-IPL), projects side-by-side video images from a laptop onto a portable screen. The video images are paired first with a 'baseline' (nondirecting) audio, and then presented again paired with a 'test' linguistic audio that matches only one of the video images. Children's eye movements while watching the video are filmed and later coded. Children who understand the linguistic audio will look more quickly to, and longer at, the video that matches the linguistic audio.2,4,11,18,22,26

This paradigm includes a number of components that have recently been miniaturized (projector, camcorder, digitizer) to enable portability and easy setup in children's homes. This is a crucial point for assessing young children with ASD, who are frequently uncomfortable in new (e.g., laboratory) settings. Videos can be created to assess a wide range of specific components of linguistic knowledge, such as Subject-Verb-Object word order, wh-questions, and tense/aspect suffixes on verbs; videos can also assess principles of word learning such as a noun bias, a shape bias, and syntactic bootstrapping.10,14,17,21,24 Videos include characters and speech that are visually and acoustically salient and well tolerated by children with ASD.

Keywords: Medicine, Issue 70, Neuroscience, Psychology, Behavior, Intermodal preferential looking, language comprehension, children with autism, child development, autism

Protocol

1. IPL Video Creation

We design the videos to be interesting and attractive, but also non-aversive to young children with autism, in a number of ways: When animate characters are needed, we use animals rather than humans to make the scenes less socially/emotionally challenging for children with ASD. We use dynamic scenes with brightly colored objects to capture and hold attention. A red blinking light during the intertrial intervals (ITIs) holds the children's attention when the video screens are blank. We produce the voice audio using speech that is exaggerated in both intonation and duration to capture and hold the children's attention.

Figure 1. (Movie) One block of the Noun Bias video. Shows one block of the Noun Bias video, which tests whether children map a novel word onto an object vs. an action.

Video stimuli are created via commercial nonlinear editing programs such as FinalCut Pro or Avid Media Composer, using 4-8 sec movie clips.

The first 2 clips are arranged sequentially, alternating left and right, paired with the same familiarization or teaching audio.

The baseline trials come next; these present the test stimuli side-by-side, but are paired with nondirecting audios. The baseline trials tell us whether the children have any inherent or visual preference for either stimulus when there is no linguistic or directing audio.

The test trials appear last, paired with the test audios that distinguish the two visuals.

A 1 kHz tone is placed on the second audio channel, coincident with each trial; its waveform gives coders a visual marker of the onset and offset of each trial.
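As a rough illustration of this layout, the sketch below lists one block as a sequence of segments and derives the intervals during which the tone would sound. The `Segment` class, the `tone_intervals` helper, and all durations (including the 3 sec ITIs) are assumptions made for illustration; they are not the specifications of the actual videos.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    kind: str        # "iti", "familiarization", "baseline", or "test"
    side: str        # "left", "right", "both", or "none" (blank screens)
    duration: float  # seconds (hypothetical values below)


def tone_intervals(segments):
    """Return (onset, offset) pairs in seconds during which the tone plays.

    The tone accompanies every trial and is silent during ITIs.
    """
    intervals = []
    t = 0.0
    for seg in segments:
        if seg.kind != "iti":
            intervals.append((t, t + seg.duration))
        t += seg.duration
    return intervals


# One hypothetical block: two sequential clips, then baseline, then test.
block = [
    Segment("iti", "none", 3.0),
    Segment("familiarization", "left", 6.0),
    Segment("iti", "none", 3.0),
    Segment("familiarization", "right", 6.0),
    Segment("iti", "none", 3.0),
    Segment("baseline", "both", 6.0),
    Segment("iti", "none", 3.0),
    Segment("test", "both", 6.0),
]

for onset, offset in tone_intervals(block):
    print(f"tone on from {onset:.1f} s to {offset:.1f} s")
```

Keeping the layout as data in this way also makes it easy to hand the same trial order to the later analysis step.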

2. At the Visit/Child's Home

Perform the consent process with the parent. Describe the study format in more detail and ask the parent to sign the consent forms. Emphasize that the child should watch with minimal interaction with the parent and, if the child is to sit on the parent's lap, introduce the mp3 player for the parent to use. The parent listens to music on the mp3 player while watching the IPL video, so that s/he does not hear the IPL test audio and thus cannot inadvertently influence which way the child looks.

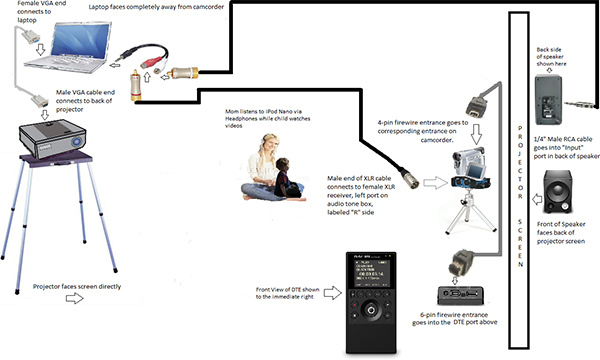

Set up the IPL components in the designated room, and connect to power sources using extension cords. It is helpful to bring a research assistant along who can play with the child during setup. Set up the big screen with its back to the major light source. Place the speaker centered behind the screen; adjust both volume knobs to halfway levels. Place the camera on the tripod atop the tone adaptor box, centered in front of screen. Load the tape into the camera and place the DTE on the floor next to the camera. Set up the projector stand 7-10 feet away from the screen; place the projector on top. Place the laptop next to the projector stand.

Figure 2. The portable IPL components and the arrangement of their setup in the home. Shows the portable IPL components and the spatial arrangement of their setup in the home.

Connect the IPL components: Connect the laptop so that it sends video signal to the projector. The laptop also sends out two audio signals (via a splitter), with the voice audio going to the speaker and the tone audio going to the camera. Connect the camera so that it sends video and audio signal to the DTE. Once connected, power up all components. The DTE screen should show "AVI Type 1" on left and "Counter" underneath.

- Play the video(s) for the child: Load the first video on the laptop into Quicktime.

- Place a chair 2.5 to 3 feet from camera, in front of screen. Invite the child to sit on the chair, or on the parent's lap. If on parent's lap, give the parent the mp3 player and earbuds, tell her/him to start it. If child sits alone, parent may sit alongside. Children who get fidgety during the video may be encouraged in general terms to "watch the movie."

- Ensure that the child's face is visible in the camcorder. Re-check whenever the child moves around. Adjust lighting in room as needed.

- Write the child's information on a dry erase whiteboard (pseudonym, gender, visit, video, date, birthdate, age). Turn on the camcorder and press record. Press record on the DTE. Film the whiteboard for 10 sec.

- On the laptop, play the first video by clicking "View" followed by "Enter Full Screen."

- After the video is completed, press the stop button on the DTE. If the child is willing, another video may be presented. Alternatively, this may be a good time for a break. Some children with ASD may need frequent breaks, but others may prefer to view all of the videos in a row.

Reward children with a gift at the end of the visit.

3. Coding Back in the Lab

- Check that the child's eye movements are already digitized on the DTE.

- Import this film into a nonlinear editing program, convert to the format (.avi) used by our custom coding program, and then export to a secure storage device.

- Code each video following its specific layout (i.e., arrangement of familiarization/teaching, baseline, and test trials).

- Tab through the child's film and find the frames where the waveform of the 1 kHz tone is visible, indicating that a visual stimulus is being presented to the child.

- Begin coding during the intertrial interval (ITI) before each target trial (i.e., there's no tone and the centering light is presented; the child should be centering or looking away). Tab ahead frame by frame, recording each change of gaze as L (to the left), R (to the right), C (to the center) or A (away: up, down, far left, far right, back).

The coding program outputs columns of numbers indicating the timing and type of each code entered. For example, TSC 33499 means that at the onset of the trial, 33.499 sec from the start of the video, the child looked to the center.

The coding arrays are analyzed by a custom MATLAB analysis program, which accesses the specific layout of each video (i.e., which side is the match for each trial).

The analysis programs then calculate the child's duration and direction of looking during each coded trial, the child's latency to first look at the matching stimulus, and the number of times the child switches attention during each coded trial.
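The calculation just described can be sketched in a few lines. The version below is written in Python purely for illustration and is not the custom analysis program itself. It assumes that each code is a `(time_in_seconds, code)` pair, that a code holds until the next one is entered, that `match_side` is given in the same left/right frame as the codes, and that a "switch" is a change from one side to the other (with any center or away looks in between ignored). All of these are assumptions, as is the function name `trial_measures`.

```python
def trial_measures(events, onset, offset, match_side):
    """Summarize one coded trial.

    events: list of (time_sec, code) sorted by time, code in {"L", "R", "C", "A"}.
    onset, offset: trial boundaries in seconds (e.g., taken from the tone).
    match_side: "L" or "R", in the same frame of reference as the codes.
    """
    durations = {"L": 0.0, "R": 0.0, "C": 0.0, "A": 0.0}
    latency = None      # time from trial onset to first look at the match
    switches = 0        # changes between left and right looks
    last_side = None
    for i, (t, code) in enumerate(events):
        next_t = events[i + 1][0] if i + 1 < len(events) else offset
        start, end = max(t, onset), min(next_t, offset)
        if end <= start:
            continue  # this look falls entirely outside the trial
        durations[code] += end - start
        if code in ("L", "R"):
            if code == match_side and latency is None:
                latency = start - onset
            if last_side is not None and code != last_side:
                switches += 1
            last_side = code
    nonmatch_side = "L" if match_side == "R" else "R"
    return {
        "match_sec": durations[match_side],
        "nonmatch_sec": durations[nonmatch_side],
        "latency_sec": latency,
        "switches": switches,
    }


# Made-up codes for a single 6 sec trial that starts 10 sec into the film.
events = [(9.0, "C"), (10.5, "L"), (12.0, "R"), (14.5, "A"), (15.0, "R")]
print(trial_measures(events, onset=10.0, offset=16.0, match_side="R"))
```

A child who never looks at the match during the trial yields a latency of `None`, which the caller has to handle explicitly.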

We then compare children's looking during the baseline vs. test trials on (a) duration of gaze to the matching image, (b) latency of first look to the matching image, (c) timecourse of looking to both images during the entire trial, and (d) number of switches of attention.

The rationale is that children who understand the linguistic stimulus will look longer at the matching image during the test trial than they had during the baseline trial, that they will look more quickly (once the trial begins) at the matching image than the nonmatching image, and that they will switch attention less during the test trials than during the baseline trials, because the linguistic stimulus is guiding their looking.

The only difference between baseline and test trials is the linguistic audio; the visual stimuli are the same, but the baseline trials have a nondirecting audio (e.g., "look here!") while the test trials have a directing audio (e.g., "Where's toopen?"). Therefore, comparing test trials to baseline trials on the duration and switches of attention measures tells us how much the directing audio is guiding the children's looking patterns. Also, if the children understand the directing audio, they should look more quickly at the matching image than at the nonmatching image.
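One simple way to express the baseline vs. test comparison is as a shift in the percent of looking time directed to the match. The sketch below assumes that percent to match is computed as match time divided by match plus nonmatch time; the function names and the looking times are invented for illustration, and no particular statistical test is implied.

```python
def percent_to_match(match_sec, nonmatch_sec):
    """Percent of on-screen looking time spent on the matching image."""
    total = match_sec + nonmatch_sec
    if total == 0:
        return None  # the child looked at neither image
    return 100.0 * match_sec / total


def mean_shift(children):
    """Average test-minus-baseline change in percent to match.

    children: list of dicts with "baseline" and "test" entries, each a
    (match_sec, nonmatch_sec) pair.
    """
    shifts = []
    for child in children:
        base = percent_to_match(*child["baseline"])
        test = percent_to_match(*child["test"])
        if base is not None and test is not None:
            shifts.append(test - base)
    return sum(shifts) / len(shifts) if shifts else None


# Invented looking times (seconds) for two children.
children = [
    {"baseline": (2.0, 2.0), "test": (3.0, 1.0)},
    {"baseline": (1.0, 3.0), "test": (2.0, 2.0)},
]
print(mean_shift(children))
```

A positive shift indicates that looking moved toward the match once the directing audio was added.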

Representative Results

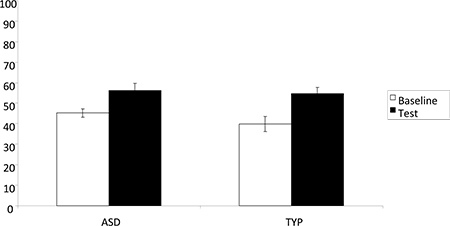

An example of looking patterns from children tested using P-IPL is presented in Figure 3. Viewing the Noun Bias video, TD children 21 months of age looked longer at the matching image during test compared with baseline trials, indicating they had mapped the novel words onto objects rather than actions.21 Children with ASD averaging 33 months of age behaved similarly, with similar effect sizes.21,24 Therefore, children with ASD show a word learning bias similar to that of TD children. Viewing the SVO word order video, children in both groups also showed longer looking to matching images during test over baseline (i.e., significant comprehension), demonstrating understanding of English word order (e.g., that "The girl pushes the boy" is a different relationship from "The boy pushes the girl").3,14,21 Developmental differences are seen with the wh-question video, as TD children showed significant comprehension at 28 months of age, whereas significant comprehension was first found in children with ASD at 54 months of age.10 Therefore, children with ASD are delayed in learning how to correctly interpret questions such as "What did the apple hit?"

Figure 3. Children's percent of looking time to the match (i.e., object rather than action), while viewing the Noun Bias video. Shows children's percent of looking time to the match (i.e., object rather than action), while viewing the Noun Bias video.

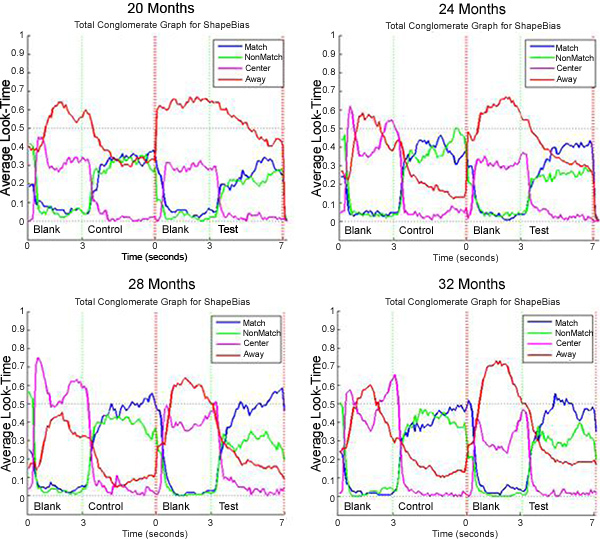

Figure 4 presents the more detailed timecourse analyses of TD children viewing the Shape Bias video at four successive visits, spanning 20-32 months. Blue lines indicate looking to the match (i.e., same shape object); notice that the height of the blue lines during the test trials (right side of each graph) increases with age, showing increasing shape preferences. Moreover, as the children get older, the blue lines rise to the match earlier in the trial, indicating that they are understanding the directing audio more quickly with age. Red lines indicate looking away; notice that the breadth of the red lines diminishes with age as children spend less time looking away. Pink lines indicate looking to the center during the ITI; we use these to make sure that children aren't biased before the trial starts. These IPL data confirm those from other methods that the Shape Bias is an increasingly strong word learning principle for TD children. However, three replications have not yet succeeded in demonstrating a significant word-based shape bias in the children with ASD.17,24

Figure 4. TD children's timecourse of looking while viewing the Shape Bias video. Shows TD children's timecourse of looking to the match, nonmatch, center, and away, while viewing the Shape Bias video.

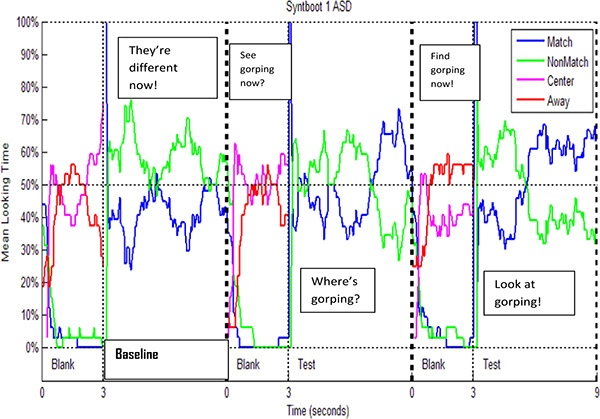

Figure 5 presents the timecourse data for the same children with ASD, when they averaged 41 months of age, while viewing the Syntactic Bootstrapping video, in which they are asked to learn novel verbs using the surrounding sentence frames (e.g., to determine that gorping in "The duck is gorping the bunny" refers to a causative action rather than to a noncausative action). Syntactic Bootstrapping is another word learning principle replicated in many studies of TD children. Notice that the children with ASD look longer at the nonmatching image (green lines) during the baseline trials, but then their looking is directed more at the matching images (blue lines) during the second half of the test trials, when they are asked to find the referent of the novel verb (e.g., "Find gorping!"). As a group, these children with ASD were thus able to correctly map the verb onto the causative action. Cross-visit regressions reveal that the children with ASD who looked longer at the match during the Syntactic Bootstrapping video had shorter latencies to the match when they viewed the Word Order video, 8 months earlier.14

Figure 5. Children with ASD's timecourse of looking while viewing the Syntactic Bootstrapping video. Shows children with ASD's timecourse of looking to the match, nonmatch, center, and away while viewing the Syntactic Bootstrapping video.

Discussion

Specific attention is needed to ensure that coders are 'deaf' to (i.e., unaware of) the specific stimuli that the child is experiencing on a given trial, so that they don't know which side is the matching one and inadvertently bias their coding. We ensure this via the inclusion of the 1 kHz tone on the second audio channel of the video, which is then copied onto the film of the child's eye movements. The waveform of this tone provides the visual indication of the onset and offset of each trial, thereby delimiting the trials without the coder knowing their video or audio content.

It is very important to ensure that the analysis layout takes account of the left-right switch that occurs when children are coded (i.e., if the matching scene is on the left side while the child is watching, it will appear to be on the right side to the eye-movement coder, and vice versa).
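A minimal sketch of this correction is given below. It assumes the codes are the letters L, R, C, and A as entered by a coder who is facing the child; the names `FLIP`, `to_child_perspective`, and `coded_match_side` are hypothetical.

```python
# Left and right swap between the child's view and the coder's view;
# center and away are unaffected.
FLIP = {"L": "R", "R": "L", "C": "C", "A": "A"}


def to_child_perspective(codes):
    """Convert codes entered from the coder's view into the child's view."""
    return [FLIP[code] for code in codes]


def coded_match_side(screen_match_side):
    """Side on which the match appears to the coder, given its side on screen."""
    return FLIP[screen_match_side]


print(to_child_perspective(["C", "L", "R", "A"]))
print(coded_match_side("L"))
```

Only one of the two conversions should be applied in a given analysis (either flip the codes or flip the layout); applying both cancels the correction.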

Intercoder reliability assessments yield correlations of about 0.98 for pairs of experienced coders on random selections of 10% of the data;3 with less experienced coders or if there is high turn-over in the coders used across the participants of the study, we recommend having every child's eye-movements coded by at least 2 people and requiring that their codes (usually duration to match) be within 0.3 sec of each other for each trial. If they are not, then a third, fourth or fifth person should code the child until reliability is achieved.17
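The per-trial agreement check and the correlation can be sketched as follows. The 0.3 sec tolerance comes from the text above; the function names and the example durations are invented, and the Pearson correlation is written out by hand only to keep the sketch free of dependencies.

```python
import math

TOLERANCE_SEC = 0.3  # maximum allowed difference between two coders per trial


def disagreements(coder_a, coder_b, tolerance=TOLERANCE_SEC):
    """Return the indices of trials where the two coders differ by more than tolerance."""
    return [
        i for i, (a, b) in enumerate(zip(coder_a, coder_b))
        if abs(a - b) > tolerance
    ]


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Invented durations to match (seconds) for four trials from two coders.
coder_a = [2.0, 3.5, 1.0, 4.0]
coder_b = [2.25, 3.5, 1.5, 4.0]

print("trials needing another coder:", disagreements(coder_a, coder_b))
print("correlation:", round(pearson_r(coder_a, coder_b), 3))
```

Note that a high overall correlation can coexist with individual trials that exceed the tolerance, which is why the two checks are kept separate here.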

With participants of toddler age and/or children with developmental delays, it is inevitable that some will not look at either scene for some proportion of each trial, and for some trials, never. The following conventions are generally applied for these lapses of attention: (a) Children need to look to at least one scene for a minimum of 0.3 sec for that trial to be counted. Otherwise, it is a missing trial. (b) For a given video, children need to provide data for more than half of the test trials in order to be included in the final dataset. (c) Missing trials are replaced with the mean across children in that age group/condition for that item.
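These three conventions can be sketched as below. The sketch assumes that convention (a) is applied to the total time spent on each scene within a trial, and that the scores passed to `fill_missing` all come from children in the same age group and condition; the function names and data are hypothetical.

```python
MIN_LOOK_SEC = 0.3  # convention (a): minimum look to at least one scene


def trial_counts(left_sec, right_sec):
    """Convention (a): the trial counts if either scene was viewed long enough."""
    return max(left_sec, right_sec) >= MIN_LOOK_SEC


def child_included(test_trial_valid):
    """Convention (b): more than half of the test trials must have data."""
    return sum(test_trial_valid) > len(test_trial_valid) / 2


def fill_missing(scores_by_child):
    """Convention (c): replace missing trials (None) with the item mean.

    scores_by_child maps a child id to a list of per-item scores, all of the
    same length. An item missing for every child is left as None.
    """
    n_items = len(next(iter(scores_by_child.values())))
    item_means = []
    for i in range(n_items):
        present = [s[i] for s in scores_by_child.values() if s[i] is not None]
        item_means.append(sum(present) / len(present) if present else None)
    return {
        child: [item_means[i] if v is None else v for i, v in enumerate(scores)]
        for child, scores in scores_by_child.items()
    }


# Invented scores for three children on two test items.
scores = {"c1": [2.0, None], "c2": [4.0, 3.0], "c3": [None, 1.0]}
print(fill_missing(scores))
```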

IPL is a method that taps children's earliest mappings of linguistic forms (i.e., words and sentences) onto referential (i.e., objects and actions) or propositional (i.e., relations and events) meanings. It can be used to assess the processes by which children of different ages learn new words, as well as the ages at which they understand different types of grammatical forms. By requiring only eye movements as overt indicators of understanding (or not), IPL can be used with toddlers whose behavioral compliance is generally low, as well as with some special populations such as children with ASD. Moreover, because this technology can be brought to children's homes, it may also be used with populations which are hard to reach in conventional laboratories, such as children from low socioeconomic groups, children in rural communities, and bilingual children of monolingual non-native speaking parents. Newer ways of analyzing these eye movements are revealing interesting and important effects of learning different types of languages, and of learning words at different ages. Future plans include using IPL concurrently with neuroimaging methodologies, so that children's brain activity during language comprehension can be compared with their behavioral indicators.

Disclosures

No conflicts of interest declared.

Acknowledgments

This research was funded by the National Institutes of Health-Deafness and Communication Disorders (R01 2DC007428).

References

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders. IV-TR. Washington, DC: American Psychiatric Association; 2000.

- Brandone A, et al. Speaking for the wordless: Methods for studying the foundations of cognitive linguistics in infants. In: Gonzalez-Marquez M, et al., editors. Methods in Cognitive Linguistics. Amsterdam, Netherlands: John Benjamins Publishing Company; 2007. pp. 345–366.

- Candan A, et al. Language and age effects in children's processing of word order. Cognitive Development. 2012;27:205–221.

- Cauley K, et al. Revealing Hidden Competencies: A New Method for Studying Language Comprehension in Children with Motor Impairments. American Journal of Mental Retardation. 2009;94(1):53–63.

- Charman T, et al. Predicting language outcome in infants with autism and pervasive developmental disorder. International Journal of Language & Communication Disorders. 2003;38:265–285. doi: 10.1080/136820310000104830.

- Eigsti IM, et al. Beyond pragmatics: Morphosyntactic development in autism. Journal of Autism and Developmental Disorders. 2007;37:1007–1023. doi: 10.1007/s10803-006-0239-2.

- Ellis Weismer S, et al. Early Language Patterns of Toddlers on the Autism Spectrum Compared to Toddlers with Developmental Delay. Journal of Autism and Developmental Disorders. 2010;40:1259–1273. doi: 10.1007/s10803-010-0983-1.

- Fein D, et al. Preschool Children with Inadequate Communication. In: Rapin I, editor. Language and neuropsychological findings. London, UK: MacKeith Press; 1996. pp. 123–154.

- Fernald A, et al. Looking while listening: Using eye movements to monitor spoken language comprehension by infants and young children. In: Sekerina I, et al., editors. Developmental Psycholinguistics: On-line methods in children's language processing. Amsterdam: Benjamins; 2008. pp. 97–135.

- Goodwin A, et al. Comprehension of wh-questions precedes their production in typical development and autism spectrum disorders. Autism Research. 2012;5:109–123. doi: 10.1002/aur.1220.

- Hirsh-Pasek K, Golinkoff RM. The origins of grammar: Evidence from early language comprehension. Cambridge, MA: MIT Press; 1996.

- Kjelgaard MM, Tager-Flusberg H. An investigation of language impairment in autism: Implications for genetic subgroups. Language and Cognitive Processes. 2001;16:287–308. doi: 10.1080/01690960042000058.

- Luyster R, et al. Characterizing communicative development in children referred for autism spectrum disorders using the MacArthur-Bates Communicative Development Inventory (CDI). Journal of Child Language. 2007;34:623–654. doi: 10.1017/s0305000907008094.

- Naigles L, et al. Abstractness and continuity in the syntactic development of young children with autism. Autism Research. 2011;4:422–437. doi: 10.1002/aur.223.

- Paul R, et al. Dissociations in the development of early communication in autism spectrum disorders. In: Paul R, editor. Language Disorders from a Developmental Perspective. Hillsdale, NJ: Erlbaum; 2007. pp. 163–194.

- Pennington BF, Ozonoff S. Executive functions and developmental psychopathology. Journal of Child Psychology and Psychiatry. 1996;37(1):51–87. doi: 10.1111/j.1469-7610.1996.tb01380.x.

- Piotroski J, Naigles L. Preferential Looking. In: Hoff E, editor. Guide to Research Methods in Child Language. Wiley-Blackwell; 2012. pp. 17–28.

- Seidl A, et al. Early understanding of subject and object wh-questions. Infancy. 2003;4:423–436.

- Sigman M, Ruskin E. Continuity and change in the social competence of children with autism, Down syndrome, and developmental delays. Monographs of the Society for Research in Child Development. 1999;64(1). doi: 10.1111/1540-5834.00002.

- Stone WL, Yoder PJ. Predicting spoken language level in children with autism spectrum disorders. Autism [Special issue: Early interventions]. 2001;5:341–361. doi: 10.1177/1362361301005004002.

- Swensen L, et al. Children with autism display typical language learning characteristics: Evidence from preferential looking. Child Development. 2007;78:542–557. doi: 10.1111/j.1467-8624.2007.01022.x.

- Swingley D. Looking while listening. In: Hoff E, editor. Guide to Research Methods in Child Language. Wiley-Blackwell; 2012. pp. 29–40.

- Tager-Flusberg H, et al. A longitudinal study of language acquisition in autistic and Down syndrome children. Journal of Autism and Developmental Disorders. 1990;20:1–21. doi: 10.1007/BF02206853.

- Tek S, et al. Do children with autism show a shape bias in word learning? Autism Research. 2008;1:202–215. doi: 10.1002/aur.38.

- Volkmar F, et al. Social deficits in autism: An operational approach using the Vineland adaptive behavior scales. Journal of the American Academy of Child and Adolescent Psychiatry. 1987;26(2):156–161. doi: 10.1097/00004583-198703000-00005.

- Wagner L, et al. Children's Early Productivity with Verbal Morphology. Cognitive Development. 2009;24:223–239.